Interval centred form for proving stability of non-linear discrete-time systems

Abstract

In this paper, we propose a new approach to prove stability of non-linear discrete-time systems. After introducing the new concept of stability contractor, we show that the interval centred form plays a fundamental role in this context and makes it possible to easily prove asymptotic stability of a discrete system. Then, we illustrate the principle of our approach through theoretical examples. Finally, we provide two practical examples using our method : proving stability of a localisation system and that of the trajectory of a robot.

1 Introduction

Proving properties of Cyber Physical Systems (CPS) is an important topic that should be considered when designing reliable systems [3, 8, 21, 29]. Among those properties, stability is often wanted for a dynamical system : achieving stability around a given setpoint in the state space of the latter is one of the aims of control theory. Proving stability of a dynamical system can be done rigorously [12], which is of major importance when applied to real-life systems. Indeed, a stable system is considered safe, since its behaviour is predictable.

Let us recall the definition of stability of a dynamical system. Consider the non-linear discrete-time system

| (1) |

According to Lyapunov’s definition of stability [7, 28], the system (1) is stable if

| (2) |

The system is asymptotically stable (or Lyapunov stable) if there exists a neighbourhood of such that any initial state taken in this neighbourhood yields a trajectory which converges to ,

| (3) |

Finally, the system is exponentially stable if for a given norm

| (4) |

A classical method to prove stability of a system is to linearise the latter around and check if the eigenvalues are inside the unit disk. However, due the inherent uncertainties of a real-life system’s model, no guarantee can be obtained without using interval analysis [26]. The Jury criterion [10] can also be used on the linearised system in this context, but again, interval computation has to be performed to get a proof of stability [24]. Moreover, to our knowledge, none of the existing methods is able to give an approximation for the neighbourhoods and used in Lyapunov’s definition. Now, finding values for and is needed in practice, for instance to initialize algorithms which approximate basins of attraction [14, 22, 27] or reachable sets [16].

This paper proposes an original approach to prove Lyapunov stability of a non-linear discrete system, but also to find values for and . It uses the centred form, a classical concept in interval analysis [17]. Moreover, it does not need the introduction of any Lyapunov function, Jury criterion, linearisation, or any other classical tool used in control theory.

Section 2 briefly presents interval analysis and the notations used in the rest of this paper. Section 3 introduces the new notion of stability contractor and gives a theorem which explains why this concept is useful for stability analysis. Section 4 recalls the definition of the centred form. It provides a recursive version of the centred form in the case where the function to enclose is the solution of a recurrence equation. It also shows that the centred form can be used to build stability contractors. Section 5 shows that the approach is able to reach a conclusion for a large class of stable systems and provides a procedure for proving stability. Some examples are given to illustrate graphically the properties of the approach. Section 7 concludes the paper and proposes some perspectives.

2 Introduction to interval analysis

The method presented in this paper is based on interval analysis. In short, interval analysis is a field of mathematics where intervals, i.e. connected subsets of , are used instead of real numbers to perform computations. Doing so allows to enclose all types of uncertainties (from floating-point round-off to modelling errors) of a system and therefore yield a guaranteed enclosure for the solution of a problem related to that system. In this section, we briefly introduce the notations and important concepts used later in this paper. More details about interval analysis and its applications can be found in [11, 19].

An interval is a set delimited by a lower bound and an upper bound such that :

Intervals can be stacked into vectors, and are thus denoted by

We write the interval vector of size , all the components of which are equal to .

Vectors of intervals are often called boxes or interval vectors. The set of axis-aligned boxes of is denoted by . Similarly, interval vectors can be concatenated into interval matrices.

Intervals, interval vectors (or matrices) can be multiplied by a real as such :

We denote by the width of :

The width of an interval vector is given by

The absolute value of an interval is

And the norm of an interval vector is defined as follows

Later in this paper, we will use the following implication

| (5) |

The usual arithmetic operators () can be defined over intervals (as well as boxes and interval matrices). Operations involving intervals, interval vectors and interval matrices can therefore be naturally deduced from their real counterpart.

Extending a real function to intervals (and equivalently to interval vectors/matrices) can also be achieved as follows :

Except in trivial cases, usually cannot be written as an interval, whence the use of inclusion functions. An inclusion function associated with yields an interval (or interval vector/matrix) enclosing the set :

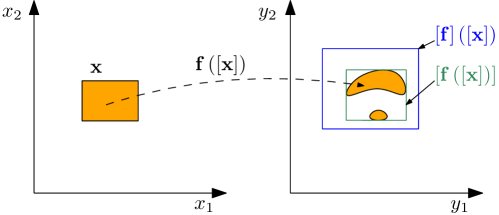

is said to be minimal if is the smallest interval (or interval vector/matrix) enclosing the set (see Figure 1). The minimal inclusion function associated with will be denoted by .

An inclusion function is said to be natural when it is expressed by replacing its variables and its elementary functions and operators by their interval counterparts.

Example 1.

Consider the function such that for

The natural inclusion function of is :

where and are the inclusion functions of and .

3 Stability contractor

In this section, we present the concept of stability contractor, a tool that can be used to rigorously prove the stability of a dynamical system. The rigour of the method comes from the use of interval analysis.

The following new definition adapts the definition of a contractor, as given in [4], to stability analysis.

Definition 1.

Consider a box of . A stability contractor of rate is an operator which satisfies

| (6) |

for all boxes inside . For denotes the iterated function . If , denotes the identity function.

Example 2.

If is an interval, the operator is a stability contractor whereas the operator is not.

Proposition 1.

If is a stability contractor of rate , then

| (7) |

Proof.

A consequence of this proposition is that getting a stability contractor allows us to prove stability of a system without performing an infinitely long set-membership simulation. It suffices to have one box such that , to conclude that the system is asymptotically stable for all . In other words, the system is proven to be Lyapunov stable in the neighbourhood .

Now, if one can build a stability contractor for a given dynamical system, then proving Lyapunov stability of the latter for a given initial condition comes down to applying proposition 1. Building such a contractor is addressed in the next section.

4 Centred form of an interval function

The aim of this section is to present a general method for building a stability contractor for discrete dynamical systems. It is based on the concept on centred form, proposed by Moore in [18]. Additionally, it proposes an algorithm to compute iteratively the centred form of an iterated function.

Consider a function , with a Jacobian matrix . Consider a box and one point in . For simplicity, and without loss of generality, we assume that and that .

Remark 1.

If this condition is not satisfied, i.e. or , the problem can be transformed into an equivalent problem satisfying the latter :

| (8) |

i.e.

| (9) |

Now, consider the new problem where . Since and , the new problem satisfies the condition stated above.

Let us recall the definition of the centred form,as given by [18] :

Definition 2.

The centred form associated to the function is given by

| (10) |

where is the natural extension of .

Proposition 2.

According to [18], for all ,

| (11) |

Additionally, the centred form tends towards the minimal inclusion function when is sufficiently small :

| (12) |

Theorem 1.

Consider a function with and with Jacobian matrix . Denote by and the natural inclusion functions for and . The centred form associated to is given by the following sequence

| (13) |

Proof.

Remark 2.

From Proposition 2, for a given , we have

| (16) |

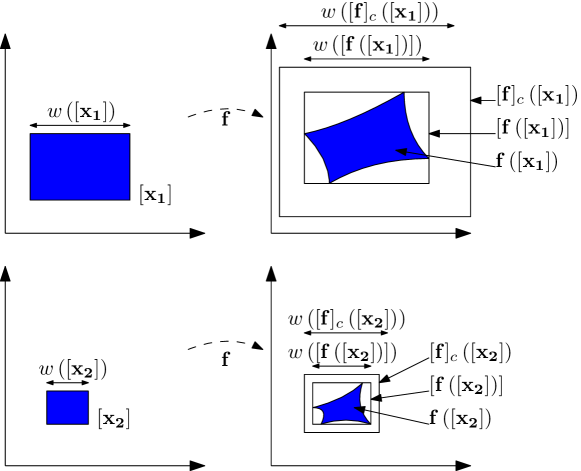

which means that tends towards the minimal inclusion function when . Now, for a given , even very small, we generally observe the following :

| (17) |

In other words, the pessimism introduced by the centred form increases with .

Theorem 2.

Consider a function with . If there exist a box and a real such that then the interval operator is a stability contractor inside .

Proof.

Properties (6, i), (6, ii) and (6, iii) of definition 1 are easily checked. Now, let us prove property (6, iv) by induction.

Let us define the sequence

Now, assume that the property also holds for a , i.e.

Let us show that

∎

Theorem 2 shows that the centred form can be used in a very general way to build stability contractors. Other integration methods such as the one proposed by Lohner [15] or the ones based on affine forms [2] do not have this property, even if they usually yield a tighter enclosure of the image set of a function.

5 Proving stability using the centred form

In this section, we show how the centred form can be used to prove stability of non-linear dynamical systems.

5.1 Method

Consider the system described by Equation (1), where .

In short, the methods consists in finding an initial box in the state space of the system such that the centred form of is a stability contractor. This implies that iterating this centred form onto that initial box will converge towards . Since the centred form is an inclusion function for , that implies that the system will also converge towards .

More specifically, if for a given box with width there exist and such that , then is a stability contractor of rate (according to theorem 2). Now, since proposition 1 states

and since proposition 2 asserts that

it follows that

which implies asymptotic stability of the system (see Equation 3).

Furthermore, let us define the sequence

| (18) |

Thus, substituting Equation (18) in property (6, iv) and applying Equation (5) yields

where . This implies exponential stability of the system (see Equation 4).

Our method is summarized by algorithm 1. It takes as inputs the function describing the system, the initial box , and a maximum number of iterations . The latter is necessary, as stated in remark 2 to avoid looping indefinitely. Usually, does not need to be larger than , since the iterated centred form tends to diverge with the number of iterations.

It is important to remember that if the previous algorithm yields a false value, that does not necessarily mean that the system is unstable. Indeed, bisecting the initial box into smaller boxes and running the algorithm on them could prove stability of the system in the neighbourhood defined by those boxes.

5.2 Completeness of the method

Now, let us show that whenever a system actually is exponentially stable, our method will be able to prove it. Given , we denote by the set of all hypercubes centred at and such that .

Proposition 3.

If the system is exponentially stable around , then

Proof.

Let us assume exponential stability of the system described by around , i.e. there exists a neighbourhood of , such that

This property translates into

for all cubes in centred in , where is the smallest box which contains the set . Take one of these cubes and denote by its width . If is sufficiently small, the pessimism of the centred form becomes arbitrarily small and we have

∎

6 Application

6.1 Example 1 : Proving stability of the logistic map

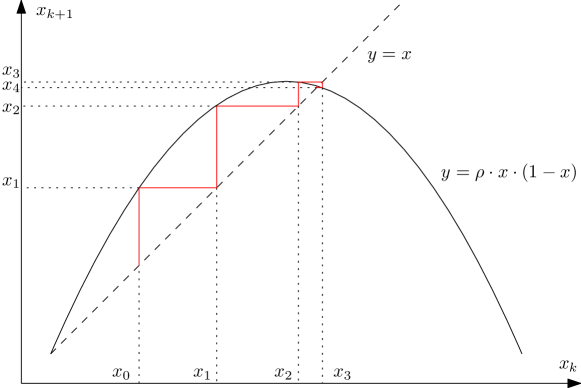

The aim of this example is to illustrate the section 5.2. The logistic map is a simple recurrence relation which behaviour can be highly complex or chaotic :

| (19) |

The parameter influences the dynamics of the system. For , the system is stable, but shows an oscillating behaviour around its equilibrium point (see figure 3).

Note that the equilibrium point is not and we thus need to centre our problem to apply the stability method as described in this paper (see remark 1).

Figure 4 displays the behaviour of our algorithm for an initial box . Even if is not contained in , we have . We have thus shown that for an initial state chosen in , the trajectory will converge towards the stable point.

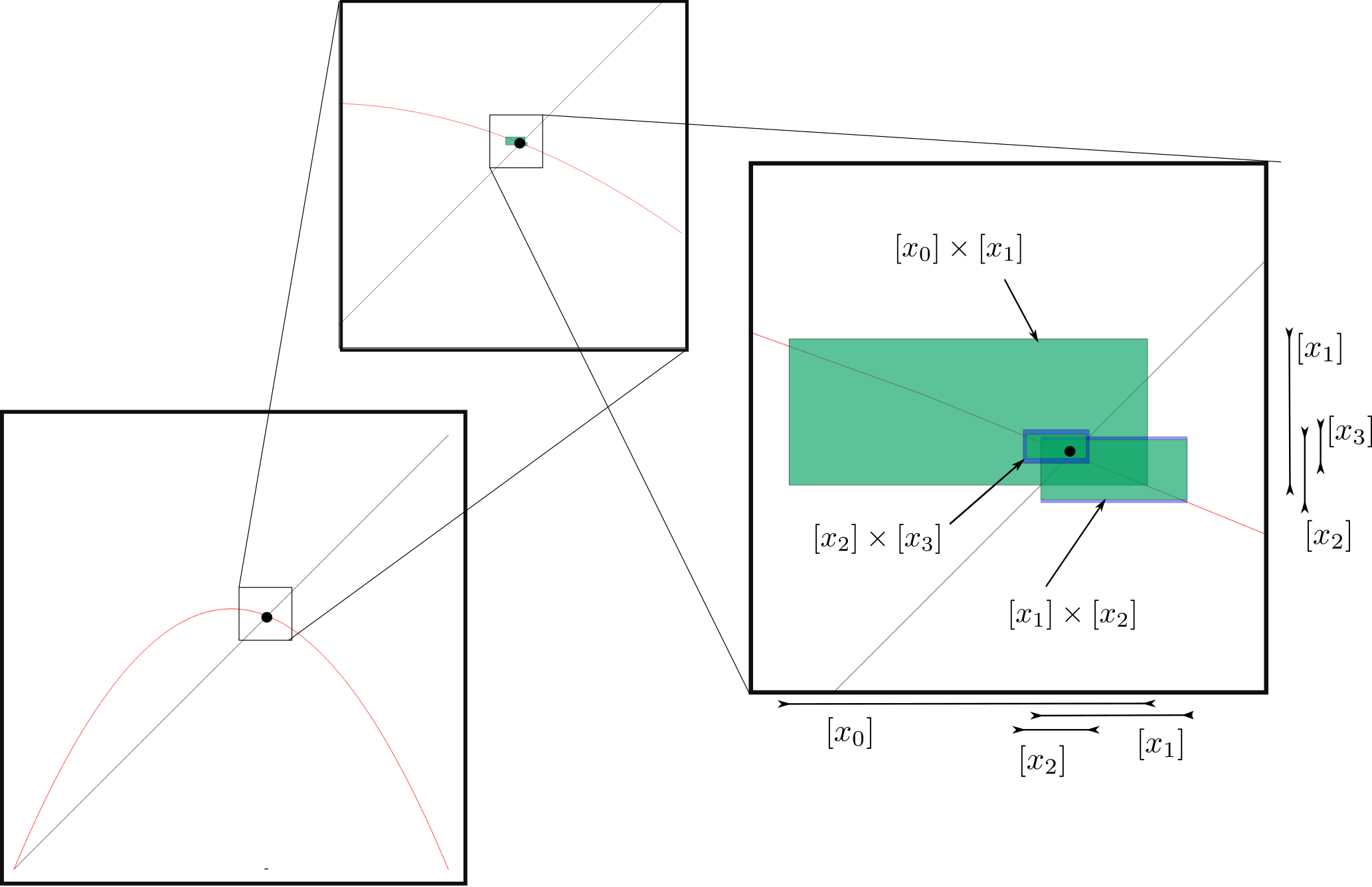

6.2 Example 2 : Proving stability of a three dimensional map

The aim of this example is to illustrate the steps of algorithm 1 in a higher dimensional problem.

Let us consider the following discrete system:

| (20) |

where is the rotation matrix parametrized by roll (, around axis ), pitch (, around ) and yaw (, around ) angles.

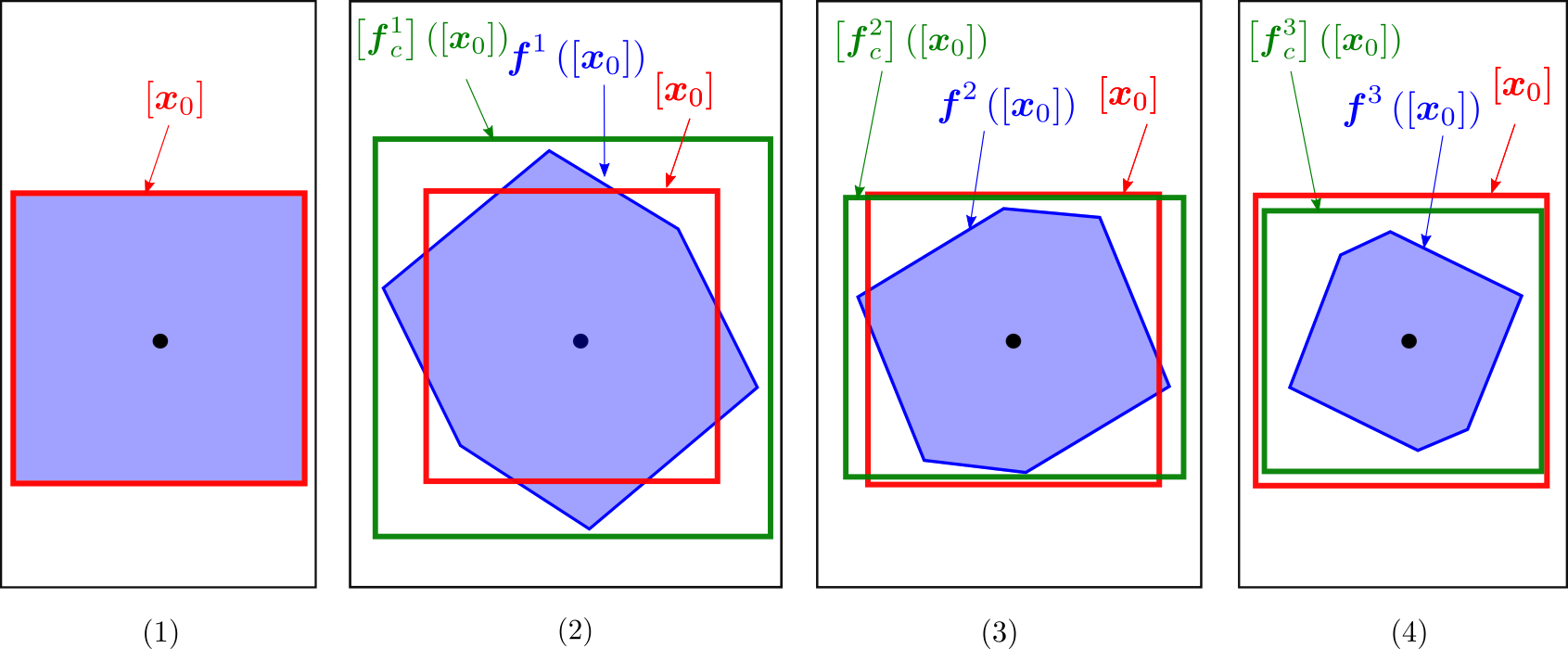

With our approach, we show that the discrete system is stable, as depicted by Figure 5 which is a projection of the 3-dimensional system across steps of our algorithm. With , where , the algorithm needs 3 iterations to get captured inside the initial box. We thus have proved the stability of the system. The blue sets correspond to the image of by and has been obtained using a Monte-Carlo method for visualization purposes. The point in the centre corresponds to the origin .

Considering the shape of the set , we understand that zonotope-based methods [6, 30] or Lohner integration methods [15, 31] could get a stronger convergence. However, these efficient operators cannot be used to prove the stability except if we are able to prove that they correspond to a stability contractor, which is not the case yet.

6.3 Example 3 : Validation of a localisation system

The aim of this example is to illustrate how our method could be applied to prove stability of an existing localisation system, before integrating the latter in a robot for example.

Remark 3.

Proving the stability of such a localisation system is of importance in robotics. Indeed, the commands are usually computed using the robot’s state, estimated by the localisation system. If the latter is not stable, i.e. it does not converge towards the actual position of the robot, then control is useless. Note that additionally to stability, accuracy and precision are also wanted features for a localisation system, that we won’t address here.

We also briefly explain how our method can be coupled with a paving algorithm to characterize the stability region of the localisation system. The latter corresponds to the acceptable set of parameters of the system, in the sense that it remains stable regardless of the parameters picked in that set.

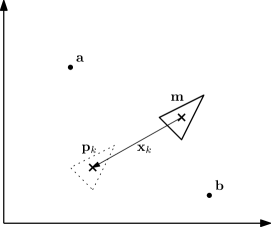

Consider a range-only localisation system using two landmarks as represented on Figure 6. A static robot is located at position , and is able to measure the exact distance between itself and the landmarks.

More precisely, we assume that we have the following measurements

Assume also that, at iteration , the robot believes to be at position whereas it actually is at position . We define the Newton sequence as

where

We have

where is the localisation error. We want to show that for in a given region of the plane, the sequence defined by is stable, i.e., converges to .

Remark 4.

Of course, this localisation system could be greatly improved. But let us imagine this localisation system has been implemented on a robot and working for years. Its performances are known and trusted, and changing it is not possible or desired. The goal here is to validate the existing system, not to build a new one. The guarantee of stability is a property we can check with our approach.

Now, let us introduce the concept of stability region.

Definition 3.

The sequence parametrized by is robustly stable if

| (21) |

Definition 4.

We define the stability region as

| (22) |

To calculate an inner approximation of , we decompose the -space into small boxes. Take one of these small boxes and a box around . The box should be small, but large compared to . If there exists such that the system is stable, i.e. , then .

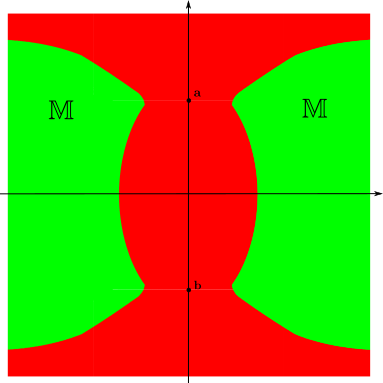

Consider a situation where the landmarks are at positions . To characterize the set , we build a paving of the plane with small boxes of width . Taking and , we get the results depicted on Figure 7. The green area is proved to be inside . As a consequence, if the robot is located in the green area and if its initial localisation error is smaller than , then the classical Newton method will converge towards the actual position of the robot. On the other hand, we are not able to conclude anything about the stability of the localisation algorithm in the red area: this could require to take smaller boxes in the -space, a smaller initial condition , or this might also mean that the localisation system is not stable when initialised inside that specific choice of parameters.

6.4 Example 4 : Stability of a cyclic trajectory

This last example illustrates how our method can be applied to a real-life problem : a robot controlled by a state-machine is deployed in a lake; we will prove that the trajectory of the latter is stable.

6.4.1 Presentation of the problem

Let us consider a robot (e.g. an Autonomous Underwater Vehicle (AUV)) cruising in a closed area (e.g. a lake) at a constant speed. We consider that the robot is moving in a plane, for the sake of simplicity. The robot is controlled by an automaton and a heading controller. It also embeds a sensor (e.g. a sonar) to detect if the shore is close by. In this case, an event is triggered and the automaton changes its states : a new heading command is sent to the controller. Consider for instance the following sequence for the automaton:

-

1.

Head East during 25 sec.

-

2.

Head North until reaching the shore

-

3.

Go South for sec

-

4.

Head West until reaching the shore

-

5.

Go to 1.

6.4.2 Proving stability of the robot’s trajectory

The goal is to prove that the robot’s trajectory will converge towards a stable cycle.

Assume that the border is modelled by the function

| (23) |

Given the starting position of the robot , the positions of the robot after each of the four transitions are respectively given by the following functions :

where denotes the cruising speed of the robot. Each of these functions can be modified to take model and sensor uncertainties into account, as we consider that the robot is cruising in dead-reckoning. Usually, these uncertainties grow with time if the robot does not perform exteroceptive measurements.

Let us define the cycle function , given by Equation (24):

| (24) |

Proving stability of the cycle comes down to proving that the discrete-time system is stable.

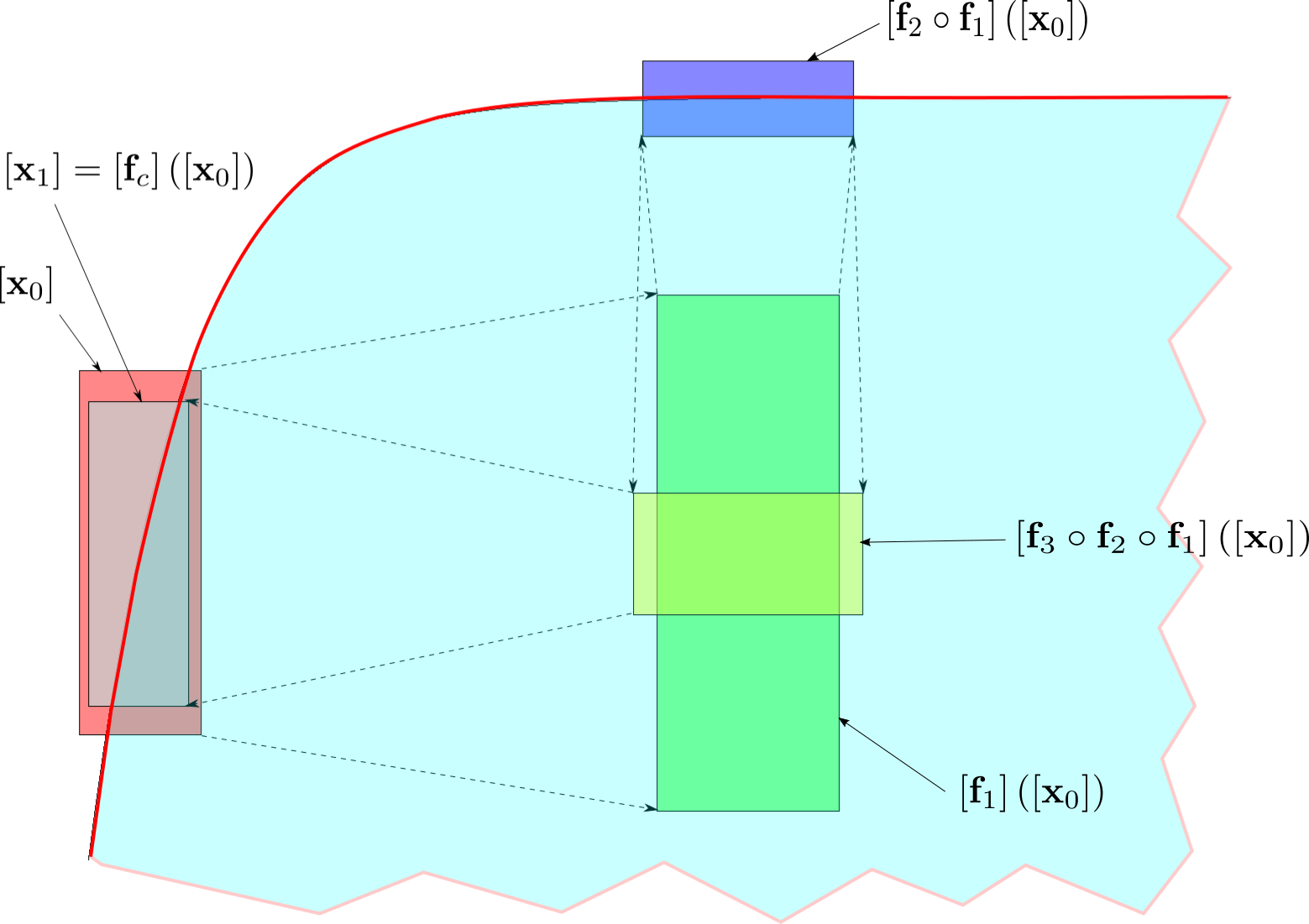

Figure 8 represents the evolution of over steps of integration, as well as the intermediate steps. The initial box is , and we model the robot getting lost by adding an uncertainty to each intermediate function , where is the cruising speed of the robot and is the speed at which the robot is getting lost, i.e. the inflating rate of the robot’s estimated position.

Since we have , we can conclude that the robot, equipped with the sensors named earlier and controlled by the given automaton, is going to perform a stable cycle in the lake.

7 Conclusion

In this paper, we have proposed a new approach to prove Lyapunov stability of a non-linear discrete-time system in a given neighbourhood , regardless of the system’s uncertainties and the floating-point round-off errors. The principle is to perform a simulation based on the interval centred form, from an initial box and until the current box is strictly enclosed in . From the properties of the centred form we have proposed, we are able to conclude that a system is stable. The method applies to a large class of systems and can be used for arbitrary large dimensions, which is not the case for methods based on linearisation which are very sensitive to the dimension of the state vector. Additionally, contrary to the existing methods, ours is able to find a neighbourhood of radius inside which the system is stable.

The next step is to extend this approach to deal with continuous-time systems described by differential equations. This extension will require the introduction of interval integration methods [5, 23, 25, 31].

The interval community can be decomposed into two worlds :

-

•

On the one hand, small intervals [20] are used in combination with linearisation methods and are able to propagate efficiently small uncertainties such as those related to round-off errors. They can solve high dimensional problems and do not require any bisections.

- •

This work belongs to the first world and makes use of small intervals. This is why the centred form is very efficient and why we do not perform any bisection. Now, in practice, the approaches developed by both communities can be used jointly : to solve real-life problems such as approximating basins of attraction, we will have to combine the two methodologies appropriately.

References

- [1]

- [2] M. V. A. Andrade, J. L. D. Comba & J. Stolfi (1994): Affine Arithmetic. In: Interval’94, St Petersburg.

- [3] E. Asarin, T. Dang & A. Girard (2007): Hybridization methods for the analysis of non-linear systems. Acta Informatica 7(43), pp. 451–476, 10.1007/s00236-006-0035-7.

- [4] G. Chabert & L. Jaulin (2009): A Priori Error Analysis with Intervals. SIAM Journal on Scientific Computing 31(3), pp. 2214–2230, 10.1137/070696982.

- [5] A. Chapoutot, J. Alexandre Dit Sandretto & O. Mullier (2015): Validated Explicit and Implicit Runge-Kutta Methods. In: Summer Workshop on Interval Methods.

- [6] C. Combastel (2005): A State Bounding Observer for Uncertain Non-linear Continuous-time Systems based on Zonotopes. In: CDC-ECC ’05, 10.1109/CDC.2005.1583327.

- [7] I. Fantoni & R. Lozano (2001): Non-linear control for underactuated mechanical systems. Springer-Verlag, 10.1007/978-1-4471-0177-2.

- [8] G. Frehse (2008): PHAVer: Algorithmic Verification of Hybrid Systems. International Journal on Software Tools for Technology Transfer 10(3), pp. 23–48, 10.1007/978-3-540-31954-2_17.

- [9] E. R. Hansen (1992): Global Optimization using Interval Analysis. Marcel Dekker, New York, NY.

- [10] L. Jaulin & J. Burger (1999): Proving stability of uncertain parametric models. Automatica, pp. 627–632, 10.1016/S0005-1098(98)00201-5.

- [11] L. Jaulin, M. Kieffer, O. Didrit & E. Walter (2001): Applied Interval Analysis, with Examples in Parameter and State Estimation, Robust Control and Robotics. Springer-Verlag, London, 10.1007/978-1-4471-0249-6.

- [12] Luc Jaulin & Fabrice Bars (2020): Characterizing Sliding Surfaces of Cyber-Physical Systems. Acta Cybernetica 24, pp. 431–448, 10.14232/actacyb.24.3.2020.9.

- [13] R. B. Kearfott & V. Kreinovich, editors (1996): Applications of Interval Computations. Kluwer, Dordrecht, the Netherlands, 10.1007/978-1-4613-3440-8.

- [14] M. Lhommeau, L Jaulin & L. Hardouin (2007): Inner and outer approximation of capture basins using interval analysis. ICINCO 2007.

- [15] R. Lohner (1987): Enclosing the solutions of ordinary initial and boundary value problems. In E. Kaucher, U. Kulisch & Ch. Ullrich, editors: Computer Arithmetic: Scientific Computation and Programming Languages, BG Teubner, Stuttgart, Germany, pp. 255–286.

- [16] T. Le Mézo, L. Jaulin & B. Zerr (2019): Bracketing backward reach sets of a dynamical system. In: International Journal of Control, 10.1080/00207179.2019.1643910.

- [17] R. E. Moore (1966): Interval Analysis. Prentice-Hall, Englewood Cliffs, NJ.

- [18] R. E. Moore (1979): Methods and Applications of Interval Analysis. SIAM, Philadelphia, PA, 10.1137/1.9781611970906.

- [19] Ramon E Moore, R Baker Kearfott & Michael J Cloud (2009): Introduction to interval analysis. SIAM, 10.1137/1.9780898717716.

- [20] A. Neumaier (1991): Interval Methods for Systems of Equations. Cambridge University Press, Cambridge, UK, 10.1017/CBO9780511526473.

- [21] N. Ramdani & N. Nedialkov (2011): Computing Reachable Sets for Uncertain Nonlinear Hybrid Systems using Interval Constraint Propagation Techniques. Nonlinear Analysis: Hybrid Systems 5(2), pp. 149–162, 10.1016/j.nahs.2010.05.010.

- [22] S. Ratschan & Z. She (2010): Providing a Basin of Attraction to a Target Region of Polynomial Systems by Computation of Lyapunov-Like Functions. SIAM Journal on Control and Optimization 48(7), pp. 4377–4394, 10.1137/090749955.

- [23] N. Revol, K. Makino & M. Berz (2005): Taylor models and floating-point arithmetic: proof that arithmetic operations are validated in COSY. Journal of Logic and Algebraic Programming 64, pp. 135–154, 10.1016/j.jlap.2004.07.008.

- [24] J. Rohn (1996): An algorithm for checking stability of symmetric interval matrices. IEEE Trans. Autom. Control 41(1), pp. 133–136, 10.1109/9.481618.

- [25] S. Rohou, L. Jaulin, L. Mihaylova, F. Le Bars & S. Veres (2019): Reliable robot localization. ISTE Group, 10.1002/9781119680970.

- [26] S. M. Rump (2001): INTLAB - INTerval LABoratory. In J. Grabmeier, E. Kaltofen & V. Weispfennig, editors: Handbook of Computer Algebra: Foundations, Applications, Systems, Springer-Verlag, Heidelberg, Germany.

- [27] P. Saint-Pierre (2002): Hybrid kernels and capture basins for impulse constrained systems. In C.J. Tomlin & M.R. Greenstreet, editors: in Hybrid Systems: Computation and Control, 2289, Springer-Verlag, pp. 378–392, 10.1007/3-540-45873-5_30.

- [28] J.J. Slotine & W. Li (1991): Applied nonlinear control. Prentice Hall, Englewood Cliffs (N.J.). Available at http://opac.inria.fr/record=b1132812.

- [29] W. Taha & A. Duracz: Acumen: An Open-source Testbed for Cyber-Physical Systems Research. In: CYCLONE’15, 10.1007/978-3-319-47063-4_11.

- [30] Jian Wan (2007): Computationally reliable approaches of contractive model predictive control for discrete-time systems. PhD dissertation, Universitat de Girona, Girona, Spain.

- [31] D. Wilczak & P. Zgliczynski (2011): Cr-Lohner algorithm. Schedae Informaticae 20, pp. 9–46, 10.4467/20838476SI.11.001.0287.