Interacting Dreaming Neural Networks

Abstract

We study the interaction of agents, where each one consists of an associative memory neural network trained with the same memory patterns and possibly different reinforcement-unlearning dreaming periods. Using replica methods, we obtain the rich equilibrium phase diagram of the coupled agents. It shows phases such as the student-professor phase, where only one network benefits from the interaction while the other is unaffected; a mutualism phase, where both benefit; an indifferent phase and an insufficient phase, where neither are benefited nor impaired; a phase of amensalism where one is unchanged and the other is damaged. In addition to the paramagnetic and spin glass phases, there is also one we call the reinforced delusion phase, where agents concur without having finite overlaps with memory patterns. For zero coupling constant, the model becomes the reinforcement and removal dreaming model, which without dreaming is the Hopfield model. For finite coupling and a single memory pattern, it becomes a Mattis version of the Ashkin-Teller model.

Keywords: Neural Networks, Associative Memories, Learning algorithms

The authors declare that they have no conflict of interest.

Partial Funding from: CNPq National Council for Scientific and Technological Development and Fapesp, the São Paulo Research Foundation.

’This is the Accepted Manuscript version of an article accepted for publication in Journal of Statistical Mechanics: Theory and Experiment: J. Stat. Mech. (2023) 043401. Neither SISSA Medialab Srl nor IOP Publishing Ltd is responsible for any errors or omissions in this version of the manuscript or any version derived from it. The Version of Record is available online at : http://dx.doi.org/10.1088/1742-5468/acc72b

1 Introduction

Neural networks are supposed to implement meaningful information processing based on data. Meaningful, of course, has to be defined. We are interested in the behavior of interacting neural networks, where the data available to each network is partially provided by other neural networks. Shared meaning emerges from this interaction. We obtain analytical results, for a system of two interacting agents, each one modeled by an associative memory. Each agent receives the same memory patterns from the environment, however they may undergo different levels of post-learning dynamics, in the form of dreaming, i.e. unlearning or reinforcing by relearning. Hopfield, Feinstein and Palmer [11], following ideas from Crick and Mitchinson [6], introduced a learning algorithm based on unlearning that improved significantly the performance of the Hopfield [16, 12] network with pure Hebbian learning. This anti-Hebbian mechanism was inspired by the suggestion [6] that rapid eye moving (REM) type dreaming, permits mammalians to forget spurious memories, increasing their capacity to retrieve relevant memories. Further analysis and simulations by van Hemmen et al. [10] and [20], showed that iterating this algorithm initially increases the network’s capacity. However, if iterated beyond a certain threshold, retrieval is destroyed. It was shown that this increment occurs in part due to the fact that the synaptic matrix approaches the pseudo-inverse synaptic matrix [14], despite not converging to it. Dotsenko [7] used a similar algorithm to justify a new symmetric synaptic matrix, that allowed reaching the theoretical upper bound in the thermodynamic limit: number of patterns , equal to the number of units , but only at .

Fachechi et al. [8] showed analytically that beneficial unlearning plus the enhancement of the pure states, which they called reinforcement, can increase the stability of the patterns, increasing the critical capacity. Their model has a much larger retrieval phase than the Hopfield model, not only saturating the maximal capacity at but also increasing the stability of the model to temperature changes. Most importantly, there is no catastrophic upper bound on the length of the dreaming process. This algorithm resembles developments by Plakhov, Semenov and Shuvalova [19] [18], but results in a more interesting and varied synaptic matrix. Also relevant for this discussion are [5],[3],[9], where they manage to connect effectively learning in Hopfield models with learning in Boltzmann machines.

Dreaming, which is a process internal to the neural network, represents an extra study period of the available information by the agent. We allow agents to interact through a coupling that extends this internal dreaming to an externally driven process, that also acts on the minima of the Hamiltonian. We show that this process has the potential to make the networks perform better or worse, depending on the conditions of the system.

2 Model of interacting neural networks

2.1 Introduction of the model

We work on a statistical mechanics problem in the canonical formalism with temperature . The components of the quenched memory patterns , are drawn independently from a uniform Bernoulli distribution. The Hamiltonian of an isolated agent is [8]

| (1) |

where quantifies the length of the dreaming process. is the matrix with elements , is an Ising variable. For the Hopfield Hebbian Hamiltonian is recovered and as , acts as a Tikhonov regularizer, leading to the pseudo-inverse model [17][14]. The two member group Hamiltonian is

is the coupling constant of the interaction, the sums over run from to , the sums over from to . We study this problem in equilibrium or offline with quenched disorder. A related problem, the inverse problem of learning online a spin glass Hamiltonian, was studied in [15].

The first two terms of the Hamiltonian in expression 2.1 represent two distinct neural network agents, the third term connects them.

This interaction is similar to dreaming since it changes the couplings from and , i.e. they pick up a Hebbian contribution from the other agent. However, we emphasize the fact that they are different, as in the interaction all states are included, and not only the stables ones, as it is done in the unlearning algorithm.

For , this is the model of reinforcement and removal model [8]; and with and , the infinite range Askhin-Teller model[13].

Without any loss, we take and refer to the neural network agents as NN1 and NN2 respectively.

2.2 Order parameters

The free-energy is obtained using the replica method, where the Hamiltonian is a sum of the individual replica Hamiltonians. The calculation follows roughly those for the single agent case by Fachechi et al. [8], except for a few important steps where it is necessary to be careful. The expression of the free energy and the self-consistent equations for the order parameters appear in [1]. Since we are interested in the memory retrieval phases, we have not looked in detail into the spin-glass phase, hence, in order to understand global features of the five dimensional phase diagram (), the important order parameters for replica are , and , where the double brackets represent the thermal and quenched average over the patterns. We will also consider the changes induced by the interaction, i.e. the difference between the order parameters for and , for a more careful identification of the different phases. Other order parameters of the form , , and are related to the overlap between the elements, where is an index that refers to either agent , or or to their overlap .

We assume the following replica symmetry ansatz:

| (2) | |||

where

| (3) |

and is the auxiliary variable of , so it has a similar physical meaning.

The first line of (2) is not hard to justify, since there are no reasons to expect that any replica is privileged.

The Ansatz related to and for deserves a comment. It represents the idea that the correlations between the agents will be different for the same replica than for different replicas, because the interaction is not between different replicas, but within the same, so we expect a greater intrareplica similarity of the agents than the interreplica similarity.

A homogeneous Ansatz (), leads to the puzzling result that the free energy does not depend on , which indicates that this is not the right Ansatz. This can be seen from the fact that the dependence of and on the free energy occurs via the differences and , so using a homogeneous Ansatz would lead to no dependence on .

It is important to recognize that does not have the exact same meaning as the original Edwards-Anderson parameter. Its additional complex part inside the average leads to different values, and in particular, is different from for , despite having some similarity. It also explains why already in the original article [8] the usual choice was not used. Still, this parameter gives us a measure of the correlations in the system, and can still be used to determine the existence of the spin-glass state.

We emphasize that despite using the same Ansatz for different ’s, the justification behind them is distinct. The replica symmetric free energy depends on 9 independent order parameters (, , , , , , , ). We also have 6 dependent variables (, , , , , ), which can be fully eliminated from the equations of state.

Table 1 shows the different phases, obtained from the possible regimes of and, in addition, a measure of the benefit or damage due to the interaction, given by

| (4) | |||

| Student-professor | ||||||

| Mutualism | ||||||

| Disordered | ||||||

| Reinforced delusion | ||||||

| Insufficient | 0 | |||||

| Indifferent | ||||||

| Amensalism |

Before entering into details in section 2.3, we give a rough description of the types of phases where the system may be found. The insufficient phase occurs when NN2 is in the retrieval phase and NN1 is not and is small enough such that no relevant changes in the learning occurs. As the interaction increases, it can transition to the student-professor phase, where the performance of only NN1 is enhanced significantly. Further increase of can result in the mutualism phase, where both neural networks have noticeable increases in their capacity.

The disordered phase includes the paramagnetic and spin glass phases, where the interaction cannot lead to interesting results. It is not hard to detect the spin glass phase, since for , the role of vanishes, hence the line of the spin glass phase is simply the same obtained in [2].

The indifferent phase occurs where, even without interaction, both neural networks could already process information in an adequate manner. The reinforced delusion phase indicates the creation of an alternate ordered state, where the agents, despite having no overlap with the memory patterns, are similar to each other. From their own perspective, the agents are in a retrieval ferromagnetic phase. For a third party, the individual agents cannot process information, and seem to be in a disordered phase. The interaction still preserves the glassy state, so it is still a spin glass phase. The last phase is the amensalism, where while NN1 is not significantly affected by the interaction, NN2 ends up losing the understanding of the situation, replicating an interaction of amensalism.

2.3 Phase diagrams and discussion

We analyze numerically the self-consistent equations obtained from the extreme conditions of the free energy. Three types of 2-dimensional cuts are used to describe the five dimensional parameter space, yielding phase diagrams in the: (i) , (ii) and (iii) planes, shown respectively in panels 1, 2 and 3. In all diagrams, since , we have .

2.3.1 - plane

For fixed and , is varied to find the maximum capacity such that or . We compare them to the non-interacting neural networks. The main results can be seen in panel 1.

The behavior can be understood by comparing the difference between the properties of an interacting agent with or , with the properties of the non-interacting agents with the same amount of dreaming.

Diagram 1.B shows the no dreaming interacting agents, . The interaction yields a higher critical capacity and both agents benefit equally from the interaction. Diagram 1.A represents the typical situation of having a high value of the dreaming disparity . Agent 1 always benefits, since its capacity is always larger than without interaction. For small interaction, , agent 2 slightly benefits for low but is damaged by the interaction at higher values of . For high values of the interaction, say , they have the same value of critical capacity for all temperatures. Agent 2 benefits considerably from the interaction at low , but is again damaged at higher loads, because it is learning from the spurious minima of the low dreaming agent 1, which is able to benefit only up to a certain point by the interaction.

Diagram 1.C represents the typical situation of having an intermediate and high dreaming load, . Above a certain , and in general the interaction is substantially beneficial for both neural networks, except at very low temperatures , where there is a slight decrease in the pattern retrieval critical capacity of agent 2.

Diagram 1.D presents the case where both agents have dreamt abundantly and equally . Again by symmetry, and the interaction increases significantly the phase of the pattern retrieval, and differently from the previous situation it is always beneficial. As in diagram 1.A, interacting with a peer, brings an advantage to both agents, independently of dream load.

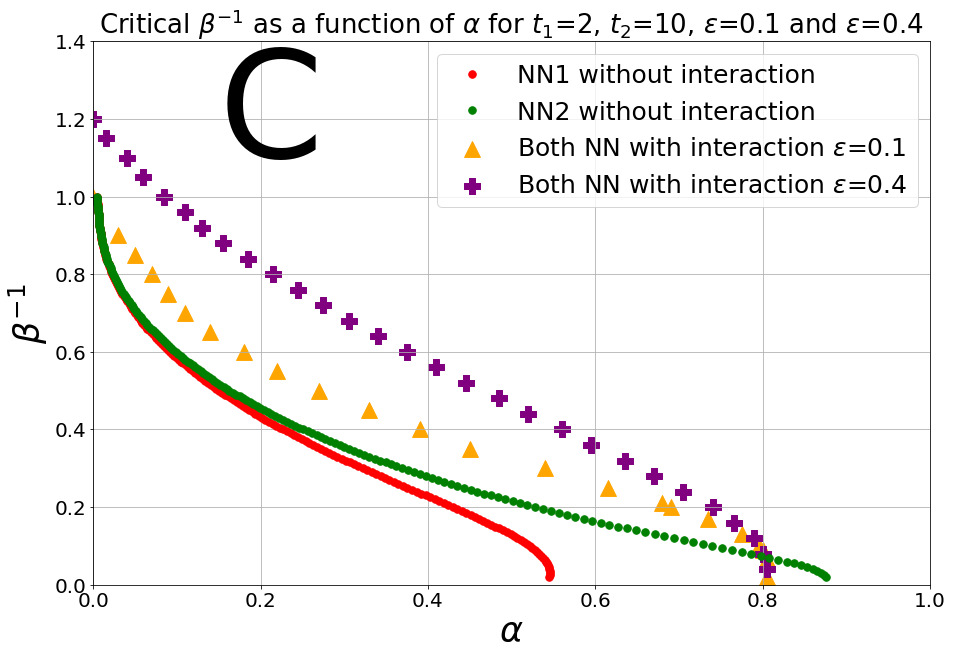

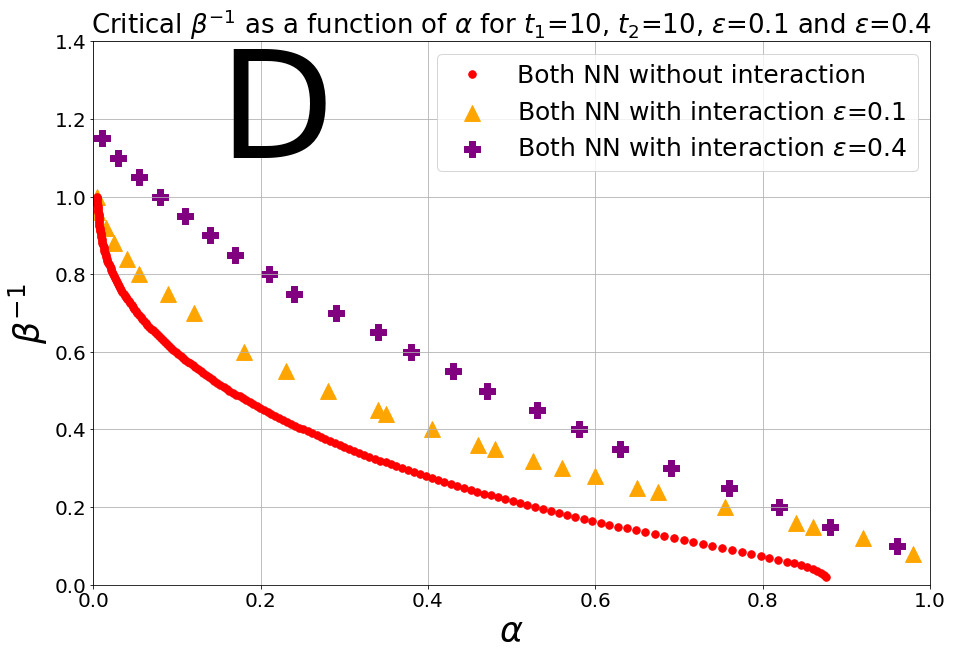

2.3.2 - plane

For and fixed, we varied and to obtain the value of such that for all we have . In particular, we have significant differences at low temperatures and high temperatures (). The main results can be seen in panel 2.

The first 2 diagrams 2.A and 2.B represent the high temperature region. While the second diagram shows the mutual benefits of the interaction for networks with high difference of , the first shows that with enough interaction, it is possible to extend the capacity to regions where it was impossible before the interaction. This retrieval above occurs because the coupled system acts as a system with a higher number of neurons. An interesting detail shown in diagram 2.B is that for low values of the interaction, only agent 1 has an increase in the capacity, while agent 2 is unchanged. It only modifies its retrieval capacity when the capacities start matching. The former student reaches the level of the professor and both can profit from the interaction.

Panels 2.C and 2.D show the behavior for the low temperature region . Diagram 2.D shows that for low differences of we still have a qualitatively similar behavior as in diagram 2.B. However, for big differences in dreaming load, there is a discontinuous transition at high values of the interaction in which the capacity of agent 2 decreases substantially. It is easy to see that this behavior is consistent with diagram 1.B. Of course, the critical capacity of agent 2, despite having no benefit from the interaction, is still larger than in the high temperature case shown in figure 2.B. For the parameters where the two agents are beneficial to each other, the increase in their interaction is not detrimental. We see this in the increase of the upper boundary of the yellow region. But this cannot improve forever, and the alphas (figure 2.A, 2.B and 2.D) tend to a limit value.

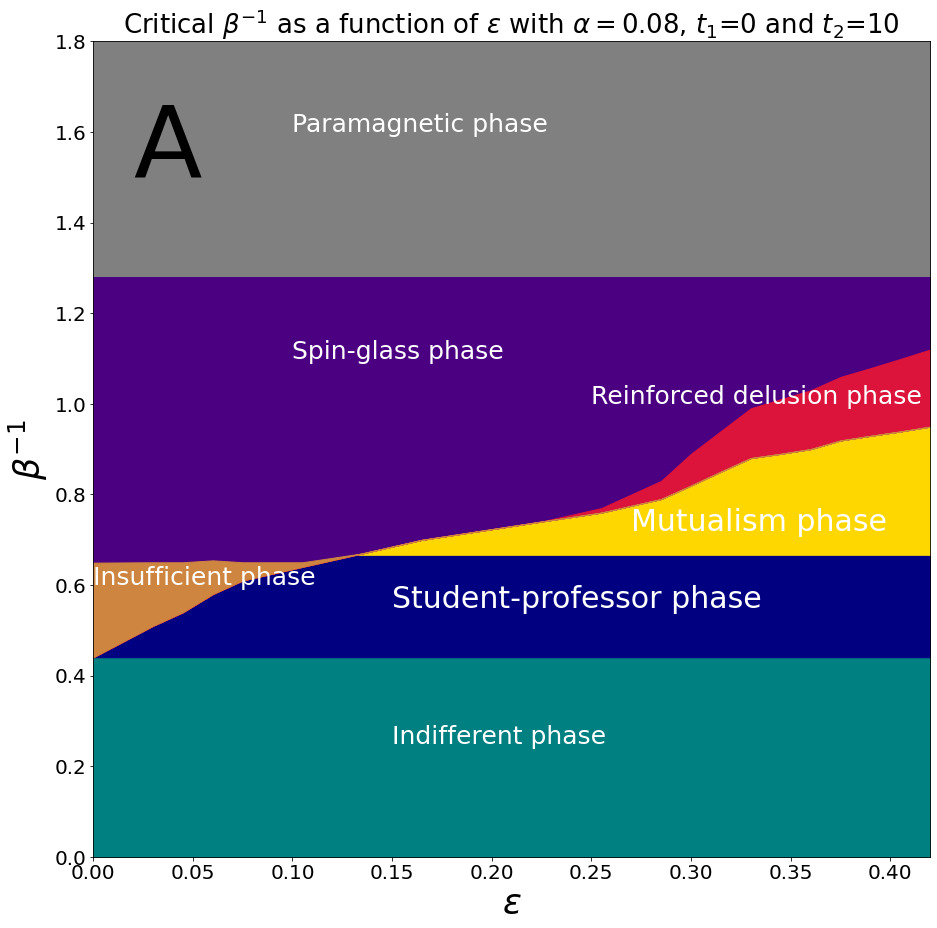

2.3.3 - plane

Here, we fixed and varied and to obtain the value of such that for we have or . In these, differently from the previous diagrams, we incorporated the denomination of different phases mentioned in subsection II.B, as they are easier to visualize. The main results can be seen in panel 3:

The indifferent phase is usually small and only appears in models where the and difference is high, in our examples it can only be visualized in the first case. The mutualism phase only increases as the interaction increases, and tends to a plateau for high values, as expected. The reinforced delusion only appears for high values of interaction and increases beyond the mutualism phase plateau. It is important to explain that the behavior visualized in diagram 3.A is not in contradiction with diagram 2.C, as in the diagram 3.A, we have a low value, so the phenomenon of decreasing magnetization with interaction does not occur. Additionally, we do not see the amensalism phase in panel 3 because we are only considering relatively low capacities, where this phase is not present.

To get a more clear view of the phase changes in this model, we add panel 4 that shows how , and change with temperature for fixed , , and

3 Conclusions

Statistical Mechanics techniques were used to model and study the interaction between information processing machines. Simple exposure to information, without the benefit of post-processing, in the form of dreaming, is not efficient. The agents received the same information in the form of patterns. The removal and reinforcement of minima can be thought of as a further exercise, of duration and , that the agents undergo after being exposed to the information in the memory patterns; in a metaphorical sense, the agents ponder, meditate, think over, dream about the received information. In addition to the individual learning process, further changes in their properties are elicited by an interaction quantified by , and a rich and non-trivial behavior ensues; interactions can be irrelevant, beneficial or harmful to the information retrieval. We return to what is meaningful information processing? It depends on who assigns meaning. If the retrieval is used to gauge the success of the machines, as an independent third party would, the region of reinforced delusion shows no meaningful information processing. However, from the perspective of the agents, it seems to be fine. They agree on their version of what is correct, despite it doesn’t reflect the objectively relevant information in the memory patterns. The ubiquitous use of machines that learn demands the study of their interactions, not only with other machines, but with humans too. Modifications, generalizations or simplifications of our approach are needed.

References

- [1] , See Supplemental Material at [URL will be inserted by publisher] for free energy and equations of state., 2022

- [2] Elena Agliari, Francesco Alemanno, Adriano Barra and Alberto Fachechi “Dreaming neural networks: rigorous results” In Journal of Statistical Mechanics: Theory and Experiment 2019.8 IOP Publishing, 2019, pp. 083503 DOI: 10.1088/1742-5468/ab371d

- [3] Francesco Alemanno et al. “Supervised Hebbian learning” In Europhysics Letters IOP Publishing, 2023

- [4] D.J Amit, Hanoch Gutfreund and H.Sompolinsky “Storing Infinite Numbers of Patterns in a Spin-Glass Model of Neural Networks” In Physical Review letters, 1985

- [5] Adriano Barra, Alberto Bernacchia, Enrica Santucci and Pierluigi Contucci “On the equivalence of Hopfield Networks and Boltzmann Machines” In Neural networks : the official journal of the International Neural Network Society 34, 2012, pp. 1–9 DOI: 10.1016/j.neunet.2012.06.003

- [6] F Crick and G Mitchinson “The function of dream sleep” In Nature, 1983

- [7] V.. Dotsenko “Statistical mechanics of Hopfield-like neural networks with modified interactions” In J. Phys. A: Math. Gen., 1991

- [8] A. Fachechi, E. Agliari and A. Barra “Dreaming neural networks: Forgetting spurious memories and reinforcing pure ones” In Neural Networks, 2019

- [9] Alberto Fachechi, Adriano Barra, Elena Agliari and Francesco Alemanno “Outperforming RBM Feature-Extraction Capabilities by “Dreaming” Mechanism” In IEEE Transactions on Neural Networks and Learning Systems 35, 2022, pp. 1–10 DOI: 10.1109/TNNLS.2022.3182882

- [10] Leo Hemmen and Nikolaus Klemmer “Unlearning and Its Relevance to REM Sleep: Decorrelating Correlated Data” In Neural Network Dynamics, 1992

- [11] J. Hopfield, D.. Feinstein and R.. Palmer “Unlearning has a stabilizing effect in collective memories” In Nature, 1983

- [12] John J Hopfield “Neural networks and physical systems with emergent collective computational abilities” In Proceedings of the national academy of sciences 79.8 National Acad Sciences, 1982, pp. 2554–2558

- [13] Leo Kadanoff and Franz Wegner “Some critical properties of the eight-vertex model” In Physical review B, 1971

- [14] I Kanter and H Sompolinsky “Associative recall of memory without errors” In Phys Rev A 35 American Physical Society, pp. 380–392 DOI: 10.1103/PhysRevA.35.380

- [15] S. Kuva, O. Kinouchi and N. Caticha “Learning a spin glass: determining Hamiltonians from metastable states” In Journal of Physics A, 1997

- [16] LA Pastur and AL Figotin “Theory of disordered spin systems” In Theor Math Phys 35, 1978, pp. 403–414 URL: https://doi.org/10.1007/BF01039111

- [17] Dreyfus G. Personnaz L. “Information storage and retrieval in spin-glass like neural networks” In Journal Physics Letters, 1985

- [18] A. Plakhov and S. Semenov “Neural networks: iterative unlearning algorithm converging to the projector rule matrix” In Journal de Physique I, 1994

- [19] S. Semenov and I. Shuvalova “Some results on convergent unlearning algorithm” In Advances in Neural Information Processing Systems 8 (NIPS 1995), 1995

- [20] S. Wimbauer, N. Klemmer and J Leo van Hemmen “Universality of unlearning” In Neural Networks, 1994

Supplemental Material

Interacting Dreaming Neural Networks

Pietro Zanin and Nestor Caticha

Instituto de Fisica, Universidade de Sao Paulo

Appendix A Details of the solution

The average over the patterns of the replicated partition function is

| (5) | |||

Introducing integrals to remove the inverse matrix and considering that we are only dealing with one condensed pattern () :

| (6) | |||

The average over the patterns can be done explicitly when we have only one condensed pattern, as it turns out to be a sum of exponentials:

| (7) | |||

where in the third equality we introduced Edward-Anderson variables and their auxiliary variables via the integral representation of delta function.

Now we look at the integrals in the non-condensed part:

| (8) | |||

| (9) | |||

where is a square matrix with dimension and the vector of dimension :

| (10) |

We have that

| (11) |

We rescale the auxiliary Edwards-Anderson variables and the magnetization variables:

| (12) | |||

With these changes, we get the following expression for the average of the replicated partition function:

| (13) | |||

Now we apply the replica symmetry ansatz:

| (14) | |||

with

| (15) |

The matrix has 4 eigenvalues, their degeneracies and values are

| (16) | |||

where

| (17) | |||

The last step is to do two Hubbard-Stratonovich transformations on the quadratic variables, but first we need to massage to terms to get an useful formula:

| (18) | |||

Only now we do the Hubbard-Stratonovich transformations in these quadratic terms. If we have an arbitrary number of agents , we can always do an analogous transformation, as it is equivalent to a change of coordinate system such that the with arbitrary coefficients , but it is not hard to notice that this procedure leads to many additional terms, whose number scales faster than linear in the number of agents. For comparison, the formula that should be used with 3 agents is the following:

| (19) |

This is the main reason why we believe that it is not easy to generalize our results for interactions between 3,4, or more neural networks.

After that manipulation, we can sum over the spin states and get the free energy.

Appendix B Free energy expression and equations of state

The free energy for two interacting agents is

| (20) | |||

where we use the auxiliary definitions

| (21) | |||

Using the variational principle that the partial derivatives of the free energy must be 0 at the equilibrium point, we get 15 different equations for 15 different variables. We can simplify them by writing 6 of the variables as functions of the other 9, we call them former dependent and the latter independent.

The equations of the 6 dependent variables are

| (22) | |||

| (23) | |||

| (24) | |||

| (25) | |||

| (26) | |||

| (27) | |||

The equations of the 9 independent variables are

| (28) | |||

| (29) | |||

| (30) | |||

| (31) | |||

| (32) | |||

| (33) | |||

| (34) | |||

| (35) | |||

| (36) | |||

Appendix C Zero temperature equations

To obtain zero temperature equations, it is necessary to deal with the three following combinations:

| (37) |

They become

| (38) | |||

It is possible to remove one of the integrals as it is done in [4] and [8], but in this case the equations would become substantially bigger and there would not be a visible simplification. Besides that change, it is necessary to substitute by in the same way that it is done in [4] and [8]. We did not find any particularly interesting property in this region, so we did not focus on it.