Inter-frame Accelerate Attack against Video Interpolation Models

Abstract.

Deep learning based video frame interpolation (VIF) method, aiming to synthesis the intermediate frames to enhance video quality, have been highly developed in the past few years. This paper investigates the adversarial robustness of VIF models. We apply adversarial attacks to VIF models and find that the VIF models are very vulnerable to adversarial examples. To improve attack efficiency, we suggest to make full use of the property of video frame interpolation task. The intuition is that the gap between adjacent frames would be small, leading to the corresponding adversarial perturbations being similar as well. Then we propose a novel attack method named Inter-frame Accelerate Attack (IAA) that initializes the perturbation as the perturbation for the previous adjacent frame and reduces the number of attack iterations. It is shown that our method can improve attack efficiency greatly while achieving comparable attack performance with traditional methods. Besides, we also extend our method to video recognition models which are higher level vision tasks and achieves great attack efficiency.

1. Introduction

Deep Neural Networks (DNNs) have been shown the vulnerability against the adversarial examples, which are added imperceptible small perturbations. Recent years, the adversarial robustness of higher level and some lower level vision scenarios such as image classification(Goodfellow et al., 2014; Madry et al., 2017), semantic segmentation and object detection(Xie et al., 2017) and super-resolution(Choi et al., 2019) has been investigated.

Meanwhile, video frame interpolation (VFI), a lower level vision task, has emerged as a popular research field in recent years, aiming to achieve video temporal super-resolution by generating smooth transitions between consecutive frames. At the beginning, most algorithms concentrate on motion estimation and motion-compensate frame interpolation, such as (Ha et al., 2004; Choi et al., 2007; Kang et al., 2007). And the quality of motion estimation determines the performance of video frame interpolation results. With the development of deep learning, various deep learning-based VIF methods emerges(Bao et al., 2019; Choi et al., 2020; Lee et al., 2020; Ding et al., 2021; Sim et al., 2021; Li et al., 2020). Although there are many VIF algorithms based on deep learning, their robustness to adversarial attack has not been investigated.

As mentioned above, while most researches focus on image classification and processing models, several video attacks(Wei et al., 2019; Chen et al., 2021, 2022) are also proposed recently. However, existing video attack methods cannot be applied directly to VIF models since attacking VIF models requires attacker to destruct most of the synthesized intermediate frames in the videos. In this paper, we first evaluate the adversarial robustness of VIF models. We propose a PGD-based attack for VIF models. We generate invisible adversarial perturbations for the previous and next one frame of the intermediate frame which can lead to a great degradation in the quality of synthesized intermediate frames.

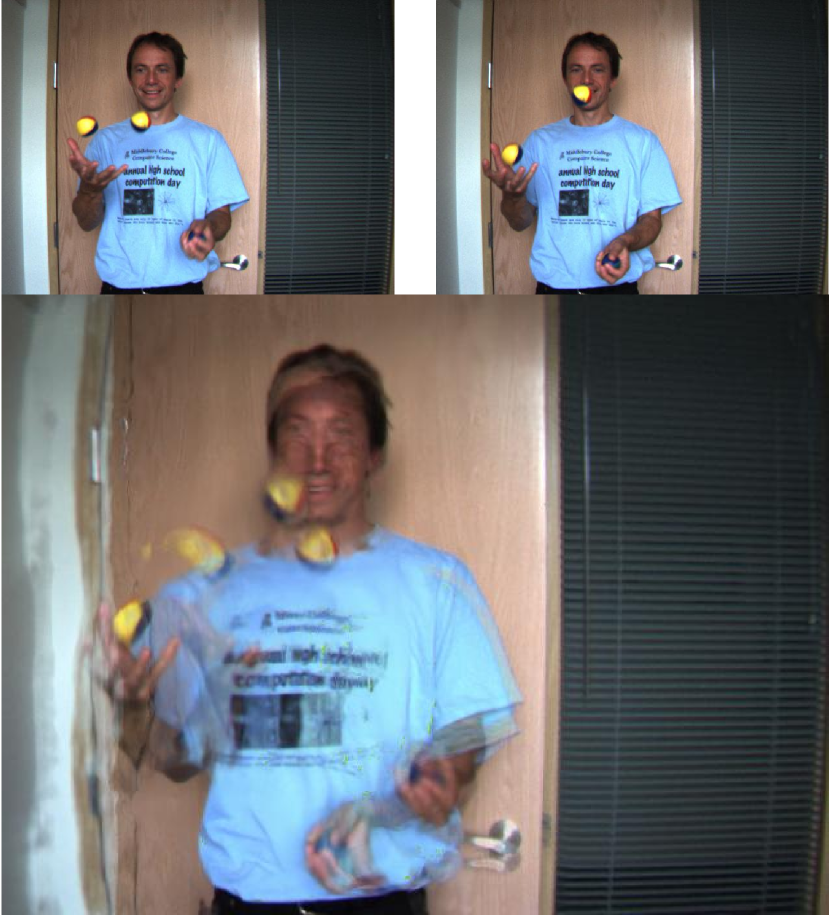

Ground truth

However, even if the attack achieves great performance on VIF models, the attack cost appears to be unacceptable in application scenarios due to number of frames in the videos. Thus, we proposed a novel attack for VIF models to improve attack efficiency. We find that the difference between consecutive frames in the video are very small and the corresponding adversarial perturbations are similar as well. Figure 2 shows the visualization of consecutive frames in the video and the perturbations generated by the aforementioned attack. Motivated by that, instead of initializing the adversarial perturbations for each intermediate frame as zero, we propose to initialize the perturbations as the ones for the previous frames while reduce the iteration of attack. Our experiments show that our improved attack method can greatly reduce the time to generate adversarial samples under the premise of achieving the same attack performance. Besides, we use targeted attack to pursue more destructive visual attack performance and extend the attack from VIF models to higer level tasks such as video recognition models.

Our main contributions are summarized as follows:

-

•

We apply adversarial attack to VIF models. Then we investigate a comprehensive evaluation of the adversarial robustness of the VIF models. We adopt various advanced video interpolation models based on deep learning, such as QVI(Xu et al., 2019), CAIN(Choi et al., 2020), AdaCoF(Lee et al., 2020), to evaluate the vulnerability of video interpolation models against adversarial attacks.

-

•

We propose Inter-frame Accelerate Attack (IAA) for VIF models to improve the attack efficiency. The experiments show that our proposed attack can accelerate the attack to generate adversarial samples while achieving comparable attack performance at the same time.

-

•

We further explore target attack for VIF models and extend our method to higher level vision scenario like video recognition models. The extended experiments shows the effectiveness of our proposed method.

2. Related Work

2.1. Adversarial Attack

Recent studies have researched how to generate adversarial examples for multiple tasks such as image classification and super-resolution. Szegedy et al. (Szegedy et al., 2013) first propose an optimization-based attack algorithm which shows that adversarial examples generating by adding a small amount of perturbation to the original images can fool CNNs successfully. Goodfellow et al. (Goodfellow et al., 2014) show the fast sign gradient sign method (FGSM) which shows a great performance by using the sign of gradients of the model. Kurakin et al. (Kurakin et al., 2018) further develop an iterative vserion of FGSM called I-FGSM which shows a higher performance than FGSM. Madry et al. (Madry et al., 2017) investigate a gradient-based method named projected gradient descent (PGD). Moosavi-Dezfooli et al. (Moosavi-Dezfooli et al., 2017) study the universality of adversarial examples and propose the universal attack based on images. Liu et al. (Liu et al., 2016) show the transferability of adversarial images and developed an ensemble-based algorithm. While most works are for higer level vision task, (Choi et al., 2019) evaluates the adversarial robustness of the super-resolution model which is a lower level vision task.

Besides, when all these studies above are based on images, Wei et al. (Wei et al., 2019) extend adversarial attack to videos by using the temporal propagation of perturbations. Chen et al.(Chen et al., 2021) propose appending adversarial frames for video recognition task. And Chen and Wei et al.(Chen et al., 2022) suggest a bullet-screen comments adversarial frame for specific videos. In this paper, we propose a different attack method on video interpolation which is a lower level vision task compared to other video recognition, to accelerate the generation process of adversarial examples.

2.2. Video Frame Interpolation

Video Frame interpolation has been widely studied for a long time. Long et al. (Long et al., [n. d.]) propose a CNN to synthesize the intermediate frame directly. And Meyer et al. (Meyer et al., 2015) show a phase-based video interpolation approach to combine all of the phase information. Liu et al. (Liu et al., 2017) develop a 3D optical flow across space and time to generate the intermediate frame. Other than rely on optical flow, Niklaus et al. (Niklaus et al., 2017a, b) study the kernel-based methods to synthesize pixels for intermediate frames from a large neighborhood. Then Bao et al. (Bao et al., 2019) further combine the flow-based and kernel-based approaches to achieve a more considerable performance. Xu et al. (Xu et al., 2019) take acceleration information into consideration so that the network can perform better in large-motion condition. To handle complex motion in videos, Lee et al. (Lee et al., 2020) propose adaptive collaboration of flows. Besides, Choi et al. (Choi et al., 2020) introduce channel attention to video interpolation task which performs very well. Ding et al. (Ding et al., 2021) investigate a compression-driven design for video interpolation networks and implement it based on (Lee et al., 2020). Other than focus on the size of network, Kalluri et al. (Kalluri et al., 2020) propose 3D space-time convolutions to enable end-to-end learning and inference which largely improved the efficiency of video interpolation. However, the adversarial robustness of these VIF models is not investigated yet.

3. Methodology

In this section, we introduce the adversarial attack (Basic Attack) on VIF models and our Inter-frame Accelerate Attack (IAA) method for generating adversarial examples on VIF models. Our proposed method is based on the basic attack and it can improve attack efficiency evidently.

3.1. Basic Attack

In order to make video frame interpolation models fail to generate high quality frames, we develop an attack algorithm based on Projected Gradeient Descent (PGD)(Madry et al., 2017) method, which is one of the most effective adversarial attacks for image tasks. For video frame interpolation model, we customize PGD attack by generating adversarial perturbation pairs for the previous and next one frame of the intermediate frame.

Let denote the -th frame pair of the video and the corresponding attacked frame pair , and each frame pair contains two frames which are used to synthesis the intermediate frame. From these frames and video frame interpolation models , we obtain intermediate frames and . Then our goal is to maximize the loss between ground-truth and attacked frames. We can describe the problem as the following function:

| (1) |

Then we apply the PGD algorithm to generate , which maximizes with the -norm constraint. In the process, we update the perturbation iteratively added into original images which is denoted as as the following function:

| (2) |

where represents the amount of perturbation generated by each iteration and calculates the gradient of . And denotes the -th iteration.

| (3) |

The parameter limits the amount of perturbation added into original images so that we can ensure the perturbation is invisible. And can be defined as

| (4) |

By iteratively updating , we can obtain the final adversarial example by:

| (5) |

where is the number of iterations.

3.2. Inter-frame Accelerate Attack

Non-targeted Attack. Although the basic attack (BA) method can generate imperceptible adversarial perturbations for video interpolation task, it costs too much time and computation resources to generate adversarial examples for each frame in the video. And unlike video recognition task which only need to mislead the model to output negative labels, to attack VIF models, we have to destroy every frame in the video which disables other video attacks. We propose a new method named Inter-frame Accelerate Attack (IAA) to accelerate the attack process. The intuition here is to make full use of the similarity between consecutive frames in the video. Due to the temporal continuity of adjacent frames in the video and the difference between them is often very small, it is possible for us to generate based on . Algorithm 1 shows the process of our proposed IAA method.

Let denote the perturbation added to -th input frame pair. In basic attack method, we set up the initial value for before the first iteration as zero. As can be seen in Algorithm 1, in IAA, we set the initial value as

| (6) |

By inheriting the perturbation information from the previous frame pair, it will be possible for us to reduce the amount of iteration while reaching the same attack performance. In our experiment, we halve the number of the attack iterations for input frames other than the first frame. By this way, we can save almost 50% attack time in the video frame interpolation task theoretically.

Targeted Attack. Although the basic attack and Inter-frame Accelerate Attack for VIF models can degrade the quality of generated intermediate frames, the deterioration is measured by PSNR and SSIM. We want to further explore the attack method to degrade the visual quality of intermediate frames. Targeted attack in image classification task aims to mislead the classifier to specific labels. And in super-resolution task, the targeted attack is to make the model generate images that are more similar to the target than the original ground-truth (Choi et al., 2019). Here, we apply targeted attack to VIF models so that the generated intermediate frame can be more similar to the target. To make that, we simply modify the Eq. 2 as

| (7) |

where is the target image.

3.3. Attack Transferability to Video Recognition Models

Video frame interpolation task is a lower level vision task compared to video recognition task. We further extend our attack method to higher level vision tasks such as video recognition task. For this, we divide the frames in a single video to multiple frame groups , where the number of frames in is more than the minimum required input frames for the video recognition model. Our goal is to fool the model to misclassify the video by generating adversarial perturbations based on gradients. For each group, we obtain the perturbations by:

| (8) |

where is the ground-truth label of the video, denotes the -th attack iteration and perturbation is limited within [], the same as Algorithm 1. Instead of initializing the perturbations as zeros, we use the perturbation values of the previous group which is from the same video as the initialization value.

| (9) |

where denotes the -th group frames in the video and denotes the maximum iteration of -th frame group.

(a) UCF-101,

(b) UCF-101,

(c) UCF-101,

(d) UCF-101,

(e) UCF-101,

(f) UCF-101,

(g) UCF-101,

(h) UCF-101,

(a) Groundtruth

(b) AdaCoF

(c) FLAVR

(d) CAIN

(e) RRIN

(f) CDFI

(g) QVI

(h) XVFI

4. Experiments

4.1. Experiment Settings

Datasets. We use three datasets that are widely used for video frame interpolation methods: Middlebury, Vimeo90K, and UCF-101. The UCF-101 (Soomro et al., 2012) used in our experiments is the standard dataset collected from Youtube, which contains 13,320 videos with 101 action classes covering a broad set of activities. For ease of our experiments, we streamline it and take the first 50 frames of the first video in the 101 categories, the dataset applied in our experiments contains 101 sets of data, each set of data has 50 consecutive frames. The Vimeo90K (Xue et al., 2019) is a large-scale high-quality video dataset for lower-level video processing. Our work adopts the Septuplet part of the Vimeo90K dataset, which contains 7824 videos, each of which contains 7 consecutive frames. The Middlebury (Baker et al., 2011) consists of high-resolution stereo sequences with complex geometry and pixel-accurate ground-truth disparity data. We use the Middlebury-other dataset, after excluding data containing only 2 frames. The existing dataset contains 10 groups of data, each with 9 consecutive frames.

Metrics. We use Peak Signal-to-Noise Ratio (PSNR), Structural Similarity (SSIM) and attack time to measure the robustness of the VIF models against our adversarial attacks. For different datasets and VIF models, we respectively calculate the PSNR/SSIM values between the ground truth and the generated intermediate frame. Then, the PSNR/SSIM values between the ground truth and the video frame attacked by the attacks are calculated. Besides, we assist in understanding the performance of basic attack and IAA by recording the attack time. For different VIF models, the attack time of basic attack method is recorded for 15 and 30 iterations respectively. In our experiment settings, it can be concluded that the time of IAA method is similar to that of basic attack method for 15 iterations, but far less than that of 30 iterations, so it can be judged that our proposed IAA method has good performance.

| Method | parameters | layers | class |

|---|---|---|---|

| QVI (Xu et al., 2019) | 29.2M | 81 | flow-based |

| FLAVR (Kalluri et al., 2020) | 42.1M | 37 | kernel-based |

| CAIN (Choi et al., 2020) | 42.7M | 247 | kernel-based |

| CDFI (Ding et al., 2021) | 4.9M | 128 | kernel-based |

| AdaCoF (Lee et al., 2020) | 21.8M | 59 | kernel-based |

| RRIN (Li et al., 2020) | 19.1M | 81 | flow-based |

| XVFI (Sim et al., 2021) | 5.6M | 34 | flow-based |

Video Interpolation Models. Our experiments use seven advanced deep learning-based VIF methods with various model sizes and properties, including RRIN (Li et al., 2020), FLAVR (Kalluri et al., 2020), QVI (Xu et al., 2019), CAIN (Choi et al., 2020), CDFI (Ding et al., 2021), AdaCoF (Lee et al., 2020) and XVFI (Xu et al., 2019). QVI is a flow-based model, while FLAVR, CAIN, CDFI and AdaCoF are kernel-based. And XVFI is the first method proposed for 4K videos with large motion. In the experiments, we employ the pre-trained models provided by original authors. Table 1 shows their characteristics in terms of the number of model parameters, the number of convolutional layers, and class according to (Dong et al., 2022).

Implementation details. For all our methods, we generate two adversarial perturbations for the previous and next frame of the intermediate frame. We set the and . And we set for each value. Our adversarial attack methods are implemented on the pytorch framework, and running on one Nvidia V100-32GB GPU.

4.2. Attack Performance

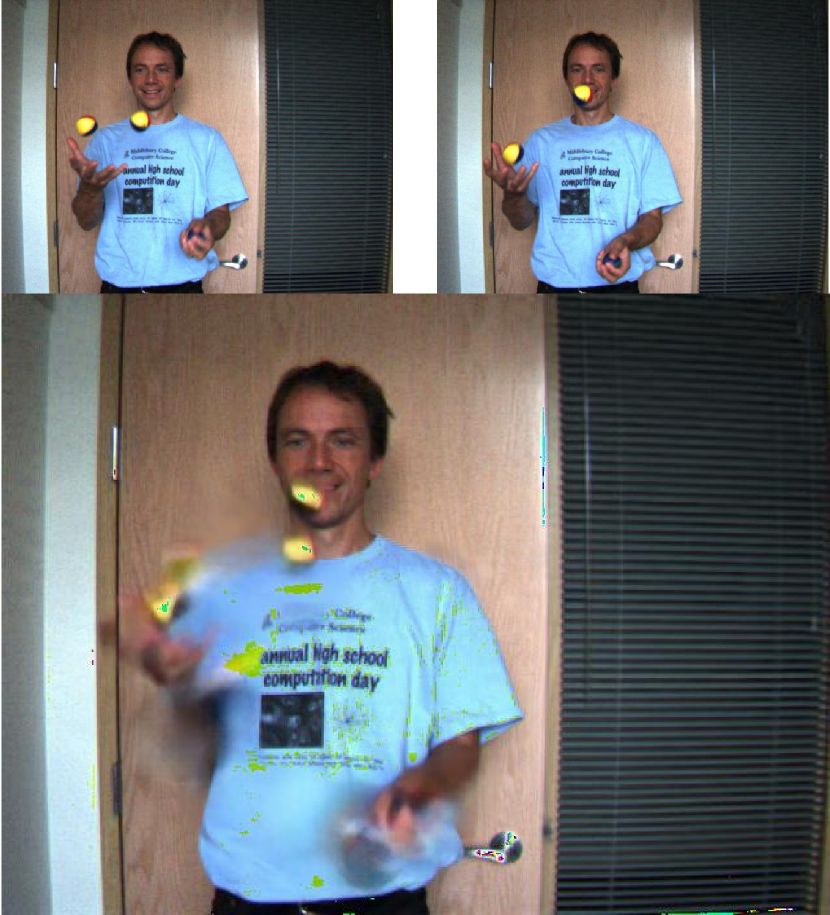

We evaluate the performance of basic attack and our proposed attack algorithm with UCF-101, Vimeo90K and Middlebury benchmark. Figure 4 shows the IAA attack performance on UCF-101 dataset in terms of PSNR and SSIM with different values. PSNR and SSIM both decreases rapidly as increases on all video interpolation models which is consistent with our intuition. For example, in terms of AdaCoF model, the PSNR/SSIM values for = 0.01 and 0.08 are 20.14/0.76 and 13.61/0.45 with our IAA method, respectively. Figure 3 shows the visualized results of attacked intermediate frames as increasing. It is noticeable that among all VIF models, FLAVR is specially vulnerable to adversarial examples. This may be contributed to its 3D space-time convolutions which contain the least convolutional layers. The statistics reveal that the VIF models are vulnerable to adversarial attacks.

When it comes to the comparison of different attack methods, Figure 4 also shows that our proposed method exhibits comparable performance to basic attack while decreasing the attack cost. When is , the quality degradation even becomes much more severe with our method which reduces the PSNR/SSIM values of most tested models except XVFI more than basic attack on UCF-101 dataset. This result is attributed to the exploitation of similarity between consecutive frames which means the adversarial perturbation inherits properties from previous frames. Figure 5 shows the visualized results of IAA attack on different video frame interpolation models. Though is only , the generated intermediate frames are wrapped so severe that it is impossible to be used in the video quality enhancement task.

Transferability. Figure 7 summarizes the transferability for deep learning-based video interpolation models on UCF-101 benchmark when = 0.02. Transferability represents the possibility that a misclassified adversarial example is also misclassified by another classifier in image classification task. In this paper, we also evaluate the transferability of video interpolation task by using adversarial examples from other source models as inputs for the target model. And we measure the PSNR and SSIM value of the output intermediate frames in the experiment. The figure 7 shows that the adversarial examples do not transfer very well between different models. But we can see that the adversarial examples generated by QVI, XVFI, CAIN and RRIN can transfer better than the rest methods. One possible reason for the poor transferability is that lower level vision tasks like video frame interpolation generalize poorly on its original task. The inherit property determines the poor transferability on the task. However, the outputs’ PSNR values are still been declined by the perturbation generated by other source models to some extent.

Relation to model size. It can be seen that the adversarial robustness of different video frame interpolation models is slightly related to their model sizes. Figure 6 shows the relationship between model size and model’s robustness in terms of the number of model parameters and convolutional layers. For example, CDFI, a compressed version based on AdaCoF, performs better than its larger version in terms of PSNR values on adversarial examples. However, for models with different network architectures, the model size and robustness of deep learning-based video interpolation models is almost irrelevant.

Attack time. The major goal of our IAA method is to improve attack efficiency for generating adversarial examples for tons of frames in the video for frame interpolation tasks. We record the time cost to obtain high-performance adversarial examples on UCF-101 dataset. Figure 8 shows that our method only spends almost half of the time to complete the attack process while achieving the same degradation on PSNR/SSIM values which means that it has become much easier for us to attack a whole video in practice.

| Target Model | Methods | PSNR | SSIM |

|---|---|---|---|

| QVI (Xu et al., 2019) | 27.56 | 0.8705 | |

| CAIN (Choi et al., 2020) | 29.46 | ||

| 0.8522 | |||

| RRIN (Li et al., 2020) | 27.17 | ||

| 0.8596 | |||

| XVFI (Sim et al., 2021) | 31.50 | ||

| 0.7202 | |||

| CDFI (Ding et al., 2021) | 31.32 | 0.8748 | |

| AdaCoF (Lee et al., 2020) | 29.55 | 0.8407 | |

| FLAVR (Kalluri et al., 2020) | 16.95 | 0.4795 | |

4.3. Ablation Study

Experiments above has shown the effectiveness of IAA on VIF models, it achieves the best attack performance on most models in terms of PSNR, SSIM and attack time. We then do the ablation study to investigate the role of our proposed improved part playing in the attack. Based on basic attack, we initialize perturbation pairs for each intermediate frame as and constrain it in . Noted that, to evaluate the contribution of IAA, the attack iteration for basic attack here is the same as IAA which is 15.

Table 2 shows the results of the attack with on UCF-101. Although each initialized perturbation in are given a value which is comparable to IAA, the attack performance is not been improved evidently. For example, on CDFI model which is a compressed version of AdaCoF, the PSNR and SSIM is 31.32 and 0.8748 which means that the generated intermediate frames are still of high quality after attack. But under the same settings, the metrics deteriorate to 19.81 and 0.7281 at the same time with IAA. In general, our proposed IAA performs much better than which shows that it is our proposed initialization method improve the attack performance.

5. Advanced topic

5.1. Targeted Attack

In our experiments, we apply white image as the target image because such target images with extreme values usually make people uncomfortable. Figure 9 shows the visualization results of the targeted attack. It is obvious that the visually attack performance for targeted attack is better than non-targeted attack. And it can be observed that the first two attack performance is relatively similar visually compared to the IAA. When it comes to IAA based attack, the visual attack performance become much better and The latter frames in the video is destroyed much more serious than the former ones visually. The same results are not observed on the other two attacks. The main reason is that for BA-based targeted attack, the attack iteration is not enough. But IAA-based targeted attack successfully inherit the information from previous ones which make up for the drawbacks of attack iteration.

Table 3 shows the comparison of PSNR and SSIM values of VIF models under targeted attacks on UCF-101. When , IAA performs the best on most VIF models except XVFI. The PSNR and SSIM results for targeted attack is similar to non-targeted attacks in which IAA achieves better attack performance while recuding the attack cost.

| Target Model | BA(T=15) | BA(T=30) | IAA | |||

|---|---|---|---|---|---|---|

| PSNR | SSIM | PSNR | SSIM | PSNR | SSIM | |

| QVI (Xu et al., 2019) | 27.95 | 0.8976 | 21.85 | 0.8631 | ||

| CAIN (Choi et al., 2020) | 26.91 | 0.8373 | 23.95 | 0.8003 | ||

| RRIN (Li et al., 2020) | 26.82 | 0.8493 | 23.50 | 0.8033 | ||

| XVFI (Sim et al., 2021) | 29.08 | 0.3185 | 28.27 | 0.3131 | ||

| CDFI (Ding et al., 2021) | 32.31 | 0.9105 | 31.20 | 0.8818 | ||

| AdaCoF (Lee et al., 2020) | 29.93 | 0.8914 | 25.96 | 0.8320 | ||

| FLAVR (Kalluri et al., 2020) | 18.46 | 0.6375 | 12.50 | 0.4154 | ||

| Methods | Target Model | ||

|---|---|---|---|

| No attack | C3D | 78.67 | 3781 |

| BA(T=15) | C3D | 27.27 | |

| BA(T=30) | C3D | 20.53 | 56923 |

| IAA | C3D | 33131 |

5.2. Attack Transferability to Video Recognition Models

We adopt C3D as the target model to attack in our experiments. The model is trained on sports-1m dataset and fine-tuned on UCF-101 training set. We evaluate the accuracy and attack time of basic attack (BA) and IAA and on UCF-101 test set. We adopt the iteration number as .

| 0 | 0.01 | 0.02 | 0.04 | 0.08 | |

|---|---|---|---|---|---|

| 78.67 | 52.02 | 24.82 | 7.48 | 4.52 | |

| 3792 | 33157 | 33501 | 33131 | 33212 |

Table 5 reveals the results of our proposed method with different on C3D. With the increase of , the attack performance improves. The classification accuracy of C3D deteriorates to when and with which means that our attack has disabled the classifier successfully. When it comes to the comparsion of basic attack and IAA method, the experiments shows IAA still performs better than basic attack. Table 4 shows the details of the attack performance in terms of different attack methods. Contributed to the inherited information from former frames, IAA’s attack performance achieves better than BA of while spending the same time as BA of . In summary, the experiments show that IAA transfers very well to video recognition models and it can successfully fool video recognition models while cost much less computation.

6. Conclusion

We first customize Projected Gradient Descent (PGD) method, a widely used adversarial attack, to deep learning-based video interpolation (VIF) models. Then we investigate the adversarial robustness of deep learning-based VIF models with different model properties. For improving attack efficiency, we propose a novel attack named Inter-frame Accelerate Attack (IAA) to accelerate the attack process on VIF models by making full use of the similarity between consecutive frames in the video. Our experiments show that VIF models are vulnerable to adversarial attacks and our proposed method IAA achieves better performance than basic attack while saving considerable computation resources. We show that targeted attack performs better visual quality degradation on VIF models. Furthermore, we show the great transferability of our proposed attack to higher level vision task such as video recognition. In other words, though our method is simple, it shows excellent performance on VIF models and great tranferability to the video recognition model.

References

- (1)

- Baker et al. (2011) Simon Baker, Daniel Scharstein, JP Lewis, Stefan Roth, Michael J Black, and Richard Szeliski. 2011. A database and evaluation methodology for optical flow. International journal of computer vision 92 (2011), 1–31.

- Bao et al. (2019) Wenbo Bao, Wei-Sheng Lai, Chao Ma, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2019. Depth-Aware Video Frame Interpolation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2019), 3698–3707.

- Chen et al. (2022) Kai Chen, Zhipeng Wei, Jingjing Chen, Zuxuan Wu, and Yu-Gang Jiang. 2022. Attacking video recognition models with bullet-screen comments. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36. 312–320.

- Chen et al. (2021) Zhikai Chen, Lingxi Xie, Shanmin Pang, Yong He, and Qi Tian. 2021. Appending adversarial frames for universal video attack. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 3199–3208.

- Choi et al. (2007) Byeong-Doo Choi, Jong-Woo Han, Chang-Su Kim, and Sung-Jea Ko. 2007. Motion-compensated frame interpolation using bilateral motion estimation and adaptive overlapped block motion compensation. IEEE Transactions on Circuits and Systems for Video Technology 17, 4 (2007), 407–416.

- Choi et al. (2019) Jun-Ho Choi, Huan Zhang, Jun-Hyuk Kim, Cho-Jui Hsieh, and Jong-Seok Lee. 2019. Evaluating robustness of deep image super-resolution against adversarial attacks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 303–311.

- Choi et al. (2020) Myungsub Choi, Heewon Kim, Bohyung Han, Ning Xu, and Kyoung Mu Lee. 2020. Channel Attention Is All You Need for Video Frame Interpolation. Proceedings of the AAAI Conference on Artificial Intelligence (AAAI) 34, 07 (2020), 10663–10671.

- Ding et al. (2021) Tianyu Ding, Luming Liang, Zhihui Zhu, and Ilya Zharkov. 2021. CDFI: Compression-Driven Network Design for Frame Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 8001–8011.

- Dong et al. (2022) Jiong Dong, Kaoru Ota, and Mianxiong Dong. 2022. Video Frame Interpolation: A Comprehensive Survey. ACM Transactions on Multimedia Computing, Communications and Applications (2022).

- Goodfellow et al. (2014) Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. 2014. Explaining and Harnessing Adversarial Examples. arXiv e-prints, Article arXiv:1412.6572 (2014).

- Ha et al. (2004) Taehyeun Ha, Seongjoo Lee, and Jaeseok Kim. 2004. Motion compensated frame interpolation by new block-based motion estimation algorithm. IEEE Transactions on Consumer Electronics 50, 2 (2004), 752–759.

- Kalluri et al. (2020) Tarun Kalluri, Deepak Pathak, Manmohan Chandraker, and Du Tran. 2020. FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation. arXiv e-prints, Article arXiv:2012.08512 (2020).

- Kang et al. (2007) Suk-Ju Kang, Kyoung-Rok Cho, and Young Hwan Kim. 2007. Motion compensated frame rate up-conversion using extended bilateral motion estimation. IEEE Transactions on Consumer Electronics 53, 4 (2007), 1759–1767.

- Kurakin et al. (2018) Alexey Kurakin, Ian J Goodfellow, and Samy Bengio. 2018. Adversarial examples in the physical world. In Artificial intelligence safety and security. 99–112.

- Lee et al. (2020) Hyeongmin Lee, Taeoh Kim, Tae-Young Chung, Daehyun Pak, Yuseok Ban, and Sangyoun Lee. 2020. AdaCoF: Adaptive Collaboration of Flows for Video Frame Interpolation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2020), 5315–5324.

- Li et al. (2020) Haopeng Li, Yuan Yuan, and Qi Wang. 2020. Video frame interpolation via residue refinement. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 2613–2617.

- Liu et al. (2016) Yanpei Liu, Xinyun Chen, Chang Liu, and Dawn Song. 2016. Delving into transferable adversarial examples and black-box attacks. arXiv preprint arXiv:1611.02770 (2016).

- Liu et al. (2017) Ziwei Liu, Raymond A. Yeh, Xiaoou Tang, Yiming Liu, and Aseem Agarwala. 2017. Video Frame Synthesis Using Deep Voxel Flow. In Proceedings of the IEEE International Conference on Computer Vision (ICCV).

- Long et al. ([n. d.]) Gucan Long, Laurent Kneip, Jose M. Alvarez, Hongdong Li, Xiaohu Zhang, and Qifeng Yu. [n. d.]. Learning Image Matching by Simply Watching Video. In Computer Vision – ECCV 2016. Cham, 434–450.

- Madry et al. (2017) Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. 2017. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083 (2017).

- Meyer et al. (2015) Simone Meyer, Oliver Wang, Henning Zimmer, Max Grosse, and Alexander Sorkine-Hornung. 2015. Phase-Based Frame Interpolation for Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Moosavi-Dezfooli et al. (2017) Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, Omar Fawzi, and Pascal Frossard. 2017. Universal adversarial perturbations. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR). 1765–1773.

- Niklaus et al. (2017a) Simon Niklaus, Long Mai, and Feng Liu. 2017a. Video Frame Interpolation via Adaptive Convolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Niklaus et al. (2017b) Simon Niklaus, Long Mai, and Feng Liu. 2017b. Video Frame Interpolation via Adaptive Separable Convolution. In Proceedings of the IEEE International Conference on Computer Vision (ICCV).

- Sim et al. (2021) Hyeonjun Sim, Jihyong Oh, and Munchurl Kim. 2021. Xvfi: extreme video frame interpolation. In Proceedings of the IEEE/CVF international conference on computer vision (ICCV). 14489–14498.

- Soomro et al. (2012) Khurram Soomro, Amir Roshan Zamir, and Mubarak Shah. 2012. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402 (2012).

- Szegedy et al. (2013) Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. 2013. Intriguing properties of neural networks. arXiv e-prints, Article arXiv:1312.6199 (2013).

- Wei et al. (2019) Xingxing Wei, Jun Zhu, Sha Yuan, and Hang Su. 2019. Sparse adversarial perturbations for videos. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Vol. 33. 8973–8980.

- Xie et al. (2017) Cihang Xie, Jianyu Wang, Zhishuai Zhang, Yuyin Zhou, Lingxi Xie, and Alan Yuille. 2017. Adversarial examples for semantic segmentation and object detection. In Proceedings of the IEEE international conference on computer vision (ICCV). 1369–1378.

- Xu et al. (2019) Xiangyu Xu, Liu Siyao, Wenxiu Sun, Qian Yin, and Ming-Hsuan Yang. 2019. Quadratic video interpolation. In NeurIPS.

- Xue et al. (2019) Tianfan Xue, Baian Chen, Jiajun Wu, Donglai Wei, and William T Freeman. 2019. Video Enhancement with Task-Oriented Flow. International Journal of Computer Vision (IJCV) 127, 8 (2019), 1106–1125.