Intelligent Home 3D: Automatic 3D-House Design from Linguistic Descriptions Only

Abstract

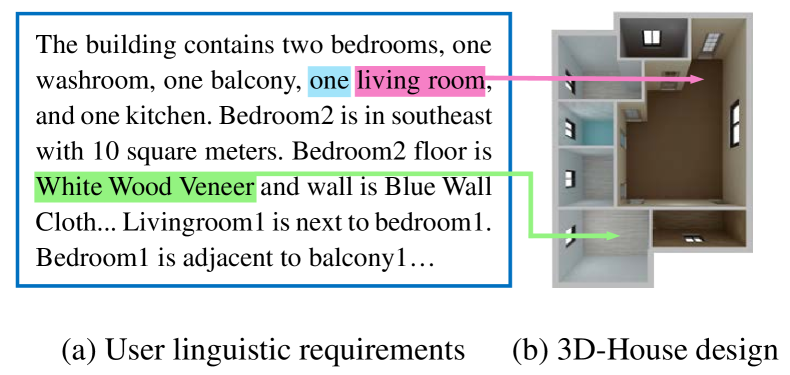

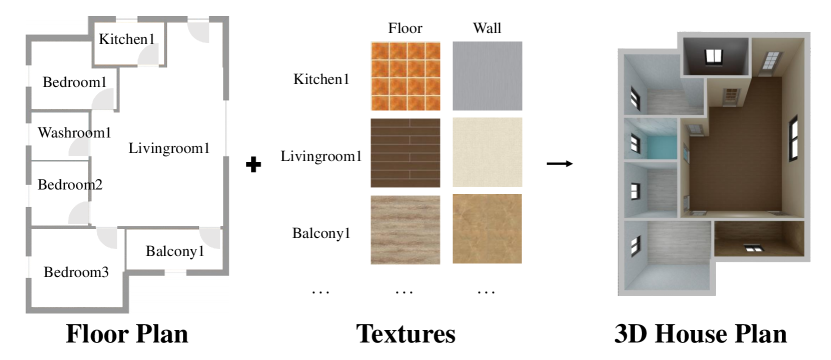

Home design is a complex task that normally requires architects to complete it with their professional skills and tools. It would be fascinating if one could produce a house plan intuitively, for example via natural language, without much knowledge of home design or experience with complex design tools. In this paper, we formulate this as a language-conditioned visual content generation problem, which we further divide into a floor plan generation task and an interior texture (such as floor and wall) synthesis task. The only control signal of the generation process is the linguistic expression given by users that describes the house details. To this end, we propose a House Plan Generative Model (HPGM) that first translates the language input into a structural graph representation, then predicts the layout of rooms with a Graph Conditioned Layout Prediction Network (GC-LPN) and generates the interior textures with a Language Conditioned Texture GAN (LCT-GAN). After some post-processing, the final product of this task is a 3D house model. To train and evaluate our model, we build the first Text–to–3D House Model dataset.

1 Introduction

Everyone wants a dream home, but not everyone can design a home by themselves. Home design is a complex task that is normally done by certified architects, who receive several years of training in design, planning and the use of specialised design tools. To design a home, they typically start by collecting a list of requirements for a building layout. Then, they use trial and error, combining intuition and prior experience, to generate layouts. This usually takes from a couple of days to several weeks and demands considerable professional knowledge.

It would be fantastic if we could design our own homes. We may lack home design knowledge and have no idea how to use complicated professional design tools, but we have strong linguistic ability to express our interests and desires. Thus, to save time and to allow people without expertise to participate in the design, we propose to use linguistic expressions as the guidance for generating home design plans, as shown in Figure 1. Thanks to the rapid development of Deep Learning (DL) [6, 9, 11, 43, 44, 48, 49, 50, 52], especially Generative Adversarial Networks (GANs) [3, 4, 8, 10] and vision-language research [13, 45], we can cast this problem as a text-to-image generation problem, which has been studied in [22, 29, 31, 41, 42]. However, it is non-trivial to directly apply these methods to our new task because of two new technical challenges: 1) A floor plan is a structured layout that emphasises the correctness of the size, direction, and connection of different blocks, while the conventional text-to-image task focuses more on pixel-level generation accuracy. 2) Interior textures such as floor and wall need neater and more stable pixel generation than general images, and should be well aligned with the given descriptions.

To tackle the above issues, we propose a House Plan Generative Model (HPGM) to generate home plans from given linguistic descriptions. The HPGM first uses a Stanford Scene Graph Parser [32] to parse the language into a structural graph layout, where nodes represent room types associated with size, room floor (wall) colour and material, and edges between nodes indicate whether rooms are connected. We then divide the house plan generation process into two sub-tasks, building layout generation and texture synthesis, both conditioned on the extracted structural graph. Specifically, we design a Graph Conditioned Layout Prediction Network (GC-LPN), which applies a Graph Convolutional Network [20] to encode the graph as a feature representation and predicts the room layouts via bounding box regression. The predicted room layouts are sent to a floor plan post-processing step, which outputs a detailed floor plan with doors, windows, walls, etc. To generate floor and wall textures, we design a Language Conditioned Texture GAN (LCT-GAN) that takes the encoded text representations as input and generates texture images with three purpose-designed losses: an adversarial loss, a material-aware loss and a colour-aware loss. The generated floor plan and texture images are sent to an automatic 3D rendering system to produce the final rendered 3D house plan.

For 3D house generation from linguistic descriptions, we build the first Text–to–3D House Model dataset, which contains a 2D floor plan and two texture (floor and wall) patches for each room in the house. We evaluate the room layout generation and texture generation abilities of our model separately. The room layout accuracy is evaluated by the IoU (Intersection over Union) between the predicted room bounding boxes and the ground-truth annotations. The generated interior textures are evaluated with popular image generation metrics, namely Fréchet Inception Distance (FID) [12] and Multi-scale Structural Similarity (MS-SSIM) [37]. Our proposed GC-LPN and LCT-GAN outperform the baseline and state-of-the-art models by a large margin. In addition, we evaluate the generalisation ability of our LCT-GAN. We also perform a human evaluation of our final products, 3D house plans, which shows that in 39.41% of the comparisons the generated plan is judged to be the human-designed one.

We highlight our principal contributions as follows:

- We propose a novel architecture, called House Plan Generative Model (HPGM), which is able to generate 3D house models from given linguistic expressions. To reduce the difficulty, we divide the generation task into two sub-tasks that generate floor plans and interior textures separately.
- To achieve the goal of synthesising 3D building models from text, we collect a new dataset consisting of building layouts, texture images, and their corresponding natural language expressions.
- Extensive experiments show the effectiveness of our proposed method on both qualitative and quantitative metrics. We also study the generalisation ability of the proposed method by generating unseen data from new texts.

2 Related Work

Building layout design.

Several methods have been proposed for generating building layouts automatically [1, 5, 24, 28, 38]. However, most of these methods generate building layouts by merely adjusting the interior edges within a given building outline. Specifically, Merrell et al. [24] generate residential building layouts using a Bayesian network trained on architectural programs. Based on an initial layout, Bao et al. [1] formulate a constrained optimisation to characterise the local shape spaces and then link them with a portal graph to obtain the objective layout. Peng et al. [28] devise a framework that yields a floor plan by tiling an arbitrarily shaped building outline with a set of deformable templates. Wu et al. [38] develop a framework that generates building interiors from high-level requirements. More recently, Wu et al. [39] propose a data-driven floor plan generation system learned from thousands of samples. However, the above methods require either a given building outline or a detailed structured representation as input, whereas we generate room layouts from human verbal commands.

Texture synthesis.

Many existing works on texture generation focus on transferring a given image into a new texture style [16, 21, 35] or synthesising a new texture image based on an input texture [7, 34, 40]. In contrast, we aim to generate texture images from given linguistic expressions. The closest alternative to our task is texture generation from random noise [2, 15]. Specifically, Jetchev et al. [15] propose a GAN-based texture synthesis method that learns a generating process from given example images. More recently, to obtain more impressive images, Bergmann et al. [2] incorporate periodic information into the generative model, which enables the model to synthesise periodic textures seamlessly. Although these methods can produce plausible images, they have limited real-world applications because their results are random and uncontrollable. We instead use natural language as the control signal for texture generation.

Text to image generation.

Many GAN-based methods [17, 23, 29, 31, 41, 42, 46, 47, 51] have been proposed for generating images from text. Reed et al. [31] transform the given sentence into a text embedding and then generate an image conditioned on the extracted embedding. Furthermore, to yield more realistic images, Zhang et al. [47] propose a hierarchical network, called StackGAN, which generates images at different sizes (from coarse to fine). They also introduce a conditioning augmentation method to avoid discontinuity in the latent manifold of the text embedding. Based on StackGAN, Xu et al. [41] develop an attention mechanism that ensures the alignment between generated fine-grained images and the corresponding word-level conditions. More recently, to preserve semantic consistency, Qiao et al. [29] consider the text-to-image and image-to-text problems jointly.

3 Proposed Method

In this paper, we focus on 3D-house generation from requirements, which seeks to design a 3D building automatically conditioned on given linguistic descriptions. Due to the intrinsic complexity of 3D-house design, we divide the generation process into two sub-tasks, building layout generation and texture synthesis, which produce a floor plan and the corresponding room features (i.e., the textures of each room), respectively.

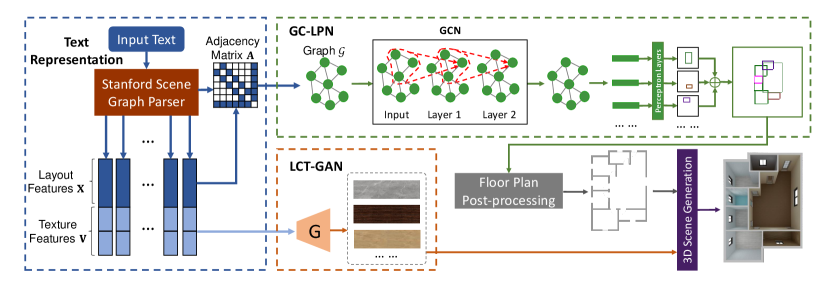

To complete the above two tasks, we propose a House Plan Generative Model (HPGM) to automatically generate a 3D home design conditioned on given descriptions. As shown in Figure 2, the proposed HPGM consists of five components: 1) text representation block, 2) graph conditioned layout prediction network (GC-LPN), 3) floor plan post-processing, 4) language conditioned texture GAN (LCT-GAN), and 5) 3D scene generation and rendering.

In Figure 2, the text representation block captures the structural text information from the given texts using a Stanford Scene Graph Parser [32]. Based on the text representations, GC-LPN produces a coarse building layout. To obtain a real-world 2D floor plan, we refine this coarse layout in a floor plan post-processing step, which yields a floor plan with windows and doors. To synthesise the interior textures of each room, we further devise a Language Conditioned Texture GAN (LCT-GAN) that yields controllable and neat images according to the semantic text representations. Finally, we feed the generated floor plan with room features into a 3D rendering system for 3D scene generation and rendering. The details of each component are described below.

3.1 Text Representation

The linguistic descriptions of a building include the number of rooms and room types, followed by the connections between rooms and the design patterns of each room. Although the descriptions follow a weakly structured format, directly using a template-based language parser is impractical due to their diversity. Instead, we employ the Stanford Scene Graph Parser [32] with some post-processing and merging to parse the linguistic descriptions into a structural graph format. In the constructed graph, each node is a room with some properties (e.g., the room type, size and interior textures), and an edge between two nodes indicates that the two rooms are connected. More details of the scene graph parser can be found in the supplementary materials.

We use different representations as inputs for building layout generation and texture synthesis, since these two tasks require different semantic information. In building layout generation, we define the input as $\mathbf{X} \in \mathbb{R}^{N \times D}$, where $N$ is the number of nodes (i.e., rooms) in each layout and $D$ denotes the feature dimension. Each node feature $\mathbf{x}_i = [\mathbf{r}_i, s_i, \mathbf{p}_i]$ is a triplet, where $\mathbf{r}_i$ is the type of room (e.g., bedroom), $s_i$ is the size (e.g., 20 square metres) and $\mathbf{p}_i$ is the position (e.g., southwest). All features are encoded as one-hot vectors except the size, which is a real value. Moreover, to fully exploit the topological information, following [20], we convert the input features into an undirected graph by introducing an adjacency matrix $\mathbf{A} \in \{0, 1\}^{N \times N}$.
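The encoding above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the room-type and position vocabularies, the helper names `one_hot` and `encode_layout`, and the ordering of the triplet inside each row are all assumptions. The example reuses the Text1 description from Section 4.2, reading its connection sentence as every room being connected to livingroom1.

```python
import numpy as np

# Hypothetical vocabularies; real ones would be collected from the dataset.
ROOM_TYPES = ["livingroom", "bedroom", "kitchen", "washroom", "study"]
POSITIONS = ["center", "north", "northeast", "east", "southeast",
             "south", "southwest", "west", "northwest"]


def one_hot(index, size):
    """Return a float32 vector with a single 1 at `index`."""
    vec = np.zeros(size, dtype=np.float32)
    vec[index] = 1.0
    return vec


def encode_layout(rooms, connections):
    """Build node features X (N x D) and a symmetric 0/1 adjacency A (N x N).

    rooms: list of (name, room_type, size_m2, position) tuples.
    connections: list of (name_a, name_b) pairs of connected rooms.
    """
    names = [name for name, _, _, _ in rooms]
    rows = []
    for _, room_type, size, position in rooms:
        type_vec = one_hot(ROOM_TYPES.index(room_type), len(ROOM_TYPES))
        pos_vec = one_hot(POSITIONS.index(position), len(POSITIONS))
        # Type and position are one-hot; the size stays a real value.
        rows.append(np.concatenate([type_vec, [size], pos_vec]))
    X = np.stack(rows).astype(np.float32)

    A = np.zeros((len(rooms), len(rooms)), dtype=np.float32)
    for a, b in connections:
        i, j = names.index(a), names.index(b)
        A[i, j] = A[j, i] = 1.0  # undirected edge
    return X, A


# Text1 from Section 4.2 (connections read as: every room joins livingroom1).
rooms = [("washroom1", "washroom", 5.0, "northeast"),
         ("bedroom1", "bedroom", 14.0, "east"),
         ("livingroom1", "livingroom", 25.0, "center"),
         ("kitchen1", "kitchen", 12.0, "west")]
connections = [("livingroom1", "washroom1"), ("livingroom1", "bedroom1"),
               ("livingroom1", "kitchen1"), ("bedroom1", "washroom1")]
X, A = encode_layout(rooms, connections)
```

Keeping the size as a raw real value mirrors the text; in practice one might also normalise it so that it is on a scale comparable to the one-hot entries.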

In the texture synthesis task, for a given text, we transform the linguistic expression into a collection of vectors $\mathbf{T} \in \mathbb{R}^{M \times K}$, where $M$ is the number of textures in each layout and $K$ denotes the dimension of each feature vector. For each $\mathbf{t}_j$, we design $\mathbf{t}_j = [\mathbf{m}_j, \mathbf{c}_j]$, where $\mathbf{m}_j$ indicates the material (e.g., log, mosaic or stone brick) and $\mathbf{c}_j$ refers to the colour. We pre-build a material and colour word vocabulary from the training data so that we can classify the parsed attributes into the material or colour set.
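A minimal sketch of the vocabulary lookup, assuming one-hot material and colour vectors concatenated into a single texture vector. The tiny vocabularies are hypothetical and only borrow words from the example description in Section 4.4; words found in neither vocabulary are simply dropped here.

```python
import numpy as np


def build_vocab(words):
    """Map each distinct attribute word to an index (sorted for determinism)."""
    return {w: i for i, w in enumerate(sorted(set(words)))}


# Hypothetical attribute words collected from training descriptions.
MATERIALS = build_vocab(["Log", "Marble", "Wall_Cloth", "Pure_Color_Wood", "Log"])
COLOURS = build_vocab(["Blue", "White", "Yellow", "Earth_color", "Black",
                       "Wood_color", "Orange"])


def encode_texture(attributes):
    """Split parsed attribute words into one-hot material and colour parts."""
    m = np.zeros(len(MATERIALS), dtype=np.float32)
    c = np.zeros(len(COLOURS), dtype=np.float32)
    for word in attributes:
        if word in MATERIALS:
            m[MATERIALS[word]] = 1.0
        elif word in COLOURS:
            c[COLOURS[word]] = 1.0
        # Words in neither vocabulary are ignored in this sketch.
    return np.concatenate([m, c])


y = encode_texture(["Blue", "Marble"])  # e.g. "washroom1 has Blue Marble floor"
```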

3.2 Graph Conditioned Layout Prediction Network

To generate building layouts that satisfy the requirements, we propose a Graph Conditioned Layout Prediction Network (GC-LPN). We incorporate the adjacency information into the extracted features via a GCN, which improves performance when generating the target layouts.

Graph convolutional network.

In order to process the aforementioned graphs in an end-to-end manner, we use a graph convolutional network (GCN) composed of two graph convolutional layers. Specifically, we take the feature matrix $\mathbf{X}$ as input and produce a new feature matrix, where each output vector is an aggregation of the local neighbourhood of its corresponding input vector. In this way, the new feature matrix incorporates information across local neighbourhoods of the inputs. Note that, since we only focus on generating the layouts of residential buildings, the order and size of the corresponding graphs (i.e., their numbers of nodes and edges) are small. Therefore, a two-layer GCN model (as shown in Figure 2) is sufficient for introducing the information of adjacent rooms. Mathematically, we have

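$$\mathbf{H} = \mathbf{A}\,\mathrm{ReLU}\left(\mathbf{A}\mathbf{X}\mathbf{W}_0\right)\mathbf{W}_1, \tag{1}$$

where $\mathbf{W}_0$ and $\mathbf{W}_1$ are the weights of the two graph convolutional layers. Note that the adjacency matrix $\mathbf{A}$ only contains 1s and 0s, which indicate whether pairs of nodes (rooms) are adjacent. $\mathbf{H} \in \mathbb{R}^{N \times D}$ is the structured feature. Then, we add the extracted feature $\mathbf{H}$ to the input feature $\mathbf{X}$ to obtain the feature

$$\mathbf{Z} = \mathbf{X} \oplus \mathbf{H}, \tag{2}$$

where “$\oplus$” is the element-wise addition.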
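A NumPy sketch of Eqs. (1) and (2), assuming the unnormalised two-layer form H = A·ReLU(A X W0)·W1 with a 0/1 adjacency matrix; the hidden width and random weights are illustrative only. Because A has no self-loops here, an isolated room receives H = 0, so the residual addition of Eq. (2) leaves its input feature unchanged.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def gcn_features(X, A, W0, W1):
    """Two graph-convolution layers plus a residual addition.

    H = A @ ReLU(A @ X @ W0) @ W1   (assumed form of Eq. 1, no normalisation)
    Z = X + H                       (Eq. 2, element-wise addition)
    W1 must map back to X's width D so that X + H is well defined.
    """
    H = A @ relu(A @ X @ W0) @ W1
    return X + H


rng = np.random.default_rng(0)
N, D, hidden = 4, 15, 32
X = rng.normal(size=(N, D))
A = np.zeros((N, N))
for i, j in [(0, 1), (1, 2), (0, 2)]:  # room 3 has no neighbours
    A[i, j] = A[j, i] = 1.0
W0 = rng.normal(scale=0.1, size=(D, hidden))
W1 = rng.normal(scale=0.1, size=(hidden, D))
Z = gcn_features(X, A, W0, W1)
```

The residual form means the GCN only has to learn a correction from neighbouring rooms on top of each room's own description.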

Bounding box regression.

After reasoning on the graph with the GCN, we obtain a set of embedding vectors, each of which aggregates information across adjacent rooms. To produce the building layout, we must transform these vectors from the graph domain to the image domain. Thus, we represent each room $i$ as a coarse 2D bounding box $\mathbf{b}_i \in \mathbb{R}^4$ given by its four box coordinates. In this way, we cast the problem as bounding box generation from the given room embedding vectors.

In practice, we first feed the feature $\mathbf{z}_i$ (the $i$-th row of $\mathbf{Z}$) into a two-layer perceptron network to predict the bounding box $\hat{\mathbf{b}}_i$ of each node $i$. Then, we integrate all the predicted boxes to obtain the corresponding building layout. To train the proposed model, we minimise the objective function

$$\mathcal{L}_{\mathrm{box}} = \frac{1}{N}\sum_{i=1}^{N} \left\| \hat{\mathbf{b}}_i - \mathbf{b}_i \right\|_2^2, \tag{3}$$

where $\mathbf{b}_i$ is the ground-truth bounding box for node $i$ (i.e., the bounding box that covers the room).
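A sketch of the box head and its training objective, assuming a ReLU hidden layer, a generic 4-d box parameterisation and a squared-L2 regression loss averaged over nodes; the exact form of Eq. (3) and the box coordinates used by GC-LPN may differ. The random matrix `Z` below merely stands in for the GCN output.

```python
import numpy as np


def mlp_boxes(Z, W1, b1, W2, b2):
    """Two-layer perceptron: one 4-d box per node embedding (ReLU assumed)."""
    hidden = np.maximum(Z @ W1 + b1, 0.0)
    return hidden @ W2 + b2


def box_loss(pred, target):
    """Squared L2 error per box, averaged over the N nodes (assumed Eq. 3)."""
    return float(np.mean(np.sum((pred - target) ** 2, axis=1)))


rng = np.random.default_rng(0)
Z = rng.normal(size=(4, 15))  # stand-in for the GCN output
W1, b1 = rng.normal(scale=0.1, size=(15, 64)), np.zeros(64)
W2, b2 = rng.normal(scale=0.1, size=(64, 4)), np.zeros(4)
pred = mlp_boxes(Z, W1, b1, W2, b2)
```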

3.3 Floor Plan Post-processing

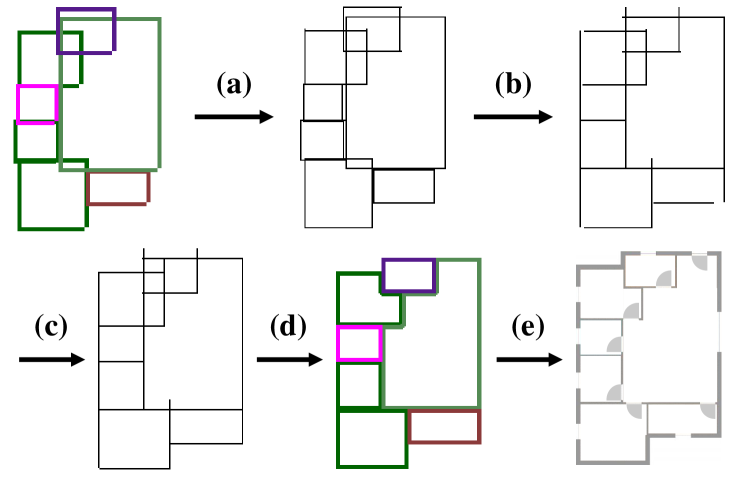

To transform the bounding box layout into a real-world 2D floor plan, we propose a floor plan post-processing procedure (shown in Figure 3) consisting of five steps, (a) to (e). Specifically, in Step (a), we first extract the boundary lines of all generated bounding boxes, and then merge adjacent segments in Step (b). In Step (c), we further align the line segments with each other to obtain closed polygons. In Step (d), we determine which room each closed polygon belongs to based on a weight function:

| (4) |

where $B_i$ is the original box (room) and $P_j$ is the aligned polygon; $W_{ij}$ denotes the weight of polygon $P_j$ belonging to room $B_i$. $(x_c^i, y_c^i)$ indicates the central position of box $B_i$, while $w_i$ and $h_i$ are its half width and half height, and $(x, y)$ are coordinates in the aligned polygon $P_j$. We assign each polygon the room type of the original bounding box with the maximum weight $W_{ij}$.
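The sketch below does not reproduce Eq. (4); instead it uses a hypothetical weight, a separable triangular kernel that equals 1 at a box centre and drops to 0 at the box border, summed over sample points of the polygon. It is only meant to illustrate the argmax assignment rule described above; `box_weight` and `assign_polygon` are illustrative names.

```python
import numpy as np


def box_weight(points, box):
    """Hypothetical stand-in for Eq. (4).

    points: (P, 2) array of (x, y) samples from the aligned polygon.
    box: (xc, yc, w, h) with w, h the half width and half height.
    """
    xc, yc, w, h = box
    kx = np.clip(1.0 - np.abs(points[:, 0] - xc) / w, 0.0, None)
    ky = np.clip(1.0 - np.abs(points[:, 1] - yc) / h, 0.0, None)
    return float(np.sum(kx * ky))


def assign_polygon(points, boxes, room_types):
    """Give the polygon the room type of the box with maximum weight."""
    weights = [box_weight(points, b) for b in boxes]
    return room_types[int(np.argmax(weights))]


boxes = [(0.0, 0.0, 2.0, 2.0), (5.0, 0.0, 2.0, 2.0)]
types = ["livingroom", "bedroom"]
polygon = np.array([[4.5, 0.0], [5.5, 0.5], [5.0, -0.5]])
label = assign_polygon(polygon, boxes, types)
```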

Finally, in Step (e), we apply a simple rule-based method to add doors and windows to rooms. Specifically, a door or open wall is added between the living room and any other room. We place a window on the longest wall of each room and place the entrance on a wall of the biggest living room. We find that these rules work in most cases and are good enough to set reasonable positions, but a learning-based method may improve this process, which we leave as future work.
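The door and window rules can be sketched as below, assuming axis-aligned rectangular rooms and reading "any other room" as any room adjacent to a living room. The `Room` class and the `place_openings` function are hypothetical; ties between equally long walls are broken by taking the first one, and the choice of which wall of the living room holds the entrance is left open.

```python
from dataclasses import dataclass


@dataclass
class Room:
    name: str
    room_type: str
    x0: float
    y0: float
    x1: float
    y1: float

    def area(self):
        return (self.x1 - self.x0) * (self.y1 - self.y0)

    def walls(self):
        """Four axis-aligned walls as ((xa, ya), (xb, yb)) segments."""
        a, b = (self.x0, self.y0), (self.x1, self.y0)
        c, d = (self.x1, self.y1), (self.x0, self.y1)
        return [(a, b), (b, c), (c, d), (d, a)]


def wall_length(wall):
    (xa, ya), (xb, yb) = wall
    return abs(xb - xa) + abs(yb - ya)  # exact for axis-aligned segments


def place_openings(rooms, adjacent_pairs):
    """Doors to living rooms, a window per room, entrance in the largest living room."""
    doors = [(a, b) for a, b in adjacent_pairs
             if "livingroom" in (rooms[a].room_type, rooms[b].room_type)]
    windows = {name: max(r.walls(), key=wall_length) for name, r in rooms.items()}
    living = [r for r in rooms.values() if r.room_type == "livingroom"]
    entrance = max(living, key=Room.area).name if living else None
    return doors, windows, entrance


rooms = {
    "livingroom1": Room("livingroom1", "livingroom", 0, 0, 5, 5),
    "bedroom1": Room("bedroom1", "bedroom", 5, 0, 8, 4),
    "kitchen1": Room("kitchen1", "kitchen", -3, 0, 0, 4),
}
doors, windows, entrance = place_openings(
    rooms, [("livingroom1", "bedroom1"), ("livingroom1", "kitchen1"),
            ("bedroom1", "kitchen1")])
```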

3.4 Language Conditioned Texture GAN

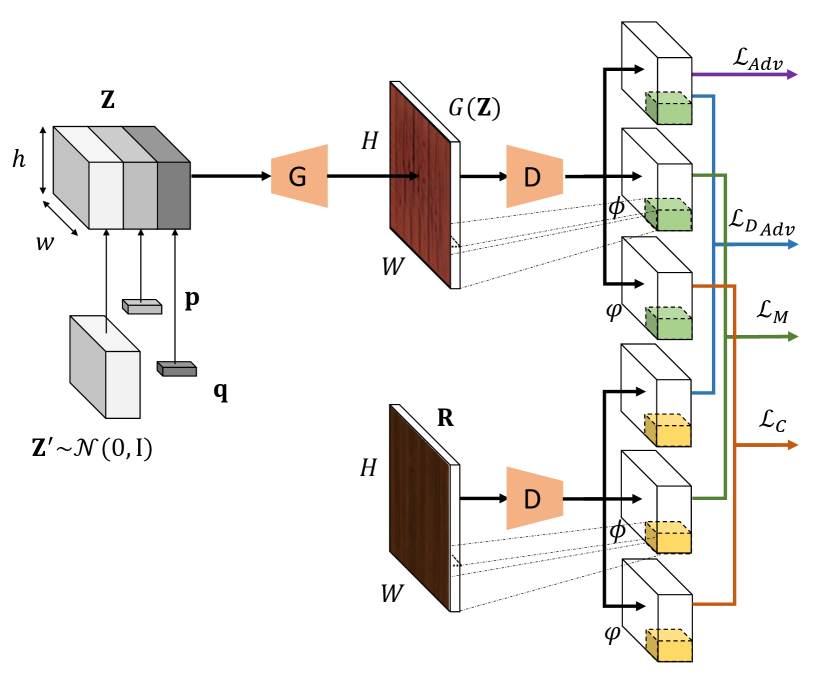

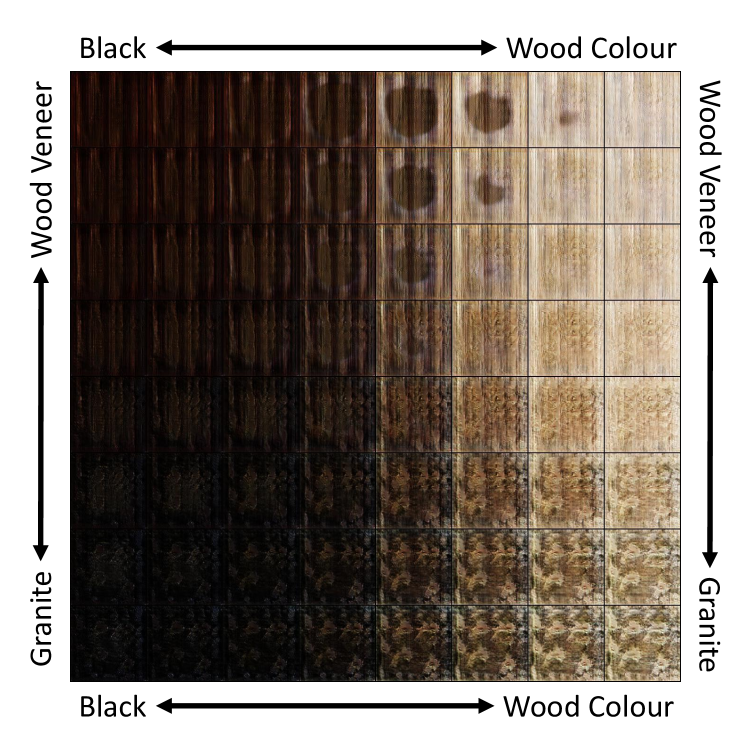

For better control over the details of textures, we describe texture images in terms of two fields, i.e., material and colour. Accordingly, we design a Language Conditioned Texture GAN (LCT-GAN) (Figure 4), which generates texture images that align with the given expressions.

Texture generator.

We first sample the input noise $\mathbf{z}$ from a Gaussian distribution $\mathcal{N}(\mathbf{0}, \mathbf{I})$. Then, to incorporate the conditional information, we expand the aforementioned material and colour vectors $\mathbf{m}$ and $\mathbf{c}$ to the same size as the noise and concatenate them together to obtain the generator input $\hat{\mathbf{z}}$.

Conditioned on the input tensor $\hat{\mathbf{z}}$, we generate the corresponding texture image $\mathbf{x}' = G(\hat{\mathbf{z}}) \in \mathbb{R}^{W \times H \times 3}$, where $W$ and $H$ denote the width and height of the generated image, respectively. Note that, in order to generate textures of arbitrary size, we design the generator as a fully convolutional network (FCN), which accepts inputs of various sizes at inference time. In practice, we build our FCN model with only five blocks, where each block consists of an upsampling interpolation, a convolutional layer, a batch normalisation layer [14] and an activation function. Due to the page limit, we put more details in the supplementary materials.
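A sketch of how the generator input might be assembled, assuming a spatial noise grid (as in PSGAN [2]) so that the conditions can be tiled over it. The grid size, channel count and 2x upsampling per block are illustrative assumptions, not values from the paper. Because the network is fully convolutional, a larger noise grid simply yields a larger texture.

```python
import numpy as np


def build_generator_input(m, c, spatial=(4, 4), noise_channels=32, seed=0):
    """Tile material/colour vectors over a noise grid and concatenate.

    m, c: 1-D one-hot material and colour vectors.
    Returns an (h, w, noise_channels + len(m) + len(c)) array.
    """
    rng = np.random.default_rng(seed)
    h, w = spatial
    z = rng.standard_normal((h, w, noise_channels))  # z ~ N(0, I)
    m_map = np.broadcast_to(m, (h, w, m.shape[0]))   # same value at every cell
    c_map = np.broadcast_to(c, (h, w, c.shape[0]))
    return np.concatenate([z, m_map, c_map], axis=-1)


def output_size(spatial, num_blocks=5, scale=2):
    """Spatial size after the FCN, assuming each block upsamples by `scale`."""
    return tuple(s * scale ** num_blocks for s in spatial)


z_hat = build_generator_input(np.eye(4)[1], np.eye(7)[2])
```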

Moreover, to generate a texture from an expression, the generator must: 1) ensure that the generated images are natural and realistic; and 2) preserve the semantic alignment between the given texts and the texture images. To satisfy these requirements, we propose an optimisation mechanism consisting of three losses, $\mathcal{L}_{adv}$, $\mathcal{L}_{m}$ and $\mathcal{L}_{c}$, which denote the adversarial loss, material-aware loss and colour-aware loss, respectively. Overall, the final objective function of the texture generator is

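$$\mathcal{L}_{G} = \mathcal{L}_{adv} + \lambda_1 \mathcal{L}_{m} + \lambda_2 \mathcal{L}_{c}, \tag{5}$$

where $\lambda_1$ and $\lambda_2$ are trade-off parameters. In experiments, we set both $\lambda_1$ and $\lambda_2$ to 1 by default. We elaborate on the modules that lead to these losses in the following paragraphs.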

Adversarial loss.

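To synthesise natural images, we follow the traditional GAN [8], where the generator $G$ and discriminator $D$ compete in a two-player minimax game. Specifically, $G$ tries to fool the discriminator, while $D$ tries to distinguish whether a given image is real or fake (generated). For our task, when optimising the discriminator $D$, we minimise the loss

$$\mathcal{L}_{D} = -\mathbb{E}_{\mathbf{x} \sim p_{\mathrm{data}}}\left[\log D(\mathbf{x})\right] - \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}}\left[\log\left(1 - D(G(\hat{\mathbf{z}}))\right)\right], \tag{6}$$

where $p_{\mathrm{data}}$ and $p_{\mathbf{z}}$ denote the distributions of real samples and noise, respectively, and $\hat{\mathbf{z}}$ is the input of $G$ which, as mentioned before, consists of the noise $\mathbf{z}$ and the conditions $\mathbf{m}$ and $\mathbf{c}$. When optimising the network $G$, we use

$$\mathcal{L}_{adv} = -\mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}}\left[\log D(G(\hat{\mathbf{z}}))\right]. \tag{7}$$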
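Both adversarial terms can be computed directly from discriminator probabilities. This NumPy sketch assumes the non-saturating generator loss -E[log D(G(z_hat))] for Eq. (7), a common practical choice suggested in [8]; a small epsilon guards against log(0).

```python
import numpy as np

EPS = 1e-12  # avoids log(0)


def discriminator_loss(d_real, d_fake):
    """-E[log D(x)] - E[log(1 - D(G(z_hat)))], from probabilities in (0, 1)."""
    real_term = -np.mean(np.log(d_real + EPS))
    fake_term = -np.mean(np.log(1.0 - d_fake + EPS))
    return float(real_term + fake_term)


def generator_adv_loss(d_fake):
    """Non-saturating generator loss -E[log D(G(z_hat))] (assumed variant)."""
    return float(-np.mean(np.log(d_fake + EPS)))
```

With the non-saturating form, the generator still receives strong gradients early in training, when the discriminator confidently rejects its samples.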

Material-aware loss.

To preserve the semantic alignment between generated textures and given texts, we propose a material-aware loss, which is sensitive to fine-grained material categories. Specifically, as mentioned in Section 3.1, we transform the linguistic descriptions into a structural format, which includes a label for each node indicating its floor/wall material category. We then add a material classifier $D_m$ on top of $D$, which pushes the generated texture into the correct category. In this way, we obtain the posterior probability $D_m(\mathbf{m} \mid \mathbf{x})$ of each input image $\mathbf{x}$, where $\mathbf{m}$ refers to the material category. Thus, we minimise the training loss of $G$ and $D$ as

$$\mathcal{L}_{m} = -\mathbb{E}_{\mathbf{x} \sim p_{\mathrm{data}}}\left[\log D_m(\mathbf{m} \mid \mathbf{x})\right] - \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}}\left[\log D_m(\mathbf{m} \mid G(\hat{\mathbf{z}}))\right]. \tag{8}$$

Colour-aware loss.

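Analogous to the material-aware loss, the colour-aware loss focuses on colour categories instead of materials. Based on the given expressions of texture colour, we cast colour alignment as a classification problem. Specifically, we reuse the discriminator $D$ as the feature extractor and replace its last layer with a colour classifier $D_c$. Then, for both $G$ and $D$, we minimise the loss

$$\mathcal{L}_{c} = -\mathbb{E}_{\mathbf{x} \sim p_{\mathrm{data}}}\left[\log D_c(\mathbf{c} \mid \mathbf{x})\right] - \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}}\left[\log D_c(\mathbf{c} \mid G(\hat{\mathbf{z}}))\right], \tag{9}$$

where $D_c(\mathbf{c} \mid \mathbf{x})$ is the posterior probability of the colour category $\mathbf{c}$ given the texture image $\mathbf{x}$.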
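The two auxiliary losses are ordinary cross-entropies on the classifier heads, applied to real and generated images, and Eq. (5) weights them against the adversarial term. The sketch below assumes integer class labels and raw logits from hypothetical material and colour heads; the defaults lambda1 = lambda2 = 1 follow the text.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)


def class_loss(logits, labels):
    """Cross-entropy -E[log D_k(label | x)] for one classifier head."""
    probs = softmax(logits)
    return float(-np.mean(np.log(probs[np.arange(len(labels)), labels])))


def aux_loss(logits_real, logits_fake, labels):
    """Material- or colour-aware loss over real and generated images."""
    return class_loss(logits_real, labels) + class_loss(logits_fake, labels)


def generator_objective(l_adv, l_m, l_c, lambda1=1.0, lambda2=1.0):
    """L_G = L_adv + lambda1 * L_m + lambda2 * L_c."""
    return l_adv + lambda1 * l_m + lambda2 * l_c
```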

3.5 3D Scene Generation and Rendering

For better visualisation of the generated floor plan with textures, we introduce a 3D scene generator followed by a photo-realistic rendering process. Given the generated floor plan and textures, as shown in Figure 5, we generate walls from the room boundaries with fixed height and thickness. We set the wall height to 2.85 m and the interior wall thickness to 120 mm. The exterior wall thickness is set to 240 mm, while each door is 900 mm long and 2000 mm high. We simply set the length of each window to 30% of the length of the wall it belongs to. In addition, we develop a photo-realistic renderer based on Intel Embree [36], an open-source collection of high-performance ray tracing kernels for x86 CPUs. The renderer is implemented with Monte Carlo path tracing. Following the rendering equation [18], the path tracer simulates real-world effects such as realistic material appearance, soft shadows, indirect lighting, ambient occlusion and global illumination. To visualise the synthetic scenes, we place a virtual camera at the front top of each scene and capture a top-view render image.
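The fixed geometry above fits in a small configuration object. This sketch only collects the stated dimensions (in metres) and derives per-wall values; `SceneConfig` and the helper functions are hypothetical names, and door/window openings are not cut out of the wall slabs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneConfig:
    """Fixed geometry stated in the text; all lengths in metres."""
    wall_height: float = 2.85
    interior_wall_thickness: float = 0.12
    exterior_wall_thickness: float = 0.24
    door_length: float = 0.90
    door_height: float = 2.00
    window_ratio: float = 0.30  # window length / length of its wall


DEFAULT = SceneConfig()


def wall_thickness(is_exterior, cfg=DEFAULT):
    return cfg.exterior_wall_thickness if is_exterior else cfg.interior_wall_thickness


def wall_extrusion(length, is_exterior, cfg=DEFAULT):
    """(length, thickness, height) of the slab extruded from one room boundary."""
    return (length, wall_thickness(is_exterior, cfg), cfg.wall_height)


def window_length(wall_len, cfg=DEFAULT):
    return cfg.window_ratio * wall_len
```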

4 Experiments

4.1 Experimental Settings

Dataset. To generate 3D building models from natural language descriptions, we collect a new dataset, which contains houses, rooms and texture images (some rooms share the same textures, so the number of texture images is smaller than the total number of rooms) with corresponding natural language descriptions. These descriptions are first generated from pre-defined templates and then refined by human workers. The average length of the description is and there are unique words. In our experiments, we use pairs for training and for testing in building layout generation. For texture synthesis, we use data for training and data for testing. More dataset analysis can be found in the supplementary materials.

Evaluation metrics. We quantitatively evaluate our model and compare it with other models in three respects: layout generation accuracy, texture synthesis performance, and the final 3D house floor plans. We measure the precision of the generated layout by Intersection-over-Union (IoU), which measures the overlap between a generated box and the ground-truth one and ranges from 0 to 1. For the evaluation of textures, we use Fréchet Inception Distance (FID) [12], where a lower value indicates better performance. In addition, to test the pair-wise similarity of generated images and identify mode collapse reliably [26], we use Multi-scale Structural Similarity (MS-SSIM) [37] for further validation. A lower score indicates higher diversity of the generated images (i.e., less mode collapse). Note that, following the settings in [46], for a fair comparison we resize all images to the same size before computing FID and MS-SSIM. For the 3D house floor plans, which are our final products, we run a human study to evaluate them.
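For reference, a minimal IoU computation for axis-aligned boxes in (x0, y0, x1, y1) form. Matching predicted and ground-truth rooms by index and averaging over rooms is an assumption; the text does not specify how the average IoU in Table 1 is aggregated.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mean_layout_iou(pred_boxes, gt_boxes):
    """Average IoU over rooms, pairing predicted and ground-truth boxes by index."""
    scores = [box_iou(p, g) for p, g in zip(pred_boxes, gt_boxes)]
    return sum(scores) / len(scores)
```

For example, two 2x2 boxes offset by one unit in each direction overlap in a 1x1 square, giving an IoU of 1 / (4 + 4 - 1) = 1/7.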

Implementation details. In practice, we set the input of LCT-GAN with , , , and . All the weights of the models (GC-LPN and LCT-GAN) are initialised from a normal distribution with zero mean and a standard deviation of . In training, we use Adam [19] with to update the parameters of both GC-LPN and LCT-GAN. We optimise our LCT-GAN to generate texture images of size with mini-batch size and learning rate .

4.2 Building Layout Generation Results

Compared methods.

We evaluate the generated layout and compare the results with baseline methods. However, there is no existing work on our proposed text-guided layout generation task, which focuses on generating building layouts directly from given linguistic descriptions. Therefore, our comparisons are mainly to ablated versions of our proposed network. The compared methods are:

MLG: In “Manual Layout Generation” (MLG), we draw the building layouts directly with a program using predefined rules, according to the given input attributes such as the type, position and size of the rooms. Specifically, we first roughly locate the central coordinates of each room based on its position. After that, we randomly pick an aspect ratio for each room, and then compute the exact height and width from the size of the room. Finally, we draw the building layouts with the centre, height, width and type of each room.
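The MLG baseline can be sketched as follows. The mapping from position words to centres, the 10 m footprint and the aspect-ratio range are hypothetical choices. The key step is recovering width and height from the room area A and a sampled aspect ratio r via w = sqrt(A·r) and h = sqrt(A/r), so that w·h = A.

```python
import math
import random

# Hypothetical position-to-centre mapping (x to the east, y to the north,
# normalised footprint [0, 1] x [0, 1]).
POSITION_CENTRES = {
    "center": (0.5, 0.5), "north": (0.5, 0.85), "south": (0.5, 0.15),
    "east": (0.85, 0.5), "west": (0.15, 0.5), "northeast": (0.85, 0.85),
    "northwest": (0.15, 0.85), "southeast": (0.85, 0.15), "southwest": (0.15, 0.15),
}


def manual_layout(rooms, footprint=10.0, aspect_range=(0.6, 1.6), seed=0):
    """Rule-based centre, random aspect ratio, width/height from the area.

    rooms: list of (room_type, area_m2, position). Returns (type, x0, y0, x1, y1).
    """
    rng = random.Random(seed)
    boxes = []
    for room_type, area, position in rooms:
        cx, cy = (v * footprint for v in POSITION_CENTRES[position])
        ratio = rng.uniform(*aspect_range)  # width / height
        width, height = math.sqrt(area * ratio), math.sqrt(area / ratio)
        boxes.append((room_type, cx - width / 2, cy - height / 2,
                      cx + width / 2, cy + height / 2))
    return boxes


boxes = manual_layout([("livingroom", 25.0, "center"), ("kitchen", 12.0, "west")])
```

Because nothing in this procedure looks at neighbouring rooms, overlaps and gaps are left uncorrected, which is one reason a learned model can do better.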

C-LPN: In “Conditional Layout Prediction Network” (C-LPN), we simply remove the GCN from our proposed model. That is, when generating building layouts, the simplified model only considers the input descriptions and ignores the information from neighbouring nodes.

RC-LPN: In “Recurrent Conditional Layout Prediction Network” (RC-LPN), we generate the bounding boxes of rooms sequentially, as in [33]. Specifically, we replace the GCN with an LSTM and predict the building layout by tracking the history of what has been generated so far.

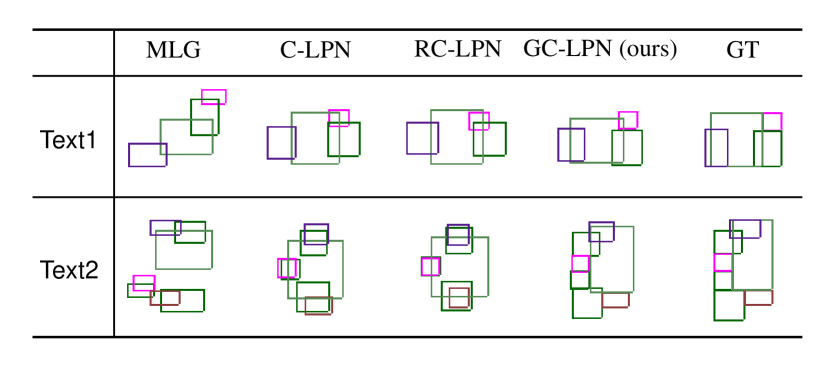

Quantitative evaluation.

| | MLG | C-LPN | RC-LPN | GC-LPN (ours) |
|---|---|---|---|---|
| IoU | 0.7208 | 0.8037 | 0.7918 | 0.8348 |

We evaluate the performance of our proposed GC-LPN by calculating the average IoU of the generated building layouts. As shown in Table 1, GC-LPN obtains the highest IoU among all methods, which implies that it locates the outline of the layout more precisely than the other approaches. Models without our graph-based representation, such as C-LPN and RC-LPN, perform worse.

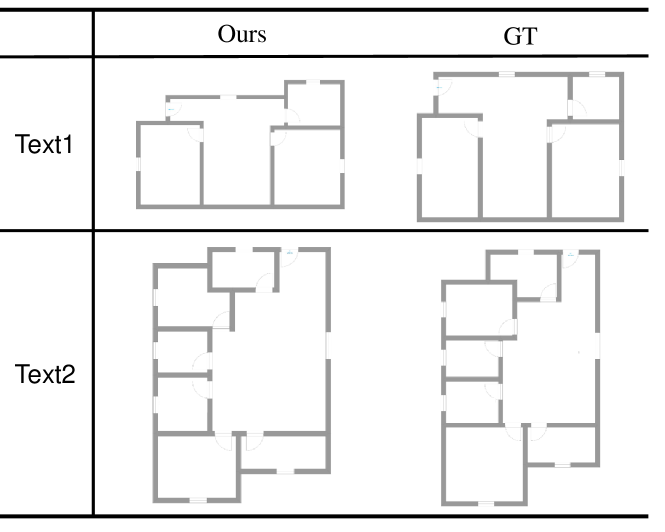

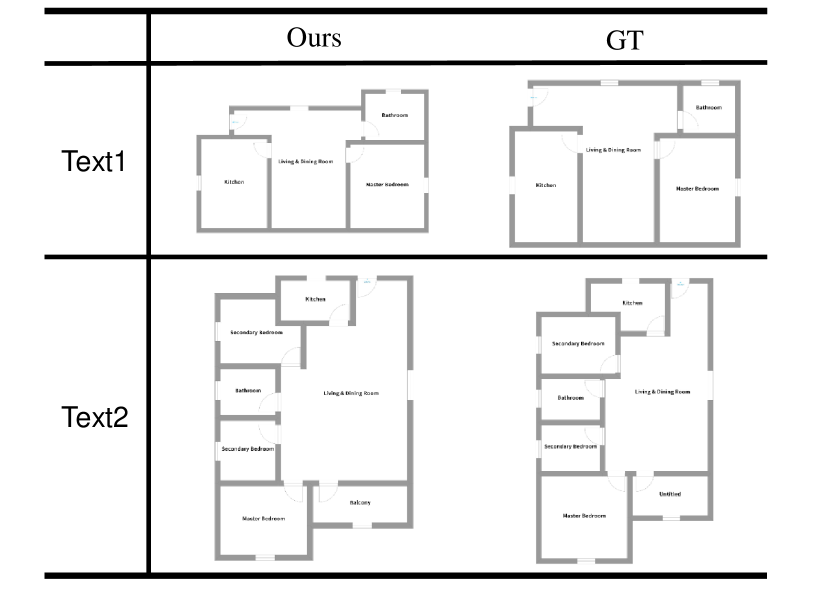

Qualitative evaluation. Moreover, we investigate the performance of our GC-LPN by visual comparison. In Figure 6, we provide two layout samples corresponding to “Text1” and “Text2”, respectively. Text1 reads: “The building contains one washroom, one bedroom, one livingroom, and one kitchen. Specifically, washroom1 has 5 squares in northeast. bedroom1 has 14 square meters in east. Besides, livingroom1 covers 25 square meters located in center. kitchen1 has 12 squares in west. bedroom1, kitchen1, washroom1 and livingroom1 are connected. bedroom1 is next to washroom1.” Due to the page limit, the content of Text2 is given in the supplementary material. The results show that, compared with the baseline methods, GC-LPN obtains more accurate layouts for both simple and complex descriptions. We also present the generated 2D floor plans after post-processing and the corresponding ground truths in Figure 7.

4.3 Texture Synthesis Results

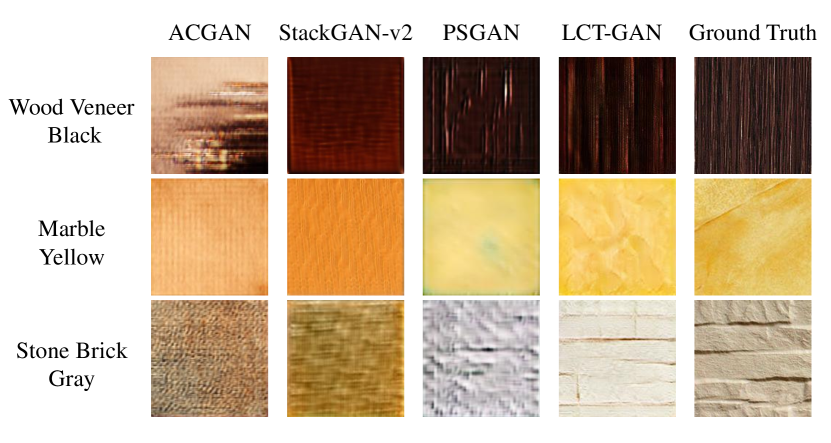

Compared methods.

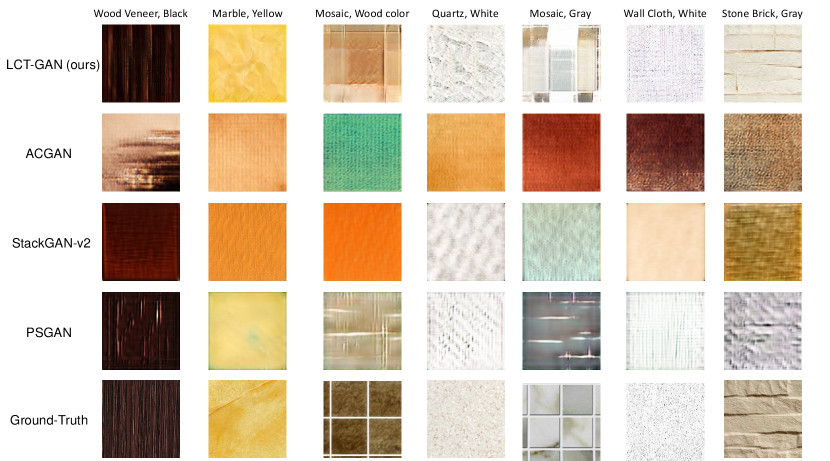

For the conditional texture generation task, we compare our method with several baselines, including ACGAN [26], StackGAN-v2 [46] and PSGAN [2]. Note that PSGAN can only generate images from random noise. Thus, to generate images in a controlled way, we design a variant of PSGAN that introduces the conditional information when synthesising the target texture, as in [25].

Quantitative evaluation. In this part, we compare the performance of different methods on our proposed dataset in terms of FID and MS-SSIM. As shown in Table 2, our LCT-GAN achieves the best FID, which implies that our method yields more photo-realistic images than the others. Moreover, LCT-GAN also obtains the lowest MS-SSIM, slightly below that of PSGAN, which is also designed specifically for texture generation (0.3944 vs. 0.4162 on the training set and 0.3859 vs. 0.4187 on the test set). This suggests that our method maintains the diversity of synthesised images while preserving realism.

| Methods | Train FID | Train MS-SSIM | Test FID | Test MS-SSIM |
|---|---|---|---|---|
| ACGAN [26] | 198.07 | 0.4584 | 220.18 | 0.4601 |
| StackGAN-v2 [46] | 182.96 | 0.6356 | 188.15 | 0.6225 |
| PSGAN [2] | 195.29 | 0.4162 | 217.12 | 0.4187 |
| LCT-GAN (ours) | 119.33 | 0.3944 | 145.16 | 0.3859 |

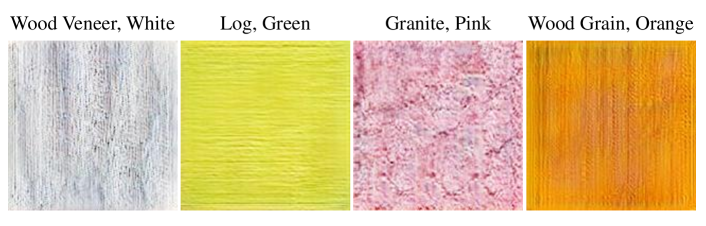

Qualitative evaluation. To further evaluate the performance of LCT-GAN, we provide several visual results of the generated textures. As shown in Figure 8, compared with the baselines, our synthesised images contain more details while preserving semantic alignment with the conditional descriptions. The results demonstrate that LCT-GAN semantically aligns the generated textures with the given texts and captures more detailed information than the other approaches. More results are provided in the supplementary material.

Ablation studies. To test the effect of each proposed loss, we conduct an ablation study that compares the results obtained when removing some losses, and report the quantitative results in Table 3. Note that a model trained with only the adversarial loss $\mathcal{L}_{adv}$ cannot yield controllable images. Thus, we combine $\mathcal{L}_{adv}$ with the other two losses (i.e., $\mathcal{L}_{m}$ and $\mathcal{L}_{c}$) to investigate their effect. The results show that, on top of $\mathcal{L}_{adv}$, both $\mathcal{L}_{m}$ and $\mathcal{L}_{c}$ improve performance. Using all three losses in our model yields the best results on both FID and MS-SSIM.

| Model | Losses | Train FID | Train MS-SSIM | Test FID | Test MS-SSIM |
|---|---|---|---|---|---|
| LCT-GAN | | 134.06 | 0.4189 | 157.01 | 0.4191 |
| LCT-GAN | | 134.61 | 0.4310 | 158.20 | 0.4263 |
| LCT-GAN | $\mathcal{L}_{adv}$ + $\mathcal{L}_{m}$ + $\mathcal{L}_{c}$ | 119.33 | 0.3944 | 145.16 | 0.3859 |

Generalisation ability. In this section, we conduct two experiments to verify the generalisation ability of our proposed method. We first investigate the landscape of the latent space. Following the setting in [30], we linearly interpolate between two input embeddings and feed the interpolations into the generator $G$. As shown in Figure 9, the generated textures change smoothly as the input semantics (i.e., material or colour) vary. Second, to further evaluate the generalisation ability of our LCT-GAN, we feed some novel descriptions, which are unlikely to be seen in the real world, into the generator $G$. As shown in Figure 10, even with such challenging semantic settings, our method is still able to generate meaningful texture images. Both experiments suggest that LCT-GAN generalises well to novel/unseen images rather than simply memorising the data in the training set.
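The interpolation experiment amounts to sampling points on a straight line between two condition vectors. A minimal sketch, assuming the conditions are the concatenated material/colour vectors and that the noise is held fixed while only the condition varies; `interpolate_conditions` is a hypothetical helper.

```python
import numpy as np


def interpolate_conditions(cond_a, cond_b, steps=5):
    """Linearly interpolate between two condition embeddings.

    Returns a (steps, dim) array whose first/last rows equal cond_a/cond_b;
    each row would be combined with the same noise and fed to G.
    """
    alphas = np.linspace(0.0, 1.0, steps)[:, None]
    return (1.0 - alphas) * cond_a[None, :] + alphas * cond_b[None, :]


# e.g. moving between two one-hot material codes
path = interpolate_conditions(np.array([1.0, 0.0, 0.0, 0.0]),
                              np.array([0.0, 1.0, 0.0, 0.0]), steps=5)
```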

4.4 3D House Design

Qualitative results.

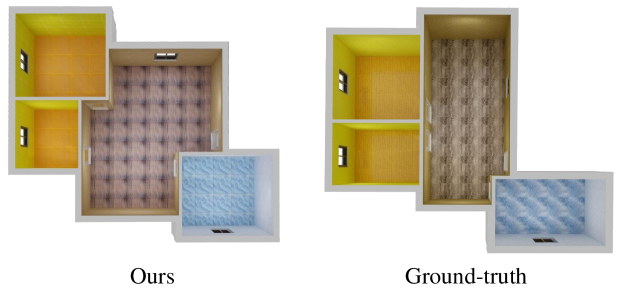

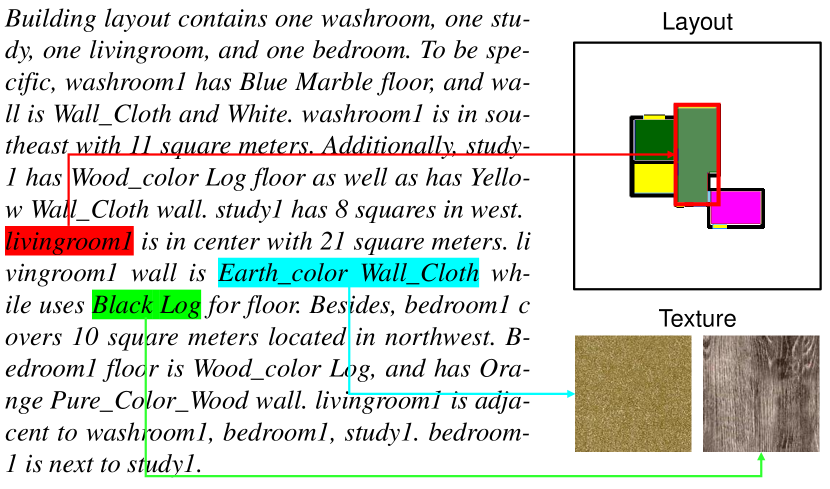

For qualitative evaluation, we present the 3D house plans (Figure 11) generated by our HPGM and their ground-truth counterparts, where the floor plan and corresponding room textures are drawn by architects. The conditional text is: “Building layout contains one washroom, one study, one livingroom, and one bedroom. To be specific, washroom1 has Blue Marble floor, and wall is Wall_Cloth and White. washroom1 is in southeast with 11 square meters. Additionally, study1 has Wood_color Log floor as well as has Yellow Wall_Cloth wall. study1 has 8 squares in west. livingroom1 is in center with 21 square meters. livingroom1 wall is Earth_color Wall_Cloth while uses Black Log for floor. Besides, bedroom1 covers 10 square meters located in northwest. bedroom1 floor is Wood_color Log, and has Orange Pure_Color_Wood wall. livingroom1 is adjacent to washroom1, bedroom1, study1. bedroom1 is next to study1.” Our method is able to produce competitive visual results, even compared with the human-made plans. More results are provided in the supplementary material.

| | HPGM (ours) | Human | Tie |
|---|---|---|---|
| Choice (%) | 39.41 | 47.94 | 12.65 |

Human study. Since automatic metrics cannot fully evaluate the performance of our method, we perform a human study on the house plans. Inspired by [23, 27], we conduct a pairwise comparison between HPGM and human designers, using pairs of house plans with their corresponding descriptions. We ask human subjects (university students) to identify which plan in each pair was designed by a human, and calculate the percentage of each choice as the final metric. As shown in Table 4, 39.41% of the generated samples pass the test, i.e., they are judged to be the human-designed plan, which implies that the machine-generated plans are often realistic enough to confuse the evaluators.

5 Conclusion

3D house generation from linguistic descriptions is non-trivial due to its intrinsic complexity. In this paper, we propose a novel House Plan Generative Model (HPGM) that divides the generation process into two sub-tasks: building layout generation and texture synthesis. To tackle these sub-tasks, we propose two modules, GC-LPN and LCT-GAN, which produce the floor plan and the corresponding interior textures, respectively, from the given descriptions. To verify the effectiveness of our method, we conduct a series of experiments, including quantitative and qualitative evaluations, an ablation study and a human study. The results show that our method outperforms the compared methods. With further refinement, we believe this approach can become a practical application.

References

- [1] Fan Bao, Dong-Ming Yan, Niloy J Mitra, and Peter Wonka. Generating and exploring good building layouts. ACM Transactions on Graphics (TOG), 32(4):122, 2013.

- [2] Urs Bergmann, Nikolay Jetchev, and Roland Vollgraf. Learning texture manifolds with the periodic spatial GAN. In Proc. Int. Conf. Mach. Learn., pages 469–477, 2017.

- [3] Jiezhang Cao, Yong Guo, Qingyao Wu, Chunhua Shen, Junzhou Huang, and Mingkui Tan. Adversarial learning with local coordinate coding. In Proc. Int. Conf. Mach. Learn., 2018.

- [4] Jiezhang Cao, Langyuan Mo, Yifan Zhang, Kui Jia, Chunhua Shen, and Mingkui Tan. Multi-marginal Wasserstein GAN. In Proc. Advances in Neural Inf. Process. Syst., pages 1774–1784, 2019.

- [5] Stanislas Chaillou. ArchiGAN: A generative stack for apartment building design. 2019.

- [6] Peihao Chen, Chuang Gan, Guangyao Shen, Wenbing Huang, Runhao Zeng, and Mingkui Tan. Relation attention for temporal action localization. IEEE Trans. Multimedia, 2019.

- [7] Leon Gatys, Alexander S Ecker, and Matthias Bethge. Texture synthesis using convolutional neural networks. In Proc. Advances in Neural Inf. Process. Syst., pages 262–270, 2015.

- [8] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. In Proc. Advances in Neural Inf. Process. Syst., pages 2672–2680, 2014.

- [9] Yong Guo, Jian Chen, Jingdong Wang, Qi Chen, Jiezhang Cao, Zeshuai Deng, Yanwu Xu, and Mingkui Tan. Closed-loop matters: Dual regression networks for single image super-resolution. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2020.

- [10] Yong Guo, Qi Chen, Jian Chen, Qingyao Wu, Qinfeng Shi, and Mingkui Tan. Auto-embedding generative adversarial networks for high resolution image synthesis. IEEE Trans. Multimedia, 2019.

- [11] Yong Guo, Yin Zheng, Mingkui Tan, Qi Chen, Jian Chen, Peilin Zhao, and Junzhou Huang. NAT: Neural architecture transformer for accurate and compact architectures. In Proc. Advances in Neural Inf. Process. Syst., pages 735–747, 2019.

- [12] Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In Proc. Advances in Neural Inf. Process. Syst., pages 6626–6637, 2017.

- [13] Deng Huang, Peihao Chen, Runhao Zeng, Qing Du, Mingkui Tan, and Chuang Gan. Location-aware graph convolutional networks for video question answering. In Proc. Conf. AAAI, 2020.

- [14] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proc. Int. Conf. Mach. Learn., 2015.

- [15] Nikolay Jetchev, Urs Bergmann, and Roland Vollgraf. Texture synthesis with spatial generative adversarial networks. arXiv preprint arXiv:1611.08207, 2016.

- [16] Justin Johnson, Alexandre Alahi, and Li Fei-Fei. Perceptual losses for real-time style transfer and super-resolution. In Proc. Eur. Conf. Comp. Vis., pages 694–711. Springer, 2016.

- [17] Justin Johnson, Agrim Gupta, and Li Fei-Fei. Image generation from scene graphs. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 1219–1228, 2018.

- [18] James T Kajiya. The rendering equation. In ACM SIGGRAPH Computer Graphics, volume 20, pages 143–150. ACM, 1986.

- [19] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Proc. Int. Conf. Learn. Representations, 2015.

- [20] Thomas N Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In Proc. Int. Conf. Learn. Representations, 2017.

- [21] Chuan Li and Michael Wand. Precomputed real-time texture synthesis with Markovian generative adversarial networks. In Proc. Eur. Conf. Comp. Vis., pages 702–716. Springer, 2016.

- [22] Wenbo Li, Pengchuan Zhang, Lei Zhang, Qiuyuan Huang, Xiaodong He, Siwei Lyu, and Jianfeng Gao. Object-driven text-to-image synthesis via adversarial training. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 12174–12182, 2019.

- [23] Yitong Li, Zhe Gan, Yelong Shen, Jingjing Liu, Yu Cheng, Yuexin Wu, Lawrence Carin, David Carlson, and Jianfeng Gao. StoryGAN: A sequential conditional GAN for story visualization. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 6329–6338, 2019.

- [24] Paul Merrell, Eric Schkufza, and Vladlen Koltun. Computer-generated residential building layouts. In ACM Transactions on Graphics (TOG), volume 29, page 181. ACM, 2010.

- [25] Mehdi Mirza and Simon Osindero. Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784, 2014.

- [26] Augustus Odena, Christopher Olah, and Jonathon Shlens. Conditional image synthesis with auxiliary classifier GANs. In Proc. Int. Conf. Mach. Learn., pages 2642–2651, 2017.

- [27] Yingwei Pan, Zhaofan Qiu, Ting Yao, Houqiang Li, and Tao Mei. To create what you tell: Generating videos from captions. In Proc. ACM Int. Conf. Multimedia, pages 1789–1798, 2017.

- [28] Chi-Han Peng, Yong-Liang Yang, and Peter Wonka. Computing layouts with deformable templates. ACM Transactions on Graphics (TOG), 33(4):99, 2014.

- [29] Tingting Qiao, Jing Zhang, Duanqing Xu, and Dacheng Tao. MirrorGAN: Learning text-to-image generation by redescription. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 1505–1514, 2019.

- [30] Alec Radford, Luke Metz, and Soumith Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. In Proc. Int. Conf. Learn. Representations, 2016.

- [31] Scott Reed, Zeynep Akata, Xinchen Yan, Lajanugen Logeswaran, Bernt Schiele, and Honglak Lee. Generative adversarial text to image synthesis. In Proc. Int. Conf. Mach. Learn., 2016.

- [32] Sebastian Schuster, Ranjay Krishna, Angel Chang, Li Fei-Fei, and Christopher D Manning. Generating semantically precise scene graphs from textual descriptions for improved image retrieval. In Proceedings of the Fourth Workshop on Vision and Language, pages 70–80, 2015.

- [33] Fuwen Tan, Song Feng, and Vicente Ordonez. Text2Scene: Generating compositional scenes from textual descriptions. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 6710–6719, 2019.

- [34] Matthew Tesfaldet, Marcus A Brubaker, and Konstantinos G Derpanis. Two-stream convolutional networks for dynamic texture synthesis. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 6703–6712, 2018.

- [35] Dmitry Ulyanov, Andrea Vedaldi, and Victor Lempitsky. Improved texture networks: Maximizing quality and diversity in feed-forward stylization and texture synthesis. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 6924–6932, 2017.

- [36] Ingo Wald, Sven Woop, Carsten Benthin, Gregory S Johnson, and Manfred Ernst. Embree: A kernel framework for efficient CPU ray tracing. ACM Transactions on Graphics (TOG), 33(4):143, 2014.

- [37] Zhou Wang, Eero P Simoncelli, and Alan C Bovik. Multiscale structural similarity for image quality assessment. In Proc. Thirty-Seventh Asilomar Conf. Signals, Systems & Computers, volume 2, pages 1398–1402. IEEE, 2003.

- [38] Wenming Wu, Lubin Fan, Ligang Liu, and Peter Wonka. MIQP-based layout design for building interiors. In Computer Graphics Forum, volume 37, pages 511–521. Wiley Online Library, 2018.

- [39] Wenming Wu, Xiao-Ming Fu, Rui Tang, Yuhan Wang, Yu-Hao Qi, and Ligang Liu. Data-driven interior plan generation for residential buildings. ACM Transactions on Graphics (SIGGRAPH Asia), 38(6), 2019.

- [40] Wenqi Xian, Patsorn Sangkloy, Varun Agrawal, Amit Raj, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. TextureGAN: Controlling deep image synthesis with texture patches. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 8456–8465, 2018.

- [41] Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, and Xiaodong He. AttnGAN: Fine-grained text to image generation with attentional generative adversarial networks. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2018.

- [42] Guojun Yin, Bin Liu, Lu Sheng, Nenghai Yu, Xiaogang Wang, and Jing Shao. Semantics disentangling for text-to-image generation. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 2327–2336, 2019.

- [43] Runhao Zeng, Chuang Gan, Peihao Chen, Wenbing Huang, Qingyao Wu, and Mingkui Tan. Breaking winner-takes-all: Iterative-winners-out networks for weakly supervised temporal action localization. IEEE Trans. Image Process., 28(12):5797–5808, 2019.

- [44] Runhao Zeng, Wenbing Huang, Mingkui Tan, Yu Rong, Peilin Zhao, Junzhou Huang, and Chuang Gan. Graph convolutional networks for temporal action localization. In Proc. IEEE Int. Conf. Comp. Vis., Oct 2019.

- [45] Runhao Zeng, Haoming Xu, Wenbing Huang, Peihao Chen, Mingkui Tan, and Chuang Gan. Dense regression network for video grounding. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., June 2020.

- [46] Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaogang Wang, Xiaolei Huang, and Dimitris Metaxas. StackGAN++: Realistic image synthesis with stacked generative adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell., 2018.

- [47] Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaogang Wang, Xiaolei Huang, and Dimitris N Metaxas. StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks. In Proc. IEEE Int. Conf. Comp. Vis., 2017.

- [48] Yifan Zhang, Hanbo Chen, Ying Wei, Peilin Zhao, Jiezhang Cao, et al. From whole slide imaging to microscopy: Deep microscopy adaptation network for histopathology cancer image classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 360–368. Springer, 2019.

- [49] Yifan Zhang, Ying Wei, Peilin Zhao, Shuaicheng Niu, Qingyao Wu, Mingkui Tan, and Junzhou Huang. Collaborative unsupervised domain adaptation for medical image diagnosis. In Medical Imaging meets NeurIPS, 2019.

- [50] Yifan Zhang, Peilin Zhao, Qingyao Wu, Bing Li, Junzhou Huang, and Mingkui Tan. Cost-sensitive portfolio selection via deep reinforcement learning. IEEE Trans. Knowl. Data Eng., 2020.

- [51] Minfeng Zhu, Pingbo Pan, Wei Chen, and Yi Yang. DM-GAN: Dynamic memory generative adversarial networks for text-to-image synthesis. In Proc. IEEE Conf. Comp. Vis. Patt. Recogn., pages 5802–5810, 2019.

- [52] Zhuangwei Zhuang, Mingkui Tan, Bohan Zhuang, Jing Liu, Yong Guo, Qingyao Wu, Junzhou Huang, and Jinhui Zhu. Discrimination-aware channel pruning for deep neural networks. In Proc. Advances in Neural Inf. Process. Syst., pages 875–886, 2018.

Intelligent Home 3D: Automatic 3D-House Design from Linguistic Descriptions Only

(Supplementary Material)

Appendix A Details of Scene Graph Parser

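Given a series of linguistic requirements, we extract the corpus from the dataset and then construct scene graphs from the corresponding expressions, following [32]. First, we divide the words in the corpus into three categories: objects $\mathcal{O}$, relations $\mathcal{R}$ and attributes $\mathcal{A}$. Given an input sentence $S$, we convert it into a scene graph $G(S) = (V(S), E(S))$, where $V(S) = \{v_1, \dots, v_m\}$ is the set of objects, together with their attributes, mentioned in $S$. Specifically, each $v_i$ consists of $o_i$ and $A_i$ (i.e., $v_i = (o_i, A_i)$), where $o_i \in \mathcal{O}$ denotes the object of $v_i$ and $A_i \subseteq \mathcal{A}$ denotes the attributes of $o_i$. $E(S) \subseteq V(S) \times \mathcal{R} \times V(S)$ denotes the set of relations between pairs of objects, where each relation is a triple $e_{ij} = (v_i, r_{ij}, v_j)$ with $r_{ij} \in \mathcal{R}$.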
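The scene-graph definition above (object nodes carrying attribute sets, plus relation triples between nodes) maps naturally onto two small data structures. The following is a minimal sketch, not the authors' implementation; the class names, the index-based triples and the relation vocabulary `{"has"}` are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class SceneObject:
    """An object node: an object word plus the attribute words attached to it."""
    category: str
    attributes: FrozenSet[str] = frozenset()


@dataclass
class SceneGraph:
    """Object nodes plus (subject index, relation word, object index) triples."""
    objects: List[SceneObject] = field(default_factory=list)
    relations: List[Tuple[int, str, int]] = field(default_factory=list)

    def add_relation(self, i: int, relation: str, j: int, relation_vocab: Set[str]) -> None:
        """Append the triple (objects[i], relation, objects[j]) after validating it."""
        if relation not in relation_vocab:
            raise ValueError(f"{relation!r} is not in the relation vocabulary")
        n = len(self.objects)
        if not (0 <= i < n and 0 <= j < n):
            raise IndexError("relation endpoints must refer to existing objects")
        self.relations.append((i, relation, j))


# Usage with a hypothetical relation vocabulary {"has"}.
graph = SceneGraph(objects=[
    SceneObject("livingroom1"),
    SceneObject("floor", frozenset({"Black", "Log"})),
])
graph.add_relation(0, "has", 1, relation_vocab={"has"})
print(graph.relations)  # [(0, 'has', 1)]
```

Storing relations as index triples keeps each relation tied to a specific node, which matters once several rooms each have their own "floor" or "wall" node.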

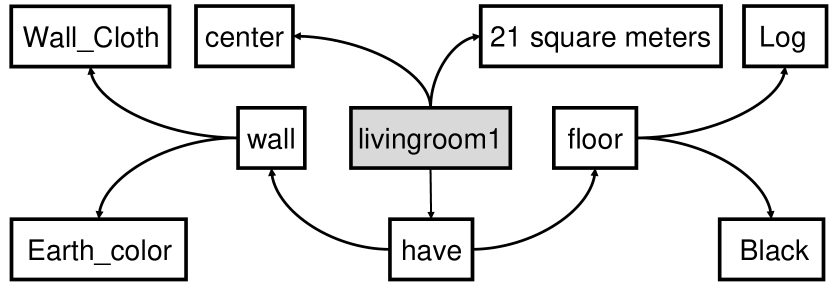

Scene graph of each room.

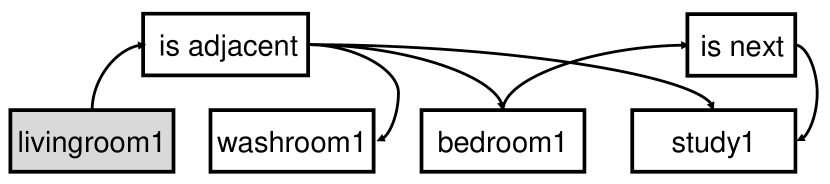

For example, for a given room (e.g., “livingroom1”), we have the linguistic descriptions “livingroom1 is in center with 21 square meters” and “livingroom1 wall is Earth_color Wall_Cloth while uses Black Log for floor”. We first transform the first description into an object node for “livingroom1” and its corresponding relation. From the second description, we extract three objects (the room, its wall and its floor) and two relations linking the room to its wall and to its floor. Since the two resulting graphs share the same object (i.e., “livingroom1”), we merge them into a single graph. This yields the final scene graph of the room, shown in Figure A.
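The merge step identifies nodes that name the same object and takes the union of their attributes and relations. The sketch below is a minimal illustration under stated assumptions: graphs are hypothetical `(objects, relations)` pairs keyed by object name, the relation word `"has"` is made up, and location/area are stored as plain attribute strings. In a multi-room graph, nodes such as "wall" would need room-specific names to avoid collisions.

```python
from typing import Dict, Set, Tuple

# A graph is (object name -> attribute set, set of (subject, relation, object) triples).
Graph = Tuple[Dict[str, Set[str]], Set[Tuple[str, str, str]]]


def merge_graphs(g1: Graph, g2: Graph) -> Graph:
    """Merge two scene graphs, identifying nodes that share the same object name."""
    objects: Dict[str, Set[str]] = {name: set(attrs) for name, attrs in g1[0].items()}
    for name, attrs in g2[0].items():
        objects.setdefault(name, set()).update(attrs)  # shared node: union of attributes
    relations = set(g1[1]) | set(g2[1])  # union of relations; duplicates collapse
    return objects, relations


# Graph for "livingroom1 is in center with 21 square meters"
g1: Graph = ({"livingroom1": {"center", "21 square meters"}}, set())
# Graph for "livingroom1 wall is Earth_color Wall_Cloth while uses Black Log for floor"
g2: Graph = (
    {"livingroom1": set(), "wall": {"Earth_color", "Wall_Cloth"}, "floor": {"Black", "Log"}},
    {("livingroom1", "has", "wall"), ("livingroom1", "has", "floor")},
)
objects, relations = merge_graphs(g1, g2)
print(sorted(objects))  # ['floor', 'livingroom1', 'wall']
```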

Scene graph of adjacency between rooms.

In addition, the descriptions in our dataset also contain adjacency information between rooms, such as “livingroom1 is adjacent to washroom1, bedroom1, study1” or “bedroom1 is next to study1”. To make use of this information, we construct another scene graph that focuses on the relations among the rooms mentioned in the given sentences. For example, given these two sentences, we first transform the first one according to the aforementioned rules and obtain four objects (livingroom1, washroom1, bedroom1 and study1) and three adjacency relations linking livingroom1 to each of the other rooms. For the second sentence, the objects are bedroom1 and study1, and the relation is the adjacency between them. Since these two objects coincide with bedroom1 and study1 extracted from the first sentence, we replace them with the existing nodes, so the relation from the second sentence becomes an edge between two nodes already in the graph. Figure B visualises the resulting scene graph.
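A minimal sketch of building this room-adjacency graph, assuming only the two sentence templates quoted above ("X is adjacent to ..." and "X is next to ..."). The regular expression and function name are hypothetical and are not the paper's parser; other templates in the dataset, such as "X, Y and Z are connected", are deliberately not handled. Reusing an existing node via `setdefault` is what performs the node-replacement step described above.

```python
import re
from typing import Dict, Iterable, Set

# Hypothetical pattern for the two adjacency templates quoted in the text.
ADJ_PATTERN = re.compile(r"^(\w+) is (?:adjacent|next) to (.+?)\.?$")


def adjacency_graph(sentences: Iterable[str]) -> Dict[str, Set[str]]:
    """Build an undirected room-adjacency graph; rooms with the same name share one node."""
    graph: Dict[str, Set[str]] = {}
    for sentence in sentences:
        match = ADJ_PATTERN.match(sentence.strip())
        if match is None:
            continue  # not an adjacency statement under these two templates
        room = match.group(1)
        neighbours = [n.strip() for n in match.group(2).split(",") if n.strip()]
        for other in neighbours:
            # setdefault reuses an existing node instead of creating a duplicate.
            graph.setdefault(room, set()).add(other)
            graph.setdefault(other, set()).add(room)
    return graph


g = adjacency_graph([
    "livingroom1 is adjacent to washroom1, bedroom1, study1",
    "bedroom1 is next to study1",
])
num_edges = sum(len(v) for v in g.values()) // 2
print(num_edges)  # 4
```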

Appendix B Dataset Analysis

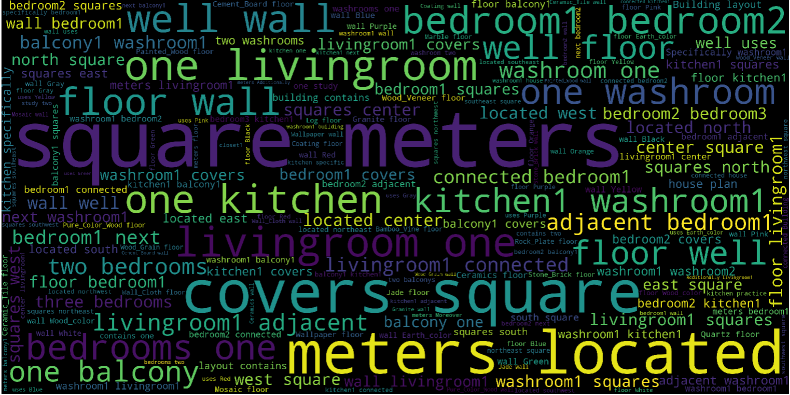

To generate 3D building models from natural language descriptions, we collect a new Text–to–3D House Model dataset, which contains houses, rooms and texture images (some rooms share the same textures, so this number is smaller than the total number of rooms) with corresponding natural language descriptions. These descriptions are first generated from pre-defined templates and then refined by human workers. The average length of the description is and there are unique words. All building layouts are designed on a canvas with the pixel size of , which represents square meters in the real world. Figure C shows an example from our dataset, and Figure D visualises the word cloud of the dataset.
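Because every layout is drawn on a fixed-size canvas that corresponds to a fixed real-world area, a room's pixel count converts to square meters by a constant factor. The exact canvas size and area are not recoverable from this text, so the sketch below takes them as parameters; the function name and the 64×64-pixel, 256 m² example values are hypothetical.

```python
def pixels_to_square_meters(pixel_count: int, canvas_w: int, canvas_h: int, canvas_area_m2: float) -> float:
    """Convert a room's pixel count on the layout canvas into square meters.

    Assumes the canvas_w x canvas_h pixel canvas covers canvas_area_m2 square meters uniformly.
    """
    if canvas_w <= 0 or canvas_h <= 0 or canvas_area_m2 <= 0:
        raise ValueError("canvas dimensions and area must be positive")
    if not 0 <= pixel_count <= canvas_w * canvas_h:
        raise ValueError("pixel_count must lie within the canvas")
    m2_per_pixel = canvas_area_m2 / (canvas_w * canvas_h)
    return pixel_count * m2_per_pixel


# Hypothetical canvas: 64 x 64 pixels covering 256 m^2, i.e. 1/16 m^2 per pixel.
print(pixels_to_square_meters(336, 64, 64, 256.0))  # 21.0
```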

Appendix C Details of Generator

In this section, we provide more details of the generator in our proposed LCT-GAN and show the detailed architecture in Table A.

Table A: Detailed architecture of the generator in LCT-GAN.

| Module | Module details | Input shape | Output shape |
|---|---|---|---|
| Upsample | Upsampling (×2) | (, h, w) | (, 2h, 2w) |
| Conv2d | kernel=(5, 5), stride=(1, 1), padding=(2, 2) | (, 2h, 2w) | (8F, 2h, 2w) |
| BN+ReLU | – | (8F, 2h, 2w) | (8F, 2h, 2w) |
| Upsample | Upsampling (×2) | (8F, 2h, 2w) | (8F, 4h, 4w) |
| Conv2d | kernel=(5, 5), stride=(1, 1), padding=(2, 2) | (8F, 4h, 4w) | (4F, 4h, 4w) |
| BN+ReLU | – | (4F, 4h, 4w) | (4F, 4h, 4w) |
| Upsample | Upsampling (×2) | (4F, 4h, 4w) | (4F, 8h, 8w) |
| Conv2d | kernel=(5, 5), stride=(1, 1), padding=(2, 2) | (4F, 8h, 8w) | (2F, 8h, 8w) |
| BN+ReLU | – | (2F, 8h, 8w) | (2F, 8h, 8w) |
| Upsample | Upsampling (×2) | (2F, 8h, 8w) | (2F, 16h, 16w) |
| Conv2d | kernel=(5, 5), stride=(1, 1), padding=(2, 2) | (2F, 16h, 16w) | (F, 16h, 16w) |
| BN+ReLU | – | (F, 16h, 16w) | (F, 16h, 16w) |
| Upsample | Upsampling (×2) | (F, 16h, 16w) | (F, 32h, 32w) |
| Conv2d | kernel=(5, 5), stride=(1, 1), padding=(2, 2) | (F, 32h, 32w) | (3, 32h, 32w) |
| Tanh | – | (3, 32h, 32w) | (3, 32h, 32w) |
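The shape column of Table A can be checked mechanically: each stage upsamples by 2, and a 5×5 convolution with stride 1 and padding 2 preserves the spatial size, since (H + 2·2 − 5)/1 + 1 = H. The sketch below traces shapes only and does not build the network; the input channel count is missing from the table, so `in_channels` is a parameter, and the example values (128 input channels, h = w = 4, F = 64) are hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def conv2d_out(size: int, kernel: int = 5, stride: int = 1, padding: int = 2) -> int:
    """Standard output-size formula for a 2-D convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_generator_shapes(in_channels: int, h: int, w: int, base: int) -> List[Shape]:
    """Follow Table A: five (Upsample x2 -> Conv2d 5x5) stages; BN+ReLU/Tanh keep the shape."""
    out_channels = [8 * base, 4 * base, 2 * base, base, 3]
    shape: Shape = (in_channels, h, w)
    shapes = [shape]
    for c_out in out_channels:
        _, hh, ww = shape
        hh, ww = 2 * hh, 2 * ww  # upsampling doubles height and width
        shape = (c_out, conv2d_out(hh), conv2d_out(ww))  # padded 5x5 conv keeps H and W
        shapes.append(shape)
    return shapes


shapes = trace_generator_shapes(in_channels=128, h=4, w=4, base=64)
print(shapes[-1])  # (3, 128, 128)
```

The final spatial size is 32h × 32w, matching the last row of the table.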

Appendix D Contents of “Text1” and “Text2”

In this section, we give the full descriptions “Text1” and “Text2” referred to in Figures 6 and 7 of the main paper. For convenience, Figure E shows the 2D floor plans corresponding to “Text1” and “Text2”, respectively.

“Text1”: The building contains one washroom, one bedroom, one livingroom, and one kitchen. Specifically, washroom1 has 5 squares in northeast. bedroom1 has 14 square meters in east. Besides, livingroom1 covers 25 square meters located in center. kitchen1 has 12 squares in west. bedroom1, kitchen1, washroom1 and livingroom1 are connected. bedroom1 is next to washroom1.

“Text2”: The house has three bedrooms, one washroom, one balcony, one livingroom, and one kitchen. In practice, bedroom1 has 13 squares in south. bedroom2 has 9 squares in north. bedroom3 covers 5 square meters located in west. washroom1 has 4 squares in west. balcony1 is in south with 6 square meters. livingroom1 covers 30 square meters located in center. kitchen1 is in north with 6 square meters. livingroom1 is adjacent to bedroom1, bedroom2, balcony1, kitchen1, bedroom3, washroom1. balcony1, bedroom3 and bedroom1 are connected. bedroom2 is next to kitchen1, washroom1. bedroom3 is adjacent to washroom1.
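The descriptions above state each room's area and location with a small set of templates ("X has N squares in L", "X covers N square meters located in L", "X is in L with N square meters"). A minimal regex sketch that recovers these specifications from "Text1" follows. The patterns and function name are hypothetical and cover only the templates visible in "Text1" and "Text2"; they are not the dataset's own tooling.

```python
import re
from typing import Dict, Tuple

# Hypothetical patterns for the area/location templates seen in "Text1" and "Text2".
AREA_FIRST = re.compile(r"(\w+) (?:has|covers) (\d+) (?:squares|square meters)(?: located)? in (\w+)")
LOCATION_FIRST = re.compile(r"(\w+) is in (\w+) with (\d+) square meters")


def room_specs(text: str) -> Dict[str, Tuple[int, str]]:
    """Map each room name to (area in square meters, location) found in a description."""
    specs: Dict[str, Tuple[int, str]] = {}
    for room, area, loc in AREA_FIRST.findall(text):
        specs[room] = (int(area), loc)
    for room, loc, area in LOCATION_FIRST.findall(text):
        specs[room] = (int(area), loc)
    return specs


text1 = (
    "The building contains one washroom, one bedroom, one livingroom, and one kitchen. "
    "Specifically, washroom1 has 5 squares in northeast. bedroom1 has 14 square meters in east. "
    "Besides, livingroom1 covers 25 square meters located in center. kitchen1 has 12 squares in west. "
    "bedroom1, kitchen1, washroom1 and livingroom1 are connected. bedroom1 is next to washroom1."
)
specs = room_specs(text1)
print(sum(area for area, _ in specs.values()))  # 56
```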

Appendix E More Qualitative Results

In this section, we provide more qualitative results of our LCT-GAN and the baseline methods described in the main paper. As shown in Figure F, our method produces neater and sharper textures than the baselines, and the generated images are more consistent with the given semantic expressions than those of the baselines.

Appendix F More Qualitative Results of 3D House Plan

| Linguistic Requirements | Ours: 2D Floor Plan | Ours: 3D House Plan | Ground-truth: 2D Floor Plan | Ground-truth: 3D House Plan |
|---|---|---|---|---|
| The building layout contains one washroom, one study, one livingroom, and one bedroom. To be specific, washroom1 has Blue Marble floor, and wall is Wall_Cloth and White. washroom1 is in southeast with 11 square meters. Additionally, study1 has Wood_color Log floor as well as has Yellow Wall_Cloth wall. study1 has 8 squares in west. livingroom1 is in center with 21 square meters. livingroom1 wall is Earth_color Wall_Cloth while uses Black Log for floor. Besides, bedroom1 covers 10 square meters located in northwest. bedroom1 floor is Wood_color Log, and has Orange Pure_Color_Wood wall. livingroom1 is adjacent to washroom1, bedroom1, study1. bedroom1 is next to study1. | | | | |
| The house has one washroom, one livingroom, one storage, two bedrooms, one kitchen, and one balcony. In practice, washroom1 is in west with 5 square meters. wall of washroom1 is Coating and Yellow, and has White Wood_Veneer floor. Moreover, livingroom1 has Yellow Marble floor as well as wall is Wall_Cloth and Black. livingroom1 is in center with 26 square meters. Besides, storage1 is in northwest with 9 square meters. storage1 has Wood_color Wood_Grain floor while wall is White Wall_Cloth. bedroom1 has 13 squares in southwest. bedroom1 uses Black Log for floor, and wall is White Wall_Cloth. bedroom2 uses Black Log for floor while has White Wall_Cloth wall. bedroom2 has 7 squares in northeast. Moreover, kitchen1 uses White Wood Veneer for floor, and wall is Coating and Yellow. kitchen1 has 5 squares in north. balcony1 has 5 squares in south. balcony1 has Black Wall_Cloth wall as well as has Yellow Marble floor. livingroom1 is adjacent to bedroom1, storage1, bedroom2, washroom1, balcony1, kitchen1. washroom1, balcony1 and bedroom1 are connected. storage1 is next to washroom1, kitchen1. bedroom2 is adjacent to kitchen1. | | | | |
| The house plan has two bedrooms, one washroom, one balcony, one livingroom, and one kitchen. More specifically, bedroom1 has Pink Wall_Cloth wall, and floor is Wood_Veneer and White. bedroom1 covers 13 square meters located in north. In addition, bedroom2 has 11 squares in southwest. bedroom2 floor is White Wood_Veneer as well as wall is Wall_Cloth and Pink. Moreover, floor of washroom1 is Jade and Blue, and wall is White Wall_Cloth. washroom1 covers 5 square meters located in south. Additionally, balcony1 has 4 squares in northeast. balcony1 uses Wood_color Wood_Grain for floor as well as wall is Wall_Cloth and Earth_color. In addition, livingroom1 covers 28 square meters located in east. floor of livingroom1 is Wood_Grain and Wood_color, and wall is Wall_Cloth and Earth_color. Additionally, kitchen1 has 5 squares in center. kitchen1 has Blue Jade floor as well as wall is White Wall_Cloth. livingroom1 is adjacent to bedroom1, bedroom2, kitchen1, washroom1, balcony1. kitchen1 and bedroom1 are connected. bedroom2 is adjacent to kitchen1, washroom1. | | | | |

In this section, we report more 2D and 3D house plans generated from the given linguistic requirements, together with their ground-truth counterparts, in Figure G.