Inpainting CMB maps using Partial Convolutional Neural Networks

Abstract

We present a novel application of partial convolutional neural networks (PCNN) that can inpaint masked images of the cosmic microwave background. The network can reconstruct both the maps and the power spectra to a few percent for circular and irregularly shaped masks covering up to 10% of the image area. By performing a Kolmogorov-Smirnov test we show that the reconstructed maps and power spectra are indistinguishable from the input maps and power spectra at the 99.9% level. Moreover, we show that PCNNs can inpaint maps with regular and irregular masks to the same accuracy. This should be particularly beneficial to inpaint irregular masks for the CMB that come from astrophysical sources such as Galactic foregrounds. The proof of concept application shown in this paper shows that PCNNs can be an important tool in data analysis pipelines in cosmology.

1 Introduction

Observations of the cosmic microwave background (CMB) have provided crucial experimental tests for theoretical models describing the origin and evolution of the universe. We currently have a model that describes the observations from the CMB and other cosmological probes extremely well, called the ΛCDM model. Obtaining precision constraints on cosmological parameters requires a thorough understanding of instrumental and astrophysical systematic errors. Of particular importance for CMB measurements are astrophysical foregrounds: non-CMB sources of microwave emission from our own galaxy and from extra-galactic sources that obfuscate CMB observations [1]. Typically, pixels that are completely dominated by foregrounds are removed from the maps and inferences are made from masked maps using cut-sky power spectrum estimators. Among the most widely used estimators, largely due to their computational efficiency, are pseudo-$C_\ell$ estimators [2, 3]. Pseudo-$C_\ell$ methods construct unbiased, but sub-optimal, power spectrum estimators on the cut sky. One may ask whether the missing values can be replaced by physically or statistically motivated values and whether that can allow for better power spectrum estimation than the cut-sky pseudo-$C_\ell$ estimators. This question has previously been considered in [4], where it was found that using a linear inpainting scheme could significantly outperform pseudo-$C_\ell$ methods. In this paper we demonstrate, as a proof of concept, the ability of our machine learning based inpainting scheme to recover the correct input power spectra. However, this approach is likely to be particularly advantageous when attempting to construct potential non-Gaussian features in masked CMB maps.

The general procedure of replacing missing values through inpainting is a common problem in a variety of fields in addition to cosmology, such as computer vision and satellite imaging. A review of inpainting algorithms can be found in [5]. Machine learning techniques have become ubiquitous in solving inpainting problems as they do not rely on an a priori model that describes the data. Instead they learn statistical features about the data from training data. Of course, it is possible that a forward model is required to create a training set in the first place. This is indeed the case for CMB maps, as we only have a single realisation of the CMB map.

Simulating a CMB map requires a set of cosmological parameters that will determine the statistical properties of the map. The observed CMB will come from the "true" cosmological parameters (this implicitly assumes that the ΛCDM model is the correct model of the universe). Since we do not have access to the true parameters, in order to infer the actual statistical properties of the map we need to sample a range of cosmological parameters and see which one of them best matches the observations. The training set we make for inpainting CMB maps includes maps that are evaluated for different cosmological parameters.

The traditional approach to inpainting missing values is to use a Gaussian constrained realisation of the map [6]. There have been various studies that have recently attempted to use machine learning techniques to inpaint CMB images; machine learning techniques have also previously been used more generally in the context of CMB data analysis [7, 8, 9, 10, 11]. In [12] a variational auto-encoder is used to reconstruct CMB maps. This study compared the difference between the reconstructed image and the original image at the map level; however, in cosmology the more relevant function is the power spectrum, as that is the object typically used for inference of cosmological parameters. Further studies have used different machine learning methods and also compared power spectra between the true images and the inpainted images. In [13] three separate machine learning methods are used to inpaint Galactic foreground maps. One of the most popular techniques in computer vision is called Generative Adversarial Networks (GANs) [14] and it has been used to inpaint CMB maps in [15]. Moreover, the symmetrical properties of the sphere are taken advantage of by using a neural network architecture that preserves SO(3) symmetry in [16] to inpaint CMB images for foreground removal. Traditional deep learning methods use both the masked (empty) pixels and the surrounding pixels with signals in an image to inpaint the masked region. This is known to lead to blurriness in the inpainted image [17]. In this paper we use a novel concept of partial convolutions in convolutional neural networks [17] to inpaint CMB maps. The strength of using partial convolutions is that they only use the non-masked pixels to draw statistical information and inpaint the masked pixels with that information. This is done by masking the convolution matrix itself. Our model outperforms the other recently developed ML methods that inpaint pure CMB maps. Specifically, we obtain mean square error (MSE), mean absolute error (MAE) and peak signal to noise ratio (PSNR) equal to , and , as opposed to , and obtained in [12] for similar mask sizes. (The numerical values for the three parameters presented here correspond to the average values obtained from comparing 500 ground-truth and inpainted CMB patches from the test set and masked with ; see 2.2.2 for more details on this.) Moreover, we are able to reproduce the CMB patch power spectra to percent accuracy up to for irregularly shaped masks that cover of the total area, compared to obtained in [15] for regular masks of equivalent size.
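For reference, the three image-quality metrics quoted above are assumed here to follow their standard image-processing definitions. The symbols $x_i$, $\hat{x}_i$, $N$ and $x_{\max}$ are introduced only for this illustration and do not appear in the original text: $x_i$ and $\hat{x}_i$ are the ground-truth and inpainted pixel values, $N$ is the number of pixels, and $x_{\max}$ is the largest value a pixel can take (255 for 8-bit images).

```latex
\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(\hat{x}_i - x_i\right)^2 ,
\qquad
\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|\hat{x}_i - x_i\right| ,
\qquad
\mathrm{PSNR} = 10\,\log_{10}\!\left(\frac{x_{\max}^2}{\mathrm{MSE}}\right)
```

With these conventions, a lower MSE and MAE and a higher PSNR indicate a more faithful reconstruction.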

This paper is structured as follows: Section 2 describes the machine learning method of partial convolutions that is used for inpainting. The results, for the pixel distribution and the power spectra of inpainted maps, are shown in Section 3, and finally we conclude in Section 4 with a summary of our results and potential future avenues of research using partial convolutions.

2 Machine learning algorithm

Inpainting refers to the process of reconstructing lost or deteriorated parts of an image without introducing significant differences. Several algorithms have been developed to solve such tasks. They can be classified into two approaches:

- •

- •

We focus on the second approach in this paper. Convolutional neural networks (CNNs) have proven particularly effective in image analysis, with their network architecture being specifically designed for the extraction of information from image data through a series of convolutional layers.

Each layer consists of a set of learnable filters which are convolved with the input image, sliding across its pixels and identifying key features in the image. The deeper the network, the more features that can be extracted, such that, by building a CNN with a sufficiently large number of layers, one is able to recognize even the most intricate images. This, however, can also lead to overfitting and thus has to be accounted for by techniques such as dropout [27]. Given that the CNN filter responses are conditioned on both valid pixels as well as the substitute values in the masked holes, a CNN algorithm that fills in masked parts of an image suffers from the dependence on the initial hole values. This dependency on the hole values can result in several imperfections in the output image such as color discrepancy, lack of texture in the hole regions and blurriness [17]. These artifacts are typically removed by downstream processing of the resulting images which increases the computational cost of inpainting [28, 29].

In an attempt to solve such inaccuracies in the image reconstruction, whilst minimising the inpainting time and cost, a deep learning algorithm based on partial convolutional neural networks (PCNNs) has been developed in [17, 30]; partial convolutions are sometimes also called segmentation-aware convolutions. This new approach is also motivated by its ability to deal with random irregular holes in place of the traditional rectangular masks, which may lead to over-fitting and limit the usefulness of the algorithm. The main extension introduced by PCNNs is that the convolution is masked and re-normalized in order to be conditioned only on valid pixels. This is accomplished with the introduction of the automatic mask update step, which removes any masking where the partial convolution was able to operate on an unmasked value. Thus, with this method, the convolutional results depend only on the non-hole regions at every layer such that, given sufficient successive layers, even the largest masks eventually shrink away, leaving only valid responses in the feature map.

Typically, inpainting in relation to masked CMB maps consists of generating constrained Gaussian realizations to fill in the masked regions [31, 6]. However this approach fails to deal with non-Gaussian components in the map and can be computationally expensive. Recently, machine learning algorithms, specifically deep learning using CNNs and GANs, have been increasingly applied to this problem with promising results [15, 13, 16]. Nevertheless, all of these approaches have so far only focused on neural networks with pure convolutional layers that fill in square or circular masks. In this paper, we decide to adapt the PCNN algorithm developed in [17] to learn the statistical properties of CMB maps and efficiently fill in irregularly masked areas, avoiding biases caused by the initial hole values and the shape of the masks.

2.1 Network Architecture

The proposed model is U-Net [32] like and characterized by two main elements: the partial convolutional layer and the loss function. The details are given below and we follow the notation in [17].

2.1.1 Partial Convolutional Layer

The key element in this network is the partial convolutional layer. Given the convolutional filter weights $W$, its corresponding bias $b$ and a binary mask of 0s and 1s $M$, the partial convolution on the current pixel values $X$ at each layer is defined as:

$$
x' =
\begin{cases}
W^{T}\,(X \odot M)\,\dfrac{1}{\mathrm{sum}(M)} + b, & \text{if } \mathrm{sum}(M) > 0 \\
0, & \text{otherwise}
\end{cases}
\tag{2.1}
$$

where $x'$ corresponds to the updated pixel values and $\odot$ is the element-wise multiplication. The scaling factor $1/\mathrm{sum}(M)$ applies some appropriate scaling to adjust for the varying amount of valid (unmasked) inputs. After each convolution, the mask is removed at the location where convolution was able to condition its output on the valid input. The updated mask pixel values are expressed as:

$$
m' =
\begin{cases}
1, & \text{if } \mathrm{sum}(M) > 0 \\
0, & \text{otherwise}
\end{cases}
\tag{2.2}
$$
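The partial convolution and the mask update described in this subsection can be sketched in a few lines of NumPy. This is only an illustrative, single-channel version with stride 1 and no padding, not the implementation used for the results in this paper. The function name `partial_conv2d` is hypothetical, and the scaling factor is assumed to be $1/\mathrm{sum}(M)$; a variant that uses $\mathrm{sum}(\mathbf{1})/\mathrm{sum}(M)$ differs only by the constant kernel window size.

```python
import numpy as np

def partial_conv2d(x, mask, weight, bias=0.0):
    """Single-channel partial convolution (stride 1, no padding).

    x, mask : 2D arrays of equal shape; mask is 1 for valid pixels, 0 for holes.
    weight  : 2D kernel W.
    Returns the updated pixel values x' and the updated mask m'.
    """
    kh, kw = weight.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    x_new = np.zeros((out_h, out_w))
    mask_new = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            m = mask[i:i + kh, j:j + kw]
            valid = m.sum()                      # sum(M) in the current window
            if valid > 0:
                patch = x[i:i + kh, j:j + kw]
                # W^T (X * M) / sum(M) + b: hole pixels never contribute
                x_new[i, j] = np.sum(weight * patch * m) / valid + bias
                mask_new[i, j] = 1.0             # mask update: window saw valid data
            # otherwise both x' and m' stay at 0
    return x_new, mask_new

# Example: a flat image with one corrupted (masked) pixel and a unit kernel.
image = np.ones((5, 5))
image[2, 2] = 99.0                               # arbitrary value inside the hole
hole_mask = np.ones((5, 5))
hole_mask[2, 2] = 0.0
filled, updated_mask = partial_conv2d(image, hole_mask, np.ones((3, 3)))
```

With a unit kernel the output is the mean over the valid pixels in each window, so the arbitrary value stored inside the hole does not leak into the result, and the updated mask no longer contains the hole.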

2.1.2 U-Net structure

The model is characterized by a U-Net architecture where all the convolutional layers are replaced with partial convolutional layers, using nearest neighbor up-sampling in the decoding stage. The input images are 128x128 pixels. The coding and decoding stages are made of 7 layers. In the coding phase, the number of filters in each convolution steadily increases from 32 to 128 while the kernel size decreases from 7 to 3. In the decoding stage, the kernel size is kept constant at 3 while the number of filters in each convolution decreases steadily until the final concatenation with the input image, where it is equal to 3. The last layer's concatenation with the original input image and mask serves to allow the model to copy non-hole pixels. Details of the PCNN architecture can be found in Tab.2 in the Appendix.
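As a quick check of the depth quoted above, the spatial size of the feature maps through the coding stage can be tabulated. The sketch below assumes stride-2 convolutions (as listed in the Appendix table) with "same" padding, so that each layer halves the image size; the function name `encoder_sizes` is hypothetical.

```python
def encoder_sizes(input_size=128, n_layers=7, stride=2):
    """Spatial size after each strided layer, assuming 'same' padding."""
    sizes = []
    size = input_size
    for _ in range(n_layers):
        size = -(-size // stride)   # ceiling division
        sizes.append(size)
    return sizes

print(encoder_sizes())  # [64, 32, 16, 8, 4, 2, 1]
```

Under these assumptions, seven stride-2 layers reduce a 128x128 input to a single pixel, which is the deepest a coding stage can go for this image size.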

2.1.3 Loss Function

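The loss function aims to evaluate both per-pixel reconstruction accuracy and composition. The full form of the loss is taken from [17] and is presented in detail in appendix A.2. With the weights given in [17], it reads:

$$
\mathcal{L}_{total} = \mathcal{L}_{valid} + 6\,\mathcal{L}_{hole} + 0.05\,\mathcal{L}_{perceptual} + 120\left(\mathcal{L}_{style_{out}} + \mathcal{L}_{style_{comp}}\right) + 0.1\,\mathcal{L}_{tv}
\tag{2.3}
$$

where $\mathcal{L}_{valid}$ and $\mathcal{L}_{hole}$ are respectively the losses on the output for the non-hole and the hole pixels; $\mathcal{L}_{perceptual}$ is based on ImageNet pre-trained VGG-16 (pool1, pool2 and pool3 layers); $\mathcal{L}_{style}$ is similar to the perceptual loss, but with an auto-correlation first performed on each feature map; and finally $\mathcal{L}_{tv}$ refers to the smoothing penalty on $R$, where $R$ is the region of 1-pixel dilation of the hole region.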

2.2 Training

2.2.1 Dataset

We use CAMB [33] to generate 50,000 Gaussian, temperature-only, random CMB realizations for training, validation, and testing. Maps are generated with Healpix [34] with the Planck 2018 ΛCDM parameters [35]. Images are cut out and projected onto a flat sky, with 128x128, 6.87 arcminute square pixels. Each image is therefore approximately 14.65 degrees on a side. We generate 10,000 images for each of 5 different values of the scalar spectral index $n_s$, between 0.8 and 0.96. We chose the spectral index to be a free parameter because it is the one that, when changed, shows the most significant differences in the CMB patterns. This makes it easier for the program to classify the CMB patches, and for the reader to distinguish visually between the different classes (the scalar amplitude $A_s$ also has a similar effect; however, as it is a multiplicative scaling parameter, it is unlikely to make the PCNN more generalisable). Each set of 10,000 images is divided into training, validation and test sets, with 7,000, 1,500, and 1,500 images respectively. The PCNN architecture takes 3 RGB 8-bit inputs. We convert the pixel temperature values to 8-bit RGB values using a linear mapping (i.e. the images are represented as 128x128x3 8-bit integers for a total of 24 bits of effective precision). This compression does not impact the results as the true and reconstructed maps are compared post-compression. The mapping parameters are saved in order to reconstruct the original temperature scale after inpainting.
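The linear mapping between temperature and 8-bit values, together with the saved parameters needed to undo it, can be illustrated as follows. This is a minimal single-channel sketch: the function names `to_uint8` and `from_uint8` are hypothetical, the map is assumed to be scaled by its own minimum and maximum, and the way the three RGB channels are filled is not specified here and is left out.

```python
import numpy as np

def to_uint8(temp_map):
    """Linearly map a (non-constant) temperature map onto the 8-bit range [0, 255].

    Returns the quantized map and the (min, max) parameters needed to invert it.
    """
    t_min, t_max = float(temp_map.min()), float(temp_map.max())
    scaled = (temp_map - t_min) / (t_max - t_min) * 255.0
    return np.round(scaled).astype(np.uint8), (t_min, t_max)

def from_uint8(quantized, params):
    """Invert the linear mapping using the saved (min, max) parameters."""
    t_min, t_max = params
    return quantized.astype(np.float64) / 255.0 * (t_max - t_min) + t_min

# Example with a mock Gaussian patch (arbitrary units, not a CAMB realization).
rng = np.random.default_rng(1)
patch = rng.normal(0.0, 100.0, size=(128, 128))
patch_8bit, mapping = to_uint8(patch)
patch_back = from_uint8(patch_8bit, mapping)
```

The round trip is exact up to half a quantization step, that is (max - min) / 510 in the original temperature units.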

2.2.2 Masking

The masking is performed through two independent methods.

- Irregular masks: The first generates masks composed of ellipses, circles and lines in random order, amount and size to cover about 10% and 25% of the input 128x128 pixel image. We will refer to them as and and they are used, respectively, for the fidelity evaluation and in the training process.

- Regular masks: The second method generates centered circular masks of various radius that can cover from 0 to roughly 100% of the input 128x128 pixel image. This last masking is instead used in the fidelity evaluation both to facilitate the comparison with previous results and to measure the ability of the algorithm to reconstruct the image in terms of the percentage of area covered. We will refer to these masks as where is equal to the radius of the circle in pixel units.
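Both masking methods can be sketched with NumPy as below. This is an illustration under stated assumptions rather than the generator used for this paper: the function names, the size ranges of the random ellipses and line segments, and the convention that 1 marks a valid pixel and 0 a masked one are all choices made for this example.

```python
import numpy as np

def circular_mask(size=128, radius=20):
    """Binary mask (1 = valid, 0 = masked) with a centred disc removed."""
    yy, xx = np.mgrid[:size, :size]
    centre = (size - 1) / 2.0
    hole = (xx - centre) ** 2 + (yy - centre) ** 2 <= radius ** 2
    return (~hole).astype(np.uint8)

def irregular_mask(size=128, target_fraction=0.10, seed=0):
    """Add random ellipses and thick line segments until the target area is masked."""
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[:size, :size]
    hole = np.zeros((size, size), dtype=bool)
    while hole.mean() < target_fraction:
        x0, y0 = rng.uniform(0, size, 2)
        if rng.random() < 0.5:
            # Random ellipse (a circle when the two semi-axes coincide)
            a, b = rng.uniform(2, 8, 2)
            hole |= ((xx - x0) / a) ** 2 + ((yy - y0) / b) ** 2 <= 1.0
        else:
            # Random short line segment of finite width
            dx, dy = rng.uniform(-20, 20, 2)
            width = rng.uniform(1, 3)
            length2 = dx * dx + dy * dy + 1e-12
            t = np.clip(((xx - x0) * dx + (yy - y0) * dy) / length2, 0.0, 1.0)
            dist2 = (xx - x0 - t * dx) ** 2 + (yy - y0 - t * dy) ** 2
            hole |= dist2 <= width ** 2
    return (~hole).astype(np.uint8)

def masked_fraction(mask):
    """Fraction of the image area that is masked."""
    return 1.0 - mask.mean()
```

For a circular mask of radius r pixels the masked fraction is close to pi r^2 / 128^2, so a radius of 20 pixels covers roughly 7.7% of the patch.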

2.2.3 Training Procedure

We first initialize the weights using the pre-trained VGG-16 weights from ImageNet [36]. This is done for image classification purposes, in order to recognize the different classes of input CMB patches based on the value of the spectral index, $n_s$. The training is then performed using the Adam optimizer [37] in two stages. The first stage has a learning rate of and enables batch normalization in all layers. The second stage has a learning rate of and only enables the batch normalization in the decoding layers. This is done to avoid the incorrect mean and variance issues that occur with batch normalization in the masked convolutions (as the mean and variance will be computed for the hole pixels as well) and also helps us to achieve faster convergence.

Overall, the program trained for 29 epochs, with batch size of 5 and respectively 10,000 training steps and 1,500 validation steps. The total computational cost was roughly 100 GPU hours.

3 Results

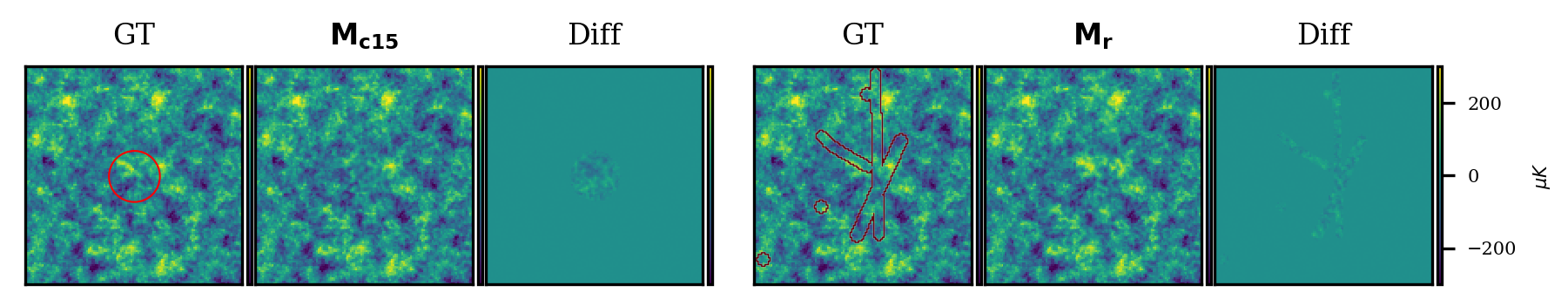

Figures 1, 5 and 6 show examples of maps extracted from the test set and reconstructed via the PCNN algorithm for the different masking methods outlined in Section 2.2.2.

To determine the efficiency of the algorithm in reconstructing the CMB patches, we follow the approach from [38, 39, 13] and focus on evaluating the ability to replicate the summary statistics of the underlying signal that needs to be reconstructed. Specifically, for each $n_s$ value, we compare 500 ground-truth CMB patches from the test set with their corresponding inpainted maps, through two metrics: (1) the pixel intensity distribution; (2) the angular power spectra. Numerically, we perform this comparison by running the Kolmogorov-Smirnov (KS) two-sample test, which assesses the likelihood that the distribution of inpainted CMB patches is drawn from the ground-truth distribution.
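The statistic behind the two-sample KS test is the largest distance between the empirical cumulative distribution functions of the two samples. A minimal NumPy sketch of that statistic is given below; the function name `ks_statistic` is hypothetical, and the conversion to a p-value is deliberately left out.

```python
import numpy as np

def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic: maximum distance between the empirical CDFs."""
    a = np.sort(np.ravel(sample_a))
    b = np.sort(np.ravel(sample_b))
    grid = np.concatenate([a, b])
    # Empirical CDF of each sample, evaluated at every observed value
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))

# Example: pixel values drawn from the same and from shifted distributions.
rng = np.random.default_rng(0)
truth = rng.normal(size=5000)
same = rng.normal(size=5000)
shifted = rng.normal(loc=1.0, size=5000)
d_same = ks_statistic(truth, same)
d_shifted = ks_statistic(truth, shifted)
```

A small statistic corresponds to a large p-value, meaning that the two samples are compatible with a common parent distribution. In practice, `scipy.stats.ks_2samp` returns both the statistic and the p-value.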

3.1 Pixel intensity distribution

To compute the pixel intensity distribution, all the sample maps are scaled with MinMax rescaling to [-1, 1] as in [13]. This is done in order to more easily compare the difference between the ground-truth and reconstructed maps and Gaussian distributions. The left plots in Fig.2 show the resulting histograms of the pixel intensities for each $n_s$ value, when masked respectively by (2(a)) and (2(b)). The differences between the pixel distributions are negligible and for both and and all the $n_s$ values. The resulting KS test $p$-values are for both and , which shows that the PCNN algorithm is able to accurately reproduce the ground-truth CMB patterns.

3.2 Angular power spectrum

| $n_s$ | | |
|---|---|---|
| 0.80 | (15) | (13) |
| 0.84 | (14) | (15) |
| 0.88 | (15) | (15) |
| 0.92 | (09) | (13) |
| 0.96 | (11) | (13) |

The angular power spectrum in each flat square CMB patch is estimated with Pixell (https://github.com/simonsobs/pixell). It is binned into equally spaced multipoles with and maximum multipole , chosen to correspond roughly to the available sky fraction and Pixell power spectrum accuracy. The right plots in Fig.2 show the resulting average power spectra for each $n_s$ value, when masked respectively by (2(a)) and (2(b)). The power spectra residuals for both and are of the same order as the corresponding pixel distribution residuals. At high $\ell$, we note that the residuals are always negative, which implies that the PCNN underestimates the original power spectrum in this multipole range. This could be caused by additional smoothing in the inpainted region, leading to reduced small-scale power. That said, it is important to emphasize that the obtained residuals are fully consistent with zero, as shown by the associated error bars, defined as the standard deviation from the mean value of the power spectrum at each bin.
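To make the estimation step concrete, a binned flat-sky power spectrum can be sketched with a plain NumPy FFT. This is a stand-in written for illustration and not the Pixell routine used for the results in this paper: the function name `flat_sky_power_spectrum` and the number of bins are assumptions, the patch is assumed to be square, and no apodization, beam or pixel-window correction is applied.

```python
import numpy as np

def flat_sky_power_spectrum(patch, pixel_arcmin=6.87, n_bins=20):
    """Azimuthally averaged power spectrum of a square flat-sky patch.

    Returns the bin centres in multipole and the mean power in each bin.
    """
    n = patch.shape[0]
    delta = np.deg2rad(pixel_arcmin / 60.0)            # pixel size in radians
    fourier = np.fft.fft2(patch - patch.mean())
    # |continuous Fourier transform|^2 divided by the patch area
    power_2d = (delta ** 2 / n ** 2) * np.abs(fourier) ** 2
    freq = 2.0 * np.pi * np.fft.fftfreq(n, d=delta)    # multipole along each axis
    ell_2d = np.hypot(freq[:, None], freq[None, :])
    edges = np.linspace(0.0, ell_2d.max(), n_bins + 1)
    index = np.clip(np.digitize(ell_2d.ravel(), edges) - 1, 0, n_bins - 1)
    counts = np.bincount(index, minlength=n_bins)
    sums = np.bincount(index, weights=power_2d.ravel(), minlength=n_bins)
    centres = 0.5 * (edges[:-1] + edges[1:])
    cl = np.where(counts > 0, sums / np.maximum(counts, 1), np.nan)
    return centres, cl

# Example on a mock white-noise patch (not a CMB realization).
rng = np.random.default_rng(0)
mock_patch = rng.normal(size=(128, 128))
ell, cl = flat_sky_power_spectrum(mock_patch)
```

For white noise the expected spectrum is flat and equal to the pixel variance times the pixel area, which gives a simple sanity check of the normalization.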

To assess more quantitatively the ability to reproduce the power spectrum at a given bin, we perform the KS test on the distribution in each bin of the 500 spectra, after having bootstrap resampled it 5000 times. The obtained test $p$-values are summarized in Tab.1, where we also report in parentheses the number of bins with $p$-value . We find that the lowest $p$-value with negligible differences between and and all the $n_s$ values. Hence, we do not find any deviation from the ground truth and we can conclude that the PCNN algorithm is able to coherently reproduce the angular correlations of the underlying CMB signal.

3.3 Performance in terms of mask size

To assess the performance of the PCNN algorithm in terms of the percentage of CMB patch area covered, we use the circular masks with increasing radius from to pixels, in steps of pixels. We use the same two metrics as for the fidelity evaluation (pixel intensity distribution and angular power spectrum) and compare 500 ground-truth CMB patches from the test set with their corresponding inpainted maps, for each masking. The choice of the test over the other $n_s$ values is arbitrary. In fact, as presented in subsections 3.1 and 3.2, the obtained test $p$-values are independent of the $n_s$ value under consideration.

The results of this analysis are plotted in Fig. 3 and show that the worst test $p$-values for the pixel intensity distribution and the angular power spectrum are similar for 15% of masked area and diverge significantly for 20% of masked area. This discrepancy between the obtained test $p$-values for the pixel intensity distribution and the angular power spectrum is most likely caused by the fact that the loss function we use aims to optimize the pixel values of the images.

Finally, we repeat the same analysis for the irregular masks and and note that the shape of the masking has no effect on the algorithm performance. In fact, as emphasized in Fig.3, the test $p$-values obtained for and are the same as those for the circular masks of equivalent size for all the metrics under consideration.

4 Summary & Conclusion

In this paper we have found a novel application for the deep learning algorithm, PCNN: to inpaint masked CMB maps. Inpainting CMB maps is normally done on regularly shaped masks. There have been a variety of machine learning and other statistical methods to inpaint these types of shapes (circles, squares etc.). By using PCNNs we can inpaint the CMB maps with regular and irregularly shaped masks.

We train the PCNN by simulating maps of the CMB using known cosmological parameters. Our method can reconstruct the pixel distribution and the power spectrum of a CMB patch to an accuracy respectively of and , for masks covering up to of the image. Furthermore, for the power spectra we see that the reconstruction error is consistent with zero when the corresponding power spectrum uncertainty is taken into account. PCNNs work equally well on regularly and irregularly shaped masks. To quantify how well the PCNN can reconstruct the original images, we perform a KS test and find that the $p$-values achieved by the PCNN based on the pixel values of the original and reconstructed images are for different mask sizes. Since in cosmology we are typically interested in the correlation function, as opposed to the amplitude of the pixel values, we also perform a KS test on the true and reconstructed power spectrum, obtaining comparable results. This shows that inpainting CMB maps with PCNNs, and computing the power spectra, is a viable alternative to standard cut-sky power spectrum estimation using e.g. pseudo-$C_\ell$ estimators.

The results we have obtained so far have been on a pure CMB map without any noise. In reality, any additional sources of noise and other contaminants would have to be included in the forward model used to generate the training dataset. We expect this to have no significant impact on the performance of the proposed algorithm, which is particularly suited to inpaint highly non-Gaussian patterns, as shown already in [17] on images from the ImageNet [36], Places2 [40] and CelebA-HQ dataset [41].

Overall, our results show that PCNNs can be a powerful method for reconstructing CMB maps to percent level accuracy with irregular masks, in addition to the more common circular and other regularly shaped masks. It would be prudent to extend the application of PCNN architectures to inpainting irregular masks on the sphere, as we encounter when performing large sky area CMB analyses. Alongside the map-level reconstruction, we successfully recover the input power spectra from the inpainted maps. It will be interesting to explore further potential biases induced in the power spectrum estimation by the PCNN inpainting process, and to study the use of inpainted power spectrum estimates in cosmological parameter inference. We leave this and attempts to incorporate non-Gaussian foregrounds into our model for future projects.

Acknowledgments

MHA acknowledges support from the Beecroft Trust and Dennis Sciama Junior Research Fellowship at Wolfson College. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 693024). We thank David Alonso, Simone Aiola, Harry Desmond and Mathias Gruber for valuable discussions.

References

- [1] Y. Akrami, M. Ashdown, J. Aumont, C. Baccigalupi, M. Ballardini, A.J. Banday et al., Planck 2018 results, Astronomy & Astrophysics 641 (2020) A4.

- [2] E. Hivon, K. Gorski, C. Netterfield, B. Crill, S. Prunet and F. Hansen, Master of the cosmic microwave background anisotropy power spectrum: a fast method for statistical analysis of large and complex cosmic microwave background data sets, Astrophys. J. 567 (2002) 2 [astro-ph/0105302].

- [3] A. Challinor and G. Chon, Error analysis of quadratic power spectrum estimates for CMB polarization: Sampling covariance, Mon. Not. Roy. Astron. Soc. 360 (2005) 509 [astro-ph/0410097].

- [4] H. Gruetjen, J. Fergusson, M. Liguori and E. Shellard, Using inpainting to construct accurate cut-sky cmb estimators, Physical Review D 95 (2017).

- [5] R.T. Pushpalwar and S.H. Bhandari, Image inpainting approaches - a review, in 2016 IEEE 6th International Conference on Advanced Computing (IACC), pp. 340–345, 2016.

- [6] M. Bucher and T. Louis, Filling in cosmic microwave background map missing data using constrained gaussian realizations, Monthly Notices of the Royal Astronomical Society 424 (2012) 1694.

- [7] M. Münchmeyer and K.M. Smith, Fast wiener filtering of cmb maps with neural networks, 2019.

- [8] J. Caldeira, W. Wu, B. Nord, C. Avestruz, S. Trivedi and K. Story, Deepcmb: Lensing reconstruction of the cosmic microwave background with deep neural networks, Astronomy and Computing 28 (2019) 100307.

- [9] N. Krachmalnicoff and G. Puglisi, Forse: a gan based algorithm for extending cmb foreground models to sub-degree angular scales, 2020.

- [10] M.A. Petroff, G.E. Addison, C.L. Bennett and J.L. Weiland, Full-sky cosmic microwave background foreground cleaning using machine learning, The Astrophysical Journal 903 (2020) 104.

- [11] K. Aylor, M. Haq, L. Knox, Y. Hezaveh and L. Perreault-Levasseur, Cleaning our own dust: simulating and separating galactic dust foregrounds with neural networks, Monthly Notices of the Royal Astronomical Society 500 (2020) 3889–3897.

- [12] K. Yi, Y. Guo, Y. Fan, J. Hamann and Y.G. Wang, CosmoVAE: Variational Autoencoder for CMB Image Inpainting, 2001.11651.

- [13] G. Puglisi and X. Bai, Inpainting Galactic Foreground Intensity and Polarization maps using Convolutional Neural Network, 2003.13691.

- [14] I.J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair et al., Generative Adversarial Networks, arXiv e-prints (2014) arXiv:1406.2661 [1406.2661].

- [15] A.V. Sadr and F. Farsian, Inpainting via Generative Adversarial Networks for CMB data analysis, 2004.04177.

- [16] M.A. Petroff, G.E. Addison, C.L. Bennett and J.L. Weiland, Full-sky Cosmic Microwave Background Foreground Cleaning Using Machine Learning, 2004.11507.

- [17] G. Liu, F.A. Reda, K.J. Shih, T. Wang, A. Tao and B. Catanzaro, Image inpainting for irregular holes using partial convolutions, CoRR abs/1804.07723 (2018) [1804.07723].

- [18] C. Barnes, E. Shechtman, A. Finkelstein and D.B. Goldman, Patchmatch: A randomized correspondence algorithm for structural image editing, ACM Trans. Graph. 28 (2009).

- [19] Levin, Zomet and Weiss, Learning how to inpaint from global image statistics.

- [20] S.M. Muddala, R. Olsson and M. Sjöström, Spatio-temporal consistent depth-image-based rendering using layered depth image and inpainting, EURASIP Journal on Image and Video Processing 2016 (2016).

- [21] Q. Fan and L. Zhang, A novel patch matching algorithm for exemplar-based image inpainting, Multimedia Tools and Applications 77 (2017) 10807.

- [22] J. Yu, Z. Lin, J. Yang, X. Shen, X. Lu and T.S. Huang, Generative image inpainting with contextual attention, 1801.07892.

- [23] S. Iizuka, E. Simo-Serra and H. Ishikawa, Globally and locally consistent image completion, ACM Trans. Graph. 36 (2017).

- [24] J. Zhao, Z. Chen, L. Zhang and X. Jin, Unsupervised learnable sinogram inpainting network (sin) for limited angle ct reconstruction, 1811.03911.

- [25] N. Cai, Z. Su, Z. Lin, H. Wang, Z. Yang and B.W.-K. Ling, Blind inpainting using the fully convolutional neural network, The Visual Computer 33 (2015) 249.

- [26] Y. Chen and H. Hu, An improved method for semantic image inpainting with GANs: Progressive inpainting, Neural Processing Letters 49 (2018) 1355.

- [27] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever and R. Salakhutdinov, Dropout: A simple way to prevent neural networks from overfitting, J. Mach. Learn. Res. 15 (2014) 1929–1958.

- [28] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva and A. Torralba, Places: A 10 million image database for scene recognition, IEEE Transactions on Pattern Analysis and Machine Intelligence 40 (2018) 1452.

- [29] P. Pérez, M. Gangnet and A. Blake, Poisson image editing, ACM Trans. Graph. 22 (2003) 313–318.

- [30] A.W. Harley, K.G. Derpanis and I. Kokkinos, Segmentation-aware convolutional networks using local attention masks, 1708.04607.

- [31] Y. Hoffman and E. Ribak, Constrained realizations of gaussian fields - a simple algorithm, The Astrophysical Journal 380 (1991) L5.

- [32] O. Ronneberger, P. Fischer and T. Brox, U-net: Convolutional networks for biomedical image segmentation, 2015.

- [33] A. Lewis, A. Challinor and A. Lasenby, Efficient computation of CMB anisotropies in closed FRW models, Astrophys. J. 538 (2000) 473 [astro-ph/9911177].

- [34] K.M. Gorski, E. Hivon, A.J. Banday, B.D. Wandelt, F.K. Hansen, M. Reinecke et al., Healpix: A framework for high-resolution discretization and fast analysis of data distributed on the sphere, The Astrophysical Journal 622 (2005) 759–771.

- [35] Planck collaboration, Planck 2018 results. VI. Cosmological parameters, 1807.06209.

- [36] K. Simonyan and A. Zisserman, Very deep convolutional networks for large-scale image recognition, arXiv 1409.1556 (2014).

- [37] D.P. Kingma and J. Ba, Adam: A method for stochastic optimization, 2017.

- [38] M. Mustafa, D. Bard, W. Bhimji, Z. Lukić, R. Al-Rfou and J.M. Kratochvil, Cosmogan: creating high-fidelity weak lensing convergence maps using generative adversarial networks, Computational Astrophysics and Cosmology 6 (2019).

- [39] K. Aylor, M. Haq, L. Knox, Y. Hezaveh and L. Perreault-Levasseur, Cleaning our own dust: Simulating and separating galactic dust foregrounds with neural networks, 2019.

- [40] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva and A. Torralba, Places: A 10 million image database for scene recognition, IEEE Transactions on Pattern Analysis and Machine Intelligence 40 (2018) 1452.

- [41] Z. Liu, P. Luo, X. Wang and X. Tang, Deep learning face attributes in the wild, in Proceedings of International Conference on Computer Vision (ICCV), December, 2015.

Appendix A Details of PCNN Algorithm

A.1 Network architecture

| Module Name | Filter Size | # Channels | Stride Size | BatchNorm | Nonlinearity |
|---|---|---|---|---|---|
| PConv1 | | 32 | 2 | - | ReLU |
| PConv2 | | 64 | 2 | - | ReLU |
| PConv3 | | 128 | 2 | Y | ReLU |
| PConv4 | | 128 | 2 | Y | ReLU |
| PConv5 | | 128 | 2 | Y | ReLU |
| PConv6 | | 128 | 2 | Y | ReLU |
| PConv7 | | 128 | 2 | Y | ReLU |
| PConv8 | | 128 | 2 | Y | ReLU |
| NearestUpSample1 | | 128 | 2 | - | - |
| Concat1 (w/ PConv7) | | 128+128 | | - | - |
| PConv9 | | 128 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample2 | | 128 | 2 | - | - |
| Concat2 (w/ PConv6) | | 128+128 | | - | - |
| PConv10 | | 128 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample3 | | 128 | 2 | - | - |
| Concat3 (w/ PConv5) | | 128+128 | | - | - |
| PConv11 | | 128 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample4 | | 128 | 2 | - | - |
| Concat4 (w/ PConv4) | | 128+128 | | - | - |
| PConv12 | | 128 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample5 | | 128 | 2 | - | - |
| Concat5 (w/ PConv3) | | 128+128 | | - | - |
| PConv13 | | 128 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample6 | | 128 | 2 | - | - |
| Concat6 (w/ PConv2) | | 128+64 | | - | - |
| PConv14 | | 64 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample7 | | 64 | 2 | - | - |
| Concat7 (w/ PConv1) | | 64+32 | | - | - |
| PConv15 | | 32 | 1 | Y | LeakyReLU (0.2) |
| NearestUpSample8 | | 32 | 2 | - | - |
| Concat8 (w/ Input) | | 32+3 | | - | - |
| PConv16 | | 3 | 1 | Y | - |

A.2 Loss Function Terms

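Given ground truth image $I_{gt}$, initial binary mask $M$ and PCNN prediction $I_{out}$, we can express the losses on the output for the non-hole ($\mathcal{L}_{valid}$) and hole pixels ($\mathcal{L}_{hole}$), following [17], as:

$$
\mathcal{L}_{hole} = \frac{1}{N_{I_{gt}}}\left\| (1 - M) \odot (I_{out} - I_{gt}) \right\|_1 ,
\qquad
\mathcal{L}_{valid} = \frac{1}{N_{I_{gt}}}\left\| M \odot (I_{out} - I_{gt}) \right\|_1
$$

where $N_{I_{gt}} = C \times H \times W$ and $C$, $H$ and $W$ are the channel size, height and width of $I_{gt}$ [17]. We then define $I_{comp}$ equal to the raw output image $I_{out}$ with the non-hole pixels set to ground truth. The perceptual loss ($\mathcal{L}_{perceptual}$) computes the $L^1$ distances of both $I_{out}$ and $I_{comp}$ from $I_{gt}$, after projecting these images into higher level feature spaces using an ImageNet-pretrained VGG-16 [36]:

$$
\mathcal{L}_{perceptual} = \sum_{p=0}^{P-1} \frac{\left\| \Psi_p^{I_{out}} - \Psi_p^{I_{gt}} \right\|_1}{N_{\Psi_p^{I_{gt}}}} + \sum_{p=0}^{P-1} \frac{\left\| \Psi_p^{I_{comp}} - \Psi_p^{I_{gt}} \right\|_1}{N_{\Psi_p^{I_{gt}}}}
$$

$\Psi_p^{I_*}$ is the activation map of the $p$th selected layer given input $I_*$, with $N_{\Psi_p^{I_*}}$ equal to its total number of elements. The layers used in this loss are pool1, pool2 and pool3 [17]. The style loss term ($\mathcal{L}_{style}$) is equivalent to the perceptual loss, but with an autocorrelation (Gram matrix) first performed on each feature map [17]:

$$
\mathcal{L}_{style_{out}} = \sum_{p=0}^{P-1} \frac{1}{C_p C_p} \left\| K_p \left( \left(\Psi_p^{I_{out}}\right)^{T} \Psi_p^{I_{out}} - \left(\Psi_p^{I_{gt}}\right)^{T} \Psi_p^{I_{gt}} \right) \right\|_1 ,
\qquad
\mathcal{L}_{style_{comp}} = \sum_{p=0}^{P-1} \frac{1}{C_p C_p} \left\| K_p \left( \left(\Psi_p^{I_{comp}}\right)^{T} \Psi_p^{I_{comp}} - \left(\Psi_p^{I_{gt}}\right)^{T} \Psi_p^{I_{gt}} \right) \right\|_1
$$

where $K_p = 1/(C_p H_p W_p)$ is the normalization factor for the $p$th selected layer. The final loss term is the total variation loss ($\mathcal{L}_{tv}$) expressed as:

$$
\mathcal{L}_{tv} = \sum_{(i,j) \in R,\, (i,j+1) \in R} \frac{\left\| I_{comp}^{i,j+1} - I_{comp}^{i,j} \right\|_1}{N_{I_{comp}}} + \sum_{(i,j) \in R,\, (i+1,j) \in R} \frac{\left\| I_{comp}^{i+1,j} - I_{comp}^{i,j} \right\|_1}{N_{I_{comp}}}
$$

where $R$ is the region of 1-pixel dilation of the hole region [17].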
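The pixel-level terms of the loss can be sketched in NumPy for a single-channel image. The function names below are hypothetical, the mask convention (1 = valid, 0 = hole) is assumed, and the 1-pixel dilation is taken here over the four nearest neighbours, which is one possible choice. The perceptual and style terms are omitted because they require a pre-trained VGG-16.

```python
import numpy as np

def l1_losses(i_out, i_gt, mask):
    """Per-pixel L1 losses on the valid (non-hole) and hole regions."""
    n = i_gt.size
    diff = np.abs(i_out - i_gt)
    l_valid = np.sum(mask * diff) / n
    l_hole = np.sum((1 - mask) * diff) / n
    return l_valid, l_hole

def composite(i_out, i_gt, mask):
    """Raw output with the non-hole pixels set to ground truth."""
    return mask * i_gt + (1 - mask) * i_out

def tv_loss(i_comp, mask):
    """Total variation on the hole region dilated by one pixel (4-neighbour)."""
    hole = mask == 0
    region = hole.copy()
    region[1:, :] |= hole[:-1, :]
    region[:-1, :] |= hole[1:, :]
    region[:, 1:] |= hole[:, :-1]
    region[:, :-1] |= hole[:, 1:]
    # Only neighbouring pairs that both lie inside the region contribute
    horiz = np.abs(i_comp[:, 1:] - i_comp[:, :-1]) * (region[:, 1:] & region[:, :-1])
    vert = np.abs(i_comp[1:, :] - i_comp[:-1, :]) * (region[1:, :] & region[:-1, :])
    return (horiz.sum() + vert.sum()) / i_comp.size

# Example: a 4x4 image with a single-pixel hole and a constant wrong prediction.
truth = np.zeros((4, 4))
prediction = np.ones((4, 4))
mask = np.ones((4, 4))
mask[1, 1] = 0.0
l_valid, l_hole = l1_losses(prediction, truth, mask)
l_tv = tv_loss(composite(prediction, truth, mask), mask)
```

In this toy case 15 of the 16 pixels are valid and each is wrong by one unit, so the valid loss is 15/16 and the hole loss is 1/16, while the composite image differs from its four neighbours only at the hole pixel.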

A.3 PSNR and evolution

Appendix B Supplementary Plots