1 2 3

Initial Value Problem Enhanced Sampling for Closed-Loop Optimal Control Design with Deep Neural Networks

Abstract

Closed-loop optimal control design for high-dimensional nonlinear systems has been a long-standing challenge. Traditional methods, such as solving the associated Hamilton-Jacobi-Bellman equation, suffer from the curse of dimensionality. Recent literature proposed a new promising approach based on supervised learning, by leveraging powerful open-loop optimal control solvers to generate training data and neural networks as efficient high-dimensional function approximators to fit the closed-loop optimal control. This approach successfully handles certain high-dimensional optimal control problems but still performs poorly on more challenging problems. One of the crucial reasons for the failure is the so-called distribution mismatch phenomenon brought by the controlled dynamics. In this paper, we investigate this phenomenon and propose the initial value problem enhanced sampling method to mitigate this problem. We theoretically prove that this sampling strategy improves over the vanilla strategy on the classical linear-quadratic regulator by a factor proportional to the total time duration. We further numerically demonstrate that the proposed sampling strategy significantly improves the performance on tested control problems, including the optimal landing problem of a quadrotor and the optimal reaching problem of a 7 DoF manipulator.

1 Introduction

Optimal control aims to find a control for a dynamical system over a period of time such that a specified loss function is minimized. Generally speaking, there are two types of optimal controls: open-loop optimal control and closed-loop (feedback) optimal control. Open-loop optimal control deals with the problem with a given initial state, and its solution is a function of time for the specific initial data, independent of the other states of the system. In contrast, closed-loop optimal control aims to find the optimal control policy as a function of the state that gives us optimal control for general initial states.

By the nature of the problem, solving the open-loop control problem is relatively easy and various open-loop control solvers can handle nonlinear problems even when the state lives in high dimensions (Betts, 1998; Rao, 2009). Closed-loop control is much more powerful than open-loop control since it can cope with different initial states, and it is more robust to the disturbance of dynamics. The classical approach to obtaining a closed-loop optimal control function is by solving the associated Hamilton-Jacobi-Bellman (HJB) equation. However, traditional numerical algorithms for HJB equations such as the finite difference method or finite element method face the curse of dimensionality (Bellman, 1957) and hence can not deal with high-dimensional problems.

Since the work Han & E (2016) for stochastic optimal control problems, there have been growing interest on making use of the capacity of neural networks (NNs) in approximating high-dimensional functions to solve the closed-loop optimal control problems (Nakamura-Zimmerer et al., 2021a; b; 2020; Böttcher et al., 2022; E et al., 2022). Generally speaking, there are two categories of methods in this promising direction. One is policy search approach (Han & E, 2016; Ainsworth et al., 2021; Böttcher et al., 2022; Zhao et al., 2022), which directly parameterizes the policy function by neural networks, computes the total cost with various initial points, and minimizes the average total cost. When solving problems with a long time span and high nonlinearity, the corresponding optimization problems can be extremely hard and may get stuck in local minima (Levine & Koltun, 2014). The other category of methods is based on supervised learning (Nakamura-Zimmerer et al., 2021a; b; 2020; 2022). Combining various techniques for open-loop control, one can solve complex high-dimensional open-loop optimal control problems; see Betts (1998); Rao (2009); Kang et al. (2021) for detailed surveys. Consequently, we can collect optimal trajectories for different initial points as training data, parameterize the control function (or value function) using NNs, and train the NN models to fit the closed-loop optimal controls (or optimal values). This work focuses on the second approach and aims to improve its performance through adaptive sampling.

As demonstrated in Nakamura-Zimmerer et al. (2021b); Zang et al. (2022), NN controllers trained by the vanilla supervised-learning-based approach can perform poorly even when both the training error and test error on collected datasets are fairly small. Some existing works attribute this phenomenon to the fact that the learned controller may deteriorate badly at some difficult initial states even though the error is small in the average sense. Several adaptive sampling methods regarding the initial points are hence proposed (see Section 4 for a detailed discussion). However, these methods all focus on choosing optimal paths according to different initial points and ignore the effect of dynamics. This is an issue since the paths controlled by the NN will deviate from the optimal paths further and further over time due to the accumulation of errors. As shown in Section 6, applying adaptive sampling only on initial points is insufficient to solve challenging problems.

This work is concerned with the so-called distribution mismatch phenomenon brought by the dynamics in the supervised-learning-based approach. This phenomenon refers to the fact that the discrepancy between the state distribution of the training data and the state distribution generated by the NN controller typically increases over time and the training data fails to represent the states encountered when the trained NN controller is used. Such phenomenon has also been identified in reinforcement learning (Kakade & Langford, 2002; Long & Han, 2022) and imitation learning (Ross & Bagnell, 2010). To mitigate this phenomenon, we propose the initial value problem (IVP) enhanced sampling method to make the states in the training dataset more closely match the states that the controller reaches. In the IVP enhanced sampling method, we iteratively re-evaluate the states that the NN controller reaches by solving IVPs and recalculate new training data by solving the open-loop control problems starting at these states. Our sampling method is very versatile to be combined with other techniques like a faster open-loop control solver or better neural network structures. The resulting supervised-learning-based approach empowered by the IVP enhanced sampling can be interpreted as an instance of the exploration-labeling-training (ELT) algorithms (Zhang et al., 2018; E et al., 2021) for closed-loop optimal control problems (see Appendix A for more discussions). At a high level, the ELT algorithm proceeds iteratively with the following three steps: (1) exploring the state space and examining which states need to be labeled; (2) solving the control problem to label these states and adding them to the training data; (3) training the machine learning model.

The main contributions of the paper can be summarized as follows. (1) We investigate the distribution mismatch phenomenon brought by the controlled dynamics in the supervised-learning-based approach, which explains the failure of this approach for challenging problems. We propose the IVP enhanced sampling method to update the training data, which significantly alleviates the distribution mismatch problem. (2) We show that the IVP enhanced sampling method can significantly improve the performance of the learned closed-loop controller on a uni-dimensional linear quadratic control problem (theoretically and numerically) and two high-dimensional problems (numerically), the quadrotor landing problem and the reaching problem of a 7-DoF manipulator. (3) We compare the IVP enhanced sampling method with other adaptive sampling methods and show that the IVP enhanced method gives the best performance.

2 Preliminary

2.1 Open-loop and Closed-loop Optimal Control

We consider the following deterministic controlled dynamical system:

| (1) |

where denotes the state, denotes the control with being the set of admissible controls, is a smooth function describing the dynamics, denotes the initial time, and denotes the initial state. Given a fixed and , solving the open-loop optimal control problem means to find a control path to minimize

| (2) |

where and are the running cost and terminal cost, respectively. We use and to denote the optimal state and control with the specified initial time and initial state , which emphasizes the dependence of the open-loop optimal solutions on the initial time and state. We assume the open-loop optimal control problem is well-posed, i.e., the solution always exists and is unique.

In contrast to the open-loop control being a function of time only, closed-loop control is a function of the time-state pair . Given a closed-loop control , we can induce a family of the open-loop controls with all possible initial time-state pairs : where is defined by the following initial value problem (IVP):

| (3) |

To ease the notation, we always use the same character to denote the closed-loop control function and the induced family of the open-loop controls. The context of closed-loop or open-loop control can be inferred from the arguments and will not be confusing. It is well known in the classical optimal control theory (see, e.g. Liberzon (2011)) that there exists a closed-loop optimal control function such that for any and , which means the family of the open-loop optimal controls with all possible initial time-state pairs can be induced from the closed-loop optimal control function. Since IVPs can be easily solved, one can handle the open-loop control problems with all possible initial time-state pairs if a good closed-loop control solution is available. Moreover, the closed-loop control is more robust to dynamic disturbance and model misspecification, and hence it is much more powerful in applications. In this paper, our goal is to find a near-optimal closed-loop control such that for with being the set of initial states of interest, the associated total cost is near-optimal, i.e.,

2.2 Supervised-learning-based Approach for Closed-loop Optimal Control Problem

Here we briefly explain the idea of the supervised-learning-based approach for the closed-loop optimal control problem. The first step is to generate training data by solving the open-loop optimal control problems with zero initial time and initial states randomly sampled in . Then, the training data is collected by evenly choosing points in every optimal path:

where and are the number of sampled training trajectories and the number of points chosen in each path, respectively. Finally, a function approximator (mostly neural network, as considered in this work) with parameters is trained by solving the following regression problem:

| (4) |

and gives the NN controller .

3 IVP Enhanced Sampling Method

Although the vanilla supervised-learning-based approach can achieve a good performance in certain problems (Nakamura-Zimmerer et al., 2021a), it is observed that its performance on complex problems is not satisfactory (see Nakamura-Zimmerer et al. (2021b); Zang et al. (2022) and examples below). One of the crucial reasons that the vanilla method fails is the distribution mismatch phenomenon. To better illustrate this phenomenon, let be the distribution of the initial state of interest and be a closed-loop control function. We use to denote the distribution of generated by : Note that in the training process (4), the distribution of the state at time is , the state distribution generated by the closed-loop optimal control. On the other hand, when we apply the learned NN controller in the dynamics, the distribution of the input state of at time is . The error between state driven by and accumulates and makes the discrepancy between and increases over time. Hence, the training data fails to represent the states encountered in the controlled process, and the error between and dramatically increases when is large. See Figures 1 (left) and 2 below for an illustration of this phenomenon.

To overcome this problem, we propose the following IVP enhanced sampling method. The key idea is to improve the quality of the NN controller iteratively by enlarging the training dataset with the states seen by the NN controller at previous times. Given predesigned (not necessarily even-spaced) temporal grid points , we first generate a training dataset by solving open-loop optimal control problems on the time interval starting from points in , a set of initial points sampled from , and train the initial model . Under the control of , the generated trajectory deviates more and more from the optimal trajectory. So we stop at time , i.e., compute the IVPs using as the closed-loop control and points in as the initial points on the time interval , and then on the interval solve new optimal paths that start at the end-points of the previous IVPs. The new training dataset is then composed of new data (between and T) and the data before time in the dataset , and we train a new model using . We repeat this process to the predesigned temporal grid points until end up with . In other words, in each iteration, the adaptively sampled data replaces the corresponding data (defined on the same time interval) in the training dataset (the size of training data remains the same). The whole process can be formulated as Algorithm 1, and we refer to Figure 1 for an illustration of the algorithm’s mechanism. We call this method IVP enhanced sampling method because the initial points of the open-loop optimal control problems are sampled by solving the IVP with the up-to-date NN controller. It is worth noting that the later iterations require less effort in labeling data as the trajectories are shorter and thus easier to solve.

It is worthwhile mentioning that the IVP enhanced sampling method is versatile enough to combine other improvements for closed-loop optimal control problems, such as efficient open-loop control problem solvers (Kang et al., 2021; Zang et al., 2022) or specialized neural network structures (Nakamura-Zimmerer et al., 2020; 2021b; 2022). One design choice regarding the network structure in the IVP enhanced sampling method is whether to share the same network among different time intervals. We choose to use the same network for all the time intervals in the following numerical examples, but the opposite choice is also feasible.

4 Comparison with Other Adaptive Sampling Methods

In this section, we review existing literature on adaptive sampling methods for the closed-loop optimal control problem.

We start with the methods in imitation learning (Hussein et al., 2017), which aim to learn the expert’s control function. Our task can be viewed as an imitation problem if we take the optimal control function as the expert’s control. With the same argument, we know the distribution mismatch phenomenon also exists therein. However, there is a key difference regarding the mechanism of data generation between the two settings: in imitation learning, it is often assumed that one can easily access the expert’s behavior at every time-state pair while in the optimal control problem, it is much more computationally expensive to access since one must solve an open-loop optimal control problem. This difference affects algorithm design fundamentally. Take the forward training algorithm (Ross & Bagnell, 2010), a popular method in imitation learning for mitigating distribution mismatch, as an example. To apply it to the closed-loop optimal control problem, we first need to consider a discrete-time version of the problem with a sufficiently fine time grid: . At each time step , we learn a policy function where the state in the training data are generated by sequentially applying and the labels are generated by solving the open-loop optimal solutions with as the initial time-state pair. Hence, the open-loop control solver is called with numbers proportionally to the discretized time steps , and only the first value on each optimal control path is used for learning. In contrast, in Algorithm 1, we can use much more values over the optimal control paths in learning, which allows its temporal grid for sampling to be much coarser than the grid in the forward training algorithm, and the total cost of solving open-loop optimal control problems is much lower.

Another popular method in imitation learning is DAGGER (Dataset Aggregation) (Ross et al., 2011), which can also be applied to help sampling in the closed-loop optimal control problem. In DAGGER, in order to improve the current closed-loop controller , one solves IVPs using over starting from various initial states and collect the states on a time grid . The open-loop control problems are then solved with all the collected time-state pairs as the initial time-state pair, and all the corresponding optimal solutions are used to construct a dataset for learning a new controller. The process can be repeated until a good controller is obtained. The time-state selection in DAGGER is also related to the distribution mismatch phenomenon, but somehow different from the IVP enhanced sampling. Take the data collection using the controller in the first iterative step for example. The IVP enhanced sampling focuses on the states at the time grid while DAGGER collects states at all the time grids. If is still far from optimal, the data collected at later time grids may be irrelevant to or even mislead training due to error accumulation in states. In Appendix G, we reports more theoretical and numerical comparison between DAGGER and IVP enhanced sampling, which indicates DAGGER performs less satisfactorily.

Except for the forward training algorithm and DAGGER, there are other adaptive sampling methods for the closed-loop optimal control problems. Nakamura-Zimmerer et al. (2021a) propose an adaptive sampling method that prefers to choose the initial points with large gradients of the value function as the value function tends to be steep and hard to learn around these points. Landry et al. (2021) propose to sample the initial points on which the errors between predicted values from the NN and optimal values are large. These two adaptive sampling methods both focus on finding points that are not learned well but ignore the influence of the accumulation of the distribution mismatch over time brought by controlled dynamics. We will show in Section 6 that the IVP enhanced sampling method can outperform such sampling methods.

5 Theoretical Analysis on an LQR Example

In this section, we analyze the superiority of the IVP sampling method by considering the following uni-dimensional linear quadratic regulator (LQR) problem:

| s.t. |

where is a positive integer, and . Classical theory on linear quadratic control (see, e.g. Sontag (2013)) gives the following explicit linear form of the optimal controls:

| (open-loop optimal control) | |||||

| (closed-loop optimal control) |

We consider the following two models to approximate the closed-loop optimal control function with parameter :

| (5) | |||

| (6) |

Since there will be no error in learning a linear model when the data is exact, to mimic the errors encountered when learning neural networks, throughout this section, we assume the data has certain noise. To be precise, for any and , the open-loop optimal control solver gives the following approximated optimal path:

where is a small positive number to indicate the scale of the error and is a normal random variable whose mean is and variance is . In other words, the obtained open-loop control is still constant in each path, just like the optimal open-loop control, but perturbed by a random constant. The random variables in different approximated optimal paths starting from different or are assumed to be independent.

We compare the vanilla supervised-learning-based method and IVP enhanced sampling method theoretically for the first model and numerically for the second model (in Appendix B). In the vanilla method, we randomly sample initial points from a standard normal distribution and use corresponding optimal paths to learn the controller. In the IVP enhanced sampling method, we randomly sample initial points from a standard normal distribution, set the temporal grid points for sampling as , and perform Algorithm 1. In both methods, the open-loop optimal control solver is called times in total.

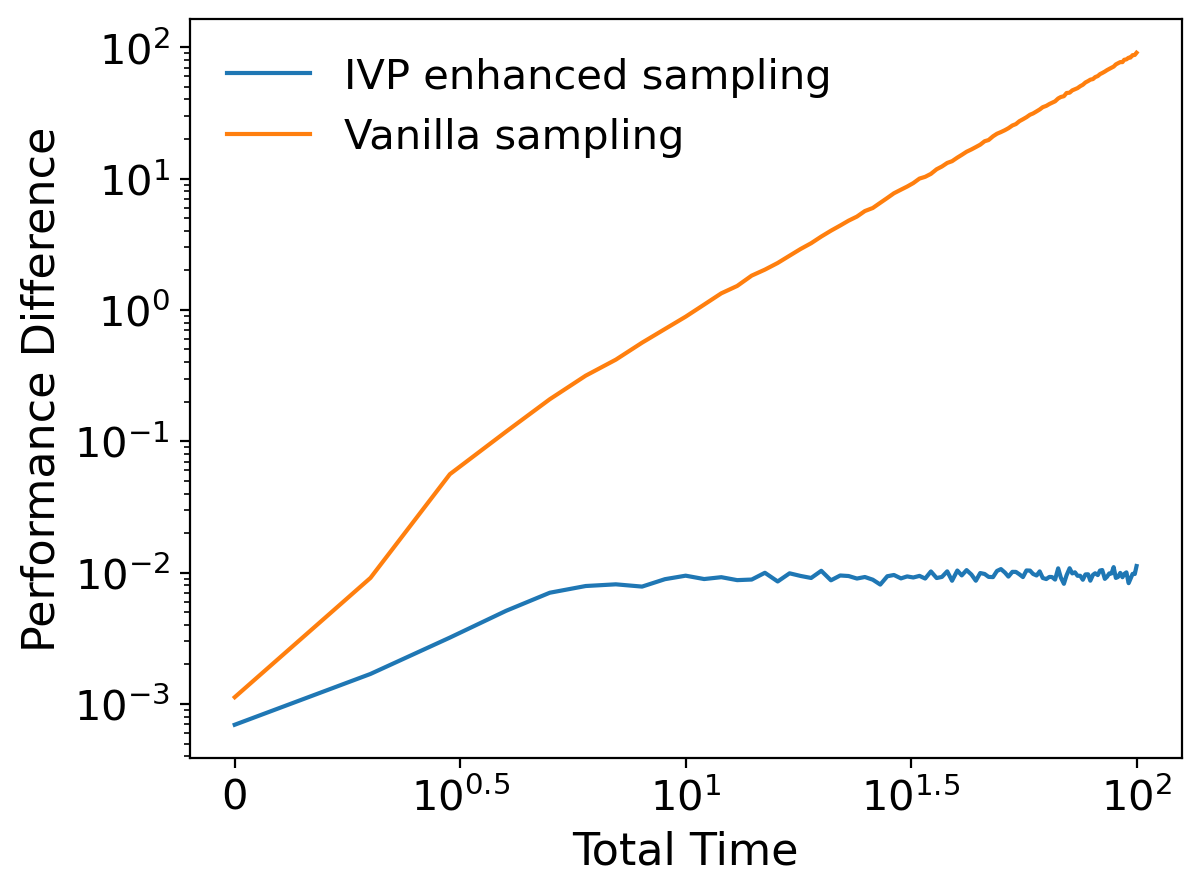

Theorem 1 compares the performance of the vanilla method and the IVP enhanced sampling method under Model 1 (5) . The more detailed statement and proof can be found in Appendix B. This theorem shows that both the distribution difference and performance difference with respect to the optimal solution for the vanilla method will increase when increases, while they are always constantly bounded for the IVP enhanced sampling method. Therefore, compared to the vanilla method, the IVP enhanced sampling method mitigates the distribution mismatch phenomenon and significantly improves the performance when is large.

Theorem 1.

Under Model 1 (5), let , and be the optimal controller, the controller learned by the vanilla method, and the controller learned by the IVP enhanced sampling method, respectively. Define IVPs:

-

1.

If is a random variable following a standard normal distribution, which is independent of the initial points and noises in the training process. Let and be the state variables in the training data of the vanilla method and the last iteration of the IVP enhanced sampling method. Then, , , and are normal random variables, and

(7) -

2.

If is a fixed initial point, define the total cost

Then,

6 The Optimal Landing Problem of Quadrotor

In this section, we test the IVP enhanced sampling method on the optimal landing problem of a quadrotor. We consider the full quadrotor dynamic model with 12-dimensional state variable and 4-dimensional control variable (Bouabdallah et al., 2004; Madani & Benallegue, 2006; Mahony et al., 2012). We aim to find optimal landing paths from some initial states to a target state with minimum control efforts during a fixed time duration . The open-loop optimal solutions are obtained by solving the corresponding two-point boundary value problems with the space-marching technique (Zang et al., 2022). See Appendix C, D and E for more details.

We sample initial points for generating training data and use a fully-connected neural network to approximate the optimal control. The temporal grid points on which we do IVP enhanced sampling is . After learning, we use learned models to run the initial value problem at 500 training initial points and show the similarity between paths controlled by the NN controller and their corresponding training data. In Figure 2, the left sub-figure shows the average pointwise distance between data reached by the NN controller and corresponding training data at different times. The right sub-figure shows the maximum mean discrepancy (Borgwardt et al., 2006) between these two datasets using Gaussian kernel . In both figures, there are jumps at and since the NN-controlled path is continuous across time while training data is discontinuous at locations where we do IVP enhanced sampling. It can be seen that without adaptive sampling (after iteration 0), the discrepancy between the states reached by the NN controller and training data is large. With our method, they get closer to each other as the iteration goes.

Next, we check the performance of the learned NN controller. We repeat experiments five times using different random seeds. Figure 3 (left) shows the cumulative distribution function of the ratio between NN-controlled cost and optimal cost on 200 test initial points. The light solid line represents the mean of the cumulative distribution for five experiments, while the shaded area represents the mean cost ratio the standard deviation. We also test the performance of the ensembles, which use the average output of five networks. The ensemble results from three iterations are represented by the three dark curves in the figure. Without any adaptive sampling, the performance of model is poor. In contrast, the NN model is very close to the optimal policy after two rounds of adaptive sampling. The ensemble of networks after iteration 2 performs the best. More statistics of the results are provided in Appendix E.

Then we compare our method with four other methods. We also repeat every experiment five times with different random seeds. As data generation is the most time-consuming part, for the sake of fairness, we keep the number of solving open-loop problems the same (1500) among all the methods (except the last method). The first method is training a model on directly sampled 1500 optimal paths (called vanilla sampling). The second method is the adaptive sampling (AS) method proposed by Nakamura-Zimmerer et al. (2021a) that chooses initial points with large gradient norms. This is equivalent to choosing initial points whose optimal control has large norms, and we refer to this method as AS w. large u. With a little modification, the third method, AS w. large v, is to choose initial points whose total costs are large under the latest NN controller. The last adaptive sampling method, AS w. bad v, is a variant of the SEAGuL algorithm (Sample Efficient Adversarially Guided Learning) (Landry et al., 2021). The original SEAGuL algorithm proposes to use a few gradient ascent updates to find initial points with large gaps between the learned values and optimal values. Here we give this method more computational budget to solve more open-loop optimal control problems to find such initial points. The cumulative distribution functions of cost ratios of the above methods are shown in Figure 3 (right), which clearly demonstrate the superiority of the IVP enhanced sampling method. More details about the implementation and results of these methods are provided in Appendix E.

We also test the NN controllers obtained from different sampling methods in the presence of observation noises. The detailed results are provided in Appendix E. It is observed that when measurement errors exist, closed-loop controllers are more reliable than the open-loop controller and the one trained by the IVP enhanced sampling method performs best among all the considered methods. Finally, we test four different choices of temporal grid points in Algorithm 1. The results listed in Appendix E show that our algorithm is robust to the choice of temporal grid points.

7 The Optimal Reaching Problem of a 7-DoF Manipulator

In this section, we consider the optimal reaching problem on a 7-DoF torque-controlled manipulator, the KUKA LWR iiwa R820 14 (Kuka, ; Bischoff et al., 2010). See the figure in appendix F for an illustration of this task. Let be the state of the system where are joint angles and velocities of the manipulator, respectively. Our goal is to find the optimal torque that drives the manipulator from to in seconds and minimizes a quadratic type cost. See Appendix F for details of the problem and the experiment configurations. To obtain training data, we use differential dynamic programming (Jacobson & Mayne, 1970) implemented in the Crocoddy library (Mastalli et al., 2020).

We use the QRNet (Nakamura-Zimmerer et al., 2020; 2021b) as the backbone network in this example (see Appendix F for details) and evaluate networks trained in six different ways: four of them are trained using Algorithm 1 with different choices of temporal grid points for adaptive sampling and two of them are trained by vanilla sampling method with (Vanilla300) and (Vanilla900) trajectories separately. All four networks (AS1–AS4) trained by the IVP enhanced sampling have initial training data of trajectories and three iterations (), i.e., each of them requires solving the open-loop solution times in total for generating training data. The difference of the four networks lies in that they use different temporal grids ( and ) for enhanced sampling. Each experiment has been independently run five times. We again plot the cumulative distribution functions of cost ratios (clipped at 2.0) between the NN-controlled cost and optimal cost in Figure LABEL:fig:manipulator (left: mean standard deviation and ensemble of AS4 in different iterations; middle: average of final networks trained by different schemes). More details and results are provided in Appendix F. We find that adding more data in the vanilla sampling method has very limited effects on improvement while the IVP enhanced sampling greatly improves the performance. Furthermore, such improvement is again robust to different choices of temporal grid points (AS1–AS4).

We additionally test the performance of the network trained by our adaptive sampling method in the presence of measurement errors. At each simulation timestep, the network’s input states are corrupted by a noise uniformly sampled from for . See Figure LABEL:fig:manipulator (right) for the results on the best model trained from AS1–AS4. The NN controller performs well at , and there are more than of cases in which our controller achieves a ratio less than at .

8 Conclusion and Future Work

In this work, we propose the IVP enhanced sampling method to overcome the distribution mismatch problem in the supervised-learning-based approaches for the closed-loop optimal control problem. Both theoretical and numerical results show that the IVP enhanced sampling method significantly improves the performance of the learned NN controller and outperforms other adaptive sampling methods. There are a few directions worth exploring in future work. In the IVP enhanced sampling method, one choice we need to make is the temporal grid points for adaptive sampling. We recommend that at each iteration, one can compute the distance between the training data and data reached by the NN controller at different times (see Figure 2 for an example) and choose the time at which the distance starts to increase quickly as the temporal grid for adaptive sampling. We observe that the IVP enhanced sampling method performs well using this strategy. It will be ideal to make this process more systematic. Another direction is to design more effective approaches utilizing the training data. In Algorithm 1 (lines 8–9), at each iteration, we replace parts of the training data with the newly collected data, and hence some optimal labels are thrown away, which are costly to obtain. An alternative choice is to augment data directly, i.e., setting in line 9. Numerically, we observe that this choice gives similar performance to the version used in Algorithm 1, which suggests that so far the dropped data provides little value for training; see Appendix E and F for details. But it is still possible to find smarter ways to utilize them to improve performance. We also need to evaluate the IVP enhanced sampling method for problems with other features like state/control constraints. Furthermore, the IVP enhanced sampling method can be straightforwardly applied to learning general dynamics from multiple trajectories as the controlled system under the optimal policy can be viewed as a special dynamical system. It is of interest to investigate its performance in such general settings. Finally, theoretical analysis beyond the LQR setting is also an interesting and important problem.

References

- Ainsworth et al. (2021) Samuel Ainsworth, Kendall Lowrey, John Thickstun, Zaid Harchaoui, and Siddhartha Srinivasa. Faster policy learning with continuous-time gradients. In Learning for Dynamics and Control, pp. 1054–1067. PMLR, 2021.

- Bellman (1957) Richard E. Bellman. Dynamic Programming. Princeton University Press, 1957.

- Betts (1998) John T. Betts. Survey of numerical methods for trajectory optimization. Journal of Guidance, Control, and Dynamics, 21(2):193–207, 1998.

- Bischoff et al. (2010) Rainer Bischoff, Johannes Kurth, Günter Schreiber, Ralf Koeppe, Alin Albu-Schäffer, Alexander Beyer, Oliver Eiberger, Sami Haddadin, Andreas Stemmer, Gerhard Grunwald, et al. The kuka-dlr lightweight robot arm-a new reference platform for robotics research and manufacturing. In ISR 2010 (41st international symposium on robotics) and ROBOTIK 2010 (6th German conference on robotics), pp. 1–8. VDE, 2010.

- Borgwardt et al. (2006) Karsten M Borgwardt, Arthur Gretton, Malte J Rasch, Hans-Peter Kriegel, Bernhard Schölkopf, and Alex J Smola. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics, 22(14):e49–e57, 2006.

- Böttcher et al. (2022) Lucas Böttcher, Nino Antulov-Fantulin, and Thomas Asikis. AI pontryagin or how artificial neural networks learn to control dynamical systems. Nature communications, 13(1):1–9, 2022.

- Bouabdallah et al. (2004) Samir Bouabdallah, Pierpaolo Murrieri, and Roland Siegwart. Design and control of an indoor micro quadrotor. In IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA’04. 2004, volume 5, pp. 4393–4398. IEEE, 2004.

- Carpentier & Mansard (2018) Justin Carpentier and Nicolas Mansard. Analytical derivatives of rigid body dynamics algorithms. In Robotics: Science and Systems, 2018.

- Carpentier et al. (2015–2021) Justin Carpentier, Florian Valenza, Nicolas Mansard, et al. Pinocchio: fast forward and inverse dynamics for poly-articulated systems. https://stack-of-tasks.github.io/pinocchio, 2015–2021.

- Carpentier et al. (2019) Justin Carpentier, Guilhem Saurel, Gabriele Buondonno, Joseph Mirabel, Florent Lamiraux, Olivier Stasse, and Nicolas Mansard. The pinocchio c++ library: A fast and flexible implementation of rigid body dynamics algorithms and their analytical derivatives. In 2019 IEEE/SICE International Symposium on System Integration (SII), pp. 614–619. IEEE, 2019.

- Clevert et al. (2016) Djork-Arné Clevert, Thomas Unterthiner, and Sepp Hochreiter. Fast and accurate deep network learning by exponential linear units (elus). In Yoshua Bengio and Yann LeCun (eds.), 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016.

- E et al. (2021) Weinan E, Jiequn Han, and Linfeng Zhang. Machine-learning-assisted modeling. Physics Today, 74:36–41, 2021. ISSN 0031-9228. doi: 10.1063/PT.3.4793.

- E et al. (2022) Weinan E, Jiequn Han, and Jihao Long. Empowering optimal control with machine learning: A perspective from model predictive control. arXiv preprint arXiv:2205.07990, 2022.

- Glorot & Bengio (2010) Xavier Glorot and Y. Bengio. Understanding the difficulty of training deep feedforward neural networks. Journal of Machine Learning Research - Proceedings Track, 9:249–256, 01 2010.

- Han & E (2016) Jiequn Han and Weinan E. Deep learning approximation for stochastic control problems. arXiv preprint arXiv:1611.07422, 2016.

- Hussein et al. (2017) Ahmed Hussein, Mohamed Medhat Gaber, Eyad Elyan, and Chrisina Jayne. Imitation learning: A survey of learning methods. ACM Computing Surveys (CSUR), 50(2):1–35, 2017.

- Jacobson & Mayne (1970) David H Jacobson and David Q Mayne. Differential dynamic programming. Number 24 in Modern analytic and computational methods in science and mathematics. Elsevier Publishing Company, 1970.

- Kakade & Langford (2002) Sham Kakade and John Langford. Approximately optimal approximate reinforcement learning. In In Proc. 19th International Conference on Machine Learning. Citeseer, 2002.

- Kang et al. (2021) Wei Kang, Qi Gong, Tenavi Nakamura-Zimmerer, and Fariba Fahroo. Algorithms of data generation for deep learning and feedback design: A survey. Physica D: Nonlinear Phenomena, 425:132955, 2021.

- Kierzenka & Shampine (2001) Jacek Kierzenka and Lawrence F Shampine. A bvp solver based on residual control and the maltab pse. ACM Transactions on Mathematical Software (TOMS), 27(3):299–316, 2001.

- Kingma & Ba (2015) Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In Yoshua Bengio and Yann LeCun (eds.), 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015.

- (22) Kuka. Kuka ag, 2022. URL https://www.kuka.com/.

- Landry et al. (2021) Benoit Landry, Hongkai Dai, and Marco Pavone. Seagul: Sample efficient adversarially guided learning of value functions. In Learning for Dynamics and Control, pp. 1105–1117. PMLR, 2021.

- Levine & Koltun (2014) Sergey Levine and Vladlen Koltun. Learning complex neural network policies with trajectory optimization. In International Conference on Machine Learning, pp. 829–837. PMLR, 2014.

- Liberzon (2011) Daniel Liberzon. Calculus of variations and optimal control theory: a concise introduction. Princeton university press, 2011.

- Long & Han (2022) Jihao Long and Jiequn Han. Perturbational complexity by distribution mismatch: A systematic analysis of reinforcement learning in reproducing kernel hilbert space. Journal of Machine Learning vol, 1:1–34, 2022.

- Madani & Benallegue (2006) Tarek Madani and Abdelaziz Benallegue. Control of a quadrotor mini-helicopter via full state backstepping technique. In Proceedings of the 45th IEEE Conference on Decision and Control, pp. 1515–1520. IEEE, 2006.

- Mahony et al. (2012) Robert Mahony, Vijay Kumar, and Peter Corke. Multirotor aerial vehicles: Modeling, estimation, and control of quadrotor. IEEE Robotics and Automation magazine, 19(3):20–32, 2012.

- Mastalli et al. (2020) Carlos Mastalli, Rohan Budhiraja, Wolfgang Merkt, Guilhem Saurel, Bilal Hammoud, Maximilien Naveau, Justin Carpentier, Ludovic Righetti, Sethu Vijayakumar, and Nicolas Mansard. Crocoddyl: An efficient and versatile framework for multi-contact optimal control. In 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 2536–2542. IEEE, 2020.

- Nakamura-Zimmerer et al. (2020) Tenavi Nakamura-Zimmerer, Qi Gong, and Wei Kang. QRnet: optimal regulator design with lqr-augmented neural networks. IEEE Control Systems Letters, 5(4):1303–1308, 2020.

- Nakamura-Zimmerer et al. (2021a) Tenavi Nakamura-Zimmerer, Qi Gong, and Wei Kang. Adaptive deep learning for high-dimensional Hamilton–Jacobi–Bellman equations. SIAM Journal on Scientific Computing, 43(2):A1221–A1247, 2021a.

- Nakamura-Zimmerer et al. (2021b) Tenavi Nakamura-Zimmerer, Qi Gong, and Wei Kang. Neural network optimal feedback control with enhanced closed loop stability. arXiv preprint arXiv:2109.07466, 2021b.

- Nakamura-Zimmerer et al. (2022) Tenavi Nakamura-Zimmerer, Qi Gong, and Wei Kang. Neural network optimal feedback control with guaranteed local stability. arXiv preprint arXiv:2205.00394, 2022.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems, 32, 2019.

- Pontryagin (1987) Lev Semenovich Pontryagin. Mathematical theory of optimal processes. CRC press, 1987.

- Rao (2009) Anil V. Rao. A survey of numerical methods for optimal control. Advances in the Astronautical Sciences, 135(1):497–528, 2009.

- Ross & Bagnell (2010) Stéphane Ross and Drew Bagnell. Efficient reductions for imitation learning. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, pp. 661–668. JMLR Workshop and Conference Proceedings, 2010.

- Ross et al. (2011) Stéphane Ross, Geoffrey Gordon, and Drew Bagnell. A reduction of imitation learning and structured prediction to no-regret online learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, pp. 627–635. JMLR Workshop and Conference Proceedings, 2011.

- Sontag (2013) Eduardo D Sontag. Mathematical control theory: deterministic finite dimensional systems, volume 6. Springer Science & Business Media, 2013.

- Tedrake & the Drake Development Team (2019) Russ Tedrake and the Drake Development Team. Drake: Model-based design and verification for robotics, 2019.

- Zang et al. (2022) Yaohua Zang, Jihao Long, Xuanxi Zhang, Wei Hu, Weinan E, and Jiequn Han. A machine learning enhanced algorithm for the optimal landing problem. In 3rd Annual Conference on Mathematical and Scientific Machine Learning, pp. 1–20. PMLR, 2022.

- Zhang et al. (2018) Linfeng Zhang, Han Wang, and Weinan E. Reinforced dynamics for enhanced sampling in large atomic and molecular systems. The Journal of chemical physics, 148(12):124113, 2018.

- Zhao et al. (2022) Zhigen Zhao, Simiao Zuo, Tuo Zhao, and Ye Zhao. Adversarially regularized policy learning guided by trajectory optimization. In Learning for Dynamics and Control Conference, pp. 844–857. PMLR, 2022.

Appendix A Supervised-learning-based Approach through the Lens of ELT Algorithm

As pointed out in the introduction, the supervised-learning-based approach empowered by the adaptive sampling can be interpreted as an instance of the exploration-labeling-training (ELT) algorithms (Zhang et al., 2018; E et al., 2021) for closed-loop optimal control problems. Through the lens of the ELT algorithm, there are at least three aspects to improve the efficiency of the supervised-learning-based approach for the closed-loop optimal control problem:

-

•

Use the adaptive sampling method. Adaptive sampling methods aim to sequentially choose the time-state pairs based on previous results to improve the performance of the NN controller. This corresponds to the first step in the ELT algorithm and is the main focus of this work.

-

•

Improve the efficiency of data generation, i.e., solving the open-loop optimal control problems. Although the open-loop optimal control problem is much easier than the closed-loop optimal control problem, its time cost cannot be neglected and the efficiency varies significantly with different methods. This corresponds to the second step in the ELT algorithm and we refer to Kang et al. (2021) for a detailed survey.

-

•

Improve the learning process of the neural networks. This corresponds to the third step in the ELT algorithm. The recent works Nakamura-Zimmerer et al. (2020; 2021b; 2022) focus on the structure of the neural networks and design a special ansatz such that the NN controller is close to the linear quadratic controller around the equilibrium point to improve the stability of the NN controller.

Appendix B Detailed Analysis of the LQR Example

In this section, we give the detailed settings for the comparison in Section 5 and the detailed statement and proof of Theorem 1. Through this section, all symbols having a hat are open-loop optimal paths sampled for training, e.g. . Let denote a single state instead of a state trajectory. The clean symbol without hat or tilde is the IVP solution generated by specific controllers which are specified in the subscript; e.g. are trajectories generated by which are optimal, vanilla, and IVP enhanced controllers, respectively. The positive integer in the subscript always denotes the index of the optimal path. Symbols with superscript are related to the -th iteration of the IVP enhanced sampling method.

For the vanilla method, we first randomly sample initial states from a standard normal distribution where is a positive integer (recalling is a positive integer). Then approximated optimal paths are collected starting at :

| (8) |

where are i.i.d. normal random variables with mean and variance , and independent of initial states.

Finally, the parameters are learned by solving the following least square problems:

| (9) |

Optimizing independently for each , we have

for the first and second models, respectively. We will use to denote the closed-loop controller determined in this way.

For the IVP enhanced sampling method, we choose and the temporal grid points for . We first sample initial points from the normal standard distribution, denote the parameters optimized at -th iteration as and initialize . At the -th iteration (), we use to solve the IVPs on the time horizon

| (10) |

and collect as . Here we omit the controller subscript for simplicity, i.e. . We then compute approximated optimal paths starting from at :

| (11) |

where are i.i.d. normal random variables with mean and variance , and independent of . Note that and are only defined in (for ), we then fill their values in interval with values from previous iteration,

| (12) |

Finally, we solve the least square problems to determine :

| (13) |

We will use to denote the closed-loop controller , the closed-loop controller generated in the -th iteration by the IVP enhanced sampling method. The theorem below gives the performance of and when using Model 1 (5).

Theorem 1’.

Under Model 1 (5), define the IVPs generated by , and as follows:

-

1.

If is a random variable following a standard normal distribution, which is independent of the initial points / and noises / in the training process, the state variables in the training data and in the IVP from the vanilla method follow normal distributions and satisfy:

(14) On the other hand, the state variables in the training data and in the IVP from the IVP enhanced sampling method also follow and satisfy:

(15) -

2.

If is a fixed initial point, define the total cost

Then,

(16) (17)

Proof.

We first give the closed-form expressions of and using Model 1 (5). Recalling and given in equation (8), we have

Therefore, recalling is learned through the least square problem (9), we have

| (18) |

where

To compute , recalling equations (11) and (12), when , and , we have

| (19) | |||

| (20) |

Therefore,

Hence, recalling is learned through the least square problem (13), we have, when

| (21) |

where

Equation (21) also holds when and .

We then compute the starting points in the IVP enhanced sampling method. By equation (12), we know that when and , . Together with equation (10), we know that when , for , and for , which implies . Therefore, for , when , we have

Solving the above ODE, we get the solution

| (22) |

Hence, by definition, for ,

Utilizing the above recursive relationship, we obtain, for 111In this section, we take by convention that summation if .,

| (23) |

Now we are ready to prove the main results of the Theorem. First, for equation (14), using the control (18), we have

Solving this ODE gives

| (24) |

Combining the last equation with the fact that

, and are independent normal random variables and

we know that and are normal random variables with

| (25) |

Next, we prove the equation (15). for and , using the control (21), we have

Solving the above ODE with the initial condition , we can get the solution

| (26) |

when and . The above equation also holds when and .

On the other hand, combining equations (20) and (23), we know that when and or and ,

The above equation also holds when and .

Combining the last equation with equation (26) and the fact that , and are independent normal random variables and

we know that and are normal random variables with

We then prove equations (16) and (17). First, with the optimal solution

we have

Recalling equation (24) and plugging (24) into (18), we know that

Hence,

which gives

To compute the difference between and , we first notice that

| (27) | ||||

| (28) |

By the independence of , we know that

Meanwhile, noticing that

it is straightforward to compute that

| (29) |

where

| (30) | ||||

| (31) | ||||

| (32) |

and

| (33) | ||||

| (34) | ||||

| (35) | ||||

| (36) |

Therefore,

| (37) |

∎

Next we present the numerical results when we use Model 2 (6) to fit the closed-loop optimal control.

In the following experiments, we set , and . Figure 5 (left) compares the optimal path with and , the IVPs generated by the controllers learned by the vanilla method and IVP enhanced sampling method (all three paths start at ). In this experiment, we set and . Figure 5 (middle) shows how the time influences the differences of the second order moments between the state distribution of the training data and the state distribution of the IVP generated by learned controllers in the vanilla method and the IVP enhanced sampling method. We set the total time and . Figure 5 (right) compares the performance of the vanilla method and IVP enhanced sampling method on different total times . The performance difference is an empirical estimation of and when follows a standard normal distribution. In this experiment, for each method, we set and learn 10 different controllers with different realizations of the training data and calculate the average of the performance difference on 1000 randomly sampled initial points (from a standard normal distribution) and 10 learned controllers.

Appendix C Full Dynamics of Quadrotor

In this section, we introduce the full dynamics of quadrotor (Bouabdallah et al., 2004; Madani & Benallegue, 2006; Mahony et al., 2012) that are considered in Section 6. The state variable of a quadrotor is where is the position of quadrotor in Earth-fixed coordinates, is the velocity in body-fixed coordinates, (roll, pitch, yaw) is the attitude in terms of Euler angles in Earth-fixed coordinates, and is the angular velocity in body-fixed coordinates. Control is composed of total thrust and body torques from the four rotors. Then we can model the quadrotor’s dynamics as

| (38) |

with matrix and defined as

The constant mass and inertia matrix are the parameters of the quadrotor, where , and are the moments of inertia of the quadrotor in the -axis, -axis, and -axis, respectively. We set and which are the same system parameters as in (Madani & Benallegue, 2006). The constants denote the gravity vector where is the acceleration of gravity on Earth. The direction cosine matrix represents the transformation from the Earth-fixed coordinates to the body-fixed coordinates:

and the attitude kinematic matrix relates the time derivative of the attitude representation with the associated angular rate:

Note that in practice the quadrotor is directly controlled by the individual rotor thrusts , and we have the relation with

where is the distance from the rotor to the UAV’s center of gravity and is a constant that relates the rotor angular momentum to the rotor thrust (normal force). So once we obtain the optimal control , we are able to get the optimal immediately by the relation .

Appendix D PMP and Space Marching Method

In this section we introduce the open-loop optimal problem solver used for solving the optimal landing problem of a quadrotor. The solver is based on Pontryagin’s minimum principle (PMP) (Pontryagin, 1987) and space-marching method (Zang et al., 2022). The optimal landing problem is defined as

| (39) | ||||

| s.t. |

where and denote the state trajectory and control trajectory, respectively, and is the full dynamics of quadrotor introduced in Appendix C. By PMP, problem (39) can be solved through solving a two-point boundary value problem (TPBVP). Introduce costate variable and Hamiltonian

| (40) |

The TPBVP is defined as

| (41) |

We have the boundary conditions:

| (42) |

and the optimal control should minimize Hamiltonian at each :

| (43) |

We use solve_bvp function of scipy (Kierzenka & Shampine, 2001) to solve TPBVP (41)-(43) and set tolerance to , max_nodes to . We note that when the initial state is far from the target state , solving the TPBVP directly often fails. Thus we use the space-marching method proposed in Zang et al. (2022). We uniformly select points in the line segment from to , and denote them as according to their increasing distances to (). These TPBVPs will be solved in order and at every step we use the previous solution as the initial guess to the current problem.

Appendix E Experiment Details and More Results of the Optimal Landing Problem

In this section, we give more details about implementation and numerical results in Section 6. We aim to find the optimal controls to steer the quadrotor from some initial states to a target state . The distribution of the initial state of interest is the uniform distribution on the set . We consider a quadratic running cost:

| (44) |

where represents the reference control that balances with gravity and represents the weight matrix characterizing the cost of deviating from the reference control. The terminal cost is

where . We set the entries in the terminal cost larger than the running cost as we want to give the endpoint more penalty for deviating from the landing target.

We sample initial points for training. On every optimal path, we select time-state-action tuples with time step . Thus the number of training data is always at every iteration. Note that when solving BVPs and IVPs, we use denser time grids to ensure the solution is accurate enough. The neural network models in all quadrotor experiments have the same structure with 13-dimensional input (12 for states and 1 for time) and 4-dimensional output. The networks are fully-connected with 2 hidden layers; each layer has 128 hidden neurons and we use as the activation function. The inputs are scaled to where the upper bound and lower bound are the maximum and minimum of the training dataset. Since the activation fucntion is , we adapt Xavier initialization (Glorot & Bengio, 2010) before training. We train the neural network by the Adam (Kingma & Ba, 2015) optimizer with learning rate , batch size , and epochs. At every iteration of IVP enhanced sampling method, we train a new neural network from scratch. Our model and training programs are implemented by PyTorch (Paszke et al., 2019). The experiments of quadrotor are conducted on a Macbook Pro with a Apple M1 pro core. We repeat every experiment 5 times with different random seeds.

In the first experiment, we use our IVP enhanced sampling method and choose temporal grid points . In this experiment, average time of training a neural network is about 6.6 minutes. It takes about 5.1s to solve an optimal path whose total time at iteration 0, takes about 1.91s to solve an optimal path whose total time at iteration 1, and takes about 1.3s at iteration 2. The performance of networks obtained in different iterations is shown in Figure 3 (left). More statistics of the ratios between the NN-controlled costs and the optimal costs during three iterations are reported in Table 1 (a). Table 1 (b) shows the results of ensemble of networks, which uses the average of output from five networks as its output. When the original network’s performance is not too poor, the results after ensemble generally improve. However, when the original network performs extremely poorly, there won’t be significant changes in the results after ensemble. We also test an alternative way to construct dataset during the IVP enhanced sampling as discussed in Section 8, i.e., setting in Algorithm 1 line 9. We denote the final policy obtained in this approach as and report the statistics of corresponding cost ratios in Table 1 as well. All ratios are clipped at . The performance of is similar to that of , suggesting that so far the dropped data provides little value for training. Additionally, as we always train networks 1000 epochs and this alternative approach has more training data, the training time of is 1.5 times that of others.

We also illustrate the trajectories of states controlled by learned policy and optimal policy in Figure 6. The path controlled by matches the optimal path at the beginning but deviates around . Then the path controlled by fits the optimal path more and deviates around . Finally, the path controlled by matches the optimal path till the terminal time. Note that the cost of three controlled paths is 3296.18, 119.91, 6.69, respectively, and the optimal cost is 6.32.

| Policy | Mean | 90% | 75% | Median |

| 16.43 ( 6.16 ) | 17.60 ( 4.80 ) | 16.85 ( 6.29 ) | 16.54 ( 6.92 ) | |

| 3.69 ( 1.62 ) | 9.11 ( 5.54 ) | 4.09 ( 2.16 ) | 2.06 ( 0.72 ) | |

| 1.17 ( 0.09 ) | 1.22 ( 0.09 ) | 1.11 ( 0.04 ) | 1.06 ( 0.02 ) | |

| 1.20 ( 0.11 ) | 1.15 ( 0.03 ) | 1.07 ( 0.01 ) | 1.03 ( 0.00 ) |

| Policy | Mean | 90% | 75% | Median |

| ensemble | ||||

| ensemble | ||||

| ensemble |

In the experiments of comparing different methods, the three adaptive sampling methods are processed as follows. The initial network is the same policy as in the IVP enhanced sampling method, i.e., the policy after iteration 0 in the IVP enhanced sampling method, which is trained on 500 optimal paths. Then we will sequentially add 400, 300, 300 paths to training data so we use 1500 optimal paths to train the final network (solving 1500 open-loop optimal control problems namely). When sampling new training paths, we will randomly sample 2 initial points, calculate some indices using the latest policy and preserve the preferable one. For AS w. large u, we will calculate the norms of the control variables of these two points at time 0 and choose the one with larger norms. For AS w. large v, we will solve IVPs to obtain the NN-controlled costs and choose the larger one. For AS w. bad v, we will solve 2 TPBVPs corresponding to these two points and choose the one with a large difference between the NN-controlled value and optimal value. Note that in all other methods, the time span of the solved open-loop problem is always . Nevertheless, in our method, the time span of the optimal paths to be solved is getting shorter and shorter along iterations, which means it takes less time to solve. More statistics are shown in Table 2.

| Methods | Mean | 90% | 75% | Median |

| IVP enhanced sampling | 1.17 ( 0.09 ) | 1.22 ( 0.09 ) | 1.11 ( 0.04 ) | 1.06 ( 0.02 ) |

| AS w. bad v | 1.45 ( 0.18 ) | 1.93 ( 0.33 ) | 1.47 ( 0.21 ) | 1.24 ( 0.12 ) |

| AS w. large v | 1.94 ( 0.09 ) | 2.97 ( 0.38 ) | 1.92 ( 0.19 ) | 1.46 ( 0.08 ) |

| AS w. large u | 1.75 ( 0.33 ) | 2.39 ( 0.67 ) | 1.76 ( 0.40 ) | 1.44 ( 0.23 ) |

| Vanilla sampling | 2.15 ( 0.96 ) | 3.18 ( 1.71 ) | 2.45 ( 1.20 ) | 1.89 ( 0.78 ) |

We further test the performance of NN controllers obtained from different sampling methods in the presence of observation noises, considering that the sensors have errors in reality. During simulation, we add a disturbance to the input of the network, where (including the disturbance of time) is uniformly sampled from . We test and the numerical results are shown in Figure 7. We also test the performance of the open-loop optimal controller under perturbation where a disturbance is added to the input time. Figure 7 shows that when disturbance exists, closed-loop controllers are more reliable than the open-loop controller and the one trained by the IVP enhanced sampling method performs best among all the methods.

Finally we consider the impact of different choices of temporal grid points in Algorithm 1. We test 4 experiments and repeat 5 times using the same random seeds. The results listed in Table 3 show that our algorithm is robust to the choice of temporal grid points.

| Temporal grid points () | |||||

| iter | 4 | 8 | 10 | 12 | 14 |

| 2 | 1.36 ( 0.08) | ||||

| 3 | 3.69 ( 1.62) | 1.17 ( 0.09) | |||

| 4 | 10.86 ( 2.27) | 1.72 ( 0.49) | 1.17 ( 0.07) | ||

| 6 | 14.39 ( 1.85) | 12.14 ( 5.64) | 6.30 ( 2.67) | 1.73 ( 0.46) | 1.22 ( 0.04) |

Appendix F Experiment Details and More Results of the Manipulator

We can write down the dynamics of the manipulator as,

where is the control torque, , is the joint angles, is the joint velocities, is the acceleration of joint angles.

The acceleration is given by the (non-linear) forward dynamics

| (45) |

Here is the generalized inertia matrix, represents the centrifugal forces and Coriolis forces, and is the generalized gravity.

The reaching task is to move the manipulator from the initial states near to the terminal states . In the experiments, we take with

See Figure 8 for an illustration of the task. Besides, we take the running cost and terminal cost to be

where is the torque to balance gravity at state , i.e. . Under this setting, is an equilibrium of the system, i.e. and .

In the experiment, we take where we use large weights to ensure the reaching goal is approximately achieved.

The backbone network for this example is the QRNet (Nakamura-Zimmerer et al., 2020; 2021b). QRNet exploits the solution corresponding to the LQR problem at equilibrium and thus improves the network performance around the equilibrium. The usage of other network structures also demonstrates the genericness/versatility of the IVP enhanced sampling method. Suppose we have the linear quadratic regulator (LQR) for the problem with linearized dynamics and quadratized costs at , the QRNet can be formulated as

| (46) |

where is any neural network with trainable parameters , and is a saturating function that satisfies . The used in this example is defined coordinate-wisely as

| (47) |

where with being minimum and maximum values for . Here and are the corresponding values at each coordinate of , respectively.

To get the LQR, we need to expand the dynamics linearly as

| (48) |

and the term related to acceleration in the running cost quadratically as

| (49) | ||||

| (50) | ||||

| (51) |

where , and we exploit and . The derivatives boil down to and which can be analytically computed in the Pinocchio library (Carpentier et al., 2015–2021; 2019; Carpentier & Mansard, 2018). In the experiment, we solve the LQR by the implementation in the Drake library (Tedrake & the Drake Development Team, 2019).

In the simulation and open-loop solver, we take time step and use the semi-implicit Euler discretization. The initial positions are sampled uniformly and independently in a -dimensional cube centered at with side length . Initial velocities are set to zero. Other than directly applying the open-loop solver to collected initial states, we first sample another mini-batch of initial states and call the open-loop solver on it. We then pick one solution of the lowest cost and use it as an initial guess later. This can not only speed up the data generation process but also avoid sampling trajectories that fall into bad local minima. We use the differential dynamic programming solver implemented in (Mastalli et al., 2020), which is a second-order algorithm that favors a good initial guess. The dataset is then created from the (discrete) optimal trajectories warm-started by the initial guess. Each trajectory has data points that are pairs of -dimensional input states including time and -dimensional output controls. The validation dataset and test dataset contain and optimal trajectories, respectively.

Finally, all the QRNets are trained by minimizing a mean square error (4) over the training dataset with the Adam optimizer (Kingma & Ba, 2015) with learning rate , batch size and epochs . is a fully-connected network with hidden layers; each layer has neurons. The first three layers use the function as activation while the last three layers use ELU (Clevert et al., 2016). Network and training are implemented in PyTorch (Paszke et al., 2019). During iterations, all networks are trained from scratch, i.e. a new network with random weights instead of inheriting weights from the previous iteration. After each epoch of training, we compute the loss on the validation dataset. The network with the least validation loss is then used for data generation in the next iteration or as the final policy (at the last iteration).

Training a neural network on an NVIDIA 3070 Ti GPU requires approximately 7769 seconds. Moreover, the time required for sampling decreases as the time grids approach the terminal time. For instance, when employing a single process with an Intel 12700KF CPU, it takes roughly seconds to sample 100 optimal trajectories at a time grid of , while it takes about seconds to sample 100 optimal trajectories at a time grid of . These reported sampling times include solving both the IVPs from 0 to and open-loop optimal control problems on . Note that we use the previously optimal solution to warm up the new open-loop optimal control problems.

We compare the ratio of policy cost over the optimal cost, see Table 4 for the mean ratio over all 1200 trajectories of the test dataset. The ratio has been clipped at for each trajectory. The results demonstrate that the IVP enhanced sampling method has great improvement over the vanilla supervised-learning-based method. It also shows the IVP enhanced sampling is not sensible to the choices of the temporal grid points. Besides, we also try augmenting the dataset with newly collected data instead of replacing them, as detailed in Section 8. Through the comparison between AS3 and AS3* in Table 4, we find that the alternative approach does not bring further improvement.

| time grid | iter 0 | iter 1 | iter 2 | |

| Vanilla300 | 1.92 ( 0.06) | |||

| Vanilla900 | 1.89 ( 0.09) | |||

| AS1 | 0.16 - 0.48 - 0.8 | 1.81 ( 0.16) | 1.29 ( 0.21) | 1.09 ( 0.08) |

| AS2 | 0.16 - 0.56 - 0.8 | 1.94 ( 0.07) | 1.34 ( 0.17) | 1.15 ( 0.14) |

| AS3 | 0.16 - 0.64 - 0.8 | 1.92 ( 0.08) | 1.37 ( 0.21) | 1.07 ( 0.11) |

| AS3* | 0.16 - 0.64 - 0.8 | 1.96 ( 0.05) | 1.40 ( 0.17) | 1.24 ( 0.28) |

| AS4 | 0.16 - 0.72 - 0.8 | 1.96 ( 0.03) | 1.29 ( 0.18) | 1.13 ( 0.10) |

Appendix G Comparison with DAGGER

In this section, we give a comprehensive comparison between DAGGER (Dataset Aggregation) (Ross et al., 2011) and the IVP enhanced sampling, in terms of concepts, theoretical results, and numerical results. When referring to DAGGER, we mostly mean the single iteration version of DAGGER (i.e., augmenting dataset once) unless otherwise stated explicitly.

Concept.

Both DAGGER and IVP enhanced sampling methods solve IVPs using the policy from the previous iteration to generate time-state pairs as new initial time-state pairs and call the open-loop solver to label the trajectories starting from these pairs. Their main differences are as follows. In the -th iteration, the IVP enhanced sampling method only solves the IVPs till the -th time grid and uses the time and the visited states at the -th time grid as the initial time-state pairs to collect new open-loop optimal data. In contrast, in each iteration, the DAGGER method needs to solve until the penultimate temporal grid , collect the states on all the time grids , and use all the collected time-state pairs as the initial time-state pair to generate new open-loop optimal data. In the IVP enhanced sampling method, the later times are only visited by networks trained from later iterations. Hopefully, networks from later iterations perform better and generate relevant time-state pairs at later time grids. In contrast, each network from the DAGGER method acts on the dynamical system until the final grid. For stiff dynamics that accumulate errors fast, the time-state pairs at later time grids generated by a network from earlier iterations can deviate much from what the optimal policy will visit. These data might then deteriorate the performance instead, as supported by the numerical results below.

Computation cost.

With the same time grids (say ), we argue that the efforts of training the IVP enhanced sampling method and single iteration DAGGER are approximately the same since the cost for data labeling and training are approximately the same, respectively.

First, in terms of the computation cost of data labeling, the IVP enhanced sampling method requires labeling dataset of trajectories. The single iteration DAGGER labels the same amounts of data. Though DAGGER requires fewer efforts in solving the IVPs as the IVP enhanced sampling method always solves IVPs from . However, for many problems, the time spent in solving IVPs is negligible compared to that in solving the open-loop optimal control problems. Specifically, in our first numerical example of optimal landing, it takes about 2.5 hours to do the BVP computation in total while it only takes 7 minutes to do IVP integration in total. In the second numerical example of the reaching problem, to solve 100 trajectories, it takes 308 seconds to solve the open-loop solution through DDP while it only takes 3 seconds to do IVP integration. Therefore, the cost for data labeling is approximately the same.

Second, in terms of training time, let us assume that the initial dataset contains trajectories and each trajectory contributes time-state-action tuples. The IVP enhanced sampling method needs to train networks; each network is trained on an enhanced dataset with trajectories. Then, there are in total time-state-action tuples visited in the IVP enhanced sampling method (same data is counted repeatedly when training different networks, the same below). DAGGER trains two networks, one with trajectories and the other with trajectories. The former dataset contains time-state-action tuples while the latter one contains approximately

time-state-action tuples. Then, for time grids (e.g. the landing problem of a quadrotor below), DAGGER visits approximately fewer data than the IVP enhanced sampling method; for time grids and (e.g. the reaching problem of the manipulator below), DAGGER visits the same amounts of data as and approximately more than the IVP enhanced sampling method, respectively; Therefore, with the same number of epochs in training each network, the training efforts do not differ much in the two methods.

In the following, we compare the IVP enhanced sampling method with DAGGER. We will see that, for the LQR example in Section 5 and Appendix B, the IVP enhanced sampling method surpasses DAGGER both theoretically and numerically, especially for large . For the landing and reaching problem studied previously (see Appendix F and E), both methods perform similarly well. However, in more difficult settings of both problems, the IVP enhanced sampling method outperforms DAGGER.

Results on the LQR example.

We first investigate the performance of DAGGER on the LQR example. With a slight abuse of notation, we will use and to denote the open-loop optimal paths sampled for training, to denote the initial states used to generate the training trajectories, to denote the closed-loop controller learned by DAGGER, and , to denote the IVP solution generated by the closed-loop controller .

In DAGGER, we again choose and the temporal grid points for . We first sample initial points from the normal standard distribution and then generated approximated optimal paths starting at :

| (52) |

where are i.i.d. normal random variables and independent with initial states whose mean is and variance is . We then train the closed-loop controller by solving the following least square problems:

| (53) |

Then, we use to solve the IVPs on the whole time horizon with initial states :

| (54) |

and collect as for . At each time step , we then compute approximated optimal paths starting from :

| (55) |

where are i.i.d. normal random variables and independent with and whose mean is and variance is . Finally, we collect the optimal paths to train the closed-loop controller by solving the following least square problems:

| (56) |

for .

Theorem 2.

Under Model 1 (5), define IVP generated by :

| (57) |

and the total cost:

| (58) |

If is a fixed point, then

| (59) |

Proof.

With the same approach of computing in (18), we have

| (60) |

where

| (61) |

Recalling the definition of in equation (54), we have

| (62) |

Hence, for , we have

| (63) |

Plugging the last equation into equation (55), we have that for

| (64) | |||

| (65) |

Therefore,

| (66) |

We can then compute the least square problem (56) to obtain that for and , we have

| (67) |

where

| (68) |

for . We can then solve the ODE (57), we have that when and

| (69) |

where . Therefore, when and ,

| (70) |

Define

| (71) |

then

| (72) | ||||

| (73) | ||||

| (74) |

Therefore

| (75) |

We can then compute that

| (76) | ||||

| (77) | ||||

| (78) | ||||

| (79) |

Therefore,

| (80) | ||||

| (81) | ||||

| (82) | ||||

| (83) | ||||

| (84) |

On the other hand, noticing that

| (85) |

we have

| (86) | ||||

| (87) | ||||

| (88) | ||||

| (89) |

Combining equations (75), (80) and (86), we can conclude our result. ∎

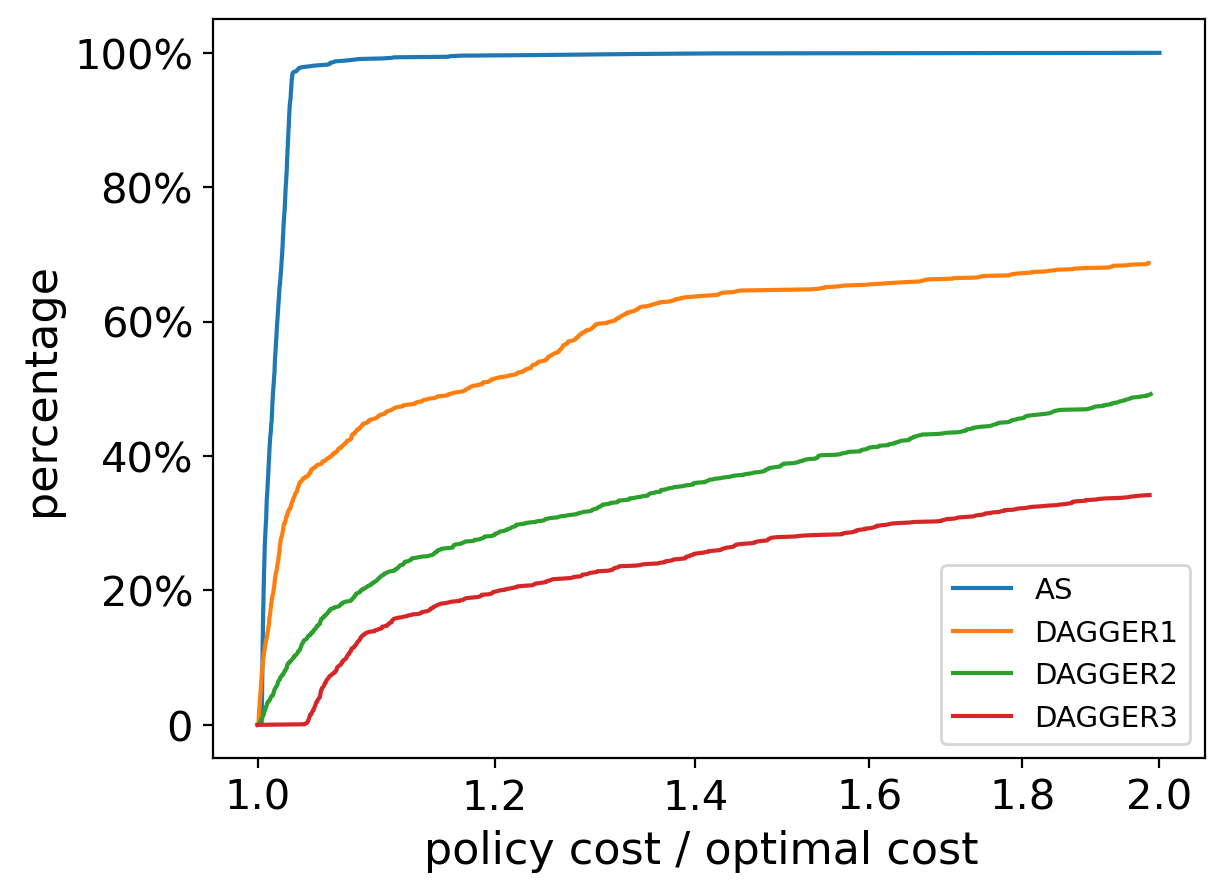

We then numerically compare the performance of DAGGER with the vanilla method and the IVP enhanced sampling method on Model 2 (6). In these experiments, we again set , and . We present the paths generated by the optimal controller and the controllers learned by the vanilla method, the IVP enhanced sampling method, and DAGGER in Figure 9 (left). Figure 9 (middle) compares the performance of these three methods on different time horizons . We also test DAGGER with multiple iterations in Figure 9 (right). Here we set total time . The experiment shows that the performance of the learned controller does not improve with more iterations. The detailed settings are identical to the numerical experiments in Appendix B.

Results on the landing problem.

Following the same settings as in Appendix E, we train a policy by DAGGER with additional sampling at time and . The results are summarized in Table 5. It shows that DAGGER performs closely to the IVP enhanced sampling method. However, if we decrease the number of trajectories in the initial dataset from to to train controllers using these two methods, we observe more decreases in performance in the DAGGER method, which implies that DAGGER is more sensitive to the data amount. The main reason is that DAGGER demands enough data at the beginning to have a good initial controller to explore the state over the whole time interval. However, in complicated control problems, we do not have such privilege and indeed require adaptive sampling to improve the controller.

| of iterations | result | |

| AS | 3 | 1.092 |

| DAGGER | 2 | 1.069 |

| AS-300 | 3 | 1.287 |

| DAGGER-300 | 2 | 1.405 |

Results on the reaching problem.

For the problem detailed in Appendix F, by additionally sampling at time and in one iteration, the DAGGER algorithm achieves a policy cost / optimal cost ratio of on the test dataset, which is close to that achieved by the IVP enhanced sampling method.

We then increase the difficulty of the control problem by increasing the moving distance. Following the configurations in Appendix F, we change the center of initial position and the terminal position to

Besides, as the DAGGER will generate states in a wider range, we modify in QRNet to in order to avoid saturation. We also increase the size of the initial dataset from 100 trajectories to 200 trajectories. Each network is trained with 1500 epochs. The other settings are the same as that in Appendix F.

Each method has been run 5 times independently, and we report their average and best performance. The results are summarized in Table 6 and Figure 10. As we can see, the IVP enhanced sampling method is capable of finding a closed-loop controller with an average ratio between policy cost and optimal cost achieving . However, the DAGGER algorithm cannot yield such a satisfactory result.

In DAGGER 1, we apply the DAGGER method with time grids , an earlier final grid compared to DAGGER 2 which has time grids . We see an average improvement from 1.8528 to 1.6327. DAGGER 1 performs similarly to the network trained at the second iteration of AS, which implies that the extra data sampled at does not help much. Furthermore, we conduct an additional iteration of the DAGGER method, which requires solving the open-loop problem to get more trajectories and extra training of the network with trajectories in total. It performs worse, which also confirms the arguments we made at the beginning that the uncarefully collected data may deteriorate the performance.

| Temporal grid points () | ||||

| of iterations | 0.16 | 0.48 | 0.64 | |

| AS | 3 | 1.6592 (1.5417) | 1.3610 (1.0155) | |

| DAGGER 1 | 2 | 1.6327 (1.4004) | ||

| DAGGER 2 | 2 | 1.8528 (1.6364) | ||

| DAGGER 3 | 3 | 1.9293 (1.7478) | ||