Information-Theoretic Characterization of the Generalization Error for Iterative Semi-Supervised Learning

Abstract

Using information-theoretic principles, we consider the generalization error (gen-error) of iterative semi-supervised learning (SSL) algorithms that iteratively generate pseudo-labels for a large amount of unlabelled data to progressively refine the model parameters. In contrast to most previous works that bound the gen-error, we provide an exact expression for the gen-error and particularize it to the binary Gaussian mixture model. Our theoretical results suggest that when the class conditional variances are not too large, the gen-error decreases with the number of iterations, but quickly saturates. On the flip side, if the class conditional variances (and so amount of overlap between the classes) are large, the gen-error increases with the number of iterations. To mitigate this undesirable effect, we show that regularization can reduce the gen-error. The theoretical results are corroborated by extensive experiments on the MNIST and CIFAR datasets in which we notice that for easy-to-distinguish classes, the gen-error improves after several pseudo-labelling iterations, but saturates afterwards, and for more difficult-to-distinguish classes, regularization improves the generalization performance.

Keywords: Generalization error, Semi-supervised learning, Pseudo-label, Information theory, Binary Gaussian mixture.

1 Introduction

In real-life machine learning applications, it is relatively easy and inexpensive to obtain large amounts of unlabelled data, while the number of labelled data examples is usually small due to the high cost of annotating them with true labels. In light of this, semi-supervised learning (SSL) has come to the fore (Chapelle et al., 2006; Zhu, 2008; Van Engelen and Hoos, 2020). SSL makes use of the abundant unlabelled data to augment the performance of learning tasks with few labelled data examples. This has been shown to outperform supervised and unsupervised learning under certain conditions. For example, in a classification problem, the correlation between the additional unlabelled data and the labelled data may help to enhance the accuracy of classifiers. Among the plethora of SSL methods, pseudo-labelling (Lee, 2013) has been observed to be a simple and efficient way to improve the generalization performance empirically. In this paper, we consider the problem of pseudo-labelling a subset of the unlabelled data at each iteration based on the previous output parameter and then refining the model progressively, but we are interested in analysing this procedure theoretically. Our goal in this paper is to understand the impact of pseudo-labelling on the generalization error.

A learning algorithm can be viewed as a randomized map from the training dataset to the output model parameter. The output is highly data-dependent and may suffer from overfitting to the given dataset. In statistical learning theory, the generalization error (gen-error), or generalization bias, is defined as the expected gap between the test and training losses, and is used to measure the extent to which the algorithms overfit to the training data (Russo and Zou, 2016; Xu and Raginsky, 2017; Kawaguchi et al., 2017). In SSL problems, the unlabelled data are expected to improve the generalization performance in a certain manner and thus, it is a worthy endeavor to investigate the behaviour theoretically. Although there exist many works studying the gen-error for supervised learning problems, the gen-error of SSL algorithms is yet to be explored.

1.1 Related Works

The extensive literature review is categorized into three aspects.

Semi-supervised learning:

There have been many existing results discussing about various methods of SSL. The book by Chapelle et al. (2006) presented a comprehensive overview of the SSL methods both theoretically and practically. Chawla and Karakoulas (2005) presented an empirical study of various SSL techniques on a variety of datasets and investigated sample-selection bias when the labelled and unlabelled data are from different distributions. Zhu (2008) partitioned SSL methods into six main classes: generative models, low-density separation methods, graph-based methods, self-training and co-training. Pseudo-labelling is a technique among the self-training and co-training (Zhu and Goldberg, 2009). In self-training, the model is initially trained by the limited number of labelled data and generate pseudo-labels to the unlabelled data. Subsequently, the model is retrained with the pseudo-labelled data and repeats the process iteratively. It is a simple and effective SSL method without restrictions on the data samples (Triguero et al., 2015). A variety of works have also shown the benefits of utilizing the unlabelled data. Singh et al. (2008) developed a finite sample analysis that characterized how the unlabelled data improves the excess risk compared to the supervised learning, with respect to the number of unlabelled data and the margin between different classes. Li et al. (2019) studied multi-class classification with unlabelled data and provided a sharper generalization error bound using the notion of Rademacher complexity that yields a faster convergence rate. Zhu (2020) considered the general SSL setting by assuming the loss function to be -exponentially concave or the - loss, and used a Bayesian method for prediction instead of empirical risk minimization which we consider. The author presented an upper bound for the excess risk and the learning rate in terms of the number of labelled and unlabelled data examples. Carmon et al. (2019) proved that using unlabelled data can help to achieve high robust accuracy as well as high standard accuracy at the same time. Dupre et al. (2019) considered iteratively pseudo-labelling the whole unlabelled dataset with a confidence threshold and showed that the accuracy converges relatively quickly. Oymak and Gülcü (2021), in which part of our analysis hinges on, studied SSL under the binary Gaussian mixture model setup and characterized the correlation between the learned and the optimal estimators concerning the margin and the regularization factor. Recently, Aminian et al. (2022) considered the scenario where the labelled and unlabelled data are not generated from the same distribution and these distributions may change over time, exhibiting so-called covariate shifts. They provided an upper bound for the gen-error and proposed the Covariate-shift SSL (CSSL) method which outperforms some previous SSL algorithms under this setting. However, these works do not investigate how the unlabelled data affects the generalization error over the iterations.

Generalization error bounds:

The traditional way of analyzing generalization error involves using the Vapnik–Chervonenkis or VC dimension (Vapnik, 2000) and the Rademacher complexity (Boucheron et al., 2005). Recently, Russo and Zou (2016) proposed using the mutual information between the estimated output of an algorithm and the actual realized value of the estimates to analyze and bound the bias in data analysis, which can be regarded equivalent to the generalization error. This new approach is simpler and can handle a wider range of loss functions compared to the abovementioned methods and other methods such as differential privacy. It also paves a new way to improving generalization capability of learning algorithms from an information-theoretic viewpoint. Following Russo and Zou (2016), Xu and Raginsky (2017) derived upper bounds on generalization error of learning algorithms with mutual information between the input dataset and the output hypothesis, which formalizes the intuition that less information that a learning algorithm can extract from training dataset leads to less overfitting. Later Pensia et al. (2018) derived generalization error bounds for noisy and iterative algorithms and the key contribution is to bound the mutual information between input data and output hypothesis. Negrea et al. (2019) improved mutual information bounds for Stochastic Gradient Langevin Dynamics (SGLD) via data-dependent estimates compared to distribution-dependent bounds.

However, one major shortcoming of the aformentioned mutual information bounds is that the bounds go to infinity for (deterministic) learning algorithms without noise, e.g., Stochastic Gradient Descent (SGD). Some other works have tried to overcome this problem. Lopez and Jog (2018) derived upper bounds on the generalization error using the Wasserstein distance involving the distributions of input data and output hypothesis, which are shown to be tighter under some natural cases. Esposito et al. (2021) derived generalization error bounds via Rényi-, -divergences and maximal leakage. Steinke and Zakynthinou (2020) proposed using the Conditional Mutual Information (CMI) to bound the generalization error; the CMI is useful as it possesses the chain rule property. Bu et al. (2020) provided a tightened upper bound based on the individual mutual information (IMI) between the individual data sample and the output. Wu et al. (2020) extended Bu et al. (2020)’s result to transfer learning problems and characterized the upper bound based on IMI and KL-divergence. In a similar manner, Jose and Simeone (2021) provided a tightened bound on transfer generalization error based on the Jensen–Shannon divergence. Moreover, recently, Aminian et al. (2021) and Bu et al. (2022) recently derived the exact characterization of gen-error for supervised learning and transfer learning with the Gibbs algorithm.

Regularization is an important technique to reduce the model variance (Anzai, 2012), but there are few works that theoretically analyse the relationship between the gen-error and regularization. Moody (1992) characterized the gen-error as a function of the regularization parameter in supervised nonlinear learning systems and showed that the gen-error decreases as the parameter increases. Bousquet and Elisseeff (2002) provided a stability-based gen-error upper bound in terms of the regularization parameter in supervised learning. Mignacco et al. (2020) studied how the regularization affects the expected accuracy in high-dimensional GMM supervised classification problem.

Gaussian mixture models (GMM):

The GMM is a popular, simple but non-trivial model that has been studied by many researchers. The performance of GMM classification problems depends on the data structure. The classical work of Castelli and Cover (1996) studied the classification problem in a binary mixture model with known conditional distributions but unknown mixing parameter and characterized the relative value of labelled and unlabelled data in improving the convergence rate of classification error probability. Akaho and Kappen (2000) characterized the generalization bias of general GMMs in supervised learning and discussed its dependency on data noise. Watanabe and Watanabe (2006) considered GMM in Bayesian learning and provided bounds for variational stochastic complexity. Wang and Thrampoulidis (2022) and Muthukumar et al. (2021) studied the dependence of the bGMM classification performance (using the - loss) on the structure of data covariance by considering SVM and linear interpolation.

However, all these aforementioned works do not investigate the generalization performance of SSL algorithms.

1.2 Contributions

Our main contributions are as follows.

-

1.

In Section 3, we leverage results by Bu et al. (2020) and Wu et al. (2020) to derive an information-theoretic gen-error bound at each iteration for iterative SSL; see Theorem 2.A. Moreover, in contrast to most previous works that bound the gen-error, we derive an exact characterization of gen-error at each iteration for negative log-likelihood (NLL) loss functions (see Theorem 2.B).

-

2.

In Section 4, we particularize Theorem 2.B to the binary Gaussian mixture model (bGMM) with in-class variance . We show that for any fixed number of data samples, there exists a critical value such that when the data variance (representing the overlap between classes) , the gen-error decreases in the iteration count and converges quickly with a sufficiently large amount of unlabelled data. When , the gen-error increases instead, which means using the unlabelled data does not help to reduce the gen-error across the SSL iterations. The empirical gen-error corroborates the theoretical results, which suggests that the characterization serves as a useful rule-of-thumb to understand how the gen-error changes across the SSL iterations and it can be used to establish conditions under which unlabelled data can help in terms of generalization.

-

3.

In Section 5, we theoretically and empirically show that for difficult-to-classify problems with large overlap between classes, regularization can effectively help to mitigate the undesirable increase of the gen-error across the SSL iterations.

-

4.

In Section 6, we implement the pseudo-labelling procedure on the MNIST and CIFAR datasets with few labelled data and abundant unlabelled data. The experimental results corroborate the phenomena for the bGMM that the gen-error decreases quickly in the early pseudo-labelling iterations and saturates thereafter for easy-to-distinguish classes but increases for hard-to-distinguish classes. By adding -regularization to the hard-to-distinguish problem, we also observe improvements to the gen-error similar to that for the bGMM.

2 Problem Setup

Let the instance space be , the model parameter space be and the loss fucntion be , where . We are given a labelled training dataset drawn from , where each is independently and identically distributed (i.i.d.) from . For any , is a vector of features and is a label indicating the class to which belongs. However, in many real-life machine learning applications, we only have a limited number of labelled data while we have access to a large amount of unlabelled data, which are expensive to annotate. Then we can incorporate the unlabelled training data together with the labelled data to improve the performance of the model. This procedure is called semi-supervised learning (SSL). We are given an independent unlabelled training dataset , where each is generated i.i.d. from . Typically, .

In the following, we consider the iterative self-training with pseudo-labelling in SSL setup, as shown in Figure 1. Let denote the iteration count. In the initial round (), the labelled data are first used to learn an initial model parameter . Next, we split the unlabelled dataset into disjoint equal-size sub-datasets , where . In each subsequent round , based on trained from the previous round, we use a predictor to assign a pseudo-label to the unlabelled sample for all . Let denote the pseudo-labelled dataset. After pseudo-labelling, both the labelled data and the pseudo-labelled data are used to learn a new model parameter . The procedure is then repeated iteratively until the maximum number of iterations is reached.

This setup is a classical and widely-used model in the realm of self-training in SSL (Chapelle et al., 2006; Zhu, 2008; Zhu and Goldberg, 2009; Lee, 2013), where in each iteration, only a subset of the unlabelled data are used. Furthermore, as discussed by Arazo et al. (2020), this method is less likely to overfit to incorrect pseudo-labels, compared to using all the unlabelled data in each iteration (also see Figure 10). Under this setup of iterative SSL, during each iteration , our goal is to find a model parameter that minimizes the population risk with respect to the underlying data distribution

| (1) |

Since is unknown, cannot be computed directly. Hence, we instead minimize the empirical risk. The procedure is termed empirical risk minimization (ERM). For any model parameter , the empirical risk of the labelled data is defined as

| (2) |

and for , the empirical risk of pseudo-labelled data as

| (3) |

We set for . For a fixed weight , the total empirical risk can be defined as the following linear combination of and :

| (4) |

In the usual case where the algorithm minimizes the average of the empirical training losses, one should set . An SSL algorithm can be characterized by a randomized map from the labelled and unlabelled training data , to a model parameter according to a conditional distribution . Then at each iteration , we can use the sequence of conditional distributions with to represent an iterative SSL algorithm. The generalization error at the -th iteration is defined as the expected gap between the population risk of and the empirical risk on the training data:

| (5) | |||

| (6) |

When and , the definition of the generalization error reduces to that of vanilla supervised learning. Based on this definition, the expected population risk can be decomposed as

| (7) |

where the first term on the right-hand side of this equation is what the algorithm minimizes and reflects how well the output hypothesis fits the dataset, and the second term is used to measure the extent to which the iterative learning algorithm overfits the training data at the -th iteration. To minimize , we need both terms in (7) to be small, but there exists a natural trade-off between them. While the algorithm aims to minimize the empirical risk , studying and controlling can also help to reduce the population risk , which is the ultimate goal of learning. Instead of focusing on the total generalization error induced during the entire process, we are interested in the following questions. How does evolve as increases? Do the unlabelled data examples in help to improve the generalization error?

3 General Results

Inspired by the information-theoretic generalization results in Bu et al. (2020, Theorem 1) and Wu et al. (2020, Theorem 1), we derive an upper bound on the gen-error in terms of the mutual information between input data samples (either labelled or pseudo-labelled) and the output model parameter , as well as the KL-divergence between the data distribution and the joint distribution of feature vectors and pseudo-labels (cf. Theorem 2.A). Furthermore, by considering the NLL loss function (MacKay, 2003; Goodfellow et al., 2016), we derive the exact characterization for the gen-error (cf. Theorem 2.B).

Recall that for a given , is an -sub-Gaussian random variable (Vershynin, 2018) if its cumulant generating function for all . If is -sub-Gaussian, we write this as . Furthermore, let us recall the following somewhat non-standard information quantities (Negrea et al., 2019; Haghifam et al., 2020).

Definition 1.

For random variables , and , define the disintegrated mutual information between and given as , and the disintegrated KL-divergence between and given as . These are -measurable random variables. It follows that the conditional mutual information and the conditional KL-divergence .

For distributions , and , define the cross-entropy as and the divergence between the cross-entropies as .

Let for any and for . In iterative SSL, we can characterize the gen-error as shown in Theorem 3 by applying the law of total expectation.

Theorem 2.A (Gen-error upper bound for iterative SSL).

Suppose under for all , then for any ,

| (8) |

where , , and .

Theorem 2.B (Exact gen-error for iterative SSL).

Consider the NLL loss function , where is the likelihood of under parameter . For any ,

| (9) |

where

| (10) | ||||

| (11) |

The proof of Theorem 2.A is provided in Appendix A, in which we provide a general upper bound not only applicable to sub-Gaussian loss functions. The proof of Theorem 2.B is provided in Appendix B. Specifically, for NLL loss functions, Theorem 2.B provides an exact characterization of the gen-error at each iteration. This is in stark contrast to most works on information-theoretic generalization error in which only bounds are provided.

In contrast to Bu et al. (2020, Theorem 1) and Wu et al. (2020, Theorem 1) which pertain to supervised learning, Theorem 3 characterizes the gen-error at each iteration during the pseudo-labelling and training process. Note that the quantities in Theorem 2.B satisfy and . Thus, it is plausible that the upper bound based on and in Theorem 2.A can help to understand and control the exact gen-error. Intuitively, the mutual information between the individual input data sample and the output model parameter in Theorem 2.A and the cross-entropy divergences , in Theorem 2.B both measure the extent to which the algorithm is sensitive to each data example at each iteration . The KL-divergence between the underlying and pseudo-labelled distribution in Theorem 2.A and the cross-entropy divergence in Theorem 2.B measure how effectively the pseudo-labelling process works. As and , we show that the mutual information (as well as and ) vanishes but the divergences and do not, which reflects the impact of pseudo-labelling on the gen-error.

In iterative learning algorithms, by applying the law of total expectation and conditioning the information-theoretic quantities on the output model parameters from previous iterations, we are able to calculate the gen-error iteratively. In the next section, we apply the exact iterated gen-error in Theorem 2.B to a classification problem under a specific generative model—the bGMM. This simple model allows us to derive a tractable characterization on the gen-error as a function of iteration number that we can compute numerically.

4 Main Results on bGMM

We now particularize the iterative semi-supervised classification setup to the bGMM. We evaluate (9) to understand the effect of multiple self-training rounds on the gen-error.

4.1 Iterative SSL under bGMM

Fix a unit vector and a scalar . Under the bGMM with mean and standard deviation (std. dev.) (bGMM()), we assume that the distribution of any labelled data example can be specified as follows. Let , , and , where is the identity matrix of size .

The random vector is distributed according to the mixture distribution

In the unlabelled dataset , each for is drawn i.i.d. from .

Let such that . For any , under the bGMM(), the joint distribution of any pair of is given by . The NLL loss function can be expressed as

| (12) |

The population risk minimizer is given by .

Under this setup, the iterative SSL procedure is shown in Figure 1, but the labelled dataset is only used to train in the initial round ; we discuss the reuse of in all iterations in Corollary 10. That is, in (4), we set . The algorithm operates in the following steps.

-

•

Step 1: Initial round with : By minimizing the empirical risk of labelled dataset

(13) where means that both sides differ by a constant independent of , we obtain the minimizer

(14) -

•

Step 2: Pseudo-label data in : At each iteration , for any , we use to assign a pseudo-label for , that is, .

-

•

Step 3: Refine the model: We then use the pseudo-labelled dataset to train the new model. By minimizing the empirical risk of

(15) we obtain the new model parameter

(16) If , go back to Step 2.

4.2 Definitions

To state our result succinctly, we first define some non-standard notations and functions. From (14), we know that and inspired by Oymak and Gülcü (2021), we can decompose as

where , , and is perpendicular to and independent of (the details of this decomposition are provided in Appendix C).

Given a pair of vectors , define their correlation coefficient as . The correlation coefficient between the estimated and true parameters is

| (17) |

Let . We abbreviate and to and respectively in the following. Then the normalized vector can be decomposed as follows

| (18) |

where . Let , which is a vector perpendicular to .

Let . Define the correlation evolution function that quantifies the increase to the correlation (between the current model parameter and the optimal one) and improvement to the generalization error as the iteration counter increases from to :

| (19) | ||||

| (20) | ||||

| (21) |

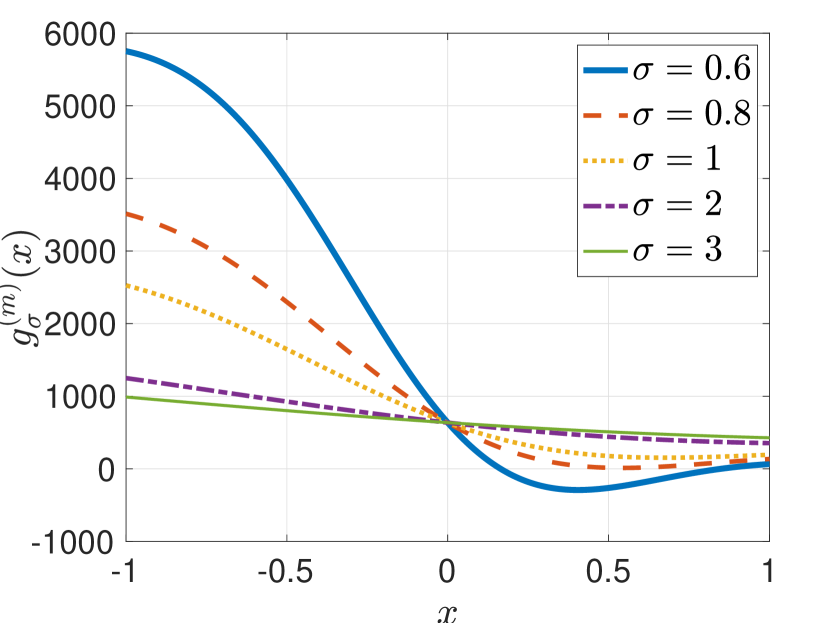

The iterate of the function is defined recursively as with . As shown in Figure 2, for any fixed , we can see that for and for . It can also be easily deduced that for any , for any and for any . This important observation implies that if the correlation , defined in (17), is positive, increases with ; and vice versa. Moreover, as shown in Figure 12 in Appendix C, by varying , we observe that a smaller results in a larger .

4.3 Main Theorem

By applying the result in Theorem 2.B, the following theorem provides an exact characterization for the generalization error at each iteration for large enough.

Theorem 3 (Exact gen-error for iterative SSL under bGMM).

Fix any , . The gen-error at is given by

| (22) |

Let . For each , for almost all sample paths (i.e., almost surely),

| (23) |

where is a term that vanishes as .

First, the gen-error at corresponds to the asymptotic result of supervised maximum likelihood estimation in works by Akaho and Kappen (2000) and Aminian et al. (2021). We numerically plot the quantity in (23), , for in Figure 12 in Appendix C, which shows that for all , when . From (17), we can see that is close to 1 of high probability, which means that is monotonically increasing in with high probability. As a result, (23) increases as increases. This is consistent with the intuition that when the training data have larger overlap between classes, it is more difficult to generalize well. Moreover, is also close to 1 of high probability, and thus (23) saturates with quickly.

Second, by ignoring the term, we compare the theoretical (cf. (22) and (23)) and the empirical gen-error from the repeated synthetic experiments with , and , as shown in Figure 3. It can be seen that the theoretical matches the empirical gen-error well, which means that the characterization in (23) serves as a useful rule-of-thumb for how the gen-error changes over the SSL iterations. When the variance is small (e.g., ), as shown in Figure 3(a), the gen-error decreases significantly from to and then quickly converges to a non-zero constant. Recall the correlation evolution function in (19). Given any pair of , if , for all , as shown in Figure 2. This means that if the quality of the labelled data is reasonably good, by using which is learned from , the generated pseudo-labels for the unlabelled data are largely correct. Then the subsequent parameters for learned from the large number of pseudo-labelled data examples can improve the generalization error. With sufficiently large amount of training data, algorithm converges at very early stage. In addition, for more general cases (e.g., non-diagonal class covariance matrices), it takes more iterations for the gen-error to reach a plateau, as shown in Figure 5.

When the variance is large (e.g. ), as shown in Figure 3(b), the gen-error increases with iteration . The result shows that when the overlap between different classes is large enough, using the unlabelled data may not be able to improve the generalization performance. The intuition is that at the initial iteration with a limited number of labelled data, the learned parameter cannot pseudo-label the unlabelled data with sufficiently high accuracy. Thus, the unlabelled data is not labelled well by the pseudo-labelling operation and hence, cannot help to improve the generalization error. To gain more insight, in Figure 5, we numerically plot versus different values of under the same setting. It is interesting to find that there exists a such that for , , which means the gen-error can be reduced with the help of abundant unlabelled data, while for , using the unlabelled data can even harm the generalization performance.

Third, let us examine the effect of , the number of labelled training samples. By expanding , defined in (17), using a Taylor series, we have

| (24) |

It can be seen that as increases, converges to in probability. Suppose the dimension and . Then where . By letting , the gen-error (cf. (23)) can be rewritten as

| (25) |

where and thus, is a decreasing function of . We further deduce that for any , is decreasing in .

Fourth, we consider an “enhanced” scenario in which the labelled data in are reused in each iteration. Set in (4). We can extend Theorem 3 to Corollary 10 provided in Appendix F. It can be seen from Figure 17 that still decreases from to and saturates afterwards. We find that when , , , the gen-error is almost the same as that one in Figure 3(a), which means that for large enough , reusing the labelled data does not necessarily help to improve the generalization performance. Moreover, when , is higher than that for , which coincides with the intuition that increasing the number of unlabelled data helps to reduce the generalization error.

Fifth, it is natural to wonder what the effect is when , the number of unlabelled data examples, is held fixed and , the number of labelled data examples, increases. In Figure 6, we numerically plot in (22) and (the theoretical) in (23) for ranging from to , , and . As increases, and both decrease, which is as expected. However, when is larger than a certain value ( in this case), we find that becomes smaller than . This implies that with sufficiently many labelled training data, the generalization error based on the labelled training data is already sufficiently low, and incorporating the pseudo-labelled data in fact adversely affects the generalization error. Understanding this phenomenon precisely is an interesting avenue for future work.

Finally, to verify the validity of the gen-error upper bound in Theorem 2.A, we further apply the bound to this setup and prove that the upper bound exhibits similar behaviour of the evolution of gen-error as increases. See Appendix D.

5 Improving the Gen-Error for Difficult Problems via Regularization

In Section 4.3, it is shown that for difficult classification problems with large class conditional variance, the gen-error increases after using pseudo-labelled data. The reason is that the learned initial parameter can only generate low-accurate pseudo-labels and thus the pseudo-labelled data cannot help improve the generalization performance. In this section, we prove that by adding regularization to the loss function, we can mitigate the undesirable increase of gen-error across the pseudo-labelling iterations.

Since in (22) does not depend on data variance , here we focus on subsequent iterations . By considering the regularization (i.e., adding to (15)), we obtain the new parameters (cf. (16)) as follows

| (26) |

The derivations are provided in Appendix H. By applying Theorem 3, the following theorem provides a characterization for the gen-error of the case with regularization at each iteration for large enough. Let denotes the gen-error of the case with regularization and we drop the fixed quantities for notational simplicity.

Theorem 4 (Gen-error with regularization).

Fix any , and . The gen-error at any is

| (27) |

The proof of Theorem 4 is provided in Appendix H. From (27), we observe that as increases, the gen-error decreases. In Figure 8, we first empirically show that regularization can help mitigate the increase of gen-error during SSL iterations for hard-to-distinguish classes by comparing the empirical gen-error under when and . Then in Figure 8, we plot the theoretical gen-error in (27) versus when for the cases with small and large variances, i.e., and . We also compare the theoretical results with the empirical gen-errors, which turn out to corroborate the theoretical ones. For the case with smaller variance, the improvement on gen-error is barely visible as increases. For the case with larger variance, the decrease of the gen-error is more pronounced, which implies that -regularization can effectively mitigate the impact on the gen-error induced by large class overlapping and pseudo-labels with low accuracy.

The adept reader might naturally wonder why one would not set in (27), which results in the gen-error tending to zero, which presumably is a desirable phenomenon. However, ultimately, what we wish to control is the expected population risk, which, according to (7), is the sum of the expected empirical risk on the training data and the gen-error. Even if the gen-error is zero, the expected population risk might be large. Hence, as increases, we see a tradeoff between the gen-error and the empirical risk.

| Classes | RGB-mean distance | RGB-variance distance | Difficulty |

| horse-ship | 0.0180 | 3.90e-05 | Easy |

| automobile-truck | 0.0038 | 7.06e-05 | Moderate |

| cat-dog | 0.0007 | 4.95e-05 | Challenging |

| Classes | horse ship | ship horse | automobile truck | truck automobile | cat dog | dog cat |

| Number | 17 | 3 | 61 | 64 | 93 | 137 |

| Difficulty | Easy | Moderate | Challenging | |||

6 Experiments on Benchmark Datasets

To further illustrate that our theory is indeed behind the empirical behaviour of the iterative self-training with pseudo-labelling, in this section, we conduct experiments on real-world benchmark datasets, which demonstrates that our theoretical results on the bGMM example can also reflect the training dynamics on more realistic real-world tasks. The code to reproduce all the experiments can be found at https://github.com/HerianHe/GenErrorSSL_2022.git.

Recall that in the bGMM example, a higher standard deviation represents a higher in-class variance, larger class-overlap, and consequently higher difficulty in classification. By a whitening argument, this also holds for bGMMs with non-isotropic covariance matrices. In our experiments on real-world data, we use the difficulty level of classification to mimic different in-class variances of bGMM. We pick two easy-to-distinguish class pairs (“automobile” and “truck”, “horse” and “ship”) from the CIFAR-10 dataset (Krizhevsky, 2009) as an analogy to bGMM with small in-class variance, and one difficult-to-distinguish class pair (“cat” and “dog”) from the same dataset as an analogy to bGMM with large in-class variance. Furthermore, to extend the analogy to multi-class classification, we conduct experiments on the 10-class MNIST dataset to gain more intuition.

We train deep neural networks (DNNs) via an iterative self-learning strategy (under the same setting as Figure 1) to perform binary and multi-class classification. In the first iteration, we only use a few labelled data examples to initialize the DNN with a sufficient number of epochs. In the subsequent iterations, we first sample a subset of unlabelled data and generate pseudo-labels for them via the model trained from the previous iteration. Then we update the model for a small number of epochs with both the labelled and pseudo-labelled data.

Experimental settings: For binary classification, we collect pairs of classes of images, i.e., “automobile” and “truck”, “horse” and “ship”, and “cat” and “dog” from the CIFAR10 (Krizhevsky, 2009) dataset. In this dataset, each class has images for training and images for testing. For each selected pair of classes, we manually divide the training images into two sets: the labelled training set with images and the unlabelled training set with images. We train a convolutional neural network, ResNet-10 (He et al., 2016), and use stochastic gradient descent (SGD) optimizer to minimize the cross-entropy loss. In PyTorch, the cross-entropy loss is defined as the negative logarithm of the output softmax probability corresponding to the true class, which is analogous to the NLL of the data under the parameters of the neural network. For the task with -regularization, we train the neural network by setting different weight decay parameters (equivalent to learning rate). In each pseudo-labelling iteration, we sample unlabelled images. The complete training procedure lasts for self-training iterations.

We further validate our theoretical contributions on a multi-class classification problem in which we train a ResNet-6 model with the cross-entropy loss to perform -class handwritten digits classification on the MNIST (LeCun et al., 1998) dataset. We sample images from the training set, which contains images for each of the ten classes. We divide them into two sets, i.e., a labelled training set with images and an unlabelled set with images. The optimizer and training iterations follow those in the aforementioned binary classification tasks without regularization.

Experimental observations: We perform each experiment times and report the average test and training (cross entropy) losses, the gen-error, and test and training accuracies in Figures 9(d). To illustrate the difficulty level of classification for each pair, we first calculated the mean and variance of the RGB (i.e., the red-green-blue color values) values of the images to show the difference of the images between the two classes. In Table 2, we display the RGB means and variances of the test data in six classes taken from the CIFAR10 dataset. We observe that the RGB variances of each pair are almost (and small compared to the RGB-mean distances), and thus, the RGB-mean distance is indicative of the difficulty of the classification task. Indeed, a smaller RGB-mean distance implies a higher overlap of the two classes and consequently, greater difficulty in distinguishing them. Therefore, the “cat-dog” pair, which is more difficult to disambiguate compared to the “horse-ship” and “automobile-truck” pairs, is analogous to the bGMM with large variance (i.e. large overlap between the positive and negative classes). Furthermore, in Table 2, we quote the commonly-used confusion matrix for the CIFAR10 dataset in (Liu and Mukhopadhyay, 2018, Fig. 7), which quantifies how many out of 1000 images of each class are misclassified to any other class. It is obvious that fewer misclassified images indicates lower classification difficulty, which corresponds with Table 2. These two tables provide an indication of the level of difficulty to distinguish different pairs of classes.

In Figures 9(a)–9(c), for easier-to-distinguish classes (based on the high classification accuracy and low loss, as well as Tables 2 and 2), the gen-error appears to have relatively large reduction in the early training iterations and then fluctuates around a constant value afterwards. For example, in Figure 9(a), the gen-error converges to around after iterations; in Figure 9(b), it converges to around after iterations. For multi-classification of MNIST in Figure 9(c), the gen-error also converges to around after iterations. These results corroborate the theoretical and empirical analyses in the bGMM case with small variance, which again verifies that Theorem 3 and Corollary 10 can shed light on the empirical gen-error on benchmark datasets. It also reveals that the generalization performance of iterative self-training on real datasets from relatively distinguishable classes can be quickly improved with the help of unlabelled data. In Figures 9(e), 9(f) and 9(g), we also show that the test accuracy increases with the iterations and has significant improvement compared to the initial iteration when only labelled data are used.

In Figures 9(d) and 9(h), we perform another binary classification experiment on the harder-to-distinguish pair, “cat” and “dog” (based on low accuracy at the initial point as well as Tables 2 and 2). We observe that the gen-error (and the test loss) does not decrease across the self-training iterations even though the test accuracy increases. This again corroborates the result in Figure 3(b) for the bGMM with large variance. The fact that both the test loss and test accuracy appear to increase with the iteration is, in fact, not contradictory. To intuitively explain this, in binary classification using the softmax (hence, logistic) function to predict the output classes, suppose the learned probability of a data example belonging to its true class is , the classification is correct. In other words, the accuracy is 100%. However, when (i.e., the classification confidence) decreases towards , the corresponding decision margin (Cao et al., 2019) also decreases and the test loss increases commensurately. Thus, when the decision margin is small, even though the test accuracy may increase as the iteration counter increases, the test loss may also increase at the same time; this represents our lack of confidence.

We further investigate the effect of -regularization on “cat-dog” classification. In Figures 9(i) and 9(j), we show that by setting the weight decay parameter to be , the increase of gen-error for the “cat-dog” classification task can be mitigated and the test accuracy is improved by 0.6% as well; compare this to Figures 9(d) and 9(h). In Figure 9(k), we plot the average gen-error over the last 10 iterations versus the weight decay parameter; this is shown to decrease as the weight decay increases (compared to Figure 8). In summary, our above observations correspond to that for the bGMM, namely that the unlabelled data do not always help to improve the gen-error but adding regularization can help to compensate for the undesirable impact.

Furthermore, we study the effect of reusing all the unlabelled data at each iteration. Under the same experimental setup as above, we conduct an additional experiment on the “horse-ship” pair in the CIFAR-10 dataset by using all unlabelled images in each iteration. The self-training procedure lasts for iterations. Figure 10 compares the gen-error of this additional experiment with the one of the same experiment in Figure 9(a). We find that when using the unlabelled data all at once, as the pseudo-labelling iteration increases, the gen-error is even higher than that for our original setup. This can possibly be attributed to overfitting.

7 Concluding Remarks and Future Work

In this paper, we have analyzed the gen-error of iterative SSL algorithms that pseudo-label large amounts of unlabelled data to progressively refine the parameters of a given model. We particularized the general bounds and exact expressions on the gen-error for the bGMM to gain some theoretical insight into the problem. These were then corroborated by experiments on benchmark datasets. The theoretical analyses and experimental results reinforce the main message of this paper—namely, that in the low-class-overlap or easy-to-classify scenario, pseudo-labelling can help to reduce the gen-error. On the other hand, for the high-class-overlap or difficult-to-classify scenario, pseudo-labelling can in fact hurt. Thus, the key takeaway from our paper is that practitioners should be judicious in adopting pseudo-labelling techniques, for they may degrade the overall performance.

There are three avenues for future research. First, our analytical results are only applicable to the bGMM. This yields valuable insights, but the model is admittedly restrictive. Generalizing our analyses to other statistical models for classification such as logistic regression will be instructive. Secondly, our work focuses on the gen-error. Often bounds on the population risk are desired as the population risk is the key determinant of the performance of classification algorithms. Bounding the population risk in the SSL setting would thus be interesting. Finally, analyzing other families of SSL algorithms beyond those that utilize pseudo-labelling would provide a clearer theoretical picture about the utility of SSL.

Acknowledgements

We sincerely thank the reviewers and action editor for their meticulous reading and insightful comments that led to a significantly improved version of the paper.

This research/project is supported by the National Research Foundation Singapore and DSO National Laboratories under the AI Singapore Programme (AISG Award No: AISG2-RP-2020-018), by an NRF Fellowship (A-0005077-01-00), and by Singapore Ministry of Education (MOE) AcRF Tier 1 Grants (A-0009042-01-00 and A-8000189-01-00).

Appendices

Appendix A Proof of Theorem 2.A

We commence with some notation. For any convex function , its Legendre dual is defined as for all . According to Boucheron et al. (2013, Lemma 2.4), when , is a nonnegative convex and nondecreasing function on . Moreover, for every , its generalized inverse function is concave and can be rewritten as .

We first introduce the following theorem that is applicable to more general loss functions.

Theorem 5.

For any , let and be convex functions of and . Assume that for all and for under distribution , where and . Let and . We have

| (28) |

and

| (29) |

where for any , and , and .

Proof Consider the Donsker–Varadhan variational representation of the KL-divergence between any two distributions and on :

| (30) |

where the supremum is taken over the set of measurable functions in .

Recall that and are independent copies of and respectively, such that , . For any iterative SSL algorithm, by applying the law of total expectation, the generalization error can be rewritten as

| (31) | |||

| (32) | |||

| (33) |

Note that and are convex, and so their Legendre duals , , and the corresponding inverses are well-defined.

Let . We have the fact that for any . Again, by the Donsker–Varadhan variational representation of the KL-divergence, for any fixed and any , we have

| (34) | |||

| (35) | |||

| (36) | |||

| (37) | |||

| (38) | |||

| (39) |

where (36) follows from the definition of in (49), (37) follows from the assumption that for all , and (38) follows because . Thus, we have

| (40) |

Similarly, for ,

| (41) |

By applying the same techniques, for any pair of pseudo-labelled random variables used at iteration and any , we have

| (42) | |||

| (43) | |||

| (44) | |||

| (45) | |||

| (46) |

where (44) follows from Jensen’s inequality. Thus, we get

| (47) |

and

| (48) |

The proof is completed by applying inequalities (40), (41), (47) and (48) to the expansion of in (33).

Let and be independent copies of and respectively, such that , where is the marginal distribution of . For any fixed , let the cumulant generating function (CGF) of be

| (49) |

Appendix B Proof of Theorem 2.B

Recall that and are independent copies of and respectively, such that , .

Appendix C Proof of Theorem 3

In the following, we abbreviate as , if there is no risk of confusion. When the labelled data are not reused in the subsequent iterations, for , .

-

•

Iteration : Since , we have . The gen-error is given by

(58) (59) (60) (61) (62) (63) (64) -

•

Pseudo-label using : For any and , the pseudo-label is

(65) Given any pair of , is fixed and are conditionally i.i.d. from . Recall the pseudo-labelled dataset is defined as .

Since , inspired by Oymak and Gülcü (2021), we can decompose it as follows:

(66) where , , , and is independent of .

Recall the correlation between and given in (17), the decomposition of in (18) and . Since , in the following we can analyze the normalized parameter instead.

Given any , is fixed, and for any , let us define a Gaussian noise vector and decompose it as follows

(67) where , , , , and are mutually independent.

For any sample , we can decompose it as

(68) Then we have

(69) (70) (71) Note that for any . Similarly, for any sample , we have

(72) and

(73) Denote the true label of as and . The probability that the pseudo-label is equal to is given by

(74) (75) (76) (77) We also have , and so .

-

•

Iteration : Recall (16) and the new model parameter learned from the pseudo-labelled dataset is given by

(78) First let us calculate the conditional expectation of given . Given any , for any , let and denotes the probability measure under the parameters .

The expectation can be calculated as follows:

(79) (80) (81) In contrast to (67), here we decompose the Gaussian random vector in another way

(82) where , , and are mutually independent and .

Then we decompose and as

(83) (84) Then we have

(85) (86) (87) where (87) follows since is independent of and .

For the first term in (87), recall and we have

(88) For the second term in (87), we have

(89) By combining (88) and (89), we have

(90) and similarly,

(91) Thus, recall and defined in (20) and (21), and is given by

(92) (93) (94) From (9), the gen-error at is given by

(95) Next, we calculate the two terms in (95) respectively.

-

–

Calculate :

(96) (97) (98) (99) (100) (101) (102) -

–

Calculate : Given any , in the following, we drop the condition on for notational simplicity. Since (cf. (77)), we have

(103) (104) Since given , is a Gaussian distribution, for any , we have

(105) (106) (107) (108) Thus,

(109)

Finally, the gen-error at can be characterized as follows:

(110) (111) -

–

-

•

Pseudo-label using : Let . For any , the pseudo-labels are given by

(112) It can be seen that the pseudo-labels are conditionally i.i.d. given and let us denote the conditional distribution under fixed as . The pseudo-labelled dataset is denoted as .

For any fixed , let be decomposed as , where . In addition, let and . In the following, we use , , , and for the above quantities if there is no risk of confusion.

Recall the decomposition of and in (68) and (71). Similarly, we have

(113) where . Note that and then the conditional probability can be given by

(114) (115) and , where denotes the probability measure under parameter .

Figure 11: versus for .

Figure 12: versus for . -

•

Iteration : Recall (16) and the new model parameter learned from the pseudo-labelled dataset is given by

(116) where are conditionally i.i.d. random variables given .

Similar to (94), the expectation of conditioned on is given by

(117) (118) As , by the strong law of large numbers, almost surely. By the continuous mapping theorem, we also have almost surely. Equivalently, for almost all sample paths, there exists a vanishing sequence (i.e., as ) such that .

The gen-error at is given by

(119) By applying the same techniques in the iteration , we obtain the exact characterization of gen-error at as follows. By the uniform continuity of , for any vanishing sequence , there exist such that and , where as . The same result holds for .

Finally we can obtain the gen-error as follows. For almost all sample paths, there exists a vanishing sequence (i.e., as ), such that

(120) (121) where and stands for .

-

•

Iteration : By iteratively implementing the calculation, we finally obtain the characterization of as follows. For almost all sample paths, there exists a vanishing sequence ( as ) , such that

(122) where and stands for .

The proof is thus completed.

Appendix D Applying Theorem 2.A to bGMMs

In anticipation of leveraging Theorem 2.A together with the sub-Gaussianity of the loss function for the bGMM to derive generalization bounds in terms of information-theoretic quantities (just as in Russo and Zou (2016); Xu and Raginsky (2017); Bu et al. (2020)), we find it convenient to show that and are bounded w.h.p.. By defining the ball , we see that

| (123) |

where is the Gaussian cumulative distribution function. By choosing appropriately, the failure probability can be made arbitrarily small.

To show that is bounded with high probability, define the set for some . For any , we have

| (124) | ||||

| (125) |

For any from the bGMM() and any , the probability that belongs to the interval (, depend on ) can be lower bounded by

| (126) |

Thus, according to Hoeffding’s lemma, with probability at least , under for all , i.e., for all ,

| (127) |

Recall the definition of in (17) and the decomposition of in (18). Define the KL-divergence between the pseudo-labelled data distribution and the true data distribution after the first iteration , as follows:

| (128) |

where

| (129) |

, , is independent of and perpendicular to . Note that is the Gaussian probability density function with mean and variance truncated to the interval , and similarly for . In general, when is small, so is the generalization error.

By applying the result in Theorem 2.A, the following theorem provides an upper bound for the generalization error at each iteration for large enough.

Theorem 7.

Fix any , , and . With probability at least , the absolute generalization error at can be upper bounded as follows

| (130) |

For each , for large enough, with probability at least ,

| (131) |

First, we compare the upper bounds for and , as shown in Figures 13(a) and 13(b). For any fixed , when is sufficiently small, the upper bound for is greater than that for . As increases, the upper bound for surpasses that of , as shown in Figure 13(b). This is consistent with the intuition that when the labelled data is limited, using the unlabelled data can help improve the generalization performance. However, as the number of labelled data increases, using the unlabelled data may degrade the generalization performance, if the distributions corresponding to classes and have a large overlap. This is because the labelled data is already effective in learning the unknown parameter well and additional pseudo-labelled data does not help to further boost the generalization performance. Furthermore, by comparing Figures 13(a) and 13(b), we can see that for smaller , the improvement from to is more pronounced. The intuition is that when decreases, the data samples have smaller variance and thus the pseudo-labelling is more accurate. In this case, unlabelled data can improve the generalization performance. Let us examine the effect of , the number of labelled training samples. Recall the Taylor expansion of in (24). It can be seen that as increases, converges to in probability. Suppose the dimension and . Then where . The upper bound for the absolute generalization error at can be rewritten as

| (132) |

which is a decreasing function of , as shown in Figures 13(a) and 13(b).

Second, in Figure 14(a), we plot the theoretical upper bound in (131) by ignoring . Unfortunately it is computationally difficult to numerically calculate the bound in (131) for high dimensions (due to the need for high-dimensional numerical integration), but we can still gain insight from the result for . It is shown that the upper bound for decreases as increases and finally converges to a non-zero constant. The gap between the upper bounds for and for decreases as increases and shrinks to almost 0 for . The intuition is that as , there are sufficient data at each iteration and the algorithm can converge at very early stage. In the empirical simulation, we let , , and iteratively run the self-training procedure for iterations and rounds. We find that the behaviour of the empirical generalization error (the red ‘-x’ line) is similar to the theoretical upper bound (the blue ‘-o’ line), which almost converges to its final value at . This result shows that the theoretical upper bound in (131) serves as a useful rule-of-thumb for how the generalization error changes over iterations. In Figure 14(b), we plot the theoretical bound and result from the empirical simulation based on the toy example for but larger and . This figure shows that when we increase and , using unlabelled data may not be able to improve the generalization performance. The intuition is that for large enough, merely using the labelled data can yield sufficiently low generalization error and for subsequent iterations with the pseudo-labelled data, the reduction in the test loss is negligible but the training loss will decrease more significantly (thus causing the generalization error to increase). When is larger, the data samples have larger variance and the classes have a larger overlap, and thus, the initial parameter learned by the labelled data cannot produce pseudo-labels with sufficiently high accuracy. Thus, the pseudo-labelled data cannot help to improve the generalization error significantly.

Remark 8 (Numerical plot of ).

To gain more insight, we plot when and in Figure 15. Under these settings, depends only on and hence, we can rewrite it as . As shown in Figure 15 in Appendix D, for all , there exists an such that for all , . From (17), we can see that is close to 1 of high probability, which means that is monotonically increasing in with high probability. As a result, increases as increases. This is consistent with the intuition that when the training data has larger in-class variance, it is more difficult to generalize well.

Remark 9 (Discussion on Theorem 7).

As , and almost surely, which means that the estimator converges to the optimal classifier for this bGMM. However, since there is no margin between two groups of data samples, the error probability (which is the Bayes error rate) and the disintegrated KL-divergence between the estimated and underlying distributions cannot converge to .

In the other extreme case, when and , the error probability (for all ) and , so in this other extreme (flipped) scenario, we have more mistakes than correct pseudo-labels. The reason why is finite is that when is small, it means that is far from both and , and then is also small. Thus, is also small.

Appendix E Proof of Theorem 7

For simplicity, in the following, we abbreviate as .

-

1.

Initial round : Since , we have and for some constant ,

(133) By choosing large enough, can be made arbitrarily small. Consider and as independent copies of and , respectively, such that . Then the probability that under is given as follows

(134) (135) (136) (137) Fix some , and . There exists , such that for all , , , and then with probability at least , the absolute generalization error can be upper bounded as follows

(138) Then mutual information can be calculated as follows

(139) (140) (141) (142) (143) Thus we obtain (130).

-

2.

Pseudo-label using : The same as those in Appendix C.

-

3.

Iteration : Recall in (78), the new model parameter learned from the pseudo-labelled dataset .

-

(a)

Recall the condition expectation of in (94). The norm between and can be upper bounded by

(144) (145) where is a constant only dependent on .

-

(b)

Next, we need to calculate the probability that under .

Let . For any , let , , denote the -th components of , and , respectively. Recall the decompositions in (83) and in (84). Suppose the basis of is denoted by and let . Then we have

(146) where for any and are mutually independent. We also let .

Given any , the moment generating function (MGF) of is given as follows: for any ,

(147) (148) The final equality follows from the fact that the MGF of a zero-mean univariate Gaussian truncated to is . The second derivative of is given as

(149) (150) For and any , the MGF of is given as

(151) (152) and the second derivative of is given by

(153) Fix . According to Taylor’s theorem, we have

(154) for some and . Then the Cramér transform of can be lower bounded as follows: for any ,

(155) Let , which is a finite constant only dependent on . Since are i.i.d. random variables conditioned on , by applying Chernoff-Cramér inequality, we have for all

(156) (157) (158) (159) (160) (161) where as and does not depend on .

Choose some ( defined in (145)). We have

(162) Consider as an independent copy of and independent of . Then the probability that under is given as follows

(163) (164) (165)

Thus, for some , with probability at least , the absolute generalization error can be upper bounded as follows:

(166) (167) (168) where and denotes the marginal distribution of under parameters .

In the following, we derive closed-form expressions of the mutual information and KL-divergence in (168). For any :

-

•

Calculate : For arbitrary random variables and , we define the disintegrated conditional differential entropy of given as

(169) This is a -measurable random variable. Conditioned on a certain pair , the mutual information between and is

(170) (171) (172) As , almost surely and hence, in probability. Thus, for any , and there exists such that for all ,

(173) -

•

Calculate : First of all, since (cf. (77)) regardless of the values of , the disintegrated conditional KL-divergence can be rewritten as

(174) Recall the decomposition of a Gaussian vector in (82). Note that .

For any pair of labelled data sample , from (83), we similarly decompose as , where and . Let and denote the probability density functions of and , respectively. For any , the joint probability distribution at is given by

(175) (176) (177) (178) Similarly, for any , the joint probability density evaluated at is given by

(179) Second, we have . The conditional probability distribution can be calculated as follows

(180) where the last equality follows since . Since (cf. (84)), we have

(181) and similarly,

(182) Thus, we conclude that

(185) To calculate the conditional probability distribution , recall the decomposition of and in (83) and (84). Since the event is equivalent to and , the conditional density of given is given by

(186) Similarly, for any

(187) (188) (189) For any , given , the conditional probability distribution at is given by

(190) (191) (192) where (192) follows since and are mutually independent and .

Since we can decompose as

(193) given , the conditional probability distribution at is given by

(194) (195) (196) Similarly, for any , given , the conditional distribution at is given by

(197) (198) and given ,

(199) (200) Furthermore, for any , we have

(201) (202) (203) for any , we have

(204) (205)

Thus, by combining the aforementioned results, we get the closed-form expression of the upper bound for . Indeed, if we fix some , and , there exists , , , such that for all , , , and with probability at least ,

(209) -

(a)

-

4.

Pseudo-label using : The same as those in Appendix C.

-

5.

Iteration : Recall in (116), the new model parameter learned from the pseudo-labelled dataset .

Given any , for any , let and denotes the probability measure under the parameters . Following the similar steps that derive (161), for any , we have

(210) From (145), no matter what is, we always have . Then, for some ,

(211) With probability at least , the absolute generalization error can be upper bounded as follows:

(212) (213) (214) where .

Similar to (173), for any and , there exists such that for all ,

(215) Recall (115) that . For any fixed , recall can be decomposed as .

By following the similar steps in the first iteration, the disintegrated conditional KL-divergence between pseudo-labelled distribution and true distribution is given by

(216) (217) Given any pair of , recall the decomposition of in (94). Then the correlation between and is given by

(218) (219) By the strong law of large numbers, we have as . Then for any and , there exists such that for all ,

(220) Therefore, fix some , and . There exists , , , such that for all , , , and then with probability at least , the absolute generalization error at can be upper bounded as follows:

(221) -

6.

Any iteration : By similarly repeating the calculation in iteration , we obtain the upper bound for in (131).

Appendix F Reusing in Each Iteration

If the labelled data are reused in each iteration and (cf. (4)), for each , the learned model parameter is given by

| (222) | ||||

| (223) |

Similarly to , let us define the enhanced correlation evolution function as follows:

| (224) |

From Theorem 7, we can obtain similar characterization for .

Corollary 10.

Fix any , and . For almost all sample paths,

| (225) |

Recall the definition of the function in (224). Let the -th iterate of be denoted as with initial condition . As shown in Figure 17, we can see that for any fixed , has a similar behaviour as as increases, which implies that the gen-error in (225) in Corollary 10 also decreases as increases. As a result, represents the improvement of the model parameter over the iterations.

As shown in Figure 17, under the same setup as Figure 14(a), when the labelled data are reused in each iteration,

the upper bound for is also a decreasing function of . When , is almost the same as the gen-error when the labelled data are not reused in the subsequent iterations, which means that for large enough , reusing the labelled data does not necessarily help to improve the generalization performance. Moreover, when , is higher than that for , which coincides with the intuition that increasing the number of unlabelled data helps to reduce the generalization error.

Appendix G Proof of Corollary 10

Following similar steps as in Appendix D, we first derive the upper bound for .

At , from (66) and (94), the expectation is rewritten as

| (226) | ||||

| (227) |

Then the correlation between and is given by

| (228) |

The gen-error is given by

| (229) | ||||

| (230) |

-

•

Calculate :

(231) (232) (233) (234) (235) -

•

Calculate :

(236) (237) (238) (239) (240) -

•

Calculate : Since given any fixed , is a Gaussian distribution, for any , we have

(241) (242) (243) (244) and then

(245)

Therefore, the gen-error at can be exactly characterized as follows

| (246) | ||||

| (247) |

For , similar to the derivation in Appendix C, by iteratively implementing the calculation, we only need to replace with the correlation evolution function (cf. (224)) and then the gen-error for any is characterized as follows. For almost all sample paths, there exists a vanishing sequence ( as ) , such that

| (248) |

where as and stands for .

The proof of Corollary 10 is thus completed.

Appendix H Proof of Theorem 4

Theorem 4 can be proved similarly from the proof of Theorem 7. For simplicity, in the following proofs, we abbrviate as . With -regularization, the algorithm operates in the following steps. Let be the regularization parameter.

-

•

Step 1: Initial round with : By minimizing the regularized empirical risk of labelled dataset

(249) where means that both sides differ by a constant independent of , we obtain the minimizer

(250) -

•

Step 2: Pseudo-label data in : At each iteration , for any , we use to assign a pseudo-label for , that is, .

-

•

Step 3: Refine the model: We then use the pseudo-labelled dataset to train the new model. By minimizing the empirical risk of

(251) we obtain the new model parameter

(252) If , go back to Step 2.

-

1.

Characterization of :

From (250), we still have and

(253) From (253), we can rewrite (252) as follows

(254) Thus, the expectation of conditioned on is given by

(255) (256) (257) (258) Recall given in (95). In the case with regularization, the gen-error has the same definition as . To derive , we need to calculate the following two terms.

-

•

Calculate :

(259) (260) (261) (262) (263) (264) -

•

Calculate : Given any , in the following, we drop the condition on for notational simplicity. Since given , is a Gaussian distribution, for any , we have

(265) (266) (267) Thus, we have

(268)

Finally, the gen-error at can be characterized as follows:

(269) where stands for .

-

•

-

2.

Iteration : Since , by iteratively applying the same techniques in iteration and in Appendix C, the gen-error at any can be characterized as follows

(270)

Theorem 4 is thus proved.

References

- Akaho and Kappen (2000) Shotaro Akaho and Hilbert J Kappen. Nonmonotonic generalization bias of Gaussian mixture models. Neural Computation, 12(6):1411–1427, 2000.

- Aminian et al. (2021) Gholamali Aminian, Yuheng Bu, Laura Toni, Miguel Rodrigues, and Gregory Wornell. An exact characterization of the generalization error for the Gibbs algorithm. Advances in Neural Information Processing Systems, 34, 2021.

- Aminian et al. (2022) Gholamali Aminian, Mahed Abroshan, Mohammad Mahdi Khalili, Laura Toni, and Miguel Rodrigues. An information-theoretical approach to semi-supervised learning under covariate-shift. In Proceedings of The 25th International Conference on Artificial Intelligence and Statistics, volume 151, pages 7433–7449. PMLR, 28–30 Mar 2022.

- Anzai (2012) Yuichiro Anzai. Pattern Recognition and Machine Learning. Elsevier, 2012.

- Arazo et al. (2020) Eric Arazo, Diego Ortego, Paul Albert, Noel E O’Connor, and Kevin McGuinness. Pseudo-labeling and confirmation bias in deep semi-supervised learning. In 2020 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE, 2020.

- Boucheron et al. (2005) Stéphane Boucheron, Olivier Bousquet, and Gábor Lugosi. Theory of classification: A survey of some recent advances. ESAIM: Probability and Statistics, 9:323–375, 2005.

- Boucheron et al. (2013) Stéphane Boucheron, Gábor Lugosi, and Pascal Massart. Concentration Inequalities: A Nonasymptotic Theory of Independence. Oxford University Press, 2013.

- Bousquet and Elisseeff (2002) Olivier Bousquet and André Elisseeff. Stability and generalization. The Journal of Machine Learning Research, 2:499–526, 2002.

- Bu et al. (2020) Yuheng Bu, Shaofeng Zou, and Venugopal V Veeravalli. Tightening mutual information based bounds on generalization error. IEEE Journal on Selected Areas in Information Theory, 1(1):121–130, 2020.

- Bu et al. (2022) Yuheng Bu, Gholamali Aminian, Laura Toni, Gregory W. Wornell, and Miguel Rodrigues. Characterizing and understanding the generalization error of transfer learning with Gibbs algorithm. In Proceedings of The 25th International Conference on Artificial Intelligence and Statistics, volume 151, pages 8673–8699. PMLR, 28–30 Mar 2022.

- Cao et al. (2019) Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. Learning imbalanced datasets with label-distribution-aware margin loss. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, pages 1567–1578, 2019.

- Carmon et al. (2019) Yair Carmon, Aditi Raghunathan, Ludwig Schmidt, Percy Liang, and John C Duchi. Unlabeled data improves adversarial robustness. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, pages 11192–11203, 2019.

- Castelli and Cover (1996) Vittorio Castelli and Thomas M Cover. The relative value of labeled and unlabeled samples in pattern recognition with an unknown mixing parameter. IEEE Transactions on Information Theory, 42(6):2102–2117, 1996.

- Chapelle et al. (2006) Olivier Chapelle, Bernhard Schölkopf, and Alexander Zien, editors. Semi-Supervised Learning. The MIT Press, 2006.

- Chawla and Karakoulas (2005) Nitesh V Chawla and Grigoris Karakoulas. Learning from labeled and unlabeled data: An empirical study across techniques and domains. Journal of Artificial Intelligence Research, 23:331–366, 2005.

- Dupre et al. (2019) Robert Dupre, Jiri Fajtl, Vasileios Argyriou, and Paolo Remagnino. Improving dataset volumes and model accuracy with semi-supervised iterative self-learning. IEEE Transactions on Image Processing, 29:4337–4348, 2019.

- Esposito et al. (2021) Amedeo Roberto Esposito, Michael Gastpar, and Ibrahim Issa. Generalization error bounds via Rényi-, -divergences and maximal leakage. IEEE Transactions on Information Theory, 67(8):4986–5004, 2021.

- Goodfellow et al. (2016) Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning. MIT press, 2016.

- Haghifam et al. (2020) Mahdi Haghifam, Jeffrey Negrea, Ashish Khisti, Daniel M Roy, and Gintare Karolina Dziugaite. Sharpened generalization bounds based on conditional mutual information and an application to noisy, iterative algorithms. Advances in Neural Information Processing Systems, 33:9925–9935, 2020.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 770–778, 2016.

- Jose and Simeone (2021) Sharu Theresa Jose and Osvaldo Simeone. Information-theoretic bounds on transfer generalization gap based on Jensen–Shannon divergence. In 2021 29th European Signal Processing Conference (EUSIPCO), pages 1461–1465. IEEE, 2021.

- Kawaguchi et al. (2017) Kenji Kawaguchi, Leslie Pack Kaelbling, and Yoshua Bengio. Generalization in deep learning. arXiv preprint arXiv:1710.05468, 2017.

- Krizhevsky (2009) Alex Krizhevsky. Learning multiple layers of features from tiny images. Master’s thesis, University of Toronto, 2009.

- LeCun et al. (1998) Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Lee (2013) Dong-Hyun Lee. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Workshop on Challenges in Representation Learning, ICML, volume 3, page 896, 2013.

- Li et al. (2019) Jian Li, Yong Liu, Rong Yin, and Weiping Wang. Multi-class learning using unlabeled samples: Theory and algorithm. In International Joint Conference on Artificial Intelligence (IJCAI), pages 2880–2886, 2019.

- Liu and Mukhopadhyay (2018) Qun Liu and Supratik Mukhopadhyay. Unsupervised learning using pretrained CNN and associative memory bank. In 2018 International Joint Conference on Neural Networks (IJCNN), pages 01–08. IEEE, 2018.

- Lopez and Jog (2018) Adrian Tovar Lopez and Varun Jog. Generalization error bounds using Wasserstein distances. In 2018 IEEE Information Theory Workshop (ITW), pages 1–5. IEEE, 2018.

- MacKay (2003) David J. C. MacKay. Information Theory, Inference and Learning Algorithms. Cambridge University Press, 2003.

- Mignacco et al. (2020) Francesca Mignacco, Florent Krzakala, Yue Lu, Pierfrancesco Urbani, and Lenka Zdeborova. The role of regularization in classification of high-dimensional noisy Gaussian mixture. In International Conference on Machine Learning, pages 6874–6883. PMLR, 2020.

- Moody (1992) John Moody. The effective number of parameters: An analysis of generalization and regularization in nonlinear learning systems. Advances in Neural Information Processing Systems, 4, 1992.

- Muthukumar et al. (2021) Vidya Muthukumar, Adhyyan Narang, Vignesh Subramanian, Mikhail Belkin, Daniel Hsu, and Anant Sahai. Classification vs regression in overparameterized regimes: Does the loss function matter? Journal of Machine Learning Research, 22(222):1–69, 2021.

- Negrea et al. (2019) Jeffrey Negrea, Mahdi Haghifam, Gintare Karolina Dziugaite, Ashish Khisti, and Daniel M Roy. Information-theoretic generalization bounds for SGLD via data-dependent estimates. In Advances in Neural Information Processing Systems, pages 11013–11023, 2019.

- Oymak and Gülcü (2021) Samet Oymak and Talha Cihad Gülcü. A theoretical characterization of semi-supervised learning with self-training for Gaussian mixture models. In International Conference on Artificial Intelligence and Statistics, pages 3601–3609. PMLR, 2021.

- Pensia et al. (2018) Ankit Pensia, Varun Jog, and Po-Ling Loh. Generalization error bounds for noisy, iterative algorithms. In 2018 IEEE International Symposium on Information Theory (ISIT), pages 546–550. IEEE, 2018.

- Russo and Zou (2016) Daniel Russo and James Zou. Controlling bias in adaptive data analysis using information theory. In Arthur Gretton and Christian C. Robert, editors, Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, volume 51, pages 1232–1240, Cadiz, Spain, 09–11 May 2016. PMLR.

- Singh et al. (2008) Aarti Singh, Robert Nowak, and Jerry Zhu. Unlabeled data: Now it helps, now it doesn’t. Advances in Neural Information Processing Systems, 21:1513–1520, 2008.

- Steinke and Zakynthinou (2020) Thomas Steinke and Lydia Zakynthinou. Reasoning About Generalization via Conditional Mutual Information. In Jacob Abernethy and Shivani Agarwal, editors, Proceedings of Thirty Third Conference on Learning Theory, volume 125, pages 3437–3452. PMLR, 09–12 Jul 2020.

- Triguero et al. (2015) Isaac Triguero, Salvador García, and Francisco Herrera. Self-labeled techniques for semi-supervised learning: taxonomy, software and empirical study. Knowledge and Information Systems, 42(2):245–284, 2015.

- Van Engelen and Hoos (2020) Jesper E Van Engelen and Holger H Hoos. A survey on semi-supervised learning. Machine Learning, 109(2):373–440, 2020.

- Vapnik (2000) Vladimir Vapnik. The Nature of Statistical Learning Theory. Springer, 2000.

- Vershynin (2018) Roman Vershynin. High-Dimensional Probability: An Introduction with Applications in Data Science. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, 2018.

- Wang and Thrampoulidis (2022) Ke Wang and Christos Thrampoulidis. Binary classification of gaussian mixtures: Abundance of support vectors, benign overfitting, and regularization. SIAM Journal on Mathematics of Data Science, 4(1):260–284, 2022.

- Watanabe and Watanabe (2006) Kazuho Watanabe and Sumio Watanabe. Stochastic complexities of Gaussian mixtures in variational Bayesian approximation. The Journal of Machine Learning Research, 7:625–644, 2006.

- Wu et al. (2020) Xuetong Wu, Jonathan H Manton, Uwe Aickelin, and Jingge Zhu. Information-theoretic analysis for transfer learning. In 2020 IEEE International Symposium on Information Theory (ISIT), pages 2819–2824. IEEE, 2020.

- Xu and Raginsky (2017) Aolin Xu and Maxim Raginsky. Information-theoretic analysis of generalization capability of learning algorithms. In Advances in Neural Information Processing Systems, pages 2524–2533, 2017.

- Zhu (2020) Jingge Zhu. Semi-supervised learning: the case when unlabeled data is equally useful. In Proceedings of the 36th Conference on Uncertainty in Artificial Intelligence (UAI), volume 124, pages 709–718. PMLR, 03–06 Aug 2020.