Information Control Barrier Functions:

Preventing Localization Failures in Mobile Systems Through Control

Abstract

This paper develops a new framework for preventing localization failures in mobile systems that must estimate their state from measurements. Safety is guaranteed by requiring that the nonlinear least squares optimization solved in modern localization algorithms remain well-conditioned. Specifically, the eigenvalues of the Hessian matrix are kept above a positive threshold via two methods that leverage control barrier functions to achieve safe set invariance. The proposed method is not restricted to any specific measurement or system type, offering a general solution to the problem of safe mobility with localization. The efficacy of the approach is demonstrated on a system provided with range-only and bearing-only measurements for localization.

I Introduction

Localization involves acquiring and processing measurements in real time to determine the state of a mobile platform in a given frame of reference. Localization often dictates the overall performance of mobile systems because control, planning, and autonomy rely heavily on accurate state estimates. As a result, the field of localization has seen considerable theoretical and practical advances over the past decade, especially as sensors and processors have become lighter, smaller, and more powerful. However, despite major progress, localization failures (the inability to determine a system's state) still occur and are usually catastrophic when decision-making algorithms sit downstream of the localization stack. Since localization failures can occur rapidly, there is little to no time for corrective action to restore localization, so either i) the localization algorithm must be guaranteed never to fail regardless of the environment, or ii) the system must predict when a failure is about to occur and take preemptive corrective action. Fundamentally, odometry algorithms rely on geometric or visual features distributed throughout the environment [1], so their performance is inherently state-dependent; ignoring this state dependency as the system moves through the environment can lead to localization failures. This work addresses the need for safety in online localization for mobile systems by developing a new framework that leverages the simplicity and effectiveness of control barrier functions (CBFs), in conjunction with matrix calculus and nonlinear least squares, to ensure a system never enters a state where localization may fail.

Prior works in planning have investigated designing trajectories through “measurement-rich” parts of the environment that improve the observability of certain states or model parameters. Belief-space planning [2, 3] (approximately) solves a partially observable Markov decision process that must balance information gain about the system state against reaching a final goal location. Perception-aware planning frameworks [4, 5] for visual-inertial odometry design trajectories that keep sufficient visual features in the field of view so that localization is well-constrained. Rather than attempting to reduce estimation covariances, observability-aware planning [6] designs motions that maximize the observability Gramian for specific states or model parameters. One limitation of the aforementioned works is their reliance on a priori information in the form of a global or local map of the environment, which is unavailable in many applications. Another is that localization quality is treated as a soft constraint when making control or planning decisions, so safety cannot be formally guaranteed. More recently, CBFs have been proposed for specific instances of this problem, such as visual servoing [7] and in conjunction with the observability matrix [8]. However, these methods are tailored to specific applications and do not provide a general approach for ensuring accurate localization across a broad class of mobile platforms.

This letter introduces a novel framework for achieving safe mobility with online localization for nonlinear systems through safety-critical control. Specifically, we formulate a set invariance condition via CBFs that imposes a lower bound on the minimum eigenvalue of the Hessian matrix associated with the optimization solved in modern localization algorithms. In other words, our formulation uses control to take preventative action that keeps the online nonlinear least squares problem from becoming ill-conditioned. Importantly, the method does not rely on proxies for localization performance but directly enforces sufficient conditions for safe operation. It achieves this without requiring a priori information about the environment, so it can be used in unknown environments and combined directly with an existing trajectory planner. Our framework leverages the state dependency of the minimum eigenvalue of the Hessian to achieve safety, so the minimum eigenvalue must be guaranteed to be sufficiently smooth. To ensure this, we present two methods: one that ensures the eigenvalues are always differentiable even in the non-simple, i.e., repeated, case and another that forces the eigenvalues to always remain simple. Our approach applies to systems of high relative degree, as well as to situations where avoiding detection, rather than localization performance, is the primary objective. The proposed approach is validated on a double integrator system using range-only and bearing-only localization with beacons.

Notation: The set of strictly positive scalars is denoted $\mathbb{R}_{>0}$. The set of real symmetric matrices of dimension $n \times n$ is denoted $\mathbb{S}^n$, with the set of positive definite matrices $\mathbb{S}^n_{++}$. The eigenvalues of a matrix $A$ are $\lambda_i(A)$ and the smallest eigenvalue is $\lambda_{\min}(A)$. A matrix that is a function of $x$ and $t$ is denoted as $A(x, t)$ and is smooth if all of its elements are smooth functions of $x$ and $t$. The Lie derivative of a function $\psi$ along the flow of a vector field $f$ is denoted $L_f \psi$. The norm of a vector $x$ weighted by $W \in \mathbb{S}^n_{++}$ is $\|x\|_W = \sqrt{x^\top W x}$.

II Problem Formulation and Preliminaries

II-A Overview

Consider the nonlinear system

$$\dot{x} = f(x) + g(x)\,u, \qquad y = h(x), \tag{1}$$

with state $x \in \mathbb{R}^n$, control input $u \in \mathbb{R}^m$, measured output $y \in \mathbb{R}^p$, dynamics $f: \mathbb{R}^n \to \mathbb{R}^n$, control input matrix $g: \mathbb{R}^n \to \mathbb{R}^{n \times m}$, and nonlinear measurement model $h: \mathbb{R}^n \to \mathbb{R}^p$. There are several methods for generating an estimate $\hat{x}$ of the state using the output $y$, e.g., Kalman filtering and state observers. However, recent works in the robotics literature have shown the superiority of optimization-based approaches that solve a nonlinear least squares problem online [9, 1, 10]. In particular, if $N$ measurements $y_1, \dots, y_N$ with models $h_1, \dots, h_N$ are available, then one can use numerical optimization to find the optimal state estimate by solving

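$$\hat{x} = \arg\min_{x \in \mathbb{R}^n} \; \sum_{i=1}^{N} \big\| y_i - h_i(x) \big\|^2_{W_i(x)}, \tag{2}$$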

where $W_i(x)$ is a state-dependent positive definite matrix that represents the level of confidence in $y_i$. We allow $W_i$ to be state-dependent as a way to capture measurement degradation or dropout; this is discussed further in Section IV. If the measurement model does not contain enough information to estimate the full state directly by solving Equation 2, then the nonlinear least squares optimization can be performed over a window of measurements together with a motion model. In either case, the proposed approach is applicable. Equation 2 arises from nonlinear maximum a posteriori (MAP) estimation [1]: maximizing the log-likelihood is more convenient than maximizing the likelihood itself when the likelihood belongs to the exponential family, and for (approximately) Gaussian measurement noise it leads to a weighted least squares cost of the form of Equation 2. The goal of this work is to ensure that the optimization in Equation 2 is well-constrained (to be defined later) so that a unique state estimate is always available, a guarantee to be achieved through control. In the sequel, we denote the nonlinear least squares cost in Equation 2 as $J(x, y)$.
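As a concrete illustration of Equation 2, the sketch below evaluates a weighted nonlinear least squares cost and minimizes it by fixed-step gradient descent. It is a minimal sketch under assumptions that are not taken from this letter: scalar range measurements from three hypothetical beacons, constant scalar weights in place of the state-dependent weighting matrix, noise-free data, and a central-difference gradient. The helper names (`range_model`, `cost`, `estimate`) are illustrative only.

```python
import math

# Hypothetical beacon positions (m) and constant scalar weights; illustrative only.
BEACONS = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
WEIGHTS = [1.0, 1.0, 1.0]

def range_model(x, beacon):
    """h_i(x): Euclidean distance from the position x to beacon i."""
    return math.hypot(x[0] - beacon[0], x[1] - beacon[1])

def cost(x, y):
    """J(x, y) = sum_i w_i * (y_i - h_i(x))^2, a scalar-measurement instance of Equation 2."""
    return sum(w * (yi - range_model(x, b)) ** 2
               for yi, b, w in zip(y, BEACONS, WEIGHTS))

def grad(fun, x, step=1e-6):
    """Central-difference gradient of a scalar function of a 2-D point."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += step
        xm[i] -= step
        g.append((fun(xp) - fun(xm)) / (2.0 * step))
    return g

def estimate(y, x0, lr=0.05, iters=2000):
    """x_hat = argmin_x J(x, y), approximated by fixed-step gradient descent from x0."""
    x = list(x0)
    for _ in range(iters):
        g = grad(lambda z: cost(z, y), x)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x

true_x = (1.5, 1.0)
y = [range_model(true_x, b) for b in BEACONS]   # noise-free measurements
x_hat = estimate(y, x0=(2.0, 1.5))
print(x_hat)
```

A fixed step size keeps the sketch short; a practical solver would more likely use Gauss–Newton or Levenberg–Marquardt steps.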

II-B Control Barrier Functions

This work employs controlled invariant sets to ensure that the optimization in Equation 2 always produces a unique estimate $\hat{x}$. Specifically, set invariance is achieved with CBFs. The following definitions can be found in many previous works [11, 12] and are stated here for completeness.

Definition 1.

The set $\mathcal{C} \subset \mathbb{R}^n$ is forward invariant if for every $x(0) \in \mathcal{C}$, $x(t) \in \mathcal{C}$ for all $t \geq 0$.

Definition 2.

The nominal system is safe with respect to the set $\mathcal{C}$ if $\mathcal{C}$ is forward invariant.

Definition 3.

A continuous function $\alpha: \mathbb{R} \to \mathbb{R}$ is an extended class $\mathcal{K}_\infty$ function if it is strictly increasing, $\alpha(0) = 0$, and is defined on the entire real line.

Definition 4 (cf. [11]).

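Let $\mathcal{C} = \{x \in \mathcal{D} : \psi(x) \geq 0\}$ be the 0-superlevel set of a continuously differentiable function $\psi: \mathcal{D} \to \mathbb{R}$ defined on the open and connected set $\mathcal{D} \subseteq \mathbb{R}^n$. The function $\psi$ is a control barrier function for Equation 1 if there exists an extended class $\mathcal{K}_\infty$ function $\alpha$ such that for all $x \in \mathcal{D}$

$$\sup_{u \in \mathbb{R}^m} \big[ L_f \psi(x) + L_g \psi(x)\, u \big] \geq -\alpha\big(\psi(x)\big).$$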

II-C Other Useful Definitions

This work will also make use of the following definitions from optimization theory, linear algebra, and analysis.

Definition 5.

Let $F: \mathbb{R}^n \to \mathbb{R}$ be a twice differentiable function with critical point $x^*$, where the gradient of $F$ vanishes, i.e., $\nabla F(x^*) = 0$. The Hessian of $F$ given by $\nabla^2 F(x^*)$ is said to be degenerate at the critical point if at least one eigenvalue of $\nabla^2 F(x^*)$ is zero.

Remark 1.

An equivalent form of Definition 5 is that the Hessian matrix is degenerate if its determinant is zero. The equivalence follows because the determinant of a matrix is the product of its eigenvalues, which vanishes exactly when at least one eigenvalue is zero.

Definition 6.

An eigenvalue of a matrix is simple if it has an algebraic multiplicity of one. In other words, a simple eigenvalue only appears once in the roots of the characteristic polynomial of the matrix under consideration.

Definition 7 (cf. [13]).

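Let $E \subseteq \mathbb{R}$ be an open set. The function $F: E \to \mathbb{R}$ is analytic on $E$ if for all $a \in E$ there exists an open interval $(a - r, a + r) \subseteq E$ with $r > 0$ such that there exists a power series $\sum_{k=0}^{\infty} c_k (t - a)^k$ which has radius of convergence greater than or equal to $r$ and which converges to $F$ on $(a - r, a + r)$.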

Remark 2.

A function being analytic implies that it is infinitely differentiable, but the converse does not hold. This makes being analytic a stronger condition than being infinitely differentiable.

III Main Results

III-A Overview

In this section, we present a new approach that prevents the nonlinear least squares optimization defined in Equation 2 from becoming ill-conditioned through the use of CBFs. Central to our approach is the following result that establishes a connection between the uniqueness of a critical point for a twice differentiable function and the eigenvalues of its corresponding Hessian.

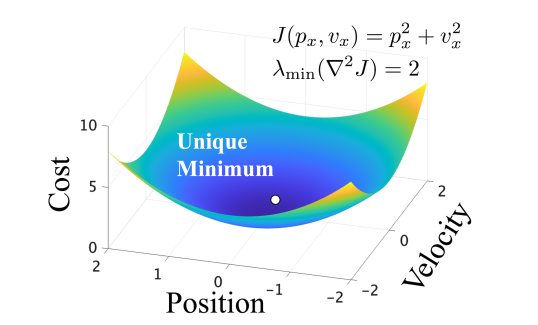

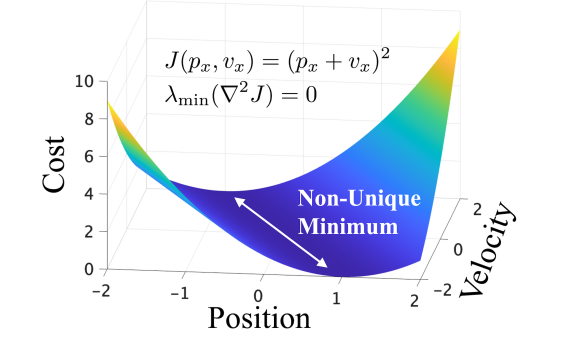

Proposition 1.

Let $x^*$ be a critical point of a twice differentiable function $F: \mathbb{R}^n \to \mathbb{R}$. If the eigenvalues of $\nabla^2 F$ are all positive in a neighborhood of $x^*$, then $x^*$ is a unique local minimum of $F$.

Proof.

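Let $x^*$ be a critical point of $F$, so that $\nabla F(x^*) = 0$ by definition. Consider a local perturbation $\Delta x \neq 0$ around $x^*$. By Taylor's theorem, $F(x^* + \Delta x) = F(x^*) + \nabla F(x^*)^\top \Delta x + \tfrac{1}{2} \Delta x^\top \nabla^2 F(x^* + \theta \Delta x)\, \Delta x$ for some $\theta \in (0, 1)$. Since $\nabla F(x^*) = 0$ and $\nabla^2 F \succ 0$ in the neighborhood, $F(x^* + \Delta x) > F(x^*)$, so $x^*$ is a unique local minimum of $F$. ∎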

The following corollary follows directly from Proposition 1.

Corollary 1.

If the Hessian of a twice differentiable function is degenerate at a critical point then the critical point may not be unique locally.

Corollary 1 has an important implication for optimization-based localization algorithms: if the Hessian of the cost in Equation 2 is degenerate at a critical point, then the computed estimate may not be unique. From a localization perspective, a non-unique solution to Equation 2 is analogous to elements of the state vector being unobservable, a situation that can have devastating consequences for mobile systems that base decisions and control actions on estimates computed via Equation 2. Consider, e.g., Fig. 1, which shows cost functions with a non-degenerate Hessian in Fig. 1(a) and a degenerate Hessian in Fig. 1(b). The cost function shown in Fig. 1(b) has infinitely many minima that are all valid solutions, any of which can be computed by the localization algorithm and used in feedback. A controller using such a non-unique estimate may behave erratically, since the estimate need not represent the system's true state, and this can lead to catastrophic events such as state or actuator constraint violations or collisions with obstacles.
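The situation in Fig. 1 can be reproduced numerically with a small sketch, assuming range-only measurements, unit weights, noise-free data, and hypothetical beacon and position values. With a single beacon the cost is minimized on an entire circle, so its Hessian at the true position has a (numerically) zero eigenvalue; adding a second, non-collinear beacon makes the Hessian positive definite. The Hessian is approximated by central differences.

```python
import math

def eig_sym2(H):
    """Ascending eigenvalues of a symmetric 2x2 matrix [[a, b], [b, c]]."""
    a, b, c = H[0][0], H[0][1], H[1][1]
    mean = 0.5 * (a + c)
    rad = math.hypot(0.5 * (a - c), b)
    return mean - rad, mean + rad

def fd_hessian(fun, p, step=1e-3):
    """Central-difference Hessian of a scalar function of a 2-D point."""
    def shifted(i, di, j, dj):
        q = list(p)
        q[i] += di
        q[j] += dj
        return fun(q)
    H = [[0.0, 0.0], [0.0, 0.0]]
    for i in range(2):
        for j in range(2):
            H[i][j] = (shifted(i, step, j, step) - shifted(i, step, j, -step)
                       - shifted(i, -step, j, step) + shifted(i, -step, j, -step)) / (4 * step * step)
    return H

def make_cost(beacons, true_p):
    """Range-only cost J(p) = sum_i (y_i - ||p - b_i||)^2 with noise-free y_i (unit weights)."""
    y = [math.dist(true_p, b) for b in beacons]
    return lambda p: sum((yi - math.dist(p, b)) ** 2 for yi, b in zip(y, beacons))

true_p = (1.0, 2.0)   # hypothetical true position (m)
one = eig_sym2(fd_hessian(make_cost([(0.0, 0.0)], true_p), true_p))
two = eig_sym2(fd_hessian(make_cost([(0.0, 0.0), (3.0, 0.0)], true_p), true_p))
print("one beacon :", one)   # smallest eigenvalue is ~0: degenerate Hessian
print("two beacons:", two)   # both eigenvalues are positive: locally unique minimum
```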

Remark 3.

It is important to note that a unique critical point (or estimate) might still be obtainable even when the Hessian is degenerate. In other words, a degenerate Hessian does not imply non-uniqueness of critical points. Nonetheless, requiring that the Hessian never become degenerate is sufficient to guarantee that a critical point is locally unique.

III-B Information Control Barrier Function

Given the discussion above, we define the special class of CBFs that will be the focus for the remainder of this letter.

Definition 8.

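Let $H(x, y)$ denote the Hessian, with respect to $x$, of the nonlinear least squares cost function in Equation 2. An information control barrier function (I-CBF) is a control barrier function that renders the set $\mathcal{S}$ safe, where $\mathcal{S} = \{x \in \mathbb{R}^n : \lambda_{\min}(H(x, y)) \geq \epsilon\}$ and $\epsilon \in \mathbb{R}_{>0}$ is a specified threshold.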

Remark 4.

The minimum eigenvalue of the Hessian and its derivatives can be computed numerically when the Hessian of Equation 2 is too complicated to differentiate in closed form (the Appendix gives the relevant derivative formulas).
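As a sketch of this remark, assume range-only beacons with unit weights and noise-free measurements, so that at the true position the residuals vanish and the Hessian of the cost reduces to $2\sum_i u_i u_i^\top$, where $u_i$ is the unit vector from beacon $i$ to the position. Its smallest eigenvalue then has a closed form for $2 \times 2$ matrices, and the gradient needed by a barrier function can be approximated by central differences. The beacon layout and step size are hypothetical.

```python
import math

BEACONS = [(0.0, 0.0), (3.0, 0.0)]   # hypothetical beacon layout (m)

def hessian(p):
    """Entries (a, b, c) of H(p) = 2 * sum_i u_i u_i^T, u_i the unit vector from beacon i to p.

    This is the Hessian of the unit-weight range-only cost when the residuals vanish.
    """
    a = b = c = 0.0
    for bx, by in BEACONS:
        dx, dy = p[0] - bx, p[1] - by
        r2 = dx * dx + dy * dy
        a += 2.0 * dx * dx / r2
        b += 2.0 * dx * dy / r2
        c += 2.0 * dy * dy / r2
    return a, b, c

def lambda_min(p):
    """Closed-form smallest eigenvalue of the symmetric 2x2 matrix [[a, b], [b, c]]."""
    a, b, c = hessian(p)
    return 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)

def grad_lambda_min(p, step=1e-6):
    """Central-difference gradient of lambda_min with respect to the position p."""
    gx = (lambda_min((p[0] + step, p[1])) - lambda_min((p[0] - step, p[1]))) / (2 * step)
    gy = (lambda_min((p[0], p[1] + step)) - lambda_min((p[0], p[1] - step))) / (2 * step)
    return gx, gy

p = (1.0, 2.0)
print(lambda_min(p), grad_lambda_min(p))
```

Moving away from both beacons makes their directions more nearly parallel, so $\lambda_{\min}$ decreases along that direction.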

Remark 5.

A noteworthy alternative to $\lambda_{\min}(H) \geq \epsilon$ in Definition 8 is $\lambda_{\min}(H) \leq \epsilon$, which ensures the Hessian always remains nearly degenerate. This can be used for detection avoidance applications (see Section IV).

The minimum eigenvalue of the Hessian of Equation 2 is a measure of how much information about the state is contained in the nonlinear least squares cost function. As such, the class of control barrier functions in Definition 8 ensures that localization information is sufficient for safe operation, i.e., that the system's state can be discerned from the available measurements. A natural choice of function to define the safe set of an I-CBF is

$$\psi(x) = \min_{i} \lambda_i\big(H(x, y)\big) - \epsilon. \tag{3}$$

For Equation 3 to be a valid CBF (based on Definition 4), it must be continuously differentiable, and so $\lambda_{\min}(H)$ must be continuously differentiable. Handling the (potential) non-differentiability of the min operator is fairly straightforward, with approaches presented in [14, 15]. Establishing differentiability of the eigenvalues of the Hessian is more nuanced and requires a careful investigation. If other eigenvalue-based degeneracy measures were employed instead of Equation 3, e.g., the condition number of the Hessian, the question of differentiability of the eigenvalues would still need to be answered. Because of this, proving that the eigenvalues $\lambda_i(H)$ are sufficiently smooth is integral to the proposed method.

The following proposition states an important result for matrices whose eigenvalues are simple, i.e., distinct.

Proposition 2.

Let $A(t) \in \mathbb{S}^n$ be a $k$-times continuously differentiable real symmetric matrix with simple eigenvalues. Then, the eigenvalues of $A(t)$ are $k$-times continuously differentiable with respect to $t$.

Proof.

By Theorem 5.3 of [16], in a neighborhood of a symmetric matrix with simple eigenvalues there are analytic functions $\lambda_i(A)$ and $v_i(A)$ that give the eigenvalues and eigenvectors, respectively. Composing these analytic functions with the $k$-times continuously differentiable $A(t)$ completes the result. The key element of the proof in [16] is the implicit function theorem, which requires simple eigenvalues. ∎

If the eigenvalues are non-simple (i.e., they cross¹), they may still be differentiable. When the eigenvalues are non-simple, the following proposition can be used to guarantee differentiability, but at the expense of stronger assumptions.

¹ Interestingly, eigenvalue crossing has been a polarizing topic in quantum mechanics and related fields, where the question of so-called avoided crossings is still debated.

Proposition 3.

Let $A(t) \in \mathbb{S}^n$ be a real symmetric matrix that is analytic in a single real parameter $t$. Then, the eigenvalues of $A(t)$ can be chosen as analytic functions of $t$.

Proof.

The proof is a sub-case of one presented in [17]. ∎
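Proposition 3 guarantees analytic eigenvalue branches, but not a smooth ordered minimum. The hypothetical analytic family $A(t) = \mathrm{diag}(t, -t)$ makes this concrete: its eigenvalue branches $t$ and $-t$ are analytic and cross at $t = 0$, yet $\lambda_{\min}(A(t)) = -|t|$ has a kink there. The sketch below compares one-sided difference quotients of $\lambda_{\min}$ at the crossing; this is the non-differentiability of the min operator that must be handled separately.

```python
def eigenvalue_branches(t):
    """Analytic eigenvalue branches of the hypothetical family A(t) = diag(t, -t)."""
    return t, -t

def lambda_min(t):
    """Ordered smallest eigenvalue, equal to -|t|: the minimum of the analytic branches."""
    return min(eigenvalue_branches(t))

step = 1e-6
left_slope = (lambda_min(0.0) - lambda_min(-step)) / step    # follows the branch t
right_slope = (lambda_min(step) - lambda_min(0.0)) / step    # follows the branch -t
print(left_slope, right_slope)   # 1.0 -1.0
```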

Given Propositions 2 and 3, the Hessian of Equation 2 must now be studied to determine when each proposition applies. The first property to establish is that the Hessian is continuously differentiable in time, which requires that $x(t)$ and $y(t)$ be continuously differentiable as well. Two assumptions are made to ensure that $H$ is continuously differentiable.

Assumption 1.

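The nonlinear least squares cost function in Equation 2 is three times continuously differentiable in $(x, y)$, so that its Hessian with respect to $x$ is continuously differentiable.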

Assumption 2.

The state $x(t)$ and measurement $y(t)$ (and so too the measurement model $h$) are continuously differentiable.

With Assumptions 1 and 2, the Hessian $H(x(t), y(t))$ is continuously differentiable in time but, as noted above, this alone is not enough to guarantee differentiability of the eigenvalues everywhere. From Proposition 3, if $H$ depends analytically on a single parameter, then its eigenvalues are differentiable even in the non-simple case. These two observations lead to two different methods of constructing valid I-CBFs: one where $H$ is analytic and another where the eigenvalues of $H$ are always simple, i.e., the eigenvalues never cross.

III-C Analytic Hessian

The goal of this section is to prove that the eigenvalues of the Hessian are sufficiently smooth. From Proposition 3, the eigenvalues of $H$ are analytic (and therefore smooth) provided that $H$ itself is an analytic function of only a single parameter. In our framework, this single parameter is time $t$, since closing the loop with a controller makes every signal in the system a function of time alone. To formalize this and prove differentiability for Equation 1, we assume the following.

Assumption 3.

The dynamics, measurement model, and control in Equation 1 are all analytic.

Theorem 1.

Consider the nonlinear system Equation 1 that satisfies Assumption 3. If the nonlinear least squares cost function in Equation 2 and the measurements are analytic, then the eigenvalues of the Hessian $H$ are analytic with respect to time $t$.

Proof.

By the Cauchy–Kovalevskaya theorem, the solution of Equation 1 is locally analytic in $t$, since the dynamics and control input are analytic by assumption. Consequently, $H(x(t), y(t)) = H(t)$ is locally an analytic function of the single parameter $t$, as a composition of analytic functions. Proposition 3 then applies, so the eigenvalues of the Hessian are analytic and hence differentiable. ∎

Remark 6.

One of the stronger assumptions made in Theorem 1 is that the control input is analytic, as it is often computed via a quadratic program for safety-critical control. While conditions under which quadratic programs yield analytic control are discussed in [19], quadratic programs often produce control laws that are only continuous. Cohen et al. [20] explore cases where this issue can be mitigated by creating a smooth version of the controller that still satisfies the CBF condition, at the expense of possibly more control effort. One solution discussed in [20] involves modifying the closed-form solution of the quadratic program to make the controller analytic. If the quadratic program is of the form

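$$u^*(x) = \arg\min_{u \in \mathbb{R}^m} \; c(u, x) \quad \text{s.t.} \quad \gamma(x) + \beta(x)^\top u \geq 0, \tag{4}$$

where $c$ is convex in $u$, $\gamma(x) = L_f \psi(x) + \alpha(\psi(x))$, and $\beta(x) = L_g \psi(x)^\top$, then it admits a closed-form solution that can be found using the KKT conditions [21]. The closed-form solution to Equation 4 is, in general, not analytic, as it is often piecewise. For instance, when $c(u, x) = \|u - u_d(x)\|^2$ for a desired input $u_d$ and $\beta(x) \neq 0$, the closed-form solution can be expressed as $u^*(x) = u_d(x) + \frac{\beta(x)}{\|\beta(x)\|^2}\,\mathrm{ReLU}\big(-\gamma(x) - \beta(x)^\top u_d(x)\big)$, where $\mathrm{ReLU}(s) = \max(0, s)$, which is not differentiable at $s = 0$. However, an arbitrarily close analytic approximation of the ReLU function can be constructed while ensuring the CBF condition is satisfied. For example, the softplus function

$$\sigma_\nu(s) = \frac{1}{\nu} \ln\big(1 + e^{\nu s}\big) \tag{5}$$

with $\nu \in \mathbb{R}_{>0}$ is an analytic approximation of $\mathrm{ReLU}$ that satisfies $\sigma_\nu(s) \geq \mathrm{ReLU}(s)$, so using it in place of $\mathrm{ReLU}$ always satisfies the CBF condition, at the expense of conservatism. The function $\alpha$ along with the parameter $\nu$ can be adjusted to offset this conservatism.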
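The closed-form safety filter discussed in this remark can be sketched for a single affine constraint $\gamma(x) + \beta(x)^\top u \geq 0$ and cost $\|u - u_d\|^2$, assuming the softplus $\sigma_\nu(s) = \frac{1}{\nu}\ln(1 + e^{\nu s})$ as the analytic replacement for ReLU. The values of $\gamma$, $\beta$, $u_d$, and $\nu$ are hypothetical. Because $\sigma_\nu(s) \geq \max(0, s)$ for all $s$, the smooth filter still satisfies the constraint; the price is a small nonzero correction even when $u_d$ is already safe.

```python
import math

def relu(s):
    return max(0.0, s)

def softplus(s, nu):
    """Analytic over-approximation of ReLU: (1 / nu) * log(1 + exp(nu * s)), nu > 0."""
    z = nu * s
    # Numerically stable rewrite of the same function: log(1 + e^z) = max(z, 0) + log1p(e^-|z|).
    return (max(z, 0.0) + math.log1p(math.exp(-abs(z)))) / nu

def safety_filter(u_d, gamma, beta, ramp):
    """Minimizer of ||u - u_d||^2 s.t. gamma + beta^T u >= 0 (beta != 0), with ReLU -> ramp."""
    slack = gamma + sum(bi * ui for bi, ui in zip(beta, u_d))   # constraint value at u_d
    gain = ramp(-slack) / sum(bi * bi for bi in beta)
    return [ui + gain * bi for ui, bi in zip(u_d, beta)]

def constraint(u, gamma, beta):
    """Value of gamma + beta^T u; nonnegative means the CBF condition holds."""
    return gamma + sum(bi * ui for bi, ui in zip(beta, u))

# Hypothetical values of gamma(x), beta(x), and a desired (e.g. LQR) input u_d at one state.
gamma, beta = -1.0, [1.0, 0.5]
u_d = [0.2, 0.0]
u_qp = safety_filter(u_d, gamma, beta, relu)
u_smooth = safety_filter(u_d, gamma, beta, lambda s: softplus(s, nu=10.0))
print(constraint(u_qp, gamma, beta), constraint(u_smooth, gamma, beta))
```

Larger $\nu$ brings the smooth filter closer to the QP solution, at the cost of larger derivatives near the switching surface.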

Having shown that the eigenvalues of the Hessian are analytic (and hence smooth) under the conditions of Theorem 1, the only remaining item to address is the differentiability of the min operator in Equation 3. We adopt the strategy in [15], which uses a smooth under-approximation of the min operator to achieve differentiability without losing set invariance. Specifically, let $\lambda_i$, $i = 1, \dots, n$, be the eigenvalues of the Hessian $H$. The smooth under-approximation of Equation 3 that preserves the set invariance condition is $\tilde{\psi}(x) = -\frac{1}{\kappa} \ln\big(\sum_{i=1}^{n} e^{-\kappa(\lambda_i - \epsilon)}\big)$ with $\kappa \in \mathbb{R}_{>0}$, which satisfies $\tilde{\psi} \leq \psi$. Hence, replacing $\psi$ with $\tilde{\psi}$ in the set invariance condition stated in Definition 4 ensures that the minimum eigenvalue of the Hessian never becomes less than (or, as mentioned in Remark 5, greater than) the specified threshold $\epsilon$. To summarize, we smooth the barrier function in Equation 3 to circumvent the non-differentiability of the min operator, and we then use Equation 5 to obtain an analytic control input so that the eigenvalues of the Hessian are themselves analytic and therefore differentiable.
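The smooth under-approximation of the min operator from [15] can be sketched as follows, assuming the log-sum-exp form $-\frac{1}{\kappa}\ln\sum_i e^{-\kappa(\lambda_i - \epsilon)}$; the eigenvalues, threshold, and values of $\kappa$ are hypothetical. The code subtracts the smallest argument before exponentiating, a purely numerical device that leaves the function value unchanged but avoids overflow for large $\kappa$. The result never exceeds $\lambda_{\min} - \epsilon$ and is within $\ln(n)/\kappa$ of it.

```python
import math

def smooth_min(values, kappa):
    """-(1/kappa) * log(sum_i exp(-kappa * v_i)): a smooth lower bound on min(values)."""
    m = min(values)   # numerical shift only; the returned value is the same function
    return m - math.log(sum(math.exp(-kappa * (v - m)) for v in values)) / kappa

def smooth_barrier(eigenvalues, eps, kappa):
    """psi_tilde = smooth_min(lambda_i - eps), which is <= lambda_min - eps."""
    return smooth_min([lam - eps for lam in eigenvalues], kappa)

eigs = [1.2, 1.25, 3.0]   # hypothetical Hessian eigenvalues (note the near-crossing pair)
eps = 0.5                 # hypothetical threshold
for kappa in (1.0, 10.0, 100.0):
    print(kappa, smooth_barrier(eigs, eps, kappa), min(eigs) - eps)
```

Larger $\kappa$ reduces conservatism but sharpens the approximation near eigenvalue crossings, which increases the magnitude of its derivatives there.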

III-D Anti-Crossing Control Barrier Function

Requiring the dynamics and controller to be analytic is what keeps the eigenvalues differentiable when the minimum eigenvalue is non-simple. However, if the eigenvalues can be shown to always be simple, i.e., to never cross, then the analyticity requirement can be dropped and differentiability of the eigenvalues follows from Proposition 2. Furthermore, the eigenvalue that is smallest initially remains the smallest for all time, so the min operator can be removed from Equation 3 and the smooth under-approximation outlined in the previous subsection is no longer necessary. It is therefore desirable to prevent eigenvalue crossing. We now introduce an additional CBF that works in conjunction with an I-CBF to prevent the eigenvalues from crossing. Fundamentally, the anti-crossing CBF maintains a small gap between each pair of consecutive eigenvalues, ensuring that the difference between them is at least $\delta \in \mathbb{R}_{>0}$. This can be imposed by defining the CBF

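$$\psi_{c,i}(x) = \lambda_{i+1}\big(H(x, y)\big) - \lambda_i\big(H(x, y)\big) - \delta, \qquad i = 1, \dots, n - 1. \tag{6}$$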

Since the eigenvalues are now required not to cross, we can order them as $\lambda_1 < \lambda_2 < \cdots < \lambda_n$, and so $\psi_{c,i}$ is positive when $\lambda_{i+1} - \lambda_i > \delta$, thereby defining the desired superlevel set. There are several alternatives to Equation 6, but the advantage of our choice is its simplicity and effectiveness (as was also the case for Equation 3).
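Assuming the anti-crossing barrier is imposed on consecutive ordered eigenvalues as $\lambda_{i+1} - \lambda_i - \delta$, its values can be sketched as below. The eigenvalues and gap $\delta$ in the usage lines are hypothetical; in a controller, each entry would enter as a separate CBF constraint alongside the I-CBF.

```python
def anti_crossing_barriers(eigenvalues, delta):
    """psi_{c,i} = lambda_{i+1} - lambda_i - delta for the eigenvalues sorted in ascending order."""
    lam = sorted(eigenvalues)
    return [lam[i + 1] - lam[i] - delta for i in range(len(lam) - 1)]

def gaps_respected(eigenvalues, delta):
    """True when every pair of consecutive eigenvalues is separated by at least delta."""
    return all(psi >= 0.0 for psi in anti_crossing_barriers(eigenvalues, delta))

print(anti_crossing_barriers([3.0, 1.0, 1.5], delta=0.2))   # hypothetical eigenvalues
print(gaps_respected([1.0, 1.05], delta=0.1))
```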

To show Equation 6 is minimally invasive, we leverage the following result that provides insights about the structure of the manifold on which the eigenvalues could cross.

Proposition 4.

The eigenvalues of an $n \times n$ real symmetric matrix can only cross on a manifold of codimension two, i.e., of dimension $\frac{n(n+1)}{2} - 2$ in the space $\mathbb{S}^n$.

Proof.

A detailed proof can be found in [22], but the core idea of the proof is based on the comparison between the number of independent parameters in a symmetric matrix and the number of parameters in its diagonalized representation. ∎

The anti-crossing CBFs are minimally invasive because the eigenvalues of the Hessian can only cross on a set of codimension two (Proposition 4); when the $n \times n$ Hessian is parameterized by an $n$-dimensional state, this set is generically a manifold of dimension $n - 2$. This property implies that the system's trajectory can avoid this manifold without deviating significantly from the desired control input $u_d$ or the desired state $x_d$, since it can circumvent the lower-dimensional manifold with minimal change. For instance, if $n = 3$, the points to avoid are one-dimensional, meaning that the trajectory must avoid specific lines in three-dimensional space.
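A standard $2 \times 2$ computation, included here only as an illustration of Proposition 4, makes the codimension-two structure explicit by writing a symmetric matrix in terms of its three free entries:

```latex
A = \begin{bmatrix} a & b \\ b & c \end{bmatrix} \in \mathbb{S}^2,
\qquad
\lambda_{2} - \lambda_{1} = \sqrt{(a - c)^2 + 4b^2},
\qquad
\lambda_1 = \lambda_2 \iff a = c \ \text{and} \ b = 0 .
```

A crossing therefore requires two independent equations in the three-dimensional space $\mathbb{S}^2$, so the crossing set is the line of scalar multiples of the identity, of dimension $3 - 2 = 1$.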

Regarding implementation, the analytic method requires stronger assumptions (Assumption 3) but can be computationally faster, as seen in Section IV, while the anti-crossing method is more intuitive and uses fewer hyperparameters.

III-E Predictive Measurement Models

A subtle but important element of the proposed approach is the need for information about the rate of change of the measurements with respect to time. In other words, the approach uses a form of measurement prediction to achieve set invariance, in a similar manner to how the system's dynamics are used. For the sake of clarity, let $\lambda_{\min}$ be simple. Then, from Equation 3 and Definition 8, $\dot{\psi} = \frac{\partial \lambda_{\min}}{\partial x}\big(f(x) + g(x)u\big) + \frac{\partial \lambda_{\min}}{\partial y}\,\dot{y}$, so the time variation of the measurements must be accounted for to achieve set invariance. Intuitively, the derivative $\dot{y}$ can be thought of as providing an instantaneous prediction of the next measurement. In practice, one rarely has access to $\dot{y}$, so it must either be estimated directly or approximated through the measurement model as $\dot{y} \approx \frac{\partial h}{\partial x}\dot{x}$. Future work will investigate this trade-off, but we expect the latter, which we call using predictive measurement models, to be a fruitful research area given the various sensing modalities, such as vision, LiDAR, and event cameras, used on mobile systems. This work uses the latter approximation.
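For the range-only model of Section IV, the predictive approximation $\dot{y} \approx \frac{\partial h}{\partial x}\dot{x}$ has a simple closed form, $\dot{y}_i \approx (p - b_i)^\top \dot{p} / \|p - b_i\|$. The sketch below compares it with a finite-difference rate for a hypothetical position, velocity, and beacon; it assumes the measurement model is exact and the velocity is known, whereas in practice an estimate would be used.

```python
import math

def range_meas(p, beacon):
    """h_i(p) = ||p - b_i||."""
    return math.hypot(p[0] - beacon[0], p[1] - beacon[1])

def predicted_range_rate(p, v, beacon):
    """Predictive model y_dot ~ (dh_i/dp) p_dot = (p - b_i)^T v / ||p - b_i||."""
    return ((p[0] - beacon[0]) * v[0] + (p[1] - beacon[1]) * v[1]) / range_meas(p, beacon)

# Hypothetical position (m), velocity (m/s), and beacon (m).
p, v, beacon = (1.0, 2.0), (0.5, -0.3), (3.0, 0.0)
dt = 1e-6
fd_rate = (range_meas((p[0] + dt * v[0], p[1] + dt * v[1]), beacon) - range_meas(p, beacon)) / dt
print(predicted_range_rate(p, v, beacon), fd_rate)
```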

III-F Higher Relative Degree I-CBFs

The previous analysis can be extended to control barrier functions with relative degree greater than one. Various approaches for constructing CBFs suitable for higher relative degree have been suggested in the literature [23, 24, 25]. Among these, one method that works well for I-CBFs is the one presented in [24]. In this method, a new CBF $\psi_{r-1}$ of relative degree one is constructed that in the limit has the same safe set as the original $\psi$; this new $\psi_{r-1}$ is then an implementable I-CBF. If the relative degree of the original $\psi$ is $r$, Assumption 2 must be strengthened to $r$-times continuous differentiability to ensure that the eigenvalues are sufficiently smooth. This method for higher relative degrees works for both the analytic Hessian and anti-crossing CBFs, as demonstrated in Section IV.
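The relative-degree-two construction of [24] can be sketched on a 1-D double integrator $\ddot{p} = u$ with a hypothetical position limit $\psi_0(p) = p_{\max} - p$, standing in for an I-CBF (which also has relative degree two for the double integrator of Section IV). Assuming linear class $\mathcal{K}$ functions $\alpha_1(s) = k_1 s$ and $\alpha_2(s) = k_2 s$, one defines $\psi_1 = \dot{\psi}_0 + k_1 \psi_0$ and enforces $\dot{\psi}_1 + k_2 \psi_1 \geq 0$, which here reduces to an upper bound on $u$. The gains, limit, desired input, and Euler step are illustrative.

```python
def hocbf_input_bound(p, v, p_max, k1, k2):
    """Largest u with psi1_dot + k2 * psi1 >= 0, where psi0 = p_max - p and psi1 = psi0_dot + k1 * psi0."""
    psi0 = p_max - p
    psi1 = -v + k1 * psi0          # psi0_dot = -v
    # psi1_dot = -u - k1 * v, so the condition reads u <= -k1 * v + k2 * psi1.
    return -k1 * v + k2 * psi1

def simulate(p0=0.0, v0=0.0, p_max=1.0, k1=2.0, k2=2.0, u_des=1.0, dt=1e-3, steps=10_000):
    """Forward-Euler rollout of p_ddot = u with the filtered input u = min(u_des, bound)."""
    p, v, max_p = p0, v0, p0
    for _ in range(steps):
        u = min(u_des, hocbf_input_bound(p, v, p_max, k1, k2))
        p, v = p + dt * v, v + dt * u
        max_p = max(max_p, p)
    return p, v, max_p

p_end, v_end, max_p = simulate()
print(p_end, v_end, max_p)
```

For a scalar input with one upper-bound constraint, taking the minimum of the desired input and the bound is exactly the CBF quadratic-program solution, so no solver is needed in this toy case.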

IV Illustrative Example

The proposed method is demonstrated on a 2-D double integrator system that uses beacons at known locations for localization. The I-CBFs have relative degree two with respect to this system, so we employ [24] to achieve set invariance; the parameters used for the analytic and anti-crossing methods are listed in the Appendix. At each time step, the system's state is estimated by solving a nonlinear least squares problem via gradient descent, using either range-only or bearing-only measurements, as these are common in practice. The estimated state and the Hessian of the nonlinear least squares cost are then used by the safety controller to override an LQR controller that drives the system to a desired location. The gradient of the minimum eigenvalue was computed analytically. Per Section III-E, the measurement model was used to predict the rate of change of the measurements. The position of the system is denoted $p \in \mathbb{R}^2$, with beacons located at $b_i \in \mathbb{R}^2$ for $i = 1, \dots, N$.

IV-A Range-Only Measurements

The measurement model for range-only beacons is $y_i = \|p - b_i\|$ for $i = 1, \dots, N$. The covariance of each measurement was chosen as a function of the distance from the beacon to capture measurement dropout, and the off-diagonal entries were set to zero.
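The range-only safe set can be sketched under explicit assumptions: noise-free measurements, so that at the true position every term multiplying a residual vanishes and the Hessian reduces to $2\sum_i w_i u_i u_i^\top$, and a hypothetical dropout weight $w(r) = e^{-(r/\sigma)^2}$, which is not the covariance model used in this letter. The beacon layout, $\sigma$, and threshold $\epsilon$ are also hypothetical. The script prints a coarse map of the set $\{p : \lambda_{\min}(H) \geq \epsilon\}$ in the spirit of Fig. 2(a).

```python
import math

BEACONS = [(0.0, 0.0), (4.0, 0.0), (2.0, 3.0)]   # hypothetical layout (m)
SIGMA = 3.0     # hypothetical dropout length scale (m)
EPS = 0.2       # hypothetical threshold on lambda_min

def weight(r):
    """Hypothetical distance-dependent weight modelling range dropout."""
    return math.exp(-(r / SIGMA) ** 2)

def lambda_min_range(p):
    """lambda_min of H = 2 * sum_i w(r_i) u_i u_i^T (range-only Hessian at zero residual)."""
    a = b = c = 0.0
    for bx, by in BEACONS:
        dx, dy = p[0] - bx, p[1] - by
        r = math.hypot(dx, dy)
        s = 2.0 * weight(r) / (r * r)
        a, b, c = a + s * dx * dx, b + s * dx * dy, c + s * dy * dy
    return 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)

def in_safe_set(p):
    """Membership in S = {p : lambda_min(H(p)) >= EPS}."""
    return lambda_min_range(p) >= EPS

# Coarse text map of the safe set ('#' safe, '.' unsafe); offsets avoid the beacon locations.
for j in range(8, -4, -1):
    print("".join("#" if in_safe_set((0.5 * i + 0.01, 0.5 * j + 0.01)) else "."
                  for i in range(-6, 15)))
```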

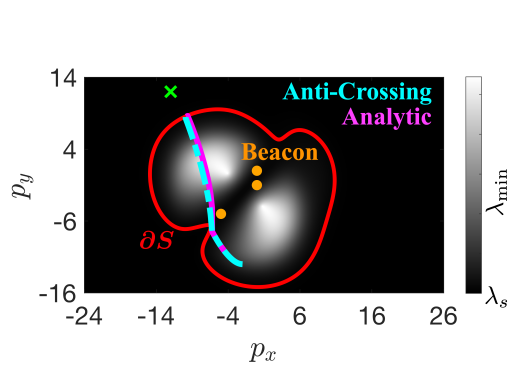

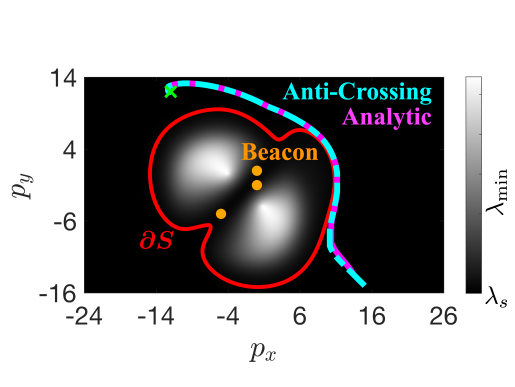

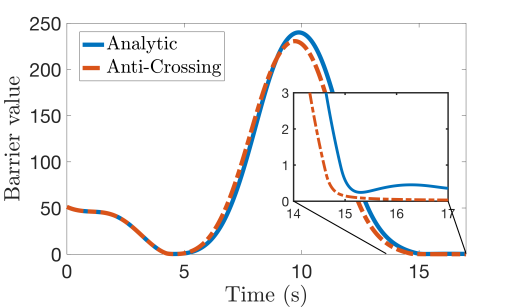

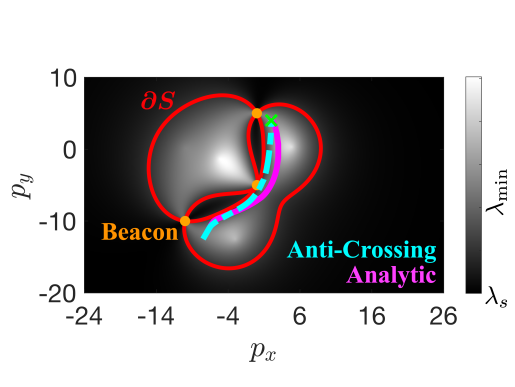

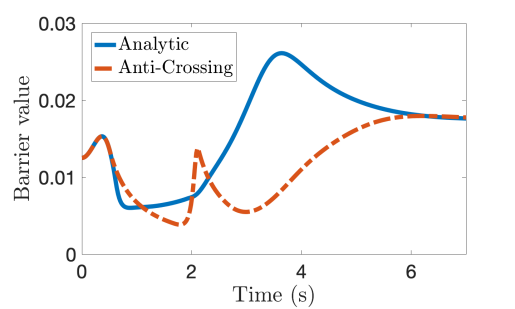

Localization. For localization, the safe region where $\lambda_{\min}(H) \geq \epsilon$ is the region inside the red line in Fig. 2(a), where the grey scale denotes the value of $\lambda_{\min}(H)$ as a function of position. Both the analytic and anti-crossing methods performed well (Fig. 2(c)), ensuring that the barrier function remained positive and that the minimum eigenvalue stayed above the specified threshold. The anti-crossing method was more aggressive: the system took a more direct path to the goal and consequently came closer to the boundary of $\mathcal{S}$. Moreover, its peak control effort was twice that of the analytic I-CBF, highlighting the trade-off between the analytic and anti-crossing formulations. The average per-step computation time was 0.7 ms for the analytic method and 35.6 ms for the anti-crossing method. Most of the computation time for the anti-crossing method was spent solving the optimization, whereas forming the Hessian and its derivatives took only 0.08 ms.

Detection Avoidance. As noted in Remark 5, the method in this letter can also be used for detection avoidance by ensuring that the minimum eigenvalue of the Hessian remains sufficiently small. The safe set where detection can be avoided is $\{x : \lambda_{\min}(H) \leq \epsilon\}$ and is indicated by the red line in Fig. 2(b). The system remained outside the detectable set, as the barrier function remained positive (Fig. 2(d)). As in the localization case, the anti-crossing method was more aggressive than the analytic method.

IV-B Bearing-Only Measurements

Next, we demonstrate the method using bearing-only measurements for localization. The measurement model is the bearing $y_i$ of beacon $i$ relative to the system, for $i = 1, \dots, N$, and the weighting matrix is constant, since the eigenvalues naturally decay as the distance from the beacons increases, which eliminates the need to model measurement degradation or dropout separately.
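The decay mentioned above can be checked with a small sketch, assuming the bearing convention $y_i = \operatorname{atan2}(b_{i,2} - p_2,\, b_{i,1} - p_1)$, unit weights, noise-free measurements, and a hypothetical beacon layout. The gradient of each bearing has norm $1/\|b_i - p\|$, so the zero-residual Hessian $2\sum_i \nabla y_i \nabla y_i^\top$ shrinks roughly with the inverse square of the distance.

```python
import math

BEACONS = [(0.0, 0.0), (4.0, 0.0), (2.0, 3.0)]   # hypothetical layout (m)

def bearing(p, beacon):
    """y_i = atan2(b_y - p_y, b_x - p_x): bearing from the system to beacon i (assumed convention)."""
    return math.atan2(beacon[1] - p[1], beacon[0] - p[0])

def bearing_gradient(p, beacon):
    """Gradient of the bearing with respect to p; its norm is 1 / ||b_i - p||."""
    dx, dy = beacon[0] - p[0], beacon[1] - p[1]
    r2 = dx * dx + dy * dy
    return dy / r2, -dx / r2

def lambda_min_bearing(p):
    """lambda_min of H = 2 * sum_i g_i g_i^T (unit weights, zero residual)."""
    a = b = c = 0.0
    for beacon in BEACONS:
        gx, gy = bearing_gradient(p, beacon)
        a, b, c = a + 2 * gx * gx, b + 2 * gx * gy, c + 2 * gy * gy
    return 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)

# Move straight away from the beacons and watch lambda_min decay.
for dist in (1.0, 5.0, 25.0):
    print(dist, lambda_min_bearing((2.0, 1.0 - dist)))
```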

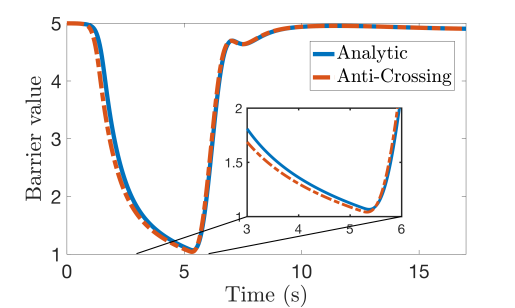

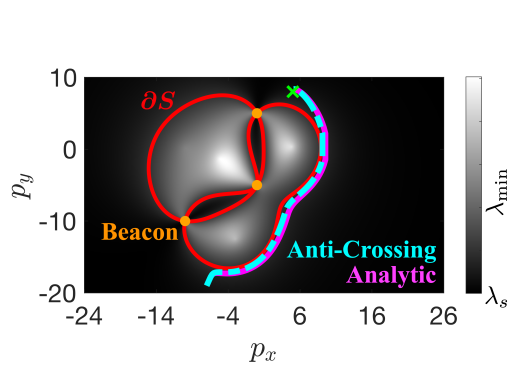

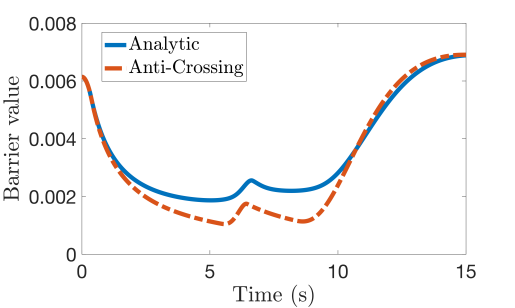

Localization. For safe localization, the safe region where $\lambda_{\min}(H) \geq \epsilon$ is shown in Fig. 3(a) by the red line. As Fig. 3(c) shows, both the analytic and anti-crossing methods kept the barrier function above zero, but their values differed substantially. As with the range-only measurements, the analytic method was more conservative, but its peak control effort was approximately one fifth as large. The average per-step computation time for the analytic and anti-crossing methods was 0.8 ms and 19.4 ms, respectively; for the anti-crossing method, computing the Hessian and its derivatives took only 0.06 ms. Both methods ensured that the minimum eigenvalue of the Hessian did not drop below the specified threshold, even with the complex shape of the safe set $\mathcal{S}$.

Detection Avoidance. Bearing-only measurements can also be used in a detection avoidance formulation. For detection avoidance, the safe region where $\lambda_{\min}(H) \leq \epsilon$ is shown in Fig. 3(b) by the red line. Both the anti-crossing and analytic methods kept the system in the safe set, as indicated by the value of the barrier function (Fig. 3(d)), with the anti-crossing method exhibiting more aggressive behavior.

V Conclusion

This letter presented a method for maintaining reliable localization in mobile systems by leveraging Information Control Barrier Functions (I-CBFs) to impose constraints on the eigenvalues of the Hessian in optimization-based localization algorithms. Unlike previous approaches, which often require prior knowledge of the environment or are tailored to specific situations, this framework offers a general solution applicable to various systems. Future work will focus on applying this method to vision- and LiDAR-based localization [10] and exploring how some of the assumptions in this letter might be relaxed. Additionally, integrating robust, stochastic, and adaptive methods [26] could broaden its applicability and provide safety guarantees for uncertain systems. The method was demonstrated through simulations of range-only and bearing-only beacon localization, showing that both proposed methods preserve localization safety.

VI Appendix

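Here we include derivations and formulas for computing the derivatives of a simple eigenvalue of a symmetric matrix for completeness. If $\lambda$ and $v$ are the eigenvalue and (normalized) eigenvector of a time-dependent symmetric matrix $A(t)$, then by definition $A v = \lambda v$, which can be differentiated to get $\dot{A} v + A \dot{v} = \dot{\lambda} v + \lambda \dot{v}$. Multiplying both sides by $v^\top$ and using $v^\top A = \lambda v^\top$ and $v^\top v = 1$, one obtains $\dot{\lambda} = v^\top \dot{A} v$. Using this relation, the first derivative of $v$ is $\dot{v} = (\lambda I - A)^\dagger \dot{A} v$, where $(\cdot)^\dagger$ is the pseudoinverse. Differentiating $\dot{\lambda}$ and $\dot{v}$ again gives

$$\ddot{\lambda} = v^\top \ddot{A} v + 2\, v^\top \dot{A} \dot{v}$$

and

$$\ddot{v} = (\lambda I - A)^\dagger \big(\ddot{A} v + 2 (\dot{A} - \dot{\lambda} I) \dot{v}\big) - \|\dot{v}\|^2 v.$$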
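The first- and second-order eigenvalue formulas can be checked numerically. The sketch below uses a hypothetical smooth path $A(t)$ of $2 \times 2$ symmetric matrices with a simple smallest eigenvalue, evaluates $\dot{\lambda} = v^\top \dot{A} v$, $\dot{v} = (\lambda I - A)^\dagger \dot{A} v$, and $\ddot{\lambda} = v^\top \ddot{A} v + 2 v^\top \dot{A} \dot{v}$, and compares them with central finite differences. For a $2 \times 2$ matrix the pseudoinverse reduces to $w w^\top / (\lambda - \mu)$, where $(\mu, w)$ is the other eigenpair.

```python
import math

def A(t):
    """Hypothetical smooth path of symmetric matrices [[a, b], [b, c]], returned as (a, b, c)."""
    return 2.0 + math.sin(t), 0.5 * t, 1.0 + t * t

def A_dot(t):
    return math.cos(t), 0.5, 2.0 * t

def A_ddot(t):
    return -math.sin(t), 0.0, 2.0

def eig2(a, b, c):
    """(lam_min, v_min, lam_max, v_max) of [[a, b], [b, c]] via the principal-axis angle."""
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    mean, rad = 0.5 * (a + c), math.hypot(0.5 * (a - c), b)
    v_max = (math.cos(theta), math.sin(theta))
    v_min = (-math.sin(theta), math.cos(theta))
    return mean - rad, v_min, mean + rad, v_max

def quad(u, M, v):
    """u^T M v for a symmetric 2x2 matrix M given as (a, b, c)."""
    a, b, c = M
    return u[0] * (a * v[0] + b * v[1]) + u[1] * (b * v[0] + c * v[1])

def eig_derivatives(t):
    """Derivatives of the smallest (assumed simple) eigenpair of A(t)."""
    lam, v, mu, w = eig2(*A(t))
    lam_dot = quad(v, A_dot(t), v)                                   # v^T A_dot v
    v_dot = tuple(quad(w, A_dot(t), v) / (lam - mu) * wi for wi in w)  # (lam I - A)^+ A_dot v
    lam_ddot = quad(v, A_ddot(t), v) + 2.0 * quad(v, A_dot(t), v_dot)
    return lam, lam_dot, lam_ddot, v_dot

# Compare with central finite differences at a hypothetical time instant.
t, h = 0.3, 1e-4
lam, lam_dot, lam_ddot, v_dot = eig_derivatives(t)
lam_p, v_p = eig2(*A(t + h))[:2]
lam_m, v_m = eig2(*A(t - h))[:2]
fd_dot = (lam_p - lam_m) / (2 * h)
fd_ddot = (lam_p - 2 * lam + lam_m) / (h * h)
fd_v = ((v_p[0] - v_m[0]) / (2 * h), (v_p[1] - v_m[1]) / (2 * h))
print(lam_dot, fd_dot, lam_ddot, fd_ddot)
```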

Simulation parameters are listed in Tables I, II, III and IV. Unless otherwise noted the simulation time was s and all initial and final velocities were zero.

Table I

| Parameter | Analytic | Anti-Crossing |
| --- | --- | --- |
| | | |
| (m) | | |
| (m) | N/A | |
| | N/A | |
| | N/A | |
| (m s⁻¹) | -1 | -1 |

Table II

| Parameter | Analytic | Anti-Crossing |
| --- | --- | --- |
| | | |
| (m) | | |
| (m) | N/A | |
| | N/A | |
| | N/A | |

Table III

| Parameter | Analytic | Anti-Crossing |
| --- | --- | --- |
| | | |
| (m) | | |
| (m) | N/A | |
| | N/A | |
| | N/A | |

Table IV

| Parameter | Analytic | Anti-Crossing |
| --- | --- | --- |
| | | |
| (m) | | |
| (m) | N/A | |
| | N/A | |
| | N/A | |

References

- [1] T. D. Barfoot, State estimation for robotics. Cambridge Univ. Press, 2024.

- [2] S. Prentice and N. Roy, “The belief roadmap: Efficient planning in belief space by factoring the covariance,” Int. J. Robot. Res., vol. 28, no. 11-12, pp. 1448–1465, 2009.

- [3] R. Platt Jr et al., “Belief space planning assuming maximum likelihood observations.” in Robotics: Science and Systems, vol. 2, 2010.

- [4] G. Costante et al., “Perception-aware path planning,” arXiv:1605.04151, 2016.

- [5] D. Falanga et al., “PAMPC: Perception-aware model predictive control for quadrotors,” in IEEE Int. Conf. Intell. Robots Syst., 2018, pp. 1–8.

- [6] K. Hausman et al., “Observability-aware trajectory optimization for self-calibration with application to UAVs,” IEEE Robot. Autom. Lett., vol. 2, no. 3, pp. 1770–1777, 2017.

- [7] I. Salehi, G. Rotithor, R. Saltus, and A. P. Dani, “Constrained image-based visual servoing using barrier functions,” in Proc. IEEE Int. Conf. Robot. Autom., 2021, pp. 14 254–14 260.

- [8] D. Coleman, S. D. Bopardikar, and X. Tan, “Using control barrier functions to incorporate observability: Application to range-based target tracking,” J. Dyn. Syst. Meas. Control Trans. ASME, vol. 146, no. 4, 2024.

- [9] S. Leutenegger et al., “Keyframe-based visual–inertial odometry using nonlinear optimization,” Int. J. Robot. Res., vol. 34, no. 3, pp. 314–334, 2015.

- [10] K. Chen, R. Nemiroff, and B. T. Lopez, “Direct lidar-inertial odometry and mapping,” arXiv:2305.01843, 2023.

- [11] A. D. Ames et al., “Control barrier function based quadratic programs for safety critical systems,” IEEE Trans. Autom. Control, vol. 62, no. 8, pp. 3861–3876, 2016.

- [12] ——, “Control barrier functions: Theory and applications,” in Proc. Euro. Control Conf. IEEE, 2019, pp. 3420–3431.

- [13] T. Tao, “Power series,” in Analysis II. Springer, 2006, pp. 474–490.

- [14] P. Glotfelter, J. Cortés, and M. Egerstedt, “Nonsmooth barrier functions with applications to multi-robot systems,” IEEE Control Syst. Lett., vol. 1, no. 2, pp. 310–315, 2017.

- [15] T. G. Molnar and A. D. Ames, “Composing control barrier functions for complex safety specifications,” IEEE Control Syst. Lett., 2023.

- [16] D. Serre, Matrices. Springer, 2010.

- [17] A. Kriegl, P. W. Michor, and A. Rainer, “Denjoy–Carleman differentiable perturbation of polynomials and unbounded operators,” Integral Equ. Oper. Theory, vol. 71, no. 3, p. 407, 2011.

- [18] P. D. Lax, Linear Algebra and Its Applications. Wiley, 2007.

- [19] P. Mestres, A. Allibhoy, and J. Cortés, “Regularity properties of optimization-based controllers,” arXiv:2311.13167, 2023.

- [20] M. H. Cohen et al., “Characterizing smooth safety filters via the implicit function theorem,” IEEE Control Syst. Lett., 2023.

- [21] S. Boyd and L. Vandenberghe, Convex optimization. Cambridge Univ. Press, 2004.

- [22] J. R. Magnus and H. Neudecker, Matrix differential calculus with applications in statistics and econometrics. Wiley, 2019.

- [23] Q. Nguyen and K. Sreenath, “Exponential control barrier functions for enforcing high relative-degree safety-critical constraints,” in Proc. Am. Control Conf., 2016, pp. 322–328.

- [24] W. Xiao and C. Belta, “Control barrier functions for systems with high relative degree,” in Proc. IEEE Conf. Decis. Control, 2019, pp. 474–479.

- [25] M. H. Cohen, R. K. Cosner, and A. D. Ames, “Constructive safety-critical control: Synthesizing control barrier functions for partially feedback linearizable systems,” IEEE Control Syst. Lett., 2024.

- [26] B. T. Lopez and J.-J. E. Slotine, “Unmatched control barrier functions: Certainty equivalence adaptive safety,” in Proc. Am. Control Conf. IEEE, 2023, pp. 3662–3668.