InfoPrompt: Information-Theoretic Soft Prompt Tuning for Natural Language Understanding

Soft prompt tuning achieves superior performances across a wide range of few-shot tasks. However, the performances of prompt tuning can be highly sensitive to the initialization of the prompts. We also empirically observe that conventional prompt tuning methods cannot encode and learn sufficient task-relevant information from prompt tokens. In this work, we develop an information-theoretic framework that formulates soft prompt tuning as maximizing mutual information between prompts and other model parameters (or encoded representations). This novel view helps us to develop a more efficient, accurate and robust soft prompt tuning method InfoPrompt. With this framework, we develop two novel mutual information based loss functions, to (i) discover proper prompt initialization for the downstream tasks and learn sufficient task-relevant information from prompt tokens and (ii) encourage the output representation from the pretrained language model to be more aware of the task-relevant information captured in the learnt prompt. Extensive experiments validate that InfoPrompt can significantly accelerate the convergence of the prompt tuning and outperform traditional prompt tuning methods. Finally, we provide a formal theoretical result for showing to show that gradient descent type algorithm can be used to train our mutual information loss.

1 Introduction

Soft prompt tuning has shown great successes in a wide range of natural language processing tasks, especially in low-resource scenarios [42, 24, 43]. With a relatively small portion of tunable prompt parameters appended to the input of the context, the language model can be trained on the downstream tasks with the large scale pretrained parameters frozen. Compared with conventional fine tuning methods, prompt tuning requires less memory and computational resources to update these significantly smaller sized prompt parameters. In addition, in low-shot learning scenarios, prompt tuning can prevent the language model overfitting on the training data, and thus maintain the generalization ability of pretrained language models.

However, recent works reveal that it is non-trivial to find proper initialization of the prompt tokens. Several works have investigated the effect of prompt initialization on the prompt tuning performances [65, 72] and showed that the performances of prompt tuning are highly sensitive to the prompt initialization. However, since the prompt initialization can be variant to different downstream tasks and pretrained language models, in low-resource tasks, we can hardly find very accurate knowledge to guide us to obtain proper initialization [15].

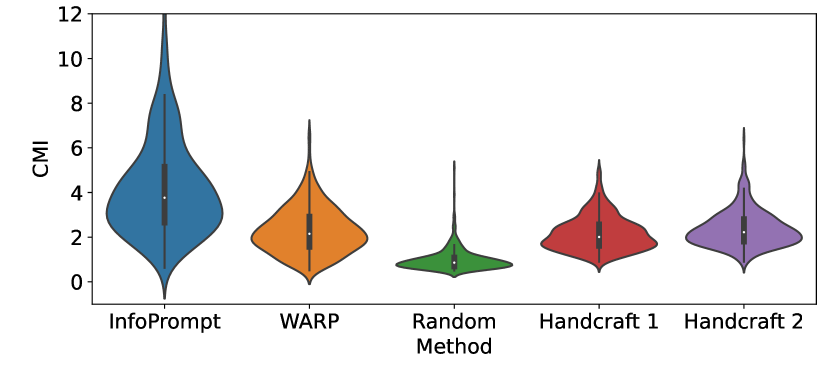

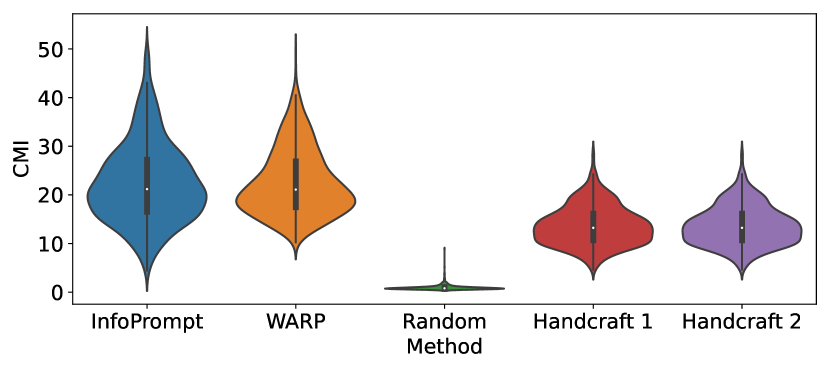

In addition to the above limitations of soft prompt tuning in the initialization, we also empirically observe that conventional prompt tuning methods cannot effectively learn sufficient task-relevant information from prompt tokens. Specifically, conventional prompt tuning methods cannot locate prompts with task-relevant information encoded by the frozen language models conditioned on the input context. To understand the relevance between the prompt tokens and downstream tasks, we calculate the conditional mutual information (CMI) between the prompt tokens and the latent representation from the language model conditioned on the input context. We follow [33] to determine the positions of prompt tokens inserted between the input sentence. Figure 1 shows the distribution of CMI of the prompts learned or handcrafted from different methods. The randomly sampled prompts have the lowest CMI. The prompts learned by a soft prompt tuning method, WARP [33], can have relatively better CMI than handcrafted prompts. By directly maximizing the CMI, our InfoPrompt (detailed in Section 3) can encode more informative prompts. Without the guidance of task-relevant information, randomly exploring the prompts through the large continuous space of the prompt tokens can be inefficient, where a similar challenge is discussed in [58]. Some related results [58, 24, 62] show that prompt tuning takes much larger numbers of epochs to converge comparing to conventional fine tuning methods. Our method can converge more easily than traditional soft prompt tuning method and we provide the theoretical guarantees of the convergence of the proposed losses which are trained using conventional gradient decent type algorithms.

In this paper, to address the above limitations, we develop an information-theoretic framework that formulates soft prompt tuning as maximizing mutual information between prompts and other model parameters (or encoded representations). With this framework, we develop InfoPrompt with two novel mutual information based loss functions. (i) To discover proper prompt initialization for the downstream tasks and learn sufficient task-relevant information from prompt tokens, we optimize the head loss which maximizes the mutual information between the prompt and the language head. By optimizing this head loss, the prompt can effectively learn task-relevant information from the downstream tasks, since the language head usually contains the information from the downstream tasks. Besides, the mutual information loss can help to guide the learning of the prompt tokens in the early steps to tackle the initialization challenge, since language head parameters are more likely to learn the downstream task information more quickly. (ii) To further encourage the output representation from the pretrained language model (i.e., encoded representation) to be more aware of the task-relevant information captured in the learnt prompt, we optimize the representation loss which maximizes the conditional mutual information between the prompt tokens and the feature representations conditioned on the input context.

Our contributions are summarized as:

-

•

We revisit the limitations of soft prompt tuning in the initialization and discover that existing prompt tuning methods cannot learn sufficient task-relevant information from prompt tokens. We propose to jointly solve these limitations from an information-theoretic perspective.

-

•

We propose an information-theoretic prompt tuning framework InfoPrompt. We develop two novel loss functions to find proper prompt initialization and learn sufficient task-relevant information from prompt tokens, without requiring any prior knowledge.

-

•

Extensive experiments on 6 tasks and 3 datasets validate that, without any prior knowledge, InfoPrompt can significantly accelerate the convergence of the prompt tuning and outperform traditional prompt tuning methods.

-

•

We provide a formal theoretical result to show that gradient descent type algorithm can be used to train our mutual information loss.

2 Preliminary

2.1 Prompt Tuning

Soft prompt tuning has shown great successes in a wide range of NLP tasks [42, 24, 43]. Let denote the encoder of a pretarined language model, e.g., Roberta-Large [47]. Assume is a text sequence of length and is its label. By prompting, we add extra information for the model to condition on during its prediction of . is a sequence of token embeddings. is the number of prompt token. , , is a embedding vector with dimension and is the embedding dimension of the pretrained language model. We first embed each token of into its corresponding token embedding from the pretrained language model. is inserted into the the resulting embedding sequence of to form a template, which is further encoded by the pretrained encoder into the representation space of the pretrained langauge model. The template is detailed with the experiments. Formally, we denote such a process as , with being the encoded representation. The model prediction for is made on top of with a trainable language modeling head (parameterized by ), s.t., the output is the distribution over all possible labels for classification. The whole model is generally trained via minimizing the following loss function,

| (1) |

The pretrained encoder is frozen during training. Different from previous works [42] where the prompts are learnable parameters, in our approach, the prompts are encoded from the input , so that the prompts can encode task-relevant information from the training text . We will elaborate on how the prompts are encoded from input in Section 4.

2.2 Mutual Information

Mutual information (MI) is a metric in information theory [60, 14], which measures the amount of shared information between two random variables. The mutual information between two random variables and is

| (2) |

Inspired by [63], we use MI as the criterion for comparing prompts and other model parameters. MI has also been applied to measure the similarity between the masked token and the corresponding context in the pretraining of Multilingual Masked Language Modeling [9], the relevance between the document and a sentence in document summarization [55] and the source sentence and the target translated sentence in Neural Machine Translation (NMT) [80].

3 Our Method: InfoPrompt

As mentioned above, we want to the learnt prompts to be task-relevant. To achieve this, we notice that the language model head is trained with both the data representation and the classification labels, thus show be relevant to the task to be trained. In encouraging the task-relevancy of the learnt prompts, we consider maximizing the mutual information between the prompt and the parameters of he language model head, denoted as . By maximizing such mutual information, the learnt prompt will be more correspondent with the training data with which the language model head is trained, thus captures more task-relevant information from training. Further, in order for the pretrained language model to properly encode the task-relevant information in the prompt, we additionally maximize the mutual information between the prompt and the representation from the pretrained language model, so that the encoded representation can be aware of the task-relevant information captured by the prompt. In addition, we also provide theoretical guarantees of the convergence of those losses trained by conventional gradient decent type algorithms, which enables our method to converge more easily than traditional soft prompt tuning methods and avoid the problem of large training epochs. Below, we denote the negative mutual information between the prompt and parameters of the language model head as the head loss. The negative mutual information between the prompt and the representation from the pretrained language model is denoted as the representation loss.

3.1 The Head Loss

the head loss is the negative mutual information between the prompt and parameters , i.e., . In maximizing , we follow [52] that approximate it with the following lower bound,

| (3) |

is a constant and is a Noise Contrastive Estimation (NCE) of mutual information,

| (4) |

are the negative prompt samples for contrastive learning. In practice, we randomly sample tokens from the context as the negative samples, i.e., and .

We model the score function as a standard bilinear function with the learnable matrix

| (5) |

where and are encoded from . is a trainable matrix. Since the language model head is learnt on top of the output from the last layer of the pretrained language model, the learning of its parameters is easier than the learning of the prompt (the input layer of the pretrained language model). Therefore, may capture more task-relevant information than in the early stage of training. By maximizing the mutual information between and , the task-relevant information can be transferred to in the initial training steps. In this way, can be more task-relevant especially in the early training stage. Experiments in Section 6 also show that our head loss, , can facilitate the training of the initial training steps.

3.2 The Representation Loss

The representation loss, denoted as , is defines as the negative of mutual information between the prompt and the encoded representation from the pretrained language model, i.e., . Similar to the head loss, we approximate the representation loss with its lower bound,

| (6) |

and,

| (7) |

are the negative samples. Here, we overload the notations of InfoNCE loss and score function for conciseness. Let be a trainable matrix, the function for the representation loss is defined by,

| (8) |

We use variational inference methods [29] to recover the latent distribution of . Specifically, we assume that the latent distribution is , where is the normal distribution with mean and diagonal covariance matrix . We model and via,

| (9) |

and are trainable fully connected layers. Since the negative samples of , i.e., , should not be paired with , we assume the are drawn from , s.t.,

| (10) |

In contrast to , we have so that are not paired with . By maximizing the representation loss , we encourage the encoded representation to be more aware of the prompt , so that the task-relevant information in can be properly encoded by the pretrained language model in producing .

3.3 Overall Objective

We minimize the following objective in prompt tuning:

| (11) |

We denote as the task loss. and are balancing parameters for the proposed representation loss and head loss, respectively. We denote our approach as InfoPrompt.

3.4 Theoretical Guarantees

We state our main theoretical result as follows. Due to the space limit, we delay the proof into Appendix.

Theorem 3.1.

Given the Loss function (Eq. (11)) and conditions specified in Appendix C.1 and D, using gradient descent type of greedy algorithm, we can find the optimal solution of that loss function.

We provide theoretical guarantees of the convergence of those losses trained by conventional gradient decent type algorithms, which enables our method to converge more easily than traditional soft prompt tuning methods and avoid the problem of large training epochs.

4 Experiments

4.1 Datasets

We conduct experiments with datasets of sequence classification from the GLUE benchmark [74], along with those of relation extraction tasks and NER tasks. We choose four seqience classification tasks from the GLUE benchmark: RTE (Recognizing Textual Entailment, [4]), MRPC (Microsoft Research Paraphrase Corpus, [16]), CoLA (Corpus of Linguistic Acceptability, [73]) and SST-2 (Sentence Sentiment Treebank, [61]). We choose these tasks because their datasets are in smaller sizes and prompt tuning is comparably more effective in low-resource setting [33, 45]. For the task of relation extraction, we evaluate our method on ACE2005 corpus and Semeval-2010 datasets [32]. We also use the ACE2005 corpus for the task of NER. Note that the entity spans for NER have been given ACE2005. Unlike the standard NER model that learns to predict the entity span and entity type simultaneously from the raw text sequence, our model only predicts the entity type based on the given entity span. We follow the same data splitting strategy for ACE2005 corpus as the previous work [78, 54]. For the Semeval-2010 tasks, we follow the official data partition [32].

4.2 Experiment Settings

We follow the resource constrained scenario in [33] that train each task with only 64 or 256 samples. We experiment with and prompt tokens for each task. The prompt tokens are inserted into the template for each task. Similar to [33], we adopt the RoBERTa-large model as our pretrained encoder. We freeze pretrained parameters are only trained with parameters of the prompt head and prompt tokens. During training, we empirically set and . The number of negative of negative samples . The learning rate is and the batch size is 8. For each task, we report the results after 30 epoches, averaged over 5 random seeds. To encode the prompt from , we first encode into via . For each , we have , , .

For the tasks of sequence classification and relation extraction, we follow the template of [33] that contains a token. The representation is the token from the last layer of the Roberta-Large encoder. For the tasks of named entity recognition, we have the token before the given entity span, with the rest being the same as for sequence classification.

| CoLa () | CoLa () | RTE () | RTE () | MRPC () | MRPC () | SST2 () | SST2() | Average | |

|---|---|---|---|---|---|---|---|---|---|

| Finetuning | 0.6131 | 0.7798 | 0.8873 | 0.9427 | 0.8057 | ||||

| Adapter | 0.5552 | 0.5776 | 0.6814 | 0.9472 | 0.6904 | ||||

| WARP [33] | 0.5282 | 0.5911 | 0.6282 | 0.6426 | 0.8039 | 0.8186 | 0.9507 | 0.9587 | 0.7403 |

| IDPG [76] | 0.5556 | 0.5646 | 0.6282 | 0.6534 | 0.7941 | 0.8039 | 0.9587 | 0.9587 | 0.7396 |

| InfoPrompt | 0.5631 | 0.6018 | 0.6751 | 0.6968 | 0.8039 | 0.8137 | 0.9576 | 0.9599 | 0.7590 |

| 0.5699 | 0.5853 | 0.6751 | 0.6787 | 0.7941 | 0.8137 | 0.9495 | 0.9587 | 0.7531 | |

| 0.5546 | 0.5579 | 0.6065 | 0.6318 | 0.7892 | 0.7966 | 0.9472 | 0.9610 | 0.7306 | |

| 0.5032 | 0.5732 | 0.6173 | 0.6029 | 0.7917 | 0.7672 | 0.9495 | 0.9564 | 0.7202 | |

| RE () | RE () | NER() | NER() | SemEval () | SemEval () | Average | |

| Finetuning | 0.8119 | 0.9054 | 0.8506 | 0.8560 | |||

| Adapter | 0.5073 | 0.8329 | 0.6570 | 0.6657 | |||

| WARP [33] | 0.6384 | 0.6596 | 0.8174 | 0.8607 | 0.6702 | 0.7284 | 0.7291 |

| IDPG [76] | 0.6079 | 0.6132 | 0.8360 | 0.8931 | 0.6408 | 0.6776 | 0.7114 |

| InfoPrompt | 0.6914 | 0.7616 | 0.8526 | 0.8962 | 0.7563 | 0.7917 | 0.7916 |

| 0.6914 | 0.7285 | 0.8452 | 0.8635 | 0.7471 | 0.7865 | 0.7770 | |

| 0.6967 | 0.7470 | 0.8351 | 0.8698 | 0.7449 | 0.7538 | 0.7746 | |

| 0.5364 | 0.7285 | 0.8512 | 0.8661 | 0.7490 | 0.7799 | 0.7519 | |

4.3 Baselines and Ablations

As mentioned above, our method with (11) in denoted as InfoPrompt. In the experiments, we compare our method with the following baselines:

-

•

Fine Tuning: We fine tune all the parameters from the pretrained encoder on each task. Fine Tuning is included as the upper bound for the model performance, since it is more computational expensive compared with only training the prompt parameters.

-

•

Adapter: Similar to prompt tuning, this is also a way of parameter-efficient training for pretrained language models. Specifically, instead of adding the prompt tokens in the input, we add adaptors after the last linear layer in each transformer layer.

-

•

WARP [33]: Different from our approach, the prompt tokens of WARP are not generated from the input sequence. the prompt tokens are insert into the input sequence. During training, the pretrained encoder is frozen and only the prompt tokens are trainable.

-

•

IDPG [76]: Similar to our approach, the prompt tokens are generated from the input sequence. The pretrained encoder is frozen and the prompt generator is trainable.

In evaluating the effectiveness of our proposed loss functions, we consider the following two ablations:

-

•

: We disable the head loss during training via , while keeping .

-

•

: We disable the head loss during training via , while keeping .

-

•

: We disable both the losses. The prompt parameters are trained with .

5 Experimental Results

5.1 Training with the Full dataset

Table 1 and 2 show that results of training with the full dataset for each task. We can observe that the results with our InfoPrompt is generally higher than the other parameters-efficient baselines that freeze the pretrained Roberta-Large parameters (e.g., WARP and Adapter). Fine Tuning generally have better performance than the other approaches. This is because it allows training with all the model parameters, which is at the expense of more computation cost during training. As mentioned above Fine Tuning is intended to be included as the upper bound for performance. Moreover, we can find the performance with and is lower than that of InfoPrompt, indicating shows that it is beneficial to learn task-relevant prompt tokens with the propose head loss and representation loss. Further, the performance gap between / and shows that the proposed functions are effective when added to naive prompt tuning, i.e., with only .

5.2 Training with the Few-Shot Datasets

| CoLa (64) | CoLa (256) | RTE (64) | RTE (256) | MRPC (64) | MRPC(256) | SST2 (64) | SST2(256) | Average | |

| Finetuning | 0.1746 | 0.4086 | 0.4801 | 0.6787 | 0.7107 | 0.7819 | 0.8027 | 0.8853 | 0.6153 |

| Adapter | 0.0627 | 0.2486 | 0.5487 | 0.5668 | 0.5931 | 0.625 | 0.4908 | 0.664 | 0.4750 |

| WARP [33] | 0.0749 | 0.0785 | 0.5596 | 0.5812 | 0.7083 | 0.7083 | 0.5872 | 0.7638 | 0.5077 |

| InfoPrompt | 0.1567 | 0.1750 | 0.6137 | 0.6580 | 0.7059 | 0.7377 | 0.6697 | 0.7305 | 0.5559 |

| 0.1479 | 0.1447 | 0.5776 | 0.6318 | 0.6936 | 0.7328 | 0.664 | 0.7294 | 0.5402 | |

| 0.1372 | 0.1433 | 0.5812 | 0.5957 | 0.6838 | 0.7132 | 0.5631 | 0.656 | 0.5092 | |

| 0.0919 | 0.1397 | 0.5668 | 0.5523 | 0.6985 | 0.7108 | 0.5505 | 0.6296 | 0.4925 |

| RE (64) | RE (256) | NER (64) | NER (256) | SemEval(64) | SemEval(256) | Average | |

| Finetuning | 0.1285 | 0.4013 | 0.3033 | 0.4358 | 0.2223 | 0.4829 | 0.3290 |

| Adapter | 0.1086 | 0.1815 | 0.2345 | 0.2437 | 0.1211 | 0.177 | 0.1777 |

| WARP [33] | 0.1404 | 0.2556 | 0.3082 | 0.4369 | 0.1708 | 0.3684 | 0.2801 |

| InfoPrompt | 0.2119 | 0.2993 | 0.3331 | 0.4739 | 0.2113 | 0.4034 | 0.3222 |

| 0.2026 | 0.2834 | 0.3225 | 0.4776 | 0.2153 | 0.3739 | 0.3126 | |

| 0.2013 | 0.2874 | 0.3208 | 0.4615 | 0.2072 | 0.3629 | 0.3069 | |

| 0.1974 | 0.2728 | 0.3142 | 0.4662 | 0.2278 | 0.3276 | 0.3010 |

The results for training with few-shot datasets are listed in Table 3 and 4. Compared with training with the full dataset (Table 1 and 2), we can find that the performance gap between our propose InfoPrompt and the baselines is generally larger in the few-shot setting. Unlike the full datasets, the few-shot datasets contains much less information regarding the task to be learnt. As the result, the prompts learnt with solely the task loss (e.g., with WARP or ) may easily overfit to the task-irrelvant information given few-shot datasets. In such a scenario, it would be important to explicitly encourage the learnt prompt to be task-relevant, i.e., via our proposed loss functions based on mutual information maximization. This explains why InfoPrompt yields larger performance gain when trained with few-shot datasets. Similar to training with the full datasets, the performance gains of InfoPrompt compared with InfoPrompt () and InfoPrompt () show the effectuveness of our proposed loss functions in the few-shot scenario.

6 Analysis

6.1 Loss Landscapes

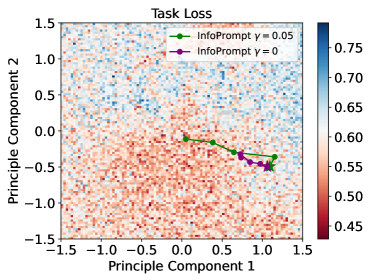

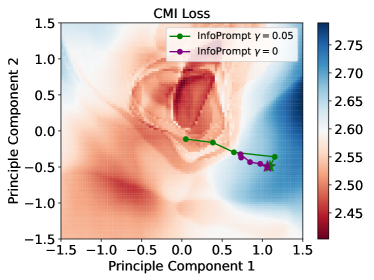

To provide a more comprehensive understanding on the effectiveness of our proposed loss functions, we plot the landscapes of the loss functions in the parameter space of the prompt tokens. The landscapes illustrates how the values of loss functions varies with the input prompt tokens. Since the prompt tokens are high-dimensional vertors, i.e., each token has the dimension of 1024 for RoBerta-Large, we visualize their associated loss values via projecting the prompt tokens into a 2D subspace. Specifically, we follow previous work of token embedding analysis [10] that project the prompt tokens into the top-2 principled component computed from the pretrained token embeddings of Roberta-Large. We only insert one prompt token into the input sequence during visualization.

Taking the task of MRPC as an example, we plot the 2D landscapes of the task loss and the representation loss in Figure 2(a) and 2(b), respectively. All the two figures are ploted with the same scale, i.e., with the same values of the prompt token. The axis values are the offset from the mean of the pretrained Roberta-Large token embeddings. The loss values shown in the figures are the average of 20 random samples from MRPC. In Figure 2(a), we can find that there are a lot of local minimum in the landscapes of the task loss. This is consistent with the observations of the previous works [24, 65] that prompt tuning is difficult to be optimized with and sensitive to initialization, e.g., the optimization can get easily overfit to a local minimum without proper initialization. From Figure 2(b), we can observe that the values of our proposed info loss is much smoother compared with the task loss in Figure 2(a). With smoother landscapes, the optimization with our proposed loss functions can be more stable (also shown in Section 6.2), i.e.. less likely being trapped in a local minimum and also guaranteed to converge by our theoretical results (see Theorem 3.1). Additionally, we plot the trajectory of the first 500 steps during training for InfoPrompt () (green) and (purple) in Figure 2(a) and 2(b). The stars in the plot indicate the initial value of the prompt before training. We find that training with can render a larger descent for both the task loss and representation loss, compared with . As analyse in Section 3.1, the language head is easier to be learnt than the prompt. As the result, parameters of the language head may contain more task-relevant information during the earlier stage of training. By maximization the mutual information between the head parameter and prompt via the proposed head loss (weighted by ), we encourage the learnt prompt to capture more task-relevant information in the initial training steps, thus resulting to have a larger descent than in the trajectories shown in Figure 2(a) and 2(b). Note that our propose two loss functions are unsupervised and do not required additional labels.

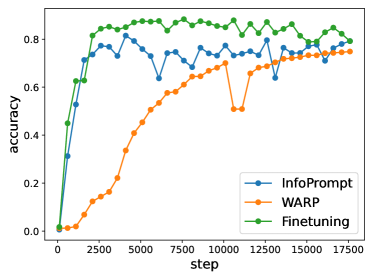

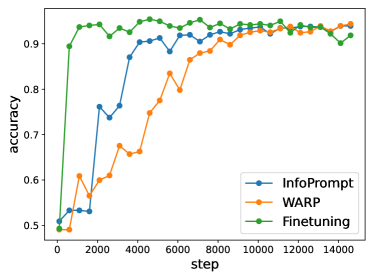

6.2 Learning Curve

We plot the training curve for the task of NER and SST-2 in Figure 3(a) and 3(b), respectively. Unlike WARP [33] and Fine Tuning that train with solely the task loss , our InfoPrompt also train with the representation loss and head loss. We can observe that the training of our our InfoPrompt is more stabilized and converges faster, compared with WARP. This can be explained from the landscape plots in Section 6.1. Since the landscape of the task loss is not smooth (Figure 2(a)), the training curve of WARP may exhibit significant perturbation when the optimization overfits to a local minimum, e.g., the 10000th in Figure 3(a). Comparably, our proposed InfoPrompt can smooth the optimization landscape, thus stabilize the training and result in faster convergence, which is guaranteed by our theoretical results. We observe that Fine Tuning generally converges faster and end up with a higher accuracy than InfoPrompt. This is because Fine Tuning, which trains with all the model parameters, have much larger model capacity during training than prompt tuning (InfoPrompt and WARP). Such results for Fine Tuning is at the expense of larger computation cost, i.e., we need to calculate the gradient for all the model parameters (354M) instead of only the prompt parameters (1.3M).

7 Related Work

7.1 Soft Prompt Tuning

Soft prompt tuning becomes a new paradigm in NLP. Based on some large pretrained models (e.g., BERT [17], RoBERTa [47]), a relatively small number of trainable parameters can be added to the input, while the parameters of backbones are fixed. Many works have demonstrated the effectiveness of soft prompt tuning in a wide range of NLP downstream tasks [42, 33, 56], especially in low-resource regions [62, 43, 24]. Some recent works also found the transferable power of soft prompts across domains [72, 65, 68], across language models [65] and for zero-shot generalization [77]. To further enhance the efficiency of soft prompt parameters and enable better generalization abilities, many works consider multi-task learning [2, 23, 68, 36], or multilingual [11, 34]. Some works also try to explore the prompt with prior knowledge encoded [30, 35, 15]. While most of the initial attempts of soft prompt tuning are not context-aware, some recent works suggest that the soft prompt tokens should be conditioned on the input context. Hyperprompt [36] proposes a hyper-network structure to generate prompt tokens based on task indexes. [76] and [7] suggest some context-aware soft prompt generation methods. [46] proposes a structured soft prompt tuning method.

7.2 Information-theoretic Methods in NLP

Information-theoretic methods are widely used in many NLP tasks [38, 69, 59, 40, 53]. [69] and [38] propose information-theoretic methods for text memorization. [53] suggests an information-theoretic method for dialogue. [40] views the multimodal NMT problem in an information-theoretic point of view. For model pretraining, [75] proposes Infobert to improve the robustness of the BERT model. INFOXLM [9] proposes a cross-lingual language model based on an information-theoretic framework. For model fine-tuning, [51] proposes an information bottleneck model method for low-resource fine-tuning. [63] proposes an information-theoretic method to engineer discreet prompts.

7.3 Theoretical Attention Computation

Softmax is one of the major unit in the attention scheme of most recent NLP large language models. Computing the attention matrix faster is a practically interesting question [12, 71, 41]. Recently, a number of theoretical work have tried to study the softmax/attention unit from theoretical perspective. The softmax attention computation can be formally defined as follows: suppose we are given three matrices (the query), (the key), and (the value), the goal is to compute where the diagonal matrix is . Here denote the transpose of matrix . The work of [79, 1] consider the static setting, and the work of [8] considers the dynamic setting. [1] proposed a tight algorithm for computing and provided a lower bound result based on the strong exponential time hypothesis. The work [8] shows a tight positive result and a negative result. In [8], they provide an upper bound via lazy update techniques [13]. In [8], they also present a lower bound result which is based on the Hinted MV conjecture [5]. The work of [22] proposes two sparsification algorithm to compute attention matrix when the feature dimension the length of sentence. [27] shows how to provide a differentially private algorithm for computing attention matrix under differential privacy framework [21, 18].

8 Conclusion

We revisit the limitations of soft prompt tuning in the initialization. We also empirically discover that conventional prompt tuning methods cannot learn sufficient task-relevant information from prompt tokens. We tackle these limitations from an information-theoretic perspective and propose an information-theoretic prompt tuning method InfoPrompt. Two novel loss functions are designed to (i) discover proper prompt initialization for the downstream tasks and learn sufficient task-relevant information from prompt tokens and (ii) encourage the output representation from the pretrained language model to be more aware of the task-relevant information captured in the learnt prompt. With extensive experiments, without any prior expert knowledge, InfoPrompt can significantly accelerate the convergence of the prompt tuning and achieve more accurate and robust performances than traditional prompt tuning methods.

9 Limitation

Our proposed InfoPropmt is an approach that targets parameter-efficient training, i.e., reducing the trainable parameters via freezing the pretrained parameters and only train prompts. Though the computational cost is significant reduced compared with fine tuning, the computation for the model inference still remains the same. Future works may further consider the synergy between the task of parameter-efficient training and model compression (e.g. distillation or pruning), so that the resulting model can also benefit from reduced computation cost during inference.

Appendix

Roadmap. In Section A, we provide a number of basic notations. In Section B, we provide several basic definitions and discuss some related work about previous theoretical softmax regression results. In Section C, we provide a complete proof for our major theoretical result in this paper. We present our final result in Section D.

Appendix A Preliminaries

For any positive integer , we use to denote set . For any function , we use to denote .

Vector

For a length- vector , we use to denote a length- vector that its -th entry is .

For a length- vector , we use to represent its norm, i.e., . For a length- vector , we use to denote .

For a length- vector , we use to generate a diagonal matrix where each entry on the ()-diagonal is for every .

We use to represent a length- vector where all the coordinates are ones. Similarly, we use to represent a length- vector where all the values are zeros.

PSD

We say (positive semi-definite) if for all vector .

Matrix Related

For an by matrix , we use to denote the number of non-zero entries of , i.e.,

For a diagonal matrix , we say is a -sparse diagonal matrix, i.e., .

For any matrix , we denote the spectral norm of by , i.e.,

For a matrix , we use to denote the largest singular value of . We use to denote the smallest singular value of .

Matrix Computation

Calculus Related

Appendix B Related Work about Theoretical Attention Regression Results

In this paper, we focus on the direction of regression tasks [25, 50, 19, 48, 64]. The goal of this section will review the linear regression (Definition B.1), exponential regression (Definition B.2), rescaled softmax regression (Definition B.3), softmax regression (Definition B.2).

Definition B.1 (Linear regression).

Given a matrix and , the goal is to solve

For convenient, let us to denote .

Definition B.2 (Exponential Regression, see [25, 50]).

Suppose we are given a length vector , and an by size matrix , our goal is to optimize

Definition B.3 (Rescaled Softmax Regression, see [28]).

Suppose we are given a length vector , and an by size matrix , our goal is to optimize

Appendix C Theoretical Guarantees

In Section C.1, we provide several basic definitions. In Section C.2, we explain how to compute the gradient of function . In Section C.3, we show how to compute the gradient of function . In Section C.4, we explain how to compute the Hessian of function . In Section C.5, we compute the hessian of inner product between and . In Section C.6, we compute the Hessian of cross entropy loss function. In Section C.7, we show Hessian is positive definite. In Section C.8, we prove that Hessian is Lipschitz.

C.1 Function Definition

We define

Definition C.1.

We define as follows

-

•

Definition C.2.

We define as follows

-

•

Previous [50] studies three types of hyperbolic functions , and . We mainly focus on function.

Definition C.3 (Normalized coefficients, Definition 5.4 in [19]).

We define as follows

We define function softmax as follows

Definition C.4 (Function , Definition 5.1 in [19]).

Suppose that we’re given an matrix . Let denote a length- vector that all the coordinates are ones. We define prediction function as follows

Fact C.5.

Let be defined as Definition C.4. Then we have

-

•

Part 1.

-

•

Part 2.

-

•

Part 3.

Proof.

The proof is straightforward. For more details, we refer the readers to [19]. ∎

We define the loss

Definition C.6.

We define

Previous work [64] only considers entropy, here we consider cross entropy instead.

Definition C.7 (Cross Entropy).

We define ,

Definition C.8.

Suppose we’re given an matrix and where is a vector, we define

C.2 Gradient Computation for Function

C.3 Gradient Computation for Function

In this section, we explain how to compute the gradient of .

Lemma C.10.

If the following condition holds

-

•

Suppose that function is defined in Definition C.4.

We have

-

•

Part 1.

-

•

Part 2.

-

•

Part 3.

Proof.

Proof of Part 1.

For all index , we can compute the gradient with respect to

Then we group the coordinates, we get

Proof of Part 2. We have

where the first step follows from Part 1 and the second step follows from simple algebra.

Proof of Part 3. The proof directly follows from Part 2 and Definition of (See Definition C.7). ∎

C.4 Hessian Computation for Function

In this section, we will show how to compute the Hessian for function .

Lemma C.11.

If the following conditions hold

-

•

Let be defined as Definition C.4.

Then we have

-

•

Part 1.

-

•

Part 2.

C.5 Hessian Computation for Function

The goal of this section is to prove Lemma C.12.

Lemma C.12.

If the following conditions hold

-

•

Let be defined as Definition C.4.

Then we have

-

•

Part 1.

-

•

Part 2.

Proof.

The proof directly follows from Lemma C.11. ∎

C.6 Hessian Computation for Function

For convenient of analyzing the Hessian matrix, we will start with defining matrix .

Definition C.13.

We define as follows

Lemma C.14.

C.7 Hessian is Positive Definite

Previous work [19] doesn’t consider cross entropy into the final loss function. Here we generalize previous lemma so that cross entropy is also being considered.

Lemma C.15 (A cross entropy generalization of Lemma 6.3 in [19]).

Suppose the following conditions hold

-

•

Let , , , suppose that

-

•

-

•

Let

Then we have

-

•

Part 1. , then we have

-

•

Part 2. , then we have

C.8 Hessian is Lipschitz

Previous work [19] doesn’t consider cross entropy into the final loss function. Here we generalize previous lemma so that cross entropy is also being considered.

Lemma C.16 (A cross entropy version of Lemma 7.1 in [19]).

Suppose the following condition holds

-

•

Let and

-

•

Suppose that , and

-

•

Then we have

Proof.

Appendix D Main Theoretical Guarantees

Previous work [19] has proved the similar result without considering the cross entropy. We generalize the techniques in previous paper [19] from only considering task loss to considering both task loss and cross entropy loss ( see formal definition in Definition C.7). Our algorithm is a version of approximate Newton method, such methods have been widely used in many optimization tasks [13, 49, 6, 37, 66, 39, 20, 26, 67, 31, 57, 19]. In this work, we focus on the approximate Newton method along the line of [67, 19].

Theorem D.1 (Formal version of Theorem 3.1).

Let denote an length- vector that is satisfying,

Suppose the following conditions are holding:

-

•

, .

-

•

, , .

-

•

.

-

•

-

•

Suppose that is the final and is the failure probability.

-

•

Suppose satisfy condition .

-

•

Suppose that

Then there is a randomized algorithm (Algorithm 1) such that

-

•

it runs iterations

-

•

in each iteration, it spends time111Here denotes the exponent of matrix multiplication. Currently .

-

•

generates a vector that is satisfying

-

•

the succeed probability is

Proof.

The high level framework of our theorem is similar to previous work about exponential regression [50], softmax regression [19] and rescaled softmax regression [28]. Similarly as previous work [50, 19, 28, 64], we use the approximate newton algorithm (for example see Section 8 in [19]). So in the proof, we only focus on the difference about the Hessian positive definite lower bound and Hessian Lispchitz property.

References

- AS [23] Josh Alman and Zhao Song. Fast attention requires bounded entries. arXiv preprint arXiv:2302.13214, 2023.

- ASPH [22] Akari Asai, Mohammadreza Salehi, Matthew E. Peters, and Hannaneh Hajishirzi. Attempt: Parameter-efficient multi-task tuning via attentional mixtures of soft prompts, 2022.

- AW [21] Josh Alman and Virginia Vassilevska Williams. A refined laser method and faster matrix multiplication. In Proceedings of the 2021 ACM-SIAM Symposium on Discrete Algorithms (SODA), pages 522–539. SIAM, 2021.

- BCDG [09] Luisa Bentivogli, Peter Clark, Ido Dagan, and Danilo Giampiccolo. The fifth pascal recognizing textual entailment challenge. In TAC, 2009.

- BNS [19] Jan van den Brand, Danupon Nanongkai, and Thatchaphol Saranurak. Dynamic matrix inverse: Improved algorithms and matching conditional lower bounds. In 2019 IEEE 60th Annual Symposium on Foundations of Computer Science (FOCS), pages 456–480. IEEE, 2019.

- Bra [20] Jan van den Brand. A deterministic linear program solver in current matrix multiplication time. In Proceedings of the Fourteenth Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), pages 259–278. SIAM, 2020.

- BSH [22] Rishabh Bhardwaj, Amrita Saha, and Steven CH Hoi. Vector-quantized input-contextualized soft prompts for natural language understanding. arXiv preprint arXiv:2205.11024, 2022.

- BSZ [23] Jan van den Brand, Zhao Song, and Tianyi Zhou. Algorithm and hardness for dynamic attention maintenance in large language models. arXiv preprint arXiv:2304.02207, 2023.

- CDW+ [21] Zewen Chi, Li Dong, Furu Wei, Nan Yang, Saksham Singhal, Wenhui Wang, Xia Song, Xian-Ling Mao, He-Yan Huang, and Ming Zhou. Infoxlm: An information-theoretic framework for cross-lingual language model pre-training. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 3576–3588, 2021.

- CHBC [20] Xingyu Cai, Jiaji Huang, Yuchen Bian, and Kenneth Church. Isotropy in the contextual embedding space: Clusters and manifolds. In International Conference on Learning Representations, 2020.

- CHH [22] Yuxuan Chen, David Harbecke, and Leonhard Hennig. Multilingual relation classification via efficient and effective prompting. arXiv preprint arXiv:2210.13838, 2022.

- CKLM [19] Kevin Clark, Urvashi Khandelwal, Omer Levy, and Christopher D Manning. What does bert look at? an analysis of bert’s attention. In Proceedings of the 2019 ACL Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP, pages 276–286, 2019.

- CLS [19] Michael B Cohen, Yin Tat Lee, and Zhao Song. Solving linear programs in the current matrix multiplication time. In STOC, 2019.

- Cov [99] Thomas M Cover. Elements of information theory. John Wiley & Sons, 1999.

- CZX+ [22] Xiang Chen, Ningyu Zhang, Xin Xie, Shumin Deng, Yunzhi Yao, Chuanqi Tan, Fei Huang, Luo Si, and Huajun Chen. Knowprompt: Knowledge-aware prompt-tuning with synergistic optimization for relation extraction. In Proceedings of the ACM Web Conference 2022, pages 2778–2788, 2022.

- DB [05] Bill Dolan and Chris Brockett. Automatically constructing a corpus of sentential paraphrases. In Third International Workshop on Paraphrasing (IWP2005), 2005.

- DCLT [19] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics.

- DKM+ [06] Cynthia Dwork, Krishnaram Kenthapadi, Frank McSherry, Ilya Mironov, and Moni Naor. Our data, ourselves: Privacy via distributed noise generation. In Annual International Conference on the Theory and Applications of Cryptographic Techniques, pages 486–503. Springer, 2006.

- DLS [23] Yichuan Deng, Zhihang Li, and Zhao Song. Attention scheme inspired softmax regression. arXiv preprint arXiv:2304.10411, 2023.

- DLY [21] Sally Dong, Yin Tat Lee, and Guanghao Ye. A nearly-linear time algorithm for linear programs with small treewidth: A multiscale representation of robust central path. In Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing, pages 1784–1797, 2021.

- DMNS [06] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of Cryptography: Third Theory of Cryptography Conference, TCC 2006, New York, NY, USA, March 4-7, 2006. Proceedings 3, pages 265–284. Springer, 2006.

- DMS [23] Yichuan Deng, Sridhar Mahadevan, and Zhao Song. Randomized and deterministic attention sparsification algorithms for over-parameterized feature dimension. arxiv preprint: arxiv 2304.03426, 2023.

- DWL+ [22] Kun Ding, Ying Wang, Pengzhang Liu, Qiang Yu, Haojian Zhang, Shiming Xiang, and Chunhong Pan. Prompt tuning with soft context sharing for vision-language models. arXiv preprint arXiv:2208.13474, 2022.

- GHLH [22] Yuxian Gu, Xu Han, Zhiyuan Liu, and Minlie Huang. Ppt: Pre-trained prompt tuning for few-shot learning. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 8410–8423, 2022.

- GMS [23] Yeqi Gao, Sridhar Mahadevan, and Zhao Song. An over-parameterized exponential regression. arXiv preprint arXiv:2303.16504, 2023.

- GS [22] Yuzhou Gu and Zhao Song. A faster small treewidth sdp solver. arXiv preprint arXiv:2211.06033, 2022.

- [27] Yeqi Gao, Zhao Song, and Xin Yang. Differentially private attention computation. arXiv preprint arXiv:2305.04701, 2023.

- [28] Yeqi Gao, Zhao Song, and Junze Yin. An iterative algorithm for rescaled hyperbolic functions regression. arXiv preprint arXiv:2305.00660, 2023.

- HCD+ [16] Rein Houthooft, Xi Chen, Yan Duan, John Schulman, Filip De Turck, and Pieter Abbeel. Vime: Variational information maximizing exploration. Advances in neural information processing systems, 29, 2016.

- HDW+ [21] Shengding Hu, Ning Ding, Huadong Wang, Zhiyuan Liu, Juanzi Li, and Maosong Sun. Knowledgeable prompt-tuning: Incorporating knowledge into prompt verbalizer for text classification. arXiv preprint arXiv:2108.02035, 2021.

- HJS+ [22] Baihe Huang, Shunhua Jiang, Zhao Song, Runzhou Tao, and Ruizhe Zhang. Solving sdp faster: A robust ipm framework and efficient implementation. In 2022 IEEE 63rd Annual Symposium on Foundations of Computer Science (FOCS), pages 233–244. IEEE, 2022.

- HKK+ [19] Iris Hendrickx, Su Nam Kim, Zornitsa Kozareva, Preslav Nakov, Diarmuid O Séaghdha, Sebastian Padó, Marco Pennacchiotti, Lorenza Romano, and Stan Szpakowicz. Semeval-2010 task 8: Multi-way classification of semantic relations between pairs of nominals. arXiv preprint arXiv:1911.10422, 2019.

- HKM [21] Karen Hambardzumyan, Hrant Khachatrian, and Jonathan May. Warp: Word-level adversarial reprogramming. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 4921–4933, 2021.

- HMZ+ [22] Lianzhe Huang, Shuming Ma, Dongdong Zhang, Furu Wei, and Houfeng Wang. Zero-shot cross-lingual transfer of prompt-based tuning with a unified multilingual prompt. arXiv preprint arXiv:2202.11451, 2022.

- HWSW [22] Keqing He, Jingang Wang, Chaobo Sun, and Wei Wu. Unified knowledge prompt pre-training for customer service dialogues. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, pages 4009–4013, 2022.

- HZT+ [22] Yun He, Steven Zheng, Yi Tay, Jai Gupta, Yu Du, Vamsi Aribandi, Zhe Zhao, YaGuang Li, Zhao Chen, Donald Metzler, et al. Hyperprompt: Prompt-based task-conditioning of transformers. In International Conference on Machine Learning, pages 8678–8690. PMLR, 2022.

- JKL+ [20] Haotian Jiang, Tarun Kathuria, Yin Tat Lee, Swati Padmanabhan, and Zhao Song. A faster interior point method for semidefinite programming. In 2020 IEEE 61st annual symposium on foundations of computer science (FOCS), pages 910–918. IEEE, 2020.

- JLK+ [21] Jiaxin Ju, Ming Liu, Huan Yee Koh, Yuan Jin, Lan Du, and Shirui Pan. Leveraging information bottleneck for scientific document summarization. In Findings of the Association for Computational Linguistics: EMNLP 2021, pages 4091–4098, 2021.

- JSWZ [21] Shunhua Jiang, Zhao Song, Omri Weinstein, and Hengjie Zhang. Faster dynamic matrix inverse for faster lps. In STOC. arXiv preprint arXiv:2004.07470, 2021.

- JZZ+ [22] Baijun Ji, Tong Zhang, Yicheng Zou, Bojie Hu, and Si Shen. Increasing visual awareness in multimodal neural machine translation from an information theoretic perspective. arXiv preprint arXiv:2210.08478, 2022.

- KKL [20] Nikita Kitaev, Łukasz Kaiser, and Anselm Levskaya. Reformer: The efficient transformer. arXiv preprint arXiv:2001.04451, 2020.

- LARC [21] Brian Lester, Rami Al-Rfou, and Noah Constant. The power of scale for parameter-efficient prompt tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 3045–3059, 2021.

- LBL+ [22] Xiaochen Liu, Yu Bai, Jiawei Li, Yinan Hu, and Yang Gao. Psp: Pre-trained soft prompts for few-shot abstractive summarization. arXiv preprint arXiv:2204.04413, 2022.

- LG [14] François Le Gall. Powers of tensors and fast matrix multiplication. In Proceedings of the 39th international symposium on symbolic and algebraic computation, pages 296–303, 2014.

- LL [21] Xiang Lisa Li and Percy Liang. Prefix-tuning: Optimizing continuous prompts for generation. arXiv preprint arXiv:2101.00190, 2021.

- LLY [22] Chi-Liang Liu, Hung-yi Lee, and Wen-tau Yih. Structured prompt tuning. arXiv preprint arXiv:2205.12309, 2022.

- LOG+ [19] Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. Roberta: A robustly optimized bert pretraining approach. ArXiv, abs/1907.11692, 2019.

- LSX+ [23] Shuai Li, Zhao Song, Yu Xia, Tong Yu, and Tianyi Zhou. The closeness of in-context learning and weight shifting for softmax regression. arXiv preprint arXiv:2304.13276, 2023.

- LSZ [19] Yin Tat Lee, Zhao Song, and Qiuyi Zhang. Solving empirical risk minimization in the current matrix multiplication time. In COLT, 2019.

- LSZ [23] Zhihang Li, Zhao Song, and Tianyi Zhou. Solving regularized exp, cosh and sinh regression problems. arXiv preprint arXiv:2303.15725, 2023.

- MBH [21] Rabeeh Karimi Mahabadi, Yonatan Belinkov, and James Henderson. Variational information bottleneck for effective low-resource fine-tuning. arXiv preprint arXiv:2106.05469, 2021.

- MC [18] Zhuang Ma and Michael Collins. Noise contrastive estimation and negative sampling for conditional models: Consistency and statistical efficiency. arXiv preprint arXiv:1809.01812, 2018.

- MCC+ [19] Kory W Mathewson, Pablo Samuel Castro, Colin Cherry, George Foster, and Marc G Bellemare. Shaping the narrative arc: An information-theoretic approach to collaborative dialogue. arXiv preprint arXiv:1901.11528, 2019.

- NCG [16] Thien Huu Nguyen, Kyunghyun Cho, and Ralph Grishman. Joint event extraction via recurrent neural networks. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 300–309, 2016.

- PH [21] Vishakh Padmakumar and He He. Unsupervised extractive summarization using pointwise mutual information. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pages 2505–2512, 2021.

- QE [21] Guanghui Qin and Jason Eisner. Learning how to ask: Querying lms with mixtures of soft prompts. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 5203–5212, 2021.

- QSZZ [23] Lianke Qin, Zhao Song, Lichen Zhang, and Danyang Zhuo. An online and unified algorithm for projection matrix vector multiplication with application to empirical risk minimization. In AISTATS, 2023.

- QWS+ [21] Yujia Qin, Xiaozhi Wang, YuSheng Su, Yankai Lin, Ning Ding, Zhiyuan Liu, Juanzi Li, Lei Hou, Peng Li, Maosong Sun, and Jie Zhou. Exploring low-dimensional intrinsic task subspace via prompt tuning. CoRR, abs/2110.07867, 2021.

- SDJS [22] Victor Steinborn, Philipp Dufter, Haris Jabbar, and Hinrich Schütze. An information-theoretic approach and dataset for probing gender stereotypes in multilingual masked language models. In Findings of the Association for Computational Linguistics: NAACL 2022, pages 921–932, 2022.

- Sha [48] Claude Elwood Shannon. A mathematical theory of communication. The Bell system technical journal, 27(3):379–423, 1948.

- SPW+ [13] Richard Socher, Alex Perelygin, Jean Wu, Jason Chuang, Christopher D Manning, Andrew Y Ng, and Christopher Potts. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 conference on empirical methods in natural language processing, pages 1631–1642, 2013.

- SRdV [22] Nathan Schucher, Siva Reddy, and Harm de Vries. The power of prompt tuning for low-resource semantic parsing. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 148–156, 2022.

- SRR+ [22] Taylor Sorensen, Joshua Robinson, Christopher Rytting, Alexander Shaw, Kyle Rogers, Alexia Delorey, Mahmoud Khalil, Nancy Fulda, and David Wingate. An information-theoretic approach to prompt engineering without ground truth labels. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 819–862, 2022.

- SSZ [23] Ritwik Sinha, Zhao Song, and Tianyi Zhou. A mathematical abstraction for balancing the trade-off between creativity and reality in large language models. arXiv preprint, 2023.

- SWQ+ [22] Yusheng Su, Xiaozhi Wang, Yujia Qin, Chi-Min Chan, Yankai Lin, Huadong Wang, Kaiyue Wen, Zhiyuan Liu, Peng Li, Juanzi Li, et al. On transferability of prompt tuning for natural language processing. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 3949–3969, 2022.

- SY [21] Zhao Song and Zheng Yu. Oblivious sketching-based central path method for solving linear programming problems. In 38th International Conference on Machine Learning (ICML), 2021.

- SYYZ [22] Zhao Song, Xin Yang, Yuanyuan Yang, and Tianyi Zhou. Faster algorithm for structured john ellipsoid computation. arXiv preprint arXiv:2211.14407, 2022.

- VLC+ [22] Tu Vu, Brian Lester, Noah Constant, Rami Al-Rfou, and Daniel Cer. Spot: Better frozen model adaptation through soft prompt transfer. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 5039–5059, 2022.

- WHBC [19] Peter West, Ari Holtzman, Jan Buys, and Yejin Choi. Bottlesum: Unsupervised and self-supervised sentence summarization using the information bottleneck principle, 2019.

- Wil [12] Virginia Vassilevska Williams. Multiplying matrices faster than coppersmith-winograd. In Proceedings of the forty-fourth annual ACM symposium on Theory of computing, pages 887–898, 2012.

- WLK+ [20] Sinong Wang, Belinda Z Li, Madian Khabsa, Han Fang, and Hao Ma. Linformer: Self-attention with linear complexity. arXiv preprint arXiv:2006.04768, 2020.

- WS [22] Hui Wu and Xiaodong Shi. Adversarial soft prompt tuning for cross-domain sentiment analysis. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2438–2447, 2022.

- WSB [19] Alex Warstadt, Amanpreet Singh, and Samuel R Bowman. Neural network acceptability judgments. Transactions of the Association for Computational Linguistics, 7:625–641, 2019.

- WSM+ [18] Alex Wang, Amanpreet Singh, Julian Michael, Felix Hill, Omer Levy, and Samuel R Bowman. Glue: A multi-task benchmark and analysis platform for natural language understanding. arXiv preprint arXiv:1804.07461, 2018.

- WWC+ [20] Boxin Wang, Shuohang Wang, Yu Cheng, Zhe Gan, Ruoxi Jia, Bo Li, and Jingjing Liu. Infobert: Improving robustness of language models from an information theoretic perspective. arXiv preprint arXiv:2010.02329, 2020.

- WWG+ [22] Zhuofeng Wu, Sinong Wang, Jiatao Gu, Rui Hou, Yuxiao Dong, VG Vydiswaran, and Hao Ma. Idpg: An instance-dependent prompt generation method. arXiv preprint arXiv:2204.04497, 2022.

- YJK+ [22] Seonghyeon Ye, Joel Jang, Doyoung Kim, Yongrae Jo, and Minjoon Seo. Retrieval of soft prompt enhances zero-shot task generalization. arXiv preprint arXiv:2210.03029, 2022.

- YM [16] Bishan Yang and Tom Mitchell. Joint extraction of events and entities within a document context. arXiv preprint arXiv:1609.03632, 2016.

- ZHDK [23] Amir Zandieh, Insu Han, Majid Daliri, and Amin Karbasi. Kdeformer: Accelerating transformers via kernel density estimation. arXiv preprint arXiv:2302.02451, 2023.

- ZLM+ [22] Songming Zhang, Yijin Liu, Fandong Meng, Yufeng Chen, Jinan Xu, Jian Liu, and Jie Zhou. Conditional bilingual mutual information based adaptive training for neural machine translation. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2377–2389, 2022.