Infinitely Wide Tensor Networks as Gaussian Process

Abstract

Gaussian Process is a non-parametric prior which can be understood as a distribution on the function space intuitively. It is known that by introducing appropriate prior to the weights of the neural networks, Gaussian Process can be obtained by taking the infinite-width limit of the Bayesian neural networks from a Bayesian perspective. In this paper, we explore the infinitely wide Tensor Networks and show the equivalence of the infinitely wide Tensor Networks and the Gaussian Process. We study the pure Tensor Network and another two extended Tensor Network structures: Neural Kernel Tensor Network and Tensor Network hidden layer Neural Network and prove that each one will converge to the Gaussian Process as the width of each model goes to infinity. (We note here that Gaussian Process can also be obtained by taking the infinite limit of at least one of the bond dimensions in the product of the chain of tensor nodes, and the proofs can be done with the same ideas in the proofs of the infinite-width cases.) We calculate the mean function (mean vector) and the covariance function (covariance matrix) of the finite dimensional distribution of the induced Gaussian Process by the infinite-width tensor network with a general set-up. We study the properties of the covariance function and derive the approximation of the covariance function when the integral in the expectation operator is intractable. In the numerical experiments, we implement the Gaussian Process corresponding to the infinite limit tensor networks and plot the sample paths of these models. We study the hyperparameters and plot the sample path families in the induced Gaussian Process by varying the standard deviations of the prior distributions. As expected, the parameters in the prior distribution namely the hyper-parameters in the induced Gaussian Process controls the characteristic lengthscales of the Gaussian Process.

1 Introduction

Gaussian Process (GP) as the infinite neural network function prior attracts remarkable attention recently ever since the discovery of the equivalence between GP and the infinite-width one hidden layer Neural Network (Neal, 1995). The idea of setting the number of neurons in the hidden layer of neural network to the infinite limit is motivated by answering the following question: how our knowledge (background information) can be used to suggest the prior distribution? It turns out that as the number of neurons in the hidden layer goes to infinity, the response of the infinite-width hidden layer neural network with weights prior converges to the GP. The interesting point of this limit is that we can obtain a prior distribution on the function space by the limiting process, namely the distribution of the response function by introducing a prior distribution on the weights. More concretely, by achieving the infinite-width limit of the neural network, the response function is sampled from the function space with density function rather than parameterized by a fixed function form with the parameters to be learned from the data set.

In some sense, the GP is more flexible which has stronger representation power than the neural networks. So it is suggested to work on the non-parametric Gaussian Process model which is parameterized arbitrarily before the data arrive instead of the neural network with fixed parameterized function. D. MacKay has a nice review discussing the neural networks and Gaussian Process, see (MacKay, 1998). In the work by Williams (Williams, 1997), the GPs induced by the limit of the neural network with specific neurons, namely the sigmoid and gaussian neurons, are constructed. The following work studied the infinite limit of the neural network with more general structures: the general shallow infinite-width neural network, the convolutional neural network and other structures (Roux and Bengio, 2007; Novak et al., 2018; Garriga-Alonso et al., 2018; Yang, 2019). In (Hazan and Jaakkola, 2015; Lee et al., 2017; Matthews et al., 2018), the equivalence between the infinite-width deep neural network and the GP is proved and the performance of the infinite-width deep neural network induced GP is compared with the normal model. Recently, the connection between kernel machine and sufficiently wide neural network is studied in a series work, see (Cho and Saul, 2009; Daniely et al., 2016; Daniely, 2017; Jacot et al., 2018).

2 Preliminaries: Gaussian Process by Neural Networks

2.1 Basis of Gaussian Process

As is well known, Gaussian Process can be understood as the generalization of the multi-variate Gaussian distribution on the vector space to infinite dimensional function space which is defined by the mean function and the covariance function . Here represents the mean of the random variable with index , and the covariance represents the covariance between two random variable and with index and . In the application case with finite size training data set, the finite dimensional distribution (f.d.d.s.) of the Gaussian Process is used to model the data set, and then the mean function degenerate to the finite dimensional vector and the covariance function degenerate to the covariance matrix which defines the f.d.d.s. of the GP, namely the multi-variates Gaussian distribution .

The nice point of the GP is that the Bayesian updating of the GP prior is analytically tractable and simple. For a GP prior as follows,

| (1) |

We can get the joint distribution of the training data and the easily as

| (2) |

The predictive posterior distribution can be obtained analytically as

| (3) |

It is easy to extend above analysis to the noisy label model, namely introducing noise to the label .

For a non-parametric model, it can adapt itself to simulate the data after the data comes but we can still use the Maximum Likelihood Estimator (M.L.E.) or the Maximum A Posterior (M.A.P.) to optimize the hyper-parameters. We can get the nice marginal likelihood as follows,

| (4) |

where .

The training data only get into first term of above formula which evaluates how good our model fits the given training data, however the second term only depends on the covariance matrix which is a model complexity penalty term. We can undertand the competition between the data fitting and the model capacity by considering the length-scale. The model capacity will increase as the length-scale decrease, namely the model complexity penalty will increase although the data-fitting will get better. From the computation perspective, we know to get the optimal point of the marginal likelihood, we need to compute the inverse of the covariance matrix which is of order , where is the training sample size. For application in the big data set, the inverse matrix computation will be highly time-consuming so we can consider using matrix approximation method such as outer-product approximation. See (Williams and Rasmussen, 2006) for a good review on GP application in Machine Learning.

For an infinite-width neural network with response function as

| (5) |

where and represent the weights and the bias in the ’th layer and represents the activation function. Since all parameters in the neural network are independent and identically distributed (i.i.d.), by the Central Limit Theorem (C.L.T.), we know as ,

| (6) |

where belongs to the Normal distribution. Since is normal and independent with , we know is normal random variable. We will get a sequence of random variables by evaluating the infinite neural network on the data set. For the neural network with several special non-linear activation functions, analytical expressions of the Gaussian Process are obtained (Williams, 1997).

Above discussion is an intuition explaining of the equivalence between GP and infinite-width neural networks, here we will propose our first theorem on the equivalence between GP and neural networks and provide a strict proof in the Appendix A.

Theorem 1.

Let the response of the one hidden layer neural network with neurons as

| (7) |

where

As goes to infinity, converge to a Gaussian Process, with , and as the mean and covariance function.

3 Infinite Limits of Tensor Networks

3.1 Introduction of Tensor Network

For a nodes Tensor Network, actually Matrix Product States (M.P.S.) (Biamonte and Bergholm, 2017; Orús, 2014; Cichocki, 2014), the scalar response is as follows,

| (8) |

where

Here represents the function which maps each component of the input data into a higher dimensional feature space. represents the rank tensor node, where is the physical index which contracts with the index in the kernel . is the bond index by which we can tune the representation power of the tensor network.

M.P.S. is introduced to approximate a quantum state efficiently in quantum physics (Orús, 2019; Chabuda et al., 2020; Schröder et al., 2019; McMahon et al., 2020). To fully describe a -party quantum state , we need basis which grows exponentially with respecting to . By expanding the -party quantum state with a chain of product of matrices, we can approximate the original quantum state with less parameters. For the M.P.S., the number of the basis grows linearly as increase.

| (9) |

Here we write a general n-party state as a matrix product chain with parameters.

Tensor Networks as a powerful machine has been widely studied to construct statistical learning model in Machine Learning community recently and also great achievements have been obtained in different tasks such as supervised pattern recognition (classification and regression), natural language processing and density estimation (unsupervised generative model) (Stoudenmire and Schwab, 2016; Han et al., 2018; Pestun et al., 2017; Novikov et al., 2018). Since the special structure of tensor network, the model easily blows out or decays to dead nodes during training process, efficient and robust initialization strategy was proposed in (Guo and Draper, 2021). It is known that the correlation function of tensor networks decays exponentially (Evenbly and Vidal, 2011b, a), so it is discussed to extend the tensor network to more complicated structures such as multi-scale tree structure to reduce the correlation decay problem in machine learning application (Stoudenmire, 2018). More extension work of tensor network can be found in (Cheng et al., 2020; Li and Zhang, 2018; Cheng et al., 2020; Liu et al., 2018; Glasser et al., 2018).

3.2 Infinite-Width Pure Tensor Networks

3.2.1 Theory

Since the special structure of the MPS which is the contraction of a chain of tensor nodes, we can obtain a ’trivial’ Gaussian Process pure MPS without activation. So our first theorem on the infinite-width MPS equivalent GP is as follows,

Theorem 2.

For an infinite-width MPS with independent random tensor nodes (we note here i.i.d. is not necessary), namely

| (10) |

with at lease one of the bond index whose dimension , as the number of the tensor nodes goes to infinity, the response will converge to the Gaussian Process,

| (11) |

where is the response of the infinite-width MPS, is the mean function and is the covariance function.

Note: the GP limit can also be obtained by taking the infinite limit of at lease one of bond index , where if the width of the tensor network is fixed to be finite.

A proof of Theorem 2 is provided in Appendix B.1 Proof of Theorem 2. Without hidden layer and activation, the response of MPS is linear with respect to the tensor weights which means the sample path of the equivalent GP is linear function. We can write down the joint distribution of the sequence of random variables, namely the response of the MPS evaluating at different training points .

| (12) |

where , is the coefficient of the linear transformation between and , namely and is the normal distribution. It is easy to verify that each random variable is normal and then the sequence is GP.

Formally we can write down the joint distribution of and as

where

Since any two random variables and in the sequence are related by a linear transformation, we know the correlation of these two random variables is or . Using the condition probability rule, we have

where

So in the pure MPS case, the GP is ’trivial’ since the uncertainty band of given is , and then the conditional distribution is a generalized function which is defined as the limit of a sequence of normal distributions showed above.

We can get the sample path of the GP as follows,

where and are two responses of the MPS evaluating at data points and .

3.2.2 Discussion on Equivalent GP induced by Specific Kernel

We can get the mean function and the covariance function of the equivalent GP of pure tensor network as follows,

| (13) | |||

| (14) |

where

Here we consider a specific normal prior and kernel as follows,

we get the mean function and the covariance function as

To avoid the power explosion problem, in application case we need to set that

namely,

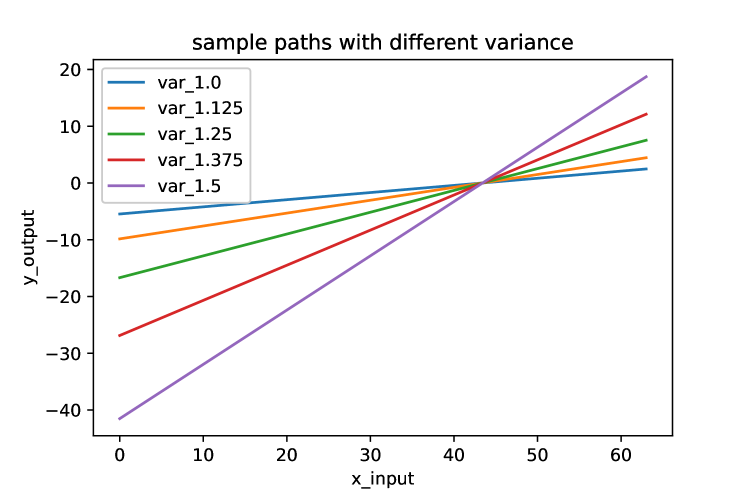

In the infinite limit, we should set to be . Actually this means in the case when the tensor network is very wide, we need to fine tune the standard deviation of the prior distribution to keep the network in stable range which is discussed in (Guo and Draper, 2021). In Fig. 1, we show the sample path of the pure MPS and also plot the sample paths sampled from the MPS with different standard deviation .

3.3 Hybrid Tensor Neural Networks

For the infinite-width pure MPS, no activation functions and no latent variables are introduced and then the response function is linear respecting to the weights in each tensor node. The representation power of the pure tensor network is limited since the pure MPS can only represent the linear function. To increase the representation capacity of MPS, we can introduce another layer of neural network with activation functions. Here we propose two methods to combine the MPS with the neural network:

-

•

Neural network kernel MPS;

-

•

MPS hidden layer neural network.

For the neural network kernel MPS, we use one layer neural network as the kernel function and ’non-linearity’ is introduced by the hidden layer and the activation function in neural network. For the MPS hidden layer neural network, the response of tensor network is fed into the neural network by which the linearity is injected into the model.

In the following sections, we will discuss these two hybrid tensor neural network and the theorems on their Gaussian Process equivalence properties.

3.3.1 Infinite-width Neural kernel Tensor Networks

The set-up of neural network kernel MPS is as follows,

| (15) | |||

| (16) |

where

where is the weights of the neural kernel, is the output of the hidden neural network. After we get the response of the neural network, we reshape the output of our neural network to tensor and feed it into the MPS.

The following theorem states the equivalence between the infinite-width neural kernel tensor network and GP.

Theorem 3.

For a neural kernel tensor network with independent (we note here that i.i.d. is not necessary) tensor nodes whose distribution are

and also with neural weights whose components are independent following the distribution as

with at lease one of the bond index whose dimension . As the number of the tensor nodes and also the number of the nodes of the hidden layer and the output layer of the neural network go to infinity, the response of the neural kernel tensor network will converge to a GP,

where is the response of the infinite-width MPS, is the mean function and is the covariance function.

Note: the GP limit can also be obtained by taking the infinite limit of at lease one of bond index , where if the width of the tensor network is fixed to be finite.

A proof of above Theorem 3 is given in Appendix B.2 Proof of Theorem 3. The interesting point of above theorem is that we just need to assume that the tensor nodes in tensor network to be independent with each other and also all the components of neural weights to be independent, then the respond of the model will converge to GP without assuming any other condition such as identical distribution requirements in the infinite-width neural network case. Roughly, the reason why the identical distribution requirement is not needed in our case is the factorization property of the tensor network which decompose a high rank tensor into a series of production of low rank tensor nodes. Thanks for the bond index in the tensor network, the sum of the infinite i.i.d. random variables is obtained and by C.L.T, we will get a sequence of normal random variables.

The mean function and covariance function of the neural kernel tensor network is as follows,

3.4 Infinite-Width MPS hidden layer Neural Networks

3.4.1 Theory

In this part, we feed the output of the MPS with an activation into a fully connected neuron layer. We write down the formula of the dimensional one hidden layer MPS Neural Network as follows,

| (17) | |||

| (18) |

where is the activation function, usually it is the Sigmoid, Relu or Tanh function.

In the following part, we will state the theorem on the equivalence between the infinite-width MPS hidden layer Neural Network and the GP.

Theorem 4.

For the MPS hidden layer Neural Network whose tensor nodes are all independent with each other, and also all the components of the neural weights are all independent with each other with following distributions,

| (19) | |||

| (20) |

with at lease one of the bond index whose dimension . As the number of the tensor nodes goes to to infinity, the response will converge to a GP,

where is the response of the infinite-width MPS, is the mean function and is the covariance function.

Note: the GP limit can also be obtained by taking the infinite limit of at lease one of bond index , where if the width of the tensor network is fixed to be finite.

A proof of Theorem 4 will be given in the appendix B.3 Proof of Theorem 4. We note here as the width of the tensor network gets to infinity, each component of the respond of the tensor network will converge to a GP and they are independent with each other. The number of neurons in the neural network will get to infinity as the infinite-width of the tensor network is obtained, so C.L.T. can be used to show that the sequence of the random variables with the data point indices is a GP.

We calculate the mean function and the variance function as

| (21) | |||

| (22) |

In computation of the covariance matrix, the neural activations of different data points are coupled with each other which means the analytical result is difficult to be obtained. We can use the Monte Carlo simulation to compute the matrix element of the covariance function evaluated at different data pairs by sampling hierarchically. We can get the sample activations from neural network by sampling from the prior and then plugging the prior sample into the hybrid MPS neural network which is straightforward. If the tensor network structure is not huge, the time complexity is acceptable.

3.4.2 Approximation of Covariance matrix

For one hidden layer neural network, when the activation is chosen as the Sigmoidal or Gaussian function, analytical result of the covariance function was obtained (Williams, 1997). However, in our MPS hidden neural network case, the covariance integral is much more complicated since different component of the MPS couples with each other by the bond dimension of the tensor network. We obtain the approximation of by expanding the square terms around the mean using Taylor expansion.

where is the diagonal part of the covariance matrix of random vector , namely the variance vector every component of which is the variance of each component of the tensor nodes vector . See the Appendix C. Approximation of Covariance Function to get more details of the calculation.

4 Experiments

We implement all three tensor network induced GPs in the numerical experiments. We study the sample paths of each GP and also explore the sample paths family by varying hyperparameters, namely the standard deviations and of the tensor nodes and the neural weights prior.

In Fig. 1(a), we sample several random paths from the pure MPS GP. In Fig. 1(b), we plot the sample paths family of pure MPS GP with different standard deviation . We find that the slope of the sample path increases hugely as the standard deviation of the prior of the tensor nodes increases.

In Fig. 2, we plot the numerical experiment of the neural kernel MPS. In Fig. 2(a), we plot the sample paths sampled from the neural kernel MPS. Since the introduction of neural hidden layer and the non-linear activation, the sample paths are not linear and more complicated than the sample paths by pure MPS model. In Fig. 2(b), we plot one sample path varying as we increase the standard deviation . As expected, the variation of the sample path increases as we increase the standard deviation of the tensor nodes prior. In the dimensional data vector case, the sample path is dimensional surface. In Fig. 2(c) and 2(d), we plot the sample path of the neural kernel MPS model with two dimensional data points. Now the sample path is two dimensional surface.

In Fig. 3(a) and Fig. 3(b), we compare the sample paths from MPS hidden neural network with increased standard deviation from to . We find that as we increase the standard deviation of the prior, the sample paths become more complicated which captures greater data fitting ability. In Fig. 3(c) and Fig. 3(d), the standard deviation is increased and model complexity increases at accordingly.

5 Conclusion

We study the infinite-width limit of tensor networks, acutally the pure MPS and the extended hybrid neural tensor networks: combining the MPS layer with neural layers. We prove that the pure MPS and the hybrid tensor networks converge to GP as the width of tensor network goes to infinity. For the pure MPS, since no non-linearity is injected into the model, the equivalent GP induced by the pure MPS is trivial in which each gaussian random variable indexed by a specific data point has zero uncertainty band. However, we can extend the pure MPS to more complicated structures by introducing hidden layers or using neural kernels. So we study the infinite limit of two kinds of hybrid tensor neural networks. We proved that these two structures converge to GP as the their widths go to infinity and calculate the equivalent GP of the infinite-width tensor network. In the numerical experiment, we explore the sample path and the properties of hyper-parameters of the tensor network induced GP and plot the sample path families by varying the standard deviations of the prior. The tensor networks have rich structures (intuitively the exponentially increasing contraction ways as the number of tensor nodes increases) and powerful fitting ability, so the function space supported by tensor networks has profound structures which allows well behaved limit processes. From the application perspective, over-parameterization and huge function space supported by tensor networks also lead to training instability, poor generalization and scaling difficulties which are essential problems in applying tensor network to big data set analysis.

Acknowledgements

The authors wish to thank David Helmbold, Hongyun Wang, Qi Gong, Torsten Ehrhardt, Hai Lin and Francois Monard for their helpful discussions. Erdong Guo is grateful for the financial support by Ming-Ren Teahouse and Uncertainty Quantification LLC for this work.

References

- Biamonte and Bergholm (2017) Biamonte, J. and Bergholm, V. (2017). Tensor networks in a nutshell. arXiv preprint arXiv:1708.00006.

- Chabuda et al. (2020) Chabuda, K., Dziarmaga, J., Osborne, T. J. and Demkowicz-Dobrzański, R. (2020). Tensor-network approach for quantum metrology in many-body quantum systems. Nature Communications, 11 1–12.

- Cheng et al. (2020) Cheng, S., Wang, L. and Zhang, P. (2020). Supervised learning with projected entangled pair states. arXiv preprint arXiv:2009.09932.

- Cho and Saul (2009) Cho, Y. and Saul, L. (2009). Kernel methods for deep learning. Advances in neural information processing systems, 22 342–350.

- Cichocki (2014) Cichocki, A. (2014). Era of big data processing: A new approach via tensor networks and tensor decompositions. arXiv preprint arXiv:1403.2048.

- Daniely (2017) Daniely, A. (2017). Sgd learns the conjugate kernel class of the network. In Advances in Neural Information Processing Systems.

- Daniely et al. (2016) Daniely, A., Frostig, R. and Singer, Y. (2016). Toward deeper understanding of neural networks: The power of initialization and a dual view on expressivity. In Advances In Neural Information Processing Systems.

- Evenbly and Vidal (2011a) Evenbly, G. and Vidal, G. (2011a). Quantum criticality with the multi-scale entanglement renormalization ansatz, chapter in” strongly correlated systems, numerical methods”, edited by adolfo avella and ferdinando mancini. Springer Series in Solid-State Sciences, 176.

- Evenbly and Vidal (2011b) Evenbly, G. and Vidal, G. (2011b). Tensor network states and geometry. Journal of Statistical Physics, 145 891–918.

- Garriga-Alonso et al. (2018) Garriga-Alonso, A., Rasmussen, C. E. and Aitchison, L. (2018). Deep convolutional networks as shallow gaussian processes. arXiv preprint arXiv:1808.05587.

- Glasser et al. (2018) Glasser, I., Pancotti, N. and Cirac, J. I. (2018). Supervised learning with generalized tensor networks. arXiv preprint arXiv:1806.05964.

- Guo and Draper (2021) Guo, E. and Draper, D. (2021). The bayesian method of tensor networks. arXiv preprint arXiv:2101.00245.

- Han et al. (2018) Han, Z.-Y., Wang, J., Fan, H., Wang, L. and Zhang, P. (2018). Unsupervised generative modeling using matrix product states. Physical Review X, 8 031012.

- Hazan and Jaakkola (2015) Hazan, T. and Jaakkola, T. (2015). Steps toward deep kernel methods from infinite neural networks. arXiv preprint arXiv:1508.05133.

- Jacot et al. (2018) Jacot, A., Gabriel, F. and Hongler, C. (2018). Neural tangent kernel: Convergence and generalization in neural networks. In Advances in neural information processing systems.

- Lee et al. (2017) Lee, J., Bahri, Y., Novak, R., Schoenholz, S. S., Pennington, J. and Sohl-Dickstein, J. (2017). Deep neural networks as gaussian processes. arXiv preprint arXiv:1711.00165.

- Li and Zhang (2018) Li, Z. and Zhang, P. (2018). Shortcut matrix product states and its applications. arXiv preprint arXiv:1812.05248.

- Liu et al. (2018) Liu, D., Ran, S.-J., Wittek, P., Peng, C., Garcıa, R. B., Su, G. and Lewenstein, M. (2018). Machine learning by two-dimensional hierarchical tensor networks: A quantum information theoretic perspective on deep architectures. stat, 1050 23.

- MacKay (1998) MacKay, D. J. (1998). Introduction to gaussian processes. NATO ASI Series F Computer and Systems Sciences, 168 133–166.

- Matthews et al. (2018) Matthews, A. G. d. G., Rowland, M., Hron, J., Turner, R. E. and Ghahramani, Z. (2018). Gaussian process behaviour in wide deep neural networks. arXiv preprint arXiv:1804.11271.

- McMahon et al. (2020) McMahon, N. A., Singh, S. and Brennen, G. K. (2020). A holographic duality from lifted tensor networks. npj Quantum Information, 6 1–13.

- Neal (1995) Neal, R. M. (1995). BAYESIAN LEARNING FOR NEURAL NETWORKS. Ph.D. thesis, University of Toronto.

- Novak et al. (2018) Novak, R., Xiao, L., Lee, J., Bahri, Y., Yang, G., Hron, J., Abolafia, D. A., Pennington, J. and Sohl-Dickstein, J. (2018). Bayesian deep convolutional networks with many channels are gaussian processes. arXiv preprint arXiv:1810.05148.

- Novikov et al. (2018) Novikov, A., Trofimov, M. and Oseledets, I. (2018). Exponential machines. Bulletin of the Polish Academy of Sciences. Technical Sciences, 66.

- Orús (2014) Orús, R. (2014). A practical introduction to tensor networks: Matrix product states and projected entangled pair states. Annals of Physics, 349 117–158.

- Orús (2019) Orús, R. (2019). Tensor networks for complex quantum systems. Nature Reviews Physics, 1 538–550.

- Pestun et al. (2017) Pestun, V., Terilla, J. and Vlassopoulos, Y. (2017). Language as a matrix product state. arXiv preprint arXiv:1711.01416.

-

Roux and Bengio (2007)

Roux, N. L. and Bengio, Y. (2007).

Continuous neural networks.

In Proceedings of the Eleventh International Conference on

Artificial Intelligence and Statistics (M. Meila and X. Shen, eds.), vol. 2

of Proceedings of Machine Learning Research. PMLR, San Juan, Puerto

Rico.

http://proceedings.mlr.press/v2/leroux07a.html - Schröder et al. (2019) Schröder, F. A., Turban, D. H., Musser, A. J., Hine, N. D. and Chin, A. W. (2019). Tensor network simulation of multi-environmental open quantum dynamics via machine learning and entanglement renormalisation. Nature communications, 10 1–10.

- Stoudenmire and Schwab (2016) Stoudenmire, E. and Schwab, D. J. (2016). Supervised learning with tensor networks. In Advances in Neural Information Processing Systems.

- Stoudenmire (2018) Stoudenmire, E. M. (2018). Learning relevant features of data with multi-scale tensor networks. Quantum Science and Technology, 3 034003.

- Williams (1997) Williams, C. K. (1997). Computing with infinite networks. In Advances in neural information processing systems.

- Williams and Rasmussen (2006) Williams, C. K. and Rasmussen, C. E. (2006). Gaussian processes for machine learning, vol. 2. MIT press Cambridge, MA.

- Yang (2019) Yang, G. (2019). Scaling limits of wide neural networks with weight sharing: Gaussian process behavior, gradient independence, and neural tangent kernel derivation. arXiv preprint arXiv:1902.04760.

A. Infinite Width Neural Networks

In this appendix we prove the equivalence of the infinite width one hidden layer Neural Net and GP.

Lemma 1 For a sequence of random variable ,

If , then

Proof. Since , we have

We can write down the characteristic function of the random vector as

which is just the characteristic function of multi-variate normal distribution .

Theorem 1

Let the response of the one hidden layer neural network with neurons as

where

As goes to infinity, converge to the Gaussian Process,

where , and are the mean and covariance function.

Proof. Since all components of the neural layer weights are i.i.d., we know that all the components of the output of the first layer network are i.i.d.,

then we can easily verify that

In neural networks, the activation functions are mostly well behaved, so the mean and the variance of the output are bounded,

By the central limit theorem, we know that as goes to , the infinite sum will converge to a random variable with gaussian distribution, namely

where

Since random variable belongs to the gaussian distribution, then the sum of two gaussian variables , namely

We can set to be fixed constant during the limit process to keep well behaved. Now we have already proved that for a finite index set which is just the training set , there exists a sequence of gaussian random variables . To extend the finite sequence to the infinite index sequence such as the interval , we need to verify that the finite dimensional distributions satisfy the Kolmogorov consistency (extension) theorem. However, it is known that as long as the f.d.d.s. is a consistent distribution, then the stochastic process exists. In our neural network case, the f.d.d.s. also satisfies the two consistent conditions, then we proved that infinite width one hidden layer neural networks is equivalent to the gaussian process.

B. Infinite Width Tensor Networks

In this appendix, we will prove the theorems on the equivalence of the infinite width tensor network and GP. The main idea of the proofs are similar to the proof of Theorem 1.

B.1 Proof of Theorem 2

There are three indices in each tensor node , so at first step we can contract the kernel with the indices sequence in tensor network, and then we get

| (23) |

where

| (24) |

It is easy to verify that all the components of the are i.i.d. since all components of one specific tensor node are i.i.d. We note here that in our assumption of Theorem 2, we just assume that all tensor nodes are independent, but not necessary to be i.i.d. For two specific implementations of the bond indices, namely two different sequences of value of the indices, and , the product of each chain of the nodes are i.i.d.. We introduce a new notation for the production of the chain,

| (25) |

We know all the different possible evaluation of the indices sequence of are i.i.d. As the width of the tensor network goes to infinity, the number of all the possible implementation of the indices sequence goes to infinity. By the C.L.T, converges to a GP.

B.2 Proof of Theorem 3

Based on the work of Theorem 2 proof, to prove Theorem 3, we just need to show that all the components of the neural kernel are independent. It is obvious that all component of the output of the neural kernel are independent. By reshaping the output of the neural kernel , we get the kernel and all the components are independent. Then by setting the width of the MPS to be infinite, using C.L.T and Theorem 3, the response of the neural kernel mps will converge to GP.

B.3 Proof of Theorem 4

By taking the infinite width limit of the hidden layer MPS, the response of the MPS are all i.i.d. which follow the normal distribution by Theorem 2, then it is easy to verify that follows gaussian distribution by C.L.T. and then is normal random variable. So converge to the GP as goes to infinity.

C. Approximation of Covariance Function

In this appendix, we will explain the calculation of the approximation of the covariance function of mps hidden neural network in detail. For a general function , the expectation can be written as

| (26) | ||||

| (27) |

where

We write the second derivative matrix as

| (28) |

where is the formal square root of the the second derivative matrix . Since is real symmetric matrix, then exists and is symmetric.

| (29) | ||||

| (30) | ||||

| (31) | ||||

| (32) | ||||

| (33) | ||||

| (34) |

Here we use to represent the diagonal part of the covariance matrix , and to represent the diagonal part of the second derivative matrix of the integrated function .