Infinitely Repeated Quantum Games and Strategic Efficiency

Abstract

Repeated quantum game theory addresses long-term relations among players who choose quantum strategies. Conventional quantum game theory has widely studied single-round quantum games and, at most, finitely repeated games, but much less is known about infinitely repeated quantum games. Investigating infinitely repeated games is crucial because finitely repeated games differ little from single-round games. In this work we establish the concept of general repeated quantum games and prove the Quantum Folk Theorem, which states that by iterating a game one can find an equilibrium strategy of the game and receive a reward that is not obtained at a Nash equilibrium of the corresponding single-round quantum game. A significant difference between the repeated quantum prisoner's dilemma and the repeated classical prisoner's dilemma is that the classical Pareto optimal solution is not always an equilibrium of the repeated quantum game when entanglement is sufficiently strong: when entanglement is sufficiently strong and the reward is small, mutual cooperation cannot be an equilibrium of the repeated quantum game. In addition, we present several concrete equilibrium strategies of the repeated quantum prisoner's dilemma.

1 Introduction and Summary

In our study of repeated quantum games, we aim to explore long-term relations among players and efficient quantum strategies that may maximize their payoffs and can become equilibria of the repeated quantum game. The first work on an infinitely repeated quantum game was presented by one of the authors in 2020QuIP…19…25I , where infinitely repeated quantum games are explained in terms of quantum automata and optimal transformation, and equilibria of some strategies are provided. That work is a quantum extension of a classical prisoner's dilemma in which behavior is recognized through signals that can be monitored perfectly or imperfectly. To explore the quantum properties of games further, we give a different formulation of infinitely repeated games and investigate the long-term relation between players. In this article we give a general definition of infinitely repeated quantum games. In particular, we address an infinitely repeated quantum prisoner's dilemma and prove the folk theorem of the repeated quantum game. The main contributions of our work to quantum game theory are Theorem 3.7 and Theorem 3.8. Theorem 3.7 states that mutual cooperation cannot be an equilibrium when the players are maximally entangled and the reward for cooperating is not large enough. This distinguishes a repeated quantum game from a repeated classical game, since mutual cooperation can be an equilibrium of the classical repeated prisoner's dilemma. Theorem 3.8 is the quantum version of the Folk theorem, which assures the existence of equilibrium strategies for any degree of entanglement. Our model will also be useful for discussing complex dynamic and evolutionary processes in economics and other interdisciplinary fields from a game-theoretic perspective. In economics, the negotiation of a contract can be regarded as a repeated game if it continues for a long time, and Internet auctions are essentially repeated games.
Most recently, a quantum model of contracts, moral hazard, and incentive contracts was first formulated in Ikeda_cat2021 . It would be very interesting to consider such a model in terms of repeated games. Furthermore, considering the evolutionary nature of ecological systems will be a new challenge doi:10.1098/rspa.2021.0397 . For further motivation to consider repeated quantum games, please consult 2020QuIP…19…25I ; 2020arXiv201014098A for example.

In classical games, players try to maximize their rewards by choosing strategies based on the opponents' signals obtained by measurement. In a single-stage prisoner's dilemma, mutual cooperation is Pareto optimal but not a Nash equilibrium Nash48 ; however, when the game is repeated infinitely, such a Pareto optimal strategy profile can be an equilibrium of the repeated game. The study of infinitely repeated games aims at finding such non-trivial equilibria that can be sustained in a long-term relationship. From this viewpoint, repeated games play a fundamental role in a large part of modern economics. Hence repeated quantum games will become important as quantum systems become prevalent in society in the near future.

There are various definitions of quantum games Eisert:1998vv ; 1999PhRvL..82.1052M , and establishing their foundations is still an open problem. Indeed, many authors work on quantum games with various motivations Li2013 ; Li13 . Recent advances in quantum games are reviewed in 2018arXiv180307919S . Most conventional works on quantum games focus on single-stage quantum games, where each player plays a quantum strategy only once. In practice, however, a game is more likely to be played repeatedly, so it is more meaningful to address repeated quantum games. Repeated games are categorized into (1) finitely repeated games and (2) infinitely repeated games. For games such as the prisoner's dilemma, whose stage game has a unique Nash equilibrium, backward induction shows that the equilibrium outcome of the finitely repeated classical game is simply the single-round equilibrium played in every round. The same holds for finitely repeated quantum games: the Nash equilibrium of the finitely repeated quantum prisoner's dilemma is the same as in the single-stage case 2002PhLA..300..541I ; 2012JPhA…45h5307F .

On the other hand, in infinitely repeated (classical) games or games of unknown length, there is no fixed optimal strategy, so they are very different from single-stage games 10.2307/1911077 ; 10.2307/1911307 . It is widely known that in the infinitely repeated (classical) prisoner's dilemma, mutual cooperation can be an equilibrium. It is therefore natural to investigate similar strategic behavior of quantum players. However, much less is known about infinitely repeated quantum games. To the best of our knowledge, this is the first article that gives a formal definition of infinitely repeated quantum games and investigates the general Folk theorem of the infinitely repeated quantum prisoner's dilemma.

This work is organized as follows. In Sec. 2, we address the single-round quantum prisoner's dilemma and investigate its equilibria. We first introduce the terminology and concepts used in this work, and we present novel relations between payoff and entanglement in Sec. 2.2. In Sec. 3 we establish the concept of a generic repeated quantum game (Definition 3.1) and present some equilibrium strategies (Lemmas 3.3 and 3.5). Our main results (Theorems 3.7 and 3.8) are described in Sec. 3.3. This work is concluded in Sec. 4.

2 Single Round Quantum Games

2.1 Setup

We consider the quantum prisoner's dilemma Eisert:1998vv ; 1999PhRvL..82.1052M . Let $|C\rangle$ and $|D\rangle$ be two normalized orthogonal states that represent the "Cooperative" and "Non-cooperative" states, respectively. A general state of the game is then a complex linear combination of the basis vectors $|CC\rangle, |CD\rangle, |DC\rangle, |DD\rangle$. Each player chooses a unitary strategy, $U_A$ for Alice and $U_B$ for Bob, and the game is in the state

$$|\psi\rangle = J^{\dagger}\,(U_A\otimes U_B)\,J\,|CC\rangle \tag{1}$$

where the operator $J$ gives some entanglement. In what follows we use

| (2) |

where represents entanglement and two states become maximally entangled at . We assume a quantum strategy of a player has a representation

| (3) |

where the coefficients obey .

| | Bob: C | Bob: D |
|---|---|---|
| Alice: C | (,) | (,) |
| Alice: D | (,) | (,) |

Alice receives payoff , where is her payoff matrix

| (4) |

Without loss of generality, we can always proceed with the discussion as if necessary. Taking (3) into account, the average payoff of Alice is a function of

| (5) | ||||

Since the game is symmetric under exchange of the two players, is satisfied.

Definition 2.1.

A quantum strategy is called pure if a player chooses an arbitrary single-qubit unitary operator.

Definition 2.2.

A quantum strategy is called mixed if a player chooses one of the Pauli operators I, X, Y, Z with some probability.¹

¹ The most general definition of a single-qubit mixed strategy is given as (6) where is a Haar measure on and is non-negative and satisfies . Throughout this article, we explore mixed strategies based on Def. 2.2.

Although our mixed strategies are restricted to probabilistic mixtures of the four Pauli operators I, X, Y, Z, we show that the game is already rich enough to yield stronger results than the classical prisoner's dilemma with mixed strategies.

In the classical prisoner's dilemma with pure strategies, each player chooses one of C and D; it becomes a mixed-strategy game if the state is written as

| (7) |

where is non-negative and satisfies . Note that for a single round quantum game, a generic state is represented as

| (8) |

where is a complex number and the state is measured with probability . However, as long as one considers a single-round game, the quantum game resembles the classical mixed-strategy game, where a strategy is chosen stochastically with a given probability distribution.

Repeated quantum games make quantum games considerably more meaningful, since the transitions of quantum states differ from those of classical states. To begin with, we address the single-round quantum prisoner's dilemma and its equilibria.

2.2 Equilibria

2.2.1 Without Entanglement

The case without entanglement corresponds to the classical prisoner’s dilemma. Table 2 presents the correspondence between operators and classical actions.

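Table 2: outcomes (Alice, Bob) without entanglement; rows give Alice's operator and columns give Bob's.

| Alice \ Bob | I | X | Y | Z |
|---|---|---|---|---|
| I | (C,C) | (C,D) | (C,D) | (C,C) |
| X | (D,C) | (D,D) | (D,D) | (D,C) |
| Y | (D,C) | (D,D) | (D,D) | (D,C) |
| Z | (C,C) | (C,D) | (C,D) | (C,C) |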

Hence the strategy is simply a classical mixed strategy, and the average payoff of Alice is

| (9) | ||||

As a result, is the equilibrium.
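A minimal Python sketch of the no-entanglement case, assuming illustrative prisoner's dilemma payoffs with T > R > P > S (the numbers 5, 3, 1, 0 are hypothetical stand-ins for the entries of Table 1). By Table 2, only the total weight a player puts on X and Y (which produce D) matters, so Alice's expected payoff is affine in her defection probability and, since T > R and P > S, is maximized by defecting whatever Bob's mixture is.

```python
# Table 2: without entanglement, X and Y turn |C> into |D>, while I and Z leave it unchanged.
DEFECT_OPS = {"X", "Y"}
R, S, T, P = 3.0, 0.0, 5.0, 1.0  # hypothetical payoffs with T > R > P > S


def defect_prob(mix):
    """Probability that a Pauli mixture {operator: probability} produces the action D."""
    return sum(p for op, p in mix.items() if op in DEFECT_OPS)


def alice_payoff(mix_a, mix_b):
    """Alice's expected payoff when both players mix over I, X, Y, Z (no entanglement)."""
    da, db = defect_prob(mix_a), defect_prob(mix_b)
    return ((1 - da) * (1 - db) * R + (1 - da) * db * S
            + da * (1 - db) * T + da * db * P)


# Against a fixed mixture of Bob, moving weight onto X or Y raises Alice's payoff.
bob = {"I": 0.5, "Y": 0.5}
for alice in ({"I": 1.0}, {"I": 0.5, "X": 0.5}, {"X": 1.0}):
    print(alice, alice_payoff(alice, bob))
```

The helper names are hypothetical; the point is only that the quantum mixture collapses to a classical one when there is no entanglement.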

2.2.2 Maximally Entangled

In contrast to the case without entanglement, the strategy part of the maximally entangled case becomes

| (10) | ||||

| (11) |

Therefore the correspondence between the operators and actions becomes non-trivial as exhibited in Table 3.

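Table 3: outcomes (Alice, Bob) at maximal entanglement; rows give Alice's operator and columns give Bob's.

| Alice \ Bob | I | X | Y | Z |
|---|---|---|---|---|
| I | (C,C) | (D,C) | (C,D) | (D,D) |
| X | (C,D) | (D,D) | (C,C) | (D,C) |
| Y | (D,C) | (C,C) | (D,D) | (C,D) |
| Z | (D,D) | (C,D) | (D,C) | (C,C) |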
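The outcome tables above can be reproduced numerically. The sketch below assumes the encoding |C⟩ = |0⟩, |D⟩ = |1⟩ and an Eisert-Wilkens-Lewenstein-type entangler J(γ) = exp(−iγ Y⊗Y/2), maximally entangling at γ = π/2. This particular J is our assumption for the operator in (1), chosen because it reproduces Table 2 at γ = 0 and Table 3 at γ = π/2; the function names are hypothetical.

```python
import numpy as np

# Pauli operators; |C> = |0> and |D> = |1> (assumed computational-basis encoding).
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = {"I": I2, "X": X, "Y": Y, "Z": Z}
OUTCOMES = ["CC", "CD", "DC", "DD"]  # basis order |Alice, Bob>


def entangler(gamma):
    """Assumed entangler J = exp(-i * gamma / 2 * Y⊗Y).

    Because (Y⊗Y)^2 = 1, the exponential equals cos(gamma/2) * 1 - i sin(gamma/2) * Y⊗Y.
    """
    return np.cos(gamma / 2) * np.eye(4) - 1j * np.sin(gamma / 2) * np.kron(Y, Y)


def outcome_probs(UA, UB, gamma):
    """Probabilities of |CC>, |CD>, |DC>, |DD> for the state J^dagger (UA ⊗ UB) J |CC>."""
    J = entangler(gamma)
    psi = J.conj().T @ np.kron(UA, UB) @ J[:, 0]  # J[:, 0] is J |CC>
    return np.abs(psi) ** 2


def outcome_table(gamma):
    """Most likely outcome for every pair of Pauli strategies, keyed by (Alice, Bob)."""
    return {
        (a, b): OUTCOMES[int(np.argmax(outcome_probs(UA, UB, gamma)))]
        for a, UA in PAULIS.items()
        for b, UB in PAULIS.items()
    }


for gamma in (0.0, np.pi / 2):
    table = outcome_table(gamma)
    print(f"gamma = {gamma:.4f}")
    for a in "IXYZ":
        print(" ", a, [table[(a, b)] for b in "IXYZ"])
```

For Pauli strategies each outcome is deterministic, so the argmax simply reads off the single populated basis state; for intermediate γ the same `outcome_probs` gives the full distribution.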

Pure Quantum Strategy

We first consider the pure strategy game. The average payoff of Alice is

| (12) | ||||

This can be written as

| (13) |

where

| (14) | ||||

Note that equation (14) is a coordinate transformation on , hence there is a that makes for all . In other words, Alice can find a stronger strategy against any strategy of Bob, and vice versa. Therefore there is no equilibrium in this case; in this sense the game resembles rock-paper-scissors.
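The cyclic structure can already be seen when both players are restricted to the four Pauli operators of Table 3. In this hypothetical sketch (payoffs T, R, P, S = 5, 3, 1, 0 chosen only for illustration), every column of Table 3 contains the outcome (D, C), so Alice's best reply always earns her T, and every row contains (C, D), so Bob's best reply always earns him T. Both cannot hold at once, so no Pauli pair is a pure equilibrium and best replies cycle. This illustrates only the Pauli-restricted case, not the full statement for general single-qubit strategies.

```python
import itertools

# Table 3 (maximal entanglement): outcome (Alice, Bob) for Alice's row and Bob's column.
TABLE3 = {
    "I": {"I": "CC", "X": "DC", "Y": "CD", "Z": "DD"},
    "X": {"I": "CD", "X": "DD", "Y": "CC", "Z": "DC"},
    "Y": {"I": "DC", "X": "CC", "Y": "DD", "Z": "CD"},
    "Z": {"I": "DD", "X": "CD", "Y": "DC", "Z": "CC"},
}
OPS = "IXYZ"
R, S, T, P = 3.0, 0.0, 5.0, 1.0  # hypothetical payoffs with T > R > P > S
ALICE = {"CC": R, "CD": S, "DC": T, "DD": P}
BOB = {"CC": R, "CD": T, "DC": S, "DD": P}


def best_response_alice(b):
    """Alice's payoff-maximizing Pauli operator against Bob's operator b."""
    return max(OPS, key=lambda a: ALICE[TABLE3[a][b]])


def best_response_bob(a):
    """Bob's payoff-maximizing Pauli operator against Alice's operator a."""
    return max(OPS, key=lambda b: BOB[TABLE3[a][b]])


def pure_equilibria():
    """All Pauli pairs (a, b) in which each operator is a best response to the other."""
    return [
        (a, b)
        for a, b in itertools.product(OPS, OPS)
        if ALICE[TABLE3[a][b]] == ALICE[TABLE3[best_response_alice(b)][b]]
        and BOB[TABLE3[a][b]] == BOB[TABLE3[a][best_response_bob(a)]]
    ]


# Follow best responses starting from Bob playing I: the sequence cycles.
b = "I"
for _ in range(2):
    a = best_response_alice(b)
    b = best_response_bob(a)
    print(a, b)
print("pure equilibria among Pauli operators:", pure_equilibria())
```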

Mixed Quantum Strategy

We can find equilibria when we allow Alice and Bob to play mixed quantum strategies, that is, Alice chooses a strategy from {I, X, Y, Z} with probability . Alice can execute her strategy in such a way that

| (15) |

Then the average payoff of Alice is given by

| (25) |

We find that the above form can be written as

| (30) |

If , the average payoff of Alice does not depend on and it becomes

| (31) |

So one of the equilibria is

| (34) |
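One consequence of Table 3 can be checked directly: every row and every column contains each outcome exactly once, so a uniform mixture over I, X, Y, Z leaves the opponent indifferent among all Pauli replies, which makes (uniform, uniform) a mixed equilibrium of the Pauli-restricted game. This sketch does not reproduce (30) to (34), whose conditions are not visible here; it uses hypothetical payoffs and hypothetical helper names.

```python
import numpy as np

# Table 3 outcomes at maximal entanglement (rows: Alice, columns: Bob; order I, X, Y, Z).
TABLE3 = [["CC", "DC", "CD", "DD"],
          ["CD", "DD", "CC", "DC"],
          ["DC", "CC", "DD", "CD"],
          ["DD", "CD", "DC", "CC"]]
R, S, T, P = 3.0, 0.0, 5.0, 1.0  # hypothetical payoffs with T > R > P > S
ALICE_PAY = {"CC": R, "CD": S, "DC": T, "DD": P}
BOB_PAY = {"CC": R, "CD": T, "DC": S, "DD": P}
M_A = np.array([[ALICE_PAY[o] for o in row] for row in TABLE3])
M_B = np.array([[BOB_PAY[o] for o in row] for row in TABLE3])


def expected_payoffs(p, q):
    """(Alice, Bob) expected payoffs when Alice mixes with p and Bob with q over I, X, Y, Z."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    return float(p @ M_A @ q), float(p @ M_B @ q)


uniform = np.full(4, 0.25)
# Against a uniform opponent all four pure Pauli replies earn (R + S + T + P) / 4,
# so no unilateral deviation is profitable.
print("Alice's pure replies vs uniform Bob:", M_A @ uniform)
print("Bob's pure replies vs uniform Alice:", uniform @ M_B)
print("payoffs at (uniform, uniform):", expected_payoffs(uniform, uniform))
```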

In addition, we have another equilibrium.

Proposition 2.3.

If , then

| (39) |

is an equilibrium. The average payoff at the equilibrium is .

Proof.

Repeating the same discussion, we can show the following statement.

Proposition 2.4.

If , then

| (48) |

is an equilibrium. The average payoff at the equilibrium is .

2.2.3 In-between

Pure Quantum Strategy

We consider a general case with entanglement . Without entanglement , both are always stronger than , but is sometimes stronger than when there is some entanglement. is always stronger than . So in what follows we first assume Bob plays , which corresponds to . In this case, the payoff of Alice is

Note that the first term is always positive, the third and fourth terms are always negative since . The second term could be positive if . Therefore Alice’s best reaction to is and the corresponding payoff is

| (49) |

Similarly one can find that Bob’s best reaction to is . So the pair of and is an equilibrium since they satisfy

| (50) | ||||

More generally one can find that

| (53) |

is an equilibrium for all and the corresponding average payoff of Alice is

| (54) |

Equilibrium strategies do not exist if , which is consistent with our previous finding that the maximally entangled case has no equilibrium when only pure quantum strategies are played.

Mixed Quantum Strategy

In general the average payoff of Alice can be written as

| (60) | ||||

| (65) |

We define

| (70) |

and

| (71) | ||||

Proposition 2.5.

is an equilibrium.

Proof.

The equation (65) can be decomposed into

| (77) | ||||

| (86) |

One can show that the second term vanishes at . Therefore is satisfied for any . Since the same argument holds for Bob's average payoff, is an equilibrium. ∎

In addition, we can find another equilibrium for and . We first address the case where .

Proposition 2.6.

If , the following pairs of are equilibria.

| (89) | |||

| (92) |

Proof.

We first consider the case where holds. We write . Then Alice’s average payoff is

| (93) |

Note that the second term is positive for . Therefore for all . In the same way, the average payoff of Bob also respects .

Note that for and at the equilibrium, the average payoff of Alice is smaller than that of Bob: .

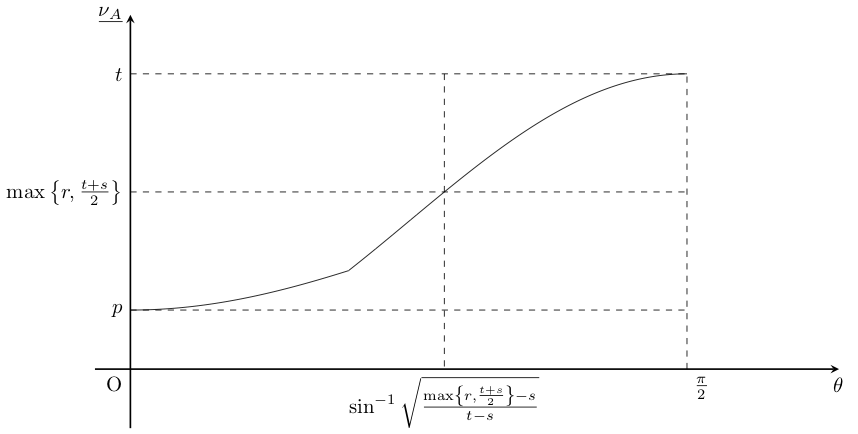

In summary, the average payoff of Alice is exhibited in Fig.1.

We next consider the case where . One can show the following statement as we did before.

Proposition 2.7.

If , the following pairs of are equilibria.

| (97) | |||

| (100) |

Since is always true, they satisfy . Therefore (97) is an equilibrium for all . In summary, the average payoff of Alice is shown in Figs. 2 and 3.

3 Repeated Quantum Games

3.1 Setup

A generic repeated quantum game is summarized as follows.

Definition 3.1 (Repeated Quantum Game).

Let be a set of positive integers and be a family of quantum states. For each , put . Let be an orthonormal basis and be a set of complex numbers such that . With respect to each , suppose that the reward to a player is given by a certain operator in such a way that and for . Then a general -person repeated quantum game proceeds as follows:

1. At any , the game is in a state (101)
2. The time evolution of the game is given by unitary operators (102)
3. At time , the reward is evaluated and given to the players by a (103) where is a function such that . It is a collective expression of the interest and other costs incurred during that period of .
4. The total payoff of a player at time is defined by (104) and in the limit it is .

Now let us consider the repeated quantum prisoner’s dilemma. Let be a state at the -th round. Let be a set of positive integers. We assume measurement of quantum states is done at the end of the round for each . For example, for all means we measure quantum states every round. Suppose are operators of Alice, then her strategy is and the game is in a state

| (105) |

which should be measured at the end of the round. The payoff could depend on :

| (106) |

where is a certain function of . In repeated games, the total payoff is written with a discount factor, and in our case it can be introduced as

| (107) |

An interpretation of the discount factor is that the importance of future payoffs decreases with time. The measurement periods can be chosen randomly or fixed in advance. When the game's state is measured every round, the probability of the monitored signals is classical (see (15)). To obtain a fully quantum game, the game should evolve by unitary operations between measurements.
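A small helper for the discounted total payoff. It assumes the common normalization (1 − δ) Σ_t δ^t u_t, under which a constant stream u is worth u; whether (107) carries this normalization is not visible in the text, so treat it as an assumption. The payoff streams below are illustrative only.

```python
def discounted_average(payoffs, delta):
    """Normalized discounted payoff (1 - delta) * sum_t delta**t * u_t of a finite stream.

    The (1 - delta) factor is an assumed normalization that makes a constant stream
    u worth u. Truncating an infinite stream after n rounds drops a tail of weight delta**n.
    """
    if not 0.0 <= delta < 1.0:
        raise ValueError("delta must lie in [0, 1)")
    return (1.0 - delta) * sum(delta ** t * u for t, u in enumerate(payoffs))


# Illustrative streams with hypothetical payoffs: cooperate forever vs. defect once, then punished.
coop = [3.0] * 500
deviate = [5.0] + [1.0] * 499
print(discounted_average(coop, 0.9), discounted_average(deviate, 0.9))
```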

Since this work is the first study of infinitely repeated quantum games, we focus on the most fundamental case and investigate the role of entanglement; we will treat the generic case elsewhere. For this purpose, in what follows, we put for all and . Although we consider only the prisoner's dilemma here, games are frequently repeated in practice, for example in negotiations. Negotiations usually involve thinking not only about the pie on the table today, but also about the pies of tomorrow and beyond. It is natural that interest may accrue and costs may change during negotiations. The function represents the change in cost that can occur between the finalization of one state and the transition to the next state. Negotiation games are the basic repeated games in contract theory. A formulation of contract theory as quantum games has recently been given in Ikeda_cat2021 , and further developing that model game-theoretically is a very interesting problem.

Then the game evolves in such a way that

| (108) |

where is her quantum strategy for the th round. Then her average payoff is

| (109) |

3.2 Equilibria

We define a trigger strategy as follows.

Definition 3.2 (Trigger 1).

1. Alice and Bob play at time if the cooperative relation has been maintained before time .
2. If Alice (Bob) deviates from this cooperative strategy at , then Bob (Alice) chooses either or with equal probability at time .

We show that Trigger 1 is an equilibrium of the repeated quantum prisoner’s dilemma.

Lemma 3.3.

Trigger 1 is an equilibrium of the repeated quantum prisoner's dilemma if either of the following conditions is satisfied:

1. 
2. and .

Proof.

Suppose they play for all . Then Alice’s total payoff is

| (110) | ||||

Now assume that they play before and Alice plays at . Since Bob plays at , her maximal payoff is

| (111) | ||||

where . Note that, according to Propositions 2.6 and 2.7, is an equilibrium of the game.

Alice has no incentive to choose if , which is equivalent to

| (112) |

Since is always positive, this inequality is satisfied for sufficiently large . The greatest lower bound of such is obtained as

| (113) |

Since is smaller than 1, no deviation occurs.

If Alice or Bob deviates from the cooperative strategy, both choose or with equal probability, following Trigger 1. According to Propositions 2.6 and 2.7, both choosing or with equal probability is an equilibrium when condition 1 or 2 is satisfied. So no deviation occurs as long as Alice and Bob choose or with equal probability.

Therefore, Trigger 1 can be an equilibrium of the repeated game. ∎
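The threshold argument in (110) to (113) follows the usual pattern for trigger strategies. The sketch below is the textbook grim-trigger calculation under assumed normalized payoffs: cooperating forever is worth `u_coop`, a one-round deviation earns `u_dev`, and afterwards both players receive a stage-equilibrium payoff `u_pun` forever (in Trigger 1 this role is played by the equal-probability mixture of Propositions 2.6 and 2.7, and permanent punishment is our assumption). It is not a reconstruction of (113), whose exact form is missing here; all values and names are hypothetical.

```python
def critical_discount(u_coop, u_dev, u_pun):
    """Smallest delta at which cooperating forever is at least as good as deviating once.

    Cooperation is worth u_coop; a one-round deviation earning u_dev followed by permanent
    punishment u_pun is worth (1 - delta) * u_dev + delta * u_pun. Solving
    u_coop >= (1 - delta) * u_dev + delta * u_pun gives delta >= (u_dev - u_coop) / (u_dev - u_pun).
    """
    if not u_dev > u_coop > u_pun:
        raise ValueError("expects u_dev > u_coop > u_pun")
    return (u_dev - u_coop) / (u_dev - u_pun)


def deviation_pays(delta, u_coop, u_dev, u_pun):
    """True if a one-shot deviation strictly beats cooperation at discount factor delta."""
    return (1 - delta) * u_dev + delta * u_pun > u_coop


# Hypothetical values; u_pun stands for the stage-equilibrium payoff used as punishment.
d_star = critical_discount(u_coop=3.0, u_dev=5.0, u_pun=1.0)
print(d_star, deviation_pays(0.4, 3.0, 5.0, 1.0), deviation_pays(0.6, 3.0, 5.0, 1.0))
```

A larger gap between the deviation payoff and the cooperative payoff pushes the threshold toward 1, which matches the qualitative dependence on entanglement described for Figs. 4 and 5.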

Figs. 4 and 5 show the relation between and entanglement. The larger becomes, the more profit the players can receive; therefore the discount factor must be sufficiently large to maintain the cooperative relation when their states are strongly entangled.

Definition 3.4 (Trigger 2).

1. Alice and Bob play at time if the cooperative relation has been maintained before time .
2. If Bob (Alice) deviates from the cooperative strategy at time , then Alice (Bob) chooses either or with equal probability at time .
3. If Alice and Bob play and (or and ) respectively at time , then at time Alice (Bob) repeats the same strategy; otherwise she (he) chooses either or with equal probability at time .
4. If Alice and Bob repeat and (or and ) respectively before time and Bob (Alice) deviates from the repetition at time , then Alice (Bob) chooses either or with equal probability at time .

Deviation 1 means that Alice (Bob) deviates from cooperation and chooses or . Deviation 2 means that Alice (Bob) chooses or when she (he) should choose or with equal probability. According to Proposition 2.6, this can happen when . Deviation 3 means that Alice (Bob) chooses or when Alice should play () and Bob should play , respectively. This can happen at , because if the opponent chooses , choosing against it can yield more profit.

Trigger 2 modifies Trigger 1 so that, for all , it can be a subgame perfect equilibrium.

Lemma 3.5 (Trigger 2).

Trigger 2 is an equilibrium of the repeated game for .

Proof.

We first address the case where Alice chooses deviation 1. Her total expected payoff when she plays is . If Alice chooses deviation 1, Alice and Bob then choose or with equal probability, so each of occurs with equal probability. In this round, the expected payoff is . If or is played, they should choose or in the next round. If or is played, they should choose the same quantum strategy as in the previous round. Therefore her maximal expected payoff for choosing deviation 1 is

| (114) | ||||

She has no incentive to choose deviation 1 when , which holds if is sufficiently large.

Regarding deviation 2, Alice's total expected payoff when she chooses either or with equal probability is

| (115) |

and her maximal expected payoff for choosing deviation 2 is

| (116) |

She has no incentive to choose deviation 2 if , which is satisfied for sufficiently large .

Regarding deviation 3, Alice's total expected payoff for choosing () while Bob chooses () is

| (117) | ||||

Her maximal expected payoff for choosing deviation 3 is

| (118) |

since Alice and Bob choose or with equal probability under Trigger 2. She has no incentive to choose deviation 3 if , which is satisfied for sufficiently large .

In all cases, one can check that does not give a player an incentive to deviate. ∎

The curves in Fig. 7 present the relation between and the greatest lower bound of that satisfies the condition . Such values lie in the colored region of the figure.

3.3 Folk Theorem

3.3.1 Pure Quantum Strategy

We first consider the case where both players choose pure strategies. Let be the set of strategies of Alice and Bob. We write for the pair of Alice's and Bob's profits under a strategy , and let be the profit per round of the repeated game. Then it turns out that they can receive profits in (Figs. 8 and 9).

| (119) |

where is a Haar measure on .
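The set of attainable payoff pairs for pure strategies can be visualized by sampling. This Monte Carlo sketch draws Haar-random SU(2) strategies for both players (a uniform point on the 3-sphere mapped to a0·1 + i(a1 X + a2 Y + a3 Z)) and records the expected payoff pair. It assumes the entangler J(γ) = exp(−iγ Y⊗Y/2), which reproduces Tables 2 and 3, and hypothetical payoffs; it does not reproduce (119) itself, and the function names are illustrative.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Hypothetical payoffs T > R > P > S, listed for |CC>, |CD>, |DC>, |DD>.
R, S, T, P = 3.0, 0.0, 5.0, 1.0
ALICE = np.array([R, S, T, P])
BOB = np.array([R, T, S, P])


def haar_su2(rng):
    """Haar-random SU(2): a uniform point on S^3 mapped to a0*1 + i(a1 X + a2 Y + a3 Z)."""
    a = rng.normal(size=4)
    a /= np.linalg.norm(a)
    return a[0] * I2 + 1j * (a[1] * X + a[2] * Y + a[3] * Z)


def entangler(gamma):
    """Assumed entangler exp(-i * gamma / 2 * Y⊗Y)."""
    return np.cos(gamma / 2) * np.eye(4) - 1j * np.sin(gamma / 2) * np.kron(Y, Y)


def payoff_pair(UA, UB, gamma):
    """Expected (Alice, Bob) payoffs for the state J^dagger (UA ⊗ UB) J |CC>."""
    J = entangler(gamma)
    probs = np.abs(J.conj().T @ np.kron(UA, UB) @ J[:, 0]) ** 2
    return float(probs @ ALICE), float(probs @ BOB)


def sample_payoff_pairs(gamma, n=2000, seed=1):
    """Cloud of payoff pairs reached by pairs of Haar-random pure strategies."""
    rng = np.random.default_rng(seed)
    return np.array([payoff_pair(haar_su2(rng), haar_su2(rng), gamma) for _ in range(n)])


cloud = sample_payoff_pairs(np.pi / 2)
print("min (Alice, Bob):", cloud.min(axis=0), "max (Alice, Bob):", cloud.max(axis=0))
```

Plotting `cloud` for several values of γ gives a rough picture of how entanglement reshapes the attainable region.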

Alice’s mini-max value is defined as

| (120) |

which is shown in Fig.10. If is smaller than , then there exists a subgame perfect equilibrium. This can be summarized as follows.

Theorem 3.6.

When players choose pure quantum strategies, there exists a subgame perfect equilibrium of the repeated quantum prisoner's dilemma if

| (121) |

3.3.2 Mixed Quantum Strategy

The mini-max value for the mixed strategy case is defined as

| (122) |

which is shown in Figs. 11 and 12. There is a subgame perfect equilibrium since is always satisfied. Note that for every value of the entanglement parameter there exists a non-empty region

| (123) |

which we call the feasible and individually rational payoff set. If Alice and Bob are entangled, the mini-max value becomes large and the area of gets small. Especially, the area of becomes the smallest when states are maximally entangled and is exhibited as the dark parts of Fig.13 and 14. It does not contain when , therefore a notable difference between classical and quantum repeated prisoner’s dilemma is the following.

Theorem 3.7 (Anti-Folk Theorem).

When Alice and Bob are maximally entangled, mutual cooperation cannot be realized as an equilibrium outcome of the repeated game unless .

In the classical repeated prisoner's dilemma, mutual cooperation is Pareto optimal and can be sustained as an equilibrium of the repeated game, as guaranteed by the Folk theorem, whereas this is not true for the repeated quantum game. In our quantum game, when the reward for cooperating is small, one can find a better solution that yields a larger payoff than mutual cooperation. Hence, to establish a cooperative relation, the players require a sufficiently large reward for cooperating.
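A rough numerical check of the mechanism behind Theorem 3.7, under the assumptions of this sketch only: mixtures over the four Pauli operators, the outcomes of Table 3, and hypothetical payoffs. Because Table 3 is a Latin square, Alice's mini-max value works out to (R + S + T + P)/4, so the cooperative payoff R is individually rational only when R exceeds that average. This is our own derivation for the Pauli-restricted setting, offered as a consistency check on the qualitative statement, not as a reconstruction of the missing condition in the theorem.

```python
import numpy as np

# Table 3 (maximal entanglement): outcome for Alice's operator (row) vs Bob's (column),
# both ordered I, X, Y, Z.
TABLE3 = [["CC", "DC", "CD", "DD"],
          ["CD", "DD", "CC", "DC"],
          ["DC", "CC", "DD", "CD"],
          ["DD", "CD", "DC", "CC"]]


def alice_matrix(R, S, T, P):
    """Alice's payoff matrix over Pauli strategies for stage payoffs R, S, T, P."""
    pay = {"CC": R, "CD": S, "DC": T, "DD": P}
    return np.array([[pay[o] for o in row] for row in TABLE3], dtype=float)


def minimax_value(M, samples=20000, seed=0):
    """Approximate min over Bob's mixtures q of Alice's best-reply payoff max_a (M q)_a.

    Bob's mixtures are drawn from a flat Dirichlet distribution, and the uniform mixture is
    appended explicitly. Every column of M sums to R + S + T + P, so max_a (M q)_a is at least
    (R + S + T + P) / 4 for every q, and the uniform q attains this bound.
    """
    rng = np.random.default_rng(seed)
    qs = np.vstack([rng.dirichlet(np.ones(4), size=samples), np.full(4, 0.25)])
    best_replies = (qs @ M.T).max(axis=1)  # Alice's best-reply payoff to each sampled q
    return float(best_replies.min())


for R in (3.0, 1.5):
    v = minimax_value(alice_matrix(R, 0.0, 5.0, 1.0))
    print(f"R = {R}: minimax = {v:.4f}, mutual cooperation individually rational: {R > v}")
```

With these illustrative numbers, R = 3 clears the mini-max value while R = 1.5 does not, mirroring the requirement of a sufficiently large reward for cooperating.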

Using an argument similar to that for the classical Folk theorem, we obtain its quantum version.

Theorem 3.8 (Quantum Folk Theorem).

For every value of the entanglement parameter , there exists an appropriate discount factor such that every payoff in the feasible and individually rational payoff set is in the set .

Proof.

Let be Alice's mixed strategy that holds Bob down to his mini-max value. Then, if the round-average payoff for playing is in , the following is an equilibrium.

1. Choose unless a deviation occurs.
2. If a deviation is observed, both play for the next rounds, and then both play .
3. If is not chosen during those rounds, both again choose for the succeeding rounds.

Here is chosen as

| (124) |

where .

The proof can then be completed as in 10.2307/1911307 . ∎

4 Conclusion and Future Directions

In this work we established the concept of a generic repeated quantum game (Definition 3.1) and addressed the repeated quantum prisoner's dilemma for the case of for all . Even though the game evolves in a classical manner, the repeated quantum game differs markedly from the classical repeated prisoner's dilemma (Theorem 3.7), and we obtained a novel feasible and individually rational payoff set for the repeated quantum prisoner's dilemma (Theorem 3.8).

As stated at the beginning of this article, repeated games play a very important role in game theory. In many cases, human activities involve long-term strategic relationships. In particular, to form a sustainable market, it is necessary to consider the interests and strategies of market participants and to design rules from the perspective of repeated games. A market on a complex Hilbert space is not envisioned by conventional economic theory, but it will emerge in the near future with the realization of the quantum Internet. As this paper has shown, an equilibrium of a classical repeated game is not necessarily an equilibrium of the corresponding infinitely repeated quantum game. This shows that infinitely repeated quantum games are not a mere extension of classical theory, and suggests that quantum economics on complex Hilbert spaces differs fundamentally from conventional economic theory. From this point of view, the economics of quantum money 2020arXiv201014098A and market design and contract theory with quantum operations Ikeda_cat2021 need to be seriously investigated; these are new and challenging issues in the quantum age.

There are many research directions for repeated quantum games. For example, it will be interesting to address cases where . In this work we focused on the case and, as a result, clarified the role of entanglement . From the viewpoint of quantum computation, the complex probability amplitudes are as important as entanglement. Taking this into account, studying more generic time evolutions of the quantum game is crucial, and it is necessary to introduce general review periods that the players do not know a priori.

Furthermore, it is important to address repeated quantum games with three or more players. As von Neumann and Morgenstern described morgenstern1953theory , games with three or more players can be very different from two-player games, since the players have a chance to form coalitions. In quantum games, there are various ways to introduce entanglement, which makes games more complicated. In a future quantum network, people will generally use entangled strategies for various purposes, so it will be more practical to investigate quantum games in which many strategies are entangled. Recently the authors investigated a quantum economy where three persons create, consume and exchange entangled quantum information using entangled strategies 2020arXiv201014098A . According to that work, quantum players can use stronger strategies than players using only classical resources, and their strategic behavior in a quantum market can be much more complicated in general. As emphasized in 2020arXiv201014098A , economics in the quantum era will become essentially different from economics as described in today's literature. From this perspective, investigating long-term relations among quantum players will be significant.

Moreover, it is also interesting to apply repeated quantum games not only to economics but also to other fields, since game-theoretic perspectives are used in various disciplines such as business administration, psychology, biology, and computer science. Studying quantum effects of strategies and human behavior in a global quantum system will require advanced quantum technologies, but quantum games will also shed new light on studies confined to a local quantum system. For example, recent advances in quantum algorithms are crucial for a potential speedup of machine learning, which can be regarded as a repeated game. Generative adversarial networks (GANs) are a typical example. In general, GANs have multiple complicated equilibria, and it will be interesting to explore QGANs PhysRevLett.121.040502 in terms of repeated quantum games.

We leave it as an open question to investigate Nash equilibria in general repeated quantum games. For single-stage non-cooperative games, equilibria in pure quantum strategies are addressed in 2018arXiv180102053S , and those in mixed quantum strategies are given in 1999PhRvL..82.1052M by using Glicksberg's fixed-point theorem.

Acknowledgement

This work was supported by PIMS Postdoctoral Fellowship Award (K. I.)

References

- (1) K. Ikeda, Foundation of quantum optimal transport and applications, Quantum Information Processing 19 (2020) 25 [1906.09817].

- (2) K. Ikeda, Quantum contracts between schrödinger and a cat, Quantum Information Processing 20 (2021) 313.

- (3) S. N. Chowdhury, S. Kundu, M. Perc and D. Ghosh, Complex evolutionary dynamics due to punishment and free space in ecological multigames, Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 477 (2021) 20210397 [https://royalsocietypublishing.org/doi/pdf/10.1098/rspa.2021.0397].

- (4) K. Ikeda and A. Shoto, Theory of Quantum Games and Quantum Economic Behavior, arXiv e-prints (2020) arXiv:2010.14098 [2010.14098].

- (5) J. F. Nash, Equilibrium points in n-person games, Proceedings of the National Academy of Sciences 36 (1950) 48 [https://www.pnas.org/content/36/1/48.full.pdf].

- (6) J. Eisert, M. Wilkens and M. Lewenstein, Quantum games and quantum strategies, Phys. Rev. Lett. 83 (1999) 3077 [quant-ph/9806088].

- (7) D. A. Meyer, Quantum Strategies, Physical Review Letters 82 (1999) 1052 [quant-ph/9804010].

- (8) Q. Li, M. Chen, M. Perc, A. Iqbal and D. Abbott, Effects of adaptive degrees of trust on coevolution of quantum strategies on scale-free networks, Scientific Reports 3 (2013) 2949 EP .

- (9) Q. Li, A. Iqbal, M. Perc, M. Chen and D. Abbott, Coevolution of quantum and classical strategies on evolving random networks, PloS one 8 (2013) e68423.

- (10) F. Shah Khan, N. Solmeyer, R. Balu and T. Humble, Quantum games: a review of the history, current state, and interpretation, Quantum Information Processing 17 (2018) 309.

- (11) A. Iqbal and A. H. Toor, Quantum repeated games, Physics Letters A 300 (2002) 541 [quant-ph/0203044].

- (12) P. Frackiewicz, Quantum repeated games revisited, Journal of Physics A Mathematical General 45 (2012) 085307 [1109.3753].

- (13) D. Abreu, On the theory of infinitely repeated games with discounting, Econometrica 56 (1988) 383.

- (14) D. Fudenberg and E. Maskin, The folk theorem in repeated games with discounting or with incomplete information, Econometrica 54 (1986) 533.

- (15) O. Morgenstern and J. Von Neumann, Theory of games and economic behavior. Princeton university press, 1953.

- (16) S. Lloyd and C. Weedbrook, Quantum generative adversarial learning, Phys. Rev. Lett. 121 (2018) 040502.

- (17) F. S. Khan and T. S. Humble, Nash embedding and equilibrium in pure quantum states, in International Workshop on Quantum Technology and Optimization Problems, pp. 51–62, Springer, 2019.