0.0mm

Infiltrating the Sky: Data Delay and Overflow Attacks in Earth Observation Constellations

Abstract

Low Earth Orbit (LEO) Earth Observation (EO) satellites have changed the way we monitor Earth. Acting like moving cameras, EO satellites are formed in constellations with different missions and priorities, and capture vast data that needs to be transmitted to the ground for processing. However, EO satellites have very limited downlink communication capability, limited by transmission bandwidth, number and location of ground stations, and small transmission windows due to high-velocity satellite movement. To optimize resource utilization, EO constellations are expected to share communication spectrum and ground stations for maximum communication efficiency.

In this paper, we investigate a new attack surface exposed by resource competition in EO constellations, targeting the delay or drop of Earth monitoring data using legitimate EO services. Specifically, an attacker can inject high-priority requests to temporarily preempt low-priority data transmission windows. Furthermore, we show that by utilizing predictable satellite dynamics, an attacker can intelligently target critical data from low-priority satellites, either delaying its delivery or irreversibly dropping the data. We formulate two attacks, the data delay attack and the data overflow attack, design algorithms to assist attackers in devising attack strategies, and analyze their feasibility or optimality in typical scenarios. We then conduct trace-driven simulations using real-world satellite images and orbit data to evaluate the success probability of launching these attacks under realistic satellite communication settings. We also discuss possible defenses against these attacks.

Index Terms:

Satellite constellation, Earth observation, Security, Attack, Buffer overflow, SchedulingI Introduction

Low Earth Orbit (LEO) Earth observation (EO) constellations have revolutionized Earth monitoring by employing numerous low-orbit, low-cost small satellites, rather than a few high-cost, high-altitude large satellites targeted only at specific regions. EO constellations enable continuous, high-resolution imaging of the entire Earth’s surface, significantly reducing revisit times and enhancing observation frequency. EO data is widely used in various fields, such as agriculture, forestry, urban planning, and disaster management [1].

As EO satellites continuously scan Earth’s surface, they generate vast monitoring data that must be transmitted to the ground for processing and analysis. However, several factors hinder efficient satellite data downlinking. Firstly, the geographical requirements and high costs of constructing ground stations limit their number and distribution, resulting in few available windows for downlinking data [2]. Secondly, the high velocity of satellites results in short contact windows with ground stations, typically lasting only 7-10 minutes [3, 4]. Third, due to the physical limitations of radio frequency spectrum allocation and atmospheric absorption, data rates from satellite to ground station remain limited to an average of 160 Mbit/s [5], even with a variety of bands and advanced antenna design or modulation and coding schemes. These factors lead to insufficient downlink resources for the satellite data, necessitating onboard storage and resulting in delayed transmission, ranging from a few hours to several days [6].

A trend in EO involves multiple types of constellations collaboratively achieving mission goals. For instance, a low-priority EO constellation consists of satellites with low-resolution cameras sweeping the Earth’s surface for continuous monitoring, while a much smaller high-priority constellation with high-resolution cameras focus on specific Areas of Interest (AoIs) based on results from low-priority satellites and/or requests from users [6]. Furthermore, constellations are increasingly supporting user-scheduled time-sensitive sensing tasks to enable critical use cases such as rapid disaster response or real-time emergency management [7], and will prioritize transmission of such user-scheduled high-priority tasks over the default (low-priority) monitoring tasks via fast tracked data delivery to the ground [8].

In face of limited ground stations, the low- and high-priority constellations may share communication channels and ground stations to maximize the chance and data rate of downlink communication [9]. When there is a high-priority request such as monitoring the spread of forest fires at a specific location from a user, the low-priority satellites may be preempted of their communication resources to prioritize high-value data transmission, in which case the low-priority monitoring data must be stored until there is a future transmission opportunity.

This paper investigates the possibility that an attacker may utilize the sharing of communication resources and opportunities among mixed-priority EO satellites to launch intelligent attacks targeting critical data captured by low-priority EO satellites. Such attacks include, for instance, delaying the downlink of target data captured at a certain time and location, or dropping such data from the internal storage of a satellite before it can be downlinked. In doing so, for instance, the attacking entity (potentially a nation or organization) may aim to prevent downlink and analysis of sensitive information, such as regional warfare strategies or illegal operations, without launching large-scale, easily detectable attacks such as disabling a large number of ground stations (as happened at the beginning of the Russia-Ukraine conflict) [10] or jamming all satellite communication channels [11, 12].

We explore a new attack surface that exploits the competition for limited downlink resources between different types of EO satellites. Specifically, when an attacker deliberately schedules tasks from high-priority satellites that shares the limited downlink resources with a low-priority satellite, the latter will have to wait for the next transmission opportunity, leading to data on the low-priority satellite being delayed. If data accumulated on the low-priority satellite exceeds the internal storage limit, some data will be dropped. Specifically, by leveraging the predictable dynamics of satellites, including orbit information, scheduling policies, and queue status on the satellite, an attacker can intelligently select its attack strategy, such as how many/which transmission windows to attack, to make its attack more viable and less detectable. In this paper, we formalize data delay and data overflow problems, where an attacker targets a specific piece of data to delay or drop, and design two algorithms to solve these problems. Our attack can be planned or even launched before the target data is captured by a satellite, and utilize only legitimate services provided by different EO constellations, thus making the attack easy to launch and the attack target hard to locate or protect.

The contribution of this paper is listed as follows:

-

•

We explore and propose a new type of attack targeting data captured by certain EO satellites that share communication resources with others, utilizing the shared communication resources to delay or prevent the downlink of the target data via legitimate EO service requests.

-

•

We formulate two possible attack goals of an adversary: the data delay attack and the data overflow attack, and devise algorithms to find feasible attack strategies considering orbital dynamics, bandwidth, storage, and attack costs. We analyze the feasibility or optimality of our algorithms in typical attack scenarios.

-

•

We evaluate the attacks using real-world satellite orbital dynamics and imaging data, demonstrating their practical implications and effectiveness.

Organization. §II introduces background and related work. §III formalizes the system model. §IV describes the overview of two attacks. §V and §VI present the data delay attack and the data overflow attack. §VII addresses practical considerations of launching the attacks. §VIII presents the evaluation results. §IX describes some countermeasures against the proposed attacks. §X concludes the paper. § Appendix provides proofs of lemmas and theorems of this paper.

II Background and Related Work

II-A Earth Observation Constellations

Satellites positioned at altitudes below 2000km are called LEO satellite. When used for Earth observation, these satellites are formed in EO constellations that continuously monitor the Earth’s surface with high resolution and low costs, and can offer detailed and frequent data for tracking environmental changes, managing disasters, monitoring resources, etc.

Here, we use the constellations operated by Planet Labs [6] as an example to illustrate the structure of EO constellations111 While our attacks are motivated by Planet Labs, they can be applied to any constellations that (partially) share ground stations for data downlink.. Planet Labs hosts the world’s largest commercial EO constellations, with over 150 satellites currently in LEO orbits [9]. As shown in Fig. 1, Planet Labs currently has two constellations: the Dove constellation and the SkySat constellation. The Dove constellation offers continuous imaging with daily medium-resolution imagery of 3.7 meters per pixel, covering the entire Earth’s surface. The Dove constellation has a daily data collection capability of 200 million km2 [2] and captures 120TB of data per day [4]. The SkySat constellation, comprising 21 satellites, focuses on high-resolution imagery of areas of interest at 0.65 meters per pixel, and offers on-demand collection with less than 15% cloud cover, prioritizing areas with high demand. This constellation allows users to submit tasks with flexible scheduling options, including pre-scheduled, monthly, weekly, daily, or sub-daily for specified areas and time frames, featuring different delivery strategies for various orders [6, 13]. Specifically, the two constellations can work collaboratively for an EO user. For instance, a user may first use the large amount of data generated by Dove satellites to locate areas of interest, and then use the SkySat satellites to capture high-quality data of those areas at specific times. Currently, there are no Inter-Satellite Links (ISLs) implemented for the constellations [2].

Ground stations of the constellations utilize S-band for uplink and X-band for downlink communications [14]. Currently, there are only a handful of ground stations globally to support downlink transmissions [15, 4]. A typical pass, during which a downlink X-band lock is established and maintained with a ground station for reliable communication and data transfer, lasts only 7-10 minutes and requires precise antenna pointing with errors of less than 0.2 degrees [16]. The data rates from satellite to ground station are limited to an average of 160 Mbit/s [5]. Given the limited communication resources and windows, it is expected that most of a satellite’s captured data needs to be stored in its internal storage for some period of time before being downlinked via a ground station. This has motivated the study of buffer management in these satellites.

II-B Related Work

Our attacks are related to existing works that study buffer management for satellites/spacecrafts given the limited communication capabilities. While many works have studied scheduling data collection, downlink or in-orbit computing for improving throughput and reducing latency and data loss [4, 17, 18, 19, 20, 21, 22, 23, 24], their scope is limited to a single satellite or constellation, and neglects the inter-play among satellites between different constellations. Beyond buffers in the space, queuing analysis has been widely studied in traditional and wireless sensor networks. These studies focus on evaluating the performance of network systems by examining the delay and throughput characteristics of data packets [25, 26, 27, 28, 29, 30, 31, 32]. Some works have targeted the utilization of queue dynamics for buffer overflow distribution analysis [33, 34], but they are limited to specific queue models with certain input and output patterns. Some works have examined buffer attacks on single-node High Performance Computing (HPC) systems or targeted the memory bus of a single machine [35, 36], which address a inherently different problem space from ours. To our knowledge, no existing work has studied buffer management through an adversarial lens, where an adversary can utilize the knowledge towards the scheduling policy to launch data-oriented attacks.

III System Model

III-A EO Constellations in Low Earth Orbit

As shown in Fig. 2, we consider two EO constellations of LEO satellites: a high-priority constellation and a low-priority constellation . They use the same set of ground stations with a limited number of antennas, and compete for limited downlink resources over the same spectrum bands. Data collected by high-priority satellites has a higher priority and needs to be transmitted to ground stations as quickly as possible. Data is collected on a per data unit (e.g., per image) basis. The system time is discretized into time slots . The data amount collected from at is denoted as .

Each satellite has a limited internal storage with capacity . As shown in Fig. 3, captured Earth data is stored in the storage before being transmitted to a ground station. We define the onboard queue of a satellite as a waiting line where data units are held until they can be transmitted out or dropped due to limited storage capacity.

We assume the onboard queue is a first-in-first-out (FIFO) queue; in other words, the data in the queue is transmitted or dropped in the order of arrival. For the satellite-ground station assignment, ground station access for low-priority satellites are prioritized based on proximity and assigned using the Hungarian algorithm [37], which is most commonly used in assigning communication channels between satellites and ground stations [17, 4]; satellites closer to the ground station within a time slot receive superior access, ensuring more stable communication with less signal attenuation. High-priority satellites, when contending for a ground station with low-priority satellites, are given precedence to utilize any idle antenna. In the absence of an available idle antenna, high-priority satellites are authorized to occupy antennas initially assigned to low-priority satellites. Consequently, displaced low-priority satellites are unable to use the occupied antennas until they become available again. The transmissible time slot set of satellite is denoted as , which is the set of time slots during which the satellite has the opportunity to transmit data to one of the ground stations. Note that, while we describe our attacks using a typical queue model (FIFO) and satellite-ground station assignment scheme, our attacks can also be applied to other types of queue models and assignment algorithms, as long as these are known to the attacker.

III-B Threat Model

Given a set of target data, denoted as , the data consists of a series of images, video fragments, or other types of data that can be captured and transmitted by a target low-priority satellite at a certain time and location. We consider an active attacker seeking to either delay the downlink of or drop before it reaches the ground.

The attacker can only use legitimate services provided by the high-priority EO constellation, which allow their customers to collect and downlink data according to their interests. The attacker cannot access any other undisclosed information or functions. Specifically, the attacker knows all satellites’ orbit dynamics, the location and configuration of shared ground stations, and the data capture pattern, image size, and average downlink rate of low-priority satellites from public sources; we justify these assumptions in §VII.

The attacker deliberately schedules downlink tasks for the satellites in the high-priority constellation, causing them to occupy ’s originally allocated downlink resource at certain time slots, thereby disrupting the data transmission of and delaying the downlink of . With continuously generating new data and onboard data being queued by the attacker’s designed strategy, can be deliberately dropped. We consider the most challenging scenario for the attacker, where high-priority satellites lack data to preempt ’s downlink resource, as natural preemption would lower the attacker’s cost.

All time slots that the attacker decides to attack constitute the attack strategy . A time slot is “attackable” if there are a sufficient number of high-priority satellites available to occupy ’s downlink resource. All the attackable time slots of form the attackable time slot set . Fig. 2 shows an example of the transmissible and attackable status for 4 low-priority satellites in one time slot. Satellite A cannot transfer data out since no ground station is available, making the current time slot neither transmissible nor attackable to it. Satellite B connects to one ground station, with only one high-priority satellite competing, making it transmissible but not attackable. Satellites C and D can connect to a ground station, making the time slot transmissible, but competition from high-priority satellites makes it attackable.

The cost of attacking a time slot is denoted as . Different cost functions can be applied depending on how services are priced in the real world and/or the amount of additional resources (such as fake user accounts) an attacker requires to launch an attack. In our evaluation, we consider costs that are proportional to the number of high-priority satellites involved, assuming that scheduling a service request for a particular satellite at a given time has a fixed price. The costs for all time slots are denoted as .

Overall, the attacker can conduct two types of attacks:

-

•

Data Delay Attack. The attacker aims to prevent the target data from being downlinked to the ground station until a specified later time, with minimum cost.

-

•

Data Overflow Attack. The attacker aims to target data being dropped due to the limited onboard queue capacity.

III-C Queue Evolution Model

As mentioned in §III-A, we consider a FIFO queue for the onboard data. Since data is collected on a per data unit basis, we use to represent the number of data units in and use to denote the data unit in that is captured at time . Specifically, denotes the last data unit in . The onboard queue of at time slot under the attack strategy is denoted as with overall length , and the sub-queue including and preceding is denoted as with length . Given an attack start time , the state of the EO constellations with respect to without any attack for can be represented as .

To model the two ways data is consumed in the onboard queue, we define the data amount downlinked to the ground station and the dropped data amount at time slot from as and , respectively. We round up to if to ensure that the delay time caused by the attack is per time slot. A data overflow occurs at time slot when the onboard queue reaches its full capacity and has to drop some data, i.e., .

The queue length at time slot of is updated as

The sub-queue length at time slot of is

where .

To describe how the attack strategy affects the final downlink time of , we define the expected downlink time of under attack strategy as

If is dropped due to the attack strategy , then . We define a metric called the attack strength for a time slot given an attack strategy with respect to as This measures the additional delay when is additionally attacked, compared to attacking only the time slots in . The overall attack strength of for is , measuring the total delay for caused by compared to no attack.

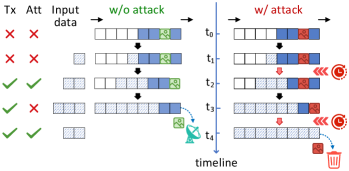

IV Overview

In this section, we give an overview of data delay attack and data overflow attack. The position of the target data in the onboard queue of satellite A under a data delay attack and in the onboard queue of satellite B under a data overflow attack is shown in Fig. 4.

The data delay attack is devised to prolong the delay of target data transmission from a low-priority satellite to the ground station. By targeting specific data and its corresponding satellite, the attacker schedules tasks to high-priority satellites, thereby occupying the time slots typically used by the target low-priority satellite for downlink. Consequently, the targeted data must wait longer for additional available time slots to downlink, resulting in significant delays in data retrieval and potential mission-critical impacts. We design an algorithm to enable the attacker to find the minimum cost attack strategy in §V. We show that when targeting unit data, our algorithm can always find a feasible attack strategy when one exists, and is optimal in terms of cost by proving its optimality when the queue is not empty upon initialization—an assumption typically met in practice due to limited communication resources.

The data overflow attack, exploiting the limited onboard capacity of satellites, poses a more severe threat than the data delay attack and leads to the dropping of the target data, rendering it irrecoverable. As EO satellites continually collect new data, the objective of an attacker is to find an attack strategy that keeps the target data onboard until it is dropped when new data occupy its space. This requires more delicate control over when and which time slots to attack, as attacking more slots may not result in a higher attack success probability. We devise an algorithm to search for the attack strategy, and for unit data as the target, our algorithm can return a feasible attack strategy whenever one exists. See §VI for more details.

Proofs for the lemma and theorems of our theoretical analysis are provided in the Appendix.

V Data Delay Attack

An attacker aiming to execute a data delay attack seeks to prevent critical data on the target satellite from being downlinked to the ground station before a specific time. This data may involve real-time and highly urgent commands, such as those required for disaster response or key military operations. Delaying this data can cause confusion and disrupt crucial decisions. The attacker needs to choose suitable time slots and schedule high-priority satellite tasks to compete with the target satellite for ground station access. This competition results in the target satellite having no downlink resources available at the attacked time slots, forcing it to queue data onboard. The data queued before the target data takes longer to downlink, thereby delaying the target data’s downlink time. An example of the data delay attack is shown in Fig. 5.

An attacker’s goal is to find an attack strategy that can delay the target data beyond a certain threshold, called the target downlink time , with the minimum attack cost. We define the Data Delay problem of finding the optimal data delay attack strategy as follows:

Definition 1.

Given the state of EO constellations at the attack start time for the target data on satellite , the Data Delay problem is to identify an attack strategy that prevents from being downlinked to the ground station until the target downlink time , with minimum cost. This can be formulated as follows:

Intuitively, if the satellite’s internal storage is infinite, then attacking a transmissible time slot before the target data downlink will directly result in the target data’s downlink time being delayed by one transmissible time slot. However, what complicates the attack strategy is that if an attack results in the onboard queue being full at this time slot or some time later than the attacked slot but before the target downlink time, then further attacking any slot before or during the onboard queue being full will have no impact on further delaying the downlink time of the target data. As shown in Fig. 6, regardless of whether an attack occurs at or not, by the end of , the amount of data ahead of the target data is the same as it would be if no attack had occurred up to . To model the queue full condition, we define as the last time slot when the queue is full before the data unit is transmitted under the attack strategy . If no data overflow occurs before is transmitted out, then .

The following lemma shows that attacks on time slots at or before the queue is full do not delay the data unit .

Lemma 1.

Given a data unit , an attack strategy and a set , for all .

Based on Lemma 1, we devise Algorithm 1 to find a feasible data delay attack strategy and prove its optimality for unit target data. We first initialize the attack strategy . Then for each data unit , we get the expected downlink time and the last queue full time without any attack (lines 1-1). If is dropped without any attack, then we do not need to conduct the delay attack (line 1). If the evacuation time is smaller than the target duration , which means needs more attack time slots to delay longer, we then begin to find the minimum cost attack strategy for (line 1). We first construct a set that contains all the attackable time slots that potentially have attack strength for (line 1). Then we find the minimum cost attack time slot in as (line 1). If multiple attackable slots have the same cost, the earliest one is chosen. If the set is empty, indicating the absence of any time slot that can be attacked, then the attack fails and can be transmitted before no matter how the attacker attacks (line 1). If is found, we add to the attack strategy and update the expected downlink time and the last queue full time (lines 1-1). These two times need to be updated because additional attacks on might influence whether further exploration of new attack time slots is needed and exclude time slots that definitely do not have attack strength. We repeat the process until the delay target is met (line 1), or if the target data is dropped (line 1).

EO satellites operate on predefined orbits, covering large regions of the Earth at fixed times. If the attacker’s goal is to delay or drop data of a specific location at a specific time, due to imaging frequency limitations, only a few or possibly just one data unit may be a uniquely valuable resource. In the following, we analyze the completeness and optimality of Algorithm 1 in finding the minimum cost attack strategy for single-unit target data, which suffices for many attack tasks.

Theorem 1.

When and for all , if there exists a feasible solution that can delay the target data until the target downlink time, then Algorithm 1 will always terminate with a feasible attack strategy which has the minimum cost for the Data Delay problem.

VI Data Overflow Attack

For the data overflow attack, given target data , the goal of the attacker is to induce data overflow on the target satellite so that the is dropped. The attacker disrupts the downlink process of by blocking specific attackable time slots, consequently delaying the expected downlink time for all data that is scheduled to be downlinked in subsequent slots. With the satellite continuously capturing new data and the attacker-caused delays resulting in additional data stored onboard, the limited onboard storage will eventually fill up, causing a data overflow. As the input data of the onboard queue is not controlled by the attacker, the key point of the data overflow attack is that the attacker needs to ensure that the target data remains stored onboard until a data overflow occurs, and that when the data overflow happens, is at the head of the queue, thereby ensuring that can be dropped. Fig. 7 gives an example of data overflow attack.

An attacker’s goal is to find a feasible attack strategy to drop the onboard target data, preventing it from being downlinked to the ground station. We define the Data Overflow problem of finding the feasible data overflow attack strategy as follows:

Definition 2.

Given the state of EO constellations at the attack start time for the target data on satellite , the Data Overflow problem is to find an attack strategy that satisfies the following conditions: .

We design Algorithm 2 to solve the Data Overflow problem. The attack begins by initializing the attack strategy as an empty set (line 2). For each data unit , we calculate its expected downlink time and the initial queue full time without any attack (lines 22). In addition, we generate two sets of time slots: is the set of attackable time slots at and after the initial image downlink time that are sorted in ascending order, and is the set of attackable time slots before the initial image downlink time that are sorted in descending order (lines 22). and are the next attack time slot in and , respectively (line 2). If , which means we can attack the time slot and keep the target data onboard and at the top of the queue, then we update the expected downlink time based on the new attack strategy that includes the time slot and update to the next element in (lines 22). Else if , which means the next attackable time slot in cannot contribute to delaying the downlink time of the target data, we need to attack the time slot in to delay the downlink time of to make sure remains onboard (lines 22). If is empty or new data overflow happens due to the attack on , then the attack fails (line 2). This means there is no available attackable time slot to delay further; is downlinked before it can be dropped. This process repeats for each target unit in until all are dropped. Theorem 2 shows the completeness of Algorithm 2 for targeting a single data unit.

Theorem 2.

When , Algorithm 2 terminates with a feasible attack strategy for the Data Overflow problem whenever a feasible solution exists.

VII Practical Considerations

In this section, we analyze the feasibility of the attacks and discuss noise tolerance in real-world scenarios.

Our attacks’ feasibility depends on three main conditions: (1) contention for limited communication resources between high- and low-priority satellites, (2) the attacker’s ability to schedule transmission of high-priority tasks for specific satellites at specific times, and (3) the attacker’s knowledge of orbit information, scheduling policies, and the target satellite’s queue status. We next explain why these conditions are very likely met by current and future constellations.

(1) Resource contention. Given the high cost and environmental requirements for building ground stations, sharing of ground stations among multiple constellations are becoming increasingly common for minimizing cost and maximizing performance [38, 39]. For instance, the Ground Station Operations team at Planet Labs Inc. has begun integrating software and hardware architectures across different constellations to support multiple missions through their ground station networks, aiming to reduce on-orbit reaction latency and increase the utilization of individual ground stations [9].

(2) Attacker’s ability to schedule transmission of high-priority tasks. Critical applications such as rapid disaster response or real-time emergency management require the delivering of user-scheduled high-priority, time-sensitive sensing data to the ground, which is being supported by more and more EO constellations. Such services can then be utilized by an attacker to preempt low-priority transmission during shared communication windows. For instance, Planet Labs allows users to schedule Assured Tasking Orders at specific times when satellites pass over designated areas [7, 40], and have the data delivered with minimum delay via its Fast Track Delivery service [8]. The data of such orders will be transmitted as soon as possible in the next communication windows after being generated, which opens up a possible avenue for adversarial scheduling of such orders to launch an attack. The attacker can further profile the delay in launching an attack, by submitting multiple high-priority tasks and observing their downlink/delivery times prior to launching the actual attack.

(3) Attacker’s knowledge of satellite orbits, scheduling, and queues. Firstly, the orbit information for both low- and high-priority satellites is publicly accessible [41], and since they must adhere to the orbit parameters claimed before their launch in FCC regulations, their future orbits are also predictable. Secondly, an attacker could obtain the communication scheduling policy via various methods. For instance, there has been extensive research on scheduling policy inference from measurement data, using deep learning [42] or probabilistic inference [43]. An attacker could also compromise a satellite (possibly even an outdated or decommissioned one), a ground station, or even a cloud-hosted copy of the satellite communication codebase, to uncover the scheduling policy. An insider attack from within the operator’s organization is also possible. Thirdly, an attacker can use various information sources to assist in determining the initial queue’s data volume. Fig. 8 shows the data collection pattern of a single satellite over three days. The satellite’s data collection is predictable and periodically repeats, focusing solely on gathering data above specific areas of the Earth’s surface. For a specific satellite, the dynamics of data generation rate and captured images can be reliably predicted. So if an attacker can determine the size of the queue at a certain moment and utilize the queue’s data generation and downlink patterns, it can relatively accurately determine the initial queue size at the start of the attack.

To determine the queue size at a specific time, an attacker with access to the satellite and ground station channel can infer that a satellite’s queue is empty if it does not downlink data during a transmissible window with no competition. Without channel access, the attacker can infer this from publicly available data via the open-source global network of satellite ground stations [44]. Other starting points for the attacker include evolving the queue from the target satellite’s initial launch. Other methods could also be used to infer queue status at a specific time, for instance, using input/output communication patterns with fuzzy inference systems [45] or other queue length estimation algorithms [46].

Dealing with estimation noise. An attacker may mis-estimate data size and data rate in the real-world, which may lead to failure of attacks if they are calculated based on inaccurate information. Heuristic strategies can be employed to deal with noise in such estimation. For instance, the attacker can increase the attack’s robustness against noise by attacking extra data units both before and after its primary target of units. If it can find an attack strategy that is feasible for all data units as the target, then even if noise in queue dynamics or data rate estimation causes the attack to be unsuccessful on some data at the beginning or the end, there is still a high chance that the true target of units is successfully attacked. We show that this strategy can significantly increase both attacks’ robustness to estimation noise in §VIII.

There are other approaches to mitigate the impact of noise. For example, in a data delay attack, the attacker can generate a strategy to delay the target data past the target downlink time by a few time slots. Alternatively, redundantly attacking multiple time slots near the target data’s downlink time can also help combat noise. Since attack strategies can be generated and tested through simulation, the attacker can tailor multiple strategies for specific target data and select the most robust one against noise for a real-world attack.

VIII Performance Evaluation

VIII-A Experiment Settings

We conducted a trace-driven simulation to evaluate the proposed attacks. We obtained the real-world metadata of the Planet Labs Dove satellite image data for January 2024 from the Planet API [47]. We selected the top 10 Dove satellites with the highest data generation on January 1st from the 118 satellites collecting data in January 2024 as the target satellites. From these satellites, we randomly sampled 1000 images (100 images from each satellite) among the total of 321,744 images generated by the entire Dove constellation.

Currently, the SkySat constellation consists of 21 satellites. As more satellites are planned for launch in the future, we extended the high-priority constellation to include 50 satellites to explore potential future attack performance. The additional 29 high-priority satellites were selected from other companies’ EO constellations [48], prioritizing those with the most overlap in ground station sharing with Dove satellites. We also assessed the attack performance by varying the number of high-priority satellites, with the default setting as 50.

We utilized real-world orbit Two Line Elements (TLEs) information [41] of all the satellites to simulate the satellite constellation’s orbital dynamics. Twelve ground stations are distributed around the world, each equipped with 4 antennas, with a visibility threshold set at 5 degrees [4, 15]. The average downlink rate from satellite to ground station was set to 160Mbit/s, aligned with the real-world settings [5].

The onboard storage of a satellite is mainly limited by volume and weight, and is non-upgradable after launched to the space. We set the onboard storage to be 2000GB, which is aligned with the existing Dove satellite [4]. We used image as the type of data captured by the satellite; the data could be any other type such as hyperspectral, radar, etc. The default settings had an image size of 200MB, for 4 images as target data, an initial queue size of 500 images, and a data rate of 160Mbit/s. We varied parameters such as image size (ranging from 200MB to 500MB), data rate (ranging from 80Mbit/s to 320Mbit/s), high-priority satellites number (ranging from 21 to 50), and cost budget (ranging from 500 to 4000) to evaluate the impact of these factors on the attack performance. Gaussian noise was added to simulate real-world conditions where the attacker may not have precise knowledge of the image size that may vary due to the nature of the data collected by the satellite or the different types of tasks, or the data rate that fluctuates due to the weather or other factors. The default setting had a standard deviation that was times the true value, and we also varied this ratio from to . To simulate the scenario where the attacker lacks precise information regarding the initial queue size, we incorporated random noise of 10 images for the initial queue. For the data delay attack, we explored delay times ranging from 1 hour to 24 hours, with 24 hours as the default value. Each experiment was conducted with 10 different seeds to mitigate randomness.

VIII-B Evaluation Results

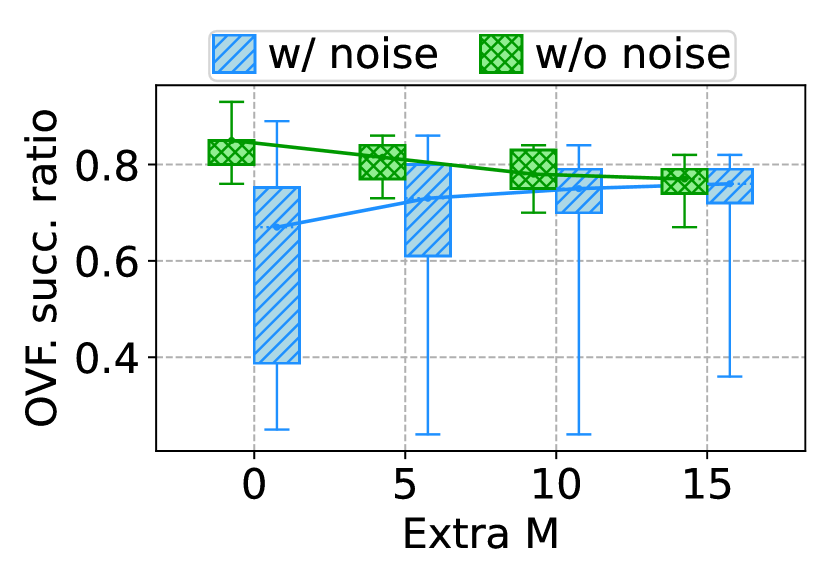

We used Delay succ. ratio and OVF. succ. ratio to denote the data delay attack success ratio and data overflow attack success ratio, which were calculated by the number of successful attacks divided by the total number of attacks attempted.

Vary image size. We evaluated the success ratio of the data delay attack and data overflow attack under different image sizes with and without noise, as illustrated in Fig. 9. The box in this and the following figures extended from the first quartile to the third quartile of the success ratio, with a line at the median [49]. The whiskers extended from the box to the maximum and minimum of the success ratio. We connected the median of each box to show the trend as the x-axis changes.

Overall, attacks with noise had a lower success ratio than those without, as the attack strategy was generated by the attacker for a noise-free environment. For the data delay attack, the success ratio increased as the image size increased. This was because larger images required more time to downlink, giving the attacker more opportunities to block the downlink slots of the target satellite. The slight decrease in success ratio observed at the image size of 300MB was attributed to the increased likelihood of data overflow occurrences at this particular image size. Consequently, the available attackable time slots were reduced, making it more challenging to identify an attack strategy capable of delaying the target data for 24 hours. The data overflow attack success ratio had a similar trend, with the success ratio increasing as the image size increased.

Vary data rate. We evaluated success ratio of the two attacks under different data rates, as shown in Fig. 10. For the data delay attack, the success ratio with noise decreased as the data rate increased. This was because a higher data rate allowed the target satellite to downlink images faster, reducing the attacker’s chances of blocking the downlink slots. For the data delay attack without noise, although the success ratio remained relatively stable at 0.8, its trend varied with changes in data rate. Specifically, it decreased from 80Mbps to 160Mbps, then increased from 160Mbps to 320Mbps. This pattern arose because at a data rate of 160Mbps, data overflow incidents were more likely to occur, leading to a reduction in available attackable windows and consequently a decrease in success ratio. However, as the data rate increased, a larger volume of data was transferred to the ground, making data overflow less likely. This resulted in an increase in available attackable time slots, leading to a higher success ratio. The data overflow attack with and without noise showed a similar trend.

We also analyzed the trend of data overflow success ratio with changes in image size and data rate, both with and without the need for attack (natural data overflow), as shown in Fig. 11. As shown in the left subfigure, as the image size increased, the overall data overflow success ratio increased, along with a significant increase in natural data overflow success ratio. This means that natural data overflow contributed a significant portion to the all data overflow success ratio. This was because as the image size increased and the downlink data rate remained unchanged, more data dropped directly due to natural data overflow, without the need for an attack. Conversely, in the right subfigure, with the increase in data rate, there was an increase in the amount of downlink data per unit time, leading to a decrease in data overflow instances that could occur without the need for an attack. As a result, the majority of data overflow successes required the attacker to generate a corresponding attack strategy.

Vary number of high-priority satellites. We evaluated success ratio of the two attacks with varying numbers of high-priority satellites, as shown in Fig. 12. The number of high-priority satellites significantly impacted the success ratio of both attacks. More high-priority satellites provided more attackable time slots, increasing the success ratio.

Vary cost budget. We evaluated success ratio of the two attacks under different cost budgets, as shown in Fig. 13. The results showed that for an attacker with a limited budget, a higher cost budget allowed the attacker to launch more high-priority tasks, thereby increasing the success ratio of the attack. The data overflow attack success ratio had a similar trend.

Vary target duration. We evaluated success ratio of the data delay attack under different target durations, as shown in Fig. 14. The results indicated that for different target delay times, if the attacker aimed to delay the target data for a longer duration, more attackable time slots were necessary to intercept. However, as the duration increased, more unattackable time slots emerged, potentially allowing the target data to transfer out. Consequently, the effective attackable time slots capable of causing continued delay to the target data decreased, leading to a lower success ratio.

Vary Gaussian noise ratio. We evaluated the impact of Gaussian noise on the success ratio of the two attacks by varying the standard deviation ratio from 0.1 to 0.4. The results showed that as the standard deviation ratio increased, the success ratio of the data delay attack and data overflow attack decreased. This was because the noise introduced uncertainty into the environment, making it more challenging for the attacker to identify the optimal attack strategy, thereby reducing the success ratio of the attack.

Vary extra attack target. As discussed in §VII, there are several ways to help the attacker improve the success ratio of the attack in the scenario where the attacker has imprecise knowledge of the initial queue size, image size in the queue and the data rate varies. We evaluated one of the strategies which is to attack extra images around the target data to increase the success ratio of the attack. As depicted in Fig. 16, without noise, both the success ratios of the data delay attack and data overflow attack decreased because the algorithms needed to generate attack sets targeting more images. This increased demand on the attack strategy led to a lower likelihood of finding suitable attack sets. With noise, as the number of extra attack targets increased, the success ratio of the data delay attack and data overflow attack increased. This was because the attacker generated attack sets that had redundant attack windows to defend against the noise, thereby increasing the success ratio of the attack.

IX Countermeasures

In this section, we discuss several potential countermeasures to the proposed data delay and overflow attacks.

Dynamic priority assignment. When scheduling high-priority tasks, consideration should be given to the potential impact on future low-priority tasks. Instead of consistently prioritizing high-priority satellites for data transmission, priority can be dynamically adjusted based on various factors, such as the waiting time of low-priority satellites.

Introducing unpredictable queue dynamics. Our attacks rely on the adversary’s ability to predict queue dynamics of the target satellite. Introducing unpredictable factors will make launching a successful attack much more difficult. Examples include data-specific defenses, such as transferring only the most important images, or assigning higher priority to different portions of the image at different time slots. Data-agnostic defenses might involve using random scheduling policies instead of FIFO (or other deterministic ones), random collaborative data collection across satellites, or random communication scheduling between low- and high-priority constellations.

Anomaly Detection. Our algorithms may generate specific (malicious) task scheduling patterns that can be detected by an anomaly detector , such as by continuously monitoring the average delay or data overflow rate of a satellite.

Hardware expansion. Increasing the onboard storage capacity, deploying more ground stations, enhancing the downlink bandwidth, deploying inter-satellite links, and utilizing spectrum sharing [50] can all enhance the system’s robustness.

X Conclusion

In this paper, we explore a new attack surface that exploits the competition for limited downlink resources among different types of EO satellites. We identify two distinct attacks unique to EO constellations: the data delay attack and the data overflow attack. In addition, we propose two algorithms aimed at conducting these attacks by finding the minimum-cost attack strategy for data-delay attacks and identifying feasible strategies for data overflow attacks. We conducted trace-driven simulations using real-world satellite image and orbit data to evaluate attack success ratios under realistic satellite communication settings, demonstrating the effectiveness of the proposed attacks. We hope this work will inspire future research on the new attack surface and the deployment of pro-active countermeasures by constellation operators.

Acknowledgment

We thank our shepherd Prof. Andrei Gurtov and the anonymous reviewers for their valuable comments. The research of Xiaojian Wang and Ruozhou Yu was sponsored in part by NSF grants 2045539 and 2433966. The research of Guoliang Xue was sponsored in part by the Army Research Laboratory and was accomplished under Cooperative Agreement Number W911NF-23-2-0225. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Laboratory or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein. This work utilized data made available through the NASA CSDA Program.

Proof of Lemma 1

-

Proof.

Given an attack strategy , let be an arbitrary slot in at which the queue is full under , i.e., . Further let be the last slot before satisfying the same condition as (when queue is full); if no such slot exists then let .

To prove Lemma 1, we show that adding any attackable time slot to attack strategy will result in the same queue evolution in both and as before adding , for any .

First, consider any slot . By definition, when , the full queue , where is the additional residual data amount due to transmission slot being attacked. Similarly, the subqueue before target data will become .

If , we have a full queue , and so . In this case, the downlink data amount decreases to due to the attack, and the dropped amount increases to due to additional queue overflow. Hence, the subqueue before target data also remains the same: . Since both and remains unchanged at , the queue evolution after is unaffected.

If , the remaining queue at time is increased by , that is, . By induction, we have for each time slot . At time , since , this leads to the same derivation as above where . Similarly, we have for each time slot due to the downlink amount being reduced by at time . At time , the dropped amount increased by due to queue overflow, and hence the subqueue still remains the same. Hence, the queue evolution after remains unaffected.

Summarizing the above, since the queue evolution of both the full queue and the subqueue before remains unchanged after , the expected evacuation time of the target data remains unchanged. By definition of , this means attacking any single slot before results in no attack strength. The conclusion extends to an arbitrary set of attack slots before by induction over the set, thus proving Lemma 1. ∎

Proof of Theorem 1

-

Proof.

We prove Theorem 1 by induction.

Let be the next transmissible time slot after time . Let be the number of transmissible time slots between the original (non-attacked) evacuation time and the delay target . Assume the data delay attack problem is feasible for a target delay , we define a minimal attack strategy (MAS) to be a feasible attack strategy for target delay , such that removing any slot from results in it no longer being feasible for . By definition, a MAS has the following properties:

-

1.

A MAS for must have size equal to ;

-

2.

A MAS for has exactly one more time slot than a MAS for ;

-

3.

A minimum-cost attack strategy for must be a MAS (but not vice versa).

The third property above is true because, if a minimum-cost attack strategy is not a MAS, then there exists at least one time slot in , such that removing it still results in a feasible attack strategy with less cost.

Based on the above, we prove the following statement by induction: in every iteration of Algorithm 1, it finds a minimum-cost attack strategy with target delay equal to the -th transmissible time slot after .

Base step: Before the first iteration (), the statement is true, since is a minimum-cost attack strategy for target delay equal to the original evacuation time .

Induction step: Let be the -th transmissible time slot after . Assume target delay is feasible, and our algorithm has found a minimum-cost attack strategy for at iteration . Further assume target delay is also feasible. We will prove that our algorithm finds a minimum-cost attack strategy for at iteration .

Our induction proof is divided into two parts: (1) Algorithm 1 finds a minimal (and thus feasible) attack strategy for at iteration . (2) The attack strategy has the minimum cost among all feasible strategies for .

Part (1): Feasibility and Minimality.

Let be an arbitrary MAS (which is feasible) for target delay .

We now describe a step-by-step process for transforming into an MAS for that has the exact shape of , i.e., the solution we found in iteration plus an extra element .

Each step replaces one element but not in with one , until when .

During the transformation, we prove that after each step:

(a) the attack strategy is still feasible;

(b) the attack strategy is still minimal (having size );

(c) the intersection between and increases by .

Consider , and let . Since until termination, such must exist. Two cases may arise: either results in the evacuation time of the target data until —resulting in an extra delay of the target data—or the evacuation remains the same at . Both cases are discussed below:

Case 1: adding results in additional delay. In this case, we find any , and remove from . Such must exist since . After this:

(a) Since before removing , the strategy delays target data until , then after removing , the expected evacuation time is still at least , proving feasibility of the new for target delay .

(b) The size of remains unchanged before adding versus after removing , and hence is still minimal.

(c) Replacing with results in one more element that and have in common.

Case 2: adding results in no additional delay. This means a buffer overflow has happened after , resulting in zero attack strength of according to Lemma 1. Let be the earliest time slot after that has a full queue before adding to . Assume we can find such that , then removing from will result in the same queue evolution after , following the same proof logic of Lemma 1. In this case, we have: (a) the resulting is still feasible since removing does not result in reduction of evacuation time; (b) is still minimal; (c) has one more common element with .

We now show that there must exist such a and . Assume such a does not exist. This means that even without any attack before , the queue is still full at . In this case, adding to any attack strategy—including —will result in zero attack strength by Lemma 1. This contradicts being minimal in the induction hypothesis. Therefore, there must exist at least one element that is in the attack strategy .

To summarize, in whichever case above, one can always find a , and replace it with a , which preserves both feasibility and minimality of . After up to steps, the attack strategy becomes a MAS in the shape of .

Remark: Note that the above transformation is valid not just between and , but also any pair of MASs. This fact will be used in Part (2) of this proof, as well as the next proof for Theorem 2 that is based on a similar idea.

Finally, to complete the proof of Part (1), as long as a feasible solution exists for target delay , Algorithm 1 can always find such a in Line 1. To show this, note that if exists before in the algorithm, then by Lemma 1 it will have zero attack strength. In this case, adding to leads to a non-minimal attack strategy, violating our proof above that is a MAS. Hence (which earlier proof shows to exist) must be in the range that the algorithm searches within, proving to be non-empty. By finding a slot with non-zero attack strength, the algorithm finds one such , leading to a MAS feasible to in iteration .

Part (2): Optimality. To prove optimality, we show during the induction step that, if iteration generates that is a minimum-cost MAS for target delay , then iteration generates a that is a minimum-cost MAS for target delay , by adding in iteration . Below, we drop the superscript when referring to a solution for target delay for simplicity.

Let be a minimum-cost MAS for . Define two sets of time slots: , and . Let be the cost of an arbitrary strategy . Proving the algorithm’s output is minimum-cost means showing that the cost of is at most the cost of the minimum-cost , in other words, .

Assume by contradiction . Consider two cases: if is a subset of (the iteration- solution), or not.

Case 1: . Following the same procedure in Part (1) of the proof, we can step-by-step replace all elements in with all elements in from . This results in a feasible solution to target delay with less cost than , contradicting that is minimum-cost in the induction hypothesis.

Case 2: where . In this case, the lower cost of may be due to either or . Based on the replacement procedure in Part (1), if we can find a time slot whose cost is lower than and who can replace without affecting feasibility, this time slot must be in the range during iteration , which contradicts the fact that our algorithm picks the minimum-cost attackable slot in .

Assume such a slot cannot be found in . It then means there is a subset , such that: (i) , and (ii) replacing all elements in with all elements in from results in a feasible solution for target delay with less cost. This again contradicts with being minimum-cost.

Summing up the above cases, by induction, the solution output by the algorithm in iteration- is a minimum-cost attack strategy for target delay . ∎

Proof of Theorem 2

- Proof.

Similar to the previous proof, we define two concepts: A minimal attack strategy (MAS) is a feasible attack strategy of the data overflow attack, removing any element from which results in infeasibility of the attack. An earliest attack strategy (EAS) is a feasible attack strategy that results in the earliest time slot at which the target data is dropped. Both types of strategy must exist if data overflow attack is feasible for the target data. Our proof specifically focuses on strategies that are both a MAS and an EAS: a minimal earliest attack strategy (MEAS).

We now show that all MEASs for dropping the target data have the same size. If the target data is dropped without any attack, then the only MEAS is an empty strategy . Assuming target data is not dropped when no attack happens, we have , meaning the attack is feasible due to the target data’s downlink time being delayed until at least . Any MEAS of overflow attack must be a MAS of the data delay attack that delays the target data until the next transmissible time slot—denoted by —on or after . To see this, note that if the evacuation time under a strategy is before , the target data would be downlinked and the attack fails. If the evacuation time is after , then because target data would not be at the queue head at time , it would not be dropped due to buffer overflow at , making such a strategy either infeasible or not an EAS. Then, by the same argument as in the last proof, all MEASs have the same size .

Given the above, a MEAS for the data overflow attack is always a MAS for the data delay attack for a fixed target delay (assuming, with a slight modification of definition, that the queue does not overflow between and and keeps the target data on-board instead). By the same induction argument as in Part (1) of the last proof, we can immediately prove that any MAS to such a data delay attack can be transformed to a (data delay) MAS that our algorithm can find, starting from an empty MAS for the no attack scenario. By a similar argument as in Part (2) of the last proof, we can also prove that our algorithm finds a MEAS of the data overflow attack.

References

- [1] G. Le Cozannet, M. Kervyn, S. Russo, C. Ifejika Speranza, P. Ferrier, M. Foumelis, T. Lopez, and H. Modaressi, “Space-based earth observations for disaster risk management,” Surveys in geophysics, vol. 41, pp. 1209–1235, 2020.

- [2] “Planet’s communication network,” accessed 2024-05-24. [Online]. Available: https://leoconn.github.io/slides/leoconn_2021_day1_kiruthika.pdf

- [3] K. Devaraj, R. Kingsbury, M. Ligon, J. Breu, V. Vittaldev, B. Klofas, P. Yeon, and K. Colton, “Dove high speed downlink system,” in Small Satellite Conference, 2017.

- [4] B. Tao, M. Masood, I. Gupta, and D. Vasisht, “Transmitting, fast and slow: Scheduling satellite traffic through space and time,” in ACM MobiCom, 2023, pp. 1–15.

- [5] “Planet - Flock imaging constellation,” accessed 2024-05-24. [Online]. Available: https://www.eoportal.org/satellite-missions/planet#spacecraft

- [6] “Intro to planet,” 2024, accessed 2024-05-24. [Online]. Available: https://university.planet.com/introduction-to-planet

- [7] “Assured tasking,” accessed 2024-08-13. [Online]. Available: https://university.planet.com/introduction-to-skysat/1570959/scorm/3mnblic710vtf

- [8] “Fast track delivery,” accessed 2024-09-07. [Online]. Available: https://developers.planet.com/docs/tasking/basics/

- [9] K. Colton, J. Breu, B. Klofas, S. Marler, C. Norgan, and M. Waldram, “Merging diverse architecture for multi-mission support,” in Small Satellite Conference, 2020.

- [10] “Satellite hack on eve of ukraine war was a coordinated, multi-pronged assault,” accessed 2024-05-24. [Online]. Available: https://cyberscoop.com/viasat-ka-sat-hack-black-hat/

- [11] C. A. Hofmann and A. Knopp, “Satellite downlink jamming propagation measurements at ku-band,” in IEEE MILCOM, 2018, pp. 853–858.

- [12] G. Taricco and N. Alagha, “On jamming detection methods for satellite internet of things networks,” International Journal of Satellite Communications and Networking, vol. 40, no. 3, pp. 177–190, 2022.

- [13] “Tasking overview,” accessed 2024-05-24. [Online]. Available: https://developers.planet.com/docs/tasking/

- [14] “Planet labs ground station network,” 2024, accessed 2024-05-24. [Online]. Available: http://mstl.atl.calpoly.edu/~workshop/archive/2016/Spring/Day%202/Session%204/1_BryanKlofas.pdf

- [15] “So you launched a satellite… now what?” 2024, accessed 2024-05-24. [Online]. Available: https://www.planet.com/pulse/so-you-launched-a-satellite-now-what/

- [16] C. Foster, H. Hallam, and J. Mason, “Orbit determination and differential-drag control of planet labs cubesat constellations,” arXiv preprint arXiv:1509.03270, 2015.

- [17] D. Vasisht, J. Shenoy, and R. Chandra, “L2d2: Low latency distributed downlink for leo satellites,” in ACM SIGCOMM, 2021, pp. 151–164.

- [18] B. Tao, O. Chabra, I. Janveja, I. Gupta, and D. Vasisht, “Known knowns and unknowns: Near-realtime earth observation via query bifurcation in serval,” in USENIX NSDI, 2024.

- [19] A. Herrmann and H. Schaub, “Autonomous onboard planning for earth-orbiting spacecraft,” in IEEE AERO, 2022, pp. 1–9.

- [20] G. Rabideau, S. Chien, F. Nespoli, and M. Costa, “Managing spacecraft memory buffers with overlapping store and dump operations,” in AAAI ICAPS SPARK, 2016, pp. 69–75.

- [21] R. Knight, C. Chouinard, G. Jones, and D. Tran, “Leveraging multiple artificial intelligence techniques to improve the responsiveness in operations planning: Aspen for orbital express,” Ai Magazine, vol. 35, no. 4, pp. 26–36, 2014.

- [22] G. Rabideau, S. Chien, M. Galer, F. Nespoli, and M. Costa, “Managing spacecraft memory buffers with concurrent data collection and downlink,” Journal of Aerospace Information Systems, vol. 14, no. 12, pp. 637–651, 2017.

- [23] W.-C. Lin, D.-Y. Liao, C.-Y. Liu, and Y.-Y. Lee, “Daily imaging scheduling of an earth observation satellite,” IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans, vol. 35, no. 2, pp. 213–223, 2005.

- [24] M. Lyu, Q. Wu, Z. Lai, H. Li, Y. Li, and J. Liu, “Falcon: Towards fast and scalable data delivery for emerging earth observation constellations,” in IEEE INFOCOM, 2023, pp. 1–10.

- [25] A. Kesselman, Z. Lotker, Y. Mansour, B. Patt-Shamir, B. Schieber, and M. Sviridenko, “Buffer overflow management in qos switches,” in ACM STOC, 2001, pp. 520–529.

- [26] Y. Xu, Y. Zhou, and D.-M. Chiu, “Analytical qoe models for bit-rate switching in dynamic adaptive streaming systems,” IEEE Transactions on Mobile Computing, vol. 13, no. 12, pp. 2734–2748, 2014.

- [27] K. Gao, C. Sun, S. Wang, D. Li, Y. Zhou, H. H. Liu, L. Zhu, and M. Zhang, “Buffer-based end-to-end request event monitoring in the cloud,” in USENIX NSDI, 2022, pp. 829–843.

- [28] J. Song, J. Liu, P. Gao, G. Liu, and H. J. Chao, “Inversion impact of approximate pifo to start-time fair queueing,” Computer Networks, vol. 240, p. 110164, 2024.

- [29] H. Tan, C. Zhang, C. Xu, Y. Li, Z. Han, and X.-Y. Li, “Regularization-based coflow scheduling in optical circuit switches,” IEEE/ACM Transactions on Networking, vol. 29, no. 3, pp. 1280–1293, 2021.

- [30] H. Tan, S. H.-C. Jiang, Y. Li, X.-Y. Li, C. Zhang, Z. Han, and F. C. M. Lau, “Joint online coflow routing and scheduling in data center networks,” IEEE/ACM Transactions on Networking, vol. 27, no. 5, pp. 1771–1786, 2019.

- [31] S. Huang, M. Wang, and Y. Cui, “Traffic-aware buffer management in shared memory switches,” IEEE/ACM Transactions on Networking, vol. 30, no. 6, pp. 2559–2573, 2022.

- [32] P. Gao, A. Dalleggio, Y. Xu, and H. J. Chao, “Gearbox: A hierarchical packet scheduler for approximate weighted fair queuing,” in USENIX NSDI, 2022, pp. 551–565.

- [33] W. M. Kempa and R. Marjasz, “Distribution of the time to data overflow in the M/G/1/N-type queueing model with batch arrivals and multiple vacation policy,” Journal of the Operational Research Society, vol. 71, no. 3, pp. 447–455, 2020.

- [34] W. M. Kempa, “On time-to-buffer overflow distribution in a single-machine discrete-time system with finite capacity,” Mathematical Modelling and Analysis, vol. 25, no. 2, pp. 289–302, 2020.

- [35] S. Zhang, H. Shan, Q. Wang, J. Liu, Q. Yan, and J. Wei, “Tail amplification in n-tier systems: a study of transient cross-resource contention attacks,” in IEEE ICDCS, 2019, pp. 1527–1538.

- [36] C. Hunger, M. Kazdagli, A. Rawat, A. Dimakis, S. Vishwanath, and M. Tiwari, “Understanding contention-based channels and using them for defense,” in IEEE HPCA, 2015, pp. 639–650.

- [37] H. W. Kuhn, “The hungarian method for the assignment problem,” Naval research logistics quarterly, vol. 2, no. 1-2, pp. 83–97, 1955.

- [38] “Ground station services,” accessed 2024-08-13. [Online]. Available: https://satsearch.co/ground-station-services

- [39] “Aws ground station,” accessed 2024-08-13. [Online]. Available: https://aws.amazon.com/ground-station/

- [40] “High-resolution imagery with planet satellite tasking,” accessed 2024-08-13. [Online]. Available: https://www.planet.com/products/high-resolution-satellite-imagery/

- [41] “Space-track.org,” accessed 2024-05-24. [Online]. Available: https://www.space-track.org/

- [42] Y. Chen and G. Casale, “Deep learning models for automated identification of scheduling policies,” in IEEE MASCOTS, 2021, pp. 1–8.

- [43] T. Gehr, S. Misailovic, P. Tsankov, L. Vanbever, P. Wiesmann, and M. Vechev, “Bayonet: probabilistic inference for networks,” ACM SIGPLAN Notices, vol. 53, no. 4, pp. 586–602, 2018.

- [44] “Satnogs, open source global network of satellite ground-stations,” accessed 2024-05-24. [Online]. Available: https://satnogs.org/

- [45] X. Tan, H. Li, X. Xie, L. Guo, N. Ansari, X. Huang, L. Wang, Z. Xu, and Y. Liu, “Reinforcement learning based online request scheduling framework for workload-adaptive edge deep learning inference,” IEEE Transactions on Mobile Computing, 2024.

- [46] S. C. Chan, K. Chan, K. Liu, and J. Y. Lee, “On queue length and link buffer size estimation in 3g/4g mobile data networks,” IEEE Transactions on Mobile Computing, vol. 13, no. 6, pp. 1298–1311, 2013.

- [47] “Planet APIs,” accessed 2024-05-24. [Online]. Available: https://developers.planet.com/docs/apis/

- [48] “Earth resources,” accessed 2024-05-24. [Online]. Available: https://celestrak.org/NORAD/elements/table.php?GROUP=resource&FORMAT=tle

- [49] “matplotlib.pyplot.boxplot,” accessed 2024-05-24. [Online]. Available: https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.boxplot.html

- [50] W. Li, L. Jia, Q. Chen, and Y. Chen, “A game theory-based distributed downlink spectrum sharing method in large-scale hybrid satellite constellations,” IEEE Transactions on Communications, 2024.