Inferring Topology of Networked Dynamical Systems by Active Excitations

Abstract

Topology inference for networked dynamical systems (NDSs) has received considerable attention in recent years. Most pioneering works infer the topology from abundant observations of NDSs, so as to approximate the real one asymptotically. Leveraging the characteristic that NDSs react to various disturbances and that a disturbance’s influence consistently spreads, this paper focuses on inferring the topology by a few active excitations. The key challenge is to distinguish the influences of system noises and excitations from the exhibited state deviations, where the influences decay with time and the excitation cannot be arbitrarily large. In practice, we propose a one-shot excitation based inference method to infer the h-hop neighbors of a node. The excitation conditions for accurate one-hop neighbor inference are first derived with probability guarantees. Then, we extend the results to h-hop neighbor inference and multiple excitations cases, providing the explicit relationships between the inference accuracy and excitation magnitude. The excitation based inference method is not only suitable for scenarios where abundant observations are unavailable, but can also be leveraged as an auxiliary means to improve the accuracy of existing methods. Simulations are conducted to verify the analytical results.

I Introduction

Networked dynamical systems (NDSs) have been extensively used in numerous applications in the last decades, e.g., electric power systems [1], transportation systems [2], and multi-robot systems [3]. The topology of NDSs is fundamental to characterizing interactions between individual nodes and determines the system convergence. Inferring the topology from observations provides insightful interpretability about NDSs and associated task implementations, and has become a hotspot research topic.

In the literature, plenty of works have been developed to address the topology inference problem from different aspects [4]. For instance, in terms of static topology, [5, 6, 7, 8] focus on inferring the causality/dependency relationships between nodes, while [9, 10, 11] reconstruct the topology by finding the most suitable eigenvalues and eigenvectors from the sample covariance matrix. Considering the topology is time-varying by rules, available methods include graphical Lasso-based methods [12] and SEM models [13], which take the varying topology as a sequence of static topologies and infer them, respectively. In addition to the dynamic topology inference, many kernel-based methods are proposed to deal with cases with nonlinear system models [14, 15].

Despite the tremendous advances of the above works, almost all of the approaches are based on a large scale of observations over the systems. In other words, the feasibility lies in digging up the regularity of the dynamical evolution process from the observation sequences, which corresponds to the common intuition that more data make the interpretability better [16]. Unfortunately, when the observations over the NDS are very limited, the aforementioned methods cannot work well. For example, for a linear time-invariant NDS of nodes, at least groups of consecutive global observations are required to obtain a unique least square estimate of the topology matrix. When more observations are not allowable due to some practical limitations, directly inferring the topology from observations will be extremely difficult.

Inspired by the phenomenon that a thrown stone into water will cause waves, we are able to proactively inject inputs into the systems to excite corresponding reaction behaviors, i.e., the injected inputs on one node will spread to other neighbor nodes. Related examples include using Traceroute to probe the routing topology of the Internet [17], or utilizing inverters to probe the electric distribution network [18]. Therefore, it is possible to reveal the underlying topology of NDSs by investigating the relationships between the excitations and reactions [19, 20, 21]. This idea has motivated the study of this paper, where we aim to leverage a few active excitations to do the inference tasks. It is worth noting that if the excitations are allowed to be abundant, then the problem falls into the realm of typical system identification [22, 23], which is not the focus of this paper.

Few excitations indicate small inference costs but incur new challenges. On the one hand, the influence of the excitation is closely coupled with that of stochastic noises, making it hard to directly distinguish their difference. On the other hand, the spreading effect of the excitation will decay with time and the excitation cannot be arbitrarily large, limiting the scope and accuracy of the inferred topology. To address these issues, we introduce the probability measurement to infer the topology from a local node, and demonstrate how to determine whether the information flow between two nodes exists. The main contributions are summarized as follows.

- We investigate the possibility of inferring the topology of NDSs by a few active excitations, taking both the process and measurement noises into account. Specifically, we utilize hypothesis testing to establish criteria for determining the connections between nodes from the exhibited state deviations after excitations.

- Considering the spreading effects of excitations in NDSs, we first propose a one-shot excitation based method to infer the one-hop neighbors of a single node. Then, we prove the critical excitation condition given a tolerable misjudgment probability, providing reliable excitation design guidance.

- Based on the one-hop inference procedures, we extend the theoretical analysis to multi-hop neighbor inference by one-shot excitation and to multiple excitation cases, respectively. The relationship between inference accuracy and excitation magnitude is derived with probability guarantees. Simulations verify our theoretical results.

The proposed inference method by a few active excitations applies to situations where the observations about NDSs are not sufficient. It can also be leveraged as an auxiliary measure to enhance the accuracy of existing methods by large scales of observations, by treating the inferred results as the constraints in counterpart problem modeling. The remainder of this paper is organized as follows. In Section II, some preliminaries of NDSs and problem modeling are presented. The inference method and performance analysis are provided in Section III. Simulation results are shown in Section IV. Finally, Section V concludes the paper.

II Preliminaries and Problem Formulation

II-A Graph Basics and Notations

Let be a directed graph that models the networked system, where is the finite set of nodes and is the set of interaction edges. An edge indicates that will use information from . The adjacency matrix of is defined such that if exists, and otherwise. Denote as the in-neighbor set of , and as its in-degree. Throughout this paper, let and be all-zero and all-one matrices in compatible dimensions, and be the smallest and largest eigenvalues of the matrix , respectively.

II-B System Model

Consider the following networked dynamical model

| (1) | ||||

where and represent the system state and the corresponding observation at time , is the interaction topology matrix related to the adjacency matrix , and and represent the process and observation noises, satisfying the following Gauss-Markov assumption.

Assumption 1.

and are i.i.d. Gaussian noises, subject to and , respectively. They are also independent of and .

Next, we characterize the stability of (1) by defining

| (2) | ||||

Then, is called asymptotically stable if , or marginally stable if . Popular choices for its setup include the Laplacian and Metropolis rules [24], which are given by

| (3) | ||||

| (4) |

where the auxiliary parameter satisfies . Note that if is specified by either one of the two rules, then . A typical matrix in can be directly obtained via multiplying (3) and (4) by a factor , which is common in adaptive diffusion networks [25]. Based on (1), the observation can be recursively expanded as

| (5) |

Considering different stabilities, it holds that

| (6) |

where . Therefore, if , is strictly bounded in the expectation sense.
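As a concrete illustration of the setup in this subsection, the following sketch builds interaction matrices with the Laplacian and Metropolis rules and simulates a noisy linear NDS. It is only a sketch under stated assumptions: the graph is undirected with a 0/1 adjacency matrix, the Laplacian rule is taken as W = I - gamma*L with gamma in (0, 1/d_max], the Metropolis weights use one common form 1/max(d_i, d_j), and the model is assumed to be x(t+1) = W x(t) + process noise with y(t) = x(t) + observation noise. The function names are hypothetical and the exact forms of (1), (3) and (4) may differ.

```python
import random


def laplacian_rule(adj, gamma):
    """Assumed Laplacian rule: W = I - gamma * L for an undirected 0/1 adjacency matrix."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    if not 0 < gamma <= 1 / max(deg):
        raise ValueError("gamma must lie in (0, 1/max_degree] to keep W non-negative")
    W = [[gamma * adj[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        W[i][i] = 1 - gamma * deg[i]
    return W


def metropolis_rule(adj):
    """One common Metropolis form: w_ij = 1/max(d_i, d_j) for neighbors, rest on the diagonal."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and adj[i][j]:
                W[i][j] = 1 / max(deg[i], deg[j])
        W[i][i] = 1 - sum(W[i])  # diagonal is still zero here, so this closes the row sum to 1
    return W


def simulate(W, x0, steps, sigma_process, sigma_obs, rng):
    """Return the observations y(0..steps-1) of the assumed linear noisy model."""
    n = len(W)
    x = list(x0)
    observations = []
    for _ in range(steps):
        observations.append([xi + rng.gauss(0.0, sigma_obs) for xi in x])
        x = [sum(W[i][j] * x[j] for j in range(n)) + rng.gauss(0.0, sigma_process)
             for i in range(n)]
    return observations


if __name__ == "__main__":
    path_graph = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
    W = metropolis_rule(path_graph)
    ys = simulate(W, [1.0, 2.0, 3.0, 4.0], 20, 0.05, 0.05, random.Random(0))
    print(ys[-1])
```

Both rules yield non-negative, row-stochastic matrices here, which corresponds to the marginally stable case; scaling by a factor in (0, 1), as mentioned above, would give an asymptotically stable matrix.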

II-C Inference Modeling and Problem of Interest

Since the information flow between nodes is specified by the topology of NDSs, we first define the h-hop neighbor of a single node.

Definition 1 (h-hop out-neighbor).

Node is an h-hop out-neighbor of node if the minimal edge number of an acyclic path from to is , satisfying

| (7) |

where node and . All the h-hop out-neighbors are represented by the set .

Note that when the topology is undirected, there is no need to differentiate the in/out-neighbors. If , node is also called the h-hop in-neighbor of node . Unless otherwise specified, we mainly focus on the h-hop out-neighbors of a node in the following. To present an explicit expression for , we first define as the set of all nodes that can be reached from node within hops. Then, is recursively formulated as

| (8) |

When , . The following assumption is made throughout this paper.
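The hop-based neighbor sets of Definition 1 can be illustrated with a breadth-first search. The sketch below assumes the graph is given as a dictionary `out_edges` mapping each node to the nodes that directly receive its information (a hypothetical representation, not the paper's notation). The h-hop set is then the set of nodes whose shortest-path distance from the source is exactly h, which equals the "within h hops" set minus the "within h-1 hops" set.

```python
from collections import deque


def hop_distances(out_edges, source):
    """Shortest hop count from source to every reachable node (breadth-first search)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in out_edges.get(u, ()):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def within_h_hops(out_edges, source, h):
    """Nodes (excluding the source) reachable from source in at most h hops."""
    return {v for v, d in hop_distances(out_edges, source).items() if 1 <= d <= h}


def h_hop_out_neighbors(out_edges, source, h):
    """Nodes whose minimal path length from source is exactly h."""
    return within_h_hops(out_edges, source, h) - within_h_hops(out_edges, source, h - 1)


if __name__ == "__main__":
    edges = {0: [1, 2], 1: [3], 2: [3], 3: [4]}
    for h in (1, 2, 3):
        print(h, sorted(h_hop_out_neighbors(edges, 0, h)))
```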

Assumption 2.

The topology matrix , and the elements of are all non-negative. For all , there exists a lower bound such that .

Finally, the problem of interest is formulated as follows. Consider that there are insufficient observations of the NDS model (1) to support existing estimation or regression methods of inferring topology, e.g., the causality based estimator in [7]. Leveraging the characteristic that an NDS is easily subjected to various disturbances and exhibits state deviation, we aim to reduce the inference dependence on observation scales, and propose an active excitation based method to infer the topology from limited new observations. Mathematically, let be the excitation input on node at time , and be the observation of at time . Then, the goal of this paper is to find from the limited observations .

The above problem is very challenging, as the stochastic process and measurement noises will also affect and even accumulate. We will address these issues from the following aspects.

- To make the excitation’s impact distinguishable, we resort to the tool of hypothesis testing to derive the conditions on the excitation magnitude that guarantee an arbitrary misjudgement probability of one-hop neighbor inference.

- To overcome the decaying effects of excitations, we establish the probabilistic relationship between the one-shot excitation magnitude and the accuracy of h-hop neighbor inference.

- We further extend the analysis to multiple excitations cases and illustrate how to use excitations to improve the accuracy of existing topology inference methods.

III Excitation-based Inference Method

In this section, we first analyze the reaction behavior of an NDS under excitation inputs. Then, we focus on how to infer the one-hop neighbors by one-shot excitation and characterize the inference accuracy in probability. Finally, we discuss how to infer the h-hop neighbors and the multiple excitations cases.

III-A Observation Modeling Under Excitation

Since only are directly available, for every two adjacent observations, it follows that

| (9) |

where , satisfying . Besides, is independent of all and . We point out that (9) only represents the quantitative relationship between adjacent observations, not a causal dynamical process.

Similar to (9), the observation at time can be recursively written as

| (10) |

where is the -step translation matrix. For ease of notation, let . Then, the deviation between and is represented by

| (11) |

where and is given by

| (12) |

which is obtained from the mutual independence of the process and observation noises.

Note that under Assumption 2, it holds that and . Leveraging the two properties, one can deduce that

| (13) |

where the deviation bound is given by

| (14) |

It is worth noting that will fluctuate around zero as increases in either case of and . For simplicity and without loss of generality, suppose node is injected with a positive excitation input at time . Then, the observation deviation is given by

| (15) |

Note that the term in (15) represents the influence of the excitation input over after steps. Hereafter, we will drop the subscript in the variables if it does not cause confusion.

Remark 1.

The excitation input cannot be arbitrarily large due to the internal constraints in NDSs; otherwise one could easily infer the connections by an extremely large excitation input, which would make the inference trivial. Therefore, it is necessary to investigate the relationships between the inference accuracy and excitation magnitude, providing excitation guidance to obtain accurate inference results with probability guarantees.

III-B One-hop Neighbor Inference

After node is injected with excitation input , the one-step observation deviation of node is given by

| (16) |

For legibility, we temporarily assume and take its influence into consideration after the analysis. Since is associated with the stochastic process and measurement noises, finding can be modeled as a typical binary hypothesis test. The null and alternative hypotheses are respectively defined as

| (17) |

Denote () as the probability that () holds given the observation . Then, we have the following decision criterion

| (18) |

which is also called the maximum posterior probability criterion. However, it is possible that (18) is misjudged in the test, for example, is true but is decided (Type I Error) or is true but is decided (Type II Error). Accordingly, let be the false alarm probability and be the missed detection probability, respectively. Therefore, the overall misjudgement probability is given by

| (19) |

Suppose the inference center has no prior information about and , i.e., . Under hypothesis testing (18), the following result presents the probabilistic relationship between the inference accuracy and the injected excitation magnitude.

Theorem 1 (Critical excitation for one-hop neighbors).

To ensure the misjudgement probability within a threshold , the excitation should satisfy

| (20) |

where the Gaussian error function and is the inverse mapping of .

Proof.

The proof consists of two steps. First, we prove the decision threshold is given by . Then, we demonstrate the critical excitation magnitude under the .

Without loss of generality, we begin with the case where the excitation input . Since is a continuous random variable, the likelihood ratio in the test is given by

| (21) |

where is the probability density function of . Due to the prior probabilities , the decision threshold satisfies

| (22) |

Since , substituting into (22), it yields that

| (23) |

It follows from (23) that , leading to

| (24) |

Next, by the definition of , one has

| (25) |

Substituting into (25) yields

| (26) |

Note that , thus it yields that

| (27) |

Substituting and into (27), we obtain

| (28) |

The result is analogous when due to the symmetry of the Gaussian distribution. By the monotonically increasing property of , to guarantee , the excitation input must satisfy . The proof is completed. ∎

Theorem 1 gives the lower magnitude bound of the excitation input that guarantees the specified misjudgment probability for a single excitation. Given an excitation input satisfying (20), one can, with probability at least , accurately discriminate whether . Note that the interaction weight is not known a priori in reality. Thus the theoretical decision threshold, , is unavailable. However, we can enable the hypothesis test by specifying the least interaction weight that one wishes to discriminate between two nodes.
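To make the trade-off between excitation magnitude and misjudgment probability concrete, the sketch below works through the textbook Gaussian mean-shift test. It assumes, purely for illustration, that the one-step deviation is Gaussian with standard deviation `sigma`, with mean zero when there is no edge and mean `w * e` when an edge of weight `w` exists, and that both hypotheses are equally likely. Under these assumptions the maximum posterior threshold is `w * e / 2` and the misjudgment probability is `1 - Phi(w * e / (2 * sigma))`. The names `w`, `e`, `sigma` and `delta` are hypothetical stand-ins, and the resulting expression is not claimed to coincide with (20).

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution, mean 0 and std 1


def misjudgment_probability(w, e, sigma):
    """Error probability of the midpoint-threshold test under the stated Gaussian assumptions."""
    return 1.0 - STD_NORMAL.cdf(abs(w * e) / (2.0 * sigma))


def critical_excitation(delta, w, sigma):
    """Smallest excitation magnitude keeping the misjudgment probability at or below delta."""
    if not 0.0 < delta < 0.5:
        raise ValueError("delta must lie in (0, 0.5)")
    return 2.0 * sigma * STD_NORMAL.inv_cdf(1.0 - delta) / w


def decide_edge(deviation, w_min, e):
    """Declare an edge when the deviation exceeds half of the weakest expected response."""
    return deviation > w_min * e / 2.0


if __name__ == "__main__":
    e_star = critical_excitation(delta=0.05, w=0.2, sigma=0.1)
    print(e_star, misjudgment_probability(0.2, e_star, 0.1))
```

The required magnitude grows as the tolerated error `delta` shrinks, as the noise level `sigma` grows, or as the weight `w` to be detected shrinks, which matches the qualitative reading of Theorem 1.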

In practice, since , we have and

| (29) |

Specifically, if is row-stochastic, then the upper bound can be further reduced to . Next, suppose that one aims to judge whether such that , where is the weight lower bound. Given the desired error probability bound and the excitation input such that , then with probability at least one can discriminate whether by

| (30) |

where the parameters in (30) are all computable or known. Applying (30) to all other nodes , one can obtain an estimated set of . Note that although the observation deviation will affect the performance of excitation based topology inference, its influence is strictly bounded under Assumption 2. The whole procedure is summarized in Algorithm 1, where we use the lower bound that is sufficient to guarantee the accuracy probability.

A direct result from Theorem 1 is , which corresponds to the common intuition. As long as the excitation input is large enough, the one-hop neighbors of can always be inferred. In this situation, it is also very likely that the two-hop (or even farther) out-neighbors of the excited node can be identified by just a single excitation.

III-C Multi-hop Neighbor Inference

In this part, we will demonstrate how to identify multi-hop out-neighbors of a node by a single excitation. Similar to the hypothesis (17), we first define the following hypothesis that tests whether , i.e.,

| (31) |

Note that (31) is a test using the observation deviation to judge whether node is an out-neighbor of node within steps. Although it cannot infer the -hop neighbor directly, valuable information can still be extracted for the final inference. To begin with, we present the following result.

Lemma 1 (Critical excitation for neighbors within hops).

Under hypothesis test (31), to ensure the misjudgement probability for all the neighbors within h hops is lower than , the excitation should satisfy

| (32) |

Proof.

Directly focusing on the -step node response after the excitation input is injected on , becomes the equivalent topology that corresponds to the -step process. Based on Theorem 1, when ensures the misjudgement probability is no more than , which completes the proof. ∎

Note that Lemma 1 only illustrates how to reduce the misjudgement probability of , and does not provide information about whether . A key insight is that if is decided not in but in , then it is very likely that is true. Starting from this point, we utilize a single-time excitation input and do -rounds tests to achieve the inference goal. Two auxiliary functions are defined as

| (33) | |||

| (34) |

where . Based on and , the inference probability of multi-hop out-neighbors is presented as follows.

Theorem 2 (Lower probability bound of neighbor inference).

Given the maximum false alarm probability of hypothesis test (31), if the single-time excitation input , then we have

| (35) |

where and are given by

| (36) |

Proof.

The proof consists of three steps. Denote the false alarm probability by and the missed detection probability by . We first prove the critical excitation magnitude for identifying the neighbors within -hops. Then, we find the lower and upper bounds of and .

Based on the famous Neyman-Pearson rule, with a specified , one has

| (37) |

It follows from (37) that

| (38) |

Due to the prior probabilities and based on Lemma 1, also holds at -step response. Substituting it into (38), it yields that

| (39) |

Next, note that decreases as increases. If the excitation input is designed such that

| (40) |

then one infers that

| (41) |

Meanwhile, recall the detection probability is calculated by

| (42) |

Since increases as increases, one has

| (43) |

Finally, utilizing the Law of Total Probability, the probability that is decided as a member of is calculated by

| . | (44) |

Substituting and into (44) yields

| (45) |

The proof is completed. ∎

Theorem 2 provides the lower probability bounds for given the maximum false alarm probability of the test (31). Note that the test (31) is implemented multiple rounds to infer the neighbor within -hop, , respectively. Therefore, a notable characteristic of the bounds by (35) is that they can be calculated recursively with just one-shot excitation input. The higher the hop number is, the lower the probability bound is. The practical application of this test is similar to (30) and omitted here.
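The decay of a one-shot excitation over several hops can be illustrated numerically. The sketch below assumes the linear model x(t+1) = W x(t), so that an excitation `e` injected on node `source` contributes `[W^h][i][source] * e` to node `i` after `h` steps, in line with the equivalent h-step topology used in the proof of Lemma 1. It ignores noise entirely, and the helper names and the fixed `threshold` are hypothetical. Labeling a node by the first step at which its response exceeds the threshold mimics the idea of deciding that a node is within h hops but not within h-1 hops.

```python
def mat_mul(A, B):
    """Plain-Python product of two matrices given as lists of rows."""
    inner, cols = len(B), len(B[0])
    return [[sum(row[k] * B[k][j] for k in range(inner)) for j in range(cols)] for row in A]


def mat_pow(W, h):
    """h-th power of a square matrix (h >= 0) by repeated multiplication."""
    n = len(W)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(h):
        result = mat_mul(result, W)
    return result


def h_step_influence(W, source, e, h):
    """Noise-free deviation of every node h steps after exciting `source` with input e."""
    Wh = mat_pow(W, h)
    return [Wh[i][source] * e for i in range(len(W))]


def first_response_step(W, source, e, max_h, threshold):
    """Map each node to the first step at which its noise-free response exceeds threshold."""
    first = {}
    for h in range(1, max_h + 1):
        for i, value in enumerate(h_step_influence(W, source, e, h)):
            if i != source and i not in first and value > threshold:
                first[i] = h
    return first


if __name__ == "__main__":
    # Directed chain 0 -> 1 -> 2 with self-loops; rows sum to one.
    W = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.0, 0.5, 0.5]]
    print(h_step_influence(W, 0, 4.0, 1))
    print(h_step_influence(W, 0, 4.0, 2))
    print(first_response_step(W, 0, 4.0, 3, 0.5))
```

In this toy chain the two-hop node only responds at the second step and with a smaller magnitude than the one-hop node did at the first step, so with noise present a larger excitation is needed for the same accuracy at higher hop numbers. A weakly connected one-hop neighbor may also cross a fixed threshold late, which is one reason the paper works with probability bounds rather than a hard rule like this one.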

III-D Extensions and Discussions

Theorems 1 and 2 illustrate the conditions and performances of using just a one-time excitation. However, there are also situations where a large excitation input is not allowed in the network dynamics, making the methods not directly applicable. To overcome this deficiency, multi-excitation is a promising alternative to achieve the inference goal. In this part, we will briefly show how to address the issue.

Suppose node is excited times with the same excitation input ; the inference center then obtains the average observation deviation over the rounds by .

Corollary 1 (Upper bound of the misjudgement probability under multiple excitations).

Given excitation input and rounds of excitation, the misjudgement probability satisfies

| (46) |

where .

Proof.

Based on the independent identically distributed characteristic of , is subject to . Then, the misjudgement probability is calculated by

| (47) |

which completes the proof. ∎

From Corollary 1, we have that the variance and will decrease as grows. Therefore, it follows that

| (48) |

Corollary 1 illustrates that even when the magnitude of the excitation input is constrained, the misjudgment probability can be significantly reduced by increasing the number of excitations. Since is not known a priori, we can relax the decision threshold as in (30). Given the maximum available excitation input and specified weight threshold , one can, with probability at least , discriminate whether by the following multiple excitation testing

| (49) |

where . A minor drawback of this method is that if the weight between two nodes is small and the number of excitations is also limited, an existing edge may be regarded as not existing.

Finally, we illustrate how to use the excitation method to improve the performance of existing inference methods. Suppose the observer has gained the observations from to moments. Traditionally, the inference problem can be formulated as solving the ordinary least squares problem
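The benefit of repeating a bounded excitation can be sketched with the same textbook Gaussian model as before. The assumptions are illustrative only: each round's deviation is an independent Gaussian sample with standard deviation `sigma`, with mean `w * e` if the edge exists and zero otherwise, both cases being equally likely. Averaging `m` rounds then shrinks the standard deviation to `sigma / sqrt(m)`. The names are hypothetical, and the expressions are not claimed to match (46) or (49) exactly.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def misjudgment_probability(w, e, sigma, m=1):
    """Error probability of the midpoint test on the average of m independent deviations."""
    return 1.0 - STD_NORMAL.cdf(abs(w * e) * math.sqrt(m) / (2.0 * sigma))


def required_excitations(delta, w, e_max, sigma):
    """Smallest number of rounds m with misjudgment probability at or below delta."""
    z = STD_NORMAL.inv_cdf(1.0 - delta)
    return max(1, math.ceil((2.0 * sigma * z / (w * e_max)) ** 2))


def empirical_error(w, e, sigma, m, trials, rng):
    """Monte Carlo estimate of the error rate for a positive excitation e."""
    threshold = w * e / 2.0
    errors = 0
    for _ in range(trials):
        edge = rng.random() < 0.5            # equally likely hypotheses (assumption)
        mean = w * e if edge else 0.0
        average = sum(rng.gauss(mean, sigma) for _ in range(m)) / m
        if (average > threshold) != edge:
            errors += 1
    return errors / trials


if __name__ == "__main__":
    m = required_excitations(delta=0.01, w=0.1, e_max=1.0, sigma=0.2)
    print(m, misjudgment_probability(0.1, 1.0, 0.2, m))
    print(empirical_error(0.2, 1.0, 0.2, 4, 20000, random.Random(1)))
```

The required number of rounds scales with the square of `sigma / (w * e_max)`, so halving the admissible excitation magnitude roughly quadruples the number of excitations needed for the same error level under these assumptions.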

| (50) |

By the excitation-based method, we can inject the excitation input on node at moment . Based on the observation , the results of the excitation based inference method are utilized to solve the following constrained least squares problem

| (51a) | ||||

| s.t. | (51b) | |||

| (51c) | ||||

By solving problem (51), the final inferred global topology has smaller errors compared with that of (50).

Remark 2.

The key insight behind improving the inference accuracy of (50) is that the topology is estimated independently in a row-by-row manner (i.e., solving ). Since the connections between and constitute a column of , the explicit constraints (51b) and (51c) for reduce the uncertainty of all other elements in the -th row of , thus improving the global inference accuracy.

IV Numerical Simulations

In this section, we present numerical simulations to demonstrate the performance of the analytical results. First, we display the basic setup. Then, we conduct groups of experiments under different conditions, including the system stability and noise variance. Detailed analysis is also provided to demonstrate the performance of the proposed method.

The most critical components are the adjacency matrix and the interaction matrix . For the setting of the interaction matrix , we randomly generate a directed topology structure with , and the weight of is designed by the Laplacian rule. To save space, we mainly present the results of the case (the results of the case are similar). For generality, the initial states of all agents are randomly selected from the interval , and the variances of the process and observation noises satisfy and .

Now, we move on to verify the performance of the excitation-based method, as shown in Fig. 1. First, we excite a target node and wish to find its one-hop out-neighbor subject to . Given the lower probability bound , the critical excitation input is calculated by . We use the input to conduct the hypothesis test times and compute the ratio of positive results. As one expects, for the same one-hop neighbor connection to be inferred, a larger excitation input ensures higher accuracy of the decision results, as shown in Fig. 1(a). Next, the multi-hop neighbor inference results are provided in Fig. 1(b). Given the maximum false alarm probability and the same excitation input, the accuracy of multi-hop neighbor inference decreases as the hop number grows, which corresponds to the common intuition. The probability lower bound here is computed by (35). We note that the dashed lines in Fig. 1(a) and Fig. 1(b) are theoretical lower bounds of the accuracy. Thus it makes sense that the actual accuracy in experiments is higher than that bound. The multiple excitation cases are similar and are omitted here.

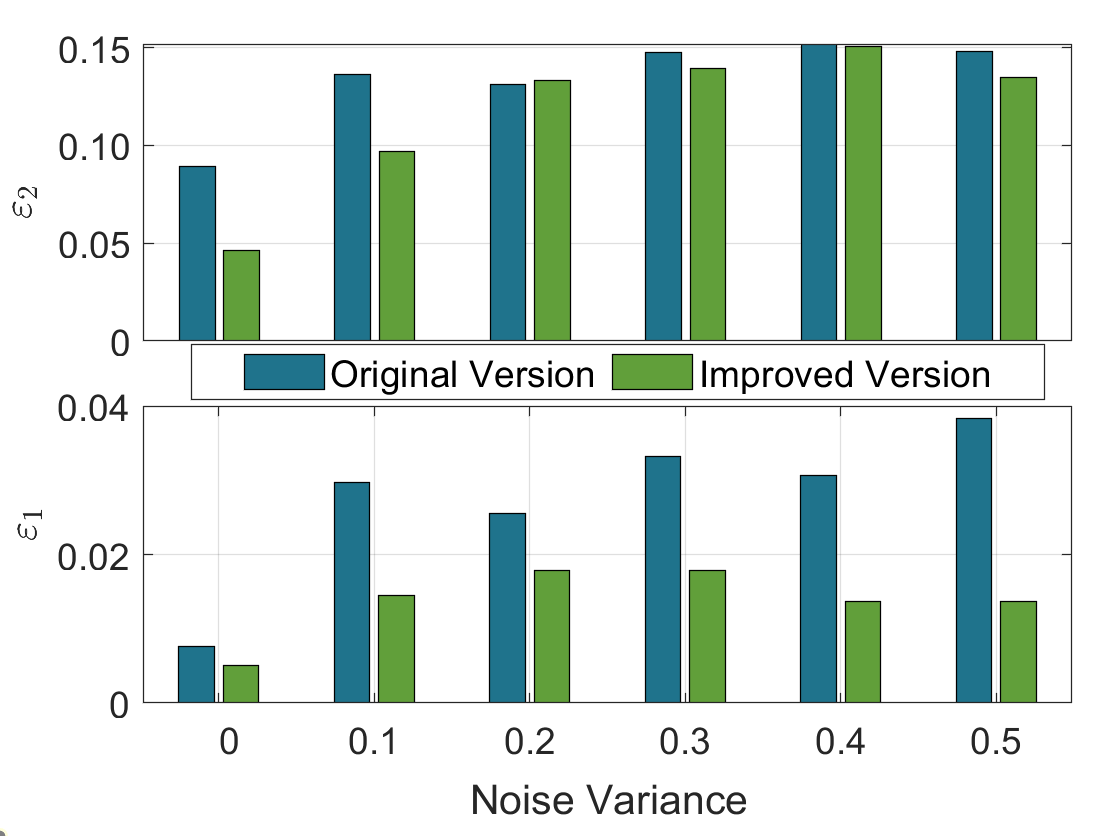

Finally, we provide the results of improving the inference performance of the causality based estimator in [7] to solve (50). Here we directly present the case and consider the following two error indexes

| (52) | ||||

| (53) |

which represent the structure and magnitude errors, respectively. As we can see from Fig. 1(c), with the same observations, the inference error is largely reduced by combining the excitation based inference results to solve (51), especially in terms of the structure error.

V Conclusions

In this paper, we investigated the topology inference problem of NDSs by using very few excitations. First, we introduced the definition of the h-hop neighbor and proposed the one-shot excitation based method. By utilizing the tool of hypothesis testing, we proved the magnitude condition of the excitation input with probability guarantees. Then, we extended the one-hop inference method to h-hop neighbor and multiple excitations cases. The inference accuracy was rigorously analyzed. Finally, simulations verified our performance analysis. The proposed inference method is helpful in scenarios of insufficient observations over NDSs, and can also be used as an auxiliary means to improve the accuracy of existing methods. Future directions include extending the method to infer the specific values of the global topology, and making the few excitations cooperate to finish the inference task.

References

- [1] G. Cavraro and V. Kekatos, “Graph algorithms for topology identification using power grid probing,” IEEE Control Systems Letters, vol. 2, no. 4, pp. 689–694, 2018.

- [2] J. A. Deri and J. M. Moura, “New York City Taxi analysis with graph signal processing,” in IEEE Global Conference on Signal and Information Processing (GlobalSIP). IEEE, 2016, pp. 1275–1279.

- [3] Y. Li, J. He, and C. Lin, “Topology inference on partially observable mobile robotic networks under formation control,” in 2021 European Control Conference (ECC). IEEE, 2021, pp. 497–502.

- [4] I. Brugere, B. Gallagher, and T. Y. Berger-Wolf, “Network structure inference, a survey: Motivations, methods, and applications,” ACM Computing Surveys (CSUR), vol. 51, no. 2, pp. 1–39, 2018.

- [5] J. Etesami and N. Kiyavash, “Measuring causal relationships in dynamical systems through recovery of functional dependencies,” IEEE Transactions on Signal and Information Processing over Networks, vol. 3, no. 4, pp. 650–659, 2017.

- [6] A. Santos, V. Matta, and A. H. Sayed, “Local tomography of large networks under the low-observability regime,” IEEE Transactions on Information Theory, vol. 66, no. 1, pp. 587–613, 2020.

- [7] Y. Li and J. He, “Topology inference for networked dynamical systems: A causality and correlation perspective,” in 60th IEEE Conference on Decision and Control (CDC). IEEE, 2021, pp. 1218–1223.

- [8] Q. Jiao, Y. Li, and J. He, “Topology inference for consensus-based cooperation under time-invariant latent input,” in 2021 IEEE 94th Vehicular Technology Conference (VTC2021-Fall), 2021, pp. 1–5.

- [9] S. Segarra, A. G. Marques, G. Mateos, and A. Ribeiro, “Network topology inference from spectral templates,” IEEE Transactions on Signal and Information Processing over Networks, vol. 3, no. 3, pp. 467–483, 2017.

- [10] S. Segarra, M. T. Schaub, and A. Jadbabaie, “Network inference from consensus dynamics,” in 56th IEEE Conference on Decision and Control (CDC). IEEE, 2017, pp. 3212–3217.

- [11] Y. Zhu, M. T. Schaub, A. Jadbabaie, and S. Segarra, “Network inference from consensus dynamics with unknown parameters,” IEEE Transactions on Signal and Information Processing over Networks, vol. 6, pp. 300–315, 2020.

- [12] A. J. Gibberd and J. D. Nelson, “High dimensional changepoint detection with a dynamic graphical Lasso,” in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2014, pp. 2684–2688.

- [13] B. Baingana and G. B. Giannakis, “Tracking switched dynamic network topologies from information cascades,” IEEE Transactions on Signal Processing, vol. 65, no. 4, pp. 985–997, 2016.

- [14] G. Karanikolas, G. B. Giannakis, K. Slavakis, and R. M. Leahy, “Multi-kernel based nonlinear models for connectivity identification of brain networks,” in 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2016, pp. 6315–6319.

- [15] S. Wang, E. D. Herzog, I. Z. Kiss, W. J. Schwartz, G. Bloch, M. Sebek, D. Granados-Fuentes, L. Wang, and J.-S. Li, “Inferring dynamic topology for decoding spatiotemporal structures in complex heterogeneous networks,” Proceedings of the National Academy of Sciences, vol. 115, no. 37, pp. 9300–9305, 2018.

- [16] X. Dong, D. Thanou, M. Rabbat, and P. Frossard, “Learning graphs from data: A signal representation perspective,” IEEE Signal Processing Magazine, vol. 36, no. 3, pp. 44–63, 2019.

- [17] B. Holbert, S. Tati, S. Silvestri, T. F. La Porta, and A. Swami, “Network topology inference with partial information,” IEEE Transactions on Network and Service Management, vol. 12, no. 3, pp. 406–419, 2015.

- [18] G. Cavraro and V. Kekatos, “Inverter probing for power distribution network topology processing,” IEEE Transactions on Control of Network Systems, vol. 6, no. 3, pp. 980–992, 2019.

- [19] H. H. Weerts, A. G. Dankers, and P. M. Van den Hof, “Identifiability in dynamic network identification,” IFAC-PapersOnLine, vol. 48, no. 28, pp. 1409–1414, 2015.

- [20] J. M. Hendrickx, M. Gevers, and A. S. Bazanella, “Identifiability of dynamical networks with partial node measurements,” IEEE Transactions on Automatic Control, vol. 64, no. 6, pp. 2240–2253, 2018.

- [21] A. S. Bazanella, M. Gevers, and J. M. Hendrickx, “Network identification with partial excitation and measurement,” in 58th IEEE Conference on Decision and Control (CDC). IEEE, 2019, pp. 5500–5506.

- [22] M. Coutino, E. Isufi, T. Maehara, and G. Leus, “State-space network topology identification from partial observations,” IEEE Transactions on Signal and Information Processing over Networks, vol. 6, pp. 211–225, 2020.

- [23] X. Mao, J. He, and C. Zhao, “An improved subspace identification method with variance minimization and input design,” in 2022 American Control Conference (ACC). IEEE, to be published.

- [24] A. H. Sayed et al., “Adaptation, learning, and optimization over networks,” Foundations and Trends® in Machine Learning, vol. 7, no. 4-5, pp. 311–801, 2014.

- [25] V. Matta and A. H. Sayed, “Consistent tomography under partial observations over adaptive networks,” IEEE Transactions on Information Theory, vol. 65, no. 1, pp. 622–646, 2019.