Inference via robust optimal transportation: theory and methods

Abstract

Optimal transportation theory and the related -Wasserstein distance (, ) are widely-applied in statistics and machine learning. In spite of their popularity, inference based on these tools has some issues. For instance, it is sensitive to outliers and it may not be even defined when the underlying model has infinite moments. To cope with these problems, first we consider a robust version of the primal transportation problem and show that it defines the robust Wasserstein distance, , depending on a tuning parameter . Second, we illustrate the link between and and study its key measure theoretic aspects. Third, we derive some concentration inequalities for . Fourth, we use to define minimum distance estimators, we provide their statistical guarantees and we illustrate how to apply the derived concentration inequalities for a data driven selection of . Fifth, we provide the dual form of the robust optimal transportation problem and we apply it to machine learning problems (generative adversarial networks and domain adaptation). Numerical exercises provide evidence of the benefits yielded by our novel methods.

keywords:

[class=MSC]keywords:

2303.110540 \startlocaldefs \endlocaldefs

, and

1 Introduction

1.1 Related literature

Optimal transportation (OT) dates back to the work of [43], who considered the problem of finding the optimal way to move given piles of sand to fill up given holes of the same total volume. Monge’s problem remained open until it was revisited and solved by Kantorovich, who characterized the optimal transportation plan in relation to the economic problem of optimal allocation of resources. We refer to [57], [53] for a book-length presentation in mathematics, to [47] for a data science view, and to [46] for a statistical perspective.

Within the OT setting, the Wasserstein distance () is defined as the minimum cost of transferring the probability mass from a source distribution to a target distribution, for a given transfer cost function. Nowadays, OT theory and are of fundamental importance for many scientific areas, including statistics, econometrics, information theory and machine learning. For instance, in machine learning, OT based inference is a popular tool in generative models; see e.g. [3], [23], [45] and references therein. Similarly, OT techniques are widely-applied for problems related to domain adaptation; see [15] and [4]. In statistics and econometrics, estimation methods based on are related to minimum distance estimation (MDE); see [5] and [8]. MDE is a research area in continuous development and the estimators based on this technique are called minimum distance estimators. They are linked to M-estimators and may be robust. We refer to [29], [55] and [7] for a book-length statistical introduction. As far as econometrics is concerned, we refer to [34] and [30]. The extant approaches for MDE are usually defined via Kullback-Leibler (KL) divergence, Cressie-Read power divergence, total variation (TV) distance, Hellinger distance, to mention a few. Some of those approaches (e.g. Hellinger distance) require kernel density estimation of the underlying probability density function. Some others (e.g. KL divergence) have poor performance when the considered distributions do not have a common support. Compared to other notions of distance or divergence, avoids the mentioned drawbacks, e.g. it can be applied when the considered distributions do not share the same support and inference is conducted in a generative fashion—namely, a sampling mechanism is considered, in which non-linear functions map a low dimensional latent random vector to a larger dimensional space; see [23]. This explains why is a popular inference tool; see e.g. [15, 22, 35, 12, 47]; see [25] and [37] for a recent overview of technology transfers among different areas.

In spite of their appealing theoretical properties, OT and have two important inference aspects to deal with: (i) implementation cost and (ii) lack of robustness. (i) The computation of the solution to OT problem and of the computation of can be demanding. To circumvent this problem, [16] proposes the use of an entropy regularized version of the OT problem. The resulting divergence is called Sinkhorn divergence; see [2] for further details. [23] illustrate the use of this divergence for inference in generative models, via MDE. The proposed estimation method is attractive in terms of performance and speed of computation. However (to the best of our knowledge) the statistical guarantees of the minimum Sinkhorn divergence estimators have not been provided. (ii) Robustness is becoming a crucial aspect for many complex statistical and machine learning applications. The reason is essentially two-fold. First, large datasets (e.g. records of images or large-scale data collected over the internet) can be cluttered with outlying values (e.g. due to recording device failures). Second, every statistical model represents only an approximation to reality. Thus, the need for inference procedures that remain informative even in the presence of small deviations from the assumed statistical (generative) model is in high demand, in statistics and in machine learning. Robust statistics deals with this problem. We refer to [29] and [51] for a book-length discussion on robustness principles.

Other papers have already mentioned the robustness issues of and OT; see e.g. [1], [26],[27],[28] [18], [44], [60] and [50]. In the OT literature, [13] proposes to deal with outliers via unbalanced OT, namely allowing the source and target distribution to be non-standard probability distributions. This sensitivity is an undesired consequence of exactly satisfying the marginal constraints in OT transportation problem: intuitively, using Monge’s formulation, the piles of sand and the holes need to have the same total volume, thus the optimal transportation plan is affected by the outlying values inducing a high transportation cost. Relaxing the exact marginal constraints in a way that the transportation plan does not assign large weights to outliers yields the unbalanced OT theory: a mass variation is allowed in the OT problem (intuitively, the piles of sand and the holes do not need to have the same volume) and outlying values can be ignored by the transportation plan. Working along the lines of this approach, [4] define a robust optimal transportation (ROBOT) problem and study its applicability to machine learning. In the same spirit, starting from OT formulation with a regularization term which relaxes the marginal constraints, [44] derive a novel robust OT problem, that they label as ROBOT and they apply it to machine learning. Moreover, building on the Minimum Kantorovich Estimator (MKE) of [5], Mukherjee et al. propose a class of estimators obtained through minimizing the ROBOT and illustrate numerically their performance. In the same spirit, recently, [45] defined a notion of outlier-robust Wasserstein distance which allows for an -percentage of outlier mass to be removed from contaminated distributions.

1.2 Our contributions

Working at the intersection between mathematics, probability, statistics, machine learning and econometrics, we study the theoretical, methodological and computational aspects of ROBOT. Our contributions to these different research areas can be summarized as follows.

We prove that the primal ROBOT problem of [44] defines a robust distance that we call robust Wasserstein distance , which depends on a tuning parameter controlling the level of robustness. We study the measure theoretic properties of , proving that it induces a metric space whose separability and completeness are proved. Moreover, we derive concentration inequalities for the robust Wasserstein distance and illustrate their practical applicability for the selection of in the presence of outliers.

These theoretical (in mathematics and probability) findings are instrumental to another contribution (in statistics and econometrics) of this paper: the development of a novel inferential theory based on robust Wasserstein distance. Making use of convex analysis [49] and of the techniques in [8], we prove that the minimum robust Wasserstein distance estimators exist (almost surely) and are consistent (at the reference model, possibly misspecified). Numerical experiments show that the robust Wasserstein distance estimators remain reliable even in the presence of local departures from the postulated statistical model. These results complement the methodological investigations already available in the literature on minimum distance estimation (in particular the MKE of [5] and [8]) and on ROBOT ([4] and [44]). Indeed, beside the numerical evidence available in the mentioned papers, there are (to the best of our knowledge) no statistical guarantees on the estimators obtained by the method of [44] and [4]. Moreover, our results extend the use of OT to conduct reliable inference on random variables with infinite moments.

Another contribution is the derivation of the dual form of ROBOT for application to machine learning problems. This result not only completes the theory in [44], but also provides the stepping stone for the implementation of ROBOT: we explain how our duality can be applied to define robust Wasserstein Generative Adversarial Networks (RWGAN). Numerical exercises provide evidence that RWGAN have better performance than the extant Wasserstein GAN (WGAN) models. Moreover, we illustrate how our ROBOT can be applied to domain adaptation, another popular machine learning problem; see Section 5.4 and Appendix .4.1.

Finally, as far as the approach in [45] is concerned, we remark that their notion of robust Wasserstein distance has a similar spirit to our (both are based on a total variation penalization of the original OT problem, but yield different duality formulations) and, similarly to our results in §5.3, it is proved to be useful in generative adversarial network (GAN). In spite of these similarities, our investigation goes deeper in the probabilistic and statistical aspects related to inference methods based on . With this regard, some key difference between [45] and our paper are that Nietert et al.: (a) do not study concentration inequalities for their distance; (b) do not provide indications on how to select and control the -percentage of outlier mass ignored by the OT solution—for a numerical illustration of this aspect in the setting of GAN, see Section 5.3; (c) do not define the estimators related to the minimization of their outlier-robust Wasserstein distance; (d) use regularization in the dual and a constraint in the primal, whereas ROBOT does the reverse: this simplifies our derivation of the dual problem. In the next sections we take care of all these and other aspects for the ROBOT, providing the theoretical and the applied statistician with a novel toolkit for robust inference via OT.

2 Optimal transport

2.1 Classical OT

We recall briefly the fundamental notions of OT as formulated by Kontorovich; more details are available in Appendix .1. Let and denote two Polish spaces, and let represent the set of all probability measures on . Let denote the set of all joint probability measures of and . Kontorovich’s problem aims at finding a joint distribution which minimizes the expectation of the coupling between and in terms of the cost function . This problem can be formulated as

| (1) |

A solution to Kantorovich’s problem (KP) (1) is called an optimal transport plan. Note that the problem is convex, and its solution exists under some mild assumptions on , e.g., lower semicontinuous; see e.g. [57, Chapter 4].

KP as in (1) has a dual form, which is related to Kantorovich dual (KD) problem

for , where is the set of bounded continuous functions on . According to Th. 5.10 in [57], if function is lower semicontinuous, there exists a solution to the dual problem such that the solutions to KD and KP coincide (no duality gap). In this case, the solution is

where the functions and are called -concave and -convex, respectively, and (resp. ) is called the -transform of (resp. ). When is a metric on , OT problem becomes , which is the Kantorovich-Rubenstein duality. Now, let denote a complete metric space equipped with a metric , and let and be two probability measures on . Solving the optimal transport problem in (1), with the cost function , introduces a distance, called Wasserstein distance of order :

| (2) |

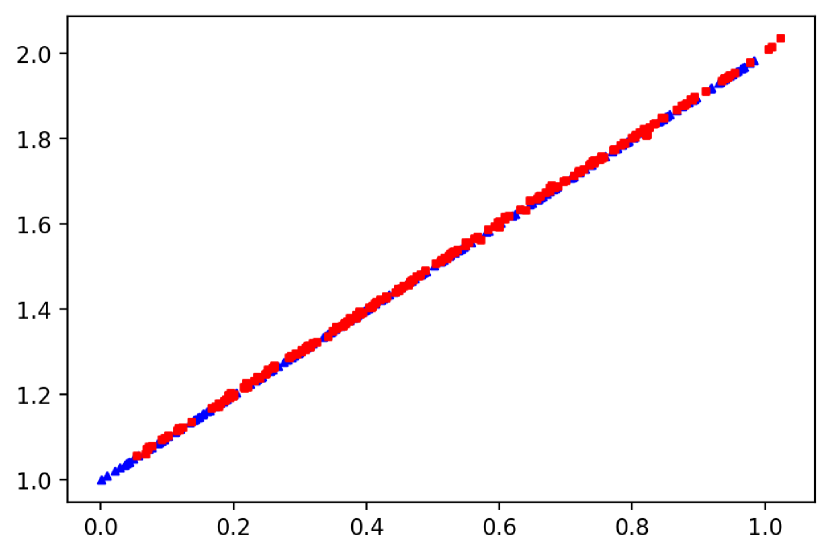

With regard to (2 ), we emphasize that the ability to lift the ground distance is one of the perks of and it makes it a suitable tool in statistics and machine learning; see Remark 2.18 in [47]. Interestingly, this desirable feature becomes a negative aspect as far as robustness is concerned. Intuitively, OT embeds the distributions geometry: when the underlying distribution is contaminated by outliers, the marginal constraints force OT to transport outlying values, inducing an undesirable extra cost, which in turns entails large changes in the . More in detail, let be point mass measure centered at (a point in the sample space), and be the ground distance. Then, we introduce the framework, in which the majority of the data are inliers, that is i.i.d. informative data with distribution , whilst a few data are outliers, meaning that they are not i.i.d. with distribution . We refer to [39] (and references therein) for more detail on and for discussion on its connection to Huber gross-error neighbourhood. Thus, for , we have , as . Thus, can be arbitrarily inflated by a small number of large observations and it is sensitive to outliers. For instance, let us consider , with . In Figure 1(a) and 1(b), we display the scatter plot for two bivariate distributions: the distribution in panel (b) has some abnormal points (contaminated sample) compared to the distribution in panel (a) (clean sample). Although only a small fraction of the sample data is contaminated, both and display a large increase. Anticipating some of the results that we will derive in the next pages, we compute our (see §3.1) and we notice that its value remains roughly the same in both panels. We refer to §5.1 for additional insights on the resistance to outliers of .

2.2 Robust OT (ROBOT)

We recall the primal formulation of ROBOT problem, as defined in [44], who introduce a modified version of Kantorovich OT problem and work on the notion of unbalanced OT. Specifically, they consider a TV-regularized OT re-formulation

| (3) |

where is a regularization parameter. In (3), the original source measure is modified by adding (nevertheless, the first and last constraints ensure that is still a valid probability measure). Intuitively, having means that has strong impact on the OT problem and hence can be labelled as an outlier. The outlier is eliminated from the sample, since the probability measure at this point is zero.

[44] prove that a simplified, computationally efficient formulation equivalent to (3) is

| (4) |

which has a functional form similar to (1), but the cost function is replaced by the trimmed cost function that is bounded from above by . Following [44], we call formulations (3) and (4) ROBOT. Beside the primal formulation, in the following theorem we drive the dual form of the ROBOT, which is not available in [44]. We need this duality for the development our inferential approach and, for ease of reference, we state it in form of theorem—the proof can be obtained from Th. 5.10 in [57] and it is available in Appendix .2.

Theorem 2.1.

Let be probability measures in , with as in (6). Then the c-transform of a c-convex function is itself, i.e. . Moreover, the dual form of ROBOT is related to the Kantorovich potential , which is a solution to

| (5) |

where satisfies and .

Note that the difference between the dual form of OT and that of ROBOT is that the latter imposes a restriction on the range of . Thanks to this, we may think of using the ROBOT in theoretical proofs and inference problems where the dual form of OT is already applied, with the advantage that ROBOT remains stable even in the presence of outliers. In the next sections, we illustrate these ideas and we start the construction of our inference methodology by proving that to ROBOT is associated a robust distance, which will be the pivotal tool of our approach.

3 Robust Wasserstein distance

3.1 Key notions

Setting , (2) implies that the corresponding minimal cost is a metric between two probability measures and . A similar result can be obtained also in the ROBOT setting of (4), if the modified cost function takes the form

| (6) |

Lemma 3.1.

Let denote a metric on . Then in (6) is also a metric on .

Based on Lemma 3.1, we define the robust Wasserstein distance and prove that it is a metric on .

Theorem 3.2.

It is worth noting that is well defined only if probability measures have finite -th order moments. In contrast, thanks to the boundedness of the , our in (7) can be applied to all probability measures, even those with infinite moments of any order. Making use of we introduce the following

Definition 3.3 (Robust Wasserstein space).

A robust Wasserstein space is an ordered pair , where is the set of all probability measures on a complete separable metric space , and defined in Th. 3.2 is a (finite) distance on .

3.2 Some measure theoretic properties

In this section we state, briefly, some preliminary results. The proofs (which are based on [57]) of these results are available in Appendix .2, to which we refer the reader interested in the mathematical details. Here, we provide essentially the key aspects, which we prefer to state in the form of theorems and corollary for the ease of reference in the theoretical developments available in the next sections.

Equipped with Th. 3.2 and Definition 3.3, it is interesting to connect to , via the characterization of its limiting behavior as . Since is continuous and monotonically increasing with respect to , one can prove (via dominated convergence theorem) that its limit coincides with the Wasserstein distance of order . Thus:

Theorem 3.4.

For any probability measures and in , is continuous and monotonically non-decreasing with respect to . Moreover, if exists, we have

Th. 3.4 has a two-fold implication: first, ; second, for large values of the regularization parameter, and behave similarly. Another important property of is that weak convergence is entirely characterized by convergence in the robust Wasserstein distance. More precisely, we have

Theorem 3.5.

Let be a Polish space. Then metrizes the weak convergence in . In other words, if is a sequence of measures in and is another measure in , then and are equivalent.

The next result follows immediately from Theorem 3.5.

Corollary 3.6 (Continuity of ).

For a Polish space , suppose that converges weakly to in as . Then

Finally, we prove the separability and completeness of robust Wasserstein space, when is separable and complete. To this end, we state

Theorem 3.7.

Let be a complete separable metric space. Then the space , metrized by the robust Wasserstein distance , is complete and separable. Moreover, the set of finitely supported measures with rational coefficients is dense in .

We remark that our dual form in Th. 2.1 unveils a connection between and the Bounded Lipschitz (BL) metric discussed in [19, p.41 and p.42]: the two metrics coincide for a suitable selection of . With this regard, we emphaisze that, since the BL meterizes the weak-star topology, Th. 3.2 and Th. 3.5 are in line with the results in Section 3 of [19].

3.3 Some novel probabilistic aspects

The previous results offer the mathematical toolkit needed to derive some concentration inequalities for building on the extant results for . To illustrate this aspect, let us consider the case of Talagrand transportation inequality . We recall that using as a distance between probability measures, transportation inequalities state that, given , a probability measure on satisfies if the inequality

holds for any probability measure , with being the Kullback-Leibler divergence. For , it has been proven that is equivalent to the existence of a square-exponential moment; see e.g. [10]. Now, note that, from Th. 3.4, we have : we can immediately claim that if holds, then we have

Thus, we state

Theorem 3.8.

Let be a probability measure on , which satisfies a inequality, and be a random sample of independent variables, all distributed according to ; let also be the associated empirical measure, where is the Dirac distribution with mass on . Then, for any and , there exists some constant , depending only on and some square-exponential moment of , such that for any and

we have

The proof follows along the lines as the proof of Th. 2.1 in Bolley et al. combined with Theorem 2.1 which yields

| (8) |

We remark that condition implies that Th. 3.8 has an asymptotic nature. Nevertheless, Bolley et al. prove that

hods for any with being a (large) constant depending on . The argument in (8) implies

for any . This yields a concentration inequality which is valid for any sample size.

We obtained the results in Th. 3.8 using an upper bound on . The simplicity of our argument comes with a cost: for Th. 3.8 to hold, the underlying measure needs to satisfy some conditions required for , like e.g. some moment restrictions. To obtain bounds that do not need these stringent conditions, in the next theorem we derive novel non asymptotic upper bounds working directly on . The techniques that we apply to obtain our new results put under the spotlight the role of to control the concentration in the framework.

Theorem 3.9.

Assume that are generated according to the setting and let denote the common distribution of the inliers. Let denote the empirical distribution based on inliers. Then, for any an, when , we have

| (9) |

where the variance term is

and is an independent copy of . When , we have that, for any ,

| (10) |

Some remarks are in order. (i) In the case where , (9) holds without any assumption on as the variance term is always bounded, even without assuming a finite second moment of . This strongly relaxes the exponential square moment assumption required for in Th. 3.8.

(ii) (10) illustrates that the presence of contamination entails the extra term : one can make use of the tuning parameter to mitigate the influence of outlying values.

(iii) In (10), it is always possible to replace the term

by either or , where and are i.i.d. with common distribution . This can be proved noticing that it is possible to bound exploiting the fact that is bounded by , so Th. 2.1 implies

| (11) |

(iv) Delving more into the link between (10) and (11), consider that, for any ,

As , for any , it holds that

Therefore, we have, for any ,

Taking the suprema over in yields (11). From (11) and the triangular inequality, we have that (adding and subtracting )

Inequality (10) follows by plugging (9) into this last bound. Finally, combining (10) and (11), we obtain

| (12) |

Beside the concentrations in Th. 3.9, we consider the problem of providing an upper bound to . Following [9], we call this quantity the mean rate of convergence. For the sake of exposition, we assume that all data are inliers, that is are i.i.d. with common distribution —the case with outliers can be obtained using (11). As in Th. 3.8, we notice that it is always possible to use from Th. 3.4 and derive bounds for applying the results for ; see e.g. [9], [21, 40, 20]. Beside this option, here we derive novel results for . In what follows, we assume that is supported in , the ball in Euclidean distance of with radius and that the reference distance is the Euclidean distance in . Then we state:

Theorem 3.10.

Let denote a measure on , the ball in Euclidean distance of with radius . Let denote an i.i.d. sample of and denote the empirical measure. Then, we have

The bound is obtained using (i) the duality formula derived in Th. 2.1, (ii) an extension of Dudley’s inequality in the spirit of [9], and (iii) a computation of the entropy number of a set of -Lipschitz functions defined on the ball and taking value in . The technical details are available in Appendix .2. Here, we remark the key role of when (univariate case), which allows to bound the mean rate of convergence. For , our bounds could have been obtained also from [9]. However, the obtained results can be substantially larger than our upper bounds when : Th. 3.10 provides a refinement (related to the boundedness of ) of the extant bounds; see also Proposition 9 in [45] for a similar result.

4 Inference via minimum estimation

4.1 Estimation method

Let us consider a probability space . On this probability space, we define random variables taking values on and endowed with the Borel -algebra. We observe i.i.d. data points , which are distributed according to . A parametric statistical model on is denoted by and it is a collection of probability distributions indexed by a parameter of dimension . The parameter space is , which is equipped with a distance . We let represent the observations from . For every , the sequence of random probability measures on converges (in some sense) to a distribution , where

with . Similarly, we will assume that the empirical measure converges (in some sense) to some distribution as . We say that the model is well-specified if there exists such that ; otherwise, it is misspecified. Parameters are identifiable: is implied by .

Our estimation method relies on selecting a parametric model in , which is the closest, in robust Wasserstein distance, to the true model . Thus, minimum estimation refers to the minimization, over the parameter , of the robust Wasserstein distance between the empirical distribution and the reference model distribution . This is similar to the approach described in [5] and [8], who derive minimum Kantorovich estimators by making use of .

More formally, the minimum robust Wasserstein estimator (MRWE) is defined as

| (13) |

When there is no explicit expression for the probability measure characterizing the parametric model (e.g. in complex generative models, see [23] and § 5.2 for some examples), the computation of MRWE can be difficult. To cope with this issue, in lieu of (13), we propose using the minimum expected robust Wasserstein estimator (MERWE) defined as

| (14) |

where the expectation is taken over the distribution . To implement the MERWE one can rely on Monte Carlo methods and approximate numerically . Replacing the robust Wasserstein distance with the in (14), one obtains the minimum Wasserstein estimator (MWE) and the minimum expected Wasserstein estimator (MEWE), studied in [8].

4.2 Statistical guarantees

Intuitively, the consistency of the MRWE and MERWE can be conceptualized as follows. We expect that the empirical measure converges to , in the sense that as ; see Th. 3.5. Therefore, the of should converge to

assuming its existence and unicity. The same can be said for the minimum of the MERWE, provided that . If the reference parametric model is correctly specified (e.g. no data contamination), is the limiting object of interest and it is the minimizer of . In the case of model misspecification (e.g. wrong parametric form of and/or presence of data contamination), is not necessarily the parameter that minimizes the KL divergence between the empirical measure and the measure characterizing the reference model. We emphasize that this is at odd with the standard misspecification theory (see e.g. [59]) and it is due to the fact that we replace the KL divergence (which yields non robust estimators) with our novel robust Wasserstein distance.

To formalize these arguments, we introduce the following set of assumptions, which are standard in the literature on MKE; see [8].

Assumption 4.1.

The data-generating process is such that -almost surely as .

Assumption 4.2.

The map is continuous in the sense that implies that convergences to weakly as .

Assumption 4.3.

For some ,

with , is a bounded set.

Then we state the following

Theorem 4.4 (Existence of MRWE).

To prove Th. 4.4 we need to show that the sequence of functions epi-converges (see Definition .5 in Appendix .2) to . The result follows from [49]. We remark that Th. 4.4 generalizes the results of [5] and [8], where the model is assumed to be well specified ([5]) and moments of order are needed ([8]).

Moving along the same lines as in Th. 2.1 in [8], we may prove the measurability of MRWE; see Th. .6 in Appendix A. These results for the MRWE provide the stepping stone to derive similar theorems for the MERWE . To this end, the following assumptions are needed.

Assumption 4.5.

For any , if , then converges to weakly as .

Assumption 4.6.

If , then as .

Theorem 4.7 (Existence of MERWE).

For a generic function , let us define

Then, we state the following

Theorem 4.8 (Measurability of MERWE).

Suppose that is a -compact Borel measurable subset of . Under Assumption 4.5, for any and and , there exists a Borel measurable function that satisfies

if this set is non-empty, otherwise,

Considering the case where the data and are fixed, the next result shows that the MERWE converges to the MRWE, as . In the next assumption, the observed empirical distribution is kept fix and .

Assumption 4.9.

For some , the set

is bounded.

5 Numerical experiments

5.1 Sensitivity to outliers

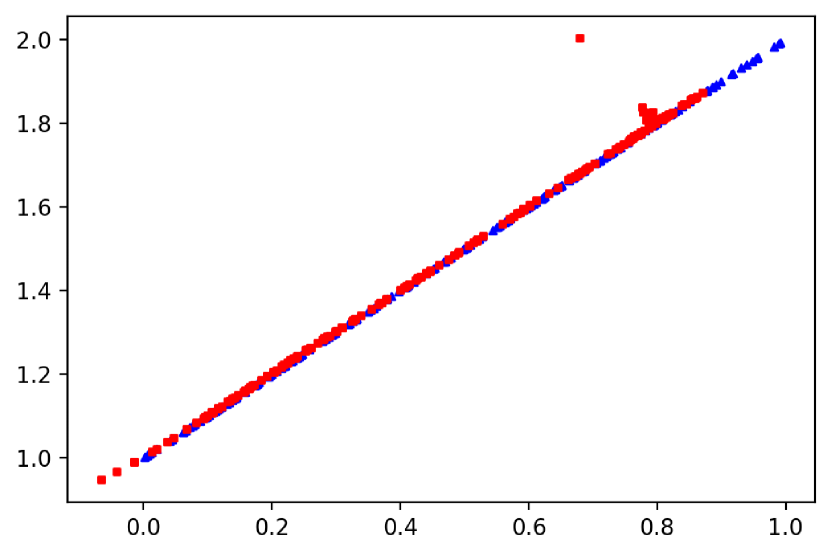

To complement the insights obtained looking at Figure 1, we build on the notion of sensitive curve, which is an empirical tool that illustrates the stability of statistical functionals; see [54] and [29] for a book-length presentation. We consider a similar tool to study the sensitivity of and in finite samples, both interpreted as functionals of the empirical measure. More in detail, we fix the standard normal distribution as a reference model (for this distribution both and are well-defined) and we use it to generate a sample of size . We denote the resulting sample , whose empirical measure is . Then, we replace with a value and form a new set of sample points, denoted by , whose empirical measure is . We let represent the robust Wasserstein distance between an -dimensional sample and . So, is the empirical robust Wasserstein distance between and its self, thus it is equal to , while is the empirical robust Wasserstein distance between and . Finally, for different values of and , we compute (numerically)

A similar procedure is applied to obtain . We display the results in Figure 2. For each value of , the plots illustrate that first grows and then remains flat (at the value of ) even for very large values of . In contrast, for , diverges, in line with the evidence from (and the comments on) Figure 1. In addition, we notice that as , the plot of becomes more and more similar to the one of : this aspect is in line with Th. 3.4 and it is reminiscent of the behaviour of the Huber loss function ([32]) for location estimation, which converges to the quadratic loss (maximum likelihood estimation), as the constant tuning the robustness goes to infinity. However, an important remark is order: the cost yields, in the language of robust statistics, the so-called “hard rejection”: it bounds the influence of outlying values (to be contrasted with the behavior of Huber loss, which downweights outliers to preserve efficiency at the reference model); see [50] for a similar comment.

5.2 Estimation of location

Let us consider the statistical problem of inference on univariate location models. We study the robustness of MERWE, comparing its performance to the one of MEWE based on minimizing , under different degrees of data contamination and for different underlying data generating models (with finite and infinite moments). Before delving into the numerical exercise, let us give some numerical details. We recall that the MERWE aims at minimizing the function

With the same notation as in §4.1, suppose we generate replications independently from a reference model , and let denote the empirical probability measure of . Then the loss function

is a natural estimator of , since the former converges almost surely to the latter as . We note that the algorithmic complexity in is super-linear while the complexity in is linear: the incremental cost of increasing is lower than that one of increasing . Moreover, a key aspect for the implementation of our estimator is related to the need for specifying . In the next numerical exercises we set : this value yields a good performance (in terms of Mean-Squared Error, MSE) of our estimators across the Monte Carlo settings, which consider different models and levels of contamination. In §6, we describe a data driven procedure for selecting .

In our numerical investigation, first, we consider a reference model which is the sum of log-normal random variables having finite moments. For a given , and , we have where are sampled from independently. Suppose we are interested in estimating the location parameter . We investigate the performance of MERWE and MEWE, under different scenarios: namely, in presence of outliers, for different sample sizes and contamination values. Specifically, we consider the model

where for (clean part of the sample) and for (contaminated part of the sample). Therefore, in each sample, there are outliers of size .

In our simulation experiments, we set , and . To implement the MERWE and MEWE of , we choose , and . The bias and MSE, based on replications, of the estimators are displayed in Table 1, for various sample sizes , different contamination size and proportion of contamination . The table illustrates the superior performance (both in terms of bias and MSE) of the MERWE with respect to the MEWE. In small samples (), the MERWE has smaller bias and MSE than the MEWE, in all settings. Similar results are available in moderate and large sample size and ). Interestingly, for , MERWE and MEWE have similar performance when (no contamination), whilst the MERWE still has smaller MSE for . This implies that the MERWE maintains good efficiency with respect to MEWE at the reference model.

| SETTINGS | \bigstrut | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| BIAS | MSE | BIAS | MSE | BIAS | MSE \bigstrut | |||||||

| MERWE | MEWE | MERWE | MEWE | MERWE | MEWE | MERWE | MEWE | MERWE | MEWE | MERWE | MEWE \bigstrut | |

| 0.049 | 0.092 | 0.003 | 0.010 | 0.042 | 0.093 | 0.002 | 0.012 | 0.037 | 0.086 | 0.002 | 0.008 \bigstrut | |

| 0.035 | 0.090 | 0.002 | 0.012 | 0.029 | 0.097 | 0.001 | 0.016 | 0.013 | 0.100 | 0.018 \bigstrut | ||

| 0.071 | 0.157 | 0.008 | 0.028 | 0.086 | 0.178 | 0.009 | 0.033 | 0.081 | 0.172 | 0.007 | 0.031 \bigstrut | |

| 0.046 | 0.204 | 0.003 | 0.045 | 0.035 | 0.203 | 0.002 | 0.043 | 0.017 | 0.195 | 0.038\bigstrut | ||

| 0.036 | 0.034 | 0.002 | 0.002 | 0.022 | 0.022 | 0.001 | 0.001 | 0.012 | 0.010 | \bigstrut | ||

Now, let us turn to the case of random variables having infinite moments. [60] recently illustrates that minimum Wasserstein distance estimators do not perform well for heavy-tailed distributions and suggests to avoid the use of minimum Wasserstein distance inference when the underlying distribution has infinite -th moment, with . The theoretical developments of Section 3.1 show that our MERWE does not suffer from the same criticism. In the next MC experiment, we illustrate the good performance of MERWE when the data generating process does not admit finite first moment. We consider the problem of estimating the location parameters for a symmetric -stable distribution; see e.g. [52] for a book-length presentation. A stable distribution is characterized by four parameter : is the index parameter, is the skewness parameter, is the scale parameter and is the location parameter. It is worth noting that stable distributions have undefined variance for , and undefined mean for . We consider three parameters setting:

-

(1)

, which represents a heavy-tailed distribution without defined mean;

-

(2)

which is the standard Cauchy distribution, having undefined moment of order ;

-

(3)

representing a distribution having a finite mean.

In each MC experiment, we estimate the location parameter, while the other parameters are supposed to be known. In addition, we consider contaminated heavy-tailed data, where proportion of observations is generated from -stable distribution with parameter and the other proportion (outliers) comes from the distribution with parameter ( is the size of outliers). We set , and and repeat the experiment 1000 times for each distribution and estimator, and . We display the results in Table 2. For the stable distributions, the MEWE has larger bias and MSE than the ones yielded by the MERWE. This aspect is particularly evident for the distributions with undefined first moment, namely the Cauchy distribution and the stable distribution with . These experiments, complementing the ones available in [60], illustrate that while MEWE (which is not well-defined in the considered setting) entails large bias and MSE values, MERWE is well-defined and performs well even for stable distributions with infinite moments.

| SETTINGS | Cauchy | Stable () | Stable () \bigstrut | |||||||||

| BIAS | MSE | BIAS | MSE | BIAS | MSE \bigstrut | |||||||

| MERWE | MEWE | MERWE | MEWE | MERWE | MEWE | MERWE | MEWE | MERWE | MEWE | MERWE | MEWE \bigstrut | |

| 0.084 | 1.531 | 0.011 | 3.628 | 0.088 | 3.179 | 0.011 | 13.731 | 0.090 | 0.658 | 0.011 | 1.030 \bigstrut | |

| 0.205 | 1.529 | 0.047 | 3.656 | 0.164 | 3.174 | 0.034 | 13.706 | 0.207 | 0.746 | 0.048 | 1.051 \bigstrut | |

| 0.181 | 1.502 | 0.038 | 3.602 | 0.171 | 3.155 | 0.037 | 12.840 | 0.181 | 0.676 | 0.038 | 0.942 \bigstrut | |

| 0.459 | 1.820 | 0.224 | 4.691 | 0.384 | 3.140 | 0.166 | 12.713 | 0.485 | 1.072 | 0.245 | 1.802 \bigstrut | |

| 0.046 | 1.551 | 0.003 | 3.740 | 0.045 | 3.118 | 0.003 | 12.600 | 0.042 | 0.613 | 0.003 | 0.894 \bigstrut | |

5.3 Generative Adversarial Networks (GAN)

Synthetic data. We propose two RWGAN deep learning models: both approaches are based on ROBOT. The first one is derived directly from the dual form in Th. 2.1, while the second one is derived from (3). We compare these two methods with routinely-applied Wasserstein GAN (WGAN) and with the robust WGAN introduced by [4]. To provide some details on our new procedures, we recall that GAN is a deep learning method, first proposed by [24]. It is one of the most popular machine learning approaches for unsupervised learning on complex distributions. GAN includes a generator and a discriminator that are both neural networks and the procedure is based on mutual game learning between them. The generator creates fake samples as well as possible to deceive the discriminator, while the discriminator needs to be more and more powerful to detect the fake samples. The ultimate goal of this procedure is to produce a generator with a great ability to produce high-quality samples just like the original sample. To illustrate the connections with the ROBOT, let us consider the following GAN architecture; more details are available in the Supplementary Material (Appendix .4.2). Let and , where and denote the distribution of the reference sample and of a random sample which is the input for the generator. Then, denote by the function applied by generator (it transforms to create fake samples, which are the output of a statistical model , indexed by the finite dimensional parameter ) and by the function applied by the discriminator (it is indexed by a finite dimensional parameter , which outputs the estimate of the probability that the sample is true). The objective function is

| (15) |

where represents the probability that came from the data rather than . The GAN mechanism trains to maximize the probability of assigning the correct label to both training examples and fake samples. Simultaneously, it trains to minimize . In other words, we would like to train such that is very close (in some distance/divergence) to .

Despite its popularity, GAN has some inference issues. For instance, during the training, the generator may collapse to a setting where it always produces the same samples and face the fast vanishing gradient problem. Thus, training GANs is a delicate and unstable numerical and inferential task; see [3]. To overcome these problems, Arjovsky et al. propose the so-called Wasserstein GAN (WGAN) based on Wasserstein distance of order . The main idea is still based on mutual learning, but rather than using a discriminator to predict the probability of generated images as being real or fake, the WGAN replaces with a function (it corresponds to in Kantorovich-Rubenstein duality), indexed by parameter , which is called “a critic”, namely a function that evaluates the realness or fakeness of a given sample. In mathematical form, the WGAN objective function is

| (16) |

Also in this new formulation, the task is still to train in such a way that is very close (now, in Wasserstein distance) to . [3] explain how the WGAN is connected to minimum distance estimation. We remark that (16) has the same form as the Kantorovich-Rubenstein duality: we apply our dual form of ROBOT in Th. 2.1 to the WGAN to obtain a new objective function

| (17) |

The central idea is to train a RWGAN by minimizing the robust Wasserstein distance (actually, using the dual form) between real and generative data. To this end, we define a novel RWGAN model based on (17). The algorithm for training this RWGAN model is very similar to the one for WGAN available in [3], to which we refer for the implementation. We label this robust GAN model as RWGAN-1. Besides this model, we propose another approach derived from (3), where we use a new neural network to represent the modified distribution and add a penalty term to (16) in order to control modification. Because of space constraint, the details of this procedure are provided in Algorithm 3 in Appendix .4.2. We label this new RWGAN model as RWGAN-2. Different from [4]’s robust GAN, which uses -divergence to constrain the modification of distribution, our RWGAN-2 does this by making use of the TV. In the sequel, we will write RWGAN-B for the robust GAN of [4], which we implement using the same set up as Balaji et al. We remark that the RWGAN-1 is less computationally complex than RWGAN-2 and RWGAN-B. Indeed, RWGAN-2 and RWGAN-B make use of some regularized terms, thus an additional neural network is needed to represent the modification of the distribution. In contrast, RWGAN-1 has a simpler structure: for its implementation, it requires only a small modification of the activation function in the generator network; see Appendix .4.2.

To investigate the robustness of RWGAN-1 and RWGAN-2 we rely on synthetic data. We consider reference samples generated from a simple model, containing points in total, with outliers, whose data generation process is

| (18) |

with representing the size of outliers. We set and try four different settings by changing values of and . As it is common in the GAN literature, our generative adversarial models are obtained using the Python library Pytorch; see Appendix .4.2. We display the results in Figure 3, where we compare WGAN, RWGAN-1, RWGAN-2, RWGAN-B, and RWGAN-N (based on the outlier-robust Wasserstein distance of [45], with and , as in Nietert et al. paper) and RWGAN-D (based on the BL distance, as in [19]). To measure the distance between the data simulated by the generator and the input data, we report the Wasserstein distance of order 1. For visual comparison, we display the cloud of clean data points (blue triangles) and the cloud of GAN generated points (red squares). The plots reveal that WGAN is greatly affected by outliers. Differently, RWGAN-2, RWGAN-B, RWGAN-N and RWGAN-D are able to generate data roughly consistent with the uncontaminated distribution in most of the settings. Nevertheless, they still produce some obvious abnormal points, especially when the proportion and size of outliers increase. In particular, we notice that RWGAN-D performs well in the first two contamination settings (, and , ) but it breaks down (namely, it generates points which are very different from the uncontaminated data) in the third and fourth contamination setting (, and , ), where the amount and the size of contamination increase and affect negatively the GAN performance. In a very different way, RWGAN-1 performs better than its competitors and generates data that agree with the uncontaminated distribution, even when the proportion and size of outliers are large. Several repetitions of the experiment lead to the same conclusions.

To conclude this experiment, we remark that RWGAN-N is very sensitive to the specified value of . For instance, in the first contaminations setting, comparing the between the clean and the generated data by RWGAN-N with to the of the RWGAN-1 illustrates that our method (whose has been selected using the procedure described in Section 6) performs better. Increasing to 0.25 improves on the RWGAN-N performance, making its closer to the one of the RWGAN-1. This is an important methodological consideration, which has a practical implication for the application of the RWGAN-N: a selection criterion

for the hyper-parameter needs to be proposed, discussed and studied similarly to what we do in Section 6. To the best of our knowledge, such a criterion is not available in the literature.

Fashion-MNIST real data example. We illustrate the performance of RWGAN-1 and RWGAN-2 through comparing them with the WGAN and RWGAN-B in analysing the Fashion-MNIST real dataset, which contains 70000 grayscale images of apparels, including T-shirt, jeans, pullover, skirt, coat, etc. Each image is of pixels, and each pixel gets value from 0 to 255, indicating the darkness or lightness. We generate outlying images by taking the negative effect of images already available in the dataset—a negative image is a total inversion, in which light areas appear dark and vice versa; in the Fashion-MNIST dataset, for each pixel of a negative image, it takes 255 minus the value of the pixel corresponding to the normal picture. By varying the number of negative images we define different levels of contamination: we consider 0, 3000, and 6000 outlying images. For the sake of visualization, in Figure 4(a) and 4(b) we show normal and negative images, respectively. Our goal is to obtain a generator which can produce images of the same style as Figure 4(a), even if it is trained on sample containing normal images and outliers. A more detailed explanation on how images generation works via Pytorch is available in Appendix .4.2.

In Figure 5, we display images generated by the WGAN, RWGAN-1, RWGAN-2 and RWGAN-B under the different levels of contamination—we select making use of the data driven procedure described in Section 6. We see that all of the GANs generate similar images when there are no outliers in the reference sample (1st row). When there are 3000 outliers in the reference sample (2nd row), the GANs generate some negative images. However, by a simple eyeball search on the plots, we notice that WGAN produces many obvious negative images, while RWGAN-B, RWGAN-1 and RWGAN-2 generate less outlying images than WGAN. Remarkably, RWGAN-1 produces the smaller number of negative images among the considered methods. Similar conclusions holds for the higher contamination level with 6000 outlying images.

To quantify the accuracy of these GANs, we train a convolutional neural network (CNN) to classify normal images and negative images, using a training set of 60000 normal images and 60000 negative images. The resulting CNN is 100% accurate on a test set of size 20000. Then, we use this CNN to classify 1000 images generated by the four GANs. In Table 3, we report the frequencies of normal images detected by the CNN. The results are consistent with the eyeball analysis of Figure 5: the RWGAN-1 produces the smaller numbers of negative images at different contamination levels.

| WGAN | RWGAN-1 | RWGAN-2 | RWGAN-B | |

|---|---|---|---|---|

| 0 outliers | 1.000 | 1.000 | 1.000 | 1.000 |

| 3000 outliers | 0.941 | 0.982 | 0.953 | 0.945 |

| 6000 outliers | 0.890 | 0.975 | 0.913 | 0.897 |

5.4 Domain adaptation

Methodology. We consider an application of ROBOT to domain adaptation (DA), which is a popular machine learning problem and it deals with the inconsistent probability distributions of training samples and test samples. It has a wide range of applications in natural language processing ([48]), text analysis ([17]) and medical data analysis ([31]).

The DA problem can be stated as follows. Let

denote an i.i.d. sequence of source domain (training sample) and

denote an i.i.d. sequence of target domain (test sample), which have joint distributions and , respectively. Interestingly, and may be two different distributions: discrepancies (also known as drift) in these data distributions depend on the type of application considered—e.g. in image analysis, domain drifts can be due to changing lighting conditions, recording device failures, or to changes in the backgrounds.

In the context of DA, we are interested in estimating the labels from observations

and . Therefore, the goal is to develop a learning method which is able to transfer knowledge from the source domain to the target domain, taking into account that the training and test samples may have different distributions.

To achieve this inference goal, [14] assume that there is a transformation between and , and estimate the map via OT. To elaborate further, let us consider that, in practice, the population distribution of the source sample is unknown and it is usually estimated by its empirical counterpart

As far as the target domain is concerned, suppose we can obtain a function to predict the unobserved response variable . Thus, the empirical distribution of the target sample is

The central idea of Curtey et al. (2014) relies on transforming the data to make the source and target distributions similar (namely, close in some distance), and use the label information available in the source domain to learn a classifier in the transformed domain, which in turn can be exploited to get the labels in the target domain.

More formally, the DA problem is written as an optimization problem which aims at finding the function that minimizes the transport cost between the estimated source distribution and the estimated joint target distribution . To set up this problem, [15] propose to use the cost function

which defines a joint cost combining both the distances between the features and a measure of the discrepancy between and , where (as recommended in the Courty et al. code) . Then, the optimization problem formulated in terms of 1-Wasserstein distance is

| (19) |

which, in the case of squared loss function (see [15]), becomes

Courty et al. 2014 propose to solve this problem using (a regularized version of) OT. Since OT can be sensitive to outliers, in the same spirit of [15] but with the additional aim of reducing the impact of outlying values, we propose to use ROBOT instead of OT in the DA problem. The basic intuition is that ROBOT yields a matrix which is able (by construction) to identify and downweight the impact of anomalous values, yielding a robust , for every . We provide the key mathematical and implementation details in Algorithm 1.

Numerical exercise. The next Monte Carlo experiment illustrates the use and the performance of Algorithm 1 on synthetic data. We consider source data points (training sample) generated from

| (20) |

and the target points (test sample) are generated from

| (21) |

We notice that the source and target domain have a similar distribution but there is a shift, called drift in the DA literature, between them. In the training sample, outliers in the response variable are due to the drift added to the for . Moreover, mimicking the problem of leverage points in linear regression (see [29, Ch. 6]), our simulation design is such that half of the s are generated from a , whilst the other half is obtained from a shifted/drifted Gaussian ; the feature space in the test sample contains a similar pattern but with a different drift.

In our simulation exercise, we set and and we compare three methods for estimating : the first one is the Kernel Ridge Regression (KRR); the second one is the method proposed by [14], which is a combination of OT and KRR; the third one is our method, which is based on a modification of the second method, as obtained using ROBOT instead of OT. To the best of our knowledge, this application of ROBOT to DA is new to the literature.

In Figure 6 we show the results. The outliers in the training sample are clearly visible as a cluster of points which are far a part from the bulk of the data. The plot illustrates that the curve obtained via KRR fits reasonably well only the majority of the data in the source domain, but it looks inadequate for the target domain. This is due to the drift between the target and source distributions: by design, the KRR approach cannot capture this drift since it takes into account only the source domain information. The method in [14] yields a curve that is closer to the target data than the KRR curve, but because of the outliers in the , it does not perform well when the feature takes on negative values or values larger than four. Differently from the other methods, our method yields an estimated curve that is not too influenced by outlying values and that fits the target distribution better than the other methods.

6 Choice of via concentration inequalities

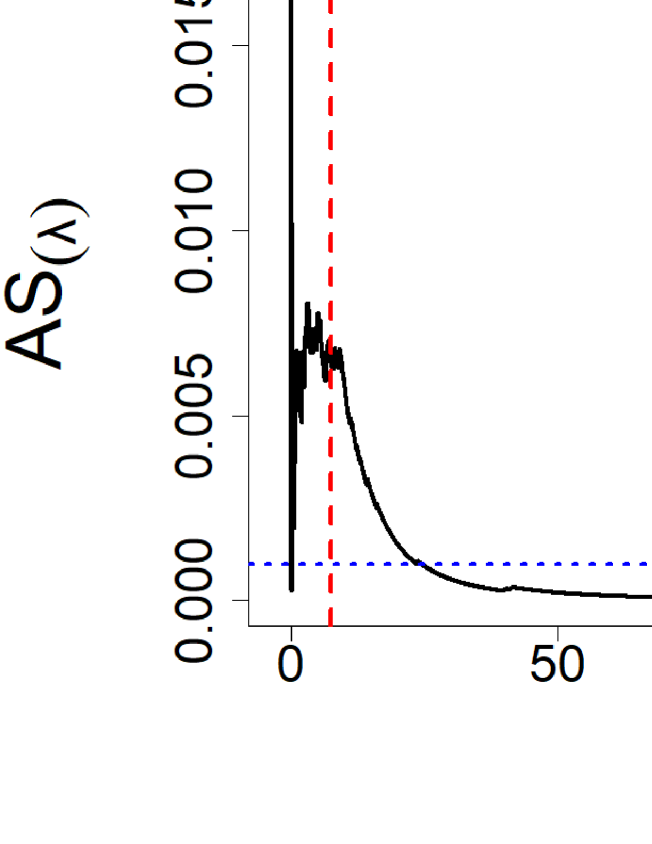

The application of requires the specification of . In the literature on robust statistics, many selection criteria are based on first-order asymptotic theory. For instance, [41] and [38] propose to select the tuning constant for Huber-type M-estimators achieving a given asymptotic efficiency at the reference model (namely, without considering the contamination). Unfortunately, these type of criteria hinge on the asymptotic notion of influence function, which is not available for our estimators defined in Section 4; see [6] for a related discussion on MKE. [44] propose a criterion which needs access to a clean data set and do not develop its theoretical underpinning. To fill this gap in the literature, we introduce a selection criterion of that does not have an asymptotic nature and is data-driven. Moreover, it does not need the computation of for each , that makes its implementation fast.

To start with, let us notice that the term expresses the robust Wasserstein distance between the fitted model () and the actual data generating measure (). Intuitively, outlying values induce bias in the estimates, entailing abrupt changes in the distribution of . This, in turn, entails changes in . Therefore, the stability of the estimates and the stability of are tied together.

This consideration is the stepping stone of our selection criterion for . To elaborate further, repeated applications of the triangle inequality yield

| (22) |

which implies that the term has an influence on the behavior of . Therefore, we argue that to reduce the impact of outliers in the distribution of (hence, on the distribution of ), we need to control the concentration of about its mean. To study this quantity, we make use of (9) and (10) and we define a data-driven and non-asymptotic (i.e. depending on the finite , on the estimated and on the guessed ) selection criterion for .

We refer to Appendix .3.2 for the mathematical detail, here, we simply state the basic intuition. Our idea hinges on the fact that controls the impact that the outliers have on the concentration bounds of . Thus, one may think of selecting the value(s) of ensuring a desired level of stability in the distribution of . Operationally, we encode this stability in the ratio between the quantiles of in the presence and in the absence of contamination (see the quantity , as available in Appendix .3.2). Then, we measure the impact that different values of have on this ratio computing its derivative w.r.t. (see the quantity , as available in Appendix .3.2). Finally, we specify a tolerance for the changes in this derivative and we identify the value(s) of yielding the desired level of stability.

The described procedure allows to identify a (range of values of) , not too close to zero and not too large either. This is a suitable and sensible achievement. Indeed, on the one hand, values of too close to zero imply that trims too many observations which likely include inliers and most outliers. On the other hand, too large values of imply that trims a very limited number of outliers; see Appendix .3.1 for a numerical illustration of this aspect in terms of MSE as a function of . Additional methodological aspects of the selection procedure and its numerical illustration are deferred to Appendix .3.1 and Appendix .3.2.

Appendix

This Appendix complements the results available in the main text. Specifically, Appendix .1 provides some key theoretical results of optimal transport—the reader familiar with optimal transport theory may skip Appendix .1. All proofs are collected in Appendix .2. Appendix .4, as a complement to §5, provides more details and additional numerical results of a few applications of ROBOT—see Appendix .4.1 for an application of ROBOT to detect outliers in the context of parametric estimation in a linear regression model. In Appendix .4.2, we provide more details about implementation of RWGAN. Appendix .3 contains the methodological aspects of selection. All our numerical results can be reproduced using the (Python and R) code available upon request to the corresponding author.

.1 Some key theoretical details about optimal transport

This section is a supplementary to Section 2.1. For deep details about optimal transport, we refer the reader to Villani (2003, 2009).

Measure transportation theory dates back to the celebrated work [43], in which Monge formulated a mathematical problem that in modern language can be expressed as follows. Given two probability measures , and a cost function , Monge’s problem is to solve the minimization equation

| (23) |

(in the measure transportation terminology, means that is pushing foward to ). The solution to the Monge’s problem (MP) (23) is called optimal transport map. Informally, one says that transports the mass represented by the measure to the mass represented by the measure . The optimal transport map which solves problem (23) (for a given ) hence naturally yields minimal cost of transportation.

It should be stressed that Monge’s formulation has two undesirable prospectives. First, for some measures, no solution to (23) exists. Considering, for instance, the case that is a Dirac measure while is not, there is no transport map which transport mass between and . Second, since the set of all measurable transport map is non-convex, solving problem (23) is algorithmically challenging.

Monge’s problem was revisited by [33], in which a more flexible and computationally feasible formulation was proposed. The intuition behind the the Kantorovich’s problem is that mass can be disassembled and combined freely. Let denote the set of all joint probability measures of and . Kontorovich’s problem aims at finding a joint distribution which minimizes the expectation of the coupling between and in terms of the cost function , and it can be formulated as

| (24) |

A solution to Kantorovich’s problem (KP) (24) is called an optimal transport plan. Note that Kantorovich’s problem is more general than Monge’s one, since it allows for mass splitting. Moreover, unlike , the set is convex, and the solution to (24) exists under some mild assumptions on , e.g., lower semicontinuous (see [57, Chapter 4]). [11] established the relationship between optimal transport plan and optimal transport map when the cost function is the squared Euclidean distance. More specifically, the optimal transport plan can be expressed as .

The dual form (KD) of Kantorovich’s primal minimization problem (24) is to solve the maximization problem

| (25) |

where is the set of bounded continuous functions on . According to Theorem 5.10 in [57], if the cost function is a lower semicontinuous, there exists a solution to the dual problem such that the solutions to KD and KP coincide, namely there is no duality gap. In this case, the solution takes the form

| (26) |

where the functions and are called -concave and -convex, respectively, and (resp. ) is called the -transform of (resp. ). A special case is that when is a metric on , equation (25) simplifies to

| (27) |

This case is commonly known as Kantorovich-Rubenstein duality.

Another important concept in measure transportation theory is Wasserstein distance. Let denote a complete metric space equipped with a metric , and let and be two probability measures on . Solving the optimal transport problem in (24), with the cost function , would introduce a distance, called Wasserstein distance, between and . More specifically, the Wasserstein distance of order is defined by (2).

.2 Proofs

Proof of Theorem 2.1. First, if , then and hence

where the supremum of the left-hand side is reached when . Therefore, is c-convex and . Combined with Theorem 5.10 in [57], the proof can be completed. ∎

Proof of Lemma 3.1. We will prove that satisfies the axioms of a distance. Let be three points in the space . First, the symmetry of , that is, , follows immediately from

Next, it is obvious that . Assuming that , then

which entails that since is a metric. By definition, we also have for all .

Finally, note that

when or , and

when and . Therefore, we conclude that is a metric on . ∎

Proof of Theorem 3.2. We prove that satisfies the axioms of a distance. First, is obvious. Conversely, letting be two probability measures such that , then there exists at least an optimal transport plan [57]. It is clear that the support set of is the diagonal . Thus, for all continuous and bounded functions ,

which implies .

Next, let and be three probability measures on , and let , be optimal transport plans. By the Gluing Lemma, there exist a probability measure in with marginals , and on . Use the triangle inequality in Lemma 3.1, we obtain

Moreover, the symmetry is obvious since

Finally, note that is finite since

In conclusion, is a finite distance on . ∎

Proof of Theorem 3.4. First, we prove that is monotonically increasing with respect to . Assuming that , then . Suppose that there is an optimal transport plan such that reaches its minimum. Then is not necessarily the optimal transport plan when the cost function is . We therefore have .

Then we prove the continuity of . Fixing and letting , we have

Therefore, is continuous.

Now we turn to the last part of Theorem 3.4. Note that if exists, we have for any . Since is increasing with respect to , its limit exists. It remains to prove . Since , for any fixing , there exists a number such that where is the optimal transport plan corresponding to the cost function . Let , then and . Finally, since is arbitrarily small, we have . ∎

In order to prove Theorem 3.5, we need a lemma which shows that a Cauchy sequence in robust Wasserstein distance is tight.

Lemma .1.

Let be a Polish space and be a Cauchy sequence in . Then is tight.

Proof of Lemma .1. Because is a Cauchy sequence, we have

as Hence, for , there is a such that

| (28) |

Then for any , there is a such that (if , this is (28); if , we just let ).

Since the finite set is tight, there is a compact set such that for all . By compactness, can be covered by a finite number of small balls, that is, for a fixed integer .

Now write

Note that and is -Lipschitz. By Theorem 2.1, we find that for , (this is reasonable because we need as small as possible) and arbitrary,

Inequality holds for

Note that if , and, for each , we can find such that . We therefore have

Now we have shown the following: For each there is a finite family such that all measures give mass at least to the set .

For , we can find such that

Now we let

It is clear that .

By construction, is closed and it can be covered by finitely many balls of arbitrarily small radius, so it is also totally bounded. We note that in complete metric space, a closed totally bounded set is equivalent to a compact set. In conclusion, is compact. The result then follows. ∎

Proof of Theorem 3.5. First, we prove that converges to in if . By Lemma .1, the sequence is tight, so there is a subsequence such that converges weakly to some probability measure . Let be the solution of the dual form of ROBOT for and . Then, by Theorem 2.1,

where holds since the subsequence converges weakly to and holds since is a subsequence of . Therefore, , and the whole sequence has to converge to weakly.

Conversely, suppose converges to weakly. By Prokhorov’s theorem, forms a tight sequence; also, is tight. Let be a sequence representing the optimal plan for and . By Lemma 4.4 in [57], the sequence is tight in . So, up to the extraction of a subsequence, denoted by , we may assume that converges to weakly in . Since each is optimal, Theorem in [57] guarantees that is an optimal coupling of and , so this is the coupling . In fact, this is independent of the extracted subsequence. So converges to weakly. Finally,

∎

Proof of Theorem 3.7. First, we prove completeness. Let be a Cauchy sequence. By Lemma .1, is tight and there is a subsequence which convergence to a measure in . Therefore, the continuity of (Corollary 3.6) entails that

The result of the completeness follows.

Then, we complete the proof of separability by using a rational step function approximation. We notice that lies in and is integrable. So for , we can find a compact set such that Letting be a countable dense set in , we can find a finite family of balls , which cover . Moreover, by letting

then still covers the , and and are disjoint for . Then by defining a step function , we can easily find

Moreover, can be written as . Next, we prove that can be approximated to arbitrary accuracy by another step function with rational coefficients . Specifically,

Therefore, we can replace with some well-chosen rational coefficients , and can be approximated by with arbitrary precision. In conclusion, the set of finitely supported measures with rational coefficients is dense in . ∎

Proof of Theorem 3.9. The proof derives from [40, Theorem 5.1]. To apply this result, we have to check that Condition (11) p 779 in [40] holds. For this, let denote i.i.d. data with common distribution , independent from . Denote by , fix and let , (the data in the original dataset of inliers has been replaced by an independent copy ). Let denote the associated empirical measure. Let us define the function . We have, by the triangular inequality established in Theorem 3.2:

By the duality formula given in Theorem 2.1, we have moreover

Therefore, we deduce that, for any ,

Thus, the function satisfies Condition (11) p 779 in [40] with and , thus by Theorem 5.1 in [40], we deduce that, for any ,

Next, letting

and repeating the above arguments yield

Inequality (9) then follows from piecing together the above two inequalities.

Proof of Theorem 3.10. The proof is divided into three steps. We start by using the duality formula derived in Theorem 2.1 and prove a slight extension of Dudley’s inequality in the spirit of [9] to reduce the bound to the computation of the entropy numbers of the of -Lipschitz functions defined on and taking values in . Then we compute these entropy numbers using a slight extension of the computation of these numbers done, for example in [56, Exercise 8.2.8]. Finally, we put together all these ingredients to derive our final results.

Step 1: Reduction to bounding entropy. Using the dual formulation of , we get that

Fix first and in . The random variables

are independent, centered and take values in and therefore by Hoeffding’s inequality, the process , where the variables

has increments satisfying

| (29) |

where, here and in the rest of the proof . Introduce the truncated sup-distance

we just proved that the process has sub-Gaussian increments with respect to the distance .

At this point, we will use a chaining argument to bound the expectation . Let denote the largest such that . As is larger than the diameter of w.r.t. , we define and all functions satisfy . For any denote by a sequence of nets of with minimal cardinality, so . For any and , let be such that . We have for any , so we can write, for any ,

| (30) |

Write , we have thus

| (31) |

We bound both terms separately. For any function , , so . On the other hand, for any and , by (29),

For any ,

we deduce that

| (32) |

Now we use the following classical result.

Lemma .2.

If are random variables satisfying

| (33) |

then

We apply Lemma .2 to the random variable . There are less than such variables, and all of them satisfy Assumption 33 with thanks to (32). Therefore, Lemma .2 and imply that

We conclude that, for any , it holds that

| (34) |

To exploit this bound, we have now to bound from above the entropy numbers of .

Step 2: Bounding the entropy of . Let and let denote an -net of with size (for a proof that such nets exist, we refer for example to [56, Corollary 4.2.13]: For any , there exists such that . Let also denote an -grid of with step size and . We define the set of all functions such that

and linearly interpolates between points in . Starting from and moving recursively to its neighbors, we see that

where the first term counts the number of choices for and is an upper bound on the number of choices for given the value of its neighbor due to the constraint . This shows that there exists a numerical constant such that

By construction, . Fix now . By construction of , there exists such that for any . Moreover, for any , there exists such that . As both and are -Lipschitz, we have therefore that

We have thus established that there exists a numerical constant such that

| (35) |

Step 3: Conclusion of the proof. Plugging the estimate (35) into the chaining bound (34) yields, for any ,

Here, the behavior of the last sum is different when and .

When , the sum is convergent, so we can choose and the bound becomes

When , the sum is no longer convergent. For , we have

Taking such that yields, as ,

| (36) |

Finally, for any , we have

Optimizing over yields the choice and thus, as ,

| (37) |

∎

Lemma .3.

Let be a sequence in and . Suppose 0 implies that convergence weakly to . Then the map is continuous, where .

Proof.

The result follows directly from Corollary 3.6. ∎

Lemma .4.

The function is continuous with respect to weak convergence. Furthermore, if implies that converges weakly to , then the map is continuous.

Proof.

The proof moves along the same lines as the proof of Lemma A2 in [8]. It is worth noting that , so we can use dominated convergence theorem instead of Fatou’s lemma. Because of the continuity of robust Wasserstein distance, we have

This implies is continuous. ∎

In order to prove Theorem 4.4, we introduce the concept of the epi-converge as follows.

Definition .5.

We call a sequence of functions epi-converge to if for all ,

For any , the continuity of the map follows from Lemma .3, via Assumption 4.2. Next, by definition of the infimum, the set with the of Assumption 4.3 is non-empty. Moreover, since is continuous, the set is non-empty and the set is closed. Then, by Assumption 4.3, is a compact set.

The next step is to prove the sequence of functions epi-converges to by applying Proposition 7.29 of [48]. Then we can obtain the results by Proposition 7.29 and Theorem 7.31 of [48]. These steps are similar to [8] and are hence omitted.∎

Moreover, we state

Theorem .6 (Measurability of MRWE).

Suppose that is a -compact Borel measurable subset of . Then under Assumption 4.2, for any and , there exists a Borel measurable function that satisfies if this set is non-empty, otherwise, .

Th. .6 implies that, for any , there is a measurable function that coincides (or it is very close) to the minimizer of .

.3 The choice of

.3.1 Some considerations based on RWGAN

Here we focus on the applications of RWGAN and MERWE and illustrate, via Monte Carlo experiments, how would affect the results. First, we recall the experiment in § 5.2: MERWE of location for sum of log-normal. We keep other conditions unchanged, and just change the parameter to see how the estimated value changes. We set , and and other parameters are still set as in § 5.2. The result is shown in Figure 7. We can observe that the MSE is large when is small. The reason for this is that we truncate the cost matrix prematurely at this time, making robust Wasserstein distance unable to distinguish the differences between distributions. When is large, there is negligible difference between Wasserstein distance and robust Wasserstein distance, resulting in almost the same MSE estimated by MERWE and MEWE. When is moderate, within a large range (e.g., from to ), the MERWE outperforms the MEWE.

We consider a novel way to study parameter selection based on our Theorem 2.1, which implies that modifying the constraint on is equivalent to changing the parameter in equation (4). This reminds us that we can use RWGAN-1 to study how to select .

We consider the same synthetic data as in § .4.2 and set and . To quantify training quality of RWGAN-1, we take the Wasserstein distance of order 1 between the clean data and data generated from RWGAN-1. Specifically, we draw 1000 samples from model (18) and generate 1000 samples from the model trained by RWGAN-1 with different parameter . Then, we calculate the empirical Wasserstein distance of order 1 between them. The plot of the empirical Wasserstein distance against is shown in Figure 8. The Wasserstein distance has a trend of decreasing first and increasing later on: it reaches the minimum at . Also, we notice that over a large range of values (i.e. from to ), the Wasserstein distance is small: this illustrates that RWGAN-1 has a good outlier detection ability for a choice of the penalization parameter within this range.

Heuristically, a large means the truncated cost function is just slightly different from the non-truncated one. Therefore, RWGAN-1 will be greatly affected by outliers. When is small, the cost function is severely truncated, which means that we are modifying too much of the distribution. This deviates from our main purpose of GAN (i.e., ). Hence, the distribution of final outputs generated from RWGAN-1 becomes very different from the reference one.

Both experiments suggest that to achieve good performance of ROBOT, one could choose a moderate within a large range. Actually, the range of a “good” depends on the size and proportion of outliers: When the size and proportion are close to zero, both moderate and large will work well, since only slight or no truncation of the cost function is needed; when the size and proportion are large, a large truncation is needed, so this range becomes smaller. Generally, we prefer to choose a slightly larger , as we found in the experiment that when we use a larger , the performance of ROBOT is at least no worse than that using OT.

.3.2 Selection based on concentration inequality

Methodology. In the Monte Carlo setting of Section 5.2, Figure 7 in Appendix .3.1 illustrates that MERWE has good performance (in terms of MSE) when is within the range . The estimation of the MSE represents, in principle, a valuable criterion that one can apply to select . However, while this idea is perfectly fine in the case of synthetic data, its applicability seems to be hard in real data examples, where the true underling parameter is not known. To cope with this issue, [58] propose to use a pilot estimators which can yield an estimated MSE. The same argument can be considered also for the our estimator, but this entails the need for selecting a pilot estimator: a task that cannot be easy for some complex models and for the models considered in this paper. Therefore, we propose and investigate the use of an alternative approach, which yields a range of values of similar to the one obtained computing the MSE but it does not need to select such a pilot estimator.

To start with, let us consider Th. 3.9. At the reference model, namely in the absence of contamination (i.e. and ), the mean concentration in (9) implies that takes on values larger than the threshold

| (38) |

with probability bounded by . In the presence of contamination, from (12) we have that the probability that the deviations of from its mean are larger than the threshold

| (39) |

is still bounded by .

Then, to select we propose to set up the following criterion, which compares the changes in (38) and (39) due to different values of . More in detail, for a fixed , we assume a contamination level which represents a guess that the statistician does about the actual level of data contamination. Then we compare the thresholds at different values of . To this end, we compute the ratio between (38) and (39) and define the quotient

| (40) |

Assuming that is a twice differentiable function of , one can check that decreases monotonically in . Indeed, the partial derivative with respect to of is

| (41) |

where

is positive when . Moreover, let us denote by and the first and second derivative of with respect to . So the monotonicity of depends on . Now, notice that when and that . Moreover, from the definition of , is increasing with respect to and when . So when and when . This means decreases monotonically.