Inference in generalized bilinear models

Abstract

Latent factor models are widely used to discover and adjust for hidden variation in modern applications. However, most methods do not fully account for uncertainty in the latent factors, which can lead to miscalibrated inferences such as overconfident p-values. In this article, we develop a fast and accurate method of uncertainty quantification in generalized bilinear models, which are a flexible extension of generalized linear models to include latent factors as well as row covariates, column covariates, and interactions. In particular, we introduce delta propagation, a general technique for propagating uncertainty among model components using the delta method. Further, we provide a rapidly converging algorithm for maximum a posteriori GBM estimation that extends earlier methods by estimating row and column dispersions. In simulation studies, we find that our method provides approximately correct frequentist coverage of most parameters of interest. We demonstrate on RNA-seq gene expression analysis and copy ratio estimation in cancer genomics.

Keywords: batch effects, factor analysis, Fisher information, negative-binomial regression, uncertainty quantification.

1 Introduction

Latent factor models have become an essential tool for analyzing and adjusting for hidden sources of variation in complex data. Building on generalized linear model theory, generalized bilinear models (GBMs) provide a flexible framework incorporating latent factors along with row covariates, column covariates, and interactions to analyze matrix data (Choulakian, 1996; Gabriel, 1998; de Falguerolles, 2000; Perry and Pillai, 2013; Hoff, 2015; Buettner et al., 2017). However, uncertainty quantification is a persistent problem in large complex models, and GBMs are particularly challenging due to the non-linearity of multiplicative terms, constraints and strong dependencies among parameters, inapplicability of normal model theory, and the fact that the number of parameters grows with the data.

Most latent factor methods do not fully account for uncertainty in the latent factors. For example, to remove batch effects in gene expression analysis, several methods first estimate a factorization and then treat as a known matrix of covariates, accounting for uncertainty only in (Leek and Storey, 2007, 2008; Sun et al., 2012; Risso et al., 2014). In copy number variation detection, it is common to treat the estimated as known and subtract it off (Fromer et al., 2012; Krumm et al., 2012; Jiang et al., 2015). In principle, Bayesian inference provides full uncertainty quantification (Carvalho et al., 2008), however, Markov chain Monte Carlo tends to be slow in large parameter spaces with strong dependencies, as in the case of GBMs. Variational Bayes approaches are faster (Stegle et al., 2010; Buettner et al., 2017; Babadi et al., 2018), but rely on factorized approximations that tend to underestimate uncertainty. Meanwhile, the classical method of inverting the Fisher information matrix is computationally prohibitive in large GBMs.

In this article, we introduce a novel method for uncertainty quantification in GBMs, focusing on the case of count data with negative binomial outcomes. The basic idea is to propagate uncertainty between model components using the delta method, which can be done analytically using closed-form expressions involving the gradient and the Fisher information; we refer to this as delta propagation. The method facilitates computation of p-values and confidence intervals with approximately correct frequentist properties in GBMs. Further, we provide an algorithm for maximum a posteriori GBM estimation that extends previous work by estimating row- and column-specific dispersion parameters, improving numerical stability, and explicitly handling identifiability constraints.

In a suite of simulation studies, we find that our methods perform favorably in terms of consistency, frequentist coverage, computation time, algorithm convergence, and robustness to the outcome distribution. We then apply our methods to gene expression analysis, (a) comparing performance with DESeq2 (Love et al., 2014) on a benchmark dataset of RNA-seq samples from lymphoblastoid cell lines, and (b) testing for age-related genes using RNA-seq data from the Genotype-Tissue Expression (GTEx) project (Melé et al., 2015). Finally, we apply our methods to copy ratio estimation in cancer genomics, comparing performance with the Genome Analysis Toolkit (GATK) (Broad Institute, 2020) on whole-exome sequencing data from the Cancer Cell Line Encyclopedia (Ghandi et al., 2019).

The article is organized as follows. In Section 2, we define the GBM model and we address identifiability, interpretability, and residuals. In Sections 3 and 4, we describe our estimation and inference methods, respectively. In Section 5, we establish theoretical results, and Section 6 contains simulation studies. In Sections 7 and 8, we apply our methods to gene expression analysis and copy ratio estimation in cancer genomics. The supplementary material contains a discussion of previous work and challenges, additional empirical results, mathematical derivations and proofs, and step-by-step algorithms.

2 Model

In this section, we define the class of models considered in this paper and we provide conditions guaranteeing identifiability and interpretability of the model parameters. For and , suppose is a random variable such that

| (2.1) |

where and are observed covariates, , , , , , and are parameters to be estimated, and is a smooth function such that is positive, referred to as the link function. In matrix form, denoting , Equation 2.1 is equivalent to

| (2.2) |

where is applied element-wise to the matrix . To be able to use capital to denote scalar random variables, we use bold to denote the data matrix. We refer to this as a generalized bilinear model (GBM), following the terminology of Choulakian (1996).

In the genomics applications in Sections 7 and 8, we use negative binomial outcomes with log link , and the role of each piece is as follows (see Figure 1): is the read count for feature in sample , contains feature covariates and contains the corresponding coefficients, contains sample covariates and contains the corresponding coefficients, contains intercepts and coefficients for interactions between the ’s and ’s, and is a low-rank matrix that captures latent effects due, for example, to unobserved covariates such as batch.

2.1 Identifiability and interpretation

For identifiability and interpretability, we impose certain constraints (Conditions 2.1 and 2.2). The only constraints on the covariates are that and are full-rank, centered, and include a column of ones for intercepts; see below. The rest of the constraints are enforced on the parameters during estimation. We write to denote the identity matrix to distinguish it from the number of features, .

Condition 2.1 (Identifiability constraints).

Assume the following constraints:

-

(a)

and are invertible,

-

(b)

, , , and ,

-

(c)

and ,

-

(d)

is a diagonal matrix such that , and

-

(e)

the first nonzero entry of each column of is positive,

where , , , , , , , , and .

In Theorem 5.1, we show that Condition 2.1 is sufficient to guarantee identifiability of , , , , , and in any GBM satisfying Equation 2.2. More precisely, letting

| (2.3) |

for some fixed full-rank and , Theorem 5.1 shows that is a one-to-one function on the set of parameters satisfying Condition 2.1.

Condition 2.2 (Interpretability constraints).

Assume that (a) and for all , and (b) and for all , .

When Condition 2.2(a) holds, we can rearrange the right-hand side of Equation 2.1 as

Using this and assuming Conditions 2.1 and 2.2, we show in Theorem 5.2 that the interpretation of each parameter is: (1) is the overall intercept, , (2) is a sample-specific offset and is a feature-specific offset, (3) is the mean effect of the th feature covariate and is the sample-specific offset of this effect, (4) is the mean effect of the th sample covariate and is the feature-specific offset of this effect, and (5) is the effect of the interaction for and .

GBMs can be decomposed in terms of the sum-of-squares of each component’s contribution to the overall model fit, enabling one to interpret the proportion of variation explained by each component. Specifically, in Theorem 5.3, we show that

whenever Condition 2.1(b) holds, where denotes the sum of squares of the entries of a matrix. This extends a similar result by Takane and Shibayama (1991).

2.2 Outcome distributions

For the distribution of , we focus on discrete exponential dispersion families (Jørgensen, 1987; Agresti, 2015). Specifically, suppose where for ,

| (2.4) |

is a probability mass function for all and ; this is referred to as a discrete EDF. Here, , , and denotes the integers. For any discrete EDF, the mean and variance are and (Jørgensen, 1987); also see Section S4. We refer to as the inverse dispersion parameter, and is the dispersion. Discrete EDFs can be translated into standard EDFs of the form via the transformation . We refer to a GBM with EDF outcomes as an EDF-GBM.

Negative binomial (NB) outcomes. In the applications in Sections 7 and 8, we use negative binomial outcomes: where is the mean and is the dispersion. This is a discrete EDF as in Equation 2.4 with and ; thus, and . The NB distribution is an overdispersed Poisson since if and , then integrating out , we have . We refer to a GBM with NB outcomes as an NB-GBM.

We parametrize the dispersions as and work in terms of , , and , subject to the identifiability constraints and . Note that this makes .

2.3 Residuals and adjusting out selected effects

Residuals are useful for many purposes, such as visualization, model criticism, and downstream analyses. We define GBM residuals as where as in Equation 2.3 and is a small constant to make well-defined; for NB-GBMs, we use as a default. A model-based estimate of the variance of is given by where and are the mean and variance of under the model. This formula can be derived either from the Fisher information or from a first-order Taylor approximation to . It turns out that the corresponding precisions play a key role in our GBM estimation and inference algorithms. In the NB case with , these residual precisions are .

Often, it is useful to adjust out some effects but not others. Let , , and be the indices of the columns of , , and (or ) that one does not wish to adjust out. We define the partial residuals where is defined as in Equation 2.3 but with , , and replaced by , , and .

3 Estimation

We provide a general algorithm for estimating the parameters of a discrete EDF-GBM (Equations 2.2 and 2.4), and we augment the algorithm to estimate the NB-GBM dispersion parameters as well. Here, we only give an outline; see Section S7 for step-by-step details.

Inputs. The required inputs are , , , and . Optional inputs are the maximum step size , prior precisions , prior means and precisions for the log-dispersions in the NB-GBM case, and the convergence criterion. As defaults, we use , , , , convergence tolerance for the relative change in log-likelihood+log-prior, and a maximum of iterations.

Preprocessing.

- (1)

-

(2)

Unless the covariates are already on a common scale in terms of units, standardize and such that and for all and .

-

(3)

Precompute the pseudoinverses and .

Initialization.

-

(1)

Solve for , , and to minimize the sum-of-squares of the GBM residuals .

-

(2)

Randomly initialize , , and by computing the truncated singular value decomposition (of rank ) of a random matrix with i.i.d. entries.

-

(3)

In the case of NB-GBMs, iteratively update , , and for a few iterations.

Iteration. In each iteration, we cycle through the components of the model, updating each in turn using an optimization-projection step, consisting of an unconstrained optimization step and a likelihood-preserving projection onto the constrained parameter space. We use a bounded, regularized version of Fisher scoring to perform the unconstrained optimization step for each of , , , , , and , separately, holding all the other parameters fixed. For a generic parameter vector , the (unbounded) regularized Fisher scoring step is where is the log-likelihood and is a regularization parameter. This arises from optimizing the log-likelihood plus the log-prior, where the prior on is , since then the gradient and Fisher information are and . Since these Fisher scoring steps occasionally diverge, for numerical stability we bound them using

| (3.1) | ||||

where is the Euclidean norm. The idea is that points in the same direction as , but its root-mean-square is capped at . Similarly, for and in the NB-GBM, we use bounded regularized Newton steps for the unconstrained optimizations, since the (expected) Fisher information for and is not closed form.

4 Inference

In this section, we introduce our methodology for computing approximate standard errors for the parameters of a GBM. Since the standard technique of inverting the Fisher information matrix does not work well on GBMs, we develop a novel technique for propagating uncertainty from one part of the model to another; see Section 4.1. We provide an outline of our inference algorithm in Section 4.2, and step-by-step details are in Section S8.

4.1 Delta propagation method

In fixed-dimension parametric models, the asymptotic covariance of the maximum likelihood estimator is equal to the inverse of the Fisher information matrix. Thus, classically, approximate standard errors are given by the square roots of the diagonal entries of the inverse Fisher information. However, in GBMs, inverting the full (constraint-augmented) Fisher information does not work well for two reasons: (1) it is computationally intractable for large data matrices, and (2) it does not yield well-calibrated standard errors in terms of coverage, presumably because the number of parameters grows with the amount of data. Meanwhile, the inverse Fisher information for each component individually (for instance, for ) is computationally efficient, but severely underestimates uncertainty since it treats the other components as known, and thus, can be thought of as representing the conditional uncertainty in each component given the other components.

We propose a general technique for approximating, for each model component, the additional variance due to uncertainty in the other components. By adding this to the conditional variance (that is, in the case of ), we obtain approximate variances that are better calibrated, empirically. The basic idea is to write the estimator for each component as a function of the other components, and propagate the variance of the other components through this function using the same idea as the delta method.

In general, suppose we have a model with parameters and , and we wish to quantify the uncertainty in due to uncertainty in . Suppose the true values are and , and let and be the maximum likelihood estimators. Define

where is the gradient of the log-likelihood and is the Fisher information matrix for , evaluated at . The interpretation is that , since is a Fisher scoring step on starting at , when the current estimate of is .

By a Taylor approximation to at , we have where such that . Define the random element , where the randomness comes from the data. Assuming for some , we have . Meanwhile, under standard regularity conditions, and . Then, by the law of total covariance,

The interpretation of this decomposition is that represents the uncertainty in given , and represents the uncertainty in due to uncertainty in . Since , plugging in empirical estimates leads to the approximation

| (4.1) |

where .

To compute the Jacobian matrix , observe that the th column of is

| (4.2) |

by Bishop (2006, Eqn C.21), where , , and is applied element-wise. Equation 4.2 also holds for , but with in place of ; this facilitates computing in Equation 4.1. To obtain standard errors for each element of , computation is simplified by the fact that we only need the diagonal of , and to simplify computation even further we use a diagonal matrix for when we apply this technique to GBMs. Additionally, since we use maximum a posteriori estimates, we use the regularized Fisher information and the gradient of the log-posterior in the formulas above.

4.2 Outline of inference algorithm

Here we outline our procedure for computing GBM standard errors; see Section S8 for step-by-step details. The strategy is to handle and jointly by inverting the regularized, constraint-augmented Fisher information matrix for and then employ delta propagation for , , , , and ; see Figure 2. Empirically, we find that some of the delta propagation terms are negligible, thus, these have been excluded.

For notational convenience, we vectorize the parameter matrices as follows. For , define . Denote , , , , , and . It is easier to work with the vectorized transposes of , , , and (that is, rather than ), since then the Fisher information matrices have a block diagonal structure. We write , , , , , and to denote the regularized Fisher information for , , , , , and , respectively, for instance, . We write and for the regularized, observed Fisher information for and , that is, .

Inputs. The required inputs are , , , and the estimates of , , , , , , , , and . Optional inputs are the prior parameters ().

Compute conditional uncertainty for each component.

-

(1)

Compute and the diagonal blocks of , , , and .

-

(2)

Compute the diagonals of and .

Compute joint uncertainty in accounting for constraints.

-

(1)

Compute , the regularized constraint-augmented Fisher information for .

-

(2)

Compute . (It is key to do this in a computationally efficient way.)

-

(3)

Define and to be the entries of corresponding to and .

Propagate uncertainty between components using delta propagation.

-

(1)

Propagate uncertainty in to and , to obtain and , the additional variance of the estimators of and due to uncertainty in .

-

(2)

Propagate uncertainty in and through to , to obtain .

-

(3)

Propagate uncertainty in , , , to and , to get and .

Compute approximate standard errors.

-

(1)

and

-

(2)

-

(3)

and

-

(4)

and

Here, is the element-wise square root. We do not provide standard errors for and , since it seems difficult to estimate them without non-negligible bias. See Section S8 for the complete step-by-step algorithm. See Section 5 for computation time complexity.

5 Theory

In this section, we provide theoretical results on GBMs. The proofs are in Section S10.

Theorem 5.1 (Identifiability).

If and satisfy Condition 2.1 and

| (5.1) |

then , , , , , and . In particular, for any GBM satisfying Equation 2.2 for some , , and , if Condition 2.1 holds, then , , , , , and are identifiable in the sense that they are uniquely determined by the distribution of ; in fact, they are uniquely determined by .

Theorem 5.2 (Interpretation of parameters).

For a matrix , we write for the sum of squares.

Theorem 5.3 (Sum-of-squares decomposition).

If Condition 2.1(b) holds, then

Theorem 5.4 shows that the projections we use in the estimation algorithm are likelihood-preserving. The idea is that, for example, is the result of an unconstrained optimization step on , and we project to to enforce the constraints in Condition 2.1 without affecting the likelihood. To interpret items 3 and 4 of Theorem 5.4, note that in the algorithm, we optimize with respect to and , rather than and . We write to denote the pseudoinverse. When exists, .

Theorem 5.4 (Likelihood-preserving projections).

Next, we provide the computation time complexity of our estimation and inference algorithms in Sections 3 and 4. To simplify the expressions, here we assume

| (5.2) |

For the estimation algorithm, preprocessing and initialization take time, and Table 1 summarizes the computation time for updating each model component. The table first breaks out the time required to compute (Equation 2.3), which is a prerequisite within each other update, and then lists the time required for each update given . In total, it takes time to perform each overall iteration.

For the inference algorithm, Table 2 shows the computation time assuming Equation 5.2 and also assuming . These are one-time costs since there are not repeated iterations. When , the most expensive operation tends to be computing the joint uncertainty in , and as grows this dominates the cost. We have experimented extensively but have not found a faster alternative that provides well-calibrated standard errors.

| Operation | Time complexity |

|---|---|

| Computing | |

| Updating | |

| Updating | |

| Updating | |

| Updating , , and | |

| Updating and | |

| Total per iteration |

| Operation | Time complexity |

|---|---|

| Preprocessing | |

| Conditional uncertainty for each component | |

| Joint uncertainty in accounting for constraints | |

| Propagate uncertainty between components | |

| Compute approximate standard errors | |

| Total |

6 Simulations

In this section, we present simulation studies assessing (a) consistency and statistical efficiency, (b) accuracy of standard errors, (c) computation time and algorithm convergence, and (d) robustness to the outcome distribution. See Section S2 for more simulation results.

In each simulation run, the data are generated as follows; see Section S2 for full details. We generate the covariates using one of three schemes, Normal, Gamma, or Binary, then we generate the true parameters using either a Normal or Gamma scheme, and finally we generate the outcome data using the log link and a NB (negative binomial), LNP (log-normal Poisson), Poisson, or Geometric distribution. For brevity, we refer to each combination of choices by the triplet of outcomes/covariates/parameters, for instance, NB/Binary/Normal.

Typical example. Figure 3 shows scatterplots of the estimated versus true parameters for an NB/Normal/Normal simulation with , , , , and . Each dot represents a single univariate parameter, for example, the plot for contains dots, one for each entry . The error bars are . Visually, the estimates are close to true values, and the standard errors look appropriate. Since the likelihood and prior are invariant to permutations and sign changes of the latent factors, in this section we permute and flip signs to find the correct assignment to the true latent factors. Note that the estimates are biased upward when the true value of is very low; this is because very low values of make row roughly Poisson, and in this case any value of from to could yield a reasonable fit. The prior on prevents the estimate from diverging, but also leads to an upward bias when the true value is very low.

6.1 Consistency and statistical efficiency

In many applications, is much larger than . One would hope that for any , the estimates of , , , and would be consistent as since then these parameters have fixed dimension. Meanwhile, one cannot hope for consistency in , , and as .

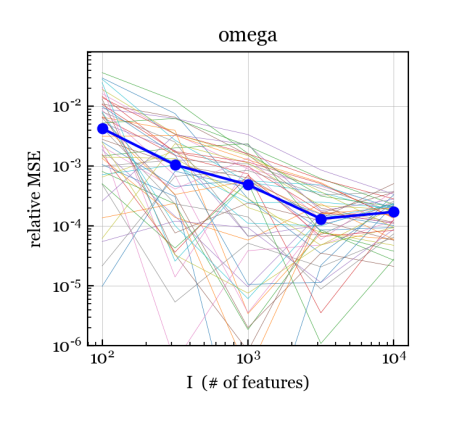

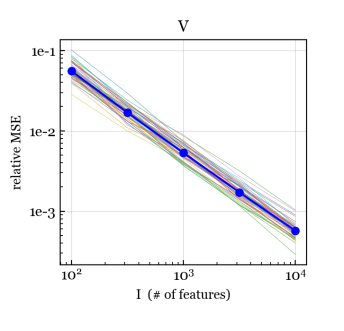

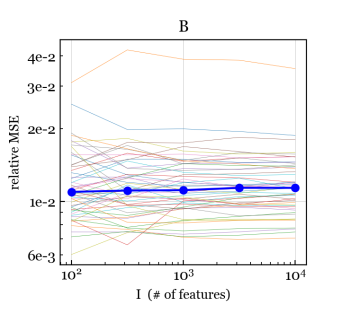

To assess consistency and efficiency, for each we use the NB/Normal/Normal simulation scheme to generate data matrices with , , , and , each with a different set of covariates and parameters. For each data matrix, we run our NB-GBM estimation algorithm with convergence tolerance . Figure 4 shows the relative mean-squared error (MSE) between the estimates and the true values for , , , and . For , we measure the relative MSE in the dispersion parametrization (rather than log-dispersion) since there is little difference between, say, and ; both make column approximately Poisson distributed.

We see that for , , , and , the relative MSE is decreasing to zero, suggesting that the estimates of these parameters are consistent as . Further, for and , the relative MSE appears to be , which is the optimal rate of convergence even for fixed-dimension parametric models. For , , and (Figure S1), the relative MSE hovers around a small nonzero value, but does not appear to be trending to zero, as expected. For and (Figure S1), the relative MSE is small and the trend is suggestive but less clear.

6.2 Accuracy of standard errors

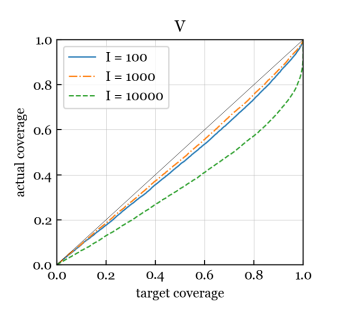

Next, we assess the accuracy of the standard errors produced by our inference algorithm, in terms of the coverage. Ideally, a 95% confidence interval would contain the true parameter 95% of the time, but even when the model is correct, this is not guaranteed since intervals are usually based on an approximation to the distribution of an estimator. To assess coverage, for each , we use the NB/Normal/Normal scheme to generate data matrices with , , , and , each with a different set of covariates and parameters. For each data matrix, we run our NB-GBM estimation algorithm (with tolerance ) and then we run our NB-GBM inference algorithm to obtain approximate standard errors. We construct Wald-type confidence intervals for each univariate parameter, for example, the 95% confidence interval for is where is the approximate standard error for .

Figure 5 shows actual coverage versus target coverage, estimated by combining across all runs and across all entries of each parameter matrix/vector. Perfect coverage would be a straight line on the diagonal. We exclude , , and in these coverage results since it seems challenging to estimate them without non-negligible bias, skewing the results. We see in Figure 5 that the actual coverage for , , , , and is excellent at every target coverage level from 0% to 100%. For and , the coverage is reasonably good for the smaller values of but appears to degrade when increases.

6.3 Computation time and algorithm convergence

Computation time. For each combination of and , we generate data matrices using the NB/Normal/Normal simulation scheme with , , and . For each and , Figure 6 shows the average computation time per iteration of the estimation algorithm, along with the average computation time for the inference algorithm. These empirical results agree with our theory in Section 5 showing that the time per iteration scales like (that is, linearly with the size of the data matrix) and the time for inference scales like .

Algorithm convergence. Next, we evaluate the number of iterations required for the estimation algorithm to converge. Similarly to before, for , we run the NB/Normal/Normal scheme times with , , , and . Figure S2 shows the log-likelihood+log-prior (plus a constant) versus iteration number for each simulation run. In these simulations, the log-likelihood+log-prior levels off after around 5 or fewer iterations, indicating that the algorithm converges rapidly.

6.4 Robustness to the outcome distribution

To assess the robustness of the NB-GBM to the assumption that the outcome distribution is negative binomial, we rerun the experiments in Sections 6.1 and 6.2 using the following data simulation schemes: (a) LNP/Normal/Normal (b) Poisson/Normal/Normal, and (c) Geometric/Normal/Normal. The results in Figures S3, S4, and S5 show that the algorithms are quite robust to misspecification of the outcome distribution.

7 Application to gene expression analysis

In this section, we evaluate our GBM algorithms on RNA-seq gene expression data. An RNA-seq dataset consists of a matrix of counts in which entry is the number of high-throughput sequencing reads that were mapped to gene for sample . These read counts are related to gene expression level, but there are many biases – both sample-specific and gene-specific. More generally, there are often significant sources of unwanted variation—both biological and technical—that obscure the signal of interest. Most methods use pipelines that adjust for each bias sequentially, rather than in an integrated way. GBMs enable one to use a single coherent model that adjusts for gene covariates and sample covariates as well as unobserved factors such as batch effects.

7.1 Comparing to DESeq2 on lymphoblastoid cell lines

As a test of our GBM methods, we compare with DESeq2 (Love et al., 2014), a leading method for RNA-seq differential expression analysis. We first consider a benchmark dataset used by Love et al. (2014) consisting of 161 samples from lymphoblastoid cell lines (Pickrell et al., 2010). We use the subset of 20,815 genes with nonzero median count across samples.

In both DESeq2 and the GBM, we adjust for two sample covariates: sequencing center (Argonne or Yale) and cDNA concentration. To adjust for GC content, which is often the most important gene covariate, in the GBM we construct the matrix using a natural cubic spline basis with knots at the 2.5%, 25%, 50%, 75%, and 97.5% quantiles of GC content. DESeq2 does not have a built-in capacity to adjust for gene covariates, so for DESeq2, we adjust for GC using their recommended approach of pre-computing normalization factors using CQN (Hansen et al., 2012), which uses the same spline basis. Since DESeq2 does not adjust for latent factors, we first set for direct comparison; later, we set .

It is natural to use negative binomial (NB) outcomes for sequencing data since the technical variability is close to Poisson (Marioni et al., 2008), and biological variability introduces overdispersion (Robinson et al., 2010). These modeling choices yield an NB-GBM with , , , and . DESeq2 also uses an NB model, so the main difference between DESeq2 and this particular GBM is the way that the parameters and standard errors are estimated. Using a 1.8GHz processor, GBM estimation and inference took 42 seconds, whereas DESeq2+CQN took 105 seconds.

Correctness of p-values under mock null comparisons. First, we assess the calibration of p-values for testing for differential expression between two conditions. Under the null hypothesis of no difference, the p-values would ideally be uniformly distributed on . Since the Pickrell samples appear to be relatively homogeneous (when adjusting for sequencing center and cDNA concentration), we can assess how well this ideal is attained by randomly splitting the samples into two groups and testing for differential expression.

To this end, we add a sample covariate consisting of a dummy variable for the assignment of samples to the two random groups. Thus, the null hypothesis of no difference for gene is and the alternative is . The (two-sided) p-value for gene is where is the standard normal CDF (cumulative distribution function). Figure 7 shows the p-value CDF over all genes, aggregating over 50 random splits into two groups containing 80 and 81 samples, respectively. Both DESeq2 and the GBM yield p-values that are very close to the ideal uniform distribution. This indicates that both methods are accurately controlling the false positive rate.

Sensitivity to detect actual differences. To compare sensitivity, we test for differential expression between sequencing centers by computing p-values where is the index of the sequencing center covariate. Using Bonferroni to control the family-wise error rate (FWER) at 0.05, the number of genes detected as differentially expressed by the GBM and DESeq2 are 1038 and 892, respectively. Figure 7 shows the lower tail of the p-value CDFs. In these results, the GBM yields equal or greater sensitivity.

Visualization using GBM latent factors. The latent factors of the GBM provide a model-based approach to visualizing high-dimensional count data, while adjusting for covariates. To illustrate, we modify the model to use . Figure 8 shows a scatterplot of versus for the estimated matrix. Observe that this yields very tightly grouped clusters of samples from the same subject. This is analogous to plotting the first two scores in principal components analysis (PCA). Thus, for comparison, Figure S15 shows the PCA plots based on (a) log-transformed TPMs (Transcripts per Million), specifically, , and (b) the variance stabilizing transform (VST) in the DESeq2 package, using the GC adjustment from CQN. The DESeq2 model does not estimate latent factors, which is why PCA is used in DESeq2. The TPM plot is very noisy in terms of subject ID clusters. The VST plot is better than TPMs, but still not quite as clean as the GBM plot.

Overall, in terms of sensitivity, controlling false positives, computation time, and visualization, these results suggest that the GBM performs very well. When is very small, it may be beneficial to augment the GBM to use DESeq2-like shrinkage estimates for .

7.2 Analyzing GTEx data for aging-related genes

Next, we test our methods on an application of scientific interest, using RNA-seq data from the Genotype-Tissue Expression (GTEx) project (Melé et al., 2015), consisting of 8,551 samples from 30 tissues in the human body, obtained from 544 subjects. We apply the GBM to find genes whose expression changes with age, adjusting for technical biases. See Jia et al. (2018) and Zeng et al. (2020) for studies of age-related genes using GTEx.

We use the GTEx RNA-seq data from recount2 (Collado-Torres et al., 2017),111Downloaded from https://jhubiostatistics.shinyapps.io/recount on 8/7/2020. normalized using the scale_counts function in the recount R library. We use the subset of 8,551 samples that passed GTEx quality control, and the subset of genes in chromosomes 1–22 that have an HGNC-approved gene symbol and have nonzero median across all samples.

To visualize the samples, we take a random subset of 5,000 genes and estimate an NB-GBM with two latent factors and no sample covariates. For the gene covariates, we use , , and where is the sum of the exon lengths and is the GC content of gene . Thus, in this initial model for visualization, , , , , and . Figure 9 shows the latent factors ( versus ), similarly to Figure 8. The samples tend to fall into clusters according to the tissue from which they were taken. Some tissues, such as brain and blood, clearly contain two or more subclusters which turn out to correspond to subtissue types (Figure S16). Meanwhile, when more latent factors are used (that is, ), some clusters that overlap in Figure 9 become well-separated in higher latent dimensions. For comparison, running PCA on the log TPMs is not nearly as clear in terms of tissue/subtissue clusters (Figure S17).

Testing for age-related genes. To find genes that are related to aging, we add subject age as a sample covariate. Each gene then has a coefficient describing how its expression changes with age, and we compute a p-value for each gene to test whether its coefficient is nonzero. Due to the heterogeneity of tissue/subtissue types, we analyze each subtissue type separately. To perform both exploratory analysis and valid hypothesis testing, we used a random subset of 108 subjects during an exploratory model-building phase and then used the remaining 436 subjects during a testing phase with the selected model.

In the exploratory phase, we considered adjusting for various technical sample covariates and gene covariates, and varied from 0 to 10. For each model and each subtissue type, we used the GBM to find the set of genes exhibiting a significant association with age, controlling FWER at 0.05 using the Bonferroni correction. To score the relevance of each of these gene sets in terms of aging biology, we computed its F1 score for overlap with the set of aging-related genes identified by De Magalhães et al. (2009).222From https://genomics.senescence.info/gene_expression/signatures.html on 8/11/2020. Based on this exploratory analysis, we chose to keep , , and as gene covariates, and use smexncrt (exonic rate, the fraction of reads that map within exons) as well as age (subject age, coded as a numerical value in ) as sample covariates. For each subtissue, we chose the that yielded the highest F1 score on the exploratory data.

In the testing phase, we apply the selected model for each subtissue to test for age-associated genes. For illustration, we present results for the “Heart - Left Ventricle” subtissue (Heart-LV), which had the highest F1 score across all subtissues on the exploratory data. We ran the GBM on the 176 Heart-LV samples in the test set, using the 19,853 genes with nonzero median across these samples, with based on the exploratory phase. Thus, in this model, , , , , and .

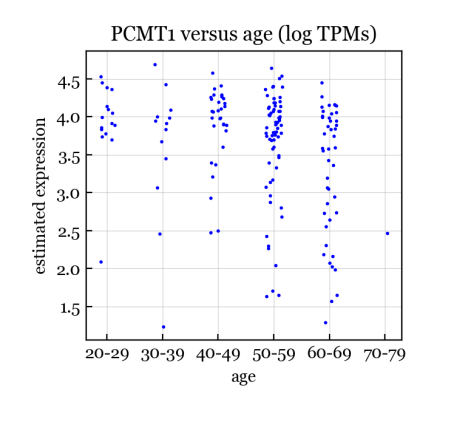

Results. We found 2,444 genes to be significantly associated with age in Heart-LV, controlling FWER at 0.05. For comparison, simple linear regression on the log TPMs yields only 1 significant gene; thus, the GBM has much greater power than a simple standard approach. To validate the biological relevance of the GBM hits, we compare with what is known from the aging literature. First, the top GBM hit for Heart-LV is PCMT1 (Entrez gene ID 5110) with a p-value of . PCMT1 is involved in the repair and degradation of damaged proteins, and is a well-known aging gene, being one of 307 human genes in the GenAge database (build version 20) from Tacutu et al. (2018). Figure 10 shows the estimated expression of PCMT1 versus age for the Heart-LV samples. The GBM-estimated expression exhibits a clear downward linear trend with age. For comparison, Figure 10 shows that the log TPMs are considerably noiser and the trend is much less clear. Simple linear regression on the log TPMs yields a p-value of for PCMT1, which does not reach the Bonferroni significance threshold of . Here, we define the GBM-estimated expression as the partial residual where is the index of the age column in , and is the GBM residual (Section 2.3).

To evaluate the GBM hits altogether for biological relevance to aging, we test for enrichment of Gene Ontology (GO) term gene sets using DAVID v6.8 (Huang et al., 2009a, b). We run DAVID on the top 1000 GBM hits for Heart-LV, using all 19,853 tested genes as the background list. (DAVID allows at most 1000 genes.) Tables S2 and S3 show the top 20 enriched GO terms in the Biological Process and Cellular Component categories. These results are highly consistent with known aging biology (López-Otín et al., 2013).

8 Application to cancer genomics

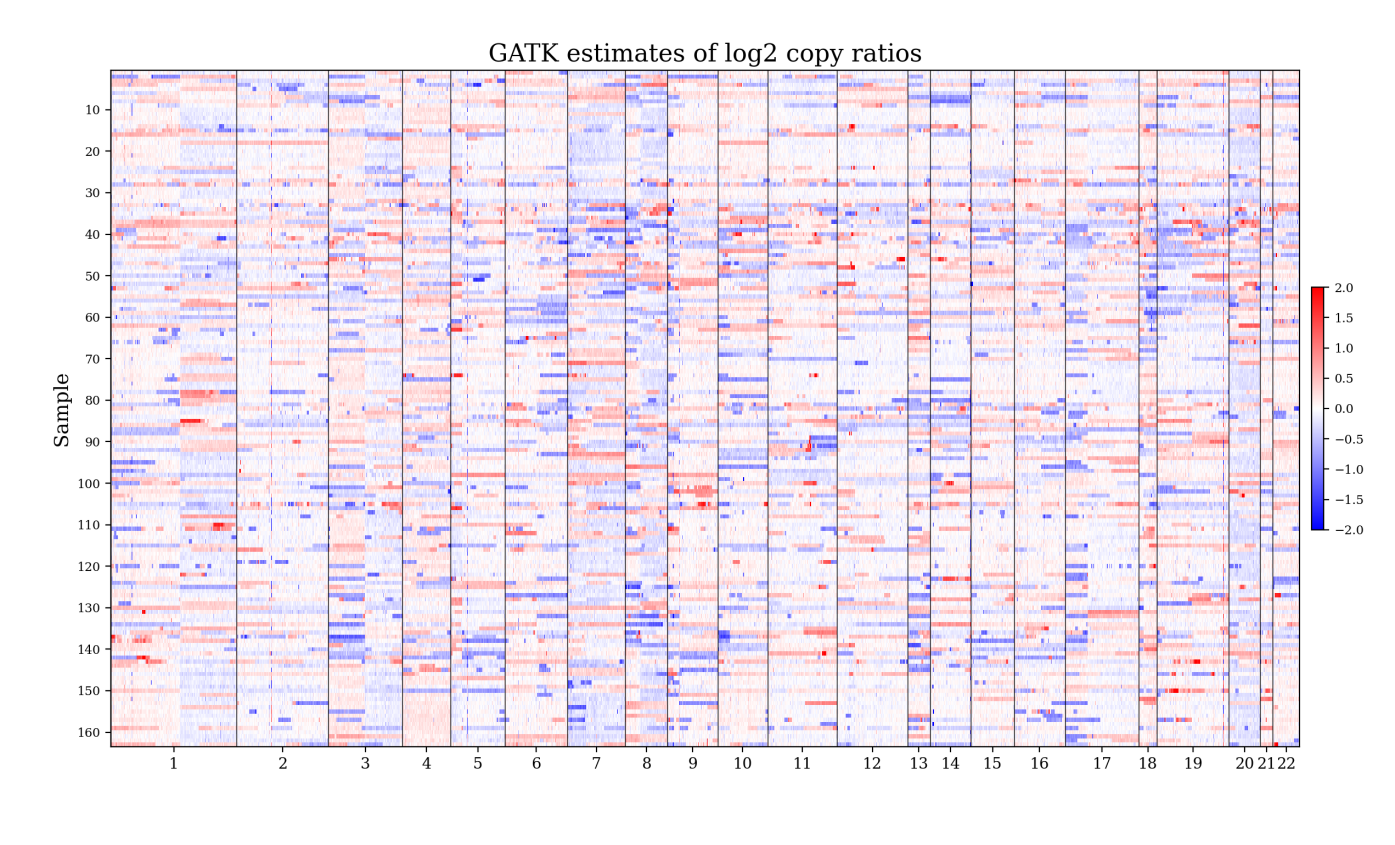

Next, we apply the GBM to estimate copy ratios for sequencing data from cancer cell lines. Copy ratio estimation is an essential step in detecting somatic copy number alterations (SCNAs), that is, duplications or deletions of segments of the genome. The input data is a matrix of counts where entry is the number of reads from sample that map to target region of the genome. The goal is to estimate the copy ratio of each region, that is, the relative concentration of copies of that region in the original DNA sample.

Simple estimates based on row and column normalization are very noisy and are contaminated by significant technical biases. State-of-the-art methods employ a panel of normals (that is, sequencing samples from non-cancer tissues) to estimate technical biases using principal components analysis (PCA), and then use linear regression to remove these biases from the cancer samples of interest. We take an analogous approach, first running a GBM on a panel of normals, and then running a GBM on the cancer samples using a feature covariate matrix that includes the matrix estimated from the panel of normals.

To assess performance, we compare with the state-of-the-art method provided by the Broad Institute’s Genome Analysis Toolkit (GATK) (Broad Institute, 2020) on the 326 whole-exome sequencing samples from the Cancer Cell Line Encyclopedia (CCLE) (Ghandi et al., 2019). These samples are from a wide range of cancer types, including lung, breast, colon, prostate, brain, and many others. We use the subset of 180,495 target regions that are in chromosomes 1–22 and have nonzero median count across the 326 samples.

Since there are essentially no normal samples in the CCLE dataset, we create a panel of pseudo-normals by taking a random subset of 163 samples as a training set and de-segmenting them to adjust for copy number alterations; see Section S3.2 for details. The remaining 163 samples are used as a test set. For the GBM, we use , , and as region covariates, no sample covariates, and 5 latent factors. Thus, , , , , and on the training set, while , , , , and on the test set. We define the GBM copy ratio estimates as the exponentiated residuals where ; see Section 2.3. The GBM took 10 minutes and 4.3 minutes to run on the training and test sets, respectively, while GATK took 3.3 minutes and 28 minutes on training and test, respectively. The slowness of GATK on the test set is likely due to having to run it separately on every test sample.

Figure 11 shows the GBM and GATK copy ratio estimates for an illustrative sample from the test set. As a baseline, we also show the simple normalization-based estimates defined as where and . A major advantage of the GBM is that it provides uncertainty quantification. Here, the estimated precision (that is, the inverse variance) of each log copy ratio estimate is (Section 2.3). In the GBM plot in Figure 11, this is illustrated by using cyan for the regions with low relative precision; see Section S3.2. By downweighting regions with low estimated precision, downstream analyses such as SCNA detection can be made more accurate.

To quantify performance, Figure 12 compares the GBM and GATK in terms of two performance metrics. The local relative standard error quantifies the variability of the log copy ratio estimates around a weighted moving average, accounting for the precision of each estimate. Meanwhile, the weighted median absolute difference quantifies the typical magnitude of the slope of a weighted moving average. On these data, the GBM exhibits better performance in terms of both metrics; see Section S3.2 for details. Figures S18 and S19 show the GBM and GATK copy ratio estimates for all 163 test samples. The GBM estimates are visibly less noisy than the GATK estimates.

Overall, the GBM appears to perform very well in terms of removing technical biases and denoising, particularly when using uncertainty quantification to downweight low precision regions. The improved performance appears to be due to (a) model-based uncertainty quantification and (b) using a robust probabilistic model for count data.

9 Conclusion

Generalized bilinear models provide a flexible framework for the analysis of matrix data, and the delta propagation method enables accurate GBM uncertainty quantification in modern applications. In future work, it would be interesting to extend to the more general model of Gabriel (1998), provide theoretical guarantees for delta propagation, and try applying delta propagation to other models.

Acknowledgments

We would like to thank Jonathan Huggins, Will Townes, Mehrtash Babadi, Samuel Lee, David Benjamin, Robert Klein, Samuel Markson, Philipp Hähnel, and Rafael Irizarry for many helpful conversations.

References

- Agresti (2015) Agresti, A. Foundations of Linear and Generalized Linear Models. John Wiley & Sons, 2015.

- Aitchison and Silvey (1958) Aitchison, J. and Silvey, S. Maximum-likelihood estimation of parameters subject to restraints. The Annals of Mathematical Statistics, pages 813–828, 1958.

- Babadi et al. (2018) Babadi, M., Lee, S. K., and Smirnov, A. N. GATK gCNV: accurate germline copy-number variant discovery from sequencing read-depth data. The International Conference on Probabilistic Programming (PROBPROG), Oct 2018.

- Benzécri (1973) Benzécri, J. L’analyse des Correspondances. L’analyse des Données, Vol. 2. Dunod. Paris, 1973.

- Bishop (2006) Bishop, C. M. Pattern Recognition and Machine Learning. springer, 2006.

- Blum et al. (2020) Blum, A., Hopcroft, J., and Kannan, R. Foundations of Data Science. Cambridge University Press, 2020.

- Boyd and Vandenberghe (2004) Boyd, S. and Vandenberghe, L. Convex Optimization. Cambridge University Press, 2004.

- Broad Institute (2020) Broad Institute. Genome Analysis Toolkit (GATK) v4.1.8.1, 2020. URL https://gatk.broadinstitute.org/.

- Buettner et al. (2017) Buettner, F., Pratanwanich, N., McCarthy, D. J., Marioni, J. C., and Stegle, O. f-scLVM: scalable and versatile factor analysis for single-cell RNA-seq. Genome Biology, 18(1):212, 2017.

- Carroll et al. (1980) Carroll, J. D., Pruzansky, S., and Kruskal, J. B. CANDELINC: A general approach to multidimensional analysis of many-way arrays with linear constraints on parameters. Psychometrika, 45(1):3–24, 1980.

- Carvalho et al. (2008) Carvalho, C. M., Chang, J., Lucas, J. E., Nevins, J. R., Wang, Q., and West, M. High-dimensional sparse factor modeling: applications in gene expression genomics. Journal of the American Statistical Association, 103(484):1438–1456, 2008.

- Chadoeuf and Denis (1991) Chadoeuf, J. and Denis, J. B. Asymptotic variances for the multiplicative interaction model. Journal of Applied Statistics, 18(3):331–353, 1991.

- Choulakian (1996) Choulakian, V. Generalized bilinear models. Psychometrika, 61(2):271–283, 1996.

- Cochran (1943) Cochran, W. The comparison of different scales of measurement for experimental results. The Annals of Mathematical Statistics, 14(3):205–216, 1943.

- Collado-Torres et al. (2017) Collado-Torres, L., Nellore, A., Kammers, K., Ellis, S. E., Taub, M. A., Hansen, K. D., Jaffe, A. E., Langmead, B., and Leek, J. T. Reproducible RNA-seq analysis using recount2. Nature Biotechnology, 35(4):319–321, 2017.

- Davies and Tso (1982) Davies, P. and Tso, M. K.-S. Procedures for reduced-rank regression. Journal of the Royal Statistical Society: Series C (Applied Statistics), 31(3):244–255, 1982.

- de Falguerolles (2000) de Falguerolles, A. GBMs: GLMs with bilinear terms. In COMPSTAT, pages 53–64. Springer, 2000.

- De Magalhães et al. (2009) De Magalhães, J. P., Curado, J., and Church, G. M. Meta-analysis of age-related gene expression profiles identifies common signatures of aging. Bioinformatics, 25(7):875–881, 2009.

- Denis and Gower (1996) Denis, J.-B. and Gower, J. C. Asymptotic confidence regions for biadditive models: Interpreting genotype-environment interactions. Journal of the Royal Statistical Society: Series C (Applied Statistics), 45(4):479–493, 1996.

- Dorkenoo and Mathieu (1993) Dorkenoo, K. and Mathieu, J.-R. Etude d’un modele factoriel d’analyse de la variance comme modele lineaire generalise. Revue de Statistique Appliquée, 41(2):43–57, 1993.

- Fisher and Mackenzie (1923) Fisher, R. and Mackenzie, W. Studies in Crop Variation: The Manurial Response of Different Potato Varieties. Journal of Agricultural Sciences, 13:311–320, 1923.

- Freeman (1973) Freeman, G. Statistical methods for the analysis of genotype-environment interactions. Heredity, 31(3):339–354, 1973.

- Fromer et al. (2012) Fromer, M., Moran, J. L., Chambert, K., Banks, E., Bergen, S. E., Ruderfer, D. M., Handsaker, R. E., McCarroll, S. A., O’Donovan, M. C., Owen, M. J., et al. Discovery and statistical genotyping of copy-number variation from whole-exome sequencing depth. The American Journal of Human Genetics, 91(4):597–607, 2012.

- Gabriel (1978) Gabriel, K. R. Least squares approximation of matrices by additive and multiplicative models. Journal of the Royal Statistical Society: Series B (Methodological), 40(2):186–196, 1978.

- Gabriel (1998) Gabriel, K. R. Generalised bilinear regression. Biometrika, 85(3):689–700, 1998.

- Gabriel and Zamir (1979) Gabriel, K. R. and Zamir, S. Lower rank approximation of matrices by least squares with any choice of weights. Technometrics, 21(4):489–498, 1979.

- Gauch (1988) Gauch, H. G. Jr. Model selection and validation for yield trials with interaction. Biometrics, pages 705–715, 1988.

- Gauch (2006) Gauch, H. G. Jr. Statistical analysis of yield trials by AMMI and GGE. Crop Science, 46(4):1488–1500, 2006.

- Gauch et al. (2008) Gauch, H. G. Jr., Piepho, H.-P., and Annicchiarico, P. Statistical analysis of yield trials by AMMI and GGE: Further considerations. Crop Science, 48(3):866–889, 2008.

- Ghandi et al. (2019) Ghandi, M., Huang, F. W., Jané-Valbuena, J., Kryukov, G. V., Lo, C. C., McDonald, E. R., Barretina, J., Gelfand, E. T., Bielski, C. M., Li, H., et al. Next-generation characterization of the cancer cell line encyclopedia. Nature, 569(7757):503–508, 2019.

- Gilbert (1963) Gilbert, N. Non-additive combining abilities. Genetics Research, 4(1):65–73, 1963.

- Gollob (1968) Gollob, H. F. A statistical model which combines features of factor analytic and analysis of variance techniques. Psychometrika, 33(1):73–115, 1968.

- Goodman (1979) Goodman, L. A. Simple models for the analysis of association in cross-classifications having ordered categories. Journal of the American Statistical Association, 74(367):537–552, 1979.

- Goodman (1981) Goodman, L. A. Association models and canonical correlation in the analysis of cross-classifications having ordered categories. Journal of the American Statistical Association, 76(374):320–334, 1981.

- Goodman (1986) Goodman, L. A. Some useful extensions of the usual correspondence analysis approach and the usual log-linear models approach in the analysis of contingency tables. International Statistical Review/Revue Internationale de Statistique, pages 243–270, 1986.

- Goodman (1991) Goodman, L. A. Measures, models, and graphical displays in the analysis of cross-classified data. Journal of the American Statistical Association, 86(416):1085–1111, 1991.

- Goodman and Haberman (1990) Goodman, L. A. and Haberman, S. J. The analysis of nonadditivity in two-way analysis of variance. Journal of the American Statistical Association, 85(409):139–145, 1990.

- Gower (1989) Gower, J. Discussion of the paper by van der Heijden, de Falguerolles and de Leeuw. Applied Statistics, 38:273–276, 1989.

- Greenacre (1984) Greenacre, M. J. Theory and applications of correspondence analysis. London (UK) Academic Press, 1984.

- Halko et al. (2011) Halko, N., Martinsson, P.-G., and Tropp, J. A. Finding structure with randomness: Probabilistic algorithms for constructing approximate matrix decompositions. SIAM review, 53(2):217–288, 2011.

- Hansen et al. (2012) Hansen, K. D., Irizarry, R. A., and Wu, Z. Removing technical variability in RNA-seq data using conditional quantile normalization. Biostatistics, 13(2):204–216, 2012.

- Hoff (2015) Hoff, P. D. Multilinear tensor regression for longitudinal relational data. The Annals of Applied Statistics, 9(3):1169, 2015.

- Huang et al. (2009a) Huang, D. W., Sherman, B. T., and Lempicki, R. A. Bioinformatics enrichment tools: paths toward the comprehensive functional analysis of large gene lists. Nucleic Acids Research, 37(1):1–13, 2009a.

- Huang et al. (2009b) Huang, D. W., Sherman, B. T., and Lempicki, R. A. Systematic and integrative analysis of large gene lists using DAVID bioinformatics resources. Nature Protocols, 4(1):44, 2009b.

- Jia et al. (2018) Jia, K., Cui, C., Gao, Y., Zhou, Y., and Cui, Q. An analysis of aging-related genes derived from the Genotype-Tissue Expression project (GTEx). Cell Death Discovery, 4(1):1–14, 2018.

- Jiang et al. (2015) Jiang, Y., Oldridge, D. A., Diskin, S. J., and Zhang, N. R. CODEX: A normalization and copy number variation detection method for whole exome sequencing. Nucleic Acids Research, 43(6):e39–e39, 2015.

- Jørgensen (1987) Jørgensen, B. Exponential dispersion models. Journal of the Royal Statistical Society: Series B (Methodological), 49(2):127–145, 1987.

- Killick et al. (2012) Killick, R., Fearnhead, P., and Eckley, I. A. Optimal detection of changepoints with a linear computational cost. Journal of the American Statistical Association, 107(500):1590–1598, 2012.

- Krumm et al. (2012) Krumm, N., Sudmant, P. H., Ko, A., O’Roak, B. J., Malig, M., Coe, B. P., Quinlan, A. R., Nickerson, D. A., and Eichler, E. E. Copy number variation detection and genotyping from exome sequence data. Genome Research, 22(8):1525–1532, 2012.

- Leek and Storey (2007) Leek, J. T. and Storey, J. D. Capturing heterogeneity in gene expression studies by surrogate variable analysis. PLoS Genetics, 3(9):e161, 2007.

- Leek and Storey (2008) Leek, J. T. and Storey, J. D. A general framework for multiple testing dependence. Proceedings of the National Academy of Sciences, 105(48):18718–18723, 2008.

- López-Otín et al. (2013) López-Otín, C., Blasco, M. A., Partridge, L., Serrano, M., and Kroemer, G. The hallmarks of aging. Cell, 153(6):1194–1217, 2013.

- Love et al. (2014) Love, M. I., Huber, W., and Anders, S. Moderated estimation of fold change and dispersion for RNA-seq data with DESeq2. Genome Biology, 15(12):550, 2014.

- Mandel (1961) Mandel, J. Non-additivity in two-way analysis of variance. Journal of the American Statistical Association, 56(296):878–888, 1961.

- Mandel (1969) Mandel, J. The partitioning of interaction in analysis of variance. Journal of Research of the National Bureau of Standards, Series B, 73:309–328, 1969.

- Marchenko and Pastur (1967) Marchenko, V. A. and Pastur, L. A. Distribution of eigenvalues for some sets of random matrices. Matematicheskii Sbornik, 114(4):507–536, 1967.

- Marioni et al. (2008) Marioni, J. C., Mason, C. E., Mane, S. M., Stephens, M., and Gilad, Y. RNA-seq: An assessment of technical reproducibility and comparison with gene expression arrays. Genome Research, 18(9):1509–1517, 2008.

- Melé et al. (2015) Melé, M., Ferreira, P. G., Reverter, F., DeLuca, D. S., Monlong, J., Sammeth, M., Young, T. R., Goldmann, J. M., Pervouchine, D. D., Sullivan, T. J., et al. The human transcriptome across tissues and individuals. Science, 348(6235):660–665, 2015.

- Perry and Pillai (2013) Perry, P. O. and Pillai, N. S. Degrees of freedom for combining regression with factor analysis. arXiv preprint arXiv:1310.7269, 2013.

- Pickrell et al. (2010) Pickrell, J. K., Marioni, J. C., Pai, A. A., Degner, J. F., Engelhardt, B. E., Nkadori, E., Veyrieras, J.-B., Stephens, M., Gilad, Y., and Pritchard, J. K. Understanding mechanisms underlying human gene expression variation with RNA sequencing. Nature, 464(7289):768–772, 2010.

- Price et al. (2006) Price, A. L., Patterson, N. J., Plenge, R. M., Weinblatt, M. E., Shadick, N. A., and Reich, D. Principal components analysis corrects for stratification in genome-wide association studies. Nature Genetics, 38(8):904–909, 2006.

- Risso et al. (2014) Risso, D., Ngai, J., Speed, T. P., and Dudoit, S. Normalization of RNA-seq data using factor analysis of control genes or samples. Nature Biotechnology, 32(9):896–902, 2014.

- Robinson et al. (2010) Robinson, M. D., McCarthy, D. J., and Smyth, G. K. edgeR: A Bioconductor package for differential expression analysis of digital gene expression data. Bioinformatics, 26(1):139–140, 2010.

- Silvey (1959) Silvey, S. D. The Lagrangian multiplier test. The Annals of Mathematical Statistics, 30(2):389–407, 1959.

- Silvey (1975) Silvey, S. D. Statistical Inference. CRC Press, 1975.

- Stegle et al. (2010) Stegle, O., Parts, L., Durbin, R., and Winn, J. A Bayesian framework to account for complex non-genetic factors in gene expression levels greatly increases power in eQTL studies. PLoS Comput Biol, 6(5):e1000770, 2010.

- Sun et al. (2012) Sun, Y., Zhang, N. R., and Owen, A. B. Multiple hypothesis testing adjusted for latent variables, with an application to the AGEMAP gene expression data. The Annals of Applied Statistics, 6(4):1664–1688, 2012.

- Tacutu et al. (2018) Tacutu, R., Thornton, D., Johnson, E., Budovsky, A., Barardo, D., Craig, T., Diana, E., Lehmann, G., Toren, D., Wang, J., et al. Human ageing genomic resources: New and updated databases. Nucleic Acids Research, 46(D1):D1083–D1090, 2018.

- Takane and Shibayama (1991) Takane, Y. and Shibayama, T. Principal component analysis with external information on both subjects and variables. Psychometrika, 56(1):97–120, 1991.

- Townes (2019) Townes, F. W. Generalized principal component analysis. arXiv preprint arXiv:1907.02647, 2019.

- Tukey (1949) Tukey, J. W. One degree of freedom for non-additivity. Biometrics, 5(3):232–242, 1949.

- Tukey (1962) Tukey, J. W. The future of data analysis. The Annals of Mathematical Statistics, 33(1):1–67, 1962.

- Van Eeuwijk (1995) Van Eeuwijk, F. A. Multiplicative interaction in generalized linear models. Biometrics, pages 1017–1032, 1995.

- Williams (1952) Williams, E. J. The interpretation of interactions in factorial experiments. Biometrika, 39(1-2):65–81, 1952.

- Zeng et al. (2020) Zeng, L., Yang, J., Peng, S., Zhu, J., Zhang, B., Suh, Y., and Tu, Z. Transcriptome analysis reveals the difference between “healthy” and “common” aging and their connection with age-related diseases. Aging Cell, 19(3):e13121, 2020.

Supplementary material for “Inference in generalized bilinear models”

S1 Discussion

S1.1 Previous work

There is an extensive literature on models involving an unknown low-rank matrix, going by a variety of names including latent factor models, factor analysis models, multiplicative models, bi-additive models, and bilinear models. In particular, a large number of models can be viewed as special cases of generalized bilinear models (GBMs). Since a full review is beyond the scope of this article, we settle for covering the main threads in the literature.

S1.1.1 Normal bilinear models without covariates.

Principal components analysis (PCA) is equivalent to maximum likelihood estimation in a GBM with only the term (, , ), assuming normally distributed outcomes with common variance . PCA (or equivalently, the SVD) is often performed after centering the rows and columns of the data matrix, and from a model-based perspective, this is equivalent to including intercepts (, , ):

| (S1.1) |

where is a normal residual. Similarly, scaling the rows and columns is analogous to using a rank-one factorization of the variance, that is, .

Estimation. Equation S1.1 is often called the AMMI (additive main effects and multiplicative interaction) model, and a range of techniques for using it have been developed (Gauch, 1988). The least squares fit of an AMMI model can be obtained by first fitting the linear terms ignoring the non-linear term, and then estimating the non-linear term using PCA on the residuals (Gilbert, 1963; Gollob, 1968; Mandel, 1969; Gabriel, 1978). Estimation is more difficult when each entry is allowed to have a different variance, that is, when with known; this is sometimes called a weighted AMMI model (Van Eeuwijk, 1995). To handle this, Gabriel and Zamir (1979) develop the criss-cross method of estimation, which successively fits the non-linear terms , one by one, using weighted least squares.

Hypothesis testing for model selection. While most applications of PCA only use the estimates, without any uncertainty quantification, statistical research on the AMMI model has largely focused on hypothesis testing for which factors to include in the model. Early contributions on testing in this model were made by Fisher and Mackenzie (1923), Cochran (1943), Tukey (1949), Williams (1952), Mandel (1961), Gollob (1968), and Mandel (1969). Methods of this type are very widely used, particularly in the study of genotype-environment interactions in agronomy; see reviews by Freeman (1973), Gauch (2006), and Gauch et al. (2008).

Confidence regions for parameters. Uncertainty quantification for the AMMI model parameters has also been studied. Asymptotic covariance formulas for the least squares estimates have been given by Goodman and Haberman (1990), Chadoeuf and Denis (1991), Dorkenoo and Mathieu (1993) and Denis and Gower (1996) for the AMMI model and various special cases. These results are based on inverting the constraint-augmented Fisher information matrix (Aitchison and Silvey, 1958; Silvey, 1959); we use the same technique in Section S6 to estimate standard errors for and in our more general GBM model and we extend it using delta propagation.

S1.1.2 Normal bilinear models with covariates.

Estimation. In a wide-ranging article, Tukey (1962) discussed the possibility of combining regression with factor analysis, by factoring the matrix of residuals after adjusting for covariates. Indeed, for the case of normal outcomes with common variance, Gabriel (1978, Cor 3.1) showed that when using a model of the form , the least squares fit can be obtained simply by first fitting and using regression (ignoring ), then fitting to the residuals. This can be viewed as a generalization of the AMMI estimation procedure. Takane and Shibayama (1991) extend the results of Gabriel (1978) by first fitting using least squares, and then using PCA to analyze the residuals as well as each fitted component of the model, that is, , , , , and combinations thereof.

In a complementary direction, reduced-rank regression (Davies and Tso, 1982) and CANDELINC (Carroll et al., 1980) use least squares to fit models of the form and , respectively, where and are constrained to be low-rank.

Hypothesis testing and confidence regions. In the case of normal outcomes with common variance , for the model with , Perry and Pillai (2013) show how to perform inference for univariate entries of and (and univariate linear projections, more generally) accounting for the uncertainty in via an estimate of the degrees of freedom associated with the latent factors. Further, Perry and Pillai (2013) show that the problem can be reduced to the covariate-free case, , enabling one to use results on hypothesis testing in the AMMI model (Gollob, 1968; Mandel, 1969) which provide estimates of the degrees of freedom. However, this approach appears to rely on the assumption of normal outcomes with common variance.

S1.1.3 Generalized bilinear models without covariates.

In many applications, it is unreasonable to use a normal outcome model. A classical approach is to transform the data and then apply a normal outcome model, however, as discussed by Van Eeuwijk (1995), there is unlikely to be a transformation that simultaneously achieves (a) approximate normality, (b) common variance, and (c) additive effects.

A more principled approach is to extend the generalized linear model (GLM) framework to handle latent factors, as suggested by Gower (1989). Goodman’s RC models are early contributions in this direction (Goodman, 1979, 1981, 1986, 1991), consisting of count models with multinomial or Poisson outcomes where . More generally, Van Eeuwijk (1995) develops the generalized AMMI (GAMMI) model, which is a GLM version of the AMMI model in Equation S1.1, specifically, . Van Eeuwijk (1995) introduces a coordinate descent algorithm and discusses approaches for choosing , however, he does not consider uncertainty quantification for parameters, does not estimate dispersion parameters, and only demonstrates the method on very small datasets ( and ).

Correspondence analysis (Benzécri, 1973; Greenacre, 1984) is an SVD-based exploratory analysis method for matrices of categorical data, and has been reinvented under many names, such as reciprocal averaging and dual scaling (de Falguerolles, 2000). Correspondence analysis bears resemblence to estimation methods for the GAMMI model, however, it is primarily descriptive in perspective, and thus typically does not involve quantification of uncertainty.

S1.1.4 Generalized bilinear models with covariates.

Choulakian (1996) defines a class of GBMs of the same form as in this article, where and is the (a) canonical, (b) identical, or (c) logarithmic link function. For the case of no covariates (that is, the GAMMI model), Choulakian (1996) proposes an estimation algorithm that involves univariate Fisher scoring updates, which is attractive for its simplicity, but may exhibit slow convergence or failure to converge due to strong dependencies among parameters. While the defined model class is general, some limitations of the paper by Choulakian (1996) are that uncertainty quantification is not addressed, the estimation algorithm is for the special case of GAMMI, a single common dispersion is assumed and estimation of dispersion is not addressed, identifiability constraints are not enforced, no initialization procedure is provided, and only very small datasets are considered ( and ).

Gabriel (1998) considers a very general class of models of the form , where and are observed matrices (for instance, covariates) and is a low-rank matrix of parameters for each . He extends the criss-cross estimation algorithm of Gabriel and Zamir (1979) to this model. While the model of Gabriel (1998) is very elegant, some limitations are that estimation is performed using a vectorization approach that is computationally prohibitive on large matrices, uncertainty quantification is not addressed, a common dispersion parameter is assumed for all entries, and only very small datasets are considered ( and ). Also, it is not clear what identifiability constraints are assumed on the matrices.

In recent work, Townes (2019) considers a model of the form where is a vector of ones and is a vector of fixed column-specific offsets. Townes (2019) derives diagonal approximations to Fisher scoring updates for -penalized maximum likelihood estimation, and in a postprocessing stage, enforces orthogonality constraints to aid interpretability. Other differences compared to the present work are that only estimation is considered (uncertainty quantification is not addressed) and overdispersion parameters are not estimated.

The overview by de Falguerolles (2000) provides an interesting and insightful discussion of several threads in the literature.

S1.1.5 Recent applications of bilinear models.

Several authors have used bilinear models or GBMs in genetics and genomics, usually to remove unwanted variation such as batch effects. However, most of these methods do not fully account for uncertainty in the latent factors, which may lead to miscalibrated inferences such as overconfident p-values. For example, to remove batch effects in gene expression analysis, several approaches involve first estimating and then treating as a known matrix of covariates, accounting for uncertainty only in using standard regression (Leek and Storey, 2007, 2008; Sun et al., 2012; Risso et al., 2014); this is also done to adjust for population structure in genetic association studies (Price et al., 2006). In copy number variation detection, it is common to simply treat the estimated as known and subtract it off (Fromer et al., 2012; Krumm et al., 2012; Jiang et al., 2015).

Carvalho et al. (2008) use a Bayesian sparse factor analysis model with covariates, employing evolutionary stochastic search for model selection and Markov chain Monte Carlo (MCMC) for posterior inference within models. Stegle et al. (2010), Buettner et al. (2017), and Babadi et al. (2018) use complex hierarchical models that can be viewed as Bayesian GBMs with additional prior structure, and they employ variational methods for approximate posterior inference. Another application in which bilinear models have seen recent use is longitudinal relational data such as networks, for which Hoff (2015) employs an interesting Bayesian model with , where is a matrix of observed covariates that depend on time .

S1.2 Challenges and solutions

Estimation and inference in large GBMs is complicated by a number of nontrivial challenges. In this section, we discuss several issues and how we resolve them.

Estimating the dispersion parameters. There are several issues with estimating the negative binomial (NB) dispersions . First, since there is insufficient information to estimate all dispersions individually, we use the rank-one parametrization . Second, the choice of identifiability constraints matters — the natural choice of contraints, and , leads to noticeably biased estimates of and , particularly for higher values; see Figure S6. Instead, we constrain and , which effectively mitigates this bias, empirically. Third, the maximum likelihood estimates sometimes exhibit a severe downward bias, particularly for low values of log-dispersion; we use a simple heuristic bias correction to deal with this. Fourth, to avoid arithmetic underflow/overflow in the log-dispersion update steps, we develop carefully constructed expressions for the gradient and Hessian. Finally, to prevent occasional lack of convergence due to oscillating estimates, we employ an adaptive maximum step size.

Inapplicability of standard GLM methods. Since is linear in the parameters, one could vectorize and write it as where and is a function of and . In principle, one could then apply standard GLM estimation methods for estimating to construct a joint update to . However, this vectorization approach is only computationally feasible for small data matrices since computing the matrix inverse takes on the order of time. Further, this update would need to be done repeatedly since , , , , , and also need to be simultaneously estimated, and the vectorization approach does not help estimate these parameters.

Inapplicability of the singular value decomposition. At first glance, it might appear that the singular value decomposition (SVD) would make it straightforward to estimate , , and given the other parameters. However, when used for estimation, the SVD implicitly assumes that every entry has the same variance. This is far from true in GBMs, and consequently, naively using the SVD to update leads to poor estimation accuracy. The criss-cross algorithm of Gabriel and Zamir (1979) yields a low-rank matrix factorization that accounts for entry-specific variances, and our algorithm provides another way of doing this while adjusting for covariates in a GBM. In our algorithm, we only directly use the SVD for enforcing the identifiability constraints, not for estimation of .

Computational efficiency. The genomics applications in Sections 7 and 8 involve large count matrices where the number of features is on the order of to and the number of samples can be as large as or more. Consequently, computational efficiency is essential for practical usage of the method. For estimation, we exploit the special structure of the GBM to derive computationally efficient Fisher scoring updates to each component of the model. For inference, we develop a novel method for efficiently propagating uncertainty between components of the model. Assuming , our estimation algorithm takes time per iteration, and our inference algorithm requires time, making them computationally feasible on large data matrices.

Numerical stability. Using a good choice of initialization is crucial for numerical stability. To initialize the estimation algorithm, we analytically solve for values of , , and to approximate the data matrix and then, for NB-GBMs, we iteratively update and for a few iterations. Even with a good initialization, optimization methods occasionally diverge. In a large GBM, there are so many parameters that even occasional divergences cause the algorithm to fail with high probability. We reduce the frequency of divergences to be negligible by enforcing a bound on the norm of the optimization steps; see Section 3.

Enforcing identifiability constraints. Rather than performing constrained optimization steps, we use a combination of unconstrained optimization steps and likelihood-preserving projections onto the constrained parameter space. Although the construction of likelihood-preserving projections in a GBM is not obvious, we show that they can be efficiently computed using simple linear algebra operations. This optimization-projection approach has a number of advantages; see Section S1.3 for further discussion.

Dependencies in latent factors. Optimizing the latent factor term is challenging due to the dependencies among , , and as well as the orthonormality contraints and . Consequently, updating , , and individually does not seem to work well. To resolve this issue, we relax the dependencies and constraints by defining and , and updating , , and separately.

Prior / regularization. To improve estimation accuracy in a high-dimensional setting, we place independent normal priors on the entries of , , , , , , , and , and use maximum a posteriori (MAP) estimation, which is equivalent to -penalization/shrinkage for this choice of prior. An additional benefit of using priors is that it improves the numerical stability of the estimation algorithm. See Section S9 for prior details.

S1.3 Enforcing the GBM identifiability constraints

It might seem preferable to perform unconstrained optimization throughout the estimation algorithm until convergence, and then enforce the identifiability constraints as a postprocessing step. However, in general, this would not converge to a local optimum in the constrained space because the prior does not have the same invariance properties as the likelihood. Thus, we maintain the constraints throughout the algorithm by applying a projection at each step.

When updating each component (, , , , , , , and ), rather than using a constrained optimization step such as equality-constrained Newton’s method (Boyd and Vandenberghe, 2004), we use an unconstrained optimization step followed by a likelihood-preserving projection onto the constrained space.

It is crucial to preserve the likelihood when projecting onto the constrained space, since otherwise the projection might undo all the gains obtained by the unconstrained optimization step — in short, otherwise we might end up “taking one step forward and two steps back.” To this end, we employ likelihood-preserving projections for each component of the GBM. By Theorem 5.4, the likelihood is invariant under these operations and the projected values satisfy the identifiability constraints. The optimization-projection approach has several major advantages.

-

1.

In the likelihood surface, there can be strong dependencies among the parameters within each row of , , , and , whereas the between-row dependencies are much weaker (specifically, they have zero Fisher cross-information). Thus, it is desirable to optimize each row jointly, however, this is complicated by the fact that the constraints create dependencies between rows. Consequently, using equality-constrained Newton appears to be computationally infeasible since it would require a joint update of each parameter matrix in entirety.

-

2.

Since each optimization-projection step modifies multiple components of the GBM, it effectively performs a joint update on multiple components. For instance, the likelihood-preserving projection for also modifies , so the optimization-projection step on is effectively a joint update to and . This has the effect of enlarging the constrained space within which each update takes place, improving convergence.

-

3.

For and , the constrained space is particular difficult to optimize over since it involves not only within-column linear dependencies ( and ), but also quadratic dependencies within and between columns ( and ). The optimization-projection approach makes it easy to handle these constraints.

-

4.

It is straightforward to perform unconstrained optimization for each component separately, and the projections that we derive turn out to be very easy to apply.

S2 Additional simulation results and details

We present additional simulation results and details supplementing Section 6.

Simulating covariates, parameters, and data

Here, we provide the details of how the simulation data are generated. First, the covariates are generated using a copula model as follows. We describe the procedure for the feature covariate matrix ; the sample covariate matrix is generated in the same way but with and in place of and . We generate a random covariance matrix where the entries of are i.i.d., and then we compute the resulting correlation matrix by setting . We generate i.i.d. for , and define by setting where , is the standard normal CDF, and is the generalized inverse CDF for the desired marginal distribution, which we take to be for the Normal scheme, for the Gamma scheme, and for the Binary scheme. Finally, we standardize by setting for all and centering/scaling so that and for .

The true parameters , , , , , , , , and are then generated as follows. First, we generate matrices , , and with i.i.d. entries as follows: (Normal scheme) , , and , or (Gamma scheme) , , and . These distributions are defined so that the scale of the entries of , , and is not affected by and .

Then we set , , and . Next, we set and where and are sampled uniformly from their respective Stiefel manifolds, that is, uniformly subject to and . The diagonal entries of are evenly spaced from to ; this scaling is motivated by the Marchenko–Pastur law for the distribution of singular values of random matrices (Marchenko and Pastur, 1967). For the log-dispersion parameters (, , and ), we generate i.i.d., and set , , and ,

Given the true parameters and covariates, the data matrix is generated as follows. We compute the mean matrix , where the inverse link function is applied element-wise, and we compute the inverse dispersions . Then we sample where in the NB scheme, in the LNP scheme, in the Poisson scheme, or in the Geometric scheme, so that in each case. Here, for , has p.m.f. , whereas has p.m.f.

| (S2.1) |

for and . These outcome distributions are defined so that in each case, if then . Further, in the LNP case, , so the interpretation of is the same as in the NB case.

Consistency and statistical efficiency – Details on Section 6.1

In these simulations, to accurately measure the trend with increasing , we generate the covariates, true parameters, and data with and project them onto the lower-dimensional spaces for smaller values; for , , and this projection simply consists of taking the first rows/entries, and are unaffected by the projection, and , , , , , and are projected by matching the first rows of the mean matrix .

We use the relative MSE rather than the MSE to facilitate interpretability, since this puts the errors on a common scale that does not depend on the magnitude of the parameters. For instance, the relative MSE for is defined as

where is the estimate and is the true value.