[dynnumwidth]toclinefigure\DeclareTOCStyleEntry[dynnumwidth]toclinetable

Inference for bivariate extremes via a semi-parametric angular-radial model

Abstract

The modelling of multivariate extreme events is important in a wide variety of applications, including flood risk analysis, metocean engineering and financial modelling. A wide variety of statistical techniques have been proposed in the literature; however, many such methods are limited in the forms of dependence they can capture, or make strong parametric assumptions about data structures. In this article, we introduce a novel inference framework for bivariate extremes based on a semi-parametric angular-radial model. This model overcomes the limitations of many existing approaches and provides a unified paradigm for assessing joint tail behaviour. Alongside inferential tools, we also introduce techniques for assessing uncertainty and goodness of fit. Our proposed technique is tested on simulated data sets alongside observed metocean time series’, with results indicating generally good performance.

Keywords: Multivariate Extremes, Extremal Dependence, Generalised Additive Models, Coordinate Systems

1 Introduction

1.1 Multivariate extreme value modelling

The modelling of multivariate extremes is an active area of research, with applications spanning many domains, including meteorology [6], metocean engineering [24, 62], financial modelling [3] and flood risk assessment [13]. Typically, approaches in this research field are comprised of two steps: first, modelling the extremes of individual variables and transforming to common margins, followed by modelling of the dependence between the extremes of different variables. We refer to this dependence as the extremal dependence structure henceforth.

This article discusses inference for multivariate extremes using an angular-radial model for the probability density function, illustrated using examples in two dimensions. To place the proposed model in context, we first provide a brief synopsis of the existing literature for multivariate extremes. Given a random vector with marginal distributions functions and , the strength of dependence in the upper tail of can be quantified in terms of the tail dependence coefficient, , defined as the limiting probability

| (1.1) |

when this limit exists [23]. When , the components of are said to be asymptotically dependent (AD) in the upper tail, and when , they are said to be asymptotically independent (AI). Much of the focus of recent work in multivariate extreme value theory has been related to developing a general framework for modelling joint extremes of which is applicable to both AD and AI cases, and can be used to evaluate joint tail behaviour in the region where at least one variable is large.

To discuss the approaches proposed to date and their associated limitations, it is helpful to categorise them in terms of whether they assume heavy- or light-tailed margins, and whether they consider the distribution or density function. Classical multivariate extreme value theory assumes heavy-tailed margins, and is based on the framework of multivariate regular variation [MRV, 53]. It addresses the case where , and has been widely studied – see Beirlant et al., [2], de Haan and Ferreira, [11] and Resnick, [54] for reviews. Under some regularity conditions, equivalent asymptotic descriptions of joint extremal behaviour can be obtained from either the density or distribution function [12].

In the MRV framework, any distribution with has the same asymptotic representation. To address this issue, [30, 31] proposed a method to characterise joint extremes for both AI and AD distributions in the region where both variables are large. Model forms for the Ledford-Tawn representation were proposed by Ramos and Ledford, [50]. The resulting framework also assumes heavy-tailed margins and is referred to as hidden regular variation [HRV, 52]. However, for AI distributions, a description of extremal behaviour in the region where both variables are large may not be the most useful, since extremes of both variables are unlikely to occur simultaneously. Moreover, for AI distributions with certain regularity conditions, the asymptotic representation in this framework is governed only by the properties of the distribution along the line [36]. To provide a more useful representation for AI distributions, applicable in the region where either variable is large, Wadsworth and Tawn, [64] introduced an asymptotic model for the joint survivor function on standard exponential margins. In contrast to the MRV framework, the resulting model provides a useful description of AI distributions, but all AD distributions have the same representation.

More recently, there has been interest in modelling the limiting shapes of scaled sample clouds, or limit sets. The study of limit sets has been around since the 1960s [14, 8], and recent works from Nolde, [42] and Nolde and Wadsworth, [43] have shown that these sets are directly linked to several representations for multivariate extremes. For a given distribution, the limit set is obtained by evaluating the asymptotic behaviour of the joint density function on light tailed margins. Many recent approaches have focused on estimation of the limit set in order to approximate extremal dependence properties; see, for instance, Simpson and Tawn, [55], Wadsworth and Campbell, [63], Majumder et al., [38] and Papastathopoulos et al., [47]. However, the limit set itself does not provide a full description of the asymptotic joint density or distribution, so is less useful from a practical modelling perspective.

To understand the limitations of the methods discussed above, it is instructive to provide an illustration of the joint distribution and density functions on heavy- and light-tailed margins for AI and AD random vectors. All the methods discussed above have equivalent representations in angular-radial coordinates, so without loss of generality, we consider the angular-radial dependence. The first step for most methods for modelling multivariate extremes is to transform variables to common margins. Define

so that and have standard Pareto and exponential margins, respectively. Note that , and that the dependence structure or copula of remains unchanged by the marginal transformation [56]. Furthermore, the joint survivor function is related to the joint survivor function of by . Moreover, if has joint density function , then has joint density , where ..

Figure 1.1 shows the joint survivor and density functions for the AD Joe copula, as defined in the Supplementary Material, on standard Pareto and exponential margins. Rays of constant angle on each margin are also shown. On Pareto margins, with the axes shown on a logarithmic scale, lines of constant angle asymptote to lines with unit gradient. As such, the MRV framework provides a description of joint tail behaviour in the region close to the line , i.e., where and are of similar magnitudes. In this region, the contours of the joint density and survivor functions asymptote to a curve of constant shape, which describes the joint extremal behaviour in this region. In contrast, the angular-radial description appears different on exponential margins. For the joint survivor function, the contours of constant probability appear to asymptote towards the line for some constant . Wadsworth and Tawn, [64] showed that is the case for all AD distributions. Informally, this is because for a distribution to be AD, the probability mass must be concentrated close to the line , so when the density is integrated to obtain the survivor function, the dominant contribution comes from this region. In contrast to the joint survivor function, the angular-radial description of the joint density is not the same for all AD distributions on exponential margins.

Figure 1.2 shows a similar set of plots for the AI Gaussian copula, also defined in the Supplementary Material. In this case, contours for both the joint density and joint survivor function are curved on both sets of margins. The angular-radial model on Pareto margins describes the section of the curves close to the line , which asymptote to straight lines as . Therefore, the HRV description of the asymptotic behaviour is effectively a straight line approximation to a curve, and is only applicable in the region close to the line ; see Mackay and Jonathan, [36] for details. In contrast, the angular-radial description of both the density and survivor functions on exponential margins provides a more useful description of asymptotic behaviour. That is, the representation on exponential margins is valid for the full angular range, whereas the representation on Pareto margins is only valid in the joint exceedance region where we are unlikely to observe the largest values of either variable for variables which are AI.

In some applications it is useful to describe the extremal behaviour of a random vector for both large and small values of certain variables; see Section 1.2. In this case, it is more useful to work on symmetric two-sided margins, rather than one-sided margins. Figure 1.3 shows the joint survivor and density functions for a Gaussian copula on standard Laplace margins. The angular-radial variation of the joint survivor function is useful in the first quadrant of the plane, but is less useful in the other quadrants. In the second and fourth quadrants, the contours of the joint survivor function asymptote to the corresponding marginal levels, providing no information about the asymptotic behaviour of the distribution in this region. In contrast, the joint density function provides useful asymptotic information in all regions of the plane.

This motivates an intuitively-appealing angular-radial description of the joint density function, referred to as the semi-parametric angular-radial (SPAR) model [32], which we consider in detail in this article. A similar model was recently proposed by Papastathopoulos et al., [47], although the application was only considered for standard Laplace margins. However, the SPAR framework can be applied on any type of margin. Mackay and Jonathan, [36] showed that on heavy-tailed margins, SPAR is consistent with the MRV/HRV frameworks, and on light-tailed margins, SPAR is consistent with limit set theory. However, the SPAR framework is more general than limit set theory, as it provides an explicit model for the density in extreme regions of the variable space. Moreover, there are distributions which have degenerate limit sets in some regions, for which there is still a useful SPAR representation.

In the SPAR framework, variables are transformed to angular-radial coordinates, and it is assumed that the conditional radial distribution is in the domain of attraction of an extreme value distribution. This implies the radial tail conditioned on angle can be approximated by a non-stationary generalised Pareto (GP) distribution. The SPAR approach generalises the model proposed by Wadsworth et al., [65], in which angular and radial components are assumed to be independent. In the Wadsworth et al., [65] model, the margins and angular-radial coordinate system are selected so that the assumption of independent angular and radial components is satisfied. The SPAR framework removes this requirement, providing a more flexible representation for multivariate extremes.

While a strong theoretical foundation for the SPAR model is provided in Mackay and Jonathan, [36], inference for this model has not yet been demonstrated. Inference via this framework would offer advantages over many existing approaches, and a fitted SPAR model could be used to estimate extreme quantities commonly applied in practice, such as risk measures [41] and joint tail probabilities [27].

The SPAR model reframes multivariate extreme value modelling as non-stationary peaks over threshold (POT) modelling with angular dependence. Many approaches have been proposed for non-stationary POT inference e.g. Randell et al., [51], Youngman, [71], Zanini et al., [73]. In this paper, we introduce an ‘off-the-shelf’ inference framework for the SPAR model. This framework, which utilises generalised additive models [GAMs; 68] for capturing the relationship between radial and angular components, offers a high degree of flexibility and can capture a wide variety of extremal dependence structures, as demonstrated in Sections 6 and 7. Our approach offers utility across a wide range of applications and provides a convenient, practical framework for performing inference on multivariate extremes. Moreover, our inference framework is ready to use by practitioners; open-source software for fitting the SPAR model is available at https://github.com/callumbarltrop/SPAR. For ease of discussion and illustration, we restrict attention to the bivariate setting throughout, noting that the SPAR model is not limited to this setting.

1.2 Motivating examples

To demonstrate the practical applicability of our proposed inference framework, we consider three bivariate metocean time series made up of zero-up-crossing period, , and significant wave height, , observations. We label these data sets as A, B and C, with each data set corresponding to a location off the coast of North America. data sets A and B were previously considered in a benchmarking exercise for environmental contours [19]. Observations were recorded on an hourly basis over 40, 31 and 42 year time periods for data sets A, B and C, resulting in , and observations, respectively, once missing observations are taken into account. Exploratory analysis indicates the joint time series are approximately stationary over the observation period. Understanding the joint extremes of metocean variables is important in the field of ocean engineering for assessing the reliability of offshore structures. Wave loading on structures is dependent on both wave height and period, and the largest loads on a structure may not necessarily occur with the largest wave heights. Resonances in a structure may result in the largest responses occurring with either short- or long-period waves, meaning it is necessary to characterise the joint distribution in both of these ranges. These data sets are illustrated in Figure 1.4.

Metocean data sets of this type can often exhibit complex dependence structures, for which many multivariate models fail to account. For example, data set B exhibits clear asymmetry in its dependence structure. Moreover, as demonstrated in Haselsteiner et al., [19], many existing approaches for modelling metocean data sets perform poorly in practice, often misrepresenting the joint tail behaviour or not offering sufficient flexibility to capture the complex data structures. These shortcomings can have drastic consequences if fitted models are used to inform the design bases for offshore structures, as is common in practice.

This paper is structured as follows. In Section 2, we briefly introduce the SPAR model and outline our assumptions. In Section 3, we introduce a technique to estimate the density of the angular component. In Section 4, we introduce a framework for estimating the density of the radial component, conditioned on a fixed angle. In Section 5, we introduce tools for quantifying uncertainty and assessing goodness of fit when applying the SPAR model in practice. In Section 6 and 7, we apply the proposed framework to simulated and real data sets, respectively, illustrating the proposed framework can accurately capture a wide range of extremal dependence structures for both prescribed and unknown marginal distriutions. We conclude in Section 8 with a discussion and outlook on future work.

2 The SPAR Model

2.1 Coordinate systems

Let denote a random vector in with continuous joint density function and simply connected support containing the point . The SPAR model for requires a transformation from Cartesian to polar coordinates. Polar coordinates can be defined in various ways; see Mackay and Jonathan, [36] for discussion. In this paper, we restrict attention to two particular angular-radial systems corresponding to the and norms, defined as , for . We define , , and consider these variables as radial components of . Such definitions of radial variables are common in multivariate extreme value models [e.g., 10, 65]. When using the norm to define the radial variable, the corresponding angular variable is usually defined as , where atan2 is the four-quadrant inverse tan function. The map between and is bijective on . When using the norm to define radii, the angular variable is typically defined as [e.g., 53, chapter 5]. The random vector has a one-to-one correspondence with in the upper half of the plane (), but the use of the vector becomes ambiguous if we are interested in the full plane, since contains no information about the sign of .

With this in mind, we follow Mackay and Jonathan, [36] and define the bijective angular functions , where is the unit circle for the norm. For , these are defined as

where for and otherwise, is the generalised signum function. The functions give a scaled measure of the distance along the unit circle from the point to , measured counter-clockwise.

With angular functions established, we define the angular variables of to be , . The corresponding radial-angular mapping given by

is bijective for . Consequently, we can recover from its radial and angular components, i.e., for . We note that . However, we use the variable here, in preference to , so that the angular range is the same for both and . The joint density of can be written in terms of the joint density of ,

where the terms and are the Jacobians of the respective transformations. For ease of notation, we henceforth drop the subscripts on the radial and angular components and simply let denote one of the coordinate systems, with corresponding joint density function .

2.2 Conditional radial tail assumption

Applying Bayes theorem, the joint density can be written in the conditional form where denotes the marginal density of , and denotes the density of , with corresponding distribution function . Viewed in this way, the modelling of joint extremes is reduced to the modelling of the angular density, , and the tail of the conditional density, .

Given any , define as for all , implying . We refer to as the threshold function henceforth. For the SPAR model, we assume that for all , there exists a normalising function such that

| (2.2) |

as , with . The right hand side of equation (2.2) denotes the cumulative distribution function of a generalised Pareto (GP) distribution, and we term the shape parameter function. The case can be interpreted as the limit of equation (2.2) as . Assumption (2.2) is equivalent to the assumption that is in the domain of attraction of an extreme value distribution [1]. Given the wide range of univariate distributions satisfying this assumption, it is reasonable to expect the convergence of (2.2) to hold in many cases for also. Mackay and Jonathan, [36] showed that this assumption holds for a wide variety of theoretical examples.

This convergence motivates a model for the upper tail of . Assuming that equation (2.2) approximately holds for some close to , we have

| (2.3) |

for some which we refer to as the scale parameter function. The inclusion of the scale parameter removes the need to estimate the normalising function , and this is equivalent to the standard peaks over threshold approximation used in univariate extreme value theory [9].

Given and , assumption (2.3) implies that

where denotes the survivor function. The joint density of in the region is then given by

| (2.4) |

where is the GP density function. Equation (2.4) implies that the SPAR model is defined within the region .

To simplify the inference, we also assume that the functions , , and are finite and continuous over and satisfy the periodicity property . Such conditions are not guaranteed in general, and whether they are satisfied depends on the choice of margins, alongside the form of the dependence structure. Mackay and Jonathan, [36] showed that the assumptions are valid for a wide range of copulas on Laplace margins, but using one-sided margins (e.g., exponential) or heavy-tailed margins can result in the assumptions not being satisfied for the same copulas.

The SPAR model does not require specific marginal assumptions, and SPAR representations exist for variables with different marginal domains of attraction; however we consider these characteristics unlikely for phenomena in the Earth’s environment. In applications, we typically assume either (i) a practical environmental setting, in which it is reasonable to assume that all variables are bounded (and then apply the model to standardised variables with zero mean and unit variance), or (ii) make marginal transformation to common scale. As discussed in [36], there are theoretical reasons to prefer transformation to Laplace margins.

3 Angular density estimation

In this section, we consider the angular density of equation (2.4), which we estimate using kernel density (KD) smoothing techniques. Such techniques offer many practical advantages: they are nonparametric, meaning no distributional assumptions for the underlying data are required, and they give smooth, continuous estimates of density functions. These features make KD techniques desirable for the estimation of . Note that other nonparametric smooth density estimation techniques are also available [e.g., 17, 51], but we do not consider these here.

Unlike standard KD estimators [7], we require functional estimates that are periodic on the angular domain , motivating the use of circular density techniques [5]. Given a sample from , the KD estimate of the density function is given by

where denotes some circular kernel with bandwidth parameter . The bandwidth controls the smoothness of the resulting density estimate, with the smoothness increasing as . The goal is typically to select as small as possible without overfitting. Within the literature, a wide range of circular kernels have been proposed; see Chaubey, [5] for an overview. We restrict attention to one particular kernel since it is perhaps the most widely used in practice [15]. Specifically, we consider the von Mises kernel,

| (3.5) |

where is the modified Bessel function of order zero [58]. Here we have modified the kernel to have support on , rather than the usual support of .

With a kernel selected, a critical issue when applying equation (3.5) in practice is the choice of . A variety of approaches have been proposed for automatically selecting the bandwidth parameter, including plug-in values [58], cross-validation techniques [18] and bootstrapping procedures [39].

For our modelling approach, we opt not to use automatic selection techniques for the bandwidth parameter; instead, we select on a case-by-case basis, using the diagnostics proposed in Section 5.2 to inform selection. In unreported results, we found many of the automatic selection methods to perform poorly in practice, and it has been shown that such techniques can fail for multi-modal densities [46]. Multi-modality is often observed within the angular density [36], suggesting it is better not to select using automatic techniques.

4 Conditional density estimation

We now consider the conditional density of equation (2.4). For simplicity, we assume that is fixed at some high level for each . In practice, the choice of non-exceedance probability is non-trivial, and sensitivity analyses must be performed to ensure an appropriate value is selected; see Sections 5 and 7 for further details. Note that this is directly analogous to the threshold selection problem in univariate analyses; see Murphy et al., [40] for a recent overview.

To apply equation (2.3), we require estimates of the threshold and GP parameter functions, denoted , and respectively. As noted in Section 1.1, this is equivalent to performing a non-stationary peaks over threshold analysis on the conditional radial variable , with viewed as a covariate.

Throughout this article, we let denote a sample of size from . In this section we introduce two methods for inference. The first approach assumes the conditional radial distribution is locally stationary over a small angular range. In the second approach, spline-based modelling techniques are used to estimate the threshold and parameter functions as smoothly-varying functions of angle. The local stationary inference is used as a precursor to the spline-based inference, providing a useful comparison and ‘sense check’ on results.

4.1 Local stationary inference

We compute local stationary estimates at a fixed grid of values , where denotes some large positive integer, selected to ensure has sufficient coverage on . For each , we assume there exists a local neighbourhood , , such that the distribution of is stationary for . This is true in the limit as , and a reasonable approximation for small .

In practice, rather than fixing the size of the interval, we select the nearest observations in terms of the angular distance from , defined as , , for some value . Define to be the index set of the smallest order statistics of . Local estimates of the threshold and parameter functions can be obtained from the corresponding radial set . Specifically, we define to be the empirical quantile of , and and to be maximum likelihood estimates of the GP distribution parameters obtained from the set . Choosing an appropriate value for involves a bias-variance trade-off; selecting too large (small) a value will increase the bias (variability) of the resulting pointwise threshold and parameter estimates. For our modelling procedure, this selection is not crucial, since local estimates are merely used as a means to inform the smooth estimation procedure presented in Section 4.2.

4.2 Smooth inference for the SPAR model

We now consider smooth estimation of the threshold and parameter functions. For this, we employ the approach of Youngman, [71], in which GAMs are used to capture covariate relationships; software for this approach is given in Youngman, [70]. Our procedure is two-fold; we first estimate the threshold function for a given , then estimate the parameter functions and using the resulting threshold exceedances.

This section is structured as follows. First, we provide a high-level overview of GAM-based modelling techniques. We then introduce procedures for estimating the threshold and parameter functions via the GAM framework. Finally, we discuss the selection of the basis dimensions required for the GAM formulations.

4.2.1 GAM-based procedures

GAMs are a flexible class of regression models that allow for complex, non-linear relationships between response and predictor variables. They extend the traditional linear regression model by allowing the response to be modelled as a sum of smooth basis functions of the predictor variables. They are particularly useful when the relationship between the response and predictor variables is complex in nature and cannot be easily captured using standard parametric regression techniques.

Employing the GAM framework, the threshold and parameter functions can be represented through a sum of smooth basis functions, or splines. For an arbitrary function , we write

| (4.6) |

where , denote smooth basis functions, , denote coefficients and denotes the basis dimension. To apply equation (4.6) in practice, one must first select a family of basis functions , . A wide variety of bases have been proposed in the literature; see Perperoglou et al., [48] for an overview. We restrict attention to one particular type of basis function known as a cubic spline. Cubic splines are widely used in practice to capture non-linear relationships, and exhibit many desirable properties, such as optimality in various respects, continuity and smoothness [68]. Moreover, cubic splines can be modified to ensure periodicity by imposing conditions on the coefficients, resulting in a cyclic cubic spline. In the context of the SPAR framework, these properties are desirable to ensure the estimated threshold and parameters functions are smooth and continuous, and that they satisfy periodicity on the interval .

With basis functions selected, an important consideration is the basis dimension size ; this corresponds to the number of knots of the spline function. This selection represents a trade-off, since selecting too many knots will result in higher computational burden and parameter variance, while selecting too few will not offer sufficient flexibility for capturing non-linear relationships. We consider this trade-off in detail in Section 4.2.3.

Given , the next step is to determine the knot locations; these are points where spline sections join. The knots should be more closely spaced in regions where more observations are available. In our case, we define knots at empirical quantiles of the angular variable corresponding to a set of equally spaced probability levels; this is typical for spline based modelling procedures.

With a GAM formulated, the final step is to estimate the spline coefficients , . Various methods have been proposed for this estimation [68]. We have opted to use the restricted maximum likelihood (REML) approach of Wood et al., [69]. For this technique, the log-likelihood function is penalised in a manner that avoids over-fitting, and cross-validation is used to automatically select the corresponding penalty parameters. Estimation via REML avoids the use of MCMC, which can be computationally expensive in practice; see Wood, [68] for further details.

4.2.2 Estimation of the threshold and GP parameter functions

Estimation of the threshold function is equivalent to estimating quantiles of over , motivating the use of quantile regression techniques. Employing the GAM framework with defined as in equation (4.6), we set , so that . We then employ the approach of Youngman, [71], whereby a misspecified asymmetric Laplace model is assumed for , and REML is used to estimate the coefficients associated with . By altering the pinball loss function typically used in quantile regression procedures [28], this approach avoids computational issues that can often arise within such procedures; see the Supplementary Material for further details.

4.2.3 Selecting basis dimensions

An important consideration when specifying the GAM forms for both the threshold and parameter functions is the basis dimension. Selecting an appropriate dimension is essential for ensuring accuracy and flexibility in spline modelling procedures [66, 48]. Generally speaking, selecting too few knots may result in functional estimates that do not capture the underlying covariate relationships, while parameter variance increases for larger dimensions.

While some approaches have been proposed for automatic dimension selection [e.g., 26], most available spline based modelling procedures select the dimension on a case-by-case basis using practical considerations. Moreover, as long as the basis dimension is sufficiently large enough, the resulting modelling procedure should be insensitive to the exact value, or the knot locations [68]. This is due to the REML estimation framework, which penalises over-fitting, thus dampening the effect of adding additional knots to the spline formulation. As such, it is preferable in practice to select more knots than one believes is truly necessary to capture the covariate relationships. Therefore, we select reasonably large basis dimensions for the data sets considered in Sections 6 and 7.

5 Practical tools for SPAR model inference

In this section, we introduce practical tools to aid with implementation of the inference frameworks presented in Sections 3 and 4. Specifically, we introduce a tool for quantifying uncertainty in the SPAR framework, alongside diagnostic tools for assessing goodness of fit. The latter tools can also be used to inform the selection of tuning parameters, such as the non-exceedance probability , the bandwidth parameter , and the basis dimension.

5.1 Evaluating uncertainty

When applying the SPAR modelling framework in practice, uncertainty will arise for each of the estimated components; namely, the angular density, threshold and parameter functions. In practice, this uncertainty is a result of sampling variability combined with model misspecification. The former arises due to finite sample sizes only partially representing the entire population, while the latter arises from modelling frameworks not fully capturing the complex features of the data. Quantifying this uncertainty is crucial for interpreting statistical results and making informed decisions based on the inherent modelling limitations.

To quantify uncertainty in SPAR model fits, we must consider each model component in turn. For this, we take a similar approach to Haselsteiner et al., [19] and Murphy-Barltrop et al., [41], and consider a fixed angle , with defined as in Section 4.1. We then quantify the estimation uncertainty for each model component while keeping the angle fixed. Specifically, we propose the following bootstrap procedure: for , where denotes some large positive integer, do the following

-

1.

Resample the original data set (with replacement) to produce a new sample of size .

-

2.

Compute the point estimate of the angular density at , denoted , using the methodology described in Section 3.

-

3.

Compute the point estimates of the threshold, scale and shape parameters at , denoted , and respectively, using the methodology described in Section 4.2.

We remark that the choice of resampling procedure can be adapted to incorporate data sets exhibiting temporal dependence. In this case, rather than using a standard bootstrap, one can apply a block bootstrap [29]. This sampling scheme retains temporal dependence in the resampled data set, ensuring the additional uncertainty that arises due to lower effective sample sizes is accounted for [49]. See Keef et al., [27] and Murphy-Barltrop et al., [41] for applications of block bootstrapping within the extremes literature.

Given a significance level , we use the outputs from the bootstrapping procedure to construct estimates of the median and confidence interval for each model component at . Considering the angular density, for example, these quantities are computed using the set . Assuming the estimation procedure is unbiased, one would expect , where and denote the empirical and quantile estimates from , respectively.

Repeating this procedure for all allows one to evaluate uncertainty over the angular domain for each model component, thus quantifying the SPAR model uncertainty. This in turn allows us to evaluate uncertainty in quantities computed from the SPAR model, such as isodensity contours or return level sets; see Sections 6 and 7.

5.2 Evaluating goodness of fit

We present a localised diagnostic to assess the relative performance of the SPAR model fits in different regions, similar to that used in [37]. Consider a partition of the angular domain around , corresponding to a variety of regions in . For each , take the local radial window as defined in Section 4.1. Treating as a sample from , we compare the observed quantiles with the fitted SPAR model quantiles, resulting in a localised QQ plot. Similar to before, uncertainty can be quantified via non-parametric bootstrapping. Comparing the resulting QQ plots over different angles, and different values of and , provides another means to assess model performance.

Finally, we propose comparing the estimated angular density, obtained using the methodology of Section 3, with the corresponding density function computed from the histogram. This comparison allows one to assess whether the choice of bandwidth parameter, , is appropriate for a given data structure.

6 Simulation study

6.1 Study set up

In this section, we evaluate the performance of the smooth inference framework introduced in Section 4.2 via simulation. We do not consider the local estimation approach of Section 4.1 here, since our proposed local estimates are only meant as a means of assessing smooth SPAR estimates when the true values are unknown.

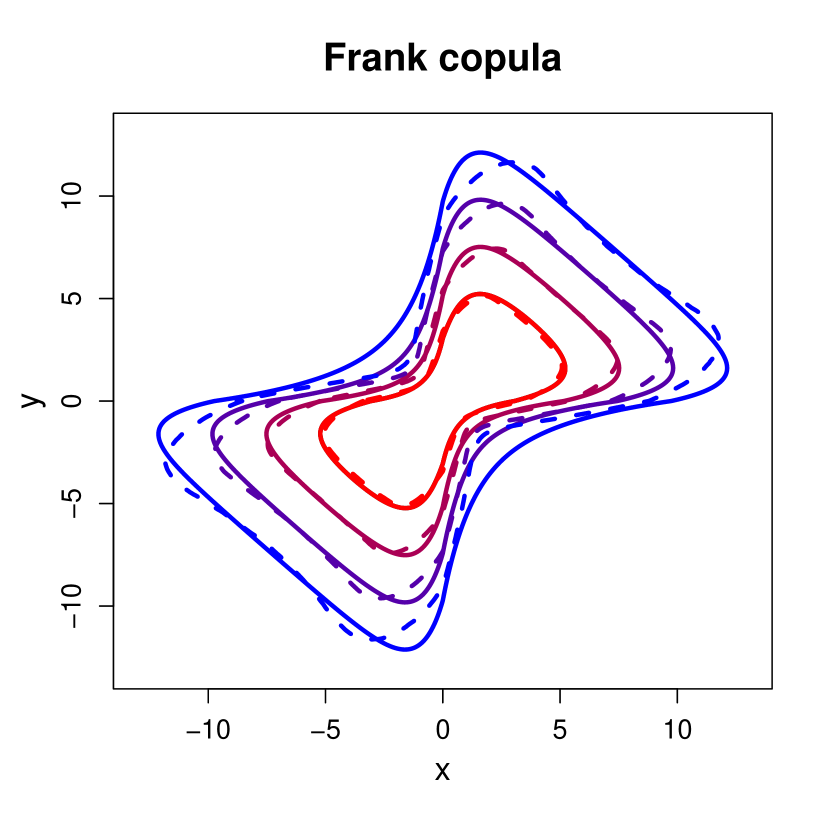

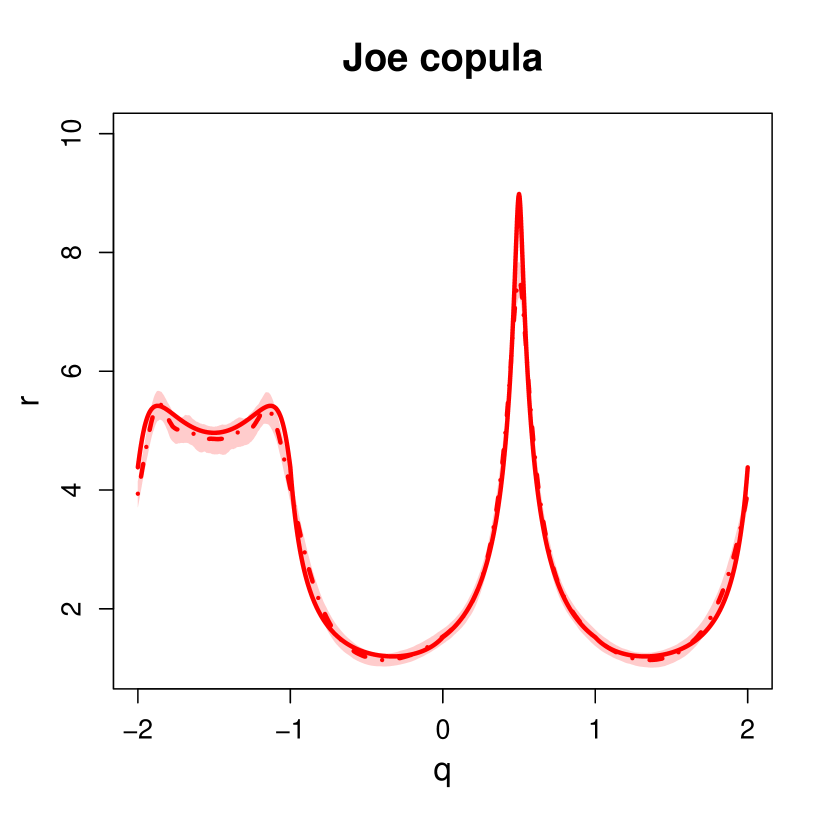

We consider four copulas on standard Laplace margins; Gaussian, Frank, t and Joe, as defined in the Supplementary Material. These distributions represent a range of dependence structures. Note that analogous dependence coefficients to can be defined to quantify the strength of extremal dependence in other regions of the plane [see 36]. In the following, we denote the four quadrants of as . For , the Gaussian copula has intermediate dependence in and [22], and negative dependence in and . The Frank copula is AI in all quadrants, whereas the t copula is AD in all quadrants. Finally, the Joe copula is AD in , negatively dependent in and , and AI in . Samples from each copula are shown in Figure 6.1, together with the corresponding values of the copula parameters used in the simulation studies. In each case, the sample size is . One can observe the variety in dependence structures, as evidenced by the shape of data clouds. For the distributions considered here, the asymptotic shape parameter function is , , and the asymptotic scale parameter functions can be derived analytically [see 36]. The true values of the threshold functions and angular density functions can be calculated using numerical integration. Hence, in all cases, the target values for the SPAR model parameters are known.

To evaluate performance, we simulate samples from each distribution and for every sample, apply the methods outlined in Section 3 and 4.2 to estimate all SPAR model components. Using these estimates, we compute isodensity contours, defined as for some . In particular, we consider ; the corresponding true contours for each distribution are given in Figure 6.1. These density values represent regions of low probability mass, corresponding to joint extremal behaviour. Moreover, estimates of the joint density are appropriate for evaluating the overall performance of the SPAR model, since in practice, capturing the joint density is crucial for ensuring one can accurately extrapolate into the joint tail.

Alongside isodensity contours, we also compare the estimated GP scale parameter functions and angular density functions to their corresponding target values. For the former, we remark that for each copula, the conditional radial distribution only converges to a GP distribution in limit as , implying we are unlikely to accurately estimate the asymptotic GP parameter functions for finite samples; see Mackay and Jonathan, [36]. We remark that even with this caveat, we still obtain high quality estimates for the isodensity contours at extreme levels.

Uncertainty in the estimation procedure is quantified by adapting the procedure of Section 5.1 across the simulated samples. This allows us to compute median estimates and confidence intervals for isodensity contours, scale functions and angular density functions.

Although the choice of coordinate system does not affect whether the SPAR model assumptions are satisfied, the asymptotic SPAR parameter functions do depend on the coordinate system. Since smooth, continuous splines are used to represent the GP parameter functions, the choice of coordinate system may affect the quality of model fits. The simulation study is therefore conducted using both and polar coordinates.

6.2 Tuning parameters

We first consider the tuning parameters required for the smooth inference procedure, as outlined in Section 4.2.3. For each copula, the threshold and scale parameter functions appeared to vary in a similar manner over angle. Furthermore, we fix a constant value of the shape parameter with angle, i.e., for all , since for each copula, the tail behaviour remains constant over angles [36]. As discussed in Section 7, this is not true in the general case, so fixing to be constant is an additional constraint imposed on the model.

As noted previously, it is better to select a basis dimension that is larger than one would expect to be necessary. We considered a range of dimensions in the interval , and compared the resulting model fits across each of the four copulas. From this analysis, we found that setting was sufficiently flexible for capturing the angular dependence for both the threshold and scale functions.

We are also required to select a non-exceedance probability . As observed in Mackay and Jonathan, [36], each of the four copulas exhibits a different rate of convergence to the asymptotic form. Therefore, different values of may be appropriate for these different dependence structures. However, we instead opt to keep fixed across all copulas. This is for consistency in the estimation framework, as well as to show that even in the case when the model is misspecified, the corresponding inference framework is still robust enough to approximate the true model. We considered a range of values, restricting our attention to the interval , and compared the resulting model fits. As expected, the performance for each copula varied non-homogeneously across values. Ultimately, we found that setting appeared sufficient for approximating the conditional radial tails for each dependence structure. In particular, this value appeared high enough to give approximate convergence to a GP distribution model, without giving a large degree of variability in model estimates.

Finally, for estimation of the angular density, we fix the bandwidth parameter at for each copula. Our results show that for these copula examples, this bandwidth is sufficiently flexible to approximate the true angular density functions across the majority of angles.

6.3 Results

Figure 6.2 compares the median estimates of isodensity contours, obtained using the coordinate system, to the true contours at a range of low density levels; the corresponding plots for the coordinate system are given in the Supplementary Material. For both coordinate systems, one can observe generally good agreement between the sets of contours, suggesting the modelling framework is, on average, capturing the dependence structure of each copula. Furthermore, plots comparing the median estimates from both coordinate systems can also be found in the Supplementary Material. These plots show a similar overall performance for both systems, with perhaps a slight preference for the estimates.

Plots comparing the estimated GP scale parameter functions, and associated confidence intervals, to the known asymptotic functions are given in the Supplementary Material. In some angular regions, the estimated isodensity contours and scale functions from the SPAR model do not agree with the known values; for example, in the region around for the Joe copula. In this case, there is a sharp cusp in the asymptotic GP scale parameter function. Similarly, there is a sharp cusp in the true GP scale parameter for the t copula at . As the inference framework assumes that the scale is a smooth function of angle, this behaviour is not properly captured. Despite the GAM representation not being able to capture these cusps, the overall performance of the estimated SPAR model is still reasonable in these regions. Furthermore, there is poor agreement between the estimated and asymptotic scale functions for the Frank copula. This is likely due to the relatively slow convergence of this distribution to its asymptotic form, and hence the poorer approximation by the GP distribution.

Next, we consider the uncertainty of the isodensity contours for in the radial-angular space. Figure 6.3 shows the median contour estimates, along with estimated 95% confidence intervals, obtained under the coordinate system; the corresponding plots for coordinates are given in the Supplementary Material. One can observe that the true contours are generally well captured within the estimated uncertainty regions. The exception in the density contour for the Frank copula in and , owing to the aforementioned slow rate of convergence to the asymptotic form for this copula.

Finally, we compare median estimates of the angular density functions, alongside estimated confidence regions, to the corresponding true density functions for each copula. These results are given in the Supplementary Material. Overall, we observe close agreement between the estimated and true functions at the majority of angles. However, we note that the KD estimation framework appeared unable to fully capture the modal regions for the Frank, t and Joe copulas; see Section 8 for further discussion.

Overall, when compared to the truth, the SPAR model estimates perform well for each copula. This observation suggests that our proposed inference framework, with appropriate tuning parameters, can capture the extremal dependence structure across a range of copulas with differing dependence classes. This illustrates both the flexibility and robustness of the SPAR approach, and its advantages over many alternative multivariate models.

7 Case Study

In this section, we apply the techniques introduced in Sections 3 and 4 to the data sets A, B and C introduced in Section 1.2. We show that the resulting model fits are physically plausible and capture the complex dependence features of each data set. We also apply the tools introduced in Section 5 to quantify uncertainty and assess goodness of fit; the resulting diagnostics indicate generally good performance.

7.1 Pre-processing

The simulation study in Section 6 considered data on standard Laplace margins. This is because the SPAR model assumptions are satisfied for a wide range of copulas on Laplace margins, and the resulting asymptotic representations are relatively simple [36]. However, the SPAR framework does not pre-suppose any particular choice of margins, and Mackay and Jonathan, [36] also showed that SPAR representations arise for random vectors with bounded and heavy tailed margins.

For the case of the metocean data sets, the margins are unknown. In practice, estimation of the marginal distributions, as is common in many extreme value analyses, introduces a high degree of additional modelling uncertainty, and poor marginal estimates affect the quality of the resulting multivariate inference [60]. Therefore, we opt not to transform the margins of the metocean time series, and to instead fit the SPAR model on the original scale of the data. With suitable selections of tuning parameters, we demonstrate below that our inference framework is flexible enough to capture the observed extremal dependence structures for the metocean data sets without the need for marginal transformation.

When modelling phenomena (such as ocean waves) in the Earth’s environment, physical constraints (e.g. limited capacity for energy transfer from surface wind caused by atmospheric low pressure systems, wave steepness limits) typically support the assumption that tails of marginal distributions of random variables are bounded. When variables are presented on different physical scales (e.g. mm vs. km), we are careful to standardise them to zero mean and unit standard deviation prior to analysis. For variables which are believed to have unbounded tails, we would transform to common margins prior to analysis; in this case, as outlined in [36], we favour transformation to common standard Laplace margins.

An important consideration for the SPAR model is where to place the origin of the polar coordinate system. When using Laplace margins, a natural choice is to locate the origin at . When working on the original scale of the data, the choice is less clear. One option would be to place the polar origin at . However, this would restrict the range of angles for which the SPAR model offers a useful representation, since both variables we are considering here take only positive values. To account for this, we normalise the data to have zero mean and unit variance, and select the polar origin at in the normalised variable space. Define normalised variables , where and denote the estimated means and standard deviations of , respectively.

We henceforth assume that for each data set, the normalised joint density function, , satisfies the assumptions of the SPAR model, namely that the conditional radial variable converges to a generalised Pareto distribution, in the sense of Equation 2.2, and that the functions , , and satisfy the finiteness and continuity assumptions of Section 2.2. We then apply the statistical techniques introduced in Sections 3, 4 and 5; these results are presented in Section 7.3. Throughout this section, we present all results for the coordinate system; the corresponding results for coordinates are given in the Supplementary Material, with both systems resulting in similar model fits.

We remark that each metocean time series exhibits non-negligible temporal dependence. Following Section 5.1, we apply block bootstrapping throughout this section whenever quantifying uncertainty, with the block size set to days. This block size appeared appropriate to account for the observed dependence in each time series. Note that temporal dependence could alternatively be accounted for by ‘declustering’ the data and only modelling peak values. However, in multivariate applications, what constitutes a ‘peak value’ is ambiguous since the extremes of each variable do not necessarily occur simultaneously; see Mackay et al., [34] and Mackay and de Hauteclocque, [33] for further discussion.

7.2 Tuning parameters

Prior to inference, we must first select each of the relevant tuning parameters for the methodologies discussed in Sections 3 and 4. Since the true dependence features are unknown, we use local estimates of the SPAR model, obtained using the framework of Section 4.1, to inform the choice of basis dimensions for the smooth inference procedure of Section 4.2.

To obtain local estimates, we are required to specify the number of reference angles , the number of order statistics , and the non-exceedance probability . For the first two values, we set and ; we found these values to be adequate to ensure a high degree of coverage over the angular interval , and to give angular windows that appeared approximately stationary. For selecting , we tested a range of probabilities in the interval . Through this testing, we set , since this value appeared to give approximate convergence to a GP tail across the majority of local windows. The same non-exceedance probability is also used for the smooth SPAR model estimates.

With local estimates obtained, we then consider smooth estimation of the SPAR components. Notably, for each of the time series, we observe clear trends in the locally estimated shape parameter function; it would therefore not be appropriate to specify this parameter as constant. This makes sense when one considers the shapes of the data clouds illustrated in Figure 1.4; the radial behaviour varies significantly over angles. Furthermore, both metocean variables are bounded below by ; therefore, we would expect shorter tails in angular directions that intersect the axes. A range of basis dimensions were tested, and from this analysis, we fixed for the threshold and scale functions, and for the shape function. These values appeared to offer adequate flexibility for capturing the trends observed over the angular variable.

Finally, for angular density estimation, we follow Section 6.2 and set the bandwidth . This value offered sufficient flexibility to capture the observed angular distributions.

7.3 Results

Figure 7.1 compares the threshold and parameter function estimates for data set B from the local and smooth inference procedures; the corresponding plots for data sets A and C are given in the Supplementary Material. The shaded regions in this figure denote the 95% bootstrapped confidence intervals for the smooth model fits. One can observe generally good agreement for each component of the SPAR model. We remark that the local estimates appear unstable, and hence unreliable, for certain angles; this is not surprising, given the small sample size of the angular window. However, the general overall agreement suggests the smooth SPAR estimates are accurately capturing the observed dependence features for each data set, providing evidence that the chosen tuning parameters are appropriate.

Following Section 5.2, we compare the median angular density functions from the KD estimation technique with empirical histograms. These comparisons are given in Figure 7.2 for each data set, and one can observe good agreement between the estimated quantities.

Estimates of isodensity contours are shown in Figure 7.3. These joint density contours are given on the original scale of the data, rather than on the normalised scale, and we consider the joint density levels , corresponding to regions of low probability mass. The estimated isodensity contours appear to capture the shape and structure of each data cloud well. Furthermore, we note that the SPAR model appears able to capture the observed asymmetric dependence structures, illustrating the flexibility and robustness of this modelling framework.

To further demonstrate the utility of the SPAR framework, we also use the fitted model to obtain return level sets for each of the data sets. Return level sets are commonly used in ocean engineering for the design of offshore structures. They can be defined in various ways, but are generally defined in terms of marginal probabilities under various rotations of the coordinate axes, or in terms of the probability of an observation falling anywhere outside the set (the so-called ‘total exceedance probability’ of a contour); see Mackay and Haselsteiner, [35]. Papastathopoulos et al., [47] noted that SPAR-type models offer a natural way for total exceedance probability contours to be constructed. For exceedance probability with , the radius of the contour at angle is the quantile of the GP distribution with parameter vector . For any angle , the probability of an observation exceeding this radius is equal to ; consequently, the probability of observing data outside of the resulting contour set is equal to . When observations are independent and the distribution is stationary, we can define such sets in terms of return periods; given a number of years , the -year return level set is the set corresponding to the probability , where denotes the number of observations per year. One would expect to observe data points outside of the return level set once, on average, every years. Given the temporal dependence observed within the metocean data sets, we note that such an interpretation is not possible due to clustering of extreme events, and as such these estimates are conservative [34]. However, the resulting return level sets can still provide a useful summary of joint extreme behaviour.

Plots of estimated median year return level sets for each data set are given in Figure 7.4, along with 95% bootstrapped confidence intervals. These sets, obtained by computing GP distribution quantiles from the fitted model, appear sensible in shape and structure when compared to the data cloud. Moreover, given the lengths of observation windows of each data set, we would not expect to observe many datapoints outside of the return level set; this is clearly true in every case. Furthermore, a comparison of return level sets from the two coordinate systems is given in the Supplementary Material, where one can observe generally good agreement between the estimated sets

We note that simulation from the SPAR model is straightforward; a simulation scheme is given in the Supplementary Material, alongside examples for each of the metocean data sets.

Inspection of the local (angular) QQ plots introduced in Section Section 5.2 suggests that the performance of the fitted SPAR model varies across different angular regions. Although we observe generally good agreement between quantiles, there is clearly better agreement for certain angles, suggesting rates of convergence to the GP tail model may vary over angle for these data sets.

8 Discussion

In this paper, we have introduced a novel inference framework for the SPAR model of Mackay and Jonathan, [36]. We have explored the properties of this framework, and introduced practical tools for quantifying uncertainty and assessing goodness of fit. Furthermore, we have applied this framework to simulated and real data sets in Sections 6 and 7, with results indicating that the proposed framework captures joint tail behaviour across a wide range of data structures. Moreover, this framework has been recently applied in Mackay et al., [37], where the authors show the SPAR model can accurately capture joint extremes of wind speeds and wave heights, and extreme response distributions for a variety of metocean data sets. Our proposed modelling framework is one of the first multivariate extreme value modelling techniques that can be applied without marginal transformation, offering an advantage over competing approaches and removing a significant degree of model variability.

Noting that the SPAR model has only been developed recently, this work is the first attempt to apply this modelling framework in practice, and it is likely that other inference approaches will follow. While our proposed framework performs well in general, we acknowledge there exist some shortcomings that could provide the motivation for future work.

The results from Section 6 indicate that the proposed angular density estimation framework from Section 3 performs poorly for some copulas in regions around the angular mode(s). However, we note that even with this caveat, the KD estimation framework appeared adequate for capturing the angular distribution for the observed data sets in Section 7. Future work could explore whether using alternative angular density estimation approaches [e.g., 17, 51] could further improve performance.

Observe that for the AD copulas considered in Section 6, the true isodensity functions exhibit clear cusps, where the underlying GP scale function is non-differentiable. Such sections cannot be captured under the current framework, since the use of cyclic cubic splines for smooth estimation imposes differentiability at all angles. Future work could explore how such behaviour could be captured in the inference framework. For example, one could use a spline representation that allows for superimposed knots. Combined with a more general spline inference procedure, this alternative representation could allow for optimal estimation of both the number and locations of knots, while simultaneously giving cusps in the estimated SPAR functions [20].

From Section 7, one can observe that for the estimated isodensity contours and return levels obtained using the coordinate system, there exist distinct cusps at certain angles; these arise due to the square shape of . We acknowledge that such cusps are not realistic for practical applications, and consequently, estimates from the coordinate system may be preferable in such settings.

When estimating the shape parameter functions in Section 7, we did not impose any functional constraints, even though the variables considered must have finite lower and upper bounds, and hence cannot be in the domain of attraction of a GP distribution with a non-negative shape parameter. However, even without bounding the shape parameter, we note that across all of our model fits, the estimated shape functions were almost always homogeneously negative, indicating the proposed framework is flexible enough to detect the form of tail behaviour directly from the data. Future work could explore whether imposing physical constraints on the shape function improves the quality of model fits.

Following on from Section 7.3, it appears that having one non-exceedance probability for all angles may not be optimal for fitting the SPAR model in practice due to different rates of convergence at different angles. Exploring techniques for selecting and estimating threshold functions with varying rates of exceedance [e.g., 44] remains an open area for future work. More generally, the results in Section 6 demonstrate that SPAR inference performs well for extremes from the known bivariate distributions considered. In Section 7, we demonstrate that SPAR inference generates physically reasonable estimates of extremes from real metocean data sets, and have provided diagnostic evidence that SPAR model fits are reasonable. It would be useful in future to compare the characteristics of SPAR estimates against those from competitor schemes, particularly those which require a two-stage inference of first estimating marginal extreme value models and transformation to some standard marginal scale of choice, followed by estimation of a dependence model on standard scale. Of course, these comparisons will only be possible for the intervals of the angular domain where the two-stage model is valid. In contrast, SPAR inference is useful on the full angular domain, using variables standardised to zero mean and unit variance.

In the current work, we have chosen to use particular approaches to estimate each of the angular and conditional radial models. The non-stationary extreme value literature provides a range of alternative representations, used routinely in environmental applications and in ocean engineering in particular (see e.g. Jones et al., 25, Randell et al., 51, Zanini et al., 73). Various software tools are also available for the task, including [57] and [61].

As discussed in Sections 3 and 6, SPAR inference involves the specification of various tuning parameters, regulating the characteristics of the angular and radial models estimated. In fact, a SPAR inference is computationally the same as a non-stationary extreme value inference. Indeed, studies (e.g. Jones et al., 25, Tendijck et al., 59) have already been conducted to evaluate the relative performance of different representations for the tail of the conditional radial component, and its sensitivity to tuning parameter setting. Nevertheless, future studies are recommended to assess the sensitivity of SPAR inference to choice of tuning parameter.

We have restricted attention to the bivariate setting throughout this work. This decision was made for simplicity, as well as the fact many of the examples given in Mackay and Jonathan, [36] are for bivariate vectors. Using the proposed inference techniques as a starting point, a natural avenue for future work would therefore be expanding the modelling framework to the general -dimensional setting.

A non-stationary SPAR model for the joint behaviour of extremes of variables which are non-stationary with respect to angular covariate can be constructed relatively straightforwardly. For example, we might adopt a SPAR representation (, say) for , and a 2-D basis representation for smooth functions on the angular domain (using e.g splines, see Wood, 67, Randell et al., 51, Youngman, 71) and a generalised Pareto conditional tail for . In an environmental context, this would be an appealing model for significant wave height and period, non-stationary with respect to wave direction.

Finally, we note that in Mackay and Jonathan, [36], the authors also derive a link between the SPAR model and the limit set representation for multivariate extremes. Specifically, the radius of the limit set at a fixed angle is given by the asymptotic shape parameter of the SPAR representation. We believe the inference approach we have proposed could be adapted for the estimation of limit sets, though additional care will be required given estimates obtained from finite sample sizes seldom equal limiting asymptotic quantities in practice.

Supplementary Material

-

•

Supplementary Material for “Inference for multivariate extremes via a semi-parametric angular-radial model”: File containing supporting figures and additional information about the REML procedures and simulation study. (.pdf file)

Declarations

Ethical Approval

Not Applicable

Availability of supporting data

The data sets analysed in Section 7 are freely available online at https://github.com/EC-BENCHMARK-ORGANIZERS/EC-BENCHMARK.

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Funding

This work was supported by EPSRC grant numbers EP/L015692/1 and EP/Y016297/1.

Authors’ contributions

All authors contributed to the study conception and design. Model development was performed by Ed Mackay and Phil Jonathan. Material preparation and initial analysis were performed by Callum Murphy-Barltrop. The first draft of the manuscript was written by Callum Murphy-Barltrop and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Acknowledgments

This paper is based on work partly completed while Callum Murphy-Barltrop was part of the EPSRC funded STOR-i centre for doctoral training (EP/L015692/1). Ed Mackay was funded by the EPSRC Supergen Offshore Renewable Energy Hub, United Kingdom (EP/Y016297/1). We would like to thank Ben Youngman for his assistance with the evgam package in the R computing language.

Supplementary Material to ‘Inference for bivariate extremes via a semi-parametric angular-radial model’

S1 Additional information for Section 4.4.2

In this section, we provide further details about the REML schemes used to estimate the GAM parameters associated with the threshold and parameter functions. We first consider the GAM formulation of the threshold function , which we estimate via quantile regression techniques. For finite sample sizes, conditional quantile regression requires us to compute

| (S1) |

where denotes the spline coefficients associated with , and for and for [28]. However, optimisation of (S1) is non-trivial and computational issues often result due to the non-differentiability of at zero.

To overcome these computational issues, Youngman, [71] proposed the following mis-specified model

with corresponding density function

where and denotes the modified check function of Oh et al., [45]. This check function is defined as

for , where is chosen close to zero. Unlike the standard loss function , is differentiable at zero, offering significant advantages for inference in terms of speed and computational efficiency; see Oh et al., [45] for further details. For our framework, we set ; this is the default value suggested in Youngman, [70].

Setting , where denotes a GAM formulation from equation (4.5) of the main article, and letting denote the associated coefficient vector, the log-likelihood associated with the mis-specified model given of equation (S1) is given by

| (S2) |

Treating as a nuisance parameter function, maximisation of equation (S2) with respect to is equivalent to minimisation of equation (S1). Consequently, the resulting estimate for gives an estimate of the conditional quantile function for . For further details, along with detailed examples, see Yu and Moyeed, [72] and Geraci and Bottai, [16]. Note that the same spline basis dimensions are used for both and .

Given an estimate of , let denote the observed indices of threshold exceedances. Recalling the GAM formulations for the GP scale and shape, and , the log-likelihood function of the GP tail model is given by

| (S3) |

where , , and denote the coefficient vectors associated with and , respectively. Minimising equation (S3) with respect to and results in estimates of the parameter functions.

S2 Additional information and plots for Section 6

In Section 6 of the main article, we consider four copula examples. The first is the Gaussian copula; given , termed the Pearson correlation coefficient, this is given by

where is the bivariate Gaussian cumulative distribution function with correlation matrix and is the inverse of the standard univariate Gaussian cumulative distribution function. The parameter controls the form and strength of dependence.

Secondly, for any , the Frank copula is defined as

with the parameter controlling the strength and form of dependence.

Next, for any and , the t copula is given by

where is the bivariate t cumulative distribution function with correlation matrix and degrees of freedom, and is the inverse of the univariate t cumulative distribution function with degrees of freedom. The strength of dependence in the tails is controlled by both and [4].

Finally, given any , the Joe copula is given by

where controlling the strength of dependence.

For each copula, we simulate data on standard Laplace margins, for which the marginal distribution is given by

| (S4) |

To achieve this, we first simulate data from each copula on standard uniform margins using the copula package in the R computing language. We then transform this data to standard Laplace using the inverse of equation (S4).

Figure S1 compares the median estimates of isodensity contours, obtained using the coordinate system, to the true contours at a range of low density levels.

Figure S2 compares the median isodensity contours estimates from the two coordinate systems for two density levels . One can observe that the differences between the sets of median estimates are negligible.

Figures S3 and S4 illustrate the median scale parameter estimates, with confidence intervals, for the and coordinate systems, respectively. Considering the fact estimates were computed using finite sample sizes, these plots illustrate reasonable agreement between the estimated and asymptotic scale parameter functions in most cases. One notable exception is the Frank copula, for which the estimated scale functions perform poorly. This is likely due to the relatively slow convergence of this distribution to its asymptotic form, as discussed in Mackay and Jonathan, [36]. Furthermore, the majority of the estimates obtained over the simulated data sets are slightly negative. This discrepancy from the asymptotic value () partly explains why the estimated scale functions estimates are biased high in most cases, since the scale and shape parameters are negatively correlated under the maximum likelihood framework [21].

Figure S5 illustrates the median contour estimates for on the radial-angular scale for the coordinate system, along with estimated 95% confidence intervals. As with the coordinates, the estimated confidence intervals capture the true contours in most cases.

Figures S6 and S7 compare the true and estimated angular density functions for the and coordinate systems, respectively. One can observe generally good agreement for both coordinate systems. We note that the KD estimation framework appears unable to fully capture the modal regions for the Frank, t and Joe copulas. We observed a marginal improvement in performance for these regions when the bandwidth parameter, , was decreased; however, this significantly increased the variability in the resulting angular density estimates, and consequently, we opted to keep fixed at . Moreover, we note that even for extremely small bandwidth parameters, the proposed KD framework was unable to approximate the modal behaviour of the Joe copula, suggesting the sample size () is not large enough to fully capture the true density function for this particular example.

S3 Additional plots for Section 7

Figures S1 and S2 compare the smoothly and locally estimated threshold and parameters functions for data sets A and C, respectively, under the coordinate system. One can observe generally good agreement for each component of the SPAR model.

Figures S3, S4 and S5 compare the smoothly and locally estimated threshold and parameters functions for data sets A, B and C, respectively, under the coordinate system. As with the coordinates, we obtain good agreement for each model component.

Figure S6 compares the estimated median angular density functions, and corresponding confidence intervals, with the histograms for each data set under the coordinate system. The two sets of estimates are in good agreement, providing evidence that the chosen bandwidth parameter is appropriate.

Figure S7 illustrates the estimated median isodensity contours, and corresponding uncertainty regions, for the density levels under the coordinate system. As with the coordinates, the estimated contours capture the shape and structure of each data set.

Figure S8 illustrates the estimated median year return level sets for each data set, along with 95% bootstrapped confidence intervals, obtained using the coordinate system. The estimated sets appear to capture the features of the observed data, and we observe only a handful of observations outside of the return level set for each data set.

Figure S9 compares the estimated year return level sets from the two coordinate systems for each of the data sets. One can observe generally good agreement between the two systems, although we note some small differences in the estimates for data set A at certain angles. The overall agreement, however, provides evidence of consistency between the two modelling approaches.

As noted in the main article, fitted SPAR models can be used to simulate new observations. This simulation is straightforward. We start by generating a random number , uniformly distributed in . A random angle can then be calculated by applying the probability integral transform so that , where denotes the estimated distribution function of . A corresponding radial value , is then simulated as a random value from the GP distribution with parameter vector . The resulting pair is then a random sample from the SPAR model. Figure S10 and S11 illustrate simulated data points for each metocean data set, overlayed on top of the observed time series, for the and coordinate systems, respectively. For each data set, we simulated the same number of new observations as the original sample size. One can observe that the simulated data sets closely resemble the threshold exceeding observations. Moreover, these plots illustrate the regions for which the SPAR model is valid.

Figures S12, S13 and S14 give the local window QQ plots for the SPAR model fits on data sets A, B, and C, respectively, under the coordinate system. The corresponding plots for the coordinate system are given in Figures S15, S16 and S17. We observe generally good agreement between the estimated model and observed quantiles. Note that the step-like behaviour observed in some of the plotted quantiles occurs due to repeated observations in the time series’; these are likely a result of rounding errors in measurement equipment.

References

- Balkema and de Haan, [1974] Balkema, A. A. and de Haan, L. (1974). Residual Life Time at Great Age. The Annals of Probability, 2:792–804.

- Beirlant et al., [2004] Beirlant, J., Goegebeur, Y., Teugels, J., and Segers, J. (2004). Statistics of Extremes. Wiley.

- Castro-Camilo et al., [2018] Castro-Camilo, D., de Carvalho, M., and Wadsworth, J. (2018). Time-varying extreme value dependence with application to leading European stock markets. Annals of Applied Statistics, 12:283–309.

- Chan and Li, [2008] Chan, Y. and Li, H. (2008). Tail dependence for multivariate t-copulas and its monotonicity. Insurance: Mathematics and Economics, 42(2):763–770.

- Chaubey, [2022] Chaubey, Y. P. (2022). Directional Statistics for Innovative Applications, pages 351–378. Springer.

- Chavez-Demoulin and Davison, [2005] Chavez-Demoulin, V. and Davison, A. C. (2005). Generalized additive modelling of sample extremes. Journal of the Royal Statistical Society: Series C (Applied Statistics), 54:207–222.

- Chen, [2017] Chen, Y.-C. (2017). A tutorial on kernel density estimation and recent advances. Biostatistics & Epidemiology, 1:161–187.

- Davis et al., [1988] Davis, R. A., Mulrow, E., and Resnick, S. I. (1988). Almost sure limit sets of random samples in . Advances in Applied Probability, 20(3):573–599.

- Davison and Smith, [1990] Davison, A. C. and Smith, R. L. (1990). Models for Exceedances Over High Thresholds. Journal of the Royal Statistical Society. Series B: Statistical Methodology, 52:393–425.

- de Haan and de Ronde, [1998] de Haan, L. and de Ronde, J. (1998). Sea and Wind: Multivariate Extremes at Work. Extremes, 1:7–45.

- de Haan and Ferreira, [2006] de Haan, L. and Ferreira, A. (2006). Extreme value theory: an introduction, volume 3. Springer.

- de Haan and Resnick, [1987] de Haan, L. and Resnick, S. I. (1987). On regular variation of probability densities. Stochastic processes and their applications, 25:83–93.

- Diaconu et al., [2021] Diaconu, D. C., Costache, R., and Popa, M. C. (2021). An Overview of Flood Risk Analysis Methods. Water, 13(4).

- Fisher, [1969] Fisher, L. (1969). Limiting Sets and Convex Hulls of Samples from Product Measures. The Annals of Mathematical Statistics, 40:1824–1832.

- García–Portugués, [2013] García–Portugués, E. (2013). Exact risk improvement of bandwidth selectors for kernel density estimation with directional data. Electronic Journal of Statistics, 7:1655–1685.

- Geraci and Bottai, [2007] Geraci, M. and Bottai, M. (2007). Quantile regression for longitudinal data using the asymmetric Laplace distribution. Biostatistics, 8:140–154.

- Gu, [1993] Gu, C. (1993). Smoothing Spline Density Estimation: A Dimensionless Automatic Algorithm. Journal of the American Statistical Association, 88:495–504.

- Hall et al., [1987] Hall, P., Watson, G. S., and Cabrera, J. (1987). Kernel Density Estimation with Spherical Data. Biometrika, 74:751.

- Haselsteiner et al., [2021] Haselsteiner, A. F., Coe, R. G., Manuel, L., Chai, W., Leira, B., Clarindo, G., Soares, C. G., Ásta Hannesdóttir, Dimitrov, N., Sander, A., Ohlendorf, J. H., Thoben, K. D., de Hauteclocque, G., Mackay, E., Jonathan, P., Qiao, C., Myers, A., Rode, A., Hildebrandt, A., Schmidt, B., Vanem, E., and Huseby, A. B. (2021). A benchmarking exercise for environmental contours. Ocean Engineering, 236:1–29.

- Hastie et al., [2009] Hastie, T., Tibshirani, R., Friedman, J. H., and Friedman, J. H. (2009). The elements of statistical learning: data mining, inference, and prediction, volume 2. Springer.

- Hosking and Wallis, [1987] Hosking, J. R. and Wallis, J. R. (1987). Parameter and quantile estimation for the generalized Pareto distribution. Technometrics, 29:339–349.

- Hua and Joe, [2011] Hua, L. and Joe, H. (2011). Tail order and intermediate tail dependence of multivariate copulas. Journal of Multivariate Analysis, 102(10):1454–1471.

- Joe, [1997] Joe, H. (1997). Multivariate Models and Multivariate Dependence Concepts. Chapman and Hall/CRC.