Industrial Anomaly Detection with Domain Shift: A Real-world Dataset and Masked Multi-scale Reconstruction

Abstract

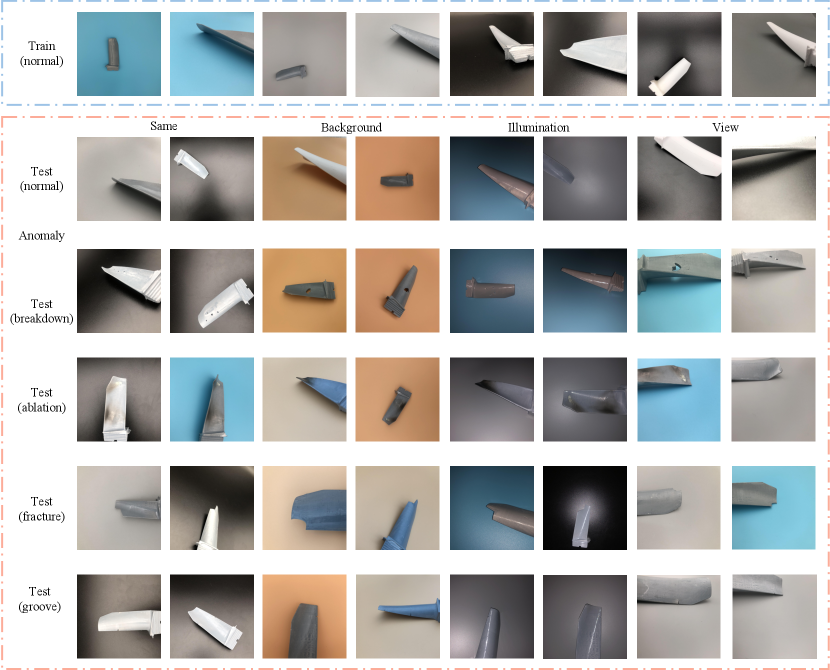

Industrial anomaly detection (IAD) is crucial for automating industrial quality inspection. The diversity of the datasets is the foundation for developing comprehensive IAD algorithms. Existing IAD datasets focus on diversity of data categories, overlooking the diversity of domains within the same data category. In this paper, to bridge this gap, we propose the Aero-engine Blade Anomaly Detection (AeBAD) dataset, consisting of two sub-datasets: the single-blade dataset and the video anomaly detection dataset of blades. Compared to existing datasets, AeBAD has the following two characteristics: 1.) The target samples are not aligned and at different scales. 2.) There is a domain shift between the distribution of normal samples in the test set and the training set, where the domain shifts are mainly caused by the changes in illumination and view. Based on this dataset, we observe that current state-of-the-art (SOTA) IAD methods exhibit limitations when the domain of normal samples in the test set undergoes a shift. To address this issue, we propose a novel method called masked multi-scale reconstruction (MMR), which enhances the model’s capacity to deduce causality among patches in normal samples by a masked reconstruction task. MMR achieves superior performance compared to SOTA methods on the AeBAD dataset. Furthermore, MMR achieves competitive performance with SOTA methods to detect the anomalies of different types on the MVTec AD dataset. Code and dataset are available at https://github.com/zhangzilongc/MMR.

1 Introduction

Industrial anomaly detection (IAD) involves the identification and localization of anomalies in industrial processes, ranging from subtle changes like thin scratches to larger structural defects like missing components [4], with limited or no prior knowledge of abnormality. IAD has numerous applications, such as smart manufacturing processes that ensure the production of high-quality products and automated maintenance that relies on robots. Collecting sufficient abnormal samples for training is often difficult since industrial processes are generally optimized to minimize the production of defective products [42]. Additionally, since industrial processes are affected by uncontrollable random factors, different types of abnormal samples may be produced [49, 28]. In other words, various and sufficient abnormal samples are generally difficult to obtain. Therefore, IAD is typically conducted in a one-class learning setting, where only normal 111In this paper, normal is by definition an antonym of abnormal. data is used.

Existing IAD methods [33, 12, 21, 35, 44, 36, 47] primarily rely on datasets such as MVTec AD [4], VisA [52], and MVTec LOCO AD [3], which share some common characteristics, such as aligned samples 222Some images of other objects in the VISA dataset depict not registered objects. and consistent scale within each class (as shown in Figure 1). However, in real-world automated product/component inspection scenarios, these conditions may not always hold. Factors such as inaccurate control or environmental changes can cause significant variations in object scale and viewpoint [29, 19]. This suggests that the mild conditions provided by existing datasets may not fully capture the complexities of real-world IAD applications. As a result, it is unclear whether current IAD methods can achieve the same level of success under such complex conditions, which raises important questions for the future development and evaluation of IAD techniques.

To address the aforementioned problem and bridge the gap between the real-world application of IAD and current datasets, we present the Aero-engine Blade Anomaly Detection (AeBAD) Dataset in this paper. The aim of AeBAD is to automatically detect abnormalities in the blades of aero-engines, ensuring their stable operation. Unlike previous datasets that focus on detecting diversity of defect categories, AeBAD is centered on the diversity of domains within the same data category. AeBAD consists of two sub-datasets: the single-blade dataset (AeBAD-S) and the video anomaly detection of blades (AeBAD-V). AeBAD-S comprises images of single blades of different scales, with a primary feature being that the samples are not aligned. Furthermore, there is a domain shift between the distribution of normal samples in the test set and the training set, where the domain shifts are mainly caused by the changes in illumination and view. Some examples are shown in Figure 1. AeBAD-V, on the other hand, includes videos of blades assembled on the blisks of aero-engines, with the aim of detecting blade anomalies during blisk rotation. A distinctive feature of AeBAD-V is that the shooting view in the test set differs from that in the training set. The partial frames of videos are shown in Figure 1. AeBAD-V is highly consistent with the real-world need for aero-engine blade detection, emphasizing the significance of IAD.

After constructing the AeBAD dataset, we evaluate the state-of-the-art (SOTA) IAD methods and make the following observations. Firstly, methods that rely on synthetic anomalies perform poorly when the samples are not aligned and at different scales, as most of the synthetic anomalies are distributed in non-target areas such as the background. This reduces the difficulty of discriminating normal samples from synthetic anomalies, resulting in poor generalization to unseen anomalies. Secondly, when the domain of normal samples in the test set shifts, the abnormal score of normal parts of the samples increases for all test methods, leading to a large number of false positives. This is because some current methods rely heavily on modeling the distribution of normal samples in the training set, which causes a mismatch between the features of the normal samples in the training set and those in the test set when the distribution changes. We believe that the root cause of this problem is the neglect of causality in normal samples.

Inspired by the above observations and analyses, we propose a method entitled Masked Multi-scales Reconstruction (MMR) for reconstructing multi-scale features of full images from masked inputs. Our method is free from synthetic anomalies and thus bypass the one of the above problems. Simultaneously, the pretext task of MMR enhances the model’s perception of causality in normal samples, which improves the robustness to domain shifts. In contrast to existing inpainting methods, our method focuses on reconstructing features at different scales rather than tanglesome details, which provides the output with greater semantic clarity. Moreover, our masked strategy is different with the previous, where our masked parts are completely hidden. We will demonstrate that this masked strategy can significantly improve the performance of anomaly detection. Our experiments show that MMR outperforms the state-of-the-art (SOTA) methods in terms of reducing false positives and boosting anomaly detection performance on the AeBAD dataset. Additionally, MMR achieves comparable performance with SOTA methods on the MVTec AD dataset.

The main contributions of this paper are as follows:

-

1.

We recognize the gap between the actual application of IAD and the available datasets, and thus introduce a new dataset named AeBAD. Unlike previous datasets, AeBAD takes into account various practical factors such as different scales and views, which induces a domain shift for normal samples in the test set. This dataset closely aligns with the requirements for detecting anomalies in aero-engine blades and highlights the practical significance of IAD. To the best of our knowledge, this is the first industrial anomaly detection dataset that accounts for domain shifts, making it more representative of real-world scenarios.

-

2.

We observe that existing SOTA methods for IAD exhibit limitations when the domain of normal samples in the test set undergoes a shift. To address this issue, we propose a novel method called MMR, which enhances the model’s capacity to deduce causality among patches in normal samples. Our method achieves superior performance compared to SOTA methods on the AeBAD dataset. Furthermore, MMR achieves competitive performance with SOTA methods on the MVTec AD dataset.

The rest paper is organized as follows. Section 2 reviews some related works. Section 3 describes our dataset and show the deficiency of current methods. The proposed MMR is given in Section 4. Section 5 presents the experimental results. Section 6 ablates the factors that are closely related to MMR. Section 7 presents the limitation of our work and the future work. A conclusion is presented in Section 8.

2 Related Work

We first review existing datasets for IAD. Then, we give an overview of relevant approaches to IAD. More comprehensive surveys of IAD can be found in [20, 9, 39]. In this paper, we do not review the methods and datasets related to semantic anomalies.

2.1 Datasets

The success of current deep learning methods heavily relies on the diversity of data distribution. This diversity encompasses a large number of data categories, such as ImageNet [34], as well as different domains of the same data category, such as NICO [50] and OOD-CV [51]. Evaluating the performance of target algorithms on datasets with different domains is a crucial step in applying them in real-world scenarios. Despite the availability of several datasets containing various industrial products for the task of IAD, like MVTec AD [4], DAGM [40], KSDD [38] and SensumSODF [31], to the best of our knowledge, all of them overlook the presence of domain shift between the training and test sets, which exists in crack segmentation [25], railway track maintenance using robotic vision [32], surface defect detection [37] and etc. Addressing this issue and considering domain shift is vital for developing more robust and effective IAD algorithms that can be reliably applied in practical scenarios.

Bergmann et al. [4] proposed the MVTec Anomaly Detection dataset (MVTec AD), which focuses on detecting subtle changes and larger structural defects. The main feature of MVTec AD is that all objects are roughly aligned, as shown in Figure 1. Zou et al. [52] proposed the Visual Anomaly (VisA) Dataset, which considers more complex structures and presents multiple objects in a single image. The recent proposed MVTec Logical Constraints Anomaly Detection (MVTec LOCO AD) dataset [3] aims to detect logical anomalies in addition to structural anomalies. Additionally, Bergmann et al. [6] introduced a 3D dataset for IAD.

However, all existing datasets ignore the presence of domain shift between the training and test sets. In this paper, inspired by the real-world application of aero-engine blade anomaly detection, we propose a novel dataset that includes domain shifts between the training and test sets. These domain shifts encompass different views and illuminations, and our proposed dataset can address some of the deficiencies of current datasets.

2.2 Methods

While there are different types of setups in IAD, such as normal data mixed with few noisy data (anomalies) [41], this paper focuses on practical applications and reviews only one-class learning in IAD, where only normal data is available in training. We categorize these methods into three categories based on the formulation of anomaly detection.

The first category formulates anomaly detection as a matching problem. These methods establish a pattern based on normal data and match the pattern of test data with the established pattern during testing. For example, PaDiM [10] establishes the distribution of every patch of normal data and calculates the likelihood of test data to detect anomalies. Similarly, FastFlow [45] and CFLOW-AD [14] model the normalizing flows of normal data. PatchCore [33] builds a “database” of multi-scale features and matches the test data through k-nearest neighbor search. MKDAD [35] and ReverseKD [12] match the general pattern generated by the teacher network with the pattern of normal data generated by the student network to detect anomalies.

The second category hopes the model can generalize the ability that distinguishes between normal and synthetic abnormalities to distinguish unseen abnormalities. The main difference among these methods is the construction of synthetic anomalies. For instance, CutPaste [21] crops the parts of a certain object into different objects to synthesize anomalies. NSA [36] uses Poisson image editing to seamlessly clone an object from one image into another image, which can significantly reduce discontinuities. DRAEM [47] generates anomalies based on the mask generated by perlin noise and an additional dataset.

The third category includes methods based on reconstruction. These methods [7, 11, 27] rely on the hypothesis that reconstruction models trained on normal samples only succeed in normal regions but fail in anomalous regions [44]. The main problem with this idea is that the model could learn tricks that anomalies are also restored well. To tackle this problem, different strategies are carried out. RIAD [48] and InTra [30] propose a masked image inpainting pretext task. Since some parts of the image are masked out, the model needs to perceive the causality in the image to recover the information of masked parts, which avoids the trivial solution. UniAD [44] proposes a neighbor masked encoder and a layer-wise query decoder to prevent the ”identical shortcut”.

The methods most related to our work are RIAD [48] and InTra [30]. The main differences are the following. First, we neglect to reconstruct intricate details in the full image and instead reconstruct features at different scales. This empowers the output of the model with more semantic information. Second, our masked strategy is different, where the masked parts are completely hidden, resulting in the complete removal of features in the masked parts, while in the previous methods, these features are shared with the features of the visible parts.

3 Aero-engine Blade Anomaly Detection Dataset

3.1 Background and Significance

The aero-engine is the heart of an airplane, and its healthy blades are critical for ensuring stable operation. Defects in aero-engine blades can significantly impact the engine’s efficiency or even cause it to fail [22]. Due to their high cost and infrequent occurrence, obtaining a variety of blades with different defects (anomalies) can be difficult. As a result, blade defect detection is often approached as a one-class anomaly detection problem. In real-world blade detection scenarios, images of blades acquired by borehole instruments can vary in view and illumination, depending on the location of the borehole on the aero-engine. Since the aero-engine to be detected is unknown, the view is also unknown. This motivates us to explore one-class IAD under the domain shift of test set. For meeting the need of the real-world application, the blades in the experiment are either from the real engine or a 1:1 replica.

| Train | Test | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| AeBAD-S | 521 | same | background | illumination | view | |||||

| N | A | N | A | N | A | N | A | |||

| 230 | 459 | 93 | 212 | 75 | 198 | 92 | 280 | |||

| AeBAD-V | 707 | video-1 | video-2 | video-3 | ||||||

| N | A | N | A | N | A | |||||

| 533 | 368 | 251 | 650 | 381 | 520 | |||||

3.2 Description of The Dataset

To better serve the needs of blade anomaly detection in real-world scenarios and bridge the gap between current datasets and practical applications, we introduce the Aero-engine Blade Anomaly Detection (AeBAD) dataset, consisting of two sub-datasets: AeBAD-S and AeBAD-V. AeBAD-S contains images of single blades, while AeBAD-V consists of videos of multiple blades on blisks of aero-engines. The training set of AeBAD only contains normal samples, while the test sets include both normal and abnormal samples. The anomalies of the blade consist of the following four types of defects: breakdown, ablation, groove, and fracture. Breakdown is caused by foreign objects (e.g., sand, metal, birds, hail, etc.), which usually cause the blade to break [43]. Ablation comes from the high temperature gas that the blades are exposed to. Ablation will reduce the performance of the blade. When the ablation reaches a certain level and the performance of the blade material cannot meet the requirements, fracture will occur. Groove is caused by a large number of elastic stress cycles, which will expand causing the fracture of the blade. Some abnormal samples are shown in Figure 2. The statistical overview of AeBAD is listed in Table 1. The total training samples are 1228 and the test samples are 4342. In addition, for all anomalies present in AeBAD-S dataset, we provide pixel-level ground-truth annotations. And for all frames in AeBAD-V dataset, we provide sample-level annotations.

Different from the current datasets, where all images are roughly aligned, the shooting positions of acquired images in the training set of AeBAD-S are sampled from the position of blue dotted lines, which are enclosed by the negative half axis of x, the positive half axis of y, and the positive axis of z. The shooting positions are shown in Figure 3 top. Besides, there are three background colors for the training image: black, gray and blue. Some training samples are shown in the first row of Figure 2. The test set of AeBAD-S is divided into four categories: same, background, illumination, and view. In the ”same” category, the distribution of the normal samples in the test set is the same as that in the training set. The ”background” category involves changes to the image background, while the ”illumination” category involves changes to the lighting conditions. Finally, the ”view” category involves changes to the shooting positions, with the new positions being symmetrical to those in the training set. The shooting positions of the “view” test set are shown in Figure 3 bottom. In the above test sets, there is a domain shift in all except the “same” test set. The samples of different test sets are shown in Figure 2.

AeBAD-V contains videos taken from different viewpoints, and the training set consists of videos taken from four viewpoints, while the test set has videos taken from three viewpoints different from those of the training set. Some frames are shown in Figure 4. The environment in AeBAD-V is more consistent with the real anomaly detection of aero-engine blades compared to AeBAD-S, which is in a simulated environment.

3.3 Deficiencies of The Current Methods

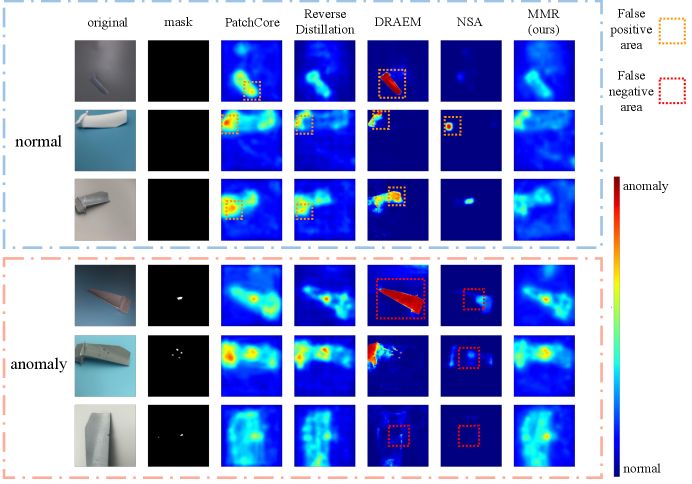

The proposed AeBAD dataset enables us to evaluate the performance of current IAD methods under domain shifts. We evaluate the performance of four SOTA methods on the AeBAD-S dataset: PatchCore [33], ReverseDistillation [12], DRAEM [47], and NSA [36]. It should be noted that in these methods, the anomaly score of an image is the maximum value of the heatmap generated by that image.

The qualitative results of the above methods are shown in Figure 5, and more results are available in Figure 9. In the qualitative results, the red areas are regarded as abnormal areas, and other colors as normal areas. We find the following problems: 1.) the methods based on synthetic abnormalities (e.g., NSA) tend to miss defects and produce false negative areas. For DRAEM, the cause of failure is more complicated, we will analyze it in Section 5. 2.) the methods based on matching, such as PatchCore and ReverseDistillation, tend to incorrectly identify normal regions as abnormal regions under domain shifts, resulting in many false positives.

Regarding problem 1, the reason is that the synthetic anomalies are mostly distributed in background areas as the samples are not aligned and at different scales. This significantly reduces the difficulty of the task of discriminating normal samples and synthetic anomalies, resulting in poor generalization for unseen anomalies. As for problem 2, existing methods heavily rely on modeling the distribution of normal samples in the training set, which creates a discrepancy between the features of normal samples in the training and test sets when the domian shifts. We think that the problem 2 is likely due to a lack of consideration for causality in normal samples.

4 Masked Multi-scales Reconstruction

Motivated by the above observations and analyses, we propose a synthetic anomalies free anomaly detection method entitled Masked Multi-scales Reconstruction (MMR). The overall framework of MMR is shown in Figure 6 (a).

4.1 Details of MMR

MMR consists of two parts. In the first part, as shown in Figure 6 (a) left, the image is evenly divided into several patches (16 for Figure 6), and a certain percentage of these patches are masked while others are preserved, where the patches have equal height and width. The preserved patches are fed into a vision transformer (ViT) [13], which generates embeddings of these patches. The random initialized embeddings of the masked patches are then appended to the embeddings of the preserved patches. A simple feature pyramid network (simple FPN) [23] is used to take the embeddings of all patches as input and generate multi-scale features. In the second part, as shown in Figure 6 (a) right, a frozen pre-trained hierarchical encoder is used to encode the same input used in the first part into multi-scale features. The goal of MMR is to align the multi-scale features produced by the two parts. The underlying motivation for recovering the information on the masked patches is that we hope the model can perceive the dependence of spatial positions of different parts in images. In MMR, the reconstruction task is responsible for focusing on the details, while the masked recovery task is for the causality of various parts.

Architecture of Simple FPN [23] Simple FPN follows the idea in [24, 1]. The target is to build a feature pyramid containing multi-scale features. Different from the previous work [24], which needs to take the features of different scales as the inputs and needs lateral connection, simple FPN only needs the features of final layers of ViT to decode the features of different scales in parallel. The schematic diagram is shown in Figure 6 (b). We use the original architecture in simple FPN [23], which is as follows: the scale which is the same as the features of final layer of ViT uses the ViT’s final feature map. Scale (or ) is built by one (or two) deconvolution layer(s) with stride=2. In the scale case, the first deconvolution is followed by LayerNorm (LN) [2] and GeLU [17]. Then for each pyramid level, simple FPN applys a 1×1 convolution with LN and then a 3×3 convolution also with LN. The dimensions of feature map are the same as the dimensions of the features of different scales in the frozen pre-trained hierarchical encoder. The architecture of simple FPN is shown in Figure 7.

Problem Formulation Formally, in the first part, to handle 2D images, we firstly reshape the original image into a sequence of flattened 2D patches , where is the resolution of the original image, is the number of channels, is the number of patches, and is the resolution of patch. Then, we use the operation to randomly extract flattened patches as non-masked patches, where is masking ratio. After that, we use a ViT to encode the non-masked patches, where each layer of ViT consists of a multi-head self-attention module and a feed forward network. The embeddings of masked patches, which are randomly initialized, are appended to the output of ViT by the operation . Finally, the embeddings of all patches are reshaped into , where the receptive field of every feature on the feature map is . The simple FPN decodes all embeddings to the features of different scales, where denotes the i-th scales. In the second part, a frozen pre-trained hierarchical encoder encodes x into the features of different scales, where are height, width and channels respectively. And the dimensions of are the same as . Note that and are . The final loss function of MMR is as follows:

| (1) |

where denotes the total scales and denotes the k-th row of . Our target is to minimize the Eq. (1) to recover the multi-scale features of masked patches.

In the test stage, MMR takes the original non-masked input (overall image) to the above two parts. The first part and the second part generate the features and respectively. The anomaly map of every scale is calculated as follows:

| (2) |

where is the result of normalized by row, is hadamard product, is a vector whose elements are all 1 and is an operation which reshapes the vector to . For the feature maps with different resolutions, they are uniformly up-sampled to the resolution of original image by bilinear interpolation. Anomaly map: The final anomaly map is the sum of up-sampled . Anomaly score: The anomaly score of one image is the largest value on .

Mechanism of MMR During the training stage, MMR uses normal samples as input and recovers multi-scale features of masked patches based on the information provided by non-masked patches. This enables ViT and simple FPN to capture causal relationships among different parts of normal samples and avoid learning an identical function that is not helpful for anomaly detection, where the causal relationships among different parts mean the dependence of spatial positions of various parts. Specifically, the human can predict the overall position of the blade and some details on the blade by only capturing the part of the root of the blade. In MMR, this process is replaced by predicting the masked patches by the non-masked patches. Mathematically, this process is equivalent to modeling the conditional distribution, where the non-masked part is the condition and the masked part is the target. As mentioned in Section 3.3, PatchCore and Reverse Distillation aim to model the distribution of the normal samples (unconditional distribution), which creates a discrepancy between the features of normal samples in the training and test sets when the domian shifts. Unlike these two methods, MMR models the conditional distribution among different parts. When the domain shifts, the conditional distribution among image patches remains relatively unchanged despite the change in the distribution of the image. This makes MMR relatively insensitive to domain shifts.

During testing, the original non-masked input is used for both parts of the method. Since the frozen pre-trained hierarchical encoder contains the rich semantics, it can perceive the unseen anomalies. And for ViT and simple FPN, since they only model causality in normal sample, it cannot reconstruct the abnormal parts well. This leads to biases in the reconstructed and encoded multi-scale features for anomalies, where the biases correspond to the abnormal parts in the feature map.

| Method | Same | Background | Illumination | View | Mean |

|---|---|---|---|---|---|

| PatchCore [33] CVPR’ 2022 | 75.2 0.3 | 74.1 0.3 | 74.6 0.4 | 60.1 0.4 | 71.0 |

| ReverseDistillation [12] CVPR’ 2022 | 82.4 0.6 | 84.3 0.9 | 85.5 0.9 | 71.9 0.8 | 81.0 |

| DRAEM [47] ICCV’ 2021 | 64.0 0.4 | 62.1 6.1 | 61.6 2.7 | 62.3 0.9 | 62.5 |

| NSA [36] ECCV’ 2022 | 66.5 1.4 | 48.8 3.5 | 55.5 3.2 | 55.9 1.1 | 56.7 |

| RIAD [48] PR’ 2020 | 38.6 0.6 | 41.6 1.3 | 46.8 0.8 | 33.0 0.6 | 40.0 |

| InTra [30] ICIAP’ 2022 | 39.8 0.8 | 46.1 0.5 | 44.7 0.3 | 46.3 1.5 | 44.2 |

| MMR (Ours) | 85.6 0.5 | 84.4 0.7 | 88.8 0.5 | 79.9 0.6 | 84.7 |

| Method | Same (PRO) | Background (PRO) | Illumination (PRO) | View (PRO) | Mean (PRO) |

|---|---|---|---|---|---|

| PatchCore [33] CVPR’ 2022 | 89.5 0.2 | 89.4 0.1 | 88.2 0.1 | 84.0 0.2 | 87.8 |

| ReverseDistillation [12] CVPR’ 2022 | 86.4 0.4 | 86.4 0.7 | 86.7 0.5 | 82.9 0.7 | 85.6 |

| DRAEM [47] ICCV’ 2021 | 71.4 4.2 | 44.3 11.6 | 67.6 2.7 | 71.1 2.3 | 63.6 |

| NSA [36] ECCV’ 2022 | 43.0 1.3 | 29.7 2.1 | 59.9 1.3 | 51.1 0.1 | 45.9 |

| RIAD [48] PR’ 2020 | 71.9 1.3 | 33.4 0.6 | 65.3 1.0 | 62.2 1.7 | 58.2 |

| InTra [30] ICIAP’ 2022 | 76.8 0.2 | 74.8 0.3 | 73.7 0.3 | 73.4 0.2 | 74.7 |

| MMR (Ours) | 89.6 0.2 | 90.1 0.2 | 90.2 0.2 | 86.3 0.3 | 89.1 |

4.2 Relations between MMR and Current Methods

Relations to the inpainting methods There are two main ways in which MMR differs from current methods based on reconstruction. First, as shown in Figure 8, MMR does not try to reconstruct all the details of the full image, but instead focuses on recovering features at different scales. Since the frozen encoder is insensitive to some changes [8], the encoded features are more robust. This allows MMR to emphasize causal relationships among different parts of normal samples. Second, MMR uses a different masked strategy compared to previous methods like RIAD and InTra, as shown in Figure 6 (c). In MMR, the masked parts are completely hidden, while in RIAD [48] (convolution in Figure 6 (c)) and InTra [30] (ViT in Figure 6 (c)), the features of the masked parts are shared with the features of the visible parts. MMR’s approach prevents information from the visible parts from leaking into the masked parts. This can be further verified by the ablation study in Section 6.

Relations to the student-teacher framework The student-teacher framework, also known as knowledge distillation [18], is an efficient method for handling the one-class anomaly detection task. Current methods, e.g., MKDAD [35] and ReverseKD [12], distilled the pre-trained features for the normal samples. MMR can also be regarded as a student-teacher network, where the frozen pre-trained encoder plays the role of the teacher and another part is a student network. The unique difference between MMR and current knowledge distillation methods is that there is a causal inference module in the knowledge distillation. This endows the model with the perception of spatial positions of different parts in images so that it can improve the generalization in the scenario under the domain shift.

Relations to masked autoencoder (MAE) [15] MAE adopted a same masked strategy as MMR. Although the masked strategy is the same, the intention of the masked strategy is entirely different. MAE used such a masked strategy, i.e., only training on a small subset of image patches, to reduces the consumption of memory so that the larger batch sizes can be allowed, while MMR uses this strategy to help the model perceive the dependence of spatial positions of different parts in images and model the causality in normal sample. More importantly, we provide a new insight into such a masked strategy, where it can prevent information from the visible parts from leaking into the masked parts (as described in Figure 6 (c)). Another difference between MMR and MAE is the reconstruction target, where MAE reconstructed the original pixel-wise input (similar to the top of Figure 8), while MMR performs a feature-wise reconstruction.

4.3 Implementation Details

The input image, augmented with random cropping, is resized to and then center croped to . The resolution of patch is (16, 16). The masking ratio is 0.4. The backbone of ViT is the vanilla ViT-B [13], where the backbone is pre-trained by masked autoencoder [15]. The simple FPN generates feature maps of 4, 8, and 16 receptive fields. We use a widely adopted WideResNet50 [46, 16] as the frozen pre-trained hierarchical encoder and then extract the multi-scale features from the layer 1, 2 and 3. We use AdamW optimizer [26] () with step-wise learning rate decay. The learning rate is 0.001. For all datasets, we set training epoch as 200 and batch size as 16. In addition, all experiments were conducted on Ubuntu 18.04.5 and a computer equipped with Xeon(R) Gold 6140R [email protected] and an NVIDIA GeForce RTX 2080 Ti with 11GB of memory.

5 Experiments

5.1 Datasets and Comparison Methods

We evaluate MMR on AeBAD dataset including AeBAD-S and AeBAD-V. In addition, we also evaluate MMR on MVTec dataset to show the ability to detect different types of anomalies. The comparison methods include the recent SOTA methods and the methods most related to our work, including PatchCore [33], ReverseDistillation [12], DRAEM [47], NSA [36], RIAD [48] and InTra [30]. For PatchCore, ReverseDistillation, DRAEM and NSA, we use the official codes, where the backbone of PatchCore and ReverseDistillation is a pre-trained WideResNet50. We tune the hyperparameters to make sure the optimal performance. For RIAD and InTra, since they do not provide the official code, we use the unofficial re-implementations of these methods 333The unofficial re-implementations of RIAD and InTra are here and here respectively..

5.2 Results

AeBAD-S: Metric: To evaluate sample-level anomaly detection, we use the area under the receiver operating characteristic (AUROC), which is a widely used metric that takes into account both false positives and false negatives. For pixel-level anomaly detection, we use the per-region-overlap (PRO) score [5] as the evaluation metric. The PRO score treats anomalies of any size equally. Unlike the common pixel-level AUROC, the PRO score decreases significantly when disconnected domains of anomalies are not detected.

The quantitative results are shown in Table 2 and 3. The qualitative results are shown in Figure 9. We can observe the following phenomena: 1.) Except for DRAEM, all methods show a significant drop in performance on the ”view” subset compared to other subsets. This is mainly because changes in view increase false positives for normal samples, as shown in Figure 5 and 9. In the third row of abnormal samples of Figure 9, MMR also produces a high score for the normal region. 2.) The methods based on the pre-trained model, such as PatchCore, ReverseDistillation and MMR, are robust to the change for background and illumination. In addition, compared to PatchCore and ReverseDistillation, our proposed method can reduce the anomaly score of the normal region as the domain shifts, as shown in the normal part of Figure 9. Note that the anomaly score of an image is the maximum value of the heatmap generated by that image. PatchCore and ReverseDistillation produce more false positive areas on the ”view” datasets, which is also the reason their AUROC performance on the ”view” dataset is poor, although their PRO performance is close to MMR’s. 3.) NSA show a poor performance for anomaly classification and localization and show a large variance compared to other methods. This is mainly because the proposed dataset is not aligned, and the augmented synthetic anomalies can significantly affect performance. When the synthetic anomalies are distributed less on the background region, the performance is good, and vice versa. 4.) For DRAEM, since it generates the synthetic anomalies distributed at the full image, it can detect some apparent anomalies (e.g., the second row of the anomaly part in Figure 5). However, when the light and background change, it regards the full blade as an anomaly (e.g., the first and fourth row of abnormal samples in Figure 5 and 9). We think that the main reason for this is that DREAM overfits the data due to its two large models, which results in a poor generalization for data with domain shift. 5.) RIAD and InTra use pixel-level reconstruction, which leads to larger reconstruction errors for complex details.

In addition, although the input of the first part of MMR is a whole image in the test stage, the output of this part is not identical with the output of the frozen pre-trained hierarchical encoder. This can be observed from Figure 5 and Figure 9, where MMR can detect some tiny defects (anomalies). This result demonstrates that the first part of MMR can avoid an “identical shortcut”.

| Method | Video 1 | Video 2 | Video 3 | Mean |

|---|---|---|---|---|

| PatchCore [33] | 71.1 0.2 | 86.0 0.4 | 55.1 0.7 | 70.7 |

| ReverseDistillation [12] | 66.0 2.1 | 84.8 1.8 | 62.1 1.1 | 71.0 |

| DRAEM [47] | 79.5 5.0 | 71.2 3.3 | 53.6 1.2 | 68.1 |

| NSA [36] | 59.4 3.5 | 72.7 1.3 | 61.9 4.0 | 64.6 |

| RIAD [48] | 78.0 1.2 | 47.1 2.9 | 43.2 1.1 | 56.1 |

| InTra [30] | 62.7 1.4 | 55.8 1.3 | 43.7 1.4 | 54.1 |

| MMR (Ours) | 75.7 0.2 | 88.3 1.4 | 70.7 0.6 | 78.2 |

AeBAD-V: Metric: We only evaluate sample-level anomaly detection by AUROC.

The quantitative results are shown in Table 4. The qualitative results are shown in Figure 10. Similar to the previous results, we observe that when the viewpoint changes, particularly for Video 2 and Video 3, PatchCore and ReverseDistillation produce more false positives, whereas DRAEM and NSA produce more false negatives. Our proposed method, MMR, is able to reduce the anomaly score of the normal region and detect multiple abnormal regions. It is worth noting that compared to AeBAD-S, the objects in AeBAD-V are more distributed in the center area. This allows NSA to locate apparent anomalies in some cases, but DRAEM still fails to predict anomalies. We believe that the main reason for this is also from the overfitting in DREAM. For the result of DRAEM on Video 1, since there is a similar view between video 1 and the training set, DRAEM is able to obtain a good result. In addition to the above results, we also present the qualitative results of the consecutive frames (videos) at https://github.com/zhangzilongc/MMR.

MVTec: Metric: We follow the common protocol. For the sample-level anomaly detection, we use AUROC. For the pixel-level anomaly detection, we use PRO and pixel-level AUROC. In addition, we follow the common setups to train and test on each product category.

| PatchCore [33] | RD [12] | DRAEM [47] | NSA [36] | RIAD [48] | InTra [30] | MMR (Ours) | |

|---|---|---|---|---|---|---|---|

| Bottle | 100 | 100 | 99.2 | 97.7 | 99.9 | 100 | 100 |

| Cable | 99.5 | 95.0 | 91.8 | 94.5 | 81.9 | 84.2 | 97.8 |

| Capsule | 98.1 | 96.3 | 98.5 | 95.2 | 88.4 | 86.5 | 96.9 |

| Carpet | 98.7 | 98.9 | 97.0 | 95.6 | 84.2 | 98.8 | 99.6 |

| Grid | 98.2 | 100 | 99.9 | 99.9 | 99.6 | 100 | 100 |

| Hazelnut | 100 | 99.9 | 100 | 94.7 | 83.3 | 95.7 | 100 |

| Leather | 100 | 100 | 100 | 99.9 | 100 | 100 | 100 |

| Metal Nut | 100 | 100 | 98.7 | 98.7 | 88.5 | 96.9 | 99.9 |

| Pill | 96.6 | 96.6 | 98.9 | 99.2 | 83.8 | 90.2 | 98.2 |

| Screw | 98.1 | 97.0 | 93.9 | 90.2 | 84.5 | 95.7 | 92.5 |

| Tile | 98.7 | 99.3 | 99.6 | 100 | 98.7 | 98.2 | 98.7 |

| Toothbrush | 100 | 99.5 | 100 | 100 | 100 | 99.7 | 100 |

| Transistor | 100 | 96.7 | 93.1 | 95.1 | 90.9 | 95.8 | 95.1 |

| Wood | 99.2 | 99.2 | 99.1 | 97.5 | 93.0 | 98.0 | 99.1 |

| Zipper | 99.4 | 98.5 | 100 | 99.8 | 98.1 | 99.4 | 97.6 |

| Mean | 99.1 | 98.5 | 98.0 | 97.2 | 91.7 | 95.9 | 98.4 |

| PatchCore [33] | RD [12] | DRAEM [47] | NSA [36] | RIAD [48] | InTra [30] | MMR (Ours) | |

|---|---|---|---|---|---|---|---|

| Bottle | 98.6/96.1 | 98.7/96.6 | 99.1/- | 98.3/- | 98.4/- | 97.1/- | 98.3/96.0 |

| Cable | 98.5/92.6 | 97.4/91.0 | 94.7/- | 96.0/- | 84.2/- | 93.2/- | 95.4/87.2 |

| Capsule | 98.9/95.5 | 98.7/95.8 | 94.3/- | 97.6/- | 92.8/- | 97.7/- | 98.0/94.5 |

| Carpet | 99.1/96.6 | 98.9/97.0 | 95.5/- | 95.5/- | 96.3/- | 99.2/- | 98.8/96.6 |

| Grid | 98.7/95.9 | 99.3/97.6 | 99.7/- | 99.2/- | 98.8/- | 99.4/- | 99.0/96.5 |

| Hazelnut | 98.7/93.9 | 98.9/95.5 | 99.7/- | 97.6/- | 96.1/- | 98.3/- | 98.5/91.2 |

| Leather | 99.3/98.9 | 99.4/99.1 | 98.6/- | 99.5/- | 99.4/- | 99.5/- | 99.2/98.6 |

| Metal Nut | 98.4/91.3 | 97.3/92.3 | 99.5/- | 98.4/- | 92.5/- | 93.3/- | 95.9/88.6 |

| Pill | 97.6/94.1 | 98.2/96.4 | 97.6/- | 98.5/- | 95.7/- | 98.3/- | 98.4/96.1 |

| Screw | 99.4/97.9 | 99.6/98.2 | 97.6/- | 96.5/- | 89.1/- | 99.5/- | 99.5/97.6 |

| Tile | 95.9/87.4 | 95.6/90.6 | 99.2/- | 99.3/- | 89.1/- | 94.4/- | 95.6/90.2 |

| Toothbrush | 98.7/91.4 | 99.1/94.5 | 98.1/- | 94.9/- | 98.9/- | 99.0/- | 98.4/93.0 |

| Transistor | 96.4/83.5 | 92.5/78.0 | 90.9/- | 88.0/- | 87.7/- | 96.1/- | 90.2/79.1 |

| Wood | 95.1/89.6 | 95.3/90.9 | 96.4/- | 90.7/- | 85.8/- | 90.5/- | 94.8/88.9 |

| Zipper | 98.9/97.1 | 98.2/95.4 | 98.8/- | 94.2/- | 97.8/- | 99.2/- | 98.0/95.0 |

| Mean | 98.1/93.5 | 97.8/93.9 | 97.3/- | 96.3/- | 94.2/- | 97.0/- | 97.2/92.6 |

The quantitative results are shown in Table 5 and 6. We can observe that MMR also obtain the competitive results with SOTA methods. It demonstrates the detection ability of MMR for the anomalies of different types. It is worth noting that compared with the superior performance in AeBAD dataset, the performance of MMR in MVTec dataset is suboptimal. The main reason is that when the distribution of the test sample changes, MMR can better judge normal samples and abnormal samples, while other methods will be affected by domain shift. However, for the MVTec dataset, the distribution of test samples is consistent with the training set, so the advantages of MMR cannot be highlighted. In addition, we observe that compared with PatchCore, the acquired abnormal masks of MMR are coarser, which is also the reason for the suboptimal performance in the pixel-level performance.

6 Ablation Study

In this part, we investigate some factors that have greater impacts on MMR. We choose AeBAD-S dataset for ablation study. If there is no additional statement, the setups and hyper-parameters of the experiments remain the same as in Section 4.3.

6.1 Form of Input

In MMR, we take non-masked patches as input. In this part, we select the entire image (all patches) as input while maintaining a constant masking ratio. We regard pixels as a unit and then randomly mask times the area of the entire image based on this unit. We choose . An example of masked images of different is shown in Figure 11. Note that when , the information included in the non-masked input is the same as the original input of MMR. The only difference is that the information in the non-masked part is shared, which is shown in Figure 6 (c). The results of different inputs are shown in Figure 12. We can observe that when the masking ratio is constant, the input with different masking forms can have a significant impact on performance. When , the performance is poor. The reason is that although the information of the masked patches is unknown, the adjacent region will leak information due to the small unit. This makes the model lose the ability to infer the information in the masked patches from the global context. When , the performance improves due to the larger unit. However, since this still cannot prevent the leakage of information, the performance at is lower than the proposed MMR at . In addition, we find an interesting phenomenon: as the smallest unit continues to increase, performance will continue to improve. We believe that the main reason is that when the smallest unit becomes larger, the association between image blocks will become weaker and weaker, even if the information leaks, it will not affect the overall reconstruction. However, the details of some reconstructions will become worse, which leads to a large increase in its mean PRO and basically no growth in mean AUROC. This is similar to 0.9 mask ratio in Figure 13.

6.2 Masking Ratio

We conduct experiments with different masking ratios in order to explore how they affect the model’s ability to perceive causal relationships. Figure 13 displays the results of this analysis for masking ratios ranging from 0 to 0.9. Our findings suggest that the model’s performance exhibits a pattern of low scores at both ends of the masking ratio, with the highest scores achieved in the middle. When the masking ratio is small, the model is able to make accurate inferences about the masked patches based on the surrounding regions that contain a lot of relevant information. However, as the masking ratio is high, the model becomes less able to infer the whole image based on just a few visible patches, resulting in lower performance scores. Note that different from the recent masked autoencoder [15], where the optimal masking ratio is high and the target is to perceive the semantic, the model for IAD needs to discriminate some tiny defects, and thus requires a smaller optimal masking ratio to reconstruct the details accurately.

| mean AUROC (%) | mean PRO (%) | |

|---|---|---|

| Train from scratch | 75.1 | 88.5 |

| PIMAE | 84.7 | 89.1 |

6.3 Tpye of Pre-training

In our implementation, the ViT backbone is pre-trained on ImageNet by a masked autoencoder [15]. In this part, we show the result that ViT is trained from scratch in Table 7. Intuitively, training ViT from scratch using normal samples should result in a model that is better able to identify unseen anomalies. However, we find that this is not the case. The main reason for this is that training ViT on a small dataset from scratch can lead to overfitting, which in turn reduces the generalization ability of the model. In addition, another advantage of choosing ViT pre-trained by a masked autoencoder is that the pre-trained upstream tasks are roughly the same as our proposed downstream task, both of which aim to reconstruct the original input. This similarity allows the model to transfer its learned representations more effectively, resulting in better performance on our task.

| mean AUROC (%) | mean PRO (%) | |

|---|---|---|

| Random Initialized ResNet 18 | 51.8 | 52.8 |

| Pre-trained ResNet 18 | 82.4 | 90.7 |

| WideResNet 50 | 84.7 | 89.1 |

6.4 Frozen Pre-trained Hierarchical Encoder

In this part, we choose different frozen pre-trained hierarchical encoders to train MMR. The results are shown in Table 8. We can observe that MMR can also generalize well to ResNet 18.

| layer1 | layer2 | layer3 | Mean AUROC (%) / Mean PRO (%) | |

|---|---|---|---|---|

| 1 | 66.5 / 85.6 | |||

| 2 | 76.9 / 86.6 | |||

| 3 | 76.5 / 82.4 | |||

| 4 | 74.7 / 86.9 | |||

| 5 | 85.0 / 88.1 | |||

| 6 | 84.7 / 89.1 |

6.5 Multi-scale Features

In this part, we report the effect of features at different scales on performance. The results are shown in Table 9. We can observe that the features at the intermediate scale are more conducive to detecting defects, while the features at the other scales can be a complement to improve performance.

7 Limitations and Future Works

The main limitation of MMR is that it needs two base models (a frozen pre-trained encoder and a specific trained model) to infer the test image, which consumes a lot of memory. This limits its usage in practical application. In addition, the proposed causal transformer-based module is memory-intensive, which also hinders practical applications. We calculate the throughput of some SOTA models and MMR, where the experimental condition is described in Section 4.3 and the input image is . The results are listed in Table 10. We can observe that since there are two base models in MMR, its throughput is inferior to ReverseDistillation. In future work, we will explore the causal module based on the convolution module and integrate it with the pre-trained model in the single model.

Furthermore, the proposed dataset only focuses on a single object (blade), which cannot fully show the capability of anomaly detection of the model. We will explore more practical scenarios of anomaly detection, which will include more defective types.

8 Conclusion

The aim of this paper is to introduce AeBAD, a new dataset that addresses the issue of domain shift, which has not been fully considered in existing datasets. Through our analysis on this dataset, we have identified deficiencies in current SOTA methods when the domain of normal samples in the test set shifts. We have also investigated the underlying causes of these deficiencies and proposed a new method called MMR, which enhances the model’s ability to infer causality among patches in normal samples and improves performance on different domain shifts. Moreover, MMR is competitive in detecting various types of anomalies. Our work presents a new direction for the anomaly detection community, one that is more closely aligned with real-world industrial scenarios.

Acknowledgments

This work is supported by National Natural Science Foundation of China under Grant 52175114&92060302, Science Center for Gas Turbine Project (P2022-DC-I-003-001) and Special support plan for high level talents in Shaanxi Province..

References

- [1] Edward H Adelson, Charles H Anderson, James R Bergen, Peter J Burt, and Joan M Ogden. Pyramid methods in image processing. RCA engineer, 29(6):33–41, 1984.

- [2] Jimmy Lei Ba, Jamie Ryan Kiros, and Geoffrey E Hinton. Layer normalization. arXiv preprint arXiv:1607.06450, 2016.

- [3] Paul Bergmann, Kilian Batzner, Michael Fauser, David Sattlegger, and Carsten Steger. Beyond dents and scratches: Logical constraints in unsupervised anomaly detection and localization. International Journal of Computer Vision, 130(4):947–969, 2022.

- [4] Paul Bergmann, Michael Fauser, David Sattlegger, and Carsten Steger. Mvtec ad–a comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9592–9600, 2019.

- [5] Paul Bergmann, Michael Fauser, David Sattlegger, and Carsten Steger. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4183–4192, 2020.

- [6] Paul Bergmann, Xin Jin, David Sattlegger, and Carsten Steger. The mvtec 3d-ad dataset for unsupervised 3d anomaly detection and localization. arXiv preprint arXiv:2112.09045, 2021.

- [7] Paul Bergmann, Sindy Löwe, Michael Fauser, David Sattlegger, and Carsten Steger. Improving unsupervised defect segmentation by applying structural similarity to autoencoders. arXiv preprint arXiv:1807.02011, 2018.

- [8] Sangnie Bhardwaj, Willie McClinton, Tongzhou Wang, Guillaume Lajoie, Chen Sun, Phillip Isola, and Dilip Krishnan. Steerable equivariant representation learning. arXiv preprint arXiv:2302.11349, 2023.

- [9] Yajie Cui, Zhaoxiang Liu, and Shiguo Lian. A survey on unsupervised industrial anomaly detection algorithms. arXiv preprint arXiv:2204.11161, 2022.

- [10] Thomas Defard, Aleksandr Setkov, Angelique Loesch, and Romaric Audigier. Padim: a patch distribution modeling framework for anomaly detection and localization. arXiv preprint arXiv:2011.08785, 2020.

- [11] David Dehaene, Oriel Frigo, Sébastien Combrexelle, and Pierre Eline. Iterative energy-based projection on a normal data manifold for anomaly localization. arXiv preprint arXiv:2002.03734, 2020.

- [12] Hanqiu Deng and Xingyu Li. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9737–9746, 2022.

- [13] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations, 2020.

- [14] Denis Gudovskiy, Shun Ishizaka, and Kazuki Kozuka. Cflow-ad: Real-time unsupervised anomaly detection with localization via conditional normalizing flows. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 98–107, 2022.

- [15] Kaiming He, Xinlei Chen, Saining Xie, Yanghao Li, Piotr Dollár, and Ross Girshick. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16000–16009, 2022.

- [16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [17] Dan Hendrycks and Kevin Gimpel. Gaussian error linear units (gelus). arXiv preprint arXiv:1606.08415, 2016.

- [18] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531, 2015.

- [19] Jian Huang, Junzhe Wang, Yihua Tan, Dongrui Wu, and Yu Cao. An automatic analog instrument reading system using computer vision and inspection robot. IEEE Transactions on Instrumentation and Measurement, 69(9):6322–6335, 2020.

- [20] Xi Jiang, Guoyang Xie, Jinbao Wang, Yong Liu, Chengjie Wang, Feng Zheng, and Yaochu Jin. A survey of visual sensory anomaly detection. arXiv preprint arXiv:2202.07006, 2022.

- [21] Chun-Liang Li, Kihyuk Sohn, Jinsung Yoon, and Tomas Pfister. Cutpaste: Self-supervised learning for anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9664–9674, 2021.

- [22] Dawei Li, Yida Li, Qian Xie, Yuxiang Wu, Zhenghao Yu, and Jun Wang. Tiny defect detection in high-resolution aero-engine blade images via a coarse-to-fine framework. IEEE Transactions on Instrumentation and Measurement, 70:1–12, 2021.

- [23] Yanghao Li, Hanzi Mao, Ross Girshick, and Kaiming He. Exploring plain vision transformer backbones for object detection. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part IX, pages 280–296. Springer, 2022.

- [24] Tsung-Yi Lin, Piotr Dollár, Ross Girshick, Kaiming He, Bharath Hariharan, and Serge Belongie. Feature pyramid networks for object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2117–2125, 2017.

- [25] Ye Liu, Jun Chen, and Jia-ao Hou. Learning position information from attention: End-to-end weakly supervised crack segmentation with gans. Computers in Industry, 149:103921, 2023.

- [26] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101, 2017.

- [27] Takashi Matsubara, Kazuki Sato, Kenta Hama, Ryosuke Tachibana, and Kuniaki Uehara. Deep generative model using unregularized score for anomaly detection with heterogeneous complexity. IEEE Transactions on Cybernetics, 52(6):5161–5173, 2020.

- [28] Subhrajit Nag, Dhruv Makwana, Sparsh Mittal, C Krishna Mohan, et al. Wafersegclassnet-a light-weight network for classification and segmentation of semiconductor wafer defects. Computers in Industry, 142:103720, 2022.

- [29] Christopher Naverschnigg, Ernst Csencsics, and Georg Schitter. Flexible robot-based in-line measurement system for high-precision optical surface inspection. IEEE Transactions on Instrumentation and Measurement, 71:1–9, 2022.

- [30] Jonathan Pirnay and Keng Chai. Inpainting transformer for anomaly detection. In Image Analysis and Processing–ICIAP 2022: 21st International Conference, Lecce, Italy, May 23–27, 2022, Proceedings, Part II, pages 394–406. Springer, 2022.

- [31] Domen Rački, Dejan Tomaževič, and Danijel Skočaj. Detection of surface defects on pharmaceutical solid oral dosage forms with convolutional neural networks. Neural Computing and Applications, 34(1):631–650, 2022.

- [32] Miftahur Rahman, Haochen Liu, Mohammed Masri, Isidro Durazo-Cardenas, and Andrew Starr. A railway track reconstruction method using robotic vision on a mobile manipulator: A proposed strategy. Computers in Industry, 148:103900, 2023.

- [33] Karsten Roth, Latha Pemula, Joaquin Zepeda, Bernhard Schölkopf, Thomas Brox, and Peter Gehler. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14318–14328, 2022.

- [34] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. Imagenet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252, 2015.

- [35] Mohammadreza Salehi, Niousha Sadjadi, Soroosh Baselizadeh, Mohammad H Rohban, and Hamid R Rabiee. Multiresolution knowledge distillation for anomaly detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 14902–14912, 2021.

- [36] Hannah M Schlüter, Jeremy Tan, Benjamin Hou, and Bernhard Kainz. Natural synthetic anomalies for self-supervised anomaly detection and localization. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXI, pages 474–489. Springer, 2022.

- [37] Xiangwen Shi, Shaobing Zhang, Miao Cheng, Lian He, Xianghong Tang, and Zhe Cui. Few-shot semantic segmentation for industrial defect recognition. Computers in Industry, 148:103901, 2023.

- [38] Domen Tabernik, Samo Šela, Jure Skvarč, and Danijel Skočaj. Segmentation-based deep-learning approach for surface-defect detection. Journal of Intelligent Manufacturing, 31(3):759–776, 2020.

- [39] Xian Tao, Xinyi Gong, Xin Zhang, Shaohua Yan, and Chandranath Adak. Deep learning for unsupervised anomaly localization in industrial images: A survey. IEEE Transactions on Instrumentation and Measurement, 2022.

- [40] Matthias Wieler and Tobias Hahn. Weakly supervised learning for industrial optical inspection. In DAGM symposium in, 2007.

- [41] Jiang Xi, Jianlin Liu, Jinbao Wang, Qiang Nie, WU Kai, Yong Liu, Chengjie Wang, and Feng Zheng. Softpatch: Unsupervised anomaly detection with noisy data. In Advances in Neural Information Processing Systems.

- [42] Jie Yang, Yong Shi, and Zhiquan Qi. Dfr: Deep feature reconstruction for unsupervised anomaly segmentation. arXiv preprint arXiv:2012.07122, 2020.

- [43] Pingping Yang, Wenhui Yue, Jian Li, Guangfu Bin, and Chao Li. Review of damage mechanism and protection of aero-engine blades based on impact properties. Engineering Failure Analysis, page 106570, 2022.

- [44] Zhiyuan You, Lei Cui, Yujun Shen, Kai Yang, Xin Lu, Yu Zheng, and Xinyi Le. A unified model for multi-class anomaly detection. arXiv preprint arXiv:2206.03687, 2022.

- [45] Jiawei Yu, Ye Zheng, Xiang Wang, Wei Li, Yushuang Wu, Rui Zhao, and Liwei Wu. Fastflow: Unsupervised anomaly detection and localization via 2d normalizing flows. arXiv preprint arXiv:2111.07677, 2021.

- [46] Sergey Zagoruyko and Nikos Komodakis. Wide residual networks. arXiv preprint arXiv:1605.07146, 2016.

- [47] Vitjan Zavrtanik, Matej Kristan, and Danijel Skočaj. Draem-a discriminatively trained reconstruction embedding for surface anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8330–8339, 2021.

- [48] Vitjan Zavrtanik, Matej Kristan, and Danijel Skočaj. Reconstruction by inpainting for visual anomaly detection. Pattern Recognition, 112:107706, 2021.

- [49] Alexander Zeiser, Bekir Özcan, Bas van Stein, and Thomas Bäck. Evaluation of deep unsupervised anomaly detection methods with a data-centric approach for on-line inspection. Computers in Industry, 146:103852, 2023.

- [50] Xingxuan Zhang, Linjun Zhou, Renzhe Xu, Peng Cui, Zheyan Shen, and Haoxin Liu. Nico++: Towards better benchmarking for domain generalization. arXiv preprint arXiv:2204.08040, 2022.

- [51] Bingchen Zhao, Shaozuo Yu, Wufei Ma, Mingxin Yu, Shenxiao Mei, Angtian Wang, Ju He, Alan Yuille, and Adam Kortylewski. Ood-cv: A benchmark for robustness to out-of-distribution shifts of individual nuisances in natural images. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part VIII, pages 163–180. Springer, 2022.

- [52] Yang Zou, Jongheon Jeong, Latha Pemula, Dongqing Zhang, and Onkar Dabeer. Spot-the-difference self-supervised pre-training for anomaly detection and segmentation. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXX, pages 392–408. Springer, 2022.