Inducing Equilibria via Incentives:

Simultaneous Design-and-Play Ensures Global Convergence

Abstract

To regulate a social system comprised of self-interested agents, economic incentives are often required to induce a desirable outcome. This incentive design problem naturally possesses a bilevel structure, in which a designer modifies the rewards of the agents with incentives while anticipating the response of the agents, who play a non-cooperative game that converges to an equilibrium. The existing bilevel optimization algorithms raise a dilemma when applied to this problem: anticipating how incentives affect the agents at equilibrium requires solving the equilibrium problem repeatedly, which is computationally inefficient; bypassing the time-consuming step of equilibrium-finding can reduce the computational cost, but may lead the designer to a sub-optimal solution. To address such a dilemma, we propose a method that tackles the designer’s and agents’ problems simultaneously in a single loop. Specifically, at each iteration, both the designer and the agents only move one step. Nevertheless, we allow the designer to gradually learn the overall influence of the incentives on the agents, which guarantees optimality after convergence. The convergence rate of the proposed scheme is also established for a broad class of games.

1 Introduction

A common thread in human history is how to “properly” regulate a social system comprised of self-interested individuals. In a laissez-faire economy, for example, the competitive market itself is the primary regulatory mechanism [47, 16]. However, a laissez-faire economy may falter due to the existence of significant “externalities” [8, 18], which may arise wherever the self-interested agents do not bear the external cost of their behaviors in the entirety. The right response, many argue, is to introduce corrective policies in the form of economic incentives (e.g., tolls, taxes, and subsidies) [32]. By modifying the rewards of the agents, these incentives can encourage (discourage) the agents to engage in activities that create positive (negative) side effects for the society, and thus guide the self-interests of the agents towards a socially desirable end. For example, carbon taxes can be levied on carbon emissions to protect the environment during the production of goods and services [35]. Surge pricing has been widely used to boost supply and dampen demand in volatile ride-hail markets [37]. Lately, subsidies and penalties were both introduced to overcome vaccine hesitancy in the world’s hard-fought battle against the COVID-19 pandemic.

The goal of this paper is to develop a provably efficient method for guiding the agents in a non-cooperative game towards a socially desirable outcome — e.g., the one that maximizes the social welfare — by modifying their payoffs with incentives. The resulting problem may be naturally interpreted as a Stackelberg game [50] in which the “incentive designer” is the leader while the agents being regulated are the followers. Hence, it naturally possesses a bilevel structure [3]: at the upper level, the “designer” optimizes the incentives by anticipating and regulating the best response of the agents, who play a non-cooperative game at the lower level. As the lower-level agents pursue their self-interests freely, their best response can be predicted by the Nash equilibrium [39], which dictates no agent can do better by unilaterally changing their strategy. Accordingly, the incentive design problem is a mathematical program with equilibrium constraints (MPEC) [30].

In the optimization literature, MPECs are well-known for their intractability [10]. Specifically, even getting a first-order derivative through their bilevel structure is a challenge. In the incentive design problem, for example, to calculate the gradient of the designer’s objective at equilibrium, which provides a principled direction for the designer to update the incentives, one must anticipate how the equilibrium is affected by the changes [17]. This is usually achieved by performing a sensitivity analysis, which in turn requires differentiation through the lower-level equilibrium problem, either implicitly or explicitly [25]. No matter how the sensitivity analysis is carried out, the equilibrium problem must be solved before updating the incentives. The resulting algorithm thus admits a double loop structure: in the outer loop, the designer iteratively moves along the gradient; but to find the gradient, it must allow the lower level game dynamics to run its course to arrive at the equilibrium given the current incentives.

Because of the inherent inefficiency of the double-loop structure, many heuristics methods have also been developed for bilevel programs in machine learning [27, 29, 14]. When applied to the incentive design problem, these methods assume that the designer does not solve the equilibrium exactly to evaluate the gradient. Instead, at each iteration, the game is allowed to run just a few rounds, enough for the designer to obtain a reasonable approximation of the gradient. Although such a method promises to reduce the computational cost significantly at each iteration, it may never converge to the same optimal solution obtained without the approximation.

Contribution. In a nutshell, correctly anticipating how incentives affect the agents at equilibrium requires solving the equilibrium problem repeatedly, which is computationally inefficient. On the other hand, simply bypassing the time-consuming step of equilibrium-finding may lead the designer to a sub-optimal solution. This dilemma prompts the following question that motivates this study: can we obtain the optimal solution to an incentive design problem without repeatedly solving the equilibrium problem?

In this paper, we propose an efficient principled method that tackles the designer’s problem and agents’ problem simultaneously in a single loop. At the lower level, we use the mirror descent method [40] to model the process through which the agents move towards equilibrium. At the upper level, we use the gradient descent method to update the incentives towards optimality. At each iteration, both the designer and the agents only move one step based on the first-order information. However, as discussed before, the upper gradient relies on the corresponding lower equilibrium, which is not available in the single-loop update. Hence, we propose to use the implicit differentiation formula—with equilibrium strategy replaced by the current strategy—to estimate the upper gradient, which might be biased at the beginning. Nevertheless, we prove that if we improve the lower-level solution with larger step sizes, the upper-level and lower-level problems may converge simultaneously at a fast rate. The proposed scheme hence guarantees optimality because it can anticipate the overall influence of the incentives on the agents eventually after convergence.

Organization. In Section 2, we discuss related work. In Section 3, we provide the mathematical formulation of the incentive design problem. In Section 4, we design algorithms for solving the problem. In Section 5, we establish conditions under which the proposed scheme globally converges to the optimal solution and analyze the convergence rate. The convergence analysis is restricted to games with a unique equilibrium. In Section 6, we discuss how to apply our algorithms to games with multiple equilibria. Eventually, we conduct experiments to test our algorithms in Section 7.

Notation. We denote as the inner product in vector spaces. For a vector form , we denote . For a finite set , we denote . For any vector norm , we denote as its dual norm. We refer readers to Appendix A for a collection of frequently used problem-specific notations.

2 Related work

The incentive design problem studied in this paper is a special case of mathematical programs with equilibrium constraints (MPEC) [19], a class of optimization problems constrained by equilibrium conditions. MPEC is closely related to bilevel programs [10], which bind two mathematical programs together by treating one program as part of the constraints for the other.

Bilevel Programming. In the optimization literature, bilevel programming was first introduced to tackle resource allocation problems [7] and has since found applications in such diverse topics as revenue management, network design, traffic control, and energy systems. In the past decade, researchers have discovered numerous applications of bilevel programming in machine learning, including meta-learning (ML) [14], adversarial learning [22], hyperparameter optimization, [31] and neural architecture search [27]. These newly found bilevel programs in ML are often solved by gradient descent methods, which require differentiating through the (usually unconstrained) lower-level optimization problem [28]. The differentiation can be carried out either implicitly on the optimality conditions as in the conventional sensitivity analysis [see e.g., 2, 43, 4], or explicitly by unrolling the numerical procedure used to solve the lower-level problem [see e.g., 31, 15]. In the explicit approach, one may “partially” unroll the solution procedure (i.e., stop after just a few rounds, or even only one round) to reduce the computational cost. Although this popular heuristic has delivered satisfactory performance on many practical tasks [29, 36, 14, 27], it cannot guarantee optimality for bilevel programs under the general setting, as it cannot derive the accurate upper-level gradient at each iteration [53].

MPEC. Unlike bilevel programs, MPEC is relatively under-explored in the ML literature so far. Recently, Li et al. [25] extended the explicit differentiation method for bilevel programs to MPECs. Their algorithm unrolls an iterative projection algorithm for solving the lower-level problem, which is formulated as a variational inequality (VI) problem. Leveraging the recent advance in differentiable programming [2], they embedded each projection iteration as a differentiable layer in a computational graph, and accordingly, transform the explicit differentiation as standard backpropagation through the graph. The algorithm proposed by Li et al. [26] has a similar overall structure, but theirs casts the lower-level solution process as the imitative logit dynamics [6] drawn from the evolutionary game theory, which can be more efficiently unrolled. Although backpropagation is efficient, constructing and storing such a graph — with potentially a large number of projection layers needed to find a good solution to the lower-level problem — is still demanding. To reduce this burden, partially unrolling the iterative projection algorithm is a solution. Yet, it still cannot guarantee optimality for MPECs due to the same reason as for bilevel programs.

The simultaneous design-and-play approach is proposed to address this dilemma. Our approach follows the algorithm of Hong et al. [21] and Chen et al. [9], which solves bilevel programs via single-loop update. Importantly, they solve both the upper- and the lower-level problem using a gradient descent algorithm and establish the relationship between the convergence rate of the single-loop algorithm and the step size used in gradient descent. However, their algorithms are limited to the cases where the lower-level optimization problem is unconstrained. Our work extends these single-loop algorithms to MPECs that have an equilibrium problem at the lower level. We choose mirror descent as the solution method to the lower-level problem because of its broad applicability to optimization problems with constraints [40] and generality in the behavioral interpretation of games [34, 23]. We show that the convergence of the proposed simultaneous design-and-play approach relies on the setting of the step size for both the upper- and lower-level updates, a finding that echos the key result in [21]. We first give the convergence rate under mirror descent and the unconstrained assumption and then extend the result to the constrained case. For the latter, we show that convergence cannot be guaranteed if the lower-level solution gets too close to the boundary early in the simultaneous solution process. To avoid this trap, the standard mirror descent method is revised to carefully steer the lower-level solution away from the boundary.

3 Problem Formulation

We study incentive design in both atomic games [39] and nonatomic games [45], classified depending on whether the set of agents is endowed with an atomic or a nonatomic measure. In social systems, both types of games can be useful, although the application context varies. Atomic games are typically employed when each agent has a non-trivial influence on the rewards of other agents. In a nonatomic game, on the contrary, a single agent’s influence is negligible and the reward could only be affected by the collective behavior of agents.

Atomic Game. Consider a game played by a finite set of agents , where each agent selects a strategy to maximize the reward received, which is determined by a continuously differentiable function . Formally, a joint strategy is a Nash equilibrium if

Suppose that for all , the strategy set is closed and convex, and the reward function is convex in , then is a Nash equilibrium if and only if there exists such that [46]

| (3.1) |

Example 3.1 (Oligopoly model).

In an oligopoly model, there is finite set of firms, each of which supplies the market with a quantity () of goods. Under this setting, we have . The good is then priced as , where and it the total output. The profit and the marginal profit of the firm are then given by

respectively, where is the constant marginal cost111Throughout this paper, we use the term “reward” to describe the scenario where the agents aim to maximize , and use “cost” when the agents aim to do the opposite. for firm .

Nonatomic Game. Consider a game played by a continuous set of agents, which can be divided into a finite set of classes . We assume that each represents a class of infinitesimal and homogeneous agents sharing the finite strategy set with . The mass distribution for the class is defined as a vector that gives the proportion of agents using each strategy. Let the cost of an agent in class to select a strategy given be . Formally, a joint mass distribution is a Nash equilibrium, also known as a Wardrop equilibrium [51], if for all , there exists such that

The following result extends the VI formulation to Nash equilibrium in nonatomic game: denote , then is a Nash equilibrium if and only if [11]

| (3.2) |

Example 3.2 (Routing game).

Consider a set of agents traveling from source nodes to sink nodes in a directed graph with nodes and edges . Denote as the set of source-sink pairs, as the set of paths connecting and as the set of all edges on the path . Suppose that each source-sink pair is associated with nonatomic agents aiming to choose a route from to minimize the total cost incurred. Let be the proportion of travelers using the path , be the number of travelers using the edge and be the cost for using edge . Then we have where equals 1 if and 0 otherwise. The total cost for a traveler selecting a path will then be .

Incentive Design. Despite the difference, we see that an equilibrium of both atomic and nonatomic games can be formulated as a solution to a corresponding VI problem in the following form

| (3.3) |

where and denote different terms in the two types of games. Suppose that there exists an incentive designer aiming to induce a desired equilibrium. To this end, the designer can add incentives , which is assumed to enter the reward/cost functions and thus leads to a parameterized . We assume that the designer’s objective is determined by a function . Denote as the solution set to (3.3). We then obtain the uniform formulation of the incentive design problem for both atomic games and nonatomic games

| (3.4) |

If the equilibrium problem admits multiple solutions, the agents may converge to different ones and it would be difficult to determine which one can better predict the behaviors of the agents without additional information. In this paper, we first consider the case where the game admits a unique equilibrium. Sufficient conditions under which the game admits a unique equilibrium will also be provided later. We would consider the non-unique case later and show that our algorithms can still become applicable by adding an appropriate regularizer in the cost function.

Stochastic Environment. In the aforementioned settings, is a deterministic function. Although most MPEC algorithms in the optimization literature follow this deterministic setting, in this paper, we hope our algorithm can handle more realistic scenarios. Specifically, in the real world, the environment could be stochastic if some environment parameters that fluctuate over days. In a traffic system, for example, both worse weather and special events may affect the road condition, hence the travel time experienced by the drivers. We expect our algorithm can still work in the face of such stochasticity. To this end, we assume that represents the expected value of the cost function. On each day, however, the agents can only receive a noised feedback as an estimation. In the next section, we develop algorithms based on such noisy feedback.

4 Algorithm

We propose to update and simultaneously to improve the computational efficiency. The game dynamics at the lower level is modeled using the mirror descent method. Specifically, at the stage , given and , the agent first receives as the feedback. After receiving the feedback, the agents update their strategies via

| (4.1) |

where is the Bregman divergence induced by a strongly convex function . The accurate value of , the gradient of its objective function, equals

which requires the exact lower-level equilibrium . However, at the stage , we only have the current strategy . Therefore, we also have to establish an estimator of and using , the form of which will be specified later.

Remark 4.1.

The standard gradient descent method is double-loop because at each it involves an inner loop for solving the exact value of and then calculating the exact gradient.

4.1 Unconstrained Game

We first consider unconstrained games with , for all . We select as smooth function, i.e., there exists a constant such that for all and ,

| (4.2) |

Example of satisfying this assumption include (but is not limited to) , where is a positive definite matrix. It can be directly checked that we can set , where is the largest singular value of . In this case, the corresponding Bregman divergence becomes , which is known as the squared Mahalanobis distance. Before laying out the algorithm, we first give the following lemma characterizing .

Lemma 4.2.

When and is non-singular, it holds that

Proof.

See Appendix B.2 for detailed proof. ∎

For any given and , we define

| (4.3) |

Although we cannot obtain the exact value of , we may use as a surrogate and update based on instead. Now we are ready to present the following bilevel incentive design algorithm for unconstrained games (see Algorithm 1).

| (4.4) |

In Algorithm 1, if and converge to fixed points and , respectively, then is expected to be satisfied. Thus, we would also have Thus, the optimality of can be then guaranteed. It implies that the algorithm would find the optimal solution if it converges. Instead, the difficult part is how to design appropriate step sizes that ensure convergence. In this paper, we provide such conditions in Section 5.1.

4.2 Simplex-Constrained Game

We then consider the case where for all , is constrained within the probability simplex

where is the vector of all ones. Here we remark that any classic game-theoretic models are simplex-constrained. In fact, as long as the agent faces a finite number of choices and adopts a mixed strategy, its decision space would be a probability simplex [39]. In addition, some other types of decisions may also be constrained by a simplex. For example, financial investment concerns how to split the money on different assets. In such a scenario, the budget constraint can also be represented by a probability simplex.

In such a case, we naturally consider , which is the Shannon entropy. Such a choice gives the Bregman divergence , which is known as the KL divergence. In this case, we still first need to characterize , which also has an analytic form. Specifically, if we define a function that satisfies

then for any , satisfies [12]. Implicitly differentiating through this fixed point equation then yields . Then, similar to (4.3), we may use

| (4.5) |

to approximate the actual gradient and then update based on instead.

Remark 4.3.

The mapping has an analytic expression, which reads

Hence, both and can also be calculated analytically.

In addition to a different gradient estimate, we also modify Algorithm 1 to keep the iterations from hitting the boundary at the early stage. The modification involves an additional step that mixes the strategy with a uniform strategy , i.e., imposing an additional step

upon finishing the update (4.4), where is a the mixing parameter, decreasing to 0 when . In the following, we give the formal presentation of the modified bilevel incentive design algorithm for simplex-constrained games (see Algorithm 2).

| (4.6) |

Similar to Algorithm 1, at the core of the convergence of Algorithm 2 is still the step size. This case is even more complicated, as we need to design , , and at the same time. In this paper, a provably convergent scheme is provided in Section 5.2.

Before closing this section, we remark that the algorithm can be easily adapted to other types of constraints by using another to model the game dynamics. Particularly, the projected gradient descent dynamics has very broad applicability. In this case, the algorithm for calculating and is given by, for example, Amos and Kolter [2]. The additional step (4.6) then becomes unnecessary as it is dedicated to simplex constraints.

5 Convergence Analysis

In this section, we study the convergence of the proposed algorithms. For simplicity, define We make the following assumptions.

Assumption 5.1.

The lower-level problem in (3.4) satisfies the following conditions.

-

•

The strategy set of agent is a nonempty, compact, and convex subset of .

-

•

For each , the gradient is -Lipschitz continuous with respect to , i.e., for all and , .

-

•

There exist constants such that for all and , , and .

-

•

For all , the equilibrium of the game is strongly stable with respect to , i.e., for all , .

Assumption 5.2.

The upper-level problem in (3.4) satisfies the following properties.

-

•

The set is compact and convex. The function is -strongly convex and has -norm uniformly bounded by .

-

•

The extended gradient is -Lipschitz continuous with respect to , i.e., for all , .

Assumption 5.3.

Define the filtration by , , , and We impose the following assumptions.

-

•

The feedback is an unbiased estimate, i.e., for all , we have .

-

•

The feedback has bounded mean squared estimation errors, i.e., there exists such that for all .

Below we discuss when the proposed assumptions hold, and if they are violated, how would our algorithm works. Assumption 5.1 includes the condition that is strongly stable. In this case, it is the unique Nash equilibrium of the game [34]. It is also a common assumption in the analysis of the mirror descent dynamics itself [13]. We provide sufficient conditions for checking strong stability in Appendix B.1. We refer the readers to Section 6 for an explanation of how to extend our algorithm when this assumption is violated. Assumption 5.2 includes the convexity of the upper-level problem, which is usually a necessary condition to ensure global convergence. Yet, without convexity, our algorithm can still converge to a local minimum. Assumption 5.3 becomes unnecessary if we simply assume that the environment is deterministic. In this case, the accurate value of is available. Yet, if the noises are added to the feedback, assuming that the noisy feedback is unbiased and bounded is still reasonable.

5.1 Unconstrained Game

In this part, we establish the convergence guarantee of Algorithm 1 for unconstrained games. We define the optimality gap and the equilibrium gap as

We track such two gaps as the convergence criteria in the subsequent results.

Theorem 5.4.

Proof.

See Appendix C for detailed proof and a detailed expression of convergence rates. ∎

5.2 Simplex-Constrained Game

In this part, we establish the convergence guarantee of Algorithm 2 for simplex constrained games. We still define optimality gap as Yet, corresponding to (4.6), we track as a measure of convergence for the strategies of the agents, which is defined as

where We are now ready to give the convergence guarantee of Algorithm 2.

Theorem 5.5.

Proof.

See Appendix D for detailed proof and a detailed form of the convergence rates. ∎

6 Extensions to Games with Multiple Equilibria

We then briefly discuss how to apply our algorithms when the lower-level game has multiple equilibria.

Case I: If the function is strongly monotone in the neighbourhood of each equilibrium, then all equilibria are strongly stable in a neighbourhood and hence isolated [38]. In this case, our algorithms can be directly applied as is non-singular in these neighborhoods. It is commonly believed that the most likely equilibrium is the one reached by the game dynamics [52]; our algorithm naturally converges to this one.

Case II: If the function is monotone but not strongly monotone, then the equilibrium set is a convex and closed region [34]. This case is challenging, as the matrix needed to be inverted would become singular. Nevertheless, we can simply assume the agents are bounded rational [42, 1, 33]. The bounded rationality would result in a quantal response equilibrium for predicting the agents’ response. We refer the readers to Appendix F for a detailed explanation (with numerical examples for illustration). Here we briefly sum up the key takeaways: (1) it is equivalent to add a regularizer to for some and ; (2) as long as , the strong stability condition in Assumption 5.1 would then be satisfied, hence a unique equilibrium would exist; (3) as long as , the Lipschitz continuous condition in Assumption 5.1 will also not be violated. In a nutshell, the bounded rationality assumption can simultaneously make our model more realistic and satisfy the assumptions in Section 5.

7 Numerical Experiments

In this section, we conduct two numerical experiments to test our algorithms. All numerical results reported in this section were produced either on a MacBook Pro (15-inch, 2017) with 2.9 GHz Quad-Core Intel Core i7 CPU.

7.1 Pollution Control via Emission Tax

We first consider the oligopoly model introduced in Example 3.1. We assume that producing units of output, firm would generate units of emissions. We consider the following social welfare function [44]

where the first term is the consumers’ surplus, the second term is the total production cost, and the third term is the social damage caused by pollution. To maximize social welfare, an authority can impose emission taxes on the productions. Specifically, whenever producing units of output, firm could be charged , where is specialized for the firm . In the experiment, we set , , , , and , where are evenly spaced between 1 and 2 and are the white noises. Under this setting, and are negatively correlated, which is realistic because if a firm hopes to reduce their pollution by updating their emission control systems, the production cost must be increased accordingly.

Through this small numerical example, we hope to illustrate that the single-loop scheme developed in this paper is indeed much more efficient than previous double-loop algorithms. To this end, we compare our algorithm with two double-loop schemes proposed by Li et al. [25]. In both approaches, the lower-level equilibrium problem is solved exactly first at each iteration. But afterwards, the upper-level gradients are respectively obtained via automatic differentiation (AD) and implicit differentiation (ID). To make a fair comparison, the same hyperparameters — including the initial solutions, the learning rates, and the tolerance values for both upper- and lower-level problems — are employed for the tested algorithms (double-loop AD, double-loop ID, and our algorithm).

Table 1 reports statistics related to the computation performance, including the total CPU time, the total iteration number, and the CPU time per iteration. The results reveal that all tested algorithm take a similar number of iterations to reach the same level of precision. However, the running time per iteration required by our algorithm is significantly lower than the two double-loop approaches. Hence, in general, our scheme is more efficient.

| Method | Double-loop AD | Double-loop ID | Our algorithm |

| Total CPU time (s) | 71.56 | 11.29 | 2.09 |

| Total iteration number | 519 | 626 | 813 |

| Time per iteration (ms) | 137.89 | 18.04 | 2.57 |

7.2 Second-Best Congestion Pricing

We then consider the routing game model introduced in Example 3.2. To minimize the total travel delay, an authority on behalf of the public sector could impose appropriate tolls on selected roads [49]. This problem of determining tolls is commonly known as the congestion pricing problem. The second-best scheme assumes that only a subset of links can be charged [48]. Specifically, we write as the toll imposed on all the links and as the set of tollable links. We model total cost for a traveler selecting a path as

where we add an extra term to characterize the uncertainties in travelers’ route choices [20, 5]. It results in a quantal response equilibrium, as discussed in Section 6. We test our algorithm on a real-world traffic network: the Sioux-Falls network (See Lawphongpanich and Hearn [24] for its structure). We select 20 links (11, 35, 32, 68, 46, 21, 65, 52, 71, 74, 33, 64, 69, 14, 18, 39, 57, 48, 15, 51) for imposing congestion tolls.

We run Algorithm 2 to solve the problem and compare 4 different settings on the selection of step sizes. Setting A: , , and . It ensures convergence according to Theorem 5.5. Setting B: , , and . It only increases the decreasing rate of the step size in the inner loop. Setting C: , , and . It assumes that all step sizes decrease based on the classic rate. Setting D: , , and . It does not adopt the mixing step proposed in our paper. We add white noise to the costs received by the agents based on Gaussian distributions and run our algorithm under each setting with 10 times. The mean value of upper-level optimality gaps and lower-level equilibrium gaps are reported in Figure 1 (the shadowed areas are plotted based on “mean std” area over all sampled trajectories).

Below we summarize a few observations. First, without the mixing step proposed in our paper, the algorithm does hit the boundary too early. The algorithm fails after just a few iterations. It verifies our earlier claim that directly extending previous methods [21, 9] designed for bilevel optimization problems to MPECs is problematic. Second, the step size given by Theorem 5.5 ensures the fastest and the most stable convergence.

References

- Ahmed et al. [2019] Ahmed, Z., Le Roux, N., Norouzi, M. and Schuurmans, D. (2019). Understanding the impact of entropy on policy optimization. In International conference on machine learning. PMLR.

- Amos and Kolter [2017] Amos, B. and Kolter, J. Z. (2017). Optnet: Differentiable optimization as a layer in neural networks. In Proceedings of the 34th International Conference on Machine Learning.

- Anandalingam and Friesz [1992] Anandalingam, G. and Friesz, T. L. (1992). Hierarchical optimization: An introduction. Annals of Operations Research 34 1–11.

- Bai et al. [2019] Bai, S., Kolter, J. Z. and Koltun, V. (2019). Deep equilibrium models. In Advances in Neural Information Processing Systems.

- Bar-Gera and Boyce [1999] Bar-Gera, H. and Boyce, D. (1999). Route flow entropy maximization in origin-based traffic assignment. In 14th International Symposium on Transportation and Traffic TheoryTransportation Research Institute.

- Björnerstedt and Weibull [1994] Björnerstedt, J. and Weibull, J. W. (1994). Nash equilibrium and evolution by imitation. Tech. rep., IUI Working Paper.

- Bracken and McGill [1973] Bracken, J. and McGill, J. T. (1973). Mathematical programs with optimization problems in the constraints. Operations Research 21 37–44.

- Buchanan and Stubblebine [1962] Buchanan, J. M. and Stubblebine, W. C. (1962). Externality. In Classic papers in natural resource economics. Springer, 138–154.

- Chen et al. [2021] Chen, T., Sun, Y. and Yin, W. (2021). A single-timescale stochastic bilevel optimization method. arXiv preprint arXiv:2102.04671 .

- Colson et al. [2007] Colson, B., Marcotte, P. and Savard, G. (2007). An overview of bilevel optimization. Annals of operations research 153 235–256.

- Dafermos [1980] Dafermos, S. (1980). Traffic equilibrium and variational inequalities. Transportation Science 14 42–54.

- Dafermos [1983] Dafermos, S. (1983). An iterative scheme for variational inequalities. Mathematical Programming 26 40–47.

- D’Orazio et al. [2021] D’Orazio, R., Loizou, N., Laradji, I. and Mitliagkas, I. (2021). Stochastic mirror descent: Convergence analysis and adaptive variants via the mirror stochastic polyak stepsize. arXiv preprint arXiv:2110.15412 .

- Finn et al. [2017] Finn, C., Abbeel, P. and Levine, S. (2017). Model-agnostic meta-learning for fast adaptation of deep networks. In International Conference on Machine Learning. PMLR.

- Franceschi et al. [2017] Franceschi, L., Donini, M., Frasconi, P. and Pontil, M. (2017). Forward and reverse gradient-based hyperparameter optimization. In International Conference on Machine Learning. PMLR.

- Friedman and Friedman [1990] Friedman, M. and Friedman, R. (1990). Free to choose: A personal statement. Houghton Mifflin Harcourt.

- Friesz et al. [1990] Friesz, T. L., Tobin, R. L., Cho, H.-J. and Mehta, N. J. (1990). Sensitivity analysis based heuristic algorithms for mathematical programs with variational inequality constraints. Mathematical Programming 48 265–284.

- Greenwald and Stiglitz [1986] Greenwald, B. C. and Stiglitz, J. E. (1986). Externalities in economies with imperfect information and incomplete markets. The quarterly journal of economics 101 229–264.

- Harker and Pang [1988] Harker, P. T. and Pang, J.-S. (1988). Existence of optimal solutions to mathematical programs with equilibrium constraints. Operations research letters 7 61–64.

- Hazelton [1998] Hazelton, M. L. (1998). Some remarks on stochastic user equilibrium. Transportation Research Part B: Methodological 32 101–108.

- Hong et al. [2020] Hong, M., Wai, H.-T., Wang, Z. and Yang, Z. (2020). A two-timescale framework for bilevel optimization: Complexity analysis and application to actor-critic. arXiv preprint arXiv:2007.05170 .

- Jiang et al. [2021] Jiang, H., Chen, Z., Shi, Y., Dai, B. and Zhao, T. (2021). Learning to defend by learning to attack. In International Conference on Artificial Intelligence and Statistics. PMLR.

- Krichene et al. [2015] Krichene, W., Krichene, S. and Bayen, A. (2015). Convergence of mirror descent dynamics in the routing game. In 2015 European Control Conference (ECC). IEEE.

- Lawphongpanich and Hearn [2004] Lawphongpanich, S. and Hearn, D. W. (2004). An mpec approach to second-best toll pricing. Mathematical programming 101 33–55.

- Li et al. [2020] Li, J., Yu, J., Nie, Y. M. and Wang, Z. (2020). End-to-end learning and intervention in games. Advances in Neural Information Processing Systems 33.

- Li et al. [2022] Li, J., Yu, J., Wang, Q., Liu, B., Wang, Z. and Nie, Y. M. (2022). Differentiable bilevel programming for stackelberg congestion games. arXiv preprint arXiv:2209.07618 .

- Liu et al. [2018] Liu, H., Simonyan, K. and Yang, Y. (2018). Darts: Differentiable architecture search. arXiv preprint arXiv:1806.09055 .

- Liu et al. [2021] Liu, R., Gao, J., Zhang, J., Meng, D. and Lin, Z. (2021). Investigating bi-level optimization for learning and vision from a unified perspective: A survey and beyond. arXiv preprint arXiv:2101.11517 .

- Luketina et al. [2016] Luketina, J., Berglund, M., Greff, K. and Raiko, T. (2016). Scalable gradient-based tuning of continuous regularization hyperparameters. In International conference on machine learning. PMLR.

- Luo et al. [1996] Luo, Z.-Q., Pang, J.-S. and Ralph, D. (1996). Mathematical programs with equilibrium constraints. Cambridge University Press.

- Maclaurin et al. [2015] Maclaurin, D., Duvenaud, D. and Adams, R. (2015). Gradient-based hyperparameter optimization through reversible learning. In International conference on machine learning. PMLR.

- Maskin [1994] Maskin, E. S. (1994). The invisible hand and externalities. The American Economic Review 84 333–337.

- Melo [2021] Melo, E. (2021). On the uniqueness of quantal response equilibria and its application to network games. Economic Theory 1–45.

- Mertikopoulos and Zhou [2019] Mertikopoulos, P. and Zhou, Z. (2019). Learning in games with continuous action sets and unknown payoff functions. Mathematical Programming 173 465–507.

- Metcalf and Weisbach [2009] Metcalf, G. E. and Weisbach, D. (2009). The design of a carbon tax. Harv. Envtl. L. Rev. 33 499.

- Metz et al. [2016] Metz, L., Poole, B., Pfau, D. and Sohl-Dickstein, J. (2016). Unrolled generative adversarial networks. arXiv preprint arXiv:1611.02163 .

- Mguni et al. [2019] Mguni, D., Jennings, J., Sison, E., Valcarcel Macua, S., Ceppi, S. and Munoz de Cote, E. (2019). Coordinating the crowd: Inducing desirable equilibria in non-cooperative systems. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems.

- Nagurney [2013] Nagurney, A. (2013). Network economics: A variational inequality approach, vol. 10. Springer Science & Business Media.

- Nash [1951] Nash, J. (1951). Non-cooperative games. Annals of mathematics 286–295.

- Nemirovskij and Yudin [1983] Nemirovskij, A. S. and Yudin, D. B. (1983). Problem Complexity and Method Efficiency in Optimization. A Wiley-Interscience publication, Wiley.

- Parise and Ozdaglar [2017] Parise, F. and Ozdaglar, A. (2017). Sensitivity analysis for network aggregative games. In 2017 IEEE 56th Annual Conference on Decision and Control (CDC). IEEE.

- Prashker and Bekhor [2004] Prashker, J. N. and Bekhor, S. (2004). Route choice models used in the stochastic user equilibrium problem: a review. Transport reviews 24 437–463.

- Rajeswaran et al. [2019] Rajeswaran, A., Finn, C., Kakade, S. and Levine, S. (2019). Meta-learning with implicit gradients. arXiv preprint arXiv:1909.04630 .

- Requate [1993] Requate, T. (1993). Pollution control in a cournot duopoly via taxes or permits. Journal of Economics 58 255–291.

- Schmeidler [1973] Schmeidler, D. (1973). Equilibrium points of nonatomic games. Journal of statistical Physics 7 295–300.

- Scutari et al. [2010] Scutari, G., Palomar, D. P., Facchinei, F. and Pang, J.-S. (2010). Convex optimization, game theory, and variational inequality theory. IEEE Signal Processing Magazine 27 35–49.

- Smith [1791] Smith, A. (1791). An Inquiry into the Nature and Causes of the Wealth of Nations, vol. 1. Librito Mondi.

- Verhoef [2002] Verhoef, E. T. (2002). Second-best congestion pricing in general networks. heuristic algorithms for finding second-best optimal toll levels and toll points. Transportation Research Part B: Methodological 36 707–729.

- Vickrey [1969] Vickrey, W. S. (1969). Congestion theory and transport investment. The American Economic Review 59 251–260.

- von Stackelberg [1952] von Stackelberg, H. (1952). The theory of the market economy. Oxford University Press.

- Wardrop [1952] Wardrop, J. G. (1952). Some theoretical aspects of road traffic research. In Proceedings of the Institution of Civil Engineers, vol. 1.

- Weibull [1997] Weibull, J. W. (1997). Evolutionary game theory. MIT press.

- Wu et al. [2018] Wu, Y., Ren, M., Liao, R. and Grosse, R. (2018). Understanding short-horizon bias in stochastic meta-optimization. arXiv preprint arXiv:1803.02021 .

Appendix A Notations

We collect the most frequently used notations here.

| Category | Symbol | Definition |

| Game (lower level) | set of all agents | |

| agent ’s strategy | ||

| reward function of agent | ||

| , unit reward of agent | ||

| variational stability coefficient of agent | ||

| incentivized reward and unit reward | ||

| Incentive designer (upper level) | incentive distributed to the agents | |

| objective of the incentive designer | ||

| equilibrium induced by incentive | ||

| designer’s objective value under | ||

| Algorithm (-th iteration) | Bregman divergence in agent ’s strategy update | |

| equilibrium learning rate for agent | ||

| incentive learning rate for the designer | ||

| estimate of incentivized unit cost | ||

| approximate incentive gradient | ||

| equilibrium learning dynamic | ||

| agent ’s strategy mixed with uniform strategy | ||

| Analysis (assumptions) | Lipschitz continuity coefficient of | |

| spectral upper bound of | ||

| spectral lower bound of | ||

| strong convexity coefficient of | ||

| upper bound of | ||

| Lipschitz continuity coefficient of | ||

| estimation MSE upper bound of |

Appendix B Detailed Discussions on the Games

In this section, we present detailed discussions on the properties of the games.

B.1 Strong Stability

We establish the following sufficient condition for strong stability.

Lemma B.1.

Define the matrix as a block matrix with -th block taking the form of

Suppose that satisfies the smooth condition (4.2). If for some , then the Nash equilibrium is -strongly stable with respect to the Bregman divergence .

Proof.

We define the -weighted gradient of the game as

By definition, we can verify that is the Jacobian matrix of with respect to . For any , let . We have

| (B.1) |

Taking additional dot product on both sides of (B.1), we have

| (B.2) |

where the inequality follows from . Setting , we further have

| (B.3) |

where the second inequality follows from the fact that is a Nash equilibrium. Eventually, as satisfies (4.2), we have

| (B.4) |

Lemma B.2.

Define the matrix as

Suppose that is the negative entropy function. If for some , then Nash equilibrium is -strongly stable with respect to the KL divergence.

B.2 Sensitivity of Nash Equilibrium

Unconstrained vame. We now provide the proof of Lemma 4.2.

Proof of Lemma 4.2.

Since for all , by the definition of , we have for all . Then, differentiating this equality with respect to on both ends, for any , we have

Thus, we have

∎

As a consequence of Lemma 4.2, we have the following lemma addressing the sensitivity of Nash equilibrium with respect to the incentive parameter .

Proof.

Simplex-Constrained Game. We first provide the following lemma.

Lemma B.4 (Theorem 1 in [41]).

When and is non-singular, the Jacobian of takes the form

where

Here , where is obtained by deleting the rows in the identity matrix with .

As a consequence of Lemma B.4, we have the following lemma as the simplex constrained version of Lemma B.3.

Lemma B.5.

Appendix C Proof of Theorem 5.4

We first present the following two lemmas under the conditions presented in Theorem 5.4.

Lemma C.1.

For all , we have

Proof.

See Appendix C.1 for detailed proof. ∎

Lemma C.2.

For all , we have

Proof.

See Appendix C.2 for detailed proof. ∎

Now we are ready to present the proof of Theorem 5.4.

Proof of Theorem 5.4.

C.1 Proof of Lemma C.1

Proof.

Recall that

We have

where the second inequality follows from the fact that . Taking the conditional expectation given , we obtain

| (C.3) | ||||

where the second inequality follows from Assumption 5.3. Summing up (C.3) for all , we have

| (C.4) |

By the -strong variational stability of , we have

| (C.5) |

Moreover, by Assumption 5.3 we have

summing up which gives

| (C.6) |

where the first inequality follows from the optimality condition that , and the second inequality follows from Assumption 5.1. Thus, taking (C.5) and (C.1) into (C.1), we obtain

| (C.7) | ||||

where the last inequality holds with , which is satisfied by . By Lemma E.1, we have for any ,

| (C.8) | ||||

where the third inequality follows from Lemma B.3, and the last inequality follows from the fact that proximal mapping is non-expensive. Taking (C.8) into (C.7) and choosing , we obtain

| (C.9) | ||||

By Assumptions 5.2 and 5.3, we have

| (C.10) | ||||

taking which into (C.9) and taking expectation on both sides, we further obtain

By , we have

where the first inequality follows from , and the second inequality follows from , , and . Thus, we obtain

| (C.11) |

Recursively applying (C.11), we obtain

Thus, we conclude the proof Lemma C.1. ∎

C.2 Proof of Lemma C.2

Proof.

Since the projection is non-expansive, we have

Taking the conditional expectation given , we obtain

| (C.12) |

where the second inequality follows from Assumption 5.2 and the Cauchy-Schwartz inequality, and the last inequality follows from Assumption 5.2. Applying (C.10) to (C.2) and taking expectation on both sides, we get

| (C.13) |

Recursively applying (C.13), we obtain

Thus, we conclude the proof of Lemma C.2. ∎

Appendix D Proof of Theorem 5.5

We first present the following two lemmas under the conditions presented in Theorem 5.5

Lemma D.1.

For all , we have

Proof.

See Appendix D.1 for detailed proof. ∎

Lemma D.2.

For all , we have

Proof.

See Appendix D.2 for detailed proof. ∎

Now we are ready to prove Theorem 5.5.

Proof of Theorem 5.5.

For , by [[21], Lemma C.4], we have

Thus, by Lemma C.1, we have

| (D.1) |

Since , we have

taking which into (D), we obtain

| (D.2) |

where

Similarly, we have for ,

Thus, combining (C) with Lemma C.2, we obtain

| (D.3) | ||||

Here the third inequality follows from . Therefore, (D) and (D.3) conclude the proof of Theorem 5.5. ∎

D.1 Proof of Lemma D.1

Proof.

We first show that

| (D.4) |

Since

we have the exact form of as

| (D.5) |

By the definition of KL divergence, we have

| (D.6) | ||||

Let be the normalization factor of the exact form of in (D.5). We have

| (D.7) | ||||

where the last equality follows from the fact that . Taking (D.7) into (D.6), we obtain

which concludes the proof of (D.4).

Continuing from (D.4), we have

where the second inequality follows from the fact that . Taking the conditional expectation given , we obtain

| (D.8) | ||||

where the last inequality follows from Cauchy-Schwartz inequality and the fact that . By the -strong variational stability of , we have

| (D.9) |

Moreover, by Assumption 5.3, we have

summing up which gives

| (D.10) | ||||

where the second inequality follows from , and the third inequality follows from Assumption 5.1. Thus, taking (D.9) and (D.10) into (D.8), we obtain for (i.e., ) that,

where the second inequality follows from Lemma E.3. By Lemma E.3, we further have for ,

| (D.11) | ||||

By Lemma E.2, we have for any ,

| (D.12) | ||||

where the second inequality follows from Lemma B.3. Taking (D.12) into (D.11) and choosing , we obtain

| (D.13) | ||||

By Assumption 5.3, we have

| (D.14) | ||||

where the third inequality follows from Assumptions 5.2 and 5.3, and the last inequality follows from Lemma E.3. Taking (D.14) into (D.13) and taking expectation on both sides, we obtain

| (D.15) |

With , , , , and , we have

taking which into (D.1), we obtain

| (D.16) |

Recursively applying (D.1), we obtain

∎

D.2 Proof of Lemma D.2

Appendix E Properties of the Bregman Divergence

The following lemma is used in the analysis of unconstrained games.

Lemma E.1.

Let be -strongly convex with respect to the norm . Assume that (4.2) holds. We have for any ,

Proof.

By the definition of Bregman divergence, we have for any ,

where the inequality follows from -strong convexity of and the Cauchy-Schwartz inequality. By (4.2), we further have

| (E.1) |

where the second inequality follows from -strong convexity of . Rearranging the terms in (E), we finish the proof of Lemma E.1. ∎

The following two lemmas are involved in the analysis of simplex constrained games.

Lemma E.2.

Proof.

Lemma E.3.

Let and be the sequences of strategy profiles generated by Algorithm 2 with . We have

| (E.2) | ||||

| (E.3) | ||||

| (E.4) |

Proof.

Appendix F Extensions to Games with Multiple Equilibria

In this section, we provide more details on how to extend our algorithms to games with multiple equilibria. As discussed in Section 6, the only challenging case is when the function is monotone but not strongly monotone, so that the equilibrium set is a convex and closed region.

Example F.1.

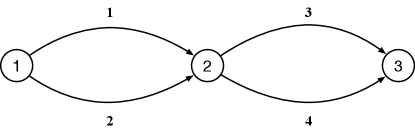

We consider a specific routing game (see Example 3.2). Specifically, we study a simple network as shown in Figure 2. Suppose that the number of nonatomic agents aiming to travel from node 1 to node 4 is .

The costs for using the 4 edges are given by , , and , respectively. There are 4 paths connecting node 1 and node 4 (path 1 uses link 1 and link 3, path 2 uses link 2 and link 4, path 3 uses link 1 and link 4, path 4 uses link 2 and 3). Let be the number of agents using each path and be cost of using each path. Then we have

where . Therefore, we have , which is semi-positive definite but not positive definite because the matrix is singular. Under this setting, the set of equilibria to this routing game can be written as

| (F.1) |

Yet, we can simply add a regularizer to each . We note that the Jacobian of is . The unique equilibrium then would be strongly stable (see Lemma B.2). Meanwhile, if , the Jacobian would also be upper-bounded, hence the function is still Lipschitz continuous. We summarize a few properties of this regularizer. First, as long as and , all conditions in Assumption 5.1 would be satisfied. Seond, if and , the resulting equilibrium is often referred to as a quantal response (response) equilibrium, would is more realistic than a Nash (Wardrop) equilibrium if we believe that human beings are not always fully rational [42, 1, 33]. Third, if and , the resulting equilibrium would be a “smoothed” quantal response equilibrium. It is close to the quantal response equilibrium when is sufficiently small, but it preserves the Lipschitz continuity.