11email: [email protected]

11email: {jqyang,ynzhang}@nwpu.edu.cn

Indoor 3D Reconstruction with an Unknown Camera-Projector Pair

Abstract

Structured light-based method with a camera-projector pair (CPP) plays a vital role in indoor 3D reconstruction, especially for scenes with weak textures. Previous methods usually assume known intrinsics, which are pre-calibrated from known objects, or self-calibrated from multi-view observations. It is still challenging to reliably recover CPP intrinsics from only two views without any known objects. In this paper, we provide a simple yet reliable solution. We demonstrate that, for the first time, sufficient constraints on CPP intrinsics can be derived from an unknown cuboid corner (C2), e.g. a room’s corner, which is a common structure in indoor scenes. In addition, with only known camera principal point, the complex multi-variable estimation of all CPP intrinsics can be simplified to a simple univariable optimization problem, leading to reliable calibration and thus direct 3D reconstruction with unknown CPP. Extensive results have demonstrated the superiority of the proposed method over both traditional and learning-based counterparts. Furthermore, the proposed method also demonstrates impressive potential to solve similar tasks without active lighting, such as sparse-view structure from motion.

Keywords:

Indoor 3D reconstruction Structured light Camera self-calibration Two-view geometry1 Introduction

Compared with other 3D reconstruction methods, such as structure from motion (SfM) [29], multi-view stereo (MVS) [12, 30, 31], time-of-flight (TOF) cameras [1], structured-light (SL) methods [13] can produce accurate and dense 3D point cloud of the scene without requiring texture on the scene surfaces. In addition, the system used in SL is simple and cheap, which often consists of a camera-projector pair (CPP). These advantages make SL more suitable for indoor scenes, even when dealing with walls or floors that lack textures. However, most CPPs are assumed pre-calibrated offline [40] before 3D reconstruction. The parameters of the camera and projector are known and fixed across reconstruction. This limits its flexibility and applications, especially for scenarios where parameters of the CPP need to be frequently adjusted. It is more desirable to reconstruct UNKNOWN indoor scenes with an UNKNOWN CPP, where the underlying CPP self-calibration is still an open problem due to the following challenges.

Firstly, CPP self-calibration can be formulated as a typical two-view vary-intrinsic camera self-calibration problem, where the projector in a CPP is treated as another camera with distinct parameters. This problem is under-constrained for previous camera self-calibration in restricted[18, 2] or general motion[9], since only two constraints can be constructed from the Kruppa equation [39, 10, 17] or fundamental matrix [5, 23], whereas there are at least three unknowns even for a constant camera. Therefore, at least three views [26] (often tens or more for a reliable result) are required for previous methods including most learning-based [14, 24, 16, 21]. In addition, the assumptions, such as a constant camera, or a known principal point (PP) [20, 4, 32, 25], do not hold for a CPP, where the intrinsics of a projector are often different from those of the camera. The unknown PP and focal length for the projector plus unknowns of the camera (even with a known PP) still make the calibration an challenging ill-posed problem.

Secondly, there are only limited scene cues for CPP self-calibration in indoor scenes. Indeed, for an indoor scene, there are lots of planes, from which inter-view homographies [15, 19] provide additional constraints. However, at least four such homographies yield a complete solution, which is incapable of the two-view CPP problem. The Manhattan World (MW) assumption [8] is another common scene property. Under this assumption, vanishing points (VPs) can be extracted from images of parallel line segments [36]. At least three (say mutually orthogonal) VPs yield a camera calibration [6], even from a single image. Nevertheless, the texture-lessness of an indoor scene, see Fig. 1(a), often “violates” this assumption, leading to insufficient lines for reliably estimating a VP.

Please note that recent learning-based methods [27, 37, 22, 33, 3, 7, 34] for indoor reconstruction from sparse or even single view have demonstrated impressive performance. However, most of them still assume a known or partially (PP is known) known camera.

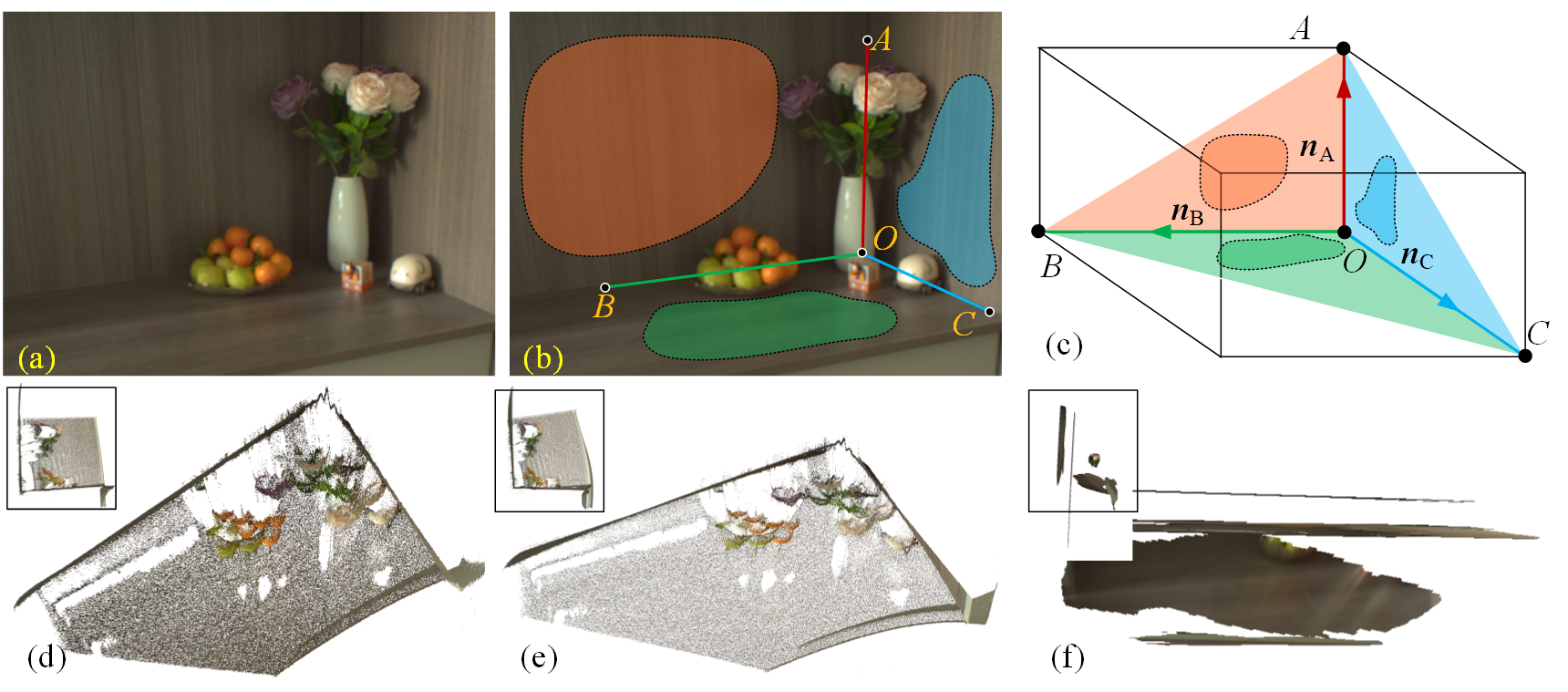

We propose a method to overcome these limitations above. Instead of line segments, we focus on another common but simple structure in indoor scenes, i.e. a cuboid corner (C2) such as a room corner (see Fig. 1(a)), from which sufficient constraints can be constructed for CPP self-calibration. The geometry of a C2 needs not be known, and occlusion is allowed when observing it. Additionally, with only known camera principal point, the complex multi-variable estimation of all CPP intrinsics can be simplified to a simple univariable optimization problem. This leads to a reliable and accurate calibration from only two-view observations of an unknown scene, see Fig. 1(d), achieving indoor 3D reconstruction with only an unknown CPP. Furthermore, this C2-based calibration can be easily extended to similar tasks, such as the challenging camera self-calibration in two-view SfM.

2 Notations and Basic Principles

2.1 Camera model

A camera is represented with a pin-hole model as

| (1) |

where is the camera matrix and is a scalar.

The camera matrix is decomposed into a concatenation of camera intrinsics and extrinsics, i.e. camera rotation and translation , as

| (2) |

where has the form as

| (3) |

where and are focal lengths along and directions. is the skew factor. is the principal point.

Similar to [26], we assume a “natural” camera with square pixels, i.e. equals to 1 and . Additionally, the lens distortion is assumed insignificant or corrected in advance, which is thus not considered in the following discussion.

2.2 C2 parameterization

As shown in Fig. 1(c), a typical C2 is a Tri-rectangular tetrahedron with four vertices, e.g. . Each two of vertices defines an edge and hence a total of six edges. There is a special vertex, i.e. in Fig. 1(c), usually called the right angle (RA), where the angle between each two edges with RA as their common vertex is a right angle. Accordingly, we have three mutually orthogonal edges for a C2, as shown in Fig. 1(c), which are called legs , and , respectively. The planes defined by each two of these legs are also orthogonal to each other, which are denoted as the faces and with normalized , and as their normal respectively, see triangles and in Fig. 1(c). Notably, significant occlusion of a C2 is allowed, as shown in Fig. 1(b), where all or some of the vertices and legs are not directly observed. In fact, we require only three partially observed faces of a C2 for calibration, since vertices and legs can be inferred from inter-plane homographies (see Sec. 4.2).

According to the definition above, a C2 is defined by seven parameters up to an unknown scale: three for rotation, two for scaled translation and two for the length ratios between legs. Specifically, in the camera coordinate frame, these parameters can be specified as

| (4) |

| (5) |

and

| (6) |

where is the 3D coordinate of , and is an arbitrary scalar. and are length ratios of corresponding legs, respectively.

2.3 Problem formulation

The core of direct 3D reconstruction with an unknown CPP lies in the CPP self-calibration. Provided a known camera principal point, the CPP self-calibration aims to recover the remaining CPP intrinsics from only two-view correspondences of an unknown C2.

More specifically, given matched image point pairs on faces of a C2, or , respectively, and the camera principal point , we aim to recover both focal lengths and of the camera and projector, and the principal point of the projector. Extrinsics and the parameters of C2 are last considered in this paper, since they can be easily determined after intrinsics estimated.

Please note that, even is assumed known, according to a simple argument counting, the self-calibration is still significantly ill-posed: There are four unknowns, i.e. , and , whereas the Kruppa equation can only provides two constraints, leaving two unknowns unconstrainted. For textureless indoor scenes with insufficient line segments, we will show how to solve this problem by constructing sufficient constraints from an unknown C2 in the following section.

3 Geometry of cameras viewing a C2

In this section, we describe the single- and two-view geometry of a camera viewing a C2, from which the self-calibration algorithm is developed.

3.1 Single-view geometry

For clarity, we assume a canonical C2 as shown in Fig. 1(b). Suppose the four vertices , , , of this C2 are imaged by a camera at image points , , and , respectively. , , and are inhomogeneous 3D coordinates of these vertices, respectively, in the camera coordinate frame. The camera intrinsic matrix is . Please note that since only a single view is considered in this subsection, the subscript “c” is omitted.

Each vertex can be obtained by back-projecting from its image as

| (7) |

where is the vertex index which can be , , or . is an unknown scalar.

Since the C2 is parameterized up to an unknown scalar, without loss of generalization, we set to a specific value, say . Accordingly, only , and are unknown.

By enforcing the orthogonality constraints between the legs , and , we have the following equations.

| (8) |

where is the image of the absolute conic (IAC).

Eq. 9 establishes a concise relationship between the IAC and the image of C2, which provides three independent constraints on the camera intrinsics. Moreover, Proposition 1 can be easily derived as below.

Proposition 1. Given a calibrated camera, a C2 can be determined up to at most two different solutions from its image.

Proof. As an alternative parameterization to that in Sec. 2.2, a C2 can also be defined by its four vertices. Given the images , , and of these vertices, the up-to-scale determination of this C2 is to determine a triplet of three scalars , and . Similarly, is set to 1. Enforcing the orthogonality constraints yields Eq. 9. Since the camera intrinsics is known for a calibrated camera, Eq. 9 changes to a ternary quadratic form. Solving this form of Eq. 9 leads to two solutions for the unknown triplet.

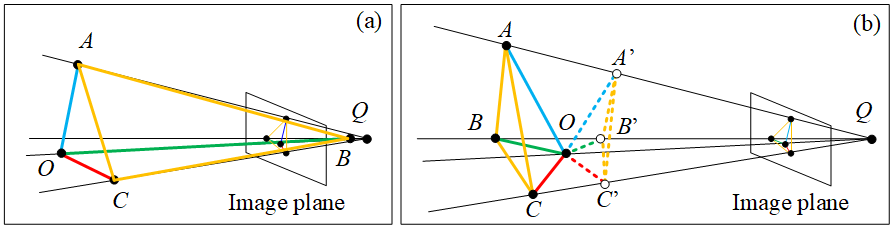

There are three possible configurations for the solutions: two real different solutions, one real solution and two complex solutions, as shown in Fig. 2. Here we only provide a geometric interpretation about these configurations instead of rigorous proof (since it is in fact straightforward). The last two configurations correspond to a C2 located with (at least) one vertex (not the RA) close to or behind the camera center, see vertex close to the camera center in Fig. 2(a), which are not possible for a real camera. Hence, we focus only on the configuration with two different real solutions, as shown in Fig. 2(b). These two solutions just correspond to two C2’s with the same common RA but different orientations. As shown in Fig. 2(b), they are “concave” and “convex” C2’s, respectively. Given another view or some prior about the C2, the “convexity” can be easily identified, see convexity check in Sec. 4.2, and the only solution of C2 can thus be determined.

3.2 Two-view geometry

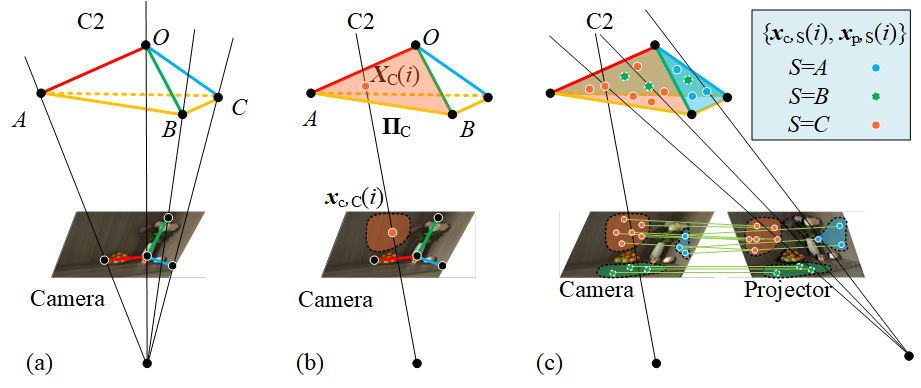

Based on Proposition 1, the two-view geometry can be easily derived. It defines a transfer from camera intrinsics to those of the projector, as shown in Fig. 3.

Camera to C2. Other than vertex images, matches on faces of the C2 are also considered. can be , or , and is the point number of face . Given the camera’s , we first determine the unknown C2 from its vertex images according to Proposition 1. Those vertices , , and are thus computed, which are represented in the camera coordinate frame. Note that in this specific derivation the world coordinate frame coincides with the camera coordinate frame.

C2 to . Since three points define a plane, the three faces are determined by three corresponding vertices. Accordingly, all points on there faces can be recovered by finding the intersections of the back-projecting from their image points and the respective faces. For instance, the face is determined by , and . A point satisfies both the plane equation and the back-projecting equation Eq. 7 as

| (10) |

where is the image point of captured by the camera. is an unknown scalar.

Solving Eq. 10 yields the unique and hence . Similarly, all points on each face can be determined from their images.

to projector. Now, these non-coplanar points and their images in the projector’s view are available. The parameter matrix for the projector can easily be solved via the Direct Linear Transformation (DLT) algorithm [17]. The intrinsics (as well as and between the camera and projector) can obtained by decomposing .

This process establishes a transferring chain from to , which can be represented by an abstract function as

| (11) |

Additionally, if a known principal point of the camera is given, Eq. 11 further changes to a univariable function.

| (12) |

According to Eq. 11 and Eq. 12, given a C2, depends only on or . Accordingly, the original multi-variable (i.e. four) CPP calibration problem is simplified to a univariable estimation problem with the only unknown . Additionally, this relationship between and is deterministic, since given a guess of , the corresponding can be computed uniquely without solving ambiguous polynomial equations such as Kruppa equation. This determinacy together with univariability leads to a simple yet reliable calibration algorithm.

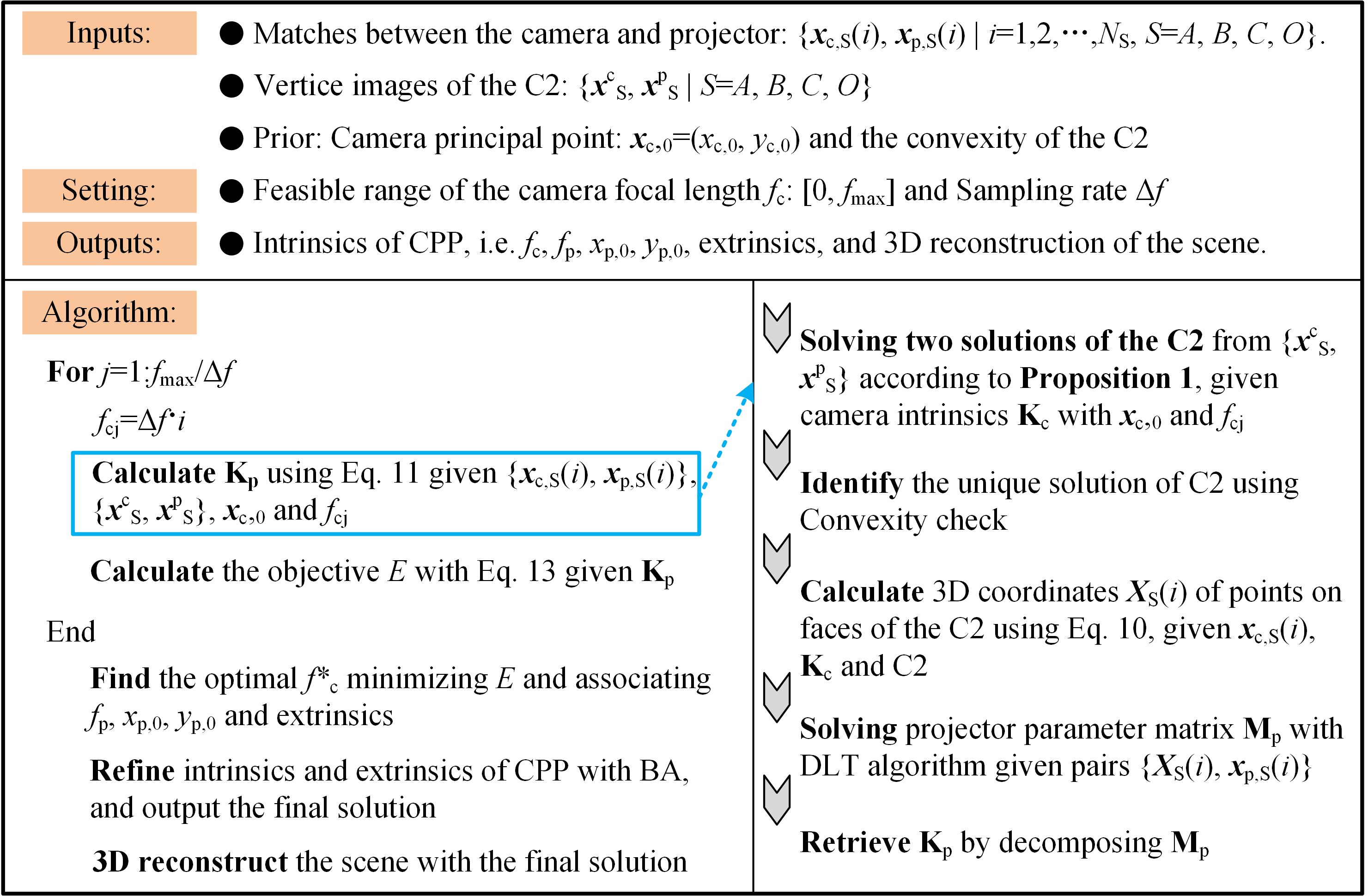

4 CPP self-calibration algorithm

4.1 Optimization objective

According to Eq. 12, we only need to determine the single unknown for the CPP calibration. Please note the transferring in Eq. 11 from the camera to projector is invertible. Considering that the forward and backward transferring should be consistent, the camera transferring to the projector should transfer to the same camera if transferring back. This allows us to define a cycle loss between the original and transferred back camera intrinsics. Additionally, since the projector is also assumed “natural”, of which equals to 0 and =, we construct an optimization objective as

| (13) |

where

| (14) |

where the superscripts “ori” and “bk” indicate variables from the original or back-transferred intrisics of the camera.

Solving Eq. 13 is a simple univariable optimization problem. Additionally, if a relatively loose feasible range for , say , where can be set to 10000 or larger, a globally optimal solution can be estimated via an exact search [11]. This is trivial when we discretizing the feasible range with some sampling rate , say , without sacrificing much accuracy. Alternatively, the solution can be searched via a bounded one-dimensional optimizer[11], which was used in our experiments due to its fast convergence and comparable stability.

4.2 Implementation details

Input preparation. Our algorithm requires only matches between the camera and projector views for a C2. We firstly established correspondences using structured-light patterns [28]. These correspondences are then partitioned into three sets on faces of the C2, as shown in Fig. 3(c), by manually drawing respective masks, see details in Supplementary material.

Inference of vertices and legs for an occluded C2. Given only partially observed faces of a C2, the inter-view homography , = , or , induced by each face can be estimated. The leg, say , between two faces, i.e. and , can be determined using the two eigen vectors of [38]. Accordingly, the can be determined from the three legs. The other three vertices can be picked manually in the legs.

Convexity check. In our algorithm, the convexity of a C2 is visually recognized by human or known as a prior. For instance, when reconstruction a room corner, as shown in Fig. 1(a), the C2 can be easily recognized as a concave one.

The pseudocode of our algorithm is shown in Fig. 4.

5 Experiments

5.1 Results on indoor scenes

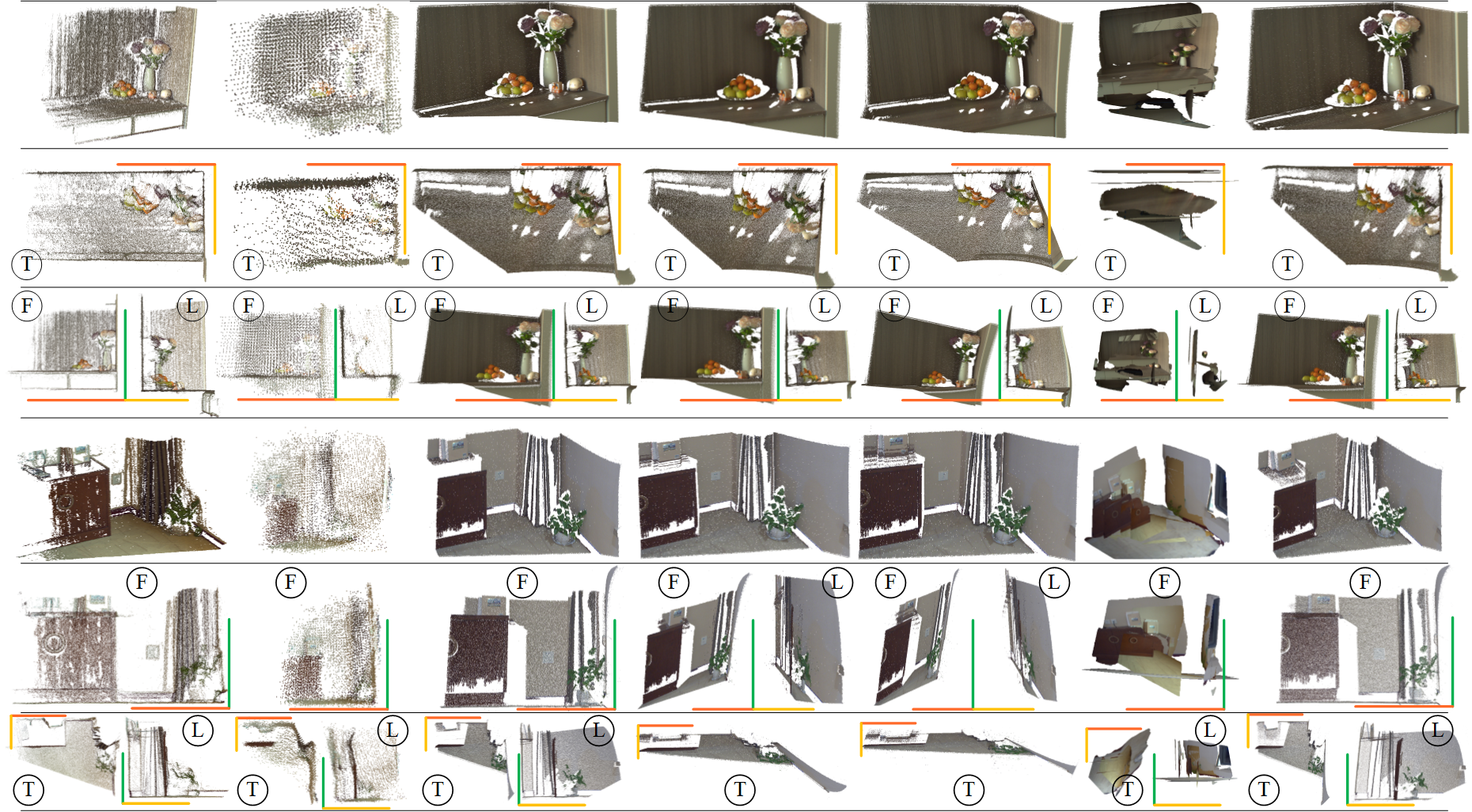

Scenes. Since there is no publicly available datasets of structured-light indoor images, we captured our own data using a CPP (see CCP setup below) to evaluate methods on diverse indoor scenes. As shown in the first row in Fig. 5, each scene contains a partially observed C2, which consists of floors and walls with repetitive or weak textures. Additionally, only limited line segments are observable in the scenes. These makes challenging scenes for CPP self-calibration and reconstruction. To be noted, in spite of different-level occlusions, vertex images of the C2 can still be inferred from its partially observed faces, see the second row in Fig. 5.

CPP setup. A CPP with a 2448×2048 camera and a 854×480 projector was used, which contained constant but different intrinsics across scenes. The ground truths were obtained with a target-based method [35], where the focal lengths and principal points are {1791.1, (1256.3, 1054.3)} and {1247.3, (377.1, 234.0)} for the camera and projector, respectively. More comparison results of different CPPs can be found in Supplementary material.

Baselines. We compared our method with state-of-the-art methods in both two-view and multi-view configurations (see Tab. 1), respectively. As baselines of traditional and learning-based self-calibration, COLMAP [29] and the recently reported DroidCalib [16] were used.

In the multi-view configuration, both methods estimated only camera intrinsics from about 20 views, whereas the projector was not involved since it cannot capture images. This is a typical multi-view SfM task in well-posed configuration.

In the two-view configuration, similarly, DroidCalib still only perform camera calibration but from two views, of which one is identical to the camera view used in our method and the other is close to the projector view. In contrast, COLMAP with different initial value and optimization strategies were performed for a comprehensive comparison, see Tab. 1. For a fair comparison, all of them accept matches from our method as the input, i.e. , and then directly estimate the intrinsics of the camera and projector, respectively. The camera principal point (PP) is known a prior. In Tab. 1, “C” refers to imposing the projector image center as its initial values of PP, whereas “R” means a normally random PP from the range . and are width and height of the projector image, respectively. COLMAP with “BA” performs additional bundle adjustment (BA) [17] upon the result of those without “BA”. Additionally, PP’s were refined in methods with “BA”, which, however, were fixed in those without “BA”.

In addition to methods above, PlaneFormer [3], a state-of-the-art two-view 3D reconstruction method, was also compared for 3D reconstruction evaluation.

5.1.1 Calibration result.

As shown in Tab. 1, our method achieves competitive accuracy as those of multi-view methods, reaching a mean absolute error (MAE) of 4.3% against 0.4% and 1.9% for COLMAP and DroidCalib in multi-view setting. It is important to note that 20 views and only a constant camera was assumed in these baselines, which is a much simpler task than that of our method with two views of varying intrinsics. When the number of views reduces to only two, DroidCalib just failed (thus not shown in Tab. 1). The calibration errors of two-view COLMAPs increase dramatically, especially for those of the projector intrinsics, reaching 30% or even not converging. Additionally, since the two-view calibration is significantly ill-posed, refinement on PP with BA doesn’t improve and even reduce the accuracy, see results of COLMAP with “BA” in Tab. 1. Furthermore, the performance of COLMAP demonstrates high dependency on the initial value of the projector PP. Since the image center is close to the PP, taking image center as the initial value generally leads to a more accurate calibration than those with a random value, as shown in rows marked with “C” and “R” in Tab. 1. In contrast, our method direct estimate the projector PP without any initial guess, yielding more stable and accurate result.

| Scenes | Multi-view | Two-view | ||||||

|---|---|---|---|---|---|---|---|---|

| Method | Colmap | DroidCalib | Colmap | Ours | ||||

| C | C-BA | R | R-BA | |||||

| No.1 | 0.4 | -3.1 | -2.3 | 11.5 | -70.0 | -60.8 | -2.4 | |

| - | - | 3.3 | 3.1 | -95.0 | -113.2 | 1.9 | ||

| - | - | -13.2 | -12.5 | 22.6 | 38.6 | -9.3 | ||

| - | - | -2.6 | -1.7 | -67.4 | -116.8 | -10.2 | ||

| No.2 | 0.4 | -1.4 | -68.5 | -33.0 | -47.4 | -56.5 | -4.6 | |

| - | - | -382.1 | -497.5 | -966.6 | -1634.8 | 0.1 | ||

| - | - | -13.2 | 72.3 | 22.6 | -46.1 | -9.1 | ||

| - | - | -2.6 | -53.2 | -67.4 | -108.5 | 4.2 | ||

| No.3 | -0.1 | -1.6 | -41.3 | -32.6 | -43.9 | -39.3 | -3.0 | |

| - | - | -128.7 | -99.5 | -164.3 | -143.2 | -0.8 | ||

| - | - | -13.2 | -6.1 | 22.6 | 26.7 | -7.3 | ||

| - | - | -2.6 | -18.4 | -67.4 | -65.6 | -4.9 | ||

| No.4 | 0.6 | -1.6 | -37.2 | -26.7 | -103.4 | -37.2 | 1.3 | |

| - | - | -68.9 | -64.9 | -181.3 | -178.3 | 6.6 | ||

| - | - | -13.2 | -18.6 | 22.6 | 16.1 | -3.4 | ||

| - | - | -2.6 | 32.8 | -67.4 | -148.9 | 0.1 | ||

| MAE | 0.4 | 1.9 | 49.7 | 61.5 | 127.0 | 176.9 | 4.3 | |

5.1.2 Reconstruction result.

As expected, multi-view COLMAP achieves the most accurate result, whereas those from its learning-based counterpart are “noisier” with noticeable distortions on orthogonal planes, such as the floor and wall in the second scene in Fig. 6. In contrast to both methods, our method produced reconstruction with similar fidelity but higher density from two views. Additionally, compared with two-view methods such COLMAPs with C-BA, R-BA or PlaneFormer, which generate significantly distorted reconstructions due to inaccurate calibration, as shown in the fourth and fifth columns in Fig. 6, or tend to fail due to mis-detection and matching of planes, as shown in the sixth column in Fig. 6, our method demonstrated noticeable superiority over baselines. Furthermore, comparable reconstruction accuracy was achieved against COLMAP with GT CPP parameters.

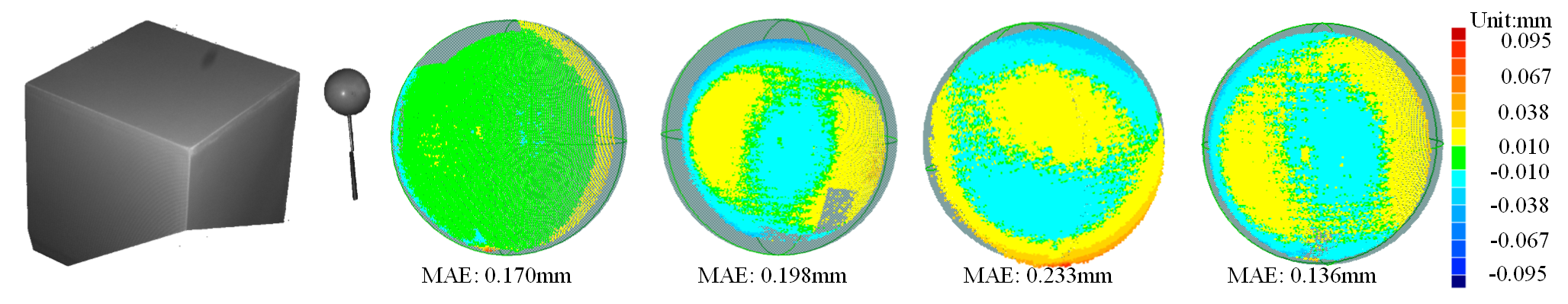

Since the orthogonality of faces of the C2 has been exploited inherently in our method, for a fairer comparison, we further quantitatively compared the reconstruction accuracy of a high-precision sphere. As shown in Fig. 7, the corner of a cuboid was used for calibration, and a 50.8-mm sphere was used for evaluation. Please note that the sphere has never been used for calibration. We use MAE and heatmaps of sphere-fitting errors to access the reconstruction accuracy. While the heatmap demonstrates the COLMAP with GT achieves balanced performance with more even error distribution across the sphere surface, our method reaches the lowest MAE among them, demonstrating a competitive performance.

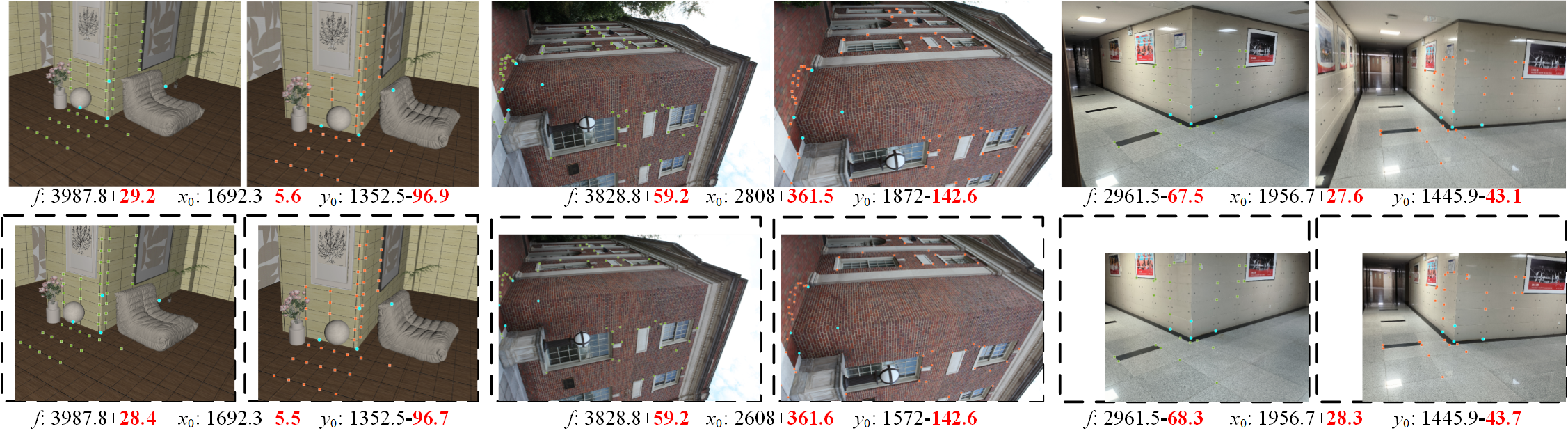

5.2 Extension to two-view SfM

Instead of limited in indoor scenes with a CPP, our method can be easily adapted to more general scenarios with only cameras, where we try to self-calibrate cameras in a two-view SfM problem. A constant but unknown camera across views was assumed. With simple modification on the objective in Eq. 13, see details in Supplementary material, the ill-posed problem is solved, as shown in the first row in Fig. 8. Furthermore, since additional constraints can be derived from the assumption of a constant camera, no prior about the camera principal point is required. This allows to estimate all camera intrinsics even from a pair of cropped images, as shown in the second row in Fig. 8. Surprisingly, our method achieved consistent accuracy on cropped images as the original ones, which demonstrates considerable stability and robustness to image cropping. It provides potential solution for reliable camera self-calibration in two-view SfM.

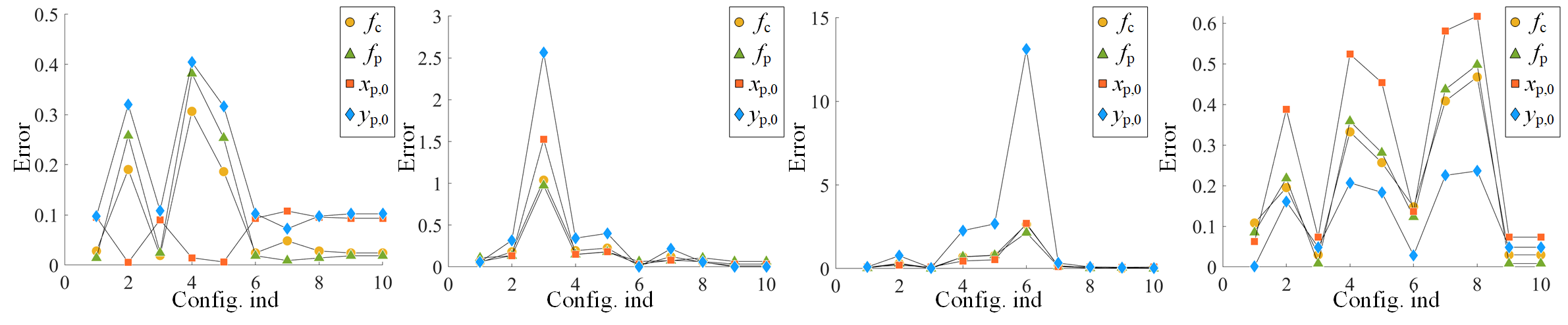

5.3 Impact of the optimization objective and density of matches

We further investigated the impact of different configurations of the optimization objective, to determine which one is more significant for the calibration. Ten configurations were evaluated, of which the first seven corresponded to , and the last three were , and in Eq. 13 and Eq. 14, respectively. As shown in Fig. 9 and Tab. 2, the last two configurations contribute dominantly to the calibration, which achieved the lowest errors across scenes. This indicates that the cycle loss is much more significant than . The sum of and , i.e. , achieved comprehensively best performance, reaching the top calibration accuracy.

| Config | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| Error/% | 6.7 | 25.4 | 41.8 | 46.9 | 48.2 | 133.8 | 19.6 | 16.5 | 4.4 | 4.3 |

We also investigated the impact of the number of matches. The original matches were downsampled by different rates, where the average number for the four scenes were reduced from an order of magnitude 9× to that of only 100. As shown in Tab. 3, our method performs consistently well with different downsampling rates, demonstrating robustness to the number of matches.

| Downsampl.rate | 1 | 10 | 20 | 100 | 200 | 300 | 500 | 100 |

|---|---|---|---|---|---|---|---|---|

| Error/% | 4.29 | 4.32 | 4.34 | 4.29 | 4.23 | 4.33 | 4.31 | 4.22 |

5.4 Degenerated configuration and limitation

There are still some limitations for the proposed method. On the one hand, our method fails in some degenerated configuration, for instance, when at least one of the faces of a C2 passes through the camera center. This is just the case where all points on a face are imaged to the same line. On the other hand, our method requires accurate matches of three segmented faces of a C2. To achieve this, we manually segment images and use structured light patterns to establish reliable and accurate correspondence across views. In the future work, we will further develop algorithms for automatically detecting faces of a C2 and their matches, to fully automate the calibration and reconstruction.

6 Conclusion

This paper proposes to use the C2, a common and simple structure in most daily indoor scenes, to solve the ill-posed two-view CPP self-calibration problem with varying intrinsics across views. The view geometry of a C2 is derived, from which sufficient constraints can be constructed. These constraints allow to simplify the complex multi-variable estimation problem of CPP calibration to a much simpler uni-variable searching one, resulting a reliable and accurate calibration and thus enabling indoor 3D reconstruction with an unknown CPP. Compared with both traditional and learning-based state-of-the-art methods, the proposed method has demonstrated significant improvement on both calibration and reconstruction accuracy. Additionally, the proposed method also demonstrates promising potential for similar tasks such as camera self-calibration in sparse-view SfM.

References

- [1] Achar, S., Bartels, J.R., Whittaker, W.L., Kutulakos, K.N., Narasimhan, S.G.: Epipolar time-of-flight imaging. ACM Transactions on Graphics (2017)

- [2] Agapito, L., Hayman, E., Reid, I.: Self-calibration of rotating and zooming cameras. International journal of computer vision 45, 107–127 (2001)

- [3] Agarwala, S., Jin, L., Rockwell, C., Fouhey, D.F.: Planeformers: From sparse view planes to 3d reconstruction. In: European Conference on Computer Vision. pp. 192–209. Springer (2022)

- [4] Barath, D., Toth, T., Hajder, L.: A minimal solution for two-view focal-length estimation using two affine correspondences. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 6003–6011 (2017)

- [5] Bougnoux, S.: From projective to euclidean space under any practical situation, a criticism of self-calibration. In: Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271). pp. 790–796. IEEE (1998)

- [6] Caprile, B., Torre, V.: Using vanishing points for camera calibration. International journal of computer vision 4(2), 127–139 (1990)

- [7] Chen, Z., Wang, C., Guo, Y.C., Zhang, S.H.: Structnerf: Neural radiance fields for indoor scenes with structural hints. IEEE Transactions on Pattern Analysis and Machine Intelligence (2023)

- [8] Coughlan, J., Yuille, A.L.: The manhattan world assumption: Regularities in scene statistics which enable bayesian inference. Advances in Neural Information Processing Systems 13 (2000)

- [9] Espuny, F.: A new linear method for camera self-calibration with planar motion. Journal of Mathematical Imaging and Vision 27(1), 81–88 (2007)

- [10] Faugeras, O.D., Luong, Q.T., Maybank, S.J.: Camera self-calibration: Theory and experiments. In: Computer Vision—ECCV’92: Second European Conference on Computer Vision Santa Margherita Ligure, Italy, May 19–22, 1992 Proceedings 2. pp. 321–334. Springer (1992)

- [11] Forsythe, G.E., et al.: Computer methods for mathematical computations. Prentice-hall (1977)

- [12] Furukawa, Y., Hernández, C., et al.: Multi-view stereo: A tutorial. Foundations and Trends® in Computer Graphics and Vision 9(1-2), 1–148 (2015)

- [13] Geng, J.: Structured-light 3d surface imaging: a tutorial. Advances in optics and photonics 3(2), 128–160 (2011)

- [14] Gordon, A., Li, H., Jonschkowski, R., Angelova, A.: Depth from videos in the wild: Unsupervised monocular depth learning from unknown cameras. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 8977–8986 (2019)

- [15] Gurdjos, P., Sturm, P.: Methods and geometry for plane-based self-calibration. In: 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003. Proceedings. vol. 1, pp. I–I. IEEE (2003)

- [16] Hagemann, A., Knorr, M., Stiller, C.: Deep geometry-aware camera self-calibration from video. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 3438–3448 (2023)

- [17] Hartley, R., Zisserman, A.: Multiple view geometry in computer vision. Cambridge university press (2003)

- [18] Hartley, R.I.: Self-calibration from two views (1994)

- [19] Herrera, D., Kannala, C.J., Heikkila, J.: Forget the checkerboard: Practical self-calibration using a planar scene. In: 2016 IEEE Winter Conference on Applications of Computer Vision (WACV). pp. 1–9. IEEE (2016)

- [20] Li, H.: A simple solution to the six-point two-view focal-length problem. In: Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, May 7-13, 2006, Proceedings, Part IV 9. pp. 200–213. Springer (2006)

- [21] Liao, K., Nie, L., Huang, S., Lin, C., Zhang, J., Zhao, Y., Gabbouj, M., Tao, D.: Deep learning for camera calibration and beyond: A survey. arXiv preprint arXiv:2303.10559 (2023)

- [22] Liu, C., Kim, K., Gu, J., Furukawa, Y., Kautz, J.: Planercnn: 3d plane detection and reconstruction from a single image. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 4450–4459 (2019)

- [23] Liu, Y., Zhang, H.: Camera auto-calibration from the steiner conic of the fundamental matrix. In: European Conference on Computer Vision. pp. 431–446. Springer (2022)

- [24] Lopez, M., Mari, R., Gargallo, P., Kuang, Y., Gonzalez-Jimenez, J., Haro, G.: Deep single image camera calibration with radial distortion. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 11817–11825 (2019)

- [25] Melanitis, N., Maragos, P.: A linear method for camera pair self-calibration. Computer Vision and Image Understanding 210, 103223 (2021)

- [26] Pollefeys, M., Koch, R., Gool, L.V.: Self-calibration and metric reconstruction inspite of varying and unknown intrinsic camera parameters. International journal of computer vision 32(1), 7–25 (1999)

- [27] Qian, Y., Furukawa, Y.: Learning pairwise inter-plane relations for piecewise planar reconstruction. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VII 16. pp. 330–345. Springer (2020)

- [28] Salvi, J., Fernandez, S., Pribanic, T., Llado, X.: A state of the art in structured light patterns for surface profilometry. Pattern recognition 43(8), 2666–2680 (2010)

- [29] Schonberger, J.L., Frahm, J.M.: Structure-from-motion revisited. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4104–4113 (2016)

- [30] Schönberger, J.L., Zheng, E., Frahm, J.M., Pollefeys, M.: Pixelwise view selection for unstructured multi-view stereo. In: Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part III 14. pp. 501–518. Springer (2016)

- [31] Shen, S.: Accurate multiple view 3d reconstruction using patch-based stereo for large-scale scenes. IEEE transactions on image processing 22(5), 1901–1914 (2013)

- [32] Sturm, P.: On focal length calibration from two views. In: Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001. vol. 2, pp. II–II. IEEE (2001)

- [33] Tan, B., Xue, N., Bai, S., Wu, T., Xia, G.S.: Planetr: Structure-guided transformers for 3d plane recovery. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 4186–4195 (2021)

- [34] Tan, B., Xue, N., Wu, T., Xia, G.S.: Nope-sac: Neural one-plane ransac for sparse-view planar 3d reconstruction. IEEE Transactions on Pattern Analysis and Machine Intelligence (2023)

- [35] Tsai, R.: A versatile camera calibration technique for high-accuracy 3d machine vision metrology using off-the-shelf tv cameras and lenses. IEEE Journal on Robotics and Automation 3(4), 323–344 (1987)

- [36] Wildenauer, H., Hanbury, A.: Robust camera self-calibration from monocular images of manhattan worlds. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition. pp. 2831–2838. IEEE (2012)

- [37] Yazdanpour, M., Fan, G., Sheng, W.: Manhattanfusion: Online dense reconstruction of indoor scenes from depth sequences. IEEE Transactions on Visualization and Computer Graphics 28(7), 2668–2681 (2020)

- [38] Zeinik-Manor, L., Irani, M.: Multiview constraints on homographies. IEEE Transactions on Pattern Analysis and Machine Intelligence 24(2), 214–223 (2002)

- [39] Zeller, C., Faugeras, O.: Camera self-calibration from video sequences: the Kruppa equations revisited. Ph.D. thesis, INRIA (1996)

- [40] Zhang, Z.: A flexible new technique for camera calibration. IEEE Transactions on pattern analysis and machine intelligence 22(11), 1330–1334 (2000)