Incremental Object Grounding Using Scene Graphs

Abstract.

Object grounding tasks aim to locate the target object in an image through verbal communications. Understanding human command is an important process needed for effective human-robot communication. However, this is challenging because human commands can be ambiguous and erroneous. This paper aims to disambiguate the human’s referring expressions by allowing the agent to ask relevant questions based on semantic data obtained from scene graphs. We test if our agent can use relations between objects from a scene graph to ask semantically relevant questions that can disambiguate the original user command. In this paper, we present Incremental Grounding using Scene Graphs (IGSG), a disambiguation model that uses semantic data from an image scene graph and linguistic structures from a language scene graph to ground objects based on human command. Compared to the baseline, IGSG shows promising results in complex real-world scenes where there are multiple identical target objects. IGSG can effectively disambiguate ambiguous or wrong referring expressions by asking disambiguating questions back to the user.

1. Introduction

In the context of human-robot interaction, object grounding is an important ability for understanding human instruction. In order for a robot to complete a task in response to a user’s command, such as retrieving an object, the user must provide a description of the object with respect to the object’s defining features and/or its relation to other surrounding objects. The object descriptions provided by the user are called referring expressions.

Human referring expressions can be difficult for robots to understand because such phrases are often ambiguous or contain errors (Doğan and Leite, 2021). Humans often utilize additional context or communication modalities, such as gestures or facial expressions, to resolve ambiguities (Holler and Beattie, 2003). However, today’s robots typically lack the ability to process such inputs.

In this research, we aim to develop a system that can achieve object grounding in a human-robot interaction scenario. Our main contribution, Incremental Grounding using Scene Graphs (IGSG), is a disambiguation model that can clarify ambiguous or wrong expressions by asking questions to the human. We use scene graphs generated from both the referring expression and the image to obtain all relational information of the objects in the scene. In parallel, we use an off-the-shelf language scene graph parser (Schuster et al., 2015) to convert natural language to a scene graph since the linguistic structure of a language scene graph couples well with the image scene graph data structure and allows the grounding process to be incremental, pruning unnecessary computation. Grounding is achieved by incrementally matching the scene graph generated from the human command to the edges of the image scene graph. If needed, disambiguating questions are generated base on the relational information from the image scene graph.

Prior work has explored the use of natural language referring expressions and object detectors for object grounding (Hatori et al., 2018)(Shridhar and Hsu, 2018). In this work, we contribute the first approach to leverage image scene graphs in this context, and demonstrate that the resulting semantic representation enables improved object disambiguation for complex scenes.

We evaluate our model by testing on a set of images that contain multiple identical objects using clear, ambiguous, and erroneous referring expressions. Experiments show that our model outperforms prior state-of-the-art (Shridhar and Hsu, 2018) on interactive visual grounding on the tested images.

Key contributions of our work include:

-

•

First work to utilize the linguistic structure of a language scene graph to process referring expressions.

-

•

First work to use semantic relations from scene graphs for interactive visual grounding.

-

•

First object grounding work to correct erroneous referring expressions from humans.

2. Related Work

In this section, we set the backbone of our work in scene graph generation by discussing relevant works on language scene graph parser and image scene graph generation. Then, we discuss previous works on object grounding and user command disambiguation to identify common problems and improvements implemented in our work.

2.1. Language Scene Graph

A scene graph is a data structure that provides a formalized way of representing the content of an image. Due to its conciseness and its ability to represent a wide range of content, it was used by Johnson et al. (Johnson et al., 2015) as a novel framework for image retrieval tasks where the authors used scene graphs as queries to retrieve semantically related images. The authors stated that a scene graph is superior to natural language because natural language requires a complex pipeline to handle problems such as co-reference and unstructured-to-structured tuples. Schuster et al. (Schuster et al., 2015) acknowledged the importance of the scene graph in image retrieval and presented a rule-based and classifier-based scene graph parser that converts scene descriptions into scene graphs. Our work utilizes the rule-based parser to generate language scene graphs from input referring expressions.

2.2. Image Scene Graph

Utilizing scene graphs in visual scenes enhance the understanding of an image much further than simple object detection. A visually grounded scene graph (Johnson et al., 2015)(Xu et al., 2017) of an image can capture detailed semantics from an image by modeling the relationships between objects. The ability of the image scene graph to extract rich semantic information from images is being realized in visual question answering (Teney et al., 2017)(Hildebrandt et al., 2020), image captioning (Yao et al., 2018)(Yang et al., 2019c), visual grounding (Yang et al., 2018), and image manipulation (Dhamo et al., 2020). While the promise of scene graphs is exciting, there has been difficulty extracting relational information efficiently and accurately. The naive approach of parsing through every possible relation triplets can be taxing with the increase of the number of objects in the scene. Yang et al. (Yang et al., 2018) attempts to resolve this problem by using a relation proposal network to prune irrelevant object-relation pairs and a graph convolutional network to capture contextual information between objects. Recently, a model proposed by Tang et al. (Tang et al., 2020) reduces the biased relations generated by traditional scene graph generation methods. (Zellers et al., 2018) Counterfactual causality of the biased model is used to identify bad biases, which are removed. This model is able to generate more detailed relationships between objects such as ”standing on” or ”holding” instead of simple relations like ”on”. We use the model from Tang et al. (Tang et al., 2020) to generate scene graphs from images.

2.3. Object Grounding

Object grounding is a task of locating an object referred by a natural language expression in an image. This referring expression includes the appearance of the referent object and relations to other objects in the image. Some approaches to tackle this task is to learn holistic representations of the objects in the image and the expression using neural networks (Zhuang et al., 2018)(Yang et al., 2019b)(Yang et al., 2019a). However, these works neglect linguistic structures that could be utilized to disambiguate vague expressions. Other works use a fixed linguistic structure such as, subject-location-relation (Hu et al., 2017) or subject-relation-object (Yu et al., 2018) triplets to ground an object which often fails in real world scenes which may need complex expressions. Yang et al. (Yang et al., 2020) uses neural modules to ground an object with the guidance of linguistic structure; namely the language scene graph extracted from a rule-based parser (Schuster et al., 2015). Despite all the progress, the limitations of these grounding methods are that they assume perfect expressions and do not take into account vague or erroneous referring expressions.

2.4. Command Disambiguation in Robotics

While visual grounding models (Schuster et al., 2015)(Yang et al., 2020) assume that the given referring expression is clear and lead to eventual grounding, the same cannot be said with real-world human-robot interaction scenarios. Grounding objects in an environment where multiple objects match the user command requires further interaction to disambiguate the given task. For example, in Figure 2, there are multiple cups in the image. A simple command to ”grab the cup” will not lead to immediate grounding. The robot will need more detailed instructions, such as, ”grab the green cup on the table” in order to achieve grounding.

Several works attempt to resolve ambiguities by asking clarifying questions back to the human (Shridhar and Hsu, 2018)(Doğan et al., 2020)(Hatori et al., 2018)(Magassouba et al., 2019). Li et al. (Li et al., 2016) modeled abstract spatial concepts into a probabilistic model where explicit hierarchical symbols are introduced. Sibirtseva et al. (Sibirtseva et al., 2018) compared mixed reality, augmented reality, and monitor as visualization modalities to disambiguate human instructions. Whitney et al. (Whitney et al., 2017) adds pointing gestures to further disambiguate user command. Other works ask questions based on the perspective of the robot relative to the human (Li et al., 2016)(Doğan et al., 2020) However, the results from these papers are generated in a carefully designed lab environment with limited variation of objects such as Legos (Sibirtseva et al., 2018) or blocks (Sibirtseva et al., 2018)(Paul et al., [n.d.]) and are restricted to tabletop (Shridhar and Hsu, 2018) or four-box (Hatori et al., 2018)(Magassouba et al., 2019) settings. Although those can be a straightforward baseline environment, it is far from a real-world environment with objects in various places.

Shridhar et al. (Shridhar and Hsu, 2018) comes close to our goal by achieving interactive visual grounding using a real robot in a tabletop environment. However, their works are limited to rather simple referring expressions heavily based on positional relations such as ”on” and ”next to”. Also, when there are multiple identical objects in in the scene, their model chooses to point at the objects one by one, instead of asking further questions.

Furthermore, all of the works above fail to resolve wrong referring expressions. For example, going back to Figure 2, ”grab the green banana” will fail since there is no banana in the scene. While (Marge and Rudnicky, 2019) identifies and categorizes these as ”impossible to execute” commands, there is no attempt in correcting it. Our proposed model is applicable in real-world scenes with diverse objects and require few interactions. Our model is also able to handle vague or wrong referring expressions.

model structure caption

3. Method

Figure 1 represents the overall architecture of IGSG. Given an image and a referring expression of the target object in the image, IGSG grounds the target object and produces a bounding box around it. If needed, it can ask questions to clarify an ambiguous or wrong expression. This section provides a detailed explanation of the individual parts of IGSG.

3.1. Overview

IGSG achieves grounding using information obtained from the image and language scene graphs. Referring expression from a user is taken as an input for the language scene graph parser and an RGB image is taken as an input for the image scene graph generator to produce scene graphs with nodes as objects and edges as relation between the objects. Figure 1 represents the overall architecture of IGSG. The two scene graphs are taken as input for the reasoning module. The reasoning module uses incremental matching to gather image scene graph edges that are similar to the language scene graph edges. The edge ”candidates” generated from the Reasoning module is then carried over to the Ask module. The ask module asks questions based on the candidates. The human is asked to either select one of the options given or confirm a given relation. In case the candidate edges are identical, the ask module can ask distinctive questions for each candidate. Finally, object grounding is achieved based on the interaction between the human and the ask module.

3.2. Language Scene Graph Generation

Given a referring expression, we parse the command into a language scene graph using an off-the-shelf scene graph parser (Schuster et al., 2015). The output language scene graph renders objects and their attributes as nodes and relations between the objects as edges. In this paper, we consider an edge from a scene graph as a relation ”triplet”: two object nodes and the relation connecting the two objects. A triplet consists of (subject, predicate, object), where the subject and object are nodes and the predicate is a relation. For example, ”cup on table” would become (cup, on, table) in triplet form.

Given a referring expression , we define the language scene graph representation of the expression as a set of edges where . Each edge in consists of an subject node, the relation between the subject and object node, and the object node . All nodes in the set of nodes have the corresponding attribute of the object and its name: . Therefore, a node in set of nodes will be .

3.3. Image Scene Graph Generation

We use the image scene graph generator by Tang et.al (Tang et al., 2020) to extract scene graphs from images. This generator tries to avoid bias in predicate prediction stemming from skewed training data. Compared to other scene graph generators, this model can generate various unbiased predicates instead of common ones such as ”on” and ”has”.

Given an image , we define the image scene graph of the image as where is the set of edges. Each edge where contains the subject predicate and object . The subject and object are from the set of nodes: where each node contains the object name and object attribute .

3.4. Reasoning Module

The reasoning module takes each edge from the language scene graph and every edge from the image scene graph as input. It performs incremental matching to match the components from the language edge to the edges from the image. The goal of the reasoning module is to prune edges from the image scene graph and leave one or more ”candidate” edges that are similar to the human command from the language scene graph.

Algorithm 1 explains the edge matching process. An edge from the language scene graph is compared with the set of image scene graph edges . The overall incremental matching order is . This means that if matching the edges by its object is not enough, the module moves on to matching the subject, then the predicate, and attribute. The , , , and functions in Algorithm 1 return edges from the image scene graph that contain the object/subject/predicate/attribute from . For example, if ”cat on the table”, will return edges from that contain the object ”table”, such as ”white plate on the table”, or ”lamp next to the table”.

Algorithm 2 shows the function. It takes as input an edge from the language scene graph and multiple edges from the image scene graph. Notice that for , we use the Sentence-BERT (Reimers and Gurevych, 2019) sentence embedding model to compare the cosine similarity of the object word pair. A pair of words that surpass the similarity score of 0.8 is considered matching.

There exist three cases in edge matching: one match, multiple matches, and no match. When there are one or more matches, the reasoning module moves on to match the next component in the matching sequence. When there are no matching edges, the module stops and the edge candidates from the previous match are used to ask questions. One exception is when there are no matching edges in the object match sequence. In this case we move on to match the .

Through the reasoning process, irrelevant edges from the image scene graph are pruned and only candidate edges that match or are similar to the human command remain. When there are multiple edges in the candidate list, they are asked back to the human in order to disambiguate the initial command. The function in Algorithm 1 represents this process. This is further explained in the next section.

3.5. Ask Module

A critical step in disambiguation is validating the possible groundings through asking. This means that the focus should expand beyond improving the agent’s ability to understand humans better, to allowing humans to understand the agent better. However, prior solutions (Shridhar and Hsu, 2018)(Hatori et al., 2018) that simply list the objects are not sufficient or can be more confusing for humans to reply accurately. For example, if the command is to ”grab the white plate” in a scene where three white plates exist, there should be a way to differentiate between them so that the human can understand which white plate is where. We use relations with the surrounding objects from the scene graph to pick different relations such as ”white plate near the black cat”, ”white plate next to the lamp”, ”white plate next to the silver fork”. We do this by extracting the lowest occurring relation from each object node, which is illustrated in Algorithm 3. Given that we have multiple identical candidate edges , we look at all image scene graph edges connected to each subject node in the candidate edge. Each candidate subject will have a list of edges from image scene graph. We count how many times an image scene graph edge has occurred for all candidates. Then, each candidate picks an edge with minimum number of overlap.

4. Experiments

We evaluate IGSG against INGRESS (Shridhar and Hsu, 2018), the leading prior interactive human-robot visual grounding tool. INGRESS uses LSTMs (Hochreiter and Schmidhuber, 1997) to learn the holistic representations of the objects in a scene and follows an iterative rule to ask disambiguating questions.

We used a set of images from the Visual Genome dataset (Krishna et al., 2017) to perform our evaluation due to physical access restrictions resulting from COVID-19 closures at the time of the research. Also, we conducted a user survey to collect referring expressions for target objects in the images. Using the answers from the user survey, we generated a set of referring expressions to evaluate our model and INGRESS. We evaluated IGSG using two metrics: i) number of interactions, and ii) success rate.

example image

4.1. Settings and Data

We selected fifteen images from the Visual Genome (Krishna et al., 2017) dataset to be used for object grounding. All selected images contain some components of ambiguity, meaning, multiple objects of the same class exist in the scene. These objects, such as cups or plates, may be of different color of shape, and are placed in the same or different places. For example, in Figure 2, there are three cups in the scene. Simply asking ”the cup” or ”the cup on the table” is not enough to achieve grounding. There are an average of 9.66 objects per image, and the max number of identical objects in an image is 6. One of the objects in the scene is set as the ”target object”. The user has to give commands to the model to ground the target object.

4.2. Preliminary Experiment

We conducted a user experiment in order to collect referring expressions from people without a robotics background in an actual human-robot interaction scenario. The goals of the experiment were to i) observe how people structure their commands in a scene with potential ambiguities, and ii) use the collected responses in the disambiguation experiment to avoid bias. The user experiment was done through Amazon Mechanical Turk. Figure 3 demonstrates the question format seen by the test participant. Given an image, the target object is marked with a red box. The test participant is asked to provide two different commands in order for a robot to grab the target object. For the image in Figure 3, an example pair of commands would be ”(grab the) cup next to the remote” and ”cup next to the green cup”. A total of 150 people participated in the experiment, and after eliminating irrelevant answers a total of 217 answers were collected.

The collected commands were divided into three categories: clear, vague, and not solvable by scene graphs. The first category contains commands that are clear meaning there exists only one object that fit the referring expression of the command. These commands does not require further disambiguation. The second category contains ambiguous or wrong commands that need to be clarified by asking questions. We consider a command is ambiguous if there exists more than one object that fit the referring expression of the command. The third category contains commands that are not solvable with semantic scene graphs. Commands in this category contain positional attributes like furthest, top, and third left. Since image scene graphs extract relations between a pair of objects, those that require comparison between three or more objects cannot be generated. Although such positional attributes does not exist in scene graphs, given a correct subject, our reasoning and asking module can disambiguate and ground the target. Table 1 shows the distribution of categories of the collected commands.

question format

4.3. Disambiguation Evaluation

A total of 15 images were used for disambiguation evaluation. Grounding commands were generated based on the responses collected from the preliminary experiment in section 4.2. Commands from all three categories (clear, vague, not solvable with semantic scene graphs) were used. In addition, complex commands that contain more than one edge were also used. An example of a complex command is ”Black bag in the car next to the red bag”. This command contains two edges ”black bag in the car” and ”black bag next to the red bag”. An average of 6.8 commands were used per image, and a total of 103 commands were used for evaluation.

For each evaluation, a ground truth target object is set and the grounding command is fed into the disambiguation model. When the model asks questions, a relevant answer is provided and the number of interactions is recorded. Grounding accuracy is assessed based on the final grounded object from the model. Grounding is successful when the grounded object is the target object.

Both INGRESS and our IGSG use Faster-RCNN (Ren et al., 2015) for object detection. During the evaluation process, we noticed that the object detection pipeline fails to detect objects for some images. This behavior was especially noticeable on images with cluttered objects. Thus, we divide images into two batches based on the object detection results. Batch 1 contains images where Faster-RCNN successfully detects more than half of objects in the image. Batch 2 contains images where Faster-RCNN detects less than half of the objects in the image. Of the 15 images used, 10 images were in batch 1. We conduct disambiguation on batches and report results separately.

| Category | count |

|---|---|

| clear | 83 |

| vague | 51 |

| not solvable | 83 |

4.4. Results

The experiment results are reported in Tables 2 and 3. As evaluation metrics we use i) Number of interactions, and ii) Success rate. The number of interactions is measured by the number of times the agent asks questions to the human. If the model achieves grounding immediately after the initial command, the number of interaction is zero. The success rate is assessed based on the final grounding result after interactions. IGSG is compared with INGRESS (Shridhar and Hsu, 2018) on the two metrics. We report results separately on the two batches of images.

Table 2 illustrates experiment results on batch 1 images. For batch 1, the object detector gives correct bounding boxes and labels for most of the objects in the image. This allows the image scene graph generator to generate more solid semantic data for the model to utilize. The difference in access to this solid semantic data results in a significantly higher success rate compared to the baseline.

Table 3 illustrates the case where the object detector is not robust and fails to detect more than half of the objects in the image. With additional semantic data from the image scene graph generator, IGSG manages to improve the success rate compared to the baseline. Overall, the results show that IGSG outperforms the baseline in success rate with slight difference in average number of interactions regardless of the performance of the object detector. This shows that our model is more suited for real-world settings with complex scenes.

In Table 4, to further analyze the success rate of our model, we divide the referring expressions into three categories used in the preliminary experiment section, and calculate the success rate of each category. This is done on batch 1 to disregard the effect of failing object detection as much as possible. IGSG significantly outperforms the baseline on all three categories, doubling the success rate when vague user commands were given. It is also notable that even though the image scene graph cannot fully process commands from the ”not solvable” category, IGSG is able to achieve grounding in 80 percent of those referring expressions by interacting with the user.

Figure 4 displays the distribution of the number of interactions for INGRESS and IGSG. Notice that IGSG has a high concentration of one interaction; the agent asks disambiguating questions to the human once. This is mainly because the asking module asks validating questions when there is a slight uncertainty in the reasoning module. For example, in the case of Figure 2, if the provided referring expression is ”green cup under the table” and when the only candidate edge is ”green cup on the table”, the asking module still asks a question to the user to verify if the intended object is the green cup on the table. INGRESS tends to move straight to grounding without validation, which frequently lead to incorrect groundings. This behavior is reflected in the high number of zero interactions for INGRESS and its low success rate. Our model also rarely asks more than one question, and moves directly to grounding only when the referring expression exactly matches one edge from the image scene graph.

| Metrics | INGRESS | OURS |

|---|---|---|

| Average Interactions | 0.809 | 0.89 |

| Success Rate | 0.441 | 0.779 |

| Metrics | INGRESS | OURS |

|---|---|---|

| Average Interactions | 0.847 | 0.8 |

| Success Rate | 0.176 | 0.314 |

| Category | INGRESS | OURS |

|---|---|---|

| clear | 0.432 | 0.757 |

| vague | 0.423 | 0.885 |

| not solvable | 0.6 | 0.8 |

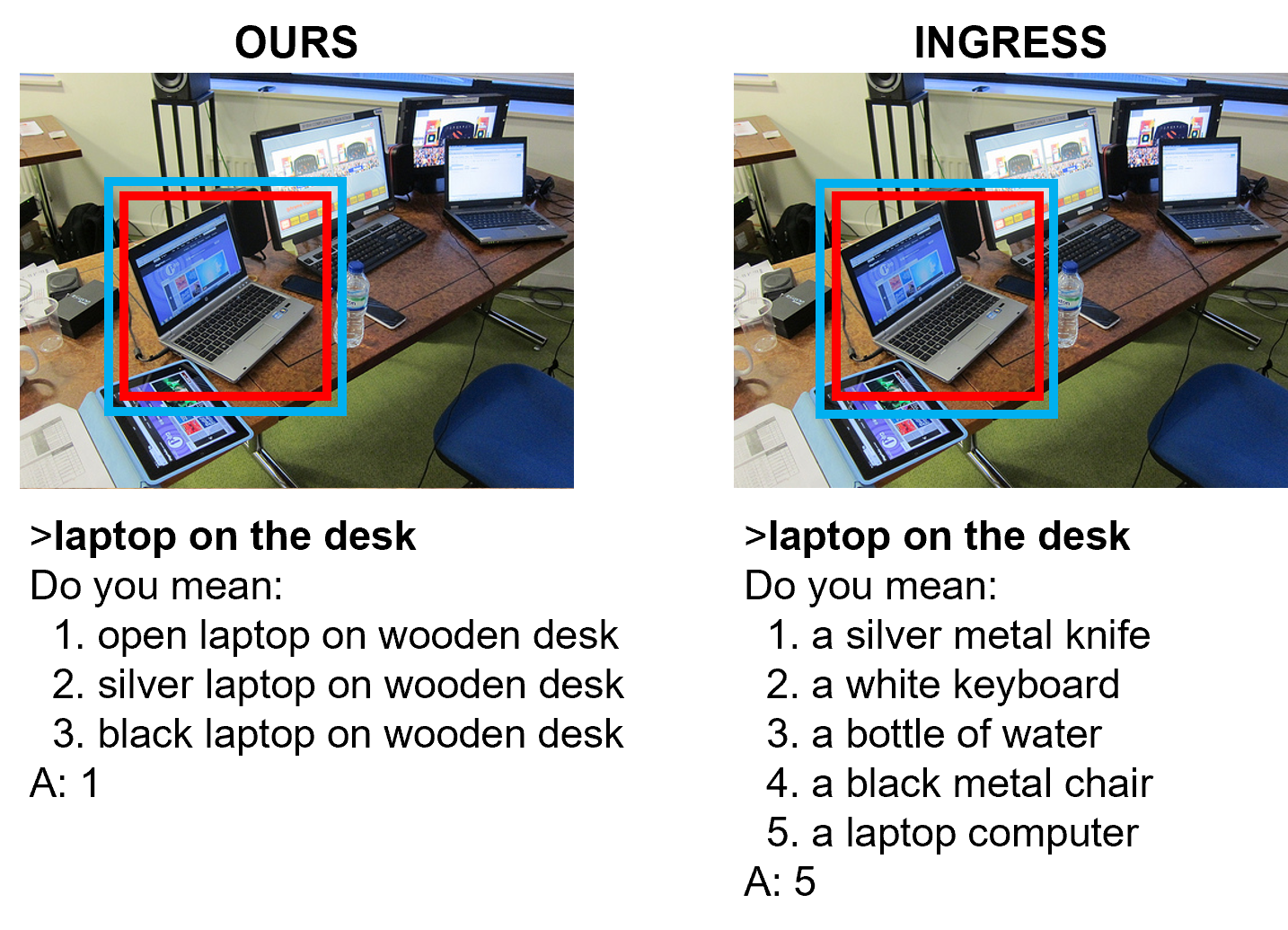

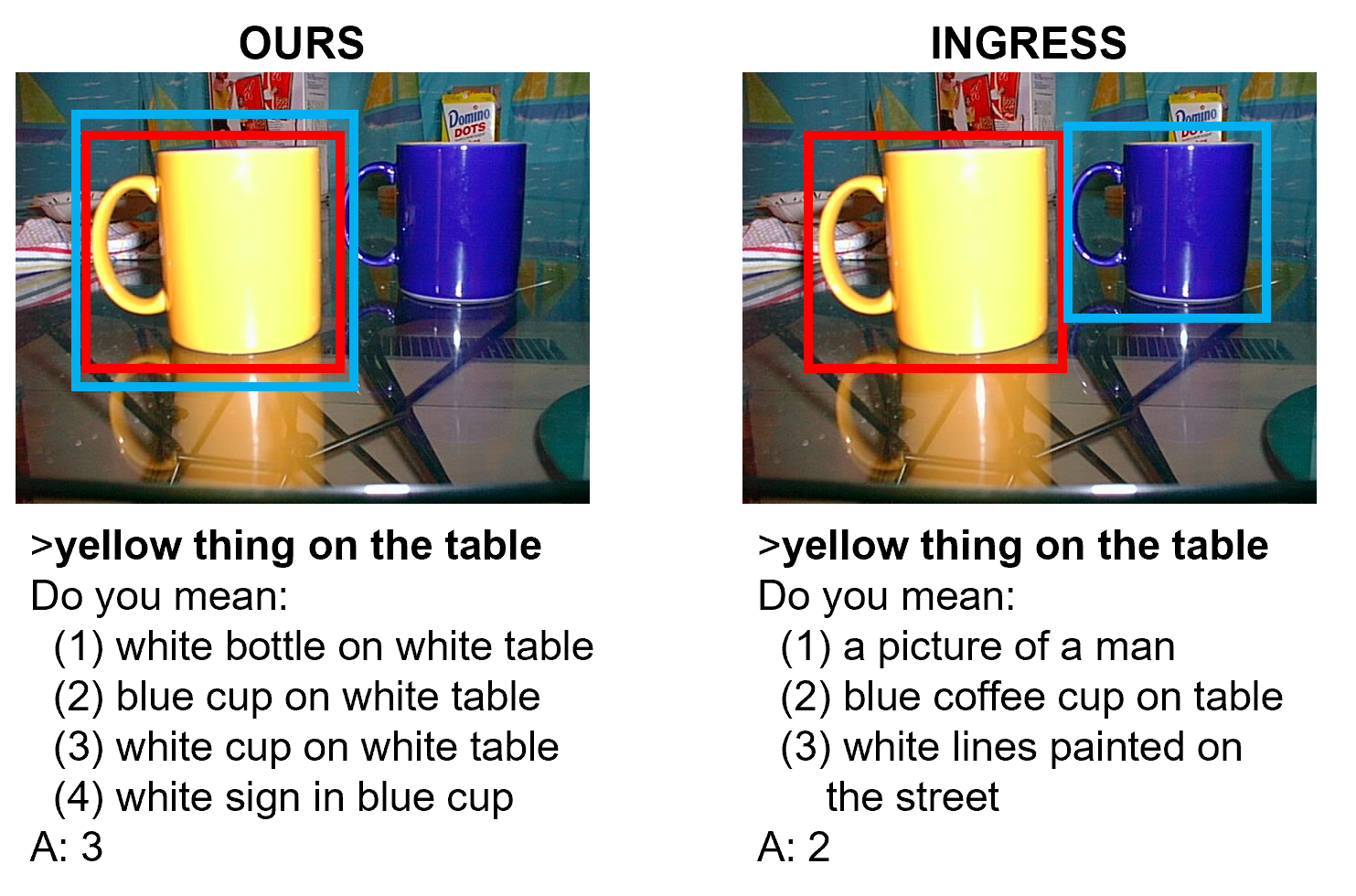

4.5. Examples

Figure 5 shows some examples of the grounding results. The object inside the red box indicates the ground truth target object, and the object inside the blue box is the final grounded object. Figure 5(a) represents a case where IGSG achieves direct grounding. Since there is only one cup on the box and the language referring expression matches one edge from the image scene graph, we can achieve grounding right away without asking further questions. However, INGRESS fails to identify the only cup on the box and has to go through one iteration of interaction to achieve grounding.

The referring expression used in Figure 5(b) cannot be solved with a semantic scene graph, since the image scene graph cannot identify the positional information ”top”. However, IGSG can still achieve grounding by using the subject ”fruit” and asking questions based on it. Notice the two questions asked (the object detection layer fails to detect the third fruit on the far right) contain distinctive relations of the two fruits. Questions asked by INGRESS, on the other hand, are not very useful since the human cannot distinguish the two ”red fruit”. In the case of Figure 5(c), INGRESS asks questions that are irrelevant to the subject (laptop) from the referring expression. Only one of the questions is about the laptop. It also does not give any information about the relation of the objects.

The referring expression in Figure 5(d) contains two edges: ”boy wearing black shirt” and ”boy inside the boat”. IGSG asks questions for each edge. Since the ”young boy” selected from the first and second iterations point to the same object, it achieves grounding after two interactions. INGRESS achieves direct grounding but fails to ground to the right object.

Figure 6 contains an experiment with a wrong referring expression. The input expression ”yellow thing on the table” does contain information about the subject attribute (yellow) and its position, (on the table) but the subject ”thing” does not exist in the image. While INGRESS fails to ask the edge containing the yellow cup, IGSG does and achieves grounding.

5. Discussion

Although the experiment results show promising results, IGSG has some limitations. Mainly, false or failed predictions from the Faster-RCNN object detector and the scene graph generator results in a corrupted image scene graph. This prevents IGSG from achieving accurate grounding and asking relevant disambiguating questions. With an ”ideal” image scene graph generated for the images used for evaluation, IGSG can achieve 0.68 average number of interactions and a success rate of 91.17 percent. Second, the robustness of the language scene graph parser is limited due to its rule-based approach. Since (Schuster et al., 2015) was first proposed, there has been recent advances in natural language processing with the appearance of LSTMs (Hochreiter and Schmidhuber, 1997) and transformers (Vaswani et al., 2017). We believe a data-driven learning based approach that encompasses the recent advancements of natural language processing, can further increase the robustness and accuracy of the language scene graph parser.

6. Conclusion

In this work we presented IGSG, an incremental object grounding model using scene graphs. IGSG achieves grounding by incrementally matching the language scene graph generated from the human’s referring expression to the image scene graph created from the input image. When the referring expression is ambiguous or wrong, IGSG can ask disambiguating questions to interactively reach grounding. Our model outperformed INGRESS (Shridhar and Hsu, 2018) in a visual object grounding experiment both in the number of interactions and success rate. Through this model, we presented a new perspective in object grounding where we acknowledge that humans can be ambiguous and can make mistakes when giving commands to robots. Although existing limitations of scene graph generation models prevent us from creating a perfect grounding model, we hope this is a right step in the direction of using semantic information from scene graphs for grounding and disambiguation.

References

- (1)

- Dhamo et al. (2020) Helisa Dhamo, Azade Farshad, Iro Laina, Nassir Navab, Gregory D Hager, Federico Tombari, and Christian Rupprecht. 2020. Semantic Image Manipulation Using Scene Graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5213–5222.

- Doğan et al. (2020) Fethiye Irmak Doğan, Sarah Gillet, Elizabeth J Carter, and Iolanda Leite. 2020. The impact of adding perspective-taking to spatial referencing during human–robot interaction. Robotics and Autonomous Systems 134 (2020), 103654.

- Doğan and Leite (2021) Fethiye Irmak Doğan and Iolanda Leite. 2021. Open Challenges on Generating Referring Expressions for Human-Robot Interaction. arXiv preprint arXiv:2104.09193 (2021).

- Hatori et al. (2018) Jun Hatori, Yuta Kikuchi, Sosuke Kobayashi, Kuniyuki Takahashi, Yuta Tsuboi, Yuya Unno, Wilson Ko, and Jethro Tan. 2018. Interactively picking real-world objects with unconstrained spoken language instructions. In 2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 3774–3781.

- Hildebrandt et al. (2020) Marcel Hildebrandt, Hang Li, Rajat Koner, Volker Tresp, and Stephan Günnemann. 2020. Scene Graph Reasoning for Visual Question Answering. arXiv preprint arXiv:2007.01072 (2020).

- Hochreiter and Schmidhuber (1997) Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural computation 9, 8 (1997), 1735–1780.

- Holler and Beattie (2003) Judith Holler and Geoffrey Beattie. 2003. Pragmatic aspects of representational gestures: Do speakers use them to clarify verbal ambiguity for the listener? Gesture 3, 2 (2003), 127–154.

- Hu et al. (2017) Ronghang Hu, Marcus Rohrbach, Jacob Andreas, Trevor Darrell, and Kate Saenko. 2017. Modeling relationships in referential expressions with compositional modular networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1115–1124.

- Johnson et al. (2015) Justin Johnson, Ranjay Krishna, Michael Stark, Li-Jia Li, David Shamma, Michael Bernstein, and Li Fei-Fei. 2015. Image retrieval using scene graphs. In Proceedings of the IEEE conference on computer vision and pattern recognition. 3668–3678.

- Krishna et al. (2017) Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A Shamma, et al. 2017. Visual genome: Connecting language and vision using crowdsourced dense image annotations. International journal of computer vision 123, 1 (2017), 32–73.

- Li et al. (2016) Shen Li, Rosario Scalise, Henny Admoni, Stephanie Rosenthal, and Siddhartha S Srinivasa. 2016. Spatial references and perspective in natural language instructions for collaborative manipulation. In 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN). IEEE, 44–51.

- Magassouba et al. (2019) Aly Magassouba, Komei Sugiura, Anh Trinh Quoc, and Hisashi Kawai. 2019. Understanding natural language instructions for fetching daily objects using GAN-based multimodal target–source classification. IEEE Robotics and Automation Letters 4, 4 (2019), 3884–3891.

- Marge and Rudnicky (2019) Matthew Marge and Alexander I Rudnicky. 2019. Miscommunication detection and recovery in situated human–robot dialogue. ACM Transactions on Interactive Intelligent Systems (TiiS) 9, 1 (2019), 1–40.

- Paul et al. ([n.d.]) Rohan Paul, Jacob Arkin, Nicholas Roy, and Thomas M Howard. [n.d.]. Grounding Abstract Spatial Concepts for Language Interaction with Robots.

- Reimers and Gurevych (2019) Nils Reimers and Iryna Gurevych. 2019. Sentence-bert: Sentence embeddings using siamese bert-networks. arXiv preprint arXiv:1908.10084 (2019).

- Ren et al. (2015) Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. 2015. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv preprint arXiv:1506.01497 (2015).

- Schuster et al. (2015) Sebastian Schuster, Ranjay Krishna, Angel Chang, Li Fei-Fei, and Christopher D Manning. 2015. Generating semantically precise scene graphs from textual descriptions for improved image retrieval. In Proceedings of the fourth workshop on vision and language. 70–80.

- Shridhar and Hsu (2018) Mohit Shridhar and David Hsu. 2018. Interactive visual grounding of referring expressions for human-robot interaction. arXiv preprint arXiv:1806.03831 (2018).

- Sibirtseva et al. (2018) Elena Sibirtseva, Dimosthenis Kontogiorgos, Olov Nykvist, Hakan Karaoguz, Iolanda Leite, Joakim Gustafson, and Danica Kragic. 2018. A comparison of visualisation methods for disambiguating verbal requests in human-robot interaction. In 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN). IEEE, 43–50.

- Tang et al. (2020) Kaihua Tang, Yulei Niu, Jianqiang Huang, Jiaxin Shi, and Hanwang Zhang. 2020. Unbiased scene graph generation from biased training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3716–3725.

- Teney et al. (2017) Damien Teney, Lingqiao Liu, and Anton van Den Hengel. 2017. Graph-structured representations for visual question answering. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1–9.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. arXiv preprint arXiv:1706.03762 (2017).

- Whitney et al. (2017) David Whitney, Eric Rosen, James MacGlashan, Lawson LS Wong, and Stefanie Tellex. 2017. Reducing errors in object-fetching interactions through social feedback. In 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 1006–1013.

- Xu et al. (2017) Danfei Xu, Yuke Zhu, Christopher B Choy, and Li Fei-Fei. 2017. Scene graph generation by iterative message passing. In Proceedings of the IEEE conference on computer vision and pattern recognition. 5410–5419.

- Yang et al. (2018) Jianwei Yang, Jiasen Lu, Stefan Lee, Dhruv Batra, and Devi Parikh. 2018. Graph r-cnn for scene graph generation. In Proceedings of the European conference on computer vision (ECCV). 670–685.

- Yang et al. (2019a) Sibei Yang, Guanbin Li, and Yizhou Yu. 2019a. Cross-modal relationship inference for grounding referring expressions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4145–4154.

- Yang et al. (2019b) Sibei Yang, Guanbin Li, and Yizhou Yu. 2019b. Relationship-embedded representation learning for grounding referring expressions. arXiv preprint arXiv:1906.04464 (2019).

- Yang et al. (2020) Sibei Yang, Guanbin Li, and Yizhou Yu. 2020. Graph-structured referring expression reasoning in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9952–9961.

- Yang et al. (2019c) Xu Yang, Kaihua Tang, Hanwang Zhang, and Jianfei Cai. 2019c. Auto-encoding scene graphs for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10685–10694.

- Yao et al. (2018) Ting Yao, Yingwei Pan, Yehao Li, and Tao Mei. 2018. Exploring visual relationship for image captioning. In Proceedings of the European conference on computer vision (ECCV). 684–699.

- Yu et al. (2018) Licheng Yu, Zhe Lin, Xiaohui Shen, Jimei Yang, Xin Lu, Mohit Bansal, and Tamara L Berg. 2018. Mattnet: Modular attention network for referring expression comprehension. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1307–1315.

- Zellers et al. (2018) Rowan Zellers, Mark Yatskar, Sam Thomson, and Yejin Choi. 2018. Neural motifs: Scene graph parsing with global context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5831–5840.

- Zhuang et al. (2018) Bohan Zhuang, Qi Wu, Chunhua Shen, Ian Reid, and Anton Van Den Hengel. 2018. Parallel attention: A unified framework for visual object discovery through dialogs and queries. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4252–4261.