In Defense and Revival of Bayesian Filtering for Thermal Infrared Object Tracking

Abstract

Deep learning-based methods monopolize the latest research in the field of thermal infrared (TIR) object tracking. However, relying solely on deep learning models to obtain better tracking results requires carefully selecting feature information that is beneficial to representing the target object and designing a reasonable template update strategy, which undoubtedly increases the difficulty of model design. Thus, recent TIR tracking methods face many challenges in complex scenarios. This paper introduces a novel Deep Bayesian Filtering (DBF) method to enhance TIR tracking in these challenging situations. DBF is distinctive in its dual-model structure: the system and observation models. The system model leverages motion data to estimate the potential positions of the target object based on two-dimensional Brownian motion, thus generating a prior probability. Following this, the observation model comes into play upon capturing the TIR image. It serves as a classifier and employs infrared information to ascertain the likelihood of these estimated positions, creating a likelihood probability. According to the guidance of the two models, the position of the target object can be determined, and the template can be dynamically updated. Experimental analysis across several benchmark datasets reveals that DBF achieves competitive performance, surpassing most existing TIR tracking methods in complex scenarios.

Index Terms:

Thermal infrared tracking, Bayesian filtering, deep learning, information fusion.I Introduction

Thermal infrared (TIR) object tracking, a key issue in computer vision, has extensive applications in fields like human-computer interaction, video surveillance, unmanned vehicles, and motion analysis. This process entails using TIR cameras to estimating the position and size of a specified target object [1, 2]. Unlike visible light cameras that capture light reflection, TIR cameras detect infrared radiation. Since all objects above absolute zero (-273.15 ∘C or -459.67 ∘F) emit infrared radiation, TIR imaging proves effective in complete darkness or challenging weather [3, 4]. The advantages of TIR tracking include the ability to detect target objects in low-visibility conditions such as fog, smoke, or darkness, where visible light cameras might be ineffective. It also excels in identifying target objects with similar temperatures to their surroundings, a task difficult for other visual tracking methods [5].

Over the past decade, deep learning, particularly convolutional neural networks (CNNs), has emerged as a foundational element for feature extraction in image and video analysis, thereby improving a variety of computer vision tasks [6, 7]. Among the diverse range of network architectures, Siamese networks have gained traction for their effectiveness in visual tracking [8, 9, 10, 11, 12]. These networks function by matching a template of the target object against various candidate regions in subsequent frames, achieving efficient and robust performance [13]. Despite the advancements in deep learning for TIR tracking, challenges persist in certain complex scenarios [14, 15], such as deformation (DEF), scale variation (SV), out-of-view (OV), thermal crossover (TC), motion blur (MB), and background clutter (BC). The constantly changing appearance and shape of the target object necessitate ongoing updates to the template [16]. There are two principal strategies for this: (1) constant use the target object in the first frame as the template [17, 18], and (2) continuous updating of the template with each new frame [19, 20, 21]. Nevertheless, these strategies can be problematic in intricate scenarios. The first strategy struggles with DEF, SV, and OV, as the unaltered template cannot adapt to the target object’s evolving appearance and size, leading to tracking failures. The second strategy, while updating continuously, can be adversely affected by background distractions in cases of TC, MB, and BC, which results in error accumulation and tracking drift.

Recent developments in TIR tracking have largely focused on mitigating the limitations of existing strategies by either updating the template sporadically over several frames or adjusting the learning rate to partially update the template for each frame [22, 23]. This strategy, however, represents a compromise, often resulting in suboptimal performance in complex scenarios. The underlying issue, as we argue, is the exclusive reliance on a single type of data: infrared information is not sufficient on its own for effective TIR tracking in such contexts. The major developmental thrust in existing methods has been towards continuously enhancing the quality of critical representations, with the expectation that the template would become more adaptable to the diverse changes in the target object. Nevertheless, this line of approach is not adequate to fundamentally tackle the complexities of TIR tracking in challenging scenarios [24]. To improve outcomes in these difficult situations, we contend, it’s vital to integrate motion data that is independent of infrared information. By incorporating additional motion data, the tracker is better equipped to estimate the possible position of the target object, aiding the infrared information in effectively differentiating it from the background. For instance, in scenarios with BC, using motion data to forecast the target position can lead to more conservative updates to the template, thereby minimizing tracking errors. While some pioneering methods have incorporated optical flow as motion data [25], merging different types of features often requires elaborate network architectures and extensive training on larger datasets. This necessitates a more adaptive and holistic approach that skillfully combines the strengths of deep learning with additional data types.

Bayesian filtering, distinguished for its ability to proficiently handle uncertainty and integrate different data types, offers an effective solution to the previously outlined challenges [26, 27]. Its effectiveness extends far beyond theoretical constructs, as evidenced by its successful implementation in multiple domains. In autonomous vehicle navigation, the use of Bayesian filters has proven essential for accurately determining vehicle positions and movements under uncertain conditions [28, 29]. Similarly, in robotics, Bayesian filters have significantly improved the ability of robots to navigate and interact in changing environments, thus enhancing their decision-making skills [30, 31]. In the field of signal processing, Bayesian methods have made considerable contributions, notably in noise reduction and signal improvement [32, 33]. These diverse applications not only attest to the robustness and flexibility of Bayesian filtering but also underscore its potential to significantly refine TIR object tracking. By integrating Bayesian filtering, it becomes possible to provide motion information and combine it with infrared data for a more sophisticated and probabilistic analysis of the target position and movements, effectively overcoming the critical limitations of deep learning approaches in TIR tracking, especially in situations where infrared data is ambiguous or insufficient.

We believe incorporating Bayesian filtering into deep learning-based methods for TIR tracking yields two primary benefits. First, it enables the inclusion of motion data independent of infrared information, significantly enhancing the tracker’s capacity to anticipate and discriminate to fast changes in the target position or appearance. This aspect is vital for ensuring tracking precision in scenarios where the infrared information alone may not suffice for accurate differentiation [34]. Second, Bayesian filtering introduces a dynamic model updating strategy, which often lacking in conventional deep learning techniques. Rather than relying on static or indiscriminate updating strategies, Bayesian filtering applies a nuanced approach, adjusting the model based on calculated probabilistic confidence [35]. This method of adaptive updating greatly mitigates the risk of tracking drift and error accumulation, especially in scenarios characterized by intricate thermal behavior. Therefore, the integration of Bayesian filtering not only complements but also significantly enhances the performance of deep learning-based TIR tracking methods, fostering the development of more efficient and effective tracking technologies for a range of challenging situations.

Informed by our earlier analysis, we present a novel framework called Deep Bayesian Filtering (DBF), aimed at enhancing TIR tracking in complex scenarios through the efficient combination of motion and infrared information. DBF comprises two autonomous models: the system model and the observation model. The system model operates prior to the acquisition of the TIR image, leveraging motion data of the target object to estimate its possible positions (generating a prior probability), and is developed based on two-dimensional Brownian motion [36]. After the TIR image is captured, the observation model uses infrared information to determine the likelihood (generating likelihood probability) of the possible position, built on a deep learning-based tracking paradigm [37]. These models converge using the Bayesian formula, which combines the prior probability distribution from the system model with the likelihood probability distribution from the observation model, culminating in the posterior probability distribution of the target position. The peak of this distribution serves as the estimated position. Experimental results on several benchmark datasets have shown that DBF obtains competitive performance against most existing TIR tracking methods in complex scenarios. The significant contributions of this study can be outlined as follows:

-

•

We introduce Deep Bayesian Filtering, an innovative framework designed to merge motion and infrared information for enhanced TIR object tracking.

-

•

The development of both a system model and an observation model, which together collaboratively predict the position of target object, is presented.

-

•

The effectiveness of DBF is validated through comparative experiments on two prominent datasets, demonstrating its superiority over conventional trackers based on single infrared information.

The remainder of this paper is organized as follows. Recent advances related to TIR object tracking are introduced in Section II. Section III elaborates the methodology of the proposed DBF. Section IV details the experiments and discusses the results. Finally, a brief summary of this study is provided in Section V.

II Related Work

In this section, we outline relevant research. First, we explore existing methods in deep learning-based visual tracking in Section II-A. Next, we examine recent representative TIR object tracking methods in Section II-B. The section wraps up with an examination of how Bayesian approaches are used in other visual tasks in Section II-C.

II-A Deep visual object tracking

Deep learning has significantly transformed the landscape of visual object tracking, introducing unparalleled accuracy and robustness [38]. This paradigm shift has catalyzed the development of sophisticated models capable of handling diverse and complex tracking scenarios. The integration of deep learning in object tracking began with works like DLT [39] and GOTURN [40], which demonstrated the efficacy of CNNs. This marked a departure from traditional correlation filter-based tracking methods [19, 41, 42, 43], paving the way for more advanced neural network applications. Subsequent advancements saw the emergence of various methods such as SiamFC [17], MDNet [37], SiamRPN [8], and SiamEXTR [12]. Each method offered unique strengths; for instance, Siamese networks gained popularity for their balance between accuracy and speed in real-time tracking applications [16]. The development and refinement of these methods have been greatly influenced by large-scale training datasets [44, 45, 46], and challenging benchmarks such as OTB [47], GOT-10k [48], and LaSOT [49]. These resources have been crucial in evaluating the performance and robustness of tracking methods under varied conditions. Despite advancements, challenges such as OCC, SV, and BC remain persistent issues in deep learning-based trackers. Moreover, the generalizability of these methods across different environments and objects is an area of ongoing research. Recent notable studies introduce the famous Transformer [50] architecture to the visual tracking community, achieving state-of-the-art performance in most benchmark datasets [51, 52, 53]. Besides, in the broader context of visual object tracking, significant advancements have been made by segmentation-based methods [54, 55, 56]. While these methods focus on visible data, their underlying principles offer valuable insights into feature extraction, semantic segmentation, and integrating diverse data types. These studies reflect the continuous evolution and refinement of visual tracking methods.

II-B TIR object tracking

TIR object tracking, leveraging TIR imaging to detect and follow objects, plays a critical role in scenarios with limited or no visible light. This tracking modality offers unique advantages over traditional visual tracking, particularly in challenging visibility conditions [2]. The integration of deep learning into TIR tracking has marked a significant advancement [57]. Notable models such as CNNs and recurrent neural networks (RNNs) [58] have been adapted to enhance the accuracy and reliability of TIR tracking methods. Integrating infrared information with other modalities, like RGB data, has been explored to improve robustness. This multimodal approach, as demonstrated in MMMPT [59], shows promise in creating more resilient tracking systems under varying conditions. Significant recent contributions include MLSSNet [22], achieving breakthrough performance in distinguishing distractors, and MMNet [24], which innovated in learning dual-level deep representation. These studies showcase the rapid progress in TIR tracking. Emerging trends include the use of powerful Transformer architecture to further refine TIR tracking and the exploration of new feature-fusion technologies [60, 61, 62]. It is also noteworthy that segmentation-based tracking methods have not yet been developed for TIR object tracking due to the lack of accurate pixel-level annotations. We believe TIR tracking has undergone substantial growth, primarily driven by advancements in deep learning. The ongoing research in this field is poised to significantly enhance capabilities in complex scenarios, expanding its applicability across various domains.

II-C Bayesian filtering in visual tasks

Bayesian filtering has become a cornerstone technique in many visual tasks, offering a probabilistic approach to understanding and predicting dynamic systems [63, 64]. Its evolution from simple Bayesian methods to sophisticated filtering algorithms parallels the advancements in visual processing and computational power. At its core, Bayesian filtering involves updating beliefs over time using Bayes theorem [65]. Approaches like the Kalman filter [66] and particle filter [67] have been pivotal in visual tasks, each with its strengths in handling linear and non-linear systems, respectively [68]. The intersection of Bayesian filtering with machine learning has opened new frontiers. Bayesian filtering excels in dealing with uncertainty and noise in visual data, which is crucial in tasks like object recognition [69], object detection [70], gesture recognition [71], and scene understanding [72]. Recent breakthroughs by Yu et al. [73], which introduced a recursive Bayesian filtering technique for remaining useful life estimation of degrading systems, and Su et al. [74], which showcased improved performance in breast cancer prediction using a hybrid Bayesian network model. We hold the opinion that Bayesian filtering continues to play a pivotal role in advancing visual task processing. Its ability to manage uncertainty and integrate with emerging technologies positions it as a critical player in the future of image and video analysis.

III Proposed Method

DBF is the main work in this study. As outlined earlier, prevailing approaches predominantly use infrared information to determine the position of the target object. DBF, however, innovatively incorporates motion data to address the challenges of TIR tracking in complex scenarios. In the DBF framework, motion and infrared information from the target object are sourced from the system and observation models and then integrated for TIR tracking. Section III-A briefly revisits the principle framework of Bayesian filtering. Section III-B is dedicated to explaining how the TIR tracking issue is modeled using Bayesian filtering. The construction of the system model and observation model specific to DBF are detailed in Sections III-C and III-D, respectively.

III-A Revisit Bayesian Filtering

Bayesian filtering deals with estimating a system’s hidden state based on a sequence of observations. In the context of TIR tracking, if we consider the target position as the hidden state and the raw infrared information from each frame as the observation, then TIR tracking essentially becomes a specialized application of Bayesian filtering. However, it should be noted that Bayesian filtering itself only offers a theoretical framework for state estimation and requires the establishment of precise system and observation models for practical application to a specific problem.

The system state and the observations are the most essential concepts in Bayesian filtering, and they have the following important characteristics:

-

1.

The system state is the target to be estimated and is not directly observable.

-

2.

The system state affects the observations that can be observed.

-

3.

The system state is Markovian with respect to the observations, i.e., the system state at the next moment is only related to the state at the current moment and is independent of both the system state and the observations at past moments.

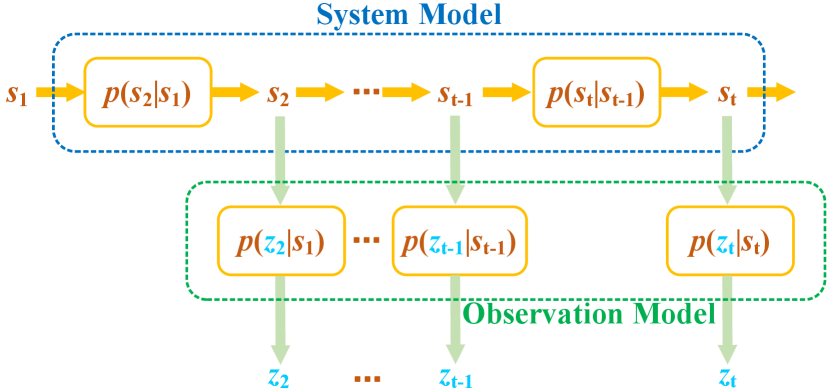

A state space can describe the above system characteristics, which consists of the following two models:

The system model describes the relationship between the system state at adjacent moments and , which is a Markov chain according to the Markovianity of the system state and can be expressed as:

| (1) |

where denotes the system state at moment , and denotes the observations from moment 1 to .

The observation model describes the effect of the system state on the observations. It is the conditional probability distribution of the observations when the system state is .

Fig.1 illustrates the relationship between the system state, observations, system model, and observation model. It can be seen that the update of the system state is only related to the system state and the system model at the current moment, while the update of the observations is only associated with the system state and the observable model at the current moment. Over time, the system state changes and produces observations.

The objective of Bayesian filtering is to estimate the system state , given a series of observations , a known system model , and an observation model . This step of the estimation process is iterative, and each step requires an estimate of the current system state . The objective function can therefore be formulated as follows:

| (2) |

where is the initial state.

The iterative formula between and can solve the objective function (Eq.2). The derivation is as follows:

Based on the total probability theorem, the following equation can be obtained as:

| (3) |

Combining Eqs.1 and 3, we get:

| (4) |

According to the Bayes rule, the following equation can be obtained as:

| (5) |

The iterative relationship can be obtained according to Eqs.4 and 5 as:

| (6) |

The denominator is independent of and can be regarded as a constant here. Therefore, the above equation can be simplified as:

| (7) |

Eq.7 is the iterative formula for to . Here, the initial value is given, and it is only necessary to obtain and at each step to carry the estimation process forward, which are provided by the system and observation models, respectively. Therefore, solving the state estimation problem depends on the specific settings of the system model and the observation model .

III-B TIR Tracking Formalization

To solve the TIR tracking problem using Bayesian filtering, we define the system state at time as the motion data of the target object, i.e., the relative variation of the target center coordinates between and . The reasons for this definition are as follows.

-

1.

The tracking method is concerned with the change in position due to the motion of the target object between consecutive TIR video frames, not the position itself. The next position can be obtained by adding the change to the current position.

-

2.

The size of the target object is different in TIR video sequences. Therefore, the same absolute change in position has different meanings for different target sizes. As a highly abstract variable, the system state should not depend on a specific target size, so it is more appropriate to use relative center variations.

In the TIR tracking problem, a rectangular box is generally used to annotate the target object in a two-dimensional image, where are the coordinates of the top-left corner of the rectangle, and is the width and height of the rectangular box, respectively. Therefore, the center coordinates of the rectangular box are calculated as:

| (8) |

The variation in the center coordinates of the rectangular box between and (where ) is as follows,

| (9) |

The relative change corresponding to the variation is as follows,

| (10) |

where is the system state . During the tracking process, the position of the target object at time can be expressed as , where denotes the best estimate of the system state at time .

For the observation in the Bayesian filtering framework, we define it as the raw infrared image of the -th frame. The observation model estimates the distribution of the system state from the infrared information of the current frame. Thus, the tracking problem can be solved by estimating the optimal system state .

III-C System Model

The system model is defined as the probability distribution of the current state under the condition that the system state at the previous frame. It is used to give an a priori estimate of the likelihood of the current state of the system based on the previous state, i.e., the prior distribution, before the observation acts at the current moment. We established the system model using 2D Brownian motion, which describes the tendency of a target object to move on its own without external influences. It is independent of the observation model and unrelated to infrared information. This situation is similar to the motion of particles in physics when they are not subject to external forces.

For a 2D Brownian motion , it satisfies the following properties:

-

1.

The initial value is 0, i.e., .

-

2.

The function is continuous at almost everywhere.

-

3.

The increment of is independent, and for , satisfies independently of any , where .

-

4.

The increment obeys a 2D Gaussian distribution with mean and covariance .

We model the DBF framework with 2D Brownian motion and define as the target position at time . The system state is the difference between two adjacent frames, which is also the increment of . Their relationship can be expressed as follows,

| (11) |

where the units of the difference are the scale of the DBF state space, not the pixel distance of the raw infrared image.

Since the initial target position is specified in the first video frame, we set this position as the origin to satisfy Property 1, i.e., . Although the computer calculates and stores the position in discrete form, we can assume that the position in DBF state space is continuous to satisfy Property 2. The fact that the pixel distances, in practice, are just discrete samples of the position in DBF space does not affect the effectiveness of our approach. In Property 3, has independent increments implies that and are independent if — the combination of Eq.11 leads to the conclusion that and are independent. So the system model can be simplified as . According to Property 4, the increment obeys a 2D Gaussian distribution with mean and covariance . Since the time interval is 1 here, the covariance can be expressed as . It further follows that both and obey a 1D Gaussian distribution and are independent of each other.

Based on the above analysis, the system model can be represented as follows,

| (12) |

It is worth noting that the scale units in DBF state space are not equivalent to the actual image scale. Therefore, we use the coefficients and to transform the unit scale from the image to the state space. The final system model is as follows,

| (13) |

where and is the target position difference in the raw image.

III-D Observation Model

The observation model is used to evaluate the probability that the most recent observation occurs in the current system state . It obtains a likelihood estimate of the system state after obtaining the observations. It is used to make a likelihood estimation of the system state after obtaining the observations, correcting the probability distribution predicted by the system model.

The observation model in this study is based on the detection methods. This is due to the many similarities between the two approaches as follows,

-

1.

Each candidate corresponds to each possible system state .

-

2.

The current frame image is the observation .

-

3.

The detection method generates a response score based on the feature similarity between the template and the candidate window in the current frame, and the more similar to the template, the higher the response score. The response scores are highly consistent with the role of the observation model output .

Based on this analysis, we propose an observation model based on the tracking-by-detection method. As shown in Fig.2, the pipeline of the observation model mainly consists of two modules: feature extraction and classifier.

| Layers | Configuration | Strides |

| Convolution | 96 7 7 | 2 |

| Activation | ReLU+LRN | - |

| Pooling | Maxpooling | 2 |

| Convolution | 256 5 5 | 2 |

| Activation | ReLU+LRN | - |

| Pooling | Maxpooling | 2 |

| Convolution | 512 3 3 | 1 |

| Activation | ReLU | - |

| Layers | Configuration |

| Convolution | 512 1 1 |

| Activation | ReLU+LRN |

| Convolution | 512 1 1 |

| Activation | ReLU+LRN |

| Convolution | 2 1 1 |

| Loss | Softmax |

We choose VGG-M [75] as the network architecture for feature extraction. We use the activation value of the third convolutional layer as the deep feature for image representation, and all other parameters are kept consistent with the original VGG-M architecture, as shown in Table I. Inspired by MDNet [37], we use a neural network with three fully connected layers as the classifier. The tracking task involves only two categories, i.e., foreground (target object) and background, instead of the 1000 categories of the ImageNet classification task [44]. Moreover, the former uses low-level convolutional features, unlike the latter, which uses high-level convolutional features [76]. Therefore, we reduce the number of units in the intermediate hidden layer from 4,096 to 512 dimensions for the general classification task. This also reduces the costs of computation and storage. The specific network parameters are shown in Table II, where the fully connected layer is actually implemented with a convolutional layer, and the last layer is a softmax function that outputs a 2D vector , where and denote the probability that the candidate is foreground and background, respectively. Since , we take as the response score.

The final output of the observation model is the likelihood probability , so it is also necessary to transform the response score into a probabilistic form. We use a weighted softmax function to perform the following transformation as follows,

| (14) |

where is the response score of the system state . It can be seen that the higher the response score is, the higher the likelihood probability is. is a penalty hyperparameter that obeys the Gaussian distribution and controls the impact of the response score on the probability distribution, i.e., the closer is to the center of the previous frame, the larger is.

IV Experiments

In this section, we detail the experimental results that highlight the need and effectiveness of applying and revitalizing Bayesian filtering for TIR object tracking. Section IV-A outlines the implementation details of our DBF. Section IV-B describes the benchmark datasets used and the metrics for evaluation. The ablation studies are presented in Section IV-C, followed by the comparison results with state-of-the-arts on the LSOTB-TIR [14] and PTB-TIR [15] benchmark datasets in Section IV-D. Finally, Section IV-E offers more discussions to improve the performance of DBF.

IV-A Implementation Details

Our TIR object tracking method, DBF, was developed using the MatConvNet [77] toolbox and trained on both RGB datasets such as COCO [45], LaSOT [49], and GOT-10k [48], and the TIR dataset LSOTB-TIR [14]. All the experiments were conducted on a cloud server with an Intel® Xeon® E5-2680 v4 CPU @ 2.4GHz CPU with 128GB RAM, and a NVIDIA® GeForce RTXTM 2080 Ti GPU with 11GB VRAM. We trained the observation model using Stochastic Gradient Descent (SGD) with a batch size of 8 and a momentum of 0.9. As described in Section III-D, we used a modified VGG-M [75] as the base feature extractor. The classifier’s settings adhered to the default configurations of MDNet [37]. The initial training phase of the observation model spanned 60 epochs on the RGB datasets, with the learning rate exponentially decayed from to . This was followed by a fine-tuning phase of 30 epochs on the TIR dataset, setting the learning rate at and the weight decay at .

IV-B Datasets and Evaluation Metrics

Datasets: PTB-TIR [15] dataset comprises 60 manually annotated infrared pedestrian sequences, amassing more than 30,000 frames in total. Each sequence has nine attribute labels for the attribute-based evaluation. LSOTB-TIR [14] dataset is notably larger, offering 1400 infrared video sequences with high-quality annotations, totaling in excess of 600,000 frames. It features five varieties of moving objects – people, animals, vehicles, aircraft, and boats – in four distinct scenarios and is evaluated on 12 challenging attributes, thereby making it the most extensive TIR dataset to date.

Evaluation metrics: Three distinct metrics are employed in LSOTB-TIR [14] dataset to evaluate the performance of TIR trackers. Precision is defined as the ratio of frames in which the Center Location Error (CLE) falls below a specified threshold (20 pixels), relative to the total number of frames. Normalized Precision adjusts for the resolution and size of the predicted bounding box, mitigating the impact of varying target sizes. The effectiveness of TIR trackers is assessed using the Area Under Curve (AUC) of Normalized Precision within the range of 0 to 0.5. Success is measured by the proportion of frames where the overlap ratio (OR) between the predicted and ground truth bounding boxes exceeds a predefined threshold. For the PTB-TIR [15] dataset, precision and success are used to assess the performance of the TIR trackers.

IV-C Ablation Studies

In this section, we first analyze the proposed DBF method on two benchmark datasets to evaluate the effectiveness of the feature extractor network architecture and training dataset size.

| Network | LSOTB-TIR | PTB-TIR | |||||

| Success | Precision | Norm. Precision | Success | Precision | |||

| AlexNet | 0.603 | 0.752 | 0.692 | 0.607 | 0.825 | ||

| VGG-M | 0.625 | 0.770 | 0.703 | 0.626 | 0.839 | ||

| VGG-16 | 0.617 | 0.760 | 0.697 | 0.613 | 0.831 | ||

Network architecture: Among existing TIR object tracking methods, the commonly used CNN architectures are AlexNet [78], VGG-M [75], and VGG-16 [75]. Table III presents the results of the proposed DBF using these different architectures as the feature extractor. Notably, VGG-M surpasses AlexNet and VGG-16, registering the best scores across all evaluation metrics on both benchmark datasets. AlexNet, an earlier architecture, is smaller in size and offers faster computation but lacks in performance. It is typically employed in early tracking methods where speed is prioritized. VGG-M, in comparison, is wider than AlexNet. The initial two convolutional layers of AlexNet use and kernels, whereas VGG-M uses and kernels in its first two layers. Additionally, VGG-M boasts more convolutional channels in layers 2, 3, and 4 than AlexNet and incorporates batch normalization to enhance training efficiency. In practice, many TIR tracking methods utilize only the shallow features of VGG-M, which allows for rapid computation while delivering robust performance, making it a popular choice among convolutional feature extractors. The characteristic of VGG-16 is that it is relatively deep, and replaces a single layer of large convolution kernels with multiple layers of small convolution kernels. VGG-16, known for its depth and use of smaller convolution kernels, does not perform as well in tracking tasks as it does in classification. This could be due to tracking tasks relying more on shallow features.

| Training Dataset | LSOTB-TIR | PTB-TIR | |||||

| Success | Precision | Norm. Precision | Success | Precision | |||

| Only-RGB | 0.596 | 0.749 | 0.680 | 0.606 | 0.795 | ||

| Only-TIR | 0.603 | 0.742 | 0.679 | 0.597 | 0.781 | ||

| All | 0.625 | 0.770 | 0.703 | 0.626 | 0.839 | ||

Training dataset size: To train the observation model of DBF, a mix of RBG and TIR datasets was used. The RGB datasets encompassed COCO [45], LaSOT [49], and GOT-10k [48]. COCO is a comprehensive dataset designed for image detection and semantic segmentation, containing over 330,000 images and 1.5 million objects. LaSOT, a newer benchmark for long-term visual tracking, includes 1,400 video sequences, each averaging 2,500 frames, spanning 70 class categories with 16 sequences per category in its training set. GOT-10k, another challenging benchmark, consists of 10,000 video sequences for training purposes. The training subset of the LSOTB-TIR [14] was also utilized. Table IV illustrates the effectiveness of DBF using different combinations of training datasets. The combined use of RGB and TIR datasets achieves better results than training solely with either RGB or TIR datasets. This indicates that the integration of RGB and TIR datasets exploits the unique properties of RGB and TIR images, thereby enhancing the robustness of feature extraction for TIR object tracking.

IV-D Comparison with state-of-the-arts

To evaluate our proposed DBF, we compared it with state-of-the-art TIR tracking methods, including CFNet [79], DSiam [80], ECO-deep [20], ECO-HC [20], ECO-STIR [81], HSSNet [82], MDNet [37], MLSSNet [22], MMNet [24], SiamFC [17], SiamTri [18], SRDCF [83], TADT [84], VITAL [85], and UDT [86].

IV-D1 Results on LSOTB-TIR

Fig.3 shows that DBF achieves top scores in all evaluation criteria, recording 0.625 in success, 0.770 in precision, and 0.703 in normalized precision, outperforming the baseline MDNet [37] by 2.4%, 2.0%, and 1.7%, respectively. This underscores the effectiveness of Bayesian filtering in learning classifiers for TIR tracking. ECO-deep [20], utilizing factorized continuous convolution operators for multi-resolution deep feature map integration, scores 0.609 in success, 0.739 in precision, and 0.670 in normalized precision. ECO-STIR [81], which employs additional synthetic TIR-based deep features for training, records the second-best success and precision scores of 0.616 and 0.750, and the fourth-best normalized precision score of 0.672. This surpasses ECO-deep by 0.7%, 1.1%, and 0.2% in the respective metrics, highlighting the superiority of TIR-based over RGB-based deep features in TIR tracking. Using the LSOTB-TIR training subset, DBF significantly outperforms ECO-STIR with absolute gains of 0.9%, 2.0%, and 3.2% in success, precision, and normalized precision. Notably, classification-based trackers such as VITAL [85], UDT [86], and SRDCF [83], with precision scores of 0.749, 0.629, and 0.642, lag behind DBF by more than 2.1%, 14.1%, and 12.8%, respectively. MMNet [24], a matching-based tracker with a dual-level feature model, still lags behind DBF by considerable margins of 14.9%, 18.8%, and 16.4% in success, precision, and normalized precision. We attribute the robust performance of DBF to inheriting the strong discriminative capacity of the MDNet classifier. Moreover, DBF outperforms other matching-based trackers such as TADT [84], SiamFC [17], SiamTri [18], MLSSNet [22], CFNet [79], HSSNet [82], and DSiam [80], with absolute success score gains of 3.8%, 10.8%, 11.2%, 16.6%, 20.9%, 21.6%, and 24.5%, respectively. Overall, the results on the LSOTB-TIR benchmark dataset illustrate that the proposed DBF tracker performs favorably against state-of-the-art TIR trackers over the 120 challenging video sequences.

In assessing the efficacy of our DBF tracker for various TIR tracking challenges, we benchmarked it against top-tier methods across different attributes. All the 120 video sequences in LSOTB-TIR are divided into four scenarios according to the TIR camera platform: drone-mounted (25 sequences), hand-held (35 sequences), surveillance (40 sequences), and vehicle-mounted (20 sequences). The results of this scenario-based comparison, depicted in Fig.4, reveal that while DBF underperforms in the vehicle-mounted scenarios, it excels in the other three. Most classification-based methods, including ours, demonstrate top-tier performance due to their effective use of binary classifiers trained on positive and negative samples of the target objects. Each sequence is also annotated with 12 attributes, posing unique challenges: aspect ratio variation (ARV), BC, DEF, distractor (DIS), fast motion (FM), intensity variation (IV), low resolution (LR), MB, occlusion (OCC), OV, SV, and TC. As Fig.5 shows, DBF demonstrates impressive results across these attributes, achieving the highest success rate in six of them. Although it ranks second in OV, SV, and TC and third in DIS, FM, and LR, its success scores are on par with the top-ranked trackers for each attribute. Specifically, DBF outperforms the base tracker MDNet [37] across various attributes, indicating the effectiveness of the Bayesian filtering framework in TIR tracking. In addition, DBF outperforms ECO-STIR [81], the leading tracker on FM, OV, and SV, with improvements of 2.7%, 1.0%, 3.4%, 1.8%, 3.1%, and 6.5% on ARV, DEF, IV, MB, OCC, and TC, respectively. This comparable performance of DBF can be attributed to the application of Bayesian filtering, which provides enriched motion data and learns the classifier dynamically.

IV-D2 Results on PTB-TIR

Fig.6 reveals that DBF significantly outperforms the baseline MDNet [37], recording a 4.0% increase in success score and a 2.2% increase in precision score. This underlines the substantial improvement Bayesian filtering brings to TIR trackers, particularly in pedestrian tracking. DBF attains the highest precision score (0.839), outperforming ECO-deep [20], though ECO-deep slightly leads in success score (0.633). Notably, DBF significantly outperforms ECO-STIR [81] on the PTB-TIR dataset, despite ECO-STIR being a close competitor on the LSOTB dataset. MLSSNet [22], utilizing a multi-level similarity model for TIR tracking, records a success score of 0.539 and a precision score of 0.741. In comparison, DBF exceeds MLSSNet by 7.6% and 9.8% in these metrics, respectively. Additionally, DBF outshines the classification-based tracker VITAL [85], gaining 4.8% in success and 4.4% in precision. Furthermore, DBF registers substantial success/precision gains of 3.3%/3.5%, 3.5%/5.6%, 6.6%/9.9%, 6.9%/5.6%, 9.7%/14.0%, 14.6%/21.6%, 15.8%/15.0%, 16.7%/23.1%, 17.7%/21.0%, and 30.7%/40.8% compared to SRDCF [83], ECO-HC [20], TADT [84], MMNet [24], UDT [86], SiamFC [17], HSSNet [82], SiamTri [18], CFNet [79], and DSiam [80], respectively. These results highlight DBF’s superior and harmonious tracking performance, excelling in both accuracy and robustness.

IV-D3 Visualized results

As shown in Fig.7, the visualized results of DBF on four video sequences selected from the PTB-TIR benchmark dataset — conversation, crouching, crowd1, and park5 — are presented. These sequences encompass four challenging attributes: BC, MB, SV, and TC. We chose MDNet [37] as the baseline for comparative experiments, as our observation model is an extension of it. In BC cases, where the background shares infrared and texture characteristics with the target object, MDNet often misidentifies the background as the target object, leading to tracking inaccuracies. DBF, however, shows improved tracking by leveraging not only infrared information but also an independent system model to estimate the possible position of the target object, thereby reducing background interference. For MB, SV, and TC cases, involving rapid changes in appearance and shape of the target object, MDNet struggles to maintain tracking, whereas DBF excels. The classifier updating strategy of MDNet every 20 frames is inadequate for rapidly changes, often mistaking the altered target object for the background. Conversely, DBF, despite updating the classifier at the same interval, incorporates a system model for additional estimation, effectively mitigating these challenges. The experimental results highlight that incorporating a system model into DBF substantially improves TIR object tracking effectiveness in complex scenarios. Fig.8 shows two tracking failure cases of DBF. The fast movement of the camera equipment in the cow sequence aggravates the motion blur. At the same time, because the distractors in the background are entirely consistent in appearance and shape with the target object, the system model provides the wrong candidates to the observation model. In the head sequence, the target object is occluded by distractors with the same appearance and motion information, causing misjudgment by the observation model.

IV-E Discussions

IV-E1 Comparison with Transformer-based trackers

| Trackers | LSOTB-TIR | PTB-TIR | |||||

| Success | Precision | Norm. Precision | Success | Precision | |||

| TransT [87] | 0.673 | 0.798 | 0.721 | 0.613 | 0.764 | ||

| MixFormer [88] | 0.660 | 0.778 | 0.703 | 0.612 | 0.753 | ||

| CSWinTT [89] | 0.644 | 0.761 | 0.685 | 0.572 | 0.753 | ||

| DBF | 0.625 | 0.770 | 0.703 | 0.626 | 0.839 | ||

Transformer-based trackers have shown excellent performance compared to CNN-based trackers and are no surprise dominating the visual object tracking community. We also compare our DBF with three recent representative Transformer-based trackers111To ensure fairness, all Transformer-based trackers were retrained on the LSOTB-TIR training set.: TransT [87], MixFormer [88], and CCWinTT [89], on the LSOTB-TIR and PTB-TIR benchmark datasets.

As shown in Table V, DBF exhibits superior performance on the PTB-TIR dataset, achieving a success score of 0.626 and a precision score of 0.839, significantly outperforming indicating its closest competitor, TransT. Such a margin is a notable superiority in the accuracy of DBF locating the target object. We believe that DBF comprehensively surpasses Transformer-based trackers on the PTB-TIR dataset because the target objects in this dataset are all pedestrian, causing the difference between consecutive video frames to be small. The motion information provided by the system model makes up for the lack of attention mechanism and spatiotemporal data compared to the Transformer architecture. In contrast, on LSOTB-TIR, a dataset with more object classes and challenging scenarios, Transformer-based trackers outperformed DBF. However, DBF maintains competitive precision and normalized precision scores, even outperforming CSWinTT. It is worth noting that while DBF does not lead on the LSOTB-TIR dataset, its consistent performance across different datasets for both success and precision metrics suggests robustness. This could imply that DBF has a more generalized model, which is less susceptible to dataset-specific biases or overfitting. Inspired by this, we will explore Transformer-based observation models to further improve the accuracy while maintaining or enhancing the robustness of DBF.

IV-E2 Motion distributions

In Section III-C, based on the assumption of Brownian motion, we use a Gaussian distribution with zero means to describe the object motion information. In order to obtain a better system model, we further studied the motion distribution of target objects in the LSOTB-TIR and PTB-TIR benchmark datasets. These two datasets contain a total of 111,982 frames. We need to convert the ground-truth annotations of these target objects into the system state, that is, the position difference of the target object in two consecutive frames can be calculated as:

| (15) |

where , , , and represent the top-left abscissa, the top-left ordinate, the width, and the height of the ground-truth annotation, respectively. Since the scale of the target object changes over the TIR video sequence, we use the relative motion of the centroid of the ground-truth annotation to represent the position difference of the target object. Fig.9 shows the histogram after converting 111,982 ground-truth annotations into position differences of the target object. We fit the frequency histograms of and using a Gaussian distribution — the topper two graphs of Fig.9 show fitting results.

It is clear that the histogram appears to approximate a Gaussian distribution, indicating the Gaussian distribution’s ability to effectively characterize motion differences. The density center of the histogram is near zero, and the mean values of the best Gaussian distribution fits for and have mean values of 0.0057 and 0.0063, respectively. This observation ratifies the zero-mean hypothesis of the Gaussian distribution. However, the peak of the histogram is much sharper than that of the Gaussian distribution, leading to a suboptimal fit. This discrepancy prompts us to explore an alternative distribution with a sharper peak, which might offer a better fit for the histogram. The Laplace distribution, with its sharper peak at the same mean, is more appropriate for fitting the absolute values of the position differences and , given its domain of . The lower pair of graphs in Fig.9 illustrates the fitting of the Laplace distribution for and . It is clear from these graphs that the Laplace distribution, for both and , achieves a higher correlation coefficient R2 than that of the Gaussian distribution. In statistical analysis, R2 is employed to assess the accuracy of a fitted curve in representing actual data. Therefore, based on these results, we adopt the Laplace distribution over the Gaussian distribution to model the motion distribution of the target object as:

| (16) |

where and are the conversion coefficients.

| System Model | LSOTB-TIR | PTB-TIR | |||||

| Success | Precision | Norm. Precision | Success | Precision | |||

| Gaussian | 0.625 | 0.770 | 0.703 | 0.626 | 0.839 | ||

| Laplace | 0.633 | 0.796 | 0.737 | 0.641 | 0.862 | ||

The effectiveness of system models based on Gaussian and Laplace distributions was compared using the LSOTB-TIR and PTB-TIR benchmark datasets, with the results detailed in Table VI. The results clearly show that the system model based on the Laplace distribution outperforms the one using the Gaussian distribution, demonstrating the effectiveness of our improvement in the system model.

V Conclusion

This study proposes a novel method, DBF, that revisits and integrates Bayesian filtering with deep learning techniques for TIR object tracking. It enables the integration of motion data independent of infrared information, thereby enhancing the tracker’s ability to predict and distinguish rapid changes in the appearance of the target objects. This is particularly crucial in scenarios where infrared information alone may falter. Moreover, Bayesian filtering introduces a probabilistic model updating strategy. This adaptive strategy significantly reduces the risk of tracking errors and drifts. Experimental evaluations on multiple benchmark datasets confirm that DBF outperforms most existing TIR tracking methods in complex scenarios, thus not only vindicating but also revitalizing the role of Bayesian filtering in this domain. The successful implementation of DBF paves the way for more robust and effective TIR tracking technologies capable of overcoming the nuanced challenges posed by intricate tracking environments. However, there are currently the following shortcomings: (a) the classifier used by the observation model is relatively simple, which is insufficient for TC and DIS cases with small differences in object classes, (b) although motion information can serve as spatiotemporal correlation between frames, it is still not sufficient in case of FM, and (c) competitive tracking performance has not been achieved in case of LR. In future work, we would like to employ more powerful network architectures from off-the-shelf Transformer-based and segmentation-based visual object tracking methods as the classifier of the observation model and adopt super-resolution methods to provide more robust TIR tracking performance.

References

- [1] M. Felsberg, A. Berg, G. Hager, J. Ahlberg, M. Kristan, J. Matas, A. Leonardis, L. Cehovin, G. Fernandez, T. Vojir et al., “The thermal infrared visual object tracking vot-tir2015 challenge results,” in International Conference on Computer Vision Workshops (ICCVW), 2015, pp. 76–88.

- [2] D. Yuan, X. Shu, and Q. Liu, “Recent advances on thermal infrared target tracking: A survey,” in Asian Conference on Artificial Intelligence Technology (ACAIT). IEEE, 2022, pp. 1–6.

- [3] J. A. Sobrino, F. Del Frate, M. Drusch, J. C. Jiménez-Muñoz, P. Manunta, and A. Regan, “Review of thermal infrared applications and requirements for future high-resolution sensors,” IEEE Transactions on Geoscience and Remote Sensing, vol. 54, no. 5, pp. 2963–2972, 2016.

- [4] R. Gade and T. B. Moeslund, “Thermal cameras and applications: a survey,” Machine vision and applications, vol. 25, pp. 245–262, 2014.

- [5] X. Zhang, P. Ye, H. Leung, K. Gong, and G. Xiao, “Object fusion tracking based on visible and infrared images: A comprehensive review,” Information Fusion, vol. 63, pp. 166–187, 2020.

- [6] Y. Matsuo, Y. LeCun, M. Sahani, D. Precup, D. Silver, M. Sugiyama, E. Uchibe, and J. Morimoto, “Deep learning, reinforcement learning, and world models,” Neural Networks, vol. 152, pp. 267–275, 2022.

- [7] G. Menghani, “Efficient deep learning: A survey on making deep learning models smaller, faster, and better,” ACM Computing Surveys, vol. 55, no. 12, pp. 1–37, 2023.

- [8] B. Li, J. Yan, W. Wu, Z. Zhu, and X. Hu, “High performance visual tracking with siamese region proposal network,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2018, pp. 8971–8980.

- [9] D. Zhang, C. P. Chen, T. Li, Y. Zuo, and N. Q. Duy, “Target tracking method of siamese networks based on the broad learning system,” CAAI Transactions on Intelligence Technology, vol. 8, no. 3, pp. 1043–1057, 2023.

- [10] P. Gao, R. Yuan, F. Wang, L. Xiao, H. Fujita, and Y. Zhang, “Siamese attentional keypoint network for high performance visual tracking,” Knowledge-based systems, vol. 193, p. 105448, 2020.

- [11] D. Xing, N. Evangeliou, A. Tsoukalas, and A. Tzes, “Siamese transformer pyramid networks for real-time uav tracking,” in IEEE/CVF Winter Conference on Applications of Computer Vision, 2022, pp. 2139–2148.

- [12] P. Gao, X.-Y. Zhang, X.-L. Yang, F. Gao, H. Fujita, and F. Wang, “Robust visual tracking with extreme point graph-guided annotation: Approach and experiment,” Expert Systems with Applications, vol. 238, p. 122013, 2024.

- [13] S. Javed, M. Danelljan, F. S. Khan, M. H. Khan, M. Felsberg, and J. Matas, “Visual object tracking with discriminative filters and siamese networks: a survey and outlook,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 5, pp. 6552–6574, 2022.

- [14] Q. Liu, X. Li, D. Yuan, C. Yang, X. Chang, and Z. He, “Lsotb-tir: A large-scale high-diversity thermal infrared single object tracking benchmark,” IEEE Transactions on Neural Networks and Learning Systems, 2023.

- [15] Q. Liu, Z. He, X. Li, and Y. Zheng, “Ptb-tir: A thermal infrared pedestrian tracking benchmark,” IEEE Transactions on Multimedia, vol. 22, no. 3, pp. 666–675, 2019.

- [16] M. Ondrašovič and P. Tarábek, “Siamese visual object tracking: A survey,” IEEE Access, vol. 9, pp. 110 149–110 172, 2021.

- [17] L. Bertinetto, J. Valmadre, J. Henriques, A. Vedaldi, and P. H. S. Torr, “Fully-convolutional siamese networks for object tracking,” in European Conference on Computer Vision (ECCV). Springer-Verlag, 2016, pp. 850–865.

- [18] X. Dong and J. Shen, “Triplet loss in siamese network for object tracking,” in European conference on computer vision (ECCV), 2018, pp. 459–474.

- [19] P. Gao, Y. Ma, K. Song, C. Li, F. Wang, L. Xiao, and Y. Zhang, “High performance visual tracking with circular and structural operators,” Knowledge-Based Systems, vol. 161, pp. 240–253, 2018.

- [20] M. Danelljan, G. Bhat, S. F. Khan, and M. Felsberg, “Eco: Efficient convolution operators for tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017, pp. 6931–6939.

- [21] P. Gao, Q. Zhang, F. Wang, L. Xiao, H. Fujita, and Y. Zhang, “Learning reinforced attentional representation for end-to-end visual tracking,” Information Sciences, vol. 517, pp. 52–67, 2020.

- [22] Q. Liu, X. Li, Z. He, N. Fan, D. Yuan, and H. Wang, “Learning deep multi-level similarity for thermal infrared object tracking,” IEEE Transactions on Multimedia, vol. 23, pp. 2114–2126, 2020.

- [23] C. Yang, Q. Liu, G. Li, H. Pan, and Z. He, “Learning diverse fine-grained features for thermal infrared tracking,” Expert Systems with Applications, vol. 238, p. 121577, 2024.

- [24] Q. Liu, D. Yuan, N. Fan, P. Gao, X. Li, and Z. He, “Learning dual-level deep representation for thermal infrared tracking,” IEEE Transactions on Multimedia, vol. 25, pp. 1269–1281, 2022.

- [25] P. Gao, Y. Ma, K. Song, C. Li, F. Wang, and L. Xiao, “Large margin structured convolution operator for thermal infrared object tracking,” in IEEE International Conference on Pattern Recognition (ICPR). IEEE, 2018, pp. 2380–2385.

- [26] P. Stano, Z. Lendek, J. Braaksma, R. Babuška, C. de Keizer, and A. J. den Dekker, “Parametric bayesian filters for nonlinear stochastic dynamical systems: A survey,” IEEE transactions on cybernetics, vol. 43, no. 6, pp. 1607–1624, 2013.

- [27] M. S. Arulampalam, S. Maskell, N. Gordon, and T. Clapp, “A tutorial on particle filters for online nonlinear/non-gaussian bayesian tracking,” IEEE Transactions on Signal Processing, vol. 50, no. 2, pp. 174–188, 2002.

- [28] S. Fang, H. Li, M. Yang, and Z. Wang, “Inertial navigation system based vehicle temporal relative localization with split covariance intersection filter,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 5270–5277, 2022.

- [29] H. Huang, J. Tang, C. Liu, B. Zhang, and B. Wang, “Variational bayesian-based filter for inaccurate input in underwater navigation,” IEEE Transactions on Vehicular Technology, vol. 70, no. 9, pp. 8441–8452, 2021.

- [30] S. Choe, H. Seong, and E. Kim, “Indoor place category recognition for a cleaning robot by fusing a probabilistic approach and deep learning,” IEEE Transactions on Cybernetics, vol. 52, no. 8, pp. 7265–7276, 2021.

- [31] O. Dagan and N. R. Ahmed, “Conservative filtering for heterogeneous decentralized data fusion in dynamic robotic systems,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022, pp. 5840–5847.

- [32] Y. Petetin, Y. Janati, and F. Desbouvries, “Structured variational bayesian inference for gaussian state-space models with regime switching,” IEEE Signal Processing Letters, vol. 28, pp. 1953–1957, 2021.

- [33] L. Martino and V. Elvira, “Compressed monte carlo with application in particle filtering,” Information Sciences, vol. 553, pp. 331–352, 2021.

- [34] P. G. Bhat, B. N. Subudhi, T. Veerakumar, V. Laxmi, and M. S. Gaur, “Multi-feature fusion in particle filter framework for visual tracking,” IEEE Sensors Journal, vol. 20, no. 5, pp. 2405–2415, 2019.

- [35] Y. Cao, G. Shi, T. Zhang, W. Dong, J. Wu, X. Xie, and X. Li, “Bayesian correlation filter learning with gaussian scale mixture model for visual tracking,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 5, pp. 3085–3098, 2021.

- [36] S. Iyengar, “Hitting lines with two-dimensional brownian motion,” SIAM Journal on Applied Mathematics, vol. 45, no. 6, pp. 983–989, 1985.

- [37] H. Nam and B. Han, “Learning multi-domain convolutional neural networks for visual tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2016, pp. 4293–4302.

- [38] S. M. Marvasti-Zadeh, L. Cheng, H. Ghanei-Yakhdan, and S. Kasaei, “Deep learning for visual tracking: A comprehensive survey,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 5, pp. 3943–3968, 2022.

- [39] N. Wang and D.-Y. Yeung, “Learning a deep compact image representation for visual tracking,” in Advances in Neural Information Processing Systems (NeurIPS), 2013, pp. 809–817.

- [40] D. Held, S. Thrun, and S. Savarese, “Learning to track at 100 fps with deep regression networks,” in European Conference on Computer Vision (ECCV). Springer, 2016, pp. 749–765.

- [41] P. Gao, Y. Ma, C. Li, K. Song, Y. Zhang, F. Wang, and L. Xiao, “Adaptive object tracking with complementary models,” IEICE TRANSACTIONS on Information and Systems, vol. 101, no. 11, pp. 2849–2854, 2018.

- [42] Y. Ma, C. Yuan, P. Gao, and F. Wang, “Efficient multi-level correlating for visual tracking,” in Asian Conference on Computer Vision (ACCV). Springer-Verlag, 2018, pp. 452–465.

- [43] P. Gao, Y. Ma, C. Li, K. Song, F. Wang, and L. Xiao, “A complementary tracking model with multiple features,” in International Conference on Image, Video Processing and Artificial Intelligence (IVPAI), ser. Proceedings of SPIE 10836. SPIE, 2018, pp. 248–252.

- [44] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and F.-F. Li, “Imagenet large scale visual recognition challenge,” International Journal of Computer Vision, vol. 115, no. 3, pp. 211–252, 2015.

- [45] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in European Conference on Computer Vision (ECCV). Springer-Verlag, 2014, pp. 740–755.

- [46] M. Muller, A. Bibi, S. Giancola, S. Alsubaihi, and B. Ghanem, “Trackingnet: A large-scale dataset and benchmark for object tracking in the wild,” in European conference on computer vision (ECCV). Springer, 2018, pp. 300–317.

- [47] Y. Wu, J. Lim, and M.-H. Yang, “Object tracking benchmark,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 37, no. 9, pp. 1834–1848, 2015.

- [48] L. Huang, X. Zhao, and K. Huang, “Got-10k: A large high-diversity benchmark for generic object tracking in the wild,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 5, pp. 1562–1577, 2019.

- [49] H. Fan, H. Bai, L. Lin, F. Yang, P. Chu, G. Deng, S. Yu, M. Huang, J. Liu, Y. Xu et al., “Lasot: A high-quality large-scale single object tracking benchmark,” International Journal of Computer Vision, vol. 129, no. 2, pp. 439–461, 2021.

- [50] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [51] Q. Wu, T. Yang, Z. Liu, B. Wu, Y. Shan, and A. B. Chan, “Dropmae: Masked autoencoders with spatial-attention dropout for tracking tasks,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 14 561–14 571.

- [52] B. Ye, H. Chang, B. Ma, S. Shan, and X. Chen, “Joint feature learning and relation modeling for tracking: A one-stream framework,” in European Conference on Computer Vision. Springer, 2022, pp. 341–357.

- [53] X. Chen, H. Peng, D. Wang, H. Lu, and H. Hu, “Seqtrack: Sequence to sequence learning for visual object tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 14 572–14 581.

- [54] Z. Yang, Y. Wei, and Y. Yang, “Collaborative video object segmentation by multi-scale foreground-background integration,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 9, pp. 4701–4712, 2021.

- [55] ——, “Associating objects with transformers for video object segmentation,” Advances in Neural Information Processing Systems, vol. 34, pp. 2491–2502, 2021.

- [56] Z. Yang and Y. Yang, “Decoupling features in hierarchical propagation for video object segmentation,” Advances in Neural Information Processing Systems, vol. 35, pp. 36 324–36 336, 2022.

- [57] Q. Liu, X. Lu, Z. He, C. Zhang, and W.-S. Chen, “Deep convolutional neural networks for thermal infrared object tracking,” Knowledge-Based Systems, vol. 134, pp. 189–198, 2017.

- [58] M. Schuster and K. K. Paliwal, “Bidirectional recurrent neural networks,” IEEE transactions on Signal Processing, vol. 45, no. 11, pp. 2673–2681, 1997.

- [59] Y. Cai, X. Sui, G. Gu, and Q. Chen, “Learning modality feature fusion via transformer for rgbt-tracking,” Infrared Physics & Technology, vol. 133, p. 104819, 2023.

- [60] Y. Huang, X. Li, R. Lu, and N. Qi, “Rgb-t object tracking via sparse response-consistency discriminative correlation filters,” Infrared Physics & Technology, vol. 128, p. 104509, 2023.

- [61] J. Qiu, R. Yao, Y. Zhou, P. Wang, Y. Zhang, and H. Zhu, “Visible and infrared object tracking via convolution-transformer network with joint multimodal feature learning,” IEEE Geoscience and Remote Sensing Letters, vol. 20, pp. 1–5, 2023.

- [62] H. Li, Y. Zha, H. Li, P. Zhang, and W. Huang, “Efficient thermal infrared tracking with cross-modal compress distillation,” Engineering Applications of Artificial Intelligence, vol. 123, p. 106360, 2023.

- [63] W. Wang, Y. Sun, K. Li, J. Wang, C. He, and D. Sun, “Fully bayesian analysis of the relevance vector machine classification for imbalanced data problem,” CAAI Transactions on Intelligence Technology, vol. 8, no. 1, pp. 192–205, 2023.

- [64] E. Stengård and R. Van den Berg, “Imperfect bayesian inference in visual perception,” PLoS computational biology, vol. 15, no. 4, p. e1006465, 2019.

- [65] J. L. Puga, M. Krzywinski, and N. Altman, “Bayes’ theorem: Incorporate new evidence to update prior information,” Nature Methods, vol. 12, no. 4, pp. 277–279, 2015.

- [66] R. E. Kalman et al., “A new approach to linear filtering and prediction problems,” Journal of basic Engineering, vol. 82, no. 1, pp. 35–45, 1960.

- [67] A. Doucet, N. De Freitas, and N. Gordon, “An introduction to sequential monte carlo methods,” Sequential Monte Carlo methods in practice, pp. 3–14, 2001.

- [68] A. Kutschireiter, S. C. Surace, H. Sprekeler, and J.-P. Pfister, “Nonlinear bayesian filtering and learning: a neuronal dynamics for perception,” Scientific reports, vol. 7, no. 1, p. 8722, 2017.

- [69] E. Pei, Y. Zhao, M. C. Oveneke, D. Jiang, and H. Sahli, “A bayesian filtering framework for continuous affect recognition from facial images,” IEEE Transactions on Multimedia, 2022.

- [70] S. Wang, J. Gao, B. Li, and W. Hu, “Narrowing the gap: Improved detector training with noisy location annotations,” IEEE Transactions on Image Processing, vol. 31, pp. 6369–6380, 2022.

- [71] F. Alrowais, R. Marzouk, F. N. Al-Wesabi, and A. M. Hilal, “Hand gesture recognition for disabled people using bayesian optimization with transfer learning.” Intelligent Automation & Soft Computing, vol. 36, no. 3, 2023.

- [72] M. A. Lee, B. Yi, R. Martín-Martín, S. Savarese, and J. Bohg, “Multimodal sensor fusion with differentiable filters,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 10 444–10 451.

- [73] W. Yu, Y. Shao, J. Xu, and C. Mechefske, “An adaptive and generalized wiener process model with a recursive filtering algorithm for remaining useful life estimation,” Reliability Engineering & System Safety, vol. 217, p. 108099, 2022.

- [74] F. Su, J. Chao, P. Liu, B. Zhang, N. Zhang, Z. Luo, and J. Han, “Prognostic models for breast cancer: based on logistics regression and hybrid bayesian network,” BMC Medical Informatics and Decision Making, vol. 23, no. 1, p. 120, 2023.

- [75] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556v6, 2015.

- [76] P. Gao, Y. Ma, R. Yuan, L. Xiao, and F. Wang, “Learning cascaded siamese networks for high performance visual tracking,” in IEEE International Conference on Image Processing (ICIP). IEEE, 2019, pp. 3078–3082.

- [77] A. Vedaldi and K. Lenc, “Matconvnet: Convolutional neural networks for matlab,” in Proceedings of the 23rd ACM international conference on Multimedia, 2015, pp. 689–692.

- [78] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems (NeurIPS), 2012, pp. 1097–1105.

- [79] J. Valmadre, L. Bertinetto, J. Henriques, A. Vedaldi, and P. H. S. Torr, “End-to-end representation learning for correlation filter based tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017, pp. 2805–2813.

- [80] Q. Guo, W. Feng, C. Zhou, R. Huang, L. Wan, and S. Wang, “Learning dynamic siamese network for visual object tracking,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 1763–1771.

- [81] L. Zhang, A. Gonzalez-Garcia, J. Van De Weijer, M. Danelljan, and F. S. Khan, “Synthetic data generation for end-to-end thermal infrared tracking,” IEEE Transactions on Image Processing, vol. 28, no. 4, pp. 1837–1850, 2018.

- [82] X. Li, Q. Liu, N. Fan, Z. He, and H. Wang, “Hierarchical spatial-aware siamese network for thermal infrared object tracking,” Knowledge-Based Systems, vol. 166, pp. 71–81, 2019.

- [83] M. Danelljan, G. Häger, F. S. Khan, and M. Felsberg, “Learning spatially regularized correlation filters for visual tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2015, pp. 4310–4318.

- [84] X. Li, C. Ma, B. Wu, Z. He, and M.-H. Yang, “Target-aware deep tracking,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 1369–1378.

- [85] Y. Song, C. Ma, X. Wu, L. Gong, L. Bao, W. Zuo, C. Shen, R. W. Lau, and M.-H. Yang, “Vital: Visual tracking via adversarial learning,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 8990–8999.

- [86] N. Wang, Y. Song, C. Ma, W. Zhou, W. Liu, and H. Li, “Unsupervised deep tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 1308–1317.

- [87] X. Chen, B. Yan, J. Zhu, D. Wang, X. Yang, and H. Lu, “Transformer tracking,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2021, pp. 8126–8135.

- [88] Y. Cui, C. Jiang, L. Wang, and G. Wu, “Mixformer: End-to-end tracking with iterative mixed attention,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 13 608–13 618.

- [89] Z. Song, J. Yu, Y.-P. P. Chen, and W. Yang, “Transformer tracking with cyclic shifting window attention,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 8791–8800.