Improving Weakly-supervised Object Localization

via Causal Intervention

Abstract.

The recently emerged weakly-supervised object localization (WSOL) methods can learn to localize an object in the image only using image-level labels. Previous works endeavor to perceive the interval objects from the small and sparse discriminative attention map, yet ignoring the co-occurrence confounder (e.g., duck and water), which makes the model inspection (e.g., CAM) hard to distinguish between the object and context. In this paper, we make an early attempt to tackle this challenge via causal intervention (CI). Our proposed method, dubbed CI-CAM, explores the causalities among image features, contexts, and categories to eliminate the biased object-context entanglement in the class activation maps thus improving the accuracy of object localization. Extensive experiments on several benchmarks demonstrate the effectiveness of CI-CAM in learning the clear object boundary from confounding contexts. Particularly, on the CUB-200-2011 which severely suffers from the co-occurrence confounder, CI-CAM significantly outperforms the traditional CAM-based baseline ( vs in Top-1 localization accuracy). While in more general scenarios such as ILSVRC 2016, CI-CAM can also perform on par with the state of the arts.

1. Introduction

Object localization (Tompson et al., 2015; Choudhuri et al., 2018) aims to indicate the category, the spatial location and scope of an object in a given image, in forms of bounding box (Everingham et al., 2010; Russakovsky et al., 2015). This task has been studied extensively in the computer vision community (Tompson et al., 2015) due to its broad applications, such as scene understanding and autonomous driving. Recently, the techniques based on deep convolutional neural networks (DCNNs) (Simonyan and Zisserman, 2014; Szegedy et al., 2015; He et al., 2016; Luo et al., 2021, 2018) promote the localization performance to a new level. However, this performance promotion is at the price of huge amounts of fine-grained human annotations(Luo et al., 2019, 2020). To alleviate such a heavy burden, weakly-supervised object localization (WSOL) has been proposed by only resorting to image-level labels.

To capitalize on the image-level labels, the existing studies (Selvaraju et al., 2017; Diba et al., 2017; Kim et al., 2017; Wei et al., 2018; Shao et al., 2021) follow the Class Activation Mapping (CAM) approach (Zhou et al., 2016) to generate class activation maps first and segment the highest activation area for a coarse localization. Albeit, CAM is initially designed for the classification task and tends to focus only on the most discriminative feature to increase its classification accuracy. Targeting on this issue, recent prevailing works (Diba et al., 2017; Kim et al., 2017; Wei et al., 2018; Zhang et al., 2018; Gao et al., 2019; Mai et al., 2020; Yang et al., 2020a) endeavor to perceive the interval objects from the small and sparse “discriminative regions”. On one hand, they adjust the network structure to make the detector more tailored for object localization in a weak supervision setting. For example, some methods (Diba et al., 2017; Wei et al., 2018) use a three stages network structure to continuously optimize the prediction results by training the current stage using the output of the previous stages as supervision. Besides, some methods (Kim et al., 2017; Zhang et al., 2018; Gao et al., 2019; Mai et al., 2020) use two parallel branches that its first branch is responsible for digging out the most discriminative small regions, and the second branch is responsible for detecting the second discriminative large regions. On the other hand, they also make full use of the image information to improve the prediction results. For example, (Wei et al., 2018) and NL-CCAM (Yang et al., 2020a) utilize the contextual information of surrounding pixels and the activation maps of low probability class, respectively.

In contrast to the prevailing efforts focusing on the most discriminative feature, in this work we target another key issue which we called “entangled context”. The reason behind this issue is that the objects usually co-occur with a certain background. For example, if most “duck” appears concurrently with “water” in the images, these two concepts would be inevitably entangled and a classification model would wrongly generate ambiguous boundary between “duck” and “water” with only image-level supervision. In contrast to the vanilla CAM which yields a relatively small bounding box on the small discriminative region, we notice that our targeted problem would cause a biased bounding box that includes the wrongly entangled background, which impairs the localization accuracy in terms of the object range. Consequently, we argue that resolving the “entangled context” issue is vital for WSOL but remains unnoticed and unexplored.

In this paper, we propose a principled solution, dubbed CI-CAM, tailored for WSOL based on Causal Inference (Pearl, 2014) (CI). CI-CAM ascribes the “entangled context” issue to the frequently co-occurred background that misleads the image-level classification model to learn spurious correlations between pixels and labels. To find those intrinsic pixels which truly cause the image-level label, CI-CAM first establishes a structural causal model (SCM) (Pearl et al., 2016). Based on SCM, CI-CAM further regards the object context as a confounder and explores the causalities among image features, contexts, and labels to eliminate the biased co-occurrence in the class activation maps. More specifically, the CI-CAM causal context pool accumulates the contextual information of each image for each category and employs it as attention in convolutional layers to enhance the feature representation for making feature boundary clearer. To our knowledge, we are making an early attempt to apprehend and approach the “entangled context” issue for WSOL. To sum up, the contributions of this paper are as follows.

-

•

We are among the pioneers to concern and reveal the “entangled context” issue of WSOL that remains unexplored by prevailing efforts.

-

•

We propose a principled solution for the “entangled context” issue based on causal inference, in which we pinpoint the context as a confounder and eliminate its negative effects on image features via backdoor adjustment (Pearl et al., 2016).

-

•

We design a new network structure, dubbed CI-CAM, to embed the causal inference into the WSOL pipeline with an end-to-end scheme.

-

•

The proposed CI-CAM achieves state-of-the-art performance on the CUB-200-2011 dataset (Wah et al., 2011), which significantly outperforms the baseline method by and in terms of classification and localization accuracy, respectively. While in more general scenarios such as ILSVRC 2016 (Russakovsky et al., 2015) which suffer less from the “entangled context” due to the huge amount of images and various backgrounds, CI-CAM can also perform on par with the state of the arts.

2. Related Work

2.1. Weakly-supervised Object Localization

Since CAM (Zhou et al., 2016) is prone to bias to the most discriminative part of the object rather than the integral object, the research attention of most of the current methods is how to improve the accuracy of object localization. These methods can be broadly categorized into two groups: enlarging the proposal region and discriminative region removal.

1) Enlarging proposal region: enlarging the box size appropriately of the initial prediction box (Diba et al., 2017; Wei et al., 2018). WCCN (Diba et al., 2017) introduces three cascaded networks trained in an end-to-end pipeline. The latter stage continuously enlarges and refines the output proposals of its previous stage. (Wei et al., 2018) selects the box by comparing the mean pixel confidence values of the initial prediction region and its surrounding region. If the gap of the mean values of two regions is large, the initial prediction region is the final prediction box; otherwise, the surrounding region.

2) Discriminative region removal: detecting the bigger region after removing the most discriminative region (Kim et al., 2017; Zhang et al., 2018; Choe and Shim, 2019; Mai et al., 2020). TP-WSL (Kim et al., 2017) first detects the most discriminative region in the first network, Then, it erases this region of the conv5-3 feature maps in the second network (e.g., zero). ACoL (Zhang et al., 2018) uses the masked feature maps by erasing the most discriminative region discovered in the first classifier as the input feature maps of the second classifier. ADL (Choe and Shim, 2019) stochastically produces an erased mask or an importance map at each iteration as a final attention map projected in the feature maps of images. EIL (Mai et al., 2020) is an adversarial erasing method that simultaneously computes the erased branch and the unerased branch by sharing one classifier.

The above methods basically focus on the poor localization caused by the most discriminative part of the object. However, they ignore the problem of the fuzzy boundary between the objects and the co-occur certain context background. For example, if most “duck” appears concurrently with “water” in the images, these two concepts would be inevitably entangled and wrongly generate ambiguous boundaries using only image-level supervision.

2.2. Causal Inference

Causal inference (Sobel, 1996; Yao et al., 2020; Pearl et al., 2009) is a critical research topic across many domains, such as statistics (Pearl et al., 2016), politics (Keele, 2015), psychology, and epidemiology (MacKinnon et al., 2007; Richiardi et al., 2013). The purpose of causal inference is to give the model the ability to pursue causal effects: we can eliminate false bias (Bareinboim and Pearl, 2012), clarify the expected model effects (Besserve et al., 2018), and modularize reusable features to make them well generalized (Parascandolo et al., 2018). Nowadays, causal inference is used repeatedly in computer vision tasks (Niu et al., 2020; Chen et al., 2020; Qi et al., 2020; Tang et al., 2020a, b; Wang et al., 2020; Yang et al., 2020b; Yue et al., 2020; Zhang et al., 2020). Specifically, Zhang et al. (Zhang et al., 2020) utilize a SCM (Didelez and Pigeot, 2001; Pearl et al., 2016) to deeply analyze the causalities among image features, contexts, and class labels and propose a new network: Context Adjustment (CONTA) that achieves the new state-of-the-art performance in weakly-supervised semantic segmentation task. Yue et al. (Yue et al., 2020) use the causal intervention in few-shot learning. They uncover the pre-trained knowledge is indeed a confounder that limits the performance. And they propose a novel FSL paradigm: Interventional Few-Shot Learning (IFSL), which is implemented via the backdoor adjustment (Pearl et al., 2016). Tang et al. (Tang et al., 2020a) show that the SGD momentum is essentially a confounder in long-tailed classification by using a SCM.

In our work, we also leverage a SCM (Pearl et al., 2016) to analyze the causalities among image features, contexts, and class labels, we find that context is a confounder factor. In §3.4, we will introduce a causal context pool that is used for eliminating the negative effects of the context and keeping its positive effects.

3. Methodology

In this section, we describe the details of the CI-CAM method as shown in Figure 3. We first introduce the preliminaries of CI-CAM including problem settings, causal inference, and baseline method in §3.1. Second, we formulate the causalities among pixels, context, and labels with a SCM in §3.2. Based on SCM, we approach the “entangled context” issue in a principled way via causal inference in §3.3. We design the network structure of CI-CAM to embed causal inference in the WSOL pipeline detailed in §3.4, at the core of which is the causal context pool. Finally, we give the training objective of CI-CAM in §3.5.

3.1. Preliminaries

Problem Settings. Before presenting our method, we first introduce the problem settings of WSOL formally. Given an image , WSOL targeting at classifying and locating one object in terms of the class label and the bounding box. However, only image-level labels can be accessed during the training phase.

Causal Inference. Causal inference enables to equip the model with the ability to pursue causal effects: it can eliminate false bias (Bareinboim and Pearl, 2012) as well as clarify the expected model effects (Besserve et al., 2018). SCM (Pearl et al., 2016) is a directed graph in which each node represents each participant of the model, and each link denotes the causalities between the two nodes. Nowdays, SCM is widely used in causal inference scenes (Yue et al., 2020; Zhang et al., 2020; Tang et al., 2020a). Backdoor adjustment (Pearl et al., 2016) is responsible for finding the wrong impact between two nodes and eliminating this issue by leveraging the three do-calculus rules (Neuberg, 2003).

Baseline Method. Class activation maps (CAMs) are widely employed for locating the object boxes in the WSOL task. Yang et al. (Yang et al., 2020a) argue that using only one activation map of the highest probability class for segmenting object boxes is problematic since it often biases into limited regions or sometimes even highlights background regions. Based on such observation, they propose the NL-CCAM (Yang et al., 2020a) method to combine all activation maps from the highest to the lowest probability class to a localization map using a specific combinational function and achieves good localization performance.

Based on vanilla fully convolutional network (FCN)-based backbone, e.g., VGG16 (Simonyan and Zisserman, 2014), NL-CCAM (Yang et al., 2020a) inserts four non-local blocks before every bottleneck layer excluding the first bottleneck layer simultaneously to produce a non-local fully convolutional network (NL-FCN). Given an image , it is fed into the NL-FCN to produce its feature maps , where is the number of channels and is the spatial size. Then, the feature maps are forwarded to a global average pooling (GAP) layer followed by a classifier with a fully connected layer. The prediction scores are computed by using a softmax layer on the top of the classifier for classification. The weight matrix of the classifier is denoted as , where is the number of image classes. Therefore, the activation maps of class among class activation maps (CAMs) proposed in (Zhou et al., 2016) are given as follows.

| (1) |

where .

NL-CCAM (Yang et al., 2020a) produces a localization map by using a combinational function in CAMs instead of using the activation map of the highest probability class among CAMs. Firstly, it ranks the activation maps from the highest probability class to the lowest and uses to denote the activation map of the highest probability class. The class label with the highest probability is computed as follows.

| (2) |

where . Then it combines the class activation maps to a localization map as follows.

| (3) |

where is a combinational function. Finally, it segments the localization map using a thresholding technique proposed in (Zhou et al., 2016) to generate a bounding box for object localization.

Our method is based on NL-CCAM (Yang et al., 2020a) but introduces substantial improvements. We equip the baseline network with the ability of causal inference to tackle the “entangled context” issue, which will be detailed in the following.

3.2. Structural Causal Model

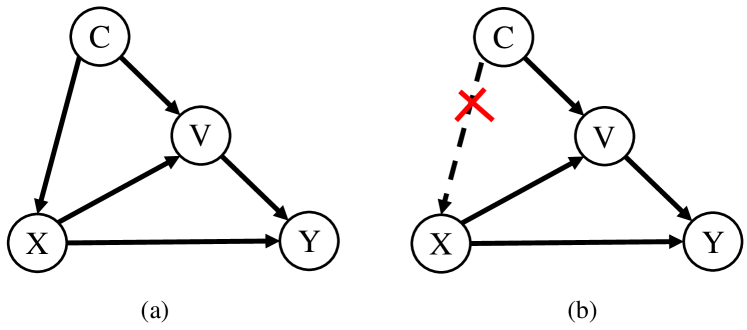

In this section, we will reveal the reason why the context hurts the object localization quality. We formulate the causalities among image features , context confounder , and image-level labels , with a structural causal model (SCM) (Pearl et al., 2016) shown in Figure 2 (a). The direct links denote the causalities between the two nodes: cause effect.

: This link indicates that the backbone generates feature maps under the effect of context . Although the confounder context is helpful for a better association between the image features and labels via a model , e.g., it is likely a “duck” when seeing a “water” region, mistakenly associates non-causal but positively correlated pixels to labels, e.g., the “water” region wrongly belongs to “duck”. That is one reason for the inaccurate localization in WSOL. Fortunately, as we will introduce later in §3.4, we can avoid it by using a causal context pool in causal intervention.

: is an image specific-representation using the contextual templates from . For example, tells us that the shape and location of a “duck” (foreground) in a scene (background). Note that this assumption is not adhoc in our model, it underpins almost every concept learning method from the classic Deformable Part Models (Felzenszwalb et al., 2009) to modern CNNs (Girshick et al., 2015).

: These links indicate that and together affect the label of an image. denotes that the contextual templates directly affect the image label. It is worth noting that even if we do not explicitly take as an input for the WSOL model, still holds. On the contrary, if in Figure 2 (a), the only path left from to : . Considering the effect of context on image features , we will cut off the link of between . Then, no context are allowed to contribute to the image label by training , which results in never uncovering the context. So, WSOL would be impossible.

So far, we have pinpointed the role of context played in the causal graph of image-level classification in Figure 2 (a). Next, we will introduce a causal intervention method to remove the confounding effect.

3.3. Causal Intervention for WSOL

We propose to use based on the backdoor adjustment (Pearl et al., 2016) as the new image-level classifier, which removes the confounder and pursues the true causality from to shown in Figure 2 (b). By this way, we can achieve better classification and localization in WSOL. The key idea is to 1) cut off the link , and 2) stratify into pieces , and denotes the class context. Formally, we have

| (4) |

where abstractly represents that is formed by the combination of and , and is the number of image class. As does not affect , it guarantees to have a fair opportunity to incorporate every context into ’s prediction, subject to a prior . To simplify the forward propagation of the network, we adopt the Normalized Weighted Geometric Mean (Xu et al., 2015) to optimize Eq. (4) by moving the outer sum into the feature level

| (5) |

Therefore, we only need to feed-forward the network once instead of times. Since the number of samples for each class in the dataset is roughly the same, we set to uniform . After further optimizing Eq. (5), we have

| (6) |

where denotes projection. So far, the “entangled context” issue has been transferred into calculating . We will introduce a causal context pool to represent in §3.4.

3.4. Network Structure

In this section, we implement causal inference for WSOL with a tailored network structure, at the core of which is a causal context pool. The main idea of the causal context pool is to accumulate all contexts of each class, and then re-project the contexts to the feature maps of convolutional layers shown in Eq. (6) to pursue the pure causality between the cause and the effect . Figure 3 illustrates the overview of CI-CAM that includes four parts: backbone, CAM module, causal context pool, and combinational part.

Backbone. Inherited from the baseline method, we design our backbone by inserting multiple non-local blocks at both low- and high-level layers of a FCN-based network simultaneously. It acts as a feature extractor that takes the RGB images as input and producing high-level position-aware feature maps.

CAM module. It includes a global average pooling (GAP) layer and a classifier with a fully connected layer (Zhou et al., 2016). Image feature maps generated by the backbone are fed into GAP and classifier to produce prediction scores . Then, the CAM network multiplies the weight of the classifier to to produce class activation maps shown in Eq. (1). In our model, we use two CAM modules with shared weights. The first CAM module is designed to produce initial prediction scores and class activation maps , and the second CAM network is responsible for producing more accurate prediction scores and class activation maps using the feature maps enhanced by the causal context pool.

Causal context pool. We maintain a causal context pool during the network training phase, where denotes the context of all class images. ceaselessly stores all contextual information maps of each class by accumulating the activation map of the highest probability class. Then, it projects all contexts of each class as attentions onto the feature maps of the last convolutional layer to produce enhanced feature maps. The idea behind using a causal context pool is not only to cut off the negative effect of entangled context on image feature maps but also to spotlight the positive region of the image feature maps for boosting localization performance.

Combinational part. The input of the combinational part is class activation maps generated from the CAM module, and the corresponding output is a localization map calculated by Eq. (3): First, the combinational part ranks the activation maps from the highest probability class to the lowest. Second, it combines these sorted activation maps by a combinational function as Eq. (3).

With all the key modules presented above, we would give a brief illustration of the data flow in our network. Given an image , we first forward to the backbone to produce feature maps . is then fed into the following two parallel CAM branches. The first CAM branch produces initial prediction scores and class activation maps . Then, the causal context pool would be updated by fusing the activation map of the highest probability class in as follows:

| (7) |

where , denotes the update rate, and denotes the batch normalization operation. The second branch is responsible for producing more accurate prediction scores and class activation maps . The input of the second branch is enhanced feature maps projected by the context among causal context pool of the highest probability class generated from the first branch. More concretely, the feature enhancement can be calculated as

| (8) |

where denotes matrix dot product. In the combinational part, we first build a localization map by aggregating all activation maps from the highest to the lowest probability class using a specific combinational function (Yang et al., 2020a) in Eq. (3). Then, we use the simple thresholding technique proposed by (Zhou et al., 2016) to generate a bounding box from the localization map. Finally, the bounding box and prediction scores as the final prediction of CI-CAM.

3.5. Training Objective

During the phase of training, our proposed network learns to minimize image classification losses for both classification branches. Given an image , we can obtain initial prediction scores and more accurate prediction scores of the two classifiers shown in Figure 3. We follow a naive scheme to train the two classifiers together in an end-to-end pipeline using the following loss function .

| (9) |

where is the ground-truth label of an image.

4. Experiments

4.1. Datasets and Evaluation Metrics

Datasets. The proposed CI-CAM was evaluated on two public datasets, i.e., CUB-200-2011 (Wah et al., 2011) and ILSVRC 2016 (Russakovsky et al., 2015). 1) CUB-200-2011 is an extended version of Caltech-UCSD Birds 200 (CUB-200) (Welinder et al., 2010) containing bird species which focuses on the study of subordinate categorization. Based on the CUB-200, CUB-200-2011 adds more images for each category and labels new part localization annotations. CUB-200-2011 contains images in the training set and images in the test set. Each image of CUB-200-2011 is annotated by the bounding boxes, part locations, and attribute labels. 2) ILSVRC 2016 is the dataset originally prepared for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It contains million images of categories in the training set, in the validation set and images in the test set. For both datasets, we only utilize the image-level classification labels for training, as constrained by the problem setting in WSOL.

Evaluation Metrics. We leverage the classification accuracy (cls) and localization accuracy (loc) as the evaluation metrics for WSOL. The former includes Top-1 and Top-5 classification accuracy, while the latter includes Top-1, Top-5, and GT-know localization accuracy. Top-1 classification accuracy denotes the accuracy of the highest prediction score (likewise for localization accuracy). Top-5 classification accuracy denotes that if one of the five predictions with the highest score is correct, it counts as correct (likewise for localization accuracy). GT-know localization accuracy is the accuracy that only considers localization regardless of classification result compared to Top-1 localization accuracy (Mai et al., 2020).

4.2. Implementation Details

We use PyTorch and PaddlePaddle for implementation, both achieve similar performance. We adopt the VGG16 (Simonyan and Zisserman, 2014) pre-trained on ImageNet (Russakovsky et al., 2015) as the backbone. We insert four non-local blocks to the backbone before every bottleneck layer excluding the first one. The newly added blocks are randomly initialized except for the batch normalization layers in the non-local blocks, which are initialized as zero. We use Adam (Kingma and Ba, 2014) to optimize CI-CAM with , . We finetune our network with the learning rate , batch size , update rate , and epoch on the CUB-200-2011 (and learning rate , batch size , update rate , and epoch on the ILSVRC 2016). At test stage, we resize images to . For object localization, we produce the localization map with the threshold , followed by segmenting it to generate a bounding box. The source code will be made public available.

| Methods | Top-1 Cls(%) | Top-1 Loc(%) |

|---|---|---|

| VGG-CAM (Zhou et al., 2016) | 71.24 | 44.15 |

| VGG-ACoL (Zhang et al., 2018) | 71.90 | 45.92 |

| VGG-ADL (Choe and Shim, 2019) | 65.27 | 52.36 |

| VGG-DANet (Xue et al., 2019) | 75.40 | 52.52 |

| VGG-NL-CCAM (Yang et al., 2020a) | 73.4 | 52.4 |

| VGG-MEIL (Mai et al., 2020) | 74.77 | 57.46 |

| VGG-Rethinking-CAM (Bae et al., 2020) | 74.91 | 61.3 |

| VGG-Baseline∗ (Yang et al., 2020a) | 74.45 | 52.54 |

| VGG-CI-CAM(ours) | 75.56 ( 1.11 ) | 58.39 ( 5.85 ) |

| Methods | Top-1 Cls(%) | Top-1 Loc(%) |

|---|---|---|

| VGG-CAM (Zhou et al., 2016) | 66.6 | 42.8 |

| VGG-ACoL (Zhang et al., 2018) | 67.5 | 45.83 |

| VGG-ADL (Choe and Shim, 2019) | 69.48 | 44.92 |

| VGG-NL-CCAM (Yang et al., 2020a) | 72.3 | 50.17 |

| VGG-MEIL (Mai et al., 2020) | 70.27 | 46.81 |

| VGG-Rethinking-CAM (Bae et al., 2020) | 67.22 | 45.4 |

| VGG-Baseline∗ (Yang et al., 2020a) | 72.15 | 48.55 |

| VGG-CI-CAM(ours) | 72.62 ( 0.47 ) | 48.71 ( 0.16 ) |

4.3. Comparison with State-of-the-Art Methods

We compare CI-CAM with other state-of-the-art methods on the CUB-200-2011 and ILSVRC 2016 shown in Table 1 and Table 2.

on the CUB-200-2011, we observe that our CI-CAM significantly outperforms the baseline and can be on par with the existing methods under all the evaluation metrics: CI-CAM yields Top-1 classification accuracy of , which is higher than the baseline and brings about improvement to the baseline on Top-1 localization accuracy. Compared with the current state-of-the-art method in terms of classification accuracy, i.e., DANet (Xue et al., 2019), CI-CAM outperforms it by . While under the localization metric, CI-CAM brings a significant performance gain of over DANet. Compared with the current state-of-the-art method in terms of Top-1 localization accuracy, i.e., Rethinking-CAM, our model yields a slightly lower localization accuracy but outperforms it on classification. In conclusion, the introduction of causal inference to WSOL task is effective for on both object localization and classification.

For more general scenarios as in ILSVRC 2016 which suffer less from the “entangled context” due to the huge amount of images and various backgrounds, CI-CAM can also perform on par with the state of the arts. Compared with the NL-CCAM model (Baseline re-implemented by ourselves), which enjoys state-of-the-art classification and localization accuracy simultaneously, CI-CAM yields a slightly higher result under both metrics. Compared with MEIL (Xue et al., 2019), CI-CAM brings a significant performance gain of and in classification and localization accuracy over it, respectively.

| Dataset | TwoC | ConPool | Top-1 Cls(%) | Top-5 Cls(%) | Top-1 Loc(%) | Top-5 Loc(%) | GT-know Loc(%) |

|---|---|---|---|---|---|---|---|

| CUB-200-2011 test set | 74.43 | 91.85 | 57.16 | 70.12 | 75.37 | ||

| 75.56 | 92.03 | 58.39 | 70.54 | 75.68 | |||

| ILSVRC 2016 val set | 72.60 | 90.90 | 48.25 | 58.20 | 61.82 | ||

| 72.62 | 90.93 | 48.71 | 58.76 | 62.36 |

4.4. Ablation Study

To better understand the effectiveness of the causal context pool module, we conducted several ablation studies on the CUB-200-2011 and ILSVRC 2016 using VGG16. The results of our ablation studies are illustrated in Table 3.

Comparing the first-row and the second-row experimental results on the CUB-200-2011 dataset, employing a causal context pool can comprehensively improve the accuracy of classification and localization. Especially it has increased by and in the Top-1 classification accuracy and the Top-1 localization accuracy, respectively. Meanwhile, it also improves by , , and in the Top-5 classification accuracy, Top-5 localization accuracy, and GT-know localization accuracy, respectively. In addition, comparing the third-row and the fourth-row experimental results on the ILSVRC 2016 dataset, we are surprised to find that the causal context pool also performs well on the ILSVRC 2016 dataset which suffers less from the “entangled context” due to the huge amount of images and various backgrounds. More specifically, using a causal context pool has improved by , , and in the Top-1, Top-5, and GT-know localization accuracy. At the same time, the Top-1 and Top-5 classification accuracy has been increased by and by using a causal context pool, respectively.

In conclusion, employing a causal context pool can improve classification and localization together on the CUB-200-2011 dataset. And the main improvement of employing the causal context pool on the ILSVRC 2016 dataset is localization accuracy. The experimental results from Table 3 verify that the introduced causal context pool module can boost the accuracy in the WSOL task.

| Update rate | Top-1 Cls(%) | Top-1 Loc(%) | GT-know Loc |

|---|---|---|---|

| 0.001 | 74.28 | 58.28 | 76.37 |

| 0.002 | 74.77 | 59.23 | 77.80 |

| 0.005 | 74.49 | 58.27 | 76.94 |

| 0.01 | 75.56 | 58.39 | 75.68 |

| 0.02 | 74.58 | 57.90 | 76.54 |

| 0.04 | 74.53 | 59.03 | 77.63 |

| 0.08 | 74.20 | 58.70 | 77.77 |

4.5. Analysis

As shown in the Eq. (7), we introduce a hyperparameter for updating the causal context pool. Besides, we use a necessary hyperparameter segmentation threshold for segmenting bounding box. Therefore, we will discuss their effects on detection performance when and take different values in this section on the CUB-200-2011 dataset.

1) Update rate . To inspect the effect of the update rate on classification and localization accuracy, we report the results of using different values of shown in Table 4. By comparing the results we can observe that the update rate has a great impact on the classification and localization accuracy of the model, especially in GT-know localization ( vs ). The highest Top-1 classification accuracy outperforms the lowest Top-1 classification accuracy by , and the highest Top-1 localization accuracy outperforms the lowest Top-1 localization accuracy by . However, in these experiments, there is no that performs best in both classification and localization. Therefore, when we choose the update rate , we should determine it according to the specific needs of the task.

2) Segmentation threshold . Although does not participate in the training of the model, it still plays a very important role in object localization. If the value of is low, the detector tends to introduce some highlighted background area around the object. So previous methods (Zhou et al., 2016; Zhang et al., 2018) used segmentation threshold , and NL-CCAM used segmentation threshold . Based on a large segmentation threshold, they tend to filter out low-light object regions and focus on the most discriminative part of the object rather than the whole object. Fortunately, we leverage a causal context pool to resolve the above problem by making the boundary of object and co-occurrence context clearer.

To inspect the effect of causal context pool on classification and localization accuracy, we test the different segmentation threshold shown in Figure 4. Firstly, we find that the best localization of NL-CCAM is . When becomes smaller or larger, it will reduce the localization accuracy. Secondly, we can observe that the localization accuracy of CI-CAM is higher than that of NL-CCAM. Especially we obtain the highest Top-1 localization, Top-5 localization, and GT-know localization accuracy when , which means that CI-CAM can locate a larger part of the object without including the background. Therefore, we can indirectly conclude that CI-CAM is better in dealing with the boundary between the instance and the co-occurrence context background. To illustrate the effect of CI-CAM more vividly, we present the localization maps of the CAM, NL-CCAM, and our model on the CUB-200-2011 as well as the ILSVRC 2016 datasets in Figure 5. Our visualization in Figure 5 indicates our method is effective in dealing with the co-occurrence context.

5. Conclusions

In this paper, we targeted the “entangled context” problem in the WSOL task, which remains unnoticed and unexplored by existing efforts. Through analyzing the causal relationship between image features, context, and image labels using a structural causal model, we pinpointed the context as a confounder and tried to utilize probability formula transformation to cut off the link between context and image features. Based on the causal analysis, we proposed an end-to-end CI-CAM model, which uses a causal context pool to accumulate all contexts of each class, and then re-project the fused contexts to the feature maps of convolutional layers to make the feature boundary clearer. To our knowledge, we have made a very early attempt to apprehend and approach the “entangled context” issue for WSOL. Extensive experiments have demonstrated that the “entangled context” is a practical issue within the WSOL task and our proposed method is effective towards it: CI-CAM achieved the new state-of-the-art performance on the CUB-200-2011 and performed on par with the state of the arts.

6. Acknowledge

This work was supported by the National Natural Science Foundation of China (U19B2043, 61976185), Zhejiang Natural Science Foundation (LR19F020002), Zhejiang Innovation Foundation(2019R52002), CCF-Baidu Open Fund under Grant No. CCF-BAIDUOF2020016, and the Fundamental Research Funds for the Central Universities.

References

- (1)

- Bae et al. (2020) Wonho Bae, Junhyug Noh, and Gunhee Kim. 2020. Rethinking class activation mapping for weakly supervised object localization. In European Conference on Computer Vision. Springer, 618–634.

- Bareinboim and Pearl (2012) Elias Bareinboim and Judea Pearl. 2012. Controlling selection bias in causal inference. In Artificial Intelligence and Statistics. PMLR, 100–108.

- Besserve et al. (2018) Michel Besserve, Arash Mehrjou, Rémy Sun, and Bernhard Schölkopf. 2018. Counterfactuals uncover the modular structure of deep generative models. arXiv preprint arXiv:1812.03253 (2018).

- Chen et al. (2020) Long Chen, Xin Yan, Jun Xiao, Hanwang Zhang, Shiliang Pu, and Yueting Zhuang. 2020. Counterfactual samples synthesizing for robust visual question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10800–10809.

- Choe and Shim (2019) Junsuk Choe and Hyunjung Shim. 2019. Attention-based dropout layer for weakly supervised object localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2219–2228.

- Choudhuri et al. (2018) Sandipan Choudhuri, Nibaran Das, Ritesh Sarkhel, and Mita Nasipuri. 2018. Object localization on natural scenes: A survey. International Journal of Pattern Recognition and Artificial Intelligence 32, 02 (2018), 1855001.

- Diba et al. (2017) Ali Diba, Vivek Sharma, Ali Pazandeh, Hamed Pirsiavash, and Luc Van Gool. 2017. Weakly supervised cascaded convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 914–922.

- Didelez and Pigeot (2001) Vanessa Didelez and Iris Pigeot. 2001. Judea pearl: Causality: Models, reasoning, and inference. Politische Vierteljahresschrift 42, 2 (2001), 313–315.

- Everingham et al. (2010) Mark Everingham, Luc Van Gool, Christopher KI Williams, John Winn, and Andrew Zisserman. 2010. The pascal visual object classes (voc) challenge. IJCV (2010).

- Felzenszwalb et al. (2009) Pedro F Felzenszwalb, Ross B Girshick, David McAllester, and Deva Ramanan. 2009. Object detection with discriminatively trained part-based models. IEEE transactions on pattern analysis and machine intelligence 32, 9 (2009), 1627–1645.

- Gao et al. (2019) Yan Gao, Boxiao Liu, Nan Guo, Xiaochun Ye, Fang Wan, Haihang You, and Dongrui Fan. 2019. C-MIDN: Coupled Multiple Instance Detection Network With Segmentation Guidance for Weakly Supervised Object Detection. In ICCV.

- Girshick et al. (2015) Ross Girshick, Forrest Iandola, Trevor Darrell, and Jitendra Malik. 2015. Deformable part models are convolutional neural networks. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition. 437–446.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In CVPR.

- Keele (2015) Luke Keele. 2015. The statistics of causal inference: A view from political methodology. Political Analysis (2015), 313–335.

- Kim et al. (2017) Dahun Kim, Donghyeon Cho, Donggeun Yoo, and In So Kweon. 2017. Two-phase learning for weakly supervised object localization. In Proceedings of the IEEE International Conference on Computer Vision. 3534–3543.

- Kingma and Ba (2014) Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

- Luo et al. (2020) Yawei Luo, Ping Liu, Tao Guan, Junqing Yu, and Yi Yang. 2020. Adversarial Style Mining for One-Shot Unsupervised Domain Adaptation. In Advances in Neural Information Processing Systems. 20612–20623.

- Luo et al. (2021) Yawei Luo, Ping Liu, Liang Zheng, Tao Guan, Junqing Yu, and Yi Yang. 2021. Category-Level Adversarial Adaptation for Semantic Segmentation using Purified Features. IEEE Transactions on Pattern Analysis & Machine Intelligence (TPAMI) (2021).

- Luo et al. (2019) Yawei Luo, Liang Zheng, Tao Guan, Junqing Yu, and Yi Yang. 2019. Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2507–2516.

- Luo et al. (2018) Yawei Luo, Zhedong Zheng, Liang Zheng, Tao Guan, Junqing Yu, and Yi Yang. 2018. Macro-micro adversarial network for human parsing. In Proceedings of the European conference on computer vision (ECCV). 418–434.

- MacKinnon et al. (2007) David P MacKinnon, Amanda J Fairchild, and Matthew S Fritz. 2007. Mediation analysis. Annu. Rev. Psychol. 58 (2007), 593–614.

- Mai et al. (2020) Jinjie Mai, Meng Yang, and Wenfeng Luo. 2020. Erasing Integrated Learning: A Simple Yet Effective Approach for Weakly Supervised Object Localization. In CVPR.

- Neuberg (2003) Leland Gerson Neuberg. 2003. Causality: Models, Reasoning, and Inference.

- Niu et al. (2020) Yulei Niu, Kaihua Tang, Hanwang Zhang, Zhiwu Lu, Xian-Sheng Hua, and Ji-Rong Wen. 2020. Counterfactual vqa: A cause-effect look at language bias. arXiv preprint arXiv:2006.04315 (2020).

- Parascandolo et al. (2018) Giambattista Parascandolo, Niki Kilbertus, Mateo Rojas-Carulla, and Bernhard Schölkopf. 2018. Learning independent causal mechanisms. In International Conference on Machine Learning. PMLR, 4036–4044.

- Pearl (2014) Judea Pearl. 2014. Interpretation and identification of causal mediation. Psychological methods 19, 4 (2014), 459.

- Pearl et al. (2009) Judea Pearl et al. 2009. Causal inference in statistics: An overview. Statistics surveys 3 (2009), 96–146.

- Pearl et al. (2016) Judea Pearl, Madelyn Glymour, and Nicholas P Jewell. 2016. Causal inference in statistics: A primer. John Wiley & Sons.

- Qi et al. (2020) Jiaxin Qi, Yulei Niu, Jianqiang Huang, and Hanwang Zhang. 2020. Two causal principles for improving visual dialog. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10860–10869.

- Richiardi et al. (2013) Lorenzo Richiardi, Rino Bellocco, and Daniela Zugna. 2013. Mediation analysis in epidemiology: methods, interpretation and bias. International journal of epidemiology 42, 5 (2013), 1511–1519.

- Russakovsky et al. (2015) Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. 2015. Imagenet large scale visual recognition challenge. IJCV (2015).

- Selvaraju et al. (2017) Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. 2017. Grad-cam: Visual explanations from deep networks via gradient-based localization. In ICCV.

- Shao et al. (2021) Feifei Shao, Long Chen, Jian Shao, Wei Ji, Shaoning Xiao, Lu Ye, Yueting Zhuang, and Jun Xiao. 2021. Deep Learning for Weakly-Supervised Object Detection and Object Localization: A Survey. arXiv preprint arXiv:2105.12694 (2021).

- Simonyan and Zisserman (2014) Karen Simonyan and Andrew Zisserman. 2014. Very deep convolutional networks for large-scale image recognition. In arXiv.

- Sobel (1996) Michael E Sobel. 1996. An introduction to causal inference. Sociological Methods & Research 24, 3 (1996), 353–379.

- Szegedy et al. (2015) Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. 2015. Going deeper with convolutions. In CVPR.

- Tang et al. (2020a) Kaihua Tang, Jianqiang Huang, and Hanwang Zhang. 2020a. Long-tailed classification by keeping the good and removing the bad momentum causal effect. arXiv preprint arXiv:2009.12991 (2020).

- Tang et al. (2020b) Kaihua Tang, Yulei Niu, Jianqiang Huang, Jiaxin Shi, and Hanwang Zhang. 2020b. Unbiased scene graph generation from biased training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3716–3725.

- Tompson et al. (2015) Jonathan Tompson, Ross Goroshin, Arjun Jain, Yann LeCun, and Christoph Bregler. 2015. Efficient object localization using convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 648–656.

- Wah et al. (2011) Catherine Wah, Steve Branson, Peter Welinder, Pietro Perona, and Serge Belongie. 2011. The caltech-ucsd birds-200-2011 dataset. (2011).

- Wang et al. (2020) Tan Wang, Jianqiang Huang, Hanwang Zhang, and Qianru Sun. 2020. Visual commonsense r-cnn. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10760–10770.

- Wei et al. (2018) Yunchao Wei, Zhiqiang Shen, Bowen Cheng, Honghui Shi, Jinjun Xiong, Jiashi Feng, and Thomas Huang. 2018. Ts2c: Tight box mining with surrounding segmentation context for weakly supervised object detection. In Proceedings of the European Conference on Computer Vision (ECCV). 434–450.

- Welinder et al. (2010) Peter Welinder, Steve Branson, Takeshi Mita, Catherine Wah, Florian Schroff, Serge Belongie, and Pietro Perona. 2010. Caltech-UCSD birds 200. (2010).

- Xu et al. (2015) Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhudinov, Rich Zemel, and Yoshua Bengio. 2015. Show, attend and tell: Neural image caption generation with visual attention. In International conference on machine learning. PMLR, 2048–2057.

- Xue et al. (2019) Haolan Xue, Chang Liu, Fang Wan, Jianbin Jiao, Xiangyang Ji, and Qixiang Ye. 2019. DANet: Divergent Activation for Weakly Supervised Object Localization. In ICCV.

- Yang et al. (2020a) Seunghan Yang, Yoonhyung Kim, Youngeun Kim, and Changick Kim. 2020a. Combinational Class Activation Maps for Weakly Supervised Object Localization. In WACV.

- Yang et al. (2020b) Xu Yang, Hanwang Zhang, and Jianfei Cai. 2020b. Deconfounded image captioning: A causal retrospect. arXiv preprint arXiv:2003.03923 (2020).

- Yao et al. (2020) Liuyi Yao, Zhixuan Chu, Sheng Li, Yaliang Li, Jing Gao, and Aidong Zhang. 2020. A survey on causal inference. arXiv preprint arXiv:2002.02770 (2020).

- Yue et al. (2020) Zhongqi Yue, Hanwang Zhang, Qianru Sun, and Xian-Sheng Hua. 2020. Interventional few-shot learning. arXiv preprint arXiv:2009.13000 (2020).

- Zhang et al. (2020) Dong Zhang, Hanwang Zhang, Jinhui Tang, Xiansheng Hua, and Qianru Sun. 2020. Causal intervention for weakly-supervised semantic segmentation. arXiv preprint arXiv:2009.12547 (2020).

- Zhang et al. (2018) Xiaolin Zhang, Yunchao Wei, Jiashi Feng, Yi Yang, and Thomas S Huang. 2018. Adversarial complementary learning for weakly supervised object localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1325–1334.

- Zhou et al. (2016) Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. 2016. Learning deep features for discriminative localization. In CVPR.