IMPROVING TRAJECTORY LOCALIZATION ACCURACY VIA DIRECTION-OF-ARRIVAL DERIVATIVE ESTIMATION

Abstract

Sound source localization is crucial in acoustic sensing and monitoring-related applications. In this paper, we do a comprehensive analysis of improvement in sound source localization by combining the direction of arrivals (DOAs) with their derivatives which quantify the changes in the positions of sources over time. This study uses the SALSA-Lite feature with a convolutional recurrent neural network (CRNN) model for predicting DOAs and their first-order derivatives. An update rule is introduced to combine the predicted DOAs with the estimated derivatives to obtain the final DOAs. The experimental validation is done using TAU-NIGENS Spatial Sound Events (TNSSE) 2021 dataset. We compare the performance of the networks predicting DOAs with derivative vs. the one predicting only the DOAs at low SNR levels. The results show that combining the derivatives with the DOAs improves the localization accuracy of moving sources.

Index Terms— Deep learning, Microphone array, SALSA-Lite, Sound event localization and detection (SELD).

1 Introduction

Sound source localization is an integral part of many modern applications such as video conferencing, hearing aids, and human-robot interactions [1, 2]. There are a plethora of localization methods existing in the literature [3, 4, 5, 6, 7, 8]. Recently data-driven methods have shown promising results for sound source localization in reverberant and low signal-to-noise ratio (SNR) scenarios [9, 10, 11, 12]. The deep neural network (DNN) based methods were shown to outperform parametric methods in terms of high resolution and low erroneous DOAs [13, 14, 15, 16, 12, 17].

In the recent past, polyphonic sound event localization and detection (SELD) problems have garnered a lot of attention among researchers, which combine the detection and localization tasks and have many practical applications [18, 11, 14]. Since its introduction, various model architectures and features have been proposed [19, 20, 21, 22, 23]. In this study, we focus on improving the localization accuracy of an existing model [23] by predicting both the DOAs and their derivatives, i.e., changes in DOAs over time. In the real world, the sources move non-linearly and non-uniformly; hence estimating the derivatives potentially improves the model learning resulting in accurate source trajectories. We compare the existing localization models (considering the detection ground truth to be known), which predicts only DOAs, with the model, which predicts both DOAs and their derivatives.

Our experiments reveal that localization can be a challenging task, even for immobile sound sources. This research aims to better understand deep learning-based models for localization task with a special focus on combining DOAs with their derivatives to improve the source trajectories. We hope the analysis will direct the research focus towards localization aspect of SELD task.

The specific contributions of this paper are

(a) deriving an update rule incorporating predicted DOAs and derivatives to improve localization accuracy;

(b) conduct experimental validation using TAU-NIGENS Spatial Sound Events 2021 dataset [24];

(c) carry out performance analysis of the proposed model at different SNR levels by synthetically adding noise;

(d) performance analysis of the proposed model when using the pre-trained model for initialization.

2 Model architecture

2.1 Features

The SALSA-Lite was introduced as an efficient computational version of the Spatial Cue-Augmented Log-Spectrogram (SALSA) feature for MIC (audio format) data [22, 23]. For -channel audio recording, SALSA-Lite is a () channel feature consisting log-power spectrogram with () frequency-normalized interchannel phase differences (NIPDs). The NIPD () approximating the relative distance of arrival (RDOA) can be written as

| (1) | ||||

| (2) |

where is the array response for any arbitrary array structure under the far-field assumption and is the RDOA between the first (reference) and mic. The SALSA-Lite provides the exact time-frequency positioning between the spectrogram and the NIPD resulting in the model being able to localize multiple overlapping sources.

2.2 Architecture

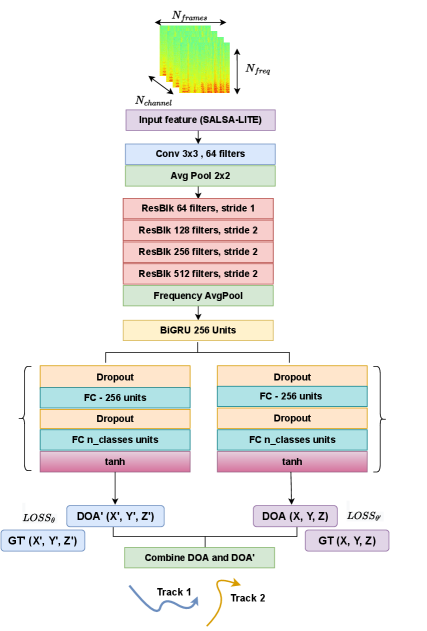

Figure 1 shows the neural network architecture designed to predict both the DOAs and their derivatives simultaneously. Here derivative represents the change in DOAs between two time frames. The SALSA-lite is fed to the CRNN network, which consists of one convolutional layer followed by four ResNet22 blocks [25] in the network body [22, 23].

The output of ResNet block is fed into a two-layer bidirectional Gated Recurrent Unit (GRU) followed by two distinct regression heads for predicting DOAs and their derivatives in Cartesian coordinates (X, Y, and Z), respectively. Unlike the SELD network in [23], we focus only on the localization task and replace the detection head with the regression head, which predicts the derivatives of DOAs at different timestamps as shown in Fig. 1. Along with predicting the intermediate DOAs, the additional derivative information helps to obtain better overall DOA estimates. Once the network predicts the DOAs and their derivatives, the final DOAs are obtained using the following update equation

| (3) |

where is an estimate of the DOA derivative at time and is the first derivative assumed to be zero. We expect that by additionally incorporating derivative predictions, DOA of moving targets can be estimated more accurately. For both the regression heads (predicting DOAs and derivatives), the number of active sources are assumed to be known. The ground truth is used to compute the losses for both the prediction heads. The mean squared error (MSE) loss is minimized while training both the network heads and can be written as

| (4) |

where are true DOAs and their derivatives at different timestamps, and and represent the predicted DOAs and the estimated derivatives, respectively.

3 Simulations and results

3.1 Dataset and training

The TAU-NIGENS Spatial Sound Events 2021 dataset was used for the performance analysis of the proposed models. The dataset consists of 600 recordings (1 minute long and four channels each) with 24 kHz sampling frequency in MIC data format. In this paper, 400 recordings were used for training, and 100 recordings were used each for validation and testing. The audio files consist of various static and moving sources from 12 unique classes. The angular range for azimuth and elevation angles are and , respectively.

For feature extraction, we followed the same setup as in [23], and processed frequencies from 50Hz to 2kHz to avoid the aliasing. While training, Adam optimizer [26] was used, with the initial learning rate as which linearly decreased to over last 15 epochs. A total of 70 epochs with batch size were processed. The validation set was used for model selection, whereas the test data was used for the performance analysis. While generating the ground truth for DOA derivatives, the derivative is considered zero if the source appears for the first time or reappears after 20 or more frames. In all other cases the derivative is the difference between the previous and current DOA.

3.2 Accuracy metric

Following the framework of baseline method [23], the detection ground truth was used to compute the error only for the frames where sources were present. For this study, the error was computed as the DOA error which corresponds to the spatial angular distance between the predicted and true positions, [27, 14].

| (5) |

where and are unit norm vectors of [X, Y, Z] coordinates corresponding to the true and predicted positions, respectively. A source is considered to be localized only when the average (averaged across the active frames) spatial distance between the predicted and true positions is less than ; we call these cases as true positive (TP). The false negative (FN) counts the number of incidences when the averaged spatial distance is more than . The probability of detection () is the fraction of frames where the distance between the predicted and true positions is less than . Note that as the network is designed to provide only one prediction per class, the error was not calculated for the cases where multiple sources were present from the same class in the frame.

3.3 Effect of combining derivative

In this subsection, the effect of combining the predicted derivatives with predicted DOAs is demonstrated. Fig. 2 shows the predicted source trajectories from both Model1 (with derivative estimation) and Model2 (without derivative estimation) along with classwise mean absolute error (MAE) computed for the correctly detected sources for one of the recordings. The cross in MAE plot denotes the cases when the source has not been detected by the models. It can be seen that Model1 detects more number of sources, hence resulting in higher MAE for some classes compared to Model2. It can be observed that by combining the derivatives via the proposed update rule (3), the final DOA exhibits a smoother trajectory since the outliers are eliminated. The update rule is helpful even for static sources. We observed that Model2 gives more erroneous DOAs for static sources than Model1. Overall, Model1’s estimates are closer to true trajectories resulting in higher . For this recording, the average for static and moving sources for Model1 and Model2 are, , , , and , respectively. The total averaged over 100 recordings from the test data is reported in Table 1.

From Table 1, it is evident that both Model1 and Model2 show similar performance for the clean dataset. We observed that Model1’s performance degrades when the network predicts the erroneous DOAs; hence combining them with equal weights leads to incorrect estimates. As a correction step, the current DOA prediction with derivative and the past prediction can be weighted depending on the threshold. A choice must be made depending on confidence in the present and past predictions.

3.4 Effect of transfer learning

To speed up the training process and reduce the risk of overfitting, we repeated the experiments using the pre-trained CRNN weights from an existing SELD model using SALSA-Lite, where the best model was obtained at the 47th epoch [23]. The dataset, architecture, and framework for the pre-trained model detailed in [23] are same as the CRNN body used in this paper. By keeping the CRNN body’s weights fixed using the pre-trained SELD model, the overall is increased by 10 % for both Model1 and Model2 as shown in Table 2. From Fig. 3, it can be observed that Model1 outperforms Model2 with higher and lower DOA error.

| SNR | Model | TPs | TPm | FNs | FNm | Pds | Pdm |

|---|---|---|---|---|---|---|---|

| Clean | Model1 | 28843 | 21523 | 13056 | 9892 | 68.8 | 68.5 |

| Model2 | 27637 | 21389 | 14262 | 10026 | 65.9 | 68 | |

| -2dB | Model1 | 17169 | 13097 | 24730 | 18318 | 40.9 | 41.7 |

| Model2 | 17199 | 12487 | 24700 | 18928 | 41 | 39.7 | |

| -5dB | Model1 | 15812 | 10919 | 26087 | 20496 | 37.7 | 34.7 |

| Model2 | 14136 | 10522 | 27763 | 20893 | 33.7 | 33.4 |

3.5 Effect of low SNR levels

Although the dataset consists of unknown interference and noise, to analyze the robustness of both the models, we synthetically added additive white gaussian noise to the recordings. From Table 1, it is observed that as the SNR level decreases, the of both the models degrades drastically. However, it can also be seen that Model1 outperforms Model2 in noisy scenarios as the estimated derivatives help improve the final DOAs. Fig. 4 shows the source trajectories obtained from both Model1 and Model2 for comparison. We observe that the estimates obtained from Model1 are more reliable than those from Model2.

| SNR | Model | TPs | TPm | FNs | FNm | Pds | Pdm |

| Clean | Model1 | 33033 | 24687 | 8866 | 6728 | 78.8 | 78.5 |

| Model2 | 33431 | 24531 | 8468 | 6884 | 79.7 | 78 | |

| -2dB | Model1 | 18349 | 12249 | 23550 | 19166 | 43.7 | 39 |

| Model2 | 17777 | 11906 | 24122 | 19509 | 42.4 | 37.8 | |

| -5dB | Model1 | 15365 | 10060 | 26534 | 21355 | 36.7 | 32 |

| Model2 | 15404 | 9816 | 26495 | 21599 | 36.7 | 31.2 |

4 CONCLUSION AND FUTURE WORK

This work demonstrates that predicting DOA derivatives along with DOAs (Model1) improves the overall localization performance compared to only predicting DOAs (Model2). We show that the proposed Model1 is robust to noise and outperforms Model2 at low SNR levels. As the SELD tasks have numerous applications, this analysis shows that estimating DOAs and their derivatives help to improve the source trajectories and overall performance cumulatively. In future, it would be interesting to see the effect of combining higher-order derivatives in the SELD tasks when detection and localization are done simultaneously.

References

- [1] M. Farmani, M. S. Pedersen, Z. H. Tan, and J. Jensen, “Maximum likelihood approach to “informed” sound source localization for hearing aid applications,” in IEEE Inter. Conf. Acous., Spe., Sig. Proces. IEEE, 2015, pp. 16–20.

- [2] L. Wan, G. Han, L. Shu, S. Chan, and T. Zhu, “The application of DOA estimation approach in patient tracking systems with high patient density,” IEEE Trans. Ind. Electron., vol. 12, no. 6, pp. 2353–2364, 2016.

- [3] H. L. Van Trees, Optimum Array Processing (Detection, Estimation, and Modulation Theory, Part IV), John Wiley & Sons, 2002.

- [4] R. Schmidt, “Multiple emitter location and signal parameter estimation,” IEEE Trans. Antennas Propag., vol. 34, no. 3, pp. 276–280, 1986.

- [5] C. Knapp and G. Carter, “The generalized correlation method for estimation of time delay,” IEEE Trans. Acoust. Speech Sig. Process., vol. 24, no. 4, pp. 320–327, 1976.

- [6] A. Xenaki, P. Gerstoft, and K. Mosegaard, “Compressive beamforming,” J. Acoust. Soc. Am., vol. 136, no. 1, pp. 260–271, 2014.

- [7] P. Gerstoft, C. F. Mecklenbräuker, A. Xenaki, and S. Nannuru, “Multisnapshot sparse Bayesian learning for DOA,” IEEE Signal Process. Lett., vol. 23, no. 10, pp. 1469–1473, Oct. 2016.

- [8] R. Pandey, S. Nannuru, and A. Siripuram, “Sparse Bayesian learning for acoustic source localization,” in IEEE Inter. Conf. Acous., Spe., Sig. Proces. IEEE, 2021, pp. 4670–4674.

- [9] D. Diaz-Guerra, A. Miguel, and J. R. Beltran, “Robust sound source tracking using SRP-PHAT and 3D convolutional neural networks,” IEEE/ACM Trans. Audio, Speech, Language Process., vol. 29, pp. 300–311, 2020.

- [10] R. Opochinsky, G. Chechik, and S. Gannot, “Deep ranking-based DOA tracking algorithm,” in 29th European Sig. Process. Conf. (EUSIPCO). IEEE, 2021, pp. 1020–1024.

- [11] S. Adavanne, A. Politis, J. Nikunen, and T. Virtanen, “Sound event localization and detection of overlapping sources using convolutional recurrent neural networks,” IEEE J. Sel. Topics Signal Process., vol. 13, no. 1, pp. 34–48, 2018.

- [12] P. A. Grumiaux, S. Kitić, L. Girin, and A. Guérin, “A survey of sound source localization with deep learning methods,” J. Acoust. Soc. Am., vol. 152, no. 1, pp. 107–151, 2022.

- [13] S. Chakrabarty and E. A. Habets, “Broadband doa estimation using convolutional neural networks trained with noise signals,” in Workshop on Applications of Sig. Proces. to Aud. and Acous. (WASPAA), 2017, pp. 136–140.

- [14] S. Adavanne, A. Politis, and T. Virtanen, “A multi-room reverberant dataset for sound event localization and detection,” arXiv preprint arXiv:1905.08546, 2019.

- [15] X. Xiao, S. Zhao, X. Zhong, D. L. Jones, E. S. Chng, and H. Li, “A learning-based approach to direction of arrival estimation in noisy and reverberant environments,” in IEEE Inter. Conf. Acous., Spe., Sig. Proces., 2015, pp. 2814–2818.

- [16] D. Suvorov, G. Dong, and R. Zhukov, “Deep residual network for sound source localization in the time domain,” arXiv preprint arXiv:1808.06429, 2018.

- [17] Q. Nguyen, L. Girin, G. Bailly, F. Elisei, and D. C. Nguyen, “Autonomous sensorimotor learning for sound source localization by a humanoid robot,” in Workshop on Crossmodal Learning for Intel. Robot. in conj. with IEEE/RSJ IROS, 2018.

- [18] S. Adavanne, A. Politis, and T. Virtanen, “Direction of arrival estimation for multiple sound sources using convolutional recurrent neural network,” in Euro. Sig. Proces. Conf. (EUSIPCO), 2018, pp. 1462–1466.

- [19] K. Shimada, Y. Koyama, N. Takahashi, S. Takahashi, and Y. Mitsufuji, “Accdoa: Activity-coupled cartesian direction of arrival representation for sound event localization and detection,” in IEEE Inter. Conf. Acous., Spe., Sig. Proces., 2021, pp. 915–919.

- [20] H. Phan, L. Pham, P. Koch, N. Q. Duong, I. McLoughlin, and A. Mertins, “On multitask loss function for audio event detection and localization,” arXiv preprint arXiv:2009.05527, 2020.

- [21] Y. Cao, T. Iqbal, Q. Kong, F. An, W. Wang, and D. M. Plumbley, “An improved event-independent network for polyphonic sound event localization and detection,” in IEEE Inter. Conf. Acous., Spe., Sig. Proces., 2021, pp. 885–889.

- [22] T.N.T. Nguyen, K.N. Watcharasupat, N.K. Nguyen, D.L. Jones, and W.S. Gan, “SALSA: Spatial cue-augmented log-spectrogram features for polyphonic sound event localization and detection,” IEEE/ACM Trans. Audio, Speech, Language Process., vol. 30, pp. 1749–1762, 2022.

- [23] T.N.T. Nguyen, D.L. Jones, K.N. Watcharasupat, H. Phan, and W.S. Gan, “SALSA-Lite: A fast and effective feature for polyphonic sound event localization and detection with microphone arrays,” in IEEE Inter. Conf. Acous., Spe., Sig. Proces., 2022, pp. 716–720.

- [24] A. Politis, S. Adavanne, D. Krause, A. Deleforge, P. Srivastava, and T. Virtanen, “A dataset of dynamic reverberant sound scenes with directional interferers for sound event localization and detection,” arXiv preprint arXiv:2106.06999, 2021.

- [25] Q. Kong, Y. Cao, T. Iqbal, Y. Wang, W. Wang, and M. D Plumbley, “Panns: Large-scale pretrained audio neural networks for audio pattern recognition,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 2880–2894, 2020.

- [26] Diederik P Kingma and Jimmy Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [27] A. Mesaros, S. Adavanne, A. Politis, T. Heittola, and T. Virtanen, “Joint measurement of localization and detection of sound events,” in IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), 2019, pp. 333–337.