Improving thermodynamic bounds using correlations

Abstract

We discuss how to use correlations between different physical observables to improve recently obtained thermodynamic bounds, notably the fluctuation-response inequality (FRI) and the thermodynamic uncertainty relation (TUR). We show that increasing the number of measured observables will always produce a tighter bound. This tighter bound becomes particularly useful if one of the observables is a conserved quantity, whose expectation is invariant under a given perturbation of the system. For the case of the TUR, we show that this applies to any function of the state of the system. The resulting correlation-TUR takes into account the correlations between a current and a non-current observable, thereby tightening the TUR. We demonstrate our findings on a model of the F1-ATPase molecular motor, a Markov jump model consisting of two rings, and transport through a two-dimensional channel. We find that the correlation-TUR is significantly tighter than the TUR and can be close to an equality even far from equilibrium.

I Introduction

Entropy production is a fundamental concept of non-equilibrium statistical mechanics. It relates the asymmetry of microscopic transitions in a system to the measurable loss of energy in the form of heat dissipated into the environment. For macroscopic systems, measuring the latter thus provides a measure of microscopic time-reversal symmetry breaking. While the same relation holds for microscopic systems and can even be formulated on the level of single trajectories [1, 2], measuring the dissipated heat is generally very challenging, as the resulting temperature changes are very small and typically lost among the fluctuations of the noisy environment. A more practical way to measure the entropy production in microscopic systems is provided by the work of Harada and Sasa [3], who show that the entropy production can be obtained from the violation of the fluctuation-dissipation relation. We remark that, in principle, the entropy production may also be obtained directly from the probabilities of microscopic transitions in the system; however, this requires very good spatial and temporal resolution as well as a large amount of statistics.

A different way of estimating entropy production has recently been suggested [4, 5, 6, 7, 8] using the thermodynamic uncertainty relation (TUR) [9, 10, 11, 12]. The TUR establishes a connection between entropy production on the one hand, and measurable currents in the system and their fluctuations on the other hand. It may be understood as a more precise formulation of the second law, since it not only establishes the positivity of entropy production but also provides a finite lower bound in terms of experimentally accessible quantities. However, since the TUR is an inequality, there is generally no guarantee that the lower bound is tight, i. e. that a useful estimate of entropy production is obtained from a given measurement. In principle, the lower bound can be optimized to produce an accurate estimate of entropy production [4, 5, 6, 7] and even realize equality [13]; however, the resulting quantities may not be any easier to measure than the entropy production itself.

From an experimental point of view, it is thus highly desirable to improve the tightness of the bound using available data. However, the tightness of the bound is also of fundamental interest: For example, it has been shown [14] that the TUR is generally not very tight for models of biological molecular motors, with the lower estimate on entropy production being on the order of to of the actual value. This raises the intriguing question of whether evolution is “bad” at saturating thermodynamic bounds, or whether indeed a tighter bound exists.

So far, applications and extensions of the TUR have mostly focused on current-like observables (for example the displacement of a particle or the heat exchanged with the environment) [15, 16, 17], although it has been found [18, 19, 20] that, in the presence of time-dependent driving, state-dependent observables (like the instantaneous position or potential energy) may also yield information about the entropy production. The presence of non-zero average currents clearly distinguishes a non-equilibrium steady state from an equilibrium system; it is thus reasonable that a relation between currents and the entropy production should exist. By contrast, the average of state-dependent observables is independent of time both in equilibrium and non-equilibrium steady states. Intuitively, it seems that such observables can provide no additional information about the steady state entropy production. As the main result of this article, we show that this intuitive notion is not correct: we can exploit the correlations between a state-dependent observable and a current to obtain a tighter version of the TUR. We formulate the TUR in terms of the transport efficiency [11]

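| $\eta_J = \frac{2 \langle J \rangle^2}{\text{Var}(J)\, \Sigma} \leq 1$ | (1) |

where $J$ is the time-integrated current, $\langle \cdot \rangle$ denotes the average and $\text{Var}$ the variance, and $\Sigma$ is the total entropy production. Our main result is the bound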

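| $\eta_J \leq 1 - \chi_{J,Z}^2$ | (2) |

where $Z$ is the time-integral of the state-dependent observable and $\chi_{J,Z} = \text{Cov}(J,Z)/\sqrt{\text{Var}(J)\, \text{Var}(Z)}$, with $\text{Cov}$ the covariance, is the Pearson correlation coefficient, which satisfies $-1 \leq \chi_{J,Z} \leq 1$. We refer to Eq. (3) as correlation TUR (CTUR). As a consequence, we obtain a tighter bound on the entropy production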

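| $\frac{2 \langle J \rangle^2}{\text{Var}(J)} \leq \frac{2 \langle J \rangle^2}{(1 - \chi_{J,Z}^2)\, \text{Var}(J)} \leq \Sigma$ | (3) |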

where the leftmost expression corresponds to the TUR. Surprisingly, the observable $Z$ can be almost arbitrary, as long as it is the time-integral of a quantity which only depends on the state of the system. This implies that virtually any additional observable that can be obtained from a measurement may be used to tighten the TUR. As we show below, a tight bound is generally obtained when $Z$ is chosen as the local average value of $J$. Importantly, the CTUR can be evaluated using only the experimentally obtained trajectory data and does not require any additional information about the parameters of the model. This suggests that taking into account correlations between observables may indeed be crucial to obtaining accurate estimates of the entropy production in terms of experimentally accessible quantities.

We demonstrate the usefulness of the CTUR by applying it to three distinct examples: For a model for the F1-ATPase molecular motor [21, 22], we find that, while the bound obtained on the entropy production using the TUR for the displacement of the motor is only around of the actual value, measuring the time-integrated local mean velocity in addition to the displacement and using the CTUR yields an estimate that is about accurate over a wide range of parameters. For a Markov jump model, in which two currents are driven through two connected rings, we show that, even though measuring the current in one of the rings can only give an estimate on the contribution to the entropy production stemming from this ring, this estimate can be tightened considerably using the CTUR. Finally, for transport in a two-dimensional channel, we demonstrate that even simple choices of the state-dependent observable can yield a significant improvement over the TUR.

II Multidimensional FRI and monotonicity of information

The mathematical basis of our results is an extension of the fluctuation-response inequality (FRI) [23] to multiple observables, similar to the multidimensional TUR [24]. The FRI gives an upper bound on the ratio between the response of the average of an observable to a small perturbation of the system, and its fluctuations in the unperturbed system,

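| $\frac{(\delta \langle r \rangle)^2}{\text{Var}(r)} \leq 2 D_\text{KL}(\tilde{p} \Vert p)$ | (4) |

Here, $\delta \langle r \rangle = \langle r \rangle_{\tilde{p}} - \langle r \rangle_{p}$ is the response of the observable $r(\omega)$ to the perturbation which changes the probability density describing the system from $p(\omega)$ to $\tilde{p}(\omega)$, and $D_\text{KL}(\tilde{p} \Vert p)$ is the Kullback-Leibler divergence between the probability densities. The variable $\omega$ may be the state of the system, but it may also represent a trajectory of the system during the measurement interval. When we consider the perturbation to be described by a parameter $\theta$, such that $p(\omega) = p^\theta(\omega)$ and $\tilde{p}(\omega) = p^{\theta + d\theta}(\omega)$, then this is equivalent to the Cramér-Rao inequality [25, 26]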

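| $\frac{(\partial_\theta \langle r \rangle)^2}{\text{Var}(r)} \leq I(\theta)$ | (5) |

where $I(\theta)$ is the Fisher information

| $I(\theta) = \left\langle \big( \partial_\theta \ln p^\theta(\omega) \big)^2 \right\rangle$ | (6) |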

With this identification, we can use the Cramér-Rao inequality for vector-valued observables, ,

| (7) |

where the superscript T denotes transposition and is the covariance matrix with entries . Note that here we assumed that the observables are not linearly dependent such that the covariance matrix is positive definite. As noted in Ref. [24], Eq. (7) is the extension of the FRI to more than one observable.

Next, we want to show that increasing the number of observables results in a tighter bound, i. e. that . We write the covariance matrix of observables as

| (8) | |||

We compute its inverse using the block-inversion formula

| (9) | |||

Further, we have the Schur determinant identity

| (10) |

Since and are positive definite, the second factor on the right-hand side is also positive. As a consequence, the matrix in Eq. (9) is positive semi-definite and we have for any -vector ,

| (11) | ||||

where is the vector with the -th component removed. For this yields the desired inequality

| (12) |

In light of the Cramér-Rao inequality Eq. (7), this means that considering more observables yields more information about the parameter (i. e. the perturbation) and thus a tighter lower bound on the Fisher information. In that sense, the information obtained from a measurement increases monotonically with the number of measured observables. The bound becomes strictly tighter only as long as the additional observables are not linearly dependent on the existing ones; if they are linearly dependent, then the covariance matrix becomes singular and the bound saturates, as the additional observables do not contain any new information.
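The monotonicity statement can be checked numerically. The following is a minimal sketch, assuming the left-hand side of the multidimensional bound has the quadratic form b^T Ξ^{-1} b, where the response vector `b` and the covariance matrix `cov` are randomly generated stand-ins rather than quantities from any model in this article. The function names are hypothetical.

```python
import numpy as np

def quadratic_bound(b, cov):
    """Return b^T cov^{-1} b for a response vector b and covariance matrix cov."""
    b = np.asarray(b, dtype=float)
    cov = np.asarray(cov, dtype=float)
    return float(b @ np.linalg.solve(cov, b))

def nested_bounds(b, cov):
    """Bounds obtained from the first 1, 2, ..., K observables."""
    K = len(b)
    return [quadratic_bound(b[:k], cov[:k, :k]) for k in range(1, K + 1)]

# Synthetic example (assumption: not derived from a physical model).
rng = np.random.default_rng(0)
A = rng.normal(size=(5, 5))
cov = A @ A.T + 0.1 * np.eye(5)   # random positive-definite covariance matrix
b = rng.normal(size=5)            # hypothetical response of each observable
bounds = nested_bounds(b, cov)
print(bounds)                     # non-decreasing sequence
```

Each entry of `bounds` uses one more observable than the previous one, so the sequence should never decrease, in line with Eq. (12).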

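In the case of two observables $r_1$ and $r_2$, the inverse of the covariance matrix can be computed explicitly and we obtain the bound

| $\frac{1}{1 - \chi_{r_1,r_2}^2} \left[ \frac{(\partial_\theta \langle r_1 \rangle)^2}{\text{Var}(r_1)} - \frac{2 \chi_{r_1,r_2}\, \partial_\theta \langle r_1 \rangle\, \partial_\theta \langle r_2 \rangle}{\sqrt{\text{Var}(r_1)\, \text{Var}(r_2)}} + \frac{(\partial_\theta \langle r_2 \rangle)^2}{\text{Var}(r_2)} \right] \leq I(\theta)$ | (13) |

where $\chi_{r_1,r_2} = \text{Cov}(r_1,r_2)/\sqrt{\text{Var}(r_1)\, \text{Var}(r_2)}$ is the Pearson correlation coefficient. This expression simplifies further if $r_2$ is a conserved quantity with respect to the perturbation, $\partial_\theta \langle r_2 \rangle = 0$. Then, we find

| $\frac{(\partial_\theta \langle r_1 \rangle)^2}{(1 - \chi_{r_1,r_2}^2)\, \text{Var}(r_1)} \leq I(\theta)$ | (14) |

In this case it is obvious that the bound is tighter than Eq. (5), since the factor $1 - \chi_{r_1,r_2}^2$ is at most one. This shows that, even if the average of $r_2$ contains no information about the parameter $\theta$ and the perturbation, we may still use its correlations with $r_1$ to obtain a tighter version of the Cramér-Rao inequality and thus the FRI.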
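As a quick consistency check of the two-observable case, the sketch below compares the general matrix form of the bound with the simplified expression for a conserved second observable. It assumes that the simplified bound has the standard form response² / ((1 − χ²) · variance); the numerical values and function names are hypothetical.

```python
import numpy as np

def bound_two_observables(d1, d2, var1, var2, cov12):
    """General two-observable bound b^T Xi^{-1} b with responses b = (d1, d2)."""
    xi = np.array([[var1, cov12], [cov12, var2]])
    b = np.array([d1, d2])
    return float(b @ np.linalg.solve(xi, b))

def bound_conserved(d1, var1, var2, cov12):
    """Simplified bound when the second observable does not respond (d2 = 0)."""
    chi2 = cov12**2 / (var1 * var2)      # squared Pearson coefficient
    return d1**2 / ((1.0 - chi2) * var1)

# Hypothetical numbers chosen for illustration only.
d1, var1, var2, cov12 = 1.0, 2.0, 3.0, 1.5
general = bound_two_observables(d1, 0.0, var1, var2, cov12)
simplified = bound_conserved(d1, var1, var2, cov12)
single = d1**2 / var1                    # bound from the first observable alone
print(general, simplified, single)
```

With these numbers both two-observable expressions coincide and exceed the single-observable value, which illustrates how a non-responding observable still tightens the bound through its correlations.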

III Continuous time-reversal and TUR

In view of later applications, we slightly generalize the discussion to a Langevin dynamics in with an internal degree of freedom

| (15) |

where the drift vector and diffusion matrix depend on the discrete state . The dynamics of the discrete state are governed by a Markov jump process with transition rates from state to state . We take the diffusion matrix to be independent of the position in order to simplify some of the following notation, however, the extension to a position-dependent diffusion matrix can be readily obtained. The evolution of the probability density for being at position and in state at time is governed by the Fokker-Planck master equation

| (16) | ||||

Here, is assumed to be positive definite (i. e. should have full rank). This dynamics reduces to a pure Langevin dynamics in absence of the discrete degree of freedom and to a pure Markov jump dynamics if there is no dependence on . The quantity is called the local mean velocity and characterizes the local flows in the system. We have also introduced its analog for the jump part. For the type of dynamics Eq. (15), we may consider two flavors of currents [27]

| (17a) | ||||

| (17b) | ||||

Here is a differentiable vector field, are the entries of an antisymmetric matrix and denotes the Stratonovich product. Intuitively, the diffusive current may be interpreted as a generalized displacement, in which the velocity is weighted by the position- and state-dependent function . The jump current , on the other hand, counts transitions between different states, which are weighted by the function . The averages of these quantities in the steady state are proportional to time and given by

| (18a) | ||||

| (18b) | ||||

Here the superscript st denotes the steady state value of the respective quantity. Note that both types of current are proportional to the respective local mean velocity. Next, we briefly summarize the continuous time-reversal introduced in Ref. [13]. This transformation is defined by a family of dynamics of the type Eq. (15), with a parameter ; corresponds to the time-forward dynamics, while represents the time-reversed dynamics [28]. So, we can connect the time-forward and time-reversed dynamics by a continuous family of dynamics. In the present context, the most important property of this transformation is that it leads to a rescaling of the local mean velocities and , while the steady state probability density is independent of . This generalizes the intuitive notion that time-reversal should lead to a reversal of all flows in the system to a continuous transformation. From Eq. (18), we then see that the averages of currents are also rescaled by the continuous time-reversal operation:

| (19) |

This implies that . Further, the Fisher information corresponding to the path probabilities of the dynamics parameterized by is related to the entropy production [29, 23, 13],

| (20) |

where equality holds for a pure Langevin dynamics. With this, Eq. (7) implies the multidimensional TUR [24],

| (21) |

where the components of are currents of either type in Eq. (17). The new insight from the preceding discussion is that the left-hand side increases monotonically when increasing the number of measured currents. This fact is very useful when we want to use the left-hand side to estimate the entropy production: Any additional information that can be obtained from a measurement can be used to improve the estimate. We note that, in the steady state of Eq. (15), the entropy production is explicitly given by

| (22) |

Crucially, Eq. (21) is not restricted to current observables. To see this, we recall that the steady state probability density is invariant under changing the parameter [13]. As a consequence, for a state-dependent (or non-current) observable

| (23) |

where the function may depend on the position, the internal state and time, its average does not depend on

| (24) |

Thus, , and we may include such observables in Eq. (21) by setting the corresponding entries in the vector to zero. However, such observables do contribute to the covariance matrix , and Eq. (12) guarantees that the resulting bound will be tighter than the one without these observables. For the case of one current and one state-dependent observable, we may use Eq. (14) to write the bound explicitly

| $\frac{2 \langle J \rangle^2}{(1 - \chi_{J,Z}^2)\, \text{Var}(J)} \leq \Sigma$ | (25) |

which is equivalent to the CTUR Eq. (3). This is very appealing from an experimental point of view: Currents as in Eq. (17) depend on the velocity or transitions between the internal states. Since observing these requires a high time-resolution, such quantities are generally challenging to measure accurately. The only exceptions are specific choices of the weighting functions, for which the time-integrated observable can be measured directly, for example the displacement of a particle. By contrast, observables of the type Eq. (23), which depend only on the position and the internal state, can easily be evaluated from trajectory data. The CTUR Eq. (25) implies that, provided at least one current can be obtained from the measurement, we may use other, non-current observables to improve the lower bound on the entropy production.
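In practice, the CTUR estimate only requires samples of the current and of the state-dependent observable, one pair per trajectory. The following is a minimal sketch, assuming the bound takes the form 2⟨J⟩² / ((1 − χ²) Var(J)) ≤ Σ with the entropy production measured in units of the Boltzmann constant. The synthetic samples are illustrative only and are not generated by any of the models discussed here; the function names are hypothetical.

```python
import numpy as np

def tur_estimate(J):
    """TUR lower bound on the entropy production from samples of a current J."""
    J = np.asarray(J, dtype=float)
    return 2.0 * J.mean() ** 2 / J.var(ddof=1)

def ctur_estimate(J, Z):
    """CTUR lower bound using the Pearson coefficient between J and Z."""
    chi = np.corrcoef(J, Z)[0, 1]
    return tur_estimate(J) / (1.0 - chi**2)

# Synthetic, correlated samples (assumption: stand-ins for measured data).
rng = np.random.default_rng(1)
Z = rng.normal(size=5000)
J = 1.0 + 0.8 * Z + 0.6 * rng.normal(size=5000)
sigma_tur = tur_estimate(J)
sigma_ctur = ctur_estimate(J, Z)
print(sigma_tur, sigma_ctur)
```

Because the squared Pearson coefficient lies between zero and one, the CTUR estimate is never smaller than the TUR estimate computed from the same samples.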

IV Optimal observables and stochastic entropy production

Given the considerable freedom in choosing the observable in Eq. (25), a natural question is whether there exists an optimal observable which maximizes the bound. This is equivalent to finding such that the magnitude of the Pearson coefficient becomes maximal for given . Unfortunately, we have not been able to solve this optimization problem in general. However, there is one particular case in which we can obtain the solution explicitly. For a pure Langevin dynamics without internal states, we may consider the stochastic entropy production as the observable . This corresponds to the weighting function

| (26) |

As we show in Appendix A, in this case, the optimal choice for is

| (27) |

This quantity can be interpreted as a local mean entropy production, i. e. the expected entropy production rate at position integrated along the trajectory. Note that both and have the entropy production as their average value. As it turns out, this choice turns Eq. (25) into an equality,

| (28) |

which shows that this really is the optimal choice of . We remark that this equality is equivalent to the equality with derived in Ref. [13]. For general currents, while the optimal could not be obtained explicitly, we note that the average current is expressed in terms of the local mean velocity as

| (29) |

Comparing this to Eq. (27), this suggests that a good choice for may be

| (30) |

This choice is the local mean value of the current, which has the same average as the current itself.

Further insight into the meaning of the optimal observable can be gained from the following consideration. Since the averages of the observables and exhibit the same scaling under continuous time-reversal

| (31) |

they both satisfy a TUR

| (32) |

Since the choice of is arbitrary within the class of observables Eq. (23), we may minimize the variance of with respect to ,

| (33) |

We may generalize this slightly by choosing , where is a constant. In this case, the minimization with respect to can be done explicitly and yields

| (34) |

from which we readily obtain Eq. (25); finding the optimal observable thus corresponds to maximizing the Pearson coefficient. Intuitively, the optimal observable is the state-dependent observable whose fluctuations most closely mimic those of the current , thus minimizing the variance of . The tightness of the TUR is thus limited by how closely the current can be emulated by a state-dependent observable , i. e., the magnitude of the predictable part of . An extreme case is given by the fluctuations of the stochastic entropy around its local mean value . This quantity is equivalent to the entropy production measured in terms of the stochastic time coordinate introduced in Ref. [30]. Its statistics are described by simple Brownian motion and thus its predictable part is zero and the corresponding TUR is an equality [13].
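The explicit minimization over the constant can be written out in a few lines. The sketch below assumes that the rescaled observable is $aZ$ with a constant $a$, and uses $\text{Cov}$, $\text{Var}$ and $\chi_{J,Z}$ for the covariance, the variance and the Pearson coefficient; these symbols are notation chosen here for illustration.

```latex
\begin{align}
\text{Var}(J - aZ) &= \text{Var}(J) - 2a\,\text{Cov}(J,Z) + a^2\,\text{Var}(Z), \\
\frac{\partial}{\partial a}\text{Var}(J - aZ) = 0
  \;&\Rightarrow\; a^* = \frac{\text{Cov}(J,Z)}{\text{Var}(Z)}, \\
\text{Var}(J - a^* Z) &= \text{Var}(J) - \frac{\text{Cov}(J,Z)^2}{\text{Var}(Z)}
  = \big(1 - \chi_{J,Z}^2\big)\,\text{Var}(J).
\end{align}
```

Since the average of $Z$ does not respond to the continuous time-reversal, a TUR for $J - a^* Z$ has the same numerator as the TUR for $J$, while the variance in the denominator is reduced by the factor $1 - \chi_{J,Z}^2$. This is the structure of the bound in Eq. (25).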

In the case of a pure Markov jump dynamics, the right-hand side of Eq. (25) can be replaced by a tighter bound

| (35) |

with the quantity given by

| (36) |

The inequality is a straightforward consequence of the elementary inequality

| (37) |

which holds for arbitrary . We note that the quantity has been introduced several times in the recent literature [24, 6, 31] and has been termed pseudo entropy production in Ref. [31]. Like the entropy production Eq. (22), this quantity measures the degree to which the system is out of equilibrium. As shown in Appendix A, the choice

| (38) |

in the current Eq. (17) allows to be interpreted as a stochastic version of the pseudo entropy in the sense that . Further, choosing

| (39) |

that is, the local mean of the current , we obtain equality in Eq. (35),

| (40) |

which is the analog of Eq. (27). This shows that as in the Langevin case, we may realize equality in Eq. (35), with the difference that the quantity being estimated is the pseudo entropy production instead of the entropy production . As a consequence, Eq. (35) can only yield a reasonable estimate on the entropy production if . From Eq. (37), this holds when for all transitions, i. e. the bias across any transition is small compared to the total activity. The latter condition is realized either near equilibrium (where the total bias is small) or in the continuum limit (where a finite total bias is distributed over many individual transitions). In all other cases, Eq. (35) can at most yield a lower bound on , the extreme case being unidirectional transitions [32], where diverges while remains finite. Note that, comparing Eq. (39) with the definition of a general current Eq. (17) suggests that a good choice for the state-dependent observable should be

| (41) |

with the “local mean velocity”

| (42) |
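The relation between the pseudo entropy production and the entropy production can be illustrated on a small example. The sketch below assumes the commonly used rate forms: the entropy production rate is the sum over pairs of states of (a − b) ln(a/b), and the pseudo entropy production rate is the sum of 2(a − b)²/(a + b), where a and b are the steady-state probability fluxes in the two directions. These forms, the three-state ring, and all function names are assumptions made for illustration and are not taken from the equations above.

```python
import numpy as np

def ring_rates(n, k_plus, k_minus):
    """Rate matrix for a ring; W[i, j] is the transition rate from state j to i."""
    W = np.zeros((n, n))
    for j in range(n):
        W[(j + 1) % n, j] = k_plus
        W[(j - 1) % n, j] = k_minus
    return W

def steady_state(W):
    """Solve for the steady state, with normalization as an extra equation."""
    n = W.shape[0]
    generator = W - np.diag(W.sum(axis=0))   # columns sum to zero
    A = np.vstack([generator, np.ones(n)])
    rhs = np.zeros(n + 1)
    rhs[-1] = 1.0
    p, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return p

def entropy_rates(W):
    """Return (entropy production rate, pseudo entropy production rate)."""
    p = steady_state(W)
    sigma, sigma_ps = 0.0, 0.0
    n = len(p)
    for i in range(n):
        for j in range(i + 1, n):
            a = W[i, j] * p[j]               # flux from j to i
            b = W[j, i] * p[i]               # flux from i to j
            if a > 0 and b > 0:
                sigma += (a - b) * np.log(a / b)
                sigma_ps += 2.0 * (a - b) ** 2 / (a + b)
    return sigma, sigma_ps

sigma, sigma_ps = entropy_rates(ring_rates(3, 2.0, 1.0))
print(sigma, sigma_ps)
```

For this ring the steady state is uniform, and the pseudo entropy production rate stays below the entropy production rate. Making the two rates more similar brings the two quantities closer together, in line with the near-equilibrium argument above.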

V Improved TUR from trajectory data

Based on the considerations in the previous sections, we now provide a general recipe to obtain sharper TUR-like bounds from existing trajectory data. The goal of this procedure is to use the trajectory data to obtain an estimate of the entropy production. We assume that the current , i. e. the weighting functions and in Eq. (17) are fixed. The reasoning behind this is that, in order to optimize the current, we need to explicitly observe all the transitions along the trajectory, which typically requires a sufficiently high time-resolution. Failing that, we are limited to observing current observables whose time-integral directly corresponds to a measurable quantity, for example the total displacement of a tracer particle. Then, obtaining a good estimate for the entropy production corresponds to finding a good choice for the observable in Eq. (25). We propose three methods for finding such a choice. We also point the reader to Appendix B, where we investigate the dependence of the estimate on the size of the data set and the sampling interval.

V.1 Explicit optimization

Given a set of trajectory data, we may in principle optimize the function in Eq. (23) explicitly by maximizing the Pearson coefficient between and . In the case of a pure jump process with states, this corresponds to optimizing parameters; since the Pearson coefficient is invariant under a global rescaling of , we may set without loss of generality. In the case of a diffusion process, we may choose a reasonable set of (say ) basis functions and write , which (setting ) again corresponds to optimizing parameters. Provided that and the data set are not too large, this explicit optimization is feasible and has the advantage of yielding the tightest possible bound within the accuracy of the optimization algorithm. However, as or the size of the data set increases, this procedure becomes increasingly infeasible, and heuristic bounds that are less tight but easier to compute may be preferable.
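When the state-dependent observable is a linear combination of a fixed set of basis observables, maximizing the Pearson coefficient with the current is a linear regression problem, and the optimal coefficients follow from least squares. The sketch below uses this fact; it is one possible way to carry out the optimization, not necessarily the procedure used for the results in this article. The array `Zbasis` (one column per time-integrated basis function) and the synthetic data are assumptions for illustration.

```python
import numpy as np

def optimal_coefficients(J, Zbasis):
    """Least-squares coefficients c that maximize |corr(J, Zbasis @ c)|."""
    J = np.asarray(J, dtype=float)
    Zc = Zbasis - Zbasis.mean(axis=0)        # center each basis observable
    Jc = J - J.mean()                        # center the current
    c, *_ = np.linalg.lstsq(Zc, Jc, rcond=None)
    return c

def pearson(J, Z):
    """Sample Pearson correlation coefficient between J and Z."""
    return float(np.corrcoef(J, Z)[0, 1])

# Synthetic samples (assumption: stand-ins for per-trajectory measurements).
rng = np.random.default_rng(2)
Zbasis = rng.normal(size=(4000, 3))
J = Zbasis @ np.array([1.0, -0.5, 0.2]) + rng.normal(size=4000)
c = optimal_coefficients(J, Zbasis)
chi_opt = pearson(J, Zbasis @ c)
chi_single = max(abs(pearson(J, Zbasis[:, k])) for k in range(3))
print(chi_opt, chi_single)
```

The least-squares solution fixes the overall scale of the coefficients automatically, which plays the same role as setting one coefficient to unity. Since the coefficients are fitted to the same samples used to evaluate the correlation, the resulting value can be biased upward for small data sets.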

V.2 Approximate local mean velocity

Given that, in many physically relevant situations, the local mean current Eq. (30) is expected to give a reasonably tight bound, we may use an approximate expression for the local mean velocity to compute . Such an approximate expression may be obtained directly from the trajectory data. Even if the measurement resolution is not sufficient to observe every transition, we may still obtain a coarse-grained mean velocity profile. For a spatial resolution and a temporal resolution , we may divide the observation volume into cells of size and obtain an estimate for the local mean velocity in a given cell by considering the displacement at time , averaged over all particles starting out in the cell at time . An approximate local mean velocity may also be obtained from theoretical considerations by considering a simplified version of the dynamics, in which the local mean velocity can be computed explicitly and is expected to resemble the one of the actual dynamics. A simplified model may be obtained, for example, by linearizing a given non-linear model or by constructing a lower-dimensional effective model. Even though both approaches are generally valid only in a certain limit, in many cases, the resulting local mean velocity still yields an improved bound via the CTUR even beyond the strict validity of the approximations. As a concrete example, we consider in the next section the flow through a two-dimensional channel, which we approximate by a one-dimensional flow in an effective potential, for which an explicit expression of the local mean velocity can be obtained.
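The cell-based estimate of the local mean velocity described above can be sketched for a one-dimensional system as follows. The array layout (one row per trajectory, positions sampled at a fixed interval `dt`), the bin edges, and the function name are assumptions made for illustration.

```python
import numpy as np

def local_mean_velocity(traj, dt, edges):
    """Coarse-grained local mean velocity per cell from sampled 1D positions.

    traj  : array of shape (n_traj, n_steps), positions sampled every dt
    edges : increasing array of cell boundaries
    Returns one value per cell; cells without data are set to NaN.
    """
    x0 = traj[:, :-1].ravel()                       # starting positions
    dx = (traj[:, 1:] - traj[:, :-1]).ravel()       # displacements over dt
    cell = np.digitize(x0, edges) - 1               # cell index of each start
    n_cells = len(edges) - 1
    valid = (cell >= 0) & (cell < n_cells)
    counts = np.bincount(cell[valid], minlength=n_cells)
    sums = np.bincount(cell[valid], weights=dx[valid], minlength=n_cells)
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(counts > 0, sums / (counts * dt), np.nan)

# Deterministic toy trajectory moving at unit velocity (illustration only).
traj = np.array([[0.05, 0.30, 0.55, 0.80]])
edges = np.linspace(0.0, 1.0, 5)
nu = local_mean_velocity(traj, dt=0.25, edges=edges)
print(nu)
```

The resulting profile can then be integrated along each trajectory to build a candidate for the state-dependent observable. The cell size and the sampling interval set the resolution of the estimate, so the profile is a coarse-grained approximation.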

Another application, for which we anticipate this approach to be useful, is for systems in the presence of spatial disorder. Since the disorder configuration is generally not known precisely, we also cannot obtain an explicit expression for the local mean velocity. In this case, we may compute the local mean velocity for the disorder-free system, and provided that the disorder is not too strong, we can still expect the CTUR with the corresponding observable to provide a notable improvement over the TUR. For example, we can imagine a channel as in Fig. 5, through which a current is driven by an external bias. However, due to variances in the fabrication of the channel or the presence of contamination, the actual potential experienced by the particle may be more complicated. Nevertheless, if we can obtain an expression for the local mean velocity of the ideal situation, we can use it to obtain an improved estimate of the entropy production.

V.3 Inverse occupation fraction

Finally, we provide a concrete formula for obtaining a candidate for the observable . This relies on the observation that, in many systems, the local mean velocity at some position and the probability of finding the particle at position are approximately inversely proportional. This relation is exact for Langevin dynamics in a spatially periodic one-dimensional system; in this case, we have , where is the drift velocity and the period of the system. The same relation holds for a Markov jump dynamics with on a ring, where is the total current on the ring.

However, even in higher-dimensional settings, we often expect the probability density to be small where the magnitude of is large and vice versa. The reasoning for this relation is that, for ergodic dynamics, is equal to the temporal occupation fraction, i. e. the fraction of time that the particle resides in the volume element around in the limit of long observation times. On the other hand, if the local mean velocity at is large, this means that the particle tends to move at a large velocity at and thus we expect it to only spend a short time in the volume element . Note that this qualitative argument may break down, e. g. near vortices in the local mean velocity, where particles may move fast yet still mostly reside in a small volume. However, since we only require an approximation to the local mean velocity to improve the TUR bound on the entropy production, using the time-integral of the inverse occupation fraction as the observable is still expected to yield a notable improvement in most cases. Specifically, dividing the observation volume into cells (for simplicity, we assume they have equal volume ) and the observation time into steps, we define as the occupation number the number of times that any trajectory takes a value in the -th cell. Then, we may define the observable as

| (43) |

where is the cell the trajectory is located in at time step . Note that, while is evaluated for each individual trajectory, is computed from the entire set of trajectory data. We do not need to normalize the occupation number into an occupation fraction, since the overall normalization factor does not impact the Pearson coefficient. While the observable Eq. (43) may not yield the tightest bound in Eq. (25), it has the advantage that it may be computed using only the trajectory data without any additional input.
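The inverse-occupation observable can be computed directly from discretized trajectories. The following is a minimal sketch, assuming each trajectory has already been mapped to a sequence of integer cell indices; any constant prefactor in the definition is omitted since it does not affect the Pearson coefficient. The array layout and function name are hypothetical.

```python
import numpy as np

def inverse_occupation_observable(cells, n_cells):
    """Sum of inverse occupation numbers along each trajectory.

    cells : int array of shape (n_traj, n_steps), cell index at each time step
    Returns (Z, occupation), with one value of Z per trajectory.
    """
    # Occupation numbers are computed from the entire data set.
    occupation = np.bincount(cells.ravel(), minlength=n_cells)
    # Every cell appearing in `cells` has occupation >= 1, so no division by zero.
    weights = 1.0 / occupation[cells]
    return weights.sum(axis=1), occupation

# Toy data: two trajectories with three time steps each (illustration only).
cells = np.array([[0, 0, 1],
                  [0, 1, 2]])
Z, occupation = inverse_occupation_observable(cells, n_cells=3)
print(Z, occupation)
```

The occupation numbers are shared by all trajectories, while the sum is evaluated separately for each trajectory, which matches the distinction made in the text.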

Finally, we remark upon a possible issue with the definition Eq. (43). If the phase-space volume that is accessible to the system is not finite, then it becomes necessary to introduce a lower cutoff on the occupation number . To see this, note that for an ergodic system in the limit of long time and/or a large number of trajectories, we have , where is the location of the -th cell and is the cell volume. Then,

| (44) |

So the average of is proportional to the volume and diverges if the latter becomes infinite. For example, if , then grows exponentially for large and its average is not well-defined. Intuitively, this means that Eq. (43) is dominated by rare events, which are never well sampled no matter how many trajectories we record. To avoid this problem, we may introduce a lower cutoff on , that is, we only count cells that have been visited in at least a fraction of all data points. In terms of the probability density, this means that we discard all points with . In doing so, the average of becomes finite and we may use the resulting observable as a qualitative estimate for the local mean velocity. Obviously, the resulting quantity is only an approximation to the actual occupation fraction; however, we may still expect it to yield a useful improvement over the TUR, provided that is sufficiently small and the statistics of the current are not dominated by rare events.

This lower cutoff also avoids another possible issue: In Eq. (43), we use the same data set to evaluate the occupation fraction and the trajectory-dependent observable . While this is not problematic for a large number of trajectories, since the influence of any single trajectory on is small, it may lead to unintended correlations if the number of trajectories is not large. In the latter case, it may be preferable to divide the data set into two parts, using one to determine and the other to compute . In doing so, we may encounter the situation where a given cell is visited in the second part but not the first part of the data set. In this case, Eq. (43) is no longer well defined since we divide by zero. Introducing a lower cutoff excludes this possibility. However, we stress that even if and are computed from the same data set, Eq. (3) remains valid, so the worst outcome of unintended correlations is to reduce the quality of the estimate on the entropy production.
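The cutoff and the split of the data set can be combined in a few lines. The sketch below assumes that cells visited in fewer than a fraction `eps` of the reference data points contribute zero to the observable; the parameter name `eps`, the array layout, and the function name are hypothetical.

```python
import numpy as np

def inverse_occupation_with_cutoff(cells_ref, cells_eval, n_cells, eps):
    """Inverse-occupation observable with a lower cutoff on the occupation.

    cells_ref  : int array, cell indices used to determine occupation numbers
    cells_eval : int array (n_traj, n_steps), trajectories to evaluate
    eps        : minimum fraction of reference data points per counted cell
    """
    occupation = np.bincount(cells_ref.ravel(), minlength=n_cells)
    threshold = eps * cells_ref.size
    keep = (occupation >= threshold) & (occupation > 0)
    inv = np.zeros(n_cells)
    inv[keep] = 1.0 / occupation[keep]     # discarded cells contribute zero
    return inv[cells_eval].sum(axis=1)

# Toy data: cell 2 is never visited in the reference part (illustration only).
cells_ref = np.array([[0, 0, 0, 1]])
cells_eval = np.array([[0, 1, 2, 2]])
Z_cut = inverse_occupation_with_cutoff(cells_ref, cells_eval, n_cells=3, eps=0.5)
print(Z_cut)
```

In this example the rarely visited cell 1 and the unvisited cell 2 are discarded, so the result stays finite even though the evaluation trajectory enters a cell that is absent from the reference part.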

VI Demonstrations

VI.1 Molecular motor model

To demonstrate how Eq. (25) may be used to obtain a tight bound on the entropy production, we consider the model for the F1-ATPase molecular motor introduced in Ref. [21] and further studied in Ref. [22]. This model describes the motion of a probe bead coupled to a rotating molecular motor, which either consumes a molecule called ATP in order to generate rotational motion, or, conversely, generates ATP when driven by an external torque. The probe is considered to be trapped inside a potential , which is determined both by the joint between the probe and the motor and by the internal structure of the motor. As the motor rotates in steps of length , the potential depends on the current state of the motor as . In the simplest form of the model, the trapping potential is harmonic, , and the motor rotates in steps of . The transitions between the states of the motor are described by the position-dependent transition rates

| (45) | ||||

where () is the rate of transitions from to (from to ). Here, quantifies the overall activity of the motor, which is proportional to the concentration of ATP in the environment of the motor. is the chemical potential difference driving the rotation; it is the amount of energy gained by the motor when consuming a single molecule of ATP. The parameter characterizes the asymmetry of the position-dependence of the rates. The spatial motion of the probe is described by one-dimensional Brownian motion in the potential . In addition, an external torque may be applied to the probe, which enters the equation of motion in the form of a non-conservative bias force . As a consequence, the drift coefficient in Eq. (15) is given by , where is the friction coefficient, is an external force acting on the probe, while the diffusion matrix is . An experimentally accessible current is the total displacement of the probe,

| (46) |

In the steady state, we have , where is the drift velocity. Because the system is effectively one-dimensional, the local mean velocity in the steady state is given by where is the -periodic steady state probability density. In this case, we can thus reconstruct the local mean velocity from the trajectory data of the probe by taking a histogram of the probe positions. However, it should be noted that this local mean velocity is a coarse-grained quantity; the true local mean velocity entering Eq. (16) also depends on the state of the motor. We define the observable

| (47) |

which is proportional to . Since the proportionality factor cancels in the Pearson coefficient, and are equivalent with respect to Eq. (25). To assess the tightness of the various inequalities, we introduce the transport efficiencies

| (48) | ||||

Both of these quantities are smaller than unity and measure the magnitude of the average transport relative to its fluctuations and the dissipation.
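As a rough illustration of how such efficiencies might be evaluated from an ensemble of measured trajectories, the following Python sketch assumes that the TUR efficiency has the standard form 2⟨J⟩²/(Var(J) ΔS) and that the CTUR efficiency is obtained by dividing it by 1 − χ², where χ is the Pearson coefficient between the current and the state-dependent observable. The function name, the argument names, and the convention that entropy is measured in units of the Boltzmann constant are assumptions made for this example.

```python
import numpy as np

def transport_efficiencies(J, Z, entropy_production):
    """Estimate (eta_TUR, eta_CTUR, chi) from per-trajectory samples.

    J, Z: 1D arrays, one value of the current and of the state-dependent
          observable per trajectory (hypothetical inputs).
    entropy_production: total entropy production over the observation
          time, in units of k_B (assumed to be known independently).
    """
    J = np.asarray(J, dtype=float)
    Z = np.asarray(Z, dtype=float)
    mean_J = J.mean()
    var_J = J.var(ddof=1)                  # unbiased sample variance
    chi = np.corrcoef(J, Z)[0, 1]          # Pearson coefficient
    eta_tur = 2.0 * mean_J**2 / (var_J * entropy_production)
    eta_ctur = eta_tur / (1.0 - chi**2)    # correlations tighten the bound
    return eta_tur, eta_ctur, chi
```

Because 1 − χ² never exceeds one, the second efficiency is never smaller than the first; how close it comes to unity depends on how strongly the chosen observable correlates with the current.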

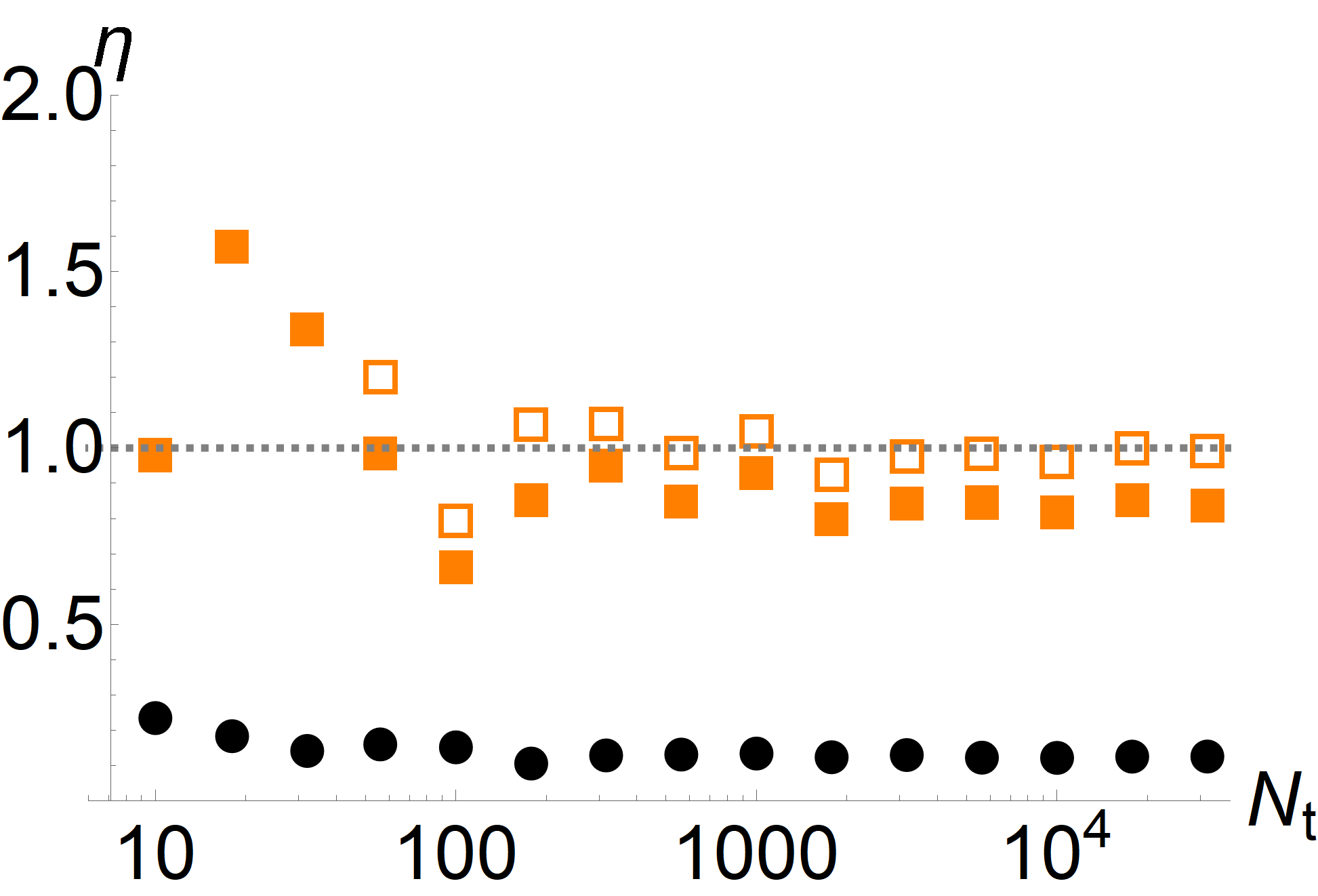

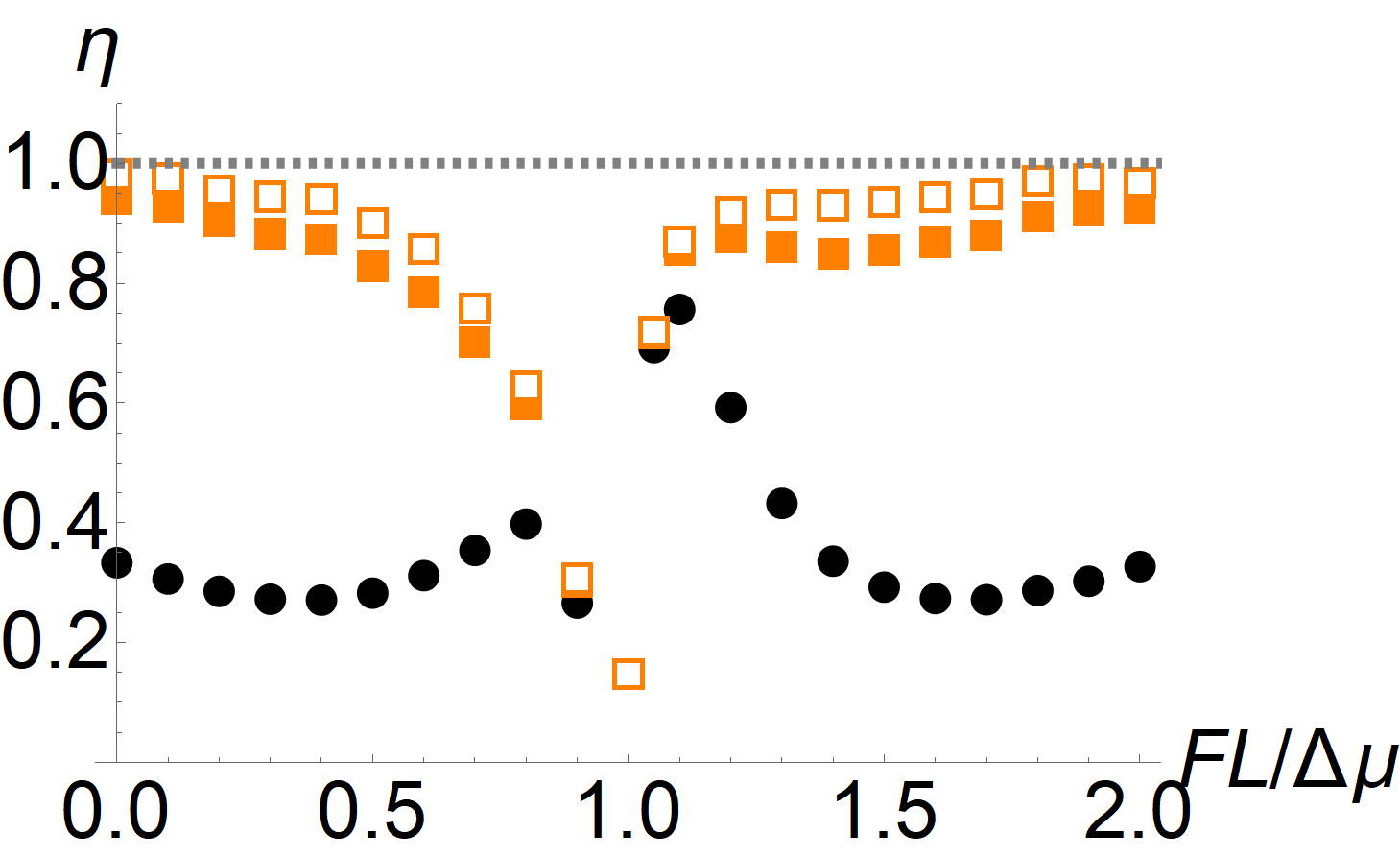

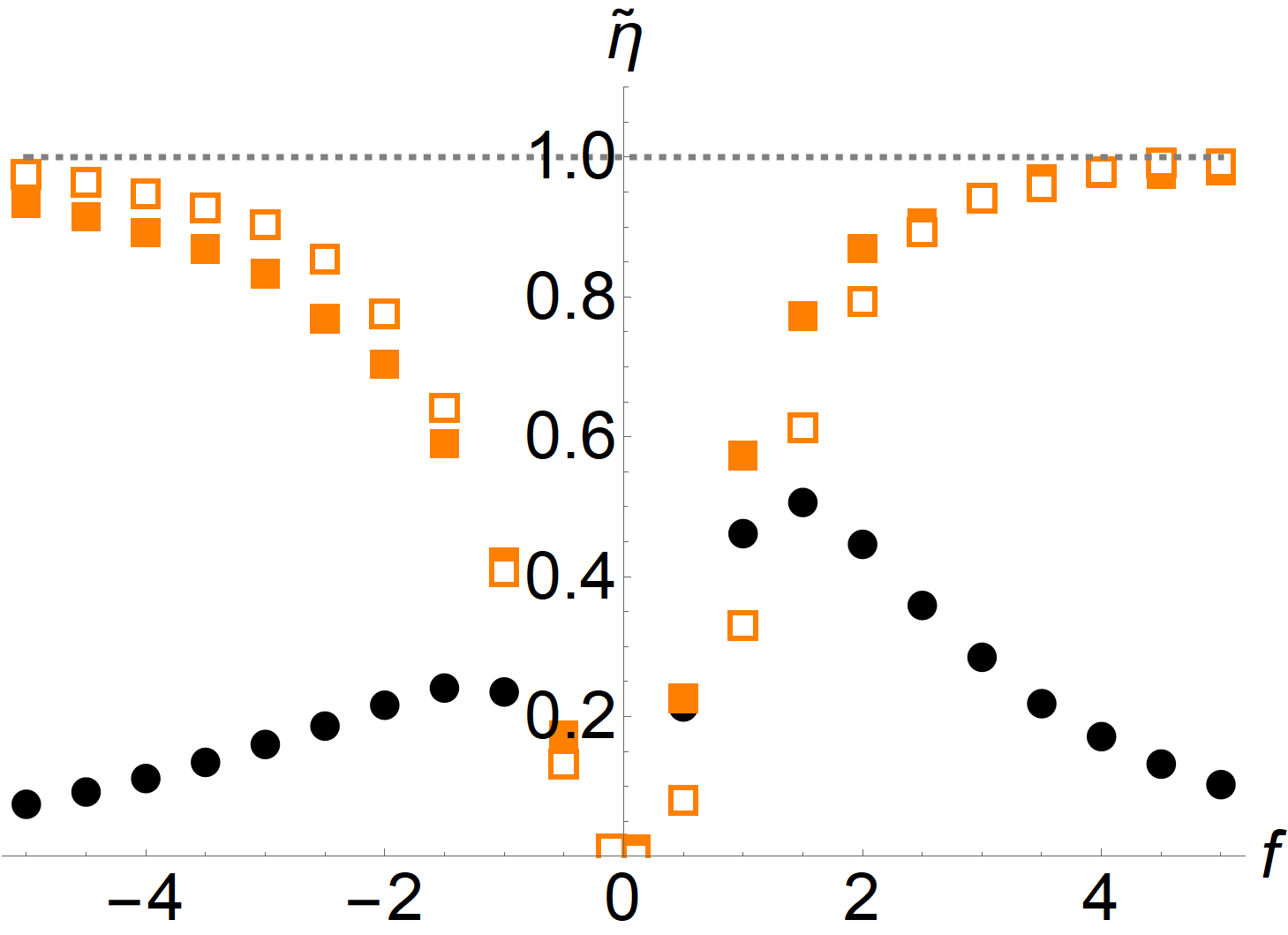

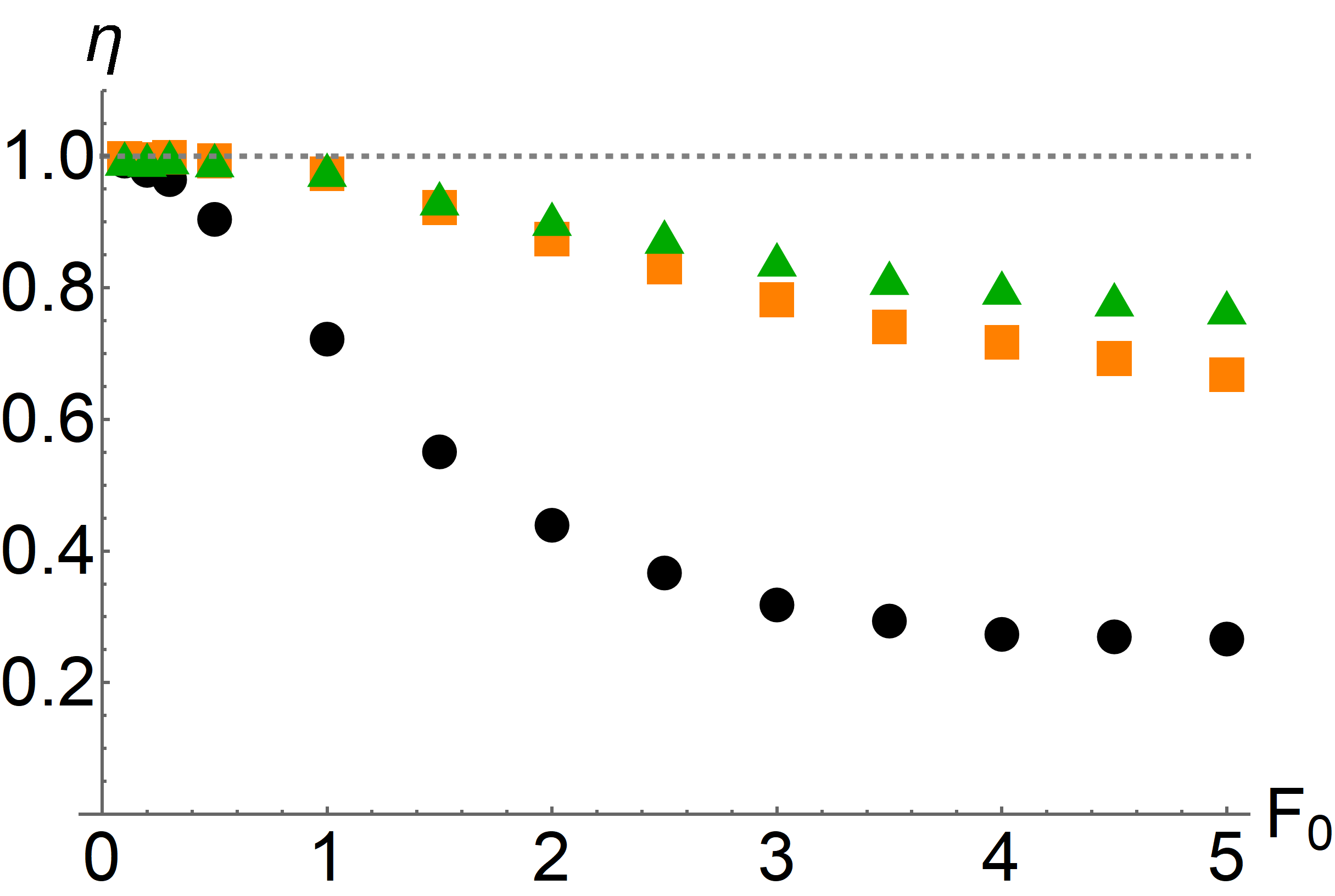

The efficiencies Eq. (48) are shown for the molecular motor model in Fig. 1 as a function of various parameters. The top-left panel shows as a function of the base activity , which corresponds to the concentration of ATP in the experiment. For small activity both and are comparable and small; in this limit, the transitions between the different motor conformations are not translated efficiently into motion and the dissipation is not reflected in the motion of the probe [22]. For large activity, saturates at a value of around . However, when we compute in this regime, we find that it saturates at a value close to unity, i. e. the maximum possible value. The top-right panel shows as a function of the chemical potential difference . While this value cannot be readily changed in experiment, it yields important insight into the nature of the bound Eq. (25). For small the system is almost in equilibrium, and both the TUR and CTUR are close to an equality. However, as we drive the system out of equilibrium, the TUR ratio quickly drops, while the CTUR remains close to unity. This suggests that, while the TUR is generically only saturated close to equilibrium [33, 4], the improved bound from the CTUR Eq. (25) can yield an accurate estimate of the entropy production even far from equilibrium. The bottom-left panel shows as a function of the external load force. Close to the stall condition, neither of the bounds is tight. This is reasonable, since when the motor stalls, the probe also stops moving, while the motor keeps changing its conformation and thus keeps dissipating energy. Interestingly, both bounds are close to unity slightly above the stall condition, i. e. when the external load is just strong enough to turn the motor in the opposite direction. Away from the stall condition, we again find that the TUR is rather loose, while the CTUR remains tight. Note that in this panel we also included the results obtained by optimizing the observable . Specifically, we write

| (49) |

and then numerically optimize the parameters , such that is maximal for the given trajectory data. Here we use ; further increasing the number of parameters provides no notable improvement. The numerical optimization is done using Mathematica’s NMaximize command. The results are shown as the empty orange squares in the bottom-left panel of Fig. 1. As can be seen, the observable is not truly optimal, so that some improvement of the lower bound on entropy production is possible. However, the heuristic choice already provides a useful estimate without the need for any parameter optimization. Finally, the bottom-right panel shows as a function of the asymmetry parameter . As was shown in Ref. [22], the motor can operate without internal dissipation close to , whereas other values of result in a finite amount of internal dissipation. Since this difference is most pronounced at low activity, we choose such that the velocity remains constant at for all values of ; this corresponds to in the top-left panel. While neither bound is saturated in this regime of low activity, Eq. (25) does yield a considerable improvement over the TUR. Interestingly, neither bound shows a pronounced dependence on , which indicates that their tightness, at least for this model, is not related to the amount of internal vs. external dissipation.
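If the parameterized observable is linear in its parameters (for instance a truncated Fourier series, which is an assumption here since the explicit form of Eq. (49) is not reproduced), the maximization of the Pearson coefficient does not require a general-purpose optimizer: the optimal coefficients follow from a linear least-squares problem. The sketch below is an alternative to the NMaximize approach described above, not the procedure used in the text; the function and variable names are hypothetical.

```python
import numpy as np

def optimal_linear_observable(J, Z_basis):
    """Maximize corr(J, sum_k a_k Z_k)^2 over the coefficients a_k.

    J:       shape (n,), current per trajectory.
    Z_basis: shape (n, K), time-integrated basis observables per trajectory
             (hypothetical inputs, e.g. integrals of sin(kx) and cos(kx)).
    Returns the coefficient vector a and the maximal squared Pearson coefficient.
    """
    J = np.asarray(J, dtype=float)
    Zb = np.asarray(Z_basis, dtype=float)
    n = len(J)
    dJ = J - J.mean()
    dZ = Zb - Zb.mean(axis=0)
    C = dZ.T @ dZ / (n - 1)      # covariance matrix of the basis observables
    c = dZ.T @ dJ / (n - 1)      # covariances between basis observables and J
    # Solve C a = c; lstsq also copes with a (nearly) singular C.
    a = np.linalg.lstsq(C, c, rcond=None)[0]
    chi2 = float(c @ a) / dJ.var(ddof=1)
    return a, chi2
```

One caveat of any such optimization, whether linear or not: the coefficients are fitted to the same finite data set on which the Pearson coefficient is evaluated, so the resulting value tends to be slightly overestimated when the number of parameters is not small compared to the number of trajectories. Evaluating the coefficient on a held-out set of trajectories is one way to check for this.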

The molecular motor model is essentially one-dimensional: Since the energy scale of the coupling between the probe particle and motor is large compared to the temperature, the position of the probe and the state of the motor are tightly coupled, so measuring the displacement of the probe is almost equivalent to measuring the internal transitions of the motor. Indeed, considering the internal transitions of the motor as an additional current , Eq. (21) yields no notable improvement over the TUR. Because of this, it is reasonable that the entropy production can be estimated accurately from a measurement of the probe position. However, this tight coupling breaks down for slow switching (see the top-left panel of Fig. 1): In this regime, while the asymmetry in the transitions between the motor states still gives rise to entropy production, these transitions increasingly fail to produce a directed motion of the probe and thus its displacement can no longer be used to obtain an accurate estimate of entropy production.

In summary, we find that the TUR is generally not tight for this model, which mirrors the behavior in other types of molecular motors [14]. Viewed on its own, this would suggest that F1-ATPase is not efficient at saturating the bound set by the TUR. When we take into account the correlations between the velocity and its local mean value via the CTUR, the resulting inequality is almost saturated. Thus, in reality, the motor operates close to the limit permitted by the thermodynamic bound in the biologically relevant parameter regime. In contrast to the TUR, which represents a trade-off between dissipation and precision, the biological meaning of saturating the CTUR is not so clear. We note that, in the case of a particle moving in a flat potential, where the local mean velocity is independent of space, the TUR and CTUR are equivalent and both saturated. The presence of a spatially varying local mean velocity generally increases the amount of fluctuations in the current and also induces correlations between the former and the latter. As a consequence, the TUR becomes less tight and we explicitly need to take into account the correlations to counter this. However, in the case of the F1-motor, the spatial inhomogeneity of the dynamics is also an integral part of the mechanism creating the motion of the motor in the first place. In that sense, the CTUR may be interpreted as a trade-off between dissipation, precision and the spatial structure necessary for the motion of the motor.

VI.2 Two-cycle Markov jump process

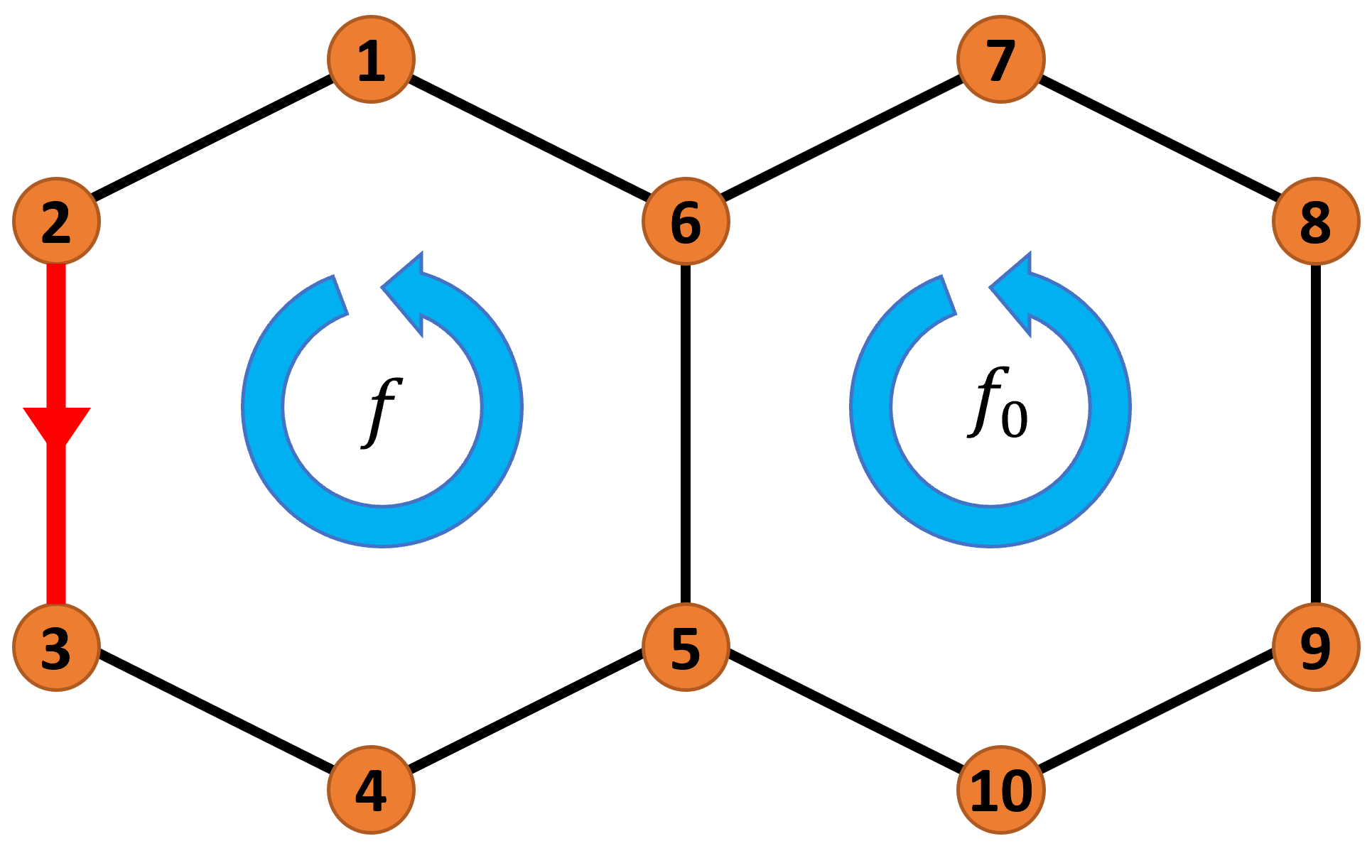

An obvious question is how the CTUR fares in the presence of multiple independent currents. To investigate this issue, we introduce a simple Markov jump model consisting of sites forming two loops with a common edge, see Fig. 2. While such two-cycle models are also used in modeling the chemical state space of molecular motors [34], we use a simpler setup in order to better understand its behavior in relation to the CTUR.

We parameterize the transition rates between connected states as

| (50) |

where is the inverse temperature, the energy of state and is the bias, with the choice corresponding to counter-clockwise and to clockwise transitions. As indicated in Fig. 2, we keep the bias of the right cycle fixed at a value while varying the bias in the left cycle; the bias on the shared link is set to . As the current , we choose the number of counterclockwise transitions through the link (indicated in red in Fig. 2), i. e. and otherwise in Eq. (17).

As the state-dependent observable , we choose the inverse of the steady state occupation fraction in the left ring, , which corresponds to measuring the ratio of the time spent in state and the time spent in the left ring. The motivation behind this choice is that, even though the system no longer consists of a single cycle, we still expect the occupation fraction to be approximately inversely proportional to the current through the site, as argued in Section V.3. This observable can be measured by observing only the states in the left ring, for example if the right ring cannot be directly observed. As in the previous example, we define the ratios

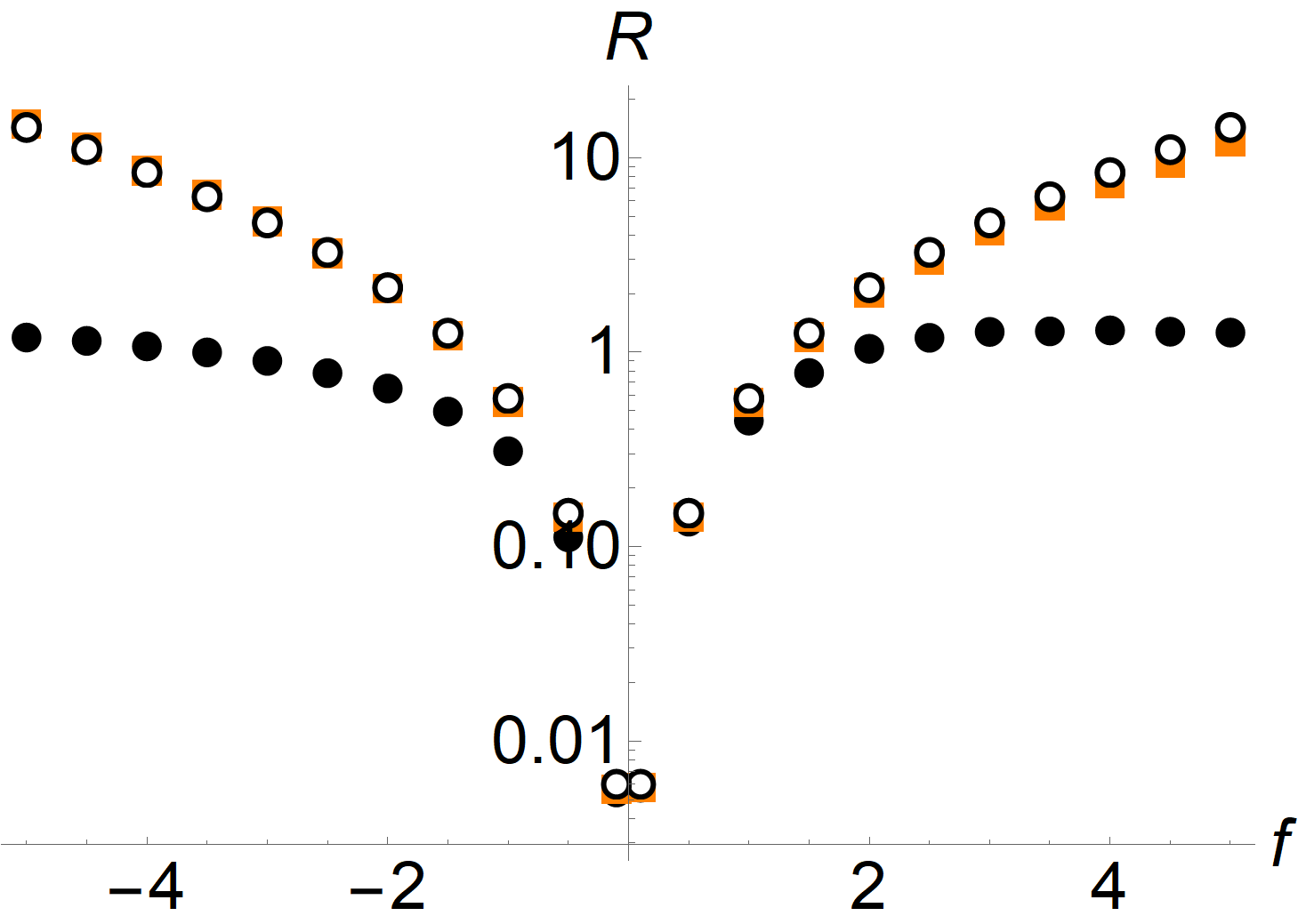

| (51) | ||||

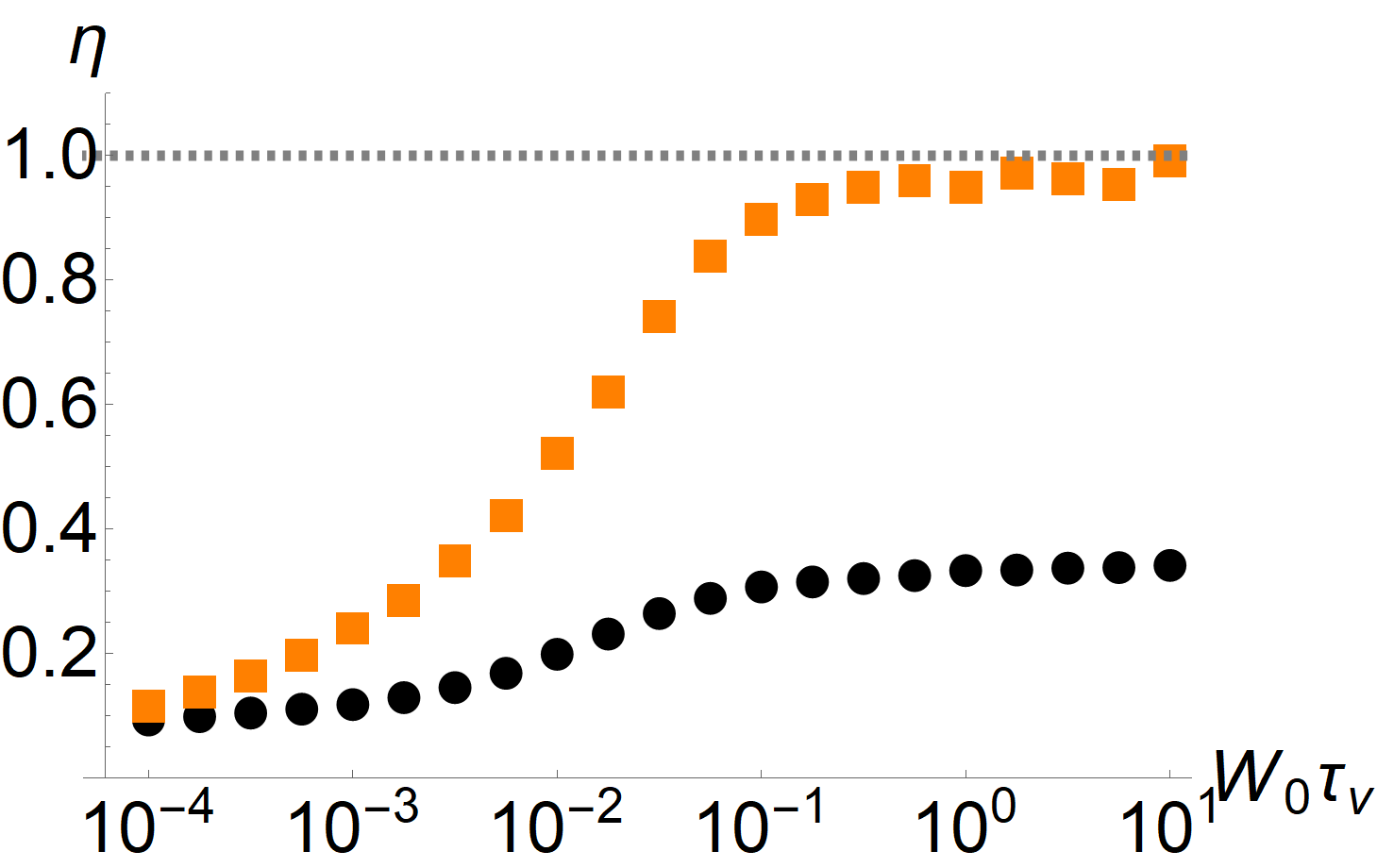

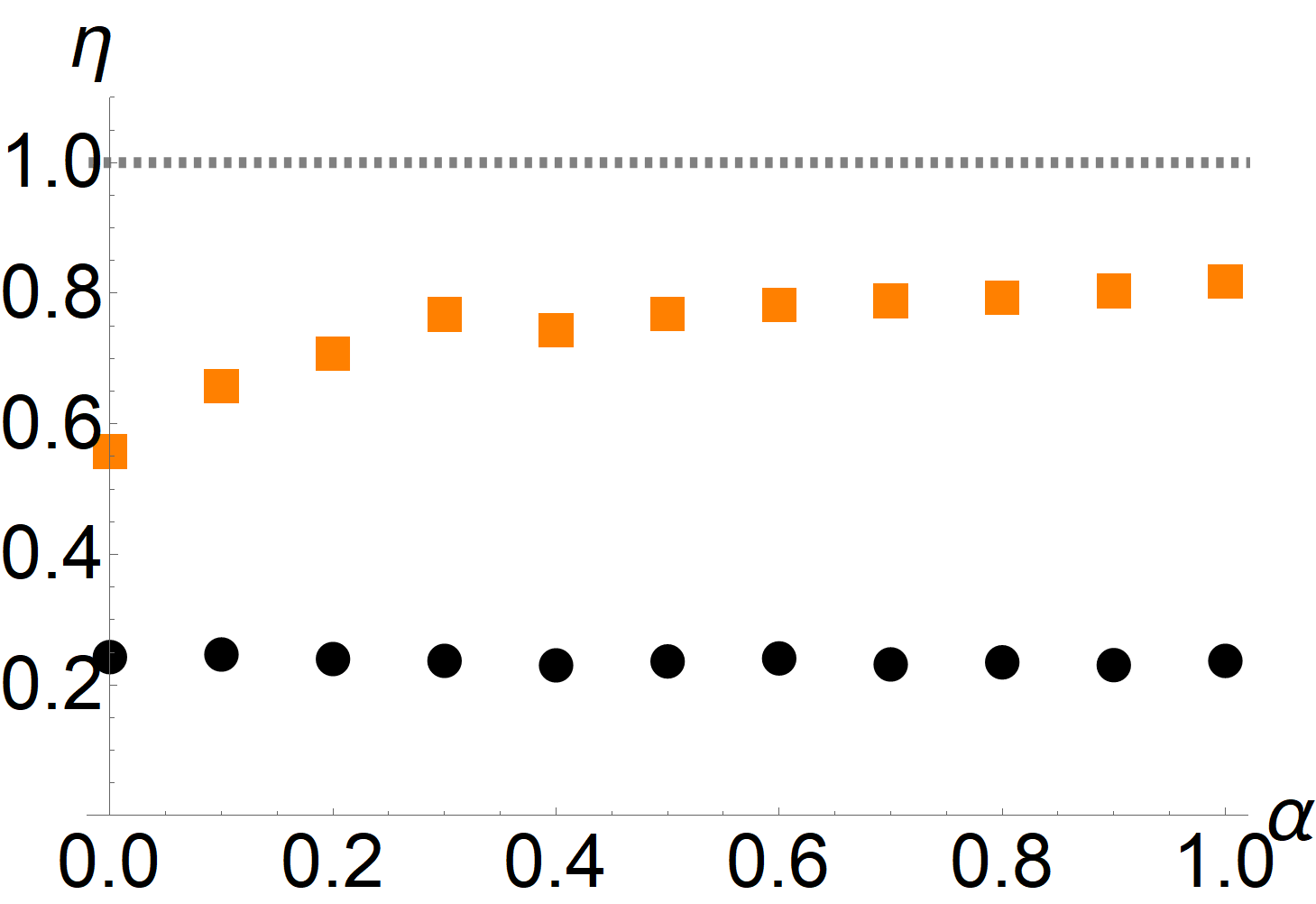

which are shown in Fig. 3. In the absence of bias, , the average current in the left ring vanishes and thus neither the TUR nor the CTUR gives us a non-trivial estimate of the entropy production. The contribution of to the total entropy production increases with increasing bias and thus we can get a non-trivial lower bound on the entropy production. Quantitatively, we observe that the estimate provided by the CTUR is considerably more accurate than the TUR, in particular for larger bias. This finding is independent of the precise potential landscape; indeed, the bound obtained from the CTUR for flat and random configurations of energies is qualitatively similar. However, as noted in Eq. (35), in the case of a Markov jump process, we are actually estimating the pseudo entropy production Eq. (36). Since the latter is smaller than the entropy production for large bias (i. e. asymmetry between forward and reverse transitions), the estimate becomes worse when we increase the bias further, even though most of the entropy production in the system now stems from the left ring. To quantify the difference between and , we also consider the modified efficiencies

| (52) | ||||

As can be seen from Fig. 3, the CTUR saturates this bound in the limit of large bias; thus, we can accurately estimate the pseudo entropy production from a measurement of the current and the occupation probabilities in the left ring. By contrast, just as for the molecular motor model, the TUR estimate becomes less accurate in the limit of large bias.
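The gap between the entropy production and the pseudo entropy production at large bias can be made concrete with a small numerical sketch. The code below assumes that the pseudo entropy production rate sums 2(a − b)²/(a + b) over all links, where a and b are the forward and backward steady-state probability fluxes, while the entropy production rate sums (a − b) ln(a/b); this form of Eq. (36) and the uniformly biased single ring used as a test case are assumptions for illustration and are simpler than the two-cycle model of Fig. 2.

```python
import numpy as np

def steady_state(W):
    """Stationary distribution for rates W[i, j] (jump j -> i, zero diagonal)."""
    n = W.shape[0]
    L = W - np.diag(W.sum(axis=0))        # generator: columns sum to zero
    A = np.vstack([L, np.ones(n)])        # append the normalization condition
    rhs = np.zeros(n + 1)
    rhs[-1] = 1.0
    return np.linalg.lstsq(A, rhs, rcond=None)[0]

def entropy_rates(W):
    """Return (entropy production rate, pseudo entropy production rate)."""
    p = steady_state(W)
    sigma, pseudo = 0.0, 0.0
    n = W.shape[0]
    for i in range(n):
        for j in range(i):                # each undirected link once
            a = W[i, j] * p[j]            # flux j -> i
            b = W[j, i] * p[i]            # flux i -> j
            if a > 0 and b > 0:
                sigma += (a - b) * np.log(a / b)
                pseudo += 2.0 * (a - b) ** 2 / (a + b)
    return sigma, pseudo

def biased_ring(n, bias):
    """Ring of n >= 3 sites with rates exp(+bias/2) and exp(-bias/2)."""
    W = np.zeros((n, n))
    for j in range(n):
        W[(j + 1) % n, j] = np.exp(bias / 2)
        W[(j - 1) % n, j] = np.exp(-bias / 2)
    return W

for bias in (0.1, 1.0, 4.0):
    sigma, pseudo = entropy_rates(biased_ring(4, bias))
    print(f"bias={bias}: pseudo/true = {pseudo / sigma:.3f}")
```

For this uniform ring the ratio of the two rates equals 2 tanh(b/2)/b for bias b, so the two quantities agree for weak bias and separate as the bias grows, in line with the behavior described above.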

In the case of small bias, it is expected that the (pseudo) entropy production cannot be estimated accurately, since it mostly originates from the right ring, and is not reflected in the current through the left ring. Thus, at most, we can estimate the contribution to the (pseudo) entropy production that originates from the left ring. To illustrate this, we compare the estimate of obtained from the TUR and CTUR with the pseudo entropy production of only the left ring, i. e. the system in Fig. 2 with . There is no rigorous inequality between the two, since the bias in the right ring may affect the occupation probabilities and currents in the left ring. However, we do observe that the CTUR for the current in the left ring almost exactly reproduces . This is an example of a more general statement: While the CTUR can potentially provide an accurate estimate of the (pseudo) entropy production, it can only capture the contributions to entropy production that correspond to the chosen current observable.

VI.3 Transport in a two-dimensional channel

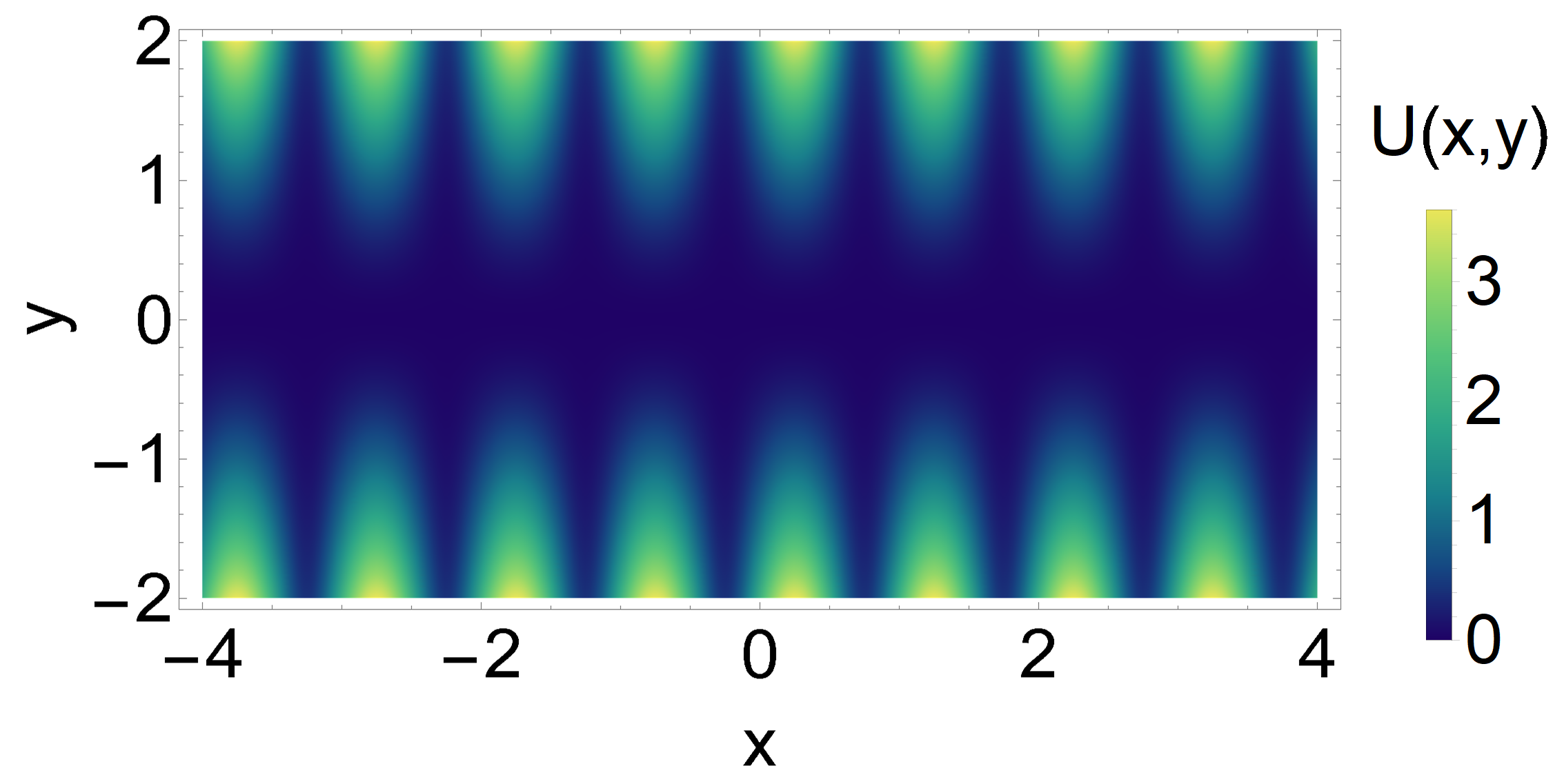

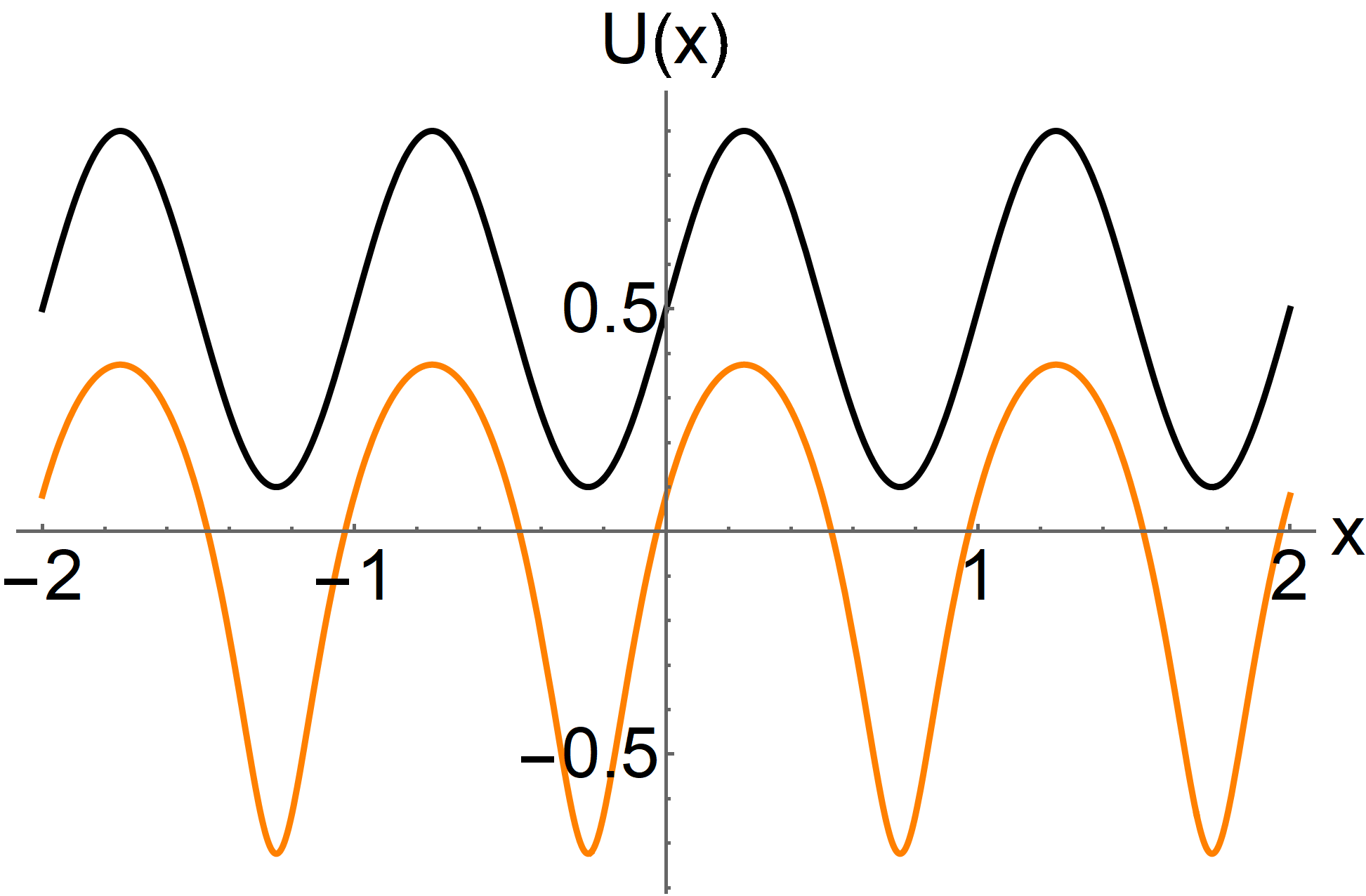

Both models in the previous examples have a very simple geometry: transitions only occur between neighboring sites along one-dimensional structures. To illustrate the usefulness of the CTUR also in more complicated situations, we study the transport of a Brownian particle in a two-dimensional, soft-walled channel described by the potential

| (53) |

Along the transverse -direction, the particle is confined in a parabolic trap, whose strength is periodically modulated in the longitudinal -direction, see Fig. 5. The period of the modulation is described by the wave number , its amplitude by the parameter , where the condition ensures that the potential remains trapping in the transverse direction. The longitudinal motion in the potential Eq. (53) is qualitatively similar to the motion in a one-dimensional periodic potential. This analogy can be made explicit by assuming that the relaxation in the transverse direction is fast compared to the longitudinal motion, so that the transverse coordinate is distributed according to the equilibrium distribution,

| (54) |

where is the conditional probability density of finding the particle at transverse coordinate given the longitudinal position and is the normalizing partition function. This assumption is justified for a relatively narrow channel and in the absence of bias. If it holds, we can describe the motion in the longitudinal direction via an effective one-dimensional Langevin dynamics

| (55) |

with the potential

| (56) |

For the form Eq. (53) of the potential, the integral can be evaluated explicitly and we find

| (57) |
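To make the origin of this effective potential explicit, the following worked example assumes a channel potential of the generic form $U(x,y) = \tfrac{1}{2}\kappa(x)\,y^2$ with a position-dependent stiffness $\kappa(x) > 0$, and defines the effective potential as $-T \ln Z(x)$ with the Boltzmann constant set to one. The symbol $\kappa(x)$ and this definition are assumptions for illustration; the specific modulation of Eq. (53) would have to be inserted for $\kappa(x)$.

```latex
\begin{align}
  Z(x) &= \int_{-\infty}^{\infty} dy \, e^{-\kappa(x) y^2/(2T)}
        = \sqrt{\frac{2 \pi T}{\kappa(x)}} , \\
  U_\text{eff}(x) &= -T \ln Z(x)
        = \frac{T}{2} \ln \kappa(x) + \text{const.} , \\
  -\partial_x U_\text{eff}(x) &= -\frac{T}{2} \frac{\kappa'(x)}{\kappa(x)} , \qquad
  \langle y^2 \rangle_x = \frac{T}{\kappa(x)} .
\end{align}
```

Under these assumptions the effective force is purely entropic: it scales linearly with the temperature and depends on the stiffness only through the logarithmic derivative of $\kappa(x)$, so the overall depth of the channel potential drops out.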

The effective potential governing the motion in the longitudinal direction appears only due to diffusion in the transverse direction—in the center of the channel, the potential is flat—and is thus proportional to the temperature, rather than the depth of the channel potential. If we assume Eq. (55) to describe the dynamics in the longitudinal direction, then we can use it to compute the local mean velocity in the presence of a constant bias ,

| (58) |

We remark that this expression is only an approximation, since, strictly speaking, the assumption of a transverse equilibrium distribution Eq. (54) breaks down in the presence of a bias. Thus, the true local mean velocity has to be computed using Eq. (53) and depends on both the longitudinal and transverse coordinate. However, the solution of the two-dimensional problem is considerably harder and does not permit a closed-form expression. Thus, we use Eq. (58) as an approximate expression and the corresponding observable

| (59) |

to compute the CTUR for the current given by the displacement in the longitudinal direction. Note that, in principle, we may obtain even simpler approximations for the local mean velocity. Since we expect the dynamics to be described by an effectively one-dimensional motion, the local mean velocity and probability density are related by , see the discussion in Section V.3. Provided that the bias is not too strong, we may further expect the probability density to be qualitatively similar to the equilibrium density. Thus, a crude approximation of the local mean velocity is given by

| (60) |

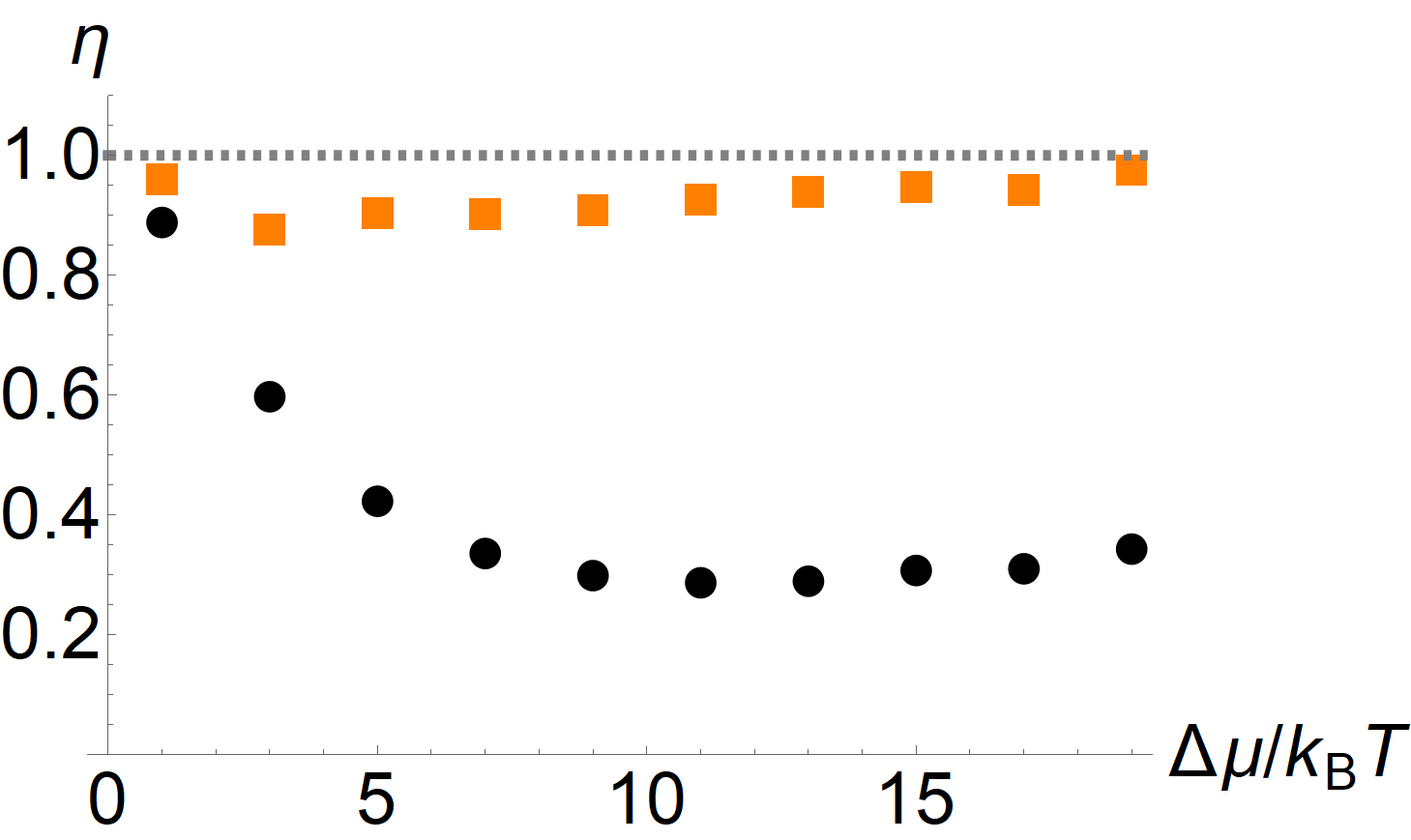

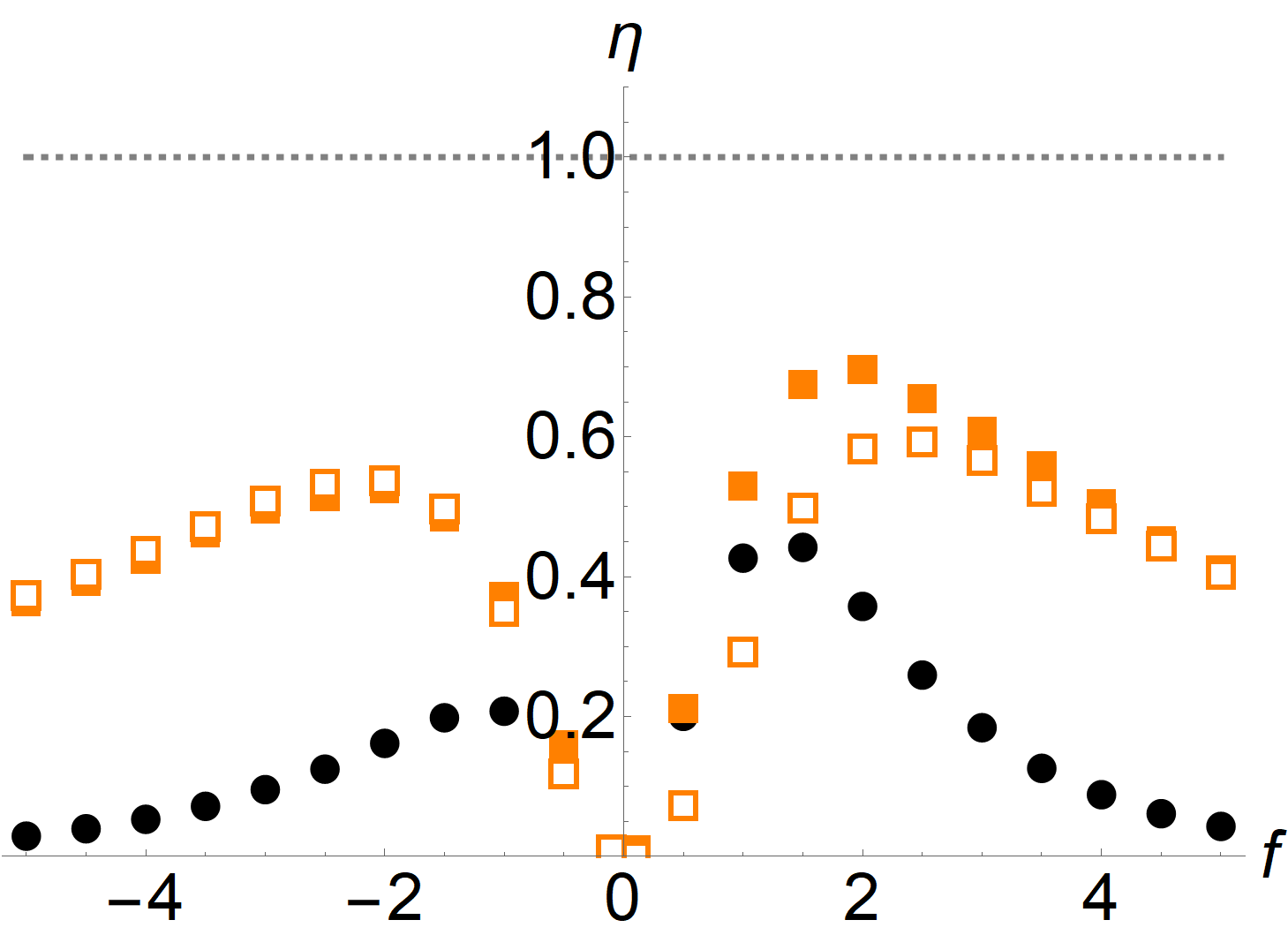

where we evaluated the potential at , which is a measure of the average distance of the particle from the center of the channel. The resulting bounds Eq. (48) obtained from the TUR and CTUR are shown in Fig. 6. For small bias and thus close to equilibrium, both the TUR and CTUR are close to an equality [33]. As we drive the system further away from equilibrium by increasing the bias, the TUR ratio decreases sharply. In this regime, the CTUR with either approximate local mean velocity yields a tighter bound than the TUR and remains close to an equality. For even stronger bias, the equilibrium argument behind the approximate expressions Eq. (58) and Eq. (60) as well as the one-dimensional approximation become increasingly invalid, and thus the tightness of the CTUR likewise starts to decrease. However, we stress that for moderately strong bias, the estimate on the entropy production from the CTUR with Eq. (58) is around of the true value, which is considerably tighter than the TUR estimate of less than .

VII Discussion

In this article, we have shown how to improve the TUR by taking into account the correlations between current and non-current observables and that the resulting inequality can yield a much improved estimate of entropy production. We remark that Eq. (14) is more general: It allows us to improve any bound obtained from the FRI, provided that we can find a quantity whose average is invariant under a suitable perturbation of the dynamics. This is a manifestation of the monotonicity of information established in Eq. (12). Since many generalizations of the TUR may be derived from the FRI [35, 18, 19, 20, 17], these generalizations can be improved in a completely analogous manner, by exploiting the existence of symmetries and conserved quantities. For example, in Ref. [18], the TUR was generalized from steady states to systems in the presence of time-periodic driving. In this case, the perturbation that leads to the TUR is a change in the driving frequency, and thus any non-current observable that is independent of the driving frequency can be used to obtain a tighter bound, similar to Eq. (25).

In the presence of several currents, it is only possible to estimate the partial entropy production corresponding to the measured current [36, 6], see also the discussion in Section VI.2. In such situations, measuring several currents and state-dependent observables is required to obtain a good estimate of the entropy production using Eq. (21). We note that, since the derivation of Ref. [36] involves minimizing the entropy production constrained on the measured current while keeping the steady state probability fixed, the CTUR extends in a straightforward manner to the tighter bounds involving the partial entropy production. In general, caution is needed when interpreting the results of the estimation of entropy production for complex systems. This is particularly true for biological systems, in which there are typically many out-of-equilibrium processes that do not directly contribute to any directly accessible observable, yet still result in (considerable) contributions to entropy production. For example, observing the motion of a biological cell can yield an accurate estimate of the entropy production corresponding to its mechanical motion, but it should not be interpreted as the entropy production of the cell, which involves a multitude of different chemical processes.

Finally, the fact that the model of F1-ATPase is close to saturating the CTUR at biological conditions poses the question of whether this finding extends to models of other molecular motors. In the light of interpreting Eq. (25) as a transport efficiency, Eq. (48), this appears reasonable: Achieving precise transport at minimal dissipation would obviously be advantageous for any machine, whether artificial or naturally occurring. We leave this issue for future research.

Acknowledgments

This work was supported by KAKENHI (Nos. 17H01148, 19H05795 and 20K20425).

References

- Sekimoto [2010] K. Sekimoto, Stochastic Energetics, Lecture Notes in Physics (Springer Berlin Heidelberg, 2010).

- Seifert [2012] U. Seifert, Stochastic thermodynamics, fluctuation theorems and molecular machines, Rep. Prog. Phys. 75, 126001 (2012).

- Harada and Sasa [2005] T. Harada and S.-i. Sasa, Equality connecting energy dissipation with a violation of the fluctuation-response relation, Phys. Rev. Lett. 95, 130602 (2005).

- Li et al. [2019] J. Li, J. M. Horowitz, T. R. Gingrich, and N. Fakhri, Quantifying dissipation using fluctuating currents, Nature Comm. 10, 1 (2019).

- Manikandan et al. [2020] S. K. Manikandan, D. Gupta, and S. Krishnamurthy, Inferring entropy production from short experiments, Phys. Rev. Lett. 124, 120603 (2020).

- Otsubo et al. [2020] S. Otsubo, S. Ito, A. Dechant, and T. Sagawa, Estimating entropy production by machine learning of short-time fluctuating currents, Phys. Rev. E 101, 062106 (2020).

- Van Vu et al. [2020] T. Van Vu, V. T. Vo, and Y. Hasegawa, Entropy production estimation with optimal current, Phys. Rev. E 101, 042138 (2020).

- Horowitz and Gingrich [2020] J. M. Horowitz and T. R. Gingrich, Thermodynamic uncertainty relations constrain non-equilibrium fluctuations, Nature Phys. 16, 15 (2020).

- Barato and Seifert [2015] A. C. Barato and U. Seifert, Thermodynamic uncertainty relation for biomolecular processes, Phys. Rev. Lett. 114, 158101 (2015).

- Gingrich et al. [2016] T. R. Gingrich, J. M. Horowitz, N. Perunov, and J. L. England, Dissipation bounds all steady-state current fluctuations, Phys. Rev. Lett. 116, 120601 (2016).

- Dechant and Sasa [2018a] A. Dechant and S.-i. Sasa, Current fluctuations and transport efficiency for general Langevin systems, J. Stat. Mech. Theory E. 2018, 063209 (2018a).

- Pietzonka et al. [2017] P. Pietzonka, F. Ritort, and U. Seifert, Finite-time generalization of the thermodynamic uncertainty relation, Phys. Rev. E 96, 012101 (2017).

- Dechant and Sasa [2020] A. Dechant and S.-i. Sasa, Continuous time-reversal and equality in the thermodynamic uncertainty relation (2020), arXiv:2010.14769 [cond-mat.stat-mech].

- Hwang and Hyeon [2018] W. Hwang and C. Hyeon, Energetic costs, precision, and transport efficiency of molecular motors, J. Phys. Chem. Lett. 9, 513 (2018).

- Dechant and Sasa [2018b] A. Dechant and S.-i. Sasa, Entropic bounds on currents in Langevin systems, Phys. Rev. E 97, 062101 (2018b).

- Pal et al. [2020] S. Pal, S. Saryal, D. Segal, T. S. Mahesh, and B. K. Agarwalla, Experimental study of the thermodynamic uncertainty relation, Phys. Rev. Research 2, 022044(R) (2020).

- Liu et al. [2020] K. Liu, Z. Gong, and M. Ueda, Thermodynamic uncertainty relation for arbitrary initial states, Phys. Rev. Lett. 125, 140602 (2020).

- Koyuk and Seifert [2019] T. Koyuk and U. Seifert, Operationally accessible bounds on fluctuations and entropy production in periodically driven systems, Phys. Rev. Lett. 122, 230601 (2019).

- Koyuk and Seifert [2020] T. Koyuk and U. Seifert, Thermodynamic uncertainty relation for time-dependent driving, Phys. Rev. Lett. 125, 260604 (2020).

- Van Vu and Hasegawa [2020] T. Van Vu and Y. Hasegawa, Thermodynamic uncertainty relations under arbitrary control protocols, Phys. Rev. Research 2, 013060 (2020).

- Zimmermann and Seifert [2012] E. Zimmermann and U. Seifert, Efficiencies of a molecular motor: a generic hybrid model applied to the F1-ATPase, New J. Phys. 14, 103023 (2012).

- Kawaguchi et al. [2014] K. Kawaguchi, S.-i. Sasa, and T. Sagawa, Nonequilibrium dissipation-free transport in F1-ATPase and the thermodynamic role of asymmetric allosterism, Biophys. J. 106, 2450 (2014).

- Dechant and Sasa [2020] A. Dechant and S.-i. Sasa, Fluctuation–response inequality out of equilibrium, Proc. Natl. Acad. Sci. 117, 6430 (2020).

- Dechant [2018] A. Dechant, Multidimensional thermodynamic uncertainty relations, J. Phys. A Math. Theor. 52, 035001 (2018).

- Radhakrishna Rao [1945] C. Radhakrishna Rao, Information and the accuracy attainable in the estimation of statistical parameters, Bull. Calcutta Math. Soc. 37, 81 (1945).

- Cramér [2016] H. Cramér, Mathematical methods of statistics, Vol. 9 (Princeton University Press, 2016).

- Chetrite and Touchette [2015] R. Chetrite and H. Touchette, Nonequilibrium Markov processes conditioned on large deviations, Ann. Henri Poincaré 16, 2005 (2015).

- Sasa [2014] S.-i. Sasa, Possible extended forms of thermodynamic entropy, J. Stat. Mech. Theory E. 2014, P01004 (2014).

- Hasegawa and Van Vu [2019] Y. Hasegawa and T. Van Vu, Uncertainty relations in stochastic processes: An information inequality approach, Phys. Rev. E 99, 062126 (2019).

- Pigolotti et al. [2017] S. Pigolotti, I. Neri, E. Roldán, and F. Jülicher, Generic properties of stochastic entropy production, Phys. Rev. Lett. 119, 140604 (2017).

- Shiraishi [2021] N. Shiraishi, Optimal thermodynamic uncertainty relation in Markov jump processes (2021), arXiv:2106.11634 [cond-mat.stat-mech].

- Pal et al. [2021] A. Pal, S. Reuveni, and S. Rahav, Thermodynamic uncertainty relation for systems with unidirectional transitions, Phys. Rev. Research 3, 013273 (2021).

- Macieszczak et al. [2018] K. Macieszczak, K. Brandner, and J. P. Garrahan, Unified thermodynamic uncertainty relations in linear response, Phys. Rev. Lett. 121, 130601 (2018).

- Liepelt and Lipowsky [2007] S. Liepelt and R. Lipowsky, Kinesin’s network of chemomechanical motor cycles, Phys. Rev. Lett. 98, 258102 (2007).

- Di Terlizzi and Baiesi [2018] I. Di Terlizzi and M. Baiesi, Kinetic uncertainty relation, J. Phys. A Math. Theor. 52, 02LT03 (2018).

- Polettini et al. [2016] M. Polettini, A. Lazarescu, and M. Esposito, Tightening the uncertainty principle for stochastic currents, Phys. Rev. E 94, 052104 (2016).

Appendix A Optimal state dependent observables for stochastic entropy production

In Ref. [13], an explicit expression for the variance of a stochastic current in a diffusion process was derived,

| (61) | ||||

where is the joint probability density corresponding to Eq. (15) in the absence of the discrete degree of freedom. In the above expression, we defined

| (62) |

Here, is the reversible part of the drift,

| (63) |

tr denotes the trace and is the Jacobian matrix of the weighting function . In a similar manner, we may derive the covariance between the current and the state-dependent observable ,

| (64) | ||||

while the variance of is given by

| (65) |

The stochastic entropy production is the current with the weighting function . For this choice, we find

| (66) |

where we used that the expression in parentheses is nothing but the steady-state condition of the Fokker-Planck equation Eq. (16). In this case, the expressions Eq. (61) and Eq. (64) simplify,

| (67) |

With these expressions, it is obvious that choosing , i. e.,

| (68) |

results in

| (69) |

This choice thus realizes the equality in the CTUR Eq. (3).

For the case of a pure jump process, we have for the variances and covariance,

| (70) | ||||

We decompose the transition rates into the (irreversible) local mean velocity and a reversible part,

| (71) |

Using that , we can write

| (72) |

We focus on the stochastic pseudo-entropy with the weighting function

| (73) |

For this choice, we obtain

| (74) |

where we used the steady state condition of the master equation. As a consequence, all terms involving in Eq. (72) vanish, and we have

| (75) | ||||

Note that we can change the second argument of the conditional probabilities, since we have

| (76) |

where we used in the first, in the second and renamed indices in the third step. We choose the function corresponding to the local average of the stochastic pseudo entropy

| (77) |

which, when plugged into the above expressions, yields

| (78) |

This is precisely the same as the first term in and thus, we finally obtain

| (79) |

which yields equality in Eq. (35).

Appendix B Dependence of the CTUR on the size of the data set and sampling interval

The CTUR Eq. (3) is a relation between the averages and variances of different physical observables. Formally, these quantities are defined for an infinite ensemble. However, in reality the number of trajectories is always finite, both in experiments and numerical simulations. It is clear that the averages and variances computed from a finite number of random trajectories are themselves random quantities; thus, for any finite data set, there is a finite probability that the relation Eq. (3) is violated. Moreover, the state-dependent observable Eq. (23) is defined as a time-integral over continuous-time trajectories. However, in reality, the sampling interval is likewise finite, that is, instead of a continuous trajectory , we observe a sequence of discrete values with and . Here, we want to investigate the dependence of the bound Eq. (3) on and . For simplicity, we focus on a simple model that serves as a minimal example of a non-equilibrium steady state. We consider a single overdamped particle in a one-dimensional periodic potential , which is driven by a constant force , while the environment is characterized by the mobility and temperature . The corresponding Langevin equation reads

| (80) |

For long times, this system settles into a periodic steady state with probability density . Since the system is one-dimensional, this also fixes the steady-state local mean velocity , see Section V.3. As a current observable, we consider the total displacement

| (81) |

As in Section VI.1, we choose the state-dependent observable defined by the inverse probability density,

| (82) |

which is proportional to the heuristic observable defined in Section IV. is estimated by the occupation fraction of the entire set of trajectories. For a finite sampling interval , we instead use the discrete approximation of the integral

| (83) |

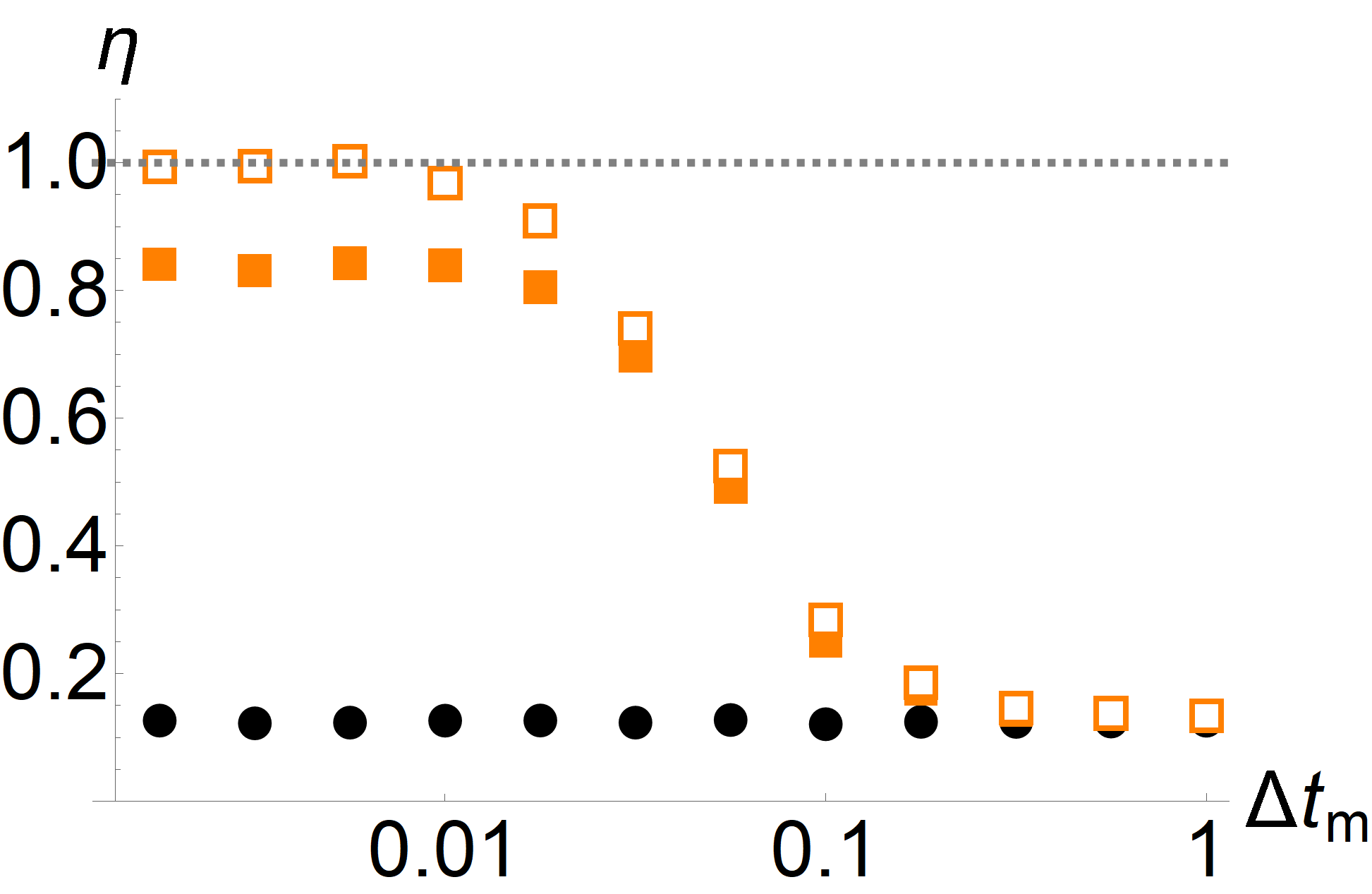

We further define an observable parameterized as Eq. (49) with and numerically maximize the Pearson coefficient with respect to the parameters and . As a concrete example, we consider the case with , , , and . This corresponds to a moderately strong driving at relatively low temperature. In this regime, the relevant timescale describing the dynamics is the time it takes the particle to traverse one period of the potential, . For the above parameter values, the TUR is generally not tight; the ratio defined in Eq. (48) has a value of and thus the ratio between the average current and its variance underestimates the entropy production by about a factor of . By contrast, as shown in Fig. 7, the CTUR with the numerically optimized observable can provide an accurate estimate of the entropy production, given a sufficient number of trajectories and a sufficiently small sampling interval. As a function of (left panel of Fig. 7), the ratios corresponding to both the TUR and the CTUR show noticeable fluctuations for a small number of trajectories. However, while the value of the TUR ratio stabilizes at around , we need around trajectories for the CTUR to provide a reliable estimate. The reason is that, since the CTUR is much tighter than the TUR in this case, the Pearson coefficient is close to . Since the left-hand side of Eq. (3) diverges as approaches unity, small fluctuations in its value have a large impact on the estimate. As a function of the sampling interval , we note that, if is comparable to the transport timescale , the CTUR does not yield any improvement over the TUR. The reason is that, for such a large sampling interval, the spatially periodic function in Eq. (83) can no longer resolve the motion of the particles. Thus, the observable is no longer correlated with the displacement of the particle, causing the Pearson coefficient to vanish. Note that the TUR, which only depends on the accumulated current until the final time, is independent of the sampling interval. We see that the CTUR can yield a significant improvement over the TUR for and saturates at . We remark that this is still two orders of magnitude larger than the sampling interval needed to accurately determine the entropy production directly from the trajectory using its representation as a stochastic current

| (84) |
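The dependence on the number of trajectories and on the sampling interval discussed in this appendix can be explored with a short simulation. The sketch below integrates an overdamped Langevin equation with the Euler-Maruyama scheme in a cosine potential of unit period, estimates the steady-state density from a histogram, and evaluates the inverse-density observable as a discrete sum with a stride between recorded samples. The potential shape, all parameter values, the function name and the efficiency formulas (TUR efficiency 2⟨J⟩²/(Var(J) ΔS), CTUR efficiency divided by 1 − χ², entropy in units of the Boltzmann constant) are assumptions for illustration and do not reproduce the parameters used for Fig. 7.

```python
import numpy as np

def simulate(n_traj=500, n_steps=2000, n_burn=500, dt=1e-3, stride=1,
             force=8.0, temp=0.1, u0=1.0, mobility=1.0, n_bins=50, seed=0):
    """TUR and CTUR efficiencies for a driven particle in U(x) = u0*cos(2*pi*x)."""
    rng = np.random.default_rng(seed)
    noise = np.sqrt(2.0 * mobility * temp * dt)

    def step(x):
        # drift = mobility * (-U'(x) + force)
        drift = mobility * (2.0 * np.pi * u0 * np.sin(2.0 * np.pi * x) + force)
        return x + drift * dt + noise * rng.standard_normal(x.shape)

    x = rng.uniform(0.0, 1.0, n_traj)
    for _ in range(n_burn):               # relax towards the steady state
        x = step(x)
    x_start = x.copy()

    bins = []                             # histogram bin of each recorded sample
    for k in range(n_steps):
        if k % stride == 0:               # sampling interval = stride * dt
            idx = ((x % 1.0) * n_bins).astype(int)
            bins.append(np.minimum(idx, n_bins - 1))
        x = step(x)
    bins = np.array(bins)                 # shape (n_samples, n_traj)

    J = x - x_start                       # total displacement per trajectory
    counts = np.bincount(bins.ravel(), minlength=n_bins)
    density = np.maximum(counts, 1) / (counts.sum() / n_bins)
    # Discrete approximation of the time integral of 1/p(x(t)).
    Z = (1.0 / density)[bins].sum(axis=0) * stride * dt

    mean_J, var_J = J.mean(), J.var(ddof=1)
    entropy = force * mean_J / temp       # steady state: dissipated work / T
    eta_tur = 2.0 * mean_J**2 / (var_J * entropy)
    chi2 = np.corrcoef(J, Z)[0, 1] ** 2
    return {"mean_J": mean_J, "chi2": chi2,
            "eta_tur": eta_tur, "eta_ctur": eta_tur / (1.0 - chi2)}

fine = simulate(stride=1)
coarse = simulate(stride=100)
print(fine)
print(coarse)
```

With a fixed seed, both calls generate the same trajectories, so the TUR efficiency is identical for the two strides, whereas the Pearson coefficient, and with it the CTUR efficiency, depends on how finely the trajectories are sampled. Varying `n_traj` in the same way gives a feeling for the statistical fluctuations of both estimates.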