Improving the Knowledge Gradient Algorithm

Abstract

The knowledge gradient (KG) algorithm is a popular policy for the best arm identification (BAI) problem. It is built on the simple idea of always choosing the measurement that yields the greatest expected one-step improvement in the estimate of the best mean of the arms. In this research, we show that this policy has limitations, causing the algorithm not asymptotically optimal. We next provide a remedy for it, by following the manner of one-step look ahead of KG, but instead choosing the measurement that yields the greatest one-step improvement in the probability of selecting the best arm. The new policy is called improved knowledge gradient (iKG). iKG can be shown to be asymptotically optimal. In addition, we show that compared to KG, it is easier to extend iKG to variant problems of BAI, with the -good arm identification and feasible arm identification as two examples. The superior performances of iKG on these problems are further demonstrated using numerical examples.

1 Introduction

The best arm identification (BAI) is a sequential decision problem where in each stage, the agent pulls one out of given arms and observes a noisy sample of the chosen arm. At the end of the sampling stage, the agent needs to select the arm that is believed to be the best according to the samples. In this research, we let the best arm be the one with the largest mean. BAI is a useful abstraction of issues faced in many practical settings berry1978modified ; gilotte2018offline and has been widely studied in the machine learning community even2006action ; audibert2010best . Since in practical problems, the target arm(s) (to be identified) is not necessarily the best arm, some variant models of BAI have also been proposed in the literature, e.g., top- arm identification bubeck2013multiple ; xiao2018simulation , Pareto front identification auer2016pareto , -good arm identification mason2020finding , feasible arm identification gao2016efficient ; katz2018feasible , etc.

In this research, we focus on the fixed-budget BAI, in which the total number of samples (budget) is fixed and known by the agent. The goal is to correctly identify the best arm when the budget is used up. To solve this problem, many methods have been proposed, e.g., successive rejects (SR) audibert2010best , expected improvements (EI) chick2010sequential , top-two sampling qin2017improving ; russo2020simple , knowledge gradient (KG) ryzhov2010robustness ; li2022finite , optimal computing budget allocation (OCBA)chen2000simulation ; gao2017new ; li2023convergence , etc. Among these methods, KG has been prevailing. It was first proposed in gupta1994bayesian and further analyzed in frazier2008knowledge ; frazier2009knowledge . It is built on the simple idea of always pulling the arm that yields the greatest expected one-step improvement in the estimate of the best mean of the arms. This improvement measure is analytical, making the algorithm easily implementable. KG often offers reasonable empirical performances and has been successfully applied in a number of real applications schoppe2010wind ; negoescu2011knowledge .

However, we observe that this definition of KG has limitations, causing the algorithm not asymptotically optimal. Here by not being asymptotically optimal, we mean that the KG algorithm is not rate optimal, in the sense that the probability of the best arm being falsely selected based on the posterior means of the arms does not converge to zero at the fastest possible rate. This is resulted from KG allocating too few samples to the best arm and excessive samples to the remaining arms. Note that Frazier et al. frazier2008knowledge claimed that KG is “asymptotically optimal”, but in their context, “asymptotically optimal” is consistent, i.e., all the arms will be infinitely sampled as the round , so that the best arm will be correctly selected eventually. This is a relatively weak result for BAI algorithms (the simple equal allocation is also consistent). In this paper, asymptotically optimal refers to rate optimal.

Contributions. We propose a new policy that can overcome this limitation of KG. The new policy follows the manner of one-step look ahead of KG, but pulls the arm that yields the greatest one-step improvement in the probability of selecting the best arm. We call it improved knowledge gradient (iKG) and show that it is asymptotically optimal. This policy is originated from the thought of looking at whether the best arm has been selected at the end of sampling, instead of looking at the extent that the mean of the selected arm has been maximized. Although both ways can identify the best arm, it turns out that the algorithms developed from them are significantly different in the rates of posterior convergence. Another advantage of iKG over KG is that iKG is more general and can be more easily extended to variant problems of BAI. We use -good arm identification and feasible arm identification as examples, develop algorithms for them using the idea of iKG and establish asymptotic optimality for the algorithms.

This paper is conceptually similar to qin2017improving which improves the EI algorithm for BAI. However, for EI, sampling ratios of any two arms in the non-best set are already asymptotically optimal. One only needs to introduce a parameter to balance the probabilities of sampling the best arm and the non-best set without changing the sampling policy within the non-best set to further improve EI. For KG, sampling ratios are not asymptotically optimal for any two out of the arms. It requires a fundamental change on the sampling policy that influences the sampling rates of all the arms to improve KG. Moreover, the improved rate of posterior convergence of EI in qin2017improving still depends on which is not necessarily optimal, while we can show that this rate of iKG is optimal.

2 Knowledge Gradient and its Limitations

In this section, we review KG and discuss its limitations. Suppose there are arms in BAI. In each round , the agent chooses any arm to pull and obtains a noisy sample . After rounds, the agent needs to select an arm that he/she believes to be the best. Under the framework of the KG algorithm, ’s are assumed to be independent across different rounds and arms and following the normal distribution with unknown means and known variances . The best arm is assumed to be unique. Without loss of generality, let , where indicates the arm with -th largest mean.

The KG algorithm can be derived from a dynamic programming (DP) formulation of BAI. The state space consists of all the possible posterior means and variances of the arms, denoted as . State in round can be written as . In the Bayesian model, the unknown mean is treated as random and let be the random variable following its posterior distribution. We adopt normal distribution priors . With samples of the arms, we can compute their posterior distributions, which are still normal in round by conjugacy. The posterior mean and variance of arm are

| (1) |

In this paper, we adopt a non-informative prior for each arm , i.e., and . Denote the action space as and transition function as . Suppose is a random variable following the posterior distribution of arm . Then, the state transition can be written as . Let be the sampling policy that guides the agent to pull arm in round and be the set of sampling policies adapted to the filtration . After rounds, the estimated best arm is selected and a terminal reward is received. We can write our objective as

| (2) |

The DP principle implies that the value function in round can be computed recursively by

We define the Q-factors as

and the DP principle tells us that any policy satisfying

is optimal. However, the optimal policy is basically intractable unless for problems with very small scales, known as the “curse of dimensionality”.

On the other hand, note that except the terminal reward , this problem has no rewards in the other rounds, so we can restructure as a telescoping sequence

Thus, can be treated as the cumulation of multiple one-step improvements , . A class of one-step look ahead algorithms iteratively pull the arm that maximizes the expectation of the one-step improvement on the value function

| (3) |

These algorithms are not optimal in general unless there is only one round left, i.e., .

The KG algorithm falls in this class. It sets the terminal reward as . With this reward, the one-step improvement in (3) becomes

and in each round, the KG algorithm pulls the arm .

We next characterize for the KG algorithm the rate of posterior convergence of , the probability that the best arm is falsely selected.

Proposition 1.

Let , . For the KG algorithm,

where

We observe that is not optimal. To make this point, Proposition 2 gives an example that is no better than this rate of the TTEI algorithm qin2017improving when the parameter (probability of sampling the best arm) of TTEI is set to some suboptimal value.

Proposition 2.

For the TTEI algorithm qin2017improving , the rate of posterior convergence of exists and is denoted as . Let its probability of sampling the best arm . We have .

According to the proof of Proposition 2, there are configurations of the BAI problem leading to , i.e., is not optimal. In fact, with , is achieved only in some special cases, e.g., when .

3 Improved Knowledge Gradient

In this section, we propose an improved knowledge gradient (iKG) algorithm. We still follow the manner of one-step look ahead of KG, but set the terminal reward of problem (2) as . That is, for the goal of identifying the best arm, we reward the selected arm by a 0-1 quantity showing whether this arm is the best arm, instead of the mean of this arm (as in KG).

In this case, , where

| (4) |

However, the probability in (4) does not have an analytical expression. To facilitate the algorithm implementation and analysis, we adopt an approximation to it using the Bonferroni inequality galambos1977bonferroni :

and can be approximately computed as

| (5) |

Note that the Bonferroni inequality has been adopted as an approximation of the probability of correct selection in the literature for development of BAI algorithms chen2000simulation . For our purpose, we can show that the use of this approximation still makes the resulting algorithm asymptotically optimal and empirically superior.

Let iKGt,i be the one-step improvement in (3) with treated as unchanged after one more sample and approximated by (5). We have the following proposition to compute iKGt,i. The iKG algorithm pulls the arm with the largest iKGt,i in each round.

Proposition 3.

With the definition of iKGt,i above, we have

| (6) |

Both KG and iKG are greedy algorithms that look at the improvement only one-step ahead. The essential difference between them is on the reward they use for the event of best arm identification. For KG, it is the mean of the arm selected, while for iKG, it is a 0-1 quantity showing whether the best arm is selected. It is interesting to note that the choice between these two rewards has been discussed in the control community for optimization of complex systems, known as cardinal optimization (similar to KG) vs. ordinal optimization (similar to iKG) ho1992ordinal , with the discussion result in line with this research, indicating that ordinal optimization has advantages over cardinal optimization in the convergence rates of the optimization algorithms ho1999explanation .

Theorem 1.

For the iKG algorithm, , where

| (7) |

and is the sampling rate of arm satisfying

| (8) |

In addition, for any BAI algorithms,

Theorem 1 shows that the rate of posterior convergence of the iKG algorithm is the fastest possible. We still use TTEI as an example. This theorem indicates that for any and the equality holds only when is set to , where is the optimal value of and is typically unknown.

4 Variant Problems of BAI

Another advantage of iKG over KG is that iKG is more general, in the sense that it can be easily extended to solve variant problems of BAI. In the variants, the target arms to be identified are not the single best arm, but no matter how the target arms are defined, one can always look at the event that whether these arms are correctly identified at the end of sampling and investigate the probability of this event to develop iKG and the algorithm. In contrast, it is difficult to extend KG to identify arms that cannot be found through optimizing means of these (and/or other) arms. In this section, we extend iKG to two BAI variants: -good arm identification mason2020finding and feasible arm identification katz2018feasible . We develop algorithms for them and establish their asymptotic optimality. Note that in these two variant problems, the target arms need to be found by comparing their means with some fixed values. In such cases, the idea of KG is not straightforward.

4.1 -Good Arm Identification

We follow the notation in Sections 2 and 3. For the arms, suppose . Given , the -good arm identification problem aims to find all the arms with , i.e., all the arms whose means are close enough to the best (-good). Assume that no arms have means lying on . Denote the set of -good arms as and the estimated set of -good arms after rounds as . We set the terminal reward , i.e., whether the set is correctly selected. Then, , where

Again, applying the Bonferroni inequality,

| (9) |

Let iKG be the one-step improvement in (3) with treated as unchanged after one more sample and approximated by the right-hand side of (9). We have the following proposition to compute iKG .

Proposition 4.

With the definition of iKG above, we have

| (10) |

To identify the -good arms, the iKG- algorithm pulls the arm with the largest iKG in each round. For this algorithm, we can show that the rate of posterior convergence of is the fastest possible.

Theorem 2.

For the iKG- algorithm, , where

| (11) |

and is the sampling rate of arm satisfying

| (12) |

In addition, for any -good arm identification algorithms,

4.2 Feasible Arm Identification

In the feasible arm identification, samples from pulling arms are -dimensional vectors instead of scalars, where each dimension of the vector corresponds to some measure of the system performance and is the observation associated with arm and measure . Suppose ’s follow the normal distribution with unknown means and known variances . We impose constraints on arms and measures . The goal of this problem is to find the set of feasible arms . Let the estimated set of feasible arms after rounds be and . We assume that ’s are independent across different rounds and measures , and ’s do not lie on the constraint limits . To facilitate the analysis, we also define for round the set of measures satisfied by arm and the set of measures violated by arm .

Set the terminal reward , i.e., whether the set is correctly selected. Then, , where

Applying the Bonferroni inequality,

| (13) |

The inequality holds because .

Let iKG be the one-step improvement in (3) with , and treated as unchanged after one more sample and approximated by the right-hand side of (13). We have the following proposition to compute iKG .

Proposition 5.

With the definition of iKG above, we have

| (14) |

To identify the feasible arms, the iKG-F algorithm pulls the arm with the largest iKG in each round. For this algorithm, we can show that the rate of posterior convergence of is also the fastest possible.

Theorem 3.

For the iKG-F algorithm, , where

| (15) |

and is the sampling rate of arm satisfying

| (16) |

In addition, for any feasible arm identification algorithms

5 Numerical Experiments

In this section, we show empirical performances of the iKG, iKG- and iKG-F algorithms on synthetic and real-world examples. For the best arm identification problem, we compare iKG with the following algorithms.

-

•

Expected Improvement (EI) chick2010sequential . This is another common strategy for BAI. In each round, it pulls the arm offering the maximal expected improvement over the current estimate of the best mean of the arms.

-

•

Top-Two Expected Improvement (TTEI) qin2017improving . This is a modification of the EI algorithm by introducing a parameter to control the probabilities of sampling the best arm and the non-best set. We set the parameter in TTEI as its default value .

-

•

Knowledge Gradient. This is the algorithm under study in this research.

For the -good arm identification problem, we compare iKG- with the following algorithms.

-

•

APT Algorithm locatelli2016optimal . It is a fixed-budget algorithm for identifying the arms whose means are above a given threshold. We set the input tolerance parameter as and the threshold as the posterior mean of the estimated best arm minus .

-

•

Algorithm mason2020finding . It is a fixed-confidence algorithm for -good arm identification. It pulls three arms in each round, the estimated best arm, one arm above the threshold and one arm below the threshold. We set the input tolerance parameter as and .

For the feasible arm identification problem, we compare iKG-F with the following algorithms.

-

•

MD-UCBE Algorithm katz2018feasible . This is a fixed-budget algorithm for feasible arm identification based on the upper confidence bound. We set the input tolerance parameter as and hyperparameter , where is a constant that can be computed. Katz-Samuels and Scott katz2018feasible showed that with , the performance of MD-UCBE is nearly optimal.

-

•

MD-SAR Algorithm katz2018feasible . This is a fixed-budget algorithm for feasible arm identification based on successive accepts and rejects. We set the input tolerance parameter as .

In addition, iKG, iKG- and iKG-F will be compared with the equal allocation, where each arm is simply played with the same number of rounds. It is a naive method and is often used as a benchmark against which improvements might be measured.

The examples for testing include three synthetic examples, called Examples 1-3, and three real examples, namely the Dose-Finding Problem, Drug Selection Problem, and Caption Selection Problem. For Example 1-3 and the Dose-Finding problem, samples of the arms are two-dimensional. We call the measures of them measures 1 and 2. When the examples are tested for the best arm identification and -good identification, only measure 1 will be used for identifying good/best arms. When the examples are tested for the feasible arm identification, both measures will be used for feasibility detection. For the Drug Selection and Caption Selection problems, samples of the arms are one-dimensional. They are tested for the best arm identification, -good identification and feasible arm identification.

Synthetic Datasets. We consider three examples, all containing ten arms.

Example 1. The means in measure 1 of the ten arms are , , , , , , , , and , and the means in measure 2 of the ten arms are , , , , , , ,, and , all of which are uniformly generated in . Samples of the arms are corrupted by normal noises . The best arm is arm and -good arms are arms 3 and 4. For the feasible arm identification, we choose arms with means in both measures less than . Then the feasible arms are arms , , , and .

Example 2. We keep the setting of Example 1. Distributions of the noises for arms 1-5 are changed to .

Example 3. Consider functions , and . The means in measure 1 of the ten arms are with . The means in measure 2 of the ten arms are with and with . Noises follow the normal distribution . The best arm is arm and -good arms are arms 1-3. For the feasible arm identification, we choose arms with means in measure greater than and means in measure less than . The feasible arms are arms 1-3.

Dose-Finding Problem. We use the data in genovese2013efficacy (see ACR in week ) for treating rheumatoid arthritis by the drug secukinumab. There are four dosage levels, mg, mg, mg, and mg, and a placebo, which are treated as five arms. We develop a simulation model based on the dataset. Each arm is associated with two performance measures: the probability of the drug being effective and the probability of the drug causing infections. The means of the five arms are , , , and . Samples of each arm are corrupted by normal noises . The best arm is arm 3 and the 0.03-good arms are arms 2 and 3. For the feasible arm identification, we find the arms whose probability of being effective is larger than and the probability of causing infections is less than . The feasible arms are arms 2 and 3.

Drug Selection Problem. We consider five contraceptive alternatives based on the Drug Review Dataset (https://doi.org/10.24432/C5SK5S): Ethinyl estradiol / levonorgest, Ethinyl estradiol / norethindro, Ethinyl estradiol / norgestimat, Etonogestrel and Nexplanon, which can be treated as five arms. The dataset provides user reviews on the five drugs along with related conditions and ratings reflecting overall user satisfaction. We set the means of the five arms as , , , and , and the variances of the five arms as , , , and , all calculated by the data. When this example is used for the best arm identification and -good arm identification, the best arm (with the highest user satisfaction) and 0.003-good arm are both arm 3 (Ethinyl estradiol / norgestimat). When this example is used for feasible arm identification, we will select the drugs whose ratings are over , and the feasible arms are arm (Ethinyl estradiol / levonorgest), arm (Ethinyl estradiol / norethindro), arm (Ethinyl estradiol / norgestimat), arm (Etonogestrel) and arm (Nexplanon).

Caption Selection Problem. We aim to select good captions based on the New Yorker Cartoon Caption Contest Dataset (https://nextml.github.io/caption-contest-data/). In the contests, each caption can be treated as an arm. The dataset provides the mean and variance of each arm, which can be used to set up our experiments. We will test contests 853 (Caption 853) and 854 (Caption 854).

In Caption 853, we randomly select ten captions as arms. We set the means of the ten arms as , , , , , , , , and , and the variances of the arms as , , , , , , , , and , which are all calculated by the data. When this example is used for the best arm identification, the best arm (with the highest funniness score) is arm 10. When this example is used for -good arm identification, the 0.1-good arms are arms 3 and 10. When this example is used for feasible arm identification, we will select the captions whose funniness scores are over 1.4, and the feasible arms are arms 3 and 10.

| Example | Example 1 | Example 2 | Example 3 | Dose-finding | Drug Selection | Caption 853 | Caption 854 | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1000 | 5000 | 4400 | 18000 | 400 | 1000 | 1200 | 13000 | 2400 | 98000 | 1600 | 3000 | 12000 | 18000 | ||

| BAI | Equal Allocation | 0.38 | 0.22 | 0.44 | 0.31 | 0.25 | 0.13 | 0.35 | 0.05 | 0.43 | 0.27 | 0.17 | 0.11 | 0.26 | 0.18 |

| EI | 0.36 | 0.21 | 0.40 | 0.28 | 0.28 | 0.22 | 0.46 | 0.21 | 0.46 | 0.37 | 0.14 | 0.12 | 0.26 | 0.23 | |

| TTEI | 0.25 | 0.07 | 0.32 | 0.09 | 0.13 | 0.02 | 0.31 | 0.03 | 0.55 | 0.28 | 0.04 | 0.01 | 0.10 | 0.06 | |

| KG | 0.29 | 0.14 | 0.32 | 0.13 | 0.14 | 0.03 | 0.40 | 0.03 | 0.44 | 0.28 | 0.04 | 0.01 | 0.11 | 0.05 | |

| iKG | 0.21 | 0.03 | 0.23 | 0.03 | 0.09 | 0.01 | 0.29 | 0.01 | 0.38 | 0.23 | 0.02 | 0.00 | 0.07 | 0.04 | |

In Caption 854, we also randomly select ten captions as arms. We set the means of the ten arms as , , , , , , , , and , and the variances of the arms as , , , , , , , , and , all calculated by the data. When this example is used for the best arm identification, the best arm is arm 8. When this example is used for -good arm identification, the 0.05-good arms are arms 4 and 8. When this example is used for feasible arm identification, we will select the captions whose funniness scores are over 1.25, and the feasible arms are arms 4 and 8.

| Example | Example 1 | Example 2 | Example 3 | Dose-finding | Drug Selection | Caption 853 | Caption 854 | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1000 | 4000 | 2400 | 12000 | 400 | 4000 | 1600 | 6000 | 2600 | 90000 | 4000 | 10000 | 9400 | 15000 | ||

| -good | Equal Allocation | 0.54 | 0.20 | 0.65 | 0.28 | 0.61 | 0.26 | 0.46 | 0.18 | 0.62 | 0.37 | 0.28 | 0.19 | 0.14 | 0.05 |

| APT | 0.28 | 0.17 | 0.52 | 0.25 | 0.72 | 0.49 | 0.56 | 0.53 | 0.74 | 0.70 | 0.41 | 0.35 | 0.48 | 0.49 | |

| 0.29 | 0.07 | 0.35 | 0.11 | 0.51 | 0.06 | 0.38 | 0.17 | 0.64 | 0.34 | 0.21 | 0.10 | 0.12 | 0.04 | ||

| iKG- | 0.17 | 0.03 | 0.29 | 0.00 | 0.48 | 0.03 | 0.34 | 0.06 | 0.60 | 0.27 | 0.10 | 0.02 | 0.11 | 0.03 | |

| Example | Example 1 | Example 2 | Example 3 | Dose-finding | Drug Selection | Caption 853 | Caption 854 | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3400 | 11000 | 4800 | 14000 | 2200 | 4800 | 2000 | 4000 | 100000 | 140000 | 4000 | 10000 | 30600 | 44000 | ||

| feasible arm | Equal Allocation | 0.34 | 0.26 | 0.33 | 0.23 | 0.22 | 0.14 | 0.22 | 0.18 | 0.03 | 0.03 | 0.36 | 0.29 | 0.18 | 0.07 |

| MD-UCBE | 0.27 | 0.16 | 0.33 | 0.26 | 0.05 | 0.01 | 0.20 | 0.17 | 0.06 | 0.06 | 0.32 | 0.15 | 0.06 | 0.04 | |

| MD-SAR | 0.74 | 0.33 | 0.68 | 0.22 | 0.30 | 0.03 | 0.79 | 0.55 | 0.06 | 0.02 | 0.58 | 0.19 | 0.08 | 0.05 | |

| iKG-F | 0.23 | 0.02 | 0.24 | 0.01 | 0.04 | 0.00 | 0.14 | 0.01 | 0.01 | 0.01 | 0.20 | 0.07 | 0.05 | 0.00 | |

For the tested algorithms, probabilities of false selection (PFS) are obtained based on the average of 100 macro-replications. Tables 1-3 show the PFS of the algorithms under some fixed sample sizes (additional numerical results about the PFS and sampling rates of the tested algorithms are provided in the Supplement). The proposed iKG, iKG- and iKG-F perform the best. For the best arm identification, EI tends to allocate too many samples to the estimated best arm, leading to insufficient exploration in the remaining arms, while KG tends to allocate too few samples to the estimated best arm, leading to excessive exploration in the remaining arms. TTEI always allocates approximately one-half budget to the estimated best arm when , leading to the budget not being the best utilized. For the -good identification, APT and are inferior because the former insufficiently pulls the estimate best arm, leading to inaccurate estimates of the threshold, while the latter falls in the fixed-confidence regime that focuses on making guarantees on the probability of false selection instead of minimizing it. For the feasible arm identification, both MD-UCBE and MD-SAR allocate too many samples to the arms near the constraint limits. For the three problems, equal allocation performs the worst in general, because it does not have any efficient sampling mechanisms for identifying the target arms in these problems.

6 Conclusion

This paper studies the knowledge gradient (KG), a popular policy for the best arm identification (BAI). We observe that the KG algorithm is not asymptotically optimal, and then propose a remedy for it. The new policy follows KG’s manner of one-step look ahead, but utilizes different evidence to identify the best arm. We call it improved knowledge gradient (iKG) and show that it is asymptotically optimal. Another advantage of iKG is that it can be easily extended to variant problems of BAI. We use -good arm identification and feasible arm identification as two examples for algorithm development and analysis. The superior performances of iKG on BAI and the two variants are further demonstrated using numerical examples.

References

- [1] D. A. Berry. Modified two-armed bandit strategies for certain clinical trials. Journal of the American Statistical Association, 73(362):339–345, 1978.

- [2] A. Gilotte, C. Calauzènes, T. Nedelec, A. Abraham, and S. Dollé. Offline a/b testing for recommender systems. In Proceedings of the 11th ACM International Conference on Web Search and Data Mining, pages 198–206, 2018.

- [3] E. Even-Dar, S. Mannor, Y. Mansour, and S. Mahadevan. Action elimination and stopping conditions for the multi-armed bandit and reinforcement learning problems. Journal of Machine Learning Research, 7(6), 2006.

- [4] J.-Y. Audibert, S. Bubeck, and R. Munos. Best arm identification in multi-armed bandits. In 23rd Conference on Learning Theory, pages 41–53, 2010.

- [5] S. Bubeck, T. Wang, and N. Viswanathan. Multiple identifications in multi-armed bandits. In International Conference on Machine Learning, pages 258–265. PMLR, 2013.

- [6] H. Xiao and S. Gao. Simulation budget allocation for selecting the top-m designs with input uncertainty. IEEE Transactions on Automatic Control, 63(9):3127–3134, 2018.

- [7] P. Auer, C. K. Chiang, R. Ortner, and M. Drugan. Pareto front identification from stochastic bandit feedback. In Artificial Intelligence and Statistics, pages 939–947. PMLR, 2016.

- [8] B. Mason, L. Jain, A. Tripathy, and R. Nowak. Finding all -good arms in stochastic bandits. Advances in Neural Information Processing Systems, 33:20707–20718, 2020.

- [9] S. Gao and W. Chen. Efficient feasibility determination with multiple performance measure constraints. IEEE Transactions on Automatic Control, 62(1):113–122, 2016.

- [10] J. Katz-Samuels and C. Scott. Feasible arm identification. In International Conference on Machine Learning, pages 2535–2543. PMLR, 2018.

- [11] S. E. Chick, J. Branke, and C. Schmidt. Sequential sampling to myopically maximize the expected value of information. INFORMS Journal on Computing, 22(1):71–80, 2010.

- [12] C. Qin, D. Klabjan, and D. Russo. Improving the expected improvement algorithm. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pages 5387–5397, 2017.

- [13] D. Russo. Simple Bayesian algorithms for best arm identification. Operations Research, 68(6):1625–1647, 2020.

- [14] I. O. Ryzhov, P. I. Frazier, and W. B. Powell. On the robustness of a one-period look-ahead policy in multi-armed bandit problems. Procedia Computer Science, 1(1):1635–1644, 2010.

- [15] Y. Li and S. Gao. On the finite-time performance of the knowledge gradient algorithm. In International Conference on Machine Learning, pages 12741–12764. PMLR, 2022.

- [16] C. H. Chen, J. Lin, E. Yücesan, and S. E. Chick. Simulation budget allocation for further enhancing the efficiency of ordinal optimization. Discrete Event Dynamic Systems, 10:251–270, 2000.

- [17] S. Gao, W. Chen, and L. Shi. A new budget allocation framework for the expected opportunity cost. Operations Research, 65(3):787–803, 2017.

- [18] Y. Li and S. Gao. Convergence rate analysis for optimal computing budget allocation algorithms. Automatica, 153:111042, 2023.

- [19] S. S. Gupta and K. J. Miescke. Bayesian look ahead one stage sampling allocations for selecting the largest normal mean. Statistical Papers, 35(1):169–177, 1994.

- [20] P. I. Frazier, W. B. Powell, and S. Dayanik. A knowledge-gradient policy for sequential information collection. SIAM Journal on Control and Optimization, 47(5):2410–2439, 2008.

- [21] P. Frazier, W. Powell, and S. Dayanik. The knowledge-gradient policy for correlated normal beliefs. INFORMS Journal on Computing, 21(4):599–613, 2009.

- [22] C. Schoppe. Wind and pumped-hydro power storage: Determining optimal commitment policies with knowledge gradient non-parametric estimation. B.Sc. Thesis at Princeton University, 2010.

- [23] D. M. Negoescu, P. I. Frazier, and W. B. Powell. The knowledge-gradient algorithm for sequencing experiments in drug discovery. INFORMS Journal on Computing, 23(3):346–363, 2011.

- [24] J. Galambos. Bonferroni inequalities. The Annals of Probability, pages 577–581, 1977.

- [25] Y.-C. Ho, S. Sreenivas, and P. Vakili. Ordinal optimization of deds. Discrete Event Dynamic Systems, 2(1):61–88, 1992.

- [26] Y.-C. Ho. An explanation of ordinal optimization: Soft computing for hard problems. Information Sciences, 113(3-4):169–192, 1999.

- [27] A. Locatelli, M. Gutzeit, and A. Carpentier. An optimal algorithm for the thresholding bandit problem. In International Conference on Machine Learning, pages 1690–1698. PMLR, 2016.

- [28] M. C. Genovese, P. Durez, H. B. Richards, J. Supronik, E. Dokoupilova, V. Mazurov, J. A. Aelion, S. H. Lee, C. E. Codding, H. Kellner, et al. Efficacy and safety of secukinumab in patients with rheumatoid arthritis: a phase ii, dose-finding, double-blind, randomised, placebo controlled study. Annals of the Rheumatic Diseases, 72(6):863–869, 2013.

- [29] I. O. Ryzhov. On the convergence rates of expected improvement methods. Operations Research, 64(6):1515–1528, 2016.

- [30] P. Glynn and S. Juneja. A large deviations perspective on ordinal optimization. In Proceedings of the 2004 Winter Simulation Conference, 2004., volume 1. IEEE, 2004.

Appendix A Proof of Proposition 1

To facilitate the analysis, we make the following definition. For two real-valued sequences and , if , we call them logarithmically equivalent, denoted by . We first analyze . Note that and we have

Then . In round , follows . Let and be the cumulative density function and probability density function of the standard normal distribution, respectively. We have

Let and . By the following property of the cumulative probability function of the standard normal distribution

and , we have

| (17) |

Denote as the number of samples for arm before round , i.e., . By (1) in the main text, we have

Appendix B Proof of Proposition 2

Similar to the proof of Proposition 1, we have

Since for the TTEI algorithm,

we have

According to (19), for the KG algorithm, . Now by setting of the TTEI algorithm to the same value, the sampling rates of the best arm from these two algorithms will be the same. According to Theorem 2 of [12], among algorithms allocating the same proportion of the samples to the best arm, of the TTEI algorithm is optimal, i.e., .

Appendix C Proof of Propositions 3, 4 and 5

Propositions 3, 4 and 5 give the expressions of iKGt,i, iKG and iKG. Below we introduce a lemma first, which will be used in the proofs of the three propositions.

Lemma 1.

If arm is sampled from in round , and follow and respectively. Then,

Proof of Proposition 3:

For the best arm identification problem, if ,

If ,

Proof of Proposition 4:

We explore the expression of in the -good arm identification problem first. We know that

Note that in round , follows . Similarly as in the proof of Proposition 1, we can know that if

and if

Then

For the -good arm identification problem, if ,

If ,

Proof of Proposition 5:

We explore the expression of in the feasible arm identification problem first. We know that

Note that in round , follows . Similarly as in the proof of Proposition 1, we can know that if and measure ,

and if and measure ,

Then

For the feasible arm identification problem,

Appendix D Proof of Theorem 1

Our proof of Theorem 1 will be divided into the analysis of the consistency, sampling rates and asymptotic optimality of the iKG algorithm.

We first show the consistency, i.e., each arm will be pulled infinitely by the algorithm as the round goes to infinity. Since

| (21) |

it is obvious that for . To prove the consistency, we define a set . It suffices to prove that , and then the claim is straightforward based on the Strong Law of Large Numbers. For any and arm , there exists such that when , , because arms not in will be infinitely pulled. Since the is a continuous function and holds for arm , then for any , there exists such that when , iKG.

Arms are pulled for only a finite number of rounds. Then exists and we have . According to the continuity of the function , there exists such that iKG. Since is arbitrary, let , and then iKGiKGt,i holds, which implies . As the total number of rounds tend to infinity, will become an empty set eventually. In other words, all the arms will be pulled infinitely and holds with probability .

We next analyze the sampling rate of each arm by the iKG algorithm. Let , we know that when is large, iKG for all . Then iKGiKGiKGiKG, where . For any and ,

where is the sampling rate of arm . For any , we have if and only if

| (22) |

by the continuity of the function and . For arms ,

Notice that . When is large enough, is sufficiently small according to the consistency of the algorithm. Then

Since for , given , we have

By (22), we have

Then

Hence

Since can be arbitarily small, .

We have shown that

Then

| (23) |

By (22),

| (24) |

where in (23) and (24) is the solution of (8) in the main text.

Next, we will show that for any BAI algorithms, . Let be set of the feasible sampling rates of the arms. The proof of this claim is divided into two stages. First, suppose that is fixed for some . We will show that is achieved when

| (25) |

In other words, in this stage, we will prove the first and third equations in (8) of the main text. We prove it by contradiction. Suppose there exists a policy with sampling rates of the arms such that . Since the solution of (25) is unique, there exists an arm satisfying . We consider a new policy. There exists such that and for . Then

which yields a contradiction. Therefore, the first and third equations in (8) of the main text hold.

In the second stage, we will prove the second equation in (8) of the main text. Consider the following optimization problem

| (26) |

The Lagrangian function of (26) is

where ’s are the Lagrange multipliers. By the KKT conditions, we have for all and . Then

i.e., .

Remark: The conditions in (8) of the main text coincide with the optimality conditions developed in [30] using the OCBA method under normal sampling distributions.

Appendix E Proof of Theorem 2

Our proof of Theorem 2 will be divided into the analysis of the consistency, sampling rates and asymptotic optimality of the iKG- algorithm.

We first show consistency, i.e., each arm will be pulled infinitely by the algorithm as the round goes to infinity. Since

| (27) |

it is obvious that for . To prove the consistency, it suffices to prove that , and then the claim is straightforward based on the Strong Law of Large Numbers. For any and arm , there exists such that when , , because arms not in will be infinitely pulled. Since the is a continuous function and holds for arm , then for any , there exists such that when , iKG.

Arms are pulled for only a finite number of rounds. Then exists and we have . According to the continuity of the function , there exists such that iKG. Since is arbitrary, let , and then iKGiKG holds, which implies . As the total number of rounds tend to infinity, will become an empty set eventually. In other words, all the arms will be pulled infinitely and holds with probability .

We next analyze the sampling rate each arm by the iKG- algorithm. Let , we know that when is large, iKG holds for . Then iKGiKGiKGiKG, where . For any and ,

where is the sampling rate of arm . For any , we have if and only if

| (28) |

by the continuity of the function and . For arms ,

Notice that . When is large enough, is sufficiently small according to the consistency of the algorithm. Then

Since for , given , we have

By (28), we have

Then

Hence

Since can be arbitarily small, .

We know that

and

Then

We have

| (29) |

By (28),

| (30) |

where in (29) and (30) is the solution of (12) in the main text.

Next, we will show that for any -good arm identification algorithms, . Let be set of the feasible sampling rates of the arms. The proof of this claim is divided into two stages. First, suppose that is fixed for some . We will show that is achieved when

| (31) |

In other words, in this stage, we will prove the first and third equations in (12) of the main text. We prove it by contradiction. Suppose there exists a policy with sampling rates of the arms such that . Since the solution of (31) is unique, there exists an arm satisfying . We consider a new policy. There exists such that and for . Then

which yields a contradiction. Therefore, the first and third equations in (12) of the main text hold.

In the second stage, we will prove the second equation in (12) of the main text. Consider the following optimization problem

| (32) |

The Lagrangian function of (32) is

where ’s are the Lagrange multipliers. By the KKT conditions, we have for all and . Then

i.e., .

Appendix F Proof of Theorem 3

Our proof of Theorem 3 will be divided into the analysis of the consistency, sampling rates and asymptotic optimality of the iKG-F algorithm.

We first show consistency, i.e., each arm will be pulled infinitely by the algorithm as the round goes to infinity. Since

| (33) |

It is obvious that for . To prove the consistency, it suffices to prove that and then the claim is straightforward based on the Strong Law of Large Numbers. For any and , there exists such that when , , because arms not in will be infinitely pulled. Since the is a continuous function and holds for arm , then for any , there exists such that when , iKG.

Arms are pulled for only a finite number of rounds. Then exists and we have . According to the continuity of the function , there exists such that iKG. Since is arbitrary, let and then iKGiKG holds, which implies . As the total number of rounds tend to infinity, will become an empty set eventually. In other words, all the arms will be pulled infinitely.

We next analyze the sampling rate each arm by the iKG-F algorithm. Let , we know that when is large, iKG holds for . Then iKGiKGiKGiKG, where . For any ,

where is the sampling rate of arm . We have shown that for any and . We can find a sufficiently large positive integer such that when , , , and , where and . Note that and . If , . We have shown that . Hence for any by the continuity of the function . We can get similar result when . Hence, if and only if

| (34) |

by the continuity of the function and .

We have known that

We have

Then

For arm ,

For arm ,

because . Hence

We have

| (35) |

By (34),

| (36) |

where in (35) and (36) is the solution of (16) in the main text.

Next, we will show that for any feasible arm identification algorithms, . Let be set of the feasible sampling rates of the arms. We prove it by contradiction. Suppose there exists a policy with sampling rates of the arms such that

We will show that is achieved when

| (37) |

Since the solution of (37) is unique, there exists an arm satisfying

We consider a new policy. There exists such that and for . Then

which yields a contradiction. Therefore, the equations in (16) of the main text hold.

Appendix G Additional Numerical Results

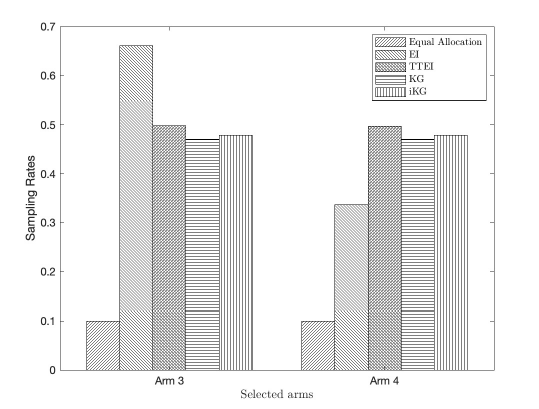

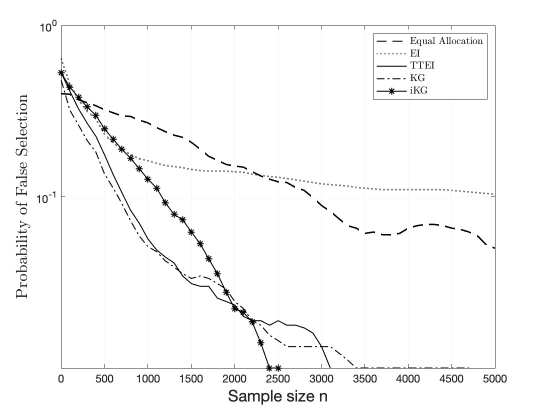

In this section, we provide additional numerical results for the experiments conducted in Section 5 of the main text. Figures 1(a)-7(a) show how the probabilities of false selection of the compared algorithms change with the sample sizes for the best arm identification problem, and Figures 1(b)-7(b) show the sampling rates of the algorithms on some selected arms. It can be observed in Figures 1(a)-7(a) that the proposed iKG algorithm performs the best, followed by TTEI, KG, EI and the equal allocation. On the log scale, the probability of false selection (PFS) values of the iKG algorithm demonstrate linear patterns, indicating the potentially exponential convergence rates. For both EI and KG, the rates of the posterior convergence are not optimal, which might influence their empirical performances. The equal allocation performs the worst in general. In Figures 1(b)-7(b), we can see that TTEI always allocates half samples to the best arm when , EI allocates too many samples to the best arm while KG allocates too few samples to the best arm.

Figures 8(a)-14(a) show how the probabilities of false selection of the compared algorithms change with the sample sizes for the -good arm identification, and Figures 8(b)-14(b) show the sampling rates of the algorithms on some selected arms. It can be observed in Figures 8(a)-14(a) the proposed iKG- algorithm performs the best and demonstrates a linear pattern on the log scale. (ST)2 and APT are inferior, and the equal allocation performs the worst. In Figures 8(b)-14(b), we can see that APT allocates too few samples to the best arm and too many samples to the arms near the threshold. ST2 allocates too few samples to the best arm, which influences the accuracy of the threshold.

Figures 15(a)-21(a) show how the probabilities of false selection of the compared algorithms change with the sample sizes for the feasible arm identification, and Figures 15(b)-21(b) show the sampling rates of the algorithms on some selected arms. The results in Figures 15(a)-21(a) are similar to those in Figures 1(a)-14(a). The proposed iKG-F algorithm has the best performance, followed by the compared MD-UCBE, equal allocation and MD-SAR. In Figures 15(b)-21(b), we can see that MD-UCBE and MD-SAR allocate too many samples to the arms near the constraint limits.