Improving Surrogate Model Robustness to Perturbations for Dynamical Systems Through Machine Learning and Data Assimilation

Abstract

Many real-world systems are modelled using complex ordinary differential equations (ODEs). However, the dimensionality of these systems can make them challenging to analyze. Dimensionality reduction techniques like Proper Orthogonal Decomposition (POD) can be used in such cases. However, these reduced order models are susceptible to perturbations in the input. We propose a novel framework that combines machine learning and data assimilation techniques to improving surrogate models to handle perturbations in input data effectively. Through rigorous experiments on dynamical systems modelled on graphs, we demonstrate that our framework substantially improves the accuracy of surrogate models under input perturbations. Furthermore, we evaluate the framework’s efficacy on alternative surrogate models, including neural ODEs, and the empirical results consistently show enhanced performance.

Keywords: Surrogate model Machine learning Dimensionality reduction Reaction-diffusion system

1 Introduction

Complex networks provide valuable insights into real-world systems, such as the spread of epidemics [1] and the understanding of biochemical and neuronal processes [2]. However, modeling these systems becomes computationally complex when dealing with large state spaces. To address this challenge, methods like Proper Orthogonal Decomposition (POD) ([3], [4], [5], [6]) are employed to capture patterns while operating in reduced dimensions. While dimensionality reduction techniques like POD are effective, their sensitivity to data necessitates robust surrogate models that can maintain accuracy under input perturbations. Consequently, the question arises regarding how to enhance a surrogate model to accommodate perturbed data sets. This study proposes a framework designed to improve the robustness of surrogate models, ensuring their reliability under varying input conditions.

Surrogate models serve as approximations of complex real-world systems, which are often modeled using ordinary differential equations (ODEs) or partial differential equations (PDEs). In many cases, the complexity of these systems makes it difficult to fully capture their underlying processes. To address the challenges of modeling high-dimensional and chaotic systems, several machine learning (ML) approaches have been proposed to construct surrogate models that provide accurate and computationally efficient approximations. Several ML-based approaches have been developed to construct surrogate models that provide accurate and computationally efficient approximations. These include analog models [7], recurrent neural networks (RNNs) [8, 9], residual neural networks that approximate resolvents [10, 11], and differential equation-based models such as in [12, 13, 14, 15]. A notable study [10] integrates machine learning and data assimilation (DA) to improve predictions in scenarios with sparse observations. However, the DA step is computationally expensive, limiting its practical applicability. To address model inaccuracies, researchers have explored alternative approaches such as weak-constraint DA methods [16] and ML-DA frameworks [17].

The contributions of our paper can be summarized as follows:

-

1.

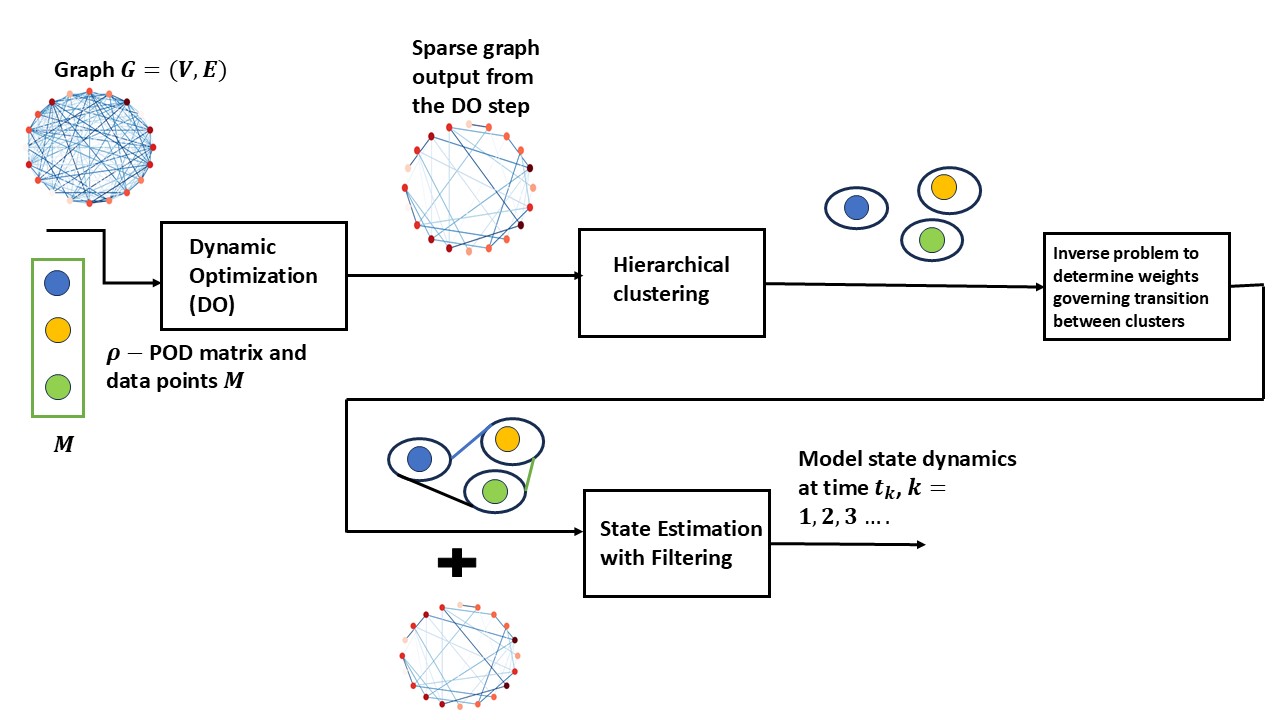

We introduce a novel framework (Figure 1) that improves the robustness of surrogate models against input perturbations by integrating data assimilation and machine learning techniques.

-

2.

We conduct extensive experiments on dynamical systems represented as graphs, specifically the diffusion system () and the chemical Brusselator model () discussed in Section 2. For dynamical systems on graphs, we propose a dynamic optimization step, described in Sections 5.2 and 5.3, to obtain a sparse graph and reduce memory complexity.

- (a)

-

(b)

Section 5.3 presents the dynamic optimization step for reaction-diffusion systems on graphs, detailing the required constraints.

- 3.

-

4.

In Section 7, we demonstrate how our framework can be integrated into a general setting to reinforce neural ODE-based surrogate models trained on data-driven systems. The improved models exhibit enhanced performance and robustness against input perturbations, outperforming both POD-based models and the original neural ODE solutions, as shown in Figures 3, 4, and 5.

2 Background

We present the fundamental tools and techniques essential for understanding the methodologies discussed in this paper.

2.1 Orthogonal collocation method

The orthogonal collocation method is a well-established numerical technique for solving differential equations, particularly in dynamic optimization problems. It provides a versatile approach applicable to both ordinary and partial differential equations [18, 19].

The proposed method entails partitioning the problem domain into a discrete set of collocation points, ensuring that the polynomial approximation of the vector field satisfies the orthogonality condition. Common choices for collocation points include Legendre, Chebyshev, and Gaussian points.

The method enforces the differential equations at the collocation points, resulting in a system of algebraic equations that can be solved using numerical techniques such as Newton’s method or direct solvers. The choice of collocation points and basis functions significantly impacts the method’s accuracy and efficiency. To illustrate the method, we provide an example using the Lotka-Volterra system.

Here and denote the prey and predator population. The parameters determine the prey growth rate and determine the predator growth rate. If we consider a single collocation element with two nodes per element, the orthogonal collocation method [18] solves the following system of equations to determine the prey and predator populations at time , denoted as .

. This method is applied in the dynamic optimization step of our framework (Section 4).

2.2 Spatio temporal propagation in graphs

Spatiotemporal propagation in complex networks has been widely studied across disciplines such as physics, biology, and neuroscience. Complex networks comprise interconnected nodes whose interactions give rise to dynamic processes. Understanding the mechanisms governing information, signal, or dynamic propagation within these networks is crucial for analyzing their complex behaviors and emergent properties.

Spatiotemporal propagation describes the transmission or diffusion of information, signals, or dynamics across interconnected nodes and edges in a complex network. It examines how localized events, disturbances, or modifications in one region of the network evolve and influence other nodes or areas. This phenomenon has significant implications across diverse fields, including physics, biology, and neuroscience. The following examples highlight key applications of complex networks.

-

1.

Diffusion Processes : The study of diffusion phenomena, including heat transfer and molecular diffusion, aids in modeling and optimizing processes involving transport and dispersion. By considering the normalized Laplacian matrix of the graph, the heat equation can be represented as an equivalent discrete dynamical system. Normalized Laplacian matrix , where is the diagonal matrix of degrees and is the Laplacian matrix of the graph.

Here denotes the temperature at node and time .

- 2.

-

3.

Epidemic Spread : Understanding how infectious diseases propagate through social networks can aid in designing effective strategies for disease control, information dissemination, and opinion formation. The SIS (susceptible-infected-susceptible) model, used to study epidemic spreading, is described as follows:

represents the -th entry of the adjacency matrix of the graph. denotes the number of nodes.

-

4.

Neural Dynamics (): The study of neural activity propagation in brain networks provides insights into cognition and neurological disorders. One such system, described in [22], follows the equation:

2.3 Proper Orthogonal Decomposition for Dynamical systems

This section provides a concise overview of the application of Proper Orthogonal Decomposition (POD) to initial value problems in dynamical systems [3]. Given a dataset consisting of a collection of points , where denotes the state of the system at time for a particular trajectory , the POD method seeks a subspace such that

is minimized. is the orthogonal projection onto the subspace and denotes the projected data set.

is the best dimensional approximating affine subspace, with the matrix of projection consisting of leading eigenvectors of the covariance matrix (). The subspace for a dataset is uniquely determined by the projection matrix and the mean , .

denotes the number of trajectories.

An asymptotic and sensitivity analysis of POD is presented in [3]. Consider a dynamical system in governed by a vector field :

The reduced-order model (ROM) vector field is defined as:

Thus an initial value problem for the system with using the projection method is given by,

| (2) |

Here, is the projection of onto the subspace .

The sensitivity of the POD projection to changes in the dataset is quantified by the following proposition from [3]:

Proposition: (Rathinam and Petzold [3]) Consider applying POD to a data set to find the best approximating dimensional subspace. Let the ordered eigenvalues of the covariance matrix of the data be given by . Suppose which ensures that is well defined. Then

| (3) |

2.4 Filtering

This section introduces the filtering problem and outlines the key steps in the filtering framework. For further details on the concept of filtering, see [23, 24]. Rooted in Bayesian inference, optimal filtering estimates the state of time-varying systems under noisy measurements. Its objective is to achieve statistically optimal estimation of the system state. Optimal filtering follows the Bayesian framework for state estimation, integrating statistical optimization with Bayesian reasoning to improve applications in signal processing, control systems, and sensor networks.

In Bayesian optimal filtering, the system state consists of dynamic variables such as position, velocity, orientation, and angular velocity. Due to measurement noise, observations do not yield deterministic values but rather a distribution of possible states, introducing uncertainty. The system state evolution is modeled as a dynamic system with process noise capturing inherent uncertainties in system dynamics. While the underlying system is often deterministic, stochasticity is introduced to represent model uncertainties.

The system’s state evolves according to:

where is the system state at time , and represents the model error. The observations are given by:

where represents the observation noise.

The filtering step estimates the state at time () based on observations up to .

3 Problem statement

Surrogate modeling techniques, such as the reduced-order model (ROM) discussed in Section 2.3, provide computational efficiency but are highly sensitive to input variations, as indicated in Equation 3

Consequently, when initialized with a new random condition, the surrogate model () efficiently approximates the system trajectory but may fail to capture deviations from the true state.

The surrogate model is used to obtain compressed representations of state vectors at various timesteps, which are treated as noisy observations. The observations are compressed for better memory efficiency if the model operates on the state dimension, as in the neural ODE surrogate model (Section 7). Let (where ) denote the state observation relationship. and denote the high and low wavelength components of error, capturing deviations between the true state and the state obtained from the surrogate model () at time . and denote the high and low-wavelength components of error that capture the difference between the observation and the state at time .

denotes the true state of the system at time . denotes the forward model. For instance, if the forward model uses the Euler forward scheme and the surrogate model applies POD to the vector field , then:

where denotes the step size. The state forward dynamics and state observation relationship are given by:

4 Proposed Framework

The key steps of the framework, as illustrated in Figure 1, are as follows. For brevity, the framework is discussed in the context of dynamical systems represented on graphs. For dynamical systems that do not involve graphs, the dynamic optimization step is not required. The framework’s applicability to general modeling scenarios is demonstrated in Section 7, where a neural ODE-based surrogate model is used to learn from data.

-

1.

Dynamic optimization. For dynamical systems on graphs, a dynamic optimization problem is formulated to extract a sparse graph from known solution trajectory snapshots. The sparse graph improves memory efficiency in the filtering step (Step 4) of the framework. The original graph can be replaced with the sparse graph, further enhancing memory efficiency. This step outputs the graph Laplacian matrix of the sparse graph, given by , where the weights for linear and nonlinear dynamical systems on graphs are determined by solving the optimization problems detailed in Sections 5.2 and 5.3.

-

2.

Hierarchical clustering. This step quantifies deviations between the surrogate model (e.g., POD) and the actual system trajectories. Given a dataset and surrogate model predictions , we apply hierarchical clustering to partition into clusters. The assigned cluster labels are represented as , where denotes the cluster assignment for data point . For each cluster , we construct a set of triplets , where is the number of triplets in cluster . For reduced order model using POD (Section 2.3), we denote . The center of cluster is the triplet , where . As shown in Figure 2, a visualization of the clusters is provided, with representing the indices of data points within cluster .

-

3.

Inverse Problem for Estimating Weights Between Clusters. For an initial condition at time step , we obtain a compressed representation of the data point . For example, when using the POD method, we get the compressed representation of as . From the clusters formed in Step 3, we assign the cluster to the point based on the following,

(4) The initial cluster distribution is a one-hot vector, where the index corresponding to is set to 1. The cluster distribution at time is denoted by . For successive transitions , it is essential to efficiently determine the cluster of each approximate state generated by the surrogate model at time . This step is performed for the estimation of high wavelength components of errors described in Section 3. The transitioning of cluster distribution () is modelled as a Markovian process discussed in [25]. We consider the nodes of the clusters from Step 2 as nodes of graph , , where the weights of the graph are unknown. The topology of the graph is considered complete with self-loops, meaning there is an edge between each node of the cluster and an edge from each node to itself. We use a supervised learning approach to determine the weights within the graph, where the labels from Step 2 are used in the optimization objective function with the cross-entropy loss (See P).

The transitions of the cluster distribution is modeled as Markovian, based on the assumption that the states of the ODE exhibit Markovian properties, which is explained below. The general explicit Runge-Kutta numerical scheme using slopes ([26]) for obtaining solution of the differential equation is given by the following relation

From this formulation, it follows that the solution transitions of the ODE adhere to a discrete-time Markov chain:

In a graph , a walk is represented as a sequence of vertices , where each consecutive pair of vertices is connected by an edge in . In other words, there is an edge between and for all . A random walk is characterized by the transition probabilities , which define the probability of moving from vertex to vertex in one step. Each vertex in the graph can transition to multiple neighboring vertices . If we consider the transitions between the clusters as Markovian, the transition matrix , where is the diagonal matrix of degrees and is the adjacency matrix of the graph. For each vertex ,

If we consider a complete graph on 3 nodes with self-loops as shown in Figure 2, the transition matrix,

Figure 2: Illustration of triplet organization into clusters, with mutually exclusive sets of indices (where ). The inverse problem P is used to estimate weights . We now pose a constrained optimization problem P to estimate the weights () responsible for governing the transitions of data points.

(P) represents the cluster index of data point . The optimization problem above is solved to find the optimal , which can be efficiently obtained using the adjoint method for data assimilation, as detailed in [23]. Given the cluster distribution () at time , we estimate components (Eq. 5) for the high wavelength component of errors as follows:

-

4.

State Estimation with Filtering. The goal of filtering is to estimate system states over time using noisy measurements and state observation relations, as discussed in Section 2.4. The POD surrogate model defines the evolution of state dynamics and the state-measurement relationship as follows:

(5) The terms and represent the high- and low-wavelength components of model error at time , respectively. Similarly, and characterize the high- and low-wavelength components of the state-observation error at time . denotes the projection matrix. At a particular time step, the data point at time is obtained using the ROM , and the state vector after projection . The matrices and are computed following the methodology outlined in Step 3 of the framework. The term is the solution to the linear system . For dynamical systems represented on graphs, the term is computed using an explicit numerical scheme with the sparse graph obtained from the dynamic optimization Step 1. In the case of a diffusion equation represented on graphs in Section 2.2) with Euler discretization step size , the sparse Laplacian matrix from the output of Step 1 is used instead of matrix to obtain . is the solution to the linear system . and are modelled as Gaussian with means and variances The distribution governing the low wavelength components of errors () is deliberately shaped to exhibit minimal variability, with a centered mean of . This assumption is made with the expectation that the high wavelength component will accurately capture the true nature of the error. There exist linear and non-linear filters for state estimation as described in [23], [24]. When the uncertainty in the state prediction exceeds a threshold, vectors and are updated as solutions of the linear systems defined below:

(6) (7) The computation of is performed using an explicit numerical scheme that leverages the state vector obtained from the filter at time step using the sparse graph.

5 Dynamic optimization problem formulation for dynamical systems represented on graphs

This section outlines the constraints necessary for the dynamic optimization step (Step 1 in Section 4) applied to a linear dynamical system represented on graphs (Section 2.2). Section 5.2 details the dynamic optimization process for linear diffusion systems on graphs. Section 5.3 presents the optimization framework for reaction-diffusion systems on graphs, including the corresponding constraint formulations.

Existing techniques for approximating linear dynamical systems on graphs include [27], [28], and [29]. The approach in [29] constructs spectral sparsifiers by sampling edges according to their resistance .

The probability of sampling an edge to construct a sparse approximation of graph is given by

where the edge resistance is defined as

See Algorithm 5 for further details.

|

Computing bounds on the edge weights of the sparse graph approximation , using effective resistances or local edge connectivities, is computationally intensive. To overcome this challenge, we propose Algorithm 5.1, which leverages Theorems 2 and 3 to efficiently compute upper bounds on edge weights and node degrees in the sparse graph. The following discussion introduces Bernstein’s Inequality [30], which is utilized in the derivation of Theorem 2.

Theorem 1.

Bernstein’s Inequality: Suppose are independent random variables with finite variances, and suppose that almost surely for some constant . Let Then, for every

and

Theorem 2.

Let each edge of a graph be sampled with probability . where , then , , where

, where .

Proof.

The expectation of satisfies

The second moment sum satisfies

where .

Applying Bernstein’s Inequality (Theorem 1), we obtain

Completing the square yields

Hence, it suffices to choose

∎

Theorem 2 provides upper bounds on the edge weights of the sparse graph generated by Algorithm 5. Furthermore, it is necessary to establish bounds on the node degrees in the sparse graph . To derive these bounds, we introduce Theorem 3, which utilizes the established relationship between the eigenvalues of the Laplacian matrix and the node degrees, as discussed in Section 5.1.

Notation:

Let be a simple weighted graph, i.e., a graph without self-loops or multiple edges with vertices . We define two subsets of vertices in based on their degrees. The set contains the vertices with the highest degrees, while consists of the vertices with the lowest degrees. The subgraphs and are defined as the subgraphs of induced by the vertex sets and , respectively.

| (8) | ||||

Here

5.1 Some known bounds on eigen values of Laplacian matrix

Unweighted graphs: [31](corollary 1) For simple unweighted graphs on vertices and and , we have the following relation

Weighted graphs: [31](corollary 2) Let be finite simple weighted graph on vertices and denote by a the maximal weight of an edge in , then

| (9) |

We present the following theorem, which establishes bounds on the degrees of the spectral sparsifier of which are incorporated as constraints in the dynamic optimization step of our framework (Section 5.2).

Theorem 3.

Let be an -spectral sparsifier of an undirected graph with vertices, where the eigenvalues of satisfy , with as the largest eigenvalue. The following bounds on the degrees of must hold:

for i = 2 to n-1.

Proof.

Since is an -spectral approximation of , the eigenvalues satisfy:

| (a) |

for .

From the spectral bounds on eigenvalues in Equation 9, we know:

| (b) |

Applying (a) to the upper bound in (b), we obtain:

| (c) |

Similarly, using the lower bound in (b), we have:

| (d) |

For the largest degree, we use the fact that preserves cuts in , giving:

| (e) |

Hence, the degree bounds for all nodes in are established. ∎

Let and denote the upper and lower bounds on the degree of node , respectively, as established in Theorem 3. Additionally, let represent the refined lower bound of degree , and the refined upper bound:

Algorithm 5.1 outlines the computation of the upper bound and the lower bound on the node degrees in the sparsified graph .

Here, denotes the upper bound on the maximum degree in the subgraph of induced by the vertices , while represents the lower bound on the minimum degree in the subgraph induced by . Furthermore, we define upper and lower bounds on graph as and .

Remove node from

for to do

for do

5.2 Dynamic Optimization for Linear Dynamical Systems on Graphs Using a POD-Based Surrogate Model

Real-time dynamic optimization problems are described in [32], [33], and [34]). Using snapshots of solutions from several trajectories (Section 2.2), we formulate the dynamic optimization problem in a ROM space [3], [35] using the matrix of projection and mean , where denotes the reduced dimension and (Section 2.3). The cost function (Eq. 10) in the dynamic optimization makes use of data points obtained from ROM. The dynamic optimization framework for obtaining a sparse graph in the case of diffusion () considering a single trajectory is given below:

| (10) |

| subject to | |||||

| (D3) | |||||

| (D4) | |||||

| (D5) | |||||

| (D6) | |||||

| (D7) | |||||

The term in the objective function (Eq. 10) aims to minimize the discrepancy between the states obtained using the projected vector field with multipliers and the known ROM solution obtained from the original graph. The term enforces sparsity in by penalizing nonzero elements. The optimization considers states at discrete increments of step size . The matrix is determined by the chosen collocation method (see [18]).

denotes the step size. denotes the number of collocation elements. represents the th entry of state in the -th collocation element. represents the initial condition used for generating data using ROM . is determined based on the procedure described as in [3]. The first set of constraints stems from the orthogonal collocation method on finite elements (Eq. 5.2). Non-negativity of the multipliers is imposed in constraints (Eq. D7). The constraints (Eq. D3) and (Eq. D4) enforce connectivity levels as derived in Theorem 3. Here, , where represents the unsigned incidence matrix of the graph and represents the weights of the given graph. The weight constraints and are based on Theorem 2. From the output of the dynamic optimization framework , we prune out weights , where is a user-defined parameter (). The new weight vector is denoted as . The sparse graph Laplacian replaces the standard Laplacian in the filtering step to improve the efficiency of Laplacian-vector product computations.

Several key observations can be made about the dynamic optimization problem. The objective function () can be made continuous by using the substitution , . represents the positive entries in (0 elsewhere) and represents the negative entries in (0 elsewhere). The objective function is convex, and the constraints are continuous. To solve this optimization problem, we can employ the Barrier method for constrained optimization, as discussed in [36] and [37].

5.3 Dynamic Optimization for Reaction-diffusion Dynamical Systems on Graphs Using a POD-Based Surrogate Model

In this section, we present the formulation of the dynamic optimization framework for a reaction-diffusion system. A general reaction-diffusion system is described in [21], where the activity at node at time is represented by an -dimensional variable . The temporal evolution of follows the differential equation:

denotes the reaction component, while the remaining terms represent the diffusion process. , . For brevity, we use the alternating self-dynamics Brusselator model () discussed in Section 2. When the input graph is connected, We impose a minimum connectivity constraint to limit perturbations in the second smallest eigenvalue of the graph Laplacian matrix. The intuition behind this constraint is that for a connected graph, the lowest degree will be greater than zero, making this constraint necessary. is the minimum degree imposed on the sparse graph (Eq. D10). Non-negativity of weights is also imposed on the dynamic optimization problem (Eq. D11). While [35] discusses generating sparse graphs using snapshots of data at arbitrary time points, we utilize data points at collocation time points for sparsification. We consider the following dynamic optimization problem for a reaction-diffusion system considering a single trajectory:

| subject to | |||||

| (D10) | |||||

| (D11) | |||||

The initial condition is used to obtain data points () using ROM. is determined as described in [3]. The output from the dynamic optimization problem is . Then, . Elements in less than are set to 0, and is updated. We update the solutions in the filtering step using this sparsified graph when the uncertainty value in the covariance matrix of filtering exceeds a threshold.

6 Experimental Results

In this section, we present empirical results demonstrating the effectiveness of the proposed framework in reinforcing graph-based linear dynamical systems () and nonlinear reaction-diffusion systems, specifically the chemical Brusselator model (), as described in Section 2.2. We evaluate the effectiveness of our framework by analyzing RMSE values under perturbations in initial conditions for surrogate models applied to both linear and reaction-diffusion systems on graphs. Additionally, we assess the impact of incorporating our framework on these models for both linear and non-linear dynamical systems. The influence of initial condition perturbations on neural ODE-based surrogate models, both with and without our framework discussed in Section 7, is also presented in Table 1.

| Experiment | Surrogate model | Surrogate model with Framework | ROM |

|---|---|---|---|

| RMSE | RMSE | RMSE | |

| Reaction-Diffusion with ROM surrogate model | 0.59 | 0.40 | — |

| Linear Diffusion with ROM surrogate model | 5.17 | 0.38 | — |

| Linear Diffusion with Neural ODE surrogate model | 0.48 | 0.29 | 0.65 |

Remark: In certain graph structures, empirical experiments revealed instances of particle filter divergence. Particle filter divergence is a critical issue that must be carefully addressed, as it compromises estimation accuracy and reduces the framework’s effectiveness. Several factors can contribute to this phenomenon, including suboptimal filter tuning, modeling inaccuracies, inconsistent measurement data, or system-related issues such as hardware-induced delays. Specifically, inaccurate likelihood estimations due to imprecise noise assumptions, erroneous process models, or delayed measurement updates can lead to divergence. For further examples, see [38]. Empirically, we observed that in certain graph configurations, the particle filter exhibited divergence, necessitating additional updates in the filtering step.

6.1 Linear dynamical system represented on graphs

We present the experimental results for the linear diffusion equation () on a 30-node Erdős-Rnyi random graph using the 4-point orthogonal collocation method with 20 elements. The parameters used in Algorithm 5.1 and Section 5.2 are as follows: , , , with two trajectories considered. The number of clusters is set to , while the particle filter employs 15,000 particles with . The noise terms and are assumed to be normally distributed with zero mean and variances and , where and .

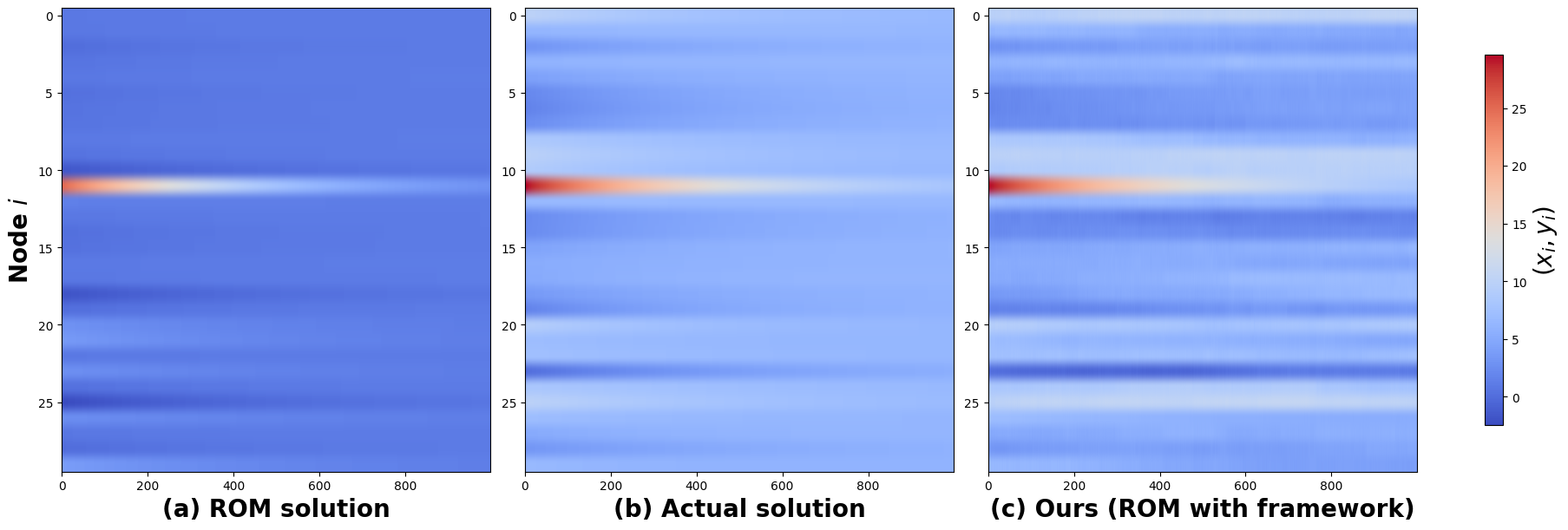

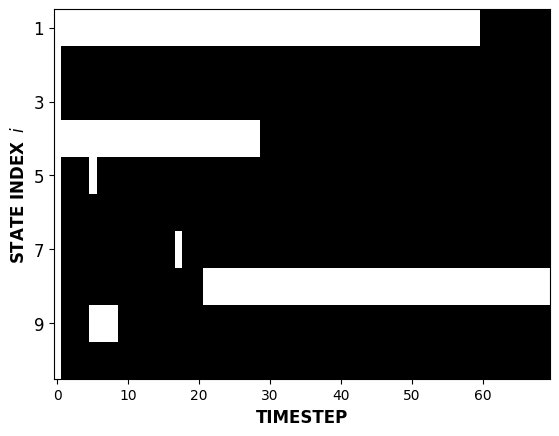

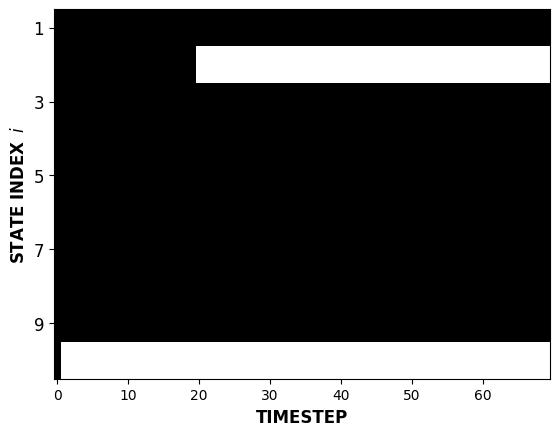

Following the dynamic optimization step, the resulting graph exhibited 31 sparse edges, a substantial reduction from the original graph’s 336 edges. During the filtering step, 193 updates were required for prediction over 1000 timesteps. Figure 3 compares the reduced order model solution, the actual solution, and the reduced-order model solution with our framework for the linear dynamical system () described in Section 2.2. Figure 3(c) shows that the ROM solution with our framework closely resembles the actual solution in Figure 3(b) than the reduced-order model solution in Figure 3(a), as discussed in Section 2.3.

6.2 Reaction-diffusion system represented on graphs

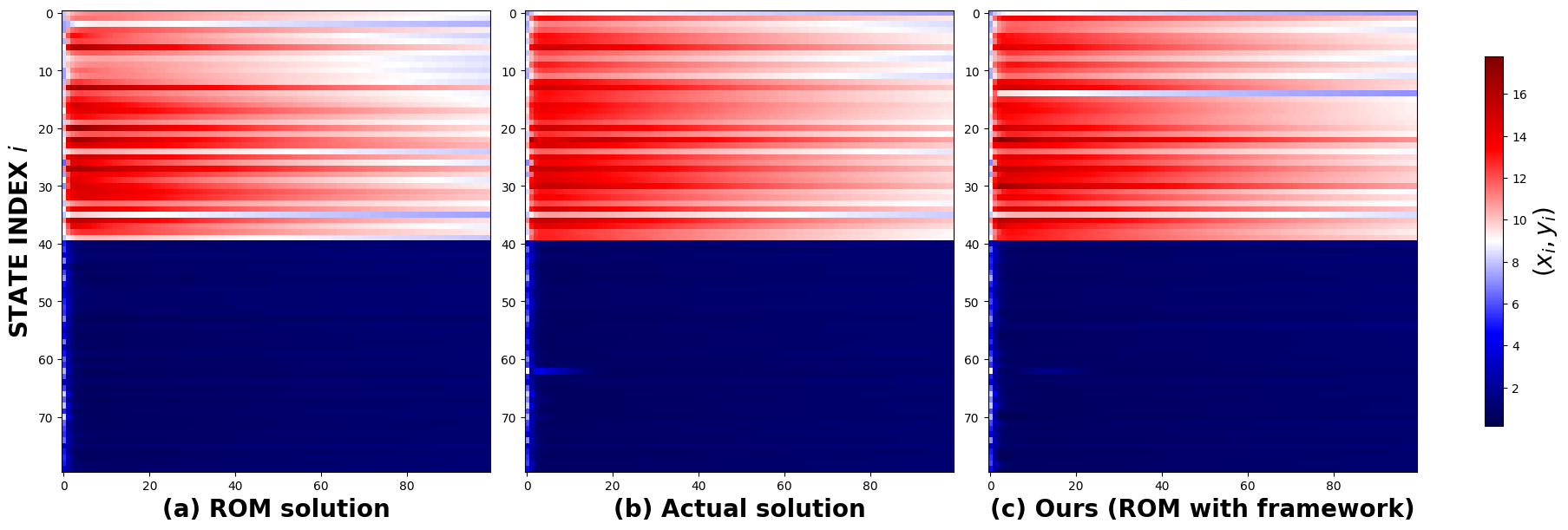

The results for the nonlinear case are based on the alternating self-dynamics Brusselator model applied to a 40-node Erdős-Rnyi random graph, using the 4-point orthogonal collocation method. The number of trajectories is set to two, and the particle filter employs 15,000 particles. The total simulation time is set to , with the number of clusters fixed at . The sparsification parameter is set to , and the minimum connectivity constraint is defined as , where denotes the minimum degree of the graph. The reduced-order dimension is given by . The noise terms and are assumed to be normally distributed with zero mean and variances and , where and . The graph obtained from the dynamic optimization step contained 286 sparse edges, a reduction from the original graph’s 336 edges. The filtering step required a total of 50 updates for predictions over 100 timesteps.

Figure 4 presents a comparison of the actual solution of the chemical Brusselator reaction-diffusion system ( in Section 2.2) for a randomly initialized condition, with both the reduced-order model (Section 2.3) and the ROM solution with our framework. The grid-based representation highlights regions marked in black, indicating areas where the absolute error of the particle filter solution is lower than that of the ROM solution.

7 Benchmarking the framework using neural ODEs

To broaden the applicability of our framework, we evaluate its effectiveness using a neural-ODE-based surrogate model, where state vectors are observed at discrete time intervals. Neural ordinary differential equations (neural ODEs) provide a powerful framework for modeling and analyzing complex dynamical systems [39, 40]. They offer a flexible approach to capturing system dynamics by integrating the principles of ordinary differential equations with neural networks. Unlike traditional neural networks that process inputs through discrete layers, neural ODEs represent system dynamics continuously using an ordinary differential equation to govern the evolution of hidden states over time. Neural ODEs learn system dynamics by learning the parameters of the differential equation using the adjoint sensitivity method ([23]). This approach allows the model to capture complex temporal dependencies in data. By leveraging the continuous-time nature of differential equations, neural ODEs provide key advantages, including the ability to model irregularly sampled time-series data and accommodate variable-length inputs.

To illustrate the framework, we consider a linear dynamical system () from Section 2.2, defined as follows:

| (11) |

The key distinction in applying our framework with the neural ODE model as the surrogate is the absence of the dynamic optimization step outlined in Section 4. Instead, an additional step is included to train the parameters of the neural ODE surrogate model, as described in Equation 13. The neural ODE solutions are treated as observations in the filtering step after projection (), where the POD method is applied for dimensionality reduction. The forward state dynamics and the state observation relationship, as applied in Step 4 of the filtering process within the framework, are given by:

| (12) |

When utilizing the neural ODE surrogate model with an Euler discretization step , the forward model is expressed as:

At iteration , the vector is updated by solving the following linear system:

The matrices and are computed as described in Step 3 of the framework (Section 4). The solution at time step is determined as:

where . The vector is initialized as . The neural network architecture is structured as follows:

The experiment involved 41 updates, with predictions performed over 100 timesteps. The number of particles in the particle filter step was set to 20,000. The noise terms and were assumed to follow normal distributions with zero mean and variances and , where and . The Laplacian matrix was chosen as the Laplacian of the complete graph , and observations were randomly sampled over the time interval to .

The cost function (Eq. 13) for estimating the parameters of the neural ODE model is given by:

| (13) |

The parameter vector , obtained by flattening and , is represented as . For experimentation, the parameters in Eq. 13 are determined using the adjoint sensitivity method with an Euler discretization scheme. The number of clusters is fixed at 40.

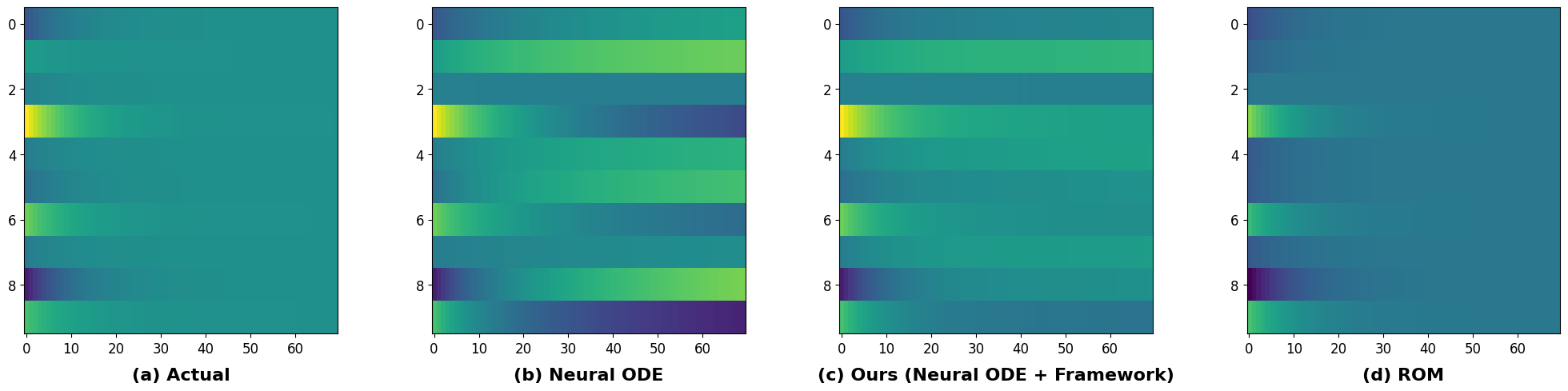

Figure 5 compares the neural-ODE solution for a random initial condition with the ROM and neural-ODE solution with our framework. The neural-ODE solution (Figure 5(b)) exhibits higher noise levels compared to the actual solution (Figure 5(a)). Figure 6 contrasts the ROM solution (Figure 5(d)) of the linear dynamical system (Equation 11) with the neural-ODE solution with our framework (Figure 5(c)). In the grid-wise comparison of solutions, the black regions in Figures 6(a) and 6(b) indicate areas where the error in the neural-ODE solution with our framework is lower than that in the neural-ODE and ROM solutions. Notably, the neural-ODE solution with our framework exhibits a greater number of black regions, suggesting a closer resemblance to the actual solution compared to the ROM and neural ODE solutions.

8 Conclusion and Discussion

In this study, we proposed a novel framework to improve the robustness of surrogate models against input perturbations, addressing a key challenge in modeling complex systems while ensuring computational efficiency. By integrating machine learning techniques with stochastic filtering, our approach has demonstrated significant improvements in surrogate model accuracy under perturbations. The experimental results presented in Section 1 provide strong validation of the framework’s effectiveness.

The versatility of our framework is evident from its application to dynamical systems represented on graphs and its extension to a general setting, where a neural-ODE-based surrogate model was employed to simulate complex physical phenomena. This not only highlights the robustness of our approach but also underscores its applicability across diverse domains, as discussed in Section 7.

Despite its strengths, our framework has certain limitations. Notably, its reliance on stochastic filtering methods introduces computational overhead and may also be susceptible to filter divergence in certain cases. While our study focuses on undirected graphs, many real-world complex systems involve directed networks, such as those governed by the complex Ginzburg-Landau equation [21]. Addressing directed graph structures presents an important avenue for future research, with potential extensions of our framework to handle these more intricate dynamical systems.

Acknowledgments

We thank Prof. S. Lakshmivarahan and Prof. Arun Tangirala for their insightful feedback, contributing to the enhancement of this work. We thank the anonymous reviewers for their insightful comments, which significantly improved the quality of the manuscript. This work was partially supported by the MATRIX grant MTR/2020/000186 of the Science and Engineering Research Board of India.

References

- [1] Dirk Brockmann and Dirk Helbing. The hidden geometry of complex, network-driven contagion phenomena. Science, 342(6164):1337–1342, 2013.

- [2] Sergei Maslov and I. Ispolatov. Propagation of large concentration changes in reversible protein-binding networks. Proceedings of the National Academy of Sciences, 104(34):13655–13660, 2007.

- [3] Muruhan Rathinam and Linda R. Petzold. A new look at proper orthogonal decomposition. SIAM Journal on Numerical Analysis, 41(5):1893–1925, 2003.

- [4] Suhan Kim and Hyunseong Shin. Data-driven multiscale finite-element method using deep neural network combined with proper orthogonal decomposition. Engineering with Computers, 40, 04 2023.

- [5] B.A. Le, Julien Yvonnet, and Qi-Chang He. Computational homogenization of nonlinear elastic materials using neural networks. International Journal for Numerical Methods in Engineering, 104(12):1061–1084, 2015.

- [6] Yanwen Huang and Yuanchang Deng. A hybrid model utilizing principal component analysis and artificial neural networks for driving drowsiness detection. Applied Sciences, 12(12), 2022.

- [7] Redouane Lguensat, Pierre Tandeo, Pierre Ailliot, Manuel Pulido, and Ronan Fablet. The analog data assimilation. Monthly Weather Review, 145(10):4093–4107, 2017.

- [8] D.C. Park and Yan Zhu. Bilinear recurrent neural network. Proceedings of 1994 IEEE International Conference on Neural Networks (ICNN’94).

- [9] D.-C. Park. A time series data prediction scheme using bilinear recurrent neural network. In 2010 International Conference on Information Science and Applications, pages 1–7, Seoul, Korea (South), 2010. IEEE.

- [10] Julien Brajard, Alberto Carrassi, Marc Bocquet, and Laurent Bertino. Combining data assimilation and machine learning to emulate a dynamical model from sparse and noisy observations: A case study with the lorenz 96 model. Journal of Computational Science, 44:101171, 2020.

- [11] Peter D. Dueben and Peter Bauer. Challenges and design choices for global weather and climate models based on machine learning. Geoscientific Model Development, 11(10):3999–4009, 2018.

- [12] Ronan Fablet, Souhaib Ouala, and Cédric Herzet. Bilinear residual neural network for the identification and forecasting of geophysical dynamics. In 2018 26th European Signal Processing Conference (EUSIPCO), pages 1477–1481, Rome, 2018. IEEE.

- [13] Zichao Long, Yiping Lu, Xianzhong Ma, and Bin Dong. PDE-net: Learning PDEs from data. In Proceedings of the 35th International Conference on Machine Learning, page 9, 2018.

- [14] Marc Bocquet, Jérémie Brajard, Alberto Carrassi, and Laurent Bertino. Data assimilation as a learning tool to infer ordinary differential equation representations of dynamical models. Nonlinear Processes in Geophysics, 26(3):143–162, 2019.

- [15] Marc Bocquet, Jérémie Brajard, Alberto Carrassi, and Laurent Bertino. Bayesian inference of chaotic dynamics by merging data assimilation, machine learning and expectation-maximization. Foundations of Data Science, 2(1):55–80, 2020.

- [16] Pavel Sakov, Jean-Michel Haussaire, and Marc Bocquet. An iterative ensemble kalman filter in the presence of additive model error. Quarterly Journal of the Royal Meteorological Society, 144(713):1297–1309, 2018.

- [17] Alban Farchi, Patrick Laloyaux, Massimo Bonavita, and Marc Bocquet. Using machine learning to correct model error in data assimilation and forecast applications. Quarterly Journal of the Royal Meteorological Society, 147(739):3067–3084, jul 2021.

- [18] John D. Hedengren, Reza Asgharzadeh Shishavan, Kody M. Powell, and Thomas F. Edgar. Nonlinear modeling, estimation and predictive control in apmonitor. Computers & Chemical Engineering, 70:133–148, 2014. Manfred Morari Special Issue.

- [19] Lorenz T. Biegler. Nonlinear Programming. Society for Industrial and Applied Mathematics, 2010.

- [20] P. T. Landsberg. The fourth law of thermodynamics. Nature, 238(5361):229–231, 1972.

- [21] Giulia Cencetti, Pau Clusella, and Duccio Fanelli. Pattern invariance for reaction-diffusion systems on complex networks. Scientific Reports, 8(1), 2018.

- [22] Chittaranjan Hens, Uzi Harush, Simi Haber, Reuven Cohen, and Baruch Barzel. Spatiotemporal signal propagation in complex networks. Nature Physics, 15(4):403–412, 2019.

- [23] John M. Lewis, Sivaramakrishnan Lakshmivarahan, and Sudarshan Kumar Dhall. Dynamic Data Assimilation: A least squares approach. Cambridge Univ. Press, 2009.

- [24] Tiancheng Li, Miodrag Bolic, and Petar M. Djuric. Resampling methods for particle filtering: Classification, implementation, and strategies. IEEE Signal Processing Magazine, 32(3):70–86, 2015.

- [25] Fan R. K. Chung. Spectral Graph Theory. American Mathematical Society, Providence, RI, 1997.

- [26] Richard L. Burden, J. Douglas Faires, and Annette M. Burden. Numerical analysis. Cengage Learning, 2016.

- [27] Ming-Jun Lai, Jiaxin Xie, and Zhiqiang Xu. Graph sparsification by universal greedy algorithms, 2021. https://doi.org/10.48550/arXiv.2007.07161.

- [28] Daniel A. Spielman and Shang-Hua Teng. Spectral sparsification of graphs. SIAM Journal on Computing, 40(4):981–1025, 2011.

- [29] Daniel A. Spielman and Nikhil Srivastava. Graph sparsification by effective resistances, 2009.

- [30] Stphane Boucheron, Gbor Lugosi, and Olivier Bousquet. Concentration inequalities. Stphane Boucheron, Gbor Lugosi, and Olivier Bousquet, 2013.

- [31] Miriam Farber and Ido Kaminer. Upper bound for the laplacian eigenvalues of a graph, 2011. https://doi.org/10.48550/arXiv.1106.0769.

- [32] Martin Grötschel, Sven Krumke, and Jörg Rambau. Online Optimization of Large Scale Systems. 01 2001.

- [33] R. Donald Bartusiak. Nlmpc: A platform for optimal control of feed- or product-flexible manufacturing. Assessment and Future Directions of Nonlinear Model Predictive Control, page 367–381.

- [34] Zoltan K. Nagy, Bernd Mahn, Rüdiger Franke, and Frank Allgöwer. Real-Time Implementation of Nonlinear Model Predictive Control of Batch Processes in an Industrial Framework, pages 465–472. Springer Berlin Heidelberg, Berlin, Heidelberg, 2007.

- [35] Abhishek Ajayakumar and Soumyendu Raha. Data assimilation for sparsification of reaction diffusion systems in a complex network, 2023. https://doi.org/10.48550/arXiv.2303.11943.

- [36] Jorge Nocedal and Stephen J. Wright. Numerical optimization. Springer, 2006.

- [37] David G. Luenberger and Yinyu Ye. Linear and nonlinear programming. Springer, 2021.

- [38] Jeroen Elfring, Elena Torta, and Rob van de Molengraft. Particle filters: A hands-on tutorial. Sensors (Basel, Switzerland), 21(2):438, 2021.

- [39] Wai Shing Fung and Nicholas J. A. Harvey. Graph sparsification by edge-connectivity and random spanning trees. CoRR, abs/1005.0265, 2010.

- [40] Hanshu Yan, Jiawei Du, Vincent Y. F. Tan, and Jiashi Feng. On robustness of neural ordinary differential equations, 2022.