Improving Global Adversarial Robustness Generalization

With Adversarially Trained GAN

Abstract

Convolutional neural networks (CNNs) have achieved beyond human-level accuracy in the image classification task and are widely deployed in real-world environments. However, CNNs show vulnerability to adversarial perturbations that are well-designed noises aiming to mislead the classification models. In order to defend against the adversarial perturbations, adversarially trained GAN (ATGAN) is proposed to improve the adversarial robustness generalization of the state-of-the-art CNNs trained by adversarial training. ATGAN incorporates adversarial training into standard GAN training procedure to remove obfuscated gradients which can lead to a false sense in defending against the adversarial perturbations and are commonly observed in existing GANs-based adversarial defense methods. Moreover, ATGAN adopts the image-to-image generator as data augmentation to increase the sample complexity needed for adversarial robustness generalization in adversarial training. Experimental results in MNIST SVHN and CIFAR-10 datasets show that the proposed method doesn’t rely on obfuscated gradients and achieves better global adversarial robustness generalization performance than the adversarially trained state-of-the-art CNNs.

<[email protected]>

1 Introduction

Deep learning [1] has achieved great success on a range of computer vision applications such as image classification [2, 3, 4, 5, 6, 7], object detection [8, 9, 10], and semantic segmentation [11, 12, 13]. However, recent researches [14, 15] demonstrate that Deep Neural Networks are vulnerable to adversarial perturbations. The adversarial perturbations are well-designed noises that can for example mislead a well-trained CNN to output incorrect class labels with high confidence. The perturbations are imperceptible to humans and can transfer across different model parameters even architectures. The examples combining the clean examples with the adversarial perturbations are so-called adversarial examples. The adversarial examples post a great threat to the Deep Learning-based security-crucial applications such as self-driving cars [16], person detection systems [17] or medical diagnosis systems.

Adversarial examples attract great attention in the deep learning community. Lots of works emerge on the generation of more powerful adversarial examples [14, 15, 18, 19, 20, 21, 22, 23, 24]. Besides, there are also large amounts of works on how to train the deep learning models to be robust against the adversarial examples [24, 15, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39]. A model’s ability to behave correctly under adversarial examples is called adversarial robustness. Adversarial robustness is denoted by the accuracy of the classification model on the adversarial examples. One of the lines of research on the adversarial defense is generative model-based adversarial defense methods [36, 37, 38, 39]. However, these methods are shown to lead to a false sense of security in defending against adversarial examples due to obfuscated gradients [40] or evaluated under weak threat models [41]. Therefore it is significant to remove the obfuscated gradients of the generative model-based adversarial defense methods and make them robust under strong adversarial attacks. Adversarial training (AT) [24, 15] is by so far the only effective adversarial defense method which doesn’t rely on the obfuscated gradients and can truly improve the adversarial robustness of the target model. Nevertheless, the CNN classifiers trained by AT still suffer from the poor robustness generalization problem especially for complicated datasets, and this robustness generalization gap is further enlarged when the perturbations larger than the value used during training [24].

Recently, Schmidt et al. [42] studied adversarially robust learning from the generalization perspective. According to their theoretical analysis, the overfitting of the classifiers trained by AT is due to the lack of training data. The sample complexity of adversarial robustness generalization is significantly larger than that of standard generalization.

A common solution to improve the standard generalization when deep learning works with limited data is to use data augmentation [43]. The commonly used data augmentation techniques are geometric transformations such as flipping, cropping, rotation, and translation which are demonstrated can improve the standard generalization accuracy of CNN classifiers on CIFAR-10, CIFAR-100, and ImageNet datasets [4, 7]. The above-mentioned data augmentation methods can only simply manipulate the original data in pixel space. The Generative Adversarial Networks (GANs) [54], due to the ability to model the data distribution and generate new training data, have already been adopted as high-level data augmentation technique and resulted in better performance classification models [44, 45]. For the robustness generalization we study in this paper, Liu et al. [46] demonstrated that the robustness of classifiers trained by AT can be improved if we have a deeper understanding of the image data distribution. And adding a generator to the AT procedure is shown to have the benefit of improving the robustness of the discriminator. Therefore, the GANs can be used as the data augmentation technique to improve the robustness generalization of the CNN classifiers trained by AT.

In order to defend against the adversarial examples, ATGAN is proposed in this paper which can simultaneously get rid of the obfuscated gradients and improve the adversarial robustness generalization performance of the CNN classifiers trained by AT. In the proposed ATGAN framework, the min-max AT procedure [24] is incorporated into the standard GANs training procedure. Experimental results show that ATGAN achieves better adversarial robustness generalization performance than the state-of-the-art CNN classifiers trained by AT. Our contributions are listed as follows:

-

A novel adversarial defense method called ATGAN is proposed with the combination of the cGANs and the AC-GANs. Moreover, the perceptual loss is adopted to increase the quality of the output of the generator.

-

AT is incorporated into the standard GANs training procedure. This combination not only makes the proposed method gets rid of obfuscated gradients but also increase the sample complexity needed by adversarial robustness generalization.

-

Extensive experiments are conducted to evaluate the proposed method on MNIST SVHN and CIFAR-10 datasets. The experimental results demonstrate that the proposed method achieves better adversarial robustness generalization performance against the norm bounded adversarial examples than the state-of-the-art CNN classifiers trained by AT.

The rest of the paper is arranged as follows: In Section 2, we review some related works about the adversarial attack and defense methods, the high sample complexity for adversarial robustness generalization, and the generative adversarial networks. In Section 3, we introduce our proposed ATGAN method. In Section 4, we list the experimental results to demonstrate the feasibility of ATGAN. In Section 5, we do some discussions about our experimental results. Finally, we give some conclusions in Section 6.

2 Related Work

2.1 Adversarial Attacks and Defenses

Since Szegedy et al. [14] first discovered the vulnerability of neural networks to the adversarial examples, researches have proposed various of adversarial attacks methods to generate adversarial examples for evaluating the worst-case robustness of the neural network models. These works generate small perturbations under norm constrains, such as norm, norm or norm. For the norm constrain, Papernot et al. [19] proposed JSMA method, Su et al. [20] proposed one pixel attack method. For the norm constrain, Carlini and Wagner [21] proposed C&W attack method, Moosavi-Dezfooli at al. [22] proposed DeepFool method. For the norm, Szegedy et al. [14] proposed L-BFGS method, Goodfellow at al. [15] proposed FGSM method, Madry et al. [24] proposed PGD method.

Many adversarial defense methods have been proposed to make the neural network models robust to the norm constrained adversarial examples. These works include modifying the input such as data compression [26] and feature squeezing [35], modifying the network such as stochastic activation pruning [47] and Deep Contractive Network [28], adding auxiliary network such as Defense-gan [37], Pixeldefend [36], and Magnet [38]. Nevertheless, these methods are demonstrated to fail to defend against the adversarial examples. AT [24] trains the neural network models on the adversarial examples in a min-max manner, which is by so far the only defense method that can improve the adversarial robustness of the neural networks.

2.2 High Sample Complexity for Adversarial Robustness Generalization

Although AT can improve the adversarial robustness of CNNs, the CNNs trained by AT still suffer from the problem of poor adversarial robustness generalization performance. Schmidt et al. [42] point out that adversarial robustness generalization requires much more labeled data than standard generalization. Based on this theoretical work, a follow-up work done by Zhai et al. [48] demonstrates that the labeled sample complexity for adversarial robustness generalization can be largely reduced if unlabeled data is used, and an adversarially robust model which generalizes well can be learned if we have plenty of unlabeled data. Another independent work done by Uesato et al. [49] also shows that an Unsupervised Adversarial Training (UAT) algorithm which uses unlabeled data together with very limited labeled data can significantly improve the adversarial robustness generalization performance achieved by purely supervised approaches. Carmon et al. [50] show that unlabeled data can fill the sample complexity gap between adversarial robustness generalization and standard generalization through a self-training algorithm. Najafi et al. [51] extent the Distributionally Robust Learning (DRL) method to handle Semi-Supervised Learning (SSL). Their proposed method demonstrates a comparable performance to that of the state-of-the-art method. Besides, two interesting works [52, 53] show that self-supervised learning can improve the adversarial robustness of the CNNs.

2.3 Generative Adversarial Networks

Generative Adversarial Networks (GANs) are an approach to generative modeling using deep learning methods such as CNNs. The idea of GANs was first introduced by Goodfellow et al. [54] in 2014. The architecture of GANs consists of a generator that aims to generate photo-realistic images from randomly selected noise vectors, and a discriminator that aims to discriminate between real images and generated images. The generator and the discriminator are trained simultaneously in a zero-sum game. Although the impressive results achieved by GANs, the training process of GANs is unstable. In [55], the authors give an architecture guideline for designing both the generator and the discriminator, which is demonstrated can result in stable GANs models. Based on these works, plenty of methods that extend GANs on the architecture or the loss function are proposed and achieve state-of-the-art results on various applications. Among these methods, the most related ones to our work are conditional GANs (cGANs) for image-to-image translation [56] and Auxiliary Classifier GANs (AC-GANs) [57]. The cGANs learn to map from conditional information and random noise vector to commonly an image , , which is different from the traditional GANs that learn a mapping from random noise vector to output image , . In [56], the authors use images as the conditional information and leverage an image-to-image network to generate synthetic images from images of other domain. The AC-GANs modify the discriminator to contain an auxiliary classifier that outputs the class label for the training data. The experimental results show that the GANs tasked with classification objective result in higher quality samples.

3 Method

ATGAN is proposed to defend against the norm bounded adversarial examples from the perspective of mapping the adversarial examples back onto the clean examples manifold. The image-to-image network is widely adopted as the generator in cGANs to translate the images from one domain to another. Thus ATGAN uses the image-to-image network generator to map the adversarial examples back onto the clean examples manifold. However, the GANs trained by the standard training procedure have obfuscated gradients in the generator, leading to a false sense on defending against the adversarial examples. AT is by so far the only adversarial defense method that doesn’t rely on the obfuscated gradients and can truly improve the adversarial robustness generalization of the CNNs. In order to remove the obfuscated gradients in GANs, ATGAN incorporates AT into the standard GANs training procedure. AT is a supervised training algorithm while the GANs is an unsupervised method, thus AT cannot be used to train the GANs directly. Inspired by AC-GANs, ATGAN adds an auxiliary decoder to the discriminator that is tasked with reconstructing class labels. During training, the discriminator distinguishes the output of the generator from the real images and classify them simultaneously. Therefore, ATGAN can be treated as a classifier, and AT can be applied to ATGAN directly without modification. Moreover, the perceptual loss is adopted to improve the perceptual quality and the sample complexity of the output of the generator, which can further improve the adversarial robustness generalization.

3.1 GAN Adversarial Training

The main idea of ATGAN is incorporating AT into the standard GANs training procedure in order to remove the obfuscated gradients in GANs. Formally, a standard classification task can be defined as finding the set of model parameters that minimize the empirical risk where and are pairs of examples and corresponding class labels sampled from the empirical data distribution and is a loss function such as the cross-entropy loss for a neural network. Although yielding classifiers with high standard generalization accuracy, empirical risk minimization (ERM) doesn’t ensure the robustness to adversarial examples. AT extends ERM through robust optimization. In AT, a threat model is specified which defines the attacks the classifiers should be robust to. During training, instead of directly feeding into the loss the samples from the distribution , AT allows the threat model to perturb the samples first. This can be formalized as the min-max optimization problem:

| (1) |

where is the set of perturbations the threat model can find, such as the union of the -balls around the clean examples . In this min-max optimization, the inner maximization problem finds an adversarial example that maximizes the loss, which is solved by projected gradient descent (PGD) [24]:

| (2) |

The outer minimization problem finds model parameters so that the loss is minimized on the adversarial examples found by the inner maximization, which can be solved by back-propagation for neural networks. AT doesn’t rely on obfuscated gradients when defending against the adversarial examples. GANs are also adopted as adversarial defense method based on the idea of mapping the adversarial examples back onto the manifold of the clean examples. However, the GANs trained by the standard training procedure have obfuscated gradients that give a false sense on the adversarial robustness. In order to remove the obfuscated gradients in GANs, making GANs truly robust against adversarial examples, ATGAN incorporates AT into the standard GANs training procedure. For ATGAN, the inner maximization problem in the min-max optimization 1 is modified as:

| (3) |

where is the image-to-image network generator of ATGAN. Before fed into the classifier, the samples are first mapped by the generator. During the inner maximization, the generator combined with the classifier, or the discriminator here, is treated as a whole classifier which the threat model aims to generate the adversarial examples against. This operation not only guarantees that ATGAN can learn the mapping from the distribution of the adversarial examples to the manifold of the clean examples but also guarantees that the obfuscated gradients in the generator can be removed.

3.2 Adversarial Robustness Generalization

In order to improve the adversarial robustness generalization performance of the CNNs trained by AT, ATGAN uses data augmentation to increase the sample complexity of the adversarial examples. The sample complexity needed by adversarial robustness generalization is higher than that needed by standard generalization. Thus the training data used to train a model with high standard generalization accuracy often cannot yield a model of similar high adversarial robustness generalization accuracy. The image-to-image network generator of ATGAN first encodes the adversarial examples into the low-dimensionality latent space then decodes the latent vectors into the clean example manifold. This operation can reduce the magnitude of the perturbations in the adversarial examples thus can reduce the power of the adversarial examples. ATGAN also adopts the perceptual loss which uses a pre-trained CNN to extract the high-level feature representations, then calculates the difference of the output of the generator and the clean examples based on these high-level feature representations:

| (4) |

where denotes the activations of the feature map of shape which are extracted from the th layer of the pre-trained CNN, denotes the Euclidean distance between feature representations. The perceptual loss can drive the output of the generator to be perceptually similar to the clean examples but not match the output of the generator and the clean examples exactly. Therefore the generator plays s role of data augmentation that can increase the sample complexity of the adversarial examples. As a result, ATGAN improves the adversarial robustness generalization performance of the CNNs trained by AT.

| MNIST | SVHN | CIFAR-10 |

| Conv(8,3,1) BN Relu }1 | Conv(64,4,2) BN LeakyRelu(0.2) }1 | Conv(128,4,2) BN LeakyRelu(0.2) }3 |

| Conv(16,3,2) BN Relu }1 | Conv(128,4,2) BN LeakyRelu(0.2) }1 | TransConv(128,4,2) BN LeakyRelu(0.2) }3 |

| Conv(32,3,2) BN Relu }1 | TransConv(128,4,2) BN LeakyRelu(0.2) }1 | Conv(3,3,1) |

| ResBlock(32)4 | TransConv(64,4,2) BN LeakyRelu(0.2) }1 | tanh |

| TransConv(16,3,2) BN Relu }1 | Conv(3,3,1) | |

| TransConv(8,3,2) BN Relu }1 | tanh | |

| Conv(3,3,1) | ||

| tanh |

3.3 ATGAN Architecture

The schematic of the architecture of ATGAN is exhibited in Fig. 1. ATGAN consists of an adversarial attacker denoted by A, a generator denoted by G, a discriminator denoted by D, and a loss network denoted by L. The adversarial attacker is the norm PGD described in section 3.1 which treats the whole network consisted of the generator and the discriminator as a classifier and attacks this classifier to generate adversarial examples in one training epoch according to formula 2, indicated by Fig. 1 (a). The generated adversarial examples and their clean examples counterparts are then used to train the generator and the discriminator during the same training epoch. The generator is a fully convolutional image-to-image network that maps the adversarial examples onto the clean examples manifold. The strided convolutions and deconvolutions are adopted to downsample and upsample the adversarial examples instead of pooling layers. Tanh nonlinearity is adopted for the output layer to bound the values of the pixels in the range . The exact architectures and hyper-parameters of the generators are different for different datasets, which are shown in Table 1. ATGAN adopts an AC-GANs-like discriminator which has an auxiliary classifier tasked with reconstructing the class labels of the inputs. The discrimination output of the discriminator produces a scalar value between 0 and 1 that indicates how likely the input image is a clean image. The classification output of the discriminator produces a -dimensional vector that indicates the discrete probability distribution of classes. The discriminator is used to discriminate the adversarial examples and the clean examples and predict their class labels simultaneously. The discrimination task can drive the generator to output images that are similar to the clean examples and the classification task can further improve the perceptual quality of the output images. The CNN used in [21], the DenseNet40 [59] and the ResNet18 [60] are adopted as discriminators respectively for MNIST SVHN and CIFAR-10 datasets. ATGAN adopts a pre-trained CNN as the loss network and the activations of the first convolutional layers of the loss network are used to calculate the perceptual loss for the feature representations extracted from early layers of the loss network tends to encourage the generator to produce images that are visually realistic. The architectures of the loss network are the same with the discriminators for MNIST SVHN and CIFAR-10 datasets, except that there is no discrimination output.

3.4 Loss Functions

ATGAN incorporates AT into standard GANs training procedure to remove obfuscated gradients and improve the adversarial robustness generalization performance of the CNN classifiers trained by AT. Thus the loss functions for both AT and GANs are extended by ATGAN.

3.4.1 Adversarial Loss

To incorporate the AT into standard GANs training procedure, the generator G and the discriminator D are first treated as an entire classifier (the discrimination output of the discriminator is ignored in this step), and the PGD is used as the adversarial attacker A to generate a set of adversarial examples on this classifier by solving problem 5:

| (5) |

where is the adversarial loss:

| (6) |

where denotes the cross-entropy, , denotes the weights and biases of the generator, denotes the weights and the biases of the discriminator, and denotes the classification output of the discriminator. The adversarial attacker finds the perturbations that maximize the adversarial loss. During this step, and are fixed. This inner maximization loop of the AT is shown in Fig. 1 (a).

3.4.2 Discriminator Loss

After generating the adversarial examples against the intermediate snapshot of the ATGAN model, the parameters of ATGAN are updated by one step on the adversarial examples and their clean examples counterpart. This includes the updating of the parameters of the discriminator and the generator. The discriminator is trained by solving problem 7:

| (7) |

where and are the classification loss and the discriminator loss:

| (8) |

| (9) |

where is the scalar discrimination output of the discriminator. The first term and the second term in the classification loss 8 indicate the losses on the clean examples and the adversarial examples. For convenience, we denote them as and .

3.4.3 Generator Loss

The generator is trained by solving problem 10:

| (10) |

where and are the generator loss and the perceptual loss:

| (11) |

| (12) |

where denotes the activations of the feature map of the th layer of the loss network of shape , denotes the Euclidean distance between feature representations.

3.4.4 Full Objective

The full objective to train ATGAN is presented as below:

| (13) |

| (14) |

| (15) |

The , and, in equation 14 and 15 are weight parameters to control the importance of different loss items. These three steps are repeated until the model converges. The training algorithm of ATGAN is described in Algorithm 1.

3.5 Differences from Similar Works

It is noted that there are several important differences between ATGAN and the previous similar works [46, 39]. The Rob-GAN [46] also incorporates AT into the standard GANs training procedure, but it just adversarially trains the discriminator, which is different from ATGAN where the AT is applied to the whole classifier consisted of the generator and the discriminator. The generator of the Rob-GAN takes a noise vector and a class label as input, but the inputs of the generator of ATGAN are adversarial examples. The APE-GAN [39] adversarially trains the GAN, but the procedure of generating the adversarial examples is not incorporated into the GANs training procedure. They generate the adversarial examples on the target classifier they aim to defend then use the generated adversarial examples to train the GAN. The discriminator of the APE-GAN doesn’t task with classification objective. And both Rob-GAN and APE-GAN don’t use the perceptual loss.

4 Experiments and Results

4.1 Datasets

We evaluate ATGAN on three standard datasets: MNIST SVHN and CIFAR-10. MNIST is a dataset of handwritten digits containing 70000 gray images in 10 classes. The training set contains 60000 samples and the testing set contains 10000 samples. SVHN is a dataset containing 10 classes of street view house numbers RGB images of size . The training set contains 73257 samples and the testing set contains 26032 samples. CIFAR-10 is a dataset containing 60000 RGB images in 10 classes. The training set contains 50000 samples and the testing set contains 10000 samples.

4.2 Threat Model

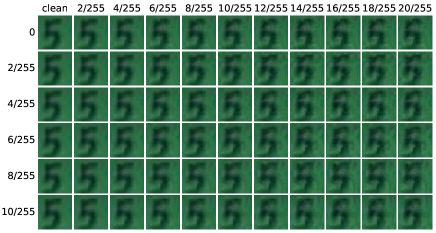

ATGANs and the baseline models are trained by AT to resist the norm bounded adversarial examples using PGD. The number of steps of PGD is set to be 40 for MNIST and 7 for SVHN and CIFAR-10. The step size of PGD is set to be 1 for MNIST and 2/255 for SVHN and CIFAR-10. To compare the global adversarial robustness generalization performance of ATGANs and the baseline models, we set a range of magnitudes of the perturbations during training and testing which are denoted by and . We choose from the range [0.1, 0.15, 0.2, 0.25, 0.3] and from the range [0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.4, 0.45, 0.5] for MNIST. We choose from the range [2/255, 4/255, 6/255, 8/255, 10/255] and from the range [2/255, 4/255, 6/255, 8/255, 10/255, 12/255, 14/255, 16/255, 18/255, 20/255] for SVHN and CIFAR-10. In addition to the standard AT procedure, we adopt another AT procedure which is of more generality: only use a fraction of adversarial examples in each mini-batch, and the rest of each mini-batch is replaced with clean examples. We denote this method by FracAT.

4.3 Baseline Models

4.4 Training Parameters

On MNIST we use Adam optimizer, learning rate of 0.001, batch size of 128, and epochs of 50 for both the generator and the discriminator. The loss weights are set as , , and . On SVHN we use batch size of 64 and epochs of 40 for both the generator and the discriminator. The Adam optimizer parameterized with learning rate of 0.0002, of 0.5, and of 0.999 is used for the generator, and the Momentum optimizer parameterized with initial learning rate of 0.1 and momentum of 0.9 is used for the discriminator, the learning rate of the discriminator decays by a factor of 10 at epoch 20 and 30. The loss weights are set as , , and . On CIFAR-10 we use batch size of 128 and steps of 80000 for both the generator and the discriminator. The Adam optimizer parameterized with learning rate of 0.0002, of 0.5, and of 0.999 is used for the generator, and the Momentum optimizer parameterized with initial learning rate of 0.1 and momentum of 0.9 is used for the discriminator, the learning rate of the discriminator decays by a factor of 10 at epoch 100 and 150. The loss weights are set as , , and .

4.5 Evaluation Metrics

The recently proposed robustness curves [61] is used to quantitatively evaluate the global robustness of different models. Accuracy is adopted instead of the error to plot the robustness curves in this paper. According to Risse et al. [62], the commonly adopted scaler perturbation threshold fails to capture important global robustness properties, thus is not sufficient to reliably and meaningfully compare the robustness of different models. The saliency maps [63] is used to qualitatively evaluate the robustness of the features learned by different models.

4.6 Results

4.6.1 MNIST Experiments

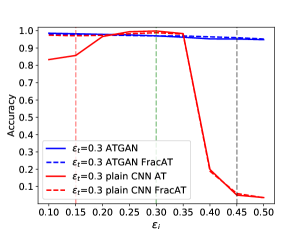

We compare the adversarial robustness generalization performance of ATGAN with the plain CNN in the white-box attacks setting on the MNIST dataset using the threat model described above. The experimental results are shown in Fig. 2. We can get three insights by analyzing Fig. 2. First, the ATGAN without AT shows better adversarial robustness generalization performance than the plain CNN without AT. This indicates the superiority of the network architecture of ATGAN for resisting the adversarial examples on the MNIST dataset compared with the plain CNN. Second, after using AT, the adversarial robustness generalization performance of ATGAN and the plain CNN is further improved. The adversarial robustness accuracy of the plain CNN is higher than the ATGAN only when the magnitude of perturbation used for testing matches that used for training. When the magnitude of perturbation used for testing doesn’t match that used for training, the adversarial robustness accuracy of the plain CNN is lower than the ATGAN and drops dramatically. By contrast, ATGAN show more robust adversarial generalization accuracy under different threat models. Finally, when using FracAT, the adversarial robustness generalization performance of ATGAN is still better than the plain CNN.

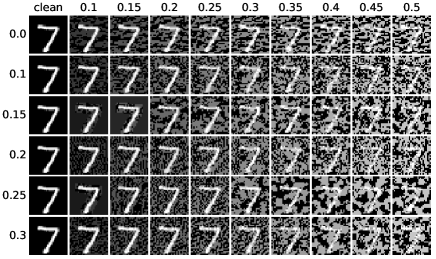

In order to qualitatively evaluate and compare the adversarial robustness generalization performance of ATGAN and the plain CNN on the MNIST dataset, we compute the saliency maps of the clean examples and the adversarial examples, which highlight the pixels that matter most on the decision-making of the classification models. The results are exhibited in Fig. 5. First, it is shown by the top left and the bottom left figures that the magnitude of the perturbation in the adversarial examples generated against ATGAN is much lower than that in the adversarial examples generated against the plain CNN. Second, for both ATGAN and the plain CNN that are trained by the standard procedure, the saliency maps don’t exhibit discriminative features. After AT, the saliency maps exhibit more discriminative features, shown by the top right and bottom right features. Third, the pixels that matter most on the decision-making of the plain CNN trained by AT distribute in the background. As the strength of attacks increases, the discriminative features in the saliency maps become less discriminative. But for ATGAN, the most important pixels distribute in the foreground. As the strength of attacks increases, the discriminative features in the saliency maps stay discriminative. This indicated that ATGAN can learn image features that are more discriminative and robust than the plain CNN does on the MNIST dataset.

4.6.2 SVHN Experiments

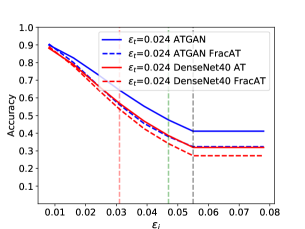

We compare the adversarial robustness generalization performance of ATGAN and DenseNet40 on the SVHN dataset under white-box attack. The experimental results are exhibited in Fig. 3. We can obtain four insights by analyzing Fig. 3. First, the adversarial robustness generalization performance of ATGAN is better than DenseNet40 when trained with standard procedure. This indicates the superiority of the network architecture of ATGAN for resisting the adversarial examples on the SVHN dataset compared with DenseNet40. Second, when using AT, the adversarial robustness generalization performance of both ATGAN and DenseNet40 are improved and the adversarial robustness generalization performance of ATGAN is better than DenseNet40 for all . Third, for both ATGAN and DenseNet40, the adversarial robustness generalization performance increases as increases. Finally, models trained with FracAT show different global adversarial robustness generalization performance rank for different , when , , and , the adversarial robustness generalization accuracies of ATGAN are higher than DenseNet40, when and , the robustness curves of ATGAN and DenseNet40 intersect.

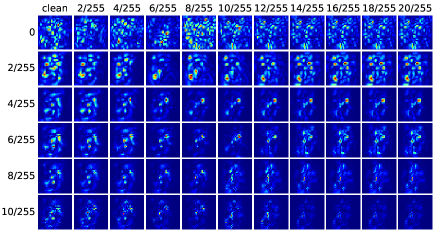

In order to qualitatively evaluate and compare the adversarial robustness generalization performance of ATGAN and DenseNet40 on the SVHN dataset, we compute the saliency maps of the clean examples and the adversarial examples. The results are exhibited in Fig. 6. First, it is shown by the top left and the bottom left figures that the semantic information in the adversarial examples generated against DenseNet40 changes from ’5’ to ’6’ as the strength of attack increases. But the semantic information in the adversarial examples generated against ATGAN stays unchanged. Second, the pixels that matter most on the decision-making of ATGAN trained by standard procedure exhibit more concentrated patterns in the saliency maps compared to DenseNet40, shown by the first rows in the top right and bottom right figures. Third, the foreground is more important to the decision-making for ATGAN compared to DenseNet40, which is indicated by the higher contrast between the foreground and background of the saliency maps.

| 0.2 | 0.3 | |||||||

| A | AFracAT | pCNN | PFracAT | A | AFracAT | pCNN | PFracAT | |

| 0.15 | 0.981 | 0.980 | 0.999 | 0.990 | 0.983 | 0.978 | 0.857 | 0.971 |

| 0.30 | 0.959 | 0.966 | 0.827 | 0.807 | 0.970 | 0.970 | 0.999 | 0.989 |

| 0.45 | 0.952 | 0.948 | 0.151 | 0.193 | 0.952 | 0.960 | 0.050 | 0.059 |

| 6/255 | 8/255 | |||||||

| A | AFracAT | D | DFracAT | A | AFracAT | D | DFracAT | |

| 8/255 | 0.634 | 0.561 | 0.570 | 0.536 | 0.680 | 0.620 | 0.579 | 0.590 |

| 12/255 | 0.454 | 0.380 | 0.386 | 0.341 | 0.518 | 0.421 | 0.403 | 0.452 |

| 14/255 | 0.387 | 0.323 | 0.319 | 0.272 | 0.454 | 0.345 | 0.338 | 0.410 |

| 2/255 | 6/255 | |||||||

| A | AFracAT | R | RFracAT | A | AFracAT | R | RFracAT | |

| 8/255 | 0.678 | 0.667 | 0.604 | 0.642 | 0.592 | 0.583 | 0.604 | 0.595 |

| 12/255 | 0.656 | 0.632 | 0.558 | 0.578 | 0.534 | 0.544 | 0.517 | 0.553 |

| 14/255 | 0.646 | 0.627 | 0.544 | 0.563 | 0.514 | 0.530 | 0.493 | 0.536 |

4.6.3 CIFAR-10 Experiments

We also compare the adversarial robustness generalization performance of ATGAN and ResNet18 on the CIFAR-10 dataset under white-box attacks. The experimental results are shown in Fig. 4. We can get four insights by analyzing Fig. 4. First, when trained using standard procedure, the adversarial robustness generalization performance of ATGAN is better than ResNet18 by a large margin. This indicates the superiority of the network architecture of ATGAN for resisting the adversarial examples on CIFAR-10 compared with ResNet18. Second, when trained using AT, the adversarial robustness generalization performance of both ATGAN and ResNet18 improves. For , the adversarial robustness generalization accuracies of ATGAN are higher than ResNet18 for all . For , the adversarial robustness generalization accuracies of ATGAN are lower than ResNet18 for all . For other , the robustness curves of ATGAN and ResNet18 intersect. This indicates a trade-off between ATGAN and ResNet18 in terms of adversarial robustness generalization performance on CIFAR-10. Third, for both ATGAN and ResNet18, the adversarial robustness generalization performance decreases as increases. Finally, a similar trade-off is also observed on the models trained with FracAT.

In order to qualitatively evaluate and compare the adversarial robustness generalization performance of ATGAN and ResNet18 on the CIFAR-10 dataset, we compute the saliency maps of the clean examples and the adversarial examples. The results are exhibited in Fig. 7. For the more complicated dataset, the perturbations in the adversarial examples are imperceptible to human eyes. But the saliency maps exhibit different features for different examples and different models. First, both ATGAN and ResNet18 learn more discriminative features after AT. Second, similar to MNIST and SVHN datasets, the foregrounds matter more on the decision-making of ATGAN compared to ResNet18.

4.6.4 Obfuscated Gradients Test

The evaluation of adversarial defense methods should be careful because obfuscated gradients are a common occurrence which leads to a false sense of security in defending against adversarial examples. Following the advise of Athalye et al. [40], we conduct two experiments to test whether ATGAN rely on obfuscated gradients or not. We attack ATGAN with 1000-step PGD with for MNIST and for SVHN and CIFAR-10. And we attack ATGAN with 100-step PGD with . Without loss of generality, we choose the ATGAN trained with for MNIST and the ATGAN trained with for SVHN and CIFAR-10. The experimental results are exhibited in Table 5. ATGAN achieves similar robust accuracy on PGD-1K compared to PGD-40 for MNSIT and PGD-7 for SVHN and CIFAR-10. And the robust accuracy becomes zero on unconstrained PGD-100 attack. This indicates that the adversarial robustness of ATGAN is not a result of obfuscated gradients.

| Dataset | PGD | PGD-1k | |

| MNIST | 0.970 | 0.970 | 0.005 |

| SVHN | 0.680 | 0.642 | 0.000 |

| CIFAR-10 | 0.588 | 0.560 | 0.007 |

5 Discussions

Local vs Global Perspective of Adversarial Robustness On the comparison of the adversarial robustness of different models, the most common method is the point-wise measure: pick up some perturbation threshold then compare the robust accuracy of different models based on the perturbation threshold. And for AT, most recent publications only use a single perturbation threshold during training. These methods have the problem of reflecting global properties concerning the adversarial robustness of different models. Our experimental results reflect this notion. The vertical dashed lines plotted in Figure 2, 3, and 4 correspond to different . We take the adversarial accuracies at the intersection points of the adversarial curves and the vertical dashed lines and compared them in Table 2, 3, and 4. According to Table 2, 3, and 4, the models trained by AT with different perturbation thresholds exhibit different adversarial robustness when evaluated under the same point-wise measure. And the models trained by AT with the same perturbation threshold exhibit different adversarial robustness under different point-wise measures. This observation indicates that the point-wise measure can only provide a local perspective of adversarial robustness. To comprehensively compare the adversarial robustness of different models and get a global perspective, the robustness curves should be leveraged as a tool.

Superiority of ATGAN in Architecture The experimental results exhibited in Section 4.8 show that the magnitude of perturbations in the adversarial examples generated against ATGAN is much lower than that in the adversarial examples generated against the baseline models, especially for MNIST and SVHN datasets. This is a qualitative performance of the superiority of adversarial robustness generalization of ATGAN in architecture. And this benefit comes from combining the AT with the GANs training procedure. During the training of the GANs, the discriminator loss and the perceptual loss encourage the distribution of the output of the generator to be similar to the clean examples. This objective has not only the benefit of denoising but also the benefit of encouraging the AT to generate adversarial examples with a lower magnitude of perturbations, which restrict the power of the adversarial examples. On the other hand, the discriminator loss and the perceptual loss don’t drive the output of the generator to match the clean examples exactly, making the generator play the role of data augmentation that increases the sample complexity of the adversarial examples, which also improve the adversarial robustness generalization.

Specificity of Adversarial Robustness in terms of Datasets By analyzing the robustness curves of different datasets, we find that the impact of and on the adversarial robustness generalization performance of ATGAN has unique patterns in terms of different datasets. For the MNIST dataset, ATGAN trained by AT with different exhibit comparable adversarial robustness generalization performance, therefore we don’t need to pay too much attention to the choice of when adversarially training ATGAN on MNIST. For the SVHN dataset, the adversarial robustness generalization performance of ATGAN increases as increases, this indicates that we need to choose a high value of when adversarially training the ATGAN on SVHN. And for CIFAR-10, different from MNIST and SVHN, the adversarial robustness generalization performance of ATGAN decreases as increases, this indicates that we need to choose a low value of when adversarially training ATGAN. By the discussion above, we can get the notion that the distribution of the dataset has a significantly impact on the configuration of parameters when adversarially training ATGAN. So we need to leverage different configurations when applying ATGAN to different datasets.

6 Conclusions

In order to defend against adversarial examples, this paper proposes ATGAN that incorporates AT into standard GANs training procedure to gain robustness against norm constrained perturbations. Experimental results demonstrate that ATGAN not only outperforms the state-of-the-art CNN classifiers trained by AT in terms of adversarial robustness generalization performance but also overcomes the obfuscated gradients problems of existing generative model-based adversarial defense methods. Moreover, ATGAN can learn more discriminative and robust features than the baseline models, which can explain the superiority of ATGAN in adversarial robustness generalization performance.

References

- [1] LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. nature 521(7553), 436-444 (2015). https://doi.org/10.1038/nature14539

- [2] LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proceedings of the IEEE 86(11), 2278-2324 (1998). https://doi.org/10.1109/5.726791

- [3] Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. Communications of the ACM 60(6), 84-90 (2017). https://doi.org/10.1145/3065386

- [4] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556v6 (2015).

- [5] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2015, pp. 1-9. https://doi.org/10.1109/cvpr.2015.7298594

- [6] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2016, pp. 770-778. https://doi.org/10.1109/cvpr.2016.90

- [7] Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2017, pp. 4700-4708. https://doi.org/10.1109/cvpr.2017.243

- [8] Girshick, R.: Fast r-cnn. In: Proceedings of the IEEE international conference on computer vision 2015, pp. 1440-1448. https://doi.org/10.1109/iccv.2015.169

- [9] Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE transactions on pattern analysis and machine intelligence 39(6), 1137-1149 (2016). https://doi.org/10.1109/tpami.2016.2577031

- [10] Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: Unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2016, pp. 779-788. https://doi.org/10.1109/cvpr.2016.91

- [11] Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2015, pp. 3431-3440. https://doi.org/10.1109/cvpr.2015.7298965

- [12] Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical image computing and computer-assisted intervention 2015, pp. 234-241. Springer. https://doi.org/10.1007/9783319245744_28

- [13] Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., Yuille, A.L.: Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE transactions on pattern analysis and machine intelligence 40(4), 834-848 (2017). https://doi.org/10.1109/tpami.2017.2699184

- [14] Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I., Fergus, R.: Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199v4 (2014).

- [15] Goodfellow, I.J., Shlens, J., Szegedy, C.: Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572v3 (2015).

- [16] Eykholt, K., Evtimov, I., Fernandes, E., Li, B., Rahmati, A., Xiao, C., Prakash, A., Kohno, T., Song, D.: Robust physical-world attacks on deep learning visual classification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, pp. 1625-1634. https://doi.org/10.1109/cvpr.2018.00175

- [17] Xu, K., Zhang, G., Liu, S., Fan, Q., Sun, M., Chen, H., Chen, P.-Y., Wang, Y., Lin, X.: Adversarial t-shirt! evading person detectors in a physical world. In: European Conference on Computer Vision 2020, pp. 665-681. Springer. https://doi.org/10.1007/9783030585587_39

- [18] Kurakin, A., Goodfellow, I., Bengio, S.: Adversarial examples in the physical world. arXiv preprint arXiv:1607.02533v4 (2017).

- [19] Papernot, N., McDaniel, P., Jha, S., Fredrikson, M., Celik, Z.B., Swami, A.: The limitations of deep learning in adversarial settings. In: 2016 IEEE European symposium on security and privacy (EuroS&P) 2016, pp. 372-387. IEEE. https://doi.org/10.1109/eurosp.2016.36

- [20] Su, J., Vargas, D.V., Sakurai, K.: One pixel attack for fooling deep neural networks. IEEE Transactions on Evolutionary Computation 23(5), 828-841 (2019). https://doi.org/10.1109/tevc.2019.2890858

- [21] Carlini, N., Wagner, D.: Towards evaluating the robustness of neural networks. In: 2017 IEEE Symposium on Security and Privacy (SP) 2017, pp. 39-57. IEEE. https://doi.org/10.1109/sp.2017.49

- [22] Moosavi-Dezfooli, S.-M., Fawzi, A., Frossard, P.: Deepfool: a simple and accurate method to fool deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2016, pp. 2574-2582. https://doi.org/10.1109/cvpr.2016.282

- [23] Chen, P.-Y., Sharma, Y., Zhang, H., Yi, J., Hsieh, C.-J.: Ead: elastic-net attacks to deep neural networks via adversarial examples. In: Proceedings of the AAAI Conference on Artificial Intelligence 2018, vol. 1.

- [24] Madry, A., Makelov, A., Schmidt, L., Tsipras, D., Vladu, A.: Towards Deep Learning Models Resistant to Adversarial Attacks. In: International Conference on Learning Representations 2018.

- [25] Zheng, S., Song, Y., Leung, T., Goodfellow, I.: Improving the robustness of deep neural networks via stability training. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2016, pp. 4480-4488. https://doi.org/10.1109/cvpr.2016.485

- [26] Dziugaite, G.K., Ghahramani, Z., Roy, D.M.: A study of the effect of jpg compression on adversarial images. arXiv preprint arXiv:1608.00853v1 (2016).

- [27] Wang, Q., Guo, W., Zhang, K., Ororbia II, A.G., Xing, X., Liu, X., Giles, C.L.: Learning adversary-resistant deep neural networks. arXiv preprint arXiv:1612.01401v2 (2017).

- [28] Gu, S., Rigazio, L.: Towards deep neural network architectures robust to adversarial examples. arXiv preprint arXiv:1412.5068v4 (2015).

- [29] Ross, A., Doshi-Velez, F.: Improving the adversarial robustness and interpretability of deep neural networks by regularizing their input gradients. In: Proceedings of the AAAI Conference on Artificial Intelligence 2018, vol. 1.

- [30] Papernot, N., McDaniel, P., Wu, X., Jha, S., Swami, A.: Distillation as a defense to adversarial perturbations against deep neural networks. In: 2016 IEEE Symposium on Security and Privacy (SP) 2016, pp. 582-597. IEEE. https://doi.org/10.1109/sp.2016.41

- [31] Cisse, M., Adi, Y., Neverova, N., Keshet, J.: Houdini: Fooling deep structured prediction models. arXiv preprint arXiv:1707.05373v1 (2017).

- [32] Gao, J., Wang, B., Lin, Z., Xu, W., Qi, Y.: Deepcloak: Masking deep neural network models for robustness against adversarial samples. arXiv preprint arXiv:1702.06763v8 (2017).

- [33] Akhtar, N., Liu, J., Mian, A.: Defense against universal adversarial perturbations. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, pp. 3389-3398. https://doi.org/10.1109/cvpr.2018.00357

- [34] Lee, H., Han, S., Lee, J.: Generative adversarial trainer: Defense to adversarial perturbations with gan. arXiv preprint arXiv:1705.03387v2 (2017).

- [35] Xu, W., Evans, D., Qi, Y.: Feature squeezing: Detecting adversarial examples in deep neural networks. arXiv preprint arXiv:1704.01155v2 (2017).

- [36] Song, Y., Kim, T., Nowozin, S., Ermon, S., Kushman, N.: Pixeldefend: Leveraging generative models to understand and defend against adversarial examples. arXiv preprint arXiv:1710.10766v3 (2018).

- [37] Samangouei, P., Kabkab, M., Chellappa, R.: Defense-gan: Protecting classifiers against adversarial attacks using generative models. arXiv preprint arXiv:1805.06605v2 (2018).

- [38] Meng, D., Chen, H.: Magnet: a two-pronged defense against adversarial examples. In: Proceedings of the 2017 ACM SIGSAC conference on computer and communications security 2017, pp. 135-147. https://doi.org/10.1145/3133956.3134057

- [39] Jin, G., Shen, S., Zhang, D., Dai, F., Zhang, Y.: Ape-gan: Adversarial perturbation elimination with gan. In: ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2019, pp. 3842-3846. IEEE. https://doi.org/10.1109/icassp.2019.8683044

- [40] Athalye, A., Carlini, N., Wagner, D.: Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. In: International Conference on Machine Learning 2018, pp. 274-283. PMLR.

- [41] Carlini, N., Wagner, D.: Magnet and" efficient defenses against adversarial attacks" are not robust to adversarial examples. arXiv preprint arXiv:1711.08478v1 (2017).

- [42] Schmidt, L., Santurkar, S., Tsipras, D., Talwar, K., Madry, A.: Adversarially robust generalization requires more data. arXiv preprint arXiv:1804.11285v2 (2018).

- [43] Shorten, C., Khoshgoftaar, T.M.: A survey on image data augmentation for deep learning. Journal of Big Data 6(1), 60 (2019). ttps://doi.org/10.1186/s4053701901970

- [44] Frid-Adar, M., Diamant, I., Klang, E., Amitai, M., Goldberger, J., Greenspan, H.: GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 321, 321-331 (2018). https://doi.org/10.1016/j.neucom.2018.09.013

- [45] Zhu, X., Liu, Y., Li, J., Wan, T., Qin, Z.: Emotion classification with data augmentation using generative adversarial networks. In: Pacific-Asia conference on knowledge discovery and data mining 2018, pp. 349-360. Springer. https://doi.org/10.1007/9783319930404_28

- [46] Liu, X., Hsieh, C.-J.: Rob-gan: Generator, discriminator, and adversarial attacker. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, pp. 11234-11243. https://doi.org/10.1109/cvpr.2019.01149

- [47] Dhillon, G.S., Azizzadenesheli, K., Lipton, Z.C., Bernstein, J., Kossaifi, J., Khanna, A., Anandkumar, A.: Stochastic activation pruning for robust adversarial defense. arXiv preprint arXiv:1803.01442v1 (2018).

- [48] Zhai, R., Cai, T., He, D., Dan, C., He, K., Hopcroft, J., Wang, L.: Adversarially robust generalization just requires more unlabeled data. arXiv preprint arXiv:1906.00555v2 (2019).

- [49] Uesato, J., Alayrac, J.-B., Huang, P.-S., Stanforth, R., Fawzi, A., Kohli, P.: Are labels required for improving adversarial robustness? arXiv preprint arXiv:1905.13725v4 (2019).

- [50] Carmon, Y., Raghunathan, A., Schmidt, L., Duchi, J.C., Liang, P.S.: Unlabeled data improves adversarial robustness. In: Advances in Neural Information Processing Systems 2019, pp. 11192-11203.

- [51] Najafi, A., Maeda, S.-i., Koyama, M., Miyato, T.: Robustness to adversarial perturbations in learning from incomplete data. In: Advances in Neural Information Processing Systems 2019, pp. 5541-5551.

- [52] Hendrycks, D., Mazeika, M., Kadavath, S., Song, D.: Using self-supervised learning can improve model robustness and uncertainty. arXiv preprint arXiv:1906.12340v2 (2019).

- [53] Chen, T., Liu, S., Chang, S., Cheng, Y., Amini, L., Wang, Z.: Adversarial robustness: From self-supervised pre-training to fine-tuning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, pp. 699-708. https://doi.org/10.1109/cvpr42600.2020.00078

- [54] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: Advances in neural information processing systems 2014, pp. 2672-2680.

- [55] Radford, A., Metz, L., Chintala, S.: Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434v2 (2016).

- [56] Isola, P., Zhu, J.-Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2017, pp. 1125-1134. https://doi.org/10.1109/cvpr.2017.632

- [57] Odena, A., Olah, C., Shlens, J.: Conditional image synthesis with auxiliary classifier gans. In: International conference on machine learning 2017, pp. 2642-2651. PMLR

- [58] Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: European conference on computer vision 2016, pp. 694-711. Springer. https://doi.org/10.1007/9783319464756_43

- [59] Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2017, pp. 4700-4708. https://doi.org/10.1109/cvpr.2017.243

- [60] He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. In: European conference on computer vision 2016, pp. 630-645. Springer. https://doi.org/10.1007/9783319464930_38

- [61] Göpfert, C., Göpfert, J.P., Hammer, B.: Adversarial Robustness Curves. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases 2019, pp. 172-179. Springer. https://doi.org/10.1007/9783030438234_15

- [62] Risse, N., Göpfert, C., Göpfert, J.P.: How to compare adversarial robustness of classifiers from a global perspective. arXiv preprint arXiv:2004.10882v2 (2020).

- [63] Simonyan, K., Vedaldi, A., Zisserman, A.: Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034v2 (2014).