Improving Generalization in Meta-RL with Imaginary Tasks from Latent Dynamics Mixture

Abstract

The generalization ability of most meta-reinforcement learning (meta-RL) methods is largely limited to test tasks that are sampled from the same distribution used to sample training tasks. To overcome the limitation, we propose Latent Dynamics Mixture (LDM) that trains a reinforcement learning agent with imaginary tasks generated from mixtures of learned latent dynamics. By training a policy on mixture tasks along with original training tasks, LDM allows the agent to prepare for unseen test tasks during training and prevents the agent from overfitting the training tasks. LDM significantly outperforms standard meta-RL methods in test returns on the gridworld navigation and MuJoCo tasks where we strictly separate the training task distribution and the test task distribution.

1 Introduction

Overfitting and lack of generalization ability have been raised as the most critical problems of deep reinforcement learning (RL) [5, 8, 30, 34, 38, 47, 52]. Numbers of meta-reinforcement learning (meta-RL) methods have proposed solutions to the problems by meta-training a policy that easily adapts to unseen but similar tasks. Meta-RL trains an agent in multiple sample tasks to construct an inductive bias over the shared structure across tasks. Most meta-RL works evaluate their agents on test tasks that are sampled from the same distribution used to sample training tasks. Therefore, the vulnerability of meta-RL to test-time distribution shift is hardly revealed [12, 26, 29, 30].

One major category of meta-RL is gradient-based meta-RL that learns an initialization of a model such that few steps of policy gradient are sufficient to attain good performance in a new task [9, 36, 39, 40, 55]. Most of these methods require many test-time rollouts for adaptation that may be costly in real environments. Moreover, the networks are composed of feedforward networks that make online adaptation within a rollout difficult.

Another major category of meta-RL is context-based meta-RL that tries to learn the tasks’ structures by utilizing recurrent or memory-augmented models [6, 13, 23, 25, 32, 35, 49, 56]. A context-based meta-RL agent encodes its collected experience into a context. The policy conditioned on the context is trained to maximize the return. These methods have difficulties generalizing to unseen out-of-distribution (OOD) tasks mainly because of two reasons. (1) The process of encoding unseen task dynamics into a context is not well generalized. (2) Even if the unseen dynamics is well encoded, the policy that has never been trained conditioned on the unseen context cannot interpret the context to output optimal actions.

We propose Latent Dynamics Mixture (LDM), a novel meta-RL method that overcomes the aforementioned limitations and generalizes to strictly unseen test tasks without any additional test-time updates. LDM is based on variational Bayes-adaptive meta-RL that meta-learns approximate inference on a latent belief distribution over multiple reward and transition dynamics [56]. We generate imaginary tasks using mixtures of training tasks’ meta-learned latent beliefs. By providing the agent with imaginary tasks during training, the agent can train its context encoder and policy given the context for unseen tasks that may appear during testing. Since LDM prepares for the test during training, it does not require additional gradient adaptation during testing.

For example, let there be four types of training tasks, each of which must move east, north, west, and south. By mixing the two tasks of moving east and north, we may create a new task of moving northeast. By mixing the training tasks in different weights, we may create tasks with goals in any direction.

We evaluate LDM and other meta-RL methods on the gridworld navigation task and MuJoCo meta-RL tasks, where we completely separate the distributions of training tasks and test tasks. We show that LDM, without any prior knowledge on the distribution of test tasks during training, achieves superior test returns compared to other meta-RL methods.

2 Problem Setup

Our work is motivated by the meta-learning setting of variBAD [56], therefore we follow most of the problem setup and notations except for a key difference that the test and training task distributions are strictly disjoint in our setup. A Markov decision process (MDP) consists of a set of states , a set of actions , a reward function , a transition function , an initial state distribution , a discount factor and a time horizon .

During meta-training, a task (or a batch of tasks) is sampled following over the set of MDPs at every iteration. Each MDP has individual reward function (e.g., goal location) and transition function (e.g., amount of friction), while sharing some general structures. We assume that the agent does not have access to the task index , which determines the MDP. At meta-test, standard meta-RL methods evaluate agents on tasks sampled from the same distribution that is used to sample the training tasks. To evaluate the generalization ability of agents in environments unseen during training, we split into two strictly disjoint training and test sets of MDPs, i.e., and . The agent does not have any prior information about and cannot interact in during training.

Since the MDP is initially unknown, the best the agent can do is to update its belief about the environment according to its experience . According to the Bayesian RL formulation, the agent’s belief about the reward and transition dynamics at timestep can be formalized as a posterior over the MDP given the agent’s trajectory, . By augmenting the belief to the state, a Bayes-Adaptive MDP (BAMDP) can be constructed [7]. The agent’s goal in a BAMDP is to maximize the expected return while exploring the environment by minimizing the uncertainty about the initially unknown MDP.

The inference and posterior update problem in a BAMDP can be solved by combining meta-learning and approximate variational inference [56]. An inference model encodes the experience into a low-dimensional stochastic latent variable to represent the posterior belief over the MDPs.111We use the terms context, latent belief, and latent (dynamics) model interchangeably to denote . The reward and transition dynamics can be formulated as shared functions across MDPs: and . Then the problem of computing the posterior becomes inferring the posterior over . By conditioning the policy on the posterior , an approximately Bayes-optimal policy can be obtained.

Refer to Figure 1 for the gridworld navigation example that is the same as the example used in variBAD [56] except for the increased number of cells and that the task set is divided into disjoint and . A Bayes-optimal agent for a task in first assigns a uniform prior to the goal states of (Figure 1a) and then explores these states until it discovers a goal state as the dashed path in Figure 1a. If this agent, trained to solve the tasks in only, is put in to solve a task in without any prior knowledge (Figure 1b), the best policy an agent can take first is to maintain its initial belief learned in and explore the goal states of . Once the agent realizes that there are no goal states in , it could start exploring the states that are not visited (i.e., ), and discover an unseen goal state in . However, it is unlikely that the agent trained only in will encode its experience into beliefs for unseen tasks accurately and explore the goal states of efficiently conditioned on the unseen context without any prior knowledge or test-time adaptation.

3 Latent Dynamics Mixture

Our work aims to train an agent that prepares for unseen test tasks during training as in Figure 1b. We provide the agent during training with imaginary tasks created from mixtures of training tasks’ latent beliefs. By training the agent to solve the imaginary tasks, the agent learns to encode unseen dynamics and to produce optimal policy given the beliefs for tasks not only in but also for more general tasks that may appear during testing.

Refer to Figure 2 for an overview of the entire process. We train normal workers and a mixture worker in parallel. For the convenience of explanation, we first focus on the case with only one mixture worker. At the beginning of every iteration, we sample MDPs from and assign each MDP to each normal worker . All normal and mixture workers share a single policy network and a single latent dynamics network. Normal workers train the shared policy network and latent dynamics network using true rewards from the sampled MDPs. A mixture worker trains the policy network with imaginary rewards from the learned decoder’s output given mixture beliefs.

3.1 Policy Network

Any type of recurrent network that can encode the past trajectory into a belief state is sufficient for the policy network. We use an [6] type of policy network (Figure 2). Each normal worker trains a recurrent Encoder-p (parameterized by ) and a feedforward policy network (parameterized by ) to maximize the return for its assigned MDP . A mixture worker trains the same policy network to maximize the return in an imaginary task where the imaginary reward is from the decoder given the mixture model . Any online RL algorithm can be used to train the policy network. We use A2C for the gridworld and PPO [37] for MuJoCo tasks to optimize and end-to-end.

3.2 Latent Dynamics Network

We use the same network structure and training methods of VAE introduced in variBAD [56] for our latent dynamics network (Figure 2). The only difference is that the policy network and the latent dynamics network do not share an encoder. Therefore Encoder-v (parameterized by ) of the latent dynamics network does not need to output the context necessary for the policy but only needs to encode the MDP dynamics into a low-dimensional stochastic latent embedding . The latent dynamics model changes over time as the agent explores an MDP (denoted as ), but converges as the agent collects sufficient information to infer the dynamics of the current MDP. The latent dynamics network is not involved in the action selection of workers. We store trajectories from in a buffer and use samples from the buffer to train the latent dynamics network offline. Each normal worker trains the latent dynamics network to decode the entire trajectory, including the future, to allow inference about unseen future transitions. In this work, we focus on MDPs where only reward dynamics varies [9, 10, 12, 13, 17, 26] and only train the reward decoder (parameterized by ) as in [56]. The parameters and are optimized end-to-end to maximize the ELBO [20] using a reparameterization trick.

3.3 Imaginary Task Generation from Latent Dynamics Mixture

While training the policy network of the normal workers in parallel, we generate an imaginary latent model as a randomly weighted sum of the latent models of the normal workers.

| (1) |

’s are random mixture weights multiplied to each latent model . At the beginning of every iteration when the normal workers are assigned to new MDPs, we also sample new mixture weights fixed for that iteration. There are many distributions suitable for sampling mixture weights, and we use the Dirichlet distribution in Equation 1. is a hyperparameter that controls the mixture’s degree of extrapolation. The sum of mixture weights equals 1 regardless of the hyperparameter . If , all ’s are bounded between 0 and 1. Then the mixture model becomes a convex combination of the training models. If , the resulting mixture model may express extrapolated dynamics of training tasks. We find suits best for most of our experiments among that we tried. Refer to extrapolation results in Section 5.1.2 where greater than 1 can be effective.

A mixture worker interacts with an MDP sampled from , but we replace the environment reward with the imaginary reward to construct a mixture task . A mixture worker trains the policy network to maximize the return for the imaginary task . We expect the imaginary task to share some common structures with the training tasks because the mixture task is generated using the decoder that is trained to fit the training tasks’ reward dynamics. On the other hand, the decoder can generate unseen rewards because we feed the decoder unseen mixture beliefs. The mixture worker only trains the policy network but not the decoder with imaginary dynamics of .

Dropout of state and action input for the decoder

As the latent dynamics network is trained for the tasks in , we find that the decoder easily overfits the state and action observations, ignoring the latent model . Returning to the gridworld example, if we train the decoder with the tasks in (Figure 1a), and feed the decoder one of the goal states in , the decoder refers to the next state input only and always returns zero rewards regardless of the latent model (Figure 4a). We apply dropout of rate to all inputs of the decoder except the latent model . It forces the decoder to refer to the latent model when predicting the reward and generate general mixture tasks. Refer to Appendix E for ablations on dropout.

Training the decoder with a single-step regression loss is generally less complex than training the policy network with a multi-step policy gradient loss. Therefore the decoder can be stably trained even with input dropout, generalizing better than the meta-trained policy. Refer to Appendix B for empirical results on the test-time generalization ability of the latent dynamics network with dropout.

3.4 Implementation Details

Multiple episodes of the same task in one iteration

Following the setting of variBAD [56], we define an iteration as a sequence of episodes of the same task and train the agent to act Bayes-optimally within the rollout episodes (i.e., steps). After every episodes, new tasks are sampled from for the normal workers, and new mixture weights are sampled for the mixture worker. Then we can compare our method to other meta-RL methods that are designed to maximize the return after rollouts of many episodes.

Multiple mixture workers

We may train more than one mixture worker at the same time by sampling different sets of mixture weights for different mixture workers. Increasing the ratio of mixture workers to normal workers may help the agent generalize to unseen tasks faster, but the normal workers may require more iterations to learn optimal policies in . We train normal workers and mixture workers in parallel unless otherwise stated. Refer to Appendix D for empirical analysis on the ratio of workers.

4 Related Work

Meta-Reinforcement Learning

Although context-based meta-RL methods require a large amount of data for meta-training, they can learn within the task and make online adaptations. [6] is the most simple, yet effective context-based model-free meta-RL method that utilizes a recurrent network to encode the experience into a policy. PEARL [35] integrates an off-policy meta-RL method with online probabilistic filtering of latent task variables to achieve high meta-training efficiency. MAML [9] learns an initialization such that a few steps of policy gradient is enough for the agent to adapt to a new test task. E-MAML and ProMP [36, 40] extend MAML by proposing exploration strategies for collecting rollouts for adaptation.

Bayesian Reinforcement Learning

Bayesian RL quantifies the uncertainty or the posterior belief over the MDPs using past experience. Conditioning on the environment’s uncertainty, a Bayes-optimal policy can set the optimal balance between exploration and exploitation to maximize the return during training [1, 11, 19, 28]. In a Bayes-adaptive MDP (BAMDP), where the agent augments the state space of MDP with its belief, it is almost impossible to find the optimal policy due to the unknown parameterization and the intractable belief update. VariBAD [56] proposes an approximate but tractable solution that combines meta-learning and approximate variational inference. However, it is restricted to settings where the training and test task distributions are almost the same. Furthermore, the learned latent model is only used as additional information for the policy. LDM uses the learned latent model more actively by creating mixture tasks to train the policy for more general test tasks out of training task distribution.

Curriculum, Goal and Task Generation

The idea of generating new tasks for RL is not new. Florensa et al. [10] proposes generative adversarial training to generate goals. Gupta et al. [12] proposes an automatic task design process based on mutual information. SimOpt [3] learns the randomization of simulation parameters based on a few real-world rollouts. POET and enhanced POET [45, 46] generate the terrain for a 2D walker given access to the environment parameters. Dream to Control [14] solves a long-horizon task using a latent-imagination. BIRD [54] learns from imaginary trajectories by maximizing the mutual information between imaginary and real trajectories. Chandak et al. [2] trains an agent to forecast the future tasks in non-stationary MDPs. Most of these works require prior knowledge or control of the environment parameters, or the pool of the generated tasks is restricted to the training task distribution.

Data-augmentation for Reinforcement Learning

Many image augmentation techniques such as random convolution, random shift, l2-regularization, dropout, batch normalization and noise injection are shown to improve the generalization of RL [5, 16, 22, 24, 33, 50]. Mixreg [44] that applies the idea of mix-up [53] in RL, generates new training data as a convex interpolation of input observation and output reward. LDM can be thought of as a data-augmentation method in a way that it generates a mixture task using the data from training tasks. However, LDM generates a new task in the latent space with the latent model that fully encodes the MDPs’ dynamics. Instead of the pre-defined augmentation techniques with heuristics, we generate mixture tasks using the learned decoder, which contains the shared structure of the MDPs.

Out-of-distribution Meta-Reinforcement Learning

Some recent works aim to generalize RL to OOD tasks [8]. MIER [30] relabels past trajectories in a buffer during a test to generate synthetic training data suited for the test MDP. FLAP [31] learns a shared linear representation of the policy. To adapt to a new task FLAP only needs to predict a set of linear weights for fast adaptation. MetaGenRL [21] meta-learns an RL objective to train a randomly initialized policy on a test task. Most of these methods require experience from the test for additional training or updates for the network. AdMRL [26] performs adversarial virtual training with varying rewards. AdMRL assumes known reward space and parameterization, while LDM meta-learns.

5 Experiments

We evaluate LDM and other meta-RL methods on the gridworld example (Figure 1) and three MuJoCo meta-RL tasks [42]. We slightly modify the standard MuJoCo tasks by splitting the task space into disjoint and . We use RL2 [6] and variBAD [56] as baselines representing the context-based meta-RL methods. LDM without mixture training and the latent dynamics network reduces to RL2. LDM without mixture training reduces to variBAD if the policy and latent dynamics networks share an encoder. We use E-MAML [40] and ProMP [36] as baselines representing the gradient-based meta-RL methods. We implement RL2-based Mixreg [44] to evaluate the difference between generating mixture tasks in the latent space and the observation space. Refer to Appendix A for the details of implementations and hyperparameters.

All methods are trained on tasks sampled from uniformly at random, except for the oracle methods that are trained on tasks in the entire task set . Note that the oracle performance is only for reference and can not be achieved by any non-oracle methods theoretically. Non-oracle methods without prior knowledge on require additional exploration during testing to experience the changes from , whereas the oracle agent can exploit the knowledge of the test distribution. In the gridworld example, the oracle agent can search for goals in before navigating the outermost goal states of . Therefore the main focus should be on the relative improvement of LDM compared to non-oracle methods, bridging the gap between the oracle and the non-oracle methods. We report mean results using 8 random seeds and apply a moving average of window size 5 for all main experiments (4 seeds for ablations). Shaded areas indicate standard deviations for all plots.

5.1 Gridworld Navigation

Experimental setup

We use the gridworld navigation task introduced in Figure 1. The agent is allowed 5 actions: up, down, left, right, and stay. The reward is 1 for reaching or staying at the hidden goal and for all other transitions. Each episode lasts for steps. All baselines are given rollout episodes for a fixed task except for ProMP and E-MAML that are given rollouts. Such choice of follows from the reference implementations of the baselines. The time horizon has been carefully set so that the agent can not visit all states within the first episode but can visit them within two episodes. If a rollout is over, the agent is reset to the origin. The optimal policy is to search for the hidden goal and stay at the goal or return to the goal as quickly as possible. After episodes, a new task is sampled from uniformly at random. The reward decoder requires only the latent model and the next state as input for this task. We apply dropout with the rate to the next state input of the reward decoder.

Results

Except for ProMP and E-MAML that use feedforward networks, all the baseline methods achieve the same optimal performance in at the -th episode (Figure 3). However, our method outperforms the non-oracle baselines in . Out of the 27 tasks in , our agent succeeds to visit the goals in 19 tasks on average. Although LDM still does not reach 8 test goals on average, considering that there is no prior information on the test distribution and that the optimal policy for (dashed path in Figure 1a) does not visit most of the goal states in , the improvement of LDM over RL2 and variBAD is significant. RL2 achieves a high test return initially, but as the policy overfits the training tasks, its test return decays. VariBAD achieves high returns in , but fails to generalize in most of the tasks in . Mixreg performs better than RL2, but does not match LDM.

Refer to Figure 8a for example of LDM’s test-time behavior for a task in that was introduced in Figure 1a. The agent searches for a goal in first. Once it explores all the goal states in , it starts to search for the goals in . From the second episode, the agent directly heads to the goal based on the context made during the first episode. Note that the initial prior is nonzero even for the goals in due to the dropout applied to the decoder’s state input.

5.1.1 Tasks generated by LDM

We present an empirical analysis in Figure 4 to verify that LDM indeed generates meaningful new tasks that help to solve the test tasks. Without prior knowledge or interaction with , it is impossible to create exactly the same reward maps of . But the reward maps in Figure 4c,d,e are sufficient to induce exploration toward the goal states of . Due to the dropout applied to the next state input of the decoder, the decoder assigns high rewards to some goals that belong to . Note that the decoder does not generalize without dropout therefore assigns high rewards only to training goals (Figure 4a).

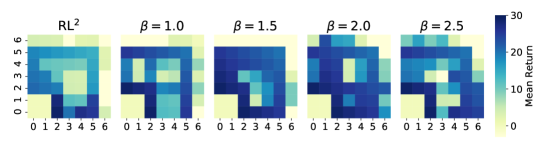

5.1.2 Extrapolation ability of LDM

To demonstrate that LDM is not restricted to target tasks inside the convex hull of training tasks, we design gridworld-extrapolation task as in Figure 5. This task is similar to the previous gridworld task in Section 5.1, except that we shift the outermost cells of inside to construct extrapolation tasks (Figure 5c). Refer to Figure 6 for the final return for each task when we train LDM () with different values of . For small values of and , LDM focuses on training the interpolation tasks in . As increases, the returns for tasks in decrease, but the returns for tasks in increase.

5.2 MuJoCo

Experimental setup

We evaluate our agent and baselines on three standard MuJoCo domains (Ant-direction, Ant-goal, and Half-cheetah-velocity) to verify how effective LDM is for continuous control tasks. We evaluate the agents on a fixed set of evaluation tasks for each domain to ensure the test result is not affected by the sampling of evaluation tasks (Table 1). For MuJoCo tasks we report the results of PEARL [35], MQL [8], and MIER [30] with number of rollouts per iteration () equal to 3, 11 and 3 respectively. ProMP and E-MAML are given and all other methods are given rollouts (following the reference implementations). steps for each episode of all MuJoCo tasks. Because PEARL, MQL, and MIER use an off-policy RL algorithm, their performance converges with much less training data. Therefore, for the three baselines, we report the converged asymptotic performance after 5 million steps of environment interaction. We set LDM’s for all MuJoCo tasks.

| Ant-direction | Ant-goal | Half-cheetah-velocity | ||

|---|---|---|---|---|

Ant-direction

We construct the Ant-direction task based on the example discussed in the introduction. denotes the angle of the target direction from the origin. A major component of the reward is the dot product of the target direction and the agent’s velocity.

Ant-goal

We construct the Ant-goal task similar to the gridworld task, where the agent has to reach the unknown goal position. The training task distribution (shaded region of Figure 8c) is continuous, unlike the gridworld and Ant-direction tasks. and denote the radius and angle of the goal from the origin, respectively. A major component of the reward is the negative value of the taxicab distance between the agent and the goal. We set because LDM with generates goals near the origin mostly due to the symmetry of training tasks. Refer to Appendix C for a detailed analysis regarding the choice of . One may argue that the training and test tasks are not disjoint because the agent passes through the goal states of momentarily while learning to solve . However, the task inference with the unseen rewards from cannot be learned during training. The policy to stay at the goals of until the end of the time horizon is also not learned.

Half-cheetah-velocity

We train the Half-cheetah agent to match the target velocity sampled from the two distributions at extremes and test for target velocities in between. This task is relatively easier than the previous Ant tasks due to the reduced dimension of the task space. Therefore we train more mixture workers than the other tasks, training normal workers and mixture workers in parallel. Refer to Appendix D for additional results with different numbers of mixture workers. The target velocity is , where the velocity is measured as a change of position per second (or 20 environment steps). A major component of the reward is the negative value of the difference between the target velocity and the agent’s velocity. Similar to Ant-Goal, although the test velocities in are achieved momentarily in the process of reaching a target velocity in , the agent does not learn to maintain the target velocities in during training in .

Results

Refer to Figure 7 for the test returns in . LDM outperforms the non-oracle baselines at the -th episode. VariBAD oracle achieves the best test returns for all MuJoCo tests, revealing the strength of variBAD’s task inference ability for tasks seen during training. However, the non-oracle variBAD has difficulty generalizing to . The performance of Mixreg is lower than that of RL2, which reveals the limitation of mixing in complex observation space. In all MuJoCo tasks, the agent can infer the true task dynamics using the reward at every timestep, even at the first step of an episode. Therefore gradient-based methods with feedforward networks also make progress, unlike the gridworld task. Because PEARL is designed to collect data for the first two episodes, it achieves low returns before the -th episode. Even after accumulating context, the policy of PEARL is not prepared for the unseen contexts in Ant-direction and Half-cheetah-velocity. Note that MQL and MIER require additional buffer-based re-labeling or re-training for testing after collecting some rollouts of the test task. LDM can prepare in advance during training so that we do not require collection of test rollouts and extra buffer-based training during testing.

Because LDM is not trained in , it can not solve the target tasks optimally from the beginning of each task in the Ant-direction and Ant-goal tasks, unlike variBAD Oracle (Figure 8b and 8c). However, LDM reaches the target direction or goal close enough after a sufficiently small amount of exploration, unlike RL2. For the Half-cheetah-velocity task, LDM generates well-separated policies compared to RL2 (Figure 8d). Refer to Appendix F for additional experimental results on the test returns at the first rollout episode, the training returns, and sample trajectories on training tasks.

6 Conclusion

We propose Latent Dynamics Mixture to improve the generalization ability of meta-RL by training policy with generated mixture tasks. Our method outperforms baseline meta-RL methods in experiments with strictly disjoint training and test task distributions, even reaching the oracle performance in some tasks. Because our latent dynamics network and the task generation process are independent of the policy network, we expect LDM to make orthogonal contributions when combined with not only RL2 but most of other meta-RL methods.

We believe that our work can be a starting point for many interesting future works. For example, instead of a heuristic weight-sampling for generating the mixture, we may incorporate OOD generation techniques in the latent space. Another extension is to train the latent dynamics network to decode not only the reward but also the state transition dynamics. Then we can generate a purely imaginary mixture task without additional environment interaction.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) [2021R1A2C2007518]. We thank Dong Hoon Lee, Minguk Jang, and Dong Geun Shin for constructive feedback and discussions.

References

- Cassandra et al. [1994] A. R. Cassandra, L. P. Kaelbling, and M. L. Littman. Acting optimally in partially observable stochastic domains. In National Conference on Artificial Intelligence, 1994.

- Chandak et al. [2020] Y. Chandak, G. Theocharous, S. Shankar, M. White, S. Mahadevan, and P. Thomas. Optimizing for the future in non-stationary mdps. In International Conference on Machine Learning, (ICML), pages 119:1414–1425, 2020.

- Chebotar et al. [2019] Y. Chebotar, A. Handa, V. Makoviychuk, M. Macklin, J. Issac, N. D. Ratliff, and D. Fox. Closing the sim-to-real loop: Adapting simulation randomization with real world experience. In International Conference on Robotics and Automation, (ICRA), pages 8973–8979, 2019.

- Chen et al. [2018] B. Chen, W. Deng, and H. Shen. Virtual class enhanced discriminative embedding learning. In Advances in Neural Information Processing Systems (NeurIPS), pages 31:1942–1952, 2018.

- Cobbe et al. [2019] K. Cobbe, O. Klimov, C. Hesse, T. Kim, and J. Schulman. Quantifying generalization in reinforcement learning. In International Conference on Machine Learning, (ICML), pages 97:1282–1289, 2019.

- Duan et al. [2016] Y. Duan, J. Schulman, X. Chen, P. L. Bartlett, I. Sutskever, and P. Abbeel. : Fast reinforcement learning via slow reinforcement learning. arXiv preprint arXiv: 1611.02779, 2016.

- Duff [2002] M. O. Duff. Optimal Learning: Computational Procedures for Bayes-adaptive Markov Decision Processes. University of Massachusetts at Amherst, 2002.

- Fakoor et al. [2020] R. Fakoor, P. Chaudhari, S. Soatto, and A. J. Smola. Meta-q-learning. In International Conference on Learning Representations, (ICLR), 2020.

- Finn et al. [2017] C. Finn, P. Abbeel, and S. Levine. Model-agnostic meta-learning for fast adaptation of deep networks. In International Conference on Machine Learning, (ICML), pages 70:1126–1135, 2017.

- Florensa et al. [2018] C. Florensa, D. Held, X. Geng, and P. Abbeel. Automatic goal generation for reinforcement learning agents. In International Conference on Machine Learning (ICML), pages 80:1515–1528, 2018.

- Ghavamzadeh et al. [2015] M. Ghavamzadeh, S. Mannor, J. Pineau, and A. Tamar. Bayesian reinforcement learning: A survey. Found. Trends Mach. Learn., pages 8(5–6):359–483, 2015.

- Gupta et al. [2018a] A. Gupta, B. Eysenbach, C. Finn, and S. Levine. Unsupervised meta-learning for reinforcement learning. arXiv preprint arXiv: 1806.04640, 2018a.

- Gupta et al. [2018b] A. Gupta, R. Mendonca, Y. Liu, P. Abbeel, and S. Levine. Meta-reinforcement learning of structured exploration strategies. In Advances in Neural Information Processing Systems (NeurIPS), pages 31:5302–5311, 2018b.

- Hafner et al. [2020] D. Hafner, T. P. Lillicrap, J. Ba, and M. Norouzi. Dream to control: Learning behaviors by latent imagination. In International Conference on Learning Representations, (ICLR), 2020.

- Humplik et al. [2019] J. Humplik, A. Galashov, L. Hasenclever, P. A. Ortega, Y. W. Teh, and N. Heess. Meta reinforcement learning as task inference. arXiv preprint arXiv: 1905.06424, 2019.

- Igl et al. [2019] M. Igl, K. Ciosek, Y. Li, S. Tschiatschek, C. Zhang, S. Devlin, and K. Hofmann. Generalization in reinforcement learning with selective noise injection and information bottleneck. In Neural Information Processing Systems (NeurIPS), pages 32:13978–13990, 2019.

- Jabri et al. [2019] A. Jabri, K. Hsu, B. Eysenbach, A. Gupta, S. Levine, and C. Finn. Unsupervised curricula for visual meta-reinforcement learning. In Advances in Neural Information Processing Systems (NeurIPS), volume 32, 2019.

- Kaddour et al. [2020] J. Kaddour, S. Sæmundsson, and M. P. Deisenroth. Probabilistic active meta-learning. In Advances in Neural Information Processing Systems (NeurIPS), pages 33:20813–20822, 2020.

- Kaelbling et al. [1998] L. P. Kaelbling, M. L. Littman, and A. R. Cassandra. Planning and acting in partially observable stochastic domains. Artificial Intelligence, 101:99–134, 1998.

- Kingma and Welling [2014] D. P. Kingma and M. Welling. Auto-encoding variational bayes. In International Conference on Learning Representations (ICLR), 2014.

- Kirsch et al. [2020] L. Kirsch, S. van Steenkiste, and J. Schmidhuber. Improving generalization in meta reinforcement learning using learned objectives. In International Conference on Learning Representations, (ICLR), 2020.

- Laskin et al. [2020] M. Laskin, K. Lee, A. Stooke, L. Pinto, P. Abbeel, and A. Srinivas. Reinforcement learning with augmented data. In Neural Information Processing Systems (NeurIPS), pages 33:19884–19895, 2020.

- Lee et al. [2020a] A. X. Lee, A. Nagabandi, P. Abbeel, and S. Levine. Stochastic latent actor-critic: Deep reinforcement learning with a latent variable model. In Advances in Neural Information Processing Systems (NeurIPS), pages 33:741–752, 2020a.

- Lee et al. [2020b] K. Lee, K. Lee, J. Shin, and H. Lee. Network randomization: A simple technique for generalization in deep reinforcement learning. In International Conference on Learning Representations, (ICLR), 2020b.

- Lee et al. [2020c] K. Lee, Y. Seo, S. Lee, H. Lee, and J. Shin. Context-aware dynamics model for generalization in model-based reinforcement learning. In International Conference on Machine Learning, (ICML), pages 119:5757–5766, 2020c.

- Lin et al. [2020] Z. Lin, G. Thomas, G. Yang, and T. Ma. Model-based adversarial meta-reinforcement learning. In Advances in Neural Information Processing Systems (NeurIPS), pages 33:10161–10173, 2020.

- Liu et al. [2019] S. Liu, A. J. Davison, and E. Johns. Self-supervised generalisation with meta auxiliary learning. In Neural Information Processing Systems (NeurIPS), pages 32:1679–1689, 2019.

- Martin [1967] J. J. Martin. Bayesian Decision Problems and Markov Chains. Wiley, 1967.

- Mehta et al. [2020] B. Mehta, T. Deleu, S. C. Raparthy, C. J. Pal, and L. Paull. Curriculum in gradient-based meta-reinforcement learning. arXiv preprint arXiv:2002.07956, 2020.

- Mendonca et al. [2020] R. Mendonca, X. Geng, C. Finn, and S. Levine. Meta-reinforcement learning robust to distributional shift via model identification and experience relabeling. arXiv preprint arXiv: 2006.07178, 2020.

- Peng et al. [2021] M. Peng, B. Zhu, and J. Jiao. Linear representation meta-reinforcement learning for instant adaptation. arXiv preprint arXiv: 2101.04750, 2021.

- Raileanu et al. [2020a] R. Raileanu, M. Goldstein, A. Szlam, and R. Fergus. Fast adaptation to new environments via policy-dynamics value functions. In International Conference on Machine Learning, (ICML), pages 119:7920–7931, 2020a.

- Raileanu et al. [2020b] R. Raileanu, M. Goldstein, D. Yarats, I. Kostrikov, and R. Fergus. Automatic data augmentation for generalization in deep reinforcement learning. arXiv preprint arXiv:2006.12862, 2020b.

- Rajeswaran et al. [2017] A. Rajeswaran, K. Lowrey, E. Todorov, and S. M. Kakade. Towards generalization and simplicity in continuous control. In Advances in Neural Information Processing Systems (NeurIPS), pages 30:6550–6561, 2017.

- Rakelly et al. [2019] K. Rakelly, A. Zhou, C. Finn, S. Levine, and D. Quillen. Efficient off-policy meta-reinforcement learning via probabilistic context variables. In International Conference on Machine Learning, (ICML), pages 97:5331–5340, 2019.

- Rothfuss et al. [2019] J. Rothfuss, D. Lee, I. Clavera, T. Asfour, and P. Abbeel. Promp: Proximal meta-policy search. In International Conference on Learning Representations, (ICLR), 2019.

- Schulman et al. [2017] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv: 1707.06347, 2017.

- Song et al. [2020a] X. Song, Y. Jiang, S. Tu, Y. Du, and B. Neyshabur. Observational overfitting in reinforcement learning. In International Conference on Learning Representations, (ICLR), 2020a.

- Song et al. [2020b] Y. Song, A. Mavalankar, W. Sun, and S. Gao. Provably efficient model-based policy adaptation. In International Conference on Machine Learning (ICML), pages 119:9088–9098, 2020b.

- Stadie et al. [2018] B. C. Stadie, G. Yang, R. Houthooft, X. Chen, Y. Duan, Y. Wu, P. Abbeel, and I. Sutskever. The importance of sampling in meta-reinforcement learning. In Advances in Neural Information Processing Systems (NeurIPS), pages 31:9280–9290, 2018.

- Tobin et al. [2017] J. Tobin, R. Fong, A. Ray, J. Schneider, W. Zaremba, and P. Abbeel. Domain randomization for transferring deep neural networks from simulation to the real world. In International Conference on Intelligent Robots and Systems (IROS), pages 23–30, 2017.

- Todorov et al. [2012] E. Todorov, T. Erez, and Y. Tassa. Mujoco: A physics engine for model-based control. In International Conference on Intelligent Robots and Systems (IROS), pages 5026–5033, 2012.

- Wang et al. [2016] J. X. Wang, Z. Kurth-Nelson, D. Tirumala, H. Soyer, J. Z. Leibo, R. Munos, C. Blundell, D. Kumaran, and M. Botvinick. Learning to reinforcement learn. In Annual Meeting of the Cognitive Science Community (CogSci), 2016.

- Wang et al. [2020a] K. Wang, B. Kang, J. Shao, and J. Feng. Improving generalization in reinforcement learning with mixture regularization. In Advances in Neural Information Processing Systems (NeurIPS), pages 33:7968–7978, 2020a.

- Wang et al. [2019] R. Wang, J. Lehman, J. Clune, and K. O. Stanley. Paired open-ended trailblazer (POET): endlessly generating increasingly complex and diverse learning environments and their solutions. arXiv preprint arXiv: 1901.01753, 2019.

- Wang et al. [2020b] R. Wang, J. Lehman, A. Rawal, J. Zhi, Y. Li, J. Clune, and K. Stanley. Enhanced poet: Open-ended reinforcement learning through unbounded invention of learning challenges and their solutions. In International Conference on Machine Learning, (ICML), pages 119:9940–9951, 2020b.

- Whiteson et al. [2011] S. Whiteson, B. Tanner, M. E. Taylor, and P. Stone. Protecting against evaluation overfitting in empirical reinforcement learning. In IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL), pages 120–127, 2011.

- Xie et al. [2020] A. Xie, J. Harrison, and C. Finn. Deep reinforcement learning amidst lifelong non-stationarity. arXiv preprint arXiv: 2006.10701, 2020.

- Yang et al. [2020] J. Yang, B. K. Petersen, H. Zha, and D. Faissol. Single episode policy transfer in reinforcement learning. In International Conference on Learning Representations, (ICLR), 2020.

- Yarats et al. [2021] D. Yarats, I. Kostrikov, and R. Fergus. Image augmentation is all you need: Regularizing deep reinforcement learning from pixels. In International Conference on Learning Representations, (ICLR), 2021.

- Yin et al. [2020] M. Yin, G. Tucker, M. Zhou, S. Levine, and C. Finn. Meta-learning without memorization. In International Conference on Learning Representations, (ICLR), 2020.

- Zhang et al. [2018a] C. Zhang, O. Vinyals, R. Munos, and S. Bengio. A study on overfitting in deep reinforcement learning. arXiv preprint arXiv: 1804.06893, 2018a.

- Zhang et al. [2018b] H. Zhang, M. Cissé, Y. N. Dauphin, and D. Lopez-Paz. mixup: Beyond empirical risk minimization. In International Conference on Learning Representations, (ICLR), 2018b.

- Zhu et al. [2020] G. Zhu, M. Zhang, H. Lee, and C. Zhang. Bridging imagination and reality for model-based deep reinforcement learning. In Advances in Neural Information Processing Systems (NeurIPS), pages 33:8993–9006, 2020.

- Zintgraf et al. [2019] L. M. Zintgraf, K. Shiarlis, V. Kurin, K. Hofmann, and S. Whiteson. Fast context adaptation via meta-learning. In International Conference on Machine Learning, (ICML), pages 97:7693–7702, 2019.

- Zintgraf et al. [2020] L. M. Zintgraf, K. Shiarlis, M. Igl, S. Schulze, Y. Gal, K. Hofmann, and S. Whiteson. Varibad: A very good method for bayes-adaptive deep RL via meta-learning. In International Conference on Learning Representations, (ICLR), 2020.

Checklist

-

1.

For all authors…

-

(a)

Do the main claims made in the abstract and introduction accurately reflect the paper’s contributions and scope? [Yes]

-

(b)

Did you describe the limitations of your work? [Yes] In the conclusion section

-

(c)

Did you discuss any potential negative societal impacts of your work? [N/A]

-

(d)

Have you read the ethics review guidelines and ensured that your paper conforms to them? [Yes]

-

(a)

-

2.

If you are including theoretical results…

-

(a)

Did you state the full set of assumptions of all theoretical results? [N/A]

-

(b)

Did you include complete proofs of all theoretical results? [N/A]

-

(a)

-

3.

If you ran experiments…

-

(a)

Did you include the code, data, and instructions needed to reproduce the main experimental results (either in the supplementary material or as a URL)? [Yes] as a URL in Appendix

-

(b)

Did you specify all the training details (e.g., data splits, hyperparameters, how they were chosen)? [Yes] in Appendix

-

(c)

Did you report error bars (e.g., with respect to the random seed after running experiments multiple times)? [Yes]

-

(d)

Did you include the total amount of compute and the type of resources used (e.g., type of GPUs, internal cluster, or cloud provider)? [Yes] in Appendix

-

(a)

-

4.

If you are using existing assets (e.g., code, data, models) or curating/releasing new assets…

-

(a)

If your work uses existing assets, did you cite the creators? [Yes] source codes of the baselines

-

(b)

Did you mention the license of the assets? [Yes] in the supplementary material

-

(c)

Did you include any new assets either in the supplementary material or as a URL? [Yes] Links to the baseline codes in Appendix

-

(d)

Did you discuss whether and how consent was obtained from people whose data you’re using/curating? [N/A]

-

(e)

Did you discuss whether the data you are using/curating contains personally identifiable information or offensive content? [N/A]

-

(a)

-

5.

If you used crowdsourcing or conducted research with human subjects…

-

(a)

Did you include the full text of instructions given to participants and screenshots, if applicable? [N/A]

-

(b)

Did you describe any potential participant risks, with links to Institutional Review Board (IRB) approvals, if applicable? [N/A]

-

(c)

Did you include the estimated hourly wage paid to participants and the total amount spent on participant compensation? [N/A]

-

(a)

Appendix A Implementation Details

A.1 Baselines

RL2 and variBAD

We use the open-source reference implementation of variBAD at https://github.com/lmzintgraf/varibad to report the results of RL2 and variBAD. All gridworld and MuJoCo environments used for our experiments are based on this implementation. We modify the environments to contain separate and . The oracle versions of RL2 and variBAD use the original environment . We keep all the network structures and hyperparameters of the reference implementation except for the gridworld task. We increase the GRU hidden size from 64 to 128 and the GRU output size from 5 to 32 for the gridworld task because our gridworld task () has more cells than the gridworld task in variBAD ().

LDM

We implement LDM based on the implementation of variBAD. Refer to Table 3 for the hyperparameters used to train LDM. Most of the network structures and hyperparameters follow the reference implementation of variBAD. The policy network of LDM is from the RL2 implementation of variBAD. The latent dynamics network is from the VAE part of variBAD. VariBAD uses a multi-head structure (each head for a goal state in ) with binary cross-entropy (BCE) loss for the decoder output for the gridworld task. Because LDM needs to generate rewards for tasks out of as well, we modify the decoder to a general single head structure. The latent model is multidimensional, therefore we sample the weight corresponding to each dimension independently. The weights in 4a represent the mean weights of all dimensions. Refer to our reference implementation at https://github.com/suyoung-lee/LDM.

Mixreg

The original Mixreg is based on Deep Q-Network (DQN). Therefore, we implement a variant of Mixreg based on RL2 by modifying the code we use for RL2. We use the same Dirichlet mixture weights used for LDM and multiply the weights to the states and rewards to generate mixture tasks. We keep the ratio between the true tasks and mixture tasks as 14:2.

ProMP and E-MAML

We use the open-source reference implementation of ProMP at https://github.com/jonasrothfuss/ProMP for ProMP and E-MAML. We only modify the environments to contain separate and and keep all the reference implementation setup.

PEARL, MQL, and MIER

We use the open-source reference implementation of PEARL at https://github.com/katerakelly/oyster, MQL at https://github.com/amazon-research/meta-q-learning and MIER at https://github.com/russellmendonca/mier_public. We only modify the environments to contain separate and and keep all the reference implementation setup. We report the performance at 5 million steps as asymptotic performance for MuJoCo tasks.

A.2 Runtime Comparison

We report the average runtime spent to train Half-cheetah-velocity for 5e7 environment steps (5e6 steps for PEARL) in Table 2. We ran multiple experiments on our machine (Nvidia TITAN X) simultaneously, therefore consider the following as relative ordering of complexity.

| LDM | Mixreg | RL2 | variBAD | ProMP | E-MAML | PEARL | |||

|---|---|---|---|---|---|---|---|---|---|

|

31 | 28 | 25 | 10 | 2 | 2 | 25 |

ProMP and E-MAML require the least training time because they do not use recurrent networks. VariBAD requires less train time than RL2 because variBAD does not backpropagate the policy network’s gradient to the recurrent encoder. LDM requires more training time than RL2 because LDM trains the policy network and a separate latent dynamics network.

A.3 Hyperparameters

| Gridworld | MuJoCo | |||||||||||||||||

| RL algorithm |

|

|

||||||||||||||||

|

30 | 200 | ||||||||||||||||

|

4 | 2 | ||||||||||||||||

|

1.0 | 1.0 (2.0 for Ant-goal) | ||||||||||||||||

|

0.7 | 0.5 | ||||||||||||||||

| Number of parallel processes | Normal workers () | 14 | 14 (12 for Cheetah-vel) | |||||||||||||||

| Mixture workers () | 2 | 2 (4 for Cheetah-vel) | ||||||||||||||||

| Policy Network | Encoder-p () |

|

1 FC layer, 32 dim | 1 FC layer, 32 dim | ||||||||||||||

|

0 dim | 1 FC layer, 16 dim | ||||||||||||||||

|

1 FC layer, 8 dim | 1 FC layer, 16 dim | ||||||||||||||||

| GRU | 128 hidden size | 128 hidden size | ||||||||||||||||

|

32 | 128 | ||||||||||||||||

|

1 FC layer, 32 nodes | 1 FC layer, 128 nodes | ||||||||||||||||

| Activation | tanh | tanh | ||||||||||||||||

| Learning rate | 7.0e-4 | 7.0e-4 | ||||||||||||||||

| Latent Dynamics Network | Encoder-v () |

|

1 FC layer, 32 dim | 1 FC layer, 32 dim | ||||||||||||||

|

0 dim | 1 FC layer, 16 dim | ||||||||||||||||

|

1 FC layer, 8 dim | 1 FC layer, 16 dim | ||||||||||||||||

| GRU | 128 hidden size | 128 hidden size | ||||||||||||||||

|

32 |

|

||||||||||||||||

|

|

|

||||||||||||||||

|

BCE | MSE | ||||||||||||||||

| Activation | ReLU | ReLU | ||||||||||||||||

| Learning rate | 0.001 | 0.001 | ||||||||||||||||

| Buffer size | 100000 | 10000 | ||||||||||||||||

Appendix B Test-time Generalization of the Latent Model

We empirically demonstrate that the structure of the test task is well reflected in the latent models although the latent dynamics network is not trained in and (Figure 9). For each task in the gridworld task, we collect the latent model at the last step (). Then we reduce the dimension of the collected latent models to two dimensions via t-SNE (Figure 9b). The latent models of the tasks in are between the inner subset and the outer subset of the training tasks. Similarly, we evaluate the latent dynamics model in Ant-goal. We evaluate the latent models on 48 tasks as in Figure 9c where and . Although LDM is not trained on the tasks with and , the latent models are between the training tasks’ latent models (Figure 9d). On the other hand, the policy network can not be trained stably with a large input dropout (RL2 dropout in Figure 13). These empirical results support our claim that our latent dynamics network with dropout can generalize to unseen test dynamics although the policy can not. Therefore the mixtures of the latent models can generate tasks similar to the test tasks.

Appendix C Analysis on the Ant-goal Task (Extrapolation Level )

At the beginning of an iteration, we sample training tasks from on the Ant-goal task. Because we sample a sufficiently large number of training tasks, a mixture task’s goal is located near the origin with high probability if we set (Figure 10). Therefore we use that effectively improves the test returns on the Ant-goal task.

Appendix D Number of Mixture Workers

Refer to Figure 11 for the returns in and (Table 4) on the Half-cheetah-velocity task for different values of . We report the results for and keep the total number of workers fixed at 16. For all values of , LDM outperforms RL2 in test returns at the beginning of training. However, the test return of LDM decays as training tasks dominate the policy updates. If is small, training of the policy is easily dominated by the training tasks, and the test return converges that of RL2 quickly. If is too large, normal workers have difficulty in learning the optimal policy for training tasks ( in Figure 11, second row). We use that sets a balance in between.

Appendix E Ablations on Dropout

E.1 Amount of Dropout

We report the performance of LDM with different dropout rates in Figure 12. LDM without the input dropout () slightly outperforms RL2 at the end of training, but the improvement is insignificant. The test performance improves as the dropout rate increases. But if the rate is too large (), it becomes difficult to train the decoder and the performance decreases.

E.2 Dropout on other baselines

To demonstrate that dropout is not all the regularization required to achieve better generalization, we evaluate RL2 dropout and variBAD dropout, both with in Figure 13. RL2 doesn’t use a decoder, therefore we apply dropout on the state input of the policy encoder. RL2 dropout cannot be trained stably, since training policy network with a multi-step policy gradient loss is much more complex than training the decoder with a single-step regression loss. For variBAD dropout, we apply dropout on the state input of the decoder (same as LDM). VariBAD shares an encoder for both VAE and the policy network and does not backpropagate the policy loss to the encoder. Therefore, the encoding, trained with decoder with dropout, may disturb the policy network from stable training. We evaluate LDM with a shared encoder, where we use a single encoder instead of separate encoder-p and encoder-v. We encountered instability in policy training when the encoding, trained for a decoder with dropout, was used for policy. If we have separate encoders, even when the VAE is not perfectly trained due to the dropout, it does not affect the policy.

Appendix F Additional Experimental Results

F.1 Gridworld First Rollout Episode

The non-oracle methods spend most of the timesteps in the first episode to explore the goal states of . Therefore the mean returns in are significantly lower than those at the -th episode (Figure 3). The oracle methods explore the goal states of before exploring the outermost states of during the first episode. Therefore the mean returns of oracle methods in are lower than those of non-oracle methods.

F.2 MuJoCo First Rollout Episode

Since the task can be inferred from the reward at any timestep of training, the performance of LDM at the first episode is nearly the same as that at the -th episode (Figure 7). ProMP, E-MAML, and PEARL need to collect trajectories until the -th episode.

F.3 MuJoCo Training Results

As we defined , we define to report the training results (Table 4).

| Ant-direction | Ant-goal | Half-cheetah-velocity | ||

|---|---|---|---|---|

Refer to Figure 16 for the mean returns on the training tasks and Figure 17 for the trajectories. LDM achieves higher training returns for all tasks than its baseline RL2, although LDM devotes some portion of training steps to train mixture tasks. LDM achieves the best training performance in the Ant-direction task while RL2’s performance gradually decreases. When there are only a few training tasks, RL2-based methods often collapse into a single-mode, unable to construct sharp decision boundaries between tasks (Figure 17a). VariBAD achieves high training returns in Ant-direction and Ant-goal, although its test returns in are low.