Improved Autoregressive Modeling with Distribution Smoothing

Abstract

While autoregressive models excel at image compression, their sample quality is often lacking. Although not realistic, generated images often have high likelihood according to the model, resembling the case of adversarial examples. Inspired by a successful adversarial defense method, we incorporate randomized smoothing into autoregressive generative modeling. We first model a smoothed version of the data distribution, and then reverse the smoothing process to recover the original data distribution. This procedure drastically improves the sample quality of existing autoregressive models on several synthetic and real-world image datasets while obtaining competitive likelihoods on synthetic datasets.

1 Introduction

Autoregressive models have exhibited promising results in a variety of downstream tasks. For instance, they have shown success in compressing images (Minnen et al., 2018), synthesizing speech (Oord et al., 2016a) and modeling complex decision rules in games (Vinyals et al., 2019). However, the sample quality of autoregressive models on real-world image datasets is still lacking.

Poor sample quality might be explained by the manifold hypothesis: many real world data distributions (e.g. natural images) lie in the vicinity of a low-dimensional manifold (Belkin & Niyogi, 2003), leading to complicated densities with sharp transitions (i.e. high Lipschitz constants), which are known to be difficult to model for density models such as normalizing flows (Cornish et al., 2019). Since each conditional of an autoregressive model is a 1-dimensional normalizing flow (given a fixed context of previous pixels), a high Lipschitz constant will likely hinder learning of autoregressive models.

Another reason for poor sample quality is the “compounding error” issue in autoregressive modeling. An autoregressive model relies on the previously generated context to make a prediction; once a mistake is made, the model is likely to make further mistakes that compound (Kääriäinen, 2006), eventually producing questionable and unrealistic samples. Intuitively, one would expect the model to assign low likelihoods to such unrealistic images, but this is not always the case. In fact, the generated samples, although unrealistic in appearance, are often assigned high likelihoods by the autoregressive model, resembling an “adversarial example” (Szegedy et al., 2013; Biggio et al., 2013), an input that causes the model to output an incorrect answer with high confidence.

Inspired by the recent success of randomized smoothing techniques in adversarial defense (Cohen et al., 2019), we propose to apply randomized smoothing to autoregressive generative modeling. More specifically, we address a density estimation problem via a two-stage process. Unlike Cohen et al. (2019), who apply smoothing to the model to make it more robust, we apply smoothing to the data distribution: we convolve a symmetric and stationary noise distribution with the data distribution to obtain a new “smoother” distribution. In the first stage, we model the smoothed version of the data distribution using an autoregressive model. In the second stage, we reverse the smoothing process (a procedure which can also be understood as “denoising”) by either applying a gradient-based denoising approach (Alain & Bengio, 2014) or introducing another conditional autoregressive model to recover the original data distribution from the smoothed one. By choosing an appropriate smoothing distribution, we aim to make each step easier than the original learning problem: smoothing facilitates learning in the first stage by making the input distribution fully supported without sharp transitions in the density function, and generating a sample given a noisy one is easier than generating a sample from scratch.

We show with extensive experimental results that our approach is able to drastically improve the sample quality of current autoregressive models on several synthetic datasets and real-world image datasets, while obtaining competitive likelihoods on synthetic datasets. We empirically demonstrate that our method can also be applied to density estimation, image inpainting, and image denoising.

2 Background

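We consider a density estimation problem. Given $D$-dimensional i.i.d. samples $\{x^{(1)}, \ldots, x^{(N)}\}$ from a continuous data distribution $p_{\text{data}}(x)$, the goal is to approximate $p_{\text{data}}(x)$ with a model $p_\theta(x)$ parameterized by $\theta$. A commonly used approach for density estimation is maximum likelihood estimation (MLE), where the objective is to maximize the average log-likelihood $\frac{1}{N}\sum_{n=1}^{N} \log p_\theta(x^{(n)})$.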

2.1 Autoregressive models

An autoregressive model (Larochelle & Murray, 2011; Salimans et al., 2017) decomposes a joint distribution $p_\theta(x)$ into the product of univariate conditionals:

$$p_\theta(x) = \prod_{i=1}^{D} p_\theta(x_i \mid x_{<i}) \tag{1}$$

where $x_i$ stands for the $i$-th component of $x$, and $x_{<i}$ refers to the components with indices smaller than $i$. In general, an autoregressive model parameterizes each conditional $p_\theta(x_i \mid x_{<i})$ using a pre-specified density function (e.g. mixture of logistics). This bounds the capacity of the model by limiting the number of modes for each conditional.
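The factorization into univariate conditionals can be sketched with a toy model. The example below is a minimal illustration, not the architecture used in this paper: it assumes each conditional is a Gaussian whose mean is a linear function of the preceding components, and the names `ar_log_prob`, `ar_sample`, and `weights` are hypothetical. It shows the two operations every autoregressive model supports: evaluating the joint log-density as a sum of conditional log-densities, and sampling one component at a time.

```python
import numpy as np

def gaussian_logpdf(x, mean, std):
    """Log-density of a 1-d Gaussian."""
    return -0.5 * np.log(2.0 * np.pi) - np.log(std) - 0.5 * ((x - mean) / std) ** 2

def ar_log_prob(x, weights, std=1.0):
    """log p(x) = sum_i log p(x_i | x_{<i}) for linear-Gaussian conditionals."""
    total = 0.0
    for i in range(len(x)):
        # The mean of the i-th conditional depends only on components 0..i-1.
        mean = float(np.dot(weights[i, :i], x[:i]))
        total += gaussian_logpdf(x[i], mean, std)
    return total

def ar_sample(weights, rng, std=1.0):
    """Ancestral sampling: draw x_0, then x_1 given x_0, and so on."""
    dim = weights.shape[0]
    x = np.zeros(dim)
    for i in range(dim):
        mean = float(np.dot(weights[i, :i], x[:i]))
        x[i] = mean + std * rng.standard_normal()
    return x

rng = np.random.default_rng(0)
weights = np.tril(rng.standard_normal((4, 4)), k=-1)  # strictly lower-triangular
sample = ar_sample(weights, rng)
print("log p(sample) =", ar_log_prob(sample, weights))
```

Because each sampled component feeds into the mean of every later conditional, an early error propagates through the rest of the sample, which is the mechanism behind the compounding errors discussed in Section 2.3.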

Although autoregressive models have achieved top likelihoods among all types of density-based models, their sample quality still lags behind that of energy-based models (Du & Mordatch, 2019) and score-based models (Song & Ermon, 2019). We believe two factors contribute to this gap, discussed in the next two subsections.

2.2 Manifold Hypothesis

Several existing methods (Roweis & Saul, 2000; Tenenbaum et al., 2000) rely on the manifold hypothesis, i.e. that real-world high-dimensional data tends to lie on a low-dimensional manifold (Narayanan & Mitter, 2010). If the manifold hypothesis is true, then the density of the data distribution is not well defined in the ambient space; if the manifold hypothesis holds only approximately and the data lies in the vicinity of a manifold, then only points that are very close to the manifold would have high density, while all other points would have close to zero density. Thus we may expect the data density around the manifold to have large first-order derivatives, i.e. the density function has a high Lipschitz constant (if not infinity).

To see this, let us consider a 2-d example where the data distribution is a thin ring distribution (almost a unit circle) formed by rotating the 1-d Gaussian distribution around the origin. The density function of the ring has a high Lipschitz constant near the “boundary”. Let us focus on a data point travelling along the diagonal as shown in the leftmost panel in figure 2. We plot the first-order directional derivatives of the density for the point as it approaches the boundary from the inside, then lands on the ring, and finally moves outside the ring (see figure 2). As we can see, when the point is far from the boundary, the derivative has a small magnitude. When the point moves closer to the boundary, the magnitude increases and changes significantly near the boundary even with small displacements in the trajectory. However, once the point has landed on the ring, the magnitude starts to decrease. As it gradually moves off the ring, the magnitude first increases and then decreases just like when the point approached the boundary from the inside. It has been observed that certain likelihood models, such as normalizing flows, exhibit pathological behaviors on data distributions whose densities have high Lipschitz constants (Cornish et al., 2019). Since each conditional of an autoregressive model is a 1-d normalizing flow given a fixed context, a high Lipschitz constant on data density could also hinder learning of autoregressive models.
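The behavior of the directional derivative can be reproduced numerically. The sketch below assumes an unnormalized ring density whose radial profile is a 1-d Gaussian centered at radius 1; the `width` values and function names are illustrative choices, not taken from the paper. It evaluates a finite-difference derivative along the diagonal and reports its largest magnitude for a wide and a thin ring.

```python
import numpy as np

def ring_density(points, radius=1.0, width=0.05):
    """Unnormalized ring density: Gaussian profile in the radial coordinate."""
    r = np.linalg.norm(points, axis=-1)
    return np.exp(-0.5 * ((r - radius) / width) ** 2)

def diagonal_derivative(t, width=0.05, eps=1e-6):
    """Central finite-difference derivative of the density along the diagonal.

    `t` is the distance from the origin of a point moving along (1, 1)/sqrt(2).
    """
    u = np.array([1.0, 1.0]) / np.sqrt(2.0)
    t = np.asarray(t, dtype=float)
    plus = ring_density((t + eps)[..., None] * u, width=width)
    minus = ring_density((t - eps)[..., None] * u, width=width)
    return (plus - minus) / (2.0 * eps)

t = np.linspace(0.5, 1.5, 2001)
for width in (0.2, 0.05):
    peak = np.max(np.abs(diagonal_derivative(t, width=width)))
    print(f"width={width}: max |directional derivative| = {peak:.2f}")
```

For this profile the peak magnitude scales as one over the ring width, so a thinner ring (data closer to a manifold) yields a larger Lipschitz constant, while the derivative vanishes exactly on the ring.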

2.3 Compounding errors in autoregressive modeling

Autoregressive models can also be susceptible to compounding errors from the conditional distributions (Lamb et al., 2016) at sampling time. An autoregressive model $p_\theta(x)$ learns the joint density $p_{\text{data}}(x)$ by matching each conditional $p_\theta(x_i \mid x_{<i})$ with $p_{\text{data}}(x_i \mid x_{<i})$. In practice, we typically have access to a limited amount of training data, which makes it hard for an autoregressive model to capture all the conditional distributions correctly due to the curse of dimensionality. During sampling, since a prediction is made based on the previously generated context, once a mistake is made at a previous step, the model is likely to make more mistakes in the later steps, eventually generating a sample that is far from being an actual image, but is mistakenly assigned a high likelihood by the model.

The generated image, being unrealistic but assigned a high likelihood, resembles an adversarial example, i.e., an input that causes the model to make mistakes. Recent works in adversarial defense (Cohen et al., 2019) have shown that random noise can be used to improve a model’s robustness to adversarial perturbations, a process during which adversarial examples that are close to actual data are generated to fool the model. We hypothesize that such an approach can also be applied to improve autoregressive modeling by making the model less vulnerable to the compounding errors that occur during density estimation. Inspired by the success of randomized smoothing in adversarial defense (Cohen et al., 2019), we propose to apply smoothing to autoregressive modeling to address the problems mentioned above.

3 Generative models with distribution smoothing

In the following, we propose to decompose a density estimation task into a smoothed data modeling problem followed by an inverse smoothing problem where we recover the true data density from the smoothed one.

3.1 Randomized smoothing process

Unlike Cohen et al. (2019), where randomized smoothing is applied to a model, we apply smoothing directly to the data distribution $p_{\text{data}}(x)$. To do this, we introduce a smoothing distribution $q(\tilde{x} \mid x)$, a distribution that is symmetric and stationary (e.g. a Gaussian or Laplacian kernel), and convolve it with $p_{\text{data}}(x)$ to obtain a new distribution $q(\tilde{x}) \triangleq \int q(\tilde{x} \mid x)\, p_{\text{data}}(x)\, dx$. When $q(\tilde{x} \mid x)$ is a normal distribution, this convolution process is equivalent to perturbing the data with Gaussian noise, which, intuitively, will make the data distribution smoother. In the following, we formally prove that convolving a 1-d distribution $p_{\text{data}}(x)$ with a suitable noise can indeed “smooth” $p_{\text{data}}(x)$.

Theorem 1.

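Given a continuous and bounded 1-d distribution $p_{\text{data}}(x)$ that is supported on $\mathbb{R}$, for any 1-d distribution $q(\tilde{x} \mid x)$ that is symmetric (i.e. $q(\tilde{x} \mid x) = q(x \mid \tilde{x})$), stationary (i.e. translation invariant) and satisfies $\lim_{x \to \infty} p_{\text{data}}(x)\, q(x \mid \tilde{x}) = 0$ for any given $\tilde{x}$, we have $\mathrm{Lip}(q(\tilde{x})) \leq \mathrm{Lip}(p_{\text{data}}(x))$, where $q(\tilde{x}) \triangleq \int q(\tilde{x} \mid x)\, p_{\text{data}}(x)\, dx$ and $\mathrm{Lip}(\cdot)$ denotes the Lipschitz constant of the given 1-d function.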

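Theorem 1 shows that convolving a 1-d data distribution $p_{\text{data}}(x)$ with a suitable noise distribution $q(\tilde{x} \mid x)$ (e.g. a Gaussian $\mathcal{N}(\tilde{x} \mid x, \sigma^2)$) can reduce the Lipschitzness (i.e. increase the smoothness) of $p_{\text{data}}(x)$. We provide the proof of Theorem 1 in Appendix A.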
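The claim of Theorem 1 can be checked numerically on a simple case. The sketch below assumes the data density is an equal-weight mixture of three narrow Gaussians and the noise is Gaussian, so the smoothed density is available in closed form (convolving two Gaussians adds their variances). The specific means, standard deviations, and helper names are illustrative assumptions. The Lipschitz constant is estimated as the largest finite-difference slope on a dense grid.

```python
import numpy as np

def mixture_pdf(x, means, std):
    """Equal-weight mixture of 1-d Gaussians with a shared standard deviation."""
    x = np.asarray(x, dtype=float)[:, None]
    comps = np.exp(-0.5 * ((x - means) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))
    return comps.mean(axis=1)

def lipschitz_estimate(values, grid):
    """Largest absolute slope between neighboring grid points."""
    return np.max(np.abs(np.diff(values) / np.diff(grid)))

means = np.array([-2.0, 0.0, 2.0])
data_std, noise_std = 0.1, 0.5            # illustrative values
grid = np.linspace(-6.0, 6.0, 24001)

p_data = mixture_pdf(grid, means, data_std)
# Gaussian noise on a Gaussian mixture: each component's variance grows by noise_std**2.
q_smoothed = mixture_pdf(grid, means, np.sqrt(data_std**2 + noise_std**2))

lip_data = lipschitz_estimate(p_data, grid)
lip_smooth = lipschitz_estimate(q_smoothed, grid)
print(f"Lip(p_data) ~ {lip_data:.3f}, Lip(q) ~ {lip_smooth:.3f}")
```

The estimate for the smoothed density comes out well below that of the original density, in line with the inequality in the theorem.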

Given $p_{\text{data}}(x)$ with a high Lipschitz constant, we empirically verify that density estimation becomes an easier task on the smoothed distribution $q(\tilde{x})$ than directly on $p_{\text{data}}(x)$. To see this, we visualize a 1-d example in figure 3(a), where we want to model a ten-mode data distribution with a mixture of logistics model. If our model has three logistic components, there is almost no way for the model, which only has three modes, to perfectly fit this data distribution, which has ten separate modes with sharp transitions. The model, after training (see figure 3(a)), mistakenly assigns a much higher density to the low density regions between nearby modes. If we convolve the data distribution with a suitable smoothing distribution $q(\tilde{x} \mid x)$, the new distribution becomes smoother and can be captured reasonably well by the same mixture of logistics model with only three modes (see figure 3(b)). Comparing the same model’s performance on the two density estimation tasks, we can see that the model does a better job at modeling the smoothed version of the data distribution than the original data distribution, which has a high Lipschitz constant.
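For reference, the density family used in this example can be written down in a few lines. The sketch below is a generic mixture-of-logistics log-density, not the paper's training code; the component weights, locations, and scales are arbitrary illustrative values. It makes concrete why a three-component model has limited capacity: the density is a weighted sum of three unimodal bumps.

```python
import numpy as np

def logistic_logpdf(x, loc, scale):
    """Log-density of a logistic distribution, written in a numerically stable form."""
    z = np.abs((x - loc) / scale)          # the logistic density is symmetric in z
    return -z - 2.0 * np.log1p(np.exp(-z)) - np.log(scale)

def mixture_of_logistics_logpdf(x, weights, locs, scales):
    """log sum_k w_k * Logistic(x; loc_k, scale_k), via a log-sum-exp over components."""
    x = np.asarray(x, dtype=float)[..., None]
    comp = np.log(weights) + logistic_logpdf(x, locs, scales)
    top = comp.max(axis=-1, keepdims=True)
    return (top + np.log(np.exp(comp - top).sum(axis=-1, keepdims=True)))[..., 0]

weights = np.array([0.2, 0.5, 0.3])        # must sum to one
locs = np.array([-2.0, 0.0, 3.0])
scales = np.array([0.3, 0.5, 0.4])

grid = np.linspace(-30.0, 30.0, 60001)
density = np.exp(mixture_of_logistics_logpdf(grid, weights, locs, scales))
print("integral over the grid:", density.sum() * (grid[1] - grid[0]))
```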

This smoothing process can also be understood as a regularization term for the original maximum likelihood objective (on the un-smoothed data distribution), encouraging the learned model to be smooth, as formalized by the following statement:

Proposition 1 (Informal).

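Assume that the symmetric and stationary smoothing distribution $q(\tilde{x} \mid x)$ has small variance and negligible higher order moments, then

$$\mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}\left[\log p_\theta(\tilde{x})\right] \approx \mathbb{E}_{p_{\text{data}}(x)}\left[\log p_\theta(x) + \frac{\eta}{2} \sum_{i} \frac{\partial^2 \log p_\theta(x)}{\partial x_i^2}\right]$$

for some constant $\eta$.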

Proposition 1 shows that our smoothing process provides a regularization effect on the original objective $\mathbb{E}_{p_{\text{data}}(x)}[\log p_\theta(x)]$ when no noise is added, where the regularization aims to maximize $\frac{\eta}{2} \sum_{i} \frac{\partial^2 \log p_\theta(x)}{\partial x_i^2}$. Since samples from $p_{\text{data}}$ should be close to a local maximum of the model, this encourages the second order gradients computed at a data point $x$ to become closer to zero (if they were positive then $x$ would not be a local maximum), creating a smoothing effect. This extra term is also the trace of the Jacobian of the score function (up to a multiplicative constant) that appears in the score matching objective (Hyvärinen, 2005), which is closely related to many denoising methods (Vincent, 2011; Hyvärinen, 2008). This regularization effect can, intuitively, increase the generalization capability of the model. In fact, it has been demonstrated empirically that training with noise can lead to improvements in network generalization (Sietsma & Dow, 1991; Bishop, 1995). Our argument is also similar to that of Bishop (1995), except that we consider a more general generative modeling case as opposed to supervised learning with squared error. We provide the formal statement and proof of Proposition 1 in Appendix A.
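The approximation in Proposition 1 follows from a second-order Taylor expansion of the log-density around the clean point, and it can be checked numerically in 1-d. The sketch below makes several assumptions for illustration: the model log-density is a standard logistic, the noise is Gaussian with standard deviation `sigma` (so the constant in the proposition equals `sigma**2`), and the expectation over noise is computed with Gauss-Hermite quadrature via `numpy.polynomial.hermite_e.hermegauss`.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def log_p(x):
    """Log-density of a standard logistic distribution (stand-in for the model)."""
    return -x - 2.0 * np.log1p(np.exp(-x))

def d2_log_p(x):
    """Second derivative of log_p: -2 * s * (1 - s), with s the sigmoid of x."""
    s = 1.0 / (1.0 + np.exp(-x))
    return -2.0 * s * (1.0 - s)

def smoothed_objective(x, sigma, order=40):
    """E[log p(x + sigma * z)] for z ~ N(0, 1), by Gauss-Hermite quadrature."""
    nodes, weights = hermegauss(order)
    return np.sum(weights * log_p(x + sigma * nodes)) / np.sqrt(2.0 * np.pi)

def regularized_objective(x, sigma):
    """log p(x) plus the second-order regularization term."""
    return log_p(x) + 0.5 * sigma**2 * d2_log_p(x)

x0, sigma = 0.7, 0.1
print("noisy objective      :", smoothed_objective(x0, sigma))
print("regularized objective:", regularized_objective(x0, sigma))
print("unregularized log p  :", log_p(x0))
```

For small `sigma` the first two numbers agree closely, while the gap to the unregularized value is the regularization term itself; the agreement degrades as `sigma` grows and higher-order terms stop being negligible.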

3.2 Autoregressive distribution smoothing models

Motivated by the previous 1-d example, instead of directly modeling $p_{\text{data}}(x)$, which can have a high Lipschitz constant, we propose to first train an autoregressive model $p_\theta(\tilde{x})$ on the smoothed version of the data distribution $q(\tilde{x})$. Although the smoothing process makes the distribution easier to learn, it also introduces bias. Thus, we need an extra step to debias the learned distribution by reverting the smoothing process.

If our goal is to generate approximate samples for $p_{\text{data}}(x)$, when $q(\tilde{x} \mid x) = \mathcal{N}(\tilde{x} \mid x, \sigma^2 I)$ and $\sigma$ is small, we can use the gradient of $\log p_\theta(\tilde{x})$ for denoising (Alain & Bengio, 2014). More specifically, given smoothed samples $\tilde{x}$ from $p_\theta(\tilde{x})$, we can “denoise” samples via:

$$\bar{x} = \tilde{x} + \sigma^2 \nabla_{\tilde{x}} \log p_\theta(\tilde{x}) \tag{2}$$

which only requires the knowledge of $p_\theta(\tilde{x})$ and the ability to sample from it. However, this approach does not provide a likelihood estimate, and Eq. (2) only works when $q(\tilde{x} \mid x)$ is Gaussian (though alternative denoising updates for other smoothing processes could be derived under the Empirical Bayes framework (Raphan & Simoncelli, 2011)). Although Eq. (2) could provide reasonable denoising results when the smoothing distribution has a small variance, $\bar{x}$ obtained in this way is only a point estimate of the posterior mean $\mathbb{E}[x \mid \tilde{x}]$ and does not capture the uncertainty of $p(x \mid \tilde{x})$.
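The single-step update of Eq. (2) can be illustrated on a case where everything is known in closed form. The sketch below assumes 1-d Gaussian data with standard deviation `data_std` and Gaussian noise with standard deviation `sigma`, so that the smoothed density is Gaussian and its score is available analytically; in practice the score would come from the learned model of the smoothed distribution. All names and numeric values are illustrative.

```python
import numpy as np

def score_smoothed(x_tilde, data_std, sigma):
    """Score (gradient of the log-density) of N(0, data_std^2 + sigma^2)."""
    return -x_tilde / (data_std**2 + sigma**2)

def single_step_denoise(x_tilde, score_fn, sigma):
    """Eq. (2): move each noisy sample along the score, scaled by sigma^2."""
    return x_tilde + sigma**2 * score_fn(x_tilde)

rng = np.random.default_rng(0)
data_std, sigma = 1.0, 0.3
x = data_std * rng.standard_normal(10_000)            # clean samples
x_tilde = x + sigma * rng.standard_normal(x.shape)    # smoothed samples

x_bar = single_step_denoise(
    x_tilde, lambda v: score_smoothed(v, data_std, sigma), sigma
)
print("MSE of noisy samples   :", np.mean((x_tilde - x) ** 2))
print("MSE of denoised samples:", np.mean((x_bar - x) ** 2))
```

In this Gaussian setting the update returns exactly the posterior mean of the clean sample given the noisy one, which shrinks each noisy sample toward zero. It is a deterministic point estimate: two identical noisy inputs always map to the same output, so the spread of the posterior is not represented.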

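To invert more general smoothing distributions (beyond Gaussians) and to obtain likelihood estimates, we introduce a second autoregressive model $p_\theta(x \mid \tilde{x})$. The parameterized joint density can then be computed as $p_\theta(x, \tilde{x}) = p_\theta(x \mid \tilde{x})\, p_\theta(\tilde{x})$. To obtain our approximation of $p_{\text{data}}(x)$, we need to integrate over $\tilde{x}$ on the joint distribution to obtain $p_\theta(x) = \int p_\theta(x, \tilde{x})\, d\tilde{x}$, which is in general intractable. However, we can easily obtain an evidence lower bound (ELBO):

$$\mathbb{E}_{p_{\text{data}}(x)}\left[\log p_\theta(x)\right] \geq \mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}\left[\log p_\theta(\tilde{x})\right] + \mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}\left[\log p_\theta(x \mid \tilde{x})\right] - \mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}\left[\log q(\tilde{x} \mid x)\right] \tag{3}$$

Note that when $q(\tilde{x} \mid x)$ is fixed, the entropy term $-\mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}[\log q(\tilde{x} \mid x)]$ is a constant with respect to the optimization parameters. Maximizing the ELBO on $p_{\text{data}}(x)$ is then equivalent to maximizing:

$$\mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}\left[\log p_\theta(\tilde{x})\right] + \mathbb{E}_{p_{\text{data}}(x)} \mathbb{E}_{q(\tilde{x} \mid x)}\left[\log p_\theta(x \mid \tilde{x})\right] \tag{4}$$

From equation 4, we can see that optimizing the two models $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$ separately via maximum likelihood estimation is equivalent to optimizing the ELBO.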
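The lower bound can be evaluated by simple Monte Carlo: draw a clean sample, perturb it, and add the log-density of the smoothed model to the log-density of the denoising model, minus the log-density of the noise. The sketch below assumes a fully Gaussian 1-d toy problem in which the true smoothed distribution and the true posterior are known, and compares an exact denoiser against a deliberately mis-specified one. All function names and constants are hypothetical.

```python
import numpy as np

def normal_logpdf(x, mean, var):
    """Log-density of a 1-d Gaussian with the given mean and variance."""
    return -0.5 * (np.log(2.0 * np.pi * var) + (x - mean) ** 2 / var)

SIGMA = 0.5                      # noise std of the smoothing distribution (illustrative)
TOTAL_VAR = 1.0 + SIGMA**2       # variance of the smoothed distribution for N(0, 1) data
POST_VAR = SIGMA**2 / TOTAL_VAR  # variance of the exact posterior over the clean sample

def log_p_smoothed(x_tilde):
    return normal_logpdf(x_tilde, 0.0, TOTAL_VAR)

def log_p_denoise_exact(x, x_tilde):
    return normal_logpdf(x, x_tilde / TOTAL_VAR, POST_VAR)

def log_p_denoise_crude(x, x_tilde):
    # Mis-specified denoiser: centers on the noisy sample and ignores the prior.
    return normal_logpdf(x, x_tilde, SIGMA**2)

def elbo(x, x_tilde, log_p_denoise):
    """Monte Carlo estimate of the right-hand side of the bound."""
    terms = (log_p_smoothed(x_tilde) + log_p_denoise(x, x_tilde)
             - normal_logpdf(x_tilde, x, SIGMA**2))
    return terms.mean()

rng = np.random.default_rng(0)
x = rng.standard_normal(5000)                        # data drawn from N(0, 1)
x_tilde = x + SIGMA * rng.standard_normal(x.shape)   # one noisy copy per sample

true_ll = normal_logpdf(x, 0.0, 1.0).mean()
elbo_exact = elbo(x, x_tilde, log_p_denoise_exact)
elbo_crude = elbo(x, x_tilde, log_p_denoise_crude)
print("average log-likelihood:", true_ll)
print("ELBO, exact denoiser  :", elbo_exact)
print("ELBO, crude denoiser  :", elbo_crude)
```

With the exact smoothed model and exact posterior the bound is tight, so the first two numbers coincide; a worse denoising model lowers the bound. Since the two model terms enter additively, each can be improved independently, which is the separation noted after equation 4.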

3.3 Tradeoff in modeling

In general, there is a trade-off between the difficulty of modeling $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$. To see this, let us consider two extreme cases for the variance of $q(\tilde{x} \mid x)$: zero variance and infinite variance. When $q(\tilde{x} \mid x)$ has zero variance, it is a distribution with all its probability mass at $x$, meaning that no noise is added to the data distribution. In this case, modeling the smoothed distribution would be equivalent to modeling $p_{\text{data}}(x)$, which can be hard as discussed above. The reverse smoothing process, however, would be easy since $p_\theta(x \mid \tilde{x})$ can simply be an identity map to perfectly invert the smoothing process. In the second case, when $q(\tilde{x} \mid x)$ has infinite variance, modeling $q(\tilde{x})$ would be easy because all the information about the original data is lost, and $q(\tilde{x})$ would be close to the smoothing distribution. Modeling $p(x \mid \tilde{x})$, on the other hand, is then equivalent to directly modeling $p_{\text{data}}(x)$, which can be challenging.

Thus, the key here is to appropriately choose a smoothing level so that both $q(\tilde{x})$ and $p(x \mid \tilde{x})$ can be approximated relatively well by existing autoregressive models. In general, the optimal variance might be hard to find. Although one can train $q(\tilde{x} \mid x)$ by jointly optimizing the ELBO, in practice, we find this approach often assigns a very large variance to $q(\tilde{x} \mid x)$, which trades off sample quality for better likelihoods on high dimensional image datasets. We find empirically that a pre-specified $q(\tilde{x} \mid x)$ chosen by heuristics (Saremi & Hyvarinen, 2019; Garreau et al., 2017) is able to generate much better samples than training $q(\tilde{x} \mid x)$ via the ELBO. In this paper, we will focus on the sample quality and leave the training of $q(\tilde{x} \mid x)$ for future work.

4 Experiments

In this section, we demonstrate empirically that by appropriately choosing the smoothness level of randomized smoothing, our approach is able to drastically improve the sample quality of existing autoregressive models on several synthetic and real-world datasets while retaining competitive likelihoods on synthetic datasets. We also present results on image inpainting in Appendix C.2.

4.1 Choosing the smoothing distribution

To help us build insights into the selection of the smoothing distribution $q(\tilde{x} \mid x)$, we first focus on a 1-d multi-modal distribution (see figure 4 leftmost panel). We use model-based methods to invert the smoothed distribution and provide analysis on “single-step denoising” in Appendix B.1. We start with the exploration of three different types of smoothing distributions: Gaussian, Laplace, and uniform. For each type of distribution, we perform a grid search to find the optimal variance. Since our approach requires the modeling of both $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$, we stack $\tilde{x}$ and $x$ together, and use a MADE model (Germain et al., 2015) with a mixture of two logistic components to parameterize $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$ at the same time. For the baseline model, we train a mixture of logistics model directly on $p_{\text{data}}(x)$. We compare the results in the middle two panels in figure 4.

We find that although the baseline with eight logistic components has the capacity to perfectly model the multi-modal data distribution, which has six modes, the baseline model still fails to do so. We believe this can be caused by optimization or initialization issues for modeling a distribution with a high Lipschitz constant. Our method, on the other hand, demonstrates more robustness by successfully modeling the different modes in the data distribution even when using only two mixture components for both $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$.

For all three types of smoothing distributions, we observe an inverted-U relationship between the variance of $q(\tilde{x} \mid x)$ and the ELBO values: the ELBO first increases as the variance increases and then decreases as the variance grows beyond a certain point. The results match our discussion on the trade-off between modeling $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$ in Section 3.3. We notice from the empirical results that Gaussian smoothing is able to obtain a better ELBO than the other two distributions. Thus, we will use a Gaussian smoothing distribution $q(\tilde{x} \mid x) = \mathcal{N}(\tilde{x} \mid x, \sigma^2 I)$ for the later experiments.
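Comparing noise families at a common variance requires converting that variance into each family's own parameter. The sketch below is a minimal illustration that assumes the grid search is indexed by the noise standard deviation; the function names and the candidate grid are hypothetical and not taken from the paper. It uses the standard facts that a Laplace distribution with scale $b$ has variance $2b^2$ and a uniform distribution on $[-a, a]$ has variance $a^2/3$.

```python
import numpy as np

def sample_noise(kind, std, size, rng):
    """Draw zero-mean noise of the requested family with standard deviation `std`."""
    if kind == "gaussian":
        return rng.normal(0.0, std, size)
    if kind == "laplace":
        return rng.laplace(0.0, std / np.sqrt(2.0), size)   # variance = 2 * scale^2
    if kind == "uniform":
        half_width = std * np.sqrt(3.0)                     # variance = half_width^2 / 3
        return rng.uniform(-half_width, half_width, size)
    raise ValueError(f"unknown noise family: {kind}")

def smooth(data, kind, std, rng):
    """Sample from the smoothed distribution by adding noise to clean data."""
    return data + sample_noise(kind, std, data.shape, rng)

rng = np.random.default_rng(0)
for kind in ("gaussian", "laplace", "uniform"):
    for std in (0.1, 0.3, 1.0):                             # illustrative grid
        noise = sample_noise(kind, std, 200_000, rng)
        print(f"{kind:8s} target std={std:.1f}  empirical std={noise.std():.3f}")
```

Matching the variance across families in this way keeps the comparison between Gaussian, Laplace, and uniform smoothing focused on the shape of the noise rather than its scale.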

4.2 2-D synthetic datasets

In this section, we consider two challenging 2-d multi-modal synthetic datasets (see figure 5). We focus on model-based denoising methods and present discussion on “single-step denoising” in Appendix B.2. We use a MADE model with a comparable number of total parameters for both the baseline and our approach. For the baseline, we train the MADE model directly on the data. For our randomized smoothing model, we choose a Gaussian smoothing distribution $q(\tilde{x} \mid x)$. We observe that with this randomized smoothing approach, our model is able to generate better samples than the baseline (according to a human observer) even when using fewer logistic components (see figure 5). We provide more analysis on the model’s performance in Appendix B.2. We also provide the negative log-likelihoods in Tab. 1.

4.3 Image experiments

In this section, we focus on three common image datasets, namely MNIST, CIFAR-10 (Krizhevsky et al., 2009) and CelebA (Liu et al., 2015). We select a Gaussian $q(\tilde{x} \mid x) = \mathcal{N}(\tilde{x} \mid x, \sigma^2 I)$ to be the smoothing distribution. We use PixelCNN++ (Salimans et al., 2017) as the model architecture for both $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$. We provide more details about settings in Appendix C.

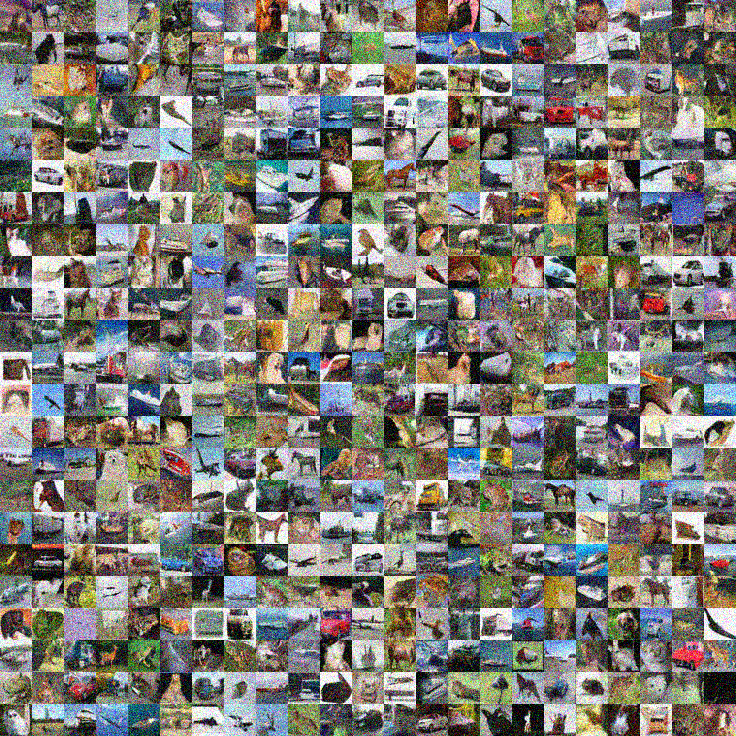

Image generation. For image datasets, we select the $\sigma$ of $q(\tilde{x} \mid x) = \mathcal{N}(\tilde{x} \mid x, \sigma^2 I)$ according to the analysis in Saremi & Hyvarinen (2019) (see Appendix C for more details). Since $q(\tilde{x} \mid x)$ is a Gaussian distribution, we can apply “single-step denoising” to reverse the smoothing process for samples drawn from $p_\theta(\tilde{x})$. In this case, the model $p_\theta(x \mid \tilde{x})$ is not required for sampling, since the gradient of $\log p_\theta(\tilde{x})$ can be used to denoise samples drawn from $p_\theta(\tilde{x})$ (see equation 2). We present smoothed samples from $p_\theta(\tilde{x})$, reversed smoothing samples processed by “single-step denoising”, and reversed smoothing samples processed by $p_\theta(x \mid \tilde{x})$ in figure 6. For comparison, we also present samples from a PixelCNN++ with a parameter count comparable to the combined total of $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$. We find that by using this randomized smoothing approach, we are able to drastically improve the sample quality of PixelCNN++ (see the rightmost panel in figure 6). We note that with only $p_\theta(\tilde{x})$, a PixelCNN++ optimized on the smoothed data, we already obtain more realistic samples compared to the original PixelCNN++ method. However, $p_\theta(x \mid \tilde{x})$ is needed to compute the likelihood lower bounds. We report the sample quality evaluated by Fréchet Inception Distance (FID (Heusel et al., 2017)), Kernel Inception Distance (KID (Bińkowski et al., 2018)), and Inception scores (Salimans et al., 2016) in Tab. 2. Although our method obtains better samples compared to the original PixelCNN++, our model has worse likelihoods as measured in bits per dimension (BPD). We believe this is because likelihood and sample quality are not always directly correlated, as discussed in Theis et al. (2015). We also tried training the variance of $q(\tilde{x} \mid x)$ by jointly optimizing the ELBO. Although training the variance can produce better likelihoods, it does not generate samples of comparable quality to our method (i.e. choosing the variance by heuristics). Thus, it is hard to conclusively determine the best way of choosing $q(\tilde{x} \mid x)$. We provide more image samples in Appendix C.4 and nearest neighbors analysis in Appendix C.5.

| Model | Inception | FID | KID | BPD |
| --- | --- | --- | --- | --- |
| PixelCNN (Oord et al., 2016b) | | | - | |
| PixelIQN (Ostrovski et al., 2018) | | | - | - |
| PixelCNN++ (Salimans et al., 2017) | | | 0.046 | |
| EBM (Du & Mordatch, 2019) | | | - | - |
| i-ResNet (Behrmann et al., 2019) | - | 65.01 | - | 3.45 |
| MADE (Germain et al., 2015) | - | - | - | 5.67 |
| Glow (Kingma & Dhariwal, 2018) | - | 46.90 | - | 3.35 |
| Single-step (Ours) | | 57.53 | 0.052 | - |
| Two-step (Ours) | | | 0.022 | |

5 Additional experiments on normalizing flows

In this section, we demonstrate empirically on 2-d synthetic datasets that randomized smoothing techniques can also be applied to improve the sample quality of normalizing flow models (Rezende & Mohamed, 2015). We focus on RealNVP (Dinh et al., 2016). We compare a RealNVP model trained with randomized smoothing, where we use $p_\theta(x \mid \tilde{x})$ (also a RealNVP) to revert the smoothing process, with a RealNVP trained with the original method but with a comparable number of parameters. We observe that smoothing is able to improve sample quality on the datasets we consider (see figure 7) while also obtaining competitive likelihoods. On the checkerboard dataset, our method has a negative log-likelihood of 3.64 while the original RealNVP has 3.72; on the Olympics dataset, our method has a negative log-likelihood of 1.32 while the original RealNVP has 1.80. This example demonstrates that randomized smoothing techniques can also be applied to normalizing flow models.

6 Related Work

Our approach shares some similarities with denoising autoencoders (DAE, Vincent et al. (2008)), which recover a clean observation from a corrupted one. However, unlike DAEs, which have a trainable encoder and a fixed prior distribution, our approach fixes the encoder and models the prior using an autoregressive model. Generative stochastic networks (GSN, Bengio et al. (2014)) use DAEs to train a Markov chain whose equilibrium distribution matches the data distribution. However, a GSN needs to start the chain from a sample that is very close to the training distribution. Denoising diffusion models (Sohl-Dickstein et al., 2015; Ho et al., 2020) and NCSN (Song & Ermon, 2019; 2020) address this issue of GSNs by considering a sequence of distributions corresponding to data corrupted with various noise levels. By setting multiple noise levels that are close to each other, the sample from the previous level can serve as a proper initialization for the sampling process at the next level. This way, the model can start from a distribution that is easy to model and gradually move to the desired distribution. However, due to the large number of noise levels, such approaches require many steps for the chain to converge to the right data distribution.

In this paper, we instead propose to use only one level of smoothing by modeling each step with a powerful autoregressive model instead of deterministic autoencoders. Motivated by the success of “randomized smoothing” techniques in adversarial defense (Cohen et al., 2019), we perform randomized smoothing directly on the data distribution. Unlike denoising score matching (Vincent, 2011), a technique closely related to denoising diffusion models and NCSN, which requires the perturbed noise to be a Gaussian distribution, we are able to work with different noise distributions.

Our smoothing method is also related to “dequantization” approaches that are common in normalizing flow models, where the discrete data distribution is converted to a continuous one by adding continuous noise (Uria et al., 2013; Ho et al., 2019). However, the added noise for “dequantization” in flows is often indistinguishable to human eyes, and the reverse “dequantization” process is often ignored. In contrast, we consider noise scales that are significantly larger, and thus a denoising process is required.

Our method is also related to “quantization” approaches which reduce the number of “significant” bits that are modeled by a generative model (Kingma & Dhariwal, 2018; Menick & Kalchbrenner, 2018). For instance, Glow (Kingma & Dhariwal, 2018) only models the 5 most significant bits of an image, which improves the visual quality of samples but decreases color fidelity. SPN (Menick & Kalchbrenner, 2018) introduces another network to predict the remaining bits conditioned on the 3 most significant bits already modeled. Modeling the most significant bits can be understood as capturing a data distribution perturbed by bit-wise correlated noise, similar to modeling smoothed data in our method. Modeling the remaining bits conditioned on the most significant ones in SPN is then similar to denoising. However, unlike these quantization approaches, which process an image at the level of “significant” bits, we apply continuous, data-independent Gaussian noise to the entire image, with a different motivation: to smooth the data density function.

7 Discussion

In this paper, we propose to incorporate randomized smoothing techniques into autoregressive modeling. By choosing the smoothness level appropriately, this seemingly simple approach is able to drastically improve the sample quality of existing autoregressive models on several synthetic and real-world datasets while retaining reasonable likelihoods. Our work provides insights into how recent adversarial defense techniques can be leveraged to build more robust generative models. Since we apply the randomized smoothing technique directly to the target data distribution rather than to the model, we believe our approach is also applicable to other generative models such as variational autoencoders (VAEs) and generative adversarial networks (GANs).

Acknowledgements

The authors would like to thank Kristy Choi for reviewing the draft of the paper. This research was supported by NSF (#1651565, #1522054, #1733686), ONR (N00014-19-1-2145), AFOSR (FA9550-19-1-0024), ARO, and Amazon AWS.

References

- Alain & Bengio (2014) Guillaume Alain and Yoshua Bengio. What regularized auto-encoders learn from the data-generating distribution. The Journal of Machine Learning Research, 15(1):3563–3593, 2014.

- Behrmann et al. (2019) Jens Behrmann, Will Grathwohl, Ricky TQ Chen, David Duvenaud, and Jörn-Henrik Jacobsen. Invertible residual networks. In International Conference on Machine Learning, pp. 573–582, 2019.

- Belkin & Niyogi (2003) Mikhail Belkin and Partha Niyogi. Laplacian eigenmaps for dimensionality reduction and data representation. Neural computation, 15(6):1373–1396, 2003.

- Bengio et al. (2014) Yoshua Bengio, Eric Laufer, Guillaume Alain, and Jason Yosinski. Deep generative stochastic networks trainable by backprop. In International Conference on Machine Learning, pp. 226–234, 2014.

- Biggio et al. (2013) Battista Biggio, Igino Corona, Davide Maiorca, Blaine Nelson, Nedim Šrndić, Pavel Laskov, Giorgio Giacinto, and Fabio Roli. Evasion attacks against machine learning at test time. In Joint European conference on machine learning and knowledge discovery in databases, pp. 387–402. Springer, 2013.

- Bińkowski et al. (2018) Mikołaj Bińkowski, Dougal J Sutherland, Michael Arbel, and Arthur Gretton. Demystifying mmd gans. arXiv preprint arXiv:1801.01401, 2018.

- Bishop (1995) Chris M Bishop. Training with noise is equivalent to tikhonov regularization. Neural computation, 7(1):108–116, 1995.

- Cohen et al. (2019) Jeremy M Cohen, Elan Rosenfeld, and J Zico Kolter. Certified adversarial robustness via randomized smoothing. arXiv preprint arXiv:1902.02918, 2019.

- Cornish et al. (2019) Rob Cornish, Anthony L Caterini, George Deligiannidis, and Arnaud Doucet. Relaxing bijectivity constraints with continuously indexed normalising flows. arXiv preprint arXiv:1909.13833, 2019.

- Dinh et al. (2016) Laurent Dinh, Jascha Sohl-Dickstein, and Samy Bengio. Density estimation using real nvp. arXiv preprint arXiv:1605.08803, 2016.

- Du & Mordatch (2019) Yilun Du and Igor Mordatch. Implicit generation and generalization in energy-based models. arXiv preprint arXiv:1903.08689, 2019.

- Garreau et al. (2017) Damien Garreau, Wittawat Jitkrittum, and Motonobu Kanagawa. Large sample analysis of the median heuristic. arXiv preprint arXiv:1707.07269, 2017.

- Germain et al. (2015) Mathieu Germain, Karol Gregor, Iain Murray, and Hugo Larochelle. Made: Masked autoencoder for distribution estimation. In International Conference on Machine Learning, pp. 881–889, 2015.

- Heusel et al. (2017) Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. Gans trained by a two time-scale update rule converge to a local nash equilibrium. arXiv preprint arXiv:1706.08500, 2017.

- Ho et al. (2019) Jonathan Ho, Xi Chen, Aravind Srinivas, Yan Duan, and Pieter Abbeel. Flow++: Improving flow-based generative models with variational dequantization and architecture design. arXiv preprint arXiv:1902.00275, 2019.

- Ho et al. (2020) Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. arXiv preprint arXiv:2006.11239, 2020.

- Hyvärinen (2005) Aapo Hyvärinen. Estimation of non-normalized statistical models by score matching. Journal of Machine Learning Research, 6(Apr):695–709, 2005.

- Hyvärinen (2008) Aapo Hyvärinen. Optimal approximation of signal priors. Neural Computation, 20(12):3087–3110, 2008.

- Kääriäinen (2006) Matti Kääriäinen. Lower bounds for reductions. In Atomic Learning Workshop, 2006.

- Kingma & Dhariwal (2018) Durk P Kingma and Prafulla Dhariwal. Glow: Generative flow with invertible 1x1 convolutions. In Advances in neural information processing systems, pp. 10215–10224, 2018.

- Krizhevsky et al. (2009) Alex Krizhevsky et al. Learning multiple layers of features from tiny images. 2009.

- Lamb et al. (2016) Alex M Lamb, Anirudh Goyal Alias Parth Goyal, Ying Zhang, Saizheng Zhang, Aaron C Courville, and Yoshua Bengio. Professor forcing: A new algorithm for training recurrent networks. In Advances in Neural Information Processing Systems, pp. 4601–4609, 2016.

- Larochelle & Murray (2011) Hugo Larochelle and Iain Murray. The neural autoregressive distribution estimator. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, pp. 29–37. JMLR Workshop and Conference Proceedings, 2011.

- Liu et al. (2015) Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, pp. 3730–3738, 2015.

- Menick & Kalchbrenner (2018) Jacob Menick and Nal Kalchbrenner. Generating high fidelity images with subscale pixel networks and multidimensional upscaling. arXiv preprint arXiv:1812.01608, 2018.

- Minnen et al. (2018) David Minnen, Johannes Ballé, and George D Toderici. Joint autoregressive and hierarchical priors for learned image compression. In Advances in Neural Information Processing Systems, pp. 10771–10780, 2018.

- Narayanan & Mitter (2010) Hariharan Narayanan and Sanjoy Mitter. Sample complexity of testing the manifold hypothesis. In Advances in Neural Information Processing Systems, pp. 1786–1794, 2010.

- Oord et al. (2016a) Aaron van den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. WaveNet: A generative model for raw audio. arXiv preprint arXiv:1609.03499, 2016a.

- Oord et al. (2016b) Aaron van den Oord, Nal Kalchbrenner, and Koray Kavukcuoglu. Pixel recurrent neural networks. arXiv preprint arXiv:1601.06759, 2016b.

- Ostrovski et al. (2018) Georg Ostrovski, Will Dabney, and Rémi Munos. Autoregressive quantile networks for generative modeling. arXiv preprint arXiv:1806.05575, 2018.

- Raphan & Simoncelli (2011) Martin Raphan and Eero P Simoncelli. Least squares estimation without priors or supervision. Neural Computation, 23(2):374–420, 2011.

- Rezende & Mohamed (2015) Danilo Jimenez Rezende and Shakir Mohamed. Variational inference with normalizing flows. arXiv preprint arXiv:1505.05770, 2015.

- Roweis & Saul (2000) Sam T Roweis and Lawrence K Saul. Nonlinear dimensionality reduction by locally linear embedding. Science, 290(5500):2323–2326, 2000.

- Salimans et al. (2016) Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. Improved techniques for training GANs. arXiv preprint arXiv:1606.03498, 2016.

- Salimans et al. (2017) Tim Salimans, Andrej Karpathy, Xi Chen, and Diederik P Kingma. PixelCNN++: Improving the PixelCNN with discretized logistic mixture likelihood and other modifications. arXiv preprint arXiv:1701.05517, 2017.

- Saremi & Hyvarinen (2019) Saeed Saremi and Aapo Hyvarinen. Neural empirical bayes. Journal of Machine Learning Research, 20:1–23, 2019.

- Sietsma & Dow (1991) Jocelyn Sietsma and Robert JF Dow. Creating artificial neural networks that generalize. Neural Networks, 4(1):67–79, 1991.

- Sohl-Dickstein et al. (2015) Jascha Sohl-Dickstein, Eric A Weiss, Niru Maheswaranathan, and Surya Ganguli. Deep unsupervised learning using nonequilibrium thermodynamics. arXiv preprint arXiv:1503.03585, 2015.

- Song & Ermon (2019) Yang Song and Stefano Ermon. Generative modeling by estimating gradients of the data distribution. In Advances in Neural Information Processing Systems, pp. 11918–11930, 2019.

- Song & Ermon (2020) Yang Song and Stefano Ermon. Improved techniques for training score-based generative models. arXiv preprint arXiv:2006.09011, 2020.

- Szegedy et al. (2013) Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199, 2013.

- Tenenbaum et al. (2000) Joshua B Tenenbaum, Vin De Silva, and John C Langford. A global geometric framework for nonlinear dimensionality reduction. Science, 290(5500):2319–2323, 2000.

- Theis et al. (2015) Lucas Theis, Aäron van den Oord, and Matthias Bethge. A note on the evaluation of generative models. arXiv preprint arXiv:1511.01844, 2015.

- Uria et al. (2013) Benigno Uria, Iain Murray, and Hugo Larochelle. RNADE: The real-valued neural autoregressive density-estimator. In Advances in Neural Information Processing Systems, pp. 2175–2183, 2013.

- Vincent (2011) Pascal Vincent. A connection between score matching and denoising autoencoders. Neural Computation, 23(7):1661–1674, 2011.

- Vincent et al. (2008) Pascal Vincent, Hugo Larochelle, Yoshua Bengio, and Pierre-Antoine Manzagol. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning, pp. 1096–1103, 2008.

- Vinyals et al. (2019) Oriol Vinyals, Igor Babuschkin, Wojciech M Czarnecki, Michaël Mathieu, Andrew Dudzik, Junyoung Chung, David H Choi, Richard Powell, Timo Ewalds, Petko Georgiev, et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature, 575(7782):350–354, 2019.

Appendix A Proofs

See Theorem 1.

Proof.

First, we have that:

| (5) |

and if we assume symmetry, i.e. $q(\tilde{x} \mid x) = q(x \mid \tilde{x})$, then by integration by parts we have:

| (6) |

Therefore,

| (7) | ||||

| (8) |

which proves the result. ∎

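Proposition (Formal). Given a $D$-dimensional data distribution $p_{\text{data}}(x)$ and model distribution $p_\theta(x)$, assume that the smoothing distribution $q(\tilde{x} \mid x)$ satisfies:

- $\log p_\theta$ is infinitely differentiable on the support of $p_\theta(x)$
- $q(\tilde{x} \mid x)$ is symmetric (i.e. $q(\tilde{x} \mid x) = q(x \mid \tilde{x})$)
- $q(\tilde{x} \mid x)$ is stationary (i.e. translation invariant)
- $q(\tilde{x} \mid x)$ is bounded and fully supported on $\mathbb{R}^D$
- $q(\tilde{x} \mid x)$ is element-wise independent
- $\mathbb{E}_{q(\tilde{x} \mid x)}[\|\tilde{x} - x\|^2]$ is bounded, and $\mathbb{E}_{q(\tilde{x} \mid x)}[(\tilde{x}_i - x_i)^2] = \eta$ at each dimension $i = 1, \dots, D$.

Denote $q(\tilde{x}) = \int q(\tilde{x} \mid x)\, p_{\text{data}}(x)\, dx$, then

$$\mathbb{E}_{q(\tilde{x})}[\log p_\theta(\tilde{x})] = \mathbb{E}_{p_{\text{data}}(x)}\Big[\log p_\theta(x) + \frac{\eta}{2} \sum_{i=1}^{D} \frac{\partial^2 \log p_\theta(x)}{\partial x_i^2}\Big] + o(\eta),$$

where $o(\eta)$ is a function of $\eta$ such that $\lim_{\eta \to 0} o(\eta)/\eta = 0$. Thus when $\eta \to 0$, we have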

| (9) |

Proof.

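To see this, we first note that the new training objective for the smoothed data distribution is $\mathbb{E}_{q(\tilde{x})}[\log p_\theta(\tilde{x})]$. Let $\epsilon = \tilde{x} - x$. Because of our assumptions, the PDF $q(\tilde{x} \mid x)$ can be reparameterized as a density over $\epsilon$ that is bounded and fully supported on $\mathbb{R}^D$, and that is element-wise independent with $\mathbb{E}[\epsilon_i^2] = \eta$ at each dimension $i$ ($i = 1, \dots, D$). Then we have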

| (10) |

Using Taylor expansion, we have:

Since $\epsilon$ is independent of $x$ and

$$\mathbb{E}[\epsilon_i \epsilon_j] = \eta\, \delta_{ij},$$

where $\delta_{ij}$ is the Kronecker delta function, the right hand side of Equation 10 becomes

| (11) | ||||

| (12) |

When

| (13) |

we have

| (14) |

∎
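As a sanity check on the second-order Taylor argument, consider a 1-d example that is not part of the original analysis: a Gaussian model $p_\theta(x) = \mathcal{N}(x; 0, s^2)$ and zero-mean noise $\epsilon$, independent of $x$, with variance $\eta$. The symbols $s$, $\epsilon$ and $\eta$ are assumptions of this sketch. Because $\log p_\theta$ is quadratic here, the expansion terminates after the second-order term and the correction is exact:

```latex
\begin{align*}
\log p_\theta(x) &= -\frac{x^2}{2s^2} - \tfrac{1}{2}\log(2\pi s^2),
\qquad
\frac{\partial^2 \log p_\theta(x)}{\partial x^2} = -\frac{1}{s^2}, \\
\mathbb{E}_{\epsilon}\big[\log p_\theta(x + \epsilon)\big]
&= -\frac{x^2 + 2x\,\mathbb{E}[\epsilon] + \mathbb{E}[\epsilon^2]}{2s^2}
   - \tfrac{1}{2}\log(2\pi s^2) \\
&= \log p_\theta(x) - \frac{\eta}{2s^2}
 = \log p_\theta(x) + \frac{\eta}{2}\,
   \frac{\partial^2 \log p_\theta(x)}{\partial x^2}.
\end{align*}
```

Averaging over $x$ drawn from the data distribution then shows that, in this special case, smoothing with variance $\eta$ shifts the expected log-likelihood by exactly $\eta/2$ times the expected second derivative of $\log p_\theta$, with no remainder term.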

Appendix B Denoising experiments

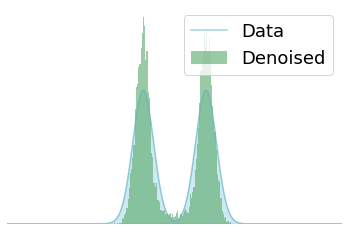

B.1 Analysis on 1-d denoising

To provide more insight into denoising, we first study “single-step denoising” (see equation 2) on a 1-d dataset. We choose the data distribution $p_{\text{data}}(x)$ to be a mixture of two Gaussians and the smoothing distribution $q(\tilde{x} \mid x)$ to be Gaussian (see figure 8(a)). Since the convolution of two Gaussian distributions is also a Gaussian distribution, the smoothed distribution $q(\tilde{x})$ is again a mixture of two Gaussians, and the ground truth of $\nabla_{\tilde{x}} \log q(\tilde{x})$ can be calculated in closed form. Thus, given the smoothed data $\tilde{x}$, we can plug the ground-truth $\nabla_{\tilde{x}} \log q(\tilde{x})$ into equation 2 and obtain the denoised $\bar{x}$ using “single-step denoising”. We visualize the denoising results in figure 8(b). We find that the low-density region between the two modes of $p_{\text{data}}(x)$ is not modeled properly in figure 8(b). However, this is expected, since “single-step denoising” uses the posterior mean $\mathbb{E}[x \mid \tilde{x}]$ as the substitute for the denoised result. When the smoothing distribution has a large variance (as in figure 8(a), where the smoothed data has merged into a unimodal distribution), datapoints in the middle low-density region of $p_{\text{data}}(x)$, such as the one shown in figure 8(a), can have high density under the smoothed distribution. Since such points can come from either mode of $p_{\text{data}}(x)$ with high probability before the smoothing process (see figure 11(a)), their denoised versions can still be located in the middle low-density region (see figure 8(b)). Since a large proportion of the smoothed data is located in the middle low-density region of $p_{\text{data}}(x)$, we would expect a certain proportion of the density to remain in the low-density region after “single-step denoising”, just as shown in figure 8(b). However, when the smoothing distribution has a smaller variance, “single-step denoising” can achieve much better denoising results (see figure 9, which uses a smaller smoothing variance). Although denoising can be easier when the smoothing distribution has a smaller variance, modeling the smoothed distribution could be harder, as we discussed before.
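The closed-form computation described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the experimental code: the mixture means, weights and standard deviations are hypothetical placeholders, the function names are invented for this sketch, and the update is assumed to take the standard Gaussian-noise form $\bar{x} = \tilde{x} + \sigma^2 \nabla_{\tilde{x}} \log q(\tilde{x})$.

```python
import numpy as np

# Hypothetical parameters, chosen only for illustration.
MEANS = np.array([-0.3, 0.3])   # modes of the 1-d data distribution
WEIGHTS = np.array([0.5, 0.5])  # mixture weights
DATA_STD = 0.1                  # std of each data component


def smoothed_score(x_tilde, noise_std):
    """Closed-form d/dx log q(x) for the Gaussian-smoothed mixture."""
    x_tilde = np.asarray(x_tilde, dtype=float)[..., None]
    # Convolving two Gaussians adds their variances.
    var = DATA_STD**2 + noise_std**2
    # Unnormalised log-responsibilities; shared constants cancel.
    log_w = np.log(WEIGHTS) - 0.5 * (x_tilde - MEANS) ** 2 / var
    resp = np.exp(log_w - log_w.max(axis=-1, keepdims=True))
    resp /= resp.sum(axis=-1, keepdims=True)
    # Score of a mixture = responsibility-weighted component scores.
    return (resp * (MEANS - x_tilde) / var).sum(axis=-1)


def single_step_denoise(x_tilde, noise_std):
    """Assumed update: x_bar = x_tilde + sigma^2 * score(x_tilde)."""
    x_tilde = np.asarray(x_tilde, dtype=float)
    return x_tilde + noise_std**2 * smoothed_score(x_tilde, noise_std)


if __name__ == "__main__":
    grid = np.linspace(-0.6, 0.6, 7)
    for sigma in (0.3, 0.05):  # large vs. small smoothing std
        print(sigma, np.round(single_step_denoise(grid, sigma), 3))
```

With these symmetric placeholder parameters, a smoothed point at $\tilde{x} = 0$ has zero score and is therefore left exactly where it is, in the low-density region between the two modes, which is the behaviour the paragraph above attributes to “single-step denoising”.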

In general, the right denoising results should be samples from the true posterior of $x$ given $\tilde{x}$, which is why sampling from a learned model $p_\theta(x \mid \tilde{x})$ is preferable to using the posterior mean $\mathbb{E}[x \mid \tilde{x}]$ as a denoising substitute (i.e. “single-step denoising”). The capacity of the denoising model $p_\theta(x \mid \tilde{x})$ also matters for the denoising results. Let us again consider the datapoint shown in figure 8(a). If the inverse smoothing model $p_\theta(x \mid \tilde{x})$ is a unimodal logistic distribution then, due to the mode-covering property of maximum likelihood estimation, the best the model can do given this smoothed observation is to center its only mode between the two modes of the true posterior (see figure 10(c)). Thus, like this datapoint, the smoothed datapoints in the low-density region between the two modes of $p_{\text{data}}(x)$ are still likely to remain between the two modes after denoising (see figure 10(b)). To solve this issue, we can increase the capacity of $p_\theta(x \mid \tilde{x})$ by making it a mixture of two logistics. In this case, the true posterior can be captured much better (see figure 11(c) and figure 11(a)). After the inverse smoothing process, most smoothed datapoints in the low-density region, like this datapoint, are mapped to one of the two high-density modes (see figure 11(b)), resulting in much better denoising.

B.2 Analysis on 2-d denoising

On the 2-d Olympics dataset in section 4.2, we find that the intersections between rings can be poorly modeled with the proposed smoothing approach when only a mixture of two logistics is used (see figure 12(e)). We believe this happens when the denoising model $p_\theta(x \mid \tilde{x})$ is not flexible enough to capture the true posterior of $x$ given $\tilde{x}$. More specifically, the ground-truth posterior at the intersections of the rings is highly complicated and can be hard to capture with our model, which has only two logistic mixture components for each dimension. If we increase the flexibility of $p_\theta(x \mid \tilde{x})$ by using three or four logistic mixture components (note that we still use fewer mixture components than the MADE baseline and a comparable number of parameters), the intersections of the rings are modeled better (see figure 12).

We also provide “single-step denoising” results for the experiments in section 4.2 (see figure 13), where we use the same smoothing distribution and the same MADE model with three mixture components as in section 4.2. The “single-step denoising” results are not very good, which is again expected. As discussed in section B.1, when the smoothing distribution has a relatively large variance, the posterior mean $\mathbb{E}[x \mid \tilde{x}]$ is not a good approximation for the denoised result; we want the denoised sample to come from the true posterior of $x$ given $\tilde{x}$, in which case introducing a denoising model could be a better option. Although we could select $q(\tilde{x} \mid x)$ to have a smaller variance so that “single-step denoising” works reasonably well, modeling $q(\tilde{x})$ in this case could be more challenging.

Appendix C Image experiments

C.1 Settings

For the image experiments, we first rescale the images and then perturb them with the smoothing distribution $q(\tilde{x} \mid x)$. We use one noise level for MNIST and another for both CIFAR-10 and CelebA. The selection of the noise level is mainly based on the analysis in Saremi & Hyvarinen (2019). More specifically, given an image dataset, we consider the median value of the Euclidean distance between two data points in the dataset, and then divide it by a factor that depends on $D$, where $D$ is the dimension of the data. This provides us with a way of selecting the variance of $q(\tilde{x} \mid x)$ when $q(\tilde{x} \mid x)$ is a Gaussian distribution. We find that this choice of variance generates reasonably good samples in practice. We train all the models with the Adam optimizer. To model $p_\theta(x \mid \tilde{x})$, we stack $\tilde{x}$ and $x$ together along the second dimension, which ensures that $\tilde{x}$ comes before $x$ in the pixel ordering. For instance, this stacking doubles the size of both MNIST and CIFAR-10 images along that dimension. Since PixelCNN++ consists of convolutional layers, we can feed the stacked input directly into the default architecture without modifying it. As the later pixels of the input depend only on the previous pixels in an autoregressive model and $\tilde{x}$ comes before $x$, we can parameterize $p_\theta(x \mid \tilde{x})$ by computing the likelihoods only on $x$ using the outputs of the autoregressive model.
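The stacking described above can be illustrated with a short NumPy sketch. It assumes a channels-first `(C, H, W)` layout and a raster-scan (row by row) pixel ordering, so that the "second dimension" is the image height. The function names and the noise level used in the usage example are hypothetical and do not come from the original implementation.

```python
import numpy as np


def stack_smoothed_and_clean(x_tilde, x):
    """Stack x_tilde above x along the height axis of (C, H, W) arrays.

    Under a raster-scan ordering, every pixel of x_tilde then precedes
    every pixel of x, so x is modeled conditioned on x_tilde.
    """
    if x_tilde.shape != x.shape:
        raise ValueError("x_tilde and x must have the same shape")
    return np.concatenate([x_tilde, x], axis=1)


def clean_half_log_likelihood(per_pixel_log_prob, height):
    """Sum per-pixel log-probabilities over the rows that hold x only."""
    return per_pixel_log_prob[:, height:, :].sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.uniform(-1.0, 1.0, size=(1, 28, 28))       # MNIST-sized image
    x_tilde = x + 0.5 * rng.standard_normal(x.shape)   # hypothetical noise level
    stacked = stack_smoothed_and_clean(x_tilde, x)
    print(stacked.shape)  # (1, 56, 28) under the assumed layout
```

In this sketch, `per_pixel_log_prob` stands in for whatever per-pixel log-likelihoods the autoregressive model outputs on the stacked input; only the bottom half, which holds $x$, contributes to the conditional likelihood.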

C.2 Image Inpainting

Since both $p_\theta(\tilde{x})$ and $p_\theta(x \mid \tilde{x})$ are parameterized by an autoregressive model, we can also perform image inpainting using our method. We present the inpainting results on CIFAR-10 in figure 14(a) and on CelebA in figure 14(b), where the bottom half of the input image is inpainted.

C.3 Image Denoising

We notice that the reverse smoothing process can also be understood as a denoising process. Besides the “single-step denoising” approach shown above, we can also apply $p_\theta(x \mid \tilde{x})$ to denoise images. To visualize the denoising performance, we sample $x$ from the test set and perturb $x$ with $q(\tilde{x} \mid x)$ to obtain a noisy sample $\tilde{x}$. We feed $\tilde{x}$ into $p_\theta(x \mid \tilde{x})$ and draw samples from the model. We visualize the results in figure 15. As we can see, the model exhibits reasonable denoising results, which shows that the autoregressive model is capable of learning the data distribution when conditioned on the smoothed data.

C.4 More samples

C.5 Nearest neighbors

C.6 Ablation studies

In this section, we show that gradient-based “single-step denoising” does not improve sample quality without randomized smoothing. To see this, we draw samples from a PixelCNN++ trained directly on $p_{\text{data}}(x)$ (i.e. without smoothing). We then perform the “single-step denoising” update defined as

| (15) |

We explore various values for the step size of this update, and report the results in figure 26. This shows that “single-step denoising” alone (without randomized smoothing) does not improve the sample quality of PixelCNN++.