Improve GAN-based Neural Vocoder using Pointwise Relativistic Least Square GAN

Abstract

GAN-based neural vocoders, such as Parallel WaveGAN and MelGAN have attracted great interest due to their lightweight and parallel structures, enabling them to generate high fidelity waveform in a real-time manner. In this paper, inspired by Relativistic GAN[1], we introduce a novel variant of the LSGAN framework under the context of waveform synthesis, named Pointwise Relativistic LSGAN (PRLSGAN). In this approach, we take the truism score distribution into consideration and combine the original MSE loss with the proposed pointwise relative discrepancy loss to increase the difficulty of the generator to fool the discriminator, leading to improved generation quality. Moreover, PRLSGAN is a general-purposed framework that can be combined with any GAN-based neural vocoder to enhance its generation quality. Experiments have shown a consistent performance boost based on Parallel WaveGAN and MelGAN, demonstrating the effectiveness and strong generalization ability of our proposed PRLSGAN neural vocoders.

Index Terms: generative adversarial networks, speech synthesis, neural vocoder, relativistic GAN

1 Introduction

In recent years, deep neural network based vocoder has been developing rapidly. Compared with conventional vocoders([2], [3]), neural vocoders can significantly enhance the speech synthesis quality of the current text-to-speech (TTS) system. Most early studies of neural vocoders are based on autoregressive(AR) models, such as Wavenet[4], WaveRNN[5], SampleRNN[6], FeatherWave[7] etc. In such models, samples are generated sequentially while RNNs are utilized in modeling the long-term relationship that existed in the natural waveform. Although they produce very high-quality waves, the generation speed is unfavorable because of the sequential structure, limiting their practical usage in real-time TTS systems.

To address the efficiency issue, many approaches are proposed to accelerate the inference speed of AR models, Yu et al.[8] modify the original WaveRNN[5] and divide the full-band audio signal into four subbands and predict the four subband parameters simultaneously, which leads to reduced prediction duration and model parameters. [9] proposes another lightweight vocoder based on the WaveRNN framework. It can synthesize high-quality waveform using linear prediction coefficients (LPC).

Recently, non-AR models have drawn increasing attention from researchers. Those models are able to generate a waveform in a highly parallelizable manner to take full advantage of modern hardware with inference speed. Among all the methods, knowledge distill technique plays a major role [10],[11]. Specifically, the ’knowledge’ of one AR teacher model is transferred to a small student model based on the inverse autoregressive flows combined with an extra perceptual loss. Although the resulting model can synthesize high-quality waveform with a reasonable speed, it requires a well-trained teacher model as well as complex training strategies. The gigantic size of their model also restricts it from achieving real-time computation. Another notable endeavor in this field is made by flow-based models including waveglow[12], flowavenet[13], Melflow[14], waveflow[15] and FBWAVE[16] etc. They apply a single log-likelihood loss to train specially designed invertible models. The inference speed is faster than AR models and can be deployed even on mobile CPU after extra efforts in engineering[16]. However, its unstable training process and unsatisfying synthesis quality prevent it from being deployed in industrial applications.

Some recent works have utilized generative adversarial network(GAN) to train the vocoders. Concretely, the training process of such a model can be summarized as an adversarial game, the generator tries to synthesize the waveform to fool the discriminator, whereas the discriminator distinguishes the difference between the synthesized wave and the ground truth wave. As it reaches a Nash equalization point, the generator is expected to synthesize a high-quality waveform. GAN-based methods([17],[18],[19],[20],[21],[22],[23]) are promising given some models can even synthesize waves in real-time on a single GPU and achieve a higher MOS at the same time, suited for actual industrial deployment. In particular, MelGAN[17] and ParallelWaveGAN[18](short for PWGAN) are two fundamental GAN-based neural vocoder architectures. Parallel WaveGAN and MelGAN both use auxiliary loss, i.e., multi-resolution STFT loss and feature matching loss, respectively, so they converge significantly faster than the original MelGAN[17]. MB-MelGAN[19] adopts the same idea as Multi-band WaveRNN and synthesizes the subband signals to accelerate the inference speed. VocGAN[20] modifies the original MelGAN model by appending a hierarchical conditional discriminator to the multi-scale waveform generated in the intermediate layers as well as deepen the receptive field. TFGAN [21] considers the time-domain loss for generator and discriminator, which encourages the generator and discriminator to learn waveform both in time and frequency domain aiming at eliminating the artifacts in the high frequency, such as metallic sense and reverb in hearing sense. [22] proposes a two-way discriminator for voiced and unvoiced parts of the synthesized waveform respectively.

Despite the mentioned advancements, the quality of synthesized speech is far from satisfactory. The synthesized audio is prone to have artifacts in the high-frequency domain, while the frequent occurrence of phase mismatch is another challenge hard to neglect. All previous techniques make modifications based on LSGAN[24], where the loss of the discriminator is computed using MSE, which ignores the actual score distribution of each wave segment. As illustrated in figure 1, there exist many truism score distributions that lead to the MSE equilibrium state, most of which will obtain large scores in some positions, leading to high quality, while those with small score will lead to a local audible artifact, affecting the final MOS score. The resulting adversarial gradients from the discriminator may be strongly dominated by the score indicating the global equilibrium state, thus paying less attention to those local possible artifacts. In this paper, inspired by RaGAN[1], we propose PRLSGAN, an enhanced GAN-based architecture for synthesizing waveform, which is supervised by score distribution instead of a single score, leading to the stricter discriminator and thus push the generator to synthesize higher quality wave. The key novelty of our approach is the combination of pointwise relative score discrepancy loss with the conventional MSE loss. The proposed PRLSGAN can be integrated with almost any existing GAN-based neural vocoders, further improving their synthesis quality. In our experiments, we have demonstrated that when combined MelGAN or PWGAN with the PRLSGAN, the resulting model consistently achieves better performance in objective scores such as PESQ, STOI, and subjective MOS score.

2 Proposed Method

| Vocoder | Total | Total | Length | Optimizer for G | Optimizer for D | Start |

|---|---|---|---|---|---|---|

| iterations | batchsize | training D | ||||

| Basic PWGAN | 500K | 16*4 | 20480 | Radam, lr=1e-4, | Radam, lr=1e-4, | 100K |

| betas=(0.9, 0.999), | betas=(0.9, 0.999), | |||||

| grad clip=10 | grad clip=1 | |||||

| Basic MelGAN | 220K | 64*4 | 20480 | Adam, lr=1e-3, | Adam, lr=1e-3, | 50K |

| betas=(0.9, 0.999), | betas=(0.9, 0.999), | |||||

| no grad clip | grad clip=1 |

2.1 Basic Methods

2.1.1 Parallel WaveGAN

Parallel WaveGAN (PWGAN) [18] can produce high-fidelity waveforms in real-time on a modern GPU. As a GAN-based model, PWGAN consists of a generator (G), a discriminator (D) and multi-resolution STFT auxiliary loss. The generator is a WaveNet-like architecture conditioned on auxiliary acoustic features (e.g., Mel-spectrogram) which transforms the standard Gaussian noise sequence into the high-fidelity waveform in parallel. In PWGAN, a least-squares GAN is adopted to minimize the adversarial () as follows:

| (1) |

where z represents the input noise. Moreover, the discriminator is trained to minimize the adversarial loss () formulated as:

| (2) |

where represents the raw waveform and represents the data distribution of the natural samples.

2.1.2 Multi-resolution STFT loss

It’s hard to build a robust PWGAN while trained with only adversarial losses. In PWGAN, a multi-resolution STFT loss () is adopted to improve the stability of the GAN training. Besides, it also can accelerate the convergence of the training process. STFT loss is the sum of spectral convergence loss () and log STFT magnitude (), which are defined as follows:

| (3) |

| (4) |

where represents the generated sample (i.e., G(z)), and and represent the Frobenius norm and the L1 norm, respectively; is the stft magnitudes, and N is the number of magnitude elements.

The multi-resolution STFT loss is the sum of multiple STFT losses with different parameters (i.e. FFT size, window length, and frame shift.), which is defined as follows:

| (5) |

where is the number of STFT loss. To balance the two loss terms, we added a hyperparameter . The final training loss of PWGAN generator () is formulated as:

| (6) |

2.1.3 MelGAN

Basic MelGAN adopts a stack of transposed convolutional blocks to upsample the Mel-spectrogram to match the length of a waveform. To enhance the naturalness, MelGAN uses multiple-scale discriminators that can handle audio at different levels. Basic MelGAN conducts adversarial training with objectives as:

| (7) |

| (8) |

where x denotes the natural samples, c denotes the acoustic features (e.g., Mel-spectrogram) and z denotes Gaussian noise vector.

In addition to the discriminator’s signal, the feature matching objective is also used to train the generator. This objective minimizes the L1 distance between the discriminator feature maps of real and synthesized audio. As suggested in [19], we replace feature matching with multi-resolution STFT loss. Therefore, the final generator loss for MelGAN can be formulated as:

| (9) |

| Method | MCD | FFE | PESQ(wb) | PESQ(nb) | MOS |

|---|---|---|---|---|---|

| Basic PWGAN | 3.417 | 0.040 | 3.181 | 3.505 | 4.0260.073 |

| +PRLSGAN | 3.365 | 0.038 | 3.238 | 3.597 | 4.1820.049 |

| Basic MelGAN | 3.334 | 0.039 | 3.278 | 3.603 | 4.176 0.045 |

| +PRLSGAN | 3.279 | 0.032 | 3.351 | 3.631 | 4.3610.039 |

| Ground True | 0.0 | 0.0 | 4.5 | 4.5 | 4.6870.046 |

2.2 Pointwise Relativistic Least Square GAN

The human sense of hearing can be abstracted as a variety of convolution filters in the discriminator in the GAN-based neural vocoders, which are used to judge the wave quality. Our sense of hearing is sensitive to any local audible artifacts when it is surrounded by those seemingly perfect wave segments. However, the least square loss used in the discriminator of LSGAN only considers the average truism scores, making it more likely to ignore the occurrence of local artifacts in its generated wave segments, as illustrated in figure 1. Note that the synthetic and real waveform in the discriminator input has the same sample length and same semantic meaning. Therefore, we argue that it is vital to consider the score distribution of each wave segment, forcing the generator to avoid the generation of possible local artifacts. In our proposed model, we combine the original MSE loss with the pointwise relativistic discrepancy loss as follows:

| (10) |

| (11) |

where is experimentally chosen as 0.4, the margin is set to 1 and is set to 4.0.

To further boost the generated waveform quality, we expect the model to refine on obvious artifacts rather than fixing trivial waveform difference which is too subtle to be recognized by human. Specifically, we add a ( is chosen as 10 percent of the whole segment length) loss which emphasizes those large discrepancy wave segments:

| (12) |

| (13) |

where denotes the th largest number in the scalar sequence.

Above all, The final adversarial loss of our PRLSGAN is as follows:

| (14) |

| (15) |

where the is set to 0.01.

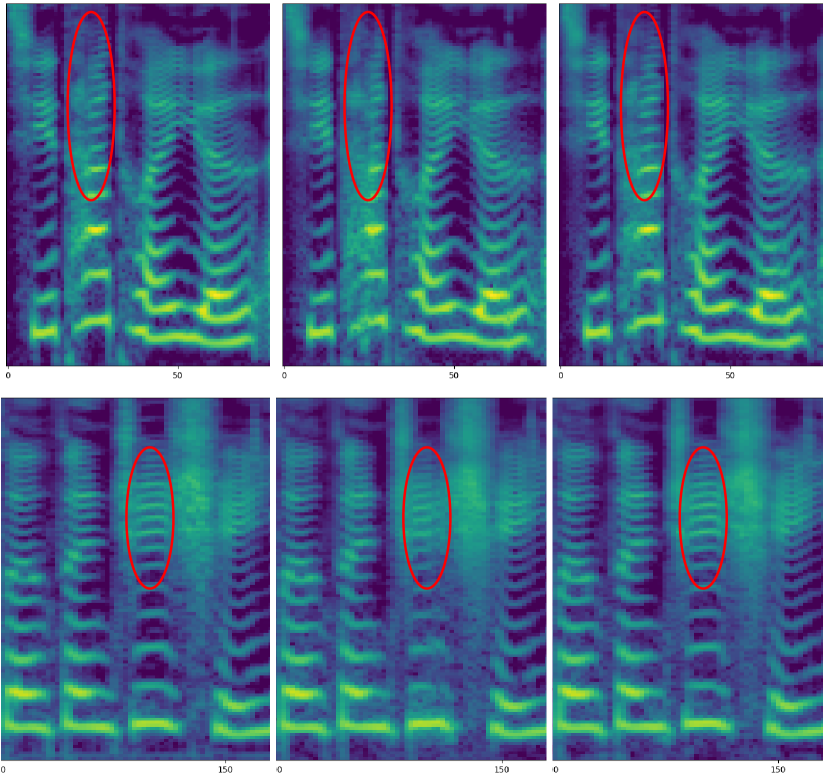

For the PWGAN, the original MSE loss can be directly replaced by our proposed loss, while for the MelGAN, the same loss must be applied in multiple scales. As demonstrated in table 2, it is obvious that both the subjective and objective scores are improved with the proposed PRLSGAN framework, comparing with the original LSGAN framework, indicating the effectiveness of our method when implemented in main stream GAN-based neural vocoders. It is also worth noticing in figure 2, the high-frequency details are greatly improved by the proposed PRLSGAN framework.

3 Experiments

3.1 Experiments setup and dataset detail

We perform experiments on an open-source studio-quality Chinese dataset 111available at www.data-baker.com/open-source.html, which contains 10,000 audio samples from a Chinese female speaker. The total length of the audio samples are 12 hours. All the recordings were down-sampled to 22050 Hz sampling rate with the 16-bit format. 100 utterances are selected as the test set while the rest samples are used for training. We extracted Mel-spectrograms using 1024-point Fourier transform with 256 hop lengths.

3.2 Implementation details

For the MelGAN structure, we adopt a model called full band MelGAN as proposed in [19] which expanded the receptive field by increasing the number of residual blocks to transposed convolutional block. The generator of full-band MelGAN is consists of three transposed convolutional blocks which each block adopted 8, 8, 4 stride, respectively. Each transposed block contains 4 residual blocks with dilated convolutions, and their dilation factors are 1, 3, 9, 27. We did not change the architecture of PWGAN’s generator, and all discriminators.

Adam[25] is chosen as the optimizer for MelGAN and RAdam[26] for PWGAN respectively. As for the learning rate configuration, for MelGAN, the learning rate of a generator was initialized to 1e-3, reducing by half at 50K, 10K iterations. The discriminator was set to 1e-3 and halved at 10K iterations. For PWGAN, we set the learning rate of the discriminator to 1e-4. For multi-resolution STFT loss, we applied three STFT losses with frame sizes of 512, 1,024, and 2,048, window sizes of 240, 600, and 1,200, and frameshifts of 50, 120, and 240, respectively. Most of the training parameters of our basic models were listed in Table 1. While training models with PRLSGAN, we set to 0.4 and start-up at the beginning of training discriminators.

We have used three objective metrics and one subjective metric to evaluate our methods. To measure the accuracy of the waveform that vocoder transforms, we use the MCD[27] and FFE[28] between the ground truth and the synthesized waveform. For the evaluation of waveform quality, we measured PESQ[29], and use MOS to evaluate subjective synthesis quality.

3.3 Ablation Study

We conducted an ablation study to analyze the effect of the proposed methods on this Chinese dataset. Starting from the baseline model, MelGAN and PWGAN, which apply LSGAN and STFT loss, we added each of the proposed methods one at a time measuring MCD, FFE, and PESQ. Table 2 displays the results. Additionally, we observed that using a large batch size greatly speeds up MelGAN training and increases the quality of synthesized waveforms.

3.4 Comparison on MOS

To compare subjective speech quality between LSGAN-based vocoders and PRLSGAN-based vocoders, we measured the MOS score of the speech waveforms synthesized by each vocoder. To perform a fair comparison, we randomly selected 10 utterances222The audio samples are presented in the following URL: https://anonymous1086.github.io/prlsgan-vocoder/ from a test set for MOS testing and 20 native Mandarin speakers participated in the listening test. The results of the subjective MOS evaluation are presented in Table 2. The results show that the proposed PRLSGAN vocoders consistently outperformed the typical MelGAN and Parallel WaveGAN in MOS scoring, indicating the effectiveness of combining PRLSGAN with MelGAN and PWGAN frameworks.

4 Conclusions

In this work, we have proposed PRLSGAN, an improved GAN framework that considers the pointwise relative gap between the truism score of the generator and discriminator. We design a novel pointwise adversarial loss to increase the difficulty of the min-max adversarial process, forcing the generator to refine on its local artifacts. Our experimental results have shown that PRLSGAN can be seamlessly adapted into MelGAN or Parallel WaveGAN based neural vocoders, achieving a great performance gain, while keeping their original inference speed.

References

- [1] A. Jolicoeur-Martineau, “The relativistic discriminator: a key element missing from standard gan,” arXiv preprint arXiv:1807.00734, 2018.

- [2] M. Morise, F. Yokomori, and K. Ozawa, “World: a vocoder-based high-quality speech synthesis system for real-time applications,” IEICE TRANSACTIONS on Information and Systems, vol. 99, no. 7, pp. 1877–1884, 2016.

- [3] H. Kawahara, “Straight, exploitation of the other aspect of vocoder: Perceptually isomorphic decomposition of speech sounds,” Acoustical science and technology, vol. 27, no. 6, pp. 349–353, 2006.

- [4] A. v. d. Oord, S. Dieleman, H. Zen, K. Simonyan, O. Vinyals, A. Graves, N. Kalchbrenner, A. Senior, and K. Kavukcuoglu, “Wavenet: A generative model for raw audio,” arXiv preprint arXiv:1609.03499, 2016.

- [5] N. Kalchbrenner, E. Elsen, K. Simonyan, S. Noury, N. Casagrande, E. Lockhart, F. Stimberg, A. v. d. Oord, S. Dieleman, and K. Kavukcuoglu, “Efficient neural audio synthesis,” arXiv preprint arXiv:1802.08435, 2018.

- [6] S. Mehri, K. Kumar, I. Gulrajani, R. Kumar, S. Jain, J. Sotelo, A. Courville, and Y. Bengio, “Samplernn: An unconditional end-to-end neural audio generation model,” 2017.

- [7] Q. Tian, Z. Zhang, H. Lu, L.-H. Chen, and S. Liu, “Featherwave: An efficient high-fidelity neural vocoder with multi-band linear prediction,” arXiv preprint arXiv:2005.05551, 2020.

- [8] C. Yu, H. Lu, N. Hu, M. Yu, C. Weng, K. Xu, P. Liu, D. Tuo, S. Kang, G. Lei et al., “Durian: Duration informed attention network for multimodal synthesis,” arXiv preprint arXiv:1909.01700, 2019.

- [9] J.-M. Valin and J. Skoglund, “Lpcnet: Improving neural speech synthesis through linear prediction,” in ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2019, pp. 5891–5895.

- [10] A. Oord, Y. Li, I. Babuschkin, K. Simonyan, O. Vinyals, K. Kavukcuoglu, G. Driessche, E. Lockhart, L. Cobo, F. Stimberg et al., “Parallel wavenet: Fast high-fidelity speech synthesis,” in International conference on machine learning. PMLR, 2018, pp. 3918–3926.

- [11] W. Ping, K. Peng, and J. Chen, “Clarinet: Parallel wave generation in end-to-end text-to-speech,” arXiv preprint arXiv:1807.07281, 2018.

- [12] R. Prenger, R. Valle, and B. Catanzaro, “Waveglow: A flow-based generative network for speech synthesis,” in ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2019, pp. 3617–3621.

- [13] S. Kim, S. gil Lee, J. Song, J. Kim, and S. Yoon, “Flowavenet : A generative flow for raw audio,” 2019.

- [14] Z. Zeng, J. Wang, N. Cheng, and J. Xiao, “Melglow: Efficient waveform generative network based on location-variable convolution,” arXiv preprint arXiv:2012.01684, 2020.

- [15] W. Ping, K. Peng, K. Zhao, and Z. Song, “Waveflow: A compact flow-based model for raw audio,” in International Conference on Machine Learning. PMLR, 2020, pp. 7706–7716.

- [16] B. Wu, Q. He, P. Zhang, T. Koehler, K. Keutzer, and P. Vajda, “Fbwave: Efficient and scalable neural vocoders for streaming text-to-speech on the edge,” arXiv preprint arXiv:2011.12985, 2020.

- [17] K. Kumar, R. Kumar, T. de Boissiere, L. Gestin, W. Z. Teoh, J. Sotelo, A. de Brébisson, Y. Bengio, and A. C. Courville, “Melgan: Generative adversarial networks for conditional waveform synthesis,” in Advances in Neural Information Processing Systems, 2019, pp. 14 910–14 921.

- [18] R. Yamamoto, E. Song, and J.-M. Kim, “Parallel wavegan: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram,” in ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2020, pp. 6199–6203.

- [19] G. Yang, S. Yang, K. Liu, P. Fang, W. Chen, and L. Xie, “Multi-band melgan: Faster waveform generation for high-quality text-to-speech,” arXiv preprint arXiv:2005.05106, 2020.

- [20] J. Yang, J. Lee, Y. Kim, H. Cho, and I. Kim, “Vocgan: A high-fidelity real-time vocoder with a hierarchically-nested adversarial network,” arXiv preprint arXiv:2007.15256, 2020.

- [21] Q. Tian, Y. Chen, Z. Zhang, H. Lu, L. Chen, L. Xie, and S. Liu, “Tfgan: Time and frequency domain based generative adversarial network for high-fidelity speech synthesis,” 2020.

- [22] R. Yamamoto, E. Song, M.-J. Hwang, and J.-M. Kim, “Parallel waveform synthesis based on generative adversarial networks with voicing-aware conditional discriminators,” arXiv e-prints, pp. arXiv–2010, 2020.

- [23] Z. Zeng, J. Wang, N. Cheng, and J. Xiao, “Lvcnet: Efficient condition-dependent modeling network for waveform generation,” arXiv preprint arXiv:2102.10815, 2021.

- [24] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. Paul Smolley, “Least squares generative adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2794–2802.

- [25] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [26] L. Liu, H. Jiang, P. He, W. Chen, X. Liu, J. Gao, and J. Han, “On the variance of the adaptive learning rate and beyond,” 2020.

- [27] R. Kubichek, “Mel-cepstral distance measure for objective speech quality assessment,” in Proceedings of IEEE Pacific Rim Conference on Communications Computers and Signal Processing, vol. 1. IEEE, 1993, pp. 125–128.

- [28] W. Chu and A. Alwan, “Reducing f0 frame error of f0 tracking algorithms under noisy conditions with an unvoiced/voiced classification frontend,” in 2009 IEEE International Conference on Acoustics, Speech and Signal Processing. IEEE, 2009, pp. 3969–3972.

- [29] A. W. Rix, J. G. Beerends, M. P. Hollier, and A. P. Hekstra, “Perceptual evaluation of speech quality (pesq)-a new method for speech quality assessment of telephone networks and codecs,” in 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), vol. 2. IEEE, 2001, pp. 749–752.