Implicit Regularization of Dropout

Abstract

It is important to understand how dropout, a popular regularization method, aids in achieving a good generalization solution during neural network training. In this work, we present a theoretical derivation of an implicit regularization of dropout, which is validated by a series of experiments. Additionally, we numerically study two implications of the implicit regularization, which intuitively rationalizes why dropout helps generalization. Firstly, we find that input weights of hidden neurons tend to condense on isolated orientations trained with dropout. Condensation is a feature in the non-linear learning process, which makes the network less complex. Secondly, we experimentally find that the training with dropout leads to the neural network with a flatter minimum compared with standard gradient descent training, and the implicit regularization is the key to finding flat solutions. Although our theory mainly focuses on dropout used in the last hidden layer, our experiments apply to general dropout in training neural networks. This work points out a distinct characteristic of dropout compared with stochastic gradient descent and serves as an important basis for fully understanding dropout.

1 Introduction

Dropout is used with gradient-descent-based algorithms for training neural networks (NNs) [1, 2], which can improve the generalization in deep learning [3, 4]. For example, common neural network frameworks such as PyTorch default to utilizing dropout during transformer training. Dropout works by multiplying the output of each neuron by a random variable with probability being and being zero during training. Note that every time the concerning quantity is calculated, the variable is randomly sampled at each feedforward operation.

The effect of dropout is equivalent to adding a specific noise to the gradient descent training. Theoretically, based on the method of the modified gradient flow [5], we derive implicit regularization terms of the dropout training for networks with dropout on the last hidden layer. The implicit regularization of dropout can lead to two important implications, condensed weights and flat solutions, verified by a series of experiments111Code can be found at: https://github.com/sjtuzzw/torch_code_frame under general settings.

Firstly, we study weight feature learning in dropout training. Previous works [6, 7, 8] find that, in the nonlinear training regime, input weights of hidden neurons (the input weight of a hidden neuron is a vector consisting of the weight from its input layer to the hidden layer and its bias term) are clustered into several groups under gradient flow training. The weights in each group have similar orientations, which is called condensation. By analyzing the implicit regularization terms, we theoretically find that dropout tends to find solutions with weight condensation. To verify the effect of dropout on condensation, we conduct experiments in the linear regime, such as neural tangent kernel initialization [9], where the weights are in proximity to the random initial values and condensation does not occur in common gradient descent training. We find that even in the linear regime, with dropout, weights show clear condensation in experiments, and for simplicity, we only show the output here (Fig. 1(a)). As condensation reduces the complexity of the NN, dropout may help the generalization by constraining the model’s complexity.

Secondly, we study the flatness of the solution in dropout training. We theoretically show that the implicit regularization terms of dropout lead to a flat minimum. We experimentally verify the effect of the implicit regularization terms on flatness (Fig. 1(b)). As suggested by many existing works [10, 11, 12], flatter minima have a higher probability of better generalization and stability.

This work provides a comprehensive investigation into the implicit regularization of dropout and its associated implications. Although our theoretical analysis mainly focuses on the dropout used in the last hidden layer, our experimental results extend to the general use of dropout in training NNs. Our results show that dropout has a distinct implicit regularization for facilitating weight condensation and finding flat minima, which may jointly improve the generalization performance of NNs.

2 Related Works

Dropout is proposed as a simple approach to prevent overfitting in the training of NNs, thus improving the generalization of the network [1, 2]. Many works aim to find an explicit form of dropout regularization. A previous work [13] presents PAC-Bayesian bounds, and others [14, 15] derive Rademacher generalization bounds. These results show that the reduction of complexity brought by dropout is , where is the probability of keeping an element in dropout. All of the above works need specific settings, such as norm assumptions and logistic loss, and they only give a rough estimate of the generalization error bound, which usually consider the worst case. [16, 17] study the implicit bias of dropout for linear models. However, it is not clear what is the characteristic of the dropout training process and how to bridge the training with the generalization in non-linear neural networks. In this work, we show the implicit regularization of dropout, which may be a key factor in enabling dropout to find solutions with better generalization.

The modified gradient flow is defined as the gradient flow which is close to discrete iterates of the original training path up to some high-order learning rate term [5]. [18] derive the modified gradient flow of discrete full-batch gradient descent training as , where is the training loss on dataset , is the learning rate and denotes the -norm. In a similar vein, [19] derive the modified gradient flow of stochastic gradient descent training as , where is the th batch loss and the last term is also called “non-uniform” term [20]. Our work shows that there exist several distinct features between dropout and SGD. Specifically, in the limit of the vanishing learning rate, the modified gradient flow of dropout still has an additional implicit regularization term, whereas that of SGD converges to the full-batch gradient flow [21].

The parameter initialization of the network determines the final fitting result of the network. [6, 8] mainly identify the linear regime and the condensed regime for two-layer and three-layer wide ReLU NNs. In the linear regime, the training dynamics of NNs are approximately linear and similar to a random feature model with an exponential loss decay. In the condensed regime, active neurons are condensed at several discrete orientations, which may be an underlying reason why NNs outperform traditional algorithms.

[22, 23] show that NNs of different widths often exhibit similar condensation behavior, e.g., stagnating at a similar loss with almost the same output function. Based on this observation, they propose the embedding principle that the loss landscape of an NN contains all critical points of all narrower NNs. The embedding principle provides a basis for understanding why condensation occurs from the perspective of loss landscape.

Several works study the mechanism of condensation at the initial training stage, such as for ReLU network [24, 25] and network with continuously differentiable activation functions [7]. However, studying condensation throughout the whole training process is generally challenging, with dropout training being an exception. The regularization terms we derive in this work show that the dropout training tends to condense in the whole training process.

3 Preliminary

3.1 Deep Neural Networks

Consider a -layer () fully-connected neural network (FNN). We regard the input as the th layer and the output as the th layer. Let represent the number of neurons in the th layer. In particular, and . For any and , we denote . In particular, we denote .

Given weights and biases for , we define the collection of parameters as a -tuple (an ordered list of elements) whose elements are matrices or vectors

where the th layer parameters of is the ordered pair . We may misuse notation and identify with its vectorization , where .

Given , the FNN function is defined recursively. First, we denote for all . Then, for , is defined recursively as , where is a non-linear activation function. Finally, we denote

For notational simplicity, we denote

where is the th column of , and is the th element of vector . In this work, we denote the -norm as for convenience.

3.2 Loss Function

The training data set is denoted as , where and . For simplicity, we assume an unknown function satisfying for . The empirical risk reads as

| (1) |

where the loss function is differentiable and the derivative of with respect to its first argument is denoted by . The error with respect to data sample is defined as

For notation simplicity, we denote .

3.3 Dropout

For , we randomly sample a scaling vector with coordinates of are sampled i.i.d that,

where , indices the coordinate of . It is important to note that is a zero-mean random variable. We then apply dropout by computing

and use instead of . Here we use for the Hadamard product of two matrices of the same dimension. To simplify notation, we let denote the collection of such vectors over all layers. We denote the output of model on input using dropout noise as . The empirical risk associated with the network with dropout layer is denoted by , given by

| (2) |

4 Modified Gradient Flow

In this section, we theoretically analyze the implicit regularization effect of dropout. We derive the modified gradient flow of dropout in the sense of expectation. We first summarize the settings and provide the necessary definitions used for our theoretical results below. Note that, the settings of our experiments are much more general.

Setting 1 (dropout structure).

Consider an -layer () FNN with only one dropout layer after the th layer of the network,

Setting 2 (loss function).

Take the mean squared error (MSE) as our loss function,

Setting 3 (network structure).

For convenience, we set the model output dimension to one, i.e. .

In the following, we introduce two key terms that play an important role in our theoretical results:

| (3) |

| (4) |

where is the -th column of , is the -th element of , is the expectation with respect to , and is the learning rate.

Based on the above settings, we obtain a modified equation based on dropout gradient flow.

Lemma 1 (the expectation of dropout loss).

Given an -layer FNN with dropout , under Setting 1–3, we have the expectation of dropout MSE:

Based on the above lemma, we proceed to study the discrete iterate training of gradient descent with dropout, resulting in the derivation of the modified gradient flow of dropout training.

Modified gradient flow of dropout. Under Setting 1–3, the mean iterate of , with a learning rate , stays close to the path of gradient flow on a modified loss , where the modified loss satisfies:

| (5) |

Contrary to SGD [19], the term is independent of the learning rate , thus the implicit regularization of dropout still affects the gradient flow even as the learning rate approaches zero. In Section 6, we show the term makes the network tend to find solutions with lower complexity, that is, solutions with weight condensation, which is also illustrated and supported numerically. In Section 7, we show the term plays a more important role in improving the generalization and flatness of the model than the term, which explicitly aims to find a flatter solution.

5 Numerical Verification of Implicit Regularization Terms

In this section, we numerically verify the validity of two implicit regularization terms, i.e., defined in Equation (3) and defined in Equation (4), under more general settings than out theoretical results. The detailed experimental settings can be found in Appendix A.

5.1 Validation of the Effect of

As is independent of the learning rate and vanishes in the limit of zero learning rate, we select a small learning rate to verify the validity of . According to Equation (5), the modified equation of dropout training dynamics can be approximated by when the learning rate is sufficiently small. Therefore, we verify the validity of through the similarity of the NN trained by the two loss functions, i.e., and under a small learning rate.

Fig. 2(a) presents the test accuracy of two losses trained under different dropout rates. For the network trained with , there is no dropout layer, and the dropout rate affects the weight of in the loss function. For different dropout rates, the networks obtained by the two losses above exhibit similar test accuracy. It is worth mentioning that for the network trained with , the obtained accuracy is only , which is significantly lower than the accuracy of the network trained through the two loss functions above (over in Fig. 2(a)). In Fig. 2(b), we show the values of and for the two networks at different dropout rates. Note that for the network obtained by training, we can calculate the two terms through the network’s parameters. It can be seen that for different dropout rates, the values of and of the two networks are almost indistinguishable.

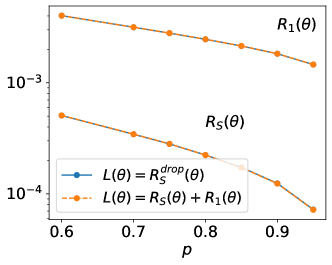

5.2 Validation of the Effect of

As shown in Theorem 4, the modified loss satisfies the equation:

In order to validate the effect of in the training process, we verify the equivalence of the following two training methods: (i) training networks with a dropout layer by MSE with different learning rates ; (ii) training networks with a dropout layer by MSE with an explicit regularization:

with different values of and a fixed learning rate much smaller than . The exact form of has an expectation with respect to , but in this subsection, we ignore this expectation in experiments for convenience.

As shown in Fig. 3, we train the NNs by the MSE with different learning rates (blue), and the regularized MSE with a fixed small learning rate and different values of (orange). In Fig. 3(a), the learning rate and the regularization coefficient are close when they reach their corresponding maximum test accuracy (red point). In addition, as shown in Fig. 3(b), we study the value of under different learning rates (blue) and regularization coefficients (orange). In practical experiments, we take 3000 different dropout noises to approximate the expectation after the training process. The results indicate that the same learning rate and regularization coefficient result in similar ratios.

Due to the computational cost of full-batch GD, we only use a few training samples in the above experiments. We conduct similar experiments with dropout under different learning rates and regularization coefficients using SGD as detailed in Appendix C.1.

6 Dropout Facilitates Condensation

A condensed network, which refers to a network with neurons having aligned input weights, is equivalent to another network with a reduced width [7, 6]. Therefore, the effective complexity of the network is smaller than its superficial appearance. Such low effective complexity may be an underlying reason for good generalization. In addition, the embedding principle [22, 23, 26] shows that although the condensed network is equivalent to a smaller one in the sense of approximation, it has more degeneracy and more descent directions that may lead to a simpler training process.

In this section, we experimentally and theoretically study the effect of dropout on the condensation phenomenon.

6.1 Experimental Results

To empirically validate the effect of dropout on condensation, we examine ReLU and tanh activations in one-dimensional and high-dimensional fitting problems, as well as image classification problems. Due to space limitations, some experimental results and detailed experimental settings are left in Appendices A, C.

6.1.1 Network with One-dimensional Input

We train a tanh NN with 1000 hidden neurons for the one-dimensional fitting problem to fit the data shown in Fig. 4 with MSE. Additional experimental verifications on ReLU NNs are provided in Appendix C. The experiments performed with and without dropout under the same initialization can both well fit the training data. In order to clearly study the effect of dropout on condensation, we take the parameter initialization distribution in the linear regime [6], where condensation does not occur without additional constraints. The dropout layer is used after the hidden layer of the two-layer network (top row) and used between the hidden layers and after the last hidden layer of the three-layer network (bottom row). Upon close inspection of the fitting process, we find that the output of NNs trained without dropout in Fig. 4(a, e) has much more oscillation than the output of NNs trained with dropout in Fig. 4(b, f). To better understand the underlying effect of dropout, we study the feature of parameters.

The parameter pair of each neuron can be separated into a unit orientation feature and an amplitude indicating its contribution222Due to the homogeneity of ReLU neurons, this amplitude can accurately describe the contribution of ReLU neurons. For tanh neurons, the amplitude has a certain positive correlation with the contribution of each neuron. to the output, i.e., . For a one-dimensional input, is two-dimensional due to the incorporation of bias. Therefore, we use the angle to the -axis in to indicate the orientation of each . For simplicity, for the three-layer network with one-dimensional input, we only consider the input weight of the first hidden layer. The scatter plots of and of tanh activation are presented in Appendix C.3 to eliminate the impact of the non-homogeneity of tanh activation.

The scatter plots of of the NNs are shown in Fig. 4(c,d,g,h). For convenience, we normalize the feature distribution of each model parameter such that the maximum amplitude of neurons in each model is . Compared with the initial weight distribution (blue), the weight trained without dropout (orange) is close to its initial value. However, for the NNs trained with dropout, the parameters after training are significantly different from the initialization, and the non-zero parameters tend to condense on several discrete orientations, showing a condensation tendency.

In addition, we study the stability of the model trained with the loss function under the two loss functions and . As shown in the left panel of Fig. 5, we use as the loss function to train the model before the dashed line where when is small, and we then replace the loss function by or . The outputs and features of the models trained with these three loss functions are shown in the middle and right panels of Fig. 5, respectively. The results reveal that dropout ( term) aids the training process in escaping from the minima obtained by training and finding a condensed solution.

One may wonder if any noise injected into the training process could lead to condensation. We also perform similar experiments for SGD. As shown in Fig. 6, no significant condensation occurs even in the presence of noise during training. Therefore, the experiments in this section reveal the special characteristic of dropout that facilitate condensation.

6.1.2 Network with High-dimensional Input

We conducted further investigation into the effect of dropout on high-dimensional two-layer tanh NNs under the teacher-student setting. Specifically, we utilize a two-layer tanh NN with only one hidden neuron and 10-dimensional input as the target function. The orientation similarity of two neurons is calculated by taking the inner product of their normalized weights. As shown in Fig. 7(a,b), for the NN with dropout, the neurons of the network have only two orientations, indicating the occurrence of condensation, while the NN without dropout does not exhibit such a phenomenon.

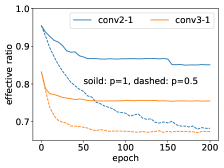

To visualize the condensation during the training process, we define the ratio of effective neurons as follows.

Definition 1 (effective ratio).

For a given NN, the input weight of neuron in the -th layer is vectorized as . Let be the set of vectors such that for any , there exists an element satisfying . The effective neuron number of the -th layer is defined as the minimal size of all possible . The effective ratio is defined as .

We study the training process of using ResNet-18 to learn CIFAR-10. As shown in Fig. 7(c), NNs with dropout tend to have lower effective ratios, and thus tend to exhibit condensation.

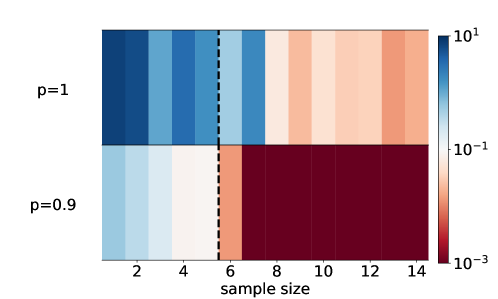

6.1.3 Dropout Improves Generalization

As the effective neuron number of a condensed network is much smaller than its actual neuron number, it is expected to generalize better. To verify this, we use a two-layer tanh network with neurons to learn a teacher two-layer tanh network with two neurons. The number of free parameters in the teacher network is . As shown in Fig. 8, the model with dropout generalizes well when the number of samplings is larger than 6, while the model without dropout generalizes badly. This result is consistent with the rank analysis of non-linear models [27].

6.2 The Effect of on Condensation

As can be seen from the implicit regularization term , dropout regularization imposes an additional -norm constraint on the output of each neuron. The constraint has an effect on condensation. We illustrate the effect of by a toy example of a two-layer ReLU network.

We use the following two-layer ReLU network to fit a one-dimensional function:

where , , . For simplicity, we set , and suppose the network can perfectly fit a training data set of two data points generated by a target function of , denoted as . We further assume . Denote the output of the -th neuron over samples as

The network output should equal to the target on the training data points after long enough training, i.e.,

There are infinitely many pairs of and that can fit well. However, the term leads the training to a specific pair. can be written as

and the components of perpendicular to need to cancel each other at the well-trained stage to minimize . As a result, and need to be parallel with , i.e., , which is the condensation phenomenon.

In the following, we show that minimizing term can lead to condensation under several settings. We first give some definitions that capture the characteristic of ReLU neurons (also shown in Fig. 9).

Definition 2 (convexity change of ReLU NNs).

Consider piecewise linear function , , and its linear interval sets . For any two intervals , if on one of the intervals, is convex and on the other is concave, then we call there exists a convexity change.

Definition 3 (direction and intercept point of ReLU neurons).

For a one-dimensional ReLU neuron , its direction is defined as , and its intercept point is defined as .

Drawing inspiration from the methodology employed to establish the regularization effect of label noise SGD [28], we show that under the setting of two-layer ReLU NN and one-dimensional input data, the implicit bias of term corresponds to “simple” functions that satisfy two conditions: (I) they have the minimum number of convexity changes required to fit the training points, and (ii) if the intercept points of neurons are in the same inner interval, and the neurons have the same direction, then their intercept points are identical.

Theorem 1 (the effect of on facilitating condensation).

Consider the following two-layer ReLU NN,

trained with a one-dimensional dataset , where . When the MSE of training data , if any of the following two conditions holds:

(i) the number of convexity changes of NN in can be reduced while ;

(ii) there exist two neurons with indexes , such that they have the same sign, i.e., , and different intercept points in the same interval, i.e., , and for some ;

then there exists parameters , an infinitesimal perturbation of , s.t.,

(i) ;

(ii) .

It should be noted that not all functions trained with dropout exhibit obvious condensation. For example, a function with dropout shows no condensation while training with a dataset consisting of only one data point. However, for general datasets, such as the example shown in Fig. 1 and Fig. 4, NNs reach the condensed solution due to the limit of the convexity changes and the intercept points (also illustrated in Fig. 10).

Although the current study only demonstrates the result for ReLU NNs, it is expected that for general activation functions, such as tanh, the term also has the effect on facilitating condensation, which is left for future work. This is also confirmed by the experimental results conducted on the tanh NNs above. Furthermore, it is believed that the linear term utilized to ensure in certain cases is not a fundamental requirement. Our experiments have confirmed that neural networks without the linear term also exhibit the condensation phenomenon.

7 Implicit Regularization of Dropout on the Flatness of Solution

Understanding the mechanism by which dropout improves the generalization of NNs is of great interest and significance. In this section, we study the flatness of the minima found by dropout as inspired by the study of SGD on generalization [10]. Our primary focus is to study the effect of and on the flatness of loss landscape and network generalization.

7.1 Dropout Finds Flatter Minima

We first study the effect of dropout on model flatness and generalization. For a fair comparison of the flatness between different models, we employ the approach used in [29] as follows. To obtain a direction for a network with parameters , we begin by producing a random Gaussian direction vector with dimensions compatible with . Then, we normalize each filter in to have the same norm as the corresponding filter in . For FNNs, each layer can be regarded as a filter, and the normalization process is equivalent to normalizing the layer, while for convolutional neural networks (CNNs), each convolution kernel may have multiple filters, and each filter is normalized individually. Thus, we obtain a normalized direction vector by replacing with , where and represent the th filter of the th layer of the random direction and the network parameters , respectively. Here, denotes the Frobenius norm. It is crucial to note that refers to the filter index. We use the function to characterize the loss landscape around the minima obtained with and without dropout layers.

For all network structures shown in Fig. 11, dropout improves the generalization of the network and finds flatter minima. In Fig. 11(a, b), for both networks trained with and without dropout layers, the training loss values are all close to zero, but their flatness and generalization are still different. In Fig. 11(c, d), due to the complexity of the dataset, i.e., CIFAR-100 and Multi30k, and network structures, i.e., ResNet-20 and transformer, networks with dropout do not achieve zero training error but the ones with dropout find flatter minima with much better generalization. The accuracy of different network structures is shown in Table 1.

| Structure | Dataset | With Dropout | Without Dropout |

| FNN | MNIST | 98.7% | 98.1% |

| VGG-9 | CIFAR-10 | 60.6% | 59.2% |

| ResNet-20 | CIFAR-100 | 54.7% | 34.1% |

| Transformer | Multi30k | 49.3% | 34.7% |

7.2 The Effect of on Flatness

In this subsection, we study the effect of on flatness under the two-layer ReLU NN setting. Different from the flatness described above by loss interpolation, we define the flatness of the minimum as the sum of the eigenvalues of the Hessian matrix in this section, i.e., . Note that when , we have,

thus the definition of flatness above is equivalent to .

Theorem 2 (the effect of on facilitating flatness).

The regularization effect of also has a positive effect on flatness by constraining the norm of the gradient. In the next subsection, we compare the effect of these two regularization terms on generalization and flatness.

7.3 Effect of Two Implicit Regularization Terms on Generalization and Flatness

Although the modified gradient flow is noise-free during training, the model trained with the modified gradient flow can also find a flat minimum that generalizes well, due to the effect of and . However, the magnitude of their impact on flatness is not yet fully understood. In this subsection, we study the effect of each regularization term through training networks by the following four loss functions:

| (6) | ||||

where is defined as for convenience, and we have . For each , we explicitly add or subtract the penalty term of either or to study their effect on dropout regularization. Therefore, and are used to study the effect of , while and are for .

We first study the effect of two regularization terms on the generalization of NNs. As shown in Fig. 12, we compare the test accuracy obtained by training with the above four distinct loss functions under different dropout rates and utilize the results of and as reference benchmarks. Two different learning rates are considered, with the solid and dashed lines corresponding to and , respectively. As shown in Fig. 12(a), both approaches show that the training with the regularization term finds a solution that almost has the same test accuracy as the training with dropout. For , as shown in Fig. 12(b), the effect of only marginally improves the generalization ability of full-batch gradient descent training in comparison to the utilization of .

Then we study the effect of two regularization terms on flatness. To this end, we show a one-dimensional cross-section of the loss by the interpolation between two minima found by the training of two different loss functions. For either or , we use addition or subtraction to study its effect. As shown in Fig. 13(a), for , the loss value of the interpolation between the minima found by the addition approach () and the subtraction approach () stays near zero, which is similar for in Fig. 13(b), showing that the higher-order terms of the learning rate in the modified equation have less influence on the training process. We then compare the flatness of minima found by the training with and as illustrated in Fig. 13(c-f). The results indicate that the minima obtained by the training with exhibit greater flatness than those obtained by training with .

The experiments in this section show that, compared with SGD, the unique implicit regularization of dropout, , plays a significant role in improving the generalization and finding flat minima.

8 Conclusion and Discussion

In this work, we theoretically study the implicit regularization of dropout and its role in improving the generalization performance of neural networks. Specifically, we derive two implicit regularization terms, and , and validate their efficacy through numerical experiments. One important finding of this work is that the unique implicit regularization term in dropout, unlike SGD, is a key factor in improving the generalization and flatness of the dropout solution. We also found that can facilitate the weight condensation during training, which may establish a link among weight condensation, flatness, and generalization for further study. This work reveals rich and unique properties of dropout, which are fundamental to a comprehensive understanding of dropout.

Our study also sheds light on the broader issue of simplicity bias in deep learning. We observed that dropout regularization tends to impose a bias toward simple solutions during training, as evidenced by the weight condensation and flatness effects. This is consistent with other perspectives on simplicity bias in deep learning, such as the frequency principle[30, 31, 32, 33, 34], which reveals that neural networks often learn data from low to high frequency. Our analysis of dropout regularization provides a detailed understanding of how simplicity bias works in practice, which is essential for understanding why over-parameterized neural networks can fit the training data well and generalize effectively to new data.

Finally, our work highlights the potential benefits of dropout regularization in training neural networks, particularly in the linear regime. As we have shown, dropout regularization can induce weight condensation and avoid the slow training speed often encountered in highly nonlinear networks due to the fact that the training trajectory is close to the stationary point [22, 23]. This may have important implications for the development of more efficient and effective deep learning algorithms.

Acknowledgments

This work is sponsored by the National Key R&D Program of China Grant No. 2022YFA1008200, the Shanghai Sailing Program, the Natural Science Foundation of Shanghai Grant No. 20ZR1429000, the National Natural Science Foundation of China Grant No. 62002221, Shanghai Municipal of Science and Technology Major Project No. 2021SHZDZX0102, and the HPC of School of Mathematical Sciences and the Student Innovation Center, and the Siyuan-1 cluster supported by the Center for High Performance Computing at Shanghai Jiao Tong University.

References

- [1] Geoffrey E Hinton, Nitish Srivastava, Alex Krizhevsky, Ilya Sutskever, and Ruslan R Salakhutdinov. Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint arXiv:1207.0580, 2012.

- [2] Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1):1929–1958, 2014.

- [3] Mingxing Tan and Quoc Le. Efficientnet: Rethinking model scaling for convolutional neural networks. In International conference on machine learning, pages 6105–6114. PMLR, 2019.

- [4] David P Helmbold and Philip M Long. On the inductive bias of dropout. The Journal of Machine Learning Research, 16(1):3403–3454, 2015.

- [5] Ernst Hairer, Christian Lubich, and Gerhard Wanner. Geometric numerical integration illustrated by the störmer–verlet method. Acta numerica, 12:399–450, 2003.

- [6] Tao Luo, Zhi-Qin John Xu, Zheng Ma, and Yaoyu Zhang. Phase diagram for two-layer relu neural networks at infinite-width limit. Journal of Machine Learning Research, 22(71):1–47, 2021.

- [7] Hanxu Zhou, Qixuan Zhou, Tao Luo, Yaoyu Zhang, and Zhi-Qin John Xu. Towards understanding the condensation of neural networks at initial training. arXiv preprint arXiv:2105.11686, 2021.

- [8] Hanxu Zhou, Qixuan Zhou, Zhenyuan Jin, Tao Luo, Yaoyu Zhang, and Zhi-Qin John Xu. Empirical phase diagram for three-layer neural networks with infinite width. Advances in Neural Information Processing Systems, 2022.

- [9] Arthur Jacot, Clément Hongler, and Franck Gabriel. Neural tangent kernel: Convergence and generalization in neural networks. In Advances in Neural Information Processing Systems, pages 8580–8589, 2018.

- [10] Nitish Shirish Keskar, Dheevatsa Mudigere, Jorge Nocedal, Mikhail Smelyanskiy, and Ping Tak Peter Tang. On large-batch training for deep learning: Generalization gap and sharp minima. arXiv preprint arXiv:1609.04836, 2016.

- [11] Behnam Neyshabur, Srinadh Bhojanapalli, David McAllester, and Nathan Srebro. Exploring generalization in deep learning. arXiv preprint arXiv:1706.08947, 2017.

- [12] Zhanxing Zhu, Jingfeng Wu, Bing Yu, Lei Wu, and Jinwen Ma. The anisotropic noise in stochastic gradient descent: Its behavior of escaping from sharp minima and regularization effects. arXiv preprint arXiv:1803.00195, 2018.

- [13] David McAllester. A pac-bayesian tutorial with a dropout bound. arXiv preprint arXiv:1307.2118, 2013.

- [14] Li Wan, Matthew Zeiler, Sixin Zhang, Yann Lecun, and Rob Fergus. Regularization of neural networks using dropconnect. In In Proceedings of the International Conference on Machine learning. Citeseer, 2013.

- [15] Wenlong Mou, Yuchen Zhou, Jun Gao, and Liwei Wang. Dropout training, data-dependent regularization, and generalization bounds. In International conference on machine learning, pages 3645–3653. PMLR, 2018.

- [16] Stefan Wager, Sida Wang, and Percy S Liang. Dropout training as adaptive regularization. Advances in neural information processing systems, 26:351–359, 2013.

- [17] Poorya Mianjy, Raman Arora, and Rene Vidal. On the implicit bias of dropout. In International Conference on Machine Learning, pages 3540–3548. PMLR, 2018.

- [18] David Barrett and Benoit Dherin. Implicit gradient regularization. In International Conference on Learning Representations, 2020.

- [19] Samuel L Smith, Benoit Dherin, David Barrett, and Soham De. On the origin of implicit regularization in stochastic gradient descent. In International Conference on Learning Representations, 2020.

- [20] Lei Wu, Chao Ma, and Weinan E. How sgd selects the global minima in over-parameterized learning: A dynamical stability perspective. Advances in Neural Information Processing Systems, 31, 2018.

- [21] Sho Yaida. Fluctuation-dissipation relations for stochastic gradient descent. In International Conference on Learning Representations, 2018.

- [22] Yaoyu Zhang, Zhongwang Zhang, Tao Luo, and Zhiqin J Xu. Embedding principle of loss landscape of deep neural networks. Advances in Neural Information Processing Systems, 34:14848–14859, 2021.

- [23] Yaoyu Zhang, Yuqing Li, Zhongwang Zhang, Tao Luo, and Zhi-Qin John Xu. Embedding principle: a hierarchical structure of loss landscape of deep neural networks. Journal of Machine Learning vol, 1:1–45, 2022.

- [24] Hartmut Maennel, Olivier Bousquet, and Sylvain Gelly. Gradient descent quantizes relu network features. arXiv preprint arXiv:1803.08367, 2018.

- [25] Franco Pellegrini and Giulio Biroli. An analytic theory of shallow networks dynamics for hinge loss classification. Advances in Neural Information Processing Systems, 33, 2020.

- [26] Zhiwei Bai, Tao Luo, Zhi-Qin John Xu, and Yaoyu Zhang. Embedding principle in depth for the loss landscape analysis of deep neural networks. arXiv preprint arXiv:2205.13283, 2022.

- [27] Yaoyu Zhang, Zhongwang Zhang, Leyang Zhang, Zhiwei Bai, Tao Luo, and Zhi-Qin John Xu. Linear stability hypothesis and rank stratification for nonlinear models. arXiv preprint arXiv:2211.11623, 2022.

- [28] Guy Blanc, Neha Gupta, Gregory Valiant, and Paul Valiant. Implicit regularization for deep neural networks driven by an ornstein-uhlenbeck like process. In Conference on learning theory, pages 483–513. PMLR, 2020.

- [29] Hao Li, Zheng Xu, Gavin Taylor, Christoph Studer, and Tom Goldstein. Visualizing the loss landscape of neural nets. arXiv preprint arXiv:1712.09913, 2017.

- [30] Zhi-Qin John Xu, Yaoyu Zhang, and Yanyang Xiao. Training behavior of deep neural network in frequency domain. In International Conference on Neural Information Processing, pages 264–274. Springer, 2019.

- [31] Zhi-Qin John Xu, Yaoyu Zhang, Tao Luo, Yanyang Xiao, and Zheng Ma. Frequency principle: Fourier analysis sheds light on deep neural networks. Communications in Computational Physics, 28(5):1746–1767, 2020.

- [32] Yaoyu Zhang, Tao Luo, Zheng Ma, and Zhi-Qin John Xu. A linear frequency principle model to understand the absence of overfitting in neural networks. Chinese Physics Letters, 38(3):038701, 2021.

- [33] Tao Luo, Zheng Ma, Zhi-Qin John Xu, and Yaoyu Zhang. Theory of the frequency principle for general deep neural networks. CSIAM Transactions on Applied Mathematics, 2(3):484–507, 2021.

- [34] Zhi-Qin John Xu, Yaoyu Zhang, and Tao Luo. Overview frequency principle/spectral bias in deep learning. arXiv preprint arXiv:2201.07395, 2022.

- [35] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [36] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In ICLR (Poster), 2015.

- [37] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [38] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008, 2017.

Appendix A Experimental Setups

For Fig. 1, Fig. 15, Fig. 16 and Fig. 17, we use the ReLU FNN with the width of to fit the target function as follows,

where . For Fig. 16, we train the network using Adam with a learning rate of and a batch size of . For Fig. 15, we add dropout layers behind the hidden layer with for the two-layer experiments and add dropout layers between two hidden layers and behind the last hidden layer with for the three-layer experiments. We train the network using Adam with a learning rate of . We initialize the parameters in the linear regime, , where is the width of the hidden layer.

For Fig. 2, Fig. 12, and Fig. 13, we use the FNN with size -- to classify the MNIST dataset (the first 1000 images). We add dropout layers behind the hidden layer with different dropout rates. We train the network using GD with a learning rate of .

For Fig. 3, Fig. 14(b), we use VGG-9 [35] w/o dropout layers to classify CIFAR-10 using GD or SGD. For experiments with dropout layers, we add dropout layers after the pooling layers, the dropout rates of dropout layers are . For the experiments shown in Fig. 3, we only use the first 1000 images for training to compromise with the computational burden.

For Fig. 4, Fig. 5 Fig. 6 and Fig. 8, we use the tanh FNN with the width of to fit the target function as follows,

where . We add dropout layers behind the hidden layer with for the two-layer experiments and add dropout layers between two hidden layers and behind the last hidden layer with for the three-layer experiments. We train the network using Adam with a learning rate of . We initialize the parameters in the linear regime, , where is the width of the hidden layer. For Fig. 6, we train the network using Adam with a batch size of . For Fig. 8, each test error is averaged over trials with random initialization.

For Fig. 7(a,b), we use the FNN with size -- to fit the target function,

where , , is the th component of and the training size is . We add dropout layers behind the hidden layer with . We train the network using Adam with a learning rate of .

For Fig. 7(c), we use ResNet-18 to classify CIFAR-10 with and without dropout. We add dropout layers behind the last activation function of each block with . We train the network using Adam with a learning rate of and a batch size 0f .

For Fig. 11(a), we use the FNN with size ---. We add dropout layers behind the first and the second layers with and , respectively. We train the network using default Adam optimizer [36] with a learning rate of .

For Fig. 11(b), we use VGG-9 to compare the loss landscape flatness w/o dropout layers. For experiments with dropout layers, we add dropout layers after the pooling layers, with . Models are trained using full-batch GD with Nesterov momentum, with the first images as training set for epochs. The learning rate is initialized at , and divided by a factor of 10 at epochs 150, 225, and 275.

For Fig. 11(c), we use ResNet-20 [37] to compare the loss landscape flatness w/o dropout layers. For experiments with dropout layers, we add dropout layers after the convolutional layers with . We only consider the parameter matrix corresponding to the weight of the first convolutional layer of the first block of the ResNet-20. Models are trained using full-batch GD, with a training size of for epochs. The learning rate is initialized at .

For Fig. 11(d), we use transformer [38] with , the meaning of the parameters is consistent with the original paper. For experiments with dropout layers, we apply dropout to the output of each sub-layer, before it is added to the sub-layer input and normalized. In addition, we apply dropout to the sums of the embeddings and the positional encodings in both the encoder and decoder stacks. We set for dropout layers. For the English-German translation problem, we use the cross-entropy loss with label smoothing trained by full-batch Adam based on the Multi30k dataset. The learning rate strategy is the same as that in [38]. The warm-up step is epochs, the training step is epochs. We only use the first examples for training to compromise with the computational burden.

For Fig. 14(a), we classify the Fashion-MNIST dataset by training four-layer NNs of width with a training batch size of . We add dropout layers behind the hidden layers with different dropout rates. The learning rate is .

Appendix B Proofs for Main Paper

B.1 Proof for Lemma 1

Lemma (the expectation of dropout loss).

Given an -layer FNN with dropout , under Setting 1–3, we have the expectation of dropout MSE:

Proof.

With the definition of MSE, we have,

With the definition of , we have , thus,

At the same time, we have and . Thus we have,

∎

B.2 Proof for the modified gradient flow of dropout

Modified gradient flow of dropout. Under Setting 1–3, the mean iterate of , with a learning rate , stays close to the path of gradient flow on a modified loss , where the modified loss satisfies:

Proof.

We assume the ODE of the GD with dropout has the form , and introduce a modified gradient flow , where,

Thus by Taylor expansion, for small but finite , we have,

For GD, we have,

Combining , , we have,

Thus, with the random variable ,

We have,

∎

B.3 Proof for Theorem 1

Theorem (the effect of on facilitating condensation).

Consider the following two-layer ReLU NN,

trained with a one-dimensional dataset , where . When the MSE of training data , if any of the following two conditions holds:

(i) the number of convexity changes of NN in can be reduced while ;

(ii) there exist two neurons with indexes , such that they have the same sign, i.e., , and different intercept points in the same interval, i.e., , and for some ;

then there exists parameters , an infinitesimal perturbation of , s.t.,

(i) ;

(ii) .

Proof.

The implicit regularization term can be expresses as follows:

where is the width of the NN, and . It is worth noting that the term will be absorbed by , so it will not affect the value of .

First, we show the proof outline, which is inspired by [28]. However, [28] study the setting of noise SGD, and only consider the limitation of convexity changes. For the first part, i.e., the limitation of the number of convexity changes, the proof outline is as follows. For any set of consecutive datapoints , we assume the linear interpolation of three datapoints is convex. If is not convex in , there exist two neurons, one with a positive output layer weight and the other with a negative output layer weight, and the intercept point of both neurons are within the interval . Then, we can make the intercept points of the two neurons move to both sides by giving a specific perturbation direction, and through this movement, the intercept point of the neurons can be gathered at the data points . At the same time, we can verify that this moving mode can reduce the value of term while keeping the training error at . The main proof mainly classifies the different directions of the above two neurons to construct different types of perturbation direction. For the second part, i.e., the limitation of the number of intercept points in the same inner interval, the proof outline is as follows. For two neurons with the same direction and different intercept points in the same interval , without loss of generality, suppose is the input dataset activated by two neurons at the same time. According to the mean value inequality, when the output values of two neurons are constant at each data point , the term is minimized. We can achieve this by moving the two intercept points of neurons to the interval within the two intercept points through perturbation.

The limitation of convexity changes. Without loss of generality, suppose on the interval is convex. If fits these three points, but is not convex, then it must have a convexity change. This convexity change corresponds to at least two ReLU neurons , and the output weights of the two have opposite signs. WLOG, we assume the intercept point of the first neuron is less than the intercept point of the second neuron, i.e., . After reasonably assuming that the output weights of neurons with small turning points are negative, we classify the signs of the two neurons as follows,

| Case (1) : | |||

| Case (2) : | |||

| Case (3) : | |||

| Case (4) : |

Case (1) : For any sufficiently small , we perturb the parameters of the two neurons as follows:

Here we only consider the case where the intercept point of the first neuron . For the case where , it is easy to obtain from the proof of the limitation of the number of intercept points below. In the same way, we can only consider the case where . We first consider the case where and . By studying the movement of the intercept point of the two neurons, we have,

where , and . As for the second neuron, we have,

where , , , , and . Thus the intercept point of the first neuron moves left and the other moves right under the above perturbation.

We then verify the invariance of the values of NN’s output on the input data. We study this invariance within three data point sets , and . For , the outputs of both neurons remain zero, so the output of the network is unchanged. For , the output of the second neuron remains zero. As for the first neuron, we have,

thus the output of the network on is unchanged. For , we study the output value changes of the two neurons separately. For the first neuron, we have,

For the second neuron, we have,

Thus output value changes of both neurons remain zero. After verifying that the output of the network remains constant on the input data points, we study the effect of this perturbation on the term. Noting that the output values of these two neurons on the input data remain unchanged, we only need to study the effect of the output changes on the input data . For , we have,

| (7) | ||||

Thus we have,

As for (or ), we can still get a consistent conclusion through the above perturbation, the only difference is that the intercept point of the first(or second) neuron will not move to the left(or right).

For the remaining three cases, we show the direction of the perturbation respectively, and we can similarly verify that the loss value remains unchanged and the term decreases by .

Case (2) and Case (3): The perturbation is shown as follows:

Case (4): The perturbation is shown as follows:

The limitation of the number of intercept points. For any inner interval , we take two neurons with the same direction and different intercept points in the same inner interval denoted as , . In order to ensure consistency with the above proof, we denote the boundary points of the inner interval are and . Without loss of generality, we assume that the input weights of two neurons , and the output weight of the first neuron , and we assume the intercept point of the first neuron is less than the intercept point of the second neuron, i.e., . We study and respectively, where the first case corresponds to the case in Case (1) above. We first study the case where . We perturb the parameters of two neurons as follows:

By studying the movement of the intercept points of the two neurons, we have,

where , and . As for the second neuron, we have,

where , , , , and . Thus the intercept points of two neurons move right under the above perturbation.

We then verify the invariance of the values of NN’s output on the input data. We study this invariance within two data point sets and . For , the outputs of both neurons remain zero, so the output of the network is unchanged. For , we study the output value changes of the two neurons separately. For the first neuron, we have,

For the second neuron, we have,

With the same relation shown in Equution (LABEL:equ:inequ), we have,

For , we consider three cases:

| Case (1): | |||

| Case (2): | |||

| Case (3): |

For Case (1), we perturb the parameters of two neurons as follows:

We first study the movement of the intercept points of the two neurons,

where , . In the same way, we have

For the invariance of the values of NN’s output on the input dataset , we have,

Thus, we can easily get, for each input point in ,

Then, we have,

For the remaining three cases, we show the direction of the perturbation respectively, and we can similarly verify that the loss value remains unchanged and the term decreases by .

Case (2): The perturbation is shown as follows:

Case (3): The perturbation is shown as follows:

∎

Theorem (the effect of on facilitating flatness).

Proof.

Recall the quantity that characterizes the flatness of model ,

Then, under the assumption of , we obtain that , we notice that for sufficiently small amount of time , the sign of remains the same. Hence given data , and parameter set , if , for some indices , then . The analysis above reveals that for certain neuron whose index is , we shall focus the data satisfying , i.e., the following set constitutes our data of interest for the -th neuron

as is trained under the gradient flow of , i.e.

then the gradient flow of the flatness reads

by definition of , we obtain that the first term can be written as

hence

which finishes the proof.

∎

Appendix C Additional Experimental Results

C.1 Verification of on Complete Datasets

As shown in Fig. 14, the learning rate and the regularization coefficient are similar when they reach the maximum test accuracy (red point).

C.2 Dropout Facilitates Condensation on ReLU NNs

In this subsection, We verify that the ReLU network facilitates condensation under dropout shown in Fig. 15, similar to the situation in the tanh NNs shown in Fig. 4 in the main text. We only plot the neurons with non-zero output value in the data interval in the situation in the ReLU NNs. For the neurons with constant zero output value in the data interval, they will not affect the training process and the NN’s output. Further, we study the results of ReLU NNs training under SGD, and the relationship between the model rank and generalization error, as shown in Figs. 16, 17, which correspond to the tanh NNs shown in Figs. 6, 8 in the main text.

C.3 Detailed Features of Tanh NNs

In order to eliminate the influence of the inhomogeneity of the tanh activation function on the parameter features of Fig. 4, we drew the normalized scatter diagrams between , and the orientation, as shown in Fig. 18. Obviously, for the network with dropout, both the input weight and the output weight have weight condensation, while the network without dropout does not have weight condensation.