Impact of Stricter Content Moderation on Parler’s Users’ Discourse

Abstract.

Social media platforms employ various content moderation techniques to remove harmful, offensive, and hateful content. The level of moderation varies across platforms and can also evolve over time within a single platform. For example, Parler, a fringe social media platform popular among conservative users, was known for having the least restrictive moderation policies, claiming to offer open discussion spaces to its users. However, after the 2021 US Capitol riots were linked to the activity of some groups on Parler, such as QAnon and the Proud Boys, Parler was removed from the Apple App Store and the Google Play Store and suspended from Amazon’s cloud hosting service on January 12, 2021. To return to these app stores, Parler had to modify its moderation policies. After a month of downtime, Parler came back online with a new set of user guidelines that reflected stricter content moderation, especially regarding its hate speech policy.

In this paper, we studied the moderation changes made by Parler and their effect on the toxicity of its content. We collected a large longitudinal Parler dataset with 17M parleys from 432K active users from February 2021 to January 2022, after Parler’s return to the Internet and the App Store. To the best of our knowledge, this is the first study to investigate the effectiveness of content moderation techniques using data-driven approaches, and ours is the first Parler dataset collected after its brief hiatus. Our quasi-experimental time series analysis indicates that after the change in Parler’s moderation, severe forms of toxicity (above a threshold of 0.5) immediately decreased, and the decrease was sustained. In contrast, the trend did not change for less severe threats and insults (thresholds between 0.5 and 0.7). Finally, we found an increase in the factuality of the news sites being shared, as well as a decrease in the number of conspiracy or pseudoscience sources being shared.

1. Introduction

Social media has become a powerful tool that reflects the best and worst aspects of human communication. On the one hand, these platforms allow individuals to freely express opinions, engage in interpersonal communication, and learn about new trends and stories. On the other hand, they have also become fertile ground for several forms of abuse, harassment, and the dissemination of misinformation (Micro, 2017; Khan et al., 2019; Anderson et al., 2016; Singhal et al., 2023a). Social media platforms therefore continue to adopt and evolve their content moderation techniques and policies to address these issues while trying to respect freedom of speech and promote a healthier online environment.

Social media platforms, however, do not follow unified methods and policies for content moderation (Singhal et al., 2023b). While some platforms adopt more stringent content moderation rules, others, like Parler, pursue a laissez-faire approach. Parler, launched in 2018, adhered to this hands-off moderation philosophy, contending that it promoted richer discussions and protected users’ freedom of speech (Rothschild, 2021). This lasted until January 6th, 2021, when Parler gained notoriety for being home to several groups and protesters who stormed the U.S. Capitol (Groeger et al., 2017; Rondeaux et al., 2022). Subsequently, due to its content moderation policies and concerns about the spread of harmful or extremist content, Parler faced significant consequences: it was not only terminated by its cloud service provider, Amazon Web Services, but also removed from major app distribution platforms, including the Apple App Store and the Google Play Store (Fung, 2021).

For Parler to return to the Apple App Store, it had to enact substantial revisions to its hate speech policies (for an example of the changes to Parler’s community guidelines, see https://tinyurl.com/yda6pfmj). These revisions included completely removing the ability of users on iOS devices to access objectionable and Not Safe for Work (NSFW) content. As a result, Parler’s updated guidelines introduced more stringent moderation aimed at curbing hate speech on the platform (Lerman, 2021).

While prior studies have focused on the impact of de-platforming users or certain communities (Rogers, 2020; Rauchfleisch and Kaiser, 2021; Ali et al., 2021; Jhaver et al., 2021; Ribeiro et al., 2020), or on how content moderation affects the activities of problematic users (Trujillo and Cresci, 2022; Ali et al., 2021), our work is the first to investigate the impact of stricter content moderation policies on a platform’s content and user base. In particular, we investigated two research questions: RQ1: What are the immediate and long-term effects of Parler’s content moderation revisions on user-generated content? RQ2: How have Parler’s content moderation revisions changed its user base?

The immediate effects of Parler’s content moderation revisions on its user-generated content may include reduced hate speech, changes in user behavior, and a shift in the user base. Stricter content moderation policies are likely to lead to an immediate reduction in hate speech and other objectionable content on the platform, since users posting such content may find their posts removed or their accounts suspended. Users may also quickly adapt to the new policies by altering their behavior, for example by refraining from posting controversial content to avoid sanctions. In addition, some users may leave the platform in response to the changes, while others may join because they find the environment more welcoming; these shifts can occur relatively quickly. In our study, we investigated the first two immediate effects of Parler’s content moderation changes. However, we did not examine the user base shift due to a lack of data and the associated ethical concerns.

The long-term effects of Parler’s content moderation revisions on its user-generated content may include user base transformation and changes in content quality. User base transformation refers to the platform’s user base shifting over time in terms of demographics, values, and the types of content posted. Content quality refers to whether the overall quality of content on the platform improves; for instance, users may engage in more civil and constructive discussions, which can in turn attract a different user base. To assess user base transformation and content quality, we conducted a quasi-experimental time series analysis, monitoring user posts for toxic content, insults, identity attacks, profanity, and threats. In addition, we explored shifts in conversation topics and quantified the presence of biased posts and posts with non-factual links, using data sourced from Media Bias Fact Check (MBFC) (mbf, 2022a).

To answer the above research questions, we used the data from Aliapoulios et al. (Aliapoulios et al., 2021a) as the seed dataset (which we call the pre-moderation change dataset) and attempted to collect the posts of the same sample of users (i.e., 4M). Using our custom-built crawler, we collected about 17M parleys from 432K active users between February 2021 and January 2022. We refer to this dataset as the post-moderation change dataset. To the best of our knowledge, ours is the first dataset collected after Parler came back online. We will make our dataset available to the public. To measure the immediate and long-term effects of Parler’s content moderation changes, we used Interrupted Time Series (ITS) regression analysis, which is arguably one of the strongest and most widely used quasi-experimental methods in causal inference (Bernal et al., 2017; Horta Ribeiro et al., 2023; Trujillo and Cresci, 2022; Jhaver et al., 2021). This analysis helped us understand whether and how outcomes, e.g., the number of toxic posts, changed after this intervention and whether the effects were sustained over time.

In summary, this paper makes the following contributions and findings:

(1) Our work shows how the effectiveness of content moderation can be tested using data-driven analysis of data obtained from the platform (here, Parler).

(2) We collected Parler data after its return to the Internet and the App Store, yielding the first-ever post-de-platforming Parler dataset.

(3) Using a quasi-experimental approach, we found that Parler was effective in removing the most severe forms of toxicity and profanity. We also found that identity attacks by Parler users increased just after Parler came back online (at thresholds of 0.5 to 0.8). However, in the long term, Parler was effective in decreasing identity attacks.

(4) Our findings show an increase in both follower and following counts, as well as an uptick in users with verified and gold badges. This suggests potential growth in Parler’s user base and the continued presence of older users who were active before the moderation policy changes.

(5) We observed an improvement in factuality and credibility scores from the pre-moderation dataset to the post-moderation dataset, as well as a reduction in the sharing of conspiracy and pseudoscience source links. However, the sharing of questionable source links increased in the post-moderation change dataset.

2. Related Works

Fringe Communities: Over the past few years, scholars have extensively studied various fringe platforms such as Gab, Voat, and 4chan (Papasavva et al., 2020a; Hine et al., 2017; Bernstein et al., 2011; Tuters and Hagen, 2020; Zannettou et al., 2018; Sipka et al., 2022; Israeli and Tsur, 2022; Jasser et al., 2023). Parler is younger than these other fringe platforms; consequently, relatively few studies have focused on collecting data from Parler or on establishing a framework to do so (Aliapoulios et al., 2021a; donk_enby, 2021; Prabhu et al., 2021). Some studies have compared topics of discussion on Parler and Twitter (Prabhu et al., 2021; Sipka et al., 2022; Jakubik et al., 2022). Although there is existing work in this domain, most of it focuses on exploring the existence or prevalence of a single topic. Hitkul et al. (Prabhu et al., 2021) use the Capitol riots, a pivotal moment in Parler’s history, to compare topics of discussion between Parler and Twitter. Other works have analyzed the language on Parler in several respects, such as QAnon content (Sipka et al., 2022; Bär et al., 2023; Jakubik et al., 2023) and COVID-19 vaccines (Baines et al., 2021). Our work differs in that we study changes localized to Parler and how users reacted to Parler’s brief hiatus.

Studies about Deplatforming: Jhaver et al. (Jhaver et al., 2021) examined how deplatforming three influential users on Twitter affected their supporters. They found that banning significantly reduced the number of conversations about all three individuals on Twitter and that the toxicity levels of their supporters declined. Trujillo & Cresci (Trujillo and Cresci, 2022) found that interventions had strong positive effects in reducing the activity of problematic users both inside and outside of r/The_Donald. Other studies have examined the effects of deplatforming individuals on the sites to which sanctioned influencers moved after being deplatformed (Ali et al., 2021; Rauchfleisch and Kaiser, 2021; Rogers, 2020; Newell et al., 2016; Ribeiro et al., 2020; Russo et al., 2023; Horta Ribeiro et al., 2023). These studies found a common result: deplatforming significantly decreased the reach of the deplatformed users, but their hateful and toxic rhetoric increased. Unlike previous studies, instead of exploring how users’ discourse changes when they switch to different platforms, we examine the same users’ discourse when their platform adopts stricter content moderation policies.

Hate Speech Detection and Classification: Empirical work on toxicity has employed machine-learning-based detection algorithms to identify and classify offensive language, hate speech, and cyberbullying (Zhang et al., 2016; Davidson et al., 2017; Koratana and Hu, 2018; Pitsilis et al., 2018). These methods use a variety of features, including lexical properties such as n-gram features (Nobata et al., 2016), character n-gram features (Mehdad and Tetreault, 2016), character n-gram, demographic, and geographic features (Waseem and Hovy, 2016), sentiment scores (Dinakar et al., 2012; Sood et al., 2012; Gitari et al., 2015), average word and paragraph embeddings (Nobata et al., 2016; Djuric et al., 2015), and linguistic, psychological, and affective features inferred using an open vocabulary approach (ElSherief et al., 2018). A state-of-the-art toxicity detection tool is available through Google’s Perspective API (Google Perspective API, 2020), which has been used extensively in prior studies (Kumarswamy et al., 2022; Salehabadi et al., 2022; Zannettou et al., 2020; Gröndahl et al., 2018; ElSherief et al., 2018; Saveski et al., 2021; Papasavva et al., 2020b; Aleksandric et al., 2022; Hickey et al., 2023).

Media Bias Fact Check: Gruppi et al. (Gruppi et al., 2021b) used the MBFC service to label news websites, as well as the tweets embedded in their articles pertaining to COVID-19 and the 2020 US presidential election. Weld et al. (Weld et al., 2021) analyzed more than 550 million links spanning 4 years on Reddit using MBFC. MBFC is widely used to label the credibility and factuality of news sources for downstream analysis (Main, 2018; Heydari et al., 2019; Starbird, 2017; Darwish et al., 2017; Nelimarkka et al., 2018; Etta et al., 2022; Bovet and Makse, 2019; Cinelli et al., 2020, 2021; Weld et al., 2021) and as ground truth for prediction tasks (Dinkov et al., 2019; Stefanov et al., 2020; Patricia Aires et al., 2019; Bozarth et al., 2020; Gruppi et al., 2021a).

3. Background & Data Collection

We now describe Parler and our data collection methodology in detail.

Parler: Parler was a microblogging website launched in August 2018. Parler marketed itself as being “built upon a foundation of respect for privacy and personal data, free speech, free markets, and ethical, transparent corporate policy” (Herbert, 2020). Parler was known for its minimal restrictions, and many right-wing individuals, citing censorship on mainstream platforms, joined it (Singhal et al., 2023b; con, 2020). However, after the 2021 US Capitol riots were linked to the activity of some groups on Parler, such as QAnon and the Proud Boys, Parler was removed from the Apple App Store, the Google Play Store, and Amazon Web Services on January 12, 2021 (Fung, 2021). Parler had to change its hate speech policy considerably to return to the Apple App Store (Lerman, 2021). Parler came back online with a new cloud service provider in early February 2021 (Roberston, 2021). On April 14th, 2023, Parler was bought by the digital media conglomerate Starboard, and it has been offline since then (Weatherbed, 2023).

Pre Policy Change Dataset: The data collection tool used by Aliapoulios et al. (Aliapoulios et al., 2021a) collected user information for almost all of the users present at the time, based on estimates published by Parler. They also collected posts and comments from these users dating back to 2018, when Parler was created. The study collected user information for more than 13.25M users and randomly selected 4M users, from whom they obtained about 99M posts (or parleys) and about 85M comments from August 1st, 2018 to January 11, 2021. In our study, we call this dataset the pre-moderation change dataset. We used the 4M users as the seed dataset to collect data after the moderation changes instituted by Parler.

Post Policy Change Parler Dataset: In February 2021, Parler started to employ a different mechanism for authenticating API requests. Due to these changes, we could no longer collect data from all users. Hence, we first obtained the list of 4M users (a random subset of the 13.25M users) provided in the pre-moderation change dataset (Aliapoulios et al., 2021b) and used our custom-built framework to collect the content posted by the same users. Using this framework, we collected the post body, any URLs posted, a URL to the location of any media posted, the date posted, the number of echoes, and other metadata, such as the badges of the poster. Since Aliapoulios et al. (Aliapoulios et al., 2021a) obtained the metadata of 13.25M users, we also attempted to collect the metadata of these users.

Post Policy Change Dataset Statistics: From the 4M users, we collected 17,389,610 parleys from 432,654 active users. Our dataset consists of parleys from February 1st, 2021 to January 15th, 2022. We used the /pages/feed endpoint, which returns the parleys (posts) of a specific user given their username. Note that this endpoint differs from the endpoint used to collect the 13.25M users’ metadata; hence, we were only able to obtain 432K users’ parleys. Many users from the initial seed dataset of 4M were no longer active, had deleted their accounts, or had changed their usernames. We label them as missing users. Although we do not know whether these users were suspended by Parler or decided to leave it, we nevertheless analyzed them and compared them with the users who remained active. Since we only collected posts from 432,654 users, we acknowledge that certain trends and analyses might not accurately reflect the platform as a whole. However, in January 2022, months after returning to the Apple App Store, Parler disclosed an estimate of around 700,000 to 1M active users (par, 2022). This suggests that we collected a substantial part of the platform’s data on which to base our findings.
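To put the coverage argument in numbers, the sketch below divides the number of users whose parleys were collected by the two ends of Parler’s self-reported estimate. It assumes, as a simplification the text does not establish, that every collected user counts as "active" in Parler’s sense.

```python
# Rough coverage estimate: share of Parler's self-reported active users
# represented in the post-moderation crawl. Figures come from the text;
# treating every collected user as "active" in Parler's sense is an assumption.
COLLECTED_USERS = 432_654
ACTIVE_ESTIMATE_LOW = 700_000
ACTIVE_ESTIMATE_HIGH = 1_000_000


def coverage(collected: int, active_estimate: int) -> float:
    """Fraction of an estimated active user base covered by the crawl."""
    if active_estimate <= 0:
        raise ValueError("active_estimate must be positive")
    return collected / active_estimate


# The larger estimate yields the smaller coverage, and vice versa.
lower_bound = coverage(COLLECTED_USERS, ACTIVE_ESTIMATE_HIGH)
upper_bound = coverage(COLLECTED_USERS, ACTIVE_ESTIMATE_LOW)
print(f"Coverage: {lower_bound:.1%} to {upper_bound:.1%}")
```

Under that assumption, the crawl would cover roughly 43% to 62% of Parler’s reported active users.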

These 17M parleys contained around 9M links, along with plain text in the body. A majority of these posts were primary posts, i.e., original parleys that are not echoes of another parley and therefore have no parent. For each parley, we collected the full-text body, a URL if a link was shared, the title of the parley, the date of creation, flags for trolling, sensitive, and self-reported content, an upvoted flag, and counters of echoes and likes. Parler’s trolling flag might be set manually by moderators or automatically by the platform. We also attempted to collect profile information for the 13.25M users in the pre-policy change dataset, using the /pages/profile/view endpoint, which returns a user’s metadata. We found that 12,497,131 of these users still had a valid Parler account, so we could collect metadata for them. For a vast majority of the accounts, Parler returned the number of followers, the number of accounts followed, the account status (available or deleted), the number and types of badges given to the user, a description of all badges available on Parler at the time of collection, the date of parley creation, whether the account is private or public, and whether the account is followed by or is a follower of the logged-in user. A minority of profiles have one or more of these fields missing due to changes on the Parler platform between the time the user created the account and the time of data collection.
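The primary-post definition above amounts to a simple filter. The sketch below uses a hypothetical `Parley` record whose field names (`parent_id`, `url`) are illustrative only and are not Parler’s actual API schema; a parley is treated as primary when it has no parent.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Parley:
    # Hypothetical record layout for illustration; not Parler's API field names.
    parley_id: str
    body: str
    parent_id: Optional[str] = None  # None for an original parley (not an echo)
    url: Optional[str] = None        # shared link, if any


def is_primary(p: Parley) -> bool:
    """A primary post is an original parley with no parent, i.e., not an echo."""
    return p.parent_id is None


def split_primary(parleys: List[Parley]) -> Tuple[List[Parley], List[Parley]]:
    """Partition parleys into (primary posts, echoes)."""
    primary = [p for p in parleys if is_primary(p)]
    echoes = [p for p in parleys if not is_primary(p)]
    return primary, echoes


def count_links(parleys: List[Parley]) -> int:
    """Number of parleys that share a link."""
    return sum(1 for p in parleys if p.url)


sample = [
    Parley("a", "original post", url="https://example.com/story"),
    Parley("b", "", parent_id="a"),  # echo of "a"
    Parley("c", "another original post"),
]
primary, echoes = split_primary(sample)
print(len(primary), len(echoes), count_links(sample))
```

For the three-record sample, this prints `2 1 1`: two primary posts, one echo, and one shared link.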

Twitter Data Collection (Baseline): Major and contentious events, such as the U.S. presidential election, can influence the toxicity on any social media platform. Since our statistical analysis cannot account for such confounding factors directly, we hypothesize that if such events drove the changes, similar trends would be observable on other social media platforms like Twitter, which did not implement policy changes during the same period. If we observe different trends or different effect sizes, we can be more confident that the shifts in outcomes are attributable to Parler’s content moderation adjustments. For a comparative baseline, we gathered a sample of Twitter data from the same timeframe and analyzed the trends before and after Parler’s policy changes. We used the Twitter V2 API (Twitter, 2022) and collected posts daily, covering the same timelines as the Parler datasets. To work within the API restrictions, we collected 27K posts per day and restricted the posts to English. In total, we collected 24.16M posts for the pre-policy timeline and 9.69M for the post-policy timeline.
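The text does not specify how the daily samples were requested from the API, so the sketch below only illustrates the two stated constraints, an English-only filter and a cap of 27K posts per day, applied to records already downloaded. The record layout (a `lang` code and a `day` already parsed to `datetime.date`) is a hypothetical assumption.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List

DAILY_CAP = 27_000  # per-day sample size stated in the text


def daily_english_sample(tweets: Iterable[dict], cap: int = DAILY_CAP,
                         lang: str = "en") -> Dict[date, List[dict]]:
    """Keep at most `cap` tweets per calendar day whose language tag equals `lang`.

    Each tweet is assumed to be a dict with a `lang` code and a `day` field
    already parsed to datetime.date (a hypothetical, pre-processed layout).
    Tweets are kept in arrival order until the day's cap is reached.
    """
    kept: Dict[date, List[dict]] = defaultdict(list)
    for tweet in tweets:
        if tweet.get("lang") != lang:
            continue  # drop non-English posts
        bucket = kept[tweet["day"]]
        if len(bucket) < cap:
            bucket.append(tweet)
    return dict(kept)
```

Capping per day rather than per collection window keeps each day equally weighted in the resulting time series, which matters when the series is later regressed on time.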

Ethical consideration. We only gathered posts from Parler profiles set to public and did not attempt to access private accounts. We made no attempt to gather this data maliciously; instead, we used the same backend APIs from which a user’s browser requests data. From the Twitter API, we only obtained a random sample and did not collect any metadata about users whose profiles were set to private. We also follow standard ethical guidelines (Rivers and Lewis, 2014) and do not attempt to track users across sites or deanonymize them.

4. Methodology

We now describe how we operationalized users’ behavior and characteristics to answer our two research questions.

Measuring Toxicity Scores. To understand the differences in user discourse between the pre-moderation and post-moderation datasets, we used Google’s Perspective API, a state-of-the-art toxicity detection tool (Google Perspective API, 2020). This AI-based tool analyzes the provided text and assigns a score between 0 and 1 for each attribute, with a higher score indicating greater severity for that attribute. We obtained the following attributes:

- Toxicity: Perspective defines toxicity as a rude, disrespectful, or unreasonable comment that is likely to make people leave a discussion.

- Severe toxicity: Perspective defines it as a very hateful, aggressive, disrespectful comment or otherwise very likely to make a user leave a discussion or give up on sharing their perspective.

- Profanity: Perspective defines profanity as swear words, curse words, or other obscene or profane language.

- Identity Attacks: Defined as negative or hateful comments targeting someone because of their identity.

- Threats: Describes an intention to inflict pain, injury, or violence against an individual or group.

- Insults: This attribute is defined by Perspective as an insulting, inflammatory, or negative comment towards a person or a group of people.
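The six attributes above correspond to the Perspective attribute names TOXICITY, SEVERE_TOXICITY, PROFANITY, IDENTITY_ATTACK, THREAT, and INSULT. The sketch below builds the JSON body of an AnalyzeComment request (which would be POSTed to `https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze` with an API key) and extracts the summary scores from a response. It does not perform the HTTP call, and the example response is made up solely to show the structure.

```python
import json
from typing import Dict

# Perspective attribute names for the six attributes listed above.
ATTRIBUTES = ["TOXICITY", "SEVERE_TOXICITY", "PROFANITY",
              "IDENTITY_ATTACK", "THREAT", "INSULT"]


def build_analyze_request(text: str, language: str = "en") -> Dict:
    """JSON body for a Perspective AnalyzeComment request."""
    return {
        "comment": {"text": text},
        "languages": [language],  # English was used for all posts
        "requestedAttributes": {name: {} for name in ATTRIBUTES},
    }


def summary_scores(response: Dict) -> Dict[str, float]:
    """Extract the 0-1 summary score of each requested attribute from a response."""
    scores = response.get("attributeScores", {})
    return {name: scores[name]["summaryScore"]["value"]
            for name in ATTRIBUTES if name in scores}


body = build_analyze_request("example post text")
print(json.dumps(body, indent=2))

# Illustrative response shape only; the numbers are placeholders, not real scores.
fake_response = {"attributeScores": {
    "TOXICITY": {"summaryScore": {"value": 0.12, "type": "PROBABILITY"}},
    "INSULT": {"summaryScore": {"value": 0.05, "type": "PROBABILITY"}},
}}
print(summary_scores(fake_response))
```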

For our study, we collected the likelihood scores for each attribute. We set English as the language for all posts because previous studies showed that a large majority of Parler’s user base used English to communicate with other Parler users (Aliapoulios et al., 2021a). Before sending the posts to the Perspective API, we pre-processed them by removing URLs, hashtags, etc., since these can lead to inaccurate scores or to errors when the API computes the scores. We also pre-processed our Twitter (baseline) dataset and obtained its scores via the Perspective API. Although the Perspective API has known weaknesses in detecting toxicity (Hosseini et al., 2017; Welbl et al., 2021), prior work has found it successful in detecting forms of toxic language in generated text (Ovalle et al., 2023), and multiple prior works have used it for toxicity detection (Aleksandric et al., 2022; ElSherief et al., 2018; Saveski et al., 2021; Hickey et al., 2023); hence, it is reasonable to use the API for our study.
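The pre-processing step can be sketched with regular expressions. The text names URLs and hashtags explicitly and leaves the rest ("etc.") unspecified, so this sketch handles only those two; the patterns, and the choice to skip posts that end up empty, are our assumptions.

```python
import re

# Patterns for elements removed before scoring. Only URLs and hashtags are
# handled; the exact patterns are an assumption, not the authors' code.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
HASHTAG_RE = re.compile(r"#\w+")
WHITESPACE_RE = re.compile(r"\s+")


def preprocess(text: str) -> str:
    """Strip URLs and hashtags, then collapse runs of whitespace."""
    text = URL_RE.sub(" ", text)      # remove URLs first so '#' fragments go with them
    text = HASHTAG_RE.sub(" ", text)
    return WHITESPACE_RE.sub(" ", text).strip()


def scorable(text: str) -> bool:
    """Skip posts that become empty after cleaning (e.g., link-only posts)."""
    return bool(preprocess(text))


print(preprocess("Read this https://example.com/a?b=1 #news now"))
print(scorable("https://example.com #tag"))
```

The first call prints `Read this now`; the second prints `False`, since a post consisting only of a link and a hashtag leaves nothing to score.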

Assessing Bias and Factuality: We analyzed the links shared on Parler using the Media Bias Fact Check (MBFC) service (mbf, 2022a). MBFC is an independent organization that uses volunteer and paid contributors to rate and store information about news websites (mbf, 2022a). MBFC can be used to measure the factuality of a URL, the presence of bias, the country of origin, and whether the source is a conspiracy or pseudoscience, questionable, or pro-science source. We used the links shared in both of our datasets to obtain labels for:

- Factuality: How factual a website is, scored from 0 to 5, where 0 means that a website is not factual and 5 means that it is very factual. According to MBFC, to be rated very factual (a score of 5), a website must pass its fact-checking test and must not omit critical information.

- Bias: MBFC assigns a bias rating of extreme left, left, left-center, least biased, right-center, right, or extreme right. To assign a bias rating, MBFC contributors examine the website’s stance on American issues that divide left-biased websites from right-biased websites (mbf, 2021).

- Presence of conspiracy-pseudoscience: Websites that publish unverified information related to known conspiracies such as the New World Order, Illuminati, false flags, aliens, anti-vaccine claims, etc.

- Usage of questionable sources: MBFC defines a questionable source as one that exhibits any of the following: extreme bias, overt propaganda, poor or no sourcing to credible information, a complete lack of transparency, and/or fake news. Fake news is the deliberate attempt to publish hoaxes and/or disinformation for profit or influence (mbf, 2022b).
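Once each domain has an MBFC rating, comparing the two datasets reduces to aggregating labels over shared links. The sketch below assumes the ratings are already stored locally as a dictionary keyed by domain (how MBFC data is accessed is not described here) and that links are matched at the domain level; both the label layout and the domain matching are our assumptions, and the bias label is omitted for brevity.

```python
from statistics import mean
from typing import Dict, Iterable
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Lower-cased host name without a leading 'www.' or port."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def summarize_links(urls: Iterable[str], labels: Dict[str, dict]) -> dict:
    """Aggregate MBFC-style labels over shared links whose domain is labelled.

    `labels` maps a domain to a dict with keys 'factuality' (0-5),
    'conspiracy' (bool), and 'questionable' (bool); this layout is hypothetical.
    Links to unlabelled domains are ignored.
    """
    matched = [labels[d] for d in map(domain_of, urls) if d in labels]
    if not matched:
        return {"matched": 0}
    n = len(matched)
    return {
        "matched": n,
        "mean_factuality": mean(m["factuality"] for m in matched),
        "share_conspiracy": sum(m["conspiracy"] for m in matched) / n,
        "share_questionable": sum(m["questionable"] for m in matched) / n,
    }
```

Running this separately on the pre- and post-moderation link lists gives directly comparable summaries; counting every shared link (rather than each distinct domain once) weights popular sources by how often they were shared.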

Causal Inference: We employed a quasi-experimental approach to measure the effect of Parler’s content moderation change, using a causal inference strategy called Interrupted Time Series (ITS) regression analysis (Bernal et al., 2017). In an ITS analysis, we track the dependent variable (here, the toxicity attributes) over time and use regression to determine whether a treatment at a specific time (here, the changes to Parler’s content moderation policies after its return) caused a significant change in that variable. The ITS regression models the behavior of the pre-treatment time series to predict how the series would have looked had the treatment not been applied (Jhaver et al., 2021). Regressing the behavior on time is important; otherwise, a steadily increasing (or decreasing) time series could easily be misinterpreted as a treatment effect when comparing the average behavior before and after the treatment (Bernal et al., 2017). Hence, ITS analysis allows us to claim that toxicity changed at the time of the moderation change, rather than simply reflecting a general trend. To analyze users’ rhetoric, we employ a linear regression model and, for different thresholds of the Perspective scores, separate logistic regression models:

$$Y = \beta_0 + \beta_1 T + \beta_2 D + \beta_3 P + \varepsilon \qquad (1)$$

where $Y$ is the toxicity score; $T$ is a continuous variable indicating the time in days since the start of the observational period; $D$ is a dummy variable indicating whether an observation was collected before ($D=0$) or after ($D=1$) the moderation change; and $P$ is a continuous variable indicating the time in days since the intervention occurred ($P=0$ before the intervention). $\beta_1$ captures the trend before the moderation change, $\beta_2$ the immediate change upon the moderation change, and $\beta_3$ the sustained effect after it. In the logistic models, the left-hand side is the log-odds that the score exceeds the given threshold.
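A minimal sketch of fitting the linear ITS model in Equation (1) with NumPy least squares follows. It assumes a daily series (the text does not state the unit of analysis), uses synthetic data rather than the paper’s, and returns point estimates only; the p-values reported in the tables would additionally require standard errors, ideally robust to autocorrelation, which this sketch omits.

```python
import numpy as np


def its_design(n_days: int, intervention_day: int) -> np.ndarray:
    """Design matrix for Equation (1): columns [1, T, D, P].

    T: days since the start of the observation period,
    D: 0 before the intervention and 1 from `intervention_day` on,
    P: days since the intervention (0 before it).
    """
    T = np.arange(n_days, dtype=float)
    D = (T >= intervention_day).astype(float)
    P = np.where(D == 1.0, T - intervention_day, 0.0)
    return np.column_stack([np.ones(n_days), T, D, P])


def fit_its(y: np.ndarray, intervention_day: int) -> np.ndarray:
    """Ordinary least squares estimates [beta0, beta1, beta2, beta3]."""
    X = its_design(len(y), intervention_day)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Synthetic daily series (not the paper's data): a mild upward pre-trend,
# an immediate drop at day 330, and a slightly negative post-change slope.
rng = np.random.default_rng(0)
true_beta = np.array([0.20, 1e-4, -0.03, -2e-4])
X = its_design(660, 330)
y = X @ true_beta + rng.normal(scale=0.005, size=660)
print(np.round(fit_its(y, 330), 5))
```

Because $T$ keeps running after the intervention, $\beta_3$ is the change in slope relative to the pre-change trend, not the post-change slope itself.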

5. Results

5.1. Impact of Changes to Parler Content Moderation

To obtain comparable observation windows, and since our post-moderation dataset spans approximately 11 months, we restricted the pre-moderation dataset to 11 months (i.e., February 2020 to January 2021). We applied the same step to our Twitter dataset. Table 1 shows the results of our ITS analysis. Since we cannot control for various confounding factors, we compared the trends observed on Parler to those on Twitter, using Twitter as a baseline to understand whether the changes were local to Parler or part of a broader trend across social media platforms.
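The windowing step can be sketched as a date filter followed by a per-day average. Two assumptions are ours, not the text’s: that the linear ITS model is fitted to daily mean scores, and the exact boundary days used for "February 2020 to January 2021".

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple

# Assumed window boundaries for "February 2020 to January 2021"; the exact
# start and end days are not given in the text.
PRE_START, PRE_END = date(2020, 2, 1), date(2021, 1, 31)


def daily_means(records: Iterable[Tuple[date, float]],
                start: date, end: date) -> Dict[date, float]:
    """Mean score per calendar day for (day, score) records within [start, end]."""
    sums: Dict[date, float] = defaultdict(float)
    counts: Dict[date, int] = defaultdict(int)
    for day, score in records:
        if start <= day <= end:
            sums[day] += score
            counts[day] += 1
    # Days with no posts are simply absent; they are not filled with zeros.
    return {day: sums[day] / counts[day] for day in sorted(sums)}
```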

| Attribute | Event | Parler | Twitter (baseline) |
|---|---|---|---|
| Toxicity | Pre-change | -4.922e-10 (0.000)∗∗∗ | 1.466e-05 (0.003)∗∗ |
| Toxicity | Immediate effect | 0.1478 (0.000)∗∗∗ | -0.0212 (0.001)∗∗ |
| Toxicity | Long-term effect | 5.807e-07 (0.000)∗∗∗ | -1.466e-05 (0.003)∗∗ |
| Severe Toxicity | Pre-change | -9.119e-11 (0.000)∗∗∗ | 8.008e-07 (0.114) |
| Severe Toxicity | Immediate effect | -0.0334 (0.000)∗∗∗ | -0.0020 (0.002)∗∗ |
| Severe Toxicity | Long-term effect | -2.068e-08 (0.000)∗∗∗ | -8.009e-07 (0.114) |
| Profanity | Pre-change | 2.235e-10 (0.000)∗∗∗ | 1.501e-05 (0.002)∗∗ |
| Profanity | Immediate effect | -0.0439 (0.000)∗∗∗ | -0.0192 (0.001)∗∗ |
| Profanity | Long-term effect | -3.335e-08 (0.000)∗∗∗ | -1.501e-05 (0.002)∗∗ |
| Threat | Pre-change | -9.392e-10 (0.000)∗∗∗ | 3.218e-07 (0.769) |
| Threat | Immediate effect | 0.2229 (0.000)∗∗∗ | -0.0033 (0.018)∗ |
| Threat | Long-term effect | 6.897e-07 (0.000)∗∗∗ | -3.218e-07 (0.769) |
| Insult | Pre-change | -4.902e-10 (0.000)∗∗∗ | 2.444e-06 (0.423) |
| Insult | Immediate effect | 0.1742 (0.000)∗∗∗ | 0.0054 (0.161) |
| Insult | Long-term effect | 6.538e-07 (0.000)∗∗∗ | -2.444e-06 (0.423) |
| Identity Attack | Pre-change | -1.56e-09 (0.000)∗∗∗ | -1.495e-06 (0.300) |
| Identity Attack | Immediate effect | -0.0032 (0.000)∗∗∗ | 0.0036 (0.045)∗ |
| Identity Attack | Long-term effect | -2.493e-08 (0.000)∗∗∗ | 1.495e-06 (0.300) |

Note: ∗p<0.05; ∗∗p<0.01; ∗∗∗p<0.001

As the table shows, after Parler came back online, Severe Toxicity, Profanity, and Identity Attack all decreased significantly. On Twitter, Severe Toxicity and Profanity also decreased immediately, whereas Identity Attack increased slightly (p<0.05). The sustained effects differ between the two platforms: for both Severe Toxicity and Identity Attack, the long-term decrease on Parler was small but statistically significant, whereas the corresponding long-term effects on Twitter were not statistically significant. Interestingly, the Toxicity, Threat, and Insult scores of Parler users increased significantly and had a sustained long-term effect. On Twitter, by contrast, Toxicity decreased both immediately and in the long term, Threat decreased only immediately, and Insult did not change significantly.
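To see how the immediate and long-term rows combine, note that under the model in Equation (1) the gap between the fitted post-change level and the extrapolated pre-change trend is $\beta_2 + \beta_3 P$. The sketch below evaluates this gap for Parler’s Toxicity coefficients from Table 1, reading them as linear-model estimates with $P$ in days (our interpretation of the table).

```python
def effect_vs_counterfactual(beta2: float, beta3: float, days_after: float) -> float:
    """Gap between the fitted post-change level and the extrapolated pre-change
    trend under Equation (1): (b0 + b1*T + b2 + b3*P) - (b0 + b1*T) = b2 + b3*P."""
    return beta2 + beta3 * days_after


# Parler Toxicity coefficients from Table 1, read as linear-model estimates.
IMMEDIATE, LONG_TERM = 0.1478, 5.807e-07
for k in (0, 30, 300):
    print(k, round(effect_vs_counterfactual(IMMEDIATE, LONG_TERM, k), 6))
```

Over 300 days, the long-term term adds less than 0.0002 to the immediate shift of 0.1478, so for these coefficients the immediate effect dominates the estimated gap.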

| Toxicity (0.5) | Toxicity (0.6) | Toxicity (0.7) | Toxicity (0.8) | Toxicity (0.9) | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Event | Parler | Parler | Parler | Parler | Parler | |||||

| Pre-change | -7.9e-09 (0.0)∗∗∗ | 0.0003 (0.03)∗ | -4.1e-09 (0.0)∗∗∗ | 0.001 (0.01)∗∗ | -1.14e-09 (0.0)∗∗∗ | 0.001 (0.002)∗∗ | 1.04e-09 (0.0)∗∗∗ | 0.001 (0.001)∗∗ | 1.28e-08 (0.0)∗∗∗ | 0.0004 (0.47) |

| Immediately effect | 0.71 (0.000)∗∗∗ | -0.4 (0.03)∗ | 0.88 (0.0)∗∗∗ | -0.63 (0.002)∗∗ | -0.61 (0.0)∗∗∗ | -1.3 (0.0)∗∗∗ | -0.8 (0.0)∗∗∗ | -2.1 (0.0)∗∗∗ | -0.9(0.0)∗∗∗ | -1.34 (0.04)∗ |

| Long-term effect | 7.9e-06 (0.0)∗∗∗ | -0.0003 (0.03)∗ | 8.1e-06 (0.0)∗∗∗ | -0.001 (0.01)∗∗ | -7.3e-06 (0.0)∗∗∗ | -0.001 (0.002)∗∗ | -7.2e-06 (0.0)∗∗∗ | -0.001 (0.001)∗∗ | -7.3e-06 (0.0)∗∗∗ | -0.0004 (0.47) |

| Severe Toxicity (0.5) | Severe Toxicity (0.6) | Severe Toxicity (0.7) | Severe Toxicity (0.8) | Severe Toxicity (0.9) | ||||||

| Event | Parler | Parler | Parler | Parler | Parler | |||||

| Pre-change | -3.78e-09 (0.0)∗∗∗ | 0.001 (0.39) | 2.02e-10 (0.45) | -0.002 (0.39) | 4.52e-09 (0.0)∗∗∗ | 0.001 (0.99) | 1.03e-08 (0.0)∗∗∗ | N/A | 1.5e-08 (0.0)∗∗∗ | N/A |

| Immediately effect | -0.6 (0.0)∗∗∗ | -2 (0.014)∗ | -0.7 (0.0)∗∗∗ | 2 (0.64) | -1.3 (0.0)∗∗∗ | 7 (0.99) | -1.4 (0.0)∗∗∗ | N/A | -1.9 (0.0)∗∗∗ | N/A |

| Long-term effect | -7.4e-06 (0.0)∗∗∗ | 0.0006 (0.39) | -7.7e-06 (0.0)∗∗∗ | 0.002 (0.39) | -6.7e-06 (0.0)∗∗∗ | -0.001 (0.99) | -7.3e-06 (0.0)∗∗∗ | N/A | -8.98e-06 (0.0)∗∗∗ | N/A |

| Profanity (0.5) | Profanity (0.6) | Profanity (0.7) | Profanity (0.8) | Profanity (0.9) | ||||||

| Event | Parler | Parler | Parler | Parler | Parler | |||||

| Pre-change | 1.5e-09 (0.0)∗∗∗ | 0.0004 (0.01)∗ | 3.3e-09 (0.0)∗∗∗ | 0.001 (0.005)∗∗ | 6.5e-09 (0.0)∗∗∗ | 0.001 (0.005)∗∗ | 1.1e-08 (0.0)∗∗∗ | 0.001 (0.02)∗ | 2.1e-08 (0.0)∗∗∗ | 0.001 (0.02)∗ |

| Immediately effect | -0.41 (0.0)∗∗∗ | -0.38 (0.05) | -0.55 (0.0)∗∗∗ | -0.62 (0.002)∗∗ | -0.65 (0.0)∗∗∗ | -0.93 (0.0)∗∗∗ | -0.84 (0.0)∗∗∗ | -1.71 (0.0)∗∗∗ | -1.12 (0.0)∗∗∗ | -3.38 (0.0)∗∗∗ |

| Long-term effect | -7.5e-06 (0.0)∗∗∗ | -0.0004 (0.01)∗ | -7.7e-06 (0.0)∗∗∗ | -0.001 (0.005)∗∗ | -7.5e-06 (0.0)∗∗∗ | -0.001 (0.005)∗∗ | -7.1e-06 (0.0)∗∗∗ | -0.001 (0.02)∗ | -7e-06 (0.0)∗∗∗ | -0.001 (0.02)∗ |

| Threat | Parler (0.5) | Twitter (0.5) | Parler (0.6) | Twitter (0.6) | Parler (0.7) | Twitter (0.7) | Parler (0.8) | Twitter (0.8) | Parler (0.9) | Twitter (0.9) |
|---|---|---|---|---|---|---|---|---|---|---|
| Pre-change | -2.2e-08 (0.0)∗∗∗ | 0.0001 (0.78) | -2.14e-08 (0.0)∗∗∗ | -0.004 (0.16) | -1.9e-08 (0.0)∗∗∗ | 0.001 (0.98) | -1.2e-08 (0.0)∗∗∗ | 0.001 (N/A) | 6.7e-09 (0.0)∗∗∗ | 0.002 (0.99) |
| Immediate effect | 1.48 (0.0)∗∗∗ | -1.05 (0.02)∗ | 1.84 (0.0)∗∗∗ | 5.48 (0.28) | 2.12 (0.0)∗∗∗ | 4.32 (0.91) | -0.41 (0.0)∗∗∗ | 5.9 (0.98) | -1.12 (0.0)∗∗∗ | 3.33 (0.99) |
| Long-term effect | 8.1e-06 (0.0)∗∗∗ | -0.0001 (0.78) | 8.3e-06 (0.0)∗∗∗ | 0.004 (0.161) | 8.3e-06 (0.0)∗∗∗ | -0.001 (0.98) | -8.1e-06 (0.0)∗∗∗ | -0.001 (N/A) | -7.9e-06 (0.0)∗∗∗ | -0.002 (0.99) |

| Insult | Parler (0.5) | Twitter (0.5) | Parler (0.6) | Twitter (0.6) | Parler (0.7) | Twitter (0.7) | Parler (0.8) | Twitter (0.8) | Parler (0.9) | Twitter (0.9) |
|---|---|---|---|---|---|---|---|---|---|---|
| Pre-change | -6.9e-09 (0.0)∗∗∗ | 0.0004 (0.22) | -4.4e-09 (0.0)∗∗∗ | 0.001 (0.17) | -2.8e-09 (0.0)∗∗∗ | -0.001 (0.47) | -2.6e-09 (0.0)∗∗∗ | 0.002 (0.37) | -0.58 (0.0)∗∗∗ | 23.86 (0.99) |
| Immediate effect | 0.76 (0.0)∗∗∗ | 0.43 (0.27) | 0.91 (0.0)∗∗∗ | -0.03 (0.94) | -0.64 (0.0)∗∗∗ | 1.91 (0.19) | -0.71 (0.0)∗∗∗ | -1.1 (0.46) | -1.12 (0.0)∗∗∗ | 3.33 (0.99) |
| Long-term effect | 7.9e-06 (0.0)∗∗∗ | -0.0004 (0.22) | 8.1e-06 (0.0)∗∗∗ | -0.001 (0.17) | -7e-06 (0.0)∗∗∗ | 0.001 (0.47) | -7.1e-06 (0.0)∗∗∗ | -0.002 (0.37) | -7.3e-06 (0.0)∗∗∗ | 0.005 (0.98) |

| Identity Attack | Parler (0.5) | Twitter (0.5) | Parler (0.6) | Twitter (0.6) | Parler (0.7) | Twitter (0.7) | Parler (0.8) | Twitter (0.8) | Parler (0.9) | Twitter (0.9) |
|---|---|---|---|---|---|---|---|---|---|---|
| Pre-change | -4.8e-08 (0.0)∗∗∗ | -0.001 (0.2) | -5.9e-08 (0.0)∗∗∗ | -0.002 (0.39) | -7.9e-08 (0.0)∗∗∗ | -0.002 (0.38) | -9.4e-08 (0.0)∗∗∗ | 0.002 (N/A) | -9.2e-08 (0.0)∗∗∗ | N/A |
| Immediate effect | 0.39 (0.0)∗∗∗ | 2 (0.07) | 0.41 (0.0)∗∗∗ | 4.57 (0.27) | 0.64 (0.0)∗∗∗ | 3.03 (0.46) | 0.94 (0.0)∗∗∗ | 9.17 (0.99) | -0.9 (0.0)∗∗∗ | N/A |
| Long-term effect | -6.8e-06 (0.0)∗∗∗ | 0.001 (0.2) | -6.9e-06 (0.0)∗∗∗ | 0.002 (0.39) | -7.2e-06 (0.0)∗∗∗ | 0.002 (0.38) | -9.4e-06 (0.0)∗∗∗ | -0.002 (N/A) | -5.8e-06 (0.0)∗∗∗ | N/A |
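The three rows reported for each attribute can be read against the standard segmented-regression form of ITS (Bernal et al., 2017). We assume here that the models follow this form: $T$ denotes the time of a parley, $T_0$ the date Parler returned online, $X_T$ an indicator equal to 1 for $T \ge T_0$, $Y_T$ the Perspective score (linear model), and $p_T$ the probability that the score is at or above the threshold $t$ (logistic model):

$$
Y_T = \beta_0 + \beta_1 T + \beta_2 X_T + \beta_3 (T - T_0) X_T + \varepsilon_T
$$

$$
\log\frac{p_T}{1 - p_T} = \beta_0 + \beta_1 T + \beta_2 X_T + \beta_3 (T - T_0) X_T
$$

Under this reading, Pre-change is $\beta_1$ (the trend before the change), Immediate effect is $\beta_2$ (the level change at $T_0$), and Long-term effect is $\beta_3$ (the change in slope after $T_0$); in the logistic model these coefficients are on the log-odds scale.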

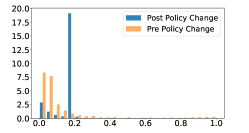

To understand why Toxicity, Threat, and Insult did not decrease, we plotted the histograms of all six attributes to compare their distributions in the pre- and post-moderation change datasets. Figure 1 shows the result. Interestingly, in Figure 1b, Figure 1e, and Figure 1f, there is a sharp jump in the post-moderation change scores around 0.7 for both Insult and Threat and around 0.65 for Toxicity. On the other hand, the scores for Identity Attack (Figure 1a), Profanity (Figure 1c), and Severe Toxicity (Figure 1d) are concentrated at the low end of the scale, and above 0.5 the post-moderation change dataset clearly has lower frequencies than the pre-moderation change dataset. Hence, the results of our ITS linear regression analysis suggest that, after Parler came back online, Identity Attack, Profanity, and Severe Toxicity in users’ parleys decreased significantly and that this decrease was sustained in the long term, whereas Toxicity, Threat, and Insult increased significantly, also with a sustained long-term effect.
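A minimal sketch of this histogram comparison, assuming the Perspective scores of one attribute for each period are available as plain lists of floats. The names `pre_scores` and `post_scores`, the helper functions, and the toy values are hypothetical; the sketch bins each period into a normalized histogram over [0, 1] and reports the share of parleys at or above 0.5, the region where the two periods are being compared.

```python
import numpy as np

def normalized_histogram(scores, bins=20):
    """Fraction of parleys in each of `bins` equal-width bins over [0, 1]."""
    counts, edges = np.histogram(scores, bins=bins, range=(0.0, 1.0))
    return counts / counts.sum(), edges

def share_at_or_above(scores, threshold=0.5):
    """Fraction of parleys whose score is >= threshold."""
    scores = np.asarray(scores, dtype=float)
    return float((scores >= threshold).mean())

# Hypothetical toy scores for one attribute (e.g. Severe Toxicity), not our data.
pre_scores = [0.05, 0.10, 0.20, 0.55, 0.60, 0.75, 0.90, 0.30]
post_scores = [0.02, 0.08, 0.15, 0.25, 0.40, 0.52, 0.10, 0.05]

pre_hist, edges = normalized_histogram(pre_scores)
post_hist, _ = normalized_histogram(post_scores)
print("share >= 0.5 (pre): ", share_at_or_above(pre_scores))
print("share >= 0.5 (post):", share_at_or_above(post_scores))
```

Normalizing each histogram by its own total matters here because the two datasets differ greatly in size, so raw counts would not be comparable.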

Further Analysis for Temporal Changes using Thresholds.

In Table 1, we observed that some attributes increased on Parler with a sustained long-term effect. We therefore analyzed whether Parler was in fact moderating users’ content only when it was severe, i.e., when its score was above a certain threshold. To do so, we ran additional ITS regression models with a binary dependent variable: the Perspective score was set to 0 if it was below a threshold (t) and to 1 otherwise (e.g., toxic or not toxic, insult or no insult). We used multiple threshold values: 0.5, 0.6, 0.7, 0.8, and 0.9. Table 2 shows the results.
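A minimal sketch of this thresholded model, assuming the standard segmented ITS design (intercept, time, a post-change indicator, and time since the change; Bernal et al., 2017) and plain maximum-likelihood logistic regression. The helpers `binarize`, `its_design`, and `fit_logit`, the time axis, the change point, and the synthetic scores are all hypothetical; in practice a statistics library (e.g. statsmodels’ `statsmodels.formula.api.logit`) would replace the hand-written Newton-Raphson loop.

```python
import numpy as np

def binarize(scores, threshold):
    """1 if the Perspective score is at or above `threshold`, else 0."""
    return (np.asarray(scores, dtype=float) >= threshold).astype(float)

def its_design(time, t0):
    """Columns: intercept, T (pre-change trend), X_T (level change), (T - T0) * X_T (slope change)."""
    time = np.asarray(time, dtype=float)
    post = (time >= t0).astype(float)
    return np.column_stack([np.ones_like(time), time, post, (time - t0) * post])

def fit_logit(X, y, iters=25):
    """Unregularized maximum-likelihood logistic regression via Newton-Raphson."""
    beta = np.zeros(X.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        w = p * (1.0 - p)
        hessian = X.T @ (X * w[:, None])
        gradient = X.T @ (y - p)
        beta = beta + np.linalg.solve(hessian, gradient)
    return beta

# Synthetic example: 2,000 parleys on a hypothetical time axis, change point at t0 = 10.
n = 2000
idx = np.arange(n)
time = idx / 100.0
t0 = 10.0
# Exactly 40% of parleys score >= 0.5 before t0 and 10% after.
flagged = np.where(time < t0, idx % 10 < 4, idx % 10 < 1)
scores = np.where(flagged, 0.8, 0.2)

y = binarize(scores, threshold=0.5)
beta = fit_logit(its_design(time, t0), y)
for name, b in zip(["intercept", "pre_change", "immediate", "long_term"], beta):
    print(f"{name:>10}: {b:+.3f}")
```

On this synthetic data the fitted `immediate` coefficient is clearly negative (a drop in the log-odds of a parley scoring at or above 0.5 right after t0), while `long_term` stays close to zero because the synthetic rates are flat within each period. Repeating the fit for t = 0.5, 0.6, ..., 0.9 yields one column per threshold, as in Table 2.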

Interestingly, we observe that Parler was actively moderating toxic posts for thresholds t ≥ 0.7: immediately after Parler returned online, there is a downward shift, and it is sustained in the long term as a small yet statistically significant decrease in users’ toxic rhetoric. Severe Toxicity and Profanity also show a significant reduction for t ≥ 0.5, with a sustained long-term impact. While in Table 1 we found that Identity Attack decreased after Parler came back online and that this effect was sustained, Table 2 shows a slightly different result: for thresholds 0.5 to 0.8, Identity Attack increased, and it decreased only for t = 0.9. We also notice that Threat and Insult were moderated only when they were severe in nature (t ≥ 0.8 and t ≥ 0.7, respectively).

Summary: In summary, our Interrupted Time Series (ITS) linear regression analysis revealed a statistically significant decrease in Severe Toxicity, Profanity, and Identity Attack among Parler users after Parler returned online with stricter moderation guidelines (as shown in Table 1). Although Severe Toxicity decreased significantly when Parler returned online, we do not observe a sustained decrease in users’ rhetoric. We also observe that Toxicity, Threat, and Insult of Parler users increased significantly and that these increases were sustained in the long term. Compared with our baseline, we notice that Toxicity and Threat were generally decreasing. Interestingly, while Profanity decreased on both Parler and Twitter, the coefficients for Twitter were bigger than those of Parler for both events (-0.02 vs. -0.04 and -1.501e-05 vs. -3.335e-08).

To further scrutinize why Toxicity, Threat, and Insult increased, we conducted another experiment in which we used an ITS logistic regression model. Using different thresholds (0.5, 0.6, 0.7, 0.8, and 0.9), we can clearly see (Table 2) that Parler was moderating highly toxic and insulting posts (t ≥ 0.7), and that this trend was sustained in the long term. In comparison, in our baseline, Twitter was already moderating toxic posts from 0.5 onward. Interestingly, an unusual pattern emerges for Identity Attack: for all thresholds except 0.9, Identity Attack among Parler users increased significantly immediately after the change, but it decreased in the long term.

Hence, we conclude that Parler’s changes to its moderation policy had an effect in decreasing toxic and abusive content: some attributes decreased from a lower threshold (t ≥ 0.5), while others were moderated only at higher thresholds. Overall, there was a sustained long-term decrease in users’ toxic and abusive discourse, which answers our RQ1.

5.2. Analysis on Parleys

To understand whether Parler’s moderation change affected its user base beyond users’ speech, we also analyzed the numbers of followings and followers, changes in badges, the topics of conversation, and whether the bias and credibility of the URLs being shared changed. These analyses helped us answer our RQ2.

Comparing Users’ Characteristics Pre- and Post-Moderation Change Datasets: We extracted following and follower counts from both datasets to understand whether these metrics changed significantly after the moderation policy change. Since these variables are not captured over time and we have two different distributions, we cannot perform an ITS regression analysis. The values were not normally distributed, so we used the Mann-Whitney U test. We could reject the null hypothesis that users in the pre- and post-moderation change datasets have the same distribution of followers and followings. We found an increase in the number of followings ( vs. , ), indicating that users are still active on Parler. Interestingly, both following and follower counts increased in the post-moderation dataset. We hypothesize that these are new users who joined Parler.
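The Mann-Whitney U test is available in standard statistics packages (for example SciPy’s `scipy.stats.mannwhitneyu`). The dependency-free sketch below only illustrates what the test computes: rank both samples together (ties share their average rank), derive U for the first sample, and use the tie-corrected normal approximation without a continuity correction, so its p-values can differ slightly from a library default. The function names and the toy following counts are hypothetical, not our data.

```python
import math

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (tie-corrected normal approximation,
    no continuity correction). Returns (U for x, p-value)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction: sum of (t^3 - t) over groups of tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var <= 0:
        return u1, 1.0  # all values tied: no evidence of a difference
    z = (u1 - n1 * n2 / 2) / math.sqrt(var)
    return u1, math.erfc(abs(z) / math.sqrt(2))

# Hypothetical toy following counts for the two periods.
pre_following = [0, 1, 2, 6, 6, 10, 25, 40]
post_following = [1, 3, 8, 8, 12, 30, 55, 90]
u, p = mann_whitney_u(pre_following, post_following)
print(f"U = {u}, two-sided p = {p:.3f}")
```

Because the test compares ranks rather than raw values, it is unaffected by the extreme maxima in the follower counts (e.g. 6,048,750), which is why it suits these heavily skewed distributions.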

| Metric | Pre Min | Pre Max | Pre Mean | Pre Median | Post Min | Post Max | Post Mean | Post Median |
|---|---|---|---|---|---|---|---|---|
| Followers | 0 | 2,300,000 | 20.65 | 1 | 0 | 6,048,750 | 34.8 | 1 |
| Following | 0 | 126,000 | 28.28 | 6 | 0 | 479,412 | 33.4 | 8 |

| Website | Pre Moderation | Post Moderation | Change (%) |
|---|---|---|---|
| image-cdn.parler.com | 7,318,992 | 1 | 99.99 |
| youtube.com | 2,499,198 | 225,562 | 83.44 |
| youtu.be | 1,812,871 | 19 | 99.99 |
| bit.ly | 893,603 | 5 | 99.99 |
| twitter.com | 803,514 | 42,638 | 89.92 |
| media.giphy.com | 539,389 | 545 | 99.79 |
| i.imgur.com | 532,365 | 5,779 | 97.85 |
| facebook.com | 520,796 | 318 | 99.87 |
| thegatewaypundit.com | 469,855 | 610,512 | 13.01 |
| breitbart.com | 328,953 | 240,547 | 15.52 |
| foxnews.com | 298,285 | 136,956 | 37.06 |
| instagram.com | 168,160 | 22,932 | 75.99 |
| rumble.com | 164,949 | 744,132 | 63.71 |
| theepochtimes.com | 136,294 | 33,937 | 60.12 |
| hannity.com | 13,017 | 148,026 | 83.83 |
| justthenews.com | 50,638 | 147,984 | 49.01 |
| www.theblaze.com | 2,006 | 122,111 | 96.76 |
| www.westernjournal.com | 6,399 | 119,551 | 89.83 |
| bongino.com | 17,251 | 114,334 | 73.77 |
| www.bitchute.com | 104,462 | 87,672 | 8.73 |

| Badge | Description | Pre Change | Post Change |
|---|---|---|---|
| Verified | This badge means Parler has verified the account belongs to a real person and not a bot. Since verified users can change their screen name, the badge does not guarantee one’s identity. | 25,734 | 236,431 |
| Gold | A Gold Badge means Parler has verified the identity of the person or organization. Gold Badges can be influencers, public figures, journalists, media outlets, public officials, government entities, businesses, or organizations (including nonprofits). If the account has a Gold Badge, its parleys and comments come from real people. | 589 | 668 |
| Integration Partner | Used by publishers to import articles and other content from their websites | 64 | N/A |
| RSS feed | These accounts automatically post articles directly from an outlet’s website | 99 | 13 |
| Private | If you see this badge, the account owner has chosen to make the account private. This badge may also be applied to accounts that are locked due to community guideline violations | 596,824 | 337,717 |
| Verified Comments | Users with a verified badge who are restricting comments to only other verified users. | 4,147 | N/A |
| Parody | Parler approved parody accounts. | 37 | N/A |
| Parler Employee | This badge is applied to Parler employees’ personal accounts, should they wish. Their parleys are their own views and not Parler’s. | 25 | 28 |
| Real Name | Users using their real name | 2 | N/A |
| Parler Early | Signifying Parler’s earliest members, this badge appears on accounts opened in 2018. | 81 | 822 |
| Parler Official | These accounts - @Parler, @ParlerDev, and others - issue official statements from the Parler team. | N/A | 5 |

We extracted badges for every user in the pre- and post-moderation policy change datasets, as shown in Table 5. Interestingly, the number of users with the Private badge decreased in the post-moderation policy dataset. Note that we did not attempt to collect any parleys of users with a Private badge; their badge information was extracted from their metadata. We also see a large number of users going through Parler’s verification process to prove that their account is not a bot. Since receiving the Verified badge requires user action and is not automatic, this increase suggests that users are still active on Parler. The sharp increase in the number of verified users might be due to users’ fear of an influx of bots as Parler grew as a platform and attracted attention from users of other social media platforms. The number of Gold badges also increased, which could mean that some existing Parler users gained enough popularity to qualify for a Gold badge.

Parley Content Analysis: We used the text of the parleys collected during our data collection phase to investigate what users were discussing on Parler in both the pre- and post-moderation change datasets. To extract the most popular topics, we used the Latent Dirichlet Allocation (LDA) topic modeling technique (Blei et al., 2003). Before applying LDA, we removed all URLs, non-ASCII Unicode characters, and stopwords from the text, using the stopword corpus from the Natural Language Toolkit (NLTK) as our stopword list.
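The cleaning steps above can be sketched as follows. This is a minimal illustration, assuming lowercasing and a simple alphanumeric tokenizer (neither is stated above); the regular expressions, the `preprocess` helper, and the small stopword set are hypothetical stand-ins, and in practice the stopword list would be NLTK’s English list (e.g. `set(nltk.corpus.stopwords.words("english"))` after downloading the corpus).

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")  # hypothetical URL pattern
TOKEN_RE = re.compile(r"[a-z0-9]+")            # hypothetical tokenizer

def preprocess(text, stopwords):
    """Lowercase, drop URLs and non-ASCII characters, tokenize, and remove stopwords."""
    text = URL_RE.sub(" ", text.lower())
    text = text.encode("ascii", "ignore").decode("ascii")  # strips emojis and other non-ASCII
    return [tok for tok in TOKEN_RE.findall(text) if tok not in stopwords]

# Hypothetical small stopword set standing in for the NLTK list.
stop = {"the", "we", "to", "a", "is"}
print(preprocess("We go to the rally 🇺🇸 https://example.com/x WWG1WGA!", stop))
```

The resulting token lists are what an LDA implementation (for example gensim’s `LdaModel` or scikit-learn’s `LatentDirichletAllocation`) would consume after building a vocabulary.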

We noticed considerable interest in the 2020 US elections in the pre-moderation change dataset. This can be attributed to the fact that the elections took place during the pre-moderation data collection period, and most news and discussion about the subject predated the collection of the post-moderation parleys (Aliapoulios et al., 2021b). We noticed a reduction in the use of phrases like Where We Go One, We Go All (WWG1WGA), a slogan associated with the QAnon conspiracy movement. We also found several Parler-specific words, such as Parleys, in the earlier dataset. We theorize that this might be due to users migrating from other social media platforms, such as Twitter and Facebook, which do not use these terms. We also noticed that Parler users were increasingly using the word patriots, which is how Republican lawmakers described the rioters (Levin, 2021).

Links Shared in Parleys: We examined the links shared in parleys to identify trends, with the goal of aligning these trends with the rhetoric of online communities to better understand the changes. To extract links to external websites, we checked every parley in both the pre- and post-moderation change datasets for valid URLs. We then extracted only the domain name (host name) from each URL and counted how many times each domain was shared, using this count to measure the popularity of websites in each dataset.
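A minimal sketch of this extraction step, assuming URLs start with `http://` or `https://` and that the host name is kept as-is (so `www.theblaze.com` and `theblaze.com` would be counted separately, which appears consistent with Table 4 but is an assumption). The regular expression, the `domain_counts` helper, and the toy parleys are hypothetical; `urllib.parse.urlparse(...).hostname` returns the lowercased host without the port.

```python
import re
from collections import Counter
from urllib.parse import urlparse

URL_RE = re.compile(r"https?://[^\s<>\"']+")  # hypothetical "valid URL" pattern

def domain_counts(parleys):
    """Count how often each host name appears in URLs across a list of parley texts."""
    counts = Counter()
    for text in parleys:
        for url in URL_RE.findall(text):
            host = urlparse(url).hostname  # lowercased host, no port; None if absent
            if host:
                counts[host] += 1
    return counts

# Hypothetical toy parleys.
parleys = [
    "Watch https://rumble.com/v123 now",
    "https://www.YouTube.com/watch?v=abc and https://rumble.com/x",
    "no link here",
]
print(domain_counts(parleys).most_common())
```

Running the same function over each dataset and comparing the two counters gives the per-domain pre/post counts reported in Table 4.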

From Table 4, we can see a sharp rise in the popularity of Rumble links (64%) on Parler. Rumble does not remove content regarding misinformation and election integrity, and MBFC labels this website as Right Biased and Questionable (Singhal et al., 2023b; MBFC, 2023b). Moreover, the 2020 US Presidential election took place during our data collection period, which is reflected in our analysis. We also notice a decline in Twitter links being shared. These observations could be explained by the sharp rise in rhetoric about censorship on Twitter and other popular social media platforms (A. Vogels et al., 2020). We also saw a sharp increase in the number of The Blaze links (97%) being shared; MBFC labels this website as Strongly Right Biased and Questionable (MBFC, 2023a).

Observing that a large number of sites were being shared, we studied the links shared on Parler using the Media Bias Fact Check (MBFC) service. We were able to collect labels for 3,937 (2.59%) and 1,081 (1.75%) of all links shared in parleys from the pre- and post-moderation policy change datasets, respectively. We could not collect labels for all URLs because the majority of them point to websites such as YouTube, Twitter, and Instagram (see Table 4), for which MBFC does not provide labels. Since we captured the majority of the websites for which MBFC does provide labels, we consider these results representative of how the policy change affected the news sources shared by users.
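A minimal sketch of how per-domain counts can be turned into label distributions, assuming a lookup table from domain to a single MBFC-style category. The domains, labels, and counts below are hypothetical placeholders, not actual MBFC ratings, and `label_distribution` is an illustrative helper; coverage here means the fraction of shared links whose domain has a label.

```python
from collections import Counter

def label_distribution(domain_counts, labels):
    """Share of labelled links per label, plus the fraction of all links that got a label."""
    by_label = Counter()
    for domain, n in domain_counts.items():
        if domain in labels:
            by_label[labels[domain]] += n
    labelled = sum(by_label.values())
    total = sum(domain_counts.values())
    shares = {label: n / labelled for label, n in by_label.items()} if labelled else {}
    coverage = labelled / total if total else 0.0
    return shares, coverage

# Hypothetical counts and labels (placeholder domains, not MBFC data).
counts = {"site-a.example": 30, "site-b.example": 10, "video.example": 60}
labels = {"site-a.example": "Questionable", "site-b.example": "Conspiracy-Pseudoscience"}
shares, coverage = label_distribution(counts, labels)
print(shares, coverage)
```

Computing shares within the labelled links, rather than raw counts, keeps the pre- and post-moderation distributions comparable even though the two datasets and their label coverage differ in size.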

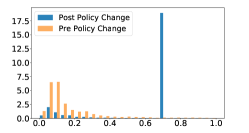

Figure 2 (panel title): Conspiracy-Pseudoscience Sources

Figure 2 shows our results. First, we noticed a decrease in the number of conspiracy-pseudoscience news articles (Figure 2c). However, interestingly, the number of questionable-source articles shared increased in the post-moderation change dataset, as shown in Figure 2d. This suggests that Parler still does not remove URLs that spread overt propaganda and fake news, concurring with the findings of Singhal et al. (2023b). In Figure 2a, we also notice that most links with a score between Very Low and Low were from the pre-moderation change dataset, while post-moderation links are scattered across higher ranges between Low and High. Interestingly, Figure 2b shows that Parler users are sharing more URLs from Left-Center and Right websites, which is notable because the majority of Parler users are highly conservative (Collins, 2021). In summary, using the labels returned by MBFC, we found that the credibility (factuality) of the URLs being shared increased and that the number of conspiracy-pseudoscience news articles decreased substantially. However, Parler users were sharing more questionable-source URLs than before.

Summary: In summary, we observed a statistically significant increase in the number of followings after Parler came back online. We also found that more Parler users verified their accounts than in the pre-moderation change dataset, and that Parler users were increasingly using the word patriots. Using MBFC, we found that the credibility (factuality) of the URLs being shared increased and that the number of conspiracy-pseudoscience news articles decreased substantially, although Parler users were sharing more questionable-source URLs than before. Hence, Parler’s user base changed considerably, which answers our RQ2.

6. Limitations & Future Work

In our current dataset, i.e., the post-moderation change dataset, we were not able to collect a random sample of users; hence, our analysis might not capture the full impact of the moderation policy change. Another limitation of our work is that users might have changed their usernames when Parler was reinstated online in order to evade possible detection. We also did not analyze the comments on these posts, which can also shed light on the moderation changes that Parler undertook.

In the future, we plan to study user comments on posts to understand whether the moderation changes are also reflected in the comments. We also plan to use other toxicity detection methods, such as GPT-4, to detect the toxicity of users’ parleys.

7. Conclusion

On January 12, 2021, Parler, a social media platform popular among conservative users, was removed from the Apple and Google App Stores, and Amazon Web Services stopped hosting Parler content shortly after. This was blamed on Parler’s refusal to remove posts inciting violence following the 2021 US Capitol Riots. Parler was eventually allowed back after it promised to strengthen its moderation to remove hateful content. Our study examines the effect of these policy changes on user discourse by comparing a pre-policy-change dataset collected and published in previous studies with a post-policy-change dataset, which we collected by first establishing a new framework to collect posts and user information from Parler.

Our quasi-experimental time series analysis indicates that after the change in Parler’s moderation, the severe forms of toxicity (above a threshold of 0.5) immediately decreased and sustained. In contrast, the trend did not change for less severe threats and insults (thresholds between 0.5 and 0.7). Finally, we found an increase in the factuality of the news sites being shared, as well as a decrease in the number of conspiracy or pseudoscience sources being shared.

References


- con (2020) 2020. Parler, MeWe, Gab gain momentum as conservative social media alternatives in post-Trump age. https://www.usatoday.com/story/tech/2020/11/11/parler-mewe-gab-social-media-trump-election-facebook-twitter/6232351002/.

- mbf (2021) 2021. Left vs. right bias: How we rate the bias of media sources. https://mediabiasfactcheck.com/left-vs-right-bias-how-we-rate-the-bias-of-media-sources/

- mbf (2022a) 2022a. About MBFC. https://mediabiasfactcheck.com/about/

- par (2022) 2022. From the flag-bearer for free speech to ‘scapegoat’, Parler is fighting back. https://www.thetimes.co.uk/article/from-the-flag-bearer-for-free-speech-to-scapegoat-parler-is-fighting-back-bmwcdfgf5.

- mbf (2022b) 2022b. Methodology. https://mediabiasfactcheck.com/methodology/

- A. Vogels et al. (2020) Emily A. Vogels, Andrew Perrin, and Monica Anderson. 2020. Most Americans Think Social Media Sites Censor Political Viewpoints. https://www.pewresearch.org/internet/2020/08/19/most-americans-think-social-media-sites-censor-political-viewpoints/

- Aleksandric et al. (2022) Ana Aleksandric, Mohit Singhal, Anne Groggel, and Shirin Nilizadeh. 2022. Understanding the Bystander Effect on Toxic Twitter Conversations. arXiv preprint arXiv:2211.10764 (2022).

- Ali et al. (2021) Shiza Ali, Mohammad Hammas Saeed, Esraa Aldreabi, Jeremy Blackburn, Emiliano De Cristofaro, Savvas Zannettou, and Gianluca Stringhini. 2021. Understanding the Effect of Deplatforming on Social Networks. In 13th ACM Web Science Conference 2021. 187–195.

- Aliapoulios et al. (2021a) Max Aliapoulios, Emmi Bevensee, Jeremy Blackburn, Barry Bradlyn, Emiliano De Cristofaro, Gianluca Stringhini, and Savvas Zannettou. 2021a. An early look at the parler online social network. arXiv preprint arXiv:2101.03820 (2021).

- Aliapoulios et al. (2021b) Max Aliapoulios, Emmi Bevensee, Jeremy Blackburn, Barry Bradlyn, Emiliano De Cristofaro, Gianluca Stringhini, and Savvas Zannettou. 2021b. A Large Open Dataset from the Parler Social Network. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 15. 943–951.

- Anderson et al. (2016) Ashley A Anderson, Sara K Yeo, Dominique Brossard, Dietram A Scheufele, and Michael A Xenos. 2016. Toxic talk: How online incivility can undermine perceptions of media. International Journal of Public Opinion Research 30, 1 (2016), 156–168.

- Baines et al. (2021) Annalise Baines, Muhammad Ittefaq, and Mauryne Abwao. 2021. #Scamdemic, #plandemic, or #scaredemic: What parler social media platform tells us about COVID-19 vaccine. Vaccines 9, 5 (2021), 421.

- Bär et al. (2023) Dominik Bär, Nicolas Pröllochs, and Stefan Feuerriegel. 2023. Finding Qs: Profiling QAnon supporters on Parler. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 17. 34–46.

- Bernal et al. (2017) James Lopez Bernal, Steven Cummins, and Antonio Gasparrini. 2017. Interrupted time series regression for the evaluation of public health interventions: a tutorial. International journal of epidemiology 46, 1 (2017), 348–355.

- Bernstein et al. (2011) Michael Bernstein, Andrés Monroy-Hernández, Drew Harry, Paul André, Katrina Panovich, and Greg Vargas. 2011. 4chan and /b/: An Analysis of Anonymity and Ephemerality in a Large Online Community. In Proceedings of the international AAAI conference on web and social media, Vol. 5. 50–57.

- Blei et al. (2003) David M Blei, Andrew Y Ng, and Michael I Jordan. 2003. Latent dirichlet allocation. Journal of machine Learning research 3, Jan (2003), 993–1022.

- Bovet and Makse (2019) Alexandre Bovet and Hernán A Makse. 2019. Influence of fake news in Twitter during the 2016 US presidential election. Nature communications 10, 1 (2019), 7.

- Bozarth et al. (2020) Lia Bozarth, Aparajita Saraf, and Ceren Budak. 2020. Higher ground? How groundtruth labeling impacts our understanding of fake news about the 2016 US presidential nominees. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 14. 48–59.

- Cinelli et al. (2021) Matteo Cinelli, Gianmarco De Francisci Morales, Alessandro Galeazzi, Walter Quattrociocchi, and Michele Starnini. 2021. The echo chamber effect on social media. Proceedings of the National Academy of Sciences 118, 9 (2021), e2023301118.

- Cinelli et al. (2020) Matteo Cinelli, Walter Quattrociocchi, Alessandro Galeazzi, Carlo Michele Valensise, Emanuele Brugnoli, Ana Lucia Schmidt, Paola Zola, Fabiana Zollo, and Antonio Scala. 2020. The COVID-19 social media infodemic. Scientific reports 10, 1 (2020), 1–10.

- Collins (2021) Ben Collins. 2021. Increasingly militant ’parler refugees’ and Anxious Qanon adherents prep for Doomsday. https://www.nbcnews.com/tech/internet/increasingly-militant-parler-refugees-anxious-qanon-adherents-prep-doomsday-n1254775

- Darwish et al. (2017) Kareem Darwish, Walid Magdy, and Tahar Zanouda. 2017. Trump vs. Hillary: What went viral during the 2016 US presidential election. In International conference on social informatics. Springer, 143–161.

- Davidson et al. (2017) Thomas Davidson, Dana Warmsley, Michael Macy, and Ingmar Weber. 2017. Automated hate speech detection and the problem of offensive language. In Eleventh international aaai conference on web and social media.

- Dinakar et al. (2012) Karthik Dinakar, Birago Jones, Catherine Havasi, Henry Lieberman, and Rosalind Picard. 2012. Common sense reasoning for detection, prevention, and mitigation of cyberbullying. ACM Transactions on Interactive Intelligent Systems (TiiS) 2, 3 (2012), 18.

- Dinkov et al. (2019) Yoan Dinkov, Ahmed Ali, Ivan Koychev, and Preslav Nakov. 2019. Predicting the leading political ideology of YouTube channels using acoustic, textual, and metadata information. arXiv preprint arXiv:1910.08948 (2019).

- Djuric et al. (2015) Nemanja Djuric, Jing Zhou, Robin Morris, Mihajlo Grbovic, Vladan Radosavljevic, and Narayan Bhamidipati. 2015. Hate speech detection with comment embeddings. In Proceedings of the 24th international conference on world wide web. ACM, 29–30.

- donk_enby (2021) donk_enby. 2021. Parler Tricks. https://doi.org/10.5281/zenodo.4426283.

- ElSherief et al. (2018) Mai ElSherief, Vivek Kulkarni, Dana Nguyen, William Yang Wang, and Elizabeth Belding. 2018. Hate lingo: A target-based linguistic analysis of hate speech in social media. In Twelfth International AAAI Conference on Web and Social Media.

- Etta et al. (2022) Gabriele Etta, Matteo Cinelli, Alessandro Galeazzi, Carlo Michele Valensise, Walter Quattrociocchi, and Mauro Conti. 2022. Comparing the impact of social media regulations on news consumption. IEEE Transactions on Computational Social Systems (2022).

- Fung (2021) Brian Fung. 2021. Parler has now been booted by Amazon, Apple and Google — CNN business. https://www.cnn.com/2021/01/09/tech/parler-suspended-apple-app-store/index.html.

- Gitari et al. (2015) Njagi Dennis Gitari, Zhang Zuping, Hanyurwimfura Damien, and Jun Long. 2015. A lexicon-based approach for hate speech detection. International Journal of Multimedia and Ubiquitous Engineering 10, 4 (2015), 215–230.

- Google Perspective API (2020) Google Perspective API. 2020. https://www.perspectiveapi.com/.

- Groeger et al. (2017) Lena V Groeger, Jeff Kao, Al Shaw, Moiz Syed, and Maya Eliahou. 2017. What Parler saw during the attack on the Capitol. Propublica. New York: ProPublica, Inc (2017).

- Gröndahl et al. (2018) Tommi Gröndahl, Luca Pajola, Mika Juuti, Mauro Conti, and N Asokan. 2018. All You Need is “Love”: Evading Hate Speech Detection. In Proceedings of the 11th ACM Workshop on Artificial Intelligence and Security. 2–12.

- Gruppi et al. (2021a) Maurício Gruppi, Benjamin D Horne, and Sibel Adalı. 2021a. NELA-GT-2020: A large multi-labelled news dataset for the study of misinformation in news articles. arXiv preprint arXiv:2102.04567 (2021).

- Gruppi et al. (2021b) Maurício Gruppi, Benjamin D. Horne, and Sibel Adalı. 2021b. NELA-GT-2020: A Large Multi-Labelled News Dataset for The Study of Misinformation in News Articles. https://doi.org/10.48550/ARXIV.2102.04567

- Herbert (2020) G Herbert. 2020. What is Parler? ‘Free speech’ social network jumps in popularity after Trump loses election.

- Heydari et al. (2019) Aarash Heydari, Janny Zhang, Shaan Appel, Xinyi Wu, and Gireeja Ranade. 2019. YouTube Chatter: Understanding Online Comments Discourse on Misinformative and Political YouTube Videos. arXiv preprint arXiv:1907.00435 (2019).

- Hickey et al. (2023) Daniel Hickey, Matheus Schmitz, Daniel Fessler, Paul E Smaldino, Goran Muric, and Keith Burghardt. 2023. Auditing elon musk’s impact on hate speech and bots. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 17. 1133–1137.

- Hine et al. (2017) Gabriel Emile Hine, Jeremiah Onaolapo, Emiliano De Cristofaro, Nicolas Kourtellis, Ilias Leontiadis, Riginos Samaras, Gianluca Stringhini, and Jeremy Blackburn. 2017. Kek, cucks, and god emperor trump: A measurement study of 4chan’s politically incorrect forum and its effects on the web. In Eleventh International AAAI Conference on Web and Social Media.

- Horta Ribeiro et al. (2023) Manoel Horta Ribeiro, Homa Hosseinmardi, Robert West, and Duncan J Watts. 2023. Deplatforming did not decrease Parler users’ activity on fringe social media. PNAS nexus 2, 3 (2023), pgad035.

- Hosseini et al. (2017) Hossein Hosseini, Sreeram Kannan, Baosen Zhang, and Radha Poovendran. 2017. Deceiving google’s perspective api built for detecting toxic comments. arXiv preprint arXiv:1702.08138 (2017).

- Israeli and Tsur (2022) Abraham Israeli and Oren Tsur. 2022. Free speech or Free Hate Speech? Analyzing the Proliferation of Hate Speech in Parler. In Proceedings of the Sixth Workshop on Online Abuse and Harms (WOAH). 109–121.

- Jakubik et al. (2022) Johannes Jakubik, Michael Vössing, Dominik Bär, Nicolas Pröllochs, and Stefan Feuerriegel. 2022. Online Emotions During the Storming of the US Capitol: Evidence from the Social Media Network Parler. arXiv preprint arXiv:2204.04245 (2022).

- Jakubik et al. (2023) Johannes Jakubik, Michael Vössing, Nicolas Pröllochs, Dominik Bär, and Stefan Feuerriegel. 2023. Online emotions during the storming of the US Capitol: evidence from the social media network Parler. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 17. 423–434.

- Jasser et al. (2023) Greta Jasser, Jordan McSwiney, Ed Pertwee, and Savvas Zannettou. 2023. ‘Welcome to #GabFam’: Far-right virtual community on Gab. New Media & Society 25, 7 (2023), 1728–1745.

- Jhaver et al. (2021) Shagun Jhaver, Christian Boylston, Diyi Yang, and Amy Bruckman. 2021. Evaluating the effectiveness of deplatforming as a moderation strategy on Twitter. Proceedings of the ACM on Human-Computer Interaction 5, CSCW2 (2021), 1–30.

- Khan et al. (2019) Sayeed Ahsan Khan, Mohammed Hazim Alkawaz, and Hewa Majeed Zangana. 2019. The use and abuse of social media for spreading fake news. In 2019 IEEE International Conference on Automatic Control and Intelligent Systems (I2CACIS). IEEE, 145–148.

- Koratana and Hu (2018) Animesh Koratana and Kevin Hu. 2018. Toxic speech detection. URL: https://web.stanford.edu/class/archive/cs/cs224n/cs224n.1194 (2018).

- Kumarswamy et al. (2022) Nihal Kumarswamy et al. 2022. “Strict Moderation?” The Impact of Increased Moderation on Parler Content and User Behavior. Ph. D. Dissertation.

- Lerman (2021) Rachel Lerman. 2021. Parler’s revamped app will be allowed back on Apple’s App Store. https://www.washingtonpost.com/technology/2021/04/19/parler-apple-app-store-reinstate/.

- Levin (2021) Bess Levin. 2021. REPUBLICAN LAWMAKERS CLAIM JANUARY 6 RIOTERS WERE JUST FRIENDLY GUYS AND GALS TAKING A TOURIST TRIP THROUGH THE CAPITOL. https://www.vanityfair.com/news/2021/05/capitol-attack-tourist-visit.

- Main (2018) Thomas J Main. 2018. The rise of the alt-right. Brookings Institution Press.

- MBFC (2023a) MBFC. 2023a. The Blaze – Bias and Credibility. https://mediabiasfactcheck.com/the-blaze/

- MBFC (2023b) MBFC. 2023b. Rumble – Bias and Credibility. https://mediabiasfactcheck.com/rumble/

- Mehdad and Tetreault (2016) Yashar Mehdad and Joel Tetreault. 2016. Do characters abuse more than words?. In Proceedings of the 17th Annual Meeting of the Special Interest Group on Discourse and Dialogue. 299–303.

- Micro (2017) Trend Micro. 2017. Fake news and Cyber Propaganda: The use and abuse of social media. https://www.trendmicro.com/vinfo/pl/security/news/cybercrime-and-digital-threats/fake-news-cyber-propaganda-the-abuse-of-social-media

- Nelimarkka et al. (2018) Matti Nelimarkka, Salla-Maaria Laaksonen, and Bryan Semaan. 2018. Social media is polarized, social media is polarized: towards a new design agenda for mitigating polarization. In Proceedings of the 2018 Designing Interactive Systems Conference. 957–970.

- Newell et al. (2016) Edward Newell, David Jurgens, Haji Mohammad Saleem, Hardik Vala, Jad Sassine, Caitrin Armstrong, and Derek Ruths. 2016. User migration in online social networks: A case study on reddit during a period of community unrest. In Tenth International AAAI Conference on Web and Social Media.

- Nobata et al. (2016) Chikashi Nobata, Joel Tetreault, Achint Thomas, Yashar Mehdad, and Yi Chang. 2016. Abusive language detection in online user content. In Proceedings of the 25th international conference on world wide web. International World Wide Web Conferences Steering Committee, 145–153.

- Ovalle et al. (2023) Anaelia Ovalle, Palash Goyal, Jwala Dhamala, Zachary Jaggers, Kai-Wei Chang, Aram Galstyan, Richard Zemel, and Rahul Gupta. 2023. “I’m fully who I am”: Towards Centering Transgender and Non-Binary Voices to Measure Biases in Open Language Generation. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency. 1246–1266.

- Papasavva et al. (2020a) Antonis Papasavva, Jeremy Blackburn, Gianluca Stringhini, Savvas Zannettou, and Emiliano De Cristofaro. 2020a. “Is it a Qoincidence?”: A First Step Towards Understanding and Characterizing the QAnon Movement on Voat.co. arXiv preprint arXiv:2009.04885 (2020).

- Papasavva et al. (2020b) Antonis Papasavva, Savvas Zannettou, Emiliano De Cristofaro, Gianluca Stringhini, and Jeremy Blackburn. 2020b. Raiders of the lost kek: 3.5 years of augmented 4chan posts from the politically incorrect board. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 14. 885–894.

- Patricia Aires et al. (2019) Victoria Patricia Aires, Fabiola G. Nakamura, and Eduardo F. Nakamura. 2019. A link-based approach to detect media bias in news websites. In Companion Proceedings of The 2019 World Wide Web Conference. 742–745.

- Pitsilis et al. (2018) Georgios K Pitsilis, Heri Ramampiaro, and Helge Langseth. 2018. Detecting offensive language in tweets using deep learning. arXiv preprint arXiv:1801.04433 (2018).

- Prabhu et al. (2021) Avinash Prabhu, Dipanwita Guhathakurta, Mallika Subramanian, Manvith Reddy, Shradha Sehgal, Tanvi Karandikar, Amogh Gulati, Udit Arora, Rajiv Ratn Shah, Ponnurangam Kumaraguru, et al. 2021. Capitol (Pat)riots: A comparative study of Twitter and Parler. arXiv preprint arXiv:2101.06914 (2021).

- Rauchfleisch and Kaiser (2021) Adrian Rauchfleisch and Jonas Kaiser. 2021. Deplatforming the far-right: An analysis of YouTube and BitChute. Available at SSRN (2021).

- Ribeiro et al. (2020) Manoel Horta Ribeiro, Shagun Jhaver, Savvas Zannettou, Jeremy Blackburn, Emiliano De Cristofaro, Gianluca Stringhini, and Robert West. 2020. Does Platform Migration Compromise Content Moderation? Evidence from r/The_Donald and r/Incels. arXiv preprint arXiv:2010.10397 (2020).

- Rivers and Lewis (2014) Caitlin M Rivers and Bryan L Lewis. 2014. Ethical research standards in a world of big data. F1000Research 3 (2014).

- Robertson (2021) Adi Robertson. 2021. Parler is back online after a month of downtime. https://www.theverge.com/2021/2/15/22284036/parler-social-network-relaunch-new-hosting.

- Rogers (2020) Richard Rogers. 2020. Deplatforming: Following extreme Internet celebrities to Telegram and alternative social media. European Journal of Communication 35, 3 (2020), 213–229.

- Rondeaux et al. (2022) Candace Rondeaux, Ben Dalton, Cuong Nguyen, Michael Simeone, Thomas Taylor, and Shawn Walker. 2022. Parler and the Road to the Capitol Attack. https://www.newamerica.org/future-frontlines/reports/parler-and-the-road-to-the-capitol-attack/. (2022).

- Rothschild (2021) Mike Rothschild. 2021. Parler wants to be the ‘free speech’ alternative to Twitter. https://www.dailydot.com/debug/what-is-parler-free-speech-social-media-app/

- Russo et al. (2023) Giuseppe Russo, Luca Verginer, Manoel Horta Ribeiro, and Giona Casiraghi. 2023. Spillover of antisocial behavior from fringe platforms: The unintended consequences of community banning. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 17. 742–753.

- Salehabadi et al. (2022) Nazanin Salehabadi, Anne Groggel, Mohit Singhal, Sayak Saha Roy, and Shirin Nilizadeh. 2022. User Engagement and the Toxicity of Tweets. https://doi.org/10.48550/ARXIV.2211.03856

- Saveski et al. (2021) Martin Saveski, Brandon Roy, and Deb Roy. 2021. The structure of toxic conversations on Twitter. In Proceedings of the Web Conference 2021. 1086–1097.

- Singhal et al. (2023a) Mohit Singhal, Nihal Kumarswamy, Shreyasi Kinhekar, and Shirin Nilizadeh. 2023a. Cybersecurity Misinformation Detection on Social Media: Case Studies on Phishing Reports and Zoom’s Threat. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 17. 796–807.

- Singhal et al. (2023b) Mohit Singhal, Chen Ling, Pujan Paudel, Poojitha Thota, Nihal Kumarswamy, Gianluca Stringhini, and Shirin Nilizadeh. 2023b. SoK: Content moderation in social media, from guidelines to enforcement, and research to practice. In 2023 IEEE 8th European Symposium on Security and Privacy (EuroS&P). IEEE, 868–895.

- Sipka et al. (2022) Andrea Sipka, Aniko Hannak, and Aleksandra Urman. 2022. Comparing the Language of QAnon-related content on Parler, Gab, and Twitter. In 14th ACM Web Science Conference 2022. 411–421.

- Sood et al. (2012) Sara Sood, Judd Antin, and Elizabeth Churchill. 2012. Profanity use in online communities. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 1481–1490.

- Starbird (2017) Kate Starbird. 2017. Examining the alternative media ecosystem through the production of alternative narratives of mass shooting events on Twitter. In Proceedings of the International AAAI Conference on Web and Social Media, Vol. 11. 230–239.

- Stefanov et al. (2020) Peter Stefanov, Kareem Darwish, Atanas Atanasov, and Preslav Nakov. 2020. Predicting the topical stance and political leaning of media using tweets. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. 527–537.

- Trujillo and Cresci (2022) Amaury Trujillo and Stefano Cresci. 2022. Make Reddit Great Again: Assessing Community Effects of Moderation Interventions on r/The_Donald. arXiv preprint arXiv:2201.06455 (2022).

- Tuters and Hagen (2020) Marc Tuters and Sal Hagen. 2020. (((They))) rule: Memetic antagonism and nebulous othering on 4chan. New Media & Society 22, 12 (2020), 2218–2237.

- Twitter (2022) Twitter. 2022. Twitter API. https://developer.twitter.com/en/docs/twitter-api

- Waseem and Hovy (2016) Zeerak Waseem and Dirk Hovy. 2016. Hateful symbols or hateful people? predictive features for hate speech detection on twitter. In Proceedings of the NAACL student research workshop. 88–93.

- Weatherbed (2023) Jess Weatherbed. 2023. Parler goes offline after being sold to a new owner. https://www.theverge.com/2023/4/14/23683207/parler-shutting-down-relaunch-aquisition-announcement-conservative-social-media.

- Welbl et al. (2021) Johannes Welbl, Amelia Glaese, Jonathan Uesato, Sumanth Dathathri, John Mellor, Lisa Anne Hendricks, Kirsty Anderson, Pushmeet Kohli, Ben Coppin, and Po-Sen Huang. 2021. Challenges in detoxifying language models. arXiv preprint arXiv:2109.07445 (2021).

- Weld et al. (2021) Galen Weld, Maria Glenski, and Tim Althoff. 2021. Political Bias and Factualness in News Sharing across more than 100,000 Online Communities. ICWSM (2021).

- Zannettou et al. (2018) Savvas Zannettou, Barry Bradlyn, Emiliano De Cristofaro, Haewoon Kwak, Michael Sirivianos, Gianluca Stringhini, and Jeremy Blackburn. 2018. What is gab: A bastion of free speech or an alt-right echo chamber. In Companion Proceedings of The Web Conference 2018. 1007–1014.

- Zannettou et al. (2020) Savvas Zannettou, Mai ElSherief, Elizabeth Belding, Shirin Nilizadeh, and Gianluca Stringhini. 2020. Measuring and Characterizing Hate Speech on News Websites. In 12th ACM Web Science Conference. ACM.

- Zhang et al. (2016) Justine Zhang, Ravi Kumar, Sujith Ravi, and Cristian Danescu-Niculescu-Mizil. 2016. Conversational flow in Oxford-style debates. arXiv preprint arXiv:1604.03114 (2016).