Image-to-Image Translation-based Data Augmentation for Robust EV Charging Inlet Detection

Abstract

This work addresses the task of electric vehicle (EV) charging inlet detection for autonomous EV charging robots. Recently, automated EV charging systems have received huge attention to improve users’ experience and to efficiently utilize charging infrastructures and parking lots. However, most related works have focused on system design, robot control, planning, and manipulation. Towards robust EV charging inlet detection, we propose a new dataset (EVCI dataset) and a novel data augmentation method that is based on image-to-image translation where typical image-to-image translation methods synthesize a new image in a different domain given an image. To the best of our knowledge, the EVCI dataset is the first EV charging inlet dataset. For the data augmentation method, we focus on being able to control synthesized images’ captured environments (e.g., time, lighting) in an intuitive way. To achieve this, we first propose the environment guide vector that humans can intuitively interpret. We then propose a novel image-to-image translation network that translates a given image towards the environment described by the vector. Accordingly, it aims to synthesize a new image that has the same content as the given image while looking like captured in the provided environment by the environment guide vector. Lastly, we train a detection method using the augmented dataset. Through experiments on the EVCI dataset, we demonstrate that the proposed method outperforms the state-of-the-art methods. We also show that the proposed method is able to control synthesized images using an image and environment guide vectors.

I INTRODUCTION

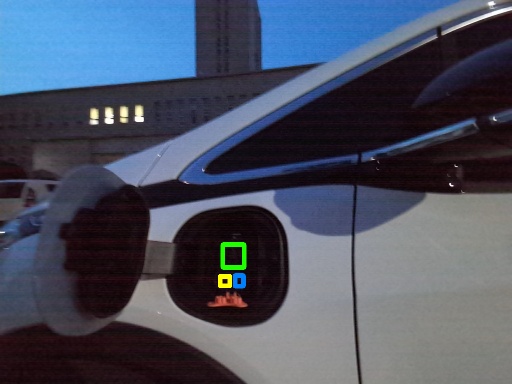

Original Image

Localizing the charging inlet of an electric vehicle (EV) is an essential task for autonomous EV charging robots to accurately plug-in a charging coupler. As the market share of EVs has recently increased explosively, charging infrastructures have also huge demands. Current charging stations are, however, inconvenient since human is required in-between charging a vehicle to another one. For instance, if a charger is coupled to a vehicle, to charge another one, a human needs to plug-out the charger from the former and to plug-in it into the latter. For fast EV chargers, this is even harder as power cables are heavier (around 10kg). Hence, to alleviate the inconvenience, autonomous EV charging robots have been proposed as an efficient and effective charging method [1, 2, 3, 4, 5].

While a robot needs to locate the charging inlet of a vehicle to charge the EV automatically, very limited researches have focused on EV charging inlet localization. Accordingly, we were not able to find a public dataset. Moreover, to the best of our knowledge, most (if not all) experiments were conducted under lab environments without a real vehicle. Hence, we, first of all, propose the first public EV charging inlet localization dataset (EVCI dataset)111https://github.com/machinevision-seoultech/evci.. Furthermore, to achieve robust localization, we propose a novel image-to-image translation network and employ the network for data augmentation. As image-to-image translation networks generate a synthesized image given an image, these networks can naturally increase the diversity of images in the dataset unless the synthesized image is exactly the same as the one in the dataset. In the proposed translation network, to be able to guide synthesized images’ captured environments, we propose to utilize explainable environment guide vectors. By this, we were able to generate various and explainable images given an image and varying environment vectors (see Fig. 1). Lastly, a state-of-the-art detection network is trained using the augmented dataset and is employed to achieve robust localization. The overall framework is shown in Fig. 2.

The contributions of this paper are as follow: (1) We present, to the best of our knowledge, the first EV charging inlet localization dataset that is essential for the research related to autonomous EV charging robots; (2) To be able to control the conditions of synthesized images, we propose the explainable environment guide vector. By using the vector, we are able to synthesize various corresponding images given an image (see Fig. 1); (3) We propose a novel image-to-image translation network that utilizes the interpretable environment vector. The translation network is employed to augment training data; (4) We demonstrate the effectiveness of the proposed data augmentation method by training a state-of-the-art detection network using the augmented data.

II Related works

II-A EV Charging Inlet Detection

Miseikis et al. used a shape-based template matching algorithm to localize charging ports in a pair of stereo images under a lab environment [2]. Depth is then estimated using corresponding coordinates on the image pair. Given 3D coordinates, perspective transformation is estimated using a least-squares fit algorithm to obtain refined position and orientation. Behl et al. presented a proof-of-concept robot to automatically charge an EV [1]. They divided the robot system into three subsystems and evaluated each subsystem independently. Regarding charging inlet plug-in/out, they used a 3D printed charging inlet instead of a real vehicle. They localized the inlet on a captured image from a camera by using the Hough transform [6] and a template matching algorithm. Long et al. proposed a design that uses both color camera(s) and range sensor(s) to achieve accurate localization [3]. Lou et al. proposed a cable-driven auto-charging robot that uses a vision sensor to localize charging ports [4]. However, the experiments were limited to a lab environment and used a simulated charging port. For both [3] and [4], localization algorithms were not specifically reported.

Another relevant work is charging inlet recognition. Sun et al. presented a CNN-based method that predicts whether an input image contains a complete, incomplete, fake charging port, or none [5]. However, since it does not include localization, it is not enough for automatic charging robots.

To the best of our knowledge, regarding charging inlet detection, previous works only conducted experiments using a simulated charging inlet under a lab environment. Also, the dataset is currently not publicly available. Hence, in this paper, we collected a novel dataset from real vehicles and will make it publicly available for further research.

II-B Data Augmentation using Day-to-night Image Translation

Recently, day-to-night image translation has been studied for data augmentation [7, 8, 9, 10, 11]. Huang et al. proposed AugGAN that focuses on preserving objects on images during translation [7, 8]. They utilized the network to augment training data by day-to-night image translation to improve nighttime vehicle detection. Lin et al. extended AugGAN to Multimodal AugGAN to generate diversely translated images given an input image [9]. To achieve this, they utilized a low-dimensional latent vector to specify a condition for a translated image. Lee et al. presented a framework that uses CycleGAN [12] to translate daytime images to nighttime ones and trains a vehicle detector using both original and synthetic images [10]. Zheng et al. proposed ForkGAN that decouples domain-invariant content and domain-specific style information to translate nighttime images to daytime images or vice versa [11]. It was utilized to improve image localization/retrieval, semantic segmentation, and object detection.

While it was not utilized for data augmentation, HiDT network is also about day-to-night image translation [13]. Anokhin et al. presented the network to re-render an image to another one with different illuminations.

Another related work is translating nighttime images to daytime images to directly utilize the translated images for further applications [14, 11]. As the method aims to translate a test image to another one in an easier domain rather than augmenting training data, it does not require re-training final networks (e.g., object detection network). However, it requires translating images in a test phase, and accordingly increases computational demands during testing. Hence, it is less appropriate for real-time applications.

The proposed network is related to day-to-night image translation methods for data augmentation [7, 8, 9, 10, 11]. However, the proposed network utilizes an explainable environment vector to guide translated images. Also, by varying the vector, the network is able to generate various images given an image.

II-C Object detection

The methods for object detection can be divided into two main categories: one-stage methods and multi-stage methods. One-stage methods have advantages in being more efficient and simpler while multi-stage methods are more accurate and more flexible in general.

R-CNN is one of the earliest object detection methods that use CNNs [15]. Its improved versions were also proposed that reduce training and testing time [16, 17, 18]. They are all multi-stage methods that consist of region proposal step and detection step.

YOLO [19] and SSD [20] are two of the earliest one-stage methods. These methods employ a single neural network to predict class probabilities and bounding boxes directly from a whole image instead of each proposed region. As they do not have a separate region proposal step, they are usually faster than multi-stage methods. While they are faster, their accuracies are often lower. Lin et al. thought that this could be because of the extreme foreground-background class imbalance [21]. To overcome this, they proposed RetinaNet that utilizes focal loss. Tan et al. proposed EfficientDet [22] that uses a compound scaling method similar to EfficientNet [23] to generate a family of one-stage object detectors.

DetectoRS [24] proposed the recursive feature pyramid and applied it to a multi-stage detector, HTC [25]. The recursive pyramid adds an additional feedback connection from the top-down path to the bottom-up path in the feature pyramid network. It also utilizes switchable atrous convolution that outputs the weighted summation of the outputs from two convolution paths with different atrous rates.

Recently, methods that utilize Transformer [26] were also proposed [27, 28, 29]. Carion et al. proposed DETR that first extracts features using CNNs and employs transformer encoder/decoder [27]. Given object queries, the decoder outputs the corresponding embeddings based on the encoder’s output in parallel. The embeddings are then processed by a shared feed-forward network to obtain detection results. To reduce computational demands of DETR, Zhu et al. proposed Deformable DETR [28], and Zheng et al. proposed Adaptive Clustering Transformer [29].

III Proposed method

In order to improve the robustness of EV charging inlet detection given limited data, we need a method for data augmentation. A central component of our proposed approach is the image-to-image translation network that synthesizes images that look similar to those captured in another environment (time, lighting, etc.). To be controllable and explainable, we first propose the environment guide vector that consists of human interpretable values. We then augment training data using the environment guide vector and the image-to-image network, which we call the Environment Translation GAN (EnT-GAN). Lastly, we utilize augmented training data to train a state-of-the-art object detection network to achieve robust EV charging inlet detection.

In this section, we first introduce a novel EV Charging Inlet (EVCI) dataset in Section III-A. We then present environment guide vector and EnT-GAN in Section III-B and in Section III-C, respectively. Finally, we present detection method in Section III-D.

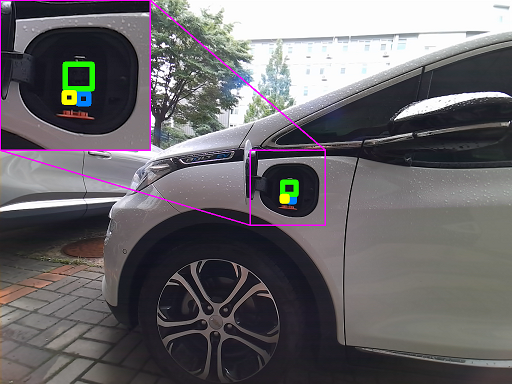

(Bolt, Indoor)

(Bolt, Daytime)

(Bolt, Night)

(Bolt, Evening)

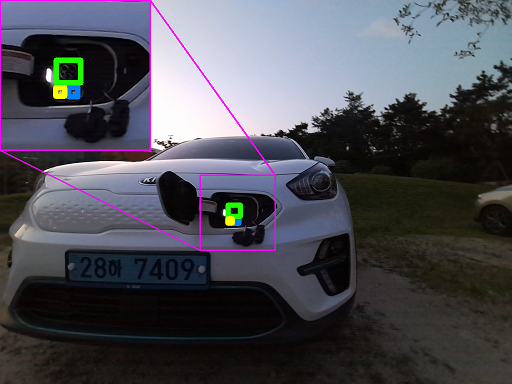

(Niro, Indoor)

(Niro, Morning)

(Niro, Night)

(Niro, Evening)

III-A EV Charging Inlet (EVCI) dataset

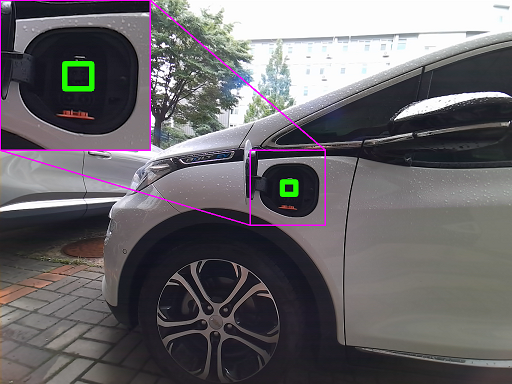

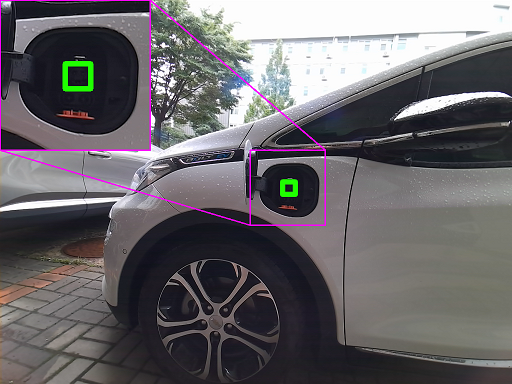

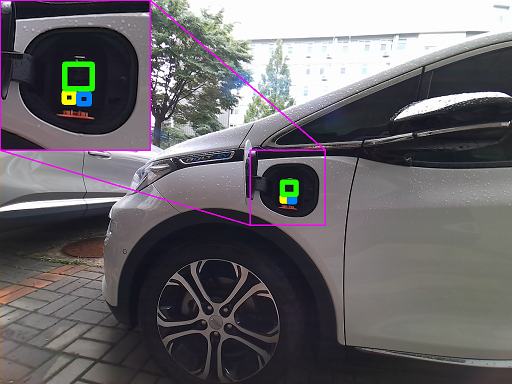

We collected a new dataset using Intel RealSense Depth Camera D435i since we were not able to find a publicly available dataset for EV inlet detection. The collected dataset consists of 4,153 pairs of color images and depth maps. Depth maps were only used to get more robust ground truth annotations, especially for images collected at night. The dataset was collected from two vehicles at various environments (locations, times, and others). The two vehicles are Chevrolet Bolt EV 2019 and Kia Niro EV 2018. Ground truth bounding boxes were labeled by human annotators. Specifically, three components of a charging inlet were annotated so that not only location but also rotation can also be estimated (see Fig. 3). Example images are shown in Fig. 4, and detailed information is provided in Table I.

Among 4,153 images, 1,009 and 1,194 images were collected at daytime/morning and at night/evening, respectively. The remaining 1,950 images were collected at indoor parking facilities at various times. The dataset is used to create two benchmark datasets (EVCI-A and EVCI-B dataset). EVCI-A dataset is designed to train, validate, and test on all environments (indoor, daytime/morning, and night/evening) (see Table II). For the EVCI-B dataset, the training dataset consists of images collected in daytime/morning and at indoor facilities while the validation/testing datasets consist of images captured at night/evening and indoor facilities mostly. Hence, by utilizing the EVCI-B dataset, given only images from daytime/morning/indoor for training, performances on images from night/evening can be measured.

| Vehicle | Places | Time | Weather | # of images |

|---|---|---|---|---|

| Indoor | - | - | 1,059 | |

| Bolt | Daytime | Sunny | 364 | |

| EV | Outdoor | Morning | Rainy | 341 |

| Night | Sunny | 326 | ||

| Evening | Sunny | 373 | ||

| Indoor | - | - | 891 | |

| Niro | Daytime | Sunny | 151 | |

| EV | Outdoor | Morning | Sunny | 153 |

| Night | Sunny | 254 | ||

| Evening | Sunny | 241 |

| Dataset | Places | Time | # of images | |

|---|---|---|---|---|

| split | EVCI-A | EVCI-B | ||

| Indoor | - | 1,273 | 1,207 | |

| Training | Outdoor | Daytime/Morning | 562 | 832 |

| Night/Evening | 409 | - | ||

| Indoor | - | 267 | 319 | |

| Validation | Outdoor | Daytime/Morning | 74 | - |

| Night/Evening | 196 | 287 | ||

| Indoor | - | 410 | 424 | |

| Test | Outdoor | Daytime/Morning | 373 | 177 |

| Night/Evening | 589 | 907 | ||

III-B Environment Guide Vector

In general, contrast, brightness, and saturation vary depending on whether an image is captured under daytime lighting levels or night-time lighting [30]. Since our goal is, given an image, generating various images that look like images captured under different lighting conditions, we propose to utilize brightness, contrast, and saturation to guide an environment. So, given an image () and an environment guide vector (), a corresponding image is generated.

To validate the effectiveness of the proposed environment guide vector, we analyzed the brightness, contrast, and saturation of the images in the EVCI dataset. Specifically, cumulative distribution functions of each of them are computed for images captured under indoor, daytime, morning, night, evening environments (see Fig. 5). We also show the 3D scatter plot of data where each axis corresponds to each of them in Fig. 6. The left and right plots are the same except for their axes. We can observe that most night-time images have low brightness and low contrast. We can also observe that most indoor images have low saturation while most evening images have high saturation. Please note that the distribution might be different from natural images as the captured environments might have lighting from vehicles, street lamps, or any artificial lighting (see Fig. 3 and Fig. 4).

(a)

(b)

(c)

In more detail, brightness () is computed by the mean of pixel-wise luminance, which is calculated by the method in [31]. Root mean square (RMS) contrast is used for contrast (), which is the standard deviation of pixel-wise luminance. Saturation () is computed by the mean of pixel-wise saturation, which is calculated by the same way as the HSV color representation. The environment vector is then determined by normalizing each component of the computed vector to . The normalization is processed using the minimum and maximum of each component of all images in the dataset.

III-C Environment Translation GAN (EnT-GAN)

The proposed image-to-image translation network is designed to be able to synthesize an image that a human can easily control and explain. Also, it is to generate various images given an image. The training framework of the proposed network is shown in Fig. 7. and represent the content encoder and the environment guide vector extractor, respectively. aims to encode content information and consists of three convolution layers and five residual blocks where each residual block contains two convolution layers and one skip connection. aims to extract environment information such as lighting conditions, the existence of artificial lighting, etc. It is designed to be able to intuitively control synthesized images. It extracts brightness, contrast, and saturation as explained in Section III-B and is a non-learnable component. represents the generator that synthesizes an image given an encoded content map and an environment guide vector . The environment vector is first processed by an MLP that consists of three fully connected layers. Then, adaptive instance normalization (AdaIN) [32] is applied to the encoded content map using the MLP-processed environment vector. Finally, the output of the AdaIN layer is processed by four residual blocks, two transposed convolution layers, and one convolution layer to obtain synthesized images.

In the inference stage, given an image and an environment vector, the generator synthesizes an image along with the content encoder . The environment vector can either be human selected, randomly generated, or extracted from an image (using environment vector extractor ).

In the training phase, the network is trained using the following objective functions:

where denotes the loss that is used to train the generator and the content encoder. The loss consists of image reconstruction loss, cycle consistency loss, environment translation loss, perceptual loss, and adversarial loss for generators. contains the adversarial loss for discriminators.

Image reconstruction loss. The loss is to be able to reconstruct an input image if its encoded content map and its extracted environment vector are given to the generator as inputs. The loss is defined as the -norm of the pixel-wise differences between the input image and the reconstructed image . Formally, it is defined as .

Cycle consistency loss. The loss has similarity with the image reconstruction loss as it is also about reconstructing an input image. The difference is that the reconstructed image is generated given the encoded content map of another synthesized image along with the input image’s environment vector . is generated using the encoded content map of the target input image and an environment vector from another image . It is defined as follows:

Environment translation loss. The loss is to ensure that a generated image has the characteristics that are guided by the given environment vector. It measures the differences between the given environment guide vector to a generator and the extracted environment vector from the synthesized image from the generator. Considering Fig. 7, as three generators exist, three corresponding environment translation losses are computed. The total environment translation loss is the summation of them (, , ).

Perceptual loss. The loss is to enforce the consistency of contents between an input image and the generated image using the encoded content map of the input image. To achieve this, the loss measures the difference between the encoded content map of the input image and that of the synthesized image where the latter image is generated using and another image’s environment vector . The loss is defined as follows:

Adversarial loss. The loss is employed to ensure that the distribution of generated images is similar to that of real images in the dataset. Similar to [11, 12, 13], we utilize the least squares loss from LSGAN [33]. We also employ multi-scale discriminators where we used two scales. One discriminator is applied at generated images’ resolution, and the other takes images at half resolution. It is defined as follows:

where denotes downsampling by 2.

Final loss. The generator and the content encoder are trained by minimizing that is the weighted summation of , , , , and . The discriminator is trained by minimizing .

The network is trained using the Adam optimizer for 20 epochs with the initial learning rate of 0.0002. The learning rate was linearly decayed after 10 epochs. We used the following hyperparameters: , , , where and denote the height and width of an input image and the corresponding content map, respectively. In the training phase, the input image is resized to and then is scaled by a factor that is randomly sampled from the uniform distribution . It is then randomly cropped to be and is processed by random horizontal flipping.

III-D Detection

To demonstrate that the data augmentation using the proposed network improves a state-of-the-art detection network, we first analyzed multiple detection networks [17, 18, 21, 24, 27] (see Table III). Among them, the highest accuracy is achieved by DetectoRS [24]. Hence, we compare the performances of it trained using the original training dataset, using the ForkGAN-augmented dataset [11], and using the proposed EnT-GAN-augmented dataset.

For both ForkGAN [11] and the proposed EnT-GAN, a detection network is trained using augmented training data that consists of both original training images and translated images. The distribution of data during training is 50% from original training images and the remaining 50% from translated images. For the proposed EnT-GAN, environment vectors are randomly generated from the uniform distribution unless specifically mentioned. Also, since EnT-GAN can generate various images given an image, varying sets of translated images are used during training.

The detection network is trained using stochastic gradient descent (SGD) optimizer for 30 epochs with linearly increasing learning rate until 500 iterations to 0.002/0.001 for the EVCI-A/B dataset. The input image is first resized to while keeping aspect ratio and is randomly flipped horizontally. We also show the result of utilizing random scaling/cropping. For the random scaling, a scaling factor is sampled from the uniform distribution . The scaled image is then randomly cropped to be .

IV Experiments and results

In this section, we demonstrate the controllability and explainability of the proposed EnT-GAN and the effectiveness of the proposed framework.

| Method | EVCI-A | EVCI-B | |||

|---|---|---|---|---|---|

| Detection networks | Backbone | EnT-GAN | Random scaling/cropping | ||

| Faster R-CNN | ResNet-101 | - | - | 59.2 | 54.3 |

| [17, 18] | ✓ | - | 61.6 | 56.5 | |

| RetinaNet | - | - | 58.5 | 55.5 | |

| [21] | ✓ | - | 59.8 | 57.0 | |

| DETR | - | ✓ | 54.9 | 51.7 | |

| [27] | ✓ | ✓ | 55.7 | 52.4 | |

| ResNet-50 | - | - | 64.1 | 61.5 | |

| DetectoRS | ForkGAN [11] | - | 63.6 | 60.4 | |

| [24] | ✓ | - | 65.0 | 62.0 | |

| ✓ | ✓ | 66.5 | 63.0 | ||

| Sampling distribution | |

|---|---|

| 62.0 | |

| Vector from target domain + | 62.5 |

| Mosaic | Random scaling/cropping | |

| - | - | 62.0 |

| ✓ | - | 62.6 |

| - | ✓ | 63.0 |

| ✓ | ✓ | 63.2 |

DetectoRS [24]

DetectoRS with ForkGAN [11]

Proposed

Ground truth

DetectoRS [24]

DetectoRS with ForkGAN [11]

Proposed

Ground truth

Proposed

Ground truth

Image-to-Image Translation. To demonstrate the controllability and the explainability of the proposed EnT-GAN, we show the synthesized images given an image and various environment guide vectors in Fig. 8 and Fig. 1. This obviously shows that the proposed EnT-GAN can generate various images given an input image by varying environment guide vectors. The left-most column shows the input images, and the second column shows the synthesized images using ForkGAN [11]. The other columns show the synthesized images using the proposed EnT-GAN given various environment vectors (presented at the bottom of each image). As ForkGAN uses predefined two domains, it is only able to translate an image in one domain to the other domain. Consequently, it cannot synthesize various images and is not able to control generated images. The generated images further show that the quality of the EnT-GAN is superior to that of the previous state-of-the-art method [11].

EV Charging Inlet Detection. To evaluate EV charging inlet detection quantitatively, mean average precision (mAP) is computed. We report that is the average AP for intersection over union (IoU) from 0.5 to 0.95 with a step size of 0.05, which is used in the COCO dataset [34].

Table III shows the quantitative results of the proposed method and other comparing methods [17, 18, 24, 27, 11]. First of all, the experimental results demonstrate that by utilizing the proposed data augmentation method, the performances of detection methods are improved consistently. Moreover, the experimental results show that the accuracy can be further improved by combining the proposed augmentation method with traditional data augmentation methods such as random scaling and cropping (see the last row).

Fig. 10 shows the qualitative results. We can observe that the proposed method localizes all three components while other methods often fail to localize small components. Localizing all components is important to be able to estimate both location and rotation. A failure case is shown in Fig. 11. Images with significant motion blur or very dark scenes were found to be challenging.

An ablation study on the environment guide vector is shown in Table IV. For all the experiments except this table, the proposed data augmentation is performed by utilizing the vector that is randomly sampled from the uniform distribution as mentioned in Section III-D. For this ablation study, we consider the case that while ground truth annotations of charging inlets are not given for test images, we have access to those images. Then, while we cannot, of course, train detection methods using them, we can translate training images towards the distribution of them for data augmentation. The first row shows the result of using random sampling from uniform distribution. The other row is the result of utilizing the distribution of environments of validation and test images along with additive noises from uniform distribution.

We also investigate EnT-GAN-based Mosaic data augmentation in Table V. Given an original image in the training set, parts of the image are replaced by translated images as shown in Fig. 9. Specifically, the number of selected regions for each image is between zero and four. The width/height of the region is 2080% of that of the image. The Mosaic images are utilized for training detection networks.

In this work, detection network and data augmentation network are trained separately. We believe that the performance can be further improved when they are trained concurrently.

V CONCLUSIONS

Towards autonomous EV charging robots, we first introduce a novel dataset and present an experimental analysis of the existing methods on the dataset. Then, to improve the robustness of localization, we investigate a data augmentation method with a focus on controllable and explainable image-to-image translation. To achieve this, we propose to utilize an intuitive environment guide vector in the proposed image-to-image translation network. We demonstrate that the proposed method is able to successfully synthesize various and expected images given an image and environment vectors. The experimental results show that by utilizing the proposed data augmentation method to detection networks, their performances are improved on EV charging inlet localization.

References

- [1] M. Behl, J. DuBro, T. Flynt, I. Hameed, G. Lang, and F. Park, “Autonomous electric vehicle charging system,” in 2019 Systems and Information Engineering Design Symposium (SIEDS), 2019.

- [2] J. Miseikis, M. Rüther, B. Walzel, M. Hirz, and H. Brunner, “3d vision guided robotic charging station for electric and plug-in hybrid vehicles,” in OAGM/AAPR ARW 2017: Joint Workshop on Vision, Automation, and Robotics, 2017.

- [3] Y. Long, C. Wei, C. Cao, X. Hu, B. Zhu, and F. Long, “Design of high-power fully automatic charging device,” in 2019 IEEE Sustainable Power and Energy Conference (iSPEC), 2019.

- [4] Y. Lou and S. Di, “Design of a cable-driven auto-charging robot for electric vehicles,” IEEE Access, vol. 8, 2020.

- [5] C. Sun, M. Pan, Y. Wang, J. Liu, H. Huang, and L. Sun, “Method for electric vehicle charging port recognition in complicated environment based on cnn,” in 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), 2018.

- [6] R. O. Duda and P. E. Hart, “Use of the hough transformation to detect lines and curves in pictures,” Commun. ACM, vol. 15, no. 1, Jan. 1972.

- [7] S.-W. Huang, C.-T. Lin, S.-P. Chen, Y.-Y. Wu, P.-H. Hsu, and S.-H. Lai, “Auggan: Cross domain adaptation with gan-based data augmentation,” in Computer Vision – ECCV 2018, 2018.

- [8] C.-T. Lin, S.-W. Huang, Y.-Y. Wu, and S.-H. Lai, “Gan-based day-to-night image style transfer for nighttime vehicle detection,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 2, 2021.

- [9] C.-T. Lin, Y.-Y. Wu, P.-H. Hsu, and S.-H. Lai, “Multimodal structure-consistent image-to-image translation,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, Apr. 2020.

- [10] H. Lee, M. Ra, and W.-Y. Kim, “Nighttime data augmentation using gan for improving blind-spot detection,” IEEE Access, vol. 8, 2020.

- [11] Z. Zheng, Y. Wu, X. Han, and J. Shi, “Forkgan: Seeing into the rainy night,” in Computer Vision – ECCV 2020, 2020.

- [12] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017.

- [13] I. Anokhin, P. Solovev, D. Korzhenkov, A. Kharlamov, T. Khakhulin, A. Silvestrov, S. Nikolenko, V. Lempitsky, and G. Sterkin, “High-resolution daytime translation without domain labels,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- [14] A. Anoosheh, T. Sattler, R. Timofte, M. Pollefeys, and L. V. Gool, “Night-to-day image translation for retrieval-based localization,” in 2019 International Conference on Robotics and Automation (ICRA), 2019.

- [15] R. Girshick, J. Donahue, T. Darrell, and J. Malik, “Rich feature hierarchies for accurate object detection and semantic segmentation,” in 2014 IEEE Conference on Computer Vision and Pattern Recognition, 2014.

- [16] R. Girshick, “Fast r-cnn,” in 2015 IEEE International Conference on Computer Vision (ICCV), 2015.

- [17] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” in Advances in Neural Information Processing Systems, vol. 28, 2015.

- [18] ——, “Faster r-cnn: Towards real-time object detection with region proposal networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 6, 2017.

- [19] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

- [20] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, and A. C. Berg, “Ssd: Single shot multibox detector,” in Computer Vision – ECCV 2016, 2016.

- [21] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollár, “Focal loss for dense object detection,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017.

- [22] M. Tan, R. Pang, and Q. V. Le, “Efficientdet: Scalable and efficient object detection,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- [23] M. Tan and Q. Le, “EfficientNet: Rethinking model scaling for convolutional neural networks,” in Proceedings of the 36th International Conference on Machine Learning, vol. 97, 2019.

- [24] S. Qiao, L.-C. Chen, and A. Yuille, “Detectors: Detecting objects with recursive feature pyramid and switchable atrous convolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2021.

- [25] K. Chen, J. Pang, J. Wang, Y. Xiong, X. Li, S. Sun, W. Feng, Z. Liu, J. Shi, W. Ouyang, C. C. Loy, and D. Lin, “Hybrid task cascade for instance segmentation,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [26] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. u. Kaiser, and I. Polosukhin, “Attention is all you need,” in Advances in Neural Information Processing Systems, vol. 30, 2017.

- [27] N. Carion, F. Massa, G. Synnaeve, N. Usunier, A. Kirillov, and S. Zagoruyko, “End-to-end object detection with transformers,” in Computer Vision – ECCV 2020, 2020.

- [28] X. Zhu, W. Su, L. Lu, B. Li, X. Wang, and J. Dai, “Deformable detr: Deformable transformers for end-to-end object detection,” arXiv, 2021.

- [29] M. Zheng, P. Gao, X. Wang, H. Li, and H. Dong, “End-to-end object detection with adaptive clustering transformer,” in 32nd British Machine Vision Conference (BMVC), 2021.

- [30] W. B. Thompson, P. Shirley, and J. A. Ferwerda, “A spatial post-processing algorithm for images of night scenes,” Journal of Graphics Tools, vol. 7, no. 1, 2002.

- [31] “Bt.601-7: Studio encoding parameters of digital television for standard 4:3 and wide screen 16:9 aspect ratios,” ITU-R, 2011.

- [32] X. Huang and S. Belongie, “Arbitrary style transfer in real-time with adaptive instance normalization,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017.

- [33] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. P. Smolley, “Least squares generative adversarial networks,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017.

- [34] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in Computer Vision – ECCV 2014, 2014.