IGN : Implicit Generative Networks

Abstract

In this work, we build recent advances in distributional reinforcement learning to give a state-of-art distributional variant of the model based on the IQN. We achieve this by using the GAN model’s generator and discriminator function with the quantile regression to approximate the full quantile value for the state-action return distribution. We demonstrate improved performance on our baseline dataset - 57 Atari 2600 games in the ALE. Also, we use our algorithm to show the state-of-art training performance of risk-sensitive policies in Atari games with the policy optimization and evaluation.

Index Terms:

Reinforcement Learning, Implicit Quantile Networks, Generative adversarial networkI Introduction

Distributional reinforcement learning [1] focus on the reward with intrinsic randomness within the reinforcement learning framework. Distributional reinforcement learning aims to model the distribution over returns and to use these distributions to evaluate and optimize a policy. Any distributional reinforcement learning algorithm is characterized by two aspects: the parameter of the return distribution and the distance metric or loss function being optimized. Distributional reinforcement learning with Quantile regression [2] gives a considerable direction for Distributional reinforcement learning. The work based on the Deep Q-network [3] became a popular direction in the reinforcement learning research work, especially in the game theory. It helps the distribution over returns is modelled explicitly instead of only estimating the mean. In recent years, a lot of work shows a good improvement in the game theory with the reinforcement learning policy, such as Implicit Quantile Networks (IQN) [4] and Fully Parameterized Quantile Function (FQF) [5]. They give a great improvement on the policy, especially risk-sensitive policy. However, those works still show limited work. How to use the Generative Network to help improve the performance of the Distributional reinforcement learning has become a controversial problem. Because our training resource is 57 Atari 2600 games in the ALE, which is high-quality images, it gives us an idea using the transfer learning to improve the performance of the algorithm in our work. We introduced a new model called Implicit Generative Networks (IGN) based on the IQN. The Generative Network can work by sending the embeddings of the source and target task to the discriminator and using the generator to generate the learning thread of the model. The resulting loss is then (inversely) backpropagated through the encoder. This method is also regarded as one kind of transfer learning [6] which can significantly reduce training time. In our work, we freeze both the critic and generator of the original GAN’s low-level layers. Also, we designed a method that gives out an auto-encoder constraint in order to compatible the internal representations of the critic and the generator. This assumption explains the accelerate in training time. Also, the generator can provide us with a clear boundary of coverage to help the model acceleration the training. In our experiment, we will use 57 Atari 2600 games in the ALE as the dataset to compare with our baselines, such as IQN and FQF, to show start-of-art performance on policy. The main contributions are as follows:

-

•

Using the generator and discriminator to rewrite the quantile function in the IQN model

-

•

Build our new algorithm IGN with the base of the IQN model

-

•

Show our algorithm state-of-art performance with the baselines in the Atari dataset.

II Related Work

II-A Dataset

There are a lot of datasets we can use to show our models’ performance and compare them. In our work, we want to compare our model with baselines with several classic datasets. One of them is Atari games datasets (see Fig. 1) [7], which is one of the classic datasets in reinforcement learning in game theory. We use the 57 Atari 2600 games in the ALE, and it can help show our contribution to our algorithm in the risk-sensitive policies, in which the Atari 2600 games have 200k frames collected using the OpenAI baseline and implemented based on Advantage Actor Critic (A2C). Also, they use sticky actions to make the problem become more challenging. There is a probability that the agent’s previous action is executed. It will greatly help us show our algorithm performance on risk-sensitive policy.

II-B Quantile Networks and Transfer Learning

Before our work, there was a lot of research already work in reinforcement learning. Implicit Quantile Networks (IQN) [4] is one of the models that shows a good result. It brings a huge improvement on the risk-sensitive policy training. However, the model based on the Quantile distribution make a huge fragile on the performance of the model, and it should cost huge time for training the model. We expected to design a state-of-art algorithm that has more robustness and has more effectiveness in training the policy. One of the possible methods is using transfer learning. Transfer learning [8] is an optimization method that can improve the performance of the model or rapid progress in the second task. In our research, transfer learning can help us have a higher start and higher slope from the second training. Also, it will have great help in the policy evaluation. There are some state-of-art transfer learning methods, such as VGG [9], ResNet [10] Model. From several model pooling, there is one algorithm using Gradient method is GAN model, which can help our model have a more competitive algorithm with origin IQN model

II-C Existing Offline Policy Evaluation Methods

Most existing offline policy evaluation methods under the above setting with mean criteria can be grouped into three categories. The first category is direct methods, which directly estimate the state-action value function [11, 12], also known as the Q-function. The second category of offline policy evaluation methods is motivated by marginal importance sampling [13]. The last category of methods combines direct and marginal importance sampling methods to construct some doubly robust estimator, which is also motivated by the efficient influence function like equation 1. [14]. In which, and are two nuisance functions.

| (1) |

| (2) |

II-D Gradient Method

In our work, we hope to use the generative method to improve our model. The most popular method on the generative method is Generative Adversarial Networks (GAN) [15]. The GAN model shows a great improvement for our model in the gradient periods and make our model have high efficiency to get the best reward score.

GANs can train two competing models (typically NNs)[15]. The generator takes noise as input and generates samples according to some transformation, . The discriminator takes samples from both the generator output and the training set as input and aims to distinguish between the input sources. Goodfellow[15] measured the discrepancy between the generated and the real distribution using the Kullback-Leibler divergence. However, this approach was improved by Arjovsky[16] by using the Wasserstein-1 distance [17]. WassersteinGANs exploit the Kantorovich-Rubinstein duality[18],

| (3) |

where is the class of Lipschitz functions with Lipschitz constant 1, in order to approximate the distance between the real distribution, , and the generated one, . The GAN objective is then to train a generator model with noise distribution at its input, and a critic , achieving

| (4) |

The WGAN-GP[16] (Fig. 2(b)) is a stable training algorithm for equation 4 [17] that employs stochastic gradient descent and a penalty on the norm of the gradient of the critic with respect to its input.

III Method

III-A Task Definition

The problem is driven by recent development in distributional reinforcement learning. [4] Consider a single trajectory where denotes the state-action-reward triplet collected at time t. We use and denote the state and action space, respectively. We assume A is discrete and finite, and rewards are uniformly bounded by . Consider the offline setting. Then we observed data consisting of N trajectories, corresponding to N independent and identically distributed copies of . For any i = data collected from the trajectory can be summarized by , where denotes the termination time. For simplicity, we assume = T for i =

A policy defines the agent’s way of choosing the action at each decision time. We focus on the stationary policy. Specifically, at each time point t, a stationary policy maps the current state value into a probability mass function over the action space, i.e., denotes the probability of choosing action a given the state value s.

In our work, we will work on the policy evaluation and policy optimization. In policy evaluation, we want to see the performance of the model what would have happened in the absence of a policy. We will use the Pong environment agent as a fixed agent, which we will use as one of the offline policy, and we will use our model to train the online policy and see the performance of the model training. In our work, we will compare the reward scores and efficiency of the timestamps because comparing with the complex model with a simple model in the resource using is not a fit game, we will compare the timestamps( measured with the training cycle time, not the real time). In our future work, if there is one model that requires less real time is one of the potential research directions in our work. For the policy optimization, we will train a model with different environments to evaluate our model performance in timestamps and reward gain with other baselines models.

III-B Distributional Reinforcement Learning

We introduce a state that uses quantile regression to approximate risk-sensitive strategies, in other words, an optimization method for reinforcement learning via a full quantile function of the behavioural reward distribution.

We always model the agent and the environment as a Markov decision model[19] in classical reinforcement learning designs. Here, we define the rule as a mapping from states to distributions constantly changing with actions. For any agent that follows the rule , we define the discounted sum of future rewards as a function . Clearly, varies with state and action as following

| (5) |

, where is a discount factor and . The action-value function can be defined as , in which is the Bellman equation operator[20].

| (6) |

Azer’s research[21] on reinforcement learning proposed a distributed reinforcement learning theory that values the benefit distribution rather than the action-value function . According to the analysis[22], it can be found that distributed reinforcement learning methods have substantial advantages in terms of sample complexity, final performance, and hyperparameter processing robustness.

III-C Quantile Regression Approaching

According to Bellemare’s study[23], the distributed Bellman operator is a contraction in the p-Wasserstein metric. There are two main theories for minimizing the Wasserstein metric. One is distributed reinforcement learning using quantile regression and shows that the resulting predictive distributed Bellman operator is a contraction of the -Wasserstein metric by properly selecting the quantile target[24]. Another study shows that the original class of classification algorithms is a contraction in the Cramér distance, the L2 metric on the cumulative distribution function.[25]

QR-DQN minimizes the Wasserstein distance distribution Bellman objective by estimating the quantile function at precisely chosen points. This estimation uses quantile regression, which has been shown to converge to the true quantile function value when minimized using stochastic approximation. In QR-DQN, the stochastic returns are approximated by a homogeneous mixture of N Diracs,

| (7) |

in which, is assigned a fixed quantile target . We can use Huber quantile regression loss to train this quantile target with threshold .

| (8) |

| (9) |

In practice, we use the IQN as the baseline and implement the gradient method with the GAN model and calculate the GAN score as the gradient score and calculate the new gradient with the Huber loss (see equation 9) to get the reward score and use this new reward score into the agent.

| (10) |

III-D Distributional Bellman Equation

Define a random variable , whose support is

by assumption. Given a target policy , our goal is to estimate the distribution of given any state

and . We use to denote the product space of cumulative rewards, state and actions, i.e., . For any , define either a conditional density

or a conditional probability mass function of given a state-action pair as

where indicates actions are selected according to . In order to estimate given any , we have the distributional Bellman equation that under Assumptions (A1) and (A2), for any , , and , the following equation holds.

Motivated by the distributional Bellman operator is -contraction under a modified Wasserstein distance, and the recently developed generative adversarial networks (GANs), we propose a Wasserstein GAN-based distributional policy evaluation method to estimate will discuss the benefits of using GANs. For any continuous function defined over , the following equation holds. {strip}

| (11) |

Motivated by equation 11, we propose to use generative adversarial networks to estimate of given state-action pair . Specifically, we aim to learn a generator that takes a uniform random variable , i.e., uniform (0, 1), as the input, and the output is a pseduo sample as one realization of target conditional distribution . This is also inspired by the conditional GANs introduced by Mirza and Osindero[26]. In typical GANs, the generator is trained by minimizing some divergence between the conditional distribution of and . Alternatively, a generator is trained such that the joint distribution of is the same as , where is a random variable that has the same distribution of given and . However, different from conventional distribution learning tasks, neither nor is not observed in our problem. Nevertheless, the distributional Bellman equation and the equation11 indicate us to obtain by solving the following min-max optimization problem, which has the similar spirit of Wasserstein-GANs[27]. {strip}

where are set to independently follow uniform(0,1), and is a function mapping from to .

In practice, as in conventional GANs, we restrict to some set of neural networks in addition, which can provide tractable computation. In addition, by powerful deep neural networks, we can handle potential high-dimensional state space . Similarly, we also use some set of neural networks to approximate the generator .

III-E Estimation and Algorithm

Our goal is to find a generator to approximate the conditional distribution of given and . This is, in general, a very challenging problem, especially when is a high-dimensional state, e.g., the number of states in is huge. Thus, suggested by Haas and Richter[28], we impose some structure assumptions on the underlying true distribution. In particular, we assume the distribution of lie in a -dimensional subspace with . Based on this consideration, we define the following function class for the generator.

Let be the class of all measurable functions such that any component only depends on arguments and lies in .

Given the offline dataset , we use the empirical average to approximate the objective function and solve the following optimization to estimate the generator . {strip}

| (12) |

| (13) |

where and are two independent samples from uniform (0, 1). The optimal solution of the optimization above always exists because the inner maximization problem is Lipschitz with respect to and is Lipschitz with respect to all parameters in with bounded support. Similarly, the inner maximization problem also has an optimal solution. Stochastic gradient descent algorithms as the state-of-the-art can be implemented to solve the above optimization problem. We denote the final minimizer as by , which can generate a sequence of pseudo samples for some integer for approximating the distribution of given state-action pair under the target policy . This provides a flexible way for estimating interesting quantities related to the target policy .

In all our examples, if is consistent and (or ) diverges to infinity, , and are all consistent.

IV Implementation

Consider the neural network structure used by the IQN [4]. Let be the function of the functions computed by the convolutional layers and f be the function with the subsequent fully-connected layers mapping to the estimated action-values. Also, there is one additional function computing an embedding for the sample point .For our network, we use the same functions , and f as in IQN. We make an improvement on the Quantile loss with our new methods.

Our training algorithm in IGN after the agent generates the states and actions, we use the original DQN model as the generator and use the three hidden layers neural network and ReLU activation function as the discriminator to train the model in critic with the algorithm 2 [17]. In the calculation of the Quantile loss, we put the result of the quantile result into the discriminator and use the equation 14 to get the GAN loss. Our IGN model optimizes with the gradient result of the GAN loss. Finally, we will use the Huber loss quantile result like an equation 9 to update the discriminator parameter in the IGN models .

Furthermore, We expected that N, the number of samples in U([0, 1]), would increase the sample complexity of IQN, with larger values leading to faster learning. Also, when we set the with N = 1, we would reach DQN performance. This would confirm the theory that many distributional RL algorithms’ higher performance is due to their role as auxiliary loss functions, which would vanish if N = 1 was used.

To achieve model evaluation work, we make evaluation agent into our IQN model as the fixed policy. We use the fixed policy to get the result of the fixed network and use the new policy training in the online policy. We use the same quantile loss calculation function in the policy evaluation.

Also we use policy optimization and policy evaluation to show the performance of our IGN with the baselines. We implement the data collector function with Tensor-board logs. We also implement the Wasserstein-1 distance [17] and discriminator loss calculation function in the visualization method. This will be discussed more on the experiment part.

| (14) |

V Experiment

In order to show the state-of-art of our IGN model, we will show the performance of policy evaluation and policy optimization in our experiment. In our work, we compare IGN performance with the final reward and the timestamp the final reward get. And all of the work is based on the dataset - 57 Atari 2600 games in the ALE. We focus on two main questions, as follows.

-

1.

Whether the fixed model in IGN will use fewer timestamps in policy evaluation

-

2.

Whether our IGN model will use fewer timestamps to achieve the final reward than IQN, FQF.

Firstly, let us discuss policy optimization. We compare our method with IQN, FQF and other baselines from the efficiency of the model and best reward getting with the policy. And We measure Mean, Q-value and W-distance from eight different models (IQN, IGN, Seaquest, Qbert, Pong, Breakout, MsPacman, Freeway) and comparing with the result with our model.

Then in policy evaluation, we use several policies to train the specific environment with the fixed policy. In our work, we want to show whether the fixed policy can make new environment training become more efficient. We have Q-distribution for a specific evaluated policy based on different training steps (timestamps).

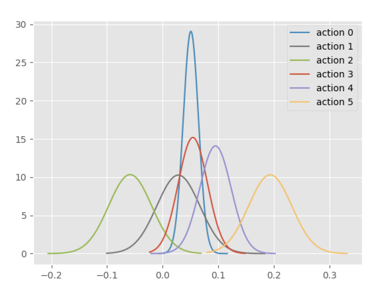

Also, we introduce the method of Monte Carlo Estimation to determine the policy. Monte Carlo Estimation is being used when state values are not enough since we do not have the model of the environment. The make use of this method will estimate action values instead of state values; for here, action values include LEFT, RIGHT, LEFTFIRE, RIGHTFIRE, NOOP and FIRE. The goal for this method is to have an estimation of the optimal action values. The result graphs show different actions with different scores. For comparison, Q-online estimation is also graphed for six actions.

VI Result

Firstly, we do the experiment to show the performance of our model work in the policy evaluation. The graphs on the left side will have Q-Fixed and Q-Online on the graph, where Q-Fixed is the Q-function of the fixed policy. The graphs on the right side will have Wasserstein-1 distance and Wasserstein-2 distance between Q-Fixed and Q-Online. The example graph is based on IQN and IGN for the Pong environment (Fig. 3, Fig. 4). For other fixed policies, graphs will be in the appendix. In our experiment, It is easy to realize our IGN can reduce the W-distance in the policy evaluation quicker than the IQN.

In order to see how the model evaluation work in our IGN model, we use the fixed policy ( Pong environment and IGN pretrained policy) to see how the training work in the several timestamps. In each graph, the left figure represents the state of the game; the top-mid number represents the training steps where k means thousands; the mid indicate current action; the right graph will show Q-distribution of Q-Fixed and Q-online where Q-value on the X-axis and density on the Y-axis. The example graph is being shown here for 150k training steps(Fig. 5) and 1700K training steps (Fig. 6), other results with different training steps will be in the appendix.

Also, we use the Monte Carlo Estimation and Q-online Estimation to visualize the estimation distribution of the reward in our policy evaluation work. The Fig. 7 shows Monte Carlo Estimation on six actions and the Fig. 8 shows Q-online Estimation on six actions. The x-axis of both figures shows the reward of the actions getting in estimation. The y-axis shows the number of estimates getting a specific reward score for actions with a unit of thousands (k).

Finally, we will use the tensor-board to show the result of the policy optimization result and compare it with other policy methods to show our model is states-of-art. The graphs will have the training process of IGN and IQN with blue and red color. The left graph shows the returned test result, and the right graph shows the returned train result. (Fig. 9). In the graph, we found our model IGN can get the same final reward score as the IQN model but use fewer timestamps to arrive at the highest reward score than the IQN model. It indicates our model can have efficiency in the model training.

VII Conclusion and Future work

In our work, we make a new model, IGN, which shows a state-of-art performance in distributional reinforcement learning. To increase the algorithm’s data efficiency, IGN may be trained using as little as one sample from each state-action value distribution or as many as computational restrictions allow. In addition, IGN enables us to broaden the scope of control rules to include a wide range of risk-sensitive policies linked to distortion risk measurements. Also, we show the state-of-art performance of our IGN with other baselines on the Atari-57 benchmark. However, our model still has some limitations. Firstly, although our model needs less timestamp in the policy optimization and evaluation, the model still needs a lot of real-time training. Because we use the GAN model, it requires a higher cost than the IQN, FQF model. We hope we can find one method which has a similar or better performance than IGN but less cost required. Also, our model still has some sensitivity with the robustness of the noise in the training process work. We are considering using the transformer[29] and Kernel prepossessor [30] to see whether the model performance will become better.

References

- [1] M. J. Sobel, “The variance of discounted markov decision processes,” Journal of Applied Probability, vol. 19, no. 4, p. 794–802, 1982.

- [2] W. Dabney, M. Rowland, M. G. Bellemare, and R. Munos, “Distributional reinforcement learning with quantile regression,” 2017.

- [3] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. Riedmiller, “Playing atari with deep reinforcement learning,” arXiv preprint arXiv:1312.5602, 2013.

- [4] W. Dabney, G. Ostrovski, D. Silver, and R. Munos, “Implicit quantile networks for distributional reinforcement learning,” 2018.

- [5] D. Yang, L. Zhao, Z. Lin, T. Qin, J. Bian, and T. Liu, “Fully parameterized quantile function for distributional reinforcement learning,” 2020.

- [6] Y. Frégier and J.-B. Gouray, “Mind2mind: transfer learning for gans,” in International Conference on Geometric Science of Information. Springer, 2021, pp. 851–859.

- [7] V. Kurin, S. Nowozin, K. Hofmann, L. Beyer, and B. Leibe, “The atari grand challenge dataset,” 2017.

- [8] F. Zhuang, Z. Qi, K. Duan, D. Xi, Y. Zhu, H. Zhu, H. Xiong, and Q. He, “A comprehensive survey on transfer learning,” 2020.

- [9] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” 2015.

- [10] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” 2015.

- [11] D. J. Luckett, E. B. Laber, A. R. Kahkoska, D. M. Maahs, E. Mayer-Davis, and M. R. Kosorok, “Estimating dynamic treatment regimes in mobile health using v-learning,” Journal of the American Statistical Association, 2019.

- [12] C. Shi, S. Zhang, W. Lu, and R. Song, “Statistical inference of the value function for reinforcement learning in infinite horizon settings,” arXiv preprint arXiv:2001.04515, 2020.

- [13] Q. Liu, L. Li, Z. Tang, and D. Zhou, “Breaking the curse of horizon: Infinite-horizon off-policy estimation,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- [14] M. R. Kosorok and E. B. Laber, “Precision medicine,” Annual review of statistics and its application, vol. 6, pp. 263–286, 2019.

- [15] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks,” 2014.

- [16] I. Gulrajani, F. Ahmed, M. Arjovsky, V. Dumoulin, and A. C. Courville, “Improved training of wasserstein gans,” Advances in neural information processing systems, vol. 30, 2017.

- [17] D. Freirich, T. Shimkin, R. Meir, and A. Tamar, “Distributional multivariate policy evaluation and exploration with the bellman gan,” in International Conference on Machine Learning. PMLR, 2019, pp. 1983–1992.

- [18] C. Villani, Optimal transport: old and new. Springer, 2009, vol. 338.

- [19] J. Von Neumann and O. Morgenstern, “Theory of games and economic behavior,” 2007.

- [20] R. E. Bellman, “Dynamic programming,” 1957.

- [21] M. G. Azar, R. Munos, and B. Kappen, “On the sample complexity of reinforcement learning with a generative model,” arXiv preprint arXiv:1206.6461, 2012.

- [22] G. Barth-Maron, M. W. Hoffman, D. Budden, W. Dabney, D. Horgan, D. Tb, A. Muldal, N. Heess, and T. Lillicrap, “Distributed distributional deterministic policy gradients,” arXiv preprint arXiv:1804.08617, 2018.

- [23] M. G. Bellemare, W. Dabney, and R. Munos, “A distributional perspective on reinforcement learning,” in International Conference on Machine Learning. PMLR, 2017, pp. 449–458.

- [24] W. Dabney, M. Rowland, M. Bellemare, and R. Munos, “Distributional reinforcement learning with quantile regression,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, no. 1, 2018.

- [25] M. Rowland, M. Bellemare, W. Dabney, R. Munos, and Y. W. Teh, “An analysis of categorical distributional reinforcement learning,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2018, pp. 29–37.

- [26] M. Mirza and S. Osindero, “Conditional generative adversarial nets,” arXiv preprint arXiv:1411.1784, 2014.

- [27] M. Arjovsky, S. Chintala, and L. Bottou, “Wasserstein generative adversarial networks,” in International conference on machine learning. PMLR, 2017, pp. 214–223.

- [28] M. Haas and S. Richter, “Statistical analysis of wasserstein gans with applications to time series forecasting,” arXiv preprint arXiv:2011.03074, 2020.

- [29] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [30] H. Wang, H. Luo, and Y. Wang, “Mbgdt:robust mini-batch gradient descent,” 2022. [Online]. Available: https://arxiv.org/abs/2206.07139