IDRetracor: Towards Visual Forensics Against Malicious Face Swapping

Abstract

The face swapping technique based on deepfake methods poses significant social risks to personal identity security. While numerous deepfake detection methods have been proposed as countermeasures against malicious face swapping, they can only output binary labels (Fake/Real) for distinguishing fake content without reliable and traceable evidence. To achieve visual forensics and target face attribution, we propose a novel task named face retracing, which considers retracing the original target face from the given fake one via inverse mapping. Toward this goal, we propose an IDRetracor that can retrace arbitrary original target identities from fake faces generated by multiple face swapping methods. Specifically, we first adopt a mapping resolver to perceive the possible solution space of the original target face for the inverse mappings. Then, we propose mapping-aware convolutions to retrace the original target face from the fake one. Such convolutions contain multiple kernels that can be combined under the control of the mapping resolver to tackle different face swapping mappings dynamically. Extensive experiments demonstrate that the IDRetracor exhibits promising retracing performance from both quantitative and qualitative perspectives.

Index Terms:

AI Security, Deepfake Retracing, Visual Forensics.I Introduction

Face swapping by deepfake methods [5, 3, 9, 30, 40, 20, 32] has achieved remarkable advancements in recent years. Face swapping refers to generating a fake face by replacing the identity (ID) of the target face with the source face ID while preserving the target face attributes (e.g., pose, facial expression, and lighting condition). Such face swapping techniques can pose serious risks to personal identity security. Fake faces that are convincingly realistic can distort public perception and potentially endanger the reputation of the source subjects. Hence, many deepfake detection methods have been proposed to tackle malicious face swapping content. Deepfake detection can output a binary label that indicates whether an image is forged, which can provide judgment results for people to identify deepfake images. However, given that the output of deepfake detection is simply a binary label, there is an inherent lack of traceable and reliable evidence. Meanwhile, identity information and reputation are of extraordinary value to some people (like politicians and celebrities). This situation consequently demonstrates the inadequacy of deepfake detection [25, 1] and thus urges toward visual forensics [35] and target face attribution.

In previous works for visual forensics, [35] and the following works [34, 8] introduce the GAN fingerprint to attribute the input forgery content to the source architecture. Furthermore, Sequential Deepfake Detection (Seq-Deepfake) [25, 31] is proposed to recover the original face against sequential facial editing. Motivated by these works, we advance beyond mere detection against malicious face swapping content.

Supposing the forged fake face could be retraced and reconstructed back to its original target face, it would yield more reliable evidence to assess the authenticity of the fake faces for the source subjects who suffered from malicious face swapping. Such a process not only allows for the identification of the original target face based on the retraced one, confirming the deception of the fake face image with reliability, but it can also facilitate tracing the potential perpetrator, aiding in accountability and rights enforcement. We illustrate the face retracing task in Fig. 1.

There has been an initial attempt at inversing the original target face from the fake one via DRNet [1]. However, DRNet requires that the target ID has been seen during training, thereby limiting their ability to handle arbitrary source IDs. Whereas in real-world applications, it is uncommon for the target ID to be included in the training data. Moreover, prior methods are designed to retrace a specific face swapping method, yet the face swapping method used by the malicious perpetrators is usually unknown, which further limits the applicability of DRNet. Consequently, proposing a retracing method with feasibility to resist malicious face swapping remains a considerable challenge.

The feasibility of the retracing task is based on two premises: 1) The fake faces generated by existing deepfake methods inevitably retain implicit target ID information [13, 7]. 2) The input fake faces share high ID similarity with the source faces [3, 9, 30, 20]. Then, we posit that it is feasible to solve the original target face based on the given fake face via inverse mapping. Firstly, we craft a source-fake dataset that contains a large number of various original target IDs and multiple face swapping methods for a designated source subject. This dataset includes abundant information for the trained network to retrace arbitrary target IDs and multiple face swapping methods. However, learning from such a complex dataset poses two major issues: 1) The abundance of original target IDs leads to more challenging mapping relations to be learned. 2) Since different face swapping methods can lead to different mapping rules from target to fake, the form of residual target ID artifacts may vary with each distinct face swapping method, thus leading to alterations in the rules of inverse mapping when reconstructing the original target face. These issues substantially complicate the process of learning the correct inverse mapping solution space for a retracing network.

In this paper, we present the first practical retracing framework named IDRetracor for visual forensics and target face attribution against malicious face swapping. Unlike previous watermark-based [38] or trigger-based [29] methods, IDRetracor is the first method that does not require any pre-modification on the original benign data. Specifically, we introduce the Mapping-aware Convolution that contains multiple groups of convolution kernels to tackle the common and unique mappings of various target IDs and multiple face swapping methods. We then deploy a Mapping Resolver that can resolve the possible mappings of the input face, thereby guiding the mapping-aware convolutions to recombine their kernels dynamically. Therefore, the recombined kernel can fit different inverse mappings and output retraced faces within the solution space corresponding to the original target face identity. This framework allows us to retrace multiple face swapping methods and arbitrary target IDs, even if the original target ID is not seen during training. The contributions of this paper can be summarized as follows:

-

•

We explore the feasibility of retracing the original target face from the fake one, thus pushing the current countermeasures against malicious face swapping towards visual forensics and target face attribution.

-

•

We designed an IDRetracor based on mapping-aware convolutions for retracing, which is trained on a target-fake dataset with a large number of target IDs. IDRetracor can adjust the solution space of inverse mapping dynamically to ensure the retraced face is within the space of the original target face identity.

-

•

Our experimental results clearly exhibit promising performance under the circumstance of arbitrary target IDs and multiple face swapping methods. Qualitatively, the original identity can be intuitively identified from the retraced faces. Quantitatively, the Arcface similarity to the original target exceeds 0.65.

II Related Work

II-A Deepfake Detection

Deepfake detection is one of the most popular countermeasures against malicious forgery content. Previous methods [22, 37, 18, 10, 27, 33, 7, 11, 21] for deepfake detection can distinguish the statistical or physics-based artifacts and then recognize the authenticity of visual media. Huang et al.[13] propose to leverage the implicit identity in the generated fake faces to detect face forgery. However, these countermeasures have two major problems:

1) Lack of reliability: Rather than furnishing any concrete evidence of forgery content, they offer only a binary label (Fake/Real), which appears less convincing and reliable.

2) Lack of traceability: Individuals may be inclined to pursue accountability and rights enforcement against malicious perpetrators, while no further information for tracing is provided by the results of deepfake detection.

These issues strictly constrain the utility of deepfake detection and demonstrate the necessity for an approach that facilitates visual forensics [35] and target face attribution.

II-B Visual Forensics against Image Forgery

Toward visual forensics, there are many efforts [35, 34, 8, 36, 14] focusing on the fake contents generated by the Generative Adversarial Network (GAN). Specifically, they are designed to extract GAN fingerprints from the forgery images for visual forensics and model attribution. Given that a specific GAN architecture has a unique GAN fingerprint, it can be employed to attribute fake images to the source architecture. Such previous works on GAN fingerprints have demonstrated the severe inadequacy of numerical detection results for forensics, while also validating the feasibility of learning network mappings and the common feature of residual artifacts on the generated images.

Moreover, Shao et al. [25] propose Sequential Deepfake Detection (Seq-Deepfake) to recover the original face against sequential facial editing. Xia et al. [31] further propose MMNet to enhance the performance of Seq-Deepfake. Their task is relatively easier since the modification of facial editing is essentially minor than face swapping. Nevertheless, Seq-Deepfake also demonstrates the prevalent concern about the inadequacy of deepfake detection (i.e., mere binary outcome) and the strong demand for visual forensics.

Focusing on malicious face swapping, Ai et al. [1] propose the Disentangling Reversing Network (DRNet) for face swapping inversion. However, DRNet is limited to retracing target IDs that have been seen during training, whereas in application scenarios, the reversing network often deals with a large number of unknown target IDs. This issue essentially undermines the capability of DRNet to provide substantial value for practical applications. Therefore, DRNet can only be considered a preliminary exploratory attempt.

III Methodology

III-A Premise of Retracing the Target Face

The process of face swapping can be defined as a mapping:

| (1) |

where , , denote the face images of fake, source, target, and denotes a mapping for a specific face swapping method. Our retracing task aims to reconstruct the based on the given , and obtain the final retraced face (). The complete solution space of can be written as:

| (2) |

where , , and denote the channel, height, and width of the image, respectively. is a subset of that includes solutions that share an identical identity with image . We can define as:

| (3) |

where denotes the face similarity that can be objectively calculated via pre-trained models (e.g., Arcface [6] and Cosface [28]), and denotes the threshold of similarity score.

The integrated task of face swapping and retracing fundamentally serves as an image translation task, the feasibility of which is substantiated by CycleGAN [39]. Concurrently, the feasibility of the retracing task can be justified from a more intuitive perspective. Suppose that we are managing to retrace the current fake face , if we traverse through all possible in set and generate fake faces using every with the source face, we can establish an assert that it is guaranteed to find a specific for which the generated fake face is pixel-wise identical to , and thus determines that is the retraced face of .

However, there is one exceptional many-to-one mapping condition that undermines the feasibility: a series of target faces that have completely distinct identities are mapping to a pixel-wise identical fake face. Formally, there exists such that while and . In this condition, it becomes inherently infeasible to locate the specific since its inverse mapping becomes a one-to-many mapping that the corresponding original cannot be specified from . Consequently, given that the identities within are distinct, a misguided choice in inverse mapping will culminate in an incorrect identity being attributed to the retraced face. Such a condition could arise in the scenario of the face swapping method being flawless and leaving no residual ID information in the fake face. Alternatively, it could also happen in the scenario in which the swapping method is too poor that the generated fake face shares little identity similarity with the source face. Therefore, the feasibility of the retracing task cannot be fundamentally established without excluding the two mentioned scenarios that lead to the many-to-one mappings with distinct target identities.

Fortunately, a flawless face swapping method that perfectly swaps the identity and leaves no implicit identity information may not exist currently. Specifically, the definition of target and source identity for the existing face swapping methods appears vague. That is, the existing face swapping methods deploy distinct deep learning networks to extract the identity information for training, which is a black-box extracting process and cannot strictly and consistently represent the real abstracted identity. Consequently, as demonstrated by Huang et al.[13] and [7], the implicit identity information related to the target face is inevitably retained in the fake one. For the second scenario, a poorly forged fake face cannot pose a threat to the source subject, hence there is no necessity for retracing the poorly forged faces. Therefore, we assume two premises for the retracing task:

- Premise 1.

-

retains implicit identity related to .

- Premise 2.

-

.

As shown in Fig. 2, these premises contribute to further constraining the solution space for the reconstructed , making it possible to fall within . In summary, within the scope of this paper, we study the face swapping methods that empirically obey these two premises, which include all existing face swapping methods to the best of our knowledge. While under conditions where these premises remain intact, the retracing task for visual forensics is theoretically feasible.

III-B Constructing an IDRetracor

Here, we extend the discussion from the feasibility to the practical application, and the applicability scope of the proposed method is illustrated in Fig. 4. Notably, the ideal applicability scope conflicts with Premise 2: Without prior experience of the source identity, it is inherently impossible to verify the authenticity of Premise 2 and thus leads to its ineffectiveness. Hence, the proposed method successfully achieves both feasibility and the broadest possible scope of applicability.

To ensure tackling malicious face swapping in real-world scenarios comprehensively, it is necessary that arbitrary target and multiple face swapping methods can be correctly retraced. We consider utilizing a neural network to solve for in Eq. 1. It is obvious that the network should learn the mapping of and the feature of to successfully reconstruct . And under the premise of identity residuals, we may achieve visual forensics and target face attribution.

First, we craft a target-fake dataset for a specific source subject, which incorporates a large number of different target IDs and multiple face swapping methods. The abundant information included in the dataset allows the network to learn different mappings and extend its generalization to arbitrary target faces. Notably, we further integrate real source faces as the source-source sample pairs. By training on these sample pairs, the network is capable of producing output that is nearly identical to the input when it is a real one. This implementation allows the network to handle the input images comprehensively, that is, a fake face yields a retraced output, while a real face leads to an output that closely approximates the input.

However, learning on such a complex dataset is a challenging endeavor. Specifically, retracing arbitrary target faces requires learning common features of across a large variety of target-fake image pairs, which intensifies the complexity of network optimization. Meanwhile, learning multiple face swapping methods involves the learning of diverse mappings represented by , as opposed to solely focusing on a single .

To address these issues, we propose IDRetracor to retrace the original target faces. The architecture of IDRetracor is shown in Fig. 3. We use mapping-aware convolutions to tackle complex mappings and introduce a mapping resolver to dynamically resolve the potential solution space of the current input. The resolved results will guide the mapping-aware convolutions to retrace the solution and ensure falls within .

Mapping-aware Convolution.

For and , there exist common mappings and features as well as unique ones. Consequently, employing one part of parameters to discern the common mappings and features, while adaptively assigning different parameters for the unique ones, can achieve the effect of fitting the current through parameter recombination.

Inspired by [4], we introduce mapping-aware convolution that contains groups of convolution kernels, denoted by . can learn the common contents and unique ones by different kernels. During the retracing process, the kernels will be adaptively recombined to different final kernels and they will be employed for different input images. The recombination is guided by a weight vector , which is produced by the mapping resolver. Subsequently, the final kernels for retracing can be written as , where denotes the index of the convolution kernel in our mapping-aware convolution. Hence, through recombination, the generated can tackle both common and unique parts dynamically, thereby fitting to the corresponding and produce .

Mapping Resolver.

To resolve the potential mappings of the input images, we deploy a mapping resolver, denoted by . The mapping resolver aims to learn how to resolve different mappings and represent the resolved results through a weight vector , which can be utilized to guide the subsequent mapping-aware convolutions.

Given that different face swapping methods are considered different mappings, could be interpreted as a mapping classifier to some extent. Consequently, the mapping resolver can utilize a standard classifier as its backbone architecture, for which we introduce ResNet18 [12]. Then, we employ the Cross-Entropy Loss, a widely adopted constraint in classification tasks, to regulate the resolver. Additionally, inspired by label smoothing [26], we introduce fusion regularization to promote the generation of non-binary weight, which brings three advantages to the IDRetracor: 1) Prevents the IDRetracor from regressing into an ensemble of different single-mapping models. 2) Encourages processing common content with sharing parameters while processing unique content with distinct parameters. 3) Provides the resolver with adaptability and generalization to some anomalous training image pairs, which inevitably occurs during crafting such a large dataset. Finally, the resolving loss to constrain the resolver can be written as:

| (4) |

where denotes the index of the current face swapping method and denotes the one-hot value after fusion regularization. Additionally, is set to when the input training sample is the source-source sample pair, which allows each group of convolutions to evenly learn the principles for processing real faces.

Overall Architecture.

Firstly, given an input fake face , the IDRetracor can resolve the mappings of and produce a weight vector via mapping resolver: , where is parameterized by . Then, a three-layer UNet [23] is employed as our reconstruction network for the original target face retracing. Notably, the UNet we deployed is a modified version, denoted by , where all vanilla convolutions are replaced by the proposed mapping-aware convolutions. During retracing, is guided by and recombines its kernels dynamically for the final retraced face , where is parameterized by . To constrain , we introduce a pixel-wise reconstruction loss , defined as follows:

| (5) |

where denotes the Euclidean distance.

Moreover, since we aim to reconstruct the target face that has an identical ID to the original one, we implement to constrain the identity information of the retraced face. is calculated based on the cosine similarity:

| (6) |

where and denote the feature vectors of and extracted by Arcface recognition model [6]. Despite a complete pixel-wise identicalness (i.e., solely optimizing to zero without introducing ) suggesting a complete identity alignment, introducing remains significant. This is due to the fact that cannot be always effectively minimized while relaxing the pixel-wise constraint via may lead to superior alignment with high identity similarity.

Overall, the total loss function that is used to train the IDRetracor can be written as:

| (7) |

where and denote the trade-off parameters for and , respectively.

IV Experiments

IV-A Implementation Details

Dataset.

The most widely adopted public datasets for face swapping are Celeb-DF-v2 [19] and FaceForensics++ [24]. However, these datasets do not align with the demands of our task due to two primary reasons: 1) They offer an inadequate number of target IDs that pair with one specific source ID. This inadequacy severely limits the generalization of a retracing network to arbitrary target IDs. 2) The face swapping methods employed to generate these datasets remain undisclosed, resulting in the dysfunction of .

Consequently, we craft datasets for different source subjects based on VGGFace2 [2]. The dataset includes four face swapping methods reproduced following their official codes, that is, SimSwap [3], InfoSwap [9], HifiFace [30], and E4S [20]. For each face swapping method, we pair the specific source subject with various target IDs from VggFace2. Given that each ID includes multiple faces with distinct face attributes, we pair each different face and finally obtain 150000 training samples and 10000 testing samples for each source subject and face swapping method. The training set for each face swapping method contains 150,000 target-fake sample pairs and 4,636 target IDs, while the test set contains 10,000 target-fake sample pairs and 1,301 target IDs. Following Fig. 4, the target IDs and specific source faces for generating fake faces are completely unrepeated between the testing and training sets. We introduce 180 source-source sample pairs where 140 for training and 40 for testing. All faces are aligned and cropped to 224224. Examples of the fake faces in the dataset are presented in Fig. 6. Considering the inconsistent performances of every face swapping method, they are not always capable of generating optimal fake faces for training (as shown in row 5, column 4). Such suboptimal data, which can be perceived as noise, may obstruct the learning process of the network. By introducing label smoothing [26] into IDRetracor, we can to some extent mitigate this problem by preventing the model from overfitting to noisy data.

Although this dataset can be easily reproduced following the details we provided, we are still willing to release the dataset to the public subsequent to the publication of this paper. In this paper, the experimental results on the dataset of Bingbing Fan (Fan) will serve as the representative for comparisons and validations (See Fig. 5(a) for Fan).

Baselines.

Since we introduced the first applicable retracing method, there exists no previous baseline for comparison. To further investigate the retracing task, we trained two more models as described below. 1) VU-S: Vanilla UNet [23] trained with samples generated by a Single face swapping method. In our experiments, we manually deploy the model corresponding to the face swapping method that generates the input fake images. 2) VU-M: Vanilla UNet trained with samples generated by Multiple face swapping methods. UNet backbone is selected to experimentally verify the feasibility of the retracing task. It can be optionally replaced by other image-to-image translation backbones. All methods are trained in an end-to-end manner with 150 epochs and batch size 32. We adopt Adam [17] optimizer with and and the learning rate is set to 0.003. The hyper-parameters for IDRetracor are set as , , , and . All experiments are implemented in PyTorch on one NVIDIA Tesla A100 GPU.

Metrics.

The quantitative evaluations are performed in terms of two metrics: ID similarity and ID retrieval. For ID similarity, we employ two face recognition models (i.e., Arcface [6] and Cosface [28]) to extract the feature vectors of identity, denoted by ID Sim. (arc/cos). ID retrieval is employed to measure the model capability of retracing the potential perpetrators. To compute ID retrieval, we first randomly select 1000 target faces with different IDs for each face swapping method from the testing set and extract their feature vectors via the Arcface model. Then, we retrace the fake faces that are paired with the selected target faces. Finally, the ID retrieval is measured as the top-1 and top-5 (ID Ret. (t1/t5)) matching rates (%) of the retraced faces and their corresponding target faces.

IV-B Retracing Performance

| Method | ID Sim. (arc/cos) | ID Ret. (t1/t5) | Cross-FS |

|---|---|---|---|

| Fake | 0.1102/0.1397 | 5.53/10.35 | – |

| VU-S | 0.5335/0.6570 | 72.70/85.13 | ✗ |

| VU-M | 0.4848/0.6267 | 72.63/83.18 | ✓ |

| IDRetracor | 0.6520/0.7011 | 85.70/96.00 | ✓ |

Quantitative Results.

As shown in Tab. III, we present the ID Sim. (arc/cos) and ID Ret. (t1/t5) between the retraced faces and the original target faces. Cross-FS denotes the ability to cross multiple face swapping methods. Notably, the proposed IDRetracor outperforms VU-M by over 20% and is even higher than VU-S, which is not Cross-FS. Such performances reveal the superiority of the proposed mapping-aware convolutions, that is, they can adapt the mappings of current input dynamically and retrace within the precise solution space of the original target face.

For a better illustration, we visualize the distribution of Arcface similarity of models that are Cross-FS on the testing set (see Fig. 8). The distributions demonstrate that the retraced faces can be used as reliable evidence for visual forensics and target face attribution.

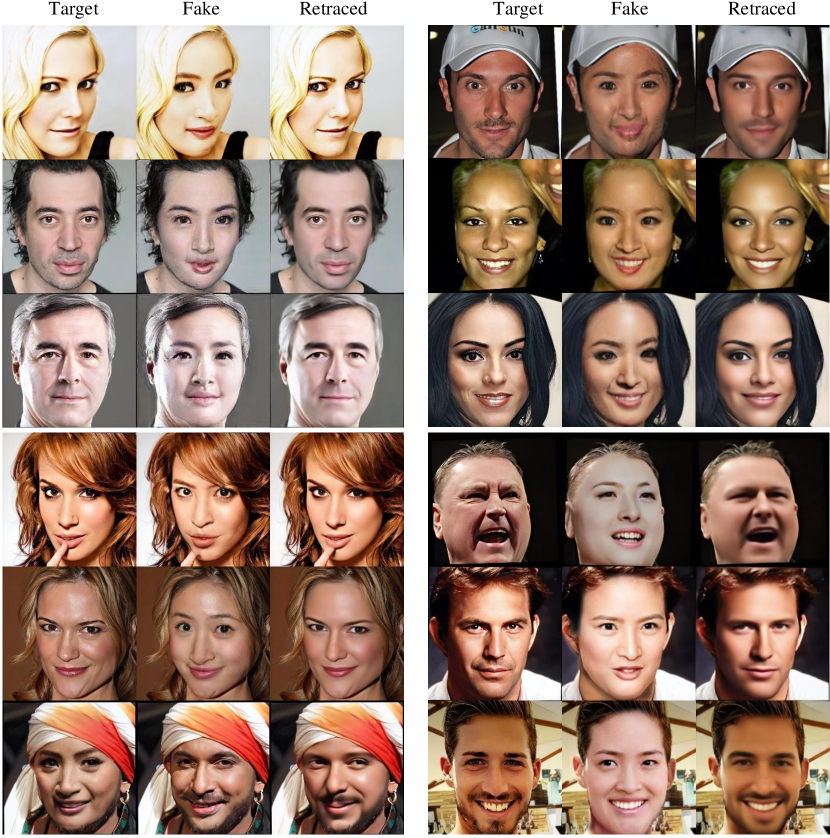

Qualitative Results.

As shown in Fig. 7, we present the retraced faces of VU-S, VU-M, and IDRetracor against four state-of-the-art face swapping methods [3, 9, 30, 20]. Considering that SimSwap modifies minor content of the target face during face swapping, the process of retracing becomes considerably easier. Consequently, all three models exhibit strong performance when retracing fake faces produced by SimSwap, while IDRetracor demonstrates a heightened capability of detail restoration. For HifiFace and InfoSwap, which erase more target ID information from the original target faces, IDRetracor can produce retraced faces that are more consistent with the original ID of the target faces. As for the hardest task E4S, the target feature residuals including skin color and facial expression are excessively removed from their original target faces. Despite the immense challenge of fully reconstructing details (e.g., makeup and wrinkles), IDRetracor can still produce retraced faces with the highest identity similarity with the original target faces.

IV-C Ablation Study

Effect of Identity Loss.

To validate the effectiveness of intensity loss (), we present the retraced results with different in Fig. 9. Without , the retraced results may contain some erroneous eye details (see the third row), while other facial features appear overly smooth (see the first and second rows). This could be due to the challenging conditions in the solution space, where solely constrained by causes the model to lean towards creating a generic face, rather than a result more closely aligned with the original target face ID. In contrast, with the constraint of , the model tends to supplement details that contribute to enhancing identity similarity. We also conduct additional experiments when is large (i.e., ) and solely using . The results demonstrate that primarily functions to preserve the contour and color of the retraced results, while acts as an auxiliary constraint to enhance the details.

Effect of Fusion Regularization.

Here, we analyze and validate the effectiveness of fusion regularization through experiments. As shown in Fig. 10, we measure the average Arcface similarities under different theta values on the testing set. When , the model degrades to a manner of mixed training like VU-M, where the model has a poor resolving ability for shared content and unique content. The worst performance occurs when , as the generated weight vector is almost evenly distributed. When , that is, when fusion regularization is not deployed, the model degrades to a framework that first identifies the face swapping method, and then processes the fake faces with the corresponding VU-S. Therefore, we set where the IDRetracor exhibits the best performance.

| ID Sim. (arc/cos) | ID Ret. (t1/t5) | |

|---|---|---|

| Unseen | 0.6520/0.7011 | 85.70/96.00 |

| Seen-SF | 0.6608/0.7327 | 87.53/96.35 |

| Seen-TI | 0.6700/0.7458 | 86.96/96.75 |

Performances on Seen Target IDs and Source Faces

In Tab. II, we present the performances of IDRetracor on the testing set of seen target IDs (Seen-TI), seen source faces (Seen-SF), and unseen data. See Fig. 4 for the visualization and illustration of seen and unseen data. Notice that Seen-SF refers to the specific source faces that are applied to generate fake faces in the training set, and Unseen is the protocol we followed in all other experiments. The results show that there is minor variation in performance on seen and unseen data, which suggests that the abundant number of samples in the dataset has addressed the distinctions among Seen-TI, Seen-SF, and Unseen, thus achieving generalization.

IV-D Analysis of IDRetracor

Real Face Identity Preservation.

In Fig. 11, we substantiate the efficacy of incorporating source-source sample pairs into our model. For testing, we exclusively use source faces that are not seen during training. Qualitatively, the real-face identities are well-preserved in the output faces. We also show the Arcface similarity as the quantitative metric and the results are correspondingly promising. Therefore, the proposed IDRetracor is logically comprehensive for input reconstruction.

Semantic Consistency Verification.

To further assess the reliability of visual forensics, we design a semantic consistency verification experiment. Specifically, we randomly selected 1,000 fake faces from the test set and obtained their retraced faces. Subsequently, we perform face swapping between these retraced faces and their corresponding source faces used in the original fake face generation, yielding a set of new fake faces. If this set of new fake faces is consistent with the original fake faces, we can validate the semantic consistency of the retraced faces. As shown in Fig. 12, the experimental results verify the semantic consistency of the retraced faces.

IV-E Results on Various Datasets

Training on Public Deepfake Datasets

In Sec. IV-A, we mentioned that the two public datasets have too few target IDs, namely, a maximum of 26 target IDs for Celeb-DF-v2 [19] and a mere single one for FaceForensics++ [24]. Given that the target ID in FaceForensics++ is obviously insufficient for training a retracing network, we skip FaceForensics++ and conduct training experiments on Celeb-DF-v2 (see Fig. 13 ) following the same setting as on the crafted dataset. The poor experimental results show that we cannot train a retracing network on these public datasets, thus demonstrating the necessity of crafting our target-fake dataset. Moreover, it is also impractical to craft datasets applying the manipulation methods used by Celeb-DF-v2 or FaceForensics++. That is because they are traditional one-to-one methods that require training a new model for each target ID, while we need a massive number of target IDs in the dataset for retracing.

Cross-dataset Evaluation

Considering cross-dataset evaluation is important for real-world applications, we conduct the cross-dataset evaluation on FF-HQ [16] and CelebA-HQ [15] (trained on VGGFace2). The cross-dataset fake images are generated using target faces from FF-HQ and CelebA-HQ and exactly following the protocol that we apply for VGGFace2. Fig. 15 shows that our method can maintain promising performance in the cross-dataset evaluation both qualitative and quantitative, which further demonstrates the application potential of IDRetracor.

| Method | ID Sim. (arc/cos) | ID Ret. (t1/t5) |

|---|---|---|

| Fake (Lin) | 0.1319/0.1770 | 6.15/10.90 |

| Ours (Lin) | 0.6691/0.7110 | 87.13/96.05 |

| Fake (Ronaldo) | 0.1019/0.1585 | 5.73/8.95 |

| Ours (Ronaldo) | 0.6474/0.6998 | 86.43/93.15 |

Retracing Various Source IDs

Here, we demonstrate that the framework of IDRetracor can be applied to other source IDs effectively. We craft datasets and train the corresponding IDRetracors for two other celebrities (i.e., Ariel Lin and Cristiano Ronaldo from VGGFace2). As shown in Fig. 16, the IDRetracor can also exhibit strong retracing performance for these two source IDs, where the identity and detailed facial characters are promisingly reconstructed. In Tab. III, we also provide the quantitative metrics on these identities. Due to the varying performances of face swapping methods when dealing with different source faces, there are minor differences in the retracing results on quantitative metrics. Nonetheless, IDRetracor can still maintain effective retracing performances, indicating that our framework can be applied to any source ID.

V Failure Cases, Limitation, and Future Works

As shown in Fig. 14, we provide some faces that the IDRetracor fails to retrace. Since the learned feature of IDRetracor is majorly dependent on the residual artifacts and the specific source identity, two types of fake faces are challenging to be retraced: 1) The target attributes are undermined after face swapping (see the first and the second rows). 2) The fake faces share less similarity with the source faces, that is, the violation of Premise 2 (see the third and the fourth rows).

The dependence on a specific source ID influences the practical utility of IDRetracor. This leads to the requirement to rebuild the target-fake dataset and retrain the model for each unique source subject, thus incurring extra time consumption.

In future works, it is vital to first conduct iterative experiments to find a backbone network most suitable for retracing tasks. Then, finding an efficient way to incorporate source face adaptively based on Premise 2 is crucial for the real-world application of IDRetracor.

VI Conclusion

In this paper, we analyze the urgent toward visual forensics and introduce the face retracing task to address it. Firstly, we discuss the feasibility and premise of the retracing task. Then, we propose a framework named IDRetracor for the retracing task, which can locate the solution space of the original target face and reconstruct the retraced face with the correct ID. Finally, extensive experiments demonstrate that the IDRetracor can retrace fake faces of arbitrary target IDs generated by multiple face swapping methods.

References

- [1] Jiaxin Ai, Zhongyuan Wang, Baojin Huang, and Zhen Han. Deepfake face traceability with disentangling reversing network. arXiv preprint arXiv:2207.03666, 2022.

- [2] Qiong Cao, Li Shen, Weidi Xie, Omkar M Parkhi, and Andrew Zisserman. Vggface2: A dataset for recognising faces across pose and age. In IEEE International Conference on Automatic Face & Gesture Recognition (FG), pages 67–74. IEEE, 2018.

- [3] Renwang Chen, Xuanhong Chen, Bingbing Ni, and Yanhao Ge. Simswap: An efficient framework for high fidelity face swapping. In ACM International Conference on Multimedia (MM), pages 2003–2011, 2020.

- [4] Yinpeng Chen, Xiyang Dai, Mengchen Liu, Dongdong Chen, Lu Yuan, and Zicheng Liu. Dynamic convolution: Attention over convolution kernels. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 11030–11039, 2020.

- [5] DeepFakes. Deepfakes. https://github.com/deepfakes/faceswap, 2019. Online; accessed March 1, 2021.

- [6] Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou. Arcface: Additive angular margin loss for deep face recognition. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4690–4699, 2019.

- [7] Shichao Dong, Jin Wang, Renhe Ji, Jiajun Liang, Haoqiang Fan, and Zheng Ge. Implicit identity leakage: The stumbling block to improving deepfake detection generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3994–4004, 2023.

- [8] Joel Frank, Thorsten Eisenhofer, Lea Schönherr, Asja Fischer, Dorothea Kolossa, and Thorsten Holz. Leveraging frequency analysis for deep fake image recognition. In International Conference on Machine Learning (ICML), pages 3247–3258, 2020.

- [9] Gege Gao, Huaibo Huang, Chaoyou Fu, Zhaoyang Li, and Ran He. Information bottleneck disentanglement for identity swapping. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3404–3413, 2021.

- [10] Xiao Guo, Xiaohong Liu, Zhiyuan Ren, Steven Grosz, Iacopo Masi, and Xiaoming Liu. Hierarchical fine-grained image forgery detection and localization. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3155–3165, 2023.

- [11] Zhiqing Guo, Zhenhong Jia, Liejun Wang, Dewang Wang, Gaobo Yang, and Nikola Kasabov. Constructing new backbone networks via space-frequency interactive convolution for deepfake detection. IEEE Transactions on Information Forensics and Security, 19:401–413, 2024.

- [12] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 770–778, 2016.

- [13] Baojin Huang, Zhongyuan Wang, Jifan Yang, Jiaxin Ai, Qin Zou, Qian Wang, and Dengpan Ye. Implicit identity driven deepfake face swapping detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4490–4499, 2023.

- [14] Shan Jia, Xin Li, and Siwei Lyu. Model attribution of face-swap deepfake videos. In IEEE International Conference on Image Processing (ICIP), pages 2356–2360. IEEE, 2022.

- [15] Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196, 2017.

- [16] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on Computer Vision and Pattern Recognition (CVPR), pages 4401–4410, 2019.

- [17] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [18] Lingzhi Li, Jianmin Bao, Ting Zhang, Hao Yang, Dong Chen, Fang Wen, and Baining Guo. Face x-ray for more general face forgery detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), page 5001–5010, 2020.

- [19] Yuezun Li, Xin Yang, Pu Sun, Honggang Qi, and Siwei Lyu. Celeb-df: A large-scale challenging dataset for deepfake forensics. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3207–3216, 2020.

- [20] Zhian Liu, Maomao Li, Yong Zhang, Cairong Wang, Qi Zhang, Jue Wang, and Yongwei Nie. Fine-grained face swapping via regional gan inversion. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 8578–8587, 2023.

- [21] Anwei Luo, Chenqi Kong, Jiwu Huang, Yongjian Hu, Xiangui Kang, and Alex C. Kot. Beyond the prior forgery knowledge: Mining critical clues for general face forgery detection. IEEE Transactions on Information Forensics and Security, 19:1168–1182, 2024.

- [22] João C. Neves, Ruben Tolosana, Ruben Vera-Rodriguez, Vasco Lopes, Hugo Proença, and Julian Fierrez. Ganprintr: Improved fakes and evaluation of the state of the art in face manipulation detection. IEEE Journal of Selected Topics in Signal Processing (JSTSP), 14(5):1038–10484, 2020.

- [23] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), pages 234–241. Springer, 2015.

- [24] Andreas Rossler, Davide Cozzolino, Luisa Verdoliva, Christian Riess, Justus Thies, and Matthias Niessner. Faceforensics++: Learning to detect manipulated facial images. In IEEE/CVF International Conference on Computer Vision (ICCV), page 1–11, 2019.

- [25] Rui Shao, Tianxing Wu, and Ziwei Liu. Detecting and recovering sequential deepfake manipulation. In European Conference on Computer Vision (ECCV), pages 712–728. Springer, 2022.

- [26] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2818–2826, 2016.

- [27] Lingfeng Tan, Yunhong Wang, Junfu Wang, Liang Yang, Xunxun Chen, and Yuanfang Guo. Deepfake video detection via facial action dependencies estimation. In AAAI Conference on Artificial Intelligence, volume 37, pages 5276–5284, 2023.

- [28] Hao Wang, Yitong Wang, Zheng Zhou, Xing Ji, Dihong Gong, Jingchao Zhou, Zhifeng Li, and Wei Liu. Cosface: Large margin cosine loss for deep face recognition. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 5265–5274, 2018.

- [29] Run Wang, Felix Juefei-Xu, Meng Luo, Yang Liu, and Lina Wang. Faketagger: Robust safeguards against deepfake dissemination via provenance tracking. In Proceedings of the 29th ACM International Conference on Multimedia, pages 3546–3555, 2021.

- [30] Yuhan Wang, Xu Chen, Junwei Zhu, Wenqing Chu, Ying Tai, Chengjie Wang, Jilin Li, Yongjian Wu, Feiyue Huang, and Rongrong Ji. Hififace: 3d shape and semantic prior guided high fidelity face swapping. arXiv preprint arXiv:2106.09965, 2021.

- [31] Ruiyang Xia, Decheng Liu, Jie Li, Lin Yuan, Nannan Wang, and Xinbo Gao. Mmnet: Multi-collaboration and multi-supervision network for sequential deepfake detection. IEEE Transactions on Information Forensics and Security, 19:3409–3422, 2024.

- [32] Zhiliang Xu, Hang Zhou, Zhibin Hong, Ziwei Liu, Jiaming Liu, Zhizhi Guo, Junyu Han, Jingtuo Liu, Errui Ding, and Jingdong Wang. Styleswap: Style-based generator empowers robust face swapping. In European Conference on Computer Vision (ECCV), pages 661–677. Springer, 2022.

- [33] Zhiyuan Yan, Yong Zhang, Yanbo Fan, and Baoyuan Wu. Ucf: Uncovering common features for generalizable deepfake detection. arXiv preprint arXiv:2304.13949, 2023.

- [34] Tianyun Yang, Ziyao Huang, Juan Cao, Lei Li, and Xirong Li. Deepfake network architecture attribution. AAAI Conference on Artificial Intelligence, 36(4):4662–4670, 2022.

- [35] Ning Yu, Larry S, Davis, and Mario Fritz. Attributing fake images to gans: Learning and analyzing gan fingerprints. In IEEE/CVF International Conference on Computer Vision (ICCV), page 7556–7566, 2019.

- [36] Ning Yu, Vladislav Skripniuk, Sahar Abdelnabi, and Mario Fritz. Artificial fingerprinting for generative models: Rooting deepfake attribution in training data. In IEEE/CVF International Conference on Computer Vision (ICCV), pages 14448–14457, 2021.

- [37] Peipeng Yu, Jianwei Fei, Zhihua Xia, Zhili Zhou, and Jian Weng. Improving generalization by commonality learning in face forgery detection. IEEE Transactions on Information Forensics and Security (TIFS), 17:547–558, 2022.

- [38] Yuan Zhao, Bo Liu, Ming Ding, Baoping Liu, Tianqing Zhu, and Xin Yu. Proactive deepfake defence via identity watermarking. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, pages 4602–4611, 2023.

- [39] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. Unpaired image-to-image translation using cycle-consistent adversarial networks. In IEEE International Conference on Computer Vision (ICCV), pages 2223–2232, 2017.

- [40] Yuhao Zhu, Qi Li, Jian Wang, Cheng-Zhong Xu, and Zhenan Sun. One shot face swapping on megapixels. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4834–4844, 2021.