Identifiability and inference for copula-based semiparametric models for random vectors with arbitrary marginal distributions

Abstract.

In this paper, we study the identifiability and the estimation of the parameters of a copula-based multivariate model when the margins are unknown and are arbitrary, meaning that they can be continuous, discrete, or mixtures of continuous and discrete. When at least one margin is not continuous, the range of values determining the copula is not the entire unit square and this situation could lead to identifiability issues that are discussed here. Next, we propose estimation methods when the margins are unknown and arbitrary, using pseudo log-likelihood adapted to the case of discontinuities. In view of applications to large data sets, we also propose a pairwise composite pseudo log-likelihood. These methodologies can also be easily modified to cover the case of parametric margins. One of the main theoretical result is an extension to arbitrary distributions of known convergence results of rank-based statistics when the margins are continuous. As a by-product, under smoothness assumptions, we obtain that the asymptotic distribution of the estimation errors of our estimators are Gaussian. Finally, numerical experiments are presented to assess the finite sample performance of the estimators, and the usefulness of the proposed methodologies is illustrated with a copula-based regression model for hydrological data. The proposed estimation is implemented in the R package CopulaInference (Nasri and Rémillard,, 2023), together with a function for checking identifiability.

Key words and phrases:

Copula; Pseudo-observations; Estimation; Identifiability; Arbitrary distributions1. Introduction

Copula-based models have been widely used in many applied fields such as finance (Embrechts et al.,, 2002, Nasri et al.,, 2020), hydrology (Genest and Favre,, 2007, Zhang and Singh,, 2019) and medicine (Clayton,, 1978, de Leon and Wu,, 2011), to cite a few. According to Sklar’s representation (Sklar,, 1959), for a random vector with joint distribution function and margins , there exists a non-empty set of copula functions so that for any ,

If all margins are continuous, then contains a unique copula which is the distribution function of the random vector , with , . However, in many applications, discontinuities are often present in one or several margins. Whenever at least one margin is discontinuous, is infinite, and in this case,

any is only uniquely defined on the closure of the range of , where

is the range of , . This can lead to identifiability issues raised in

Faugeras, (2017), Geenens, (2020, 2021), creating also estimation problems that need to be addressed.

However, even if the copula is not unique,

it still makes sense to use a copula family , to define multivariate models, provided one is aware of the possible limitations. Indeed, the copula-based model , , is a well-defined family of distributions for which estimating is a challenge.

In the literature, the case of discontinuous margins is not always treated properly. It is either ignored or, in some cases, continuous margins are fitted to the data (Chen et al.,, 2013). This procedure does not solve the problem since there still will be ties.

An explicit example that underlines the problem with ignoring ties is given in Section 3 and Remark 2. A solution proposed in the literature is to use jittering, where data are perturbed by independent random variables, introducing extra variability. Our first aim is to address the identifiability issues for the model

, when the margins are unknown and arbitrary. Our second aim is to present formal inference methods for the estimation of .

More precisely, we consider a semiparametric approach for the estimation of , when the margins are arbitrary, i.e. each margin can be continuous, discrete, or even a mixture of a discrete and continuous distribution. The estimation approach is based on pseudo log-likelihood taking into account the discontinuities. We also propose a pairwise composite log-likelihood. In the literature, few articles focused on the estimation of the copula parameters in the case of noncontinuous margins (Song et al.,, 2009, de Leon and Wu,, 2011, Zilko and Kurowicka,, 2016, Ery,, 2016, Li et al.,, 2020). Most of them have considered only the case when the components of the copula are discrete or continuous and a full parametric model, except Ery, (2016) and Li et al., (2020). In Ery, (2016), in the bivariate discrete case, the author considered a semiparametric approach and studied the asymptotic properties. In Li et al., (2020), the authors have proposed a semiparametric approach for the estimation of the copula parameters for bivariate data having arbitrary distributions, without presenting any asymptotic results, neither discussing identifiability issues.

The article is organized as follows: In Section 2, we discuss the important topic of identifiability, as well as the limitations of the copula-based approach for multivariate data with arbitrary margins. Conditions are stated in order to have identifiability so that the estimation problem is well-posed. Next, in Section 3, we describe the estimation methodology, using the pseudo log-likelihood approach as well as the composite pairwise approach, while the estimation error is studied in Section 3.3. By using an extension of the asymptotic behavior of rank-based statistics (Ruymgaart et al.,, 1972) to data with arbitrary distributions (Theorem 2), we show that the limiting distribution of the pseudo log-likelihood estimator is Gaussian. Similar results are obtained for pairwise composite pseudo log-likelihood. In addition, we can obtain similar asymptotic results if the margins are estimated from parametric families, instead of using the empirical margins, i.e., we consider the multivariate model , where the margins are estimated first, and then the copula parameter . This is discussed in Remark 5. Finally, in Section 4, numerical experiments are performed to assess the convergence and precision of the proposed estimators in bivariate and trivariate settings, while in Section 5, as an example of application, a copula-based regression approach is proposed to investigate the relationship between the duration and severity, using hydrological data (Shiau,, 2006).

2. Identifiability and limitations

As exemplified in Faugeras, (2017), Geenens, (2020), there are several problems and limitations when applying copula-based models to data with arbitrary distributions, the most important being identifiability. This is discussed first. Then, we will examine some limitations of these models.

2.1. Identifiability

For the discussion about identifiability, we consider two cases: copula family identifiability, and copula parameter identifiability.

For copula family identifiability, it may happen that two copulas from different families, say and , belong to .

For example, in Faugeras, (2017), the author considered the bivariate Bernoulli case, whose distribution is completely determined by , , and . Provided that the copula families and are rich enough, there exist unique parameters and so that

. For calculation purposes, the choice of the copula in this case is immaterial, and there is no possible interpretation of the copula or its parameter or the type of dependence induced by each copula. All that matters is , or the associated odd ratio (Geenens,, 2020), or Kendall’s tau of , given in this case by . Here, is the so-called multilinear copula, depending on the margins and belonging to ; see, e.g., Genest et al., (2017). To illustrate the fact that the computations are the same for any , consider the conditional distribution of given , given by

, if is continuous at and , if is not continuous at ,

where .

The value of is independent of the choice of , since all copulas in have the same value on the closure of the range .

So apart from the lack of interpretation of the type of dependence, there is no problem for calculations, as long as for the chosen copula family, its parameter is identifiable, as defined next.

We now consider the identifiability of the copula parameter for a given copula family . Since one of the aims is to estimate the parameter of the family , the following definition of identifiability is needed.

Definition 1.

For a copula family and a vector of margins , the parameter is identifiable with respect to if the mapping is injective, i.e., if for , implies that . This is equivalent to the existence of such that , where for a vector of margins ,

The following result, proven in the Supplementary Material, is essential for checking that a given mapping is injective whenever is finite.

Proposition 1.

Suppose is a continuously differentiable mapping from a convex open set to . If , then is not injective. Also, is injective if the rank of the derivative is . Furthermore, if the rank of is for some , the rank of is for any in some neighborhood of . Finally, if the maximal rank is , attained at some , then the rank of is in some neighborhood of , and is not injective.

Example 1.

A 1-dimensional parameter is identifiable for any for the following copula families and their rotations: the bivariate Gaussian copula, the Plackett copula, as well as the multivariate Archimedean copulas with one parameter like the Clayton, Frank, Gumbel, Joe. This is because for any fixed , is strictly monotonic. Note also that by (Joe,, 1990, Theorem A2), every meta-elliptic copula , with the correlation matrix parameter, is ordered with respect to .

In practice, for copula models with bivariate or multivariate parameters, it might be impossibly difficult to verify injectivity since the margins are never known. In this case, since converges to , where , , , one could verify that the parameter is identifiable with respect to , i.e., that the mapping is injective. The latter does not necessarily imply identifiability with respect to but if the parameter is not identifiable with respect to , one should choose another parametric family . Next, the cardinality of is , where is the size of the support of , . Let be an enumeration of . As a result, is injective iff the mapping is injective, There are two cases to consider: or .

-

•

First, one should not choose a copula family with , since in this case, according to Proposition 1, the mapping cannot be injective, so the parameter is not identifiable.

-

•

Next, if , it follows from Proposition 1, that is injective if has rank . Also, it follows from Proposition 1 that if the rank of is , then there is a neighborhood of for which the rank of is , for any . One could then restrict the estimation to this neighborhood if necessary. Note that the matrix is composed of the (column) gradient vectors , . The latter can be calculated explicitly or it can be approximated numerically. To check that the rank of is , one needs to choose a bounded neighborhood for , choose an appropriate covering of by balls of radius , with respect to an appropriate metric, corresponding to a given accuracy needed for the estimation, and then compute the rank of the mappings , for all the centers of the covering balls. This appears in the R package CopulaInference (Nasri and Rémillard,, 2023).

Example 2.

In the bivariate Bernoulli case, , so a copula parameter is identifiable if for any fixed , the mapping is injective. As a result of the previous discussion, , i.e., the parameter should be 1-dimensional and for any fixed , the mapping must be strictly monotonic since must be always positive or always negative.

Remark 1 (Student copula).

There are cases when is needed. For example, for the bivariate Student copula, is sometimes necessary. To see why, note that the point determines uniquely since for any , . Take for example . Then is not injective, having solutions, namely and , so the rank of is at points and . However, if is restricted to a finite set like , then the mapping is injective. This restriction of the values of makes sense if one wants to use the Student copula. In fact, since exact values of can be computed explicitly only when is an integer (Dunnett and Sobel,, 1954). It is the case for example in the R package copula. Otherwise, one needs to use numerical integration (Genz,, 2004), with might lead to numerical inconsistencies while differentiating the copula with respect to in a given open interval.

2.2. Limitations

Having discussed identifiability issues, we are now in a position to discuss limitations of the copula-based approach for modeling multivariate data. Two main issues have been identified when one or some margins have discontinuities: interpretation and dependence on margins.

2.2.1. Interpretation

As exemplified in the extreme case of the bivariate Bernoulli distribution discussed in Example 2, interpretation of the copula or its parameter can be hopeless. Recall that the object of interest is the family , not the copula family. Even if has only one atom at and is continuous, then one only “knows” on . For example, how can we interpret the form of dependence induced by a Gaussian copula or a Student copula restricted to such ? Apart from tail dependence, there is not much one can say. As it is done in the case for bivariate copula, one could plot the graph of a large sample, say . The scatter plot is not very informative. This is even worse if the support of is finite. As an illustration, we generated 1000 pairs of observations from Gaussian, Student(2.5), Clayton, and Gumbel copulas with , where the margin is a mixture of a Gaussian and an atom at (with probability ), and is Gaussian. The scatter plots are given in Figure 1, together with the scatter plots of the associated pseudo-observations . The raw datasets in panel a) of Figure 1 do not say much, apart from the fact that has an atom at , while pabel b) of Figure 1 illustrates the fact that the copula is only known on . All the graphs are similar but for the Clayton copula. So one can see that even if there is only one atom, one cannot really interpret the type of dependence.

a)  b)

b)

2.2.2. Dependence on margins

In the bivariate Bernoulli case, Geenens, (2020) proposed the odds-ratio as a “margin-free” measure of dependence. In fact, Geenens, (2020) quotes Item 3 of (Rudas,, 2018, Theorem 6.3 (p. 116)) which says that if are the margins’ parameters and is a parameter, whose range does not depend on (called variation independent parametrization in Rudas, (2018)), and if determines the full distribution , then is a one-to-one function of the odd-ratio . However, as a proof of this statement, Rudas, (2018) says that it is because determines . This only proves that is a one-to-one function of , for a fixed , i.e., for fixed margins, not that it is a one-to-one function of alone. In fact, according to Rudas’ definition, for the Gaussian copula , , it follows that is also a variation independent parametrization of , by simply setting . Therefore, qualifies as a “margin-free” association measure as well, if one agrees that margin-free means “variation independent in the sense of Rudas, (2018)”. Using the same argument as in Rudas, (2018), it follows that any variation independent parameter is a function of . This construction works for any rich enough copula family such as Clayton, Frank, or Plackett (yielding the omega ratio), and that it also applies to all margins, not only Bernoulli margins. In fact, the property of being one-to-one is the same as what we defined as “parameter identifiability” in Section 2.1.

3. Estimation of parameters

In this section, we will show how to estimate the parameter of the semiparametric model , where is convex. It is assumed that the given copula family satisfies the following assumption.

Assumption 1.

on is thrice continuously differentiable with respect to , for any , the density exists, is thrice continuously differentiable, and is strictly positive on . Furthermore, for a given vector of margins , is injective on .

Suppose that are iid with , for some . Without loss of generality, we may suppose that , where are iid with . Estimating parameters for arbitrary distributions is more challenging that in the continuous case. For continuous (unknown) margins, copula parameters can be estimated using different approaches, the most popular ones based on pseudo log-likelihood being the normalized ranks method (Genest et al.,, 1995, Shih and Louis,, 1995) and the IFM method (Inference Functions for Margin) (Joe and Xu,, 1996, Joe,, 2005). Since the margins are unknown, it is tempting to ignore that some margins are discontinuous and consider maximizing the (averaged) pseudo log-likelihood , , . Note than one could also replace the nonparametric margins with parametric ones, as in the IFM approach. However, in either cases, there is a problem with using when there are atoms. To see this, consider a simple bivariate model with Bernoulli margins, i.e., , , . Then, the full (averaged) log-likelihood is

where . Hence, the pseudo log-likelihood for , when and are estimated by and , is

It is clear that under general conditions, when the parameter is 1-dimensional, and the copula family is rich enough, maximizing produces a consistent estimator for while maximizing will not. In fact, for , the estimator of is the solution of (Genest and Nešlehová,, 2007, Example 13). Note also that converges almost surely to

where , . However, the estimator obtained by maximizing is not consistent in general. In fact, for any copula with a density which is continuous and non-vanishing on ,

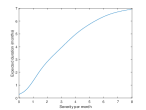

converges almost surely to In particular, for a Clayton copula with , one gets , with . As displayed in Figure 2, for the limit of , the supremum is attained at , while for the limit of , the supremum is attained at the correct value .

a)  b)

b)

Remark 2.

This simple example shows that one must be very careful in estimating copula parameters when the margins are not continuous. In particular, one should not use the usual pseudo-MLE method based on or the IFM method with continuous margins fitted to data with ties.

3.1. Pseudo log-likelihoods

For any , let be the (countable) set of atoms of , where for a general univariate distribution function , , with . Throughout this paper, we assume that is closed. For , denotes the counting measure on , and let be Lebesgue’s measure; both measures are defined on , and , also defined on , is -finite. Further let be the product measure on defined by , which is also -finite. In what follows, , . Since our aim is to estimate the parameter of the copula family without knowing the margins, the following assumption is necessary.

Assumption 2.

The margins do not depend on the parameter . In addition, for any , has density with respect to the measure .

What is meant in Assumption 2 is that if the margins were parametric (e.g., Poisson), their parameters are not related to the parameter of the copula. This assumption is needed when one wants to estimate the margins first, and then estimate .

Assumption 2 really means that , which is a natural assumption. This assumption is also implicit in the continuous case.

To estimate the copula parameters, one could use at least two different pseudo log-likelihoods: an informed one, if are known, and a non-informed one, if some atoms are not known. The latter is the approach proposed in Li et al., (2020) in the bivariate case. From a practical point of view, it is easier to implement, since there is no need to define the atoms. However, it requires more assumptions, and one could argue that in practice, atoms should be known. Before writing these pseudo log-likelihoods, we need to introduce some notations. Suppose that is a copula having a continuous density on . For any and for any , set , where is the density of and . Finally, for any vector of margins, and any , set

| (1) |

Under Assumptions 1–2, it follows from Equation (3) in the Supplementary Material, that the full (averaged) log-likelihood is given by

where, for any , and

| (2) |

with the usual convention that a product over the empty set is . If the margins were known or estimated first, then maximizing or maximizing with respect to would be equivalent, since by Assumption 2, the margins do not depend on . As a result, one gets the “informed” (averaged) pseudo log-likelihood , defined by

| (3) |

where for any , , and is defined by (12), with . Setting , then we define . As Li et al., (2020) did in the bivariate case, we can also define the “non-informed” (averaged) pseudo log-likelihood , where replaces in (3). Of course, for a given , if is an atom, then, when is large enough, . However, for a given , it is possible that even when is an atom. If the real value of the parameter is and , we set .

Example 3.

If , then , , , and .

Remark 3.

One can see that computing the pseudo log-likelihoods might be cumbersome when is large. To overcome this problem, we propose to use a pairwise composite pseudo log-likelihood. For a review on this approach in other settings, see, e.g., Varin et al., (2011). See also Oh and Patton, (2016) for the composite method in a particular copula context. Here, the pairwise composite pseudo log-likelihood is simply defined as , where is the pseudo log-likelihood defined by (3) for the pairs , . One can also replace by is the previous expression. If , set . The asymptotic behavior of the estimators , , and is studied next.

3.2. Notations and other assumptions

For sake of simplicity, the gradient column vector of a function with respect to is often denoted or , while the associated Hessian matrix is denoted by or . Before stating the main convergence results, for any , , , , , define

| (4) |

where for , In particular, , and if , , one has

Next, set . Finally, set and .

Next, to be able to deal with and the associated composite pseudo log-likelihood, one needs extra assumptions. First, one needs to control the gradient of the likelihood.

Assumption 4.

There is a neighborhood of such that for any ,

Second, in order to measure the errors one makes by considering instead of , set

Then, , .

Assumption 5.

For any , .

Assumption 5 means that the average number of indices so that when is bounded. This assumption holds true when the discrete part of the margin is a finite discrete distribution, a Geometric distribution, a Negative Binomial distribution, or a Poisson distribution, using Remark 1 in the Supplementary Material.

3.3. Convergence of estimators

Recall that for any and any , , where

, are iid observations from copula . Also, let be the associated probability distribution of , for any .

Now, for any , and any , ,

where

, .

Note that if for some , , , i.e., , then .

It is well-known that the processes , , , converge jointly in to , denoted , where are Brownian bridges, i.e., are continuous centered Gaussian processes with

, .

In particular, for any , .

Before stating the main convergence results for the estimation errors and

, for any , define and set .

Next, set and let

where the functions are defined in Appendix B.1. Finally, set . Basically, is what one should have if the margins were known, while is the price to pay for not knowing the margins. Finally, is needed for obtaining bootstrapping results (Genest and Rémillard,, 2008, Nasri and Rémillard,, 2019). The following result is a consequence of Theorem 3 and Theorem 4, proven in Appendix A and Appendix B.2 respectively.

Theorem 1.

Corollary 1.

and are regular estimator of in the sense that and converge to and .

Proof.

Theorem 1 yields . ∎

Remark 4.

As shown in Genest and Rémillard, (2008), regularity of estimators is necessary for parametric bootstrap to work, using LeCam’s third lemma.

Having got the asymptotic behavior of and , it is easy to obtain the following result.

Corollary 2.

Remark 5.

The previous results hold if one uses parametric margins instead of nonparametric margins, provided the margins are smooth enough and the estimated parameters converge in law. In fact, assume that for , , , and converges in law to , with , and is a centered Gaussian random vector. Then, under Assumptions 1–3, replacing respectively , , and with , , and , one obtains the analogs of Theorem 1 and Corollaries 1–2. Furthermore, this shows that one could also consider pair copula models.

4. Numerical experiments

In this section, we study the quality and performance of the proposed estimators, for various choices of copula families, margins and sample sizes. Note that all simulations were done with the more demanding case . In the first set of experiments, we deal with the bivariate case, and in a second set of experiments, we compute the composite estimator in a trivariate setting. First, in the bivariate case, we consider five copula families and five pairs of margins for each copula family. For the first experiment (Exp1), both margins are standard Gaussian, i.e., . In the second experiment (Exp2), the margins are Poisson with parameters and respectively, i.e., and , while in the third experiment (Exp3), and . In the fourth experiment (Exp4), is a rounded Gaussian, namely , with , and . Finally, for the fifth experiment (Exp5), is zero-inflated, with , , , and . To estimate the parameter of the Clayton, Frank, Gumbel, Gaussian and Student (with ) copula families corresponding to a Kendall’s tau , samples of size were generated for each copula family, and was computed. Here, is Kendall’s tau of the copula family , written . For results to be comparable throughout copula families, we computed the relative bias and the relative root mean square error (RMSE) of , instead of . The results for samples are reported in Table 1. As one can see, the estimator performs quite well for the five numerical experiments and the five copula families. Furthermore, the precision depends on the copula family, but for a given copula family, the precision does not vary significantly with the margins. Even the case when both margins are continuous (Exp1) does not yield the best results. When the sample size is 250 or more, the relative bias is always smaller than 2%. Finally, as expected, both the bias and the RMSE decrease when the sample size increases.

| Copula | |||||

| Margins | Clayton | Frank | Gumbel | Gaussian | Student |

| Exp1 | -0.42 (9.62) | 0.40 (9.30) | 3.02 (10.9) | 2.92 (9.40) | 2.18 (11.1) |

| Exp2 | 0.52 (10.2) | 0.86 (9.64) | 3.70 (11.4) | 3.16 (9.80) | 2.64 (11.5) |

| Exp3 | 0.02 (9.84) | 0.58 (9.40) | 3.34 (11.1) | 2.96 (9.52) | 2.36 (11.3) |

| Exp4 | -0.44 (9.62) | 0.40 (9.30) | 3.02 (10.9) | 2.88 (9.38) | 2.14 (11.1) |

| Exp5 | -1.00 (9.90) | 0.36 (9.30) | 2.76 (10.8) | 2.42 (9.28) | 1.68 (11.0) |

| Exp1 | 0.18 (6.06) | -0.32 (5.76) | 0.56 (6.12) | 1.30 (5.92) | 0.92 (6.52) |

| Exp2 | 0.50 (6.44) | -0.00 (5.92) | 1.52 (6.36) | 1.72 (6.18) | 1.52 (6.82) |

| Exp3 | 0.40 (6.22) | -0.20 (5.84) | 0.96 (6.16) | 1.42 (6.02) | 1.16 (6.60) |

| Exp4 | 0.12 (6.08) | -0.32 (5.76) | 0.60 (6.12) | 1.30 (5.92) | 0.92 (6.52) |

| Exp5 | -0.10 (6.22) | -0.34 (5.76) | 0.42 (6.12) | 1.06 (5.90) | 0.62 (6.56) |

| Exp1 | 0.08 (4.44) | -0.04 (3.88) | 0.38 (4.44) | 0.64 (4.40) | 0.34 (4.48) |

| Exp2 | 0.36 (4.60) | 0.06 (4.02) | 0.72 (4.64) | 0.76 (4.30) | 0.54 (4.68) |

| Exp3 | 0.28 (4.50) | -0.00 (3.90) | 0.52 (4.52) | 0.70 (4.24) | 0.42 (4.56) |

| Exp4 | 0.04 (4.44) | -0.04 (3.88) | 0.40 (4.44) | 0.62 (4.19) | 0.32 (4.48) |

| Exp5 | 0.10 (4.48) | -0.04 (3.88) | 0.34 (4.44) | 0.54 (4.20) | 0.24 (4.48) |

In the second set of experiments, using the pairwise composite estimator , we estimated the parameters of a trivariate non-central squared Clayton copula (Nasri,, 2020) with Kendall’s tau , and non-centrality parameters , , and . In this case, for all five experiments, and are defined as before, while . The results are displayed in Table 2. As one could have guessed, the estimation of is not as good as in the bivariate case, but the results are good enough. As for the non-centrality parameters, the estimation of , which has a large value (the upper bound being ), is not as good as the other values, but this is coherent with the simulations in Nasri, (2020). All in all, the composite method yields quite satisfactory results.

| Parameters | ||||

| Margins | ||||

| Exp1 | 4.01 (11.21) | -4.92 (24.95) | -30.67 (41.09) | -5.08 (21.56) |

| Exp2 | 4.24 (11.68) | -4.70 (25.27) | -28.71 (40.58) | -4.83 (21.33) |

| Exp3 | 4.31 (11.50) | -5.74 (23.72) | -30.02 (40.95) | -5.32 (21.08) |

| Exp4 | 3.99 (11.22) | -4.93 (25.04) | -30.74 (41.10) | -5.12 (21.52) |

| Exp5 | 3.99 (11.18) | -4.98 (24.34) | -30.63 (41.12) | -5.22 (21.18) |

| Exp1 | 1.34 (6.80) | -4.10 (13.05) | -21.93 (34.13) | -3.82 (13.07) |

| Exp2 | 1.32 (6.97) | -2.82 (13.24) | -20.59 (33.63) | -3.15 (13.07) |

| Exp3 | 1.41 (6.85) | -3.47 (13.47) | -20.46 (33.45) | -3.66 (13.08) |

| Exp4 | 1.33 (6.77) | -4.01 (12.98) | -22.01 (34.03) | -3.77 (12.83) |

| Exp5 | 1.34 (6.80) | -4.10 (13.04) | -21.93 (34.14) | -3.82 (13.08) |

| Exp1 | 0.63 (4.56) | -2.07 (8.81) | -12.30 (25.44) | -2.19 (8.09) |

| Exp2 | 0.74 (4.74) | -1.98 (9.13) | -12.81 (26.67) | -2.19 (8.54) |

| Exp3 | 0.73 (4.61) | -2.07 (8.93) | -12.24 (25.45) | -2.30 (8.20) |

| Exp4 | 0.63 (4.56) | -2.06 (8.84) | -12.31 (25.44) | -2.21 (8.13) |

| Exp5 | 0.63 (4.56) | -2.05 (8.78) | -12.20 (25.33) | -2.17 (8.06) |

5. Example of application

In this section, we propose a rigorous method to study the relationship between duration and severity for hydrological data used in Shiau, (2006). The data were kindly provided by the author. There are many articles in the hydrology literature about modeling drought duration and severity with copulas; see, e.g., Chen et al., (2013), Shiau, (2006). One of the main tools to compute the drought duration and severity is the so-called Standardized Precipitation Index (SPI) (McKee et al.,, 1993). Basically, McKee et al., (1993) suggest to fit a gamma distribution over a moving average (1-month, 3-month, etc.) of the precipitations and then transform them into a Gaussian distribution. However, it may happen that there are several zero values in the observations so fitting a continuous distribution is not possible. Using the data kindly provided by Professor Shiau (daily precipitations in millimeters for the Wushantou gauge station from 1932 to 2001), we see from Figure 3 that even taking a 1-month moving average leads to a zero-inflated distribution. So, instead of fitting a gamma distribution to the moving average, as it is often done, we suggest to simply apply the inverse Gaussian distribution to the empirical distribution. Then, one can compute the duration and severity: a drought is defined as a sequence of consecutive days with negative SPI values, say : the length the sequence is the duration , i.e., , and the severity is defined by . It makes sense to consider the severity as a continuous random variable but the duration is integer-valued. Again, in the literature, a continuous distribution is usually fitted to , which is incorrect. These variables are then divided by in order to represent months. With the dataset, we obtained 175 drought periods. A non-parametric estimation of the density of the severity per month is displayed in Figure 3 which seems to be a mixture of at least two distributions. We tried mixtures of up to gamma distributions without success. A scatter plot of the duration and the severity also appears in Figure 3. With the copula-based methodology developed here, based on a measure of fit, we chose the Frank copula. In contrast, the preferred copula families in Shiau, (2006) were the Galambos and Gumbel families. Using a smoothed distribution for the severity , we can compute the conditional probability for to months, in addition to the conditional expectation . These functions are displayed in Figure 4.

6. Conclusion

We presented methods based on pseudo log-likelihood for estimating the parameter of copula-based models for arbitrary multivariate data. These pseudo log-likelihoods depend on the non-parametric margins and are adapted to take into account the ties. We have also shown that the methodology can be extended to the case of parametric margins. According to numerical experiments, the proposed estimators perform quite well. As a example of application, we estimated the relationship between drought duration and severity in hydrological data, where the problem of ties if often ignored. The proposed methodologies can also be applied to high dimensional data. For this reason, we have shown in Corollary 2 that the pairwise composite applied to our bivariate pseudo-likelihood is valid. Finally, in a future work, we will also develop bootstrapping methods and formal tests of goodness-of-fit.

Acknowledgements

The first author is supported by the Fonds de recherche du Québec – Nature et technologies, the Fonds de recherche du Québec – santé, the École de santé publique de l’Université de Montréal, the Centre de recherche en santé publique (CRESP), and the Natural Sciences and Engineering Research Council of Canada. The second author is supported by the Natural Sciences and Engineering Research of Canada. We would also like to thank Professor Jenq-Tzong Shiau of National Cheng Kung University for sharing his data with us.

Appendix A Rank-based Z-estimators when margins are arbitrary

Suppose that , where are iid observations from copula . For any , set . Also, the set of atoms of is assumed to be closed for any . This implies that if , and , then . Further set , and , where and . Here, is a -value continuously differentiable function defined on . To simplify notations, one also writes if for all . Finally, set , , and .

Example 4.

Take . This can be used to define Spearman’s rho.

Example 5 (Estimation of copula parameter).

In this case, is defined by (4).

Next, define and . We are interested in the convergence in law of , which is a multivariate extension of Ruymgaart et al., (1972) to arbitrary distributions, where Ruymgaart et al., (1972) considered the bivariate case with continuous margins, and where is a product and independent of . Before stating the result, one needs to define the following class of functions.

Definition 2.

Let is the set of all positive continuous functions on , such that for any , the exists a constant independent of so that

| (5) |

Note that (5) is an extension of reproducing u-shaped functions defined in Tsukahara, (2005). One can see that is closed under finite sums and finite products. In particular, if for all , then and .

Remark 6.

Note that , if are positive, continuous, non-increasing functions such that , , . For example, and belong to if . In Ruymgaart et al., (1972), it was assumed that .

For any , set , and define , . The following hypotheses are needed in the proof.

Assumption 6.

For any , , there exists a neighborhood of and a constant such that for any , , and ,

| (6) | |||||

| (7) | |||||

| (8) | |||||

| (9) |

where is integrable with respect to , and for some , is integrable with respect to and . Also, is square integrable with respect to .

Remark 7.

If , with integrable for any , then is integrable with respect to any copula, since its margins are uniform! In the copula literature, when the margins are continuous, as well as in Ruymgaart et al., (1972) for , all results about the convergence are proven on the assumption that , symmetric, (Genest et al.,, 1995, Fermanian et al.,, 2004, Tsukahara,, 2005). In some cases, those hypotheses are too restrictive, for example for Clayton, Gaussian, and Gumbel families.

Before stating the main result, define , and set , where

Theorem 2.

Under Assumption 6, there exists a neighborhood of such that as ,

Furthermore, , , and converge jointly in to continuous centered Gaussian processes , , and , where , and

Proof.

Set . On and , for some neighborhood of , converges uniformly in probability to . As a result,

For fixed, set , where , . Then, for given, one can find so that for any , . Then, setting , one gets

where, for any ,

since a.s., . By hypothesis (7)–(8), it follows that for , , . By hypothesis, is integrable with respect to . Also, by hypothesis (9), for , , and by hypothesis, one can find so that for any and , is integrable with respect to . As a result, for , if is small enough. Therefore, by choosing , , and appropriately. Next, set

with

where, for any , there exists such that and . Now, for , one can such that , where , and , . As a result, on , . It then follows that . Hence, since , for any with , one has . It then follows that on , , which can be made arbitrarily small by the strong law of large numbers since is integrable with respect to . Finally, , and by hypothesis, one can find so that for any and , is integrable with respect to . One may then conclude that for , one can find so that . The rest of the proof follows easily. ∎

The following assumption is needed for proving convergence of estimators.

Assumption 7.

There exists a neighborhood of such that and the Jacobian exists, is continuous, and is positive definite or negative definite at .

Remark 8.

From Proposition 1, there is a neighborhood of on which is injective, and is either positive definite or negative definite for all . In particular, has a unique zero in .

We can now prove the following general convergence result for rank-based Z-estimators.

Proof.

Suppose that is such that and suppose that is a sub-sequence converging to . Such a sub-sequence exists by choosing a bounded neighborhood of . Then, on one hand, . On the other hand, from Theorem 2, . As a result, and by Remark 8, . Since every subsequence converges to the same limit, it follows that converges in probability to . Using Theorem 2 again, on one hand, one may conclude that . On the other hand, for some , one gets that

where . By Assumption 7, is positive definite. As a result, . ∎

Appendix B Application to copula families

In the case of estimation of copula parameters, i.e., when is defined by formula (4), set and . For any , define , and . Next, for , , set , where if and if . Then

| (10) |

where

.

Note that if , then

using the previous notations, one gets

Before stating the next result, we define additional functions that will appear in the expression of .

B.1. Auxiliary functions

For , , and , define , and set , where

Next, for and , define and , where

with .

B.2. Asymptotic behavior of the estimator

Recall that and , .

Theorem 4.

Proof.

For any , set . It follows from formula (10) that

Thus, . As a result, the independent random variables are centered, and we may conclude that , where . Also, since converge jointly to the Brownian bridges . Now

Using the same trick as in the proof that , one gets, for any , and

yielding the representation for . Next, it is then easy to show that for any , so . Next, for any ,

Therefore, . Next, for any ,

Hence, for any ,

so

As a result, . ∎

Appendix C Supplementary material

Here, some results necessary to identifiability are proven, together with multilinear representations and results on densities with respect to product of mixtures of Lebesgue’s measure and counting measure, as well as a result on the verification of Assumption 5.

C.1. Proof of Proposition 2.1

Suppose that the maximal rank of is and let so that the rank of is . Set . Then there exists an submatrix with non-zero determinant. By continuity, there is a neighborhood of such that has non-zero determinant. Since the maximal rank is , it follows that the rank of is for all . One can now apply the famous Rank Theorem (Rudin,, 1976, Theorem 9.32) to deduce that there is a diffeomorphism from an open set onto a neighborhood , , such that , where is a continuously differentiable mapping into the null space of a projection withe same range as . Thus, choose in the null space of and so that . Then, for some , for any . As a result, for all , , showing that is not injective. Next, suppose that has rank and is not injective, i.e, one can find , with . Set . This is well defined on since is convex. Since and is continuous, is a closed curve and there exist with , for all and for all . It then follows that either or . Otherwise is constant and for all . So suppose that ; the case is similar. Then , which implied that for any and for small enough, . As a result, for all . Therefore, from this extension of Rolle’s Theorem, . As a result, the Jacobian has not rank at , which is a contradiction. Hence is injective. Suppose now that the rank of is . Set . Then the rank of is iff . Since and is continuous on , one can find a neighborhood of for which for every . Finally, if the maximal rank is , then if follows from the proof of the first statement that the rank of is also in a neighborhood of , and from the Rank Theorem (Rudin,, 1976, Theorem 9.32) , is not injective in a neighborhood of . ∎

C.2. Density with respect to

First, for a given cdf having density with respect to , where is a countable set, one has , for , and

| (11) |

Then, by the fundamental theorem of calculus, , -a.e. If in addition is closed, then for any , and small enough, , so a.e. Next, to find the expression of the density of distribution function with respect to , suppose that are atoms of , while are not atoms of . On one hand, if , for small enough, one gets

On the other hand, if , with having a continuous density over , using the fact that for any , the Lebesgue’s measure of is by (11), one gets

Therefore,

where is defined by

| (12) |

As a result, if , then the density with respect to is

| (13) |

In particular, if is the set of indices for which , and is a copula associated with the joint law of , where has margin , then (13) yields

| (14) |

C.3. Verification of Assumption 5

Let be a discrete distribution function concentrated on . Recall that .

Proposition 2.

Suppose that there exists a constant so that for any small enough,

| (15) |

Then .

Proof.

First, recall that for any . For simplicity, for any , set . Next, using (15), if is large enough, one has

As a result, . ∎

Note that when is finite, then (15) holds with .

Remark 9.

Note that (15) holds for the geometric distribution with , , with . In this case, since , . (15) holds for Poisson distribution with parameter since , , with . In this case, . In general a sufficient condition for (15) to hold on is that , . Another example of an important distribution satisfying (15) is the Negative Binomial distribution with parameters and , where , . In this case . So if is small enough, is decreasing, so let be such that . It then follows that if is large enough,

where .

References

- Chen et al., (2013) Chen, L., Singh, V. P., Guo, S., Mishra, A. K., and Guo, J. (2013). Drought analysis using copulas. J. Hydrol. Eng., 18(7):797–808.

- Clayton, (1978) Clayton, D. G. (1978). A model for association in bivariate life tables and its application in epidemiological studies of familial tendency in chronic disease incidence. Biometrika, 65:141–151.

- de Leon and Wu, (2011) de Leon, A. R. and Wu, B. (2011). Copula-based regression models for a bivariate mixed discrete and continuous outcome. Stat. Med., 30(2):175–185.

- Dunnett and Sobel, (1954) Dunnett, C. W. and Sobel, M. (1954). A bivariate generalization of Student’s t-distribution, with tables for certain special cases. Biometrika, 41:153–169.

- Embrechts et al., (2002) Embrechts, P., McNeil, A. J., and Straumann, D. (2002). Correlation and dependence in risk management: properties and pitfalls. In Risk Management: Value at Risk and Beyond (Cambridge, 1998), pages 176–223. Cambridge Univ. Press, Cambridge.

- Ery, (2016) Ery, J. (2016). Semiparametric inference for copulas with discrete margins. Master’s thesis, École Polytechnique Fédérale de Lausanne, Faculty of Mathematics.

- Faugeras, (2017) Faugeras, O. P. (2017). Inference for copula modeling of discrete data: a cautionary tale and some facts. Depend. Model., 5(1):121–132.

- Fermanian et al., (2004) Fermanian, J.-D., Radulović, D., and Wegkamp, M. H. (2004). Weak convergence of empirical copula processes. Bernoulli, 10:847–860.

- Geenens, (2020) Geenens, G. (2020). Copula modeling for discrete random vectors. Depend. Model., 8(1):417–440.

- Geenens, (2021) Geenens, G. (2021). Dependence, Sklar’s copulas and discreteness. Online presentation available at https://sites.google.com/view/apsps/previous-speakers. Asia-Pacific Seminar on Probability and Statistics.

- Genest and Favre, (2007) Genest, C. and Favre, A.-C. (2007). Everything you always wanted to know about copula modeling but were afraid to ask. J. Hydrol. Eng., 12(4):347–368.

- Genest et al., (1995) Genest, C., Ghoudi, K., and Rivest, L.-P. (1995). A semiparametric estimation procedure of dependence parameters in multivariate families of distributions. Biometrika, 82:543–552.

- Genest and Nešlehová, (2007) Genest, C. and Nešlehová, J. (2007). A primer on copulas for count data. The Astin Bulletin, 37:475–515.

- Genest et al., (2017) Genest, C., Nešlehová, J. G., and Rémillard, B. (2017). Asymptotic behavior of the empirical multilinear copula process under broad conditions. J. Multivariate Anal., 159:82–110.

- Genest and Rémillard, (2008) Genest, C. and Rémillard, B. (2008). Validity of the parametric bootstrap for goodness-of-fit testing in semiparametric models. Ann. Inst. Henri Poincaré Probab. Stat., 44(6):1096–1127.

- Genz, (2004) Genz, A. (2004). Numerical computation of rectangular bivariate and trivariate normal and t probabilities. Stat. Comput., 14(3):251–260.

- Joe, (1990) Joe, H. (1990). Multivariate concordance. J. Multivariate Anal., 35(1):12–30.

- Joe, (2005) Joe, H. (2005). Asymptotic efficiency of the two-stage estimation method for copula-based models. J. Multivariate Anal., 94:401–419.

- Joe and Xu, (1996) Joe, H. and Xu, J. J. (1996). The estimation method of inference functions for margins for multivariate models. Technical Report 166, UBC.

- Li et al., (2020) Li, Y., Li, Y., Qin, Y., and Yan, J. (2020). Copula modeling for data with ties. Stat. Interface, 13(1):103–117.

- McKee et al., (1993) McKee, T. B., Doesken, N. J., Kleist, J., et al. (1993). The relationship of drought frequency and duration to time scales. In Proceedings of the 8th Conference on Applied Climatology, volume 17, pages 179–183.

- Nasri, (2020) Nasri, B. R. (2020). On non-central squared copulas. Statist. Probab. Lett., 161:108704.

- Nasri and Rémillard, (2019) Nasri, B. R. and Rémillard, B. N. (2019). Copula-based dynamic models for multivariate time series. J. Multivariate Anal., 172:107–121.

- Nasri and Rémillard, (2023) Nasri, B. R. and Rémillard, B. N. (2023). CopulaInference: Estimation and Goodness-of-Fit of Copula-Based Models with Arbitrary Distributions. R package version 0.5.0.

- Nasri et al., (2020) Nasri, B. R., Rémillard, B. N., and Thioub, M. Y. (2020). Goodness-of-fit for regime-switching copula models with application to option pricing. Canad. J. Statist., 48(1):79–96.

- Oh and Patton, (2016) Oh, D. H. and Patton, A. J. (2016). High-dimensional copula-based distributions with mixed frequency data. J. Econom., 193(2):349–366.

- Rudas, (2018) Rudas, T. (2018). Lectures on Categorical Data Analysis. Springer Texts in Statistics. Springer, New York.

- Rudin, (1976) Rudin, W. (1976). Principles of Mathematical Analysis. McGraw-Hill Book Co., New York-Auckland-Düsseldorf, third edition. International Series in Pure and Applied Mathematics.

- Ruymgaart et al., (1972) Ruymgaart, F. H., Shorack, G. R., and van Zwet, W. R. (1972). Asymptotic normality of nonparametric tests for independence. Ann. Math. Statist., 43:1122–1135.

- Shiau, (2006) Shiau, J. T. (2006). Fitting drought duration and severity with two-dimensional copulas. Water. Resour. Manag., 20(5):795–815.

- Shih and Louis, (1995) Shih, J. H. and Louis, T. A. (1995). Inferences on the association parameter in copula models for bivariate survival data. Biometrics, 51:1384–1399.

- Sklar, (1959) Sklar, M. (1959). Fonctions de répartition à n dimensions et leurs marges. Publ. Inst. Statist. Univ. Paris, 8:229–231.

- Song et al., (2009) Song, P. X.-K., Li, M., and Yuan, Y. (2009). Joint regression analysis of correlated data using Gaussian copulas. Biometrics, 65(1):60–68.

- Tsukahara, (2005) Tsukahara, H. (2005). Semiparametric estimation in copula models. Canad. J. Statist., 33(3):357–375.

- Varin et al., (2011) Varin, C., Reid, N., and Firth, D. (2011). An overview of composite likelihood methods. Statist. Sinica, 21(1):5–42.

- Zhang and Singh, (2019) Zhang, L. and Singh, V. P. (2019). Copulas and Their Applications in Water Resources Engineering. Cambridge University Press.

- Zilko and Kurowicka, (2016) Zilko, A. A. and Kurowicka, D. (2016). Copula in a multivariate mixed discrete–continuous model. Comput. Statist. Data Anal., 103:28–55.