33email: [email protected]

ICDAR 2021 Competition on Components Segmentation Task of Document Photos††thanks: Supported by University of Pernambuco, CAPES and CNPq.

Abstract

This paper describes the short-term competition on “Components Segmentation Task of Document Photos” that was prepared in the context of the “16th International Conference on Document Analysis and Recognition” (ICDAR 2021). This competition aims to bring together researchers working on the filed of identification document image processing and provides them a suitable benchmark to compare their techniques on the component segmentation task of document images. Three challenge tasks were proposed entailing different segmentation assignments to be performed on a provided dataset. The collected data are from several types of Brazilian ID documents, whose personal information was conveniently replaced. There were 16 participants whose results obtained for some or all the three tasks show different rates for the adopted metrics, like “Dice Similarity Coefficient” ranging from to . Different Deep Learning models were applied by the entrants with diverse strategies to achieve the best results in each of the tasks. Obtained results show that the current applied methods for solving one of the proposed tasks (document boundary detection) are already well stablished. However, for the other two challenge tasks (text zone and handwritten sign detection) research and development of more robust approaches are still required to achieve acceptable results.

Keywords:

ID document images visual object detection and segmentation processing of identification document images1 Introduction

This paper describes the short-term competition on “Components Segmentation Task of Document Photos” organized in the context of the “16th International Conference on Document Analysis and Recognition” (ICDAR 2021).

The traffic of identification document images (ID document) through digital media is already a common practice in several countries. A large amount of data can be extracted from these images through computer vision and image processing techniques, as well machine learning approaches. The extracted data can serve for different purposes, such as names and dates retrieved from text fields to be processed by OCR systems or extracted handwritten signatures to be checked by biometric systems, in addition to other characteristics and patterns present in images of identification documents. Actually there are few developed image processing applications that focus on the treatment of ID document images, specially related with image segmentation of ID documents.

The main goal of this contest was to stimulate researchers and scientists in the search for new techniques of image segmentation for the treatment of these ID document images. The availability of an adequate experimental dataset of ID documents is another factor of great importance, given that there are few of them free-available to the community [26]. Due to privacy constraints, for the provide dataset all text with personal information was synthesized with fake data. Furthermore, the original signatures were substituted by new ones collected randomly from different sources. All these changes were performed by well-designed algorithms and post-processed by humans whenever needed to keep the real-world conditions as much as possible.

2 Competition Challenges and Dataset Description

In this contest, entrants have to employ their segmentation techniques on given ID document images according to tasks defined for different levels of segmentation. Specifically, the goal here is to evaluate the quality of the applied image segmentation algorithms to ID document images acquired by mobile cameras, where several issues may affect the segmentation task performance, such as: location, texture and background of the document, camera distance to the document, perspective distortions, exposure and focus issues, reflections or shadows due to ambient light conditions, among others.

To evaluate tasks entailing different segmentation levels, the following three challenges have to be addressed by the participants as described below.

2.1 Challenge Tasks

1st Challenge - Document Boundary Segmentation:

The objective of this challenge is to develop boundary detection algorithms for different kinds of documents [21]. The entrants should develop an algorithm that takes as input an image containing a document, and return a new image of the same size with the background in black pixels and the region occupied by the document in white pixels. Figure 1-left shows an example of this detection process.

2nd Challenge - Zone Text Segmentation:

This challenge encourages the development of algorithms for automatic text detection in ID documents [21]. The entrants have to develop an algorithm capable of detecting text patterns in the provided set of images; that is, to process an image of a document (without background), and return a new image of the same size with non-interest regions in black pixels and regions of interest (text regions) in white pixels. This detection process is illustrated in Figure 1-right.

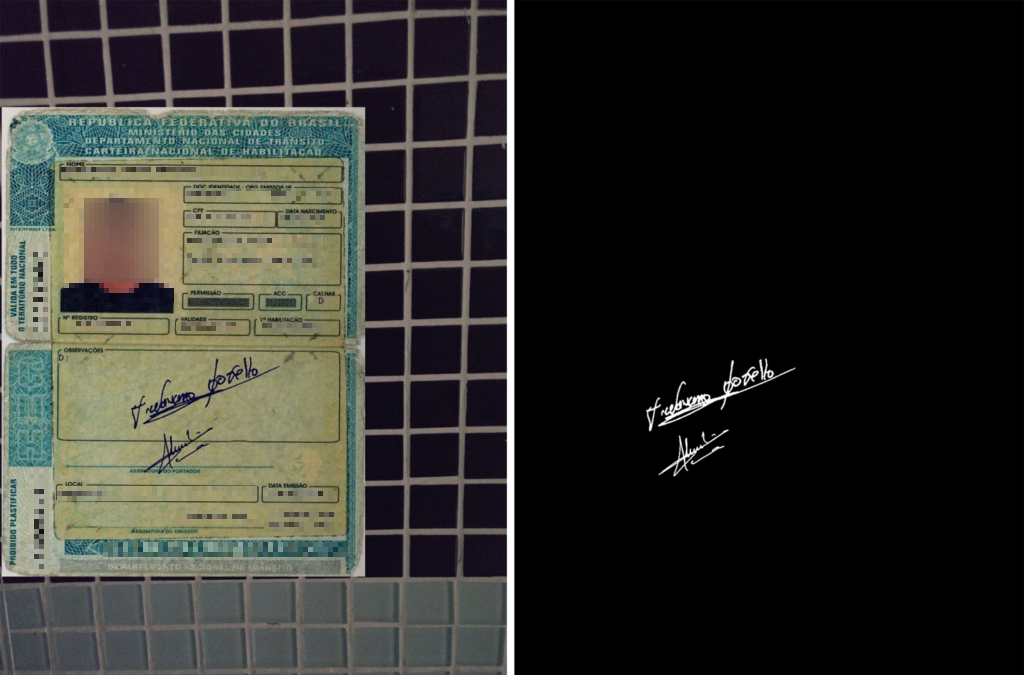

3rd Challenge - Signature Segmentation:

This challenge aims at developing algorithms to detect and segment handwritten signatures on ID documents [12, 28]. Given an image of a document, the model or technique applied should return an image with the same size of the input image with the handwritten signature strokes as foreground in white pixels, and everything else in black pixels. Figure 2 displays an example of this detection.

2.2 ID Document Image Dataset

For this competition, the provided dataset is composed of thousands of images of ID documents from the following Brazilian document types: National Driver’s License (CNH), Natural Persons Register (CPF) and General Registration (RG). The document images were captured through different cell phone cameras at different resolutions. Documents that appear in the images are characterized by having different textures, colors and lighting, on different real-world backgrounds with non-uniform patterns.

The images are all in RGB color mode and PNG format. The ground truth of this dataset is different for each challenge. For the first challenge, the interest regions are the document areas themselves, while the printed text areas are the ones for the second challenge. For the third challenge, we are only interested in the pixels of detected handwritten signature strokes. As commented before, for all the challenges, the interest regions/pixels are represented by white pixel areas, while non-interest ones in black pixel areas.

As personal information contained in the documents cannot be made public, the original data was replaced with synthetic data. We generate fake information for all fields present in the document (such as name, date of birth, affiliation, number of document, among others), as described in [26].

On the other hand, handwritten signatures were acquired from the MCYT [22] and GPDS [9] datasets, which were synthetically incorporated into the images of ID documents.

Both the ID document images and the corresponding ground truth of the dataset were manually verified, and they are intended to be used for model training and for computing evaluation metrics. Table 1 summarizes the basic statistics of the dataset. The whole dataset is available for the research community under request (visit: https://icdar2021.poli.br/).

| Number of: | Train-C1 | Test-C1 | Train-C2 | Test-C2 | Train-C3 | Test-C3 |

|---|---|---|---|---|---|---|

| Images | 15,000 | 5,000 | 15,000 | 5,000 | 15,000 | 5,000 |

3 Competition Protocol

As introduced in the previous section, this competition presents three challenges:

-

•

1st Challenge: Document Boundary Segmentation

-

•

2nd Challenge: Zone Text Segmentation

-

•

3rd Challenge: Signature Segmentation

Competitors can decide how many tasks they want to compete for, choosing between one or more challenges. In addition to the competition data, it was allowed to use additional datasets for model training. In this sense, some participants used the BID Dataset [26], and Imagenet [7] for improving the prediction of their approaches.

Participants could also use solutions from a challenge task as support to develop solutions for other challenges. Some participants used image segmentation predictions of the 1st challenge to remove background from images before they were used for the 2nd and 3rd challenge tasks.

Participants could also use pre or post-image processing techniques to develop their solutions.

3.1 Assessment Methods

The Structural Similarity Index (SSIM) metric was employed in the evaluation process. However, the results obtained using SSIM were very similar in all systems and techniques. Therefore we did not report their results in this work.

The following similarity metrics were used for evaluating the approaches applied by the participants:

3.1.1 Dice Similarity Coefficient (DSC)

The Dice Similarity Coefficient (DSC), presented by Equation 1 is a statistical metric proposed by Dice, Lee R [8], which can be used to measure the similarity between two images.

| (1) |

where and are elements of the ground truth and segmented image, respectively.

3.1.2 Scale Invariant Feature Transform (SIFT)

The scale-invariant feature transform (SIFT) is a feature extraction algorithm, proposed by David Lowe [20], that describe local features in images.

The feature extraction performed by SIFT consists in the detection of Key-Points at the image. These key-points denote information related to the regions of interest (represented by the white pixels) of the ground truth and the segmented image. Once mapped, the key-points of the ground truth images are stored and compared with the key-points identified in the predicted image. From this comparison, it is possible to define a similarity rate.

4 Briefly Description of the Entrant’s Approaches

In total there were 16 research groups from different countries that have participated in the contest. The entrants submitted their segmentation hypotheses computed on the corresponding test sets for some or all of the three challenge tasks. The main characteristics of the approaches used by each entrant in each challenge task are described below.

-

1.

58 CV Group (58 Group, China) - Task 1: The approach is based on “Efficient and Accurate Arbitrary-Shaped Text Detection with Pixel Aggregation Network” (PANNet) [34] with improvements for edge segmentation. For model training, the segmentation effect has been improved by employing two different loss functions: the edge segmentation loss and the focal loss [17].

-

2.

Ambilight Lab Group (NetEase Inc., China) - Task 1: Document boundary detection has been carried out by using the composed model HRnet+OCR+DCNv2 [30, 36, 42]. Task 2: On the segmentation results in Task 1, a text angle classify model based on ResNet-50 [11] and a text segmentation model HRnet+OCR+DCNv2 were applied. Task 3: On the segmentation results in Task 1, an approach based on YOLO-v5111https://zenodo.org/record/4679653#.YKkfQSUpBFQ for signature detection and HRnet+OCR+DCNv2 for signature segmentation were applied.

-

3.

Arcanite Group (Arcanite Solutions LLC, Switzerland) - Tasks 1, 2 & 3: U-Net [25] is employed as model architecture, where the encoder is based on ResNet-18 [11] and ImageNet [7], while the decoder is composed basically by transpose convolutions for up-sampling the outputs. Cross-entropy [39] and Dice [29] was used as loss functions in the training process.

-

4.

Cinnamon AI Group (Hochiminh City Univ. of Tech., Vietnam) - Tasks 1, 2 & 3: Methods in these Tasks employ U-Net [25] as model architecture with Resnet-34 [11] backbone. This model has been trained to resolve the assignment proposed in each Task, where corresponding data augmentation has been applied for improving generalization and robustness.

-

5.

CUDOS Group (Anonymous) - Tasks 1, 2 & 3: The approaches are based on encoder-decoder SegNet [1] architecture and “Feature Pyramid Network” (FPN) [16] for object detection with MobileNetV2 [27] backbone of the network. Data augmentation and cross-entropy [39] loss were employed in the training of the network.

-

6.

Dao Xianghu light of TianQuan Group (CCB Financial Technology Co. Ltd, China) - The approaches performed on the three tasks employ encoder-decoder model architectures. Task 1: The approach uses two DL models (both based on ImageNet pretrained weights): DeepLabV3 [5] (with ResNet-50 backbone) and Segfix [37] for refinement. Task 2: The applied approach is based on a model named DeepLabV3+ [6]. Task 3: In this case, the used approach is the Unet++ [41]. In general, training of the models for the three tasks were carried out with data augmentation, using pretrained weights initialized on ImageNet, and SOTA (or FocalDice) [17] loss function.

- 7.

-

8.

dotLAB Group (Universidade Federal de Pernambuco, Brazil) - Tasks 1, 2 & 3: The applied approaches are based on a network architecture called “Self-Calibrated U-Net” (SC-U-Net) [18] which uses self-calibrated convolutions to learn better discriminative representations.

-

9.

NAVER Papago Group (NAVER Papago, South Korea) - Tasks 1, 2 & 3: The approaches are based on Unet++ [41] with encoder based on ResNet [11]. Training of models were conducted with data augmentation, using cross-entropy [39] and Dice [29] as loss functions. The models were also finetuned using the Lovász loss function [2].

-

10.

NUCTech Robot Group (NUCTech Limited Co., China) - Tasks 1 & 2 and 1 & 3 are resolved simultaneously through two unified models named “Double Segmentation Network” (DSegNet). Feature extraction of DSegNet is carried out by the ResNet-50 [11] backbone. These networks have two output channels: one outputs the document boundary and text segmentation zones and the other outputs document boundary and signature segmentation. Data augmentation has also been used in the training of the models with Dice [29] as loss function.

-

11.

Ocean Group (Lenovo Research and Xi’an Jiaotong University, China) - Task 1: Ensemble framework consisting of two segmentation models, Mask R-CNN [10] with PointRend module [13] and DeepLab [4] with decouple SegNet [14], whose outputs are merged to get the final result. The models were also trained with data augmentation. Task 3: A one-stage detection model is used to detect handwritten signature bounding box. Then a full convolutional encoder-decoder segmentation network combined with a refinement block was used to predict accurately the handwritten signature location.

-

12.

PA Group (AI Research Institute, OneConnect, China) - Tasks 1 & 3: The approaches are based on ResNet-34 followed by a “Global Convolution Network” (ResNet34+GCN) [11, 40]. Task 2: The approach is based on ResNet-18 followed by a “Pyramid Scene Parsing Network” (ResNet18+PSPNet) [11, 40]. Dice loss function [29] was used in the training of all task’s models.

-

13.

SPDB Lab Group (Shanghai Pudong Development Bank, China) - Task 1: The approach based on three deep networks to predict the mask and vote on the prediction results of each pixel: 1) Mask R-CNN [10] which uses HRNet [31] as backbone, HR feature pyramid networks (HRFPN) [35] and PointRend [13]; 2) Multi-scale DeepLabV3 [5]; and 3) U2Net [23]. Task 2: The approach has three different DL models participate in the final pixel-wise voting prediction: 1) Multi-scale OCRNet [33, 36]; 2) HRNet+OCRNet [36]; and 3) DeepLabV3+ [6], which uses ResNeSt101 [38] as backbone. Task 3: The approach employs object detector YOLO [24], whose cropped image output is sent to several segmentation models to get the final pixel-wise voting prediction: 1) U2Net [23]; 2) HRNet+OCRNet [36]; 3) ResNeSt+DeepLabV3 +[6, 38]; and 4) Swin-Transformer [19].

-

14.

SunshineinWinter Group (Xidian University, China) - Tasks 1 & 2: Addressed approaches use the so-called EfficientNet [32] as the backbone of the segmentation method and the “Feature Pyramid Networks” (FPN) [16] for object detection. Balanced cross-entropy [39] was used as a loss function. Task 3: The approach is based on the classical two-stage detection model Mask R-CNN [10], while the segmentation model is the same as the ones in Tasks 1 & 2.

- 15.

-

16.

Wuhan Tianyu Document Algorithm Group (Wuhan Tianyu Information Industry Co. Ltd., China) - Task 1: DeepLabV3 [5] model was used for document boundary detection. Task 2: A two-stage approach which uses a DeepLabV3 [5] for roughly text field detection and OCRNet [15] for locating accurately text zones. Task 3: OCRNet [15] was employed for handwritten signature location.

5 Results and Discussion

The set of tests made available to the participants covers the three tasks of the competition. The teams were able to choose between solving one, two, or three tasks. For each Task, we had the following distribution: Task 1 with 16 teams, Task 2 with 12 teams, and Task 3 with 11 teams. Thus, 39 submissions were evaluated for each metric.

We present the results for each task of the challenge in the following tables: Table 2 shows the results for Task 1; in Table 3 are the results of Task 2; and Table 4 shows Task 3 results. The results of the teams were evaluated using the metrics described in Section 3. We removed the SSIM metric from our analysis, since it did not prove to represent (all participants achieved results above ). A possible reason for this behaviour is that the images resulting from the segmentation have predominantly black pixels for all Tasks. This shows that none of the teams had problems with the structural information of the image. Therefore, the metrics used to evaluate all systems were DSC and SIFT.

For ranking the submissions, we used at first the DSC metric and as a tiebreaker criteria the SIFT one. The reason for this decision is that SIFT is invariant to rotation and scale and less sensitive to small divergences between the output images and the Ground Truth images. The results of each task will be described further and commented on the best results.

5.1 Results of the Task 1: Document Boundary Segmentation

In Task 1, all competitors achieved excellent results for the two metrics, DSC and SIFT. Table 2 shows the results and the classification of the teams. The three teams with the best classification were Ocean (1st), SPDB Lab (2nd), and PA (3rd), in this order. The first two teams achieved a result above , and both approach a similar architecture, the Mask-RCNN model. More details on the approach of the teams can be seen in Section 4.

| Teams results and methods — Mean; | ||

|---|---|---|

| Team Name | DSC | SIFT |

| 58 CV | 0.969594; | 0.998698; |

| Ambilight | 0.983825; | 0.999223; |

| Arcanite | 0.986441; | 0.994290; |

| Cinnamon AI | 0.983389; | 0.999656; |

| CUDOS | 0.989061; | 0.999450; |

| Dao Xianghu light of TianQuan | 0.987495; | 0.998966; |

| DeepVision | 0.976849; | 0.999599; |

| dotLAB Brazil | 0.988847; | 0.999234; |

| NAVER Papago | 0.989194; | 0.999565; |

| Nuctech robot | 0.986910; | 0.999891; |

| Ocean | 0.990971; | 0.999176; |

| PA | 0.989830; | 0.999624; |

| SPDB Lab | 0.990570; | 0.999891; |

| SunshineinWinter | 0.970344; | 0.998358; |

| USTC-IMCCLab | 0.985350; | 0.996469; |

| Wuhan Tianyu Document Algorithm Group | 0.980272; | 0.999370; |

5.2 Results of the Task 2: Zone Text Segmentation

Table 3 shows the result of Task 2. This task was slightly more complex than Task 1, as it is possible to observe slightly greater distances between the results achieved by the teams. Here the three teams with the best scores were Ambilight (1st), Dao Xianghu light of TianQuan (2nd), and USTC-IMCCLab (3rd). Here, the winner uses a model composition, HRnet+ORC+DCNv2. Based on Task 1 segmentation, the component area is cut out and then rotated to one side. Finally, the High-Resolution Net model is trained to identify the text area.

| Teams results and methods — Mean; | ||

|---|---|---|

| Team Name | DSC | SIFT |

| Ambilight | 0.926624; | 0.998829; |

| Arcanite | 0.837609; | 0.997631; |

| Cinnamon AI | 0.866914; | 0.995777; |

| Dao Xianghu light of TianQuan | 0.909789; | 0.998308; |

| DeepVision | 0.861304; | 0.994713; |

| dotLAB Brazil | 0.887051; | 0.997471; |

| Nuctech robot | 0.883612; | 0.997728; |

| PA | 0.880801; | 0.997607; |

| SPDB Lab | 0.881975; | 0.998220; |

| SunshineinWinter | 0.390526; | 0.981881; |

| USTC-IMCCLab | 0.890434; | 0.997933; |

| Wuhan Tianyu Document Algorithm Group | 0.814985; | 0.993798; |

5.3 Results of the Task 3: Signature Segmentation

For Task 3, as reported in the Table 4, results show very marked divergences. The distances between the results are much greater than those observed in the two previous tasks. The three teams that achieved the best results were SPDB Lab (1st), Cinnamon IA (2nd), and dotLAB Brasil (3rd). This task includes the lowest number of participating teams and lower rates results than tasks 1 and 2. These numbers raise the perception that segmenting handwritten signatures presents greater complexity among the 3 tasks addressed in the competition.

| Teams results and methods — Mean; | ||

|---|---|---|

| Team Name | DSC | SIFT |

| Ambilight | 0.812197; | 0.839524; |

| Arcanite | 0.627745; | 0.835515; |

| Cinnamon AI | 0.841275; | 0.848724; |

| Dao Xianghu light of TianQuan | 0.795448; | 0.839367; |

| dotLAB Brazil | 0.837492; | 0.852816; |

| Nuctech robot | 0.538511; | 0.842416; |

| Ocean | 0.768071; | 0.838772; |

| PA | 0.407228; | 0.836094; |

| SPDB Lab | 0.863063; | 0.839501; |

| SunshineinWinter | 0.465849; | 0.835916; |

| Wuhan Tianyu Document Algorithm Group | 0.061841; | 0.589850; |

The winning group, SPDB Lab, used a strategy that involves several deep models. The Yolo model was used by this group to detect the bounding box (bbox) of the handwritten signature area. Then, the bbox is filled with 5 pixels so that the overlapping bounding box is later merged. These images are sent to various segmentation models for prediction, which are U2Net, HRNet+OCRNet, ResNeSt+Deeplabv3plus, Swin-Transformer. The results of the segmentation of various models are merged by the weighted sum, and then the results are pasted back to the original position according to the recorded location information.

5.4 Discussion

All tasks proposed in this competition received interesting strategies and some of them achieved promising results for the problems posed by the taks.

Task 1 was completed with excellent results, all above for DSC metric. With this result of the competition, it is possible to affirm that Document Boundary Segmentation is no longer a challenge for computer vision due to the power of deep learning architectures.

The results of Task 2 show that the challenge for segmenting text zones needs an additional effort of the research community to get a definitive solution, since a minor error in this task may produce substantial errors when recognizing the texts in those documents.

Task 3, Handwritten signature segmentation, showed a more complex task than the other two presented in this competition. Despite the good results presented specially by Top 3 systems, they are far from the rates of page detection algorithms. Therefore, if we need the signature pixels to further verify its similarity against a reference signature, there is still room for new strategies and improvements in this task.

All groups used deep learning models, most of which made use of encoder-decoder architectures like the U-net. Another characteristic worth noting is the strategy of composing several deep models working together or sequentially to detect and segment regions of interest.

In the following, we can see some selected results of the 3 winners teams of each task.

6 Conclusion

The models proposed by the competitors were based on consolidated state-of-the-art architectures. This increased the level and complexity of the competition. Strategies such as using one task as the basis for another task were also successfully applied by some groups.

Nevertheless, we are worried about the response time of such approaches which is important in the case of real-time applications. In addition, one must also consider the application for embedded systems and mobile devices with limited computational resources. A possible future competition may include in the scope these aspects.

To conclude, we see that there is still room for other strategies and solutions for the tasks of text and signature segmentation proposed in this competition. We expect the datasets produced in this contest (https://icdar2021.poli.br/) can be used by the research community to improve their proposals for such tasks.

Acknowledgment

This study was financed in part by: Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001, and CNPq - Brazilian research agencies.

References

- [1] Badrinarayanan, V., Kendall, A., Cipolla, R.: Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE transactions on pattern analysis and machine intelligence 39(12), 2481–2495 (2017)

- [2] Berman, M., Triki, A.R., Blaschko, M.B.: The lovász-softmax loss: A tractable surrogate for the optimization of the intersection-over-union measure in neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 4413–4421 (2018)

- [3] Cai, Z., Vasconcelos, N.: Cascade r-cnn: high quality object detection and instance segmentation. IEEE transactions on pattern analysis and machine intelligence (2019)

- [4] Chen, L.C., Papandreou, G., Kokkinos, I., Murphy, K., Yuille, A.L.: Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE transactions on pattern analysis and machine intelligence 40(4), 834–848 (2017)

- [5] Chen, L.C., Papandreou, G., Schroff, F., Adam, H.: Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587 (2017)

- [6] Chen, L.C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European conference on computer vision (ECCV). pp. 801–818 (2018)

- [7] Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: Imagenet: A large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition. pp. 248–255. Ieee (2009)

- [8] Dice, L.R.: Measures of the amount of ecologic association between species. Ecology 26(3), 297–302 (1945)

- [9] Ferrer, M.A., Diaz-Cabrera, M., Morales, A.: Static signature synthesis: A neuromotor inspired approach for biometrics. IEEE Transactions on Pattern Analysis and Machine Intelligence 37(3), 667–680 (2014)

- [10] He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. In: Proceedings of the IEEE international conference on computer vision. pp. 2961–2969 (2017)

- [11] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 770–778 (2016)

- [12] Junior, C.A., da Silva, M.H.M., Bezerra, B.L.D., Fernandes, B.J.T., Impedovo, D.: Fcn+ rl: A fully convolutional network followed by refinement layers to offline handwritten signature segmentation. In: 2020 International Joint Conference on Neural Networks (IJCNN). pp. 1–7. IEEE (2020)

- [13] Kirillov, A., Wu, Y., He, K., Girshick, R.: Pointrend: Image segmentation as rendering. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 9799–9808 (2020)

- [14] Li, X., Li, X., Zhang, L., Cheng, G., Shi, J., Lin, Z., Tan, S., Tong, Y.: Improving semantic segmentation via decoupled body and edge supervision. arXiv preprint arXiv:2007.10035 (2020)

- [15] Liao, M., Wan, Z., Yao, C., Chen, K., Bai, X.: Real-time scene text detection with differentiable binarization. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 34, pp. 11474–11481 (2020)

- [16] Lin, T.Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2117–2125 (2017)

- [17] Lin, T.Y., Goyal, P., Girshick, R., He, K., Dollár, P.: Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision. pp. 2980–2988 (2017)

- [18] Liu, J.J., Hou, Q., Cheng, M.M., Wang, C., Feng, J.: Improving convolutional networks with self-calibrated convolutions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 10096–10105 (2020)

- [19] Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z., Lin, S., Guo, B.: Swin transformer: Hierarchical vision transformer using shifted windows. arXiv preprint arXiv:2103.14030 (2021)

- [20] Lowe, D.G.: Object recognition from local scale-invariant features. In: Proceedings of the seventh IEEE international conference on computer vision. vol. 2, pp. 1150–1157. Ieee (1999)

- [21] das Neves Junior, R.B., Verçosa, L.F., Macêdo, D., Bezerra, B.L.D., Zanchettin, C.: A fast fully octave convolutional neural network for document image segmentation. In: 2020 International Joint Conference on Neural Networks (IJCNN). IEEE. pp. 1–8 (2020)

- [22] Ortega-Garcia, J., Fierrez-Aguilar, J., Simon, D., Gonzalez, J., Faundez-Zanuy, M., Espinosa, V., Satue, A., Hernaez, I., Igarza, J.J., Vivaracho, C., et al.: Mcyt baseline corpus: a bimodal biometric database. IEE Proceedings-Vision, Image and Signal Processing 150(6), 395–401 (2003)

- [23] Qin, X., Zhang, Z., Huang, C., Dehghan, M., Zaiane, O.R., Jagersand, M.: U2-net: Going deeper with nested u-structure for salient object detection. Pattern Recognition 106, 107404 (2020)

- [24] Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: Unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 779–788 (2016)

- [25] Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical image computing and computer-assisted intervention. pp. 234–241. Springer (2015)

- [26] de Sá Soares, A., das Neves Junior, R.B., Bezerra, B.L.D.: Bid dataset: a challenge dataset for document processing tasks. In: Anais Estendidos do XXXIII Conference on Graphics, Patterns and Images. pp. 143–146. SBC (2020)

- [27] Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., Chen, L.C.: Mobilenetv2: Inverted residuals and linear bottlenecks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4510–4520 (2018)

- [28] Silva, P.G., Junior, C.A., Lima, E.B., Bezerra, B.L., Zanchettin, C.: Speeding-up the handwritten signature segmentation process through an optimized fully convolutional neural network. In: 2019 International Conference on Document Analysis and Recognition (ICDAR). pp. 1417–1423. IEEE (2019)

- [29] Sudre, C.H., Li, W., Vercauteren, T., Ourselin, S., Cardoso, M.J.: Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations. In: Deep learning in medical image analysis and multimodal learning for clinical decision support, pp. 240–248. Springer (2017)

- [30] Sun, K., Zhao, Y., Jiang, B., Cheng, T., Xiao, B., Liu, D., Mu, Y., Wang, X., Liu, W., Wang, J.: High-resolution representations for labeling pixels and regions. arxiv 2019. arXiv preprint arXiv:1904.04514 (2019)

- [31] Sun, K., Xiao, B., Liu, D., Wang, J.: Deep high-resolution representation learning for human pose estimation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 5693–5703 (2019)

- [32] Tan, M., Le, Q.: Efficientnet: Rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning. pp. 6105–6114. PMLR (2019)

- [33] Tao, A., Sapra, K., Catanzaro, B.: Hierarchical multi-scale attention for semantic segmentation. arXiv preprint arXiv:2005.10821 (2020)

- [34] Wang, W., Xie, E., Song, X., Zang, Y., Wang, W., Lu, T., Yu, G., Shen, C.: Efficient and accurate arbitrary-shaped text detection with pixel aggregation network. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 8440–8449 (2019)

- [35] Wei, S., Su, H., Ming, J., Wang, C., Yan, M., Kumar, D., Shi, J., Zhang, X.: Precise and robust ship detection for high-resolution sar imagery based on hr-sdnet. Remote Sensing 12(1), 167 (2020)

- [36] Yuan, Y., Chen, X., Wang, J.: Object-contextual representations for semantic segmentation. arXiv preprint arXiv:1909.11065 (2019)

- [37] Yuan, Y., Xie, J., Chen, X., Wang, J.: Segfix: Model-agnostic boundary refinement for segmentation. In: European Conference on Computer Vision. pp. 489–506. Springer (2020)

- [38] Zhang, H., Wu, C., Zhang, Z., Zhu, Y., Lin, H., Zhang, Z., Sun, Y., He, T., Mueller, J., Manmatha, R., et al.: Resnest: Split-attention networks. arXiv preprint arXiv:2004.08955 (2020)

- [39] Zhang, Z., Sabuncu, M.R.: Generalized cross entropy loss for training deep neural networks with noisy labels. arXiv preprint arXiv:1805.07836 (2018)

- [40] Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2881–2890 (2017)

- [41] Zhou, Z., Siddiquee, M.M.R., Tajbakhsh, N., Liang, J.: Unet++: A nested u-net architecture for medical image segmentation. In: Deep learning in medical image analysis and multimodal learning for clinical decision support, pp. 3–11. Springer (2018)

- [42] Zhu, X., Hu, H., Lin, S., Dai, J.: Deformable convnets v2: More deformable, better results. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 9308–9316 (2019)