Hyperspectral super-resolution via low rank tensor triple decomposition

Abstract.

Hyperspectral image (HSI) and multispectral image (MSI) fusion aims at producing a super-resolution image (SRI). In this paper, we establish a nonconvex optimization model for the image fusion problem through low rank tensor triple decomposition. Using the L-BFGS approach, we develop a first-order optimization algorithm, TTDSR, for obtaining the desired super-resolution image. Furthermore, two detailed methods are provided for calculating the gradient of the objective function. With the aid of the Kurdyka-Łojasiewicz property, the iterative sequence is proved to converge to a stationary point. Finally, experimental results on different datasets show the effectiveness of our proposed approach.

Key words and phrases:

Tensor triple decomposition, nonconvex programming, Kurdyka-Łojasiewicz property, image fusion, hyperspectral image, multispectral image

1991 Mathematics Subject Classification:

Primary: 90C30, 90C90, 90-08, 90-10, 49M37.

Xiaofei Cui and Jingya Chang

¹School of Mathematics and Statistics, Guangdong University of Technology, China

1. Introduction

Hyperspectral imaging equipment uses specialized sensors to produce images with high spectral resolution. Each pixel records an electromagnetic spectrum obtained by sampling hundreds of contiguous wavelength intervals. Because hyperspectral images have hundreds of bands, their spectral information is abundant [32]. Hyperspectral images are now at the core of a large and growing number of remote sensing applications, such as environmental monitoring [4, 25], target classification [41, 8] and anomaly detection [7, 37].

Optical sensors face a fundamental trade-off among spectral resolution, spatial resolution and signal-to-noise ratio. Hyperspectral images therefore have high spectral resolution but low spatial resolution. Conversely, multispectral sensors, which have only a few spectral bands, produce images with higher spatial resolution but lower spectral resolution [5].

The approaches for the hyperspectral and multispectral image fusion problem can be categorized into four classes [42]. The first class is pan-sharpening, which includes component substitution [27, 1] and multi-resolution analysis [6]. Selva [30] and Liu [22] proposed multi-resolution analysis methods that extract spatial details and inject them into the interpolated hyperspectral image. Chen et al. [9] divided all bands of the images into several regions and then fused the images of each region by pan-sharpening. Unfortunately, these methods may lead to spectral distortion. The second class consists of subspace-based formulations. The main idea is to exploit a low-dimensional representation of hyperspectral images, which are regarded as linear combinations of a set of basis vectors or spectral signatures [18, 38, 34]. Hardie et al. [15] employed a Bayesian formulation and solved the image fusion problem by maximum a posteriori estimation. The third class consists of methods based on matrix factorization, in which the HSI and MSI are regarded as degraded versions of the reference SRI. Yokoya et al. [40] proposed a coupled nonnegative matrix factorization method, which blindly estimates the basis vectors and their coefficients and computes the endmember and abundance matrices by alternating optimization. In addition, there are methods combining sparse regularization and spatial regularization [19, 31], such as the low rank matrix decomposition model in [12, 42], which decomposes a hyperspectral image into a super-resolution image and a difference image.

Tensor decomposition methods form the fourth class. Tensors, the higher-order extensions of matrices, can simultaneously capture the correlations between the spatial and spectral domains of hyperspectral images. Representing hyperspectral and multispectral images as third-order tensors has been widely used in hyperspectral image denoising and super-resolution. A hyperspectral image is a three-dimensional data cube with two spatial dimensions (height and width) and one spectral dimension [17, 33, 23]. Working with this tensor structure therefore tends to make both the spatial details and the spectral structures of the recovered SRI more accurate.

By using low rank tensor decomposition to exploit the low rank properties of hyperspectral images, high spatial resolution and accurate recovery guarantees can be obtained. Kanatsoulis et al. [20] proposed treating the super-resolution problem as a coupled tensor approximation, where the super-resolution image admits a low rank Canonical Polyadic (CP) decomposition. Later, Li et al. [28, 24] considered a novel approach based on Tucker decomposition. Xu et al. [39] first observed that the target SRI exhibits both sparsity and piecewise smoothness on its three modes and then used Tucker decomposition to construct their model. Dian et al. [13] applied the tensor nuclear norm to exploit the low rank property of the SRI. He et al. [16] proposed a coupled tensor ring factorization model for the image fusion problem.

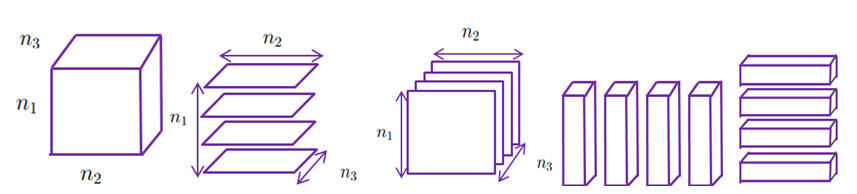

In this paper, we propose a novel model based on low rank tensor triple decomposition to address the hyperspectral and multispectral image fusion problem. Triple decomposition breaks a third-order tensor down into three smaller tensors with predetermined dimensions. It has been applied effectively to tensor completion and image restoration [29, 11]. The structures of image fusion and triple decomposition are presented in Figure 1. The optimization model is solved by the L-BFGS algorithm. Convergence results are established with the help of the Kurdyka-Łojasiewicz property of the objective function. Numerical experiments demonstrate that the proposed TTDSR method performs well, and the convergence result is also validated numerically.

The rest of this paper is organized as follows. In Section 2, we provide the definitions used in the paper and present the basic algebraic operations of tensors. In Section 3, we introduce our motivation and model for hyperspectral and multispectral image fusion. In Section 4, the L-BFGS algorithm and the gradient calculation are presented in detail. The convergence analysis of the algorithm is given in Section 5. In Section 6, experimental results on two datasets are reported. Section 7 concludes the paper.

2. Preliminary

Let $\mathbb{R}$ be the real field. We use lowercase letters and boldface lowercase letters to represent scalars and vectors, respectively, while capital letters and calligraphic letters stand for matrices and tensors, respectively.

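An $m$th-order $n_1\times n_2\times\cdots\times n_m$ dimensional tensor $\mathcal{A}$ is a multidimensional array with its entries being

$$a_{i_1 i_2\cdots i_m},\quad i_j = 1,\dots,n_j,\ j = 1,\dots,m.$$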

Similarly, the $i$th entry of a vector $\mathbf{a}$ is denoted by $a_i$, and the $(i,j)$th entry of a matrix $A$ is $a_{ij}$. Unless otherwise specified, the order of tensors is set to three hereafter. Without loss of generality, we denote $\mathcal{A}\in\mathbb{R}^{n_1\times n_2\times n_3}$. A fiber of $\mathcal{A}$ is a vector obtained by fixing two indices of $\mathcal{A}$, such as $\mathcal{A}(i,j,:)$ for any $i$ and $j$. A slice of $\mathcal{A}$ is a matrix obtained by varying two of its indices while fixing the remaining one, such as $\mathcal{A}(:,:,k)$ for any $k$. The geometric structures of the tensor expansion are shown in Figure 2.

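The mode-$n$ product of a tensor and a matrix is an extension of the matrix product. Suppose $U_1\in\mathbb{R}^{m_1\times n_1}$, $U_2\in\mathbb{R}^{m_2\times n_2}$ and $U_3\in\mathbb{R}^{m_3\times n_3}$. The mode-1 product of $\mathcal{A}$ and $U_1$ is denoted as $\mathcal{A}\times_1 U_1\in\mathbb{R}^{m_1\times n_2\times n_3}$, with its elements being

$$(\mathcal{A}\times_1 U_1)_{tjk} = \sum_{i=1}^{n_1} a_{ijk}\,(U_1)_{ti}.$$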

Also we have

| (1) |

for It is easy to verify that

| (2) |
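The following minimal NumPy sketch gives a quick numerical illustration of the mode-$n$ product. The helper name `mode_n_product` is ours and is not a Tensorlab function. Modes are counted from 1, as in the text. The sketch checks the elementwise mode-1 formula. It also checks the standard fact that products along distinct modes commute, though we do not claim this is exactly the identity stated in (2).

```python
import numpy as np

def mode_n_product(tensor, matrix, n):
    """Mode-n product (tensor x_n matrix), with modes counted from 1.

    Contracts index n of `tensor` (length I_n) with the second index of
    `matrix` (shape J x I_n); the new index of length J sits in position n.
    """
    # tensordot appends the surviving matrix axis last; move it back to n-1.
    out = np.tensordot(tensor, matrix, axes=([n - 1], [1]))
    return np.moveaxis(out, -1, n - 1)

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 5, 6))
U1 = rng.standard_normal((3, 4))
U3 = rng.standard_normal((2, 6))

# Elementwise definition for mode 1: (A x_1 U1)_{tjk} = sum_i a_{ijk} (U1)_{ti}.
explicit = np.einsum('ijk,ti->tjk', A, U1)
assert np.allclose(mode_n_product(A, U1, 1), explicit)

# Products along distinct modes commute.
left = mode_n_product(mode_n_product(A, U1, 1), U3, 3)
right = mode_n_product(mode_n_product(A, U3, 3), U1, 1)
print(left.shape, np.allclose(left, right))  # (3, 5, 2) True
```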

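The mode-$n$ matricization of $\mathcal{A}$, denoted by $\mathcal{A}_{(n)}$, arranges its mode-$n$ fibers as the columns of $\mathcal{A}_{(n)}$ in order. The Frobenius norm of a tensor $\mathcal{A}$ is given by

$$\|\mathcal{A}\|_F = \sqrt{\sum_{i=1}^{n_1}\sum_{j=1}^{n_2}\sum_{k=1}^{n_3} a_{ijk}^2}. \tag{3}$$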

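Throughout the following, every unfolding, whether of tensors, matrices or vectors, is carried out so that the left index varies more rapidly than the right one. Subscripts represent dimensions. We note that $\mathrm{vec}(X)$ creates a column vector by stacking the columns of $X$ below one another, i.e., $\mathrm{vec}(X) = (\mathbf{x}_1^\top,\dots,\mathbf{x}_n^\top)^\top$ for $X = [\mathbf{x}_1,\dots,\mathbf{x}_n]$. In addition, the Kronecker product of matrices $A\in\mathbb{R}^{m\times n}$ and $B\in\mathbb{R}^{p\times q}$ is

$$A\otimes B = \begin{pmatrix} a_{11}B & \cdots & a_{1n}B\\ \vdots & \ddots & \vdots\\ a_{m1}B & \cdots & a_{mn}B \end{pmatrix}.$$

It generates a large matrix $A\otimes B\in\mathbb{R}^{mp\times nq}$, whose entries are $(A\otimes B)_{(i-1)p+k,\,(j-1)q+l} = a_{ij}b_{kl}$ with $i=1,\dots,m$, $j=1,\dots,n$, $k=1,\dots,p$ and $l=1,\dots,q$. By this rule, the unfolding matrices of a tensor can be represented by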

| (4) |

Moreover,
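In NumPy, the convention that the left index varies fastest corresponds to Fortran-order (`order='F'`) reshaping. The sketch below uses hypothetical helper names `unfold` and `vec`. It checks three standard consequences of this convention: $\mathrm{vec}(AXB) = (B^\top\otimes A)\,\mathrm{vec}(X)$, the entry pattern of the Kronecker product, and $(\mathcal{T}\times_n U)_{(n)} = U\mathcal{T}_{(n)}$. These are textbook identities offered as a reading aid for (4); they are not a reconstruction of its exact content.

```python
import numpy as np

def unfold(tensor, n):
    """Mode-n matricization (modes from 1), left remaining index fastest."""
    moved = np.moveaxis(tensor, n - 1, 0)
    return np.reshape(moved, (tensor.shape[n - 1], -1), order='F')

def vec(matrix):
    """Stack the columns of a matrix below one another."""
    return np.reshape(matrix, -1, order='F')

rng = np.random.default_rng(1)
X = rng.standard_normal((3, 4))
A = rng.standard_normal((2, 3))
B = rng.standard_normal((4, 5))

# vec(A X B) = (B^T kron A) vec(X) under column-major vectorization.
assert np.allclose(vec(A @ X @ B), np.kron(B.T, A) @ vec(X))

# Kronecker entries: (A kron B)[(i-1)p + k, (j-1)q + l] = a_ij b_kl (1-based),
# i.e. [i*p + k, j*q + l] with 0-based indices.
K = np.kron(A, B)
p, q = B.shape
assert np.isclose(K[1 * p + 2, 0 * q + 3], A[1, 0] * B[2, 3])

# Unfolding of a mode-2 product: (T x_2 U)_(2) = U T_(2).
T = rng.standard_normal((3, 4, 5))
U = rng.standard_normal((6, 4))
TU = np.einsum('ijk,tj->itk', T, U)
assert np.allclose(unfold(TU, 2), U @ unfold(T, 2))
print(unfold(T, 2).shape)  # (4, 15)
```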

3. The tensor triple decomposition (TTD) model for HSI-MSI fusion

Tensors can describe high dimensional relationships, and hyperspectral and multispectral images are naturally third-order tensors. Methods based on low rank matrix decomposition reshape the 3-d tensor data into 2-d matrix data at the outset. This destroys the original 3-d structure and may degrade the fusion results. Therefore, hyperspectral and multispectral image fusion has been investigated with the aid of various tensor decompositions in recent years. The tensor triple decomposition model has certain advantages over the classical CP and Tucker decompositions. The triple rank is less than or equal to the CP rank, which has been shown both theoretically and in practice. As described in Theorem 2.1 of [29], low rank triple decomposition and triple ranks are well-defined. Triple decomposition has low rank properties and performs well in image restoration. For large-scale problems, a lower rank means that the problem is transformed into a lower dimensional subspace, where it is relatively easy to solve. Thus, we employ the tensor triple decomposition framework to establish the hyperspectral super-resolution model.

Define $\mathcal{Y}_h\in\mathbb{R}^{I_h\times J_h\times K}$, $\mathcal{Y}_m\in\mathbb{R}^{I\times J\times K_m}$ and $\mathcal{Y}\in\mathbb{R}^{I\times J\times K}$ as the hyperspectral image (HSI), multispectral image (MSI) and super-resolution image (SRI), respectively. Here the first two indices, $I_h$ and $J_h$ or $I$ and $J$, denote the spatial dimensions, and the third index, $K$ or $K_m$, indicates the number of spectral bands. Usually, the MSI contains more spatial information than the HSI, that is, $I_h<I$ and $J_h<J$. The HSI has more spectral bands than the MSI, which means $K_m<K$. Our target is to find the SRI, which possesses both the high spectral resolution of the HSI and the high spatial resolution of the MSI.

3.1. The links among SRI, HSI and MSI

Let the mode- matricization of , and be and The key point which helps us to construct the relationship among SRI, HSI and MSI is that there exist two linear operators and such that and [20]. Thus we have

| (5) |

where , and We also have

| (6) |

where represents a fiber of and is a fiber of respectively. Therefore, , can be rewritten as

| (7) |

Equations and also are known as the spatial and spectral degradation processes of The matrices , respectively describe the downsampling and blurring of the spatial degradation process. Downsampling is considered as linear compression, while blurring describes a linear mixing of neighbouring pixels under a specific kernel in both the row and column dimensions. The matrix is usually modeled as a band-selection matrix that selects the common spectral bands of the SRI and MSI.
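The sketch below makes the degradation process concrete. It assumes the coupled model used in CP- and Tucker-based fusion [20, 28], namely HSI $= \mathcal{Y}\times_1 P_1\times_2 P_2$ and MSI $= \mathcal{Y}\times_3 P_3$. The names `P1`, `P2`, `P3` and the toy operators (block averaging over $d$ pixels, selection of three bands) are hypothetical placeholders, not the operators used in the experiments.

```python
import numpy as np

def degrade(Y, P1, P2, P3):
    """Assumed model: HSI = Y x_1 P1 x_2 P2 (spatial), MSI = Y x_3 P3 (spectral)."""
    hsi = np.einsum('ijk,ai,bj->abk', Y, P1, P2)
    msi = np.einsum('ijk,ck->ijc', Y, P3)
    return hsi, msi

I, J, K, d = 8, 8, 10, 4
# Toy spatial operators: average each block of d pixels (blur + downsample).
P1 = np.kron(np.eye(I // d), np.full((1, d), 1.0 / d))
P2 = np.kron(np.eye(J // d), np.full((1, d), 1.0 / d))
# Toy band-selection matrix keeping bands 0, 3 and 7.
P3 = np.eye(K)[[0, 3, 7]]

Y = np.random.default_rng(2).random((I, J, K))
hsi, msi = degrade(Y, P1, P2, P3)
print(hsi.shape, msi.shape)  # (2, 2, 10) (8, 8, 3)
```

Because both operators act on different modes, the HSI keeps all $K$ bands on a coarse grid while the MSI keeps the full grid with only a few bands.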

Based on the correlation of spatial and spectral information in hyperspectral and multispectral images, various low rank tensor decomposition models have been established to study the problem. For example, when the SRI is decomposed by the Canonical Polyadic decomposition into a sum of several rank-one tensors, one obtains the coupled CP fusion model of [20]. Tucker decomposition has also been considered [28, 44], leading to an analogous coupled Tucker model.

3.2. The new model for HSI-MSI fusion

In [29], Qi et al. proposed a new tensor decomposition, named tensor triple decomposition, which can effectively represent the original tensor by three low rank tensors. We therefore establish the hyperspectral and multispectral image fusion model by low rank tensor triple decomposition.

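First, we introduce the tensor triple decomposition in detail. The tensor triple decomposition of a third-order tensor $\mathcal{Y}\in\mathbb{R}^{I\times J\times K}$ representing the target SRI takes the following form

$$\mathcal{Y} = \mathcal{A}\mathcal{B}\mathcal{C}, \tag{8}$$

where $\mathcal{A}\in\mathbb{R}^{I\times r\times r}$, $\mathcal{B}\in\mathbb{R}^{r\times J\times r}$ and $\mathcal{C}\in\mathbb{R}^{r\times r\times K}$. Entrywise, the triple product reads

$$y_{ijk} = \sum_{p=1}^{r}\sum_{q=1}^{r}\sum_{s=1}^{r} a_{iqs}\,b_{pjs}\,c_{pqk} \tag{9}$$

for $i=1,\dots,I$, $j=1,\dots,J$ and $k=1,\dots,K$. The smallest value of $r$ such that (9) holds is called the triple rank of $\mathcal{Y}$ and denoted as $\mathrm{TriRank}(\mathcal{Y})$. If $r\le\mathrm{mid}\{I,J,K\}$, equation (8) is called a low rank triple decomposition of $\mathcal{Y}$, where $\mathrm{mid}\{I,J,K\}$ denotes the middle value of $I$, $J$ and $K$ [29, 11]. In particular, according to [11, Theorem 2.2], the triple decomposition satisfies the following equations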

| (10) |

where is an identity matrix with a proper size.
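The entrywise formula (9) maps directly onto a single `np.einsum` call. The hedged sketch below uses a function name of our own, and its index pattern $a_{iqs}b_{pjs}c_{pqk}$ follows the definition in [29] as we understand it. It builds $\mathcal{Y}=\mathcal{ABC}$ from random factors and checks one entry against the explicit triple sum. It also compares the $r^2(I+J+K)$ stored factor entries with the $IJK$ entries of $\mathcal{Y}$; that gap is where the storage saving of a low triple rank comes from.

```python
import numpy as np

def triple_product(A, B, C):
    """Triple product Y = ABC with y_ijk = sum_{p,q,s} a_iqs * b_pjs * c_pqk.

    Shapes: A (I, r, r), B (r, J, r), C (r, r, K) -> Y (I, J, K).
    """
    return np.einsum('iqs,pjs,pqk->ijk', A, B, C)

I, J, K, r = 6, 5, 4, 2
rng = np.random.default_rng(3)
A = rng.standard_normal((I, r, r))
B = rng.standard_normal((r, J, r))
C = rng.standard_normal((r, r, K))
Y = triple_product(A, B, C)

# Check one entry against the explicit triple sum over p, q, s.
i, j, k = 1, 2, 3
entry = sum(A[i, q, s] * B[p, j, s] * C[p, q, k]
            for p in range(r) for q in range(r) for s in range(r))
assert np.isclose(Y[i, j, k], entry)

# Storage: r^2 (I + J + K) factor entries versus I*J*K entries of Y.
print(Y.shape, r * r * (I + J + K), I * J * K)  # (6, 5, 4) 60 120
```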

Next, we propose a new model with the help of low rank tensor triple decomposition. Assume that $\mathcal{A}$, $\mathcal{B}$ and $\mathcal{C}$ are the low rank triple decomposition tensors of the tensor $\mathcal{Y}$. Combining this with (7), we have

| (11) |

Since the best low rank approximation problem of tensors may be ill-posed, we add the Tikhonov regularization term and get the following optimization model

| (12) |

where $\lambda>0$ is a regularization parameter. We then solve the optimization model (12) to obtain the triple decomposition tensors $\mathcal{A}$, $\mathcal{B}$ and $\mathcal{C}$, and produce the super-resolution image by $\mathcal{Y} = \mathcal{A}\mathcal{B}\mathcal{C}$.
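The display (12) is not reproduced here, so the sketch below assumes a common form of such a model: $\tfrac12\|\mathcal{Y}_h - (\mathcal{ABC})\times_1P_1\times_2P_2\|_F^2 + \tfrac12\|\mathcal{Y}_m - (\mathcal{ABC})\times_3P_3\|_F^2 + \tfrac{\lambda}{2}(\|\mathcal{A}\|_F^2+\|\mathcal{B}\|_F^2+\|\mathcal{C}\|_F^2)$. The weights and all names are our assumptions. The sketch also illustrates one elementary role of the Tikhonov term. Rescaling to $(t\mathcal{A}, \mathcal{B}/t, \mathcal{C})$ leaves $\mathcal{ABC}$ unchanged, so only the regularizer distinguishes such factorizations.

```python
import numpy as np

def triple_product(A, B, C):
    # y_ijk = sum_{p,q,s} a_iqs b_pjs c_pqk
    return np.einsum('iqs,pjs,pqk->ijk', A, B, C)

def objective(A, B, C, Yh, Ym, P1, P2, P3, lam):
    """Assumed form of model (12): HSI fit + MSI fit + Tikhonov term."""
    Y = triple_product(A, B, C)
    res_h = np.einsum('ijk,ai,bj->abk', Y, P1, P2) - Yh   # spatial degradation
    res_m = np.einsum('ijk,ck->ijc', Y, P3) - Ym          # spectral degradation
    reg = np.sum(A**2) + np.sum(B**2) + np.sum(C**2)
    return 0.5 * np.sum(res_h**2) + 0.5 * np.sum(res_m**2) + 0.5 * lam * reg

rng = np.random.default_rng(10)
I, J, K, r = 8, 8, 6, 2
A, B, C = (rng.standard_normal(s) for s in [(I, r, r), (r, J, r), (r, r, K)])
P1, P2 = rng.standard_normal((4, I)), rng.standard_normal((4, J))
P3 = rng.standard_normal((3, K))
Y = triple_product(A, B, C)
Yh = np.einsum('ijk,ai,bj->abk', Y, P1, P2)
Ym = np.einsum('ijk,ck->ijc', Y, P3)

# Noise-free data generated by (A, B, C): both fit terms vanish.
print(objective(A, B, C, Yh, Ym, P1, P2, P3, lam=0.0))  # 0.0

# Rescaling (t A, B / t, C) leaves ABC unchanged; only the Tikhonov term differs.
t = 10.0
print(np.allclose(triple_product(t * A, B / t, C), Y))  # True
print(objective(t * A, B / t, C, Yh, Ym, P1, P2, P3, lam=1.0) >
      objective(A, B, C, Yh, Ym, P1, P2, P3, lam=1.0))
```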

4. The L-BFGS algorithm for solving the model

In this section, we focus on numerical approaches for computing a first-order stationary point of the optimization problem (12). Since the limited memory BFGS (L-BFGS) algorithm [21] is powerful for large scale nonlinear unconstrained optimization, we apply it to produce search directions and use an inexact line search to determine the step size. In the computation, we either matricize or vectorize the tensor variables to obtain the gradient of the objective function. For convenience, we present the algorithm and its convergence analysis for

| (13) |

4.1. Limited memory BFGS algorithm

BFGS is a quasi-Newton method that iteratively updates an approximation of the inverse of the Hessian. At the current iteration $k$, it constructs an approximation matrix $H_k$ to estimate the inverse of the Hessian of $f$. The gradient of $f$ at $\mathbf{x}_k$ is denoted by $\mathbf{g}_k = \nabla f(\mathbf{x}_k)$. We begin with the basic BFGS update. Let

| (14) |

and

| (15) |

where is an identity matrix, represents transposition and is a small positive constant. The matrix is updated by

| (16) |
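For reference, here is a hedged sketch of one BFGS update of the inverse-Hessian approximation. It uses the standard form $H_{k+1} = V_k^\top H_k V_k + \rho_k s_k s_k^\top$ with $V_k = I - \rho_k y_k s_k^\top$ and $\rho_k = 1/(y_k^\top s_k)$. Skipping the update when $y_k^\top s_k$ is not safely positive (threshold `eps`) is our illustrative safeguard; the role of the small positive constant in (15) may differ. The usage checks the secant condition $H_{k+1}y_k = s_k$ and positive definiteness.

```python
import numpy as np

def bfgs_inverse_update(H, s, y, eps=1e-10):
    """One BFGS update of the inverse-Hessian approximation H.

    H_new = V^T H V + rho s s^T, V = I - rho y s^T, rho = 1 / (y^T s).
    If the curvature y^T s <= eps, H is returned unchanged (illustrative safeguard).
    """
    sy = float(y @ s)
    if sy <= eps:
        return H
    rho = 1.0 / sy
    V = np.eye(len(s)) - rho * np.outer(y, s)
    return V.T @ H @ V + rho * np.outer(s, s)

rng = np.random.default_rng(4)
M = rng.standard_normal((5, 5))
Q = M @ M.T + 5 * np.eye(5)        # SPD Hessian of a quadratic model
s = rng.standard_normal(5)
y = Q @ s                           # gradient difference for that quadratic
H_new = bfgs_inverse_update(np.eye(5), s, y)
print(np.allclose(H_new @ y, s))                 # secant condition: True
print(np.all(np.linalg.eigvalsh(H_new) > 0))     # positive definite: True
```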

To deal with large-scale optimization problems, Liu and Nocedal [21] proposed the L-BFGS algorithm, which implements BFGS in an economical manner. Given a constant $m$, when $k>m$, only the information from the latest $m$ updates is used to calculate the matrix $H_k$ by the following recursive form

| (17) |

In order to save memory, the initial matrix is replaced by

| (18) |

Here is usually determined by the Barzilai-Borwein method [3] as follows

| (19) |

If $k\le m$, $H_k$ is generated by the traditional BFGS method. The L-BFGS method can be implemented by an inexpensive two-loop recursion, which is shown in Algorithm 1.
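The two-loop recursion computes $H_k\mathbf{g}_k$ without forming $H_k$. The following is a minimal sketch under stated assumptions. The $m$ most recent pairs are stored oldest first. The scaling $\gamma$ uses the Barzilai-Borwein quotient $s^\top y/y^\top y$ of the newest pair by default, with $s^\top s/s^\top y$ as the alternative; which quotient (19) prescribes is not reproduced here. The usage compares the result with the explicit recursion started from $\gamma I$.

```python
import numpy as np

def two_loop_direction(g, s_list, y_list, gamma_rule='sy/yy'):
    """Return d = -H g, where H is the L-BFGS matrix built from the stored
    pairs (oldest first) starting from H0 = gamma * I."""
    if not s_list:
        return -g
    rhos = [1.0 / (y @ s) for s, y in zip(s_list, y_list)]
    q = g.copy()
    alphas = []
    # First loop: newest pair to oldest.
    for s, y, rho in zip(reversed(s_list), reversed(y_list), reversed(rhos)):
        alpha = rho * (s @ q)
        alphas.append(alpha)
        q = q - alpha * y
    # Barzilai-Borwein scaling of the initial matrix from the newest pair.
    s, y = s_list[-1], y_list[-1]
    gamma = (s @ y) / (y @ y) if gamma_rule == 'sy/yy' else (s @ s) / (s @ y)
    r = gamma * q
    # Second loop: oldest pair to newest (alphas reversed back to oldest first).
    for s, y, rho, alpha in zip(s_list, y_list, rhos, reversed(alphas)):
        beta = rho * (y @ r)
        r = r + (alpha - beta) * s
    return -r

rng = np.random.default_rng(5)
n, m = 6, 3
M = rng.standard_normal((n, n))
Q = M @ M.T + n * np.eye(n)          # SPD Hessian of a quadratic model
s_list = [rng.standard_normal(n) for _ in range(m)]
y_list = [Q @ s for s in s_list]
g = rng.standard_normal(n)
d = two_loop_direction(g, s_list, y_list)

# Explicit check: start from gamma * I and apply the m BFGS updates in order.
s_new, y_new = s_list[-1], y_list[-1]
H = (s_new @ y_new) / (y_new @ y_new) * np.eye(n)
for s, y in zip(s_list, y_list):
    rho = 1.0 / (y @ s)
    V = np.eye(n) - rho * np.outer(y, s)
    H = V.T @ H @ V + rho * np.outer(s, s)
print(np.allclose(d, -H @ g), g @ d < 0)  # True True
```

The recursion costs $O(mn)$ operations per iteration instead of the $O(n^2)$ needed to store and apply a dense $H_k$.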

The direction $\mathbf{d}_k = -H_k\mathbf{g}_k$ generated by L-BFGS is a gradient-related descent direction because $H_k$ is a positive definite matrix; the proof is presented in Lemma B.2. We then find a proper step size along this direction that satisfies the Armijo condition. The resulting method for the model (12) is summarized in Algorithm 2.

| (20) |
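Algorithm 2 is not reproduced in this text, so the following is only a generic sketch of the scheme described above: an L-BFGS direction, Armijo backtracking and a curvature safeguard. The parameter values (`m`, `sigma`, `beta`, the tolerance) and the strongly convex test function are our assumptions, not the settings used in Section 6.

```python
import numpy as np

def armijo_step(f, x, fx, g, d, alpha0=1.0, beta=0.5, sigma=1e-4, max_halvings=50):
    """Backtrack until f(x + a d) <= f(x) + sigma * a * g^T d (Armijo condition)."""
    a, gd = alpha0, g @ d
    for _ in range(max_halvings):
        if f(x + a * d) <= fx + sigma * a * gd:
            break
        a *= beta
    return a

def lbfgs_armijo(f, grad, x0, m=5, tol=1e-6, max_iter=400):
    """Generic L-BFGS with BB-scaled H0 and Armijo backtracking (illustrative)."""
    x = x0.copy()
    g, fx = grad(x), f(x)
    S, Ydiff = [], []              # the m most recent (s, y) pairs, oldest first
    k = 0
    for k in range(max_iter):
        if np.linalg.norm(g) <= tol:
            break
        # Two-loop recursion: r = H g with H0 = gamma I.
        q, alphas = g.copy(), []
        rhos = [1.0 / (y @ s) for s, y in zip(S, Ydiff)]
        for s, y, rho in zip(reversed(S), reversed(Ydiff), reversed(rhos)):
            a = rho * (s @ q)
            alphas.append(a)
            q -= a * y
        gamma = (S[-1] @ Ydiff[-1]) / (Ydiff[-1] @ Ydiff[-1]) if S else 1.0
        r = gamma * q
        for s, y, rho, a in zip(S, Ydiff, rhos, reversed(alphas)):
            r += (a - rho * (y @ r)) * s
        d = -r
        step = armijo_step(f, x, fx, g, d)
        x_new = x + step * d
        g_new = grad(x_new)
        s, y = x_new - x, g_new - g
        if y @ s > 1e-12:          # safeguard: keep only pairs with positive curvature
            S.append(s)
            Ydiff.append(y)
            if len(S) > m:
                S.pop(0)
                Ydiff.pop(0)
        x, g, fx = x_new, g_new, f(x_new)
    return x, k

rng = np.random.default_rng(6)
M = rng.standard_normal((8, 8))
Q = M @ M.T + np.eye(8)
b = rng.standard_normal(8)
f = lambda x: 0.5 * x @ Q @ x - b @ x + 0.25 * np.sum(x**4)
grad = lambda x: Q @ x - b + x**3
x_star, iters = lbfgs_armijo(f, grad, np.zeros(8))
print(np.linalg.norm(grad(x_star)) <= 1e-6, iters)
```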

4.2. Gradient calculation

At each iteration of Algorithm 2, we need to compute the gradient of the objective function. The gradient can be calculated by reshaping the tensor variables into vector or matrix form. For small scale problems, the gradient is easier to obtain when the tensor variables are turned into vectors rather than matrices. For large scale problems, however, the vector form of the gradient often requires more memory than is available during the computation. In this subsection, we derive the matrix form of the gradient; the vector form is given in Appendix A.

Denote , , and According to (10) and (11), we get the following equations

| (21) | ||||

| (22) | ||||

| (23) | ||||

| (24) | ||||

| (25) | ||||

| (26) |

Define and The function in (12) is transformed into the objective function of matrix variables , and as follows

| (27) |

| (28) |

| (29) |

For any matrix $X$, we have $\|X\|_F^2 = \mathrm{tr}(X^\top X)$. Therefore, we recall the derivatives of some useful trace functions with respect to $X$

| (30) |
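Because $\|X\|_F^2 = \mathrm{tr}(X^\top X)$, the data-fit terms reduce to trace functions. The standard derivative identities $\partial\,\mathrm{tr}(WX)/\partial X = W^\top$, $\partial\,\mathrm{tr}(X^\top SX)/\partial X = (S+S^\top)X$ and $\partial\,\tfrac12\|AXB-C\|_F^2/\partial X = A^\top(AXB-C)B^\top$ can be verified by central differences. We expect (30) to contain identities of this kind, but the exact list there is not reproduced here. The helper `numerical_grad` is ours.

```python
import numpy as np

def numerical_grad(fun, X, h=1e-6):
    """Central-difference gradient of a scalar function of a matrix X."""
    G = np.zeros_like(X)
    for idx in np.ndindex(*X.shape):
        E = np.zeros_like(X)
        E[idx] = h
        G[idx] = (fun(X + E) - fun(X - E)) / (2.0 * h)
    return G

rng = np.random.default_rng(7)
X = rng.standard_normal((4, 5))
W = rng.standard_normal((5, 4))   # for tr(W X)
S = rng.standard_normal((4, 4))   # for tr(X^T S X); S is not symmetric
A = rng.standard_normal((3, 4))
B = rng.standard_normal((5, 2))
C = rng.standard_normal((3, 2))

# (scalar function of Z, claimed closed-form gradient at Z = X)
checks = [
    (lambda Z: np.trace(W @ Z), W.T),
    (lambda Z: np.trace(Z.T @ S @ Z), (S + S.T) @ X),
    (lambda Z: 0.5 * np.linalg.norm(A @ Z @ B - C, 'fro')**2,
     A.T @ (A @ X @ B - C) @ B.T),
]
results = [np.allclose(numerical_grad(fun, X), G, atol=1e-6) for fun, G in checks]
print(results)  # [True, True, True]
```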

For simplicity, we denote

Thus, the partial derivatives with respect to and are

| (31) |

| (32) |

| (33) |
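Matrix-form gradients such as (31)-(33) can be checked independently. The sketch below computes the gradients of an assumed objective by the chain rule written with `np.einsum`. The objective is hypothetical: two data-fit terms with $\tfrac12$ weights and a $\tfrac{\lambda}{2}$ Tikhonov term on each factor. The result is compared with a central-difference directional derivative. This is not the matricized derivation above, only a way to validate one.

```python
import numpy as np

def f_and_grad(A, B, C, Yh, Ym, P1, P2, P3, lam):
    """Assumed objective and its gradients with respect to A, B, C."""
    Y = np.einsum('iqs,pjs,pqk->ijk', A, B, C)          # triple product
    Rh = np.einsum('ijk,ai,bj->abk', Y, P1, P2) - Yh     # HSI residual
    Rm = np.einsum('ijk,ck->ijc', Y, P3) - Ym            # MSI residual
    f = 0.5 * (np.sum(Rh**2) + np.sum(Rm**2)
               + lam * (np.sum(A**2) + np.sum(B**2) + np.sum(C**2)))
    # Gradient with respect to Y: apply the transposed degradation operators.
    GY = np.einsum('abk,ai,bj->ijk', Rh, P1, P2) + np.einsum('ijc,ck->ijk', Rm, P3)
    # Chain rule through y_ijk = sum_{p,q,s} a_iqs b_pjs c_pqk.
    gA = np.einsum('ijk,pjs,pqk->iqs', GY, B, C) + lam * A
    gB = np.einsum('ijk,iqs,pqk->pjs', GY, A, C) + lam * B
    gC = np.einsum('ijk,iqs,pjs->pqk', GY, A, B) + lam * C
    return f, (gA, gB, gC)

rng = np.random.default_rng(8)
I, J, K, r, lam = 6, 6, 5, 2, 0.1
A = rng.standard_normal((I, r, r))
B = rng.standard_normal((r, J, r))
C = rng.standard_normal((r, r, K))
P1, P2 = rng.standard_normal((3, I)), rng.standard_normal((3, J))
P3 = rng.standard_normal((2, K))
Yh, Ym = rng.standard_normal((3, 3, K)), rng.standard_normal((I, J, 2))

f0, grads = f_and_grad(A, B, C, Yh, Ym, P1, P2, P3, lam)
dirs = [rng.standard_normal(X.shape) for X in (A, B, C)]
h = 1e-6
f_plus, _ = f_and_grad(*(X + h * D for X, D in zip((A, B, C), dirs)), Yh, Ym, P1, P2, P3, lam)
f_minus, _ = f_and_grad(*(X - h * D for X, D in zip((A, B, C), dirs)), Yh, Ym, P1, P2, P3, lam)
fd = (f_plus - f_minus) / (2 * h)
analytic = sum(np.sum(G * D) for G, D in zip(grads, dirs))
print(abs(fd - analytic) <= 1e-5 * abs(analytic))
```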

5. Convergence analysis

In this section, we analyze the convergence of the sequence of iterates $\{\mathbf{x}_k\}$ generated by Algorithm 2 and show that our proposed method is globally convergent.

Lemma 5.1.

There exists a positive number such that

| (34) |

Proof.

Furthermore, we have the following Lemma 5.2.

Lemma 5.2.

There exist constants and satisfy ,

| (35) |

Proof.

The proof can be found in the Appendix B. ∎

Because $\{f(\mathbf{x}_k)\}$ is bounded and monotonically decreasing, the sequence of function values converges. The conclusion is given in Theorem 5.3.

Theorem 5.3.

Assume that Algorithm 2 generates an infinite sequence of function values . Then, there exists a constant such that

| (36) |

Next, we prove that every accumulation point of the iterates is a first-order stationary point. Finally, by utilizing the Kurdyka-Łojasiewicz property [2], we show that the sequence of iterates is also convergent. The following lemma shows that the step size is bounded below.

Lemma 5.4.

There exists a constant such that

| (37) |

Proof.

The following theorem proves that every accumulation point of iterates is a first-order stationary point.

Theorem 5.5.

Suppose that Algorithm 2 generates an infinite sequence of iterates . Then,

| (40) |

Proof.

Analysis of proximal methods for nonconvex and nonsmooth optimization frequently uses the Kurdyka-Łojasiewicz (KL) property [2]. Since the objective function is a polynomial, the KL property below holds, and we use it to prove the convergence of the algorithm.

Proposition 5.6.

(Kurdyka-Łojasiewicz (KL) property) Suppose that is a stationary point of . There is a neighborhood of , an exponent and a positive constant such that for all , the following inequality holds:

| (44) |

In particular, we define .

Lemma 5.7.

Suppose that is a stationary point of and is a neighborhood of Let be an initial point satisfying

| (45) |

Then, the following assertions hold:

| (46) |

and

| (47) |

Proof.

The lemma is proved by induction. Obviously, . Now, we assume for all and the KL property holds at these points. Define a concave function

| (48) |

Its derivative function is

| (49) |

For , the first-order condition of the concave function at is

| (50) | ||||

Equation (50) and the KL property imply

| (51) |

By Lemma 5.2 and (41), we have

| (52) |

where the last inequality is valid because

| (53) |

The upper bound of is

| (54) | ||||

which means and (46) holds. Moreover, according to (52), we obtain

| (55) |

Thus, the proof of (47) is complete. ∎

Next, the sequence of iterates is shown to converge to a unique accumulation point.

Theorem 5.8.

Suppose that Algorithm 2 generates an infinite sequence of iterates $\{\mathbf{x}_k\}$. Then $\{\mathbf{x}_k\}$ converges to a unique first-order stationary point $\mathbf{x}^*$, i.e.

| (56) |

6. Numerical experiments

In this section, we demonstrate the performance of our proposed TTDSR method on two datasets. The method is implemented with parameters , , , . The stopping criterion is

or

The maximum number of iterations is set to 400. All simulations are run on an HP notebook with a 2.5 GHz Intel Core i5 and 4 GB RAM. We use Tensorlab 3.0 [26] for basic tensor operations.

In the numerical experiments, the groundtruth SRI is artificially degraded to the HSI and MSI based on the degradation model (7). For the spatial degradation matrices, we follow Wald's protocol [36], and the degradation from SRI to HSI combines spatial blurring with a Gaussian kernel and downsampling. The downsampling and the Gaussian kernel have parameters $d$ and $q$, respectively. It is common to set the downsampling ratio $d=4$ and $q=9$ in the Gaussian kernel. In the following experiments, we also conduct simulations with $d=6$, $q=9$ and with $d=4$, $q=5$. To obtain the MSI from the SRI, we generate the spectral degradation matrix from the spectral specifications of multispectral sensors, taken from the LANDSAT or QuickBird specifications. The Indian Pines and Salinas-A scene datasets are available online at [14]. For Indian Pines, the groundtruth SRI is degraded with the former sensor (LANDSAT), while the SRI of the Salinas-A scene is degraded with the latter (QuickBird), as in [28]. The dimensions of the HSI, MSI and SRI images are listed in Table 1.
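One common way to build the spatial operators is $P_i = S_iH_i$. Here $H_i$ blurs along one spatial mode with a 1-D Gaussian kernel, and $S_i$ keeps every $d$-th row. The sketch below is hedged. It treats $q$ as the kernel length, and the standard deviation `sigma` and the zero boundary handling are our assumptions, since the text does not specify them. Function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(q, sigma):
    """Normalized 1-D Gaussian kernel of length q."""
    t = np.arange(q) - (q - 1) / 2.0
    w = np.exp(-t**2 / (2.0 * sigma**2))
    return w / w.sum()

def spatial_operator(n, d, q, sigma):
    """P = S H: same-size Gaussian blur with zero boundary, then keep every d-th row."""
    w = gaussian_kernel(q, sigma)
    half = (q - 1) // 2
    H = np.zeros((n, n))
    for i in range(n):
        for t, wt in enumerate(w):
            j = i + t - half
            if 0 <= j < n:          # taps falling outside the image are dropped
                H[i, j] = wt
    S = np.eye(n)[::d]              # downsampling by ratio d
    return S @ H

P1 = spatial_operator(n=16, d=4, q=9, sigma=2.0)
print(P1.shape)  # (4, 16)
```

Rows whose kernel support lies entirely inside the image sum to one; the boundary rows lose mass because of the zero padding, which is one reason other boundary conventions are sometimes preferred.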

| Image name | SRI | HSI | MSI |
|---|---|---|---|
| Indian pines, d=4 | | | |
| Indian pines, d=6 | | | |
| Salinas-A scene | | | |

6.1. Comparison with other algorithms

In this subsection, we compare the proposed algorithm with state-of-the-art approaches, including HySure [31] and FUSE [35], which are based on matrix decompositions. Furthermore, the CP-based method STEREO [20] and the Tucker-based method SCOTT [28] are also considered. HySure, proposed by Simões et al., is a convex formulation for the SRI via subspace-based regularization, while FUSE, proposed by Wei et al., performs fast fusion of multiband images by solving a Sylvester equation. To evaluate the fusion quality, we calculate the following metrics used in [28]: the reconstruction signal-to-noise ratio (R-SNR), the correlation coefficient (CC), the spectral angle mapper (SAM) and the relative dimensionless global error in synthesis (ERGAS). R-SNR and CC are given by

| (58) |

and

| (59) |

where is the Pearson correlation coefficient between the original and estimated spectral slices. The metric SAM is

| (60) |

which calculates the angle between the original and estimated spectral fibers. The performance measure ERGAS is

| (61) |

where is the mean value of . It represents the relative dimensionless global error between SRI and the estimated one. It is the root mean-square error averaged by the size of the SRI.
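The displays (58)-(61) are not reproduced here. The sketch below therefore implements these metrics in their commonly used forms. We believe these match [28], but scaling conventions (for example SAM in degrees and the $100/d$ factor in ERGAS) should be checked against the original. Function names are ours.

```python
import numpy as np

def r_snr(Y, Y_hat):
    """Reconstruction SNR (dB): 10 log10(||Y||_F^2 / ||Y_hat - Y||_F^2)."""
    return 10.0 * np.log10(np.sum(Y**2) / np.sum((Y_hat - Y)**2))

def cc(Y, Y_hat):
    """Mean Pearson correlation between spectral slices Y[:, :, k] and Y_hat[:, :, k]."""
    return np.mean([np.corrcoef(Y[:, :, k].ravel(), Y_hat[:, :, k].ravel())[0, 1]
                    for k in range(Y.shape[2])])

def sam(Y, Y_hat, eps=1e-12):
    """Mean spectral angle (degrees) between fibers Y[i, j, :] and Y_hat[i, j, :]."""
    num = np.sum(Y * Y_hat, axis=2)
    den = np.linalg.norm(Y, axis=2) * np.linalg.norm(Y_hat, axis=2) + eps
    return np.degrees(np.arccos(np.clip(num / den, -1.0, 1.0))).mean()

def ergas(Y, Y_hat, d):
    """ERGAS for downsampling ratio d: (100/d) sqrt(mean_k (RMSE_k / mu_k)^2)."""
    rmse = np.sqrt(np.mean((Y_hat - Y)**2, axis=(0, 1)))
    mu = np.mean(Y, axis=(0, 1))
    return 100.0 / d * np.sqrt(np.mean((rmse / mu)**2))

rng = np.random.default_rng(9)
Y = rng.random((10, 10, 5)) + 0.5
Y_noisy = Y + 0.01 * rng.standard_normal(Y.shape)
print(r_snr(Y, 2 * Y))                  # 0.0: the error equals Y itself
print(np.isclose(cc(Y, 3 * Y + 1), 1))  # True: CC ignores affine rescaling
print(sam(Y, 5 * Y) < 1e-3)             # True: SAM ignores scaling of fibers
print(ergas(Y, Y, d=4))                 # 0.0 for a perfect reconstruction
print(r_snr(Y, Y_noisy), cc(Y, Y_noisy), sam(Y, Y_noisy), ergas(Y, Y_noisy, d=4))
```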

| Setting | Algorithm | R-SNR | CC | SAM | ERGAS | TIME(s) |
|---|---|---|---|---|---|---|
| | best value | ∞ | 1 | 0 | 0 | - |
| d=4, q=9 | STEREO | 24.8691 | 0.8335 | 2.8220 | 1.2812 | 2.3025 |
| d=4, q=9 | SCOTT | 16.4046 | 0.7617 | 7.2446 | 2.3651 | 0.5865 |
| d=4, q=9 | HySure | 18.9055 | 0.6971 | 5.7052 | 2.3045 | 39.9998 |
| d=4, q=9 | FUSE | 10.3359 | 0.6126 | 13.8561 | 4.5692 | 0.3635 |
| d=4, q=9 | TTDSR | 17.0350 | 0.6712 | 6.7452 | 3.1165 | 2.3624 |
| d=6, q=9 | STEREO | 23.7512 | 0.7874 | 3.2923 | 0.9875 | 2.1344 |
| d=6, q=9 | SCOTT | 17.1569 | 0.7414 | 6.7436 | 1.5801 | 0.8730 |
| d=6, q=9 | HySure | 17.8228 | 0.6879 | 6.4205 | 1.6793 | 38.6143 |
| d=6, q=9 | FUSE | 11.9157 | 0.6134 | 11.8385 | 2.8082 | 0.3006 |
| d=6, q=9 | TTDSR | 17.0350 | 0.6712 | 6.7452 | 2.0777 | 2.1966 |
| d=4, q=5 | STEREO | 24.8723 | 0.8338 | 2.8213 | 1.2791 | 1.7986 |
| d=4, q=5 | SCOTT | 15.5634 | 0.7218 | 7.6436 | 2.9810 | 0.5197 |
| d=4, q=5 | HySure | 16.8333 | 0.6729 | 6.9514 | 2.8192 | 37.9540 |
| d=4, q=5 | FUSE | 9.5533 | 0.5678 | 14.6419 | 5.8727 | 0.3169 |
| d=4, q=5 | TTDSR | 17.0350 | 0.6712 | 6.7452 | 3.1165 | 2.386 |

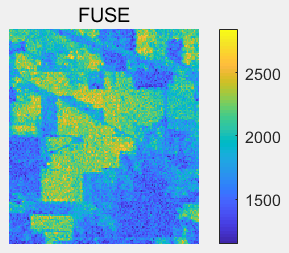

In practical applications, the hyperspectral and multispectral images acquired by sensors are commonly noisy. Therefore, in the experiments, we add white Gaussian noise to the HSI and MSI. The first experiment is performed on the Indian Pines dataset from the hyperspectral remote sensing data platform [14]. The SNR of the white Gaussian noise added to the HSI is 21 dB, while that of the noise added to the MSI is 25 dB. The results are presented in Table 2 and Figure 3. The rank of the Tucker decomposition in SCOTT is . According to [20], tensor rank of the STEREO method often yields good performance. For the HySure method, 'E' represents the groundtruth number of materials and is set to , which is chosen as the number of endmembers as in [20]. In Table 2, when $d=4$ and $q=9$, the STEREO method performs the best, and our proposed algorithm outperforms FUSE in terms of R-SNR, CC, SAM and ERGAS. The HySure method performs comparably to our algorithm but requires much more computational time. Moreover, our proposed algorithm obtains a higher R-SNR value and a lower SAM value than SCOTT. Figure 3 also provides an intuitive display of the super-resolution images.
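The text specifies only the SNR levels. The following is a minimal sketch of adding white Gaussian noise at a prescribed SNR. It assumes the noise variance is set from the mean signal power of the whole image, which is one common convention; a per-band choice is also possible. The image sizes and function names are hypothetical.

```python
import numpy as np

def add_white_noise(X, snr_db, rng):
    """Return X + N with i.i.d. Gaussian N whose variance makes
    10 log10(mean(X^2) / noise_variance) equal to snr_db."""
    noise_var = np.mean(X**2) / 10.0**(snr_db / 10.0)
    return X + rng.normal(0.0, np.sqrt(noise_var), size=X.shape)

def empirical_snr(clean, noisy):
    """Measured SNR (dB) of a noisy copy against its clean reference."""
    return 10.0 * np.log10(np.sum(clean**2) / np.sum((noisy - clean)**2))

rng = np.random.default_rng(11)
hsi = rng.random((40, 40, 50))    # hypothetical clean HSI
msi = rng.random((160, 160, 6))   # hypothetical clean MSI
hsi_noisy = add_white_noise(hsi, 21.0, rng)
msi_noisy = add_white_noise(msi, 25.0, rng)
print(empirical_snr(hsi, hsi_noisy), empirical_snr(msi, msi_noisy))
```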

In addition, Table 2 reports the results of the different algorithms on Indian Pines under the conditions $d=6$, $q=9$ and $d=4$, $q=5$. The values of $d$ and $q$ appear to affect the performance of all methods to some degree. However, the ranking of the methods under each evaluation metric hardly changes as $d$ and $q$ vary.

| Setting | Algorithm | R-SNR | CC | SAM | ERGAS | TIME(s) |
|---|---|---|---|---|---|---|
| | best value | ∞ | 1 | 0 | 0 | - |
| d=4, q=9 | STEREO | 17.1952 | 0.987 | 0.4548 | 4.3075 | 1.1343 |
| d=4, q=9 | SCOTT | 18.8878 | 0.9903 | 0.3651 | 3.8203 | 0.1726 |
| d=4, q=9 | HySure | 18.3815 | 0.9890 | 0.3519 | 4.0037 | 9.0540 |
| d=4, q=9 | FUSE | 9.5258 | 0.8919 | 0.3769 | 12.2427 | 0.1248 |
| d=4, q=9 | TTDSR | 17.1458 | 0.9858 | 0.1089 | 4.5147 | 1.5402 |

In the second example, the Salinas-A scene dataset comes from the hyperspectral remote sensing data platform, which is available in [28]. Similarly, white Gaussian noise is added to the HSI and MSI with an input SNR of dB. We again conduct experiments on this dataset under the conditions $d=4$, $q=9$, and the results are shown in Table 3. We find that when $d=6$ or $q=5$, the metrics of the listed algorithms are almost the same as those for $d=4$, $q=9$, so we omit the results under these two conditions. In Table 3, compared with STEREO, our proposed algorithm has a comparable signal-to-noise ratio and running time. Compared with the other algorithms, our method achieves the lowest SAM value. Furthermore, TTDSR clearly performs better than FUSE. The super-resolution images obtained by the different algorithms are shown in Figure 4. Consistent with its low SAM value, the image recovered by the TTDSR algorithm is relatively clear.

6.2. Further numerical results of TTDSR

In this subsection, we further show numerical results of TTDSR on the Indian Pines dataset. The curve in Figure 5(a) displays the objective function value at each iteration, which verifies the theoretical conclusion that the sequence of function values is decreasing.

In theory, the SRI is a low rank tensor. However, in the numerical experiments, we have no prior knowledge of the triple rank of the SRI tensor, and the rank is specified manually. For the Indian Pines dataset, we run the TTDSR algorithm ten times with the triple rank ranging from 1 to 10. In Figure 5(b), we show the R-SNR values corresponding to the different triple ranks. In this example, the best R-SNR is attained when the triple rank is 1.

From the above analysis, we can see that among all algorithms, the HySure method performs competitively but costs much more time than the others. This is because it solves a convex optimization problem with vector total variation (TV) regularization, where the TV regularizer measures the differences of the image in the horizontal and vertical directions. Our method has a significant advantage over SCOTT in that we only need to choose a single triple rank, while the rank of the Tucker decomposition [28] is an array of mode ranks.

7. Conclusion

In this work, we provide a novel tensor triple decomposition model for hyperspectral super-resolution. First, in order to capture the global interdependence between the different modes of hyperspectral data, we use the triple rank to characterize their low rank structure. Then we propose an optimization algorithm, TTDSR, to obtain the desired hyperspectral super-resolution image. Using the triple decomposition theorem, we obtain the gradient of the objective function of the model in closed form, which greatly facilitates solving the problem. Owing to the algebraic nature of the objective function, we apply the Kurdyka-Łojasiewicz property to analyze the convergence of the sequence of iterates generated by TTDSR. In addition, experiments on two datasets show the feasibility and effectiveness of TTDSR. This work opens up new prospects for hyperspectral super-resolution using various tensor decompositions.

Appendix A The process of vectorization of the variable

The gradient of the objective function in (12) is calculated by vectorization of the variable as

| (62) |

We directly vectorize the optimization variables of (12) according to the preset ordering rule when dealing with small-scale data. First, note that Second, vectorizing the known tensors , , we get

The symbol indicates the vectorization operator. From (21), it follows that

Hence,

It is easy to see

Similarly, from (24), it follows that

Hence,

and

For , we have

In a word,

| (63) |

From (22) and (25), it follows that

and

Therefore,

| (64) | ||||

It also follows from (23) and (26) that

and

Thus,

| (65) |

Then, its gradient is

| (66) |
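The vectorized gradient is memory-hungry for a simple reason. For fixed $\mathcal{B}$ and $\mathcal{C}$, $\mathcal{ABC}$ is linear in $\mathcal{A}$, so $\mathrm{vec}(\mathcal{ABC}) = M\,\mathrm{vec}(\mathcal{A})$ for a dense matrix $M$ of size $IJK\times Ir^2$. The sketch below uses names of our own and is not the exact formulation of (62)-(66). It builds $M$ explicitly and checks that $M^\top\mathrm{vec}(\mathcal{R})$ reproduces the tensor-form gradient of $\tfrac12\|\mathcal{ABC}-\mathcal{T}\|_F^2$. It then estimates the storage of $M$ for hypothetical image sizes.

```python
import numpy as np

def jacobian_wrt_A(B, C, I):
    """Dense matrix M with vec(ABC) = M vec(A), vec taken column-major.

    Entry [(i, j, k), (i', q, s)] equals delta(i, i') * sum_p b_pjs c_pqk.
    """
    r, J, _ = B.shape
    K = C.shape[2]
    core = np.einsum('pjs,pqk->jkqs', B, C)
    T = np.einsum('ab,jkqs->ajkbqs', np.eye(I), core)
    # order='F' makes the left index vary fastest on both sides.
    return T.reshape(I * J * K, I * r * r, order='F')

def vec(X):
    """Column-major vectorization (left index fastest)."""
    return X.reshape(-1, order='F')

rng = np.random.default_rng(12)
I, J, K, r = 5, 4, 3, 2
A = rng.standard_normal((I, r, r))
B = rng.standard_normal((r, J, r))
C = rng.standard_normal((r, r, K))
Y = np.einsum('iqs,pjs,pqk->ijk', A, B, C)
M = jacobian_wrt_A(B, C, I)
print(M.shape, np.allclose(M @ vec(A), vec(Y)))  # (60, 20) True

# Gradient of 0.5 ||ABC - T||_F^2 w.r.t. A: vector form versus tensor form.
T_target = rng.standard_normal((I, J, K))
R = Y - T_target
g_vec = M.T @ vec(R)
g_tensor = np.einsum('ijk,pjs,pqk->iqs', R, B, C)
print(np.allclose(g_vec, vec(g_tensor)))  # True

# Dense M has (IJK) x (I r^2) entries; for hypothetical sizes I = J = 144,
# K = 200, r = 10 that is about 478 GB in float64.
print(144 * 144 * 200 * 144 * 10 * 10 * 8 / 1e9)
```

The tensor-form gradient never materializes $M$, which is why the matrix form of Section 4.2 is preferred for large images.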

Appendix B We introduce five lemmas for the proof of Lemma 5.2

First, we consider the BFGS update .

Lemma B.1.

Suppose that is generated by . Then, we have

| (67) |

Proof.

Lemma B.2.

Suppose that is positive definite and is generated by BFGS . Let be the smallest eigenvalue of a symmetric matrix . Then, we get is positive definite and

| (71) |

Proof.

For any unit vector , we have

| (72) |

Let and

| (73) |

Because is positive definite, is convex and attains its minimum at . Hence,

| (74) | ||||

where the penultimate inequality holds because the Cauchy-Schwarz inequality is valid for the positive definite matrix norm ,

| (75) |

So is also positive definite. From (68), it is easy to verify that

| (76) |

Thus, we have

| (77) |

Hence, we get the validation of (71). ∎

Second, we turn to L-BFGS. Regardless of the selection of in (19), we get the following lemma.

Lemma B.3.

Suppose that the parameter takes Barzilai-Borwein steps . Then, we have

| (78) |

Proof.

Lemma B.4.

Suppose that the approximation of a Hessian’s inverse is generated by L-BFGS . Then, there exists a positive constant such that

| (82) |

Proof.

Lemma B.5.

Suppose that the approximation of a Hessian’s inverse is generated by L-BFGS . Then, there exists a constant such that

| (84) |

References

- [1] B. Aiazzi, S. Baronti and M. Selva, Improving component substitution pansharpening through multivariate regression of MS+Pan data, IEEE Trans. Geosci. Remote Sens., 45 (2007), 3230-3239.

- [2] H. Attouch, J. Bolte, P. Redont and A. Soubeyran, Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka-Łojasiewicz inequality, Math. Oper. Res., 35 (2010), 438-457.

- [3] J. Barzilai and J. M. Borwein, Two-point step size gradient methods, IMA J. Numer. Anal., 8 (1988), 141-148.

- [4] J. M. Bioucas-Dias, A. Plaza, G. Camps-Valls, P. Scheunders, N. Nasrabadi and J. Chanussot, Hyperspectral remote sensing data analysis and future challenges, IEEE Geosci. Remote Sens. Mag., 1 (2013), 6-36.

- [5] R. A. Borsoi, C. Prévost, K. Usevich, D. Brie, J. C. Bermudez and C. Richard, Coupled tensor decomposition for hyperspectral and multispectral image fusion with inter-image variability, IEEE J. Sel. Top. Signal Process., 15 (2021), 702-717.

- [6] P. Chavez, S. C. Sides and J. A. Anderson, Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic, Photogramm. Eng. Remote Sens., 57 (1991), 295-303.

- [7] C. I. Chang and S. S. Chiang, Anomaly detection and classification for hyperspectral imagery, IEEE Trans. Geosci. Remote Sens., 40 (2002), 1314-1325.

- [8] G. Camps-Valls and L. Bruzzone, Kernel-based methods for hyperspectral image classification, IEEE Trans. Geosci. Remote Sens., 43 (2005), 1351-1362.

- [9] Z. Chen, H. Pu, B. Wang and G. M. Jiang, Fusion of hyperspectral and multispectral images: A novel framework based on generalization of pan-sharpening methods, IEEE Geosci. Remote Sens. Lett., 11 (2014), 1418-1422.

- [10] J. Chang, Y. Chen and L. Qi, Computing eigenvalues of large scale sparse tensors arising from a hypergraph, SIAM J. Sci. Comput., 38 (2016), A3618-A3643.

- [11] Y. Chen, X. Zhang, L. Qi and Y. Xu, A Barzilai-Borwein gradient algorithm for spatio-temporal internet traffic data completion via tensor triple decomposition, J. Sci. Comput., 88 (2021), 1-24.

- [12] E. J. Candès, X. Li, Y. Ma and J. Wright, Robust principal component analysis?, J. ACM, 58 (2011), 1-37.

- [13] R. Dian and S. Li, Hyperspectral image super-resolution via subspace-based low tensor multi-rank regularization, IEEE Trans. Image Process., 28 (2019), 5135-5146.

- [14] GIC, Grupo de inteligencia computacional, 2014. Available from: http://www.ehu.eus/ccwintco/index.php?title=HyperspectralRemoteSensingScenes.

- [15] R. C. Hardie, M. T. Eismann and G. L. Wilson, MAP estimation for hyperspectral image resolution enhancement using an auxiliary sensor, IEEE Trans. Image Process., 13 (2004), 1174-1184.

- [16] W. He, Y. Chen, N. Yokoya, C. Li and Q. Zhao, Hyperspectral super-resolution via coupled tensor ring factorization, Pattern Recognit., 122 (2022), 108280.

- [17] T. Imbiriba, R. A. Borsoi and J. C. M. Bermudez, Low-rank tensor modeling for hyperspectral unmixing accounting for spectral variability, IEEE Trans. Geosci. Remote Sens., 58 (2019), 1833-1842.

- [18] N. Keshava and J. F. Mustard, Spectral unmixing, IEEE Signal Process. Mag., 19 (2002), 44-57.

- [19] R. Kawakami, Y. Matsushita, J. Wright, M. Ben-Ezra, Y. W. Tai and K. Ikeuchi, High-resolution hyperspectral imaging via matrix factorization, Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, (2011), 2329-2336.

- [20] C. I. Kanatsoulis, X. Fu, N. D. Sidiropoulos and W. K. Ma, Hyperspectral super-resolution: A coupled tensor factorization approach, IEEE Trans. Signal Process., 66 (2018), 6503-6517.

- [21] D. C. Liu and J. Nocedal, On the limited memory BFGS method for large scale optimization, Math. Program., 45 (1989), 503-528.

- [22] J. G. Liu, Smoothing filter-based intensity modulation: A spectral preserve image fusion technique for improving spatial details, Int. J. Remote Sens., 21 (2000), 3461-3472.

- [23] X. Liu, S. Bourennane and C. Fossati, Denoising of hyperspectral images using the PARAFAC model and statistical performance analysis, IEEE Trans. Geosci. Remote Sens., 50 (2012), 3717-3724.

- [24] S. Li, R. Dian, L. Fang and J. M. Bioucas-Dias, Fusing hyperspectral and multispectral images via coupled sparse tensor factorization, IEEE Trans. Image Process., 27 (2018), 4118-4130.

- [25] D. Manolakis and G. Shaw, Detection algorithms for hyperspectral imaging applications, IEEE Signal Process. Mag., 19 (2002), 29-43.

- [26] N. Vervliet, O. Debals, L. Sorber, M. Van Barel and L. De Lathauwer, Tensorlab 3.0, 2016. Available from: http://www.tensorlab.net.

- [27] X. Otazu, M. González-Audícana and O. Fors, Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods, IEEE Trans. Geosci. Remote Sens., 43 (2005), 2376-2385.

- [28] C. Prévost, K. Usevich, P. Comon and D. Brie, Hyperspectral super-resolution with coupled Tucker approximation: Recoverability and SVD-based algorithms, IEEE Trans. Signal Process., 68 (2020), 931-946.

- [29] L. Qi, Y. Chen, M. Bakshi and X. Zhang, Triple decomposition and tensor recovery of third order tensors, SIAM J. Matrix Anal. Appl., 42 (2021), 299-329.

- [30] M. Selva, B. Aiazzi, F. Butera, L. Chiarantini and S. Baronti, Hyper-sharpening: A first approach on SIM-GA data, IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens., 8 (2015), 3008-3024.

- [31] M. Simoes, J. Bioucas-Dias, L. B. Almeida and J. Chanussot, A convex formulation for hyperspectral image superresolution via subspace-based regularization, IEEE Trans. Geosci. Remote Sens., 53 (2014), 3373-3388.

- [32] G. Vivone, L. Alparone, J. Chanussot, M. Dalla Mura, A. Garzelli, G. A. Licciardi and L. Wald, A critical comparison among pansharpening algorithms, IEEE Trans. Geosci. Remote Sens., 53 (2014), 2565-2586.

- [33] M. A. Veganzones, J. E. Cohen, R. C. Farias, J. Chanussot and P. Comon, Nonnegative tensor CP decomposition of hyperspectral data, IEEE Trans. Geosci. Remote Sens., 54 (2015), 2577-2588.

- [34] Q. Wei, J. Bioucas-Dias, N. Dobigeon and J. Y. Tourneret, Hyperspectral and multispectral image fusion based on a sparse representation, IEEE Trans. Geosci. Remote Sens., 53 (2015), 3658-3668.

- [35] Q. Wei, N. Dobigeon and J. Y. Tourneret, Fast fusion of multi-band images based on solving a Sylvester equation, IEEE Trans. Image Process., 24 (2015), 4109-4121.

- [36] L. Wald, T. Ranchin and M. Mangolini, Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images, Photogramm. Eng. Remote Sens., 63 (1997), 691-699.

- [37] Y. Xu, Z. Wu, J. Li, A. Plaza and Z. Wei, Anomaly detection in hyperspectral images based on low-rank and sparse representation, IEEE Trans. Geosci. Remote Sens., 54 (2015), 1990-2000.

- [38] X. Xu and Z. Shi, Multi-objective based spectral unmixing for hyperspectral images, ISPRS J. Photogramm. Remote Sens., 124 (2017), 54-69.

- [39] T. Xu, T. Z. Huang, L. J. Deng, X. L. Zhao and J. Huang, Hyperspectral image superresolution using unidirectional total variation with Tucker decomposition, IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens., 13 (2020), 4381-4398.

- [40] N. Yokoya, T. Yairi and A. Iwasaki, Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion, IEEE Trans. Geosci. Remote Sens., 50 (2011), 528-537.

- [41] N. Yokoya, C. Grohnfeldt and J. Chanussot, Hyperspectral and multispectral data fusion: A comparative review of the recent literature, IEEE Geosci. Remote Sens. Mag., 5 (2017), 29-56.

- [42] K. Zhang, M. Wang, S. Yang and L. Jiao, Spatial-spectral-graph-regularized low-rank tensor decomposition for multispectral and hyperspectral image fusion, IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens., 11 (2018), 1030-1040.

- [43] K. Zhang, M. Wang and S. Yang, Multispectral and hyperspectral image fusion based on group spectral embedding and low-rank factorization, IEEE Trans. Geosci. Remote Sens., 55 (2016), 1363-1371.

- [44] M. Zare, M. S. Helfroush, K. Kazemi and P. Scheunders, Hyperspectral and multispectral image fusion using coupled non-negative Tucker tensor decomposition, Remote Sens., 13 (2021), 2930.