Hyperparameter Optimization: Foundations, Algorithms, Best Practices and Open Challenges

?abstractname?

Most machine learning algorithms are configured by a set of hyperparameters whose values must be carefully chosen and which often considerably impact performance. To avoid a time-consuming and irreproducible manual process of trial-and-error to find well-performing hyperparameter configurations, various automatic hyperparameter optimization (HPO) methods – e.g., based on resampling error estimation for supervised machine learning – can be employed. After introducing HPO from a general perspective, this paper reviews important HPO methods, from simple techniques such as grid or random search to more advanced methods like evolution strategies, Bayesian optimization, Hyperband and racing. This work gives practical recommendations regarding important choices to be made when conducting HPO, including the HPO algorithms themselves, performance evaluation, how to combine HPO with machine learning pipelines, runtime improvements, and parallelization. This work is accompanied by an appendix that contains information on specific software packages in R and Python, as well as information and recommended hyperparameter search spaces for specific learning algorithms. We also provide notebooks that demonstrate concepts from this work as supplementary files.

1 Introduction

Machine learning (ML) algorithms are highly configurable by their hyperparameters (HPs). These parameters often substantially influence the complexity, behavior, speed as well as other aspects of the learner, and their values must be selected with care in order to achieve optimal performance. Human trial-and-error to select these values is time-consuming, often somewhat biased, error-prone and computationally irreproducible.

As the mathematical formalization of hyperparameter optimization (HPO) is essentially black-box optimization, often in a higher-dimensional space, this is better delegated to appropriate algorithms and machines to increase efficiency and ensure reproducibility. Many HPO methods have been developed to assist in and automate the search for well-performing hyperparameter configuration (HPCs) over the last 20 to 30 years. However, more sophisticated HPO approaches in particular are not as widely used as they could (or should) be in practice. We postulate that the reason for this may be a combination of the following factors:

-

•

poor understanding of HPO methods by potential users, who may perceive them as (too) complex ?black-boxes?;

-

•

poor confidence of potential users in the superiority of HPO methods over trivial approaches and resulting skepticism of the expected return on (time) investment;

-

•

missing guidance on the choice and configuration of HPO methods for the problem at hand;

-

•

difficulty to define the search space of an HPO process appropriately.

With these obstacles in mind, this paper formally and algorithmically introduces HPO, with many hints for practical application. Our target audience are scientists and users with a basic knowledge of ML and evaluation.

In this article, we mainly discuss HP for supervised ML, which is arguably the default scenario for HPO. We mainly do this to keep notation simple and to not overwhelm less experienced readers, especially for less experienced readers. Nevertheless, all covered techniques can be applied to practically any algorithm in ML in which the algorithm is trained on a collection of instances and performance is quantitatively measurable – e.g., in semi-supervised learning, reinforcement learning, and potentially even unsupervised learning111where the measurement of performance is arguably much less straightforward, especially via a single metric..

Subsequent sections of this paper are organized as follows: Section 2 discusses related work. Section 3 introduces the concept of supervised ML and discusses the evaluation of ML algorithms. The principle of HPO is introduced in Section 4. Major classes of HPO methods are described, including their strengths and limitations. The problem of over-tuning, the handling of noise in the context of HPO, and the topic of threshold tuning are also addressed. Section 5 introduces the most common preprocessing steps and the concept of ML pipelines, which enables us to include preprocessing and model selection within HPO. Section 6 offers practical recommendations on how to choose resampling strategies as well as define tuning search spaces, provides guidance on which HPO algorithm to use, and describes how HPO can be parallelized. In Section 7, we also briefly discuss how HPO directly connects to a much broader field of algorithm selection and configuration beyond ML and other related fields. Section 8 concludes with a discussion of relevant open issues in HPO.

The appendices contain additional material of particularly practical relevance for HPO: Appendix LABEL:app:important_ml_algorithms lists the most popular ML algorithms, describes some of their properties, and proposes sensible HP search spaces; Appendix A does the same for preprocessing methods; Appendix B contains a table of common evaluation metrics; Appendix C lists relevant considerations and software packages for ML and HPO in the two popular ML scripting languages R (C.1) and Python (C.2). Furthermore, we provide several R markdown notebooks as ancillary files which demonstrate many practical HPO concepts and implement them in mlr3 [226].

2 Related Work

As one of the most studied sub-fields of automated ML (AutoML), there exist several previous surveys on HPO. [45] offered a thorough overview about existing HPO approaches, open challenges, and future research directions. In contrast to our paper, however, that work does not focus on specific advice for issues that arise in practice. [145] provide a very high-level overview of search spaces, HPO techniques, and tools. Although we expect that the paper by [145] will be a more accessible paper for first-time users of HPO compared to the survey by [45], it does not explain HPO’s mathematical and algorithmic details or practical tips on how to apply HPO efficiently. Last but not least, [2] provides an overview about HPO methods, but with a focus on computational complexity aspects. We see this work described here as filling the gap between these papers by providing all necessary details both for first-time users of HPO as well as experts in ML and data science who seek to understand the concepts of HPO in sufficient depth.

Our focus is on providing a general overview of HPO without a special focus on concrete ML model classes. However, since the ML field has many large sub-communities by now, there are also several specialized HPO and AutoML surveys. For example, [58] and [133] focus on AutoML for deep learning models, [78] on HPO for forecasting models in smart grids, and [149] on AutoML on graph models. [6] investigate model-based HPO and also give search spaces and examples for many specific ML algorithms. Other more general reviews of AutoML are [146], [37], and [147].

3 Supervised Machine Learning

3.1 Terminology and Notations

Supervised ML addresses the problem of inferring a model from labeled training data that is then used to predict data from the same underlying distribution with minimal error. Let be a labeled data set with observations, where each observation consists of a -dimensional feature vector222More generally, with a slight abuse of notation, the feature vector could be taken as a tensor (for example when the feature is an image). and its label . Hence, we define the data set333More precisely, is an indexed tuple, but we will continue to use common terminology and call it a data set. . We assume that has been sampled i.i.d. from an underlying, unknown distribution, so . Each dimension of a -dimensional will usually be of numerical, integer, or categorical type. While some ML algorithms can handle all of these data types natively (e.g., tree-based methods), others can only work on numeric features and require encoding techniques for categorical types. The most common supervised ML tasks are regression and classification, where for regression and is finite and categorical for classification with classes. Although we mainly discuss HPO in the context of regression and classification, all covered concepts easily generalize to other supervised ML tasks, such as Poisson regression, survival analysis, cost-sensitive classification, multi-output tasks, and many more. An ML model is a function that assigns a prediction in to a feature vector from . For regression, is , while in classification the output represents the decision scores or posterior probabilities of the candidate classes. Binary classification is usually simplified to , with a single decision score in or only the posterior probability for the positive class. The function space – usually parameterized – to which a model belongs is called the hypothesis space and denoted as .

The goal of supervised ML is to fit a model given observations sampled from , so that it generalizes well to new observations from the same data generating process. Formally, an ML learner or inducer configured by HPs maps a data set to a model or equivalently to its associated parameter vector , i.e.,

| (1) |

where is the set of all data sets. While model parameters are an output of the learner , HPs are an input. We also write for or for if we want to stress that the inducer was configured with or that the model was learned on by an inducer configured by . A loss function measures the discrepancy between the prediction and the true label. Many ML learners use the concept of empirical risk minimization (ERM) in their training routine to produce their fitted model , i.e., they optimize or over all candidate models

| (2) |

on the training data (c.f. Figure 1). This empirical risk is only a stochastic proxy for what we are actually interested in, namely the theoretical risk or true generalization error . For many complex hypothesis spaces, can become considerably smaller than its true risk . This phenomenon is known as overfitting, which in ML is usually addressed by either constraining the hypothesis space or regularized risk minimization, i.e., adding a complexity penalty to (2).

3.2 Evaluation of ML Algorithms

After training an ML model , a natural follow-up step is to evaluate its future performance given new, unseen data. We seek to use an unbiased, high-quality statistical estimator, which numerically quantifies the performance of our model when it is used to predict the target variable for new observations drawn from the same data-generating process .

3.2.1 Performance Metrics

A general performance measure for an arbitrary data set of size is defined as a two-argument function that maps the -size vector of true labels and the matrix of prediction scores to a scalar performance value:

| (3) |

This more general set-based definition is needed for performance measures – such as area under the ROC curve (AUC) – or for most measures from survival time analysis, where loss values cannot be computed with respect to only a single observation. For usual point-wise losses , we can simply extend to by averaging over the size- set used for testing:

| (4) |

where is the -th row of ; this corresponds to estimating the theoretical risk corresponding to the given loss. Popular performance metrics corresponding to different loss functions can be found in Table 1 in Appendix B.

Furthermore, the introduction of allows the evaluation of a learner with respect to a different performance metric than the loss used for risk minimization. Because of this, we call the loss used in (2) inner loss, and the outer performance measure or outer loss444Surrogate loss for the inner loss and target loss for the outer loss are also commonly used terminologies.. Both can coincide, but quite often we select an outer performance measure based on the prediction task we would like to solve, and opt to approximate this metric with a computationally cheaper and possibly differentiable version during inner risk minimization.

3.2.2 Generalization Error

Due to potential overfitting, every predictive model should be evaluated on unseen test data to ensure unbiased performance estimation. Assuming (for now) dedicated train and test data sets and of sizes and , respectively, we define the generalization error of a learner with HPs trained on observations, w.r.t. measure as

| (5) |

where we take the expectation over the data sets and , both i.i.d. from , and is the matrix of predictions when the model is trained on and predicts on . Note that in the simpler and common case of a point-wise loss , the above trivially reduces to the more common form

| (6) |

with expectation over data set and test sample , both independently sampled from . This corresponds to the expectation of – which references a given, fixed model – over all possible models fitted to different realizations of of size (see Figure 1).

3.2.3 Data splitting and Resampling

The generalization error must usually be estimated from a single given data set . For a simple estimator based on a single random split, and can be represented as index vectors and , which usually partition the data set. For an index vector of length , one can define the corresponding vector of labels , and the corresponding matrix of prediction scores for a model . The holdout estimator is then:

| (7) |

The holdout approach has the following trade-off: (i) Because must be smaller than , the estimator is a pessimistically biased estimator of , as we do not use all available data for training. In a certain sense, we are estimating with respect to the wrong training set size. (ii) If is large, will be small, and the estimator (7) has a large variance. This trade-off not only depends on relative sizes of and , but also the absolute number of observations, as learning with respect to sample size and test error estimation based on samples both show a saturating effect for larger sample sizes. However, a typical rule of thumb is to choose [83, 33].

Resampling methods offer a partial solution to this dilemma. These methods repeatedly split the available data into training and test sets, then apply an estimator (7) for each of these, and finally aggregate over all obtained performance values. Formally, we can identify a resampling strategy with a vector of corresponding splits, i.e., where are index vectors and is the number of splits. Hence, the estimator for Eq. (5) is:

| (8) | ||||

where the aggregator is often chosen to be the mean. For Eq. (8) to be a valid estimator of Eq. (6), we must specify to what training set size an estimator refers in . As the training set sizes can be different during resampling (they usually do not vary much), it should at least hold that , and we could take the average for such a required reference size with .

Resampling uses the data more efficiently than a single holdout split, as the repeated estimation and averaging over multiple splits results in an estimate of generalization error with lower variance [83, 123]. Additionally, the pessimistic bias of simple holdout is also kept to a minimum and can be reduced to nearly by choosing training sets of size close to . The most widely-used resampling technique is arguably -fold-cross-validation (CV), which partitions the available data in subsets of approximately equal size, and uses each partition to evaluate a model fitted on its complement. For small data sets, it makes sense to repeat CV with multiple random partitions and to average the resulting estimates in order to average out the variability, which results in repeated -fold-cross-validation. Furthermore, note that performance values generated from resampling splits and especially CV splits are not statistically independent because of their overlapping traing sets, so the variance of is not proportional to . Somewhat paradoxically, a leave-one-out strategy is not the optimal choice, and repeated cross-validation with many (but fewer than ) folds and many repetitions is often a better choice [8]. An overview of existing resampling techniques can be found in [21] or [25].

4 Hyperparameter Optimization

4.1 HPO Problem Definition

Most learners are highly configurable by HPs, and their generalization performance usually depends on this configuration in a non-trivial and subtle way. HPO algorithms automatically identify a well-performing HPC for an ML algorithm . The search space contains all considered HPs for optimization and their respective ranges:

| (9) |

where is a bounded subset of the domain of the -th HP , and can be either continuous, discrete, or categorical. This already mixed search space can also contain dependent HPs, leading to a hierarchical search space: An HP is said to be conditional on if is only active when is an element of a given subset of and inactive otherwise, i.e., not affecting the resulting learner [135]. Common examples are kernel HPs of a kernelized machine such as the SVM, when we tune over the kernel type and its respective hyperparameters as well. Such conditional HPs usually introduce tree-like dependencies in the search space, and may in general lead to dependencies that may be represented by directed acyclic graphs.

The general HPO problem as visualized in Figure 2 is defined as:

| (10) |

where denotes the theoretical optimum, and is a shorthand for the estimated generalization error when are fixed. We therefore estimate and optimize the generalization error of a learner , w.r.t. an HPC , based on a resampling split .555Note again that optimizing the resampling error will result in biased estimates, which are problematic when reporting the generalization error; use nested CV for this, see Section 4.4. Note that is a black-box, as it usually has no closed-form mathematical representation, and hence no analytic gradient information is available. Furthermore, the evaluation of can take a significant amount of time. Therefore, the minimization of forms an expensive black-box optimization problem.

Taken together, these properties define an optimization problem of considerable difficulty. Furthermore, they rule out many popular optimization methods that require gradients or entirely numerical search spaces or that must perform a large number of evaluations to converge to a well-performing solution, like many meta-heuristics. Furthermore, as , which is defined via resampling and evaluates on randomly chosen validation sets, should be considered a stochastic objective – although many HPO algorithms may ignore this fact or simply handle it by assuming that we average out the randomness through enough resampling replications.

We can thus define the HP tuner that proposes its estimate of the true optimal configuration given a dataset , an inducer with corresponding search space to optimize, and a target measure . The specific resampling splits used can either be passed into as well or are internally handled to facilitate adaptive splitting or multi-fidelity optimization (e.g., as done in [82]).

4.2 Well-Established HPO Algorithms

All HPO algorithms presented here work by the same principle: they iteratively propose HPCs and then evaluate the performance on these configurations. We store these HPs and their respective evaluations successively in the so-called archive , with if a single point is proposed by the tuner.

Many algorithms can be characterized by how they handle two different trade-offs: a) The exploration vs. exploitation trade-off refers to how much budget an optimizer spends on either attempting to directly exploit the currently available knowledge base by evaluating very close to the currently best candidates (e.g., local search) or exploring the search space to gather new knowledge (e.g., random search). b) The inference vs. search trade-off refers to how much time and overhead is spent to induce a model from the currently available archive data in order to exploit past evaluations as much as possible. Other relevant aspects that HPO algorithms differ in are: Parallelizability, i.e., how many configurations a tuner can (reasonably) propose at the same time; global vs. local behavior of the optimizer, i.e., if updates are always quite close to already evaluated configurations; noise handling, i.e., if the optimizer takes into account that the estimated generalization error is noisy; multifidelity, i.e., if the tuner uses cheaper evaluations, for example on smaller subsets of the data, to infer performance on the full data; search space complexity, i.e., if and how hierarchical search spaces as introduced in Section 5 can be handled.

4.2.1 Grid Search and Random Search

Grid search (GS) is the process of discretizing the range of each HP and exhaustively evaluating every combination of values. Numeric and integer HP values are usually equidistantly spaced in their box constraints. The number of distinct values per HP is called the resolution of the grid. For categorical HPs, either a subset or all possible values are considered. A second simple HPO algorithm is random search (RS). In its simplest form, values for each HP are drawn independently of each other and from a pre-specified (often uniform) distribution, which works for (box-constrained) numeric, integer, or categorical parameters (c.f. Figure 3). Due to their simplicity, both GS and RS can handle hierarchical search spaces.

RS often has much better performance than GS in higher-dimensional HPO settings [11]. GS suffers directly from the curse of dimensionality [7], as the required number of evaluations increases exponentially with the number of HPs for a fixed grid resolution. This seems to be true as well for RS at first glance, and we certainly require an exponential number of points in to cover the space well. However, in practice, HPO problems often have low effective dimensionality [11]: The set of HPs that have an influence on performance is often a small subset of all available HPs. Consider the example illustrated in Figure 3, where an HPO problem with HPs and is shown. A GS with resolution resulting in HPCs is evaluated, and we discover that only HP has any relevant influence on the performance, so only 3 of 9 evaluations provided any meaningful information. In comparison, RS would have given us 9 different configurations for HP , which results in a higher chance of finding the optimum. Another advantage of RS is that it can easily be extended by further samples; in contrast, the number of points on a grid must be specified beforehand, and refining the resolution of GS afterwards is more complicated. Altogether, this makes RS preferable to GS and a surprisingly strong baseline for HPO in many practical settings. Notably, there are sampling methods that attempt to cover the search space more evenly than the uniform sampling of RS, e.g., Latin Hypercube Sampling [99], or Sobol sequences [3]. However, these do not seem to significantly outperform naive i.i.d. sampling [11].

4.2.2 Evolution Strategies

Evolution strategies (ES) are a class of stochastic population-based optimization methods inspired by the concepts of biological evolution, belonging to the larger class of evolutionary algorithms. They do not require gradients, making them generally applicable in black-box settings such as HPO. In ES terminology, an individual is a single HPC, the population is a currently maintained set of HPCs, and the fitness of an individual is its (inverted) generalization error . Mutation is the (randomized) change of one or a few HP values in a configuration. Crossover creates a new HPC by (randomly) mixing the values of two other configurations. An ES follows iterative steps to find individuals with high fitness values (c.f. Figure 4): (i) An initial population is sampled at random. (ii) The fitness of each individual is evaluated. (iii) A set of individuals is selected as parents for reproduction.666Either completely at random, or with a probability according to their fitness, the most popular variants being roulette wheel and tournament selection. (iv) The population is enlarged through crossover and mutation of the parents. (v) The offspring is evaluated. (vi) The top-k fittest individuals are selected.777In ES-terminology this is called a () elite survival selection, where denotes the population size, and the number of offspring; other variants like () selection exist. (vii) Steps (ii) to (v) are repeated until a termination condition is reached. For a more comprehensive introduction to ES, see [13].

ES were limited to numeric spaces in their original formulation, but they can easily be extended to handle mixed spaces by treating components of different types independently, e.g., by adding a normally distributed random value to real-valued HPs while adding the difference of two geometrically distributed values to integer-valued HPs [90]. By defining mutation and crossover operations that operate on tree structures or graphs, it is even possible to perform optimization of preprocessing pipelines [104, 41] or neural network architectures [114] using evolutionary algorithms. The properties of ES can be summarized as follows: ES have a low likelihood to get stuck in local minima, especially if so-called nested ES are used [13]. They can be straightforwardly modified to be robust to noise [14], and can also be easily extended to multi-objective settings [30]. Additionally, ES can be applied in settings with complex search spaces and can therefore work with spaces where other optimizers may fail [59]. ES are more efficient than RS and GS but still often require a large number of iterations to find good solutions, which makes them unsatisfactory for expensive optimization settings like HPO.

4.2.3 Bayesian Optimization

Bayesian optimization (BO) has become increasingly popular as a global optimization technique for expensive black-box functions, and specifically for HPO [76, 67, 125].

BO is an iterative algorithm whose key strategy is to model the mapping based on observed performance values found in the archive via (non-linear) regression. This approximating model is called a surrogate model, for which a Gaussian process or a random forest are typically used. BO starts on an archive filled with evaluated configurations, typically sampled randomly, using Latin Hypercube Sampling or the Sobol sampling [24]. BO then uses the archive to fit the surrogate model, which for each produces both an estimate of performance as well as an estimate of prediction uncertainty , which then gives rise to a predictive distribution for one test HPC or a joint distribution for a set of HPCs. Based on the predictive distribution, BO establishes a cheap-to-evaluate acquisition function that encodes a trade-off between exploitation and exploration: The former means that the surrogate model predicts a good, low value for a candidate HPC , while the latter implies that the surrogate is very uncertain about , likely because the surrounding area has not been explored thoroughly.

Instead of working on the true expensive objective, the acquisition function is then optimized in order to generate a new candidate for evaluation. The optimization problem inherits most characteristics from ; so it is often still multi-modal and defined on a mixed, hierarchical search space. Therefore, may still be quite complex, but it is at least cheap to evaluate. This allows the usage of more budget-demanding optimizers on the acquisition function. If the space is real-valued and the combination of surrogate model and acquisition function supports it, even gradient information can be used.

Among the possible optimization methods are: iterated local search (as used by [69]), evolutionary algorithms (as in [140]), ES using derivatives (as used by [120, 254]), and a focusing RS called DIRECT [75].

The true objective value of the proposed HPC – generated by optimization of – is finally evaluated and added to the archive . The surrogate model is updated, and BO iterates until a predefined budget is exhausted, or a different termination criterion is reached. These steps are summarized in Algorithm 1. BO methods can use different ways of deciding which to return, referred to as the identification step by [70]. This can either be the best observed during optimization, the best (mean, or quantile) predicted from the archive according to the surrogate model [110, 70], or the best predicted overall [119]. The latter options serve as a way of smoothing the observed performance values and reducing the influence of noise on the choice of .

Surrogate model

The choice of surrogate model has great influence on BO performance and is often linked to properties of . If is purely real-valued, Gaussian process (GP) regression [113] – sometimes referred to as Kriging – is used most often. In its basic form, BO with a GP does not support HPO with non-numeric or conditional HPs, and tends to show deteriorating performance when has more than roughly ten dimensions. Dealing with integer-valued or categorical HPs requires special care [52]. Extensions for mixed-hierarchical spaces that are based on special kernels [130] exist, and the use of random embeddings has been suggested for high-dimensional spaces [139, 102]. Most importantly, standard GPs have runtime complexity that is cubic in the number of samples, which can result in a significant overhead when the archive becomes large.

[98] propose to use an adapted, sparse GP that restrains training data from uninteresting areas. Local Bayesian optimization [189] is implemented in the TuRBO algorithm and has been successfully applied to various black-box problems.

Random forests, most notably used in SMAC [67], have also shown good performance as surrogate models for BO. Their advantage is their native ability to handle discrete HPs and, with minor modifications, e.g., in [67], even dependent HPs without the need for preprocessing. Standard random forest implementations are still able to handle dependent HPs by treating infeasible HP values as missing and performing imputation. Random forests tend to work well with larger archives and introduce less overhead than GPs. SMAC uses the standard deviation of tree predictions as a heuristic uncertainty estimate [67]. However, more sophisticated alternatives exist to provide unbiased estimates [121]. Since trees are not distance-based spatial models, the uncertainty estimator does not increase the further we extrapolate away from observed training points. This might be one explanation as to why tree-based surrogates are outperformed by GP regression on purely numerical search spaces [35].

Neural networks (NNs) have shown good performance in particular with nontrivial input spaces, and they are thus increasingly considered as surrogate models for BO [126]. Discrete inputs can be handled by one-hot encoding or by automatic techniques, e.g., entity embedding where a dense representation is learned from the output of a simple, direct encoding, such as one-hot encoding by the NN. [56]. NNs offer efficient and versatile implementations that allow the use of gradients for more efficient optimization of the acquisition function. Uncertainty bounds on the predictions can be obtained, for example, by using Bayesian neural networks (BNNs), which combine NNs with a probabilistic model of the network weights or adaptive basis regression where only a Bayesian linear regressor is added to the last layer of the NN [126].

Acquisition function

The acquisition function balances out the surrogate model’s prediction and its posterior uncertainty to ensure both exploration of unexplored regions of , as well as exploitation of regions that have performed well in previous evaluations. A very popular acquisition function is the expected improvement (EI) [76]:

| (11) | ||||

where denotes the best observed outcome of so far, and and are the cumulative distribution function and density of the standard normal distribution, respectively. The EI was introduced in connection with GPs that have a Bayesian interpretation, expressing the posterior distribution of the true performance value given already observed values as a Gaussian random variable with . Under this condition, Eq. can be analytically expressed as above, and the resulting formula is often heuristically applied to other surrogates that supply and .

Adaptive Explore-Exploit Tradeoffs

In a theoretical analysis without a limit on evaluations, [128] suggest to increase the -parameter of LCB over time to encourage exploration in later phases of optimization. However, in the context of practical HPO with a finite budget for evaluations, it seems plausible to decrease over time to enforce exploration in the beginning and exploitation at the end in a similar cooldown scheme as in simulated annealing, such as e.g. suggested by [150], which uses a further cyclical scheme to escape local minima. [118] propose a cooldown scheme for expected improvement: They use the “generalized expected improvement” , where larger values for exponent also enforce more exploration. They suggest to start with a large value in the beginning and to gradually decrease it to enforce exploitation at the end. [73] propose to dynamically give exploitation more weight as a function of mean model-uncertainty in what they call “contextual improvement”. This has a similar effect of encouraging late exploitation, as model uncertainty generally decreases over the course of optimization. Finally, [32] show that a very simply -greedy BO strategy can perform well or even better than established acquisition functions. They simply propose HPCs that maximize the predicted mean, but interleave random HPCs with probability. They show that this strategy performs well in settings with a low evaluation budget or with many dimensions, which is consistent with the other proposed methods, which adaptively emphasize exploitation more when remaining budget is low.

Multi-point proposal

In its original formulation, BO only proposes one candidate HPC per iteration and then waits for the performance evaluation of that configuration to conclude. However, in many situations, it is preferable to evaluate multiple HPCs in parallel by proposing multiple configurations at once, or by asynchronously proposing HPCs while other proposals are still being evaluated.

While in the sequential variant, the best point can be determined unambiguously from the full information of the acquisition function. In the parallel variant, many points must be proposed at the same time without information about how the other points will perform. The objective here is to some degree to ensure that the proposed points are sufficiently different from each other.

The proposal of configurations in one BO iteration is called batch proposal or synchronous parallelization and works well if the runtimes of all black-box evaluations are somewhat homogeneous. If the runtimes are heterogeneous, one may seek to spontaneously generate new proposals whenever an evaluation thread finishes in what is called asynchronous parallelization. This offers some advantages to synchronous parallelization, but is more complicated to implement in practice.

The simplest option to obtain proposals is to use the LCB criterion in Eq. (12) with different values for . For this so-called qLCB (also referred to as qUCB) approach, [68] propose to draw from an exponential distribution with rate parameter . This can work relatively well in practice but has the potential drawback of creating proposals that are too similar to each other [23]. [23] instead propose to maximize both and simultaneously, using multi-objective optimization, and to choose points from the approximated Pareto-front. Further ways to obtain proposals are constant liar, Kriging believer (both described in [54]), and q-EI [28]. Constant liar sets fake constant response values for the first points proposed in the batch to generate additional one via the normal EI principle and the approach; Kriging believer does the same but uses the GP model’s mean prediction as fake value instead of a constant. The qEI optimizes a true multivariate EI criterion and is computationally expensive for larger batch sizes, but [158] implement methods to efficiently calculate the qEI (and qNEI for noisy observations) through MC simulations.

Efficient Performance Evaluation

While BO models only optimize the HPC prediction performance in its standard setup, there are several extensions that aim to make optimization more efficient by considering runtime or resource usage. These extensions mainly modify the acquisition function to influence the HPCs that are being proposed. [125] suggests the expected improvement per second (EIPS) as a new acquisition function. The EIPS includes a second surrogate model that predicts the runtime of evaluating a HPC in order to compromise between expected improvement and required runtime for evaluation. Most methods that trade off between runtime and information gain fall under the category of multi-fidelity methods, which is further discussed in Section 4.2.4. Acquisition functions that are especially relevant here consider information gain-based criteria like Entropy Search [60] or Predictive Entropy Search [61]. These acquisition functions can be used for selective subsample evaluation [82], reducing the number of necessary resampling iterations [131], and stopping certain model classes, such as NNs, early.

4.2.4 Multifidelity and Hyperband

The multifidelity (MF) concept in HPO refers to all tuning approaches that can efficiently handle a learner with a fidelity HP as a component of , which influences the computational cost of the fitting procedure in a monotonically increasing manner. Higher values imply a longer runtime of the fit. This directly implies that the lower we set , the more points we can explore in our search space, albeit with much less reliable information w.r.t. their true performance. If has a linear relationship with the true computational costs, we can directly sum the values for all evaluations to measure the computational costs of a complete optimization run. We assume to know box-constraints of in form of a lower and upper limit, so , where the upper limit implies the highest fidelity returning values closest to the true objective value at the highest computational cost. Usually, we expect higher values of to be better in terms of predictive performance yet naturally more computationally expensive. However, overfitting can occur at some point, for example when controls the number of training epochs when fitting an NN. Furthermore, we assume that the relationship of the fidelity to the prediction performance changes somewhat smoothly. Consequently, when evaluating multiple HPCs with small , this at least indicates their true ranking. Typically, this implies a sequential fitting procedure, where is, for example, the number of (stochastic) gradient descent steps or the number of sequentially added (boosting) ensemble members. A further, generally applicable option is to subsample the training data from a small fraction to 100% before training and to treat this as a fidelity control [82]. HPO algorithms that exploit such a parameter – usually by spending budget on cheap HPCs with low values earlier for exploration, and then concentrating on the most promising ones later – are called multifidelity methods. One can define two versions of the MF-HPO problem. (a) If overfitting can occur with higher values of (e.g., if it encodes training iterations), simply minimizing is already appropriate. (b) If the assumption holds that a higher fidelity always results in a better model (e.g., if controls the size of the training set), we are interested in finding the configuration for which the inducer will return the best model given the full budget, so . Of course, in both versions, the optimizer can make use of cheap HPCs with low settings of on its path to its result.

Hyperband [229] can best be understood as repeated execution of the successive halving (SH) procedure [71]. SH assumes a fidelity-budget for the sum of for all evaluations. It starts with a given, fixed number of candidates that we denote with and ?races them down? in stages to a single best candidate by repeatedly evaluating all candidates with increased fidelity in a certain schedule. Typically, this is controlled by the control multiplier of Hyperband with (typically set to 2 or 3): After each batch evaluation of the current population of size , we reduce the population to the best fraction and set the new fidelity for a candidate evaluation to . Thus, promising HPCs are assigned a higher fidelity overall, and sub-optimal ones are discarded early on. The starting fidelity and the number of stages are computed in a way such that each batch evaluation of an SH population has approximately amount of fidelity units spent. Overall, this ensures that approximately, but not more than, fidelity units are spent in SH:

| (13) |

However, the efficiency of SH strongly depends on a sensible choice of the number of starting configurations and the resulting schedule. If we assume a fixed fidelity-budget for HPO, the user has the choice of running either (a) more configurations but with less fidelity, or (b) fewer configurations, but with higher fidelity. While the former naturally explores more, the latter schedules evaluations with stronger correlation to the true objective value and more informative evaluations. As an example, consider how is discarded in favor of at 25% in Figure 6. Because their performance lines would have crossed close to 100%, is ultimately the better configuration. However, in this case, the superiority of was only observable after full evaluation.

As we often have no prior knowledge regarding this effect, HB simply runs SH for different numbers of starting configurations , and each SH run or schedule is called a bracket. As input, HB takes and the maximum fidelity . HB then constructs the target fidelity budget for each bracket by considering the most explorative bracket: Here, the number of batch evaluations is chosen to be for which , and we collect these values in .

Since we want to spend approximately the same total fidelity and reduce the candidates to one winning HPC in every batch evaluation, the fidelity budget of each bracket is .

For every , a bracket is defined by setting the starting fidelity of the bracket to , resulting in brackets and an overall fidelity budget of spent by HB. Consequently, every bracket consists of batch evaluations, and the starting population size is the maximum value that fulfills Eq. (13). The full algorithm is outlined in Algorithm 2, and the bracket design of HB with and is shown in Figure 6.

Starting configurations are usually sampled uniformly, but [229] also show that any stationary sampling distribution is valid. Because HB is a random-sampling-based method, it can trivially handle hierarchical HP spaces in the same manner as RS.

uses a stationary sampling distribution to generate the initial HPC population of size ,

selects the HPCs in associated to the best performances in as the next HPC population.

Multifidelity Bayesian Optimization

The idea behind Hyperband – trying to discard HPCs that do not perform well early on – is somewhat orthogonal to the idea behind BO, i.e. intelligently proposing HPCs that are likely to improve performance or to otherwise gain information about the location of the optimum. It is therefore natural to combine these two methods. This has first been achieved with BOHB by [192], who progressively increase of suggested HPCs as in Hyperband. However, instead of proposing HPCs randomly, they use a model-based approach equivalent to maximizing expected improvement. They show that BOHB performs similar to HB in the low-budget regime, where it is superior to normal BO methods, but outperforms HB and perform similar or better to BO when enough budget for tens of full-budget evaluations are available. BOHB was later extended to A-BOHB [136] to efficiently perform asynchronously parallelized optimization by sampling possible outcomes of evaluations currently under way.

Hyperband-based multi-fidelity methods have a control parameter that functions similar to described above, which determines the fraction of configurations that are discarded at every value for which evaluations are performed. However, the optimal proportion of configurations to discard may vary depending on how strong the correlation is between performance values at different fidelities. An alternative approach is to use the surrogate model from BO to make adaptive decisions about what values to use, or what HPCs to discard. Algorithms following this approach typically use a method first proposed by [129], who use a surrogate model for both the performance, as well as the resources used to evaluate an HPC. Entropy search [60] is then used to maximize the information gained about the maximum for when per unit of predicted resource expenditure. Low-fidelity HPCs are evaluated whenever they contribute disproportionately large amounts of information to the maximum compared to their needed resources. A special challenge that needs to be solved by these methods is the modeling of performance with varying , which often has a different influence than other HPs and is therefore often considered as a separate case. An early HPO method building on this concept is freeze-thaw BO [132], which considers optimization of iterative ML methods such as deep learning that can be suspended (“frozen”) and continued (“thawed”). Another HPO method that specifically considers the training set size as fidelity HP is FABOLAS [80], which actively decides the training set size for each evaluation by trading off computational cost of an evaluation with a lot of data against the information gain on the potential optimal configuration.

In general, there could be other proposal mechanisms instead of random sampling as in Hyperband or BO as in BOHB. For example, [4] showed that differential evolution can perform even better; however the evolution of population members across fidelities needs to be adjusted accordingly.

4.2.5 Iterated Racing

The iterated racing (IR, [20]) procedure is a general algorithm configuration method that optimizes for a configuration of a general (not necessarily ML) algorithm that performs well over a given distribution of (arbitrary) problems. In most HPO algorithms, HPCs are evaluated using a resampling procedure such as CV, so a noisy function (error estimate for single resampling iterations) is evaluated multiple times and averaged. In order to connect racing to HPO, we now define a problem as a single holdout resampling split for a given ML data set, as suggested in [135, 84], and we will from now on describe racing only in terms of HPO.

The fundamental idea of racing [97] is that HPCs that show particularly poor performance when evaluated on the first problem instances (in our case: resampling folds) are unlikely to catch up in later folds and can be discarded early to save computation time for more interesting HPCs. This is determined by running a (paired) statistical test w.r.t. HPC performance values on folds. This allows for an efficient and dynamic allocation of the number of folds in the computation of – a property of IR that is unique, at least when compared to the algorithms covered in this article.

Racing is similar to HB in that it discards poorly-performing HPCs early. Like HB, racing must also be combined with a sampling metaheuristic to initialize a race. Particularly well-suited for HPO are iterated races [234], and we will use the terminology of that implementation to explain the main control parameters of IR. IR starts by racing down an initial population of randomly sampled HPCs and then uses the surviving HPCs of the race to stochastically initialize the population of the subsequent race to focus on interesting regions of the search space.

Sampling is performed by first selecting a parent configuration among the survivors of the previous generation, according to a categorical distribution with probabilities , where is the rank of the configuration . A new HPC is then generated from this parent by mutating numeric HPs using a truncated normal distribution, always centered at the numeric HP value of the parent. Discrete parameters use a discrete probability distribution. This is visualized in Figure 7. The parameters of these distributions are updated as the optimization continues: The standard deviation of the Gaussian is narrowed to enforce exploitation and convergence, and the categorical distribution is updated to more strongly favor the values of recent ancestors. IR is able to handle search spaces with dependencies by sampling HPCs that were inactive in a parent configuration from the initial (uniform) distribution.

This algorithmic principle of having a distribution that is centered around well-performing candidates, is continuously sampled from and updated, is close to an estimation-of-distribution algorithm (EDA), a well-known template for ES [86]. Therefore, IR could be described as an EDA with racing for noise handling.

IR has several control parameters that determine how the racing experiments are executed. We only describe the most important ones here; many of these have heuristic defaults set in the implementation introduced by [234]. (nbIterations) determines the number of performed races, defaulting to (with the number of HPs being optimized). Within a race, each HPC is first evaluated on (firstTest) folds before a first comparison test is made. Subsequent tests are then made after every (eachTest) evaluation. IR can be performed as elitist, which means surviving configurations from a generation are part of the next generation. The statistical test that discards individuals can be the Friedman test or the -test [19], the latter possibly with multiple testing correction. [234] recommend the -test when when performance values for evaluations on different instances are commensurable and the tuning objective is the mean over instances, which is usually the case for our resampled performance metric where instances are simply resampling folds.

4.3 HPO - A Bilevel Inference Perspective

As discussed in Section 4.1, HPO produces an approximately optimal HPC by optimizing it w.r.t. the resampled performance . This is still risk minimization w.r.t. (hyper)parameters, where we search for optimal parameters so that the risk of our predictor becomes minimal when measured on validation data via

| (14) |

where and is the prediction matrix of on validation data, for a pointwise loss function. The above is formulated for a single holdout split in order to demonstrate the tight connection between (first level) risk minimization and HPO; Eq. provides the generalization for arbitrary resampling with multiple folds. This is somewhat obfuscated and complicated by the fact that we cannot evaluate Eq. (8) in one go, but must rather fit one or multiple models during its computation (hence also its black-box nature). It is useful to conceptualize this as a bilevel inference mechanism; while the parameters of for a given HPC are estimated in the first level, in the second level we infer the HPs . However, both levels are conceptually very similar in the sense that we are optimizing a risk function for model parameters which should be optimal for the the data distribution at hand. In case of the second level, this risk function is not , but the harder-to-evaluate generalization error . An intuitive, alternative term for HPO is second level inference [55], visualized in Figure 8.

There are mainly two reasons why such a bilevel optimization is preferable to a direct, joint risk minimization of parameters and HPs [55]:

-

•

Typically, learners are constructed in such a way that optimized first-level parameters can be more efficiently computed for fixed HPs, e.g., often the first-level problem is convex, while the joint problem is not.

-

•

Since the generalization error is eventually optimized for the bilevel approach, the resulting model should be less prone to overfitting.

Thus, we can define a learner with integrated tuning as a mapping , , which maps a data set to the model that has the HPC set to as optimized by on and is then itself trained on the whole of ; all for a given inducer , performance measure , and search space . Algorithmically, this learner has a 2-step training procedure (see Figure 8), where tuning is performed before the final model fit. This ?self-tuning? learner ?shadows? the tuned HPs of its search space from the original learner and integrates their configuration into the training procedure 888The self-tuning learner actually also adds new HPs that are the control parameters of the HPO procedure.. If such a learner is cross-validated, we naturally arrive at the concept of nested CV, which is discussed in the following Section 4.4.

4.4 Nested Resampling and Meta-Overfitting

As discussed in Section 3.2, the evaluation of any learner should always be performed via resampling on independent test sets to ensure non-biased estimation of its generalization error. This is necessary because evaluating on the data set that was used for its construction would lead to an optimistic bias. In the general HPO problem as in Eq. (10), we already minimize this generalization error by resampling:

| (15) |

If we simply report the estimated value of the returned best HPC, this also creates an optimistically biased estimator of the generalization error, as we have violated the fundamental ?untouched test set? principle by optimizing on the test set(s) instead.

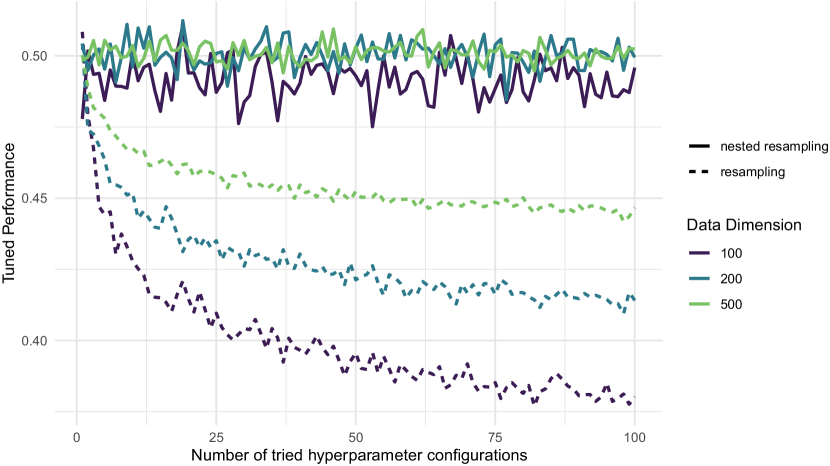

To better understand the necessity of an additional resampling step, we consider the following example in Figure 9, introduced by [21]. Assume a balanced binary classification task and an inducer that ignores the data. Hence, has no effect, but rather ?predicts? the class labels in a balanced but random manner. Such a learner always has a true misclassification error of (using as a metric), and any normal CV-based estimator will provide an approximately correct value as long as our data set is not too small. We now ?tune? this learner, for example, by RS – which is meaningless, as has no effect. The more tuning iterations are performed, the more likely it becomes that some model from our archive will produce partially correct labels simply by random chance, and the (only randomly) ?best? of these is selected by our tuner at the end. The more we tune, the smaller our data set, or the more variance our GE estimator exhibits, the more expressed this optimistic bias will be.

To avoid this bias, we introduce an additional outer resampling loop around this inner HPO-resampling procedure – or as discussed in Section 4.3, we simply regard this as cleanly cross-validating the self-tuned learner . This is called nested resampling, which is illustrated in Figure 10.

The procedure works as follows: In the outer loop, an outer model-building or training set is selected, and an outer test set is cleanly set aside. Each proposed HPC during tuning is evaluated via inner resampling on the outer training set. The best performing HPC returned by tuning is then used to fit a final model for the current outer loop on the outer training set, and this model is then cleanly evaluated on the test set. This is repeated for all outer loops, and all outer test performances are aggregated at the end.

Some further comments on this general procedure: (i) Any resampling scheme is possible on the inside and outside, and these schemes can be flexibly combined based on statistical and computational considerations. Nested CV and nested holdout are most common. (ii) Nested holdout is often called the train-validation-test procedure, with the respective terminology for the generated three data sets resulting from the 3-way split. (iii) Many users often wonder which ?optimal? HPC they are supposed to report or study if nested CV is performed, with multiple outer loops, and hence multiple outer HPCs . However, the learned HPs that result from optimizations within CV are considered temporary objects that merely exist in order to estimate . The comparison to first-level risk minimization from Section 4.3 is instructive here: The formal goal of nested CV is simply to produce the performance distribution on outer test sets; the can be considered as the fitted HPs of the self-tuned learner . If the parameters of a final model are of interest for further study, the tuner should be fitted one final time on the complete data set. This would imply a final tuning run on the complete data set for second-level inference.

Nested resampling ensures unbiased outer evaluation of the HPO process, but, as CV for the first level, it is only a process that is used to estimate performance – it does not directly help in constructing a better model. The biased estimation of performance values is not a problem for the optimization itself, as long as all evaluated HPCs are still ranked correctly. But after a considerably large amount of evaluations, wrong HPCs might be selected due to stochasticity or overfitting to the splits of the inner resampling. This effect has been called either overtuning, meta-overfitting or oversearching [103, 112]. At least parts of this problem seem directly related to the problem of multiple hypothesis testing. However, it has not been analysed very well yet, and unlike regularization for (first level) ERM, not many counter measures are currently known for HPO.

4.5 Threshold Tuning

Most classifiers do not directly output class labels, but rather probabilities or real-valued decision scores, although many metrics require predicted class labels. A score is converted to a predicted label by comparing it to a threshold so that , where we use the Iverson bracket with if and in all other cases. For binary classification, the default thresholds are for probabilities and for scores. However, depending on the metric and the classifier, different thresholds can be optimal. Threshold tuning [122] is the practice of optimizing the classification threshold for a given model to improve performance. Strictly speaking, the threshold constitutes a further HP that must be chosen carefully. However, since the threshold can be varied freely after a model has been built, it does not need to be tuned jointly with the remaining HPs and can be optimized in a separate, subsequent, and cheaper step for each proposed HPC .

After models have been fitted and predictions for all test sets have been obtained when is computed via resampling, the vector of joint test set scores can be compared against the joint vector of test set labels to optimize for an optimal threshold , where the Iverson bracket is evaluated component-wise and for a binary probabilistic classifier and for a score classifier. Since is scalar and evaluations are cheap, can be found easily via a line search. This two-step approach ensures that every HPC is coupled with its optimal threshold. is then defined as the optimal performance value for in combination with .

The procedure can be generalized to multi-class classification. Class probabilities or scores are divided by threshold weights , , and the that yields the maximal is chosen as the predicted class label. The weights are optimized in the same way as in the binary case. Generally, threshold tuning can be performed jointly with any HPO algorithm. In practice, threshold tuning is implemented as a post-processing step of an ML pipeline (Sections 5.1 & 5.2).

5 Pipelining, Preprocessing, and AutoML

ML typically involves several data transformation steps before a learner can be trained. If one or multiple preprocessing steps are executed successively, the data flows through a linear graph, also called pipeline. Subsection 5.1 explains why a pipeline forms an ML algorithm itself, and why its performance should be evaluated accordingly through resampling. Finally, Subsection 5.2 introduces the concept of flexible pipelines via hierarchical spaces.

5.1 Linear Pipelines

In the following, we will extend the HPO problem for a specific learner towards configuring a full pipeline including preprocessing. A linear ML pipeline is a succession of preprocessing methods followed by a learner at the end, all arranged as nodes in a linear graph. Each node acts in a very similar manner as the learner, and has an associated training and prediction procedure. During training, each node learns its parameters (based on the training data) and sends a potentially transformed version of the training data to its successor. Afterwards, a pipeline can be used to obtain predictions: The nodes operate on new data according to their model parameters. Figure 11 shows a simple example.

Pipelines are important to properly embed the full model building procedure, including preprocessing, into cross-validation, so every aspect of the model is only inferred from the training data. This is necessary to avoid overfitting and biased performance evaluation [21, 63], as it is for basic ML. As each node represents a configurable piece of code, each node can have HPs, and the HPs of the pipeline are simply the joint set of all HPs of its contained nodes. Therefore, we can model the whole pipeline as a single HPO problem with the combined search space .

5.2 Operator Selection and AutoML

More flexible pipelining, and especially the selection of appropriate nodes in a data-driven manner via HPO, can be achieved by representing our pipeline as a directed acyclic graph. Usually, this implies a single source node that accepts the original data and a single sink node that returns the predictions. Each node represents a preprocessing operation, a learner, a postprocessing operation, or a directive operation that directs how the data is passed to the child node(s).

One instance of such a pipeline is illustrated in Figure 12.

Here, we consider the choice between multiple mutually exclusive preprocessing steps, as well as the choice between different ML algorithms. Such a choice is represented by a branching operator, which can be configured through a categorical parameter and can determine the flow of the data, resulting in multiple ?modeling paths? in our graph.

The HP space induced by a flexible pipeline is potentially more complex. Depending on the setting of the branching HP, different nodes and therefore different HPs are active, resulting in a very hierarchical search space. If we build a graph that includes a sufficiently large selection of preprocessing steps combined with sufficiently many ML models, the result can be flexible enough to work well on a large number of data sets – assuming it is correctly configured in a data-dependent manner. Combining such a graph with an efficient tuner is the key principle of AutoML [47, 100, 104].

6 Practical Aspects of HPO

In this section, we discuss practical aspects of HPO, which are more qualitative in nature and less often discussed in an academic context. Some of these recommendations are more ?rules of thumb?, based solely on experience, while others are at least partially confirmed by empirical benchmarks – keeping in mind that empirical benchmarks are only as good as the selection of data sets. Even if a proper empirical study cannot be cited for every piece of advice in this section, we still propose that such a compilation is highly valuable for HPO beginners.

6.1 Choosing Resampling and Performance Metrics

A resampling procedure is usually chosen based on two fundamental properties of the available data: (i) the number of observations, i.e., to what degree do we face a small sample size situation, and (ii) whether the i.i.d. assumption for our data sampling process is violated.

For smaller data sets, e.g., , repeated CV with a high number of repetitions should be used to reduce the variance while keeping the pessimistic bias small [21]. The larger the data set, the fewer splits are necessary. Consequently, for data sets of ?medium? size with , usually 5- or 10-fold CV is recommended. Beyond that, simple holdout might be sufficient. Note that even for large , sample size problems can occur, for example, when data is imbalanced. In that case, repeated resampling might still be required to obtain properly accurate performance estimates. Stratified sampling, which ensures that the relative class frequencies for each train/test split are consistent with the original data set, helps in such a case.

One fundamental assumption about our data is that observations are i.i.d., i.e., This assumption is often violated in practice. A typical example is repeated measurements, where observations occur in ?blocks? of multiple, correlated data, e.g., from different hospitals, cities or persons. In such a scenario, we are usually interested in the ability of the model to generalize to new blocks. We must then perform CV with respect to the blocks, e.g., ?leave one block out?. A related problem occurs if data are collected sequentially over a period of time. In such a setting, we are usually interested in how the model will generalize in the future, and the rolling or expanding window forecast must be used for evaluation [9] instead of regular CV. However, discussing these special cases is out of scope for this work.

In HPO, resampling strategies must be specified for the inner as well as the outer level of nested resampling. The outer level is simply regular ML evaluation, and all comments from above hold. We advise readers to study further material such as [72]. The inner level concerns the evaluation of through resampling during tuning. While the same general comments from above apply, in order to reduce runtime, repetitions can also be scaled down. We are not particularly interested in very accurate numerical performance estimates at the inner level, and we must only ensure that HPCs are properly ranked during tuning to achieve correct selection, as discussed in Section 4.4. Hence, it might be appropriate to use a 10-fold CV on the outside to ensure proper generalization error estimation of the tuned learner, but to use only 2 folds or simple holdout on the inside. In general, controlling the number of resampling repetitions on the inside should be considered an aspect of the tuner and should probably be automated away from the user (without taking away flexible control in cases of i.i.d. violations, or other deviations from standard scenarios). However, not many current tuners provide this, although racing is one of the attractive exceptions.

The choice of the performance measure should be guided by the costs that suboptimal predictions by the model and subsequent actions in the real-word context of applying the model would incur. Often, popular but simple measures like accuracy do not meet this requirement. Misclassification of different classes can imply different costs. For example, failing to detect an illness may have a higher associated cost than mistakenly admitting a person to the hospital. There exists a plethora of performance measures that attempt to emphasize different aspects of misclassification with respect to prediction probabilities and class imbalances, c.f. [72]and many listed in Table 1 in Appendix B. For other applications, it might be necessary to design a performance measure from scratch or based on underlying business key performance indicators (KPIs).

While a further discussion of metrics is again out of scope for this article, two pieces of advice are pertinent. First, as HPO is a black-box, no real constraints exist regarding the mathematical properties of (or the associated outer loss). For first-level risk minimization, on the other hand, we usually require differentiability and convexity of . If this is not fulfilled, we must approximate the KPI with a more convenient version. Second, for many applications, it is quite unclear whether there is a single metric that captures all aspects of model quality in a balanced manner. In such cases, it can be preferable to optimize multiple measures simultaneously, resulting in a multi-criteria optimization problem [62].

6.2 Choosing a Pipeline and Corresponding Search Space

For HPO, it is necessary to define the search space over which the optimization is to be performed. This choice can have a large impact on the performance of the tuned model. A simple search space is a (lower dimensional) Cartesian product of individual HP sets that are either numeric (continuous or integer-valued) or categorical. Encoding categorical values as integers is a common mistake that degrades the performance of optimizers that rely on information about distances between HPCs, such as BO. The search intervals of numeric HPs typically must be bounded within a region of plausibly well-performing values for the given method and data set.

Many numeric HPs are often either bounded in a closed interval (e.g., ) or bounded from below (e.g., ). The former can usually be tuned without modifications. HPs bounded by a left-closed interval should often be tuned on a logarithmic scale with a generous upper bound, as the influence of larger values often diminishes. For example, the decision whether -NN uses vs. 3 neighbors will have a larger impact than whether it uses vs. neighbors. The logarithmic scale can either be defined in the tuning software or must be set up manually by adjusting the algorithm to use transformations: If the desired range of the HP is , the tuner optimizes on , and any proposed value is transformed through an exponential function before it is passed to the ML algorithm. The logarithm and exponentiation must refer to the same base here, but which base is chosen does not influence the tuning process.

The size of the search space will also considerably influence the quality of the resulting model and the necessary budget of HPO. If chosen too small, the search space may not contain a particularly well-performing HPC. Choosing too wide HP intervals or including inadequate HPs in the search space can have an adverse effect on tuning outcomes in the given budget. If is simply too large, it is more difficult for the optimizer to find the optimum or promising regions within the given budget. Furthermore, restricting the bounds of an HP may be beneficial to avoid values that are a priori known to cause problems due to unstable behavior or large resource consumption. If multiple HPs lead to poor performance throughout a large part of their range – for example, by resulting in a degenerate ML model or a software crash – the fraction of the search space that leads to fair performance then shrinks exponentially in the number of HPs with this problem. This effect can be viewed as a further manifestation of the so-called curse of dimensionality.

Due to this curse of dimensionality and the considerable runtime costs of HPO, we would like to tune as few HPs as possible. If no prior knowledge from earlier experiments or expert knowledge exists, it is common practice to leave other HPs at their software default values with the assumption that the developers of the algorithm chose values that work well under a wide range of conditions, which is not necessarily given and its often not documented how these defaults were specified. Recent approaches have studied how to empirically find optimal default values, tuning ranges and HPC prior distributions based on extensive meta-data [142, 141, 47, 109, 138, 251, 108, 53].

It is possible to optimize several learning algorithms in combination, as described in Section 5.2, but this introduces HP dependencies. The question then arises of which of the large number of ML algorithms (or preprocessing operations) should be considered. However, [193] showed that in many cases, only a small but diverse set of learners is necessary to choose one ML algorithm that performs sufficiently well.

6.3 Choosing an HPO Algorithm

The number of HPs considered for optimization has a large influence on the difficulty of an HPO problem. Particularly large search spaces typically arise from optimizing over large pipelines or multiple learners (Section 5). With very few HPs (up to about 2-3) and well-functioning discretization, GS may be useful due to its interpretable, deterministic, and reproducible nature; however, it is not recommended beyond this [11]. BO with GPs work well for up to around 10 HPs. However, more HPs typically require more function evaluations – which in turn is problematic runtime-wise, since GPs scale cubically with the number of dimensions. On the other hand, BO with RFs have been used successfully on search spaces with hundreds of HPs [135] and can usually handle mixed hierarchical search spaces better. Pure sampling-based methods, such as RS and Hyperband, work well even for very large HP spaces as long as the ?effective? dimension (i.e., the number of HPs that have a large impact on performance) is low, which is often observed in ML models [11]. Evolutionary algorithms (and those using similar metaheuristics, such as racing) can also work with truly large search spaces, and even with search spaces of arbitrarily complex structure if one is willing do use non-custom mutation and crossover operators. Evolutionary algorithms may also require fewer objective evaluations than RS. Therefore, they occupy a middle ground between (highly complex, sample-efficient) BO and (very simple but possibly wasteful) RS.

Another property of algorithms that is especially relevant to practitioners with access to large computational resources is parallelizability, which is discussed in Subsection 6.7.2. Furthermore, HPO algorithms differ in their simplicity, both in terms of algorithmic principle and in terms of usability of available implementations, which can often have implications for their usefulness in practice. While more complex optimization methods, such as those based on BO, are often more sample-efficient or have other desirable properties compared to simple methods, they also have more components that can fail. When performance evaluations are cheap and the search space is small, it may therefore be beneficial to fall back on simple and robust approaches such as RS, Hyperband, or any tuner with minimal inference overhead. The availability of any implementation at all (and the quality of that implementation) is also important; there may be optimization algorithms based on beautiful theoretical principles that have a poorly maintained implementation or are based on out-of-date software. The additional cost of having to port an algorithm to the software platform being used, or even implement it from scratch, could be spent on running more evaluations with an existing algorithm.

One might wish to select an HPO algorithm that performed best on previous benchmarks. However, no single benchmark exists which includes all relevant scenarios and whose results generalize to all possible applications. Specific benchmark results can therefore only indicate how well an algorithm works for a selected set of data sets, a predefined budget, specific parallelization, specific learners, and search spaces. Even worse, extensive comparison studies are missing in the current literature, although efforts have been made to establish unified benchmarks. [35] showed that (i) BO with GPs were a strong approach for small continuous spaces with few evaluations, (ii) BO with Random Forests performed well for mixed spaces with a few hundred evaluations, and (iii) for large spaces and many evaluations, ES were the best optimizers.

6.4 Choosing an Implementation

In addition to the choice of algorithm, the choice of implementation is also important in practice. We note that a thorough comparison of all available software packages and frameworks is not within the scope of this article. Nevertheless, we provide a brief list of popular implementations and frameworks as reasonable starting points for newcomers.

Simple and established tuning algorithms for HPO are usually shipped with general purpose ML frameworks such as Scikit-learn [248], Tensorflow999https://www.tensorflow.org/, PyTorch [247], MXNet [176], mlr3 [226, 163], tidymodels101010https://www.tidymodels.org/ or h2o.ai111111https://github.com/h2oai/h2o-3. Although modern state-of-the-art algorithms often build on, extend, or connect to such an ML framework, they are usually developed in independent software projects.

For Python, there exist a plethora of HPO toolkits, e.g., Spearmint [262], SMAC121212https://github.com/automl/SMAC3 [213], BoTorch [158], Dragonfly [77], or Oríon131313https://github.com/Epistimio/orion. Multiple HPO methods are supported by toolkits like Hyperopt [160], Optuna [153] or Weights & Biases141414https://www.wandb.com/. A popular framework that combines modern HPO approaches with the Scikit-learn toolbox for ML in Python is auto-sklearn [194].

The availability of HPO implementations in R is comparably smaller. However, more established HPO tuners are either already shipped with the ML framework, or can be found, e.g., in the packages mlrMBO151515https://github.com/mlr-org/mlrMBO, irace [234], DiceOptim [254], or rBayesianOptimization161616https://github.com/yanyachen/rBayesianOptimization.

See Appendix C for more information

6.5 When to Terminate HPO