HYPER-SNN: Towards Energy-efficient Quantized Deep Spiking Neural Networks for Hyperspectral Image Classification

Abstract

Hyperspectral images (HSIs) provide rich spectral–spatial information across a series of contiguous spectral bands. However, the accurate processing of the spectral and spatial correlation between the bands requires the use of energy-expensive 3-D Convolutional Neural Networks (CNNs). To address this challenge, we propose the use of Spiking Neural Networks (SNNs) that are generated from iso-architecture CNNs and trained with quantization-aware gradient descent to optimize their weights, membrane leak, and firing thresholds. During both training and inference, the analog pixel values of a HSI are directly applied to the input layer of the SNN without the need to convert to a spike-train. The reduced latency of our training technique combined with high activation sparsity yields significant improvements in computational efficiency. We evaluate our proposal using three HSI datasets on a 3-D and a 3-D/2-D hybrid convolutional architecture. We achieve overall accuracy, average accuracy, and kappa coefficient of , , and respectively with time steps (inference latency) and -bit weight quantization on the Indian Pines dataset. In particular, our models achieved accuracies similar to state-of-the-art (SOTA) with and less compute energy on average over three HSI datasets than an iso-architecture full-precision and 6-bit quantized CNN, respectively.

Index Terms:

hyperspectral images, spiking neural networks, quantization-aware, gradient descent, indian pinesI Introduction

Hyperspectral imaging, which extracts rich spatial-spectral information about the ground surface, has shown immense promise in remote sensing [1]. It is currently used in several applications ranging from geological surveys [2], to the detection of camouflaged vehicles [3]. In hyperspectral images (HSIs), each pixel can be considered as a high-dimensional vector where each entry corresponds to the spectral reflectivity [1] of a particular wavelength. The goal of the classification task is to assign a unique semantic label to each pixel [4].

For HSI classification, several spectral feature-based methods have been proposed, including support vector machine [5], random forest [6], canonical correlation forest [7], and multinomial logistic regression [8]. To improve the accuracy of HSI classification, researchers have integrated spatial features into existing learning methods [9]. Some spectral-spatial methods for classifying HSIs include fusing correlation coefficient and sparse representation [10], Boltzmann entropy-based band selection [11], joint sparse model and discontinuity preserving relaxation [12], and extended morphological profiles [13, 14]. Some of these methods have also been proposed to exploit the spatial context with various morphological operations for HSI classification. However, these spectral-spatial feature extraction methods rely on hand-designed descriptions, prior information, and empirical hyperparameters [1].

Lately, convolutional neural networks (CNNs) have yielded higher accuracy than some hand-designed features [15]. CNNs have shown promise in multiple applications where visual information processing is required, including image classification [16], object detection [17], semantic segmentation [18], and depth estimation [19]. In particular, CNN-based methods act as an end-to-end feature extractor that consists of a series of hierarchical filtering layers for global optimization. The 2-D CNN stacked autoencoder [1] was the first attempt to extract deep features from its compressed latent space to classify HSIs. Following this work, [20] employed a 2-D CNN model to extract the spatial information in a supervised manner and classify the raw hyperspectral images. The multibranch selective kernel network with attention [21] and pixel-block pair based data augmentation techniques [22] were developed to address the gradient vanishing and overfitting problems. To extract the spatial-spectral features jointly from the raw HSI, researchers proposed a 3-D CNN architecture [23], which achieves even better classification results. Authors in [24, 25, 26] created multiscale spatiospectral relationships using 3-D CNN and fused the features using a 2-D CNN to extract more robust representation of spectral–spatial information.

However, the performance and success of multi-layer CNNs are generally associated with high power and energy costs [27]. A typical hyperspectral image cube consists of several hundred spectral frequency bands, and hence, classifying these images using traditional CNNs require a large amount of computational power, especially when real time processing is necessary, as in target tracking or identification [28]. The high energy cost and the demand for deployment of HSI sensors in battery-powered edge devices motivates exploring alternative lightweight energy-efficient HSI classification models.

In particular, low-latency spiking neural networks (SNNs) [29] have gained attention because they can be more computational efficient than CNNs for a variety of applications, including image analysis. To achieve this goal, analog inputs are first encoded into a sequence of spikes using one of a variety of proposed encoding methods, including rate coding [30, 31], direct coding [32], temporal coding [33], rank-order coding [34], phase coding [35], and other exotic coding schemes [36, 37]. Among these, rate and direct coding have shown competitive performance on complex tasks [30, 31] while others are either limited to simpler tasks such as learning the XOR function and classifying MNIST images or require a large number of spikes for inference.

In particular, for rate coding, the analog value is converted to a spike train using a Poisson generator function with a rate proportional to the input pixel value. The number of timesteps in each train is inversely proportional to the quantization error in the representation, as illustrated in Fig. 1(b). [31]. In contrast, in direct-input encoding, the analog pixel values are fed into the first convolutional layer as multi-bit values that are fixed for all timesteps. [32].

In addition to accommodating various forms of encoding inputs, supervised learning algorithms for SNNs have overcome various roadblocks associated with the discontinuous derivative of the spike activation function [38, 39]. In particular, recent works have shown that SNNs can be efficiently converted from artifical neural networks (ANNs) by approximating the activation value of ReLU neurons with the firing rate of spiking neurons [31]. Low-latency SNNs trained using ANN-SNN conversion, coupled with supervised training, have been able to perform at par with ANNs in terms of classification accuracy in traditional image classification tasks [32]. This motivates this work which explores the effectiveness of SNNs for HSI classification.

More specifically, this paper provides the following contributions:

-

•

We propose two convolutional architectures for HSI classification that can yield classification accuracies similar to state-of-the-art (SOTA) and are compatible with our ANN-SNN conversion framework.

-

•

We propose a hybrid training algorithm that first converts an ANN for HSI classification to an iso-architecture SNN, and then trains the latter using a novel quantization-aware spike timing dependent backpropagation (Q-STDB) algorithm.

-

•

We evaluate and compare the energy-efficiency of the SNNs obtained by our training framework, with standard ANNs, using appropriate energy models, which reveal that our SNNs trained for HSI classification can offer significant improvement in compute efficiency.

The remainder of this paper is structured as follows. In Section II we present necessary background and related work. Section III and IV describe our quantization-aware SNN training method and network architectures respectively. We present the detailed experimental evaluations of our proposal in Section V. We show the improvement in energy-efficiency of our proposed SNN for all the HSI classification tasks in Section VI. Finally, the paper concludes in Section VII.

II Background and Related Work

II-A SNN Modeling

An SNN consists of a network of neurons that communicate via a sequence of spikes modulated by synaptic weights. The activity of pre-synaptic neurons modulates the membrane potential of postsynaptic neurons, generating an action potential or spike when the membrane potential crosses a firing threshold. The spiking dynamics of a neuron are generally modeled using either the Integrate-and-Fire (IF) [40] or Leaky-Integrate-and-Fire (LIF) model [41]. Both IF and LIF neurons accumulate the input current into their respective states or membrane potentials. The difference between the two models is that the membrane potential of a IF neuron does not change during the time period between successive input spikes while the LIF neuronal membrane potential leaks at a constant rate. In this work, we use the LIF model to convert ANNs trained with ReLU activations, to SNNs, because the leak term provides a tunable control knob that can reduce inference latency and spiking activity.The IF model can be characterized by the following differential equation

| (1) |

where is the membrane capacitance, and are the membrane potential and input synaptic current of the neuron at time . As illustrated in Fig. 1(a), integrates the incoming (pre-neuron) binary spikes multiplied by weights . The neuron generates an output spike when exceeds the firing threshold . However, because of its continuous-time representation, Eq. 1 is incompatible for implementation in common Machine Learning (ML) frameworks (e.g. Pytorch). Hence, we follow an iterative version evaluated in discrete time, within which spikes are characterized as binary values (1 represents the presence of a spike) [42].

| (2) |

| (3) |

is the output spike at time step . Note that the third term in Eq. 2 exhibits soft reset by reducing the membrane potential by the threshold at time step , if an output spike is generated at the time step. Alternatively, hard reset implies resetting to . Soft reset minimizes the information loss by allowing the spiking neuron to carry forward the surplus potential above the firing threshold to the next time step [42].

II-B SNN Training Techniques

Recent research on training supervised deep SNNs can be broadly divided into three categories: 1) ANN-to-SNN conversion-based training, 2) Spike timing dependent backpropagation (STDB), and 3) Hybrid training.

II-B1 ANN-to-SNN Conversion

Recent works have demonstrated that SNNs can be efficiently converted from ANNs by approximating the activation value of ReLU neurons with the firing rate of spiking neurons [43, 44, 45, 31, 46]. This technique uses standard backpropagation-based training for the ANN models and helps an iso-architecture SNN achieve superior classification accuracy in image recognition tasks [44, 31]. However, the SNNs resulting from these ANN-SNN conversion algorithms require an order of magnitude higher latency compared to other training techniques [31]. In this work, we use ANN-SNN conversion as an initial step in Q-STDB because it is of relatively low complexity and yields high classification accuracy on deep networks.

II-B2 STDB

The threshold comparator in the IF neuronal model yields a discontinuous and thus non-differentiable function, making it incompatible with the powerful gradient-descent based learning methods. Consequently, several approximate training methodologies have been proposed to overcome the challenges associated with non-differentiability [38, 47, 48, 49]. The key idea of these works is to approximate the spiking neuron functionality with a continuous differentiable model or use surrogate gradients as an approximate version of the real gradients to perform gradient descent based training. Unfortunately, SNNs trained using this approach generally require a large number of time steps, in the order of few hundreds, to process an input. As a result, the backpropagation step requires the gradients of the unrolled SNN to be integrated over all these time steps. This multiple-iteration backpropagation-through-time (BPTT) coupled with the exploding memory complexity has hindered the applicability of surrogate gradient based learning methods to deep convolutional architectures.

II-B3 Hybrid Training

A recent paper [42] proposed a hybrid training methodology where the ANN-SNN conversion is performed as an initialization step and is followed by an approximate gradient descent algorithm. The authors observed that combining the two training techniques helps the SNNs converge within a few epochs while requiring fewer time steps. Another recent paper [32] proposed a training scheme for deep SNNs in which the membrane leak and the firing threshold along with other network parameters (weights) are updated at the end of every batch via gradient descent after ANN-SNN conversion. Moreover, [32] applied direct-input encoding where the pixel intensities of an image are fed into the SNN input layer as fixed multi-bit values each timestep to reduce the number of required fewer time steps needed to achieve SOTA accuracy. Thus, the first convolutional layer composed of LIF neurons acts as both a feature extractor and spike-generator. This is similar to rate-coding except that the spike-rate of the first hidden layer is a function of its weights, membrane leak, and threshold parameters that are all learned by gradient descent. This work extends these hybrid learning techniques by incorporating weight quantization, as defined below.

III Proposed Quantized SNN Training Method

In this section, we evaluate and compare the different choices for SNN quantization in terms of compute efficiency and model accuracy. We then incorporate the chosen quantization technique into STDB, which we refer to as Q-STDB.

III-A Study of Quantization Choice

Uniform quantization transforms a weight element to a range where is the bit-width of the quantized integer representation. There are primarily two choices for the above transformation, known as affine and scale quantization. Detailed descriptions of these two types of quantization can be found in [50].

Our key motivation for SNN weight quantization is the hardware acceleration of inference using energy-efficient integer or fixed-point computational units implemented as crossbar array based processing-in-memory (PIM) accelerators. Note that the six transistor SRAM array based in-memory computing requires low-precision weights for multiply-and-accumulate (MAC) operations due to low density of the bit-cells. Previous research [51, 52] have proposed post-training SNN quantization tailored towards unsupervised learning, which may not scale to complex vision tasks without requiring high-precision ( bits). In contrast, in this work, we propose quantization-aware training, where the weights are fake quantized (see [50]) in the forward path computations, while the gradients and weight updates are calculated using the full precision weights. There are several choices for sharing quantization parameters among the tensor elements in a SNN. We refer to this choice as quantization granularity. We employ per-tensor (or per-layer) granularity where the same quantization parameters are shared by all elements in the tensor, because this reduces the computational cost compared to other granularity choices with no impact on model accuracy. Activations are similarly quantized, but only in the SNN input layer, since they are binary spikes in the remaining layers.

To evaluate the compute cost, let us consider a 3-D convolutional layer , the dominant layer in HSI classification models, that performs a tensor operation where is the input activation tensor, and is the output activation tensor, where , , , are the input height, width, channel size, and spectral size, respectively. Similarly, , , and are the output height, width, number of filters and the output spectral size, respectively, and , , represent the filter size in the three spatial dimensions. The result of the real-valued operation can be approximated with quantized tensors and , by first dequantizing them producing and respectively, and then performing the convolution. Note that both and have similar dimensions as and respectively. Assuming the tensors are scale-quantized per layer,

| (4) |

where and are scalar values for scale quantization representing the levels of the input and weight tensor respectively. Hence, scale quantization results in an integer convolution, followed by a point-wise floating-point multiplication for each output element. Given that a typical convolution operation involves a few hundred MAC operations (accumulate for binary spike inputs) to compute an output element, a single floating-point operation for the scaling shown in Eq. 4 is a negligible computational cost. Note that only needs to be quantized if is the input layer. In all other cases, and .

Although both affine and scale quantization enable the use of low-precision arithmetic, affine quantization results in higher computationally expensive inference as shown below.

| (5) |

where and are tensors of sizes equal to that of and respectively that consist of repeated elements of the scalar zero-values of the input activation and weight tensor respectively. On the other hand, and are the corresponding scale values. The first term in the numerator of Eq. III-A is the integer convolution operation similar to the one performed in scale quantization shown in Eq. 4. The second term contains integer weights and zero-points, which can be computed offline, and adds an element-wise addition during inference. The third term, however, involves the quantized activation , which cannot be computed offline. This extra computation, depending on the implementation, can introduce considerable overhead, reducing or even eliminating the throughput and energy advantage that low precision PIM accelerators offer over floating-point MAC units. Hence, we use scale quantization during inference.

Note, however, that our experiments detailed in Section V show that using scale quantization during SNN training degrades the test accuracy significantly. Hence, we propose that training should use affine quantization of both the weights and input layer activations. Note that for a integer math unit or PIM accelerator, we do not necessarily need to quantize the SNN membrane potentials which are obtained as results of the accumulate operations of the weight elements. This is because the membrane potentials only need to be compared with the threshold voltage once for each time step, which consumes negligible energy, and can be performed using high precision fixed-point comparators (in the periphery of the crossbar array for PIM accelerators). However, quantizing the potentials can reduce the data movement cost as discussed in Section VI-B.

III-B Q-STDB based Training

In this subsection, we derive the expressions to compute the gradients of the parameters at all layers for our training framework. Our framework, which is illustrated in Fig. 2, incorporates the quantization methodology described above into the STDB technique used to train SNNs [32], where the spatial and temporal credit assignment is performed by unrolling the network in time and employing BPTT.

Output Layer: The neuron model in the output layer only accumulates the incoming inputs without any leakage, does not generate an output spike, and is described by

| (6) |

where is the number of output labels, is a vector containing the membrane potential of output neurons, is the fake quantized weight matrix connecting the last two layers ( and ), and is a vector containing the spike signals from layer . The loss function is defined on at the last time step . We employ the cross-entropy loss and compute the softmax of . The output of the network is passed through a softmax layer that outputs a probability distribution. The loss function is defined as the cross-entropy between the true output () and the SNN’s predicted distribution ().

| (7) |

The derivative of the loss function with respect to the membrane potential of the neurons in the final layer is described by , where and are vectors containing the softmax and one-hot encoded values of the true label respectively. To compute the gradient at the current time step, the membrane potential at the previous step is considered as an input quantity [32]. With the weights being fake quantized, gradient descent updates the network parameters of the output layer as

| (8) | ||||

| (9) | ||||

| (10) |

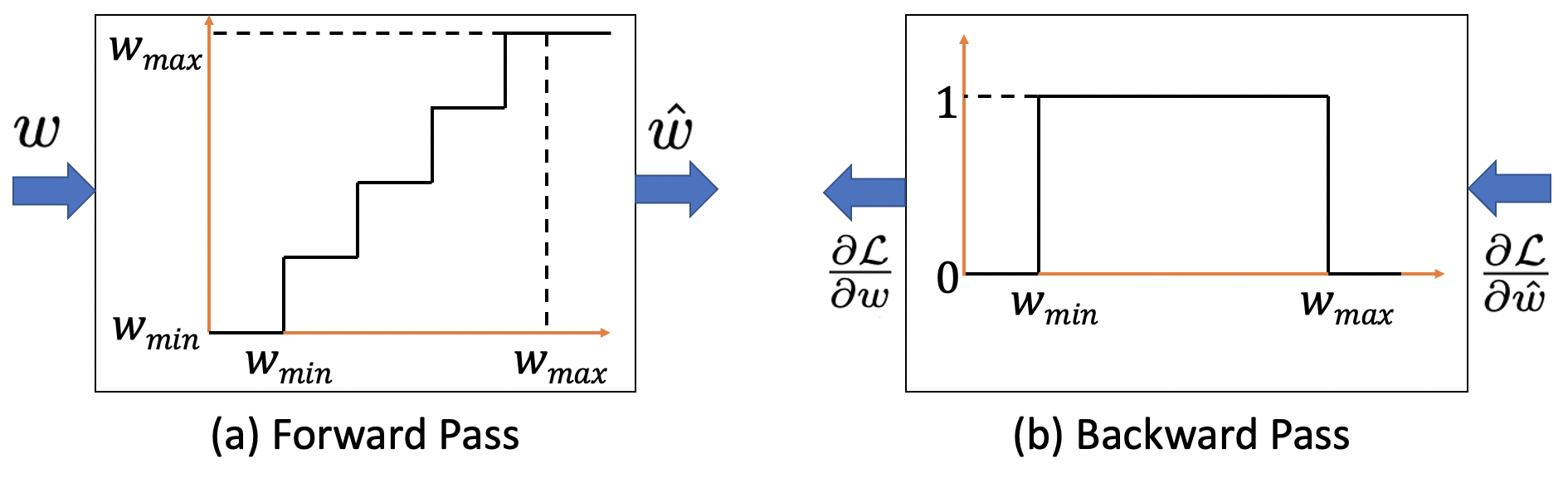

where is the learning rate (LR). Note that the derivative of the fake quantization function of the weights () is undefined at the step boundaries and zero everywhere, as shown in Fig. 3(a). Our training framework addresses this challenge by using the Straight-through Estimator (STE) [53], which approximates the derivative to be equal to 1 for inputs in the range as shown in Fig. 3(b), where and are the minimum and maximum values of the weights in a particular layer. Note that and are updated at the end of every mini-batch to ensure all the weights lie between and during the forward and backward computations in each training iteration. Hence, we use to compute the loss gradients in Eq. 9.

Hidden layers: The neurons in the hidden convolutional and fully-connected layers are defined by the quantized LIF model as

| (11) | ||||

| (12) |

where and represent the leak and threshold potential for all neurons in layer . All neurons in a layer possess the same leak and threshold value. This reduces the number of trainable parameters and we did not observe any significant improvement by assigning individual threshold/leak to each neuron. Given that the threshold is same for all neurons in a particular layer, it may seem redundant to train both the weights and threshold together. However, we observe that the number of time steps required to obtain the state-of-the-art classification accuracy decreases with this joint optimization. We hypothesize that this is because the optimizer is able to reach an improved local minimum when both parameters are tunable. The weight update in Q-STDB is calculated as

| (13) |

where and are the two discontinuous gradients. We calculate the former using STE described above, while the latter is approximated using surrogate gradient [48] shown below.

| (14) |

Note that is a hyperparameter denoting the maximum value of the gradient. The threshold and leak update is computed similarly using BPTT [32].

IV Proposed Architectures

We developed two models, a 3-D and a hybrid fusion of 3-D and 2-D convolutional architectures, that are inspired by the recently proposed CNN models [23, 26, 25] used for HSI classification and compatible with our ANN-SNN conversion framework. We refer to the two models CNN-3D and CNN-32H. The models are trained without the bias term because it complicates parameter space exploration which increases the conversion difficulty and tends to increase conversion loss. The absence of the bias term implies that batch normalization [54] cannot be used as a regularizer during the training process. Instead, we use dropout [55] as the regularizer for both ANN and SNN training. Moreover, our models employ ReLU nonlinearity after each convolutional and linear layer (except the classifier layer) to further decrease the conversion loss due to the similarity between ReLU and LIF neurons. Also, our pooling operations use average pooling because for binary spike based activation layers, max pooling incurs significant information loss. Additionally, we modified the number of channels and convolutional layers to obtain a reasonable tradeoff between accuracy and compute efficiency. 2-D patches of sizes and were extracted for CNN-3D and CNN-32H respectively, without any reduction in dimensionality from each dataset. Higher sized patches increase the computational complexity without any significant improvement in test accuracy. Our model architectures are explicitly described in Table I.

V Experiments

V-A Datasets

We used three publicly available datasets, namely Indian Pines, Pavia University, and Salinas scene. A brief description follows for each one [56].

Indian Pines: The Indian Pines (IP) dataset consists of spatial pixels and spectral bands in a range of nm. It was captured using the AVIRIS sensor over North-Western Indiana, USA, with a ground sample distance (GSD) of m and has vegetation classes.

Pavia University: The Pavia University (PU) dataset consists of hyperspectral images with pixels in the spatial dimension, and spectral bands, ranging from to nm in wavelength. It was captured with the ROSIS sensor with GSD of m over the University of Pavia, Italy. It has a total of 9 urban land-cover classes.

Salinas Scene: The Salinas Scene (SA) dataset contains images with spatial dimension and spectral bands in the wavelength range of to nm. The water absorbing spectral bands have been discarded. It was captured with the AVIRIS sensor over Salinas Valley, California with a GSD of m. In total classes are present in this dataset.

For preprocessing, images in all the data sets are normalized to have a zero mean and unit variance. For our experiments, all the samples are randomly divided into two disjoint training and test sets. The limited 40% samples are used for training and the remaining 60% for performance evaluation.

| Layer | Number of | Size of | Stride | Padding | Dropout |

| type | filters | each filter | value | value | value |

| Architecture : CNN-3D | |||||

| 3-D Convolution | 20 | (3,3,3) | (1,1,1) | (0,0,0) | - |

| 3-D Convolution | 40 | (3,1,1) | (2,1,1) | (1,0,0) | - |

| 3-D Convolution | 84 | (3,3,3) | (1,1,1) | (1,0,0) | - |

| 3-D Convolution | 84 | (3,1,1) | (2,1,1) | (1,0,0) | - |

| 3-D Convolution | 84 | (3,1,1) | (1,1,1) | (1,0,0) | - |

| 3-D Convolution | 84 | (2,1,1) | (2,1,1) | (1,0,0) | - |

| Architecture : CNN-32H | |||||

| 3-D Convolution | 90 | (18,3,3) | (7,1,1) | (0,0,0) | - |

| 2-D Convolution | 64 | (3,3) | (1,1) | (0,0) | - |

| 2-D Convolution | 128 | (3,3) | (1,1) | (0,0) | - |

| Avg. Pooling | - | (4,4) | (4,4) | (0,0) | - |

| Dropout | - | - | - | - | 0.2 |

| Linear | 30998528 | - | - | - | - |

V-B Experimental Setup

V-B1 ANN Training

We performed full-precision ANN training for epochs using the standard SGD optimizer with an initial learning rate (LR) of that decayed by a factor of 0.1 after , , and epochs.

V-B2 Conversion and SNN Training

We first examine the distribution of the neuron input values over the total number of time steps across all neurons of the first layer for a small batch of HSI images (of size in our case) and set the layer threshold to the percentile of the scaled value of the evaluated threshold [32]. In our experiments we scale the initial thresholds by 0.8. Similarly, we then compute the thresholds of the subsequent layers sequentially by examining the distribution of their input values. Note that we use time steps to evaluate the thresholds, while the SNN training and inference are performed with only time steps. We keep the leak of each layer set to unity while evaluating initial thresholds. At the start of SNN training, we initialize the weights with those from the trained ANN and initialize the leak parameters to . We then perform the quantization-aware SNN training described in Section III for another epochs. We set = [48] and used the ADAM optimizer with a starting LR of which decays by a factor of after , , and epochs.

| A. ANN | B. Accuracy after | C. Accuracy after | D. Accuracy after | E. Accuracy after | F. Accuracy after | |||||||||||||

| Dataset | accuracy () | ANN-to-SNN conv. () | FP SNN training () | 4-bit SNN training (%) | 5-bit SNN training (%) | 6-bit SNN training (%) | ||||||||||||

| OA | AA | Kappa | OA | AA | Kappa | OA | AA | Kappa | OA | AA | Kappa | OA | AA | Kappa | OA | AA | Kappa | |

| Architecture : CNN-3D | ||||||||||||||||||

| IP | 98.86 | 98.42 | 98.55 | 57.68 | 50.88 | 52.88 | 98.92 | 98.76 | 98.80 | 97.08 | 95.64 | 95.56 | 98.38 | 97.78 | 98.03 | 98.68 | 98.34 | 98.20 |

| PU | 99.69 | 99.42 | 99.58 | 91.16 | 88.84 | 89.03 | 99.47 | 99.06 | 99.30 | 98.21 | 97.54 | 97.75 | 99.26 | 98.48 | 98.77 | 99.50 | 99.18 | 99.33 |

| SS | 98.89 | 98.47 | 98.70 | 81.44 | 76.72 | 80.07 | 98.49 | 97.84 | 98.06 | 96.47 | 93.16 | 94.58 | 97.25 | 95.03 | 95.58 | 97.95 | 97.09 | 97.43 |

| Architecture : CNN-32H | ||||||||||||||||||

| IP | 97.60 | 97.08 | 97.44 | 70.88 | 66.56 | 67.89 | 97.27 | 96.29 | 96.35 | 96.63 | 95.81 | 95.89 | 97.23 | 96.08 | 96.56 | 97.45 | 96.73 | 96.89 |

| PU | 99.50 | 99.09 | 99.30 | 94.96 | 90.12 | 93.82 | 99.38 | 98.83 | 99.13 | 99.17 | 98.41 | 98.68 | 99.25 | 98.84 | 98.86 | 99.35 | 98.88 | 98.95 |

| SS | 98.88 | 98.39 | 98.67 | 88.16 | 84.19 | 85.28 | 97.92 | 97.20 | 97.34 | 97.34 | 96.32 | 96.77 | 97.65 | 96.81 | 96.97 | 97.99 | 97.26 | 97.38 |

| Authors | ANN/SNN | Architecture | OA () | AA () | Kappa () |

| Dataset : Indian Pines | |||||

| Alipour-Fard | ANN | MSKNet | 81.73 | 71.4 | 79.2 |

| et al. (2020) [21] | |||||

| Song et al. | ANN | DFFN | 98.52 | 97.69 | 98.32 |

| (2018)[22] | |||||

| Zhong et al. | ANN | SSRN | 99.19 | 98.93 | 99.07 |

| (2018) [57] | |||||

| Roy et al. | ANN | HybridSN | 98.39 | 98.01 | 98.16 |

| (2020) [25] | |||||

| Hamida et al. | ANN | 6-layer | 98.29 | 97.52 | 97.72 |

| (2018) [23] | SNN | 3D CNN | 95.88 | 94.26 | 95.34 |

| Luo et al. | ANN | Hybrid | 96.15 | 94.96 | 95.73 |

| (2018) [26] | SNN | CNN | 94.90 | 94.08 | 94.78 |

| This work | ANN | CNN-3D | 98.86 | 98.42 | 98.55 |

| SNN | 98.68 | 98.34 | 98.20 | ||

| This work | ANN | CNN-32H | 97.60 | 97.08 | 97.44 |

| SNN | 97.45 | 96.73 | 96.89 | ||

| Dataset : Pavia University | |||||

| Alipour-Fard | ANN | MSKNet | 90.66 | 88.09 | 87.64 |

| et al. (2020) [21] | |||||

| Song et al. | ANN | DFFN | 98.73 | 97.24 | 98.31 |

| (2018)[22] | |||||

| Zhong et al. | ANN | SSRN | 99.61 | 99.56 | 99.33 |

| (2018) [57] | |||||

| Hamida et al. | ANN | 6-layer | 99.32 | 99.02 | 99.09 |

| (2018) [23] | SNN | 3D CNN | 98.55 | 98.02 | 98.28 |

| Luo et al. | ANN | Hybrid | 99.05 | 98.35 | 98.80 |

| (2018) [26] | SNN | CNN | 98.40 | 97.66 | 98.21 |

| This work | ANN | CNN-3D | 99.69 | 99.42 | 99.58 |

| SNN | 99.50 | 99.18 | 99.33 | ||

| This work | ANN | CNN-32H | 99.50 | 99.09 | 99.30 |

| SNN | 99.35 | 98.88 | 98.95 | ||

V-C ANN & SNN Inference Results

We have used the Overall Accuracy (OA), Average Accuracy (AA), and Kappa Coefficient evaluation measures to evaluate the HSI classification performance for our proposed architectures, similar to [23]. Here, OA represents the number of correctly classified samples out of the total test samples. AA represents the average of class-wise classification accuracies, and Kappa is a statistical metric used to assess the mutual agreement between the ground truth map and classification map. Column- in Table II shows the ANN accuracies, column- shows the accuracy after ANN-SNN conversion with timesteps. Column- shows the accuracy when we perform our proposed training without quantization, while columns 5 to 7 shows the SNN test accuracies obtained with Q-STDB for different bit precisions (4 to 6 bits) of the weights. We observe that for all the datasets, SNNs trained with 6-bit weights result in reduction in bit-precision compared to full-precision (32-bit) models and perform almost at par with the full precision ANNs on both the architectures. 4-bit weights do not incur significant accuracy drop as well, and can be used for applications demanding high energy-efficiency and low latency. Fig. 5 shows the confusion matrix for the HSI classification performance of the ANN and proposed SNN over the IP dataset for both the architectures. Although the membrane potentials do not need to be quantized as described in Section III, we observed that the model accuracy does not drop significantly even if we quantize them, and hence, the SNN results shown in Table II correspond to 6-bit membrane potentials. Moreover, quantized membrane potentials can reduce the data movement cost as discussed in Section VI-B.

The performance of our ANNs and SNNs trained via Q-STDB are compared with the current state-of-the-art ANNs used for HSI classification in Table III. Note that mere porting the ANN architectures used in [23, 26] to SNNs, and performing 6-bit Q-STDB results in significant accuracy drop, and hence, shows the efficacy of our proposed architectures.

V-C1 Q-STDB vs Post-Training Quantization (PTQ)

PTQ cannot always yield ultra low-precision SNNs with SOTA test accuracy. For example, for the IP dataset and CNN-32H architecture, the lowest bit precision of the weights that the SNNs can be trained with PTQ for no more than reduction in SOTA test accuracy is , if we limit the total number of time steps to . Fig. 4(b) shows the test accuracies for different bit precisions () of weights with PTQ on IP dataset. The weights can be further quantized to -bits if we increase the time steps to , which increases the latency. On the other hand, Q-STDB results in accurate ( deviation from ANN accuracy) bit SNNs with only time steps, which improves both the energy-efficiency and latency. The energy-efficiency of our proposed architectures trained with Q-STDB are quantified in Section VI.

V-C2 Affine vs Scale Quantization during Training

As illustrated in Section III, performing scale quantization during the forward path in training degrades the SNN accuracy significantly. Fig. 4(a) shows the test accuracies for affine and scale quantization during training with CNN-3D architecture on IP dataset.

VI Improvement in Energy-Delay Product

VI-A Spiking Activity

To model energy consumption, we assume a generated SNN spike consumes a fixed amount of energy [43]. Based on this assumption, earlier works [42, 31] have adopted the average spiking activity (also known as average spike count) of an SNN layer , denoted , as a measure of compute-energy of the model. In particular, is computed as the ratio of the total spike count in steps over all the neurons of the layer to the total number of neurons in that layer. Thus lower the spiking activity, the better the energy efficiency.

Fig. 6 shows the average number of spikes for each layer with Q-STDB when evaluated for samples from the IP testset for the CNN-3D architecture. Let the average be denoted by which is computed by summing all the spikes in a layer over time steps and dividing by the number of neurons in that layer. For example, the average spike count of the convolutional layer of the SNN is , which implies that over a timestep period each neuron in that layer spikes times on average over all input samples.

VI-B Floating point operations count (FLOPs) & Total Energy

Let us assume a 3-D convolutional layer having weight tensor that operates on an input activation tensor , where the notations are similar to the one used in Section III. We now quantify the energy consumed to produce the corresponding output activation tensor for an ANN and SNN, respectively. Our model can be extended to fully-connected layers with and as the number of input and output features respectively, and to 2-D convolutional layers, by shrinking a dimension of the feature maps. In particular, for an ANN, the total number of FLOPS for layer , denoted , is shown in row of Table IV [58, 59]. The formula can be easily adjusted for an SNN in which the number of FLOPs at layer is a function of the average spiking activity at the layer denoted as in Table IV. Thus, as the activation output gets sparser, the compute energy decreases.

For ANNs, FLOPs primary consist of multiply accumulate (MAC) operations of the convolutional and linear layers. On the contrary, for SNNs, except the first and last layer, the FLOPs are limited to accumulates (ACs) as the spikes are binary and thus simply indicate which weights need to be accumulated at the post-synaptic neurons. For the first layer, we need to use MAC units as we consume analog input111Note that for the hybrid coded data input we need to perform MAC at the first layer at , and AC operation during remaining timesteps at that layer. For the direct coded input, only MAC during the timestep is sufficient, as neither the inputs nor the weights change during remaining timesteps (i.e. ). (at timestep one). Hence, the compute energy for an ANN and an iso-architecture SNN model can be written as

| (15) | ||||

| (16) |

where is the total number of layers. Note that and are the energy consumption for a MAC and AC operation respectively. As shown in Table V, is lower than [60] in nm CMOS technology for 32-bit precision. To compute and for any arbitrary bit precision (6-bits in our work), we use [61], and [62]. These numbers may vary for different technologies, but generally, in most technologies, an AC operation is significantly less expensive than a MAC operation and its’ energy scales close to linearly with bit precision.

| Model | Number of FLOPs | |||

| Notation | 3-D Conv. layer | 2-D Conv. layer | FC layer | |

Fig. 7 illustrates the compute energy consumption and FLOPs for full precision ANN and 6-bit quantized SNN models of the two proposed architectures while classifying the IP, PU, and SS datasets, where the energy is normalized to that of an equivalent ANN. We also consider -bit ANN models to compare the energy-efficiency of low-precision ANNs and SNNs. As seen in Fig. 7, 6-bit ANN models are energy-efficient compared to 32-bit ANN models due to the similar factor of improvement in MAC energy (see Table V). Note that we can achieve the HSI test accuracies shown in Table II with quantized ANNs as well.

The FLOPs for SNNs obtained by our proposed training framework is smaller than that for an ANN with similar number of parameters due to low spiking activity. Moreover, because the ACs consume significantly less energy than MACs for all bit precisions, SNNs are significantly more compute efficient. In particular, for CNN-3D on IP, our proposed SNN consumes and less compute energy than an iso-architecture full-precision and 6-bit ANN with similar parameters respectively. The improvements become and respectively on averaging across the two network architectures and three datasets. Note that we did not consider the memory access energy in our evaluation because it is dependent on the underlying system architecture. In general, SNNs incur significant data movement because both the membrane potentials and weights need to be fetched from the on-chip memory. Q-STDB addresses the memory cost by reducing their bit precisions by (see Section V-C) compared to full-precision models. Moreover, there have been many proposals to reduce the memory cost by data buffering [63], computing in non-volatile crossbar memory arrays [64], and data reuse with energy-efficient dataflows [65]. All these techniques can be complemented with Q-STDB to further decrease the memory cost.

| Bit-precision | Operation | Energy () |

| 32 | Mutiply-and-Accumulate (MAC) | |

| Accumulate (AC) | ||

| 6 | Mutiply-and-Accumulate (MAC) | |

| Accumulate (AC) |

VII Conclusions and Broader Impact

In this paper, we propose a spiking version of a 3-D and hybrid combination of 3-D and 2-D convolutional architectures for HSI classification. We present a quantization-aware training technique, that yields highly accurate low-precision SNNs, which can be accelerated by integer math units or PIM accelerators. Our quantized SNNs offer significant improvements in energy consumption compared to both full and low-precision ANNs for HSI classification.

Our proposal results in energy-efficient SNN models, which can be readily deployed in HSI sensors, thereby eliminating the bandwidth and privacy concerns of going to the cloud. Since the commercial applications of HSI analysis are broadly expanding and the models required to train HSI are becoming deeper, energy-efficiency becomes a key concern, as seen in traditional computer vision tasks. To the best of our knowledge, this work is the first to address energy-efficiency of HSI models, and can hopefully inspire more research in low power algorithm-hardware co-design of neural network models for HSI classification.

References

- [1] Y. Chen, Z. Lin, X. Zhao, G. Wang, and Y. Gu, “Deep learning-based classification of hyperspectral data,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 7, no. 6, pp. 2094–2107, 2014.

- [2] Y. Wan, Y. Fan, and M. Jin, “Application of hyperspectral remote sensing for supplementary investigation of polymetallic deposits in huaniushan ore region, northwestern china,” Scientific Reports, vol. 11, p. 440, 01 2021.

- [3] A. Papp, J. Pegoraro, D. Bauer, P. Taupe, C. Wiesmeyr, and A. Kriechbaum-Zabini, “Automatic annotation of hyperspectral images and spectral signal classification of people and vehicles in areas of dense vegetation with deep learning,” Remote Sensing, vol. 12, no. 13, 2020.

- [4] Z. Zheng, Y. Zhong, A. Ma, and L. Zhang, “FPGA: Fast patch-free global learning framework for fully end-to-end hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 58, no. 8, pp. 5612–5626, 2020.

- [5] F. Melgani and L. Bruzzone, “Classification of hyperspectral remote sensing images with support vector machines,” IEEE Transactions on Geoscience and Remote Sensing, vol. 42, no. 8, pp. 1778–1790, 2004.

- [6] M. Pal, “Random forests for land cover classification,” in IGARSS 2003. 2003 IEEE International Geoscience and Remote Sensing Symposium. Proceedings (IEEE Cat. No.03CH37477), vol. 6, no. 1, 2003, pp. 3510–3512 vol.6.

- [7] J. Xia, N. Yokoya, and A. Iwasaki, “Hyperspectral image classification with canonical correlation forests,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 1, pp. 421–431, 2017.

- [8] B. Krishnapuram, L. Carin, M. A. T. Figueiredo, and A. J. Hartemink, “Sparse multinomial logistic regression: fast algorithms and generalization bounds,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 6, pp. 957–968, 2005.

- [9] G. Camps-Valls, L. Gomez-Chova, J. Munoz-Mari, J. Vila-Frances, and J. Calpe-Maravilla, “Composite kernels for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 3, no. 1, pp. 93–97, 2006.

- [10] B. Tu, X. Zhang, X. Kang, G. Zhang, J. Wang, and J. Wu, “Hyperspectral image classification via fusing correlation coefficient and joint sparse representation,” IEEE Geoscience and Remote Sensing Letters, vol. 15, no. 3, pp. 340–344, 2018.

- [11] P. Gao, J. Wang, H. Zhang, and Z. Li, “Boltzmann entropy-based unsupervised band selection for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 16, no. 3, pp. 462–466, 2019.

- [12] Q. Gao, S. Lim, and X. Jia, “Hyperspectral image classification using joint sparse model and discontinuity preserving relaxation,” IEEE Geoscience and Remote Sensing Letters, vol. 15, no. 1, pp. 78–82, 2018.

- [13] J. A. Benediktsson, J. A. Palmason, and J. R. Sveinsson, “Classification of hyperspectral data from urban areas based on extended morphological profiles,” IEEE Transactions on Geoscience and Remote Sensing, vol. 43, no. 3, pp. 480–491, 2005.

- [14] J. Li, P. R. Marpu, A. Plaza, J. M. Bioucas-Dias, and J. A. Benediktsson, “Generalized composite kernel framework for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 51, no. 9, pp. 4816–4829, 2013.

- [15] A. Krizhevsky et al., “ImageNet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems, 2012, pp. 1097–1105.

- [16] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [17] S. Ren, K. He, R. Girshick, and J. Sun, “Faster R-CNN: Towards real-time object detection with region proposal networks,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 6, p. 1137–1149, Jun. 2017.

- [18] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask R-CNN,” arXiv preprint arXiv:1703.06870, 2018.

- [19] V. K. Repala and S. R. Dubey, “Dual CNN models for unsupervised monocular depth estimation,” arXiv preprint arXiv:1804.06324, 2019.

- [20] K. Makantasis, K. Karantzalos, A. Doulamis, and N. Doulamis, “Deep supervised learning for hyperspectral data classification through convolutional neural networks,” in 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), vol. 1, no. 1, 2015, pp. 4959–4962.

- [21] T. Alipour-Fard, M. E. Paoletti, J. M. Haut, H. Arefi, J. Plaza, and A. Plaza, “Multibranch selective kernel networks for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 1, no. 1, pp. 1–5, 2020.

- [22] W. Song, S. Li, L. Fang, and T. Lu, “Hyperspectral image classification with deep feature fusion network,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 6, pp. 3173–3184, 2018.

- [23] A. Ben Hamida, A. Benoit, P. Lambert, and C. Ben Amar, “3-D deep learning approach for remote sensing image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 8, pp. 4420–4434, 2018.

- [24] H. Lee and H. Kwon, “Going deeper with contextual cnn for hyperspectral image classification,” IEEE Transactions on Image Processing, vol. 26, no. 10, pp. 4843–4855, 2017.

- [25] S. K. Roy, G. Krishna, S. R. Dubey, and B. B. Chaudhuri, “HybridSN: Exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 17, no. 2, pp. 277–281, 2020.

- [26] Y. Luo, J. Zou, C. Yao, X. Zhao, T. Li, and G. Bai, “HSI-CNN: A novel convolution neural network for hyperspectral image,” in 2018 International Conference on Audio, Language and Image Processing (ICALIP), vol. 1, no. 1, 2018, pp. 464–469.

- [27] D. Li, X. Chen, M. Becchi, and Z. Zong, “Evaluating the energy efficiency of deep convolutional neural networks on CPUs and GPUs,” in 2016 IEEE International Conferences on Big Data and Cloud Computing (BDCloud), Social Computing and Networking (SocialCom), Sustainable Computing and Communications (SustainCom) (BDCloud-SocialCom-SustainCom), vol. 1, no. 1, 2016, pp. 477–484.

- [28] Hien Van Nguyen, A. Banerjee, and R. Chellappa, “Tracking via object reflectance using a hyperspectral video camera,” in 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Workshops, vol. 1, no. 1, 2010, pp. 44–51.

- [29] M. Pfeiffer and T. Pfeil, “Deep learning with spiking neurons: Opportunities and challenges,” Frontiers in Neuroscience, vol. 12, p. 774, 2018.

- [30] P. U. Diehl, G. Zarrella, A. Cassidy, B. U. Pedroni, and E. Neftci, “Conversion of artificial recurrent neural networks to spiking neural networks for low-power neuromorphic hardware,” in 2016 IEEE International Conference on Rebooting Computing (ICRC). IEEE, 2016, pp. 1–8.

- [31] A. Sengupta, Y. Ye, R. Wang, C. Liu, and K. Roy, “Going deeper in spiking neural networks: VGG and residual architectures,” Frontiers in Neuroscience, vol. 13, p. 95, 2019.

- [32] N. Rathi and K. Roy, “DIET-SNN: Direct input encoding with leakage and threshold optimization in deep spiking neural networks,” arXiv preprint arXiv:2008.03658, 2020.

- [33] I. M. Comsa, K. Potempa, L. Versari, T. Fischbacher, A. Gesmundo, and J. Alakuijala, “Temporal coding in spiking neural networks with alpha synaptic function,” in ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), vol. 1, no. 1, 2020, pp. 8529–8533.

- [34] S. R. Kheradpisheh and T. Masquelier, “Temporal backpropagation for spiking neural networks with one spike per neuron,” International Journal of Neural Systems, vol. 30, no. 06, May 2020.

- [35] J. Kim, H. Kim, S. Huh, J. Lee, and K. Choi, “Deep neural networks with weighted spikes,” Neurocomputing, vol. 311, pp. 373–386, 2018.

- [36] D. A. Almomani, M. Alauthman, M. Alweshah, O. Dorgham, and F. Albalas, “A comparative study on spiking neural network encoding schema: implemented with cloud computing,” Cluster Computing, vol. 22, 06 2019.

- [37] G. Datta, S. Kundu, and P. A. Beerel, “Training energy-efficient deep spiking neural networks with single-spike hybrid input encoding,” arXiv preprint arXiv:2107.12374, 2021.

- [38] J. H. Lee, T. Delbruck, and M. Pfeiffer, “Training deep spiking neural networks using backpropagation,” Frontiers in Neuroscience, vol. 10, p. 508, 2016.

- [39] Y. Wu, L. Deng, G. Li, J. Zhu, Y. Xie, and L. Shi, “Direct training for spiking neural networks: Faster, larger, better,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, 2019, pp. 1311–1318.

- [40] S. Lu and A. Sengupta, “Exploring the connection between binary and spiking neural networks,” arXiv preprint arXiv:2002.10064, 2020.

- [41] C. Lee, S. S. Sarwar, P. Panda, G. Srinivasan, and K. Roy, “Enabling spike-based backpropagation for training deep neural network architectures,” Frontiers in Neuroscience, vol. 14, p. 119, 2020.

- [42] N. Rathi, G. Srinivasan, P. Panda, and K. Roy, “Enabling deep spiking neural networks with hybrid conversion and spike timing dependent backpropagation,” arXiv preprint arXiv:2005.01807, 2020.

- [43] Y. Cao, Y. Chen, and D. Khosla, “Spiking deep convolutional neural networks for energy-efficient object recognition,” International Journal of Computer Vision, vol. 113, pp. 54–66, 05 2015.

- [44] B. Rueckauer, I.-A. Lungu, Y. Hu, M. Pfeiffer, and S.-C. Liu, “Conversion of continuous-valued deep networks to efficient event-driven networks for image classification,” Frontiers in Neuroscience, vol. 11, p. 682, 2017.

- [45] P. U. Diehl, D. Neil, J. Binas, M. Cook, S. Liu, and M. Pfeiffer, “Fast-classifying, high-accuracy spiking deep networks through weight and threshold balancing,” in 2015 International Joint Conference on Neural Networks (IJCNN), vol. 1, no. 1, 2015, pp. 1–8.

- [46] Y. Hu, H. Tang, and G. Pan, “Spiking deep residual network,” arXiv preprint arXiv:1805.01352, 2018.

- [47] P. Panda and K. Roy, “Unsupervised regenerative learning of hierarchical features in spiking deep networks for object recognition,” arXiv preprint arXiv:1602.01510, 2016.

- [48] G. Bellec, D. Salaj, A. Subramoney, R. Legenstein, and W. Maass, “Long short-term memory and learning-to-learn in networks of spiking neurons,” arXiv preprint arXiv:1803.09574, 2018.

- [49] E. O. Neftci, H. Mostafa, and F. Zenke, “Surrogate gradient learning in spiking neural networks: Bringing the power of gradient-based optimization to spiking neural networks,” IEEE Signal Processing Magazine, vol. 36, no. 6, pp. 51–63, 2019.

- [50] S. R. Jain, A. Gural, M. Wu, and C. H. Dick, “Trained quantization thresholds for accurate and efficient fixed-point inference of deep neural networks,” arXiv preprint arXiv:1903.08066, 2020.

- [51] N. Rathi, P. Panda, and K. Roy, “STDP based pruning of connections and weight quantization in spiking neural networks for energy efficient recognition,” arXiv preprint arXiv:1710.04734, 2017.

- [52] M. B. G. Sulaiman, K. C. Juang, and C. C. Lu, “Weight quantization in spiking neural network for hardware implementation,” in 2020 IEEE International Conference on Consumer Electronics - Taiwan (ICCE-Taiwan), vol. 1, no. 1, 2020, pp. 1–2.

- [53] M. Courbariaux, I. Hubara, D. Soudry, R. El-Yaniv, and Y. Bengio, “Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or -1,” arXiv preprint arXiv:1602.02830, 2016.

- [54] N. Bjorck, C. P. Gomes, B. Selman, and K. Q. Weinberger, “Understanding batch normalization,” in Advances in Neural Information Processing Systems, 2018, pp. 7694–7705.

- [55] N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, and R. Salakhutdinov, “Dropout: A simple way to prevent neural networks from overfitting,” Journal of Machine Learning Research, vol. 15, pp. 1929–1958, 06 2014.

- [56] M. Graña, M. A. Veganzons, and B. Ayerdi, “Hyperspectral remote sensing scenes,” http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes.

- [57] Z. Zhong, J. Li, Z. Luo, and M. Chapman, “Spectral–spatial residual network for hyperspectral image classification: A 3-D deep learning framework,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 2, pp. 847–858, 2018.

- [58] S. Kundu, M. Nazemi, M. Pedram, K. M. Chugg, and P. Beerel, “Pre-defined sparsity for low-complexity convolutional neural networks,” IEEE Transactions on Computers, 2020.

- [59] S. Kundu, S. Prakash, H. Akrami, P. A. Beerel, and K. M. Chugg, “pSConv: A pre-defined sparse kernel based convolution for deep CNNs,” in 2019 57th Annual Allerton Conference on Communication, Control, and Computing (Allerton). IEEE, 2019, pp. 100–107.

- [60] M. Horowitz, “1.1 Computing’s energy problem (and what we can do about it),” in 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC). IEEE, 2014, pp. 10–14.

- [61] B. Moons, R. Uytterhoeven, W. Dehaene, and M. Verhelst, “14.5 envision: A 0.26-to-10TOPS/W subword-parallel dynamic-voltage-accuracy-frequency-scalable convolutional neural network processor in 28nm fdsoi,” in 2017 IEEE International Solid-State Circuits Conference (ISSCC), vol. 1, no. 1, 2017, pp. 246–247.

- [62] W. Simon, J. Galicia, A. Levisse, M. Zapater, and D. Atienza, “A fast, reliable and wide-voltage-range in-memory computing architecture,” in 2019 56th ACM/IEEE Design Automation Conference (DAC), vol. 1, no. 1, 2019, pp. 1–6.

- [63] Y. Shen, M. Ferdman, and P. Milder, “Escher: A CNN accelerator with flexible buffering to minimize off-chip transfer,” in 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), vol. 1, no. 1, 2017, pp. 93–100.

- [64] B. Chen, F. Cai, J. Zhou, W. Ma, P. Sheridan, and W. D. Lu, “Efficient in-memory computing architecture based on crossbar arrays,” in 2015 IEEE International Electron Devices Meeting (IEDM), vol. 1, no. 1, 2015, pp. 1–4.

- [65] Y.-H. Chen, J. Emer, and V. Sze, “Eyeriss: A spatial architecture for energy-efficient dataflow for convolutional neural networks,” ACM SIGARCH Computer Architecture News, vol. 44, 06 2016.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/26f74f48-c2be-4ba7-baaa-9606d0edf81a/bio_gourav_white.png) |

Gourav Datta received his bachelors’ degree in Instrumentation Engineering with a minor in Electronics and Electrical Communication Engineering from Indian Institute of Technology (IIT) Kharagpur, India in 2018. He then joined the Ming Hsieh Department of Electrical and Computer Engineering at the University of Southern California where he is currently pursuing a PhD degree. He interned at Apple Inc. and INRIA Research Centre in the summers of 2019 and 2017, respectively. His research focuses on the entire computing stack, including devices, circuits, architectures and algorithms for accelerating machine learning workloads. During his tenure at IIT Kharagpur, he has received the Institute Silver medal and was adjudged the best outgoing student in academics in his batch. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/26f74f48-c2be-4ba7-baaa-9606d0edf81a/bio_sk_pic.jpg) |

Souvik Kundu received his M. Tech degree in Microelectronics and VLSI design from Indian Institute of Technology Kharagpur, India in 2015. He worked as R & D Engineer II at Synopsys India Pvt. Ltd. and as Digital Design Engineer at Texas Instruments India Pvt. Ltd. from 2015 to 2016 and from 2016 to 2017, respectively. He is currently working towards the Ph.D. degree in Electrical and Computer Engineering at the University of Southern California, Los Angeles, CA, USA. His research focuses on energy aware sparsity, model search, algorithm-hardware co-design of robust and energy-efficient neural networks for CMOS and beyond CMOS technology. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/26f74f48-c2be-4ba7-baaa-9606d0edf81a/headshot.jpg) |

Akhilesh R. Jaiswal is a Research Assistant Professor of Electrical and Computer Engineering and a Scientist at USC’s Information Sciences Institute’s (ISI) Application Specific Intelligent Computing (ASIC) Lab. Prior to USC/ISI, Dr. Jaiswal was a Senior Research Engineer with GLOBALFOUNDIRES (GF) at Malta. Dr. Jaiswal received his Ph.D. degree in Nano-electronics from Purdue University in May 2019. As a part of doctoral program his research focused on 1) CMOS based analog and digital in-memory and near-memory computing using standard memory bit-cells for beyond von-Neumann computing. 2) Exploration of bio-mimetic devices and circuits using emerging non-volatile technologies for Neuromorphic computing. His current research interest includes exploration of ’alternate computing paradigms’ using ’alternate state variables’. Dr. Jaiswal has authored several publications and holds 15+ issued and several pending patents with the USPTO. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/26f74f48-c2be-4ba7-baaa-9606d0edf81a/bio_Beerel_pic.jpg) |

Peter A. Beerel received his B.S.E. degree in Electrical Engineering from Princeton University, Princeton, NJ, in 1989 and his M.S. and Ph.D. degrees in Electrical Engineering from Stanford University, Stanford, CA, in 1991 and 1994, respectively. He then joined the Ming Hsieh Department of Electrical and Computer Engineering at the University of Southern California where he is currently a professor and the Associate Chair of the Computer Engineering Division. He is also a Research Director at the Information Science Institute at USC. Previously, he co-founded TimeLess Design Automation to commercialize an asynchronous ASIC flow in 2008 and sold the company in 2010 to Fulcrum Microsystems which was bought by Intel in 2011. His interests include a variety of topics in computer-aided design, machine learning, hardware security, and asynchronous VLSI and the commercialization of these technologies. He is a Senior Member of the IEEE. |