Hybrid PHD-PMB Trajectory Smoothing Using Backward Simulation

Abstract

The probability hypothesis density (PHD) and Poisson multi-Bernoulli (PMB) filters are two popular set-type multi-object filters. Motivated by the fact that the multi-object filtering density after each update step in the PHD filter is a PMB without approximation, in this paper we present a multi-object smoother involving PHD forward filtering and PMB backward smoothing. This is achieved by first running the PHD filtering recursion in the forward pass and extracting the PMB filtering densities after each update step, before the Poisson point process (PPP) approximation that is inherent in the PHD filter update. Then, in the backward pass, we apply backward simulation for sets of trajectories to the extracted PMB filtering densities. We call the resulting multi-object smoother the hybrid PHD-PMB trajectory smoother. Notably, the hybrid PHD-PMB trajectory smoother can provide smoothed trajectory estimates for the PHD filter without labeling or tagging, which is not possible for existing PHD smoothers. Also, compared to the trajectory PHD filter, which can only estimate alive trajectories, the hybrid PHD-PMB trajectory smoother enables the estimation of the set of all trajectories. Simulation results demonstrate that the hybrid PHD-PMB trajectory smoother outperforms the PHD filter in terms of both state and cardinality estimates, and the trajectory PHD filter in terms of false detections.

I Introduction

Multi-object filtering concerns the estimation of the number of objects and their current states based on a sequence of noisy measurements [1, 2]. This problem is compounded by missed detections, clutter, and data association uncertainties. To address the multi-object filtering problem in a computationally efficient way, the probability hypothesis density (PHD) filter recursively propagates the first-order moment approximation of the multi-object posterior density, which is also known as the PHD [3]. By doing so, the PHD filter operates on the single-object space and, consequently, avoids the data association problem arising from multiple objects. Due to its computational efficiency, the PHD filter has been widely used in many applications [4, 5, 6, 7, 8, 9].

The estimation of multi-object states by also using measurements at later time steps is called multi-object smoothing. The PHD smoother involves first a forward PHD filtering recursion and then a backward smoothing recursion [10, 11]; the latter is performed by propagating the PHD of the multi-object smoothing density backward in time. Particle filter implementations of the PHD smoother were provided in [10, 11, 12, 13, 14] and the closed-form Gaussian implementations were presented in [15, 16]. However, it has been reported in [11, 12, 16] that, compared to the PHD filter, the PHD smoother reduces the localization error, but it does not necessarily improve the cardinality estimate. In particular, the PHD smoother suffers from premature object deaths [12] by reporting missed detections at earlier time steps when objects disappear, with a lag equal to the smoothing lag.

A common drawback of the PHD filter and smoother is that they do not maintain track continuity and thus cannot provide object trajectory estimates. A simple track-building procedure for the PHD filter is to add labels/tags to the object states or PHD components, so that track continuity can be established by linking the object states or PHD components with the same label/tag over time [17, 18]. These methods may work well in some scenarios but can also result in track switches, and even missed and false detections; see [19].

A mathematically rigorous way to build trajectories for the PHD filter without using any labels/tags is to consider sets of trajectories [20]. The resulting filter is called the trajectory PHD filter, which directly provides object trajectory estimates by recursively propagating a Poisson multi-object density on the space of sets of trajectories [19]. Furthermore, smoothed trajectories can be obtained in forward filtering by considering smoothing-while-filtering. However, the trajectory PHD filter is mainly used for estimating trajectories of alive objects, and it cannot properly estimate the set of all trajectories, which also includes trajectories of dead objects.

The possibility of generating sets of smoothed trajectories for both alive and dead objects using a sequence of (unlabeled) multi-object filtering densities has been explored in [21]. In particular, this work showed that we can sample sets of trajectories using backward simulation [22] even when the forward multi-object filter is an unlabeled filter that does not explicitly maintain track continuity. The presented multi-trajectory smoothing solution has been applied to the Poisson multi-Bernoulli (PMB) filters [23, 24], and the resulting multi-trajectory smoother has achieved state-of-the-art multi-trajectory estimation performance. However, there are no similar methods for the PHD filters. (Backward simulation was first applied to the particle-based PHD filter in [13] to generate smoothed trajectories with labels, but the algorithm developed in [13] can only track up to two objects.)

In this paper, we present a hybrid PHD-PMB trajectory smoother, which involves PHD forward filtering and PMB backward smoothing. In PHD filtering, the predicted multi-object density is a Poisson point process (PPP) without approximation, but the updated multi-object density is a PMB [23]. The Bayesian filtering recursion is achieved by finding the Poisson approximation of the PMB density that matches its PHD. The key difference between our proposed hybrid PHD-PMB trajectory smoother and all the existing PHD smoothers [10, 11, 12, 13, 14, 15, 16] is that we perform backward smoothing on the PMB multi-object densities in the PHD filtering recursion before the Poisson approximation. Considering that the PMB representation of the multi-object posterior is an inherent intermediate result that we obtain for free in the PHD update step (as we will show in Section IV), it makes sense to perform smoothing using this more informative multi-object posterior representation.

The hybrid PHD-PMB trajectory smoother leverages the simplicity of PHD filtering [4] and the ability to generate smoothed trajectories using backward simulation [25, 21]. Importantly, the hybrid PHD-PMB trajectory smoother makes it possible to generate smoothed trajectories for all the objects that have existed in a theoretically sound manner without using any labels/tags. We compare the proposed hybrid PHD-PMB trajectory smoother to the PHD [4] and trajectory PHD [19] filters in a simulation study, and the results demonstrate that the proposed method has superior multi-object state and trajectory estimation performance. The results also show that the hybrid PHD-PMB trajectory smoother is more robust to the premature object death problem [11, 12].

The rest of the paper is organized as follows. In Section II, we introduce the background. The problem formulation is given in Section III. We present the filtering and smoothing recursions of the hybrid PHD-PMB trajectory smoother in Section IV. The results are presented in Section V, and the conclusions are drawn in Section VI.

II Background

In this section, we introduce the state variables of interest, densities on sets of objects and sets of trajectories, and the multi-object models. The set of finite subsets of a generic space $D$ is denoted by $\mathcal{F}(D)$, and the cardinality of a set $A$ is $|A|$. The sequence of ordered positive integers $(\alpha, \alpha+1, \dots, \gamma)$ is $\alpha:\gamma$. We use $\uplus$ to denote a union of sets that are mutually disjoint and $\langle f, g \rangle$ to denote the inner product $\int f(x) g(x) \mathrm{d}x$. In addition, we use $\delta_x(\cdot)$ and $\delta_x[\cdot]$ to represent the Dirac and Kronecker delta functions, respectively, centered at $x$.

II-A State Variables

The single-object state is described by a vector . A trajectory is represented as a variable where is the initial time step of the trajectory, is its length, and denotes a finite sequence of length that contains the object states at time steps . For two time steps and , , a trajectory in the time interval existing from time step to satisfies that , and the variable hence belongs to the set . A single trajectory in time interval therefore belongs to the space [20].

A set of single-object states is a finite subset of , and a set of trajectories is a finite subset of . The subset of trajectories in that were alive at time step where is denoted by

| (1) |

Given a single-object trajectory , the set of object states at time step is

| (2) |

and given a set of trajectories, the set of object states at time step is .

II-B Densities on Sets of Objects

Given a real-valued function on the space of finite sets of objects, its set integral is [26]

| (3) |

A function on the space is a multi-object density if and its set integral is one.
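As a worked check of the normalization condition, consider a Poisson point process density written in a common form. The symbols $\lambda(\cdot)$ for the intensity and $\bar{\lambda}$ for its integral are notation assumed here for illustration. Inserting this density into the set integral gives a Poisson series that sums to one:

```latex
\begin{align}
f^{\mathrm{ppp}}(\{x_1,\dots,x_n\}) &= e^{-\bar{\lambda}} \prod_{i=1}^{n} \lambda(x_i),
\qquad \bar{\lambda} = \int \lambda(x)\,\mathrm{d}x, \\
\int f^{\mathrm{ppp}}(\mathbf{x})\,\delta\mathbf{x}
&= \sum_{n=0}^{\infty} \frac{1}{n!} \int f^{\mathrm{ppp}}(\{x_1,\dots,x_n\})\,\mathrm{d}x_1 \cdots \mathrm{d}x_n
 = e^{-\bar{\lambda}} \sum_{n=0}^{\infty} \frac{\bar{\lambda}^{\,n}}{n!} = 1 .
\end{align}
```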

II-C Densities on Sets of Trajectories

Given a real-valued function on the single trajectory space , its integral is [20]

| (8) |

which goes through all possible start times, lengths and object states of trajectory . A function on the space is a multi-trajectory density if and its set integral is one. The trajectory PPP and trajectory MB densities are defined similarly to their counterparts in (5) and (6), respectively. Given two single-object trajectories and , the trajectory Dirac delta function is defined as

| (9) |

and the multi-trajectory Dirac delta function centred at is defined as [26, Sec. 11.3.4.3]

| (10) |

Given a set of object states at time step , the set of trajectories in the time interval is

| (11) |

where trajectory has start time and length . Therefore, it holds that the multi-trajectory density , which is zero for trajectories outside the interval , takes the same value as the multi-object state density .

II-D Multi-Object Models

We consider the standard multi-object models [26]. For a given multi-object state $\mathbf{x}_k$ at time step $k$, each object state $x \in \mathbf{x}_k$ is either detected with probability $p^D(x)$ and generates one measurement with density $g(\cdot|x)$, or missed with probability $1 - p^D(x)$. The set $\mathbf{z}_k$ of measurements at time step $k$ is the union of the object-generated measurements and PPP clutter with intensity $\lambda^C(\cdot)$.

Given the current multi-object state $\mathbf{x}_k$, each object $x \in \mathbf{x}_k$ survives with probability $p^S(x)$ and moves to a new state with a Markovian transition density $\pi(\cdot|x)$, or dies with probability $1 - p^S(x)$. The multi-object state at the next time step is the union of the surviving objects and new objects, which are born independently of the rest. The set of newborn objects is a PPP with intensity $\lambda^B(\cdot)$.
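The generative structure of these models can be made concrete with a short simulation. The following is a minimal sketch for scalar states, assuming constant survival and detection probabilities, random-walk motion, additive Gaussian measurement noise, and births and clutter that are uniform over an interval; the function names and all parameter names are hypothetical and chosen only for illustration.

```python
import math
import random


def sample_poisson(rate, rng):
    """Draw a Poisson-distributed count using Knuth's multiplication method."""
    threshold = math.exp(-rate)
    count = 0
    product = rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def step(states, p_s, p_d, q_std, r_std, birth_rate, clutter_rate, area, rng):
    """Simulate one time step; returns (next_states, measurements)."""
    # Survival and Markov motion: each object survives independently.
    survivors = [x + rng.gauss(0.0, q_std) for x in states if rng.random() < p_s]
    # PPP birth, independent of the existing objects (uniform intensity assumed).
    births = [rng.uniform(*area) for _ in range(sample_poisson(birth_rate, rng))]
    next_states = survivors + births
    # Detection: each object generates at most one measurement.
    detections = [x + rng.gauss(0.0, r_std) for x in next_states if rng.random() < p_d]
    # PPP clutter (uniform intensity assumed).
    clutter = [rng.uniform(*area) for _ in range(sample_poisson(clutter_rate, rng))]
    measurements = detections + clutter
    rng.shuffle(measurements)  # the measurement set carries no ordering information
    return next_states, measurements
```

Shuffling the measurements reflects that the filter receives an unordered set, so the origin of each measurement is unknown.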

II-E Multi-Trajectory Dynamic Model

Performing backward smoothing on multi-trajectory density requires a dynamic model for the set of all trajectories that have existed up to the current time step. Given a set of all trajectories in the time interval , each trajectory “survives” with probability one, , and moves to a new state according to [20]

| (12) |

That is, if the object trajectory has died before time step , the trajectory remains unaltered with probability one. If trajectory exists at time step , it remains unaltered with probability , or the new final object state is generated according to the single-object transition density with probability . The set of trajectories in the time interval is the union of “surviving” trajectories and newborn trajectories, where each new trajectory has a deterministic start time , length , and its multi-object state is a PPP with intensity . The trajectory PPP birth at time step has intensity

| (13) |
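The single-trajectory transition described above can be sketched in a few lines. This is an illustrative sketch for scalar states with a constant survival probability and random-walk motion; the tuple representation `(start, states)` and the function name are assumptions made here.

```python
import random


def propagate_trajectory(traj, k, p_s, q_std, rng):
    """Propagate a trajectory from the interval ending at k to the one ending at k + 1.

    traj is (start, states), where states[i] is the state at time step start + i.
    """
    start, states = traj
    end = start + len(states) - 1
    if end < k:
        # The object died before time step k: unaltered with probability one.
        return traj
    if rng.random() < p_s:
        # The object survives: append a new final state drawn from the motion model.
        new_state = states[-1] + rng.gauss(0.0, q_std)
        return (start, states + [new_state])
    # The object dies: the trajectory is kept but is not extended.
    return traj
```

In every branch the trajectory itself is retained, which is the sense in which trajectories "survive" with probability one in the set of all trajectories.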

III Problem Formulation

In backward smoothing for sets of trajectories, we aim to compute the multi-trajectory posterior density in a given time interval using a sequence of multi-object filtering densities and the multi-trajectory dynamic model.

We denote the multi-object state density at time step and the multi-trajectory density in time interval , both conditioned on the sequence of sets of measurements up to and including time step , by and , respectively. In forward filtering, the Bayes filter propagates the multi-object posterior density of in time via the prediction and update steps:

| (14) | ||||

| (15) |

where is the multi-object transition density and is the measurement likelihood. Given the multi-object models described in Section II-D, the predicted and posterior densities on the current set of object states are PMB mixtures (PMBMs) [23]. A PMB is a special case of PMBM with a single MB.

The multi-trajectory density can be obtained by applying the following multi-trajectory smoothing equation recursively backwards in time [21, Theorem 2]:

| (16) |

where the predicted multi-trajectory density for the multi-trajectory dynamic model in Section II-E is given by [21, Theorem 1]

| (17) |

It can be observed that the predicted multi-trajectory density can be evaluated by multiplying the multi-trajectory density , which takes the same value as , for trajectories that end at time step , for trajectories that exist at both time step and , and the density of newborn trajectories at time step .

The backward smoothing equation (16), in general, cannot be computed in closed form. An effective solution to evaluate the multi-trajectory posterior is given by backward simulation, which allows us to draw samples in the multi-object trajectory space [21]. Performing backward simulation requires a backward kernel to recursively draw samples of sets of trajectories.
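To illustrate the idea of backward simulation in its simplest setting, the sketch below draws one smoothed trajectory for a single object with a scalar linear Gaussian model, as in [22]. It is not the multi-trajectory sampler of this paper: there is no birth, death, or data association, and the function name and arguments are hypothetical. The filtering densities are assumed to be Gaussian with the given means and variances, and the motion model is assumed to be x_{k+1} = a x_k plus zero-mean noise with variance q > 0.

```python
import random


def backward_simulate(means, variances, a, q, rng):
    """Draw one trajectory from the joint smoothing density by backward simulation."""
    num_steps = len(means)
    traj = [0.0] * num_steps
    # Start from the filtering density at the final time step.
    traj[-1] = rng.gauss(means[-1], variances[-1] ** 0.5)
    for k in range(num_steps - 2, -1, -1):
        m, p = means[k], variances[k]
        # Backward kernel: filtering density at k conditioned on the sampled x_{k+1}.
        gain = p * a / (a * a * p + q)
        mean = m + gain * (traj[k + 1] - a * m)
        var = p - gain * a * p
        traj[k] = rng.gauss(mean, max(var, 0.0) ** 0.5)
    return traj
```

Repeating the draw with independent random numbers gives an equally weighted particle approximation of the smoothing density over the whole trajectory.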

The backward kernel for sets of trajectories conditioned on the set of trajectories in the time interval and the sequence of measurement sets up to and including time step , satisfies [21, Lemma 3]

| (18) |

That is, the backward kernel is proportional to the predicted multi-trajectory density if , and zero otherwise. Evaluating the backward kernel considers all different ways of associating the trajectories in to the trajectories in .

In this paper, the forward filtering is achieved using a PHD filter [3], and the PMB multi-object filtering densities before the Poisson approximation are stored at each time step. Then backward simulation is performed on these PMB filtering densities to obtain the approximate multi-trajectory posterior.

IV Hybrid PHD-PMB Trajectory Smoother

In this section, we present the forward filtering and backward smoothing recursions of the hybrid PHD-PMB trajectory smoother. An illustration of the complete recursion is shown in Fig. 1. We also discuss some practical considerations for a tractable linear Gaussian implementation.

IV-A Forward PHD Filtering

Lemma 1

Lemma 2

Given the Poisson multi-object predicted density of the form (5) with Poisson intensity and the multi-object measurement model described in Section II-D, the updated multi-object density by the measurement set is a PMB of the form (4), with Bernoulli components, one for each measurement. The updated Poisson intensity is

| (20) |

and the -th new Bernoulli component created by measurement , , is parameterized by

| (21a) | ||||

| (21b) | ||||

Note that we use superscript in (20) to represent the updated PPP intensity before the Kullback-Leibler divergence (KLD) minimization. Lemma 2 is a special case of the PMBM update step [23, Theorem 2] with the predicted MB mixture being empty. In the updated PMB, the PPP represents missed detected objects, whereas the MB represents detected objects.
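For reference, the PPP-to-PMB update of [23, Theorem 2] with an empty predicted MB mixture is commonly written as follows. The notation is assumed here for illustration: $\lambda_{k|k-1}$ is the predicted PPP intensity, $p^{D}$ the detection probability, $g(z|x)$ the single-object measurement density, $\lambda^{C}$ the clutter intensity, $z^{i}$ the $i$-th measurement, and $\langle a, b \rangle = \int a(x) b(x)\,\mathrm{d}x$.

```latex
\begin{align}
\lambda^{u}_{k|k}(x) &= \bigl(1 - p^{D}(x)\bigr)\,\lambda_{k|k-1}(x), \\
r^{i} &= \frac{\langle \lambda_{k|k-1},\, p^{D} g(z^{i}|\cdot) \rangle}
             {\lambda^{C}(z^{i}) + \langle \lambda_{k|k-1},\, p^{D} g(z^{i}|\cdot) \rangle}, \\
f^{i}(x) &= \frac{p^{D}(x)\, g(z^{i}|x)\, \lambda_{k|k-1}(x)}
                {\langle \lambda_{k|k-1},\, p^{D} g(z^{i}|\cdot) \rangle}.
\end{align}
```

The PHD of this PMB is $\lambda^{u}_{k|k}(x) + \sum_{i} r^{i} f^{i}(x)$, which coincides with the standard PHD filter update.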

Lemma 3

The best PPP approximation of the updated PMB density in terms of minimizing the KLD yields the Poisson multi-object density with intensity

| (22) |

Lemma 3 is proved in [28, Sec. IV], and it is the same as the PHD update step [3]. Note that in PHD filtering, the multi-object intensity (22) is recursively computed over time without explicitly computing the Bernoulli densities (21). Lemma 2 and Lemma 3 describe the PHD filter update as consisting of two steps, where the first step is implicit and gives a PMB representation of the multi-object posterior as an intermediate result before PPP approximation. Lemma 3 can also be interpreted as recycling all the Bernoulli components in PMB filtering [28], meaning that every Bernoulli component is implicitly approximated as a PPP. This yields a less accurate cardinality distribution, but it greatly simplifies the subsequent update steps as the approximate multi-object posterior only contains a single global hypothesis. Since the PMB is a more accurate representation of the multi-object posterior than the PPP, performing backward smoothing on the PMB filtering densities before PPP approximation allows us to exploit more information freely available in forward filtering.
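The two-step view of the PHD update can be sketched for a scalar Gaussian mixture intensity. The code below is a minimal illustration, assuming a constant detection probability, a measurement model z = x + noise with variance `r_var`, and a constant clutter intensity; the function names and the `(weight, mean, variance)` tuple layout are hypothetical. The first function keeps the PMB, and the second collapses it to the PPP used by the next PHD prediction.

```python
import math


def gauss_pdf(x, mean, var):
    """Scalar Gaussian density."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def phd_update_with_pmb(components, measurements, p_d, r_var, clutter_intensity):
    """Update a predicted PPP intensity and return the PMB before PPP approximation.

    components: list of (weight, mean, var) for the predicted intensity.
    Returns (undetected, bernoullis): the PPP intensity of missed objects and one
    Bernoulli (existence probability, normalized Gaussian mixture) per measurement.
    """
    undetected = [((1.0 - p_d) * w, m, v) for w, m, v in components]
    bernoullis = []
    for z in measurements:
        updated = []
        for w, m, v in components:
            s = v + r_var          # innovation variance
            gain = v / s           # Kalman gain
            weight = p_d * w * gauss_pdf(z, m, s)
            updated.append((weight, m + gain * (z - m), (1.0 - gain) * v))
        mass = sum(w for w, _, _ in updated)
        existence = mass / (clutter_intensity + mass)
        density = [(w / mass, m, v) for w, m, v in updated]
        bernoullis.append((existence, density))
    return undetected, bernoullis


def ppp_approximation(undetected, bernoullis):
    """PHD of the PMB: undetected intensity plus existence-weighted Bernoulli densities."""
    merged = list(undetected)
    for existence, density in bernoullis:
        merged.extend((existence * w, m, v) for w, m, v in density)
    return merged
```

Storing the output of `phd_update_with_pmb` at every time step is the only change needed in the forward pass; the output of `ppp_approximation` is what a conventional Gaussian mixture PHD filter would carry forward.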

IV-B Backward PMB Smoothing

We first present the backward kernel (18) for PMB filtering densities. Then, we describe how to recursively draw samples of sets of trajectories from the backward kernel.

Theorem 1

Given the PMB filtering density , parameterized by Poisson intensity (20) and Bernoulli densities (21), at time step , the set of trajectories in the time interval , and the multi-trajectory dynamic model described in Section II-E, the backward kernel in the time interval (18) is a PMBM of the form

| (23) | ||||

| (24) | ||||

| (25) |

The set of global hypotheses is defined as

| (26) |

and the weight of global hypothesis satisfies

| (27) |

where the proportionality means that normalization is required to ensure that .

In (26), is the set of indices of trajectories in with being trajectories of objects that existed at time step , and being trajectories of objects that appeared after time step . Each trajectory in set creates a unique trajectory Bernoulli component, and thus the number of trajectory Bernoulli components in (25) is , indexed by variable . A global hypothesis is , where is the index to the local hypothesis for the -th trajectory Bernoulli component, and is its number of local hypotheses.

The Poisson intensity in (24) is

| (28) |

For each trajectory Bernoulli component in the predicted trajectory PMB , there are local hypotheses. The local hypothesis that corresponds to the case that the trajectory ended at time step , is given by and

| (29a) | ||||

| (29b) | ||||

| (29c) | ||||

The local hypothesis that corresponds to the case that the trajectory Bernoulli component is updated by trajectory , (present at time step , i.e., ), is given by and

| (30a) | ||||

| (30b) | ||||

| (30c) | ||||

The trajectory Bernoulli component created by trajectory , , has two local hypotheses , . The first local hypothesis represents the case that the Bernoulli component does not exist, and it is given by and

| (31) |

The second one is given by and

| (32a) | ||||

| (32b) | ||||

| (32c) | ||||

| (32d) | ||||

| (32e) | ||||

Finally, the trajectory Bernoulli component created by trajectory , (not present at time step ) has a single local hypothesis , , given by and

| (33) |

By running the backward simulation for sets of trajectories times for , where we recursively draw samples of from the backward kernel (18), we can obtain samples of . This gives a particle representation of the multi-trajectory density

| (34) |

where the -th particle has state and weight .

IV-C Practical Considerations for a Tractable Implementation

In this work, we consider the linear Gaussian implementations of the hybrid PHD-PMB trajectory smoother. The linear Gaussian implementations of the PHD filter and the PMB smoother using backward simulation have been described in [4] and [21], respectively. Here, we highlight some practical considerations that facilitate an efficient implementation.

In forward PHD filtering, the Poisson intensity (22) is a Gaussian mixture, and its number of mixture components increases without bound as measurements accumulate over time. To obtain a tractable implementation, Gaussian mixture reduction needs to be performed by pruning Gaussian components with small weights and merging similar Gaussian components [4].

We extract the PMB filtering densities after the PHD update step, before the PPP approximation. It should be noted that doing so only involves minor modifications of an existing implementation of the PHD update step. By comparing (20) and (21) with (22), we can see that the Poisson intensity in the PMB is directly given by the first term in (22), and that the single-object density of the $i$-th Bernoulli component can be easily obtained by normalizing the $i$-th term in the summation in (22), where the normalization factor gives the existence probability in (21). In addition, since the predicted PPP intensity (19) is a Gaussian mixture, the single-object density (21b) of each Bernoulli component in the PMB is also a Gaussian mixture. This means that in backward simulation we may need many particles to find the mode of the multi-trajectory density. For efficient sampling, we can reduce each single-object density (21b) to a single Gaussian by moment matching.
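Moment matching replaces a mixture by the single Gaussian with the same mean and variance. A minimal scalar sketch follows; the function name and the `(weight, mean, variance)` tuple layout are assumptions made for illustration, and the multivariate case replaces the squared deviation by an outer product.

```python
def moment_match(mixture):
    """Collapse a scalar Gaussian mixture [(weight, mean, var), ...] to (mean, var)."""
    total = sum(w for w, _, _ in mixture)
    mean = sum(w * m for w, m, _ in mixture) / total
    # Total variance: within-component variance plus spread of the component means.
    var = sum(w * (v + (m - mean) ** 2) for w, m, v in mixture) / total
    return mean, var
```

For example, two equally weighted unit-variance components at -1 and 1 collapse to a Gaussian with mean 0 and variance 2.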

In backward simulation, it is trivial to parallelize the sampling process since multiple backward sets of trajectories can be generated independently. The main challenge lies in sampling the MB mixture (25) in the PMBM backward kernel (23), which has an intractable number of MB components due to the unknown associations between the different components in the PMB filtering density and the trajectories. To avoid enumerating every global hypothesis, which is of combinatorial complexity, we can draw samples only from MB components with non-negligible weights [21]. One way to do so is by solving a ranked assignment problem using Murty’s algorithm [29]. In addition, ellipsoidal gating can be applied to remove unlikely local hypotheses to simplify the computation of the assignment problem.
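The ranked assignment problem asks for the best few one-to-one associations under an additive cost, where costs are typically negative log hypothesis weights. The sketch below solves it by exhaustive enumeration, which is only feasible for very small problems and is used here purely as a stand-in to show the input and output of the problem; Murty's algorithm returns the same ranking without enumerating every assignment. The function name is hypothetical, and the cost matrix is assumed to have no more rows than columns.

```python
import itertools


def ranked_assignments(cost, num_best):
    """Return the num_best lowest-cost assignments of rows to distinct columns.

    cost[i][j] is the cost of assigning row i to column j.
    Each result is (total_cost, columns), where columns[i] is the column of row i.
    """
    n_rows, n_cols = len(cost), len(cost[0])
    scored = []
    for cols in itertools.permutations(range(n_cols), n_rows):
        total = sum(cost[i][j] for i, j in enumerate(cols))
        scored.append((total, cols))
    scored.sort()
    return scored[:num_best]
```

Truncating to the `num_best` assignments corresponds to keeping only the global hypotheses with non-negligible weights.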

V Simulation Results

In this section, we evaluate the performance of the proposed hybrid PHD-PMB trajectory smoother in a simulation study in comparison with the PHD filter [4] and the trajectory PHD filter [19].

We consider the same two-dimensional scenario as in [19] with 100 time steps and 4 objects. The single object state is , consisting of position and velocity. The single object dynamic model is nearly constant velocity with transition matrix and process noise covariance given by

where is a identity matrix, denotes the Kronecker product, is the sampling period, and . Each object survives with probability . The birth model is a PPP, and its Poisson intensity is a Gaussian mixture with three components. Each component has the same weight and covariance ; the means are , , and . The ground truth object trajectories are illustrated in Fig. 2.

The single object measurement model is linear and Gaussian with observation matrix and measurement noise covariance given by

where . The detection probability is . The clutter is uniformly distributed in the area with Poisson clutter rate .

The PHD filter [4] is implemented with pruning threshold , merging threshold , and maximum number of Gaussian components . Object state estimates are obtained using the estimator described in [26, Sec. 9.5.4.4]. The trajectory PHD filter [19] is implemented also with pruning threshold , absorption threshold , and maximum number of Gaussian components , and it performs smoothing-while-filtering without -scan approximation. Its trajectory estimates are obtained using the estimator described in [19, Sec. VII-D]. The hybrid PHD-PMB trajectory smoother is implemented with particles, and only a maximum of 100 global hypotheses are sampled. Its trajectory estimates are obtained using the estimator described in [21, App. G]. An exemplar output of the hybrid PHD-PMB trajectory smoother is shown in Fig. 3.

The multi-object state estimation performance is evaluated using the generalized optimal subpattern assignment (GOSPA) metric [30] at each time step with , , and . GOSPA allows for the decomposition of the estimation error into localization error, missed and false detection errors. The multi-trajectory smoothing estimation performance is evaluated for the set of all trajectories using the trajectory GOSPA (TGOSPA) metric [31] at the last time step with , , and . TGOSPA allows for the decomposition of the estimation error into localization error, missed and false detection errors, and track switch error. We note that the trajectory PHD filter cannot estimate the set of all trajectories accurately. To report the set of all trajectory estimates, we tag each Gaussian component and extract the trajectory estimates with unique tags via post-processing. We report the results averaged over 100 Monte Carlo runs.
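For intuition about how GOSPA combines localization and cardinality errors, the following sketch evaluates the metric of [30] for small sets of scalar states by exhaustive assignment. It assumes the common choice alpha = 2, under which unassigned objects are penalized by c^p / 2 each; the function name is hypothetical, and the cutoff `c` and exponent `p` passed in the example are illustrative values, not the ones used in the experiments.

```python
import itertools


def gospa(truth, estimates, c, p):
    """GOSPA with alpha = 2 between two small sets of scalar states."""
    small, large = sorted((truth, estimates), key=len)
    best = float("inf")
    # Assign every element of the smaller set to a distinct element of the larger set.
    for perm in itertools.permutations(range(len(large)), len(small)):
        cost = sum(min(abs(x - large[j]), c) ** p for x, j in zip(small, perm))
        best = min(best, cost)
    # Each unmatched element contributes a missed or false detection cost of c^p / 2.
    cardinality_penalty = (c ** p / 2.0) * (len(large) - len(small))
    return (best + cardinality_penalty) ** (1.0 / p)
```

With `c = 2.0` and `p = 1.0`, `gospa([0.0, 5.0], [0.0], 2.0, 1.0)` returns 1.0: the matched pair contributes no localization error and the single missed object costs c / 2.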

Table I: Average GOSPA error and its decomposition per time step for different scenario parameters.

| Scenario | PHD: GOSPA | PHD: Localization | PHD: Missed | PHD: False | Hybrid PHD-PMB: GOSPA | Hybrid PHD-PMB: Localization | Hybrid PHD-PMB: Missed | Hybrid PHD-PMB: False |
|---|---|---|---|---|---|---|---|---|
| No change | 7.82 | 5.38 | 1.58 | 0.86 | 4.20 | 3.79 | 0.31 | 0.10 |
| | 7.26 | 5.42 | 1.55 | 0.28 | 4.06 | 3.76 | 0.23 | 0.06 |
| | 8.28 | 5.41 | 1.59 | 1.27 | 4.30 | 3.81 | 0.37 | 0.12 |
| | 6.53 | 5.73 | 0.35 | 0.45 | 3.80 | 3.58 | 0.16 | 0.06 |
| | 10.95 | 5.21 | 2.55 | 3.19 | 4.76 | 4.11 | 0.56 | 0.10 |

Table II: Average TGOSPA error and its decomposition per time step for different scenario parameters.

| Scenario | Trajectory PHD: TGOSPA | Trajectory PHD: Localization | Trajectory PHD: Missed | Trajectory PHD: False | Trajectory PHD: Track switch | Hybrid PHD-PMB: TGOSPA | Hybrid PHD-PMB: Localization | Hybrid PHD-PMB: Missed | Hybrid PHD-PMB: False | Hybrid PHD-PMB: Track switch |
|---|---|---|---|---|---|---|---|---|---|---|
| No change | 5.43 | 4.61 | 0.12 | 0.71 | 0.00 | 5.22 | 4.79 | 0.31 | 0.10 | 0.01 |
| | 5.01 | 4.65 | 0.13 | 0.23 | 0.00 | 5.14 | 4.82 | 0.23 | 0.07 | 0.02 |
| | 5.90 | 4.68 | 0.18 | 1.04 | 0.00 | 5.34 | 4.84 | 0.37 | 0.12 | 0.01 |
| | 4.92 | 4.51 | 0.01 | 0.39 | 0.00 | 4.78 | 4.56 | 0.16 | 0.06 | 0.00 |
| | 6.28 | 4.86 | 0.29 | 1.13 | 0.00 | 5.91 | 5.22 | 0.56 | 0.10 | 0.02 |

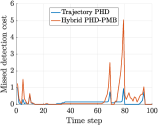

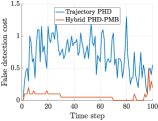

Let us first study the multi-object estimation performance. The GOSPA error and its decomposition for the PHD filter and the hybrid PHD-PMB trajectory smoother over time are shown in Fig. 4. Overall, the hybrid PHD-PMB trajectory smoother outperforms the PHD filter by a significant margin in terms of localization, missed detection, and false detection errors. One exception is that the hybrid PHD-PMB trajectory smoother presents higher localization errors at the first few time steps due to the mismatch between the Gaussian means in the birth intensity and the true object birth positions. Moreover, the hybrid PHD-PMB trajectory smoother shows one apparent anomaly: its missed detection error increases a few time steps before objects disappear. This anomaly is due to premature object death, a problem also observed in PHD smoothers [11, 12], where missed detections happen at earlier time steps when objects disappear, with a lag equal to the smoothing lag. However, we note that in the hybrid PHD-PMB trajectory smoother this problem is less severe in the sense that it does not happen for every disappearing object, and that missed detections due to premature object death are not propagated all the way back to earlier time steps. We also show the average GOSPA error and its decomposition per time step for different scenario parameters in Table I. We can see that the hybrid PHD-PMB trajectory smoother consistently outperforms the PHD filter by a significant margin, and it is especially robust to false detections.

We then discuss the trajectory estimation performance of the hybrid PHD-PMB trajectory smoother and the trajectory PHD filter. The TGOSPA error and its decomposition for the trajectory PHD filter and the hybrid PHD-PMB trajectory smoother over time are shown in Fig. 5. The hybrid PHD-PMB trajectory smoother surpasses the trajectory PHD filter by producing fewer false detections. However, the trajectory PHD filter performs slightly better in terms of localization accuracy, missed detections, and track switches. The average TGOSPA error and its decomposition per time step for different scenario parameters are presented in Table II. We can see that the hybrid PHD-PMB trajectory smoother achieves a lower TGOSPA error than the trajectory PHD filter in all scenarios except the one with low clutter rate.

VI Conclusions

In this paper, we have presented a hybrid PHD-PMB multi-object smoother, which performs backward simulation using the PMB densities obtained in the update step of forward PHD filtering to provide smoothed trajectory estimates. The hybrid PHD-PMB trajectory smoother makes it possible for the PHD filter to generate smoothed trajectory estimates for all the objects. Possible future work includes the development of a hybrid PHD-PMB trajectory smoother for non-Gaussian, non-linear scenarios using sequential Monte Carlo implementations and experiments with real data [32].

References

- [1] F. Meyer, T. Kropfreiter, J. L. Williams, R. Lau, F. Hlawatsch, P. Braca, and M. Z. Win, “Message passing algorithms for scalable multitarget tracking,” Proceedings of the IEEE, vol. 106, no. 2, pp. 221–259, 2018.

- [2] R. L. Streit, R. B. Angle, and M. Efe, Analytic combinatorics in multiple object tracking. Springer, 2021.

- [3] R. P. Mahler, “Multitarget Bayes filtering via first-order multitarget moments,” IEEE Transactions on Aerospace and Electronic Systems, vol. 39, no. 4, pp. 1152–1178, 2003.

- [4] B.-N. Vo and W.-K. Ma, “The Gaussian mixture probability hypothesis density filter,” IEEE Transactions on Signal Processing, vol. 54, no. 11, pp. 4091–4104, 2006.

- [5] B. Ristic, B.-N. Vo, and D. Clark, “A note on the reward function for PHD filters with sensor control,” IEEE Transactions on Aerospace and Electronic Systems, vol. 47, no. 2, pp. 1521–1529, 2011.

- [6] K. Granström, C. Lundquist, and U. Orguner, “Extended target tracking using a Gaussian-mixture PHD filter,” IEEE Transactions on Aerospace and Electronic Systems, vol. 48, no. 4, pp. 3268–3286, 2012.

- [7] K. Granström and U. Orguner, “A PHD filter for tracking multiple extended targets using random matrices,” IEEE Transactions on Signal Processing, vol. 60, no. 11, pp. 5657–5671, 2012.

- [8] C. Lundquist, L. Hammarstrand, and F. Gustafsson, “Road intensity based mapping using radar measurements with a probability hypothesis density filter,” IEEE Transactions on Signal Processing, vol. 59, no. 4, pp. 1397–1408, 2010.

- [9] L. Gao, G. Battistelli, and L. Chisci, “PHD-SLAM 2.0: Efficient SLAM in the presence of missdetections and clutter,” IEEE Transactions on Robotics, vol. 37, no. 5, pp. 1834–1843, 2021.

- [10] N. Nadarajah, T. Kirubarajan, T. Lang, M. McDonald, and K. Punithakumar, “Multitarget tracking using probability hypothesis density smoothing,” IEEE Transactions on Aerospace and Electronic Systems, vol. 47, no. 4, pp. 2344–2360, 2011.

- [11] R. P. Mahler, B.-T. Vo, and B.-N. Vo, “Forward-backward probability hypothesis density smoothing,” IEEE Transactions on Aerospace and Electronic Systems, vol. 48, no. 1, pp. 707–728, 2012.

- [12] S. Nagappa and D. E. Clark, “Fast sequential Monte Carlo PHD smoothing,” in 14th International Conference on Information Fusion. IEEE, 2011, pp. 1–7.

- [13] R. Georgescu, P. Willett, and L. Svensson, “Particle PHD forward filter-backward simulator for targets in close proximity,” in IEEE International Conference on Acoustics, Speech and Signal Processing. IEEE, 2013, pp. 6387–6391.

- [14] P. Feng, W. Wang, S. M. Naqvi, and J. Chambers, “Adaptive retrodiction particle PHD filter for multiple human tracking,” IEEE Signal Processing Letters, vol. 23, no. 11, pp. 1592–1596, 2016.

- [15] B.-N. Vo, B.-T. Vo, and R. P. Mahler, “Closed-form solutions to forward-backward smoothing,” IEEE Transactions on Signal Processing, vol. 60, no. 1, pp. 2–17, 2011.

- [16] X. He and G. Liu, “Improved Gaussian mixture probability hypothesis density smoother,” Signal Processing, vol. 120, pp. 56–63, 2016.

- [17] K. Panta, D. E. Clark, and B.-N. Vo, “Data association and track management for the Gaussian mixture probability hypothesis density filter,” IEEE Transactions on Aerospace and Electronic Systems, vol. 45, no. 3, pp. 1003–1016, 2009.

- [18] Z. Lu, W. Hu, and T. Kirubarajan, “Labeled random finite sets with moment approximation,” IEEE Transactions on Signal Processing, vol. 65, no. 13, pp. 3384–3398, 2017.

- [19] Á. F. García-Fernández and L. Svensson, “Trajectory PHD and CPHD filters,” IEEE Transactions on Signal Processing, vol. 67, no. 22, pp. 5702–5714, 2019.

- [20] Á. F. García-Fernández, L. Svensson, and M. R. Morelande, “Multiple target tracking based on sets of trajectories,” IEEE Transactions on Aerospace and Electronic Systems, vol. 56, no. 3, pp. 1685–1707, 2020.

- [21] Y. Xia, L. Svensson, Á. F. García-Fernández, J. L. Williams, D. Svensson, and K. Granström, “Multiple object trajectory estimation using backward simulation,” IEEE Transactions on Signal Processing, vol. 70, pp. 3249–3263, 2022.

- [22] F. Lindsten and T. B. Schön, “Backward simulation methods for Monte Carlo statistical inference,” Foundations and Trends® in Machine Learning, vol. 6, no. 1, pp. 1–143, 2013.

- [23] J. L. Williams, “Marginal multi-Bernoulli filters: RFS derivation of MHT, JIPDA, and association-based MeMBer,” IEEE Transactions on Aerospace and Electronic Systems, vol. 51, no. 3, pp. 1664–1687, 2015.

- [24] ——, “An efficient, variational approximation of the best fitting multi-Bernoulli filter,” IEEE Transactions on Signal Processing, vol. 63, no. 1, pp. 258–273, 2015.

- [25] Y. Xia, L. Svensson, Á. F. García-Fernández, K. Granström, and J. L. Williams, “Backward simulation for sets of trajectories,” in IEEE 23rd International Conference on Information Fusion (FUSION). IEEE, 2020, pp. 1–8.

- [26] R. P. Mahler, Statistical Multisource-Multitarget Information Fusion. Artech House Norwood, MA, 2007.

- [27] K. Granström, L. Svensson, Y. Xia, J. Williams, and Á. F. García-Fernández, “Poisson multi-Bernoulli mixture trackers: Continuity through random finite sets of trajectories,” in 21st International Conference on Information Fusion. IEEE, 2018, pp. 1–8.

- [28] J. L. Williams, “Hybrid Poisson and multi-Bernoulli filters,” in Proceedings of International Conference on Information Fusion. IEEE, 2012, pp. 1103–1110.

- [29] D. F. Crouse, “On implementing 2D rectangular assignment algorithms,” IEEE Transactions on Aerospace and Electronic Systems, vol. 52, no. 4, pp. 1679–1696, 2016.

- [30] A. S. Rahmathullah, Á. F. García-Fernández, and L. Svensson, “Generalized optimal sub-pattern assignment metric,” in Proceedings of International Conference on Information Fusion. IEEE, 2017, pp. 1–8.

- [31] Á. F. García-Fernández, A. S. Rahmathullah, and L. Svensson, “A metric on the space of finite sets of trajectories for evaluation of multi-target tracking algorithms,” IEEE Transactions on Signal Processing, vol. 68, pp. 3917–3928, 2020.

- [32] J. Liu, L. Bai, Y. Xia, T. Huang, B. Zhu, and Q.-L. Han, “GNN-PMB: A simple but effective online 3D multi-object tracker without bells and whistles,” IEEE Transactions on Intelligent Vehicles, vol. 8, no. 2, pp. 1176–1189, 2022.