Hybrid Models for Facial Emotion Recognition in Children

Abstract.

This paper focuses on the use of emotion recognition techniques to assist psychologists in conducting children's therapy through remotely operated robot sessions. In the field of psychology, the use of agent-mediated therapy is growing, given recent advances in robotics and computer science. Specifically, the use of Embodied Conversational Agents (ECA) as an intermediary tool can help professionals connect with children who face social challenges such as Attention Deficit Hyperactivity Disorder (ADHD) or Autism Spectrum Disorder (ASD), or who are physically unavailable due to being in regions of armed conflict, natural disasters, or other circumstances. In this context, emotion recognition provides important feedback for the psychotherapist. In this article, we first present the results of a bibliographical research on emotion recognition in children. This research provided an initial overview of the algorithms and datasets widely used by the community. Then, based on the analysis of these results, we used dense optical flow features to improve the ability to identify emotions in children in uncontrolled environments. In a hybrid model built from Convolutional Neural Networks, two intermediary features are fused before being processed by a final classifier. The proposed architecture is called HybridCNNFusion. Finally, we present the initial results achieved in the recognition of children's emotions using a dataset of Brazilian children.

1. Introduction

Human cognitive development goes through several stages from birth to maturity. Childhood is the phase in which one acquires the basis for learning to relate to others and to the world (piaget1952origins, 27). Unfortunately, the mental development of a child can be hampered by mental disorders such as anxiety, stress and obsessive-compulsive behavior, or by emotional, sexual or physical abuse (weisz2010evidence, 38). The consequences of these afflictions are resolved or reduced through therapeutic processes carried out by professionals in the field of psychology. Because of the child's limited maturity, the process involves not only assessment sessions with the child, but also interviews with parents and educators, observation of the child in the home and school environments, and data collection through drawings, compositions, games and other activities (Cuadrado2019, 4), (WOS:000629356500006, 39).

In this process, leisure resources such as games, theater activities, puppets and toys gain special prominence and are used as support in therapy (Moura2000, 7). As a way to contribute to this approach, Embodied Conversational Agents (ECA) are used as a tool in psychotherapeutic applications. Provoost et al. (Provoost2017, 28) performed a scoping review on the use of ECAs in psychology. After selection, the search revealed 49 references associated with the following mental disorders: autism, depression, anxiety disorder, post-traumatic stress disorder, psychotic disorder and substance use. According to the authors, “ECA applications are very interesting and show promising results, but their complex nature makes it difficult to prove that they are effective and safe for use in clinical practice”. The strategy suggested by Provoost et al. involves increasing the evidence base through interventions using low-technology agents that are rapidly developed, tested, and applied in responsible clinical practice.

The recognition of emotions during psychotherapeutic sessions can aid the psychology professional involved in the process, although there is still considerable room for improvement given the depth of the task (WOS:000556121100052, 2).

The objective of this work is to discuss the use of images captured by cameras during uncontrolled children's psychotherapy sessions to classify the child's emotional state at any given moment into one of the following basic emotion categories: anger, disgust, fear, happiness, sadness, surprise and contempt (ekman1984, 9). Given the diversity of Machine Learning algorithms for emotion recognition tasks, correctly addressing our objective is much more complex than simply choosing the most powerful or most recent algorithm (WOS:000483067100012, 25). For applications in psychology, compared to other human-centered tasks, the solution has to be almost fail-proof and able to function in real uncontrolled scenarios. This is in itself extremely challenging and raises multiple ethical and moral questions about the viability of such models (WOS:000697827200029, 16). In this context, it is important to study the environments in which a specific algorithm will be used even before beginning to develop or train it (WOS:000447336700033, 24).

In Section 2, we briefly discuss the performed bibliographical research on the state of the art for emotion recognition in children. The training datasets, as well as the implemented model architecture and produced code are presented in Sections 3.1 and 3.2 respectively. Results obtained using the suggested model and conclusions are discussed in Sections 4 and 5.

2. Bibliographical Research

A bibliographical research was performed to determine the state of the art (SOTA) for facial emotion recognition (FER) tasks in children using computer algorithms. The search was made in the “Web of Science” repository (WEB2023, 37), covering the last 5 years, with the following search key:

| (1) |

An initial set of 152 references was selected, of which 42 were accepted for in-depth reading (39 from the original search, plus 3 additional references). Each paper was then tagged according to a number of categories, including, but not limited to: datasets used; age of the patients; psychological procedure adopted; data format (such as video, photos or scans); and algorithm category (deep learning techniques, pure machine learning, etc.). The detailed result of this categorization can be seen in the spreadsheet available on Google Drive (SPREED2023, 40).

2.1. Types of algorithms and datasets

In Fig. 1 we present the main datasets identified during the bibliographical research. The FER-2013 dataset (Sambare2022, 30) is one of the most used by researchers, with 9 references. One example is the work by Sreedharan et al. (Sreedharan2018, 32), which uses this dataset to train a FER model with a novel optimisation technique (Grey Wolf optimisation).

Overall, we found that Facial Emotion Recognition (FER) algorithms have improved significantly in recent years (WOS:000772182600055, 21), driven by the success of deep learning-based approaches. In Fig. 2 we present the most frequently used algorithms for emotion recognition. The convolutional neural network architecture (DL-CNN) was the most used, with 22 references. As DL-CNN examples, we can cite the works of Haque and Valles (Haque2018, 12) and Cuadrado et al. (Cuadrado2019, 4). Both propose a Deep Convolutional Neural Network architecture for a specific FER task, namely identifying emotions in children with autism spectrum disorder and supporting robot tutors in primary schools, respectively.

With the demand for high-performance algorithms, numerous novel models, such as the DeepFace system (Taigman2014, 34) or the Transformer architecture for sequential features (vaswani2017attention, 35), have also made great strides in improving the overall accuracy and time efficiency of emotion classification models.

Among the most popular paradigms currently used for FER, Convolutional Neural Networks (CNNs) have demonstrated high performance in detecting and recognizing emotion features from facial expressions in images (WOS:000697827200029, 16) by applying moving filters, also called convolution kernels, over an image. These models use hierarchical feature extraction techniques to construct region-based information from facial images, which is then used for classification. One of the first widespread CNN-based models used for FER is the VGG-16 network, which uses 13 convolutional layers and 3 fully connected layers (16 weight layers in total) to classify emotions (vgg, 31). In addition to CNNs, other models such as Recurrent Neural Networks (RNNs), or a combination of both, have also been proposed for FER.
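To make the idea of a moving filter concrete, the following minimal sketch slides a single kernel over a small grayscale image using NumPy. The function name `conv2d_valid`, the toy image and the edge kernel are illustrative assumptions and do not come from any of the cited models; deep learning frameworks implement the same operation (strictly speaking, a cross-correlation) far more efficiently and learn the kernel values during training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1), dtype=float)
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # Element-wise product of the kernel with the patch under it, then sum.
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)
# Horizontal-difference kernel: responds where intensity rises from left to right.
kernel = np.array([[-1.0, 1.0]])
response = conv2d_valid(image, kernel)
```

The response map is non-zero only at the column where the edge sits, which is the sense in which a kernel "detects" a local pattern; stacking many such learned kernels yields the hierarchical features described above.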

Overall, FER is an active area of research, and there is ongoing work to improve the accuracy and robustness of existing solutions.

2.2. Classic emotion capture strategies

In Fig. 3 we present the origin of the still emotion pictures present in the datasets. We can observe that 48.8% of the studies used “Posed” emotions, that is, emotions that are artificial and enacted at the request of an evaluator. As examples of works that use “Posed” emotions we mention Sreedharan et al. (Sreedharan2018, 32), which uses the CK+ dataset of posed emotions, and Kalantarian et al. (Kalantarian2020, 19), in which children with Autism Spectrum Disorder (ASD) are requested to imitate the emotions shown by prompts in a mobile game.

The “Induced” group of emotions accounts for 23.3% of the papers found, among which we can mention the work of Goulart et al. (Goulart2019, 11), where children's emotions are induced by interaction with a robot tutor and recorded. Unlike posed emotions, which are obtained by explicitly requesting participants to imitate a facial expression, induced emotions are obtained implicitly by showing the participants emotion-inducing material, such as videos, photographs and texts.

The “Spontaneous” group appears in only 16.3% of the studies, possibly due to the difficulty of capturing emotions in-the-wild (ITW), that is, when the individual is not aware of the purpose or the existence of ongoing video recording or photography. An example is the dataset discussed in Kahou et al. (Kahou2015, 18). It is important to note that facial expressions do not completely correlate with what the individual is feeling, as is the case with posed facial expressions, but they are generally used as an acceptable indicator of emotion, often in combination with other indicators (WOS:000475910800001, 1), (WOS:000807364500004, 23).

2.3. Hybrid Architectures

Models that combine multiple networks into one architecture, called hybrid models, are becoming increasingly accurate, particularly those that combine convolutional neural networks (CNNs) and recurrent neural networks (RNNs) (WOS:000518657800086, 22) for facial emotion recognition (FER) tasks.

In addition, recent research has shown that integrating recurrent layers into hybrid models can further improve their performance (WOS:000756540200001, 15). One example is the long short-term memory (LSTM) layer (hochreiter1997long, 14), which processes inputs recursively and is therefore particularly useful for capturing the temporal dynamics of facial expressions.
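As an illustration of what processing inputs recursively means, the sketch below implements a single LSTM time step in NumPy. It uses the common modern formulation with a forget gate; the function name `lstm_step`, the parameter names `W`, `U`, `b` and the gate ordering are assumptions made for this example and are not taken from the cited works.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: input vector (D,), h_prev/c_prev: previous hidden and cell state (H,),
    W: (4H, D), U: (4H, H), b: (4H,). Assumed gate order: input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])           # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])       # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * H:3 * H])   # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * H:4 * H])   # candidate values
    c = f * c_prev + i * g        # new cell state carries memory across frames
    h = o * np.tanh(c)            # new hidden state, passed to the next time step
    return h, c

def run_sequence(xs, W, U, b, hidden_size):
    """Apply lstm_step to each element of a sequence, reusing the state from the previous step."""
    h = np.zeros(hidden_size)
    c = np.zeros(hidden_size)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h
```

Because the state `(h, c)` produced for one frame is fed back in for the next, the final hidden state summarizes the whole sequence, which is what makes this kind of layer suitable for video.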

Another promising research line for improving FER accuracy is the use of multiple features such as audio and processed images in addition to facial color (RGB) images (WOS:000460064700015, 3). These additional features can provide complementary information that can improve the robustness and accuracy of the FER system.

However, there are still challenges to be addressed, such as how to effectively fuse multiple features and how to efficiently train such time-consuming models. Nonetheless, hybrid models with transformer or LSTM classifiers, as well as multiple features, are a promising direction for improving the state of the art in FER (WOS:000756540200001, 15), (WOS:000783834000109, 29).

3. Methods

3.1. Datasets used for Training and Prediction

Considering the need for an architecture that can provide adequate accuracy and real-time response for predicting emotions in children in uncontrolled environments, we create the architecture HybridCNNFusion to process the real-time sequence of frames.

To accomplish the task at hand, we planned to train our model on the two publicly available datasets with the highest accuracy for the FER task in children (WOS:000556121100052, 2). The datasets used are FER-2013 (Sambare2022, 30) and the Karolinska Directed Emotional Faces (KDEF) (kdef, 5). Most datasets for FER tasks are aimed at adults and contain posed expressions; therefore, we decided to use ChildEFES (childefes, 26), a private video dataset of Brazilian children posing emotions, for fine-tuning.

3.2. HybridCNNFusion Architecture Model

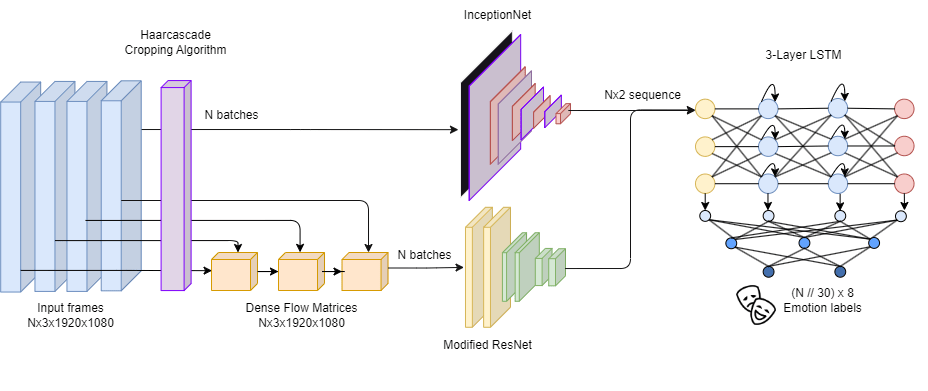

In Fig. 4 we present the elements that make up the HybridCNNFusion architecture. The first step in building our architecture was to allow the model to be used in in-the-wild scenarios by implementing a Haar cascade (haarcascade, 36) region detection algorithm to center and crop the children's faces.
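A minimal sketch of this detection and cropping step is given below, assuming OpenCV's bundled frontal-face cascade file and illustrative values for `scaleFactor` and `minNeighbors`; the paper does not state which cascade file or parameters were used. The helper names `detect_faces` and `crop_square` are hypothetical. OpenCV is imported inside `detect_faces` so that the cropping helper can be used on its own.

```python
import numpy as np

def detect_faces(gray):
    """Return (x, y, w, h) boxes found by OpenCV's frontal-face Haar cascade."""
    import cv2  # imported lazily; only this function needs OpenCV
    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
    # scaleFactor and minNeighbors are assumed values, not taken from the paper.
    return cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)

def crop_square(frame, box):
    """Crop a square region centered on the detected box, clipped to the frame."""
    x, y, w, h = box
    side = min(max(w, h), frame.shape[0], frame.shape[1])
    cx, cy = x + w // 2, y + h // 2
    # Shift the square back inside the frame if the face is near a border.
    left = int(np.clip(cx - side // 2, 0, frame.shape[1] - side))
    top = int(np.clip(cy - side // 2, 0, frame.shape[0] - side))
    return frame[top:top + side, left:left + side]
```

In a full pipeline the square crop would typically be resized to the input resolution expected by each network before being passed on.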

These cropped images are then passed to a Convolutional Neural Network (CNN), specifically InceptionNet (szegedy2014going, 33), which processes the cropped RGB pixels generated by the Haar cascade algorithm. In parallel, we use Gunnar Farnebäck's algorithm (optical-flow, 10) to retrieve the dense optical flow values from the current and previous cropped frames. This is done to allow the network to process the variation in facial muscle and skin movement over time. The optical flow matrices are then passed to a second CNN, specifically a variation of ResNet (he2015deep, 13).
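The sketch below shows one common way to compute dense optical flow between two cropped frames with OpenCV and to encode it as an HSV image, with direction mapped to hue and magnitude to value. The numeric arguments passed to `cv2.calcOpticalFlowFarneback` are the values commonly used in OpenCV examples and are an assumption here, as are the helper names `farneback_flow` and `flow_to_hsv`; the paper does not specify its settings or its exact HSV encoding.

```python
import numpy as np

def farneback_flow(prev_gray, next_gray):
    """Dense optical flow between two grayscale frames, shape (H, W, 2)."""
    import cv2  # imported lazily; only this function needs OpenCV
    # Positional arguments after the frames:
    # flow, pyr_scale, levels, winsize, iterations, poly_n, poly_sigma, flags
    return cv2.calcOpticalFlowFarneback(prev_gray, next_gray, None,
                                        0.5, 3, 15, 3, 5, 1.2, 0)

def flow_to_hsv(flow):
    """Encode flow direction as hue and flow magnitude as value (8-bit OpenCV HSV ranges)."""
    dx, dy = flow[..., 0], flow[..., 1]
    magnitude = np.hypot(dx, dy)
    angle = np.arctan2(dy, dx) % (2 * np.pi)  # direction in [0, 2*pi)
    hsv = np.zeros(flow.shape[:2] + (3,), dtype=np.uint8)
    hsv[..., 0] = ((np.degrees(angle) / 2) % 180).astype(np.uint8)  # hue in [0, 180)
    hsv[..., 1] = 255                                               # full saturation
    peak = magnitude.max()
    if peak > 0:
        # Scale magnitudes so the fastest-moving pixel is the brightest.
        hsv[..., 2] = (255 * magnitude / peak).astype(np.uint8)
    return hsv
```

A still face produces a dark image, while a moving mouth corner or eyebrow shows up as a bright, colored region, which gives the second CNN an explicit motion signal to learn from.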

After these two separate features are calculated, they are concatenated into an intermediary output that is used as input for a final recurrent block built from layers of LSTM cells. This block takes advantage of the sequential nature of the video frames to output a final vector of predicted probabilities for each emotion. The model thus uses a technique called Late Fusion (WOS:000756540200001, 15), in which two separate features are concatenated inside the architecture and used as input for the final output layers.
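The concatenation at the heart of Late Fusion can be sketched in a few lines of NumPy. The feature sizes below (32 per branch, 64 after fusion) follow the values reported in the Results section; the function name `late_fusion`, the 30-frame clip length and the random stand-in features are assumptions for illustration only.

```python
import numpy as np

def late_fusion(rgb_features, flow_features):
    """Concatenate per-frame features from the two CNN branches along the feature axis."""
    if rgb_features.shape[:-1] != flow_features.shape[:-1]:
        raise ValueError("both branches must describe the same frames")
    return np.concatenate([rgb_features, flow_features], axis=-1)

# Random stand-ins for the outputs of the two CNN branches on a 30-frame clip.
rng = np.random.default_rng(0)
rgb = rng.normal(size=(30, 32))    # appearance features, one row per frame
flow = rng.normal(size=(30, 32))   # motion features, one row per frame
fused = late_fusion(rgb, flow)     # shape (30, 64), input to the recurrent block
```

Keeping the branches separate until this point lets each CNN specialize in its own input type, and the recurrent block then sees appearance and motion side by side for every frame.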

The Late Fusion technique allows for a better usage of the motion generated by separate facial Action Units (ekman1978facial, 8) by having two distinctly trained CNNs, one for raw RGB values (output by the InceptionNet) and another for dense HSV motion matrices (output by the ResNet + OpticalFlow combination). The use of the optical flow features as input for the ResNet allows for processing sequential information, specifically that of motion, through manipulation of the raw RGB values.

The step-by-step procedure used for a single video classification iteration is presented in Algorithm 1.

3.3. Ethical aspects and considerations of the solution

The task of facial emotion recognition (FER) in children is particularly challenging due to the ethical issues and the need for a high level of precision and interpretability.

Most existing FER approaches focus on non-ethically critical situations, such as customer satisfaction, or on controlled lab conditions (WOS:000483067100012, 25). In contrast, the task of FER in emotionally vulnerable children requires a much greater level of trustworthiness, in accordance with the ethical constraints of the psychologist-patient relationship (WOS:000647216000001, 6).

This specific research branch of FER tasks demands the ability to accurately detect and interpret facial expressions in real-time videos of children in in-the-wild (ITW) situations, all the while ensuring the confidence of the information being generated (WOS:000523305700001, 20), (WOS:000447336700033, 24).

4. Results

The final model implementation had memory limitations that compromised the deployment of the HybridCNNFusion architecture. Despite this limitation, the model was trained on both the FER2013 and KDEF datasets and fine-tuned on the ChildEFES dataset to maximize accuracy. The complete model could not be fitted on our private dataset, so we measured partial accuracy for the intermediary models. The InceptionNet had an accuracy of about 70%, while the ResNet had an accuracy of about 72%. Overall, the model averaged 2.5 s for a single iteration on videos averaging 10 s in duration.

The input images are cropped to the required size of both networks. The output consists of a stochastic vector of probabilities predicting one of 7 possible base emotions, as well as a neutral emotion, totaling 8 possible labels (ekman1984, 9). Both intermediary CNNs have an output vector of size 32, and the concatenated feature is a vector with 64 entries. The size of the final output layer depends on the total duration of the video divided into groups of 30 frames, with a separate predicted emotion label for each group.
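The relation between video length, 30-frame groups and the 8-label probability vectors can be sketched as follows. The label order in `EMOTIONS`, the choice to drop an incomplete trailing group, and the helper names are assumptions for this example; the paper does not specify them.

```python
import numpy as np

# Seven basic emotions plus neutral; the ordering here is an assumption.
EMOTIONS = ["anger", "disgust", "fear", "happiness",
            "sadness", "surprise", "contempt", "neutral"]

def split_into_groups(num_frames, group_size=30):
    """Return (start, stop) frame indices for consecutive full groups (trailing remainder dropped)."""
    return [(s, s + group_size)
            for s in range(0, num_frames - group_size + 1, group_size)]

def softmax(scores):
    """Turn raw scores into a stochastic vector (non-negative entries summing to 1)."""
    shifted = scores - scores.max(axis=-1, keepdims=True)  # improves numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def predict_labels(group_scores):
    """Map one raw score vector per group to probabilities and the most likely label."""
    probs = softmax(np.asarray(group_scores, dtype=float))
    return probs, [EMOTIONS[i] for i in probs.argmax(axis=-1)]

# A 10 s video at an assumed 30 frames per second has 300 frames, i.e. 10 groups.
groups = split_into_groups(300)
```

Under these assumptions, a 10 s clip yields 10 probability vectors of length 8, one per group of 30 frames.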

5. Conclusion

Considering the technological aspects and the initial results obtained, the proposed architecture is a further step towards identifying children's emotions in in-the-wild conditions, although it is not yet fit for real-world usage.

The fusion of dense optical flow features in conjunction with a hybrid CNN and a recurrent model represents a promising approach in the challenging task of facial emotion recognition (FER) in children, specifically in uncontrolled environments. Being a critical need in the field of psychology, this approach offers a potential solution.

For ethically sensitive situations, there are still important metrics that have to be calculated, such as the Area Under the ROC Curve (AUC), which can indicate whether the model is prone to missing important emotion predictions within small and specific frames, also called micro-expressions (WOS:000549823900015, 17).
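For reference, the area under the ROC curve for a single emotion treated as "this emotion versus all others" can be computed directly from its rank interpretation: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below is a minimal NumPy version with a hypothetical function name and toy scores; it is not an evaluation of the model presented here.

```python
import numpy as np

def roc_auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison of positive and negative scores."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative example")
    # Compare every positive score with every negative score.
    diff = pos[:, None] - neg[None, :]
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (pos.size * neg.size))

# Toy example: 1 marks frames labeled with the target emotion, 0 all other frames.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Repeating this for each of the emotion labels gives a per-class view of performance that overall accuracy alone does not provide.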

In fact, there is a large gap on current ethical questions for the task, but we believe that improving the interpretability of the architecture, explainability and security of transmission of the processed information should be the focus of future models and frameworks instead of just the overall accuracy. This will ensure that the technology can be used safely and effectively to support the emotional well-being of children.

References

- (1) Lisa Feldman Barrett et al. “Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements” In PSYCHOLOGICAL SCIENCE IN THE PUBLIC INTEREST 20.1 1 OLIVERS YARD, 55 CITY ROAD, LONDON EC1Y 1SP, ENGLAND: SAGE PUBLICATIONS LTD, 2019, pp. 1–68 DOI: 10.1177/1529100619832930

- (2) De’Aira Bryant and Ayanna Howard “A Comparative Analysis of Emotion-Detecting AI Systems with Respect to Algorithm Performance and Dataset Diversity” In AIES ‘19: Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society, 2019, pp. 377–382 DOI: 10.1145/3306618.3314284

- (3) M. Camacho, Helmet T. Karim and Susan B. Perlman “Neural architecture supporting active emotion processing in children: A multivariate approach” In NEUROIMAGE 188 525 B ST, STE 1900, SAN DIEGO, CA 92101-4495 USA: ACADEMIC PRESS INC ELSEVIER SCIENCE, 2019, pp. 171–180 DOI: 10.1016/j.neuroimage.2018.12.013

- (4) L.. Cuadrado, M.. Angeles and F.. Lopez “FER in Primary School Children for Affective Robot Tutors” In FROM BIOINSPIRED SYSTEMS AND BIOMEDICAL APPLICATIONS TO MACHINE LEARNING, PT II 11487, Lecture Notes in Computer Science, 2019, pp. 461–471 Spanish CYTED; Red Nacl Computac Nat & Artificial, Programa Grupos Excelencia Fundac Seneca & Apliquem Microones 21 s l DOI: 10.1007/978-3-030-19651-6_45

- (5) A. D..Lundqvist “The Karolinska Directed Emotional Face” CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, 1998 URL: https://www.kdef.se/

- (6) Arnaud Dapogny et al. “On Automatically Assessing Children’s Facial Expressions Quality: A Study, Database, and Protocol” AVENUE DU TRIBUNAL FEDERAL 34, LAUSANNE, CH-1015, SWITZERLAND: FRONTIERS MEDIA SA, 2019 DOI: 10.3389/fcomp.2019.00005

- (7) Cynthia Borges Moura and M.R.Z.S. Azevedo “Estratégias lúdicas para uso em terapia comportamental infantil” In Sobre comportamento e cognição: questionando e ampliando a teoria e as intervenções clínicas e em outros contextos 6, 2000, pp. 163–170

- (8) P. Ekman and W.V. Friesen “Facial Action Coding System” Consulting Psychologists Press, 1978 URL: https://books.google.com.br/books?id=08l6wgEACAAJ

- (9) P. Ekman and K. Scherer “Expression and the Nature of Emotion” In Approaches to Emotion Lawrence Erlbaum Associates, 1984 URL: https://www.paulekman.com/wp-content/uploads/2013/07/Expression-And-The-Nature-Of-Emotion.pdf

- (10) Gunnar Farnebäck “Two-Frame Motion Estimation Based on Polynomial Expansion” In Image Analysis Berlin, Heidelberg: Springer Berlin Heidelberg, 2003, pp. 363–370

- (11) Christiane Goulart et al. “Visual and Thermal Image Processing for Facial Specific Landmark Detection to Infer Emotions in a Child-Robot Interaction” In SENSORS 19.13 MDPI, 2019 DOI: 10.3390/s19132844

- (12) M… Haque and D. Valles “A Facial Expression Recognition Approach Using DCNN for Autistic Children to Identify Emotions” IEEE, 2018, pp. 546–551 Inst Engn & Management; IEEE Vancouver Sect; UBC; Univ Engn & Management

- (13) Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun “Deep Residual Learning for Image Recognition”, 2015 arXiv:1512.03385 [cs.CV]

- (14) Sepp Hochreiter and Jürgen Schmidhuber “Long short-term memory” In Neural computation 9.8 MIT Press, 1997, pp. 1735–1780

- (15) Jiuk Hong, Chaehyeon Lee and Heechul Jung “Late Fusion-Based Video Transformer for Facial Micro-Expression Recognition” In APPLIED SCIENCES-BASEL 12.3 ST ALBAN-ANLAGE 66, CH-4052 BASEL, SWITZERLAND: MDPI, 2022 DOI: 10.3390/app12031169

- (16) Asha Jaison and C. Deepa “A Review on Facial Emotion Recognition and Classification Analysis with Deep Learning” In BIOSCIENCE BIOTECHNOLOGY RESEARCH COMMUNICATIONS 14.5, SI C-52 HOUSING BOARD COLONY, KOHE FIZA, BHOPAL, MADHYA PRADESH 462 001, INDIA: SOC SCIENCE & NATURE, 2021, pp. 154–161 DOI: 10.21786/bbrc/14.5/29

- (17) Salma Kammoun Jarraya, Marwa Masmoudi and Mohamed Hammami “Compound Emotion Recognition of Autistic Children During Meltdown Crisis Based on Deep Spatio-Temporal Analysis of Facial Geometric Features” In IEEE ACCESS 8 445 HOES LANE, PISCATAWAY, NJ 08855-4141 USA: IEEE-INST ELECTRICAL ELECTRONICS ENGINEERS INC, 2020, pp. 69311–69326 DOI: 10.1109/ACCESS.2020.2986654

- (18) Samira Ebrahimi Kahou et al. “Recurrent Neural Networks for Emotion Recognition in Video” In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction ACM, 2015 DOI: 10.1145/2818346.2830596

- (19) Haik Kalantarian et al. “The Performance of Emotion Classifiers for Children With Parent-Reported Autism: Quantitative Feasibility Study” In JMIR MENTAL HEALTH 7.4 JMIR PUBLICATIONS, INC, 2020 DOI: 10.2196/13174

- (20) Haik Kalantarian et al. “The Performance of Emotion Classifiers for Children With Parent-Reported Autism: Quantitative Feasibility Study” In JMIR MENTAL HEALTH 7.4 130 QUEENS QUAY East, Unit 1100, TORONTO, ON M5A 0P6, CANADA: JMIR PUBLICATIONS, INC, 2020 DOI: 10.2196/13174

- (21) Akhilesh Kumar and Awadhesh Kumar “Analysis of Machine Learning Algorithms for Facial Expression Recognition” In ADVANCED NETWORK TECHNOLOGIES AND INTELLIGENT COMPUTING, ANTIC 2021 1534, 2022, pp. 730–750 DOI: 10.1007/978-3-030-96040-7_55

- (22) S. Li et al. “Bi-modality Fusion for Emotion Recognition in the Wild” 1601 Broadway, 10th Floor, NEW YORK, NY, UNITED STATES: ASSOC COMPUTING MACHINERY, 2019, pp. 589–594 DOI: 10.1145/3340555.3355719

- (23) Xiaohong Li “Expression Recognition of Classroom Children’s Game Video Based on Improved Convolutional Neural Network” In SCIENTIFIC PROGRAMMING 2022 ADAM HOUSE, 3RD FLR, 1 FITZROY SQ, LONDON, W1T 5HF, ENGLAND: HINDAWI LTD, 2022 DOI: 10.1155/2022/5203022

- (24) Jose Luis Espinosa-Aranda et al. “Smart Doll: Emotion Recognition Using Embedded Deep Learning” In SYMMETRY-BASEL 10.9 ST ALBAN-ANLAGE 66, CH-4052 BASEL, SWITZERLAND: MDPI, 2018 DOI: 10.3390/sym10090387

- (25) Aleix M. Martinez “The Promises and Perils of Automated Facial Action Coding in Studying Children’s Emotions” In DEVELOPMENTAL PSYCHOLOGY 55.9, SI 750 FIRST ST NE, WASHINGTON, DC 20002-4242 USA: AMER PSYCHOLOGICAL ASSOC, 2019, pp. 1965–1981 DOI: 10.1037/dev0000728

- (26) Juliana Gioia Negrão et al. “The Child Emotion Facial Expression Set: A Database for Emotion Recognition in Children” In Frontiers in Psychology 12, 2021 DOI: 10.3389/fpsyg.2021.666245

- (27) Jean Piaget “The Origins of Intelligence in Children” International Universities Press, 1952

- (28) Simon Provoost, Ho Ming Lau, Jeroen Ruwaard and Heleen Riper “Embodied Conversational Agents in Clinical Psychology: A Scoping Review” In Journal of Medical Internet Research 19.5, 2017, pp. e151 DOI: 10.2196/jmir.6553

- (29) Sergio Pulido-Castro et al. “Ensemble of Machine Learning Models for an Improved Facial Emotion Recognition” IEEE URUCON Conference (IEEE URUCON), Montevideo, URUGUAY, NOV 24-26, 2021 In 2021 IEEE URUCON 345 E 47TH ST, NEW YORK, NY 10017 USA: IEEE, 2021, pp. 512–516 DOI: 10.1109/URUCON53396.2021.9647375

- (30) Manas Sambare “FER-2013 Learn facial expressions from an image” accessed on 15 Feb 2023 Kaggle, https://www.kaggle.com/datasets/msambare/fer2013, 2022

- (31) Karen Simonyan and Andrew Zisserman “Very Deep Convolutional Networks for Large-Scale Image Recognition”, 2015 arXiv:1409.1556 [cs.CV]

- (32) Ninu Preetha Nirmala Sreedharan et al. “Grey Wolf optimisation-based feature selection and classification for facial emotion recognition” In IET BIOMETRICS 7.5 INST ENGINEERING TECHNOLOGY-IET, 2018, pp. 490–499 DOI: 10.1049/iet-bmt.2017.0160

- (33) Christian Szegedy et al. “Going Deeper with Convolutions”, 2014 arXiv:1409.4842 [cs.CV]

- (34) Yaniv Taigman, Ming Yang, Marc’Aurelio Ranzato and Lior Wolf “DeepFace: Closing the Gap to Human-Level Performance in Face Verification” In 2014 IEEE Conference on Computer Vision and Pattern Recognition IEEE, 2014 DOI: 10.1109/cvpr.2014.220

- (35) Ashish Vaswani et al. “Attention Is All You Need”, 2017 arXiv:1706.03762 [cs.CL]

- (36) Paul Viola and Michael Jones “Rapid Object Detection using a Boosted Cascade of Simple Features” In CONFERENCE ON COMPUTER VISION AND PATTERN RECOGNITION IEEE Computer Society, 2001

- (37) Web of Science “Web of Science platform” accessed on 08 May 2022, bit.ly/3McZko4, 2022

- (38) John R. Weisz and Alan E. Kazdin “Evidence-Based Psychotherapies for Children and Adolescents” Guilford Press, 2010

- (39) Guiping Yu “Emotion Monitoring for Preschool Children Based on Face Recognition and Emotion Recognition Algorithms” In COMPLEXITY 2021 ADAM HOUSE, 3RD FL, 1 FITZROY SQ, LONDON, WIT 5HE, ENGLAND: WILEY-HINDAWI, 2021 DOI: 10.1155/2021/6654455

- (40) Zimmer, R. and Sobral, M. and Azevedo, H. “Spreadsheet with Reference Classification Groups” accessed on 08 may 2023, https://tinyurl.com/hybridmodelsbibliography, 2023