Human-Collective Visualization Transparency

Abstract

Interest in collective robotic systems has increased rapidly due to the potential benefits that can be offered to operators, such as increased safety and support, who perform challenging tasks in high-risk environments. Human-collective transparency research has focused on how the design of the algorithms, visualizations, and control mechanisms influence human-collective behavior. Traditional collective visualizations have shown all of the individual entities composing a collective, which may become problematic as collectives scale in size and heterogeneity, and tasks become more demanding. Human operators can become overloaded with information, which will negatively affect their understanding of the collective’s current state and overall behaviors, which can cause poor teaming performance. An analysis of visualization transparency and the derived visualization design guidance, based on remote supervision of collectives, are the primary contributions of this manuscript. The individual agent and abstract visualizations were analyzed for sequential best-of-n decision-making tasks involving four collectives, composed of 200 entities each. The abstract visualization provided better transparency by enabling operators with different individual differences and capabilities to perform relatively the same and promoted higher human-collective performance.

keywords:

Transparency , Visualization , Human-Robot Interaction1 Introduction

Complex human-collective robotic interface design must leverage fundamental human factors visualization principles in order to aid accurate perception and comprehension of information that informs operator actions. Transparency, the principle of providing easily exchangeable information to enhance comprehension [1], can help attain meaningful and insightful information exchanges between an operator and the collective. Integrating transparency into the human-collective interface design, such as implementing different visualization techniques, can help limit poor operator behaviors and improve the human-collective system’s overall effectiveness. Achieving transparency; however, becomes more challenging as the collective robotic system’s scale changes, and the amount of information needed increases in order to understand the collective’s current, or planned actions. Providing too much, or too little transparency may overload, or underload, respectively, the operator and negatively affect the desired outcomes. Designers of collective robotic systems, which are composed of large groups (i.e., ) of simplified individual robotic entities, will experience challenges due to the quantity and quality of the information provided by the individual entities. There is a need to investigate how system components, such as the visualization, impact human-collective behaviors (i.e., human-collective interactions and performance) in order to achieve transparency for realistic collective use scenarios.

Collective robotic systems exhibit biologically inspired behaviors seen in spatial swarms [2], colonies [3], or a combination of both [4]. A bee colony searching for a new hive location exemplifies collective behavior. Initially a subset of the colony population leaves the hive in search of a new hive for a daughter colony [4]. The subset of bees fly to a nearby tree branch, where they wait while scout bees search the surrounding area for a new hive location. During the initial flight, the bees exhibit spatial swarm behaviors, similar to those found in schools of fish [5] and flocks of birds [6], where each bee maintains a particular distance from their neighbor in order to avoid collisions and follow their neighbor in a particular direction. Scout bees find possible hive locations and evaluate each option with respect to ideal hive criteria. The scouts return to the waiting colony in order to begin a selection process (i.e., colony behavior) entailing debate and building consensus on the best hive location (i.e., best-of-n [7]). After completing a consensus decision-making process, the bees travel to the new hive location, transitioning from colony based behaviors back to spatial swarm behaviors. Upon arrival at the new hive, the bees transition to colony based behaviors.

Collective robotic system attributes, such as global intelligence and emergent behaviors, are advantageous for task completion, because collectives are: (1) scalable (i.e., can change in size) [2], (2) resilient to failures (i.e., responsibilities can be redistributed to other collective entities) [8], and (3) flexible in varying environments [9, 10], as well as the type of robotic entities (i.e., heterogeneous members). Collectives have many proposed applications to aid human operators in conducting challenging tasks, such as environmental monitoring, exploration, search and rescue missions, infrastructure support, and protection for military applications [11]. Adding a human operator, who may possess information that a collective does not, can positively influence the collective’s consensus decision-making process by minimizing the time to make decisions and ensuring the collective selects a higher valued option.

The complexity of human-collective systems challenge designers to determine an appropriate quantity and quality of information necessary to support an accurate perception of the collective’s state, comprehension of what the collective is doing currently and why, as well as what, the collective plans to do in the future. Investigations analyzing the system design elements, such as algorithms, visualizations, operator control mechanisms, and the interactions that transpire at the junction of these components are necessary in order to understand how to achieve transparency for human-collective systems. This manuscript analyzed visualization transparency provided to a human supervisor [12] using a traditional collective representation, Individual Agents (IA) [13], and an abstract Collective [14] representation. The single human operator-collective system incorporated four hub-based collectives, each tasked with making a series of sequential best-of-n decisions [7]. Each collective was to choose the best option from a set of n options, as described in the honeybee nest site search example [4]. The objective was to determine which visualization achieved better transparency by evaluating how the visualized information impacted operators with different individual capabilities, their comprehension, the interface usability, and the human-collective team’s performance. Focusing on the visualization is necessary, considering the means of communication and interaction with remote collectives will only occur via an interface. Realistic use scenarios will rely heavily on the operator’s visual sensory system. Understanding how the visualization design, such as the use of information windows, icons, or colors to represent different collective states, impacts the operator’s ability to positively influence the collective’s decision-making process is necessary to inform transparency focused design guidelines for human-collective systems.

2 Related Work

Behaviors of spatial swarms and colonies, which constitute collective behaviors, are provided in order to develop an understanding of what collective characteristics may be important to a human teammate and collective system designers. Understanding how collective entities communicate and interact with one another to influence individual entity and global collective state changes is necessary to ground collective system design. Factors that affect transparency, or are influenced by transparency, such as explainability, usability, and performance, can be measured to assess the visualizations are discussed. Understanding the transparency factors and how they influence the human-collective system is necessary in order to inform design decisions. A review of transparency research focused on designing and evaluating collective visualizations with respect to methods of achieving transparency is presented.

2.1 Collective Behavior

Spatial swarm robotics are inspired by self-organized social animals [2], such as flocks of birds [15] or schools of fish [5], and exhibit intelligent, emergent behaviors as a unit, by responding to locally available information [11]. Spatial swarms rely on distributed, localized, and often implicit communication, in order to spread information across the swarm. Salient movements warning individual entities of a nearby predator [16] or rapid changes in acceleration, such as a streaking bee guiding a swarm in a particular direction [4], are examples of spatial swarm communication. Spatial swarms follow basic rules of repulsion, attraction, and orientation that enable individual entities to position themselves relative to neighboring entities [17]. Couzin et al.’s model states that individual entities in the zone of repulsion attempt to maintain a minimum distance from their neighbors, striving to avoid collisions, while entities that are far from their neighbors will move closer, as a factor of the zone of attraction. The zone of orientation causes individual entities to align themselves in the same direction as their close proximity neighbors.

Robotic colonies are decentralized systems [18] composed of entities that exhibit unique roles, such as foraging, which adapt over time to maintain consistent states in changing conditions [19]. Biological colonies use a centralized space to share information, such as inside of a nest, or embed information into the environment, such as ants depositing pheromones to indicate a route from a food site to the nest [20]. Positive feedback loops support gaining a consensus to change the colony’s behavior [21]. Recruitment for potential changes increases until a quorum is reached. The colony will transition into a decision-making state, implementing different strategies, such as the bee waggle dance [4], in order to reach a consensus. Once a consensus has been reached colony members will indicate to one another that the colony is ready to move to the new nest site, such as physically picking up ants and transporting them to the new nest site [22]. Negative feedback mitigates saturation issues, such as food source exhaustion [18].

2.2 Transparency Factors

Transparency has been defined as the communication [23], process [24], method [25], mechanism [26], property [27], emergent characteristic [28], explanation [29], and quality [30] of an interface to support operator comprehension of a system’s intent. Chen et al.’s transparency definition [30] is commonly used in the robotics domain and uses the three level Situation awareness-based Agent Transparency (SAT) framework, which leverages the human operator trust calibration 3Ps model (purpose, process, and performance) with performance history [31], Endsley’s situation awareness model [32], and the Beliefs, Desires, and Intentions Agent framework [33], in order to achieve transparency.

Transparency for this manuscript builds on Chen et al.’s definition and is defined as the principle of providing information that is easy to use [34] in an exchange between human operators’ and collectives to promote comprehension [35, 36, 37], intent, roles, interactions [38], performance [30], future plans, and reasoning processes [26, 39]. The term principle describes the process of identifying what factors affect and are influenced by transparency, why those factors are important, how the factors may influence one another, and how to design a system to achieve transparency. A subset of transparency factors that originated from human-machine literature [1], shown in Figure 1, will be used to assess the visualization transparency. Five factors impact transparency directly and are represented as blue ovals connected to the transparency node by solid black lines. The three highest total degree (number of in degree + number of out degree) direct factors are explainability, usability, and performance (dark blue). Information and understanding (light blue) are not considered high degree factors, because explainability uses information to communicate and promote understanding. Explainability and usability, which is a multifaceted quality that influences the operator’s perception of a system, are used for the implementation of transparency in the visualization. Performance can be used to assess the visualization transparency by determining how well a human-collective team was able to produce an output when executing a task under particular conditions [37].

The yellow rectangles represent factors that impact visualization transparency indirectly. The timing, frequency, and quantity of information visualized on an interface, such as collective status or feedback, requires consideration of the human operator’s capability limitations [40], system limitations, as well as task and environmental impacts, in order to be explainable [41]. Human-collective efficiency and effectiveness can be improved by visualizing information, such as predicted collective states, in a clear and cohesive manner that helps alleviate the time and effort an operator must exert to integrate information in order to draw conclusions [42] and justify particular actions [43]. Poor judgements may occur if the operator lacks an understanding of what the collective is doing due to poor visualization usability, which may cause operator dissatisfaction. Visualizations that do not provide needed information to the operator and system control mechanisms to effectively influence collective behavior will hinder human-collective performance, lower operator situational awareness, impact workload negatively, and potentially compromise safety of the human-collective team. Understanding the relationships between the direct and indirect factors, and their relationship to transparency, is needed in order to assess metrics that can quantify good design of human-collective visualizations.

2.3 Collective Visualization Transparency

Three design methods that have been used frequently to optimize transparency and desired outcomes (i.e., effective team interactions and high performance) in traditional human-machine systems are: (1) provide system features, such as status, feedback, planning mechanisms, and engagement prompts, (2) use specific guidelines, such as Gestalt principles, to design a system, or (3) train the operators and system [1]. The majority of the collective visualization transparency literature has focused primarily on the first two design methods of providing system features and using specific guidelines to design the collective visualizations. Most evaluations used training, the third method, only to teach operators how to interact with their specific systems [44, 45], but did not analyze how different training strategies may impact transparency. More training may be required for different collective visualizations due to difficulties understanding the presented information. Determining what strategies have been used and their associated outcome success rates in remote teaming with collectives, as well as methods that can be evaluated more in depth, such as training, will aid in the development of transparency guidelines for collective visualizations.

The collective transparency literature that uses traditional collective visualizations (i.e., showing the position of all individual entities) has focused on assessing operator understanding of collective behavior and human-collective performance. A variety of individual collective entity features have been visualized for operators, including current and predicted future position [46] and heading direction [45], health (i.e., speed, strength, capability, and dispersion [47]), and status. The most commonly used visual icon for an individual collective entity has been a circle, where directional information was observed either as the entity moves across a 2-D space, or the circle also encompassed a line pointing in the heading direction. Some visualization design guidance came from subject matter experts [47] and the Gestalt principles [44]. The use of multimodal cues (i.e., visual collective state color coding and written messages, spoken messages, and vibrations) aided operators in the identification and response of signals during a reconnaissance mission (99.9% accuracy), as well as shorter response times, and lower workload. Sharing collective information via multimodal cues may alleviate an operator’s high visual loads when managing multiple tasks on various displays by increasing situational awareness [47] and promoting better transparency. Operators’ using a visualization incorporating Gestalt-based design principles perceived and approximated optimal swarm performance faster than operators using visualizations containing only individual entity position information [44]. Increased visualization transparency enabled operators to learn when to approximate optimal input timing.

Information latency, which occurs due to communication bandwidth limitations, and neglect benevolence, the time allowed for a collective to stabilize before issuing new operator commands, on operator understanding of future collective behavior are important considerations [46]. Latency affected operators’ ability to control a collective, but providing additional transparency via a predictive visualization, which showed the predicted location of each individual entity 20 seconds into the future, mitigated these effects. Operators using the predictive visualization with latency performed as well as operators who experienced no latency. Transparency of human-collective systems can be improved by implementing predictive visualizations of the collective and its entities by allowing the operator more time to think about their future actions. Operators will be able to balance span, the number of individual collective entities they interact with, and persistence, the duration of the interactions with visualizations that provide heading information [45]. Aspects, such as the presence or absence of Couzin et al.’s communication model states, visualized individual collective entities’ velocity, and individual operator characteristics, such as gender, have impacted the identification of swarming behavior [48]. Understanding the influence of factors is necessary to promote perception and comprehension of collective behavior to inform future operator actions.

Abstract collective visualizations have been proposed to improve operator understanding and influence positive collective behavior. Radial visualizations, using the SAT model [30] and heuristic evaluations analyzing the application of collective metrics on visualizations [49], as well as glyphs [50], bounding ellipses [51], convex hulls, and directed arrows [52] have been assessed. Similar system features provided in the traditional visualizations were conveyed using the abstract visualizations. The radial visualizations promoted perception (SAT Level 1) by displaying mission [53] and collective state information [54], such as the direction the entities left the hub to explore environmental targets. Predictions of future collective headings (SAT Level 3), that were provided by elongating the radial display in the direction of more collective support, aided operator actions. Visualizations providing predictive information are needed to achieve transparency for human-collective systems, regardless if the visualization is traditional or abstract. Operators using a glyph were able to acquire information regarding the collective’s power levels, task type, and the number of individual entities, via one icon [50]. Additional information about particular system features was accessible via pop-up windows. Designers of abstract collective visualizations can ensure transparency by providing redundant information via the collective icon and using supplementary information windows. Conflicting results were found for evaluations assessing whether traditional or abstract visualizations aided operators better during different tasks. Abstract visualizations during a go-to and avoid task in the presence or absence of obstacles [52] performed worse than the traditional visualizations, while abstract visualizations performed as well or better when perceiving biological collective structure [55] and under variable bandwidth conditions [51]. Further analysis is needed in order to determine which collective visualization will promote better transparency for a common task by investigating how transparency factors influence human-collective behaviors.

3 Human-Collective Task

The human-collective task involved a single human operator who supervised and assisted four robotic collectives that performed a sequential best-of- decision-making task, where the human-collective team chose the best option from a finite set of options [7]. The human-collective team performed two sequential decisions per collective (moved the collective to a new hub site). The decision-making task entailed the identification and selection of the highest valued target within a 500 range of the current hub, the collective hub moved to the selected target, and initiated the second target selection decision. The consensus decision-making task required a quorum detection mechanism to estimate when the highest valued target was identified by 30% of the collective [14]. Each collective of 200 simulated Unmanned Aerial Vehicles searched an urban area of approximately 2 .

The four collective hubs were visible at the start of each trial. Targets became visible as each was discovered by a collective’s entities. The target’s value was assessed by the collectives’ entities, who returned to their respective hub to report the target location and value. The collectives were only allowed to discover and occupy targets within their search range, but some targets were within proximity of multiple hubs. A collective’s designated search area changed after it moved to a new target to establish the new hub site. The operator was instructed to prevent multiple collectives merging by not permitting their respective hubs to move to the same target. When a collective moved to a target, the hub moved to the target location, and the target was no longer visible to the operator or available to other collectives. The collective that moved its hub to a target’s location first, when two collectives were investigating the same target, moved to the target location, while the second collective returned to its previous location. Both collectives made a decision when a merge occurred, even though only one collective moved its hub to the respective target location.

The general interface design requirements, related to collective autonomy, control, and transparency, are: 1) enable the operator to estimate the collectives’ decision-making process, 2) identify appropriate controls to influence the decision-making process, and 3) implement the desired controls [14]. Two algorithmic models were used. A sequential best-of-n decision-making algorithm () adapted an existing model, which based decisions on the quality (i.e., value) of a target [56]. Information exchanges between a collective’s entities was restricted inside the hub, to mimic honeybees. Episodic queuing cleared messages when the collective entities transitioned to different states, which resulted in more successful and faster decision completion. Interaction delay and interaction frequency were added as bias reduction methods in order to consider a target’s distance from the collective hub and increase interactions among the collectives’ entities regarding possible hub site locations. Interaction delay improved the success of choosing the ground truth best targets, and interaction frequency improved decision time. The baseline model () allows the collective entities to search and investigate potential targets, but was unable to build consensus. The operator was required to influence the consensus-building element and select that final target, based on the consensus. Simulations were ran without an operator for the model in order to understand the operator’s influence on collective behavior, referred to as . The model required operator influence in order to perform the decision-making task; thus, simulation only analysis was not conducted.

The interface controls allowed the operator to alter the collectives’ internal states, including their levels of autonomy, throughout the sequential best-of-n selection process. The collective’s entities were in one of four states. Uncommitted entities explored the environment searching for targets, and were recruited by other entities while inside of the collective’s hub. Collective entities that favored a target reassessed the target’s value periodically, and attempted to recruit other entities within the collective’s hub to investigate the specified target. Collective entities were committed to a particular target once a quorum of support was detected, or after interacting with another committed entity. Executing collective entities moved from the collective’s current hub location to the selected target’s location. A collective operated at a high level of autonomy by executing actions associated with potential targets independently. The operator was able to influence the collective’s actions in order to aid better decision-making, effectively lowering the level of autonomy. Communication from the operator with the collective’s entities occurred inside the hub in order to simulate limited real-world communication capabilities. The control mechanisms, for influencing the collective, were communicated to the specified hub. Two visualizations were designed and evaluated in order to determine which visualization provided better transparency by facilitating the operator’s perception of the collectives’ states, comprehension of the collectives’ decision-making processes, and means to influence future collectives’ actions.

3.1 Individual Agents Interface

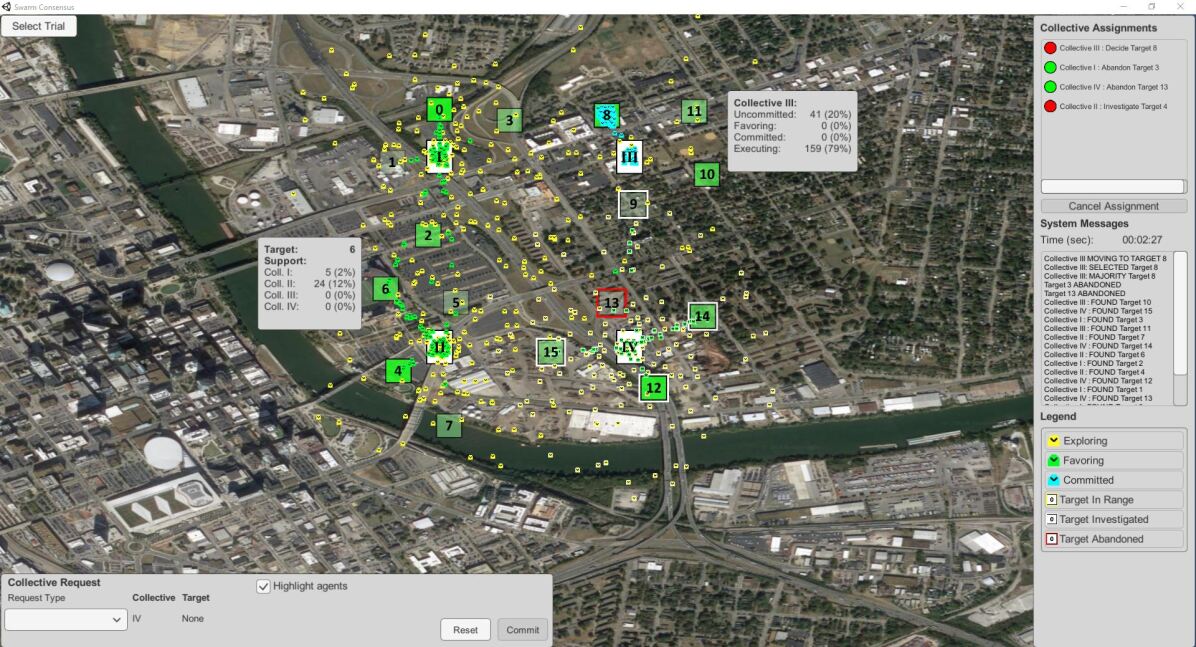

The Individual Agents (IA) interface, see Figure 2, exemplifies a traditional collective visualization by displaying the location of all the individual collective entities [13]. The interface was divided into three primary areas: 1) the central map, 2) the collective request area, and 3) the monitor area. The map, located at the center of the interface visualizes the respective hubs, their individual entities, discovered targets, and other associated information. Both the collectives and targets were rectangular boxes with distinguishing identifiers located at the center of the icon. The collectives had Roman numeral identifiers (I-IV), while the targets used integers (0-15). Discovered targets initially were white and transitioned to a green color when at least two individual collective entities evaluated the target. The highest valued targets were a bright opaque green (e.g., Target 0 in Figure 2), while lower valued targets had a more translucent green color (e.g., Target 9 in Figure 2). Targets that were within the collective’s 500 search range had different colored outlines, depending on the collective’s state: explored targets that were not currently favored had yellow outlines, explored targets that were favored had white outlines (e.g., Target 12 in Figure 2), and targets that were abandoned have red outlines (e.g., Target 13 in Figure 2).

The individual collective entities began each trial by exploring the environment in an uncommitted state, which transitioned to favoring as targets were assessed and supported. The individual collective entities committed to a target once 30% of the collective (60 individual entities) favored a particular target. The collective executed a move to the selected target’s location once 50% of the collective (100 entities) favored the target. The individual collective entities’ state information was conveyed via individual collective entity color coding: uncommitted (yellow), favoring (green), committed (blue), and executing (blue). A legend of the collective entities’ and target border colors was provided in the lower right-hand corner, see Figure 2. The number of individual collective entities in a particular state, or supporting a target was provided via the collective hub and target information pop-up displays, which provided detailed information, represented as gray rectangular boxes, displayed directly on the map in Figure 2. The information displays, when accessed, appeared in a particular location relative to the respective collective’s hub or target. The operator was able to move the information displays by dragging the pop-up display to a desired location.

The operator had the ability to influence an individual collectives’ current state via the collective request area, located on the lower left-hand side of Figure 2. The investigate command permitted increasing a collective’s support for an operator specific target. Ten uncommitted entities (5% of the collective population) transitioned to the favoring state after receiving and acknowledging the investigate command. Additional support for the same target was achieved by reissuing the investigate command repeatedly. The abandon command reduced a collective’s support for a specific target by transitioning favoring individual entities to the uncommitted state. The abandon command only needed to be issued once in order for the collective to ignore a specified target. A collective’s entities stopped exploring alternative targets and moved to the operator selected target when the decide command was issued, which was a valid request when at least 30% of the collective supported the operator specified target.

The collective assignments area logged the operator’s issued commands, shown in the upper right-hand corner of the monitor area in Figure 2. The log displayed what commands were issued with respect to particular collectives, for example, Collective I: Abandon Target 3. The green and red circles next to each command signified whether the command was completed (red) or currently active (green). An investigate command initially had a green circle and transitioned to red once ten individual entities received and acknowledged the investigate command for a particular target. Issued abandon commands for a particular collective and target remained active. Once a collective reached a decision, all prior commands associated with that particular collective were removed from the collective assignments log. The only command the operator was able to cancel was the abandon command, which required selecting the desired abandon command, in Figure 2, the “Collective I: Abandon Target 3”, selecting the cancel assignment button.

System messages indicated the actions taken by the operator and collectives. The illegal message was displayed when an operator requested an invalid command, and explained why the requested action was not viable. Three situations resulted in illegal messages. The first arose when the operator attempted to issue an investigate command for targets that were outside of the collective’s search region. The second situation occurred when the operator attempted to abandon newly discovered targets that did not have an assigned value (white targets). The last message arouse when operators attempted to issue decide commands when less than 30% of the collective supported a target.

3.2 Collective Interface

The Collective interface, shown in Figure 3, provides an abstract visualization that does not represent individual collectives’ entities. The Collective interface was divided into the same three primary areas as the IA interface. The collectives were represented as gray and white rectangles with four quadrants and Roman numeral identifiers located at the top center of the icon. Collective state information was conveyed via the collective icon’s quadrants, color coding, and information windows. The collective icon’s contained four state quadrants (uncommitted (U), favoring (F), committed (C), and executing (X)), which represented the number of individual entities currently in each state, where a brighter white quadrant equated to larger numbers of individual collective entities. The square target icons had integer identifiers positioned on the upper right hand corner. Targets contained two sections, where the top green section represented the target’s value, where the brighter and more opaque the green, the higher the value (e.g., Target 8 in Figure 3). The blue section indicated the number of individual entities favoring a particular target, where the brighter and more opaque the blue, the higher the number of collective entities (e.g., Target 12 in Figure 3).

The collective interface operated similarly to the IA interface with some distinctions. A target was outlined in blue, demonstrated by Target 0 in Figure 3, when the collective’s support exceeded 30%. The target transitioned to a green outline and the collective was outlined in green when the collective began executing a move to the target’s location. The collective’s outline moved from the hub to the target’s location to indicate the hub’s transition to the selected target. The interface’s legend appeared in the upper left corner, see Figure 3.

4 Experimental Design

The primary research question for the between-evaluation analysis was to determine which visualization achieved better transparency? Four secondary questions were developed in order to investigate how the visualization impacted a direct transparency factor, exclusive of trust. The first research question () focused on understanding how the visualization influenced the operator. Individual differences, such as experience level, will impact an operator’s ability to interact with the visualization effectively and cause different responses (i.e., loss of situational awareness or more workload). A visualization that can aid operators with difference capabilities is desired. The explainability factor was encompassed as , which explored whether the visualization promoted operator comprehension. Perception and comprehension of the visualized information are necessary to inform future actions. Understanding which visualization promoted better usability, , will aid designers in promoting effective transparency in human-collective systems. The final research question, , assessed which visualization promoted better human-collective performance. A system that performs a task quickly, safely, and successfully is ideal.

The independent variables included the between visualization variable, IA versus Collective, and trial difficulty (overall, easy, and hard). Trials that had a larger number of high valued targets in closer proximity to a collective’s hub were deemed easy, while hard trials placed high valued targets further away from the hub. The dependent variable details are embedded into the sections associated with each research question.

4.1 Experimental Procedure

The experimental procedure for both evaluations began with a demographic questionnaire, followed by the Mental Rotations test [57], after which the participants received training and practiced using the interface. Two practice sessions occurred prior to each trial in order to ensure familiarity with the underlying sequential best-of-n () and baseline models. The model trial was always completed first, in order to alleviate any learning effects from using . The participants were instructed that the objective was to aid each collective in selecting and moving to the highest valued target two sequential times. A trial began once the respective practice session was completed. Each trial was divided into two components (one easy and one hard) of approximately ten minutes each. Splitting each trial into two components allowed the simulation environment to reset with 16 new (not initially visible) targets. The easy trial contained higher valued targets close to the hub, while the hard trial placed high valued targets further away. The easy and hard trial orderings were randomly assigned, and counterbalanced across the participants. The situational awareness (SA) probe questions [14], intended to serve as a secondary task, were asked beginning at 50 seconds into the trial and were repeated at one-minute increments. Six SA probes were asked during each trial. The trial was terminated once the team completed eight decisions, two per collective, or once six decisions were made, if the trial length exceeded the ten-minute limit. Decision times were not limited. A post-trial questionnaire was completed after each trial and the post-experiment questionnaire was completed before the evaluation termination.

4.2 Participants

The participants from both evaluations completed a demographic questionnaire, which collected information regarding age, gender, education level, weekly hours on a desktop or laptop (0, less than 3, 3-8, and more than 8), and their video game proficiency from little to no proficiency (1) to high proficiency (7). The Mental Rotation Assessment [57] required participants to judge three-dimensional object orientation to assess spatial reasoning within a scoring range of 0 (low) to 24 (high). The mode is reported in parenthesis for questions that required selection to a group.

4.2.1 IA Evaluation

Fourteen females and nineteen males (33 total) completed the IA evaluation at Oregon State University. The predominant (25) age range was between 18 to 30 years, with seven participants in the 31 and 50 range and one participant being 60 and older. Many participants were in the process of obtaining (8) or had an undergraduate degree (13), a master’s degree (9), or a doctorate degree (1). The mean number of weekly hours participant’s used a desktop or laptop was 3.79, with a standard deviation (SD) = 0.5, median = 4, minimum (min) = 2, and maximum (max) = 4. Participants ranked video game proficiency as mean 4.61 (SD = 1.93, median = 5, min = 1, max = 7). The Mental Rotation Assessment [57] mean was 12.36 (SD = 5.85, median = 12, min = 3, and max = 24) [13].

4.2.2 Collective Evaluation

Twenty-eight participants, 15 females and 13 males, from Vanderbilt University, completed the Collective evaluation. The majority of participants (24) were between 18 and 30 years old, with four between 31 and 50. Most of the participants completed high school and were in the process of obtaining (11) or had completed an undergraduate degree (13). The weekly hours participant’s used a desktop or laptop was slightly higher than that of the IA (mean = 3.86, SD = 0.45, median = 4, min = 2, and max = 4). The participants’ video game proficiency was ranked lower than the IA (mean = 3.61, SD = 2.23, median = 2.5, min = 1, and max = 7). The participants’ Mental Rotations Assessment scores were also slightly lower (mean = 10.93, SD = 5.58, median = 10, min = 1, and max = 24) [13].

4.3 Analysis

Five participants were excluded from the IA evaluation due to inconsistent methodology (1) and software failure (4). The between visualization analysis is based on 56 participants from both evaluations. The first twelve decisions made per participant using the model were analyzed. The majority of the objective metrics were analyzed by SA level (overall (), perception (), comprehension (), and projection ()), decision difficulty (overall, easy, and hard), timing with respect to a SA probe question (15 seconds before asking, while asking, or during response to a SA probe question), or per participant. Non-parametric statistical methods, including Mann-Whitney-Wilcoxon tests and Spearman Correlations, were calculated due to a lack of normality. The correlations were with respect to SA Probe Accuracy and Selection Success Rate. The Collective evaluation data was reanalyzed using the same methods. Secondary research question’s hypotheses, associated metrics, results, and discussion are presented in Sections 5-8.

5 : Visualization Influence on Human Operator

Understanding how the visualization influenced the operator, , is necessary to determine if the transparency embedded into the system design aided operators with different capabilities. The associated objective dependent variables were (1) the operator’s ability to influence the collective in order to choose the highest target value, (2) situational awareness (SA), (3) interface clutter, and (4) the operator’s spatial reasoning capability (Mental Rotations Assessment). The relationship between the variables and the corresponding hypotheses, and the direct and indirect transparency factors, are identified in Table 1. Additional relationships (not identified in Figure 1) between the variable and the direct or indirect transparency factors are identified due to correlation analyses.

| Transparency Factors | ||||||||||

| Direct | Indirect | |||||||||

| Performance | Usability | Capability | Effectiveness | SA | Satisfaction | Timing | Understanding | Workload | ||

| Objective Variables | Hypotheses | |||||||||

| Target Value | ✓ | |||||||||

| SA Probe Accuracy | ✓ | ✓ | ✓ | ✓ | ||||||

| Local Clutter Percentage | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| Global Clutter Percentage | ✓ | ✓ | ✓ | ✓ | ✓ | |||||

| Mental Rotations Assessment | ✓ | ✓ | ||||||||

| Subjective Variables | ||||||||||

| Weekly Hours on a Desktop or Laptop | ✓ | ✓ | ||||||||

| Video Game Proficiency | ✓ | ✓ | ||||||||

| NASA Task Load Index | , | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| 3-D Situational Awareness Rating Technique | ✓ | ✓ | ✓ | ✓ | ||||||

Operators may have performed differently depending on their individual differences. It was hypothesized () that operators using the Collective visualization will experience significantly higher SA and lower workload. SA represents an operator’s ability to perceive and comprehend information in order to project future actions that must be taken in order to fulfill a task [32]. Usability influences the perception of information [1] and will impact workload, which is the amount of stress an operator experiences in order to accomplish a task during a particular duration of time [58]. It was hypothesized () that operators with different individual capabilities will not perform significantly different using the Collective visualization. An ideal visualization will enable operators with different capabilities to perceive, comprehend, and influence collectives relatively the same. Training can alleviate any disparities between operators, but is only intended to supplement the system’s design. The operator’s attitude and sentiments towards a system, which is dependent on system usability, provides essential information related to the system’s design [59]. Good designs promote higher operator satisfaction. It was hypothesized () that operators using the Collective visualization will experience significantly less frustration (i.e., higher satisfaction).

5.1 Metrics and Results

Assessing variables, such as the selected target value for each human-collective decision, is necessary in order to determine whether operators were able to perceive the target value correctly and influence the collectives positively. The objective of the human-collective team was to select the highest valued target for each decision from a range of target values (67 to 100). The selected target value is the average of all target’s respective values that were selected by the human-collective teams during a trial. The descriptive statistics for the selected target value per decision difficulty (i.e., overall, easy, and hard) are shown in Table 2. Participants using the Collective interface were able to influence the collective to chose higher valued targets, regardless of decision difficulty, on average; however, the Mann-Whitney-Wilcoxon test identified no significant effects between visualizations for the selected target value.

| Decision Difficulty | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|

| IA | Overall | 90.29 (7.11) | 95 (67/97) |

| Easy | 90.21 (7.29) | 95 (67/97) | |

| Hard | 90.4 (6.88) | 94 (68/96) | |

| Collective | Overall | 92.05 (5.08) | 95 (68/96) |

| Easy | 92.09 (5.54) | 95 (68/96) | |

| Hard | 92 (4.5) | 95 (78/96) |

The SA dependent variable was SA probe accuracy, which is the percentage of correctly answered SA probes questions used to assess the operator’s SA during a trial [14]. Each question corresponded to the three SA levels: perception, comprehension, and projection [32]. Participants were asked five , four , and three questions. The questions determined the operator’s ability to perceive information about the collectives and targets, such as “What collectives are investigating Target 3?” The operator’s comprehension of information was represented by the questions, such as “Which Collective has achieved a majority support for Target 7?” questions related to the operator’s ability to estimate the collectives’ future state, such as “Will support for Target 1 decrease?” An overall SA value, , represented the percent of correctly answered SA probes out of 12 total. The SA probe accuracy descriptive statistics are shown in Table 3 [13]. Operators using the Collective visualization had higher SA probe accuracy, regardless of the SA level. The Mann-Whitney-Wilcoxon tests (n = 56, degrees of freedom (DOF) = 1) found highly significant effects between visualizations for (U = 702, 0.001) and (U = 714.5, 0.001). Moderately significant effects were found for (U = 572.5, 0.01) and (U = 554, 0.01).

| SA Level | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|

| IA | 65.3 (18.87) | 68.33 (16.67/83.33) | |

| 58.57 (23.05) | 60 (20/100) | ||

| 72.32 (21.88) | 75 (25/100) | ||

| 65.48 (34.52) | 66.67 (0/100) | ||

| Collective | 89.88 (10.96) | 91.67 (58.33/100) | |

| 91.67 (11.11) | 100 (66.67/100) | ||

| 88.39 (14.6) | 100 (60/100) | ||

| 89.88 (20.46) | 100 (33.33/100) |

Local and global clutter percentages were analyzed for each SA probe question. Clutter is defined as the area occupied by objects on a display, relative to the total area of the display [58]. Presenting too much information in close proximity to one another will require the operator to search longer for information [58] and can negatively influence the accuracy of the SA probe questions. Area coverage for each 2-D item was calculated by the number of pixels the item covered on the computer visualization. The conversion between meters and pixels was different for each visualization due to differences in the display monitor size and software program. One meter for the IA visualization was approximately 1.97 pixels per meter and the Collective visualization was approximately 2.3 pixels per meter. The local clutter percentage variable was the percentage of area obstructed by items that were displayed within the 500 (i.e., approximately 254 pixels for the IA visualization and 218 pixels for the Collective visualization) circular radius from the center of the collective, or target of interest in the SA probe question. Collective IV, for example, is the collective of interest in the following SA probe question: “What is the highest value target available to Collective IV?” The items obstructing the 500 radius when using the IA visualization, in Figure 2, for the previous SA probe question are: the Collective IV, Targets 9 and 12-15, and 200 individual entities. Some SA probe questions encompassed more than one collective or target of interest, which required calculating the local clutter percentage for each collective or target and summing the values together. All calculations first required converting meters into pixels in order to ensure equivalent units. The Collective visualization computer display size was unknown; therefore, local and global clutter percentage calculations use the corresponding item and computer display dimensions from the IA visualization. Local clutter was calculated using Equation 1:

| Timing | SA Level | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|---|

| IA | Before | 33.6 (21.66) | 26.13 (9/124) | |

| 30.79 (19.53) | 24.4 (9/122.33) | |||

| 41.54 (24.39) | 37.75 (9/124) | |||

| 28.61 (19.36) | 22.23 (9.08/97.7) | |||

| Asking | 34.42 (22.16) | 28 (9/124) | ||

| 31.91 (20.54) | 25 (9/122) | |||

| 41.67 (24.74) | 37.17 (9/124) | |||

| 29.73 (19.54) | 24.21 (9/97.67) | |||

| Responding | 34.26 (22.25) | 27.5 (8/124) | ||

| 31.84 (20.61) | 24.83 (9/122) | |||

| 41.28 (24.96) | 36.5 (8/124) | |||

| 29.68 (19.6) | 24 (9/98) | |||

| Collective | Before | 35.42 (28.08) | 25.44 (9/177) | |

| 34.09 (29.09) | 24.04 (9/151.9) | |||

| 35.81 (27.53) | 26.86 (10.3/177) | |||

| 37.98 (26.78) | 28.23 (10.38/131.47) | |||

| Asking | 35.37 (28.78) | 25.75 (9/176.5) | ||

| 34.24 (30.37) | 23.63 (9/176.5) | |||

| 36.47 (26.76) | 27.4 (10.2/130.4) | |||

| 36.35 (28.27) | 27.25 (9/147.56) | |||

| Responding | 35.6 (29.19) | 25.8 (9/176.4) | ||

| 34.29 (29.84) | 25 (9/176.4) | |||

| 36.55 (27.98) | 27 (10/130) | |||

| 37.24 (29.86) | 26.57 (9/147.57) |

| (1) |

where LHA represents the area corresponding to the number of collective hubs (2464 per hub) inside the 500 radius. The area corresponding to the number of highlighted targets (2350 per highlighted target), which have outlines and are in range of the currently selected collective are represented as LHTA, while the targets that are not highlighted (1720 per target) are denoted as LTA. LAICE represents the area corresponding to the number of individual collective entities (64 per agent) inside of the 500 radius, and was excluded from the Collective visualization local clutter percentage calculation, because no individual entities were displayed. The individual collective entities were confined to the 500 search radius around their respective collective hub; therefore, the calculation assumes that the 200 entities associated with each collective are inside of the local radius. The area corresponding to the number of target information pop-up windows (32922 per target information pop-up window) is represented as LTIW, and the corresponding collective information pop-up windows (25740 per collective information pop-up window) are represented as LCIW. Only the target or collective information pop-up windows that belong to targets or collectives inside of the 500 radius are considered.

The local clutter percentage descriptive statistics 15 seconds before asking, while asking, and during a SA probe response are provided in Table 4. The maximum local clutter percentage was 177%, which indicated that the area covered by the associated items of the collective or target of interest in the SA probe exceeded the 500 radius. Local clutter percentages larger than 100% were attributed to the area covered by the collective and target information pop-up windows. The location of the information pop-up windows were not recorded; therefore, the maximum area coverage was considered when information pop-up windows did not occlude items in the environment. The maximum area coverage condition was not reflective of the real trial environment, where information pop-up windows covered items on the central map. The IA visualization had lower local clutter percentage, regardless of when the metric was collected for , , and ; although, no significant effects were found between visualizations. The Spearman correlation analysis revealed no correlations were found between local clutter percentage and SA probe accuracy.

The global clutter percentage, calculated using Equation 2, was the percentage of area obstructed by all objects displayed on the entire IA computer display (2073600 ), since the Collective computer display was unknown.

| Timing | SA Level | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|---|

| IA | Before | 30.2 (3.06) | 28.83 (27/40.22) | |

| 29.88 (2.8) | 28.5 (27/40.22) | |||

| 30.41 (3.05) | 29.1 (27/40) | |||

| 30.45 (3.45) | 28.85 (27/40) | |||

| Asking | 30.25 (3.13) | 29 (27/40) | ||

| 29.95 (2.91) | 28.58 (27/40) | |||

| 30.41 (3.12) | 29 (27/40) | |||

| 30.52 (3.49) | 29 (27/40) | |||

| Responding | 30.09 (3.02) | 29 (27/40) | ||

| 29.83 (2.81) | 28.5 (27/40) | |||

| 30.22 (3) | 29 (27/40) | |||

| 30.37 (3.38) | 28.79 (27/40) | |||

| Collective | Before | 31.37 (4.97) | 29.21 (27.88/53) | |

| 31.38 (5) | 29.13 (28/52.4) | |||

| 31.25 (5.09) | 29.21 (27.88/53) | |||

| 31.56 (4.76) | 29.54 (28/52) | |||

| Asking | 31.43 (5.13) | 29.17 (28/53) | ||

| 31.24 (5.26) | 29 (28/53) | |||

| 31.52 (5.2) | 29.22 (28/51.8) | |||

| 31.69 (4.78) | 29.75 (28/48) | |||

| Responding | 31.41 (5.15) | 29 (28/53) | ||

| 31.43 (5.43) | 29 (28/53) | |||

| 31.34 (5.08) | 29 (28/51.5) | |||

| 31.49 (4.66) | 29.31 (28/48) |

| (2) |

where ICA represents the area of the static interface components (493414 ), which encompass the program bar, the Microsoft Windows program bar, the select trial button, the collective request area, and the monitor area. GHA represents the area covered by Collectives I-IV (9856 ), which were visible throughout the duration of a trial. The area corresponding to the number of highlighted targets (2350 per highlighted target), which have outlines and are in range of the currently selected collective are represented as GHTA. Remaining targets that are not highlighted (1720 per target), are represented as GTA. GAICE represents the area encompassed by 800 individual collective entities (51200 ), which was only considered for the IA visualization. The area corresponding to the number of target information pop-up windows (32922 per target information pop-up window) is represented as GTIW and the corresponding collective information pop-up windows is represented as GCIW (25740 per collective information pop-up window).

The global clutter percentage descriptive statistics 15 seconds before asking, while asking, and during response to a SA probe question are shown in Table 5. The IA visualization had a lower global clutter percentage, regardless of when the metric was assessed across all SA levels. The Mann-Whitney-Wilcoxon tests found highly significant effects between visualizations when responding to a SA probe question (n = 670, DOF = 1) for (U = 64442, 0.001). Moderate significant effects were found for 15 seconds before asking a SA probe (U = 64188, 0.01) and while asking a SA probe question (U = 63728, 0.01). Significant effects were found 15 seconds before asking a SA probe question for (n = 294, DOF = 1, U = 12487, = 0.02) and (n = 152, DOF = 1, U = 3445.5, = 0.03); while asking a SA probe question for (n = 294, DOF = 1, U = 12301, = 0.03) and (n = 152, DOF = 1, U = 3452, = 0.05); and during the response to a SA probe question for (n = 294, DOF = 1, U = 12216, = 0.04). The Spearman correlation analysis revealed a weak correlation for the Collective visualization for between global clutter percentage 15 seconds before asking a SA probe question and SA probe accuracy (r = 0.16, = 0.05).

The Mental Rotations Assessment [57], which assessed the operator’s spatial reasoning, identified no significant effects between visualizations. A Spearman correlation analysis revealed weak correlations between the Mental Rotations Assessment and SA probe accuracy when using the IA visualization for (r = 0.17, 0.01), (r = 0.18, = 0.03), and (r = 0.27, 0.01). The Mann-Whitney-Wilcoxon tests identified no significant effects between visualizations for the weekly hours spent using a desktop or laptop and video game proficiency. Weak correlations were found between weekly hours using a desktop or laptop and SA probe accuracy for the IA visualization for (r = 0.12, = 0.04) and (r = 0.21, = 0.01), as well as when using the Collective visualization for (r = 0.21, = 0.02). No correlations were found between video game proficiency and SA probe accuracy.

The NASA Task Load Index (NASA-TLX) assessed the six workload subscales and the weighted overall workload [60]. The descriptive statistics for the NASA-TLX demands imposed on the operator are presented in Table 6. The Collective visualization imposed a lower overall workload, had lower physical and temporal demands, and caused less frustration. The IA visualization imposed a lower mental demand, which had a significant effect between visualizations (n = 56, DOF = 1, U = 515, = 0.04) and less effort. The IA visualization had higher performance with a highly significant effect between visualizations (n = 56, DOF = 1, U = 159.5, 0.001).

| Overall and Subscales | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|

| IA | Overall | 62.14 (14.81) | 65.67 (24/85.67) |

| Mental | 19.25 (8.8) | 20 (0/33.33) | |

| Physical | 1.68 (3.32) | 0 (0/13) | |

| Temporal | 11.75 (8.24) | 9.67 (0/28.33) | |

| Performance | 10.69 (5.87) | 8.83 (2.67/25) | |

| Effort | 11.35 (6.68) | 11 (2.67/28.33) | |

| Frustration | 7.43 (8.36) | 4.67 (0/33.33) | |

| Collective | Overall | 57.06 (16.47) | 56.83 (5.67/83.33) |

| Mental | 23.58 (6.34) | 25 (3/31.67) | |

| Physical | 0.46 (1.17) | 0 (0/4.67) | |

| Temporal | 10.94 (7.67) | 10.33 (0/24) | |

| Performance | 5.1 (4.7) | 3.67 (0/21.33) | |

| Effort | 12.32 (6.26) | 13 (2/25.33) | |

| Frustration | 4.65 (6.84) | 1.83 (0/30) |

The 3-D Situational Awareness Rating Technique (SART) measured the operator’s perceived situational understanding, demand on attentional resources, and supply of attentional resources [61]. An overall score was calculated using the standard calculation. The SART descriptive statistics are presented in Table 7 [13]. The minimum SART score was -1, which was unexpected as a negative score requires the supply of attentional resources to exceed the demand on attentional resources and a low perceived situational understanding. Both of these conditions are highly unlikely. The Collective visualization had a higher overall score, more situational understanding, high demands of attentional resources (although nearly the same as the IA visualization), and more supply of attentional resources, compared to the IA visualization. The Mann-Whitney-Wilcoxon test indicated moderately significant effects between visualizations for the overall score (n = 56, DOF = 1, U = 560, 0.01), situational understanding (n = 56, DOF = 1, U = 561, 0.01), and supply of attentional resources (n = 56, DOF = 1, U = 561, 0.01).

| Overall and Dimensions | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|

| IA | Overall | 4.64 (2.6) | 4.5 (-1/10) |

| Situational Understanding | 4.96 (1.53) | 5 (2/7) | |

| Demands on Attentional Resources | 5.04 (1.2) | 5 (2/7) | |

| Supply of Attentional Resources | 4.71 (1.36) | 5 (1/7) | |

| Collective | Overall | 6.68 (2.26) | 6.5 (3/13) |

| Situational Understanding | 6.07 (0.9) | 6 (4/7) | |

| Demands on Attentional Resources | 5.07 (1.18) | 5 (1/6) | |

| Supply of Attentional Resources | 5.68 (1.09) | 6 (3/7) |

5.2 Discussion

The analysis of how visualization influenced operators suggests that the Collective visualization promoted better transparency. The variables that directly supported are the SA probe accuracy and SART. was supported, because operators using the Collective visualization had significantly higher objective and subjective SA and lower overall workload. Transparency embedded into the Collective visualization, via color-coded icons (i.e., target value) and outlines, state information identified on the collective icon, information provided in the collective and target information pop-up windows, and feedback provided in the Collective Assignments and System Messages areas, promoted better perception, comprehension, and projection of future operator actions, making the overall human-collective team more effective. The Collective visualization operators; however, had more local and global clutter, which was mainly attributed to the number of collective and target information pop-up windows that were visible. The increased clutter has both positive and negative implications for transparency. From a system design perspective, clutter is not ideal if operators are unable to perform their tasks effectively. The Collective operators, in this evaluation, who had more global clutter were able to answer more SA probe questions accurately, which suggests that operators were not hindered by the clutter and were able to perform better than their counterparts. The dependence on having the collective and target information pop-up windows visible suggests that the collective state information provided on the collective icon was not as effective as the information pop-up window and there is a need to provide support information on the target icons. Other design strategies must be investigated to improve the efficacy of the collective and target icons for the Collective visualization. Further analyses are also required to determine what contributed to more mental demand, more effort, and less perceived performance using the Collective visualization and whether the additional stress may have been experienced due to positive aspects, such as operators being highly motivated to complete their tasks. The Collective visualization may have required more effort on behalf of the operator in order to understand what the collective was doing compared to the IA visualization that showed the dynamic behavior emerging. Collective visualization operators may have additionally been distracted by the secondary SA probe question task and required more time to enter back into the loop to determine the collective behaviors.

The Mental Rotation Assessment and video-game proficiency supported , since operators with different individual capabilities did not perform significantly different using the Collective visualization. One exception to the hypotheses was that more experienced operators may have performed better because of their extensive use of computers, which may have led to faster and more accurate interpretations of information [58] (i.e., different types of iconography), or easier access to the supplemental information. Since the exception was observed in both evaluations, the behavior is inherent to working with a computer interface, rather than a particular visualization. Using an abstract collective visualization will mitigate the need for particular operator capabilities to perform the sequential best-of-n decision-making task. Operators using the Collective visualization experienced less frustration, which supports . Dissatisfaction (i.e., frustration) transpires when the system is not transparent and prohibits the operator from understanding what is currently happening, or there is too much clutter and the interface appears noisy [62]. The abstract visualization may be a solution to mitigate dissatisfaction.

6 : Visualization Promotion of Human Operator Comprehension

The explainability direct transparency factor was encompassed in , which was interested in determining whether the visualization promoted operator comprehension, by embedding transparency into the system design. Perception and comprehension of the presented information are necessary to inform future operator actions. The associated objective dependent variables were (1) SA, (2) collective and target left- or right-clicks, (3) the percentage of times the highest value target was abandoned, and (4) whether the information pop-up window was open when a target was abandoned. The relationship between the variables and the corresponding hypotheses, and the direct and indirect transparency factors, are identified in Table 8. Relationships between the variable and the direct or indirect transparency factors that are not shown in Figure 1, were identified after conducting correlation analyses.

| Transparency Factors | |||||||||

| Direct | Indirect | ||||||||

| Explainability | Performance | Usability | Capability | Effectiveness | Justification | SA | Understanding | ||

| Objective Variables | Hypotheses | ||||||||

| SA Probe Accuracy | ✓ | ✓ | ✓ | ✓ | |||||

| Collective Left-Clicks by SA Level | ✓ | ✓ | ✓ | ✓ | |||||

| Target Right-Clicks by SA Level | ✓ | ✓ | ✓ | ✓ | |||||

| Highest Value Target Abandoned | , | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| Abandoned Target Information Pop-Up Window Open | ✓ | ✓ | ✓ | ✓ | |||||

| Subjective Variables | |||||||||

| 3-D Situational Awareness Rating Technique | ✓ | ✓ | ✓ | ✓ | |||||

Thirteen human factors display design principles, associated with perceptual operations, mental models, as well as human attention and memory [58], suggest that information must be legible, clear, concise, organized, easily accessible, and consistent. Providing information, such as the collective state, on the collective icon, rather than using all of the individual collective entities is more clear, concise, organized, and consistent; therefore, it was hypothesized () that operators will have a better understanding of the information provided by the Collective visualization. Providing information redundantly via icons, colors, messages, and the collective and target information pop-up windows can aid operator comprehension and justify their future actions. It was hypothesized () that the Collective visualization provided information used to accurately justify actions. An ideal visualization will enable operators to perceive and comprehend information that is explainable, which will support taking accurate future actions.

6.1 Metrics and Results

The operator had access to supplementary information that was not continually displayed, such as different colored target borders that identified which targets were in range and had been abandoned, or information pop-up windows that provided collective state and target support information, in order to aid comprehension () of collective behavior and inform particular actions. The results of SA probe accuracy, which is the percentage of correctly answered SA probes questions used to assess the operator’s SA during a trial, identified that operators using the Collective visualization had higher SA probe accuracy, regardless of the SA level. Further details regarding the statistical tests were provided in the Metrics and Results Section 5.1 of .

Collective left-clicks identified targets that were in range of a collective (i.e., white borders indicated that the individual collective entities were investigating the target, while yellow indicated no investigation), whether the targets had been abandoned (i.e., red borders), and was the first click required to issue a command. The number of collective left-clicks descriptive statistics 15 seconds before asking, while asking, and during response to a SA probe question are shown in Table 9. Operators using the IA visualization had fewer collective left-clicks, regardless of when the metric was assessed for all SA levels, except for during response to a SA probe question. The Mann-Whitney-Wilcoxon tests found highly significant effects between visualizations for (n = 664, DOF = 1) 15 seconds before asking a SA probe question (U = 64213, 0.001), while asking a SA probe question (U = 67670, 0.001), and during response to a SA probe question (U = 64710, 0.001). A highly significant effect was also found when responding to a SA probe question for (n = 223, DOF = 1, U = 8317, 0.001). Moderate significant effects were found for (n = 290, DOF = 1) 15 seconds before asking a SA probe question (U = 12534, 0.01), while asking a SA probe question (U = 12043, 0.01), and during response to a SA probe question (U = 12414, 0.01). An additional moderate significant effect was found while asking a SA probe question for (n = 151, DOF = 1, U = 3472, 0.01). Significant effects were found 15 seconds before asking a SA probe question for (n = 223, DOF = 1, U = 7210.5, = 0.04) and during response to a SA probe question for (n = 151, DOF = 1, U = 3489, = 0.01). No correlations were found between the number of collective left-clicks and SA probe accuracy.

| Timing | SA Level | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|---|

| IA | Before | 1.64 (1.84) | 1 (0/12) | |

| 1.53 (1.75) | 1 (0/11) | |||

| 1.78 (1.9) | 1 (0/12) | |||

| 1.65 (1.92) | 1 (0/9) | |||

| Asking | 0.49 (0.76) | 0 (0/5) | ||

| 0.3 (0.6) | 0 (0/3) | |||

| 0.42 (0.77) | 0 (0/4) | |||

| 0.33 (0.61) | 0 (0/3) | |||

| Responding | 1.68 (1.79) | 1 (0/11) | ||

| 1.14 (1.46) | 1 (0/7) | |||

| 1.46 (1.8) | 1 (0/10) | |||

| 1.53 (1.98) | 1 (1/9) | |||

| Collective | Before | 1.95 (1.57) | 2 (0/9) | |

| 1.88 (1.47) | 2 (0/8) | |||

| 2.13 (1.68) | 2 (0/9) | |||

| 1.83 (1.61) | 1 (0/7) | |||

| Asking | 0.69 (0.88) | 0 (0/5) | ||

| 0.51 (0.79) | 0 (0/5) | |||

| 0.91 (0.89) | 1 (0/4) | |||

| 0.73 (0.96) | 0 (0/4) | |||

| Responding | 1.52 (1.21) | 1 (0/6) | ||

| 1.32 (1.02) | 1 (0/4) | |||

| 1.57 (1.21) | 1 (0/5) | |||

| 1.89 (1.48) | 2 (0/6) |

Target right-clicks allowed the operator to open or close target information pop-up windows, which provided the percentage of support each collective had for a respective target. Operators may have used the support information to justify issuing commands. The number of target right-clicks descriptive statistics 15 seconds before asking, while asking, and during response to a SA probe question are presented in Table 10. The Collective visualization had fewer target right-clicks for all SA levels, 15 seconds before asking and during response to a SA probe question, while the IA visualization had fewer while asking a SA probe question. The Mann-Whitney-Wilcoxon test found no significant effects between visualizations for the number of target right-clicks. The Spearman correlation analysis revealed weak correlations between the number of target right-clicks and SA probe accuracy for the IA visualization 15 seconds before asking a SA probe question for (r = 0.17, 0.01) and for (r = 0.37, 0.001).

| Timing | SA Level | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|---|

| IA | Before | 1.68 (2.38) | 1 (0/13) | |

| 1.92 (2.62) | 1 (0/13) | |||

| 1.28 (1.91) | 1 (0/11) | |||

| 1.8 (2.5) | 1 (0/12) | |||

| Asking | 0.37 (0.79) | 0 (0/7) | ||

| 0.44 (0.74) | 0 (0/4) | |||

| 0.31 (0.67) | 0 (0/3) | |||

| 0.37 (0.75) | 0 (0/3) | |||

| Responding | 1.07 (1.77) | 0 (0/10) | ||

| 1.11 (1.69) | 0 (0/10) | |||

| 1.1 (1.75) | 0 (0/9) | |||

| 1.68 (2.24) | 1 (0/10) | |||

| Collective | Before | 1.52 (2.41) | 1 (0/18) | |

| 1.79 (2.71) | 0 (0/18) | |||

| 1.17 (1.94) | 0 (0/10) | |||

| 1.49 (2.35) | 0 (0/11) | |||

| Asking | 0.5 (1) | 0 (0/9) | ||

| 0.49 (0.86) | 0 (0/4) | |||

| 0.55 (1.31) | 0 (0/9) | |||

| 0.44 (0.69) | 0 (0/2) | |||

| Responding | 0.99 (1.7) | 0 (0/11) | ||

| 1.01 (1.74) | 0 (0/11) | |||

| 0.84 (1.44) | 0 (0/9) | |||

| 1.21 (1.98) | 0 (0/8) |

The abandon command was provided to operators who desired a collective to discontinue investigating a particular target. Ideally lower valued targets were abandoned, since the objective was to aid each collective in selecting and moving to the highest valued target two sequential times. The percentage of times the highest value target was abandoned per participant is shown in Table 11. Operators using the IA visualization abandoned the highest value target less frequently, but no significant effects were found between the visualizations.

| Decision Difficulty | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|

| IA | Overall | 32.36 (29.53) | 26 (0/100) |

| Easy | 31.2 (27.17) | 25 (0/75) | |

| Hard | 42.1 (40.53) | 23 (0/100) | |

| Collective | Overall | 43.6 (31.94) | 38 (0/100) |

| Easy | 33.25 (35.96) | 27 (0/100) | |

| Hard | 48.75 (36.85) | 38 (0/100) |

The percentage of times an abandoned target information pop-up window was open per participant was evaluated and is represented in Table 12. The operator may have used the support information in order to justify abandoning a particular target. Participants using the IA visualization had fewer abandoned target information pop-up windows open, compared to the Collective visualization operators. No significant effects were found between visualizations.

| Decision Difficulty | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|

| IA | Overall | 23.86 (31.43) | 10.5 (0/100) |

| Easy | 22.2 (30.95) | 13 (0/100) | |

| Hard | 28.7 (37.89) | 8.5 (0/100) | |

| Collective | Overall | 33.8 (34.9) | 15 (0/100) |

| Easy | 30.7 (37.85) | 15 (0/100) | |

| Hard | 36.08 (40.87) | 14 (0/100) |

6.2 Discussion

The analysis of how visualization promoted operator comprehension identified advantages and disadvantages associated with both visualizations. The Collective visualization promoted higher comprehension () and situational awareness; however, because the Collective operators abandoned the highest value target more frequently, was not supported. Mistaking the roman numeral identifiers with the integer identifiers may have caused IA operator confusion and contributed to lower . Ensuring that identifiers are unique and distinct, such as integers versus letters, may mitigate misunderstanding. The target value for the Collective visualization may not have been salient enough to distinguish it from other potential targets. Further investigations are required to determine if the target value must use the entire collective hub icon area, similar to the IA visualization, in order to be recognizable, and to establish what levels of obscurity are needed to ensure that target values are distinguishable from one another.

The use of target borders (collective left-clicks), information pop-up windows (target right-clicks), and target value, were assessed to determine if operators used these types of information to accurately justify actions. Collective right-clicks, which opened and closed collective information pop-up windows, 15 seconds before asking, while asking, and during response to a SA probe question, were not analyzed in this manuscript, because the collective evaluation did not indicate which collective was selected via the right-click. None of the metrics supported , because the information provided by the Collective visualization did not justify accurate actions. Collective left-clicks did not support or hinder SA probe accuracy for either visualization, but fewer target right-clicks 15 seconds before asking a SA probe question supported higher and probe accuracy for operators using the IA visualization. The operators may have learned to anticipate when SA probe questions were going to be asked and took preventative actions, by opening or closing target information windows, which resulted in higher SA probe accuracy. The use of target information pop-up windows aided Collective visualization users to abandon targets more than 30% of the time. Further analysis using technology, such as an eye-tracker, may provide more accurate metrics to determine operator comprehension during SA probe questions by identifying exactly where an operator is focusing their attention.

7 : Visualization Usability

Understanding which visualization promoted better usability, , is necessary to determine which system characteristics promote effective transparency in human-collective systems. The associated objective dependent variables were (1) interface clutter, (2) Euclidean distance, (3) collective and target left- and right-clicks, (4) metrics associated with abandoned targets, and (5) the time between the committed state and an issued decide command. The relationship between the variables and the corresponding hypotheses, as well as the direct and indirect transparency factors are identified in Table 13. Additional relationships that are not identified in Figure 1, between the variable and the direct or indirect transparency factors are provided after conducting correlation analyses.

| Transparency Factors | |||||||||||

| Direct | Indirect | ||||||||||

| Explainability | Performance | Usability | Effectiveness | Information | Justification | Predictability | SA | Timing | Understanding | ||

| Objective Variables | Hypotheses | ||||||||||

| Local Clutter Percentage | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Global Clutter Percentage | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Euclidean Distance Between SA Probe | ✓ | ✓ | |||||||||

| Interest and Clicks | |||||||||||

| Sum of Euclidean Distance Between Clicks | ✓ | ✓ | |||||||||

| Collective Left-Clicks per Participant | ✓ | ✓ | ✓ | ||||||||

| Collective Right-Clicks per Participant | ✓ | ✓ | ✓ | ✓ | |||||||

| Target Left-Clicks per Participant | ✓ | ✓ | |||||||||

| Target Right-Clicks per Participant | ✓ | ✓ | ✓ | ✓ | |||||||

| Highest Value Target Abandoned | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Abandoned Target Information Window | ✓ | ✓ | ✓ | ✓ | |||||||

| Open | |||||||||||

| Abandon Requests Exceeded Abandoned | ✓ | ✓ | ✓ | ✓ | |||||||

| Targets | |||||||||||

| Time Between Committed State and Issued | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||

| Decide Command | |||||||||||

The goal of usability is to design systems that are effective, efficient, safe to use, have good utility, easy to learn, and are memorable [62]. Ensuring good usability is necessary to ensure operators will be able to perceive and understand the information presented on a visualization, and to promote effective interactions. It was hypothesized () that the Collective visualization will promote better usability by being more predictable and explainable. Providing information that is explainable may aid operator comprehension, while predictable information may expedite operator actions. An ideal system will not require constant operator interaction to perform well; therefore, it was hypothesized () that operators using the Collective interface will require fewer interactions.

7.1 Metrics and Results

Many system characteristics were available to the operators in order to aid task completion. The IA visualization had both lower local clutter percentage, which was the percentage of area obstructed by items displayed within the 500 circular radius of a collective, or target, and global clutter percentage, which was the percentage of area obstructed by all objects displayed on the visualization. Operators using the IA visualization had fewer collective and target information pop-up windows open throughout the trial. The statistical test details were provided in Section 5.1.

| Timing | SA Level | Mean (SD) | Median (Min/Max) | |

|---|---|---|---|---|

| IA | Before | 767.1 (262.5) | 768.3 (183.4/1425.9) | |

| 759.5 (251.64) | 765.1 (269/1425.9) | |||

| 768.9 (282.07) | 787.9 (184.4/1312.4) | |||