HSTFormer: Hierarchical Spatial-Temporal Transformers

for 3D Human Pose Estimation

Abstract

Transformer-based approaches have been successfully proposed for 3D human pose estimation (HPE) from 2D pose sequence and achieved state-of-the-art (SOTA) performance. However, current SOTAs have difficulties in modeling spatial-temporal correlations of joints at different levels simultaneously. This is due to the poses’ spatial-temporal complexity. Poses move at various speeds temporarily with various joints and body-parts movement spatially. Hence, a cookie-cutter transformer is non-adaptable and can hardly meet the “in-the-wild” requirement. To mitigate this issue, we propose Hierarchical Spatial-Temporal transFormers (HSTFormer) to capture multi-level joints’ spatial-temporal correlations from local to global gradually for accurate 3D HPE. HSTFormer consists of four transformer encoders (TEs) and a fusion module. To the best of our knowledge, HSTFormer is the first to study hierarchical TEs with multi-level fusion. Extensive experiments on three datasets (i.e., Human3.6M, MPI-INF-3DHP, and HumanEva) demonstrate that HSTFormer achieves competitive and consistent performance on benchmarks with various scales and difficulties. Specifically, it surpasses recent SOTAs on the challenging MPI-INF-3DHP dataset and small-scale HumanEva dataset, with a highly generalized systematic approach. The code is available at: https://github.com/qianxiaoye825/HSTFormer.

1 Introduction

3D human pose estimation (HPE) aims to predict the coordinates of human body joints in 3D space from images and videos. Hence, it plays important roles in numerous applications, such as human activity recognition [17], healthcare [11], augmented reality (AR) [8], virtual reality (VR) [21] and human-robot interaction [22]. Direct inferring 3D pose from a single image is a challenging ill-posed problem because part of the 3D information is lost in 3D to 2D projection. An alternative approach for 3D HPE is from video, given that human pose changes smoothly and structurally through time. With the advances in deep learning, estimating 2D human poses has become a standard technique. By first converting the human image sequence to 2D pose sequences, 3D HPE from the video can be reduced to the problem of inferring a 3D pose sequence from its 2D counterpart.

To solve this problem, earlier approaches[1, 6, 25, 30] use dilated temporal convolution or graph convolution networks to exploit spatial and temporal representations of 2D pose sequences. However, they generally rely on temporal dilation techniques or a predefined adjacency matrix to model temporal relationships. Consequently, the temporal connectivity inside these models is still limited.

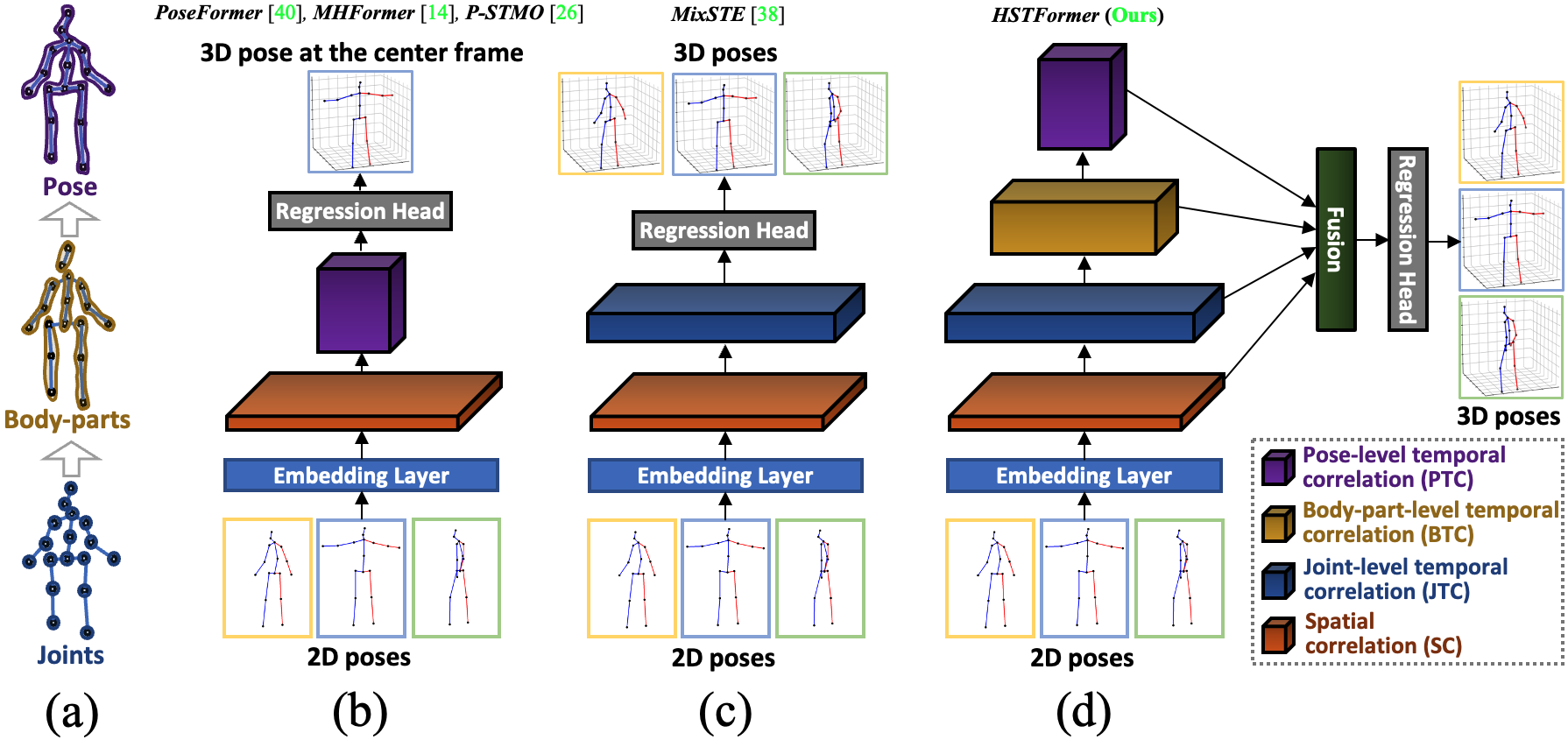

Recently, transformer [28] with self-attention mechanism is shown capable of capturing long-range relationships of the input sequences, and has been successfully applied to many challenging computer vision tasks [2, 7, 34, 40]. The 2D-to-3D pose sequence estimation fits naturally to the transformer. As a matter of fact, most models that achieve current SOTA results are based on transformers [40, 14, 26, 38]. Among these models, PoseFormer [40] is a seminal work followed by MHFormer [14] and P-STMO [26]. This group considers all joints of each frame as a whole to learn the global pose-level temporal correlation across all frames. Their basic architecture is shown in Figure 1(b). As a separate group itself, MixSTE [38]’s basic architecture is shown in Figure 1(c). It decomposes a pose sequence into multiple joint sequences and uses stacks of spatial-temporal transformers to infer 3D poses by learning the local joint-level temporal correlation of each joint separately. These demonstrate that the temporal correlations of joints can be further dug to improve 3D HPE performance.

However, as Figure 1 illustrates, aforementioned transformer-based SOTA approaches focus on spatial nuances and hence are lack of a systematic information propagation from local joints to global poses temporarily. On the other hand, we are inspired from the residual neural network and vision transformers developments, such as the Feature Pyramid Network (FPN) [16] improves the ResNet[9], as well as Pyramid Vision Transformer (PVT) [32] advances the Vision Transformer (ViT) [7]. We found that both the FPN and the PVT in an hierarchical and systematic pyramid structure, improve their predecessors with better information propagation and consequently better task results.

Motivated by the literature, we propose a novel framework based on spatial-temporal transformers that leverages the hierarchical bottom-up structure of a pose shown in Figure 1(a). A pose is a composition of a set of articulated body parts, and each body part is a collection of corresponding joints. Analyzing a pose sequence is performed bottom-up in this hierarchy. First, joint sequences are independently passed through a temporal transformer. Then, the outputs of joint sequences are aggregated to become body part sequences and pass through temporal transformers for different body parts. These outputs are aggregated again to become the pose sequence as input to a temporal transformer for the pose. Finally all the outputs from the joints, parts, and pose transformer are fused to adaptively integrate the multi-level complementary information for 3D pose estimation. This hierarchically bottom-up approach enables us to exploit the structural nature of human poses and extract valuable information locally and globally. Figure 1(d) illustrates the systematic hierarchical spatial-temporal transformers. Specifically, bottom-up structural transformer encoders are organized to learn different correlations of joints from local to global as follows: (i) the Spatial Correlation (SC) of an individual single frame; (ii) the Joint-level Temporal Correlation (JTC) cross frames; (iii) the Body-part-level Temporal Correlation (BTC), which groups joints correlation across frames; and (iv) the Pose-level Temporal Correlation (PTC) of all joints across frames. Compared to the previous approaches illustrated in Figure 1(b) [40, 14, 26], and Figure 1(c) [38], which are less structurally and sporadically use transformer encoders, HSTFormer is able to model the joints’ spatial-temporal correlations more comprehensively and systematically. Our main contribution can be summarized as follows:

-

•

A novel transformer-based framework, hierarchical spatial-temporal transformers (HSTFormer), is proposed to model multiple levels of joints’ spatial-temporal correlations structurally in a bottom-up fashion. It is able to propagate the joints’ movement information smoothly and effectively. Such a framework is capable of accurately estimating 3D human poses in both simple and complex scenes following the 2D-to-3D lifting pipeline.

-

•

A body-part temporal transformer encoder is proposed to tackle the grouped joints correlation across frames. To the best of our knowledge, this is the first study in 3D pose estimation that uses transformer to focus on grouped joints correlation cross-temporal realm. The proposed design solely reduces MPJPE error with a significant amount of mm ().

-

•

Extensive experiments on Human3.6M, MPI-INF-3DHP, and HumanEva datasets are conducted to demonstrate the superior performance and high generalization ability of the proposed HSTFormer for 3D human pose estimation. Specifically, it outperforms the existing SOTA approaches by a remarkable margin on the challenging MPI-INF-3DHP dataset, decreasing the MPJPE by 24.6% (from 54.9 mm to 41.4 mm).

2 Related Work

Existing solutions to this problem can be divided into two categories: direct estimation approaches and 2D-to-3D lifting approaches. Direct estimation approaches [23, 13] designed end-to-end frameworks to estimate the joints’ 3D coordinates directly from images or videos without intermediate 2D pose representations, which are straightforward ways but remain challenges due to the lack of sufficient 3D in-the-wild data. 2D-to-3D lifting approaches [35, 25, 18, 40, 14, 38] have two stages which first detect 2D pose joints and then lift them to 3D. Many existing works are based on the 2D-to-3D lifting pipeline and currently outperform the direct estimation approaches. Meanwhile, their generalizability is improved because of ignoring the persons’ appearances when lifting. However, the 2D-to-3D lifting approaches also remain challenges due to occlusion and depth ambiguity in 2D poses. Especially, it is difficult to predict a 3D pose accurately only based on the spatial information of a 2D pose from a single frame [19].

To alleviate such an issue and to improve accuracy and robustness, many approaches [1, 6, 25, 30, 40, 14, 38] have integrated temporal information from 2D pose sequences to explore the joints’ spatial and temporal correlations. For example, Hossain and Little [10] applied the Long Short-Term Memory (LSTM) to explore the temporal information. Pavllo et al. [25] proposed a dilated temporal convolution network to capture global contextual information and estimate 3D pose from consecutive 2D sequences. Cai et al. [1] utilize graph convolution networks to exploit spatial and temporal graph representations of human skeletons for 3D pose estimation. However, convolutional neural network-based approaches typically rely on temporal dilation techniques and graph convolution network-based approaches depend on a predefined adjacency matrix to model spatial-temporal relationships. Eventually, the spatial-temporal connectivity explored by them is still limited.

Based on the mechanism of the transformer, the correlations through long sequence data can be extracted, which makes it possible to explore the temporal representations across the video frames for 3D HPE. Spatial and temporal transformers are gradually applied for 3D HPE [40, 14, 38, 26]. PoseFormer [40] explored the spatial joint correlations from each frame and the temporal joint across frames by using a spatial-temporal transformer structure, which is the first purely transformer-based approach for 3D HPE. MHFormer [14] was further proposed to learn the spatial-temporal information by presenting a multi-hypothesis transformer. MixSTE [38] developed a mixed spatial-temporal transformer to extract the all joints correlation across frames by applying the spatial transformer encoder and temporal transformer encoder alternatively. P-STMO [26] presented a spatial temporal many-to-one model which is pre-trained by using self-supervised learning for performance improvement. Our designs are inspired by the aforementioned works while making the model have the capability to learn the different levels of spatial-temporal information. Unlike previous works, we design two more modules to extract the spatial-temporal correlation of a group of joints across frames and adaptively integrate the learned multi-level spatial-temporal information of joints for accurate 3D HPE.

3 Hierarchical Spatial-Temporal Transformers

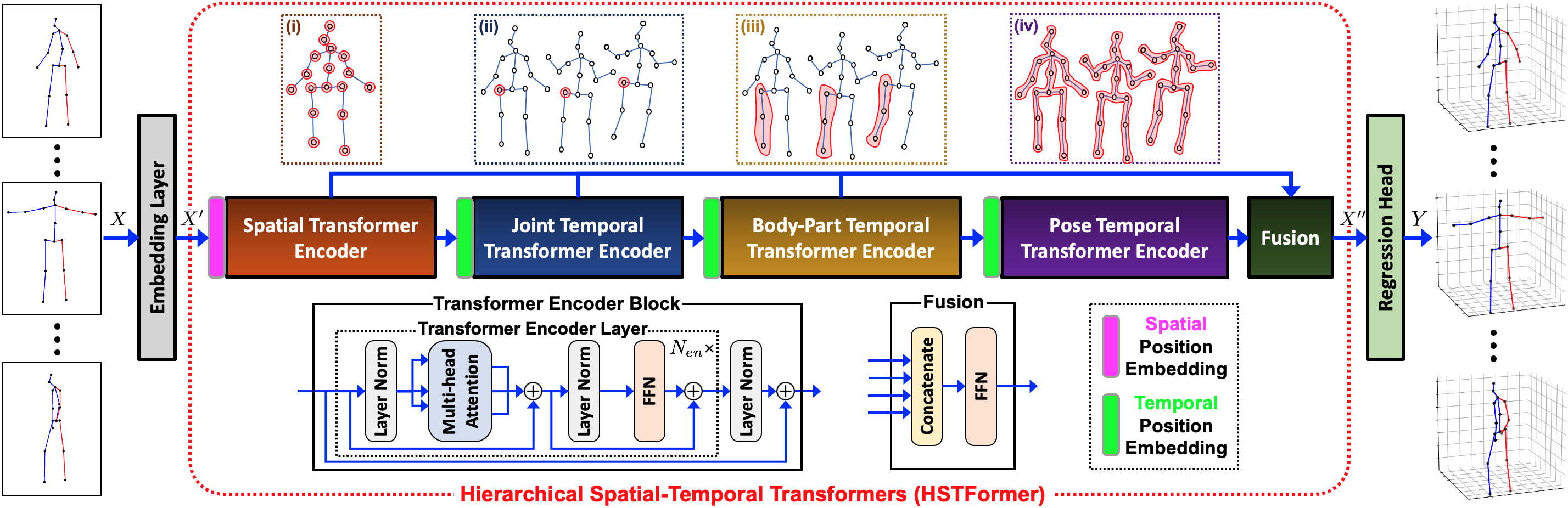

Figure 2 shows the overview of the proposed 3D human pose estimation method which follows the popular 2D-to-3D lifting pipeline. An embedding layer first converts the input 2D pose sequence to high dimensional representations. Let be the input 2D pose sequence with frames and joints per frame. obtained by an off-the-shelf 2D pose detector from a video. The embedding layer maps to high-dimensional representations by:

| (1) |

where is a linear projection and means layer normalization. In this work, we set to trade off the computational efficiency and performance as processed in [40].

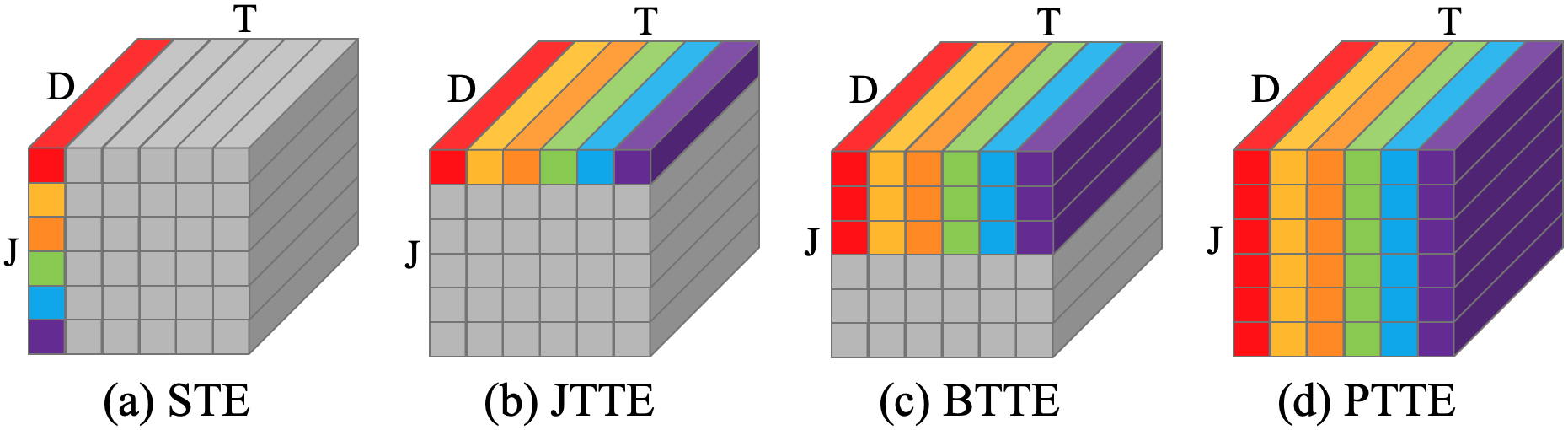

Next, a cascade of different types of transformer encoders process these features in spatial and temporal domain. All these transformer encoders are based on the typical multi-head attention transformer encoder block (TE). Spatial transformer encoder (STE) processes the joint features in each frame independently. Joint temporal transformer encoder (JTTE) works on the temporal feature sequence of each joint separately. Body-part temporal transformer encoder (BTTE) takes grouped joint temporal feature sequences that corresponding to a particular body part as input. Pose temporal transformer encoder (PTTE) groups all joint temporal feature sequences into a temporal sequence as its input. These encoders have multiple layers, and we use to denote the number of layers. In summary, the propsoed STE, JTTE, BTTE and PTTE in Figure 2 correspond to the SC, JTC, BTC and PTC in Figure 1(d), respectively.

The following sections explain each of the aforementioned encoders. If we represent input features as a tensor with shape , each encoder slices this tensor along different axes and with different block sizes as a sequence of input. Before diving into the details, Figure 3 provides an overall illustration on how the slicing is performed at each transformer encoder.

3.1 Spatial Transformer Encoder

The spatial transformer encoder (STE) is designed to learn the spatial correlation among the joints within each frame. It treats 2D joint representations as the input sequence to STE. Let be the features of all joints in the frame sliced from . A learnable positional encoding is added to before it is fed into STE. After adding the matrix , is fed into STE to capture the spatial-level correlation among all joints in the frame using the self-attention mechanism. The output of STE will be after processing all frames as follows:

| (2) |

where is a rearrange operation.

3.2 Joint Temporal Transformer Encoder

As mentioned in [38], the motion trajectories of the different body joints vary for different frames and should be learned separately. In this work, we also design a joint temporal transformer encoder (JTTE) to learn the joint-level temporal correlation by considering temporal motion trajectory information of each joint across all input frames independently. Let be the the joint sequence from A learnable temporal positional encoding is added to before it enters JTTE. JTTE applies the self-attention mechanism to enhance , where . The output of JTTE from all joints will be strack together and rearrange to become .

3.3 Body-Part Temporal Transformer Encoder

is the joint feature sequence enhanced by considering the spatial correlation of different joints in each frame and the temporal correlation of each joint sequence separately. As a fact, a pose is a composition of a set of articulated body parts and each body part is a collection of corresponding joints which are most relevant to each other. Generally, no matter simple or complex, the movements can be performed by the combined local motions of different body parts. Therefore, the global pose motion can be well captured by modeling the local body-part motions accurately and separately. Here we divide into six sequences according to different body parts: Head: {Nose, Head}, Torso: {Hip, Spine, Thorax}, Left Hand: {LShoulder, LElbow, LWrist}, Right Hand: {RShoulder, RElbow, RWrist}, Left Leg: {LHip, LKnee, LFoot}, and Right Leg: {RHip, RKnee, RFoot}. We then apply six body-part temporal transformer encoders (BTTE), one for each specific body part, to these six sequences respectively. These BTTEs are designed to capture the temporal correlation of each body part sequence.

BTTE takes as input. It is split into sequences, , where is the number of joints in the group. That is, the representations of joints from the same body parts are concatenated to form new frame-level features. As done in JTTE, a learnable temporal positional encoding is added to . The output of 6 BTTEs will be stack and rearrange to the orignal shape, .

3.4 Pose Temporal Transformer Encoder

captures the inter-joint spatial-temporal correlation up to the body-part level. Following the work [40, 14, 26], we design a pose temporal transformer encoder (PTTE) to capture the global pose-level temporal correlation of all joints across frames. PTTE reshapes as , which concatenates all joint features in a single frame as a new feature. The output of PTTE will be after rearranging.

3.5 Fusion Module

Following the above four TEs is a fusion layer that collects the output from these encoders and creates the final features, which is sent to the regression head for 3D pose prediction. The output of these TEs capture different-level of granularity of the spatial and temporal information extracted from the input 2D pose sequence. To sufficiently utilize their complementary information, a fusion module is designed for adaptively integrating the outputs of all four encoders. It is simply implemented by a fully connected feed-forward network with a learnable weight .The final enhanced high-dimensional representations can be obtained using the following procedures:

| (3) |

3.6 Regression Head

Our method performs sequence to sequence prediction, so the regression head is used to project the high-dimension output of the fusion module to 3D coordinates of the pose sequence by applying the linear transformation layer.

3.7 Loss Function

As done in [40], the standard MPJPE (Mean Per Joint Position Error) loss is used to train our entire model in an end-to-end manner by minimizing the error between the predicted and ground truth pose sequence as:

| (4) |

where and are the predicted and ground truth 3D coordinates of the joint in the frame, respectively.

| Method (CPN) | Dir. | Disc | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg. | |

| GraphSH [33] (=1) | CVPR’21 | 45.2 | 49.9 | 47.5 | 50.9 | 54.9 | 66.1 | 48.5 | 46.3 | 59.7 | 71.5 | 51.4 | 48.6 | 53.9 | 39.9 | 44.1 | 51.9 |

| GraFormer [39] (=1) | CVPR’22 | 45.2 | 50.8 | 48.0 | 50.0 | 54.9 | 65.0 | 48.2 | 47.1 | 60.2 | 70.0 | 51.6 | 48.7 | 54.1 | 39.7 | 43.1 | 51.8 |

| MGCN [41](=1) | ICCV’21 | 45.4 | 49.2 | 45.7 | 49.4 | 50.4 | 58.2 | 47.9 | 46.0 | 57.5 | 63.0 | 49.7 | 46.6 | 52.2 | 38.9 | 40.8 | 49.4 |

| ST-GCN [1] (=7) | ICCV’19 | 44.6 | 47.4 | 45.6 | 48.8 | 50.8 | 59.0 | 47.2 | 43.9 | 57.9 | 61.9 | 49.7 | 46.6 | 51.3 | 37.1 | 39.4 | 48.8 |

| VPose [25] (=243) | CVPR’19 | 45.2 | 46.7 | 43.3 | 45.6 | 48.1 | 55.1 | 44.6 | 44.3 | 57.3 | 65.8 | 47.1 | 44.0 | 49.0 | 32.8 | 33.9 | 46.8 |

| UGCN [30] (=96) | ECCV’20 | 41.3 | 43.9 | 44.0 | 42.2 | 48.0 | 57.1 | 42.2 | 43.2 | 57.3 | 61.3 | 47.0 | 43.5 | 47.0 | 32.6 | 31.8 | 45.6 |

| Liu et al. [18] (=243) | CVPR’20 | 41.8 | 44.8 | 41.1 | 44.9 | 47.4 | 54.1 | 43.4 | 42.2 | 56.2 | 63.6 | 45.3 | 43.5 | 45.3 | 31.3 | 32.2 | 45.1 |

| PoseFormer [40] (=81) | ICCV’21 | 41.5 | 44.8 | 39.8 | 42.5 | 46.5 | 51.6 | 42.1 | 42.0 | 53.3 | 60.7 | 45.5 | 43.3 | 46.1 | 31.8 | 32.2 | 44.3 |

| Anatomy3D [3] (=243) | TCSVT’21 | 41.4 | 43.2 | 40.1 | 42.9 | 46.6 | 51.9 | 41.7 | 42.3 | 53.9 | 60.2 | 45.4 | 41.7 | 46.0 | 31.5 | 32.7 | 44.1 |

| MHFormer [14] (=351) | CVPR’22 | 39.2 | 43.1 | 40.1 | 40.9 | 44.9 | 51.2 | 40.6 | 41.3 | 53.5 | 60.3 | 43.7 | 41.1 | 43.8 | 29.8 | 30.6 | 43.0 |

| P-STMO [26] (=243) | ECCV’22 | 38.9 | 42.7 | 40.4 | 41.1 | 45.6 | 49.7 | 40.9 | 39.9 | 55.5 | 59.4 | 44.9 | 42.2 | 42.7 | 29.4 | 29.4 | 42.8 |

| MixSTE [38] (=243) | CVPR’22 | 37.6 | 40.9 | 37.3 | 39.7 | 42.3 | 49.9 | 40.1 | 39.8 | 51.7 | 55.0 | 42.1 | 39.8 | 41.0 | 27.9 | 27.9 | 40.9 |

| HSTFormer (=81) | Ours | 39.5 | 42.0 | 39.9 | 40.8 | 44.4 | 50.9 | 40.9 | 41.3 | 54.7 | 58.8 | 43.6 | 40.7 | 43.4 | 30.1 | 30.4 | 42.7 |

| Method (GT) | Dir. | Disc | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg. | |

| VPose [25] (=243) | CVPR’19 | 35.2 | 40.2 | 32.7 | 35.7 | 38.2 | 45.5 | 40.6 | 36.1 | 48.8 | 47.3 | 37.8 | 39.7 | 38.7 | 27.8 | 29.5 | 37.8 |

| GraFormer [39] (=1) | CVPR’22 | 32.0 | 38.0 | 30.4 | 34.4 | 34.7 | 43.3 | 35.2 | 31.4 | 38.0 | 46.2 | 34.2 | 35.7 | 36.1 | 27.4 | 30.6 | 35.2 |

| Liu et al. [18] (=243) | CVPR’20 | 34.5 | 37.1 | 33.6 | 34.2 | 32.9 | 37.1 | 39.6 | 35.8 | 40.7 | 41.4 | 33.0 | 33.8 | 33.0 | 26.6 | 26.9 | 34.7 |

| Ray3D et al. [37] (=9) | CVPR’22 | 31.2 | 35.7 | 31.4 | 33.6 | 35.0 | 37.5 | 37.2 | 30.9 | 42.5 | 41.3 | 34.6 | 36.5 | 32.0 | 27.7 | 28.9 | 34.4 |

| SRNet [35] (=243) | ECCV’20 | 34.8 | 32.1 | 28.5 | 30.7 | 31.4 | 36.9 | 35.6 | 30.5 | 38.9 | 40.5 | 32.5 | 31.0 | 29.9 | 22.5 | 24.5 | 32.0 |

| PoseFormer [40] (=81) | ICCV’21 | 30.0 | 33.6 | 29.9 | 31.0 | 30.2 | 33.3 | 34.8 | 31.4 | 37.8 | 38.6 | 31.7 | 31.5 | 29.0 | 23.3 | 23.1 | 31.3 |

| MHFormer [14] (=351) | CVPR’22 | 27.7 | 32.1 | 29.1 | 28.9 | 30.0 | 33.9 | 33.0 | 31.2 | 37.0 | 39.3 | 30.0 | 31.0 | 29.4 | 22.2 | 23.0 | 30.5 |

| P-STMO [26] (=243) | ECCV’22 | 28.5 | 30.1 | 28.6 | 27.9 | 29.8 | 33.2 | 31.3 | 27.8 | 36.0 | 37.4 | 29.7 | 29.5 | 28.1 | 21.0 | 21.0 | 29.3 |

| MixSTE [38] (=243) | CVPR’22 | 21.6 | 22.0 | 20.4 | 21.0 | 20.8 | 24.3 | 24.7 | 21.9 | 26.9 | 24.9 | 21.2 | 21.5 | 20.8 | 14.7 | 15.7 | 21.6 |

| HSTFormer (=81) | Ours | 24.9 | 27.4 | 28.1 | 25.9 | 28.2 | 33.5 | 28.9 | 26.8 | 33.4 | 38.2 | 27.2 | 26.7 | 27.1 | 20.4 | 20.8 | 27.8 |

| Method (CPN) | Dir. | Disc | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg. | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ST-GCN [1] (=7) | ICCV’19 | 35.7 | 37.8 | 36.9 | 40.7 | 39.6 | 45.2 | 37.4 | 34.5 | 46.9 | 50.1 | 40.5 | 36.1 | 41.0 | 29.6 | 33.2 | 39.0 |

| SGNN [36] (=9) | ICCV’21 | 33.9 | 37.2 | 36.8 | 38.1 | 38.7 | 43.5 | 37.8 | 35.0 | 47.2 | 53.8 | 40.7 | 38.3 | 41.8 | 30.1 | 31.4 | 39.0 |

| VPose [25] (=243) | CVPR’19 | 34.1 | 36.1 | 34.4 | 37.2 | 36.4 | 42.2 | 34.4 | 33.6 | 45.0 | 52.5 | 37.4 | 33.8 | 37.8 | 25.6 | 27.3 | 36.5 |

| Liu et al. [18] (=243) | CVPR’20 | 32.3 | 35.2 | 33.3 | 35.8 | 35.9 | 41.5 | 33.2 | 32.7 | 44.6 | 50.9 | 37.0 | 32.4 | 37.0 | 25.2 | 27.2 | 35.6 |

| UGCN [30] (=96) | ECCV’20 | 32.9 | 35.2 | 35.6 | 34.4 | 36.4 | 42.7 | 31.2 | 32.5 | 45.6 | 50.2 | 37.3 | 32.8 | 36.3 | 26.0 | 23.9 | 35.5 |

| Anatomy3D [3] (=243) | TCSVT’21 | 32.6 | 35.1 | 32.8 | 35.4 | 36.3 | 40.4 | 32.4 | 32.3 | 42.7 | 49.0 | 36.8 | 32.4 | 36.0 | 24.9 | 26.5 | 35.0 |

| PoseFormer [40] (=81) | ICCV’21 | 32.5 | 34.8 | 32.6 | 34.6 | 35.3 | 39.5 | 32.1 | 32.0 | 42.8 | 48.5 | 34.8 | 32.4 | 35.3 | 24.5 | 26.0 | 34.6 |

| MHFormer [14] (=351) | CVPR’22 | 31.5 | 34.9 | 32.8 | 33.6 | 35.3 | 39.6 | 32.0 | 32.2 | 43.5 | 48.7 | 36.4 | 32.6 | 34.3 | 23.9 | 25.1 | 34.4 |

| P-STMO [26] (=243) | ECCV’22 | 31.3 | 35.2 | 32.9 | 33.9 | 35.4 | 39.3 | 32.5 | 31.5 | 44.6 | 48.2 | 36.3 | 32.9 | 34.4 | 23.8 | 23.9 | 34.4 |

| MixSTE [38] (=243) | CVPR’22 | 30.8 | 33.1 | 30.3 | 31.8 | 33.1 | 39.1 | 31.1 | 30.5 | 42.5 | 44.5 | 34.0 | 30.8 | 32.7 | 22.1 | 22.9 | 32.6 |

| HSTFormer (=81) | Ours | 31.1 | 33.7 | 33.0 | 33.2 | 33.6 | 38.8 | 31.9 | 31.5 | 43.7 | 46.3 | 35.7 | 31.5 | 33.1 | 24.2 | 24.5 | 33.7 |

4 Experiments

4.1 Datasets and Evaluation Metrics

The proposed method is evaluated on three benchmark datasets, i.e., Human3.6M [12], MPI-INF-3DHP [20], and HumanEva [27].

Human3.6M. It is the most representative benchmark dataset for 3D human pose estimation. It contains over 3.6 million images and their corresponding 3D human pose annotations. 11 subjects perform 15 daily activities in an indoor environment, such as walking, sitting, smoking, etc. High-resolution videos of each subject are recorded from four different views. Following the same policy of others [40, 14, 38, 26], 5 subjects (S1, S5, S6, S7, S8) are used for training and 2 subjects (S9, S11) are used for testing.

MPI-INF-3DHP. It is also a widely-used large-scale and more challenging dataset containing both indoor and complex outdoor scenes for 3D human pose estimation. It has 1.3 million frames with more diverse motions than Human3.6M. The training set has 8 subjects performing 8 activities and the test set has 7 subjects. The same as SOTAs [40, 14, 38, 26], we train our method using the training set and evaluate it using the valid frames in the test set.

HumanEva. It is a smaller but challenging dataset compared to the above two datasets. The HumanEva consists of about 29,000 frames from 7 calibrated video sequences. Given the small volume, it becomes challenging in converging the training model to achieve good performance. Following the same setting to [40, 38], two actions including Walking and jogging in subjects S1, S2, and S3 are evaluated.

Evaluation Metrics. Following [40, 14, 38, 26], we use the same metrics for performance evaluation. For Human3.6M, two evaluation protocols are adopted to calculate the quantitative results. Protocol 1 refers to MPJPE which is the mean Euclidean distance between the predictions and ground truths in millimeters (mm). Protocol 2 refers to P-MPJPE which is the MPJPE between aligned 3D pose predictions and ground truths. For MPI-INF-3DHP, the area under the curve (AUC), percentage of correct keypoints (PCK), and MPJPE are used as evaluation metrics. For HumanEva, MPJPE is used for performance evaluation.

4.2 Implementation Details

HSTFormer is implemented in PyTorch. We train the HSTFormer from scratch for 100 epochs on 4 Nvidia Tesla V100 GPUs using Adam optimizer with an initial learning rate of 0.001 and decaying it to 80% for every 20 epochs. The batchsize is 128 for each GPU. The poses are flipped horizontally to perform data augmentation for both training and testing, following [24, 40].

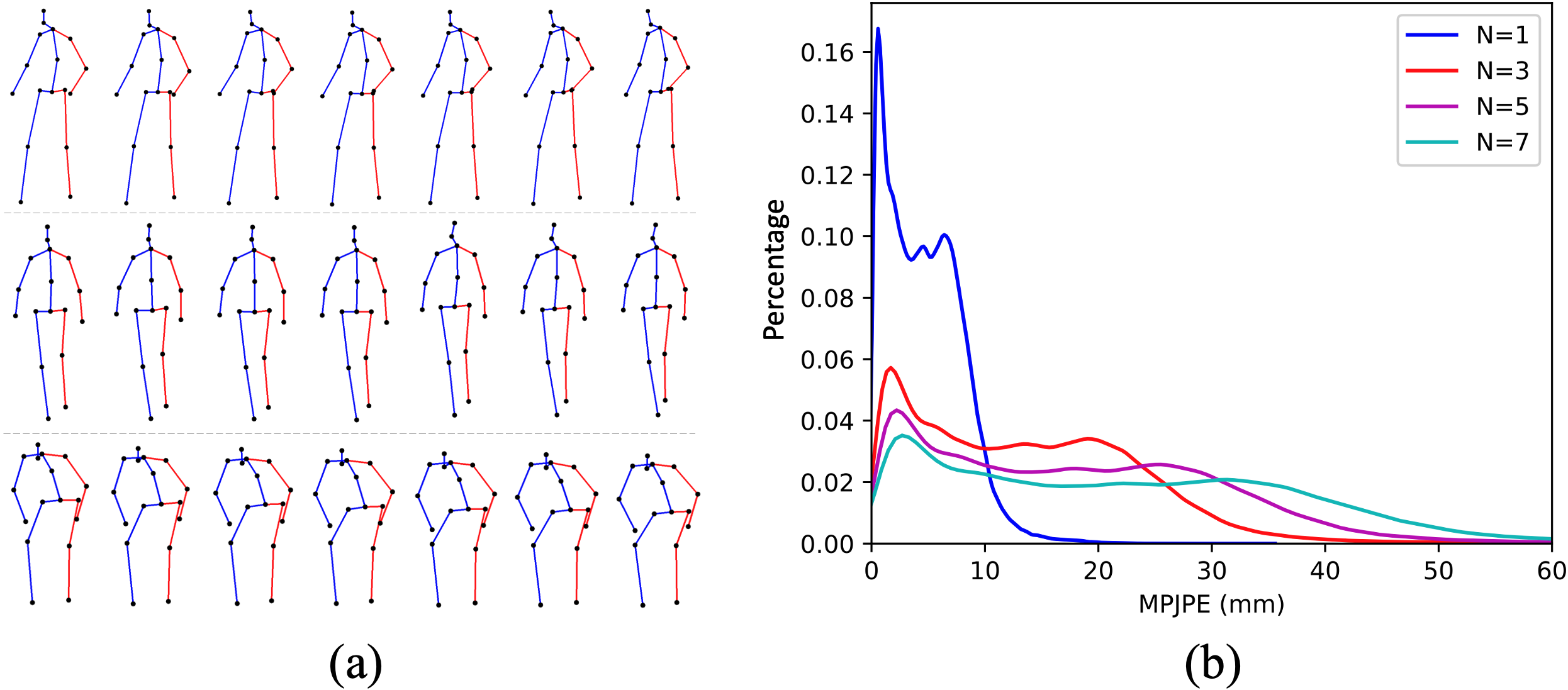

We observe that the poses from adjacent frames usually contain redundant information since few changes happen between them if the video FPS is large (25 or 30). Fig. 8 (a) shows examples of three actions with seven consecutive frames, visually demonstrating this observation. Hence, to increase the diversity of the input poses, one way is to collect a longer pose sequence. But this will increase the computation burden. Another way is to collect a pose sequence with a fixed number of frames and sample the frames with an interval (i.e., , where is the pose index in the collected pose sequence and is the frame index in the video), meaning that the pose sequence will cover more temporal information with fixed computation cost when giving a larger . Therefore, we adopt the second pose sequence collection way in this work. The distribution of MPJPEs computed between adjacent frames with a sample interval of 1, 3, 5, and 7 are shown in Fig. 8 (b). We can see that a larger sample interval produces a more diverse pose sequence of a bigger MPJPE value.

4.3 Comparison with State-of-the-art Models

Results on Human3.6M. The proposed HSTFormer is compared with existing SOTA approaches on the Human3.6M dataset. We trained HSTFormer with the following setting: , , and . As done by others, HSTFormer also takes the sequences of 2D pose predictions detected by the popular cascaded pyramid network (CPN) [5] and ground truths (GT) as inputs for training. Table 1 and Table 2 show their best reported results of different methods in terms of Protocol 1 (MPJPE) using both CPN and GT inputs and Protocol 2 (P-MPJPE) using CPN input, respectively. It can be seen that our method achieves the second best performance for all overall comparisons even its input sequence length is . Additionally, our average MPJPE (27.8 mm) outperforms the third best result (29.3 mm) by 5.1% (1.5 mm) when taking GT poses as input. These demonstrate the competitiveness and effectiveness of the proposed method on the constrained and simple Human3.6M dataset. It is also worth pointing out that our result is about 6.2 mm in average worse than the best result MixSTE. However, we would argue that our approach is more generalized and consistent, which is proven in the following sections.

Results on MPI-INF-3DHP. To verify the generalization ability of different methods on a more challenging large-scale dataset, we evalaute the proposed method on MPI-INF-3DHP by taking GT poses as input and compare its results with SOTA approaches. We observe that P-STMO [26] and others (e.g., PoseFormer [40], MHFormer [14], and MixSTE [38]) use different 3D pose annotations during training. For fair comparison, we train our model using both data with the settings , , and . Table 3 lists our results and the best reported results of others on the MPI-INF-3DHP testset. It can be seen that our method HSTFormer achieves the best performance in three evaluation metrics for all comparisons when and even the second best when . Specifically, HSTFormer outperforms MixSTE [38] (the best method on Human3.6M) by a large margin, e.g., decreasing MPJPE by 24.6% (from 54.9 mm to 41.4 mm). This demonstrates that our method is more powerful to handle the challenging and complex outdoor scenes than others.

We further examine the performance comparison of same method evaluated on different datasets and have the following observation. Comparing to the results in the bottom part of Table 1 and Table 3, we notice that the performance of the SOTA methods drops much more than ours when evaluating on Human3.6M and MPI-INF-3DHP. For example, the average MPJPE is increased by 27.5 mm (90.2%) of MHFormer [14] and 23.3 mm (107.9%) of MixSTE [38] vs 13.6 mm (48.9%) of ours. There are two conclusions drawn from the above observation. First, the proposed HSTFormer has a higher generalization ability than others on challenging and complex scenes. Second, the current SOTAs are prone to overfit on a relative more saturated Human3.6M dataset.

| Method | PCK | AUC | MPJPE | |

| Lin et al. [15] (=25) | BMVC’19 | 83.6 | 51.4 | 79.8 |

| Chen et al. [4] (=243) | TCSVT’21 | 87.8 | 53.8 | 79.1 |

| PoseFormer [40] (=9) | ICCV’21 | 88.6 | 56.4 | 77.1 |

| Wang et al. [31] (=96) | ECCV’20 | 86.9 | 62.1 | 68.1 |

| MHFormer [14] (=9) | CVPR’22 | 93.8 | 63.3 | 58.0 |

| MixSTE [38] (=27) | CVPR’22 | 94.4 | 66.5 | 54.9 |

| HSTFormer (=27) | Ours | 96.6 | 70.5 | 44.5 |

| HSTFormer (=81) | Ours | 97.3 | 71.5 | 41.4 |

| P-STMO [26]⋆ (=81) | ECCV’22 | 97.9 | 75.8 | 32.2 |

| HSTFormer⋆ (=27) | Ours | 98.0 | 78.4 | 28.6 |

| HSTFormer⋆ (=81) | Ours | 98.0 | 78.6 | 28.3 |

| Method | Walk | Jog | Avg. | ||||

| S1 | S2 | S3 | S1 | S2 | S3 | ||

| MixSTE [38] (=43) | 20.3 | 22.4 | 34.8 | 27.3 | 32.1 | 34.3 | 28.5 |

| MHFormer [14]† (=43) | 20.6 | 14.6 | 32.7 | 34.1 | 20.6 | 23.8 | 24.4 |

| PoseFormer [40] (=43) | 16.3 | 11.0 | 45.7 | 25.0 | 15.2 | 15.1 | 21.6 |

| HSTFormer (=9) | 16.7 | 14.3 | 34.2 | 25.9 | 18.1 | 19.4 | 21.4 |

| HSTFormer (=43) | 15.4 | 12.0 | 33.2 | 25.5 | 16.5 | 18.4 | 20.2 |

Results on HumanEva. To further explore the generalization ability of different methods on a smaller dataset, we evaluate our method HSTFormer on the HumanEva dataset. The challenge of a small dataset such as HumanEva is due to its short video lengths. This particularly requires more robust spatial and temporal correlations of joints to avoid the overfitting during training. We configure our model with the following settings: , , and . All compared methods and ours are trained from scratch on HumanEva. Table 4 reports the MPJPE results of different methods. It can be seen that HSTFormer achieves the best performance on both and compared to others. Specifically, it outperforms PoseFormer [40] by 6.5% (1.4 mm), MHFormer [14] by 17.2% (4.2 mm), and MixSTE [38] by 29.1% (8.3 mm) in average MPJPE. This demonstrates that HSTFormer is more general or suitable than the others on small datasets due to comprehensively capturing joints’ multi-level spatial and temporal correlations.

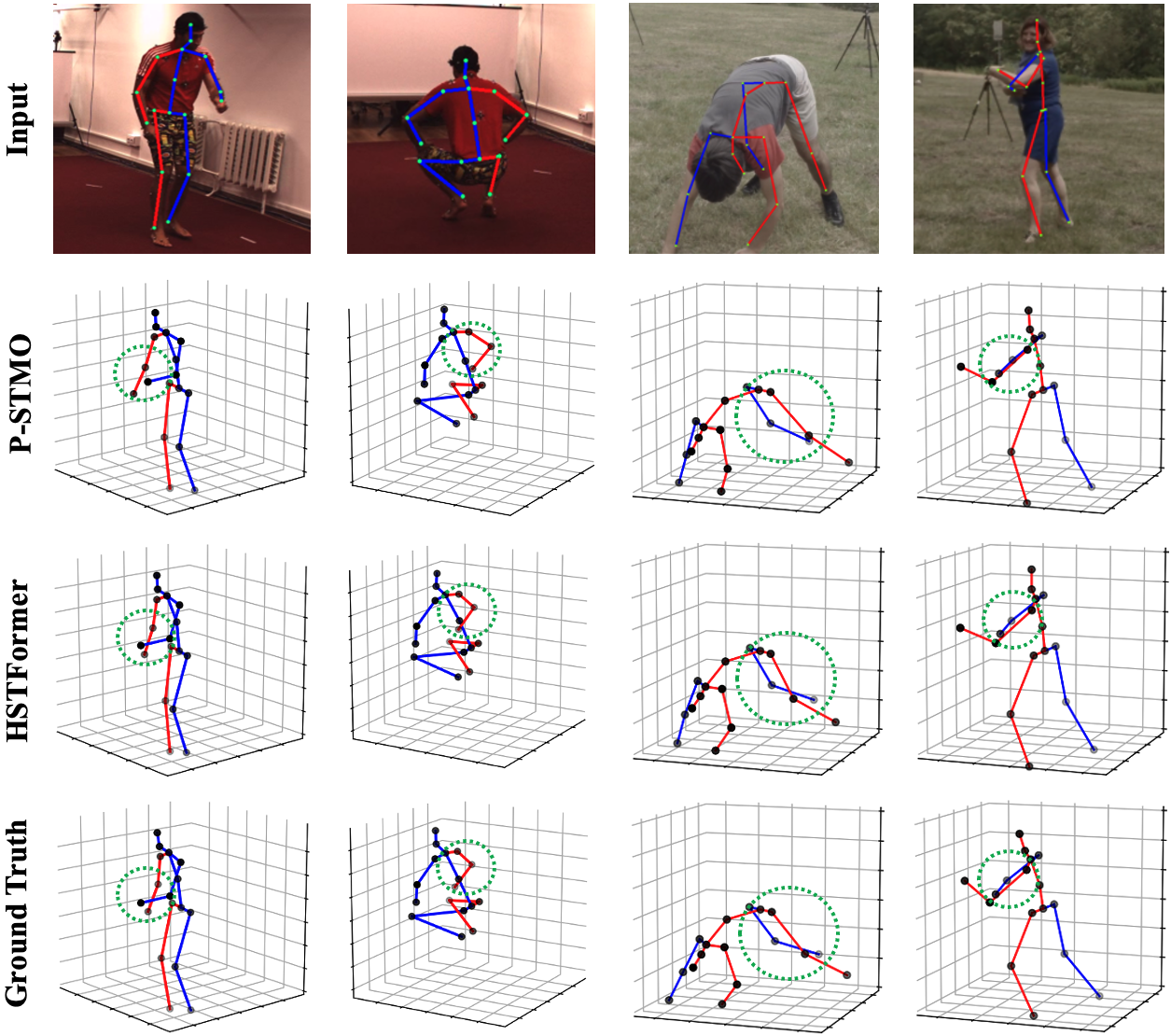

Qualitative Results. As shown in Fig. 5, we also visualize some 3D pose estimation results produced by HSTFormer and P-STMO [26] on both Human3.6M and MPI-INF-3DHP datasets. This visually demonstrates that our model can estimate accurate 3D poses in both constrained indoor and complex outdoor scenes/activities, especially on the parts of limbs that may move fast or be occluded.

4.4 Ablation Study

To verify the effect of different modules and hyper-parameters in HSTFormer, extensive ablation experiments are conducted on Human3.6M with GT poses as input and MPJPE (mm) as evaluation metric.

Effect of Architecture Modules. We first study the module choices of HSTFormer by configuring them with different combinations. The results are reported in Table 5. It can be seen that the model makes steady and accumulated improvement in MPJPE (from 41.6 mm to 30.6 mm) as more modules are hierarchically built on with temporal emphasis and fusion. The joint-wise correlations information is effectively modeled as more modules are included and aggregated. Notably, (i) the model gets the largest performance improvement (from 39.8 mm to 35.1 mm) when adding BTTE. This demonstrates that the explicitly modeling of the body parts’ local motions is crucial. (ii) The fusion module plays an essential role in improving the MPJPE performance from 33.4 mm to 30.6 mm.

Moreover, we investigate two module aggregation strategies: consecutively aggregating the encoders (i) from local to global and (ii) from global to local. Table 6 reports their results. It is apparent that aggregating the modules from local to global is much better than the other. This rigorously proves that hierarchical modeling is effective, following the obvious kinesiological analysis (joints to body-parts, body-parts to poses). The correct hierarchical order helps to pass the crucial information for better performance, while the incorrect order jeopardizes the result.

| Model | STE | JTTE | BTTE | PTTE | Fusion | MPJPE |

|---|---|---|---|---|---|---|

| 1 | ✓ | ✗ | ✗ | ✗ | ✗ | 41.6 |

| 2 | ✓ | ✓ | ✗ | ✗ | ✗ | 39.8 |

| 3 | ✓ | ✓ | ✓ | ✗ | ✗ | 35.1 |

| 4 | ✓ | ✓ | ✓ | ✓ | ✗ | 33.4 |

| 5 | ✓ | ✓ | ✓ | ✗ | ✓ | 32.2 |

| 6 | ✓ | ✓ | ✓ | ✓ | ✓ | 30.6 |

| Aggregation strategy | MPJPE |

|---|---|

| (i) STE JTTE BTTE PTTE Fusion | 30.6 |

| (ii) PTTE BTTE JTTE STE Fusion | 37.4 |

| Params (M) | FLOPs (G) | MPJPE | ||

|---|---|---|---|---|

| 4 | 81 | 15.25 | 2.64 | 32.8 |

| 6 | 81 | 22.81 | 3.94 | 30.6 |

| 8 | 81 | 30.38 | 5.24 | 30.4 |

| 6 | 9 | 22.73 | 0.45 | 35.5 |

| 6 | 27 | 22.75 | 1.31 | 34.4 |

| 6 | 43 | 22.77 | 2.10 | 31.7 |

| 9 | 27 | 43 | 81 | |||||||||||||

| 1 | 3 | 5 | 7 | 1 | 3 | 5 | 7 | 1 | 3 | 5 | 7 | 1 | 3 | 5 | 7 | |

| MPJPE | 35.5 | 34.4 | 33.4 | 32.5 | 34.4 | 32.3 | 31.2 | 30.4 | 31.7 | 30.2 | 29.3 | 28.7 | 30.6 | 28.9 | 28.3 | 27.8 |

Effect of Architecture Hyper-Parameters. Table 7 reports the results of different settings of the hyper-parameter (the number of TE layers) and (the input length). It can be seen that although and can get a little better performance than and , we select the latter one instead of the former one as the final setting because the former one introduces more parameters (30.38M vs 22.81M) and requires more computation (5.24G vs 3.94G). Table 8 reports the results of different settings of the hyper-parameter (the input length) and (the sample interval). It is obvious that the model performance of MPJPE is improved as the frame sampling interval becomes larger while fixing the input length. We also validate the setting of and in our model and find that the performance of MPJPE is not better than the setting of and . Therefore, we select in this work. This demonstrates that we can improve the performance but without introducing extra computation cost and model complexity by fixing the input length and adopting a large sample interval, so as to obtain a relatively large temporal receptive field.

5 Conclusions

In this paper, we present HSTFormer, a novel framework based on hierachical spatial-temporal transformers for 3D human pose estimation from 2D pose sequence. HSTFormer processes pose sequence in a hierarchical paradigm, from joints to body-parts, and eventually to the entire pose. Four transformer encoders are concatenated following the kinesiological orders: spatial transformer encoder, joint temporal transformer encoder, body-part temporal transformer encoder, and pose temporal transformer encoder. In addition, a fusion model gathers all layers is used in predict the 3D poses. Extensive experiments and detailed ablation studies are conducted. A superiror and consistent performance is demonstrated with the effectiveness of the hierarchical design. Our method outperforms state-of-the-art approaches by a large margin on the challenging and MPI-INF-3DHP dataset with complex outdoor scenes.

Appendix A Comparison of Computational Complexity

Table 9 lists the number of parameters, floating-point operations (FLOPs) at inference, and MPJPE evaluated on Human3.6M [12] and MPI-INF-3DHP[20] with GT poses as input to compare the computational complexity of different methods. The parameter number and FLOPs are calculated using the functions (parameter_count_table() and FlopCountAnalysis()) from the fvcore package111https://github.com/facebookresearch/fvcore. The best MPJPEs reported in their papers are listed in the last column for reference.

From Table 9, there are two observations. First, the HSTFormer achieves the best performance on both datasets compared to the others except MixSTE on Human3.6M. Three temporal-attention modules are added in our method. This leads to that the proposed HSTFormer has more parameters than PoseFormer [40] and P-STMO [26] but is still less than MHFormer [14] and MixSTE [38]. This demonstrates that the effectiveness of the proposed HSTFormer is not only because of introducing more parameters, but more importantly because of its capability of learning the multi-level spatial-temporal correlations of joints comprehensively and structurally.

Second, HSTFormer outperforms MixSTE on MPI-INF-3DHP by a large margin, e.g., decreasing MPJPE by 24.6% (from 54.9 mm to 41.4 mm), but gets worse results than MixSTE on Human3.6M. The possible reason is that MixSTE is prone to overfit on the relative saturated Human3.6M dataset and our method has a high generalization ability on the challenging MPI-INF-3DHP dataset, which has been discussed in the manuscript.

| Method | Params (M) | FLOPs (G) | MPJPE |

| PoseFormer [40] (=81/9) | 9.6/9.6 | 0.8/0.1 | 31.3/77.1 |

| MHFormer [14] (=351/9) | 31.5/19.1 | 8.2/0.2 | 30.5/58.0 |

| MixSTE [38] (=243/27) | 33.8/33.7 | 147.9/15.6 | 21.6/54.9 |

| HSTFormer (=81/81) | 22.72/22.72 | 2.12/2.12 | 27.8/41.4 |

| P-STMO [26] (=243/81) | 8.9/8.0 | 1.4/0.5 | 29.3/32.2⋆ |

| HSTFormer (=81/81) | 22.72/22.72 | 2.12/2.12 | 27.8/28.3⋆ |

Appendix B More Qualitative Results on the Human3.6M and MPI-INF-3DHP Datasets

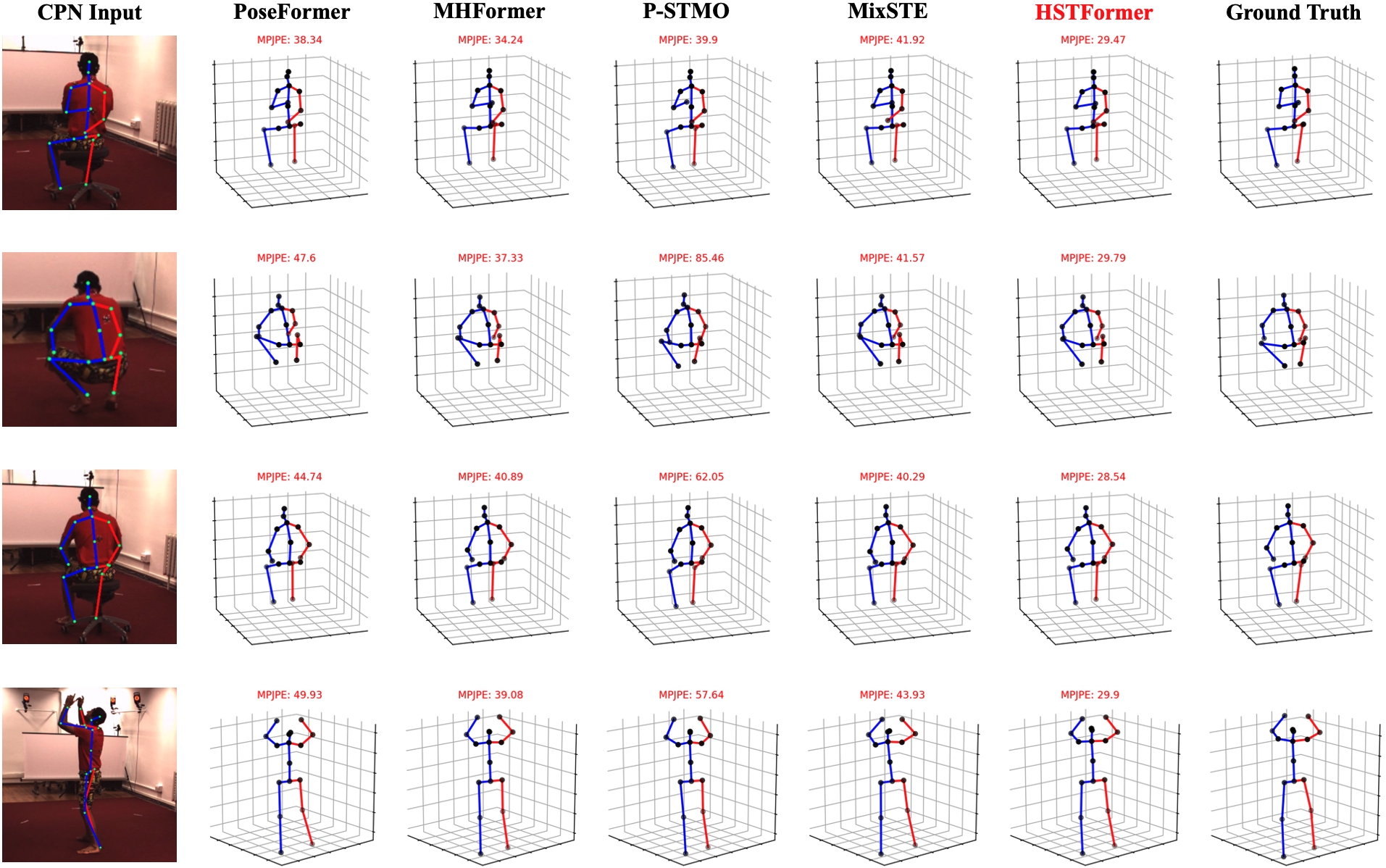

The existing approaches PoseFormer [40], MHFormer [14], MixSTE [38], and P-STMO [26] release their trained models on Human3.6M with CPN poses as input. Thus, we provide various qualitative comparisons of the propsoed HSTFormer and the aforementioned SOTA approaches on Human3.6M. This is shown in Figure 6.

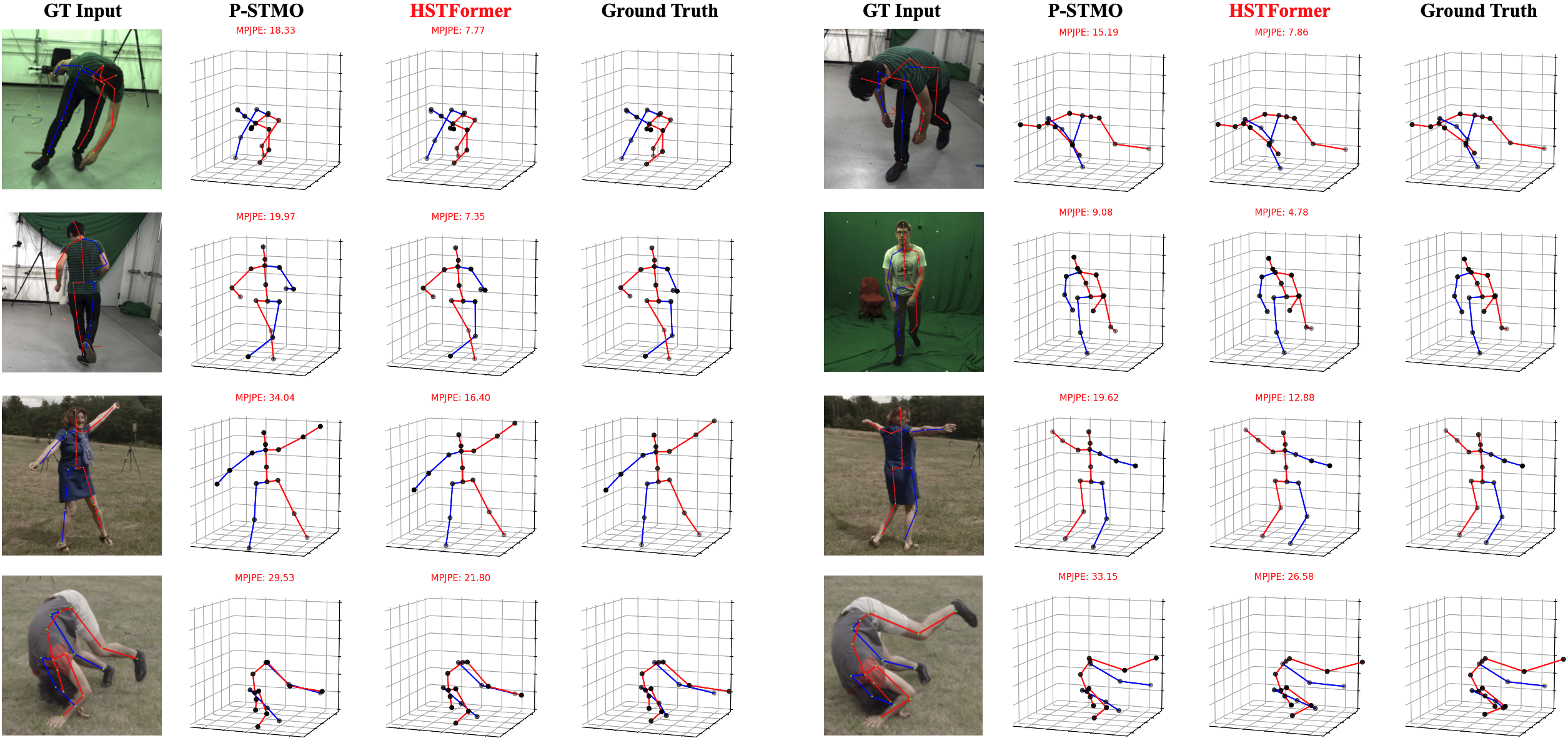

In addition, we take analysis on the the MPI-INF-3DHP dataset as well. Since only the P-STMO [26] provides the trained model on MPI-INF-3DHP with GT poses as input. Thus, we compare the qualitative results of P-STMO and ours on MPI-INF-3DHP, as shown in Figure 7.

From Figure 6 and Figure 7, it can be seen that our method is able to produce accurate 3D poses in both constrained indoor and challenging outdoor scenes/activities, especially on the parts of limbs that may move in various speed, as well as be occluded.

B.1 Video Demos

We attached three videos222https://drive.google.com/drive/folders/1nffpLTzBrvmZp0MBH6WEoLu_YVtxWwpw?usp=share_link with following filenames:

-

–

“Human3.6M_Indoor_Video.mp4”

-

–

“3DHP_Indoor_Video.mp4”

-

–

“3DHP_Outdoor_Video.mp4”

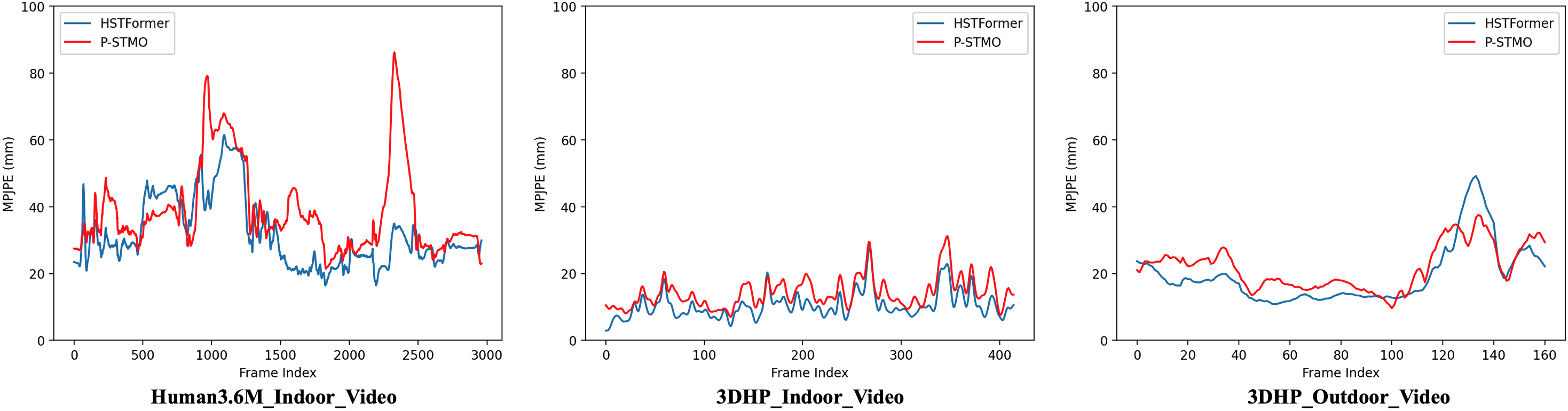

with side-by-side comparison of the proposed HSTFormer and P-STMO. In order to further demonstrate the superior performance of the proposed HSTFormer, we further plot frame-wise average MPJPE across all estimated joints which re-depicted in Fig. 8. These results are associated with the video demo filenames in the following, respectively.

-

–

“Human3.6M_Indoor_Video.mp4”

-

–

“3DHP_Indoor_Video.mp4”

-

–

“3DHP_Outdoor_Video.mp4”

Our model (blue line) achieves better performance than P-STMO. Specifically, our model reconstruct 3D pose with lower MPJPE in most frames. It also shows that our model is more stable and robust with better consistency.

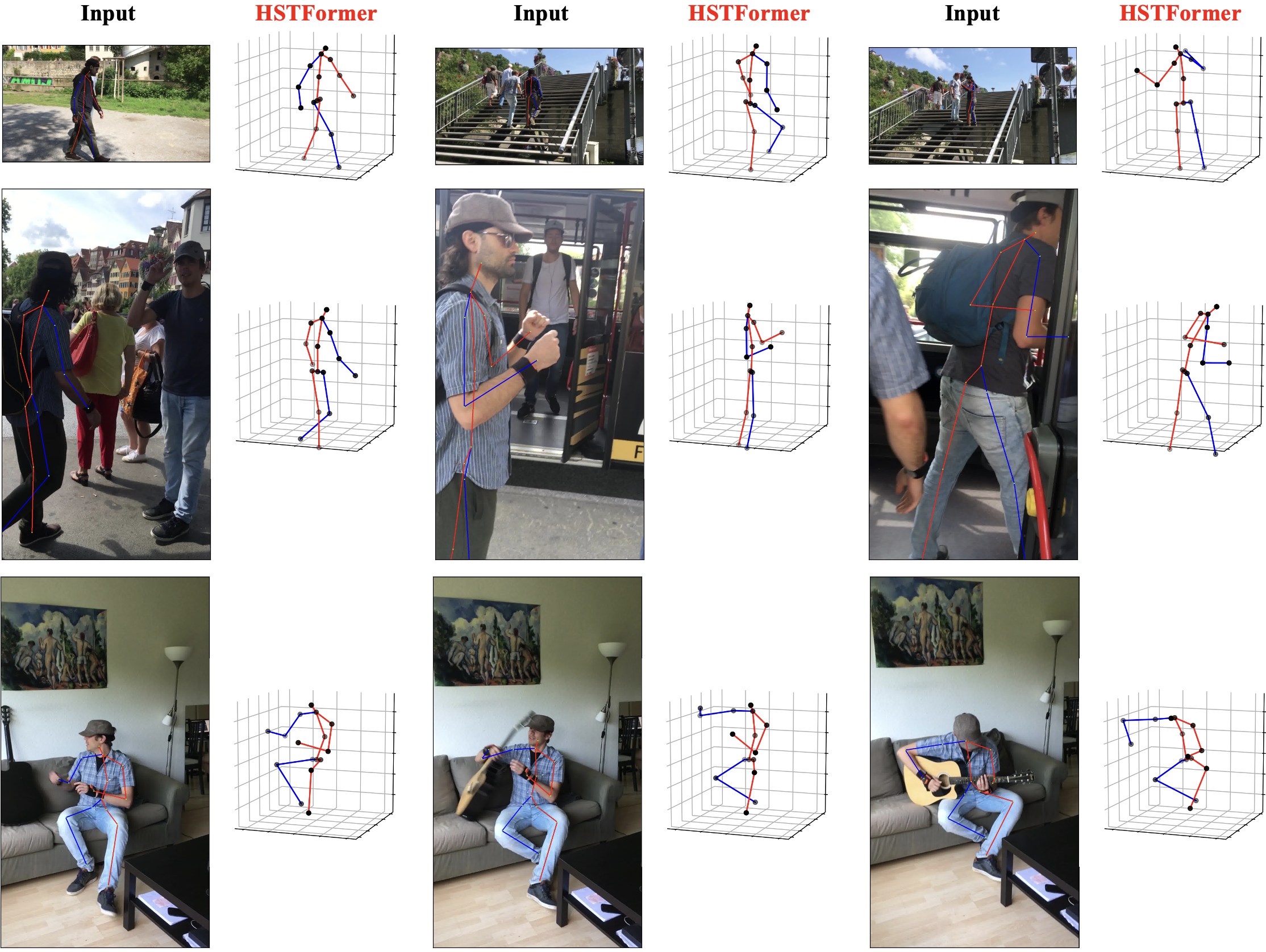

Appendix C Qualitative Results on “in-the-wild” Videos

To verify the effectiveness and generalization ability of the proposed method on “in-the-wild” videos that often contain unseen poses/activities in the training set, we run our method HSTFormer on the 3DPW [29] dataset. Here, HSTFormer is trained using the MPI-INF-3DHP training set. Figure 9 shows some visual examples of 3D pose estimation results produced by HSTFormer. Additionally, two video demos are also included in the supplementary material, named “3DPW_Video_1.mp4” and “3DPW_Video_2.mp4”. From them, we can see that HSTFormer can generalize well to “in-the-wild” videos, even for the unseen poses/activities in the training set.

References

- [1] Yujun Cai, Liuhao Ge, Jun Liu, Jianfei Cai, Tat-Jen Cham, Junsong Yuan, and Nadia Magnenat Thalmann. Exploiting spatial-temporal relationships for 3d pose estimation via graph convolutional networks. In ICCV, pages 2272–2281, 2019.

- [2] Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, and Sergey Zagoruyko. End-to-end object detection with transformers. In ECCV, pages 213–229, 2020.

- [3] Tianlang Chen, Chen Fang, Xiaohui Shen, Yiheng Zhu, Zhili Chen, and Jiebo Luo. Anatomy-aware 3d human pose estimation with bone-based pose decomposition. IEEE TCSVT, 32(1):198–209, 2021.

- [4] Tianlang Chen, Chen Fang, Xiaohui Shen, Yiheng Zhu, Zhili Chen, and Jiebo Luo. Anatomy-aware 3d human pose estimation with bone-based pose decomposition. IEEE TCSVT, 2021.

- [5] Yilun Chen, Zhicheng Wang, Yuxiang Peng, Zhiqiang Zhang, Gang Yu, and Jian Sun. Cascaded pyramid network for multi-person pose estimation. In CVPR, pages 7103–7112, 2018.

- [6] Rishabh Dabral, Anurag Mundhada, Uday Kusupati, Safeer Afaque, Abhishek Sharma, and Arjun Jain. Learning 3d human pose from structure and motion. In ECCV, pages 668–683, 2018.

- [7] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [8] Rıza Alp Güler, Natalia Neverova, and Iasonas Kokkinos. Densepose: Dense human pose estimation in the wild. In CVPR, pages 7297–7306, 2018.

- [9] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- [10] Mir Rayat Imtiaz Hossain and James J Little. Exploiting temporal information for 3d human pose estimation. In ECCV, pages 68–84, 2018.

- [11] Zhanyuan Huang, Yang Liu, Yajun Fang, and Berthold KP Horn. Video-based fall detection for seniors with human pose estimation. In UV, pages 1–4. IEEE, 2018.

- [12] Catalin Ionescu, Dragos Papava, Vlad Olaru, and Cristian Sminchisescu. Human3. 6m: Large scale datasets and predictive methods for 3d human sensing in natural environments. IEEE TPAMI, 36(7):1325–1339, 2013.

- [13] Lei Jin, Chenyang Xu, Xiaojuan Wang, Yabo Xiao, Yandong Guo, Xuecheng Nie, and Jian Zhao. Single-stage is enough: Multi-person absolute 3d pose estimation. In CVPR, pages 13086–13095, 2022.

- [14] Wenhao Li, Hong Liu, Hao Tang, Pichao Wang, and Luc Van Gool. Mhformer: Multi-hypothesis transformer for 3d human pose estimation. In CVPR, pages 13147–13156, 2022.

- [15] Jiahao Lin and Gim Hee Lee. Trajectory space factorization for deep video-based 3d human pose estimation. arXiv preprint arXiv:1908.08289, 2019.

- [16] Tsung-Yi Lin, Piotr Dollár, Ross Girshick, Kaiming He, Bharath Hariharan, and Serge Belongie. Feature pyramid networks for object detection. In CVPR, pages 2117–2125, 2017.

- [17] Mengyuan Liu and Junsong Yuan. Recognizing human actions as the evolution of pose estimation maps. In CVPR, pages 1159–1168, 2018.

- [18] Ruixu Liu, Ju Shen, He Wang, Chen Chen, Sen-ching Cheung, and Vijayan Asari. Attention mechanism exploits temporal contexts: Real-time 3d human pose reconstruction. In CVPR, pages 5064–5073, 2020.

- [19] Julieta Martinez, Rayat Hossain, Javier Romero, and James J Little. A simple yet effective baseline for 3d human pose estimation. In CVPR, pages 2640–2649, 2017.

- [20] Dushyant Mehta, Helge Rhodin, Dan Casas, Pascal Fua, Oleksandr Sotnychenko, Weipeng Xu, and Christian Theobalt. Monocular 3d human pose estimation in the wild using improved cnn supervision. In 3DV. IEEE, 2017.

- [21] Dushyant Mehta, Srinath Sridhar, Oleksandr Sotnychenko, Helge Rhodin, Mohammad Shafiei, Hans-Peter Seidel, Weipeng Xu, Dan Casas, and Christian Theobalt. Vnect: Real-time 3d human pose estimation with a single rgb camera. ACM TOG, 36(4):1–14, 2017.

- [22] Tewodros Legesse Munea, Yalew Zelalem Jembre, Halefom Tekle Weldegebriel, Longbiao Chen, Chenxi Huang, and Chenhui Yang. The progress of human pose estimation: a survey and taxonomy of models applied in 2d human pose estimation. IEEE Access, 8:133330–133348, 2020.

- [23] Georgios Pavlakos, Xiaowei Zhou, Konstantinos G Derpanis, and Kostas Daniilidis. Coarse-to-fine volumetric prediction for single-image 3d human pose. In CVPR, pages 7025–7034, 2017.

- [24] Dario Pavllo, Christoph Feichtenhofer, Michael Auli, and David Grangier. Modeling human motion with quaternion-based neural networks. IJCV, 128(4):855–872, 2020.

- [25] Dario Pavllo, Christoph Feichtenhofer, David Grangier, and Michael Auli. 3d human pose estimation in video with temporal convolutions and semi-supervised training. In CVPR, pages 7753–7762, 2019.

- [26] Wenkang Shan, Zhenhua Liu, Xinfeng Zhang, Shanshe Wang, Siwei Ma, and Wen Gao. P-stmo: Pre-trained spatial temporal many-to-one model for 3d human pose estimation. In Shai Avidan, Gabriel Brostow, Moustapha Cissé, Giovanni Maria Farinella, and Tal Hassner, editors, ECCV, pages 461–478, 2022.

- [27] Leonid Sigal, Alexandru O Balan, and Michael J Black. Humaneva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion. IJCV, 87(1):4–27, 2010.

- [28] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [29] Timo Von Marcard, Roberto Henschel, Michael J Black, Bodo Rosenhahn, and Gerard Pons-Moll. Recovering accurate 3d human pose in the wild using imus and a moving camera. In ECCV.

- [30] Jingbo Wang, Sijie Yan, Yuanjun Xiong, and Dahua Lin. Motion guided 3d pose estimation from videos. In ECCV, pages 764–780. Springer, 2020.

- [31] Jingbo Wang, Sijie Yan, Yuanjun Xiong, and Dahua Lin. Motion guided 3d pose estimation from videos. In ECCV, pages 764–780. Springer, 2020.

- [32] Wenhai Wang, Enze Xie, Xiang Li, Deng-Ping Fan, Kaitao Song, Ding Liang, Tong Lu, Ping Luo, and Ling Shao. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In ICCV, pages 568–578, 2021.

- [33] Tianhan Xu and Wataru Takano. Graph stacked hourglass networks for 3d human pose estimation. In CVPR, pages 16105–16114, 2021.

- [34] Sen Yang, Zhibin Quan, Mu Nie, and Wankou Yang. Transpose: Keypoint localization via transformer. In ICCV, pages 11802–11812, 2021.

- [35] Ailing Zeng, Xiao Sun, Fuyang Huang, Minhao Liu, Qiang Xu, and Stephen Lin. Srnet: Improving generalization in 3d human pose estimation with a split-and-recombine approach. In ECCV, pages 507–523. Springer, 2020.

- [36] Ailing Zeng, Xiao Sun, Lei Yang, Nanxuan Zhao, Minhao Liu, and Qiang Xu. Learning skeletal graph neural networks for hard 3d pose estimation. In ICCV, pages 11436–11445, 2021.

- [37] Yu Zhan, Fenghai Li, Renliang Weng, and Wongun Choi. Ray3d: ray-based 3d human pose estimation for monocular absolute 3d localization. In CVPR, pages 13116–13125, 2022.

- [38] Jinlu Zhang, Zhigang Tu, Jianyu Yang, Yujin Chen, and Junsong Yuan. Mixste: Seq2seq mixed spatio-temporal encoder for 3d human pose estimation in video. In CVPR, pages 13232–13242, 2022.

- [39] Weixi Zhao, Weiqiang Wang, and Yunjie Tian. Graformer: Graph-oriented transformer for 3d pose estimation. In CVPR, pages 20438–20447, 2022.

- [40] Ce Zheng, Sijie Zhu, Matias Mendieta, Taojiannan Yang, Chen Chen, and Zhengming Ding. 3d human pose estimation with spatial and temporal transformers. In ICCV, pages 11656–11665, October 2021.

- [41] Zhiming Zou and Wei Tang. Modulated graph convolutional network for 3d human pose estimation. In ICCV, pages 11477–11487, 2021.