How far is Language Model from 100% Few-shot Named Entity Recognition in Medical Domain

Abstract

Recent advancements in language models (LMs) have led to the emergence of powerful models such as Small LMs111We define SLMs as pre-trained models with fewer parameters compared to models like GPT-3/3.5/4, such as T5, BERT, and others. (e.g., T5) and Large LMs (e.g., GPT-4). These models have demonstrated exceptional capabilities across a wide range of tasks, such as name entity recognition (NER) in the general domain. Nevertheless, their efficacy in the medical section remains uncertain and the performance of medical NER always needs high accuracy because of the particularity of the field. This paper aims to provide a thorough investigation to compare the performance of LMs in medical few-shot NER and answer How far is LMs from 100% Few-shot NER in Medical Domain, and moreover to explore an effective entity recognizer to help improve the NER performance. Based on our extensive experiments conducted on 16 NER models spanning from 2018 to 2023, our findings clearly indicate that LLMs outperform SLMs in few-shot medical NER tasks, given the presence of suitable examples and appropriate logical frameworks. Despite the overall superiority of LLMs in few-shot medical NER tasks, it is important to note that they still encounter some challenges, such as misidentification, wrong template prediction, etc. Building on previous findings, we introduce a simple and effective method called RT (Retrieving and Thinking), which serves as retrievers, finding relevant examples, and as thinkers, employing a step-by-step reasoning process. Experimental results show that our proposed RT framework significantly outperforms the strong open baselines on the two open medical benchmark datasets222The source code, data are available at https://github.com/ToneLi/RT-Retrieving-and-Thinking..

1 Introduction

Researchers are increasingly interested in applying information extraction to mine a vast quantity of unstructured information from electronic medical records. These techniques can offer valuable perception and generate substantial benefits for clinical research, such as drug discovery Zhang et al. (2021), knowledge graphs building Li et al. (2020); Wu et al. (2023), question answering Li and Ji (2022); Pugachev et al. (2023) and link prediction Li et al. (2022); Zheng et al. (2023). Within the scope of medical text mining, one of the most essential tasks in medical text mining is medical named entity recognition (NER). However, existing supervised medical NER models necessitate a substantial amount of human-annotated (i.e. medical student, doctor) data. To tackle this issue, few-shot techniques have been introduced to perform NER in resource-constrained settings by leveraging auxiliary information or improving the discrimination between different labels.

To address the challenges posed by the scarcity of available medical data, recent studies propose to harness the power of SLMs or LLMs. When designing models based on SLMs, the current methods primarily utilize nearest neighbor inference Yang and Katiyar (2020); Das et al. (2022); Ji et al. (2022), prompt tuning Huang et al. (2022); Liu et al. (2022), contrastive learning Zhang et al. (2022); Das et al. (2022); Li et al. (2023) or generation methods Li and Huang (2023); Wang et al. (2023). On the other hand, when designing the models based on LLMs, the main approach is to employ the in-context learning (ICL) Min et al. (2022) method to stimulate the recognition ability of LLMs. Despite the impressive performance showcased by current works, there remains a lack of comprehensive investigation comparing the performance of LMs in medical few-shot NER. Otherwise, we found researcher tends to design the models to solve the NER problems in the general domain, become of the issues of secret data, even though these models can get high performance. More important, The NER task in the medical domain demand near-perfect (100%) accuracy due to their critical implications for human life. Consequently, determining the suitability of these models for the medical domain presents a challenging problem.

In this paper, our objective is to conduct a comprehensive evaluation of the advantage and disadvantages of SLMs and LLMs on the medical few-shot NER tasks, and then answer the following questions: 1) Which of the two, SLMs or LLMs performs better in the few-shot medical NER task? 2) Is it possible to transfer NER models trained for the general domain to the medical domain? 3) Do the quantity and quality of annotation have any impact on the performance of SLMs and LLMs? 4) How far is LM from 100% few-shot medical NER?

To answer these questions, we conduct an extensive empirical study involving 16 different few-shot NER models spanning from 2018 to 2023. Our study specifically focuses on evaluating these models using two well-established open medical NER datasets, ensuring a standardized and comprehensive analysis. The results show that 1) LLMs demonstrate superior performance over SLMs when the LLMs are provided with high-quality instructions. 2) Our findings indicate that in order to successfully transfer a NER model from the general domain to the medical domain, it is essential to pre-train the NER models specifically in the medical domain. The NER in the medical domain presents the same challenges, including flat/net entity recognition, abbreviation handling, and recognizing long-word entities, etc. The pre-training enables the model to acquire the necessary prior knowledge and context relevant to medical entities. 3) The quantity of samples has a greater impact on SLMs compared to LLMs. Additionally, when we examine the scenario where the training data is only partially annotated, we observe that LLMs exhibit more consistent and stable performance compared to SLMs. Regarding the aspect of quality, our findings contradict certain studies that suggest improved performance for LLMs with retrieved relevant samples instead of using random samples. Our results indicate that the effectiveness of this approach varies depending on the specific datasets employed in the evaluation. Otherwise, we found the effectiveness of LLMs is greatly influenced by the careful selection of appropriate examples and the application of sound entity recognition logic. In our latest findings, we found the choice between using a combination of positive and negative examples or exclusively positive examples has a definite influence on the overall performance of LLMs with ICL. 4) We have identified several factors that contribute to the inability of the current state-of-the-art model to achieve 100% entity recognition. One reason is that NER models face challenges in extracting long, special entities and Out-of-vocabulary entities. Furthermore, the superior performance of LLMs is highly reliant on pre-existing knowledge and contextual understanding, the failure to timely update the knowledge can have a significant impact on the overall performance and effectiveness of the entity recognition system. Otherwise, there are many entities annotated in the training or testing dataset has more than one entity type. For the full analyses, please check Section.7.

Based on some significant findings, we have proposed a novel few-shot entity extractor called RT (Retrieving and Thinking). The main focus of RT is to consider both the selection of appropriate examples and effective entity recognition logic. The basic idea of RT involves utilizing a basic LLM to identify the entity classes present in a given sentence. Subsequently, we employ K-nearest neighbors (KNN) to retrieve relevant examples for the sentences based on the recognized entity classes. Finally, following the approach suggested by Wei et al. (2022), we design a logical entity recognition process that enables the model to identify entities in a step-by-step manner. We perform extensive experiments on two standard medical datasets and 16 NER models for few-shot medical NER demonstrating the superiority of our method over prior state-of-the-art methods. Our contributions are the following:

-

•

We conduct an extensive empirical study comparing SLMs and LLMs on medical few-shot NER tasks across 16 NER models during 2018-2023.

-

•

We propose RT, a new framework that uses Retrieving and Thinking strategies to improve the performance of LLMs with ICLs on medical few-shot NER tasks.

-

•

We conduct a thorough analysis of our method, including an ablation study, demonstrating the ineffectiveness of RT.

2 Related Work

2.1 Small Language Models (SLMs) in Medical Few-Shot NER

Few-shot named entity recognition is a task that aims to predict the label (type) of an entity from insufficient labeled data. Most previous work in the medical domain prefer to directly use medical language models such as ClinicalBERT Huang et al. (2019), BioBERT Lee et al. (2020), and GatorTron Yang et al. (2022) to solve the few-shot NER problem. A few studies Fritzler et al. (2019); Ji et al. (2022) propose to utilize the prototype network Snell et al. (2017) to catch the few-shot medical NER tasks. Inspired by the nearest neighbor inference Wiseman and Stratos (2019), Yang and Katiyar (2020) proposes NNshot and Structshot, which use the nearest neighbor to search for the nearest label of each testing entity. In CONTaiNER Das et al. (2021), the authors use contrastive learning to increase the discrimination for each label and adopt Gaussian embeddings for each token to solve the Anisotropic property in the few-shot NER task. The prompt-based method is also explored in this task, such as Huang et al. (2022) uses the prompt to guide contrastive learning. Zhang et al. (2022) proposes a span-based contrastive learning method to handle the nested NER. In W-Procer Li et al. (2023), the authors use a weighted prototypical contrastive learning method to solve the class collision issue in few-shot medical NER. MetaNER Chen et al. (2023) proposes a novel approach to enhance the performance of NER by pre-training SLMs using instructions and demonstrations. As the popularity of LLMs continues to rise, it becomes crucial to assess the effectiveness of SLMs, so in our work, we provide a thorough investigation to compare the performance of SLMs and LLMs in the medical few-shot NER.

2.2 Large Language Models (LLMs) in Medical Few-Shot NER

There are few works to explore the effectiveness of LLMs in Few-Shot NER, so, in this section, we present relevant works exploring the use of Large Language Models (LLMs) in the general domain. Furthermore, we evaluate the performance of NER models using LLMs specifically in the medical domain. Ma et al. (2023) introduces a filter-then-rank method by combing the advantage of SLMs and LLMs. The SLM is employed to filter the candidate labels for each token, while the instruction method is utilized to predict the final answer, which is performed by the LLM. GPT-NER Wang et al. (2023) presents a novel approach that enhances in-context learning performance by employing entity-level embedding and self-verification. PromptNER Ashok and Lipton (2023) introduces the concept of a chain of thought into Named Entity Recognition. In our work, to improve the medical few-shot NER performance, we propose a method by retrieving the good samples and providing the right entity recognition logic.

3 Small LMs v.s. Large LMs

In this section, we aim to provide a thorough investigation to compare the performance of SLMs and LLMs in medical few-shot NER and answer How far are LMs from 100% Few-shot NER in Medical Domain. To this end, we evaluate 16 NER models on two standard few-shot NER datasets.

3.1 Problem Statement and Setups

In this section, we formalize the task of few-shot named entity recognition (NER) and present a standardized evaluation setup to ensure a fair comparison against previous SOTA models.

3.1.1 Few-Shot NER

In the traditional named entity recognition, given an input sentence of tokens , the NER model intends to assign each token to its corresponding label . In the few-shot NER task, we assume that there are two same label sets of length in the support set (training set) and query set (testing set). The -shot NER task is formally defined as follows: given the input sentence and a set of -shot entities for each label class in , it aims to find the best sequence for . -shot entities set contains entity examples for each entity class in .

3.1.2 Setups

For the evaluation scheme on few-shot NER, the current work Fritzler et al. (2019); Hou et al. (2020) adapted the episode evaluation method which just utilizes episode data from the test set. As shown in Das et al. (2021), sampling the test episodes from the actual test set perturbs the real distribution of testing data that may not reflect the actual performance. So, same as the existing studies Das et al. (2021); Yang and Katiyar (2020); Huang et al. (2022) about Few-shot NER, we employ the original test set of NCBI for our prediction on LLMs. Due to the expensive nature of the GPT API, we have chosen to sample 100 instances from the BC5CDR dataset as the test set for the LLMs. We randomly selected one LLM to evaluate the LLM has a similar performance under the whole test set and sampled 100 instances on BC5CDR. However, for the SLMs, we continue to utilize the original test set of BC5CDR and NCBI. we utilize greedy sampling Yang and Katiyar (2020) to sample the support set. To clarify the tagging scheme setup, we utilize the "IO" tagging scheme. Under this scheme, the "I" indicates that all tokens are inside an entity, while "O" refers to all other tokens.

3.2 Dataset

| Dataset | # Domain | # Class | # Sentence/ # Entity |

| BC5CDR | Medical | 2 | 13,938/ 28,545 |

| NCBI | Medical | 4 | 7,287/ 7,025 |

SLMs and LLMs are evaluated with two medical datasets, including BC5CDR Li et al. (2016) and NCBI Doğan et al. (2014). Due to concerns arising from the I2B2 Stubbs and Uzuner (2015) user agreement, we refrained from conducting a comparison of LLMs within the I2B2 dataset. Among these datasets, NCBI and BC5CDR consist of 798 and 1500 public medical abstracts separately, all of which are annotated with MeSH identifiers. Table 1 shows the detailed statistical information of these three datasets.

3.3 SLMs, LLMs and Evaluation Metrics

3.3.1 Small Language Models

We provide a thorough investigation with several strong baselines based on the state-of-the-art pre-trained few-shot NER models, including both Domain Transfer and Domain non-Transfer methods. Specifically, the Domain Transfer models have been trained on the OntoNotes 5.0 dataset, which comprises medical NER knowledge. The evaluation of these models is conducted using the support sets and test sets from BC5CDR, and NCBI. On the contrary, Domain non-Transfer models differ in that they do not undergo additional pre-training using other medical NER datasets.

we consider the following Domain Transfer models: (1) NNshotYang and Katiyar (2020) is a method that uses nearest neighbor classification. (2) StructshotYang and Katiyar (2020) is an improved version of NNshot that combines nearest neighbor classification, abstract transition matrix, and Viterbi algorithm. (3) ContaiNER Das et al. (2022) adopts contrastive learning to estimate the distributional distance between entities’ vectors, which are represented using Gaussian embeddings. (4) COPNER Huang et al. (2022) leverages contrastive learning with prompt tuning to identify entities. (5) EP-NET Ji et al. (2022) is a NER method based on the dispersedly distributed prototypes network. (6) MetaNER Chen et al. (2023) proposes a novel approach to enhance the performance of NER by pre-training SLMs using instructions and demonstration.

we also consider the following Domain non-Transfer models: (1) ProtoBERT Huang et al. (2022) is a few-shot NER method, which utilizes the prototypical network Snell et al. (2017) and BERT model to infer the entity label. (2) BINDER Zhang et al. (2022) employs span-based contrastive learning to effectively push the entities of different types by optimizing both the entity type encoder and sentence encoder. (3) LM-tagger (BERT Devlin et al. (2018), ClinicalBERT Huang et al. (2019), BioBERT Lee et al. (2020) and GatorTron Yang et al. (2022)) are traditional SLM-based methods that fine-tune the SLM on the support set with the label classifier. Same as Yang and Katiyar (2020); Das et al. (2022); Huang et al. (2022); Ji et al. (2022); Zhang et al. (2022), we evaluate all the models based on the generative evaluation metric, Micro F1.

| 1-shot | 5-shot | ||||

| Approach | BC5CDR | NCBI | BC5CDR | NCBI | |

| SLMs | BERT Devlin et al. (2018) | 10.58 | 12.33 | 35.60 | 26.532 |

| ClinicalBERT Huang et al. (2019) | 19.96 | 8.27 | 39.25 | 24.47 | |

| NNShot Yang and Katiyar (2020) | 32.96 | 11.82 | 39.30 | 16.22 | |

| BioBERT Lee et al. (2020) | 36.78 | 27.19 | 47.04 | 35.88 | |

| StructShot Yang and Katiyar (2020) | 16.09 | 4.63 | 30.97 | 13.89 | |

| ContaiNER Das et al. (2022) | 37.25 | 16.51 | 41.21 | 26.83 | |

| COPNER Huang et al. (2022) | 36.36 | 15.54 | 42.78 | 24.23 | |

| ProtoBERT Huang et al. (2022) | 23.61 | 17.24 | 40.58 | 34.18 | |

| GatorTron Yang et al. (2022) | 26.97 | 35.00 | 55.44 | 37.64 | |

| BINDER Zhang et al. (2023) | 1.86 | 2.95 | 51.79 | 31.95 | |

| W-Procer Li et al. (2023) | 40.26 | 38.86 | 56.02 | 40.90 | |

| MetaNER Chen et al. (2023) | – | 40.01 | – | 44.92 | |

| LLMs | Vanilla ICL Li et al. (2023) | 43.90 | 43.48 | 44.72 | 43.83 |

| PromptNER Ashok and Lipton (2023) | 92.84 | 81.29 | 93.33 | 84.06 | |

| GPT-NER* Wang et al. (2023) | 91.28 | 90.44 | 91.56 | 90.44 | |

| GPT-NER*(self-ve) Wang et al. (2023) | 91.28 | 90.79 | 91.63 | 90.67 | |

3.3.2 Large Language Models

We adapt the current strongest GPT-4333https://platform.openai.com/docs/models/gpt-4 rather than GPT-3.5444https://platform.openai.com/docs/models/gpt-3-5 in our experiments out of two primary reasons: (1) According to recent findings by Li et al. (2023), GPT-4 demonstrates superior performance in few-shot medical named entity recognition (NER) tasks compared to GPT-3.5. (2) GPT-4 is equipped with a more extensive set of pre-existing knowledge and a larger number of training parameters compared to its predecessor, GPT-3.5. By employing the respective source code, we have implemented a set of robust few-shot named entity recognition (NER) methods on the BC5CDR and NCBI datasets. All of these methods are specifically designed based on in-context learning principles, and GPT-4. Note that: The cost of running these models typically ranges from 6$ to 10$ per run by using the GPT-4.

1) Vanilla ICL Li et al. (2023) utilizes a set of common prompts that include instructions and demonstrations (examples). These examples are generated by randomly retrieving demonstrations from a database. 2) PromptNER Ashok and Lipton (2023) follows a similar sample retrieval strategy as Vanilla ICL Li et al. (2023). However, PromptNER incorporates the concept of a chain of thought to enhance entity recognition. 3) GPT-NER*. GPT-NER Wang et al. (2023) uses a method by retrieving the samples using the KNN method to improve the performance of in-context learning. In the original GPT-NER implementation, the authors did not provide distinct symbols for different labels. To address this, we introduced special symbols in the output of our modified version called GPT-NER*. We give an example in Figure 2. 4) GPT-NER* (self-verification) Wang et al. (2023) follows GPT-NER* and adopts a new strategy to verify the accuracy of the recognition.

4 Comparison Results

We evaluate 16 approaches, 12 SLM-base NER models, and 4 LLM-based NER models on 2 open medical NER datasets. We first conduct pivot experiments and observe LLMs without good instructions will reduce the performance of NER. As indicated in Table 2, the Vanilla ICL exhibit lower performance compared to MetaNER and W-PROCER on the NCBI 5-shot and BC5CDR 5-shot datasets, respectively. Conversely, PromptNER and GPT-NER achieve exceptionally high performance on these datasets when compared to the results of SLMs.

Can we transfer the NER models from the general domain to the medical domain. The utilization of BERT models trained on general encyclopedic data poses challenges in accurately identifying medical entities within the medical domain. Despite various research efforts to redesign NER models like NNshot and StructShot, traditional SLM in the general domain still struggle to achieve ideal results in this context. However, pre-training models specifically on medical datasets, such as BioBERT, have shown promising performance gains compared to traditional BERT models. Consequently, researchers have focused on enhancing medical NER performance by pre-training models in the medical domain, as observed in projects like GatorTron and MetaNER. This suggests that equipping language models with domain-specific prior knowledge is crucial for improving NER performance.

SLMs are more susceptible to the amount of training data. From Table 2, we can see that the quantity of samples has a greater impact on SLMs compared to LLMs. As an illustration, consider GPT-NER, which demonstrates comparable performance on both the NCBI 1-shot and 5-shot datasets. However, in the case of SLMs like MetaMER and ProtoBERT, the performance on the 5-shot dataset significantly surpasses that of the model performance on the 1-shot dataset. It shows that the SLMs are more susceptible to the amount of training data.

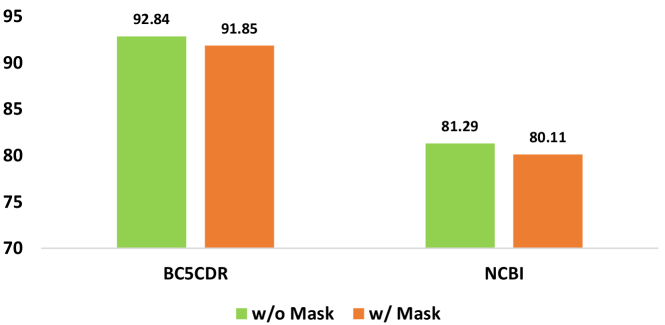

Handling problems related to partially annotated datasets is comparatively easier for LLM.

.

In the study conducted by Li et al. (2023), they experimented by masking certain labeled entities to simulate a partially annotated dataset. The authors observed a 3-4 point decrease in NER performance before and after the mask operation. So in this paper, to test the ability of LLM, we mask the labeled entities on the 5-shot dataset, to make sure each label just has one entity, and then compare the performance of LLMs on the masked dataset and source 1-shot dataset. We use PromptNER in this experiment, and on the dataset BC5CDR and NCBI. The results are shown in Figure. 1. The experiment revealed that the partially annotated dataset had a minimal impact on the LLM.

5 More Analysis on LLMs

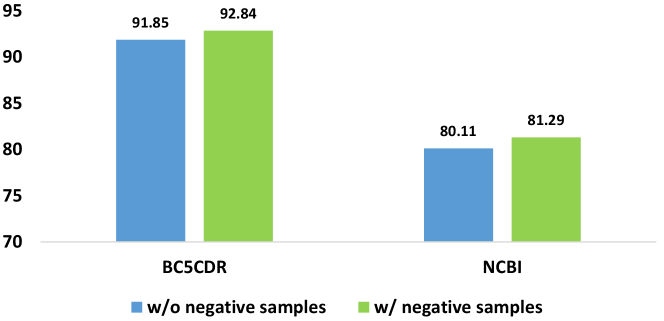

5.1 Positive and Negative Samples in the Chain-of-Thought

Certain LLMs, such as PromptNER, which emphasizes the utilization of the chain of thought in the NER task, incorporate a design that includes both positive and negative samples within the instructions. As shown in Figure 2, the positive example is "Apomorphine | True | as it is Chemical" and the negative example is "impact | False | as it is a verb". It is important to evaluate the necessary of using the negative samples. In this work, we set a simple experiment by comparing the model performance with the model that just uses the positive samples. As shown in Figure 3, the results show that the performance of the model using the negative sample and positive samples is better than the model just using the positive samples. However, this evaluation is based on the assumption that the model solely employs a chain-of-thought strategy to enhance its generation output. In more intricate scenarios, further examination and discussion will be discussed in the future.

5.2 The Influence of Different Output Examples

In Figure. 2, we give a detailed example of output on different ICL strategies, Vanilla ICL, the chain of thought, and the tree of thought Yao et al. (2023). For the idea of the tree of thought, we design the examples (samples) in the instruction as shown on the left of Figure 2. We have identified that the reason for the poorer performance on Vanilla ICL is the model’s difficulty in predicting the dictionary symbol, such as "", and"". By the comparison result in Figure 4 and Table 2, we found the chain of thought has a better performance than the tree of thought. The reason is that the tree of thought is more suitable for the complex reasoning task, such as mathematical calculation. The impressive results achieved by PromptNER and GPT-NER also highlight the superiority of the chain of thought and KNN retrieval method in the context of medical few-shot NER tasks.

5.3 LLMs are good for labeling entities, but not good for extracting entities

| 1-shot | 5-shot | |||

| BC5CDR | NCBI | BC5CDR | NCBI | |

| P1 | 92.84 | 81.29 | 93.33 | 84.06 |

| P2 | 99.06 | 95.92 | 99.06 | 95.92 |

The NER process consists of two crucial steps: entity recognition and entity labeling. In our study, as shown in Table 3, we have demonstrated that LLMs exhibit a strong capability for accurately labeling entities by comparing the results of P1 and P2. However, we have observed that recognizing the surface name or identifying the explicit mentions of relevant entities within a sentence can be challenging for LLMs. This particular finding is being left for further research.

6 Our Method

Based on the findings mentioned above, we introduce a novel approach called RT (Retrieving and Thinking) and present it in Figure 5. The RT method comprises two primary steps: (1) retrieving the most pertinent examples for the given test sentence, which are incorporated as part of the instruction in the ICL. This step is accomplished through the process of Retrieving. (2) guiding LLM to recognize the entity gradually, demonstrating this progression as Thinking. In the following sections, we provide a comprehensive explanation of each component.

| 1-shot | 5-shot | |||

| Approach | BC5CDR | NCBI | BC5CDR | NCBI |

| PromptNER Ashok and Lipton (2023) | 92.84 | 81.29 | 93.33 | 84.06 |

| GPT-NER* Wang et al. (2023) | 91.28 | 90.44 | 91.56 | 90.44 |

| GPT-NER*(self-ve) Wang et al. (2023) | 91.28 | 90.79 | 91.63 | 90.67 |

| RT (Our method) | 93.50 | 91.56 | 93.26 | 91.76 |

6.1 Retrieving

As depicted in Figure 5, the process of obtaining relevant examples involves two distinct steps. The first step is represented by the red line in Figure 5, while the second step is indicated by the blue line in Figure 5.

In the initial step, we employ a random selection of LLMs to determine the labels that correspond to the test sentence. For this purpose, we utilize the Vanilla ICL method proposed by Li et al. (2023). In Figure 5, the relevant labels about the input sentence are Chemical and Disease. Subsequently, we proceed to obtain candidate sentences (instances) for each label from the development dataset. This is done by considering sentences that contain an entity labeled as . So the candidate sentences for label can be represented as , where represents the number of candidate sentences for a given label .

In the subsequent step, we begin by embedding the input sentence into its corresponding sentence embedding . Additionally, we generate embeddings for the candidate sentences, denoted as . Next, we calculate the similarity between the sentence embedding and each candidate embedding for every label . Based on these similarity scores, we select the top most similar candidate sentences and add them to the retrieved sentence set for the input sentence. To ensure that each label has a limited number of entities (either one or five), we utilize the Greedy Sampling approach to select the final examples from the retrieved sentence set.

6.2 Thinking

In the implementation of this step, we incorporate thinking examples within the instruction, as depicted in Figure 5. These thinking examples aid the Language Labeling Model (LLM) in gradually recognizing medical entities. Specifically, following a similar approach as PromptNER Ashok and Lipton (2023), the first step involves making a judgment as to whether a particular entity belongs to the candidate labels. Subsequently, in the second step, the LLM provides a rationale explaining why the entity is associated with a specific label.

6.3 Main Results

Table 4 presents the experimental results of various approaches based on the Micro-F1. We have the following observations: (1) RT has an improved performance on BC5CDR-1-shot, NCBI-1-shot, NCBI-5-shot over the best baselines GPT-NER and PromptNER, and is almost neck to neck on BC5CDR-5-shot. Overall, RT outperforms or achieves comparable exact-match F1 performances to the other methods, demonstrating the effectiveness of our proposed method. (2) Our method evaluates the effectiveness by retrieving a good example and guiding the LLM to extract the entity by the right logic. (3) In our method, we observed that RT achieves better performance on the NCBI dataset when utilizing only positive examples (Choice 2 in Figure 5). Conversely, for the BC5CDR dataset, Choice 1 proves to be more effective. These findings indicate that different datasets have their own distinct examples that yield optimal performance. Therefore, it is crucial to adapt the choice of examples based on the specific dataset being addressed. By understanding and leveraging dataset-specific examples, we can enhance the performance and applicability of the RT method.

7 Error Analysis

We conducted an additional manual analysis to determine the reasons why RT fails to achieve 100% accuracy in medical entity recognition on the test sets of BC5CDR and NCBI. This analysis was performed separately for the 1-shot and 5-shot support sets. We have categorized the findings into different categories, and examples for each category are presented in Table 5.

-

•

Unable to extract entities: Our analysis indicates that RT struggles to comprehend the semantic information of input sentences and accurately identify the corresponding entities.

-

•

Misidentification: RT often struggles to recognize and generate the entire entity, instead only producing a partial representation of the gold entity.

-

•

Class collision: During our analysis, we observed that the test data is only partially annotated, which prevents the RT gets 100% entity recognition.

-

•

Multi-label entities: During our analysis, we observed that some entities have more than one label.

-

•

Unable to generate symbols: Despite RT’s ability to extract complex entities like severe combined immunodeficiency syndrome, it faces difficulties in generating special symbols within certain entities. such as the gold entity is B-cell non-Hodgkin’s lymphoma, the predicted entity is B-cell non-Hodgkin\"s lymphoma.

-

•

Wrong Template Prediction: Despite being provided with output templates and examples, RT still generates a considerable number of output errors that are not based on the provided template.

| Dataset | Unable to extract entities: | |

| NCBI | Test sentence: | Myotonic dystrophy protein kinase is involved in the modulation… |

| gold entities | Myotonic dystrophy, muscular disorder, DM | |

| predicted entities | DM | |

| Misidentification: | ||

| NCBI | gold entities | breast malignancies |

| predicted entity: | breast | |

| NCBI | gold entities | non-familial cancers" |

| predicted entity: | cancers | |

| BC5CDR | gold entities | ate-onset scleroderma renal crisis |

| predicted entity: | scleroderma renal crisis | |

| Class collision: | ||

| BC5CDR | error description: | acute cardiotoxicity is not annotated |

| Multi-label entities: | ||

| NCBI | situation description: | the entity DM has two labels SpecificDisease and Modifier |

| Unable to generate symbols: | ||

| BC5CDR | gold entity: | B-cell non-Hodgkin’s lymphoma |

| predicted entity: | B-cell non-Hodgkin\"s lymphoma | |

| Wrong template prediction: | ||

| NCBI | gold template: | </M>WD|True| as it is Modifier<M> |

| predicted template: | </M>WD|True|as it is Modifier<M> cannot be homologous to </M>" | |

8 Conclusion

We have conducted an extensive empirical study comparing 16 SLMs and LLMs on two open-domain medical NER datasets. We show that LLMs are good few-shot medical NER extractors with good examples and reasonable extraction logical. Building on some important findings, we propose a RT (Retrieving and Thinking) method to improve the performance of medical NER. We also found it is true that LLMs may encounter difficulties in extracting entities with 100% accuracy. There are several reasons for this, including misidentification, wrong template prediction, etc.

Acknowledgements

This work was supported by the National Institutes of Health’s National Center for Complementary and Integrative Health grant number R01AT009457 and National Institute on Aging grant number R01AG078154. The content is solely the responsibility of the authors and does not represent the official views of the National Institutes of Health.

References

- Ashok and Lipton (2023) Dhananjay Ashok and Zachary C Lipton. 2023. Promptner: Prompting for named entity recognition. arXiv preprint arXiv:2305.15444.

- Chen et al. (2023) Jiawei Chen, Yaojie Lu, Hongyu Lin, Jie Lou, Wei Jia, Dai Dai, Hua Wu, Boxi Cao, Xianpei Han, and Le Sun. 2023. Learning in-context learning for named entity recognition. arXiv preprint arXiv:2305.11038.

- Das et al. (2021) Sarkar Snigdha Sarathi Das, Arzoo Katiyar, Rebecca J Passonneau, and Rui Zhang. 2021. Container: Few-shot named entity recognition via contrastive learning. arXiv preprint arXiv:2109.07589.

- Das et al. (2022) Sarkar Snigdha Sarathi Das, Arzoo Katiyar, Rebecca J Passonneau, and Rui Zhang. 2022. Container: Few-shot named entity recognition via contrastive learning. arXiv preprint arXiv:2109.07589.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

- Doğan et al. (2014) Rezarta Islamaj Doğan, Robert Leaman, and Zhiyong Lu. 2014. Ncbi disease corpus: a resource for disease name recognition and concept normalization. Journal of biomedical informatics, 47:1–10.

- Fritzler et al. (2019) Alexander Fritzler, Varvara Logacheva, and Maksim Kretov. 2019. Few-shot classification in named entity recognition task. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, pages 993–1000.

- Hou et al. (2020) Yutai Hou, Wanxiang Che, Yongkui Lai, Zhihan Zhou, Yijia Liu, Han Liu, and Ting Liu. 2020. Few-shot slot tagging with collapsed dependency transfer and label-enhanced task-adaptive projection network. arXiv preprint arXiv:2006.05702.

- Huang et al. (2019) Kexin Huang, Jaan Altosaar, and Rajesh Ranganath. 2019. Clinicalbert: Modeling clinical notes and predicting hospital readmission. arXiv preprint arXiv:1904.05342.

- Huang et al. (2022) Yucheng Huang, Kai He, Yige Wang, Xianli Zhang, Tieliang Gong, Rui Mao, and Chen Li. 2022. Copner: Contrastive learning with prompt guiding for few-shot named entity recognition. In Proceedings of the 29th International conference on computational linguistics, pages 2515–2527.

- Ji et al. (2022) Bin Ji, Shasha Li, Shaoduo Gan, Jie Yu, Jun Ma, and Huijun Liu. 2022. Few-shot named entity recognition with entity-level prototypical network enhanced by dispersedly distributed prototypes. arXiv preprint arXiv:2208.08023.

- Lee et al. (2020) Jinhyuk Lee, Wonjin Yoon, Sungdong Kim, Donghyeon Kim, Sunkyu Kim, Chan Ho So, and Jaewoo Kang. 2020. Biobert: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics, 36(4):1234–1240.

- Li et al. (2016) Jiao Li, Yueping Sun, Robin J Johnson, Daniela Sciaky, Chih-Hsuan Wei, Robert Leaman, Allan Peter Davis, Carolyn J Mattingly, Thomas C Wiegers, and Zhiyong Lu. 2016. Biocreative v cdr task corpus: a resource for chemical disease relation extraction. Database, 2016.

- Li et al. (2022) Mingchen Li, Junfan Chen, Samuel Mensah, Nikolaos Aletras, Xiulong Yang, and Yang Ye. 2022. A hierarchical n-gram framework for zero-shot link prediction. arXiv preprint arXiv:2204.10293.

- Li and Huang (2023) Mingchen Li and Lifu Huang. 2023. Understand the dynamic world: An end-to-end knowledge informed framework for open domain entity state tracking. arXiv preprint arXiv:2304.13854.

- Li and Ji (2022) Mingchen Li and Jonathan Shihao Ji. 2022. Semantic structure based query graph prediction for question answering over knowledge graph. arXiv preprint arXiv:2204.10194.

- Li et al. (2023) Mingchen Li, Yang Ye, Jeremy Yeung, Huixue Zhou, Huaiyuan Chu, and Rui Zhang. 2023. W-procer: Weighted prototypical contrastive learning for medical few-shot named entity recognition. arXiv preprint arXiv:2305.18624.

- Li et al. (2020) Mingchen Li, Zili Zhou, and Yanna Wang. 2020. Multi-fusion chinese wordnet (mcw): Compound of machine learning and manual correction. arXiv preprint arXiv:2002.01761.

- Liu et al. (2022) Andy T Liu, Wei Xiao, Henghui Zhu, Dejiao Zhang, Shang-Wen Li, and Andrew Arnold. 2022. Qaner: Prompting question answering models for few-shot named entity recognition. arXiv preprint arXiv:2203.01543.

- Ma et al. (2023) Yubo Ma, Yixin Cao, YongChing Hong, and Aixin Sun. 2023. Large language model is not a good few-shot information extractor, but a good reranker for hard samples! arXiv preprint arXiv:2303.08559.

- Min et al. (2022) Sewon Min, Xinxi Lyu, Ari Holtzman, Mikel Artetxe, Mike Lewis, Hannaneh Hajishirzi, and Luke Zettlemoyer. 2022. Rethinking the role of demonstrations: What makes in-context learning work? arXiv preprint arXiv:2202.12837.

- Pugachev et al. (2023) Alexander Pugachev, Ekaterina Artemova, Alexander Bondarenko, and Pavel Braslavski. 2023. Consumer health question answering using off-the-shelf components. In European Conference on Information Retrieval, pages 571–579. Springer.

- Snell et al. (2017) Jake Snell, Kevin Swersky, and Richard Zemel. 2017. Prototypical networks for few-shot learning. Advances in neural information processing systems, 30.

- Stubbs and Uzuner (2015) Amber Stubbs and Özlem Uzuner. 2015. Annotating longitudinal clinical narratives for de-identification: The 2014 i2b2/uthealth corpus. Journal of biomedical informatics, 58:S20–S29.

- Wang et al. (2023) Shuhe Wang, Xiaofei Sun, Xiaoya Li, Rongbin Ouyang, Fei Wu, Tianwei Zhang, Jiwei Li, and Guoyin Wang. 2023. Gpt-ner: Named entity recognition via large language models. arXiv preprint arXiv:2304.10428.

- Wei et al. (2022) Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Ed Chi, Quoc Le, and Denny Zhou. 2022. Chain of thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903.

- Wiseman and Stratos (2019) Sam Wiseman and Karl Stratos. 2019. Label-agnostic sequence labeling by copying nearest neighbors. arXiv preprint arXiv:1906.04225.

- Wu et al. (2023) Xuehong Wu, Junwen Duan, Yi Pan, and Min Li. 2023. Medical knowledge graph: Data sources, construction, reasoning, and applications. Big Data Mining and Analytics, 6(2):201–217.

- Yang et al. (2022) Xi Yang, Aokun Chen, Nima PourNejatian, Hoo Chang Shin, Kaleb E Smith, Christopher Parisien, Colin Compas, Cheryl Martin, Anthony B Costa, Mona G Flores, et al. 2022. A large language model for electronic health records. npj Digital Medicine, 5(1):194.

- Yang and Katiyar (2020) Yi Yang and Arzoo Katiyar. 2020. Simple and effective few-shot named entity recognition with structured nearest neighbor learning. arXiv preprint arXiv:2010.02405.

- Yao et al. (2023) Shunyu Yao, Dian Yu, Jeffrey Zhao, Izhak Shafran, Thomas L Griffiths, Yuan Cao, and Karthik Narasimhan. 2023. Tree of thoughts: Deliberate problem solving with large language models. arXiv preprint arXiv:2305.10601.

- Zhang et al. (2021) Rui Zhang, Dimitar Hristovski, Dalton Schutte, Andrej Kastrin, Marcelo Fiszman, and Halil Kilicoglu. 2021. Drug repurposing for covid-19 via knowledge graph completion. Journal of biomedical informatics, 115:103696.

- Zhang et al. (2022) Sheng Zhang, Hao Cheng, Jianfeng Gao, and Hoifung Poon. 2022. Optimizing bi-encoder for named entity recognition via contrastive learning. arXiv preprint arXiv:2208.14565.

- Zhang et al. (2023) Sheng Zhang, Hao Cheng, Jianfeng Gao, and Hoifung Poon. 2023. Optimizing bi-encoder for named entity recognition via contrastive learning. arXiv preprint arXiv:2208.14565.

- Zheng et al. (2023) Kai Zheng, Xin-Lu Zhang, Lei Wang, Zhu-Hong You, Bo-Ya Ji, Xiao Liang, and Zheng-Wei Li. 2023. Sprda: a link prediction approach based on the structural perturbation to infer disease-associated piwi-interacting rnas. Briefings in Bioinformatics, 24(1):bbac498.