How do Minimum-Norm Shallow Denoisers Look in Function Space?

Abstract

Neural network (NN) denoisers are an essential building block in many common tasks, ranging from image reconstruction to image generation. However, the success of these models is not well understood from a theoretical perspective. In this paper, we aim to characterize the functions realized by shallow ReLU NN denoisers — in the common theoretical setting of interpolation (i.e., zero training loss) with a minimal representation cost (i.e., minimal norm weights). First, for univariate data, we derive a closed form for the NN denoiser function, find it is contractive toward the clean data points, and prove it generalizes better than the empirical MMSE estimator at a low noise level. Next, for multivariate data, we find the NN denoiser functions in a closed form under various geometric assumptions on the training data: data contained in a low-dimensional subspace, data contained in a union of one-sided rays, or several types of simplexes. These functions decompose into a sum of simple rank-one piecewise linear interpolations aligned with edges and/or faces connecting training samples. We empirically verify this alignment phenomenon on synthetic data and real images.

1 Introduction

The ability to reconstruct an image from a noisy observation has been studied extensively in the last decades, as it is useful for many practical applications (e.g., Hasinoff et al. (2010)). In recent years, Neural Network (NN) denoisers commonly replace classical expert-based approaches as they achieve substantially better results than the classical approaches (e.g., Zhang et al. (2017)). Beyond this natural usage, NN denoisers also serve as essential building blocks in a variety of common computer vision tasks, such as solving inverse problems (Zhang et al., 2021) and image generation (Song and Ermon, 2019; Ho et al., 2020). To better understand the role of NN denoisers in such complex applications, we first wish to theoretically understand the type of solutions they converge to.

In practice, when training denoisers, we sample multiple noisy samples for each clean image and minimize the Mean Squared Error (MSE) loss for recovering the clean image. Since we sample numerous noisy samples per clean sample, the number of training samples is typically larger than the number of parameters in the network. Interestingly, even in such an under-parameterized regime, the loss has multiple global minima corresponding to distinct denoiser functions which achieve zero loss on the observed data. To characterize these functions, we study, similarly to previous works (Savarese et al., 2019; Ongie et al., 2020), the shallow NN solutions that interpolate the training data with minimal representation cost, i.e., where the -norm of the weights (without biases and skip connections) is as small as possible. This is because we converge to such min-cost solutions when we minimize the loss with a vanishingly small regularization on these weights.

We first examine the univariate input case: building on existing results (Hanin, 2021), we characterize the min-cost interpolating solution and its generalization to unseen data. Next, we aim to extend this analysis to the multivariate case. However, this is challenging, since, to the best of our knowledge, there are no results that explicitly characterize these min-cost solutions for general multivariate shallow NNs — except in two basic cases. In the first case, the input data is co-linear (Ergen and Pilanci, 2021). In the second case, the input samples are identical to their target outputs, so the trivial min-cost solution is the identity function. The NN denoisers’ training regime is ‘near’ the second case: there, the input samples are noisy versions of the clean target outputs. Interestingly, we find that this regime leads to non-trivial min-cost solutions far from identity — even with an infinitesimally small input noise. We analytically investigate these solutions here.

Our Contributions.

We study the NN solutions in the setting of interpolation of noisy samples with min-cost, in a practically relevant “low noise regime” where the noisy samples are well clustered. In the univariate case,

-

•

We find a closed-form solution for the minimum representation cost NN denoiser. Then, we prove this solution generalizes better than the empirical minimum MSE (eMMSE) denoiser.

-

•

We prove this min-cost NN solution is contractive toward the clean data points, that is, applying the denoiser necessarily reduces the distance of a noisy sample to one of the clean samples.

In the multivariate case,

-

•

We derive a closed-form solution for the min-cost NN denoiser in multivariate case under various assumptions on the geometric configuration of the clean training samples. To the best of our knowledge, this is the first set of results to explicitly characterize a min-cost interpolating NN in a non-basic multivariate setting.

-

•

We illustrate a general alignment phenomenon of min-cost NN denoisers in the multivariate setting: the optimal NN denoiser decomposes into a sum of simple rank-one piecewise linear interpolations aligned with edges and/or faces connecting clean training samples.

2 Preliminaries and problem setting

The denoising problem.

Let be a noisy observation of , such that where and are statistically independent, and . Commonly, this noise is Gaussian with covariance matrix . The ultimate goal of a denoiser is to minimize the MSE loss over the joint probability distribution of the data and the noisy observation (“population distribution”), i.e., to minimize

| (1) |

The well-known optimal solution for (1) is the minimum mean square error (MMSE) denoiser, i.e.,

| (2) |

Since we do not have access to the distribution of the data, and hence not to the posterior distribution, we rely on a finite amount of clean data in order to learn a good approximation for the MMSE estimator. One approach is to assume an empirical data distribution and derive the optimal solution of (1), i.e., the empirical minimum mean square error (eMMSE) denoiser,

| (3) |

If the noise is Gaussian with a covariance of , an explicit solution to the eMMSE is given by

| (4) |

An alternative approach to computing the eMMSE directly is to draw noisy samples for each clean data point, as , where are independent and identically distributed, and to minimize the following loss function

| (5) |

Denoiser model and algorithms.

In practice, we approximate the optimal denoiser using a parametric model , typically a NN. We focus on a shallow ReLU network model with a skip connection of the form

| (6) |

where with and . We train the model on a finite set of clean data points . The common practical training method is based on an online approach. First, we sample a random batch (with replacement) from the data . Then, for each clean data point , we draw a noisy sample , where are independent of the clean data points and other noise samples. At each iteration out of iterations, we update the model parameters according to a stochastic gradient descent rule, with a vanishingly small regularization term , that is,

| (7) |

Another training method (Chen et al., 2014) is based on an offline approach. We sample noisy sample for each clean data point and minimize (5) plus a regularization term

| (8) |

Similarly to previous works (Savarese et al., 2019; Ongie et al., 2020), we assume an penalty on the weights, but not on the biases and skip connections, i.e.,

| (9) |

Low noise regime.

In this paper, we study the solution of the NN denoiser when the clusters of noisy samples around each clean point are well-separated, a setting which we refer to as the “low noise regime”. This is a rather relevant regime since denoisers are practically used when the noise level is mild. Indeed, common image-denoising benchmarks test on low (but not negligible) noise levels. For instance, in the commonly used denoising benchmark BSD68 (Roth and Black, 2009), the noise level is in the low noise regime.111The minimum distance between two images in BSD68 is about while the image resolution is . Also, the norm of the noise concentrates around the value of . Therefore, the clusters of noisy samples around each clean point are generally well-separated. Moreover, this setting is important, for example, in diffusion-based image generation, since at the end of the reverse denoising process, new images are sampled by denoising smaller and smaller noise levels.222Interestingly, it was suggested that the “useful” part of the diffusion dynamics happens only below some critical noise level (Raya and Ambrogioni, 2023).

3 Basic properties of neural network denoisers

Offline v.s. online NN solutions.

NN denoisers are traditionally trained in an online fashion (7), using a finite amount of iterations. Consequently, only a finite number of noisy samples are used for each clean data point. We empirically observe that the solutions in the offline and online settings are similar. Specifically, in the univariate case, we show in Figure 1 that denoisers based on offline and online loss functions converge to indistinguishable solutions. For the multivariate case, we observe (Figure 11 in Appendix D) that the offline and online solutions achieve approximately the same test MSE when trained on a subset of the MNIST dataset. The comparison is made using the same number of iterations for both training methods, while using much less noisy samples in the offline setting. Evidently, this lower number of samples does not significantly affect the generalization error. Hence, in the rest of the paper, we focus on offline training (i.e., minimizing the offline loss ), as it defines an explicit loss function with solutions that can be theoretically analyzed, as in (Savarese et al., 2019; Ongie et al., 2020).

The empirical MMSE denoiser.

The law of large numbers implies that the denoiser minimizing the offline loss approaches the eMMSE estimator in the limit of infinitely many noisy samples,

| (10) |

Therefore, it may seem that for a reasonable number of noise samples , a large enough model, and small enough regularization, the denoiser we get by minimizing the offline loss (6) will also be similar to the eMMSE estimator. However, Figure 1 shows that the eMMSE solution and the NN solutions (both online and offline) are quite different. The eMMSE denoiser has a much sharper transition and maps almost all inputs to a value of a clean data point. This is because in the case of low noise the eMMSE denoiser (4) approximates the one nearest-neighbor (-NN) classifier, i.e.,

| (11) |

In contrast, the NN denoiser maps each noisy sample to its corresponding clean sample only in a limited “noise ball” around the clean point, and interpolates near-linearly between the “noise balls”. Hence, we may expect that the smoother NN denoiser typically generalizes better than the eMMSE denoiser. We prove that this is indeed true for the univariate case in Section 4.

Why the NN denoiser does not converge to the eMMSE denoiser? Note that the limit in (10) is not practically relevant for the low-level noise regime, since we need an exponentially large in order to converge in this limit. For example, in the case of univariate Gaussian noise, we have that . Therefore, during training, we effectively observe only noisy samples that are in a bounded interval of size around each clean sample (see Figure 1). In other words, in the low-noise regime and for non-exponential , there is no way to distinguish if the noise is sampled from some distribution with limited support of from a Gaussian distribution. The denoiser minimizing the loss with respect to a bounded-support distribution can be radically different from the eMMSE denoiser in the regions outside the “noise balls” surrounding the clean samples, where the denoiser function is not constrained by the loss. This leads to a large difference between the NN denoiser and the MMSE estimator.

Alternatively, one may suggest that the NN denoiser does not converge to the eMMSE denoiser due to an approximation error (i.e., the shallow NN’s model capacity is too small to approximate the MMSE denoiser). Nevertheless, we provide empirical evidence indicating it is not the case. Specifically, recall that in the low noise regime, the eMMSE denoiser tends to the nearest-neighbor classifier, and such a solution does not generalize well to test data. Thus, if the NN denoiser would have converged to the eMMSE solution, then its test error would have increased with the network size, in contrast to what we observe in Figure 12 (Appendix D).

Therefore, in order to approximate the eMMSE with a NN, it seems we must have an exponentially large . Alternatively, we may converge to the eMMSE if we use a loss function marginalized over the Gaussian noise. This idea was previously suggested by Chen et al. (2014), with the goal of effectively increasing the number of noisy samples and thus improving the training performance of denoising autoencoders. Therein, this improvement was obtained by approximating the marginalized loss function by a Taylor series expansion. However, for shallow denoisers, we may actually obtain an explicit expression for this marginalized loss, without any approximation. Specifically, if we assume for simplicity, that the network does not have a linear unit () and its bias terms are zero (), then the marginalized loss for Gaussian noise, derived in Appendix A, is given by

| (12) |

where and , are defined in Appendix A. NN denoisers trained over this loss function will thus tend to the eMMSE solution as the network size is increased. However, as we explained above, this is not necessarily desirable, so we only mention (12) to show exact marginalization is feasible.

Regularization biases toward specific neural network denoisers.

To further explore the converged solution for offline training, we note that the offline loss function allows the network to converge to a zero-loss solution. This is in contrast to online training for which each batch leads to new realizations of noisy samples, and thus the training error is never exactly zero. Specifically, consider the low noise regime (well-separated noisy clusters). Then, the network can perfectly fit all the noisy samples using a finite number of neurons (see Section 4 for a more accurate description in the univariate case). Importantly, there are multiple ways to cluster the noisy data points with such neurons, and so there are multiple global training loss minima that the network can achieve with zero loss, each with a different generalization capability. In contrast to the standard case considered in the literature, this holds even in the under-parameterized case (where , the total number of noisy samples, is larger than the number of parameters).

Since there are many minima that perfectly fit the training data, we converge to specific minima which also minimize the regularization we use (even though we assumed it is vanishing). Specifically, in the limit of vanishing regularization , the minimizers of also minimize the representation cost.

Definition 1.

Let denote a shallow ReLU network of the form (6). For any function realizable as a shallow ReLU network, we define its representation cost as

| (13) |

and a minimizer of this cost, i.e. the ‘min-cost’ solution, as

| (14) |

where the second equality in (13) holds due to the -homogeneity of the ReLU activation function, and since the bias terms are not regularized (see (savarese2019infinite, Appendix A)). In the next sections, we examine which function we obtain by minimizing the representation cost in various settings.

4 Closed form solution for the NN denoiser function — univariate data

In this section, we prove that NN denoisers that minimize for univariate data have the specific piecewise linear form observed in Figure 1, and we discuss the properties of this form. We observe clean univariate data points , s.t. , and noisy samples (drawn from some known distribution) for each clean data point, such that . We denote by the maximal noise seen for data point , and by the minimal noise seen for data point , i.e.,

| (15) |

and assume the following,

Assumption 1.

Assume the data is well-separated after the addition of noise, i.e.,

| (16) |

and .

So we can state the following,

Proposition 1.

For all datasets such that Assumption 1 holds, the unique minimizer of is

| (17) |

The proof (which is based on Theorem 1.2. in (hanin2021ridgeless)) can be found in Appendix B.1. As can be seen from Figure 1, the empirical simulation matches Proposition 1. 333Notice that the training points in Figure 1 are used in the online setting (7) and in the offline setting (8) we observe less noisy samples. Proposition 1 states that (17) is a closed-form solution for (8) with minimal representation cost. Notice that the minimal number of neurons needed to represent using is , which is less than the number of the total training samples for .

In the case of univariate data, we can prove that the representation cost minimizer (linear interpolation) generalizes better than the optimal estimator over the empirical distribution (eMMSE) for low noise levels.

Theorem 1.

Let where and where and are statistically independent. Then for all datasets such that Assumption 1 holds, and for all density probability distributions with bounded second moment such that for all , the following holds,

See Appendix B.2 for the proof. We may deduce from Theorem 1 that for each density probability distribution there exists a critical noise level for which the the representation cost minimizer has strictly lower MSE than the eMMSE for all smaller noise levels (this is because the MSE is a continuous function of ). The critical noise level can change significantly depending on . For example, if has a high “mass” in between the training points then the critical noise level is large. However, if the density function has a low “mass” between the training points then the critical noise level is small. In Appendix D we show the MSE vs. the noise level on MNIST denoiser for NN denoiser and eMMSE denoiser (Figure 13). As can be seen there, the critical noise level in this case is not small ().

Intuitively, the difference between the NN denoiser and the eMMSE denoiser is how they operate on inputs that are not close to any of the clean samples (compared to the noise standard deviation). For such a point, the eMMSE denoiser does not take into account that the empirical distribution of the clean samples does not approximate well their true distribution. Thus, for small noise, it insists on “assigning” it to the closest clean sample point. By contrast, the NN denoiser generalizes better since it takes into account that, far from the clean samples, the data distribution is not well approximated by the empirical sample distribution. Thus, its operation there is near the identity function, with a small contraction toward the clean points, as we discuss next.

Minimal norm leads to contractive solutions on univariate data.

radhakrishnan2018memorization have empirically shown that Auto-Encoders (AE, i.e. NN denoisers without input noise), are locally contractive toward the training samples. Specifically, they showed that the clean dataset can be recovered when iterating the AE output multiple times until convergence. Additionally, they showed that, as we increase the width or the depth of the NN, the network becomes more contractive toward the training examples. In addition, radhakrishnan2018memorization proved that -layer AE models are locally contractive under strong assumptions (the weights of the input layer are fixed and the number of neurons goes to infinity). Next, we prove that a univariate shallow NN denoiser is globally contractive toward the clean data points without using the assumptions used by radhakrishnan2018memorization (i.e., the minimizer optimizes over both layers and has a finite number of neurons).

Definition 2.

We say that is contractive toward a set of points on if there exists a real number such that for any there exists so that

| (18) |

Lemma 1.

is contractive toward the clean training points on .

The proof can be found in Appendix B.3.

5 Minimal norm leads to alignment phenomenon on multivariate data

In the multivariate case, min-cost solutions of (14) are difficult to explicitly characterize. Even in the setting of fitting scalar-valued shallow ReLU networks, explicitly characterizing min-cost solutions under interpolation constraints remains an open problem, except in some basic cases (e.g., co-linear data (ergen2021convex)).

As an approximation to (14) that is more mathematically tractable, we assume the functions being fit are constant and equal to on a closed ball of radius centered at each , i.e., for all , such that the balls do not overlap. Letting denote the ball of radius centered at , we can write this constraint more compactly as . Consider minimizing the representation cost under this constraint:

| (19) |

However, even with this approximation, explicitly describing minimizers of (19) for an arbitrary collection of training samples remains challenging. Instead, to gain intuition, we describe minimizers of (19) assuming the training samples belong to simple geometric structures that yield explicit solutions. Our results reveal a general alignment phenomenon, such that the weights of the representation cost minimizer align themselves with edges and/or faces connecting data points. We also show that approximate solutions of (14) obtained numerically by training a NN denoiser with weight decay match well with the solutions of (19) having exact closed-form expressions.

5.1 Training data on a subspace

In the event that the clean training samples belong to a subspace, we show the representation cost minimizer depends only on the projection of the inputs onto the subspace containing the training data, and its output is also constrained to this subspace.

Theorem 2.

Assume the training samples belong to a linear subspace , and let denote the orthogonal projector onto . Then any minimizer of (19) satisfies for all .

The proof of this result and all others in this section is given in Appendix C.

Note the assumption that the dataset lies on a subpaces is practically relevant, since, in general, large datasets are (approximately) low rank, i.e., lie on a linear subspace (udell2019big). In Appendix D we also validated that common image datasets are (approximately) low rank (Table 1).

Specializing to the case co-linear training data (i.e., training samples belonging to a one-dimensional subspace) the min-cost solution is unique and is described by the following corollary:

Corollary 1.

In other words, the min-cost solution has a particularly simple form in this case: , where is a monotonic piecewise linear function. We call any function of this form a rank-one piecewise linear interpolator. Below we show that many other min-cost solutions can be expressed as superpositions of rank-one piecewise linear interpolators.

5.2 Training data on rays

As an extension of the previous setting, we now consider training data belonging to a union of one-sided rays sharing a common origin. Assuming the rays are well-separated (in a sense made precise below) we prove that the representation cost minimizer decomposes into a sum of rank-one piecewise linear interpolators aligned with each ray.

Theorem 3.

Suppose the training samples belong to a union of rays plus a sample at the origin: where for some unit vector and constants . Assume that the rays make obtuse angles with each other (i.e., for all ). Then the minimizer of (19) is unique and is given by

| (20) |

where has the form of the 1-D minimizer (17) with , .

Additionally, in the Appendix C.2.1 we show that this min-cost solution is stable with respect to small perturbations of the data. In particular, if the training data is perturbed from the rays, the functional form of the min-cost solution only changes slightly, such that the inner and outer-layer weight vectors align with the line segments connecting consecutive data points.

5.3 Special case: training data forming a simplex

Here, we study the representation cost minimizers for training points that form the vertices of a -simplex, i.e., a -dimensional simplex in (e.g., a -simplex is a triangle, a -simplex is a tetrahedron, etc.). As we will show, the angles between vertices of the simplex (e.g., an acute versus obtuse triangle in ) influences the functional form of the min-cost solution.

Our first result considers one extreme where the simplex has one vertex that makes an obtuse angle with all other vertices (e.g., an obtuse triangle for ).

Proposition 2.

Suppose the convex hull of the training points is a -simplex such that forms an obtuse angle with all other vertices, i.e., for all with . Then the minimizer of (19) is unique, and is given by

| (21) |

where , , with , , and for all .

This result is essentially a corollary of Theorem 3, since after translating to be the origin, the vertices of the simplex belong to rays making obtuse angles with each other, where there is exactly one sample per ray. Details of the proof are given in Appendix C.2.

At the opposite extreme, we consider the case where every vertex of the simplex is acute, meaning for all we have for all . In this case, we make following conjecture: the min-cost solution is instead a sum of rank-one piecewise linear interpolators, each aligned orthogonal to a different -dimensional face of the simplex.

Conjecture 1.

Suppose the convex hull of the training points is a -simplex where every vertex of the simplex is acute. Then the minimizer of (19) is unique, and is given by

| (22) |

where: is the projection of onto the unique -dimensional face of the simplex not containing ; is the weighted geometric median of the vertices specified by

, ; and with , , and .

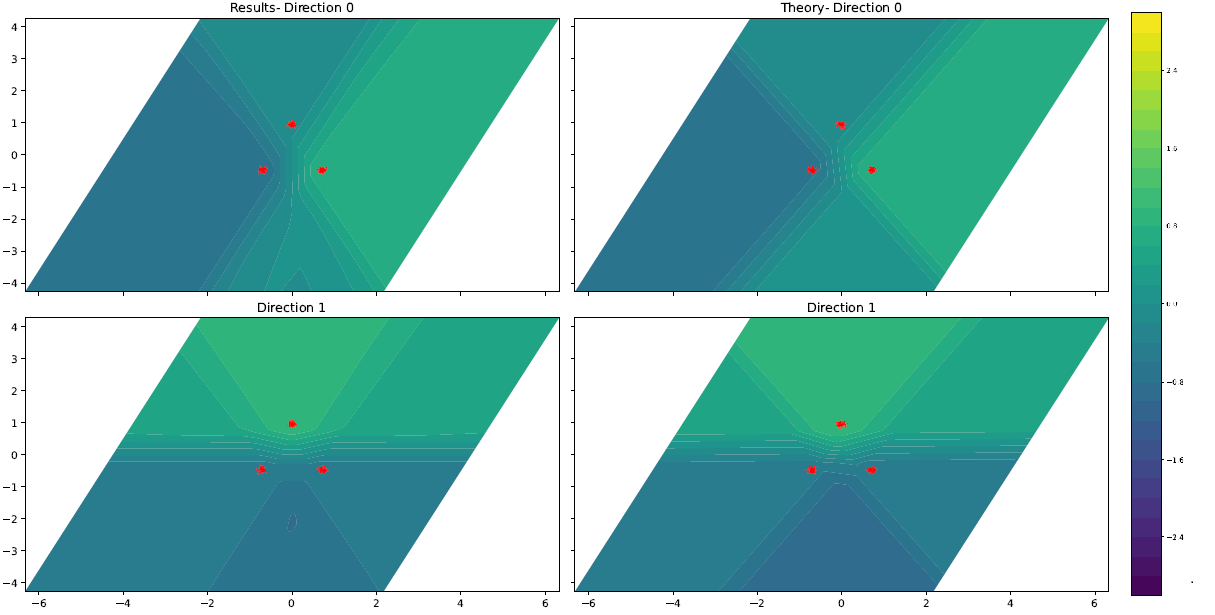

Justification for this conjecture is given in Appendix C.3.2. In particular, we prove that the interpolator given in (86) is a min-cost solution in the special case of three training points whose convex hull is an equilateral triangle. If true in general, this would imply a phase transition behavior in the min-cost solution when the simplex changes from having one obtuse vertex to all acute vertices, such that ReLU boundaries go from being aligned orthogonal to the edges connecting vertices, to being aligned parallel with the simplex faces. Figure 2 illustrates this for training points forming a triangle in dimensions. Moreover, Figure 2 shows that the empirical minimizer obtained using noisy samples and weight decay regularization agrees well with the form of the exact min-cost solution predicted by Proposition 2 and Conjecture 1.

In general, any given vertex of a simplex may make acute angles with some vertices and obtuse angles with others. This case is not covered by the above results. Currently, we do not have a conjectured form of the min-cost solution in this case, and we leave this as an open problem for future work.

6 Related works

Numerous methods have been proposed for image denoising. In last decade NN-based methods achieve state-of-the-art results (zhang2017beyond; zhang2021plug). See (doi:10.1137/23M1545859) for a comprehensive review of image denoising. sonthalia2023training empirically showed a double decent behavior in NN denoisers, and theoretically proved it in a linear model. Similar to a denoiser, an Auto-Encoder (AE) is a NN model whose output dimension equals its input dimension, and is trained to match the output to the input. For AE, the typical goal is to learn an efficient lower-dimensional representation of the samples. radhakrishnan2018memorization proved that a single hidden-layer AE that interpolates the training data (i.e., achieves zero loss), projects the input onto a nonlinear span of the training data. In addition, radhakrishnan2018memorization empirically demonstrated that a multi-layer ReLU AE is locally contractive toward the training samples by iterating the AE and showing that the points converge to one of the training samples. Denoising autoencoders inject noise into the input data in order to learn a good representation (alain2014regularized). The marginalized denoising autoencoder, proposed by pmlr-v32-cheng14, approximates the marginalized loss over the noise (which is equivalent to observing infinitely many noisy samples) by using a Taylor approximation. pmlr-v32-cheng14 demonstrated that by using the approximate marginalized loss we can achieve a substantial speedup in training and improved representation compared to standard denoising AE.

Many recent works aim to characterize function space properties of interpolating NN with minimal representation cost (i.e., min-cost solutions). Building off of the connection between weight decay and path-norm regularization identified in neyshabur2015norm; neyshabur2017exploring, savarese2019infinite showed that the representation cost of a function realizable as a univariate two-layer ReLU network coincides with the -norm of the second derivative of the function. Extensions to the multivariate setting were studied in Ongie2020A, which identified the representation cost of a multivariate function with its -norm, a Banach space semi-norm defined in terms of the Radon transform. Related work has extended the -norm to other activation functions parhi2021banach, vector-valued networks shenouda2023vector, and deeper architectures parhi2022kinds. A separate line of research studies min-cost solutions from a convex duality perspective ergen2021convex, incuding two-layer CNN denoising AEs sahiner2021convex. Recent work also studies properties of min-cost solutions in the case of arbitrarily deep NNs with ReLU activation jacot2022implicit; jacotNeurips.

Despite these advances in understanding min-cost solutions, there are few results explicitly characterizing their functional form. One exception is hanin2021ridgeless, which gives a complete characterization of min-cost solutions in the case of shallow univariate ReLU networks with unregularized bias. This characterization is possible because the univariate representation cost is defined in terms of the 2nd derivative, which acts locally. Therefore, global minimizers can be found by minimizing the representation cost locally over intervals between data points. An extension of these results to the case of regularized bias is studied in boursier2023penalising. In the multivariate setting, the representation cost involves the Radon transform of the function – a highly non-local operation – that complicates the analysis. parhi2021banach prove a representer theorem showing that there always exists a min-cost solution realizable as a shallow ReLU network with finitely many neurons, and ergen2021convex give an implicit characterization of min-cost NNs the solution to a convex optimization problem, and give explicit solutions in the case of co-linear training features. However, to the best of our knowledge, there are no results explicitly characterizing min-cost solutions in the case of non-colinear multivariate inputs, even for networks having scalar outputs.

7 Discussions

Conclusions.

We have explored the elementary properties of NN solutions for the denoising problem, while focusing on offline training of a one hidden-layer ReLU network. When the noisy clusters of the data samples are well-separated, there are multiple networks with zero loss, even in the case of under-parameterization, while having a different representation cost. In contrast, previous theoretical works focused on the over-parametrized regime. In the univariate case, we have derived a closed-form solution to such global minima with minimum representation cost. We also showed that the univariate NN solution generalizes better than the eMMSE denoiser. In the multivariate case, we showed that the interpolating solution with minimal representation cost is aligned with the edges and/or faces connecting the clean data points in several cases.

Limitations.

One limitation of our analysis in the multivariate case is that we assume the denoiser interpolates data on a full -dimensional ball centered at each clean training sample, where is the input dimension. In practical settings, often the number of noisy samples . A more accurate model would be to assume that denoiser interpolates over an -dimensional disc centered at each training sample. This model may still be a tractable alternative to assuming interpolation of finitely many noisy samples. Also, our results relate to NN denoisers trained with explicit weight decay regularization, which is not always used in practice. However, recent work shows that stable minimizers of SGD must have low representation cost mulayoff2021implicit; nacsonimplicit, and so some of our analysis may provide insight for unregularized training, as well. Finally, for mathematical tractability, we focussed on the case of fully-connected ReLU networks with one hidden-layer. Extending our analysis to deeper architectures and convolutional neural networks is an important direction for future work.

Acknowledgments

We thank Itay Hubara for his technical advice and valuable comments on the manuscript. The research of DS was Funded by the European Union (ERC, A-B-C-Deep, 101039436). Views and opinions expressed are however those of the author only and do not necessarily reflect those of the European Union or the European Research Council Executive Agency (ERCEA). Neither the European Union nor the granting authority can be held responsible for them. DS also acknowledges the support of Schmidt Career Advancement Chair in AI. The research of NW was supported by the Israel Science Foundation (ISF), grant no. 1782/22. GO was supported by the National Science Foundation (NSF) CRII award CCF-2153371.

References

- Alain and Bengio [2014] Guillaume Alain and Yoshua Bengio. What regularized auto-encoders learn from the data-generating distribution. The Journal of Machine Learning Research, 15(1):3563–3593, 2014.

- Boursier and Flammarion [2023] Etienne Boursier and Nicolas Flammarion. Penalising the biases in norm regularisation enforces sparsity. arXiv preprint arXiv:2303.01353, 2023.

- Chen et al. [2014] Minmin Chen, Kilian Weinberger, Fei Sha, and Yoshua Bengio. Marginalized denoising auto-encoders for nonlinear representations. In Eric P. Xing and Tony Jebara, editors, Proceedings of the 31st International Conference on Machine Learning, volume 32 of Proceedings of Machine Learning Research, pages 1476–1484, Bejing, China, 22–24 Jun 2014. PMLR. URL https://proceedings.mlr.press/v32/cheng14.html.

- Elad et al. [2023] Michael Elad, Bahjat Kawar, and Gregory Vaksman. Image denoising: The deep learning revolution and beyond—a survey paper. SIAM Journal on Imaging Sciences, 16(3):1594–1654, 2023. doi: 10.1137/23M1545859. URL https://doi.org/10.1137/23M1545859.

- Ergen and Pilanci [2021] Tolga Ergen and Mert Pilanci. Convex geometry and duality of over-parameterized neural networks. The Journal of Machine Learning Research, 22(1):9646–9708, 2021.

- Hanin [2021] Boris Hanin. Ridgeless interpolation with shallow relu networks in is nearest neighbor curvature extrapolation and provably generalizes on lipschitz functions. arXiv preprint arXiv:2109.12960, 2021.

- Hasinoff et al. [2010] Samuel W Hasinoff, Frédo Durand, and William T Freeman. Noise-optimal capture for high dynamic range photography. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 553–560. IEEE, 2010.

- Ho et al. [2020] Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33:6840–6851, 2020.

- Horrace [2015] William C Horrace. Moments of the truncated normal distribution. Journal of Productivity Analysis, 43:133–138, 2015.

- Jacot [2022] Arthur Jacot. Implicit bias of large depth networks: a notion of rank for nonlinear functions. In International Conference on Learning Representations (ICLR), 2022.

- Jacot et al. [2022] Arthur Jacot, Eugene Golikov, Clement Hongler, and Franck Gabriel. Feature learning in l_2-regularized dnns: Attraction/repulsion and sparsity. In Advances in Neural Information Processing Systems (NeurIPS), volume 35, pages 6763–6774, 2022.

- Manjunath and Wilhelm [2021] B. G. Manjunath and Stefan Wilhelm. Moments calculation for the doubly truncated multivariate normal density. Journal of Behavioral Data Science, 1(1):17–33, May 2021. doi: 10.35566/jbds/v1n1/p2. URL https://jbds.isdsa.org/index.php/jbds/article/view/9.

- Mulayoff et al. [2021] Rotem Mulayoff, Tomer Michaeli, and Daniel Soudry. The implicit bias of minima stability: A view from function space. Advances in Neural Information Processing Systems (NeurIPS), 34:17749–17761, 2021.

- Nacson et al. [2023] Mor Shpigel Nacson, Rotem Mulayoff, Greg Ongie, Tomer Michaeli, and Daniel Soudry. The implicit bias of minima stability in multivariate shallow relu networks. In International Conference on Learning Representations (ICLR), 2023.

- Neyshabur et al. [2015] Behnam Neyshabur, Ryota Tomioka, and Nathan Srebro. Norm-based capacity control in neural networks. In Conference on Learning Theory (COLT), pages 1376–1401. PMLR, 2015.

- Neyshabur et al. [2017] Behnam Neyshabur, Srinadh Bhojanapalli, David McAllester, and Nati Srebro. Exploring generalization in deep learning. Advances in Neural Information Processing Systems (NeurIPS), 30, 2017.

- Ongie et al. [2020] Greg Ongie, Rebecca Willett, Daniel Soudry, and Nathan Srebro. A function space view of bounded norm infinite width relu nets: The multivariate case. In International Conference on Learning Representations, 2020. URL https://openreview.net/forum?id=H1lNPxHKDH.

- Parhi and Nowak [2021] Rahul Parhi and Robert D Nowak. Banach space representer theorems for neural networks and ridge splines. Journal of Machine Learning Research, 22(1):1960–1999, 2021.

- Parhi and Nowak [2022] Rahul Parhi and Robert D Nowak. What kinds of functions do deep neural networks learn? insights from variational spline theory. SIAM Journal on Mathematics of Data Science, 4(2):464–489, 2022.

- Radhakrishnan et al. [2018] Adityanarayanan Radhakrishnan, Karren Yang, Mikhail Belkin, and Caroline Uhler. Memorization in overparameterized autoencoders. arXiv preprint arXiv:1810.10333, 2018.

- Raya and Ambrogioni [2023] Gabriel Raya and Luca Ambrogioni. Spontaneous symmetry breaking in generative diffusion models. arXiv preprint arXiv:2305.19693, 2023.

- Roth and Black [2009] Stefan Roth and Michael J. Black. Fields of experts. International Journal of Computer Vision, 82(2):205–229, 2009. doi: 10.1007/s11263-008-0197-6. URL https://doi.org/10.1007/s11263-008-0197-6.

- Sahiner et al. [2021] Arda Sahiner, Morteza Mardani, Batu Ozturkler, Mert Pilanci, and John M. Pauly. Convex regularization behind neural reconstruction. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=VErQxgyrbfn.

- Savarese et al. [2019] Pedro Savarese, Itay Evron, Daniel Soudry, and Nathan Srebro. How do infinite width bounded norm networks look in function space? In Conference on Learning Theory, pages 2667–2690. PMLR, 2019.

- Shenouda et al. [2023] Joseph Shenouda, Rahul Parhi, Kangwook Lee, and Robert D Nowak. Vector-valued variation spaces and width bounds for DNNs: Insights on weight decay regularization. arXiv preprint arXiv:2305.16534, 2023.

- Song and Ermon [2019] Yang Song and Stefano Ermon. Generative modeling by estimating gradients of the data distribution. Advances in neural information processing systems, 32, 2019.

- Sonthalia and Nadakuditi [2023] Rishi Sonthalia and Raj Rao Nadakuditi. Training data size induced double descent for denoising feedforward neural networks and the role of training noise. Transactions on Machine Learning Research, 2023. ISSN 2835-8856. URL https://openreview.net/forum?id=FdMWtpVT1I.

- Udell and Townsend [2019] Madeleine Udell and Alex Townsend. Why are big data matrices approximately low rank? SIAM Journal on Mathematics of Data Science, 1(1):144–160, 2019.

- Zhang et al. [2017] Kai Zhang, Wangmeng Zuo, Yunjin Chen, Deyu Meng, and Lei Zhang. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE transactions on image processing, 26(7):3142–3155, 2017.

- Zhang et al. [2021] Kai Zhang, Yawei Li, Wangmeng Zuo, Lei Zhang, Luc Van Gool, and Radu Timofte. Plug-and-play image restoration with deep denoiser prior. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(10):6360–6376, 2021.

Appendix A Marginalized loss

In this section, we derive the marginal loss for the case of a 1-hidden layer ReLU neural network. The loss function is,

We denote by

Note that since is a covariance matrix. Thus we get,

Lemma 2.

In the case of the ReLU activation function and Gaussian noise the following holds,

where are the density and cumulative distribution of standard normal distribution, and

where,

and

Notice that we denote by the probability that where .

Proof.

Let , then

Note that given the distribution of is truncated normal [horrace2015moments]. Therefore,

| (23) |

Note that given , is a Gaussian random vector. Therefore, given ,

and we obtain,

Let be a Gaussian random vector with444Note that .

then,

Given the distribution of is truncated multivariate normal distribution [Manjunath_Wilhelm_2021], therefore,

| (24) |

where is a density function of of Gaussian random vector with mean vector and covariance matrix at the point , and

We denote by thus,

Thus,

Similarly, we obtain,

Therefore,

∎

Next, we present in Figures 3 and 4 a numerical evaluation of (23) and (24). As can be seen, the Monte-Carlo simulations verify the analytical results.

Appendix B Proofs of Results in Section 4

B.1 Proof of Proposition 1

Proof.

Let

such that Assumption 1 holds. We define a reduced dataset, which only contains the noisy samples with the most extreme noises

We define the penalty on the weights,

According to Theorem 1.2. in [hanin2021ridgeless], since we have opposite discrete curvature on the intervals ,

where

Also note that,

since interpolates all the points in , and if we assume by contradiction that then , thus contradicting Theorem 1.2. in [hanin2021ridgeless]. Note that the minimal number of neurons needed to represent is . So, if then is the minimizer of the representation cost (i.e., ). ∎

B.2 Proof of Theorem 1

First we prove the following lemmas.

Lemma 3.

Let where then,

Proof.

Notice that the following holds,

In addition,

so we obtain,

Therefore,

∎

Lemma 4.

Let where and then,

Proof.

Note that,

Similarly,

thus

According to Lemma 3

almost surely. Note that,

Therefore, by the Dominated convergence theorem we obtain

Similarly,

Since and are bounded and . Therefore, we get

∎

Next, we prove Theorem 1.

Proof.

Assume, without loss of generality, that . Let be a probability density function with bounded second moment such that for all . According to Lemma 4

So we need to prove that

For the case of , the training set includes two data points . So we get,

where . Note that for all and . Therefore,

| (25) | |||

| (26) |

First, we derive (25):

Note that,

thus by the Dominated convergence theorem we obtain

since for all . Next, we derive (26)

Note that,

thus by the Dominated convergence theorem we obtain

since

∎

B.3 Proof of Lemma 1

Proof.

First we prove that for all . We find the intersection between the line and the linear part of (17) (the third branch)

| (27) | ||||

| (28) | ||||

| (29) | ||||

| (30) | ||||

| (31) | ||||

| (32) | ||||

Note that

| (33) |

Since,

| (34) | ||||

| (35) | ||||

| (36) | ||||

| (37) | ||||

| (38) |

which holds according to Assumption 1.

| (39) | ||||

| (40) | ||||

| (41) | ||||

| (42) | ||||

| (43) |

which holds according to Assumption 1.

Next we prove that is contractive toward a set of the clean datapoints on . In the case where or or therefore (18) holds for all . In the case where we choose ,

| (44) |

There exists such that

| (45) |

since for is bellow the line because is an affine function with slope larger than 1 and . Therefore there exists

| (46) |

since,

| (47) | ||||

| (48) |

In the case where we choose ,

| (49) |

There exists such that

| (50) |

since for is above the line because is an affine function with slope larger than 1 and . Therefore there exists

| (51) | ||||

| (52) |

since,

| (53) | ||||

| (54) |

Therefore (18) holds for . ∎

Appendix C Proofs of Results in Section 5

Let be any function realizable as a shallow ReLU network. Consider any parametrization of given by . We say such a parametrization is a minimal representative of if and for all , and . In particular, the units making up a minimal representative must be distinct in the sense that the hyperplanes describing ReLU boundaries are distinct, which implies that no units can be cancelled or combined.

We will also make use of the following lemma, which shows that representation costs are invariant to a translation of the training samples, assuming the function is suitably translated.

Lemma 5.

Let be any function realizable as a shallow ReLU net satisfying norm-ball interpolation constraints for all . Let . Then the function is such that and for all .

Proof.

First we show for all . Fix any , and let . Then for some , and so , as claimed.

To show that , let be any minimal representative of . Then

| (55) | ||||

| (56) |

with and . And so . A parallel argument with the roles of and reversed shows that , and so . ∎

In particular, this lemma shows that if is a norm-ball interpolating representation cost minimizer of the training samples , then is a norm-ball interpolating min-cost solution of the translated training samples .

C.1 Training data belonging to a subspace

The proof of Theorem 2 is a direct consequence of the following two lemmas:

Lemma 6.

Suppose the clean training samples belong to a -dimensional subspace , and let denote the orthogonal projector onto . Let be any function realizable as a shallow ReLU network satisfying for all . Define . Then for all , and , with strict inequality if .

Proof.

First, for any we have . Therefore, for all .

Now, let be a minimal representative of . Then . Since for all , we have .

Now we show implies . Observe that if any of the outer-layer weight vectors then , which implies . Hence, it suffices to show that implies some .

First, consider the case where and . Then in this case if only if for all , which implies for some , or equivalently .

Next, assume either or . Fix any training sample index , and let denote the index set of units active over the ball . Then, since is constant for all , the Jacobian for all . This gives . Therefore, if then at least one of the . On the other hand, if then for all we have

which implies , and since this implies some , proving the claim. ∎

Lemma 7.

Suppose the clean training samples belong to an -dimensional subspace , and let denote the orthogonal projector onto . Let be any network satisfying . Define . Then and , with strict inequality if .

Proof.

Define , i.e., the set of points in whose projection onto is contained in . Then clearly . Also, by properties of norm-balls, we have . Therefore, for all .

Next, let be a minimal representative of . Then . Since for all , we have .

Finally, we show that if , then . Observe that if any of the inner-layer weight vectors then , which implies . Hence, it suffices to show that implies some . First, consider the case . Then if and only if for some , or equivalently, . Next, consider the case . Fix any training sample index , and let denote the index set of units active over . Then, since is constant for all , the Jacobian for all . This gives . Therefore, if (i.e., at least one row of is not contained in ) then at least one of the , proving the claim. ∎

Proof of Theorem 2.

Proof of Corollary 1.

Suppose is one-dimensional, i.e., for some unit vector . Theorem 2 shows we can express any min-cost solution as

where

is such that . Therefore, minimizing the representation cost subject to norm-ball interpolation constraints is reduces to a univariate problem:

where . The minimizing is unique and coincides with the 1-D denoiser in (17) with and . ∎

C.2 Data along rays

We begin with a key lemma that is central to the proof of Theorem 3.

Lemma 8.

Suppose is a collection of unit vectors such that for all , and is a unit vector such that for all . Let be any vector. Then,

Before giving the proof of Lemma 8, we first prove an auxiliary result.

Lemma 9.

Let be a unit vector, and suppose is a collection of unit vectors such that for all and for all . Let . Then with strict inequality if .

Proof.

It suffices to show , since if this is the case then

| (57) |

which also holds with strict inequality when .

First, if , then , and so . Now assume . Then we have

| (58) | ||||

| (59) | ||||

| (60) | ||||

| (61) |

The first and last equalities hold by definition. The second equality holds because each is a unit vector. The last inequality holds because for

since and by assumption. ∎

Now we give the proof of Lemma 8.

Proof of Lemma 8.

Let . Then we have

WLOG, we may assume , in which case the lemma reduces to showing . By the Cauchy-Schwartz inequality, it suffices to show . Toward this end, let us write where and . By Lemma 9 we have and , and so

| (62) | ||||

| (63) | ||||

| (64) | ||||

| (65) |

Also, if either of the index sets or has cardinality greater than one then the lemma above guarantees or , and so , which gives .

It remains to show when or has cardinality less than or equal to one, i.e., or . We consider the various possibilities:

Case 1: & . Then and so .

Case 2: & or & . Then for some , and for all . By way of contradiction, assume that . Since , , and are all unit vectors, the only way this is possible is if and , which implies and , and so . However, this shows that for all , contradicting our assumption that for all . Therefore, in this case as well.

Case 3: & . Then for some and , and so

| (66) | ||||

| (67) | ||||

| (68) |

where the last inequality follows by the fact that . Finally, since the matrix is symmetric, its operator norm coincides with the maximum eigenvalue (in absolute value). Eigenvectors in the span of and have the form where satisfy the equation

| (69) |

where is the corresponding eigenvalue. Expanding and equating coefficients gives the system

| (70) |

which has non-trivial solutions iff

| (71) |

and so . Therefore, as claimed. And so . ∎

Proof of Theorem 3.

Let be any representation cost minimizer. We prove the claim by showing that if fails to have the form specified in (20) then it is possible to construct a norm-ball interpolant whose units are aligned with the rays that has strictly smaller representation cost than .

First, let be any minimal representative of . By properties of minimal representatives, none of the ReLU boundary sets can intersect any of the norm-balls centered at the training samples, since otherwise would be non-constant on one of the norm-balls. Also, we may alter this parameterization in such a way that the active set of every unit avoids the ball centered at the origin without changing the representation cost. In particular, suppose the active set of the th unit contains . Then, using the identity for all , we have

for all . The active set of the reversed unit does not intersect . Therefore, after applying this transformation to all units whose active sets contain , we can write where is a sum of ReLU units whose active sets do not contain and is an affine function that combines the original affine part with a sum of linear units of the form arising from the transformation above. However, because of the interpolation constraint , and by the fact that no units in are active over , for all we have

| (72) |

which implies , i.e., for all . Finally, note that the inner- and outer-layer weight vectors on the reversed units change only in sign. Therefore, this re-parameterization does not change the representation cost, and so is also a minimal representative.

Now we construct a new norm-ball interpolant as follows. Let be the function defined as the sum of all units making up the re-parametrized that are active on at least one norm-ball centered on the th ray. Also, let denote the orthogonal projector onto the th ray. Define where . Put in words, is constructed by “splitting” any units active over multiple rays into a sum of multiple units aligned with each ray; see Figure 6 for an illustration.

First, we prove that satisfies norm-ball interpolation constraints. Let denote any unit belonging to . Since this unit is active on a norm-ball centered on ray , this implies that , and since the unit is inactive on we must have . Also, because we assume for any , we see that the projected unit is active on norm-balls centered on ray and inactive on norm-balls centered on any other ray. In particular, if belongs to a norm-ball centered on ray , then for all . Therefore, if where denotes a training sample along ray , then

| (73) | ||||

| (74) | ||||

| (75) | ||||

| (76) | ||||

| (77) | ||||

| (78) |

which shows that satisfies norm-ball interpolation constraints, as claimed.

Next, we show that if , then has strictly smaller representation cost. Let denote any ReLU unit belonging to . Since we assume , the contribution of unit to the representation cost of is . Let index the subset of rays for which unit is active over at least one norm-ball centered on that ray. In constructing the interpolant , the unit get mapped to a sum of units:

whose contribution to the representation cost of is bounded above by

If , then by Lemma 8 guarantees . And if then , with strict inequality unless belong to the span of . This implies that any min-cost solution must have all its units aligned with the rays, i.e., must have the form , where each is a univariate function. The representation cost of any function in this form is the sum of the (1-D) representation costs of the . Therefore, must also be the 1-D min-cost solution of the norm-ball constraints projected onto ray , and so must have the form specified by (17).

∎

C.2.1 Perturbations of samples along rays

As an extension of the above setting, suppose the training samples along rays have been slightly perturbed, i.e., we consider training samples for some vectors with for some sufficiently small . In particular, we make the following two assumptions:

-

(A1)

Let be the collection of difference vectors between successive perturbed points along the th ray (with ). Assume that for all and for all with , we have .

-

(A2)

If is any halfspace not containing the origin that contains the ball , then also contains all successive balls along that ray, i.e., contains for .

Note that if the original points belong to rays that satisfy the conditions of Theorem 3, then for sufficiently small the perturbed samples will satisfy assumptions (A1)&(A2) above.

We show in this case the norm-ball interpolating representation cost minimizer is a perturbed version of the min-cost solution for the unperturbed samples as identified in Theorem 3. In particular, the ReLU boundaries align with the line segments connecting successive points along the rays. See Figure 7 for an illustration in the case of rays.

Proposition 3.

Suppose the training samples are a perturbation of data belong to a union of rays plus a sample at the origin, i.e., where for some unit vector , constants , and vectors . Assume (A1)&(A2) above hold. Then the minimizer of (19) is unique and is given by

| (79) |

where and with , , and .

Proof.

Let be any min-cost solution. Following the same steps as in the proof of Theorem 3, there is a minimal representative of having the form where the active sets of all units in this representation have empty intersection with the ball .

Let denote the sum of all units in whose active set boundary intersects the line segment connecting the training samples and . By assumption (A2), this is equivalent to the sum of units that are active over balls for , and inactive for the balls with . In particular, for , we have . This implies for all . Likewise, for all , and so on, such that for all we have for all .

Now we show how to construct a new interpolating function having representation cost less than or equal to . Let and define , the orthogonal projector onto the span of the difference vector . Note that the map is projection onto the affine line connecting samples and . Consider the function

where

Put in words, is constructed by aligning all inner-layer and outer-layer weights of units in with the line segment connecting successive data points over which that unit first activates. See Figure 8 for an illustration.

Now we show that satisfies norm-ball interpolation constraints. By assumption (A1), the function is non-zero only on the norm-balls for . This implies that for all we have .

Observe that since all functions are zero on . Also, for all we have , and so

Similarly, the only terms in active for are and , for which , and . This gives

Iterating this procedure for all we see that if then , and so , as claimed.

Following steps similar to the proof of Theorem 3, we can show that and with strict inequality if . First, if any unit in is active over balls centered on multiple rays , then in the construction of the unit gets mapped to a sum of multiple units:

for some with . The contribution of the sum of these units to the representation cost of is less than or equal to . By assumption (A1), we know for all and for all . Therefore, by Lemma 8 we know . This shows cannot have any units active over balls centered on different rays, since otherwise has strictly smaller representation cost. Therefore, we have shown as . Additionally, we see that , i.e., all inner- and outer-layer weight vectors of units in are aligned with , since otherwise the representation cost of could be reduced. Therefore, each must have the form . Minimizing the separately under interpolation constraints, we arrive at the unique solution given in (79). ∎

C.3 Simplex Data

C.3.1 Simplex with one obtuse vertex

Proof of Proposition 2.

Consider training samples whose convex hull is a -simplex such that makes an obtuse angle with all other vertices, i.e., for all . By Lemma 5, is a norm-ball interpolating min-cost solution if and only if is a norm-ball interpolating min-cost solution for the translated points . The latter configuration satisfies the hypotheses of Theorem 3 with a single training sample per ray. Therefore, the min-cost solution of the translated points has units whose inner- and outer-layer weight vetors are aligned with , , and likewise for , since it is translation of . ∎

C.3.2 Simplex with all acute vertices

Justification of Conjecture 1.

For concreteness we focus on the case of three points whose convex hull is an acute triangle, and let be open balls of radius centered at (respectively).

Let be any norm-ball interpolating min-cost solution, and let be any minimal representative of .

First, by properties of minimal representatives, none of the ReLU boundary sets intersect any of the balls centered at the training samples. Also, the active set of each unit in must contain either one or two norm-balls, since otherwise the unit is either inactive over all balls or active over all balls, in which cases the unit can be removed or absorbed into unregularized linear part while strictly reducing the representation cost. By “reversing units”, as in the proof of Theorem 3, we may transform the parameterization in such a way that the active set of every unit contains exactly one ball, and do this without changing the representation cost.

After this transformation, we may write

where is a sum of units active only on the ball and no others, and where is an affine function. Then we have

| (80) | ||||

| (81) | ||||

| (82) |

and so,

| (83) | ||||

| (84) | ||||

| (85) |

Finding the min-cost solution then amounts to minimizing of the representation cost of , , subject to the above constraints. This is made challenging by the fact that the constraints are coupled together by the parameters and of the affine part. However, under the assumption , we prove below that the min-cost solution must have the conjectured form as given in (86). However, since we cannot a priori assume , this does not constitute a full proof.

Before proceeding, we give a lemma that will be used to lower bound (assuming ).

Lemma 10.

Suppose is a sum of ReLU units such that on a closed convex region and is constant and equal to on a closed ball , and has a minimal representative where all its units are active on . Then

where .

Proof.

Let be a minimal representative where each unit is active on . For to be constant on it must be the case that . Let be any value for which , the operator norm of the Jacobian of , is maximized. Since is piecewise linear, we see that its Lipschitz constant . Let be the set of indices of active units at so that , and let be the complementary index set. Then because , we have , and so . Therefore,

Finally,

Combining this with the previous inequality gives the claim. ∎

Now, returning to the proof of the main claim, for , let be the closed convex region given by the intersection of all closed half-planes containing the balls and , , and , . By assumption, vanishes on and is constant and equal to on the closed ball . So, by Lemma 10, we see that

where . Therefore, for all we have

Let be the minimizer of

Then plugging in above, we have the lower bound

which is independent of .

Finally, simple calculations show that the conjectured min-cost solution specified in (86) has representation cost achieving this lower bound. Therefore, under the assumption , is a min-cost solution.

∎

Now we prove that, in the special case where the convex hull of the training points is an equilateral triangle, the norm-ball interpolator identified in Conjecture 1 is a min-cost solution (though not necessarily the unique min-cost solution). In this case, we may also give a more explicit form, as detailed below.

Proposition 4.

Suppose the convex hull of the training points is an equilateral triangle. Assume the norm-balls centered at each training point have radius , , where is the centroid of the triangle. Then a minimizer of (19) is given by

| (86) |

where with , , , and .

Proof.

By translation and scale invariance of min-cost solutions, without loss of generality we may assume are unit-norm vectors and mean-zero (i.e., the triangle centroid ). In this case, the assumption on translates to . Additionally, it suffices to prove the claim in the case . This is because, if are the vertices of an equilateral triangle whose centroid is at the origin, then these points are contained in a two-dimensional subspace . And if we let be a matrix whose columns are an orthonormal basis for , then by Theorem 2, is a min-cost solution if and only if , where is a min-cost solution under the constraints for all . Therefore, the problem reduces to finding a min-cost solution of the projected points whose convex hull is an equilateral triangle in .

So now let be any min-cost solution under the assumption are unit-norm, have zero mean, and . By reversing units as necessary, there exists a minimal representative of that can be put in the form , such that is a sum of ReLU units, all of which are active on , and all of which are inactive on for .

Let be the reflection matrix , which reflects points across the line spanned by . In particular, then while if then (and vice versa). Also, , , and . Define . Then it is easy to check that satisfies interpolation constraints, and since is unitary, we have . Furthermore, since is a min-cost solution, we must have . Additionally, since none of the units making up are active over more than one ball, neither are the units making up , which implies no pair of units belonging to combine to form an affine function. Therefore, we may write , where , such that each is a sum of ReLU units, all of which are active on , and all of which are inactive on for .

Let be a rotation matrix by degrees such that , , and . Consider the symmetrized version of given by ). Since for all we have (with indices understood modulo ), it is easy to verify that satisfies interpolation constraints. Also, since is unitary, we have , which implies since is a min-cost solution. Again, since none of the units making up are active over more than one ball, neither are the units making up , which implies no pair of units belonging to combine to form an affine function. And so, we may write , such that each is a sum of ReLU units, all of which are active on , and all of which are inactive on for .

Observe that . Also, we have . This implies commutes with , because it is easy to see that . Additionally, commutes with , because it is easy to see , and by properties of rotations/reflections we have , which implies

which shows commutes with . Now we show that any matrix that commutes with both and is a scaled identity matrix. Let denote a unit-vector perpendicular to , such that form an orthonormal basis for . First, we know has eigenvectors and with eigenvalues and , respectively. And so , while , which implies and for some , i.e., and are eigenvectors of with eigenvalues and , respectively. Furthermore, we have and . This implies and are also eigenvectors of with eigenvalues and , respectively. But since and are linearly independent, and likewise so are and , the only way this could be true is if , i.e., every vector in is an eigenvector of for the same eigenvalue , which implies for some .

Therefore, we have shown that every min-cost solution maps to a min-cost solution of the form

for some , where each is a sum of ReLU units, all of which are active on and all of which are inactive on , . In particular, for all and for all , where is the intersection of all half-planes containing the balls , .

This means we can write and for some index sets partitioning so that . Let denote the representation cost of a function computed without an unregularized linear part, i.e., define analogously to except where the model class in (6) is constrained to have . Then, since the realizations of the functions considered above do not have a linear part, we see that and so .

Now we show how lower bound for all . Let denote a unit-vector perpendicular to , such that form an orthonormal basis for . Consider the univariate functions and . Here is the projection of onto the line spanned by , and is a projection onto the line perpendicular to passing through the point . In particular, by the constraints on , we see that and satisfy the constraints

| (87) |

and .

Claim 1: For all , . Proof: Let be any realization of , whose representation cost is . Then realizations of and are given by

| (88) | ||||

| (89) |

whose representation costs and , respectively, are given by

| (90) | ||||

| (91) |

and by the Pythagorean Theorem we see that . Therefore, and finally minimizing over all realizations of gives the claim.

Claim 2: For all , , where and . Proof: By results in savarese2019infinite, where is the function satisfying the same constraints as given in (87) while linearly interpolating over the interval . From the formula as established in savarese2019infinite, we can show directly that , which gives the claimed bound.

Claim 3: For all , . Proof: The function with minimal -cost satisfying the constraint for is a single ReLU unit plus a constant: , which has -cost .

Putting the above claims together, we see that

| (92) | ||||

| (93) | ||||

| (94) | ||||

| (95) | ||||

| (96) |

where in the last inequality we used the fact that since and .

Therefore, . Also, the function given by

where

satisfies norm-ball interpolation constraints and . Hence, is a min-cost solution. ∎

Appendix D Additional simulations

We train a one-hidden-layer ReLU network without a skip connection using the setting we used in Figure 1. As can be seen from Figure 10 we get a similar result for the NN denoiser with and without the skip connection. Therefore in Appendix D.1 we use one-hidden-layer ReLU network without a skip connection.

D.1 MNIST

We use the MNIST dataset to verify various properties. First, the offline and online solutions achieve approximately the same test MSE when trained on a subset of the MNIST dataset (Figure 11). Second, to show that the fact that NN denoiser does not converge to the eMMSE denoiser is not due to approximation error (Figure 12). Lastly, to present the critical noise level in which representation cost minimizer has strictly lower MSE than the eMMSE, for all smaller noise levels (Figure 13).

D.2 Three non-colinear training samples

We show in Figures 14 and 15 that for training points from the MNIST dataset that forming a triangle in dimensions the empirical minimizer obtained using noisy samples and weight decay regularization agrees well with the form of the exact representation cost minimizer predicted by Proposition 2 and Conjecture 4.

D.3 Empirical validation of the subspace assumption

We validated that the following image datasets are (approximately) low rank:

-

•

CIFAR10

-

•

CINIC10

-

•

Tiny ImageNet (a lower resolution version of ImageNet, enabling us to use SVD)

-

•

BSD (a denoising benchmark composed from patches of size cropped from images [zhang2017beyond])

As can be seen from Table 1 all the datasets that we used are (approximately) low rank.

| Dataset | 95% | 99% | 99.9% |

|---|---|---|---|

| CIFAR10 | 0.8% | 7.5% | 30% |

| CINIC10 | 1% | 23% | 41% |

| Tiny ImageNet | 1.6% | 20% | 36% |

| BSD | 0.1% | 1.6% | 4.5% |