HistoKT: Cross Knowledge Transfer in Computational Pathology

Abstract

The lack of well-annotated datasets in computational pathology (CPath) obstructs the application of deep learning techniques for classifying medical images. Many CPath workflows involve transferring learned knowledge between various image domains through transfer learning. Currently, most transfer learning research follows a model-centric approach, tuning network parameters to improve transfer results over few datasets. In this paper, we take a data-centric approach to the transfer learning problem and examine the existence of generalizable knowledge between histopathological datasets. First, we create a standardization workflow for aggregating existing histopathological data. We then measure inter-domain knowledge by training ResNet18 models across multiple histopathological datasets, and cross-transferring between them to determine the quantity and quality of innate shared knowledge. Additionally, we use weight distillation to share knowledge between models without additional training. We find that hard to learn, multi-class datasets benefit most from pretraining, and a two stage learning framework incorporating a large source domain such as ImageNet allows for better utilization of smaller datasets. Furthermore, we find that weight distillation enables models trained on purely histopathological features to outperform models using external natural image data.

1 Introduction

Currently in the United States, there are a reported pathologists per people. In Canada, this number rises slightly to pathologists per people [1]. This severe scarcity of pathologists, combined with a rigorous set of duties that involves patient care and extraneous specimen diagnoses, results in decreased diagnosis quality and diminished patient experience [1]. To alleviate these burdens, the computational pathology (CPath) field has created numerous computer-aided diagnosis (CAD) tools to assist pathologist diagnoses [2]. These CAD tools utilize computer vision techniques and neural network architectures to solve a plethora of tasks, including classification, segmentation, and localization [2].

However, challenges in implementing CAD systems arise in part due to limitations in pathology datasets. Namely, despite the presence of large pathology datasets, the lack of proper annotations or labels hinders development of supervised neural networks [2]. Additionally, different standards for staining histology slides and varying optical configurations introduce further complications when creating CAD systems [3, 4, 5]. Combined, these factors result in a valuable data landscape comprised of sparse and non-comprehensive datasets.

Transfer learning is widely used in machine learning to compensate for the absence of comprehensive annotated datasets. Using transfer learning, knowledge gained from one source domain can be applied to problems on another target domain. In CPath, transfer learning using either natural image datasets or other pathology datasets enables networks to generalize to specific target domains where labeled data is scarce [6, 7, 8, 9].

The merits of transfer learning in CPath are clear: models achieve higher metrics on a target domain when pretrained on a relevant source domain dataset [6, 7, 8, 9]. These benefits are explored in previous works through model-centric approaches. While these approaches are useful in exploring quality in various model architectures, they ignore key issues introduced when these models are applied to other datasets. Due to a lack of standardized data preparation when transferring knowledge between datasets, models trained on two distinct datasets are likely to learn at two distinct biological scales. Previous work has shown that models trained from a dataset gathered by one pathology laboratory can underperform when applied to images gathered by a separate laboratory [5]. Furthermore, dataset choice for the source domain is not well explored, with little known about what makes a “good” dataset. These issues can only be resolved through a data-centric approach towards exploring the interactions of source domain data with target domain data under transfer learning.

In this paper we introduce the following contributions: i) we propose a standardized platform to aggregate learned histopathological knowledge, including an image standardization workflow, training, and a tuning pipeline ; ii) we examine the potential for aggregation of learned knowledge between multiple pathological datasets. Using cross transfer over nine classification task datasets, we evaluate both the quantity and quality of transferable information between datasets; iii) we propose weight distillation: a method for combining learned information from encoders trained on separate datasets. iv) we assess the utility of large natural image domains (ImageNet) as a source domain with two stage transfer learning; v) we visualize the transferred knowledge using t-SNE plots and Grad-CAM images.

| Original Dataset Information | Number of Extracted Patches | |||||||||||

| Dataset Name | Tissue Type | Diagnostic | Staining | Scanner | Classes | Dataset Size | Image Size | Pixel | Training | Validation | Test | |

| Resolution | Images | Images | Images | |||||||||

| ADP [10] | Multi-organ | Histology (healthy) | H&E | Huron TissueScope LE1.2 | 33 | 17668 | 272 272 | 1 | 14134 | 1767 | 1767 | |

| MHIST [11] | Colorectal polyps | Cancer | H&E | Aperio AT2 | 2 | 3152 | 224 224 | 1.25 | 1740 | 435 | 977 | |

| BACH [12] | Breast | Cancer | H&E | Leica ICC50 HD | 4 | 400 | 2048 1536 | 0.42 | 958 | 240 | 1199 | |

| AJ-Lymph [13] | Lymph nodes | Lymphoma | H&E | N/A | 3 | 374 | 1388 1040 | 0.25 | 299 | 37 | 38 | |

| PCam [14] | Lymph nodes | Lymphoma | H&E | Pannoramic 250 Flash II, NanoZoomer-XR Digital slide scanner C12000-01 | 2 | 294912 | 96 96 | 0.972 | 2000 | 400 | 32322 | |

| CRC [15] | Colon & rectum | Histopathology | H&E | Online | 7 | 107000 | 224 224 | 0.5 | 14000 | 1750 | 1750 | |

| GlaS [16] | Intestinal glands | Cancer | H&E | Zeiss MIRAX MIDI Slide Scanner | 2 | 165 | Various | 0.62 | 163 | 112 | 40 | |

| OS [17] | Bone | Osteosarcoma | H&E | N/A | 3 | 1144 | 1024 1024 | 1 | 13227 | 1653 | 1654 | |

| BCSS [18] | Breast | Histopathology | H&E | Online | 10 | 151 | Various | 0.25 | 14288 | 1786 | 1787 | |

2 Methods

We introduce our pipeline for knowledge transfer, which includes dataset preprocessing, model training, and evaluation. In our evaluation, we considered the databases summarized in Tab. 1. The overall HistoKT workflow is summarized in Fig. 1.

2.1 Preprocessing

Datasets were standardized according to our pipeline, consisting of rescaling, cropping, and reflection wrapping to match the benchmark dataset, ADP. ADP was chosen as a benchmark due to its coverage of various histological tissue types.

Each image in a given dataset was rescaled to the common pixel resolution of 1 using the scikit-image library. If the resultant image is larger than pixels in either dimension, the image is cropped into patches, with % overlap in either direction. If the rescaled image is smaller than pixels in either dimension, the image is reflection wrapped. After cropping, background images were filtered; images that had low contrast, with pixels falling between the th percentile and th percentile having less than % coverage of the colour span (), were removed.

This pipeline was chosen for maintaining biological scale across datasets, so that models trained on one dataset operate at the same scale when applied to another dataset.

2.2 Training

ResNet18 [19] was chosen as the baseline model due to its wide use throughout literature, as well as its relatively small number of parameters compared to other commonly selected networks.

For all experiments, training was conducted using PyTorch, utilizing NVIDIA Tesla V100 Tensor Core GPUs. For all baseline results, models were trained from random initialization on a given dataset, using the RMSGD optimizer [20], with an initial learning rate of , momentum of , weight decay of , and all other parameters left as default [21]. Multi-labeled datasets, ADP and BCSS, are trained with a weighted one-vs-all cross entropy loss, while all other datasets are trained with cross entropy loss. Baseline models were trained for epochs, with three trials for each dataset. The model with the highest validation accuracy for a given epoch was taken as the baseline weight for further evaluation.

2.3 Tuning

Three primary methods were tested for tuning on a target domain: no tuning, fine-tuning, and deep-tuning. For no tuning, we take the encoder trained on a source domain and evaluate the encoder on the same domain. We denote fine-tuning to be tuning with all layers frozen except the final fully connected (FC) layer, and deep-tuning as tuning where no layers are frozen.

Our tuning procedure uses the AdamP optimizer [22], with weight decay set to , and all other parameters left as default. Learning rates were determined through a grid search. A learning rate scheduler was used which reduced the learning rate by a factor of two every epochs. Models were trained for epochs, and three trials were run for every learning rate and target domain combination.

2.4 Transferability

Transferability is evaluated using comparison matrices, as shown in Tab. 2 and Tab. 3. Along the diagonals are the average top-1 test accuracies for each dataset trained from random initialization using the methodology described in the training section. We then pick the best baseline models with respect to top-1 test accuracy as the candidate model to perform tuning, and present the average test accuracy on the target datasets. All of the (off-diagonal) results are deep-tuned, as deep-tuning greatly outperforms fine-tuning in most datasets, as shown in Tab. 4. Deep-tuning also allows us to compare the difference in learned representations with t-SNE plots, as fine-tuning does not change the encoder weights.

| ADP | MHIST | BACH | AJ-LYMPH | PCam | CRC | GlaS | OS | BCSS | |

|---|---|---|---|---|---|---|---|---|---|

| ADP | 93.560.4 | 78.741.39 | 93.110.05 | 94.742.63 | 76.370.92 | 99.30.12 | 88.337.22 | 94.920.53 | 97.570.05 |

| MHIST | 93.350.07 | 80.831.41 | 84.350.91 | 91.234.02 | 77.680.61 | 98.930.12 | 80.833.82 | 93.110.37 | 97.550.04 |

| BACH | 93.520.03 | 77.350.43 | 90.440.85 | 90.355.48 | 79.360.66 | 98.820.07 | 77.510.9 | 93.710.31 | 97.560.09 |

| AJ | 93.370.02 | 75.540.99 | 81.510.19 | 87.724.02 | 78.340.9 | 98.650.17 | 76.676.29 | 92.760.36 | 97.390.07 |

| PCam | 93.620.04 | 76.221.32 | 86.541.21 | 85.961.52 | 78.41.39 | 98.670.26 | 82.59.01 | 92.540.21 | 97.50.03 |

| CRC | 94.220.04 | 79.81.13 | 94.160.58 | 97.370.0 | 78.392.34 | 99.030.06 | 92.50.0 | 94.580.07 | 97.440.06 |

| GlaS | 93.290.09 | 78.681.59 | 82.851.26 | 86.842.63 | 76.660.95 | 98.90.26 | 85.05.0 | 93.070.65 | 97.480.04 |

| OS | 94.270.12 | 77.990.74 | 93.720.24 | 93.863.04 | 77.672.11 | 99.20.23 | 90.832.89 | 94.740.3 | 97.530.02 |

| BCSS | 94.030.01 | 81.880.67 | 93.610.42 | 93.864.02 | 78.520.27 | 99.050.14 | 90.04.33 | 94.560.06 | 97.670.05 |

| ADP | MHIST | BACH | AJ-LYMPH | PCam | CRC | GlaS | OS | BCSS | |

|---|---|---|---|---|---|---|---|---|---|

| ADP | 93.030.28 | 76.420.21 | 91.630.88 | 96.491.52 | 77.361.42 | 99.120.18 | 86.673.82 | 93.710.44 | 97.440.03 |

| MHIST | 94.470.08 | 83.521.34 | 93.470.69 | 93.861.52 | 82.570.8 | 99.160.07 | 92.52.5 | 94.10.24 | 97.730.02 |

| BACH | 94.40.06 | 84.270.6 | 94.411.13 | 92.981.52 | 81.631.52 | 99.260.06 | 93.331.44 | 93.930.09 | 97.730.01 |

| AJ | 94.150.06 | 80.080.77 | 90.60.61 | 92.110.0 | 80.02.28 | 99.120.03 | 81.673.82 | 94.320.06 | 97.680.08 |

| PCam | 94.410.06 | 82.091.95 | 90.960.93 | 92.981.52 | 78.672.57 | 99.260.06 | 91.671.44 | 94.10.18 | 97.620.09 |

| CRC | 94.380.03 | 81.582.16 | 94.610.82 | 89.479.12 | 78.271.37 | 99.350.09 | 90.832.89 | 94.940.78 | 97.510.03 |

| GlaS | 94.50.03 | 85.361.85 | 93.550.17 | 95.611.52 | 84.41.81 | 99.030.2 | 93.332.89 | 94.420.45 | 97.740.1 |

| OS | 94.120.07 | 81.030.36 | 93.440.13 | 92.110.0 | 78.862.88 | 99.070.13 | 80.8311.81 | 94.20.53 | 97.520.1 |

| BCSS | 94.080.09 | 78.611.62 | 94.250.29 | 94.742.63 | 78.161.08 | 99.330.03 | 94.172.89 | 94.180.42 | 97.750.05 |

| MHIST | BACH | AJ-LYMPH | PCam | CRC | GlaS | Osteosarcoma | BCSS | |

|---|---|---|---|---|---|---|---|---|

| Deep-tuning | 78.032.20 | 93.630.46 | 95.614.02 | 76.421.16 | 99.160.07 | 86.675.20 | 94.520.15 | 97.570.04 |

| Fine-tuning | 69.531.75 | 65.300.22 | 71.935.48 | 77.040.18 | 80.920.82 | 80.833.82 | 82.300.37 | 87.750.07 |

2.5 Weight Distillation

Taking the baseline weights from top performing models, we perform weight distillation as a secondary method for evaluating the potential for knowledge aggregation. Since all of our models use the same architecture—ResNet18—each model differs only by the dataset it is trained on. For each layer of the model, we unfold the 4-D weight tensors , where is the layer number, into 2-D weight tensors , . To combine weights from models trained on different datasets, we stack the unfolded weight tensors from all source datasets on top of each other to create a new weight tensor. For example, a new weight tensor for the convolutional layer

is created using from layer five of a model trained from random initialization on ADP and from layer five of a model trained from random initialization on CRC. We then apply Singular Value Decomposition (SVD)

to create factorized matrices. We take the first rows of and the first rows and columns of to keep only the most important filter values in the combined weight tensor, i.e. and . In this way, . Then, we fold back to a 4-D tensor . For non-convolutional 1-D layers, which include batch normalization and linear layers, the resultant vector is the mean of the corresponding vectors in all input models. For 2-D linear weights, the same SVD process is carried out. The resulting model is deep tuned according to our tuning methodology.

2.6 Evaluation

We train our models on the training set and use the validation set to select the best performing model for each epoch trial. Based on the validation accuracy, a single best performing model is selected to be evaluated on the test set. All results reported in this paper are test set metrics averaged over three runs. To evaluate the model on the test set, we calculate the test accuracy. All results are summarized in the results section.

Moreover, we use t-Distributed Stochastic Neighbor Embedding (t-SNE) [23] and Grad-CAM [24] to visualize our results. t-SNE visualizes high-dimensional data by giving each datapoint a location in a 2-D map. We also use Grad-CAM to visualize activation heatmaps of the network on various classes, where a warmer colour intensity corresponds to the amount of influence an image region has on a model prediction.

3 Results

Single Stage Transfer Results. Tab. 2 shows the results with a single stage transfer learning process. The models with the highest top-1 test accuracies, shown on the main diagonal, are used to deep tune on the applied dataset. If a deep tuned model outperforms the baseline, the result is highlighted green, and if it underperforms compared to the baseline, the result is highlighted in red.

From the results, noticeable improvement from the baseline is seen when large datasets are used as a source domain: ADP, CRC, OS, and BCSS all display the ability to transfer knowledge to other, usually smaller, datasets. Training on smaller datasets like GlaS or AJ-Lymph consistently decreased performance compared to the baseline. Of the large source domains, ADP performs poorly in tasks focused on cancer detection, such as MHIST and PCam, likely due to these task being out of domain. Note that ADP is focused only on healthy tissue, while CRC, OS, and BCSS all have diseased classes. These results show that proper choice of a source domain can affect performance, and consideration of what classes in the source domain are shared with the target domain is vital.

Two Stage Transfer Results. Tab. 3 shows the top-1 test accuracy of all cross transferred datasets with a two-stage deep tuning process. Pretrained ImageNet weights are deep tuned using each dataset in the trained column, shown along the main diagonal, and these models are then deep tuned again with the applied dataset.

ImageNet pretraining improves overall performance for most datasets, and produces a higher peak performance in all datasets except AJ-Lymph, which is still within margin of error. Interestingly, datasets that negatively impacted most results in single stage transfer learning, such as GlaS, BACH, and AJ-Lymph, had many more positive interactions. We posit that this is due to ImageNet pretraining providing necessary low level features that were hard for models to learn from random initialization, due to the small size of the source domain dataset. Datasets that are multi-class and are initially hard to learn (low baseline top-1 accuracy) appear to benefit most from a transfer learning process, as shown in datasets such as ADP and AJ-Lymph.

The above results describe a preliminary analysis of how much knowledge is transferable between histopathological datasets. An increased accuracy could be obtained with an experimental search of the optimal neural architecture and hyperparameters for each case. However, such analysis would be outside the scope of the paper.

| Labels | Benign | In Situ | Invasive | Normal |

|---|---|---|---|---|

| Original |

|

|

|

|

| BACH Baseline |

|

|

|

|

| ADP Pretrained, BACH deep-tuned |

|

|

|

|

| ImageNet + BACH, Baseline |

|

|

|

|

| ImageNet + ADP, BACH deep-tuned |

|

|

|

|

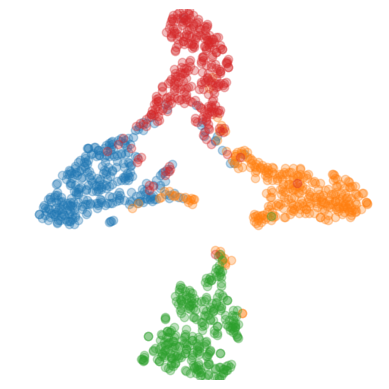

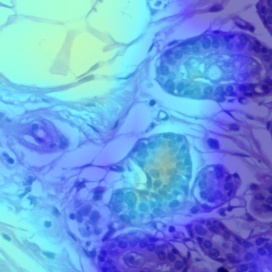

t-SNE and Grad-CAM Analysis. We chose to show t-SNE results to visualize changes to latent space representation, along with Grad-CAM to show an intuitive view on how a deep-tuned model classifies images. In Fig. 2, t-SNE plots for training and test sets for the BACH dataset are shown. Deep-tuning on the best performing dataset, CRC, we see that the representation for the test set becomes more disentangled for both single stage and two stage ImageNet training procedures.

Using Grad-CAM, visualizations of the baseline model and transfer learning models are shown in Fig. 3. During generation of Grad-CAM visualizations, both augmentation smoothing and eigen smoothing were used. All activations shown are of the model output prediction, which matched with ground truth for all classes. According to expert pathologists, the ADP source domain model initialized with ImageNet weights and tuned on BACH had the best correlating activation heat map with ground truth for Benign, Invasive, and Normal classes. In contrast, the BACH model initialized with ImageNet weights (baseline model) had poor heatmap correlation with ground truth. However, both the ADP source domain model and BACH baseline model yielded the same, correct predictions for Benign, Invasive, and Normal classes.

Weight Distillation. Fig. 4 displays top-1 accuracies of top performing models evaluated on the BACH dataset. All single dataset results, other than the baseline, are first trained on the specified dataset, and deep tuned on the BACH dataset. For the models created by weight distillation, we first apply our weight distillation workflow to combine two or more baseline weights (no tuning), and then deep tune the resulting combined model on BACH. T-SNE and Grad-CAM visualizations for weight distilled models can be found in the supplementary material.

Here, all models outperformed the BACH baseline, and all weight distilled models perform better than or equal to the one stage deep-tuning models, other than ADP + CRC + OS. We posit that this effect is due to different CPath datasets offering knowledge from different domains, shown through our Grad-CAM analysis where models trained on different source domains learned different approaches to the same task. This enables our weight distillation model to outperform even the highest performing ImageNet tuned model, demonstrating that CPath datasets hold valuable domain specific knowledge that cannot be seen in natural image datasets.

4 Conclusion

In this work, we proposed and tested a cross domain knowledge transfer pipeline consisting of dataset standardization, data augmentation, and training procedures over nine histopathological datasets. To assess transferred knowledge, we conducted experiments comparing source domain viability for each of the nine datasets and two stage transfer viability using ImageNet pretrained weights. To demonstrate the validity of our transfer learning framework and to visualize the learned knowledge from one dataset to another, we use t-SNE and Grad-CAM to show the change in latent space representation and class activations, respectively. Additionally, we apply weight distillation to top performing models to aggregate knowledge across datasets. We find that knowledge is transferred between histopathological datasets, and that hard to learn, multi-class datasets benefit most from transfer learning. Datasets that share a common organ class or common tasks tend to also share knowledge more effectively, especially when the constraint of learning low level features, governed by dataset size, is removed through ImageNet pretraining. These effects are also displayed through the t-SNE and Grad-CAM analysis, with more disentangled latent representations and more meaningful class activations, respectively. Weight distillation harnesses the different learned approaches by models trained on different source domains, allowing combined models to reach higher than ImageNet pretraining accuracies, with much less computational cost compared to training on ImageNet. We present these finding in an effort to push for a more data-driven approach to transfer learning in CPath, and to create a future where CPath knowledge can be shared between any number of datasets.

References

- [1] D. M. Metter, T. J. Colgan, S. T. Leung, C. F. Timmons, and J. Y. Park, “Trends in the US and Canadian Pathologist Workforces From 2007 to 2017,” JAMA Network Open, vol. 2, no. 5, pp. e194337–e194337, 05 2019.

- [2] G. Litjens, T. Kooi, B. E. Bejnordi, A. A. A. Setio, F. Ciompi, M. Ghafoorian, J. A. van der Laak, B. van Ginneken, and C. I. Sanchez, “A survey on deep learning in medical image analysis,” Medical Image Analysis, vol. 42, pp. 60–88, 2017.

- [3] S. Otálora, M. Atzori, V. Andrearczyk, A. Khan, and H. Müller, “Staining invariant features for improving generalization of deep convolutional neural networks in computational pathology,” Frontiers in Bioengineering and Biotechnology, vol. 7, pp. 198, 2019.

- [4] M. Cui and D. Zhang, “Artificial intelligence and computational pathology,” Laboratory Investigation, vol. 101, 01 2021.

- [5] D. Tellez, G. Litjens, P. Bándi, W. Bulten, J.-M. Bokhorst, F. Ciompi, and J. van der Laak, “Quantifying the effects of data augmentation and stain color normalization in convolutional neural networks for computational pathology,” Medical Image Analysis, vol. 58, pp. 101544, Dec 2019.

- [6] J. d. Matos, A. d. S. Britto, L. E. S. Oliveira, and A. L. Koerich, “Double transfer learning for breast cancer histopathologic image classification,” in Proc. of IJCNN, 2019, pp. 1–8.

- [7] J. Qu, N. Hiruta, K. Terai, H. Nosato, M. Murakawa, and H. Sakanashi, “Stepwise transfer of domain knowledge for computer-aided diagnosis in pathology using deep neural networks,” in Biomedical Engineering Systems and Technologies, A. Roque, A. Tomczyk, E. De Maria, F. Putze, R. Moucek, A. Fred, and H. Gamboa, Eds., Cham, 2020, pp. 105–119, Springer International Publishing.

- [8] M. S. Hosseini, L. Chan, W. Huang, Y. Wang, D. Hasan, C. Rowsell, S. Damaskinos, and K. N. Plataniotis, “On transferability of histological tissue labels in computational pathology,” in Proc. of ECCV, A. Vedaldi, H. Bischof, T. Brox, and J.-M. Frahm, Eds., Cham, 2020, pp. 453–469, Springer International Publishing.

- [9] Y. Kim, S. Kim, C. Cho, I. Song, H. J. Lee, S. Ahn, S. Park, G. Gong, and N. Kim, “Effectiveness of transfer learning for enhancing tumor classification with a convolutional neural network on frozen sections,” Scientific Reports, vol. 10, 12 2020.

- [10] M. S. Hosseini, L. Chan, G. Tse, M. Tang, J. Deng, S. Norouzi, C. Rowsell, K. N. Plataniotis, and S. Damaskinos, “Atlas of Digital Pathology: A generalized hierarchical histological tissue type-annotated database for deep learning,” in Proc. of CVPR, 2019, pp. 11739–11748.

- [11] J. Wei, A. Suriawinata, B. Ren, X. Liu, M. Lisovsky, L. Vaickus, C. Brown, M. Baker, N. Tomita, L. Torresani, J. Wei, and S. Hassanpour, “A petri dish for histopathology image analysis,” in Proc. of AIME, 2021.

- [12] G. Aresta, T. Araújo, S. Kwok, S. S. Chennamsetty, M. Safwan, V. Alex, B. Marami, M. Prastawa, M. Chan, M. Donovan, G. Fernandez, J. Zeineh, M. Kohl, C. Walz, F. Ludwig, S. Braunewell, M. Baust, Q. D. Vu, M. N. N. To, E. Kim, J. T. Kwak, S. Galal, V. Sanchez-Freire, N. Brancati, M. Frucci, D. Riccio, Y. Wang, L. Sun, K. Ma, J. Fang, I. Kone, L. Boulmane, A. Campilho, C. Eloy, A. Polónia, and P. Aguiar, “BACH: Grand challenge on breast cancer histology images,” Medical Image Analysis, vol. 56, pp. 122–139, 2019.

- [13] A. Janowczyk and A. Madabhushi, “Deep learning for digital pathology image analysis: A comprehensive tutorial with selected use cases,” Journal of Pathology Informatics, vol. 7, no. 1, pp. 29, 2016.

- [14] B. S. Veeling, J. Linmans, J. Winkens, T. Cohen, and M. Welling, “Rotation equivariant cnns for digital pathology,” CoRR, vol. abs/1806.03962, 2018.

- [15] J. N. Kather, J. Krisam, P. Charoentong, T. Luedde, E. Herpel, C.-A. Weis, T. Gaiser, A. Marx, N. A. Valous, D. Ferber, L. Jansen, C. C. Reyes-Aldasoro, I. Zörnig, D. Jäger, H. Brenner, J. Chang-Claude, M. Hoffmeister, and N. Halama, “Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study,” PLOS Medicine, vol. 16, no. 1, pp. 1–22, 01 2019.

- [16] K. Sirinukunwattana, J. P. Pluim, H. Chen, X. Qi, P.-A. Heng, Y. B. Guo, L. Y. Wang, B. J. Matuszewski, E. Bruni, U. Sanchez, A. Böhm, O. Ronneberger, B. B. Cheikh, D. Racoceanu, P. Kainz, M. Pfeiffer, M. Urschler, D. R. Snead, and N. M. Rajpoot, “Gland segmentation in colon histology images: The glas challenge contest,” Medical Image Analysis, vol. 35, pp. 489–502, 2017.

- [17] K. Clark, B. Vendt, K. Smith, J. Freymann, J. Kirby, P. Koppel, S. Moore, S. Phillips, D. Maffitt, M. Pringle, L. Tarbox, and F. Prior, “The cancer imaging archive (tcia): Maintaining and operating a public information repository,” Journal of digital imaging, vol. 26, 07 2013.

- [18] M. Amgad, H. Elfandy, H. Hussein, L. A. Atteya, M. A. T. Elsebaie, L. S. Abo Elnasr, R. A. Sakr, H. S. E. Salem, A. F. Ismail, A. M. Saad, J. Ahmed, M. A. T. Elsebaie, M. Rahman, I. A. Ruhban, N. M. Elgazar, Y. Alagha, M. H. Osman, A. M. Alhusseiny, M. M. Khalaf, A.-A. F. Younes, A. Abdulkarim, D. M. Younes, A. M. Gadallah, A. M. Elkashash, S. Y. Fala, B. M. Zaki, J. Beezley, D. R. Chittajallu, D. Manthey, D. A. Gutman, and L. A. D. Cooper, “Structured crowdsourcing enables convolutional segmentation of histology images,” Bioinformatics, vol. 35, no. 18, pp. 3461–3467, 02 2019.

- [19] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. of CVPR, 2016, pp. 770–778.

- [20] M. S. Hosseini and K. N. Plataniotis, “AdaS: Adaptive scheduling of stochastic gradients,” CoRR, vol. abs/2006.06587, 2020.

- [21] I. Sutskever, J. Martens, G. Dahl, and G. Hinton, “On the importance of initialization and momentum in deep learning,” in Proc. of ICML, S. Dasgupta and D. McAllester, Eds., Atlanta, Georgia, USA, 17–19 Jun 2013, vol. 28 of Proceedings of Machine Learning Research, pp. 1139–1147, PMLR.

- [22] B. Heo, S. Chun, S. J. Oh, D. Han, S. Yun, G. Kim, Y. Uh, and J.-W. Ha, “Adamp: Slowing down the slowdown for momentum optimizers on scale-invariant weights,” in Proc. of ICLR, 2021.

- [23] L. van der Maaten and G. Hinton, “Visualizing data using t-sne,” Journal of Machine Learning Research, vol. 9, no. 86, pp. 2579–2605, 2008.

- [24] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-CAM: Visual explanations from deep networks via gradient-based localization,” International Journal of Computer Vision, vol. 128, no. 2, pp. 336–359, Oct 2019.