High-rate discretely-modulated continuous-variable quantum key distribution

using quantum machine learning

Abstract

We propose a high-rate scheme for discretely-modulated continuous-variable quantum key distribution (DM CVQKD) using quantum machine learning technologies, which divides the whole CVQKD system into three parts, i.e., the initialization part that is used for training and estimating quantum classifier, the prediction part that is used for generating highly correlated raw keys, and the data-postprocessing part that generates the final secret key string shared by Alice and Bob. To this end, a low-complexity quantum -nearest neighbor (QNN) classifier is designed for predicting the lossy discretely-modulated coherent states (DMCSs) at Bob’s side. The performance of the proposed QNN-based CVQKD especially in terms of machine learning metrics and complexity is analyzed, and its theoretical security is proved by using semi-definite program (SDP) method. Numerical simulation shows that the secret key rate of our proposed scheme is explicitly superior to the existing DM CVQKD protocols, and it can be further enhanced with the increase of modulation variance.

- PACS numbers

-

42.50.St

pacs:

Valid PACS appear hereI introduction

Continuous-variable quantum key distribution Pirandola et al. (2020) is designed to implement point-to-point secret key distribution, its security is guaranteed by the fundamental laws of quantum physics Gisin et al. (2002). In a basic version of CVQKD Grosshans and Grangier (2002a), the sender, called Alice, encodes secret key bits in the phase space of coherent states and sends them to an insecure quantum channel, while the receiver, called Bob, measures these incoming signal states with coherent detection. After several steps of data post-processing, a string of secret keys can be finally shared by Alice and Bob. One of the advantages of CVQKD is that it is compatible with most existing commercial telecommunication technologies Gisin et al. (2004); Scarani et al. (2009); Chen et al. (2023), making it easier to integrate into real-world communication links.

In general, a CVQKD system is mainly composed of quantum signal processing and data-postprocessing Grosshans and Grangier (2002b). The former part corresponds to signal modulation, transmission, and measurement, aiming to generate a raw key, while the latter part corresponds to data reconciliation, parameter estimation, and privacy amplification, attempting to extract the final secret key from the raw key. In CVQKD, secret key rate and maximal transmission distance are generally a pair of crucial performance indicators. For a specific CVQKD system, however, there is tradeoff between the secret key rate and the maximal transmission distance: the longer the transmission distance, the lower the secret key rate, and vice versa. The main reason is that the continuous-variable quantum signal used to carry the secret key is extremely weak. Channel loss and excess noise rise as transmission distance increases, resulting in a reduction of signal-to-noise ratio (SNR) Lodewyck et al. (2007). This obliges a coherent detector hardly discriminate between the quantum signal and noise, decreasing the secret key rate. Although some solutions, such as adding an optical amplifier Fossier et al. (2009) and adopting non-Gaussian operation Guo et al. (2017); Li et al. (2016), can effectively improve the performance of the CVQKD system, its improvement seems limited as they are still largely constrained by the imperfect devices.

In recent years, CVQKD using machine learning technologies is becoming a research hotspot, as these technologies can be used for improving the performance of CVQKD without any extra device Liao et al. (2022). More importantly, machine learning technologies can automatically compensate for the negative effects caused by imperfect devices, effectively removing the performance restriction of the imperfect devices. For instance, Ref. Liao et al. (2018) reported a state-discrimination detector based on a Bayesian classification algorithm. This detector has the ability to surpass the standard quantum limit, which can be only achieved by conventional ideal detectors Becerra et al. (2013), so that the maximum transmission distance of the CVQKD system can be significantly increased. Reference Liao et al. (2020a) suggested a multi-label learning-based embedded classifier with the capability to precisely predict the location of the signal state in phase space, so that it can dramatically improve the performance of the CVQKD system as well.

Although these works do reveal that machine learning-based technologies can significantly improve the performance of CVQKD system, an underlying issue seems to be neglected. With an extremely large amount of signal data, the complexity of the majority of machine learning technologies is nearly unacceptable, and this situation is especially severe in high-speed CVQKD system Pirandola et al. (2015). For example, the core machine learning technology used in Ref. Liao et al. (2020a) is based on -nearest neighbor (NN) Cover and Hart (1967), which is one of the most mature classification algorithms. However, NN has a very high complexity as each unlabeled instance has to calculate all the distances between all labeled instances and itself, rendering extraordinary consumption in both time and space. These heavy costs have to be well addressed, otherwise CVQKD using machine learning technologies is unpractical, especially for the high-speed and/or real-time secret key distribution scenarios.

In past few years, quantum machine learning, which is based on quantum algorithms such as Shor’s algorithm Ekert and Jozsa (1996) and Grover’s algorithm Grover (1996), has been developed rapidly. For example, Ref. Wiebe et al. (2012) proposed a quantum linear regression algorithm based on the quantum HHL algorithm Harrow et al. (2009), which exponentially accelerates the classical algorithm. Reference Rebentrost et al. (2014) reported a quantum support vector machine (SVM) which used the high parallelism of quantum computing to improve the classical SVM, thus obtained an exponential speedup. References Aïmeur et al. (2007, 2013) showed that the classical -means algorithm can be accelerated by quantum minimal finding method. The above studies indicate that quantum algorithms contribute to speed up machine learning, thereby improving the computing efficiency.

Inspired by the merits of quantum algorithms, in this work, we propose a high-rate CVQKD scheme based on quantum machine learning. The proposed scheme is quite different from conventional discretely-modulated (DM) CVQKD Leverrier and Grangier (2009, 2011), which divides the whole CVQKD system into three parts, initialization, prediction and data-postprocessing. The initialization part is used for training and estimating quantum classifier, the prediction part is used for generating highly correlated raw keys, and the data-postprocessing part generates the final secret key string shared by Alice and Bob. In particular, a well-behaved quantum -nearest neighbor (QNN) algorithm is designed as a quantum classifier for distinguishing the lossy discretely-modulated coherent states (DMCSs) at Bob’s side. Different from classical NN algorithm, QNN simultaneously calculates all similarities in parallel and sorts them by taking advantages of Grover’s algorithm, thereby greatly reducing the complexity. The performance of the proposed QNN-based CVQKD especially in terms of machine learning metrics and complexity is analyzed, and its theoretical security is proved by using semi-definite program (SDP) method. Numerical simulation shows that the secret key rate of QNN-based CVQKD is explicitly superior to the existing DM CVQKD protocols, and it can be further enhanced with the increase of modulation variance. It indicates that the proposed QNN-based CVQKD is suitable for the high-speed metropolitan secure communication due to its advantages of high-rate and low-complexity.

This paper is structured as follows. In Sec. II, we briefly introduce the CVQKD protocol and classical NN algorithm. In Sec. III, we detail the proposed QNN-based CVQKD. Performance analysis and discussion are presented in Sec. IV and conclusions are drawn in Sec. V.

II CVQKD protocol and classical NN algorithm

In order to make the paper self-contained, in this section, we first retrospect the process of CVQKD protocol, and briefly introduce the classical NN algorithm.

II.1 Process of CVQKD protocol

In general, the whole process of one-way CVQKD protocol includes five steps, i.e., state preparation, measurement, parameter estimation, data reconciliation and privacy amplification. Figure 1 shows the process of conventional CVQKD, and each step is explained as follows.

State Preparation. Alice prepares a train of coherent states whose quadrature values and obey a bivariate Gaussian distribution. Then the modulated coherent states are sent to Bob through an untrusted quantum channel.

Measurement. The pulses sent by Alice are received by Bob who measures these incoming signal states with coherent detector. After enough rounds, a string of raw key can be shared between Alice and Bob.

Parameter Estimation. Alice and Bob disclose part of the raw key to calculate the transmittance and excess noise of the quantum channel. With these two parameters, the secret key rate can be estimated.

Data Reconciliation. If the estimated secret key rate is positive, the low density parity check (LDPC) code is applied to another part of the raw key, aiming to error correction.

Privacy Amplification. Finally, a privacy amplification algorithm is performed based on the hash function, so as to extract the final secret key that is entirely unknown to the eavesdropper Eve.

II.2 Classical NN algorithm

NN is a traditional lazy learning algorithm that uses a majority vote to classify (predict) the grouping of unlabeled data points. For an unlabeled data point and a training set containing labeled data points, NN first finds a set of labeled data points whose similarities with are the top highest among all data pints in training set, and then counts the number of each label, the label with biggest number will be assigned as the label of data point . Figure 2 depicts an example of NN algorithm in 2-dimensional feature space, in which a gray dot denotes an unlabeled data point and it is going to be labeled as green or blue. The gray dot will be assigned to the blue class when due to there are two of the three-nearest labeled data points belong to the blue class while only one point belongs to the green class. Similarly, it will be labeled as green class for as there are four of the seven-nearest labeled data points belong to the green class while only three point belongs to the blue class.

The specific steps of NN can be seen in Table 1. It is worth noting that the similarity is one of the crucial parameters of NN algorithm. Assuming and are the respective feature vectors of unlabeled data point and labeled data point , similarity can be measured by following ways.

Euclidean distance

| (1) |

Cosine similarity

| (2) |

In the above two similarities, Euclidean distance counts the absolute distance of each data point, revealing the difference of each data point’s location in the coordinate system Van Der Heijden et al. (2005). Cosine similarity counts the cosine of an angle between two vectors, revealing the directional difference of each vector Tan et al. (2016). In addition, Manhattan distance Stigler (1986), Hamming distance Waggener et al. (1995) and inner product also can be used as similarity in NN, one should select a proper way according to different applications.

| Input : training data points and their |

| labels , unlabeled data point and |

| hyper-parameter . |

| Output : predicted label of data point . |

| function Predict |

| Load all training data points and unlabeled data |

| point on the register. |

| for to do |

| Compute . |

| end for |

| Sort (descending or |

| ascending). |

| Choose neighbors which are nearest to . |

| Conduct majority voting and assign the label of |

| the majority to the data point . |

| return |

| end function |

III QNN-based CVQKD

Before we detail the proposed QNN-based CVQKD, several concepts need to be introduced. As shown in Fig. 3, the coherent states that Alice prepared are discretely modulated with 8 phase shift keying (8PSK), we equally divide the entire phase space into eight areas, and assign each area to a label . By doing so, each coherent state can be classified into a certain label according to its position. For example, coherent state belongs to label and coherent state belongs to label . Note that although our study is mainly based on 8PSK modulation strategy, other discrete modulation strategies can also be used for the proposed QNN-based CVQKD. Figure 4 shows the change of location in phase space of a modulated coherent state after passing an untrusted quantum channel. It depicts that the transmitted coherent state received by Bob is no longer identical with its initial signal state due to the phase drift and energy attenuation caused by the imperfect channel noise and loss. To detailedly describe this difference, we construct a multi-dimensional feature vector for each received coherent state by calculating Euclidean distances between the received coherent state and each standard 8PSK-modulated coherent state. By extracting these distance features, the features of received coherent state can be extended from three dimensions to eight dimensions . For now, the QNN-based CVQKD can be detailed below.

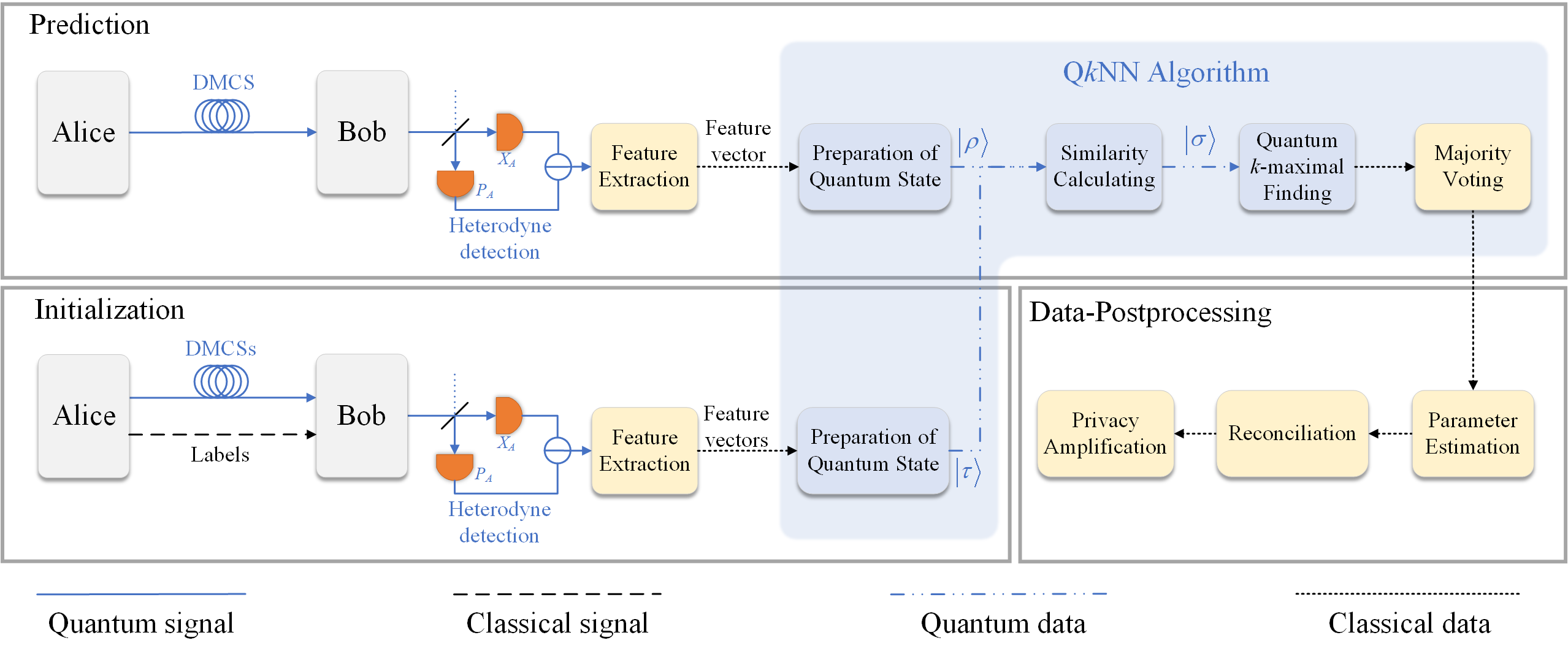

As shown in Fig. 5, the whole QNN-based CVQKD system can be divided into three parts, i.e., initialization, prediction and data-postprocessing.

Initialization. Alice first prepares DMCSs with 8PSK modulation and labels each DMCS which follows the rule shown in Fig. 3. Both DMCSs and their respective labels are sent to Bob who measures the received signals with heterodyne detection, and these DMCSs are called training data. Bob subsequently extracts features from each measurement result to obtain an eight-dimensional feature vector. After collecting enough feature vectors, Bob locally prepares a quantum state to carry the information of all feature vectors. Note that the aim of the initialization part is to prepare quantum state which stores feature information of all labeled DMCSs, so that once the quantum state has been successfully prepared, one does not need to perform this part repeatedly. To guarantee the security, the transmission of labels has to be done without eavesdropping. This can be implemented by multiple ways, such as security monitoring of the communication system when the information of labels is transmitting. Even if the information of labels is compromised, the initialization part can be rerun to prepare new DMCSs and their respective labels.

Prediction. Alice randomly prepares an unlabeled DMCS with 8PSK modulation and sent it to the untrusted quantum channel. Bob receives this incoming signal state and measures it with heterodyne detection. Similarly, an eight-dimensional feature vector for this signal state can be constructed according to its measurement result. Bob then locally prepares a quantum state to carry the information of this feature vector. For now, Bob holds both quantum state that stores the information of unlabeled DMCS and quantum state that stores the information of labeled DMCSs. He subsequently calculates the similarities between these quantum states and stores them into quantum state . After quantum -maximal finding, nearest neighbors of the unlabeled DMCS can be found. Finally, the label whose count is the biggest in nearest neighbors is assigned to the unlabeled DMCS, so that Bob can precisely recover the bit information sent by Alice. After enough rounds, Alice and Bob can share a string of raw key. Compared with the raw key generated by conventional DM CVQKD, the raw key generated by this part is more correlated, as quantum machine learning is introduced for better correcting the bit error caused by channel loss, excess noise and quantum attacks during the signal transmission.

Data-Postprocessing. This part is similar to the postprocessing of conventional CVQKD, which includes parameter estimation, data reconciliation and privacy amplification. Details about these steps can be found in Ref. Leverrier (2015).

With these three parts, secret key can be finally shared between Alice and Bob. In what follows, several critical steps of the proposed QNN-based CVQKD is detailed.

III.1 Preparation of quantum states

As we mentioned above, Bob needs to prepare quantum states ( or ) in both initialization part and quantum computing processing part. The difference is that quantum state is prepared in initialization part to carry the information of all feature vectors that extracted from labeled DMCSs, while quantum states is prepared in prediction part to carry the information of feature vector that extracted from an unlabeled DMCS. Assuming the normalized feature vectors that extracted from labeled DMCSs are and the normalized feature vector that extracted from an unlabeled DMCS is , the quantum states and can be respectively expressed by Wiebe et al. (2014)

| (3) |

and

| (4) |

where is the -th feature value of feature vector , is the dimension of the extracted features ( in our case) and is the number of labeled DMCSs. Obviously, is the superposition state that carries the information of all feature vectors for known (labeled) DMCSs, while is the quantum state that carries the information of feature vector for an unknown (unlabeled) DMCS.

In what follows, we show how to obtain these two quantum states. To prepare quantum state , we first need to prepare an initial state which can be expressed as

| (5) |

where state (or state ) can be obtained by a quantum circuit shown in Fig. 6. See Appendix A for the detailed derivation of initial state . Subsequently, an Oracle is applied to this initial state so that the resultant state can be expressed by

| (6) | ||||

After that, an unitary operation

| (7) |

is applied to the last quantum bit (qubit) of , so that the rotated state

| (8) | ||||

can be obtained. Finally, the quantum state can be obtained by removing the auxiliary qubit of using Oracle . Similarly, the quantum state can be prepared in the same way with initial state . As a result, the information of feature vectors can be stored in the amplitude of quantum states and .

III.2 Similarity calculating

For now, Bob possesses both the quantum state that carries the information of known DMCSs and the quantum state that carries the information of unknown DMCS. He first calculates the fidelity, which can be used as a measure of the similarity, between these quantum states and store it to the amplitude of qubit by performing a controlled-SWAP (c-SWAP) test shown in Fig. 7. The quantum circuit of c-SWAP test is composed of two Hadamard gate and a SWAP operation which obeys , where

| (9) |

Therefore, the function of c-SWAP test is that the last two states will be swapped if the control qubit is or they will not be swapped if the control qubit is .

After passing the c-SWAP test, the input state can be transformed to

| (10) | ||||

where

| (11) |

See Appendix B for the detailed derivation of . It is easy to find that is directly proportional to the fidelity , so that can be directly used for measuring the similarity between and . After times c-SWAP test, a superposition state whose amplitudes contain all similarities can be finally obtained as

| (12) |

In order to facilitate the processing of follow-up quantum search algorithm, amplitude estimation Brassard et al. (2002) is further applied to this superposition state so that the amplitudes of can be stored as a qubit string. Figure 8 shows the quantum circuit for implementing amplitude estimation, which includes two steps i.e., amplitude amplification and phase estimation. Specifically, amplitude amplification is implemented by an unitary operator where performs , obeys

| (13) |

and obeys

| (14) |

while phase estimation is implemented by an inverse quantum Fourier transform (IQFT) which is defined as

| (15) |

where , . After amplitude estimation, a quantum state

| (16) |

can be prepared, where and is the estimate of . See Appendix C for the detailed derivation of quantum state . For now, the similarities can be deemed to have been stored as a qubit string due to is also directly proportional to the fidelity .

III.3 Quantum -maximal finding

Bob now holds the quantum state whose qubit string contains the information of similarities between all known DMCSs and the unknown DMCS. He then finds nearest neighbors of the unknown DMCS by applying quantum -maximal finding algorithm, which is detailed as follows.

As shown in Fig. 9, Bob first randomly selects known DMCSs and records their corresponding indexes into an initial set , the rest indexes are recorded into a set . Then, the Grover’s algorithm Grover (1996) is applied to to find an index whose corresponding satisfying , where . In our case, the quantum circuit for implementing Grover’s algorithm is shown in Fig. 10, which includes a number of Grover iterations and a final measurement. Each iteration is composed of a unitary operator and a Grover diffusion operator . Specifically, denotes conditional phase shift transformation which obeys

| (17) |

where the function is defined as

| (18) |

The operator can be implemented by a CMP operator and a CNOT gate. After passing the CMP operation, the fourth input qubit is transformed to

| (19) |

Then, the CNOT gate is controlled by the above state so that the last input qubit is transformed to

| (20) | ||||

Therefore, the resultant state

| (21) | ||||

can be obtained after applying operator. The Grover diffusion operator is subsequently applied to the first qubit of the resultant state, so that its amplitude will be inversed about average Grover (1997). After the first Grover iteration, the state

| (22) |

where is the number of solutions of Grover’s algorithm, can be obtained. From Eq. (21) and Eq. (22), we can easily find that the amplitude of is amplified. After enough rounds of Grover iteration, the index can be obtained by the final measurement with a probability approaching 1 (Details about this iteration is presented in Appendix D). Bob then replaces with , and starts a new round of Grover’s algorithm. Finally, the indexes of nearest neighbors can be obtained from the final set .

IV Performance analysis and discussion

In this section, we first present the performance of the proposed QNN algorithm, followed by its complexity analysis. Subsequently, the security of the whole QNN-based CVQKD scheme is detailedly analyzed.

IV.1 Performance of QNN algorithm

| Predicted positive | Predicted negative | |

|---|---|---|

| Actual positive | ||

| Actual negative |

As the proposed QNN can be deemed a quantum version of NN algorithm, classical machine learning metrics can be used for evaluating its performance. In machine learning area, the confusion matrix is one of the most widely used concepts to analyze the performance of a certain classification algorithm. Table 2 shows the confusion matrix, in which the true positive () indicates the counts that actual positive data are predicted as positive, false positive () indicates the counts that actual negative data are predicted as positive, false negative () indicates the counts that actual positive data are predicted as negative, and true negative () indicates the counts that actual negative data are predicted as negative.

With confusion matrix, a machine learning metric called Precision () can be defined as

| (23) |

which indicates the proportion of actual positive data in all predicted positive data. This metric is important to our case as raw key is generated from the correct labels in all the predicted labels. Since there are eight labels existed in phase space, we further calculate the precision of each label and average them to obtain a metric Average Precision , i.e.

| (24) |

Obviously, the higher the value of , the more correlated the raw key. Figure 11 shows the average precision of the proposed QNN algorithm with different hyper-parameter . By and large, it can be found that the value of decreases with transmission distance increases, which indicates that the transmission distance is a crucial factor that impacts the performance of QNN algorithm. This is in line with our expectation, as the increased transmission distance will lead to more channel losses, resulting in extremely lower SNR of the received DMCSs. In addition, we find that fluctuates as the hyper-parameter varies, and this situation exists in all distances. To select a proper , we mark the peak value of each line out with a circle. It is observed that these peak values occur when is set from 11 to 17, e.g. at transmission distance of km when , which suggests that the value of should not be set too small or too large. This is because a small value of may lead to overfitting problem and a large value of may result in underfitting problem, while will be decreased by either of the two problems Hastie et al. (2001).

For now, we have investigated the performance of QNN in terms of precision. To comprehensively evaluate a classifier, however, a sole metric is inadequate. Therefore, an overall metric called macro-average Receiver Operating Characteristic (ROC) curve Fawcett (2006), which describes the average True Positive Rate () of a certain multi-class classifier as a function of its average False Positive Rate (), is introduced. For each class, indicates the proportion of the data that is correctly predicted as positive in all actual positive data, and indicates the proportion of the data that is incorrectly predicted as positive in all actual negative data. Their formulas are given by

| (25) |

| (26) |

Figure 12 shows the macro-average ROC curves of the proposed QNN with hyper-parameter . The dashed line is the result of random guess, which illustrates that there is no performance improvement without using any classification algorithm. With macro-average ROC curve, one can explicitly tell the quality of the multi-class classifier: the curve more close to point , the performance better. Obviously, the proposed QNN can dramatically improve the performance of predicting DMCSs. Moreover, it can be observed that these curves are away from the best point with the transmission distance increases, this trend is identical with our previous analysis, which further illustrates that channel loss is important for the performance of QNN algorithm. Besides, we further calculate the area under curve (AUC) Bradley (1997) for each distance and thus obtain AUC value , which is a probability value range from 0 to 1. As a numerical value, can be directly used for quantitatively evaluating classifier’s quality. It can be easily found that our proposed QNN algorithm achieves extremely high overall performance () with transmission distance is km. It is worth noting that the AUC value of random guess is 0.5, while it gets 0.8016 with the proposed QNN even the transmission distance is increased to km. This result demonstrates that the effectiveness of QNN in CVQKD system, therefore, the AUC value can be used to describe the efficiency of quantum classifier in the following security analysis of the proposed QNN-based CVQKD system.

IV.2 Complexity analysis for QNN algorithm

Before presenting the complexity analysis of QNN, let us briefly retrospect the complexity of classical NN first. Assuming there are training data points and each training data point is represented as an dimensional feature vector , the classical NN algorithm first needs to calculate all similarities between the unlabeled data point and each labeled data point , so that the complexity of this step is . Then, the similarities need to be sorted with a certain sorting algorithm such as quick sort, merge sort, heap sort, etc. In general, the complexity of above sorting algorithms is no less than Cormen et al. (2022). Finally, the majority voting requires times to count the number of labels and assigns the label to . As a result, the total complexity of classical NN can be expressed by

| (27) |

In what follows, let us discuss the complexity of the proposed QNN algorithm in detail. As shown in Fig. 5, the whole QNN is composed of four parts, i.e., preparation of quantum states, similarity calculating, quantum -maximal finding and majority voting. For preparation of quantum states, as we detailed in Sec. III.1, both quantum states and are prepared by 3 Oracles (i.e. , and ), so that the complexity of this part is . For similarity calculating, the two quantum states and are first passed through the c-SWAP test quantum circuit to obtain the quantum state that contains the similarity . Note that the complexity of calculating a is Hai et al. (2022). To obtain the superposition state whose amplitudes contain all similarities, the c-SWAP test needs to be performed times, the complexity is thereby increased to . Subsequently, the similarities need to be stored as a qubit string by amplitude estimation. Specifically, the operator is repeatedly performed to estimate the amplitude (see details in Appendix C), and the error probability for estimating satisfies Brassard et al. (2002)

| (28) |

where is the estimation of , and is the iteration times of operator . Obviously, needs to satisfy the following inequation, i.e.

| (29) |

if the error probability . That is to say, the operator needs to be performed at least times () to ensure that the error probability is less than or equal to . Therefore, the total complexity of similarity calculating is . For quantum -maximal finding, let be a set whose elements do not belong to set but are more similar to than some points in set , and the number of elements of set is . Obviously, to find out maximal values, the Grover’s algorithm and replacement need to be repeatedly performed until set is empty, i.e., . Reference Durr et al. (2006) shows that can be reduced to by performing iterations of Grover’s algorithm and replacement when , i.e., the Oracle needs to preform times. Once is reduced to , times Oracles in each round of Grover’s algorithm are required to ensure . Therefore,

| (30) |

times Oracles are required for reducing to 0. From Eq. (30), we can easily find that the complexity of quantum -maximal finding is . Till now, we have presented the complexity analysis of the former three parts, note that the last part of QNN, namely the majority voting, is similar to that of classical NN, so that its complexity remains . Therefore, the total complexity of our proposed QNN is

| (31) |

Figure 13 shows complexity comparisons between the proposed QNN and classical NN. As can be seen in Fig. 13(a), the total complexity of the proposed QNN is much less than that of classical NN, it illustrates that the proposed QNN algorithm could offer a significant speedup over classical NN algorithm. To figure out how the acceleration happens, we further mark each part with different colors and the result shows that the complexities of similarity calculating and -maximal finding (corresponding to sorting in classical NN algorithm) are dramatically decreased by the proposed QNN. It illustrates that the quantum parts of QNN are crucial for speedup. In addition, we find that the complexities for both QNN and classical NN algorithms are affected by several parameters, i.e., the dimension , the number of training data and the number of nearest neighbors . We therefore plot Fig. 13(b), Fig. 13(c) and Fig. 13(d) to investigate the respective influence of each parameter on complexity. As shown in Fig. 13(b) and Fig. 13(c), the complexity of classical NN rises quite sharply with the increase of or , while the complexity of QNN rises very slowly. It illustrates that the proposed QNN algorithm is of clear superiority in addressing high dimensional or large-size classification issues. Figure 13(d) shows that although the complexity of classical NN is apparently larger than that of QNN, the complexities of both algorithms are not sensitive to the hyper-parameter , which illustrates that is not a crucial parameter that heavily affects the complexities of both classical NN and QNN algorithms. It is worthy noting that, to explicitly show the respective trends, the scale of labeled data point is set to 128 in Fig. 13(a), Fig. 13(b) and Fig. 13(d). Actually, is usually far more than for a realistic CVQKD system Leverrier (2015). In such a practical scenario, the complexity gap between QNN and classical NN will be extremely large.

IV.3 Security analysis of QNN-based CVQKD

Till now, we have demonstrated the performance of QNN-based CVQKD in terms of machine learning metrics and have analyzed the complexity of QNN algorithm, both results have shown the advantages of our scheme. In what follows, we present the theoretical security proof for QNN-based CVQKD with semi-definite program (SDP) method Denys et al. (2021), detailed calculations can be found in Appendix E. As known, the asymptotic secret key rate of the conventional DM CVQKD with reverse reconciliation is given by Liao et al. (2020b)

| (32) |

where is the reconciliation efficiency, is the Shannon mutual information between Alice and Bob, and is the Holevo bound of the mutual information between Eve and Bob. However, Eq. (32) does not consider the influence of the introduction of QNN classifier for both legitimate users (Alice and Bob) and the eavesdropper (Eve), it, therefore, has to be amended to suitable for evaluating quantum machine learning-based CVQKD. Due to the data processing is quite different, Eq. (32) can be rewritten as the following form

| (33) |

The difference between Eq. (32) and Eq. (33) lies in two parts. First, the AUC value has to be considered as it describes the efficiency of quantum classifier. Higher AUC value implies higher correlation of raw key between Alice and Bob. Second, term , which represents the Holevo quantity for Eve’s maximum accessible information, is reduced to , where is the probability of discrete uniform distribution when variable . This is because Eve is no longer able to acquire as much information as before, due to some relationships are no longer fixed and public. To be specific, in conventional DM CVQKD, the relationship between each DMCS and its binary presentation is fixed and public. For instance, the key bits of state in QPSK CVQKD is always (0, 0) (see Fig. 1 in Ref. Ghorai et al. (2019)), so that Eve can precisely recover the correct key bits (0, 0) when she successfully intercepts the coherent state . Similarly for 8PSK CVQKD, Eve can precisely recover the correct key bits (0, 0, 0) when she successfully intercepts the coherent state . However, due to the special-designed process of QNN-based CVQKD, the above relationship is no longer fixed and public. Although each DMCS is assigned to a fixed label, such as the label of is and the labels of is shown in Fig. 3, the binary presentation for each label can be randomly assigned by Alice. Since the initialization part is secure, Bob will learn the assignment by the transmitted DMCSs at the end of initialization. While Eve who does not participate the initialization part is completely unaware of the assignment shared by Alice and Bob. She thus can only guess the correct label with the success probability for -PSK is . As can be seen in Fig. 14, in our proposed scheme, Eve’s maximum accessible information is apparently reduced when compared with conventional DM CVQKD, and it can be further decreased by using higher dimensional PSK modulation. This is opposite to the trend in conventional DM CVQKD in which Eve’s maximum accessible information will increase with higher dimensional PSK modulation. It illustrates that the proposed scheme can efficiently prevent eavesdropper from obtaining more useful information, thereby contributing to the increase of final secret key.

Figure 15 shows the performance comparison between QNN-based CVQKD and two conventional DM CVQKD protocols in asymptotic limit. The results demonstrate that the secret key rate of QNN-based CVQKD outperforms other DM CVQKD protocols at all channel losses. In addition, we find that the secret key rate of the proposed scheme can be further increased with the risen modulation variance . This is inconsistent with conventional DM CVQKD whose security has to be guaranteed by small modulation variance Ghorai et al. (2019); Liao et al. (2021). To investigate what caused this inconsistency, we plot Fig. 16, which shows the asymptotic secret key rates of above-mentioned schemes as a function of modulation variance. It can be easily found that the curves of both QPSK CVQKD and 8PSK CVQKD are arched and are located in certain ranges of small modulation variance, and the ranges are getting narrower with channel loss increases. Meanwhile, curves of QNN-based CVQKD are keep rising with the increase of modulation variance, it illustrates that small modulation variance is no longer needed for guaranteeing the security, so that the secret key rate of our proposed scheme can be further increased by setting proper larger modulation variance.

V Conclusion

In this work, we have proposed a high-rate continuous-variable quantum key distribution scheme based on quantum machine learning, called QNN-based CVQKD. The proposed scheme divides the whole process of conventional DM CVQKD protocol into three parts, i.e., initialization, prediction and data-postprocessing. The initialization part is used for training and estimating quantum classifier, the prediction part is used for generating highly correlated raw keys, and the data-postprocessing part generates the final secret key string shared by Alice and Bob. To this end, a specialized QNN algorithm was elegantly designed as a quantum classifier for distinguishing the incoming DMCSs. We then introduced several related machine learning-based metrics to estimate the performance of QNN, and compared its complexity with classical NN algorithm. The asymptotic security proof of QNN-based CVQKD was finally presented with SDP method.

We have comprehensively analyzed the performance of QNN-based CVQKD, the results indicate that our proposed scheme is suitable for the high-speed metropolitan secure communication due to its advantages of high-rate and low-complexity. Besides, it is worthy noticing that the quantum classifier is not limited to the proposed QNN, any other well-behaved quantum classifier can be used for improving CVQKD with the proposed processing framework.

In summary, QNN-based CVQKD is not only an improvement of CVQKD protocol, but also provides a novel thought for introducing various (quantum) machine learning-based methodologies to CVQKD field.

Acknowledgements.

This work was supported by the National Natural Science Foundation of China (Grant No. 62101180), Hunan Provincial Natural Science Foundation of China (Grant No. 2022JJ30163), the Open Research Fund Program of the State Key Laboratory of High Performance Computing, National University of Defense Technology (Grant No. 202101-25).Appendix A Derivation of the initial state

To clearly describe the preparation of initial state, the quantum circuit shown in Fig. 6 is divided into four phases P1-P4. Thereinto, is Hadamard gate which is defined by

| (34) |

we therefore have and . CMP is an unitary operation which obeys

| (35) |

where is the computational basis state that stores as a binary string.

The CMP operation can be implemented by a quantum circuit shown in Fig. 17, which is composed of three controlled NOT (CNOT) gates and four inverted controlled NOT (ICNOT) gates. The CNOT gate flips the controlled qubit if its control qubit is , while the ICNOT gate flips the controlled qubit if the control qubit is . By properly combining these quantum control gates, the controlled qubits can be flipped under certain conditions. For example, after passing the first combined control gate (blue dashed box shown in Fig. 17), the third qubit will be flipped when the other three control qubits are . To implement CMP operation, the quantum circuit of Fig. 17 needs to perform times, where .

In what follows, we present the derivation of preparing the quantum state . Assuming there are qubits’ binary value larger than , so we have . The states of qubits in different phases (P1-P4) and registers (R1-R4) are presented in Table 3. Let us start the derivation with the input quantum state . At first, a gate is applied to R1, the input quantum state is therefore transformed to

| (36) |

Then, a ICNOT gate is applied to R1 and R4, so that the resultant state can be expressed by

| (37) |

After that, the CMP operation is applied to R1, R2 and R3, the quantum state is finally transformed to

| (38) | ||||

From Eq. (38), it is easily to find that the probability of measurement outcome of (0,0) is . Therefore, the quantum state

| (39) |

can be obtained with the probability from R1.

Similarly, we can prepare the quantum state by replace the input qubit with . Then, the initial state

| (40) |

can be prepared.

| P1 | P2 | P3 | P4 | |||

|---|---|---|---|---|---|---|

| R1 |

|

|||||

| R2 | ||||||

| R3 | ||||||

| R4 |

Appendix B Derivation of the probability of measuring the top qubit of c-SWAP test

As shown in Fig. 7, the top qubit is firstly transformed to by a Hadamard gate. After passing the SWAP gate, the whole quantum system turns to . The top qubit subsequently operated by another Hadamard gate, resulting in the final quantum state

| (41) |

If we measure the top qubit of quantum state , the probability of outcome of is . The detailed derivation is as follows.

Let be measurement operator set, where

| (42) | |||

and obeys

| (43) | ||||

where is identity matrix. can be derived by

| (44) | ||||

where and .

Appendix C Derivation for obtaining quantum state by amplitude estimation

After times c-SWAP test, Bob now holds a superposition state (Eq. (12) in the main text) whose amplitudes contain all similarities between all known DMCSs and the unknown DMCS. The aim of amplitude estimation is to store these similarities from amplitudes to a quantum bit string, which is convenient for the processing of follow-up quantum search algorithm.

In fact, the superposition state can be decomposed as , where denotes good state that we needed and denotes bad state that we do not needed. Let denotes the probability that measuring produces a good state, amplitude amplification is first performed to the state by repeatedly applying the unitary operator , where and Brassard et al. (2002). We therefore can derive that

| (45) | ||||

From Eqs. (45), the operator can be further expressed as a matrix form

| (46) |

Assuming , the matrix can be rewritten as

| (47) |

so we have

| (48) | ||||

where and is Pauli Y operator that defined as

| (49) |

Therefore, we can derive that the eigenvalues of are and the eigenstates of are

| (50) | ||||

so that the quantum state can be expanded on the eigenstates basis of as

| (51) | ||||

As shown in Fig. 8, the Hadamard gates are first applied to qubits, so that a quantum state can be produced. Then the operator is controlled by this state to apply times of operator to quantum state , the resultant state is transformed to

| (52) | ||||

After amplitude amplification, phase estimation is subsequently used for estimating the phase information of the resultant state. Thus, after applying IQFT on register R1, the quantum state is transformed to

| (53) |

where is the estimate of . Let us define and , so that and . Thus, , so we have . Therefore, the state can be rewritten as

| (54) |

where

| (55) | ||||

As a result, the similarities between all known DMCSs and the unknown DMCS are stored as a qubit string after amplitude estimation. Note that the quantum state can always be obtained from R1.

Appendix D Grover iteration of Grover’s algorithm

As we mentioned in the main text, the Grover’s algorithm includes a number of Grover iterations and a final measurement. The Grover iteration is defined as

| (56) |

where and Boyer et al. (1998). Assuming there are different values of satisfying , the initialized quantum state can be expressed as

| (57) |

where and . After applying the Grover operator to the , it is transformed to

| (58) | ||||

Extending to the general situation, the quantum state can be obtained after iterations, where

| (59) | ||||

Let where , we have

| (60) | ||||

which can be verified by mathematical induction.

The task of the Grover iterations is to continuously amplify the amplitude , so that the probability of obtaining is infinitely close to 1, i.e., we have the following equation

| (61) | ||||

From Eq. (61), we can derive that

| (62) | ||||

Since can only be an integer, thus we have . In addition, we have when is large enough. Therefore, it needs Grover iterations to find the target index .

Appendix E Calculation of secret key rate for DM CVQKD protocol

In this section, we present the calculation of secret key rate for DM CVQKD protocol with SDP method Denys et al. (2021). As presented in Sec. IV.3, the asymptotic secret key rate of CVQKD protocol with reverse reconciliation and heterodyne detection under collective attack obeys

| (63) |

In the heterodyne detection case, the Shannon mutual information between Alice and Bob is given by

| (64) |

where is the variance of one half of a two-mode squeezed vacuum state, is the modulation variance of Alice, is the total noise referred to the channel input, is the transmission efficiency, is the excess noise, is efficiency of the detector and is noise due to detector electronics.

The Holevo bound of mutual information between Eve and Bob is given by

| (65) |

where , and are symplectic eigenvalues of states’ covariance matrices, which is given by

| (66) |

with

| (67) | ||||

and

| (68) |

with

| (69) | ||||

and the last symplectic eigenvalue . In the above equations, is the channel-added noise, is the detection-added noise, and is the correlation between Alice and Bob. In SDP method, is defined as

| (70) |

where is the annihilation operator, is the creation operator, and

| (71) |

where , and for an -PSK modulation,

| (72) |

with .

References

- Pirandola et al. (2020) S. Pirandola, U. L. Andersen, L. Banchi, M. Berta, D. Bunandar, R. Colbeck, D. Englund, T. Gehring, C. Lupo, C. Ottaviani, J. L. Pereira, M. Razavi, J. S. Shaari, M. Tomamichel, V. C. Usenko, G. Vallone, P. Villoresi, and P. Wallden, Adv. Opt. Photon. 12, 1012 (2020).

- Gisin et al. (2002) N. Gisin, G. Ribordy, W. Tittel, and H. Zbinden, Rev. Mod. Phys. 74, 145 (2002).

- Grosshans and Grangier (2002a) F. Grosshans and P. Grangier, Phys. Rev. Lett. 88 (2002a).

- Gisin et al. (2004) N. Gisin, G. Ribordy, H. Zbinden, D. Stucki, N. Brunner, and V. Scarani, Physics 6, 85 (2004).

- Scarani et al. (2009) V. Scarani, H. Bechmann-Pasquinucci, N. J. Cerf, M. Dušek, N. Lütkenhaus, and M. Peev, Rev. Mod. Phys. 81, 1301 (2009).

- Chen et al. (2023) Z. Chen, X. Wang, S. Yu, Z. Li, and H. Guo, npj Quantum Information 9 (2023), 10.1038/s41534-023-00695-8.

- Grosshans and Grangier (2002b) F. Grosshans and P. Grangier, Phys. Rev. Lett. 88, 057902 (2002b).

- Lodewyck et al. (2007) J. Lodewyck, M. Bloch, R. García-Patrón, S. Fossier, E. Karpov, E. Diamanti, T. Debuisschert, N. J. Cerf, R. Tualle-Brouri, S. W. McLaughlin, and P. Grangier, Phys. Rev. A 76, 042305 (2007).

- Fossier et al. (2009) S. Fossier, E. Diamanti, T. Debuisschert, R. Tualle-Brouri, and P. Grangier, J. Phys. B: At. Mol. Opt. Phys. 42 (2009).

- Guo et al. (2017) Y. Guo, Q. Liao, Y. Wang, D. Huang, P. Huang, and G. Zeng, Phys. Rev. A 95 (2017).

- Li et al. (2016) Z. Li, Y. Zhang, X. Wang, B. Xu, X. Peng, and H. Guo, Phys. Rev. A 93, 012310 (2016).

- Liao et al. (2022) Q. Liao, Z. Wang, H. Liu, Y. Mao, and X. Fu, Phys. Rev. A 106, 022607 (2022).

- Liao et al. (2018) Q. Liao, Y. Guo, D. Huang, P. Huang, and G. Zeng, New J. Phys. 20 (2018).

- Becerra et al. (2013) F. E. Becerra, J. Fan, G. Baumgartner, J. Goldhar, J. T. Kosloski, and A. Migdall, Nat. Photon. 7, 147 (2013).

- Liao et al. (2020a) Q. Liao, G. Xiao, H. Zhong, and Y. Guo, New J. Phys. 22, 083086 (2020a).

- Pirandola et al. (2015) S. Pirandola, C. Ottaviani, G. Spedalieri, C. Weedbrook, S. L. Braunstein, S. Lloyd, T. Gehring, C. S. Jacobsen, and U. L. Andersen, Nat. Photon. 9, 397 (2015).

- Cover and Hart (1967) T. Cover and P. Hart, IEEE Transactions on Information Theory 13, 21 (1967).

- Ekert and Jozsa (1996) A. Ekert and R. Jozsa, Rev. Mod. Phys. 68, 733 (1996).

- Grover (1996) L. K. Grover, in Proceedings of the twenty-eighth annual ACM symposium on Theory of computing (1996) pp. 212–219.

- Wiebe et al. (2012) N. Wiebe, D. Braun, and S. Lloyd, Phys. Rev. Lett. 109, 050505 (2012).

- Harrow et al. (2009) A. W. Harrow, A. Hassidim, and S. Lloyd, Phys. Rev. Lett. 103, 150502 (2009).

- Rebentrost et al. (2014) P. Rebentrost, M. Mohseni, and S. Lloyd, Phys. Rev. Lett. 113, 130503 (2014).

- Aïmeur et al. (2007) E. Aïmeur, G. Brassard, and S. Gambs, in Proceedings of the 24th international conference on machine learning (2007) pp. 1–8.

- Aïmeur et al. (2013) E. Aïmeur, G. Brassard, and S. Gambs, Machine Learning 90, 261 (2013).

- Leverrier and Grangier (2009) A. Leverrier and P. Grangier, Phys. Rev. Lett. 102, 180504 (2009).

- Leverrier and Grangier (2011) A. Leverrier and P. Grangier, Phys. Rev. A 83, 042312 (2011).

- Van Der Heijden et al. (2005) F. Van Der Heijden, R. P. Duin, D. De Ridder, and D. M. Tax, Classification, parameter estimation and state estimation: an engineering approach using MATLAB (John Wiley & Sons, 2005).

- Tan et al. (2016) P.-N. Tan, M. Steinbach, and V. Kumar, Introduction to data mining (Pearson Education India, 2016).

- Stigler (1986) S. M. Stigler, The history of statistics: The measurement of uncertainty before 1900 (Harvard University Press, 1986).

- Waggener et al. (1995) B. Waggener, W. N. Waggener, and W. M. Waggener, Pulse code modulation techniques (Springer Science & Business Media, 1995).

- Leverrier (2015) A. Leverrier, Phys. Rev. Lett. 114, 070501 (2015).

- Wiebe et al. (2014) N. Wiebe, A. Kapoor, and K. Svore, arXiv preprint arXiv:1401.2142 (2014).

- Brassard et al. (2002) G. Brassard, P. Hoyer, M. Mosca, and A. Tapp, Contemporary Mathematics 305, 53 (2002).

- Grover (1997) L. K. Grover, Phys. Rev. Lett. 79, 325 (1997).

- Hastie et al. (2001) T. Hastie, R. Tibshirani, J. H. Friedman, and J. H. Friedman, The elements of statistical learning: data mining, inference, and prediction, Vol. 2 (Springer, 2001).

- Fawcett (2006) T. Fawcett, Pattern Recognition Letters 27, 861 (2006).

- Bradley (1997) A. P. Bradley, Pattern Recognition 30, 1145 (1997).

- Cormen et al. (2022) T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein, Introduction to algorithms (MIT press, 2022).

- Hai et al. (2022) V. T. Hai, P. H. Chuong, et al., Informatica 46 (2022).

- Durr et al. (2006) C. Durr, M. Heiligman, P. Høyer, and M. Mhalla, SIAM Journal on Computing 35, 1310 (2006).

- Denys et al. (2021) A. Denys, P. Brown, and A. Leverrier, Quantum 5, 540 (2021).

- Liao et al. (2020b) Q. Liao, G. Xiao, C.-G. Xu, Y. Xu, and Y. Guo, Phys. Rev. A 102, 032604 (2020b).

- Ghorai et al. (2019) S. Ghorai, P. Grangier, E. Diamanti, and A. Leverrier, Phys. Rev. X 9, 021059 (2019).

- Liao et al. (2021) Q. Liao, H. Liu, L. Zhu, and Y. Guo, Phys. Rev. A 103, 032410 (2021).

- Boyer et al. (1998) M. Boyer, G. Brassard, P. Høyer, and A. Tapp, Fortschritte der Physik: Progress of Physics 46, 493 (1998).