High dimensional optimization under non-convex excluded volume constraints

Abstract

We consider high dimensional random optimization problems where the dynamical variables are subjected to non-convex excluded volume constraints. We focus on the case in which the cost function is a simple quadratic cost and the excluded volume constraints are modeled by a perceptron constraint satisfaction problem. We show that depending on the density of constraints, one can have different situations. If the number of constraints is small, one typically has a phase where the ground state of the cost function is unique and sits on the boundary of the island of configurations allowed by the constraints. In this case, there is a hypostatic number of marginally satisfied constraints. If the number of constraints is increased one enters a glassy phase where the cost function has many local minima sitting again on the boundary of the regions of allowed configurations. At the phase transition point, the total number of marginally satisfied constraints becomes equal to the number of degrees of freedom in the problem and therefore we say that these minima are isostatic. We conjecture that by increasing further the constraints the system stays isostatic up to the point where the volume of available phase space shrinks to zero. We derive our results using the replica method and we also analyze a dynamical algorithm, the Karush-Kuhn-Tucker algorithm, through dynamical mean-field theory and we show how to recover the results of the replica approach in the replica symmetric phase.

I Introduction

Several complex systems can be described by a large number of variables whose dynamics is trying to optimize some cost function under a set of constraints. In the most general setting, the cost function is arbitrary and the constraints may be either inequalities or equalities. In this work, we consider the generic situation in which there is a set of real variables and a set of inequality constraints

| (1) |

each characterized by a function and we consider a cost function .

An example of this situation is found in classification problems in machine learning. In the simplest setting of binary classification, one has a set of inequalities that enforce the correct separation of the training set into two classes. The parameters of the machine (that correspond to the variables here) must be adjusted to correctly satisfy these constraints. Therefore, the supervised learning task is recast into a continuous constraint satisfaction problem (CCSP). In many cases one wants to enforce that, once a solution of the problem is found, it satisfies some requirements. For example, in order to prevent numerical instabilities, one would like to have the parameters of the network be quantities that are of the good order of magnitude and do not explode to infinity. To achieve this, one typically uses regularization schemes as, for example, Ridge regularization. In other cases, one is interested in finding sparse networks in which many of the parameters are zero. This can also be achieved by imposing an appropriate cost function on the parameters Neyshabur (2020).

In all these cases, the machine performing the classification task is defined by the form of the functions and the regularizing cost function is given by which introduces some penalty to prevent that the parameters of the network behave in a way that is not the desired one. In this work, we will be rather generic and we will not focus on any practical application. We will rather focus on the characterization of the landscape of the cost function subjected to the constraints . In particular, we want to find the ground state of the cost function defined by

| (2) |

In order to have a well-posed problem, we will assume that there exists a region of the phase space of such that all constraints are satisfied. We will call this region the SAT region (the terminology comes from constraint satisfaction problems in computer science Franz et al. (2017)). Examples of optimization problems as Eq. (2) can be easily constructed and classified according to the properties of the corresponding cost function. We list here just a set of general cases in which the cost function is:

-

•

separable: this means that each degree of freedom tries to optimize a cost function that is independent of the configuration of the other degrees of freedom. An example in this class is

(3) but other non-convex potentials with local minima may be chosen.

-

•

non-separable and convex: the cost function of each degree of freedom depends on the configuration of part or the whole system and the global cost is a convex function when it is considered in the whole phase space (in absence of the excluded volume constraints). As an example one can consider:

(4) being i.i.d. random vectors. Another example is the following: consider the vector to be unconstrained. Then one wants to minimize the volume of the phase space of the vector , for example by minimizing , such that there is a configuration that satisfies all constraints. This problem is related to the packing problem and has been analyzed in Franz and Parisi (2016); Franz et al. (2015, 2017); Yoshino (2018); Brito et al. (2018); Franz et al. (2019a); Ikeda et al. (2019); Ikeda (2020), see also Geiger et al. (2020) for a review with the corresponding connection to deep learning.

-

•

non-separable and non-convex: we have again a non-separable cost which is also non-convex. As an example, one can think of high dimensional random Gaussian functions Castellani and Cavagna (2005)

(5) and the coupling constants are Gaussian variables of the order of . In other words, one can superimpose a spin glass landscape on top of a continuous constraint satisfaction problem (CCSP) identified by the set of constraints.

This class of optimization problems is quite vast. In the following, we will consider the simplest case of having a simple separable convex optimization cost and the simplest set of non-convex excluded volume constraints. We will show that the non-convexity in the constraints brings about glassiness and marginal stability even when the cost function is convex by itself.

II A toy model

To be concrete we consider a simple toy model. We assume that each variable wants to minimize a simple convex cost function but it is constrained to be in a possibly non-convex region of phase space. We consider the simplest cost function that is

| (6) |

and we want to minimize it under the constraints that

| (7) |

with

| (8) |

where is a random vector whose components are Gaussian random variables with zero mean and unit variance. Furthermore, we constrain to be on the -dimensional sphere . The constraints in Eqs. (7) and (8) define the perceptron continuous constraint satisfaction problem (CCSP). Eqs. (6), (7) and (8) define a quadratic programming problem with inequality constraints Boyd and Vandenberghe (2004). However, as we will see, the topology of the phase space and the nature of the constraints are such that the global optimization problem can become non-convex.

In Gardner (1988); Gardner and Derrida (1988); Franz and Parisi (2016); Franz et al. (2017) the properties of the space of solutions of this CCSP have been extensively studied. As a function of , one has a critical value of the density of constraints below which the CCSP is SAT, meaning that with probability one (for ) the phase space defined by the constraints has a finite positive volume, and above which the problem is UNSAT, meaning that there is no configuration of that satisfies all constraints.

In Franz et al. (2017) the UNSAT phase of the perceptron has been studied by associating a harmonic energy cost to the unsatisfied constraints. In Franz and Parisi (2016) the SAT phase of the perceptron CCSP has been characterized. The present setting, instead, is quite different: we still consider the SAT phase of the perceptron CCSP, corresponding to , but on top of it we want to optimize the cost function of Eq. (6). It is therefore a problem of constrained optimization.

The nature of the constraints changes completely when passes from being positive, where the CCSP is convex Franz and Parisi (2016), to a negative value, where the solution of the CCSP lies at the intersection of non-convex domains which may be in general non-convex.

In order to study the properties of the landscape of this optimization problem, we consider the partition function defined as

| (9) |

where is the inverse of the temperature and is the Heaviside step function. Given the partition function, one constructs the average free energy as

| (10) |

where the overline denotes the average over the random vectors s. To compute the properties of the landscape of the cost function, we need to study the behavior of the free energy in the zero-temperature limit .

The average of the logarithm of the partition function can be computed using the replica method Mézard et al. (1987)

| (11) |

The average over the -th power of the partition function can be obtained by considering to be integer and then taking the analytic continuation down to . Performing the integral over the random vectors s (the details are essentially very close to what is reported in Franz et al. (2017) and we will omit them) we obtain

| (12) |

where is an action and is an integration matrix. For we can evaluate the integral by saddle point. The expression of the function is given by

| (13) |

where we have assumed that independently on 111The fact that is independent on the replica index is a property that holds at saddle point. Indeed one can show that at the saddle point which is therefore independent of .. At the saddle point we have the following interpretation for the overlap matrix :

| (14) |

Therefore is an order parameter that quantifies the overlap between the configuration and the minimum of , while , for , is the overlap between the configurations of different replicas of the system. Note that the spherical constraint on imposes that . The local partition function is given by

| (15) |

where we have defined as the matrix obtained from by removing the first row and column. The saddle point equations for the matrix can be obtained in full generality following the same steps as in Franz et al. (2017). Here we will not repeat the computation and we will consider the replica symmetric solution and its stability.

II.1 The replica symmetric solution and its stability

The replica symmetric ansatz to the solution of the saddle point equations amounts to have for all . The order parameters and are then given by the following saddle point equations

| (16) |

where

| (17) |

and we used the notation according to which the prime is the derivative w.r.t. , denotes a convolution operation while

| (18) |

The stability condition of the replica symmetric solution is given by studying the Hessian of the function De Almeida and Thouless (1978); Mézard et al. (1987); Franz et al. (2017). The replica symmetric solution is stable if the saddle point solution satisfies the inequality

| (19) |

When Eq. (19) becomes a strict equality, the model enters in a glassy regime where multiple minima appear, and the model is characterized by replica symmetry breaking and marginal stability Mézard et al. (1987). The equations (16)-(19) have been derived in the finite temperature regime. However, we are interested in looking at the zero-temperature limit which corresponds to the ground state of the cost function. In order to take the limit, we assume that in this regime

| (20) |

which gives . Substituting this relation in the first equation of Eqs. (16) we get that satisfies

| (21) |

Furthermore, in the zero-temperature limit, we have

| (22) |

which gives

| (23) |

The marginal stability of the RS solution is given by

| (24) |

This relation defines the region in which replica symmetry breaking (RSB) appears. Note that Eq. (20) requires to be positive since is constrained to be . The phase diagram of the model is plotted in Fig. 1 where we considered only the case of which gives rise to non-convex excluded volume constraints.

The red line in the phase diagram represents the replica symmetric approximation for the SAT/UNSAT (jamming) transition line. Above this line, there is no configuration of that satisfies all constraints and therefore the optimization problem makes sense only in the region below this line. This line is simply obtained by taking the limit of the saddle point equations. Indeed, above this line no physically meaningful solution should exist. Therefore this line coincides with the one obtained in Franz and Parisi (2016). Another way to see that the is the right limit to get the SAT/UNSAT line is by noting that at saddle point . We expect that, on this line, only one solution of the CCSP is left and, by rotational invariance of the disorder, it is orthogonal to the global minimum of the cost function. This implies that .

The dashed green line instead corresponds to the line that separates a strictly stable phase below it, where the ground state of the cost function is unique and not critical, from a marginally stable phase where the landscape of the cost function is glassy and local minima are marginally stable. Note that the CCSP defined by the perceptron constraints also undergoes a replica symmetry breaking transition where the space of solutions disconnects before reaching the red line. However this line, plotted also in Fig. 1, is different from the one signaling the onset of glassiness of the cost function and, in particular, it is closer to the SAT/UNSAT transition line. Therefore we get that even if the CCSP is replica symmetric, the landscape of the cost function may be glassy.

II.2 The distribution of gaps

To characterize the phase diagram, we compute the distribution of the gaps defined as the values of computed in the configuration that represents the minimum of the cost function. Using the same approach of Franz et al. (2017), we can show that the distribution of is given by

| (25) |

In the replica symmetric region, we have that is given by

| (26) |

and

| (27) |

Therefore, when the replica symmetric solution becomes marginally stable, the system becomes isostatic, corresponding to , meaning that the number of marginally satisfied constraints equals the number of degrees of freedom in the system. At this point we may ask what happens in the glassy phase, beyond the instability line where the replica symmetric solution breaks down. We do not attempt the RSB solution of the model but we give a simple argument. At the jamming point we know that the allowed phase space of configurations shrinks to a point. This point is jamming critical and isostatic Franz et al. (2017). Therefore we get that, at jamming as well as at the instability transition point, the system is isostatic. We, therefore, conjecture that the RSB phase is again isostatic and jamming critical. This would imply that we have another optimization problem for which the landscape is critical everywhere far from jamming as it happens for other cases Franz et al. (2019b, 2020); Sclocchi and Urbani (2021).

III Karush-Kuhn-Tucker Dynamics

In order to solve constrained optimization problems, one can rely on the so called Karush-Kuhn-Tucker (KKT) conditions. We consider a dynamical version of such conditions given by the following dynamical system

| (28) |

where is a Lagrange multiplier needed to enforce the spherical constraint on the variables . It is simple to show that a fixed point of these equations is a solution of the optimization problem. Indeed if at long times , we have . Instead if we have we have . The variables , which are the Lagrange multipliers of the KKT conditions, are nothing but the forces that the constraints are exerting in order to fix the corresponding variables s to zero. Therefore this system of equations, when it reaches a fixed point, directly gives the statistics of forces corresponding to the that are identically equal to zero.

This set of dynamical equations can be analyzed exactly in the limit through dynamical mean-field theory. We first start by considering the case in which the dynamical system is initialized at random for the while we will fix which is a convenient choice. Then, to analyze the dynamics in the limit, we consider the Martin-Siggia-Rose-Jensenn-De-Dominicis dynamical path integral Castellani and Cavagna (2005) defined as

| (29) |

where the dynamical action is given by

| (30) |

and the overline stands for the average over the random patterns. Taking the average over these vectors gives

| (31) |

where we have introduced the dynamical order parameters

| (32) |

As usual, at the saddle point level by causality. The single gap effective process then gives the equation

| (33) |

where the statistics of the noise is Gaussian and given by

| (34) |

Finally we have

| (35) |

Note that Eq.(33) must be understood as a distributional equation. Furthermore, we have used that at the initial time forces are initialized to . This initialization does not affect the endpoint of the dynamics and provides a way to enforce that all forces are either zero or positive. The single-site effective process is instead

| (36) |

where the statistics of the Gaussian noise is given by

| (37) |

and the kernel is defined as

| (38) |

where the perturbation is an additive perturbation on the right hand side of Eq. (33). Therefore we obtain the following equations for correlation, response and magnetization

| (39) |

Note that the Lagrange multiplier can be obtained directly from the equation for .

From these dynamical equations it follows that

| (40) |

which is a relation connecting the response function to the magnetization .

III.1 The replica symmetric solution

Let us consider what happens in the region where replica symmetry is unbroken. If we define

| (41) |

we immediately have

| (42) |

Considering Eq.(33) and taking the limit we get that

| (43) |

being a normal Gaussian random variable. This equation tells us that if then is a Gaussian random variable constrained to be such that is positive. Therefore the gap distribution is

| (44) |

On the other hand, if then and its distribution coincides with the one of the random variable with the constraint that . Finally we need to establish an equation for and show that it coincides with the one coming from the replica approach. We consider the long time limit of the equation for which gives

| (45) |

where

| (46) |

On the other hand the long time limit of the equation for is obtained by considering the long time limit of the equation for . This gives

| (47) |

which can be combined with Eq.(45) to get

| (48) |

From the definition of we finally have

| (49) |

and therefore using Eqs. (48) and (49) we get Eq. (16). This concludes the derivation of the replica symmetric equation from the KKT dynamics.

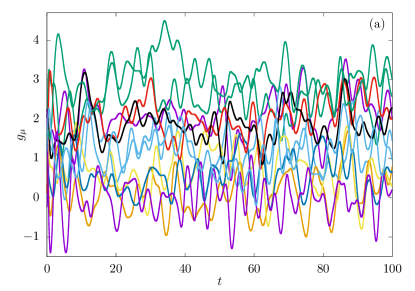

We implemented numerically the algorithm of Eq. (28) and we found that in the RS phase the algorithm goes very quickly to the solution of the optimization problem. In the RSB phase, instead, it seems that the algorithm does not converge and therefore we believe that in this region it makes a chaotic surf on the isostatic landscape.

IV Conclusions

We have considered the generic setting of optimization problems under non-convex excluded volume constraints. We have analyzed a simple example of this kind in which the cost function is separable and convex and in which the non-convex excluded volume constraints are modeled by a negative perceptron.

We have found that, when the number of constraints is low enough, the cost function has a simple ground state which is captured by the replica symmetric solution of the model. At high density of constraints, instead, the optimization landscape undergoes an RSB transition where minima become marginally stable. Remarkably, we find that the RSB transition point happens before the point in which the accessible phase space defined by the constraints splits into glassy regions. We have also shown how to recover these results from the dynamical mean-field theory of the KKT algorithm. We leave the analysis of more complex cost functions for future work.

Acknowledgements.

This work was supported by ”Investissements d’Avenir” LabExPALM (ANR-10-LABX-0039-PALM). A.S. thanks LPTMS where part of this work has been done and the Simons Foundation Grant (No. 454941 Silvio Franz and No. 454953 Matthieu Wyart) for support.References

- Neyshabur (2020) B. Neyshabur, in Advances in Neural Information Processing Systems, Vol. 33, edited by H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin (Curran Associates, Inc., 2020) pp. 8078–8088.

- Franz et al. (2017) S. Franz, G. Parisi, M. Sevelev, P. Urbani, and F. Zamponi, SciPost Physics 2, 019 (2017).

- Franz and Parisi (2016) S. Franz and G. Parisi, Journal of Physics A: Mathematical and Theoretical 49, 145001 (2016).

- Franz et al. (2015) S. Franz, G. Parisi, P. Urbani, and F. Zamponi, Proceedings of the National Academy of Sciences 112, 14539 (2015).

- Yoshino (2018) H. Yoshino, SciPost Phys 4, 040 (2018).

- Brito et al. (2018) C. Brito, H. Ikeda, P. Urbani, M. Wyart, and F. Zamponi, Proceedings of the National Academy of Sciences 115, 11736 (2018).

- Franz et al. (2019a) S. Franz, S. Hwang, and P. Urbani, Physical Review Letters 123, 160602 (2019a).

- Ikeda et al. (2019) H. Ikeda, P. Urbani, and F. Zamponi, Journal of Physics A: Mathematical and Theoretical 52, 344001 (2019).

- Ikeda (2020) H. Ikeda, Physical Review Research 2, 033220 (2020).

- Geiger et al. (2020) M. Geiger, L. Petrini, and M. Wyart, arXiv preprint arXiv:2012.15110 (2020).

- Castellani and Cavagna (2005) T. Castellani and A. Cavagna, Journal of Statistical Mechanics: Theory and Experiment 2005, P05012 (2005).

- Boyd and Vandenberghe (2004) S. Boyd and L. Vandenberghe, Convex optimization (Cambridge university press, 2004).

- Gardner (1988) E. Gardner, Journal of physics A: Mathematical and general 21, 257 (1988).

- Gardner and Derrida (1988) E. Gardner and B. Derrida, Journal of Physics A: Mathematical and general 21, 271 (1988).

- Mézard et al. (1987) M. Mézard, G. Parisi, and M. A. Virasoro, Spin glass theory and beyond (World Scientific, Singapore, 1987).

- De Almeida and Thouless (1978) J. De Almeida and D. J. Thouless, Journal of Physics A: Mathematical and General 11, 983 (1978).

- Franz et al. (2019b) S. Franz, A. Sclocchi, and P. Urbani, Physical review letters 123, 115702 (2019b).

- Franz et al. (2020) S. Franz, A. Sclocchi, and P. Urbani, SciPost Physics 9, 012 (2020).

- Sclocchi and Urbani (2021) A. Sclocchi and P. Urbani, SciPost Phys. 10, 13 (2021).

- Dormand and Prince (1980) J. R. Dormand and P. J. Prince, Journal of computational and applied mathematics 6, 19 (1980).