Hierarchical Knowledge Guided Learning for Real-world Retinal Diseases Recognition

Abstract

In the real world, medical datasets often exhibit a long-tailed data distribution (i.e., a few classes occupy the majority of the data, while most classes have only a limited number of samples), which results in a challenging long-tailed learning scenario. Some recently published datasets in ophthalmology AI consist of more than 40 kinds of retinal diseases with complex abnormalities and variable morbidity. Nevertheless, more than 30 conditions are rarely seen in global patient cohorts. From a modeling perspective, most deep learning models trained on these datasets may lack the ability to generalize to rare diseases where only a few available samples are presented for training. In addition, there may be more than one disease for the presence of the retina, resulting in a challenging label co-occurrence scenario, also known as multi-label, which can cause problems when some re-sampling strategies are applied during training. To address the above two major challenges, this paper presents a novel method that enables the deep neural network to learn from a long-tailed fundus database for various retinal disease recognition. Firstly, we exploit the prior knowledge in ophthalmology to improve the feature representation using a hierarchy-aware pre-training. Secondly, we adopt an instance-wise class-balanced sampling strategy to address the label co-occurrence issue under the long-tailed medical dataset scenario. Thirdly, we introduce a novel hybrid knowledge distillation to train a less biased representation and classifier. We conducted extensive experiments on four databases, including two public datasets and two in-house databases with more than one million fundus images. The experimental results demonstrate the superiority of our proposed methods with recognition accuracy outperforming the state-of-the-art competitors, especially for these rare diseases.

Index Terms:

Deep learning, retinal diseases, long-tailed classification, multi-label classification, fundus imagesI Introduction

Retinal diseases such as diabetic retinopathy (DR) and glaucoma are the leading causes of blindness [1, 2]. These diseases usually have mild manifestations in the early stages, but would cause irreversible damage to the optic nerves in the later stages [3]. Triage screening with a fundus camera or optical coherence tomography (OCT) instrument is essential for early detection of these retinal diseases. However, manual fundus screening requires ophthalmologists to review and report fundus images, which is time-consuming.

The automated screening of retinal diseases has long been recognized and has attracted much attention [4, 5]. Recent studies have demonstrated the success of deep learning-based models for screening retinal diseases such as diabetic retinopathy (DR) and glaucoma [2, 6]. Gargeya et al. [7] used features extracted from CNN and metadata information fed into a decision tree model for binary classification. Araújo et al. [8] proposed a multi-instance learning and uncertainty-based framework for DR grading. For glaucoma detection, the optic disc-to-cup (OD/OC) ratio is always considered. Dos et al. [9] designed a phylogenetic diversity index module for semantic feature extraction for glaucoma diagnosis. Fu et al. [6] proposed a novel disc-aware ensemble network, which integrates the deep hierarchical context of the global fundus image and the local optic disc region.

Many existing works proved to be effective for detecting some specific diseases and lesions under a controlled test environment [2, 6, 8]. However, the pathological changes in the fundus are diverse and heterogeneous in appearance, and comorbidity is difficult to define. For example, more than 40 types of retinal diseases have been categorized in modern ophthalmology research [11, 12], and many of them, such as Coats’ disease, retinoschisis, are rare diseases, resulting in a long-tail characteristic in terms of pathology distribution for modeling. From a machine learning perspective, those diseases and lesions that are underrepresented in the training set may not perform well in real clinical testing settings due to a lack of recognition of the corresponding pathologies. Furthermore, diagnosing the presence of multiple diseases is very critical in clinical practice, especially for those rare diseases that are easily ignored clinically.

Recently, the diagnosis of multiple retinal diseases, often defined as a multi-label classification task, using deep neural networks has attracted much attention. Wang et al. [13] developed a three-stream framework to detect 36 retinal lesions/diseases. Quellec et al. [14] used few-shot learning to perform rare pathology detection on the OPHDIAT dataset [15], which consists of 763,848 fundus images with 41 conditions. Ju et al. [12] used prior knowledge (e.g., the region information) to improve the detection of 48 retinal diseases. A recently published dataset [10] covers 53 classes of fundus diseases that may appear in clinical screening. As shown in Fig. 1, this dataset has a typical long-tailed distribution and the ratio of head classes to tail classes exceeds 100. The problem of severe class imbalance is very common in many medical datasets. Although the above methods show promising results for the diagnosis of various retinal diseases, there are obvious limitations: (1) The human expert knowledge in the medical domain/ophthalmology, such as hierarchical information, is underrepresented. Leveraging such information helps to alleviate the training difficulty in a more orderly way [13, 12]. (2) Some cases may have more than one retinal disease, leading to a label co-occurrence problem [16]. (3) Most existing works only train the model from a small database (less than one hundred thousand images), and therefore the generalization ability of the models in a real-world scenario is hardly guaranteed.

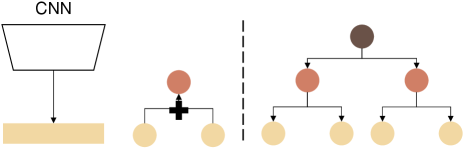

In this work, we propose a novel framework for real-world retinal disease recognition. Our proposed method is well applicable to learning from long-tailed data distributions. Our motivation is based on two main observations. First, incorporating the hierarchical information into the model would benefit the feature representation learning for those similar classes with shared features, which can come from majority and minority classes [17]. Second, the model learning from the original imbalanced distribution obtains a better feature representation, i.e., convolutional layers, while the model learning from a rebalanced distribution shows a fairer and more unbiased classifier [18, 19], i.e., a fully connected layer. Following these two observations, we first exploit the prior knowledge in retinal diseases, i.e., the hierarchical information for all categories organized from coarse to fine, to help the model learn better representations in a hierarchy-aware manner. Then, we use the regular instance-balanced sampling and a novel instance-wise class-balanced sampling to train two teacher models for the feature-balanced representation and less biased classifier, respectively. Finally, we distill the knowledge from the two teacher models into a unified student model. Our main contributions are summarized below:

-

1.

We propose to improve the learning of the feature representation during the model training by injecting hierarchical information as prior knowledge. This method can learn a well-generalized feature representation from semantic information, which facilitates the recognition of similar diseases.

-

2.

We propose a novel hybrid knowledge distillation method to help the model learn from multiple teacher models simultaneously. This results in a feature-balanced representation and a less biased classifier learned by the student model.

-

3.

Experimental results on two public and two in-house datasets demonstrate that our methods can generalize well to various datasets and surpass the existing state-of-the-art methods.

-

4.

To the best of our knowledge, this is the first work to recognize more than 50 kinds of retinal diseases from the in-house database of more than one million fundus images. We collaborate with more than 10 experts in ophthalmology and release a carefully designed three-level hierarchical tree covering more than 100 retinal diseases. We hope that it can contribute to the research community to address the challenges.

II Related Work

II-A Retinal Diseases Recognition

The computer-assisted diagnosis (CAD) methods for retinal diseases such as DR, Glaucoma and age-related macular degeneration (AMD) have been long recognized. Gulshan et al. [2] and Gargeya et al. [7] proposed to use a CNN for binary classification of with/without DR. Zhang et al. [20] presented an ensemble strategy to perform two-class and four-class classifications. Some methods [21, 22, 23] proposed to combine the segmentation results and grading results to provide the interpretability with the internal correlation between DR levels and lesions. Besides the OC/OD area-based method [6] mentioned above, there are some previous works that generate evidence maps for glaucoma diagnosis. Zhao et al. [24] proposed a two-stage cascaded approach that obtains unsupervised feature representation of fundus image with a CNN and cup-disc-ratio value regression by random forest regressor. The automated diagnosis of AMD has also been studied [25, 26]. Ju et al. [27] leveraged the common features between DR and AMD, then improve both accuracy under a knowledge distillation and multi-task manner.

Although some specific retinal diseases are fully studied, the condition of the fundus is complex. Most of the existing methods do not promise the robustness and ability to diagnose other diseases, especially rare ones. Kaggle EyePACS dataset [28] consists of 35,126 training images graded into five DR stages. However, it is found that there are at least more than 30 kinds of retinal diseases that are mislabeled by the original annotators [10]. Ignoring those out-of-distribution categories leads to a huge risk. Wang et al. [13] first used a multi-task framework to detect 36 kinds of retinal diseases, respectively. However, it requires extra annotations for the location of the optic disc and macula area. Quellec et al. [14] also took the features among diseases into consideration and leveraged the few-shot learning technique to perform rare pathologies recognition. The existing methods are inspiring but show some limitations: those methods consider the prior knowledge of retinal diseases, such as the common features (lesions) and locations shared by some diseases, but still lack direct insights for leveraging some prior knowledge or cognitive laws.

II-B Long-tailed Classification

We group existing methods for long-tailed learning into three main categories based on their main technical contributions, i.e., re-sampling, re-weighting, and feature sharing.

Re-smapling methods try to balance the distribution by over-sampling the minority-class samples or under-sampling the majority-class samples. Kang et al. [19] proposed a two-stage training strategy to train the feature extractor and classifier from a uniform and re-sampling distribution, respectively. Zhou et al. [18] proposed to design a two-branch network to achieve one-stage training with the same idea. Wang et al. [29] presented a curriculum learning-based sampling scheduler. Most of these works focus on single-label datasets. For multi-label datasets, over-sampling of minority class will sometimes sample the majority class at the same time due to the label co-occurrence and therefore leads to a new imbalanced condition. To handle this challenge, Wu et al. [16] extended the re-sampling strategy into a multi-label scenario and introduced a regularization term to overcome the over-suppression from negative samples.

Re-weighting methods aim to design robust loss functions for learning from imbalanced data. Focal loss [30] computes weight for each training sample and achieves Hard Samples Mining according to the prediction probabilities. The increase in the sample brings about a diminishing return on performance for those majority classes. Cui et al. [31] proposed to assign a new variable to increase the benefit from those effective samples. Cao et al. [32] proposed an effective training strategy, which allows the model to learn an initial representation while avoiding some of the complications associated with re-weighting or re-sampling.

Feature Sharing methods aim to learn the general knowledge from the majority classes and then transfer it to the minority classes. Liu et al. [33] proposed OLTR, which learns a set of dynamic meta embedding to transfer the visual information knowledge of the head to the tail category. Also, they designed a memory set that allows tail categories to utilize relevant head information by calculating the similarity. Xiang et al. [34] found that learning from a less-imbalanced subset suffers from little performance loss and proposed to train multiple shot-based teacher models, then guide the training of a unified student model. Li et al. [35] proposed balanced group softmax (BAGS) to improve long-tailed object detection by grouping the categories with similar numbers of training instances. BAGS implicitly modulates the head and tail classes are sufficiently trained, without requiring any extra sampling for the tail classes.

III Datasets

III-A Dataset Definition

To address the challenge of long-tailed retinal diseases recognition, we evaluate the proposed methods on two in-house and two public datasets with different scales, including the number of samples, the number of classes, the imbalance ratio, and the degree of label co-occurrence, e.g., label cardinality.

Here, we give the quantitative metrics for the main characteristics of long-tailed retinal disease recognition. The first is how imbalanced the original distribution is. Formally, for the original distribution where each indicates a feature vector and each is an associated one-hot label. The indexes of k kinds of categories are sorted from most to least with where denotes the number of instances of the category. Thus, the imbalance ratio can be simply described as . Moreover, as we claimed that there is label co-occurrence among the original long-tailed distribution, and most re-sampling strategies will lead to a new inner-class imbalance [16] (we have a detailed analysis of this in Sec. 6). Here, we introduce several useful indicators for measuring the label-occurrence [36, 37]. The first is label cardinality which indicates the average number of labels per example: . The characteristics of the four datasets are shown in Table I.

| Dataset | RFMiD | ODIR | Retina-100K | Retina-1M |

|---|---|---|---|---|

| Train | 1920 | 7000 | 75714 | 839890 |

| Val | 640 | 1000 | 9335 | 104981 |

| Test | 640 | 2000 | 9477 | 104987 |

| Class | 29 | 12 | 48 | 53 |

| 80 | 309.8 | 828.56 | 78782.86 | |

| 1.2864 | 1.1142 | 1.3439 | 1.5046 |

III-B Public Datasets

| Coarse | Fine |

|---|---|

| Normal | Normal |

| DR | NPDRI, NPDRII, |

| NPDRIII, PDR | |

| Cataract | Cataract |

| Glaucoma | Glaucoma |

| AMD | Dry AMD, Wet AMD |

| Myopia | Pathological myopia |

| Hypertensive retinopathy | Hypertensive retinopathy |

| Others | Others |

III-B1 RFMiD

The RFMiD dataset [38] consists of 3200 fundus images captured using three different fundus cameras with 46 conditions annotated through adjudicated consensus of two senior retinal experts. The RFMiD dataset was originally divided into 1,920 / 640 / 640 images for training / validation / testing. We followed the setting in the RFMiD challenge, the diseases with more than 10 images belong to an independent class and all other disease categories are merged as “OTHER”. This finally constitutes 29 classes (normal + 28 diseases or lesions) for disease classification. Depending on the ratio of the number of samples in a category to the category with the largest number of samples, we assign it to one of the groups many, medium, or few. In the RFMiD dataset, given sorted 29 classes, we have 6 / 8 / 15 classes for groups many / medium / few, respectively. Noted that the annotated classes in RFMiD are a blend of diseases and lesions. That is, a lesion may be present in more than one disease. To address this issue and to better utilize the proposed methods described in Sec. IV-B, we collaborated with experts with rich experience in ophthalmology and build a hierarchy tree with four levels for the RFMiD dataset. For more details, please refer to our supplementary files.

III-B2 ODIR

Ocular Disease Intelligent Recognition (ODIR) [39] is a structured ophthalmic database of 5,000 patients with age, color fundus photographs of left and right eyes, and doctors’ diagnostic keywords. Specifically, the ODIR dataset was originally divided into 8,000 / 1,000 / 2,000 images for training / off-site testing / on-site testing. Annotations were provided by trained human readers with quality control management. The classes of annotations can be divided into two levels: coarse and fine. There are 8 classes at the coarse level: Normal (N), Diabetes (D), Glaucoma (G), Cataract (C), Age-Related Macular Degeneration (A), Hypertension (H), Pathologic Myopia (M), and Other diseases/abnormalities (O), where one or more conditions are given on a patient-level diagnosis. The fine-level annotation is given on the left/right eye. In this work, we selected 12 fine classes to build the hierarchical tree, and the details are shown in Table II. In the ODIR dataset, given sorted 12 classes, we have 3 / 6 / 3 classes for groups many / medium / few, respectively. We directly exploit the coarse/fine annotation design for our proposed hierarchy-aware pre-training.

III-C In-house Datasets

Our collected private datasets were acquired from private hospitals over the time span of 10 years. Some commonly used datasets are also included, such as ODIR and RFMiD. Each image was labeled or relabeled by 3 - 8 senior ophthalmologists. A sample is retained only if more than half of the ophthalmologists are in agreement with the disease label, or the sample will be assigned to re-labeled processing with the discussion of all ophthalmologists. In this study, more than one million samples are selected to form the two datasets, Retina-100K and Retina-1M, which consist of more than 50 kinds of retinal diseases. The Retina-100 K dataset is randomly sampled from the Retina-1M dataset, some rare diseases with no more than 10 instances are not sampled, resulting in the number of categories being only 48. It should be noted that some low-quality fundus images are potentially at risk of being misdiagnosed as cataracts. Therefore, a group of quality measurement categories is also added. In addition, we found that some non-fundus images could also be mistakenly predicted as retinal diseases during the inference stage. Similarly, we added a category for non-fundus images to reduce this risk.

We would like to emphasize that this is the first study that attempts to train the DL-based model for retinal disease recognition from a database with more than one million samples. It can be found that the Retina dataset exhibits an extreme long-tailed distribution with closing to 80,000. It is noticed that, with the increase in the number of samples, the samples of various categories are also enriched, and the label co-occurrence is reduced (e.g., 1.3439 for Retina-100K and 1.5046 for Retina-1M in terms of .) However, there are also some samples that exhibit a high label co-occurrence (e.g., it is counted that 5,326 samples exist more than five diseases in Retina-1M).

The sorted classes are divided into many, medium, and few groups. The cut-off points and the number of samples ratio are many / medium / few 100 / 50 / 10 for Retina-100K; many / medium / few 1000 / 200 / 10 for Retina-1M.

Like the two public datasets mentioned above, all diseases are mapped into a semantic hierarchical tree. Specifically, we regard the 50+ kinds of diseases as the base/finest category, and we divide them into several groups according to their characteristics. There are three hierarchical levels for each base category in total, as shown in Fig. 2. For instance, a sample is labeled as big drusen, and it also belongs to drusen and AMD. There are also three other base categories in the drusen subset: small drusen, medium drusen, and non-macular drusen. For a better overview of how our hierarchies are conducted and further reproducibility, we give a detailed description of the group generation for different hierarchies in our supplementary files.

IV Methodology

In this section, we first define basic notations for long-tailed multi-label classification in retinal disease recognition. In Sec. IV-B, we introduce a novel hierarchy-aware pre-training, which leverages the pre-defined hierarchy of retinal diseases for more efficient representations training. In Sec. IV-C, we analyze how the naive resampling strategies can fail in a multi-label setting. Then we present an instance-wise class-balanced sampling technique to address this challenge. Finally, we propose to use hybrid multiple knowledge distillation to bridge the gap for feature representation and classifier bias following the two-stage methods [18, 19]. The overall training process is outlined in Algorithm 1.

IV-A Problem Definition

Suppose the original data distribution , where are the N instances, and denotes the associated labels. Specially, for a multi-label setting, each sample , where denotes the total number of possible categories and , i.e., = 1 indicates the presence of the category in image and = 0 otherwise. All indexes of category are sorted into in decreasing order with . Our goal is to improve the training of a deep neural network model (feature extractor and classifier ) from the long-tailed fundus database for the recognition of various retinal diseases.

IV-B Pre-Training with Hierarchical Information

The classes of objects in the real world naturally exhibit a hierarchical form, e.g., from domains to species in taxonomy. For instance, we show three datasets both of which define a hierarchy-tree structure for various categories. Here, we define the categories with the finest semantic meaning as child class, some child classes with common features or characteristics in semantics can be grouped into a coarse class, defined as parent class. For example, in COCO [40], the parent class Vehicle consists of several child classes such as bicycle and car. However, those classes share only a little semantic similarity. ETHEC [17] is a widely-used dataset for hierarchical classification. Although Pieris and colia belong to the family Pieridae, it is still difficult to connect them visually.

Compared with natural images, most retinal diseases can be divided into several subclasses as the lesions progress, such as diabetic retinopathy. Therefore, using the hierarchical information to train the retinal disease diagnosis model shows significant advantages. Different from previous work [12], which presented 3 kinds of 2-level relational subsets generation: shot-based, region-based and feature-based. In this study, after the discussion with more than 10 senior ophthalmologists, we refined the hierarchical mapping by taking both region information and feature information into consideration, as we presented in Sec. III-Datasets111We have included a detailed description of hierarchical mapping in our supplementary files.. In the following parts, we will give a detailed explanation of how to incorporate the defined hierarchical information into the training of deep neural networks.

Our main idea is to minimize the classification loss of all categories over different hierarchical levels. Assuming we have hierarchical levels, our target loss could be:

| (1) |

where BCE denotes the binary cross-entropy loss. A potential solution for hierarchy-aware constraints could be per-level classifiers [17]. The model explicitly outputs separate predictions per level for a given image using classifiers. However, per-level classifiers lose connection across different hierarchical levels since the parameters of each classifier are optimized independently.

In this study, we introduce Multi-label Marginalization classifier (MLMC) as an extension of the marginalization classifier [17], which is shown in Fig. 5, to inject the hierarchical information of retinal diseases into the model training. Formally, a single classifier outputs a probability distribution over the final level (level 1) of the class hierarchy (finest classes). Instead of building classifiers for the remaining parent classes of levels, we compute the probability distribution over each one by summing the probability of their corresponding child classes.

Given the parent category in level and its corresponding child classes in level , we can obtain its predicted probabilities for level :

| (2) |

where denotes the sigmoid activation function. Then, we calculate the loss of parent category using general BCE loss:

| (3) |

It should be noted that, for the prediction probabilities of the lowest hierarchy , we directly use the logits from the outputs of fully-connected layer :

| (4) |

Then, losses across all levels are summed for global optimization. Compared with per-level classifiers, although MLMC does not explicitly predict scores of parent classes, the models is still penalized for incorrect predictions across the levels. Also, as we mentioned in Sec. III-B1, for those child classes that could belong to more than one parent class, MLMC still has good generalization ability by simply summing the logits of the corresponding classes to different parent classes.

IV-C Instance-wise Class-balanced Sampling

In this section, we introduce two commonly used sampling strategies in the long-tailed multi-class classification: instance-balanced sampling and class-balanced sampling. Then we give an experimental analysis to explain why these two sampling ways do not work well in a multi-label setting. Finally, we propose to use an instance-wise class-balanced sampling to handle this scenario.

IV-C1 Instance-balanced Sampling

In the multi-label classification, we always train a deep neural network by minimizing a binary cross-entropy (BCE) loss:

| (5) |

where denotes the predicted results for the -th sample in the class of . It can be seen that each example obtains the same sampling probability in a mini-batch during the training. However, the head classes will be sampled more frequently than the tail classes in a long-tailed distribution dataset and the model tends to under-fit those classes with fewer samples, which results in a prediction bias.

IV-C2 Class-balanced Sampling

Given the sampling probability for each sample in a mini-batch, and we have the in an instance-balanced sampling. Instead, class-balanced sampling aims to assign an equal sampling probability for each category in a mini-batch (See Fig. 6-(b)). Class-balanced sampling is a simple but effective trick for training models on imbalanced data and has been demonstrated to be a necessary component in many state-of-the-art works [18, 19]. However, there are two disadvantages, especially for a multi-label setting. First, under an ideal condition, we have examples from kinds of categories in a mini-batch with a batch size of . In this case, the instance-level sampling probability becomes . Since , those examples from the head classes will be less exposed to the model during the training phase, so the learned feature space is incomplete. Second, due to the label co-occurrence, over-sampling an instance that belongs to both of a head class and a tail class can bring a new relative imbalance issue, as shown in Fig. 6-(c).

IV-C3 Instance-wise Class-balanced Sampling

As illustrated in Fig. 6-(c), wrongly sampling those samples with label co-occurrence can release new relative imbalance. To address the above limitations of sampling multi-label data, an intuitive solution is to ’ignore’ those samples covering too many categories and avoid sampling such samples as much as possible. Hence, we present the instance-wise class-balanced sampling (ICS) strategy as [16, 41]. Since we have the expected ideal sampling probability for one sample from category : and the actual sampling probability: . Then, a sampling factor is used to re-balanced the sampling probability , then the BCE loss becomes:

| (6) |

However, we find that some mild diseases which are also in the head class, such as tessellated fundus, exist in more than half of the samples from other categories, which push the sampling factor close to zero and complicates the optimization. Unlike [16] using two hyper-parameters to map the sampling factor near to one, we directly square it so as to increase rapidly, and have the sampling probability for those samples with different co-occurrence degrees discriminative. Hereafter, we indicate ICS as for simplification.

IV-D Hybrid Multiple Knowledge Distillation

Xiang et al. [34] and Ju et al. [12] have explored knowledge distillation in long-tailed classification. The original long-tailed distribution is divided into several subsets, which are in a relatively balanced status. Those subsets are used to train several teacher models, which are then distilled into a unified student model. However, there are some obvious shortcomings: (1) the divided subsets result in an incomplete distribution and limited features representation learned by the teacher model which may constraint the performance of the student model; (2) most performance improvements benefit from the knowledge distillation instead of learning from a relatively balanced subset; (3) some rare diseases can not directly be divided by independent feature-based or region-based rules proposed by [12].

Another observation is that, two-stage learning methods [19, 42] indicates that “training from imbalanced distribution produces a strong feature representation and the instance-based sampling produces a less-biased classifier.” To this end, we propose to enhance the two-stage learning and multiple knowledge distillation by introducing a novel hybrid multiple knowledge distillation method which can distill efficient information for long-tailed learning on both feature-level and classifier-level, i.e., logits-level [43].

Formally, we first trained two teacher models and using general classification loss (e.g., MLMC) and re-sampling classification loss (e.g., ICS-MLMC). Inspired by two-stage training [18, 19, 44] that model learns a good representation for feature extractor under an original distribution, we first leverage the feature distillation from to assist the student model training. Given the student model , The feature-level knowledge distillation can be formulated as follows:

| (7) |

where the extracted feature , and to calculate the cosine distance (similarity) between output features from teacher and student models. Then, the standard KD is used to distill the knowledge from to the student model, which can be formulated as:

| (8) |

where is the hyper-parameter for temperature scaling and is the Kullback-Leibler divergence loss: . Hence, we have the total loss:

| (9) |

where and are used to control the KD loss weights while for prevent from being close to zero. For the simplification of the hyper-parameters searches, we keep here.

Hybrid multiple knowledge distillation enables the teacher model to train from the original distribution using a traditional knowledge distillation manner [43]. In this way, teacher models maintain the complete feature representations, to prevent the student model’s performance from being limited by itself performance bottlenecks.

V Experiments

V-A Implementation Details

We use ResNet-50 [45] with pre-trained weights from ImageNet as our backbone network. The input size is 512 × 512 for two in-house datasets and 224 × 224 for two public datasets, respectively. We apply Adam to optimize the model. The learning rate starts at and decreases ten-fold when there is no drop in validation loss till with the patience of 5 epochs. The and are set as 0.2. The is set as 10. We apply regular data-augmentation transformations during the training phase, such as random crop and flip. Following most long-tailed multi-label works [16, 44], we use the mean average precision (mAP) as the evaluation metric. All the results are calculated after 5-time running with random seeds and a batch size of 128. In all results presented, we report the 5-trial average performance and mean standard deviation. All experiments are implemented using the PyTorch platform and 8 × NVIDIA RTX 3090 GPUs.

| Dataset | Retina-100K | Retina-1M | ||||||

|---|---|---|---|---|---|---|---|---|

| Methods | many | medium | few | average | many | medium | few | average |

| ERM | 70.89 (0.13) | 71.93 (1.26) | 35.90 (3.74) | 59.57 (1.71) | 84.70 (2.06) | 61.57 (1.85) | 41.83 (2.96) | 62.70 (2.29) |

| RS | 65.72 (2.12) | 67.17 (0.93) | 36.99 (1.21) | 56.63 (1.85) | 80.66 (0.82) | 58.45 (0.51) | 42.72 (1.73) | 60.61 (1.02) |

| RW | 71.32 (0.70) | 73.11 (4.67) | 39.03 (3.68) | 61.15 (3.02) | 84.02 (1.45) | 63.98 (3.31) | 43.69 (4.40) | 63.90 (3.05) |

| OLTR [33] | 70.22 (1.52) | 72.08 (0.60) | 39.00 (0.88) | 60.43 (1.00) | 80.10 (0.12) | 62.31 (0.35) | 42.01 (0.49) | 61.47 (0.32) |

| RSKD [12] | 70.65 (2.27) | 73.98 (1.38) | 41.56 (0.47) | 62.06 (1.38) | 80.22 (0.57) | 63.88 (0.40) | 44.10 (2.39) | 62.73 (1.12) |

| Focal Loss [30] | 72.84 (1.97) | 73.37 (1.42) | 39.13 (2.23) | 61.78 (1.87) | 85.74 (1.52) | 62.15 (0.05) | 42.99 (0.59) | 63.63 (0.72) |

| LDAM [32] | 71.24 (3.54) | 73.75 (3.68) | 39.12 (0.86) | 61.37 (2.70) | 85.62 (0.10) | 63.28 (0.69) | 42.05 (1.14) | 63.65 (0.64) |

| CBLoss-Focal [31] | 51.93 (1.30) | 50.66 (3.63) | 20.79 (0.42) | 41.13 (1.78) | 77.14 (0.78) | 50.69 (2.69) | 20.66 (1.10) | 49.50 (1.53) |

| DBLoss-Focal [16] | 72.61 (1.63) | 72.39 (0.53) | 38.59 (0.08) | 61.27 (0.74) | 85.99 (3.08) | 62.15 (2.08) | 43.22 (1.26) | 63.79 (2.14) |

| ASL [46] | 72.94 (0.56) | 73.67 (1.40) | 39.21 (1.63 | 61.94 (1.20) | 85.10 (2.60) | 63.79 (2.43) | 43.45 (1.29) | 64.11 (2.11) |

| baseline-original | 71.18 (1.32) | 72.33 (2.01) | 37.39 (2.35) | 60.29 (1.89) | 85.02 (2.56) | 62.27 (1.03) | 42.17 (1.91) | 63.15 (1.83) |

| baseline-ICS | 70.67 (1.03) | 73.40 (1.91) | 40.95 (2.08) | 61.67 (1.67) | 84.86 (1.12) | 62.39 (2.56) | 43.44 (1.78) | 63.56 (1.82) |

| Ours | 73.86 (1.23) | 74.75 (0.96) | 43.82 (1.36) | 64.14 (1.19) | 85.79 (1.64) | 64.00 (0.92) | 44.28 (1.38) | 64.69 (1.32) |

-

1

The sorted classes are divided into many, medium and few groups and mAPs are calculated accordingly.

-

2

For the global evaluation, we report the average performance of three groups (denoted by average).

-

3

The best and second best performance are marked in red and blue, respectively.

V-B Comparison Study on Two in-house Datasets

In this section, we give a comprehensive comparison study on two in-house datasets with various baselines including some state-of-the-art works for long-tailed classification: (1) Empirical Risk Minimization (ERM); (2) vanilla Re-sampling (RS) ; (3) vanilla Re-weighting (RW); (4) OLTR [33]; (6) Focal Loss [30] ; (7) LDAM [32]; (8) CBLoss [31] (9) DBLoss [16]; (10) ASL [46].

The overall results are shown in Table III. Different baseline methods are grouped by their model designs, such as loss functions. The results are presented by groups many, medium and few to test how each method reacts to classes with different numbers of samples. In the following, we also use the head and tail classes to refer to group many and group medium. We report the average results of the three groups to view the global performance, which is denoted as “average”. To better understand the trade-off between different shot-based groups for different comparison methods, we mark the best and second performance in red and blue, respectively. From the results reported in Table III, among all baselines, ASL achieves the best results - 61.94% mAP of average results over three groups and 52.15% mAP over all classes on Retina-100K, followed by Focal Loss - 61.78% mAP. Moreover, we find that although RS achieves a good performance in the group of few, it brings a catastrophic impact on the many-shot classes (70.89% mAP 65.72% mAP) and medium-shot classes (71.93% mAP 67.17% mAP), resulting from the label co-occurrence in a multi-label setting. However, we fail to obtain a satisfactory performance in terms of CBLoss, which was originally designed for multi-class long-tailed classification.

For our framework, we first train two vanilla teacher models using different sampling strategies under hierarchy-aware constraints as teacher models, denoted by “baseline-original” and “baseline-ICS”, respectively. Then we distill the knowledge from two baseline teacher models into a unified student model under a hybrid distillation manner. Take Retina-100K for instance, the results of “baseline-original” show that the hierarchical pre-training can benefit the ERM baseline model (from 59.57 %mAP to 60.29 %mAP) without using any specific re-sampling strategies. Furthermore, the ICS can further improve the overall performance (from 60.29 %mAP to 61.67 %mAP) with a marginal performance loss in the many-shot group setting (from 71.18 %mAP to 70.67 %mAP). The last row demonstrates the superior performance of our proposed methods, which outperform all competitors on both two datasets Retina-100K and Retina-1M and the superiority holds for all metrics.

| Dataset | RFMiD | |||

|---|---|---|---|---|

| Methods | many | medium | few | average |

| ERM | 70.93 (1.31) | 57.89 (1.22) | 14.85 (1.95) | 47.89 (1.49) |

| RS | 68.67 (1.23) | 61.48 (1.73) | 25.94 (2.51) | 52.03 (1.83) |

| RW | 70.27 (1.30) | 60.00 (1.79) | 18.71 (1.60) | 49.66 (1.56) |

| OLTR | 71.25 (1.13) | 60.22 (1.05) | 20.77 (1.33) | 50.75 (1.17) |

| RSKD | 70.55 (0.59) | 59.63 (0.23) | 22.15 (1.20) | 50.78 (0.67) |

| Focal | 70.65 (1.14) | 55.53 (1.61) | 16.42 (1.92) | 47.53 (1.56) |

| LDAM | 46.67 (2.28) | 3.19 (3.10) | 1.18 (3.36) | 17.01 (2.91) |

| CBLoss-Focal | 67.73 (1.30) | 50.89 (0.75) | 24.65 (1.12) | 47.77 (1.06) |

| DBLoss-Focal | 68.16 (2.52) | 55.27 (2.78) | 18.94 (2.49) | 47.46 (2.60) |

| ASL | 68.25 (1.93) | 58.25 (1.76) | 19.59 (1.67) | 48.70 (1.79) |

| Ours | 70.50 (1.25) | 59.95 (1.79) | 22.83 (1.38) | 51.09 (1.47) |

| Ours (RS) | 69.75 (1.82) | 61.26 (1.82) | 25.98 (1.91) | 52.33 (1.85) |

| Dataset | ODIR | |||||||

|---|---|---|---|---|---|---|---|---|

| Split | Off-Site | On-Site | ||||||

| Methods | many | medium | few | average | many | medium | few | average |

| ERM | 48.47 (0.73) | 46.80 (0.44) | 11.22 (0.56) | 35.50 (0.58) | 50.74 (1.64) | 36.46 (1.86) | 12.79 (1.49) | 33.33 (1.66) |

| RS | 46.34 (1.08) | 49.27 (1.59) | 9.07 (1.57) | 34.89 (1.41) | 47.91 (1.92) | 39.10 (1.53) | 15.35 (1.95) | 34.12 (1.80) |

| RW | 50.56 (0.32) | 48.12 (1.10) | 11.57 (1.91) | 36.75 (1.11) | 51.39 (1.67) | 37.86 (2.24) | 17.92 (1.26) | 35.72 (1.73) |

| OLTR | 47.37 (0.87) | 45.02 (0.56) | 11.86 (0.70) | 34.75 (0.71) | 50.11 (0.66) | 36.01 (1.45) | 20.78 (2.69) | 35.63 (1.60) |

| RSKD | 48.09 (0.20) | 47.78 (0.82) | 10.82 (1.44) | 35.56 (0.82) | 48.89 (1.20) | 38.61 (1.55) | 31.21 (2.64) | 39.57 (1.80) |

| Focal | 46.63 (1.76) | 46.89 (1.45) | 13.32 (2.47) | 35.61 (1.90) | 47.92 (2.52) | 35.41 (2.21) | 10.49 (2.08) | 31.27 (2.27) |

| LDAM | 41.14 (2.42) | 8.22 (2.94) | 0.48 (0.11) | 16.61 (1.82) | 42.97 (2.87) | 5.10 (2.26) | 0.55 (0.22) | 16.21 (1.78) |

| CBLoss-Focal | 39.30 (1.20) | 47.44 (2.50) | 10.00 (1.73) | 32.25 (1.81) | 43.40 (1.44) | 32.31 (1.29) | 8.60 (1.47) | 28.10 (1.40) |

| DBLoss-Focal | 48.39 (1.27) | 47.11 (1.41) | 27.83 (1.34) | 41.11 (1.34) | 50.06 (0.87) | 37.60 (1.07) | 12.96 (1.27) | 33.54 (1.06) |

| ASL | 47.93 (1.78) | 47.89 (1.50) | 18.57 (1.13) | 38.13 (1.47) | 51.69 (0.77) | 37.36 (1.46) | 23.70 (1.79) | 37.58 (1.34) |

| Ours | 49.02 (1.29) | 48.26 (1.68) | 28.05 (1.13) | 41.78 (1.37) | 51.58 (2.27) | 36.82 (1.67) | 28.98 (2.21) | 39.12 (2.05) |

| MLMC | ICS | cRT | Hybrid KD | 100K | 1M |

|---|---|---|---|---|---|

| 59.57 | 62.70 | ||||

| ✓ | 60.29 | 63.15 | |||

| ✓ | 60.44 | 62.88 | |||

| ✓ | ✓ | 61.67 | 63.56 | ||

| ✓ | ✓ | ✓ | 62.28 | 63.99 | |

| ✓ | ✓ | ✓ | 62.35 | 64.36 | |

| ✓ | ✓ | ✓ | ✓ | 64.14 | 64.69 |

V-C Comparison Study on Two Public Datasets

V-C1 RFMiD Dataset

Table IV summarizes the results for the RFMiD dataset. Different from the in-house datasets, it is surprisingly found that RS achieves the best performance among all competitors, which reveals that naive RS can well benefit the test accuracy on the distribution with small ratios, e.g. for the RFMiD dataset. As mentioned above, LDAM requires more time to train, but it still degrades performance terribly even when more training epochs are given. It is also worth noting that almost all of the performance improvements of the comparative methods come from the gains in the tail categories. The resampling-based approaches almost always sacrifice the performance of the head classes, e.g., RS and CBLoss, while the feature-sharing-based approaches almost maintain the performance of the head classes or have only a small loss, e.g., OLTR and RSKD. Our proposed method also achieves competitive results and outperforms all competitors with naive RS applied.

V-C2 ODIR Dataset

Table V summarizes the results for the ODIR dataset. Our proposed methods achieve the best performance for off-site testing and the second-best accuracy for on-site testing only after RSKD with only a small performance gap. We also observe that both LDAM and CBLoss fail to detect most of the categories, especially for those of group few. It indicates that effective sample-based methods do not work well for those datasets with limited available training samples and a high imbalance ratio. ASL achieves the third-best performance only after RSKD and our proposed methods. As a counterpart, we tried to replace it with ICS in our proposed framework, but no significant improvements were observed, and the same for the other loss functions. It indicates that ICS has compact features and is easier to plug and play.

V-D Ablation Study

V-D1 Components Analysis

To figure out which component makes our methods performant, we have performed an ablation study, and the results are shown in Table VI. As can be seen from Table III, we first test our proposed MLMC to have the hierarchical information embedded into the model pre-training. We observe that the overall mAP increases from 59.57% to 60.29%. The adoption of the ICS strategy can also bring a performance gain to the baseline ERM model, with an improvement of 0.87%. The result of ICS indicates that this sampling strategy can reduce the risk of oversampling on those samples with label co-occurrence. Furthermore, we test the effectiveness of combining the MLMC pre-training and ICS strategy, and the overall mAP reaches 61.67%. The “cRT” refers to whether we train the “baseline-ICS” model in a two-stage manner (e.g., cRT [19]), that is, whether we freeze the feature encoders in the second stage. Although cRT works well as an independent trick for training long-tailed datasets in most scenarios, it is optional in our proposed framework. It can be noted that cRT brings obvious improvements for the Retina-100K dataset, but marginal for a large dataset, i.e., Retina-1M. We first train the baseline model with MLMC, but using a regular sampling strategy (e.g., class-balanced sampling). Then we fine tune the model with the ICS strategy, and the overall mAP is 62.28% and 63.99%. Finally, the hybrid KD improves the model the most, with a 1.86% improvement in the average mAP. The same results can be observed in Retina-1M. However, ICS seems to have less performance improvement (only 0.18%) because it has more imbalanced and severe label co-occurrence problems.

V-D2 Distillation

In this section, we evaluate different knowledge distillation techniques. We show the overall results on Retina-100K in Fig. 7 and denote the two basic KD techniques as ’feature-level’ [47] and ’logits-level’ [43], respectively. Our proposed hybrid KD method consists of two basic KD methods with different intermediate outputs from the DL-based model, such as the features from the last convolutional layer (Eq. 7) and the logits from the last FC layer (Eq. 8). Note that since we keep in Eq. 9, the range of coefficient selection for and is between 0 and 0.5. From the results, we can see that both single KD techniques can benefit the baseline model, except when for the feature-level KD, with the 0.95% loss of accuracy.

Next, we investigate the effect of temperature scaling on model performance. Three values are considered and in Fig. 7 outperforms all counterparts when . The best result is obtained with and . However, if we set , the results do not exceed the baseline model for all values except . It can be concluded that a smaller value is beneficial for the KD phase.

V-E Empirical Analysis on Performance Bound

In this part, we aim to investigate the upper bound of the retinal diseases recognition model based on some empirical analysis when training from a long-tailed distribution.

V-E1 Backbones and Training Samples

First, to investigate how different capacities of a DL-based model would affect the performance, we use the variants of ResNet, which have the same concept but with a different number of layers: {ResNet-18, ResNet-34, ResNet-50, ResNet-101, ResNet-152}. We then fix the imbalance ratio and sample subsets from Retina-100K with different numbers of training samples: {10K, 20K, 50K}. The evaluated results are shown in Fig. 8. We have the following findings:

-

1.

When trained with a small-scale dataset, increasing the capacity of the model has minimum effect on the performance, e.g., from 10K / ResNet-18: 46.36% mAP to 10K / ResNet-152: 46.84% mAP with only 0.48% improvement in terms of average performance.

-

2.

In contrast, when using a small capacity version of the model, increasing the training samples only brings marginal performance gain, e.g., for ResNet-50, the available training samples are doubled from 50K to 100K, but only 1.32% mAP improvement in terms of average performance.

-

3.

Rare diseases become easily identifiable when sufficient training samples are provided, e.g., from 100K / ResNet-18: 33.99% mAP to 100K / ResNet-152: 39.78% mAP with 5.79% significant improvement in terms of performance on few-shot classes.

| many | medium | few | avg | |

| 10K / 10K | 61.64 | 59.53 | 18.81 | 46.66 |

| 10K / 100K | 65.74 | 63.67 | 25.94 | 51.78 |

| 20K / 20K | 67.25 | 64.64 | 25.52 | 52.47 |

| 20K / 100K | 68.25 | 66.40 | 29.17 | 54.61 |

| 50K / 50K | 70.16 | 69.91 | 34.67 | 58.25 |

| 50K / 100K | 70.58 | 70.99 | 35.22 | 58.93 |

| 100K / 100K | 70.89 | 71.93 | 35.90 | 59.57 |

V-E2 Decoupling the CNN Components

Here, we follow the decoupling idea [19] to explore the effectiveness of the bottleneck on the long-tailed challenge, which has been posted by [42]. Kang et al. [19] proposed to train the representation from the original imbalanced distribution, then freeze the representation layers and fine-tune on the rebalanced distribution. Zhang et al. [42] gave a detailed analysis of two-stage methods to investigate the performance bottleneck of two components by retraining the classifier from the original balanced distribution. They concluded that “training from an imbalanced distribution helps to learn a good representation, and the performance is largely limited by the biased decision boundary of the classifier.” Here, we perform experiments with similar settings to investigate the bottleneck of our retinal disease recognition model. Since it is difficult to find a balanced distribution due to the co-occurrence of disease labels, we first train the model on a smaller dataset, e.g. Retina-10K, then freeze the representation layers and fine-tune the classifier on a larger dataset, e.g. Retina-100K. The overall results are shown in Table VII. A / B denotes training on A and then fine-tuning on B. It can be seen that although the newly introduced training samples do not enrich the feature space (the representation layers are fixed), the classifier can be improved well. In particular, 50K / 100K reached a performance closer to the upper bound (100K / 100K), which indicates that “training from a relatively small dataset can obtain good and rich feature information, but the biased classifier dominates the decision bound, resulting in a huge performance gap.”

V-F Qualitative Analysis on Hierarchy-aware Pre-training

V-F1 tSNE visualization

To better understand how hierarchy-aware, i.e., MLMC constraints for retinal disease detection, we visualize the learned feature representations of the training results w/wo MLMC over tSNE in Fig. 9. It is surprising to find that the features of these similar classes have close distances at an early stage even without any hierarchy-aware constraints, as Fig. 9 - (a) shows. This may be due to the fact that the common features of these similar classes are naturally shared. However, as the training epochs increase, these similar classes are discriminated by their clear decision boundaries but lose relevance, i.e., larger distances in the feature space, as shown in Fig. 9 - (b). Fig. 9 visualizes the learned representation of our proposed methods. It can be observed that the hierarchy-aware constraints can help to induce closer distances on those similar classes while maintaining good discrimination between each other. Based on the observations, hierarchy-aware constraints can benefit the learning process across different semantic levels with more efficient sharing of common features among those similar classes.

V-F2 Robustness to Shifted Predictions

In this section, we investigate how hierarchy-aware pre-training helps to improve the robustness of the model, especially against these incorrect predictions from several examples. As Fig. 10 shows, we present the predicted probabilities of two models with and without MLMC at both the child and parent levels. The different classes are colored by their corresponding groups. Note that for the w/o MLMC model, we cannot directly obtain its predictions at the parent level. Here, we directly sum the logits of the child categories with a sigmoid activation function as the predicted probability of the corresponding parent category.

For the first case, whose ground truth is “NPDRII, Others”, it is incorrectly predicted as glaucoma and hypertensive retinopathy without MLMC. In contrast, the model with MLMC gives correct predictions at both the child and parent levels. Similarly, the second and third cases, whose ground truth is “Dry AMD, Glaucoma” and “Normal”, are also misdiagnosed as other diseases. MLMC shows robustness to such examples, which are highly correlated, with the additional constraints at the parent level. Note that for the last case, both models give incorrect predictions where the ground truth is “Others”. This shows a limitation of MLMC, which has difficulty recognizing diseases without obvious hierarchical information or shared features in the semantics. A possible solution is to improve the constraints of semantics and granularity of the hierarchical tree.

Hierarchy-aware pre-training shows great robustness to incorrect predictions. For example, in the first case, the categories with high prediction probability all belong to the same parent category “DR”. In this way, even if the model makes an incorrect prediction at the child level, but with the same parent category, e.g., NPDRII to NPDRI, it carries less risk for practical application compared to predicting as an irrelevant category, e.g., glaucoma. We can think of this as a “shifted prediction” rather than an “incorrect prediction”. Shifted predictions retain meaningful information for manual reference, and the risk of delayed referral can be reduced.

V-F3 Grad-CAM Visualization

To more directly reflect the effectiveness of hierarchy-aware pre-training, we are interested in what contributes most to the model’s predictions. Grad-CAM [48] is a technique that uses the gradients to compute the importance of regions on the images with respect to the final convolutional feature maps. The visualization results are shown in Fig. 11, and all examples selected from the ODIR dataset correctly predict at least one category. For the competitors, we focus mainly on three different approaches: ERM, training without hierarchical constraints but with an extended loss function, e.g. ASL, and training with hierarchical constraints, e.g. RSKD and our proposed methods. From the results, we observe that the ERM-based model can give correct predictions but always presents irrelevant regions. ASL successfully localizes some biomarkers for glaucoma and dry AMD, but still loses the ability to detect some inconspicuous lesions of DR. Compared to non-hierarchical methods, our methods and RSKD pay more attention to those lesions and biomarkers that are crucial for diagnosis. In addition, our proposed methods focus more on the independence between those lesions with different parent categories in a multi-label setting, rather than on large highlighted areas as in RSKD.

V-G Group-wise Analysis

V-G1 In Groups of Shots

In Fig. 12 we visualize the mAP increments of different classes from different shot-based groups to better see how different approaches affect the performance. The classical re-sampling strategy incorrectly samples the label co-occurrence distribution, resulting in a new relatively imbalanced status. Several categories in the tail classes obtain improvements in mAP, but the performance of almost all head and middle classes decreases, leading to an overall drop in performance. Moreover, we find that RW is agnostic to label co-occurrence, the learned weights of the classifier produce fairer predictions, but the improvements are still marginal. ASL and our proposed methods can help the model learn an overall well-generalized representation and a less biased classifier, contributing to the global performance gain.

V-G2 In Groups of Diseases

| Mild | Macula | Vessels | OD | Rare | |

|---|---|---|---|---|---|

| Original | 66.86 | 55.02 | 41.37 | 32.36 | 18.28 |

| HL [17] | 67.44 | 57.23 | 45.06 | 33.04 | 17.95 |

| Proposed | 71.33 | 58.22 | 49.32 | 38.63 | 27.58 |

Since we claim that hierarchy knowledge helps to train the model with a well-generalized representation by incorporating more semantic information during the training process, this information can naturally be shared across both coarse and fine categories. In Table VIII, we show how the proposed methods can improve performance in high/coarse-level classes. Here we select five representative coarse diseases, denoted by mild, macula, vessels, optic disc (OD), and rare diseases. Another widely used training loss in hierarchical classification Hierarchical Loss (HL) [17] is evaluated as a comparative study. We observe that hierarchy-aware pre-training can help improve most categories at a coarse level. However, it cannot solve the problem of data imbalance. Our proposed methods can effectively combine hierarchical learning and instance-wise resampling strategy, resulting in performance gain on these rare diseases.

VI Discussion & Conclusion

In this paper, we discuss the necessity and challenges of training a multiple retinal disease recognition model. We propose a novel framework that exploits the hierarchical information as prior knowledge for more efficient training on the feature representations. Moreover, an instance-wise class-balanced sampling strategy and hybrid knowledge distillation are introduced to address the multi-label long-tailed issue. For the first time, we train retinal diseases recognition models from two in-house and two public datasets, one of which includes more than one million fundus images covering more than 50 retinal diseases. The experiment results demonstrate the effectiveness of our proposed methods.

There are some limitations of this work. First, public fundus datasets for long-tailed retinal diseases are still scarce, and our proposed methods show less capacity for those datasets with limited training samples, e.g., ODIR. In addition to collecting more samples, a potential solution is to leverage the knowledge distillation technique to extract rich information from the pre-trained model, to improve the generalization ability of the model for downstream tasks, especially when the private training samples are not available. Second, our proposed methods are not end-to-end and some pre-training phases are needed, which may require more training time and additional adjustment of hyper-parameters towards different scenarios. Third, a human-designed hierarchical tree for retinal diseases is needed for our proposed hierarchy-aware pre-training. To this end, we also released a carefully designed three-level hierarchical tree covering more than 100 kinds of retinal diseases222Please refer to our supplementary files for more details.. We hope that it can contribute to the research community in addressing the multi-label long-tailed challenge of retinal disease recognition.

VII Acknowledgements

We would like to thank Dr. Danli Shi from Hong Kong Polytechnic University and Haodong Xiao from Xin Hua Hospital Affiliated to Shanghai Jiao Tong University School of Medicine, who helped to organize and design the hierarchical trees used in this work. We would also like to thank Airdoc for the philanthropic funding and the efforts in collecting and annotating the valuable in-house datasets.

References

- [1] B. Klein, “Overview of epidemiologic studies of diabetic retinopathy,” Ophthalmic Epidemiology, vol. 14, no. 4, pp. 179–183, 2007.

- [2] V. Gulshan, L. Peng, M. Coram, M. C. Stumpe, D. Wu, A. Narayanaswamy, S. Venugopalan, K. Widner, T. Madams, J. Cuadros et al., “Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs,” JAMA, vol. 316, no. 22, pp. 2402–2410, 2016.

- [3] I. Kocur and S. Resnikoff, “Visual impairment and blindness in europe and their prevention,” British Journal of Ophthalmology, vol. 86, no. 7, pp. 716–722, 2002.

- [4] O. Faust, A. U. Rajendra, E. Y. K. Ng, K. H. Ng, and J. S. Suri, “Algorithms for the automated detection of diabetic retinopathy using digital fundus images: A review,” Journal of Medical Systems, vol. 36, no. 1, pp. 145–157, 2012.

- [5] T. Li, W. Bo, C. Hu, H. Kang, H. Liu, K. Wang, and H. Fu, “Applications of deep learning in fundus images: A review,” Medical Image Analysis, p. 101971, 2021.

- [6] H. Fu, J. Cheng, Y. Xu, C. Zhang, D. W. K. Wong, J. Liu, and X. Cao, “Disc-aware ensemble network for glaucoma screening from fundus image,” IEEE transactions on medical imaging, vol. 37, no. 11, pp. 2493–2501, 2018.

- [7] R. Gargeya and T. Leng, “Automated identification of diabetic retinopathy using deep learning,” Ophthalmology, vol. 124, no. 7, pp. 962–969, 2017.

- [8] T. Araújo, G. Aresta, L. Mendonça, S. Penas, C. Maia, Â. Carneiro, A. M. Mendonça, and A. Campilho, “Dr— graduate: Uncertainty-aware deep learning-based diabetic retinopathy grading in eye fundus images,” Medical Image Analysis, vol. 63, p. 101715, 2020.

- [9] M. V. dos Santos Ferreira, A. O. de Carvalho Filho, A. D. de Sousa, A. C. Silva, and M. Gattass, “Convolutional neural network and texture descriptor-based automatic detection and diagnosis of glaucoma,” Expert Systems with Applications, vol. 110, pp. 250–263, 2018.

- [10] L. Ju, X. Wang, L. Wang, D. Mahapatra, X. Zhao, Q. Zhou, T. Liu, and Z. Ge, “Improving medical images classification with label noise using dual-uncertainty estimation,” IEEE transactions on medical imaging, 2022.

- [11] J. D. Steinmetz, R. R. Bourne, P. S. Briant, S. R. Flaxman, H. R. Taylor, J. B. Jonas, A. A. Abdoli, W. A. Abrha, A. Abualhasan, E. G. Abu-Gharbieh et al., “Causes of blindness and vision impairment in 2020 and trends over 30 years, and prevalence of avoidable blindness in relation to vision 2020: the right to sight: an analysis for the global burden of disease study,” The Lancet Global Health, vol. 9, no. 2, pp. e144–e160, 2021.

- [12] L. Ju, X. Wang, L. Wang, T. Liu, X. Zhao, T. Drummond, D. Mahapatra, and Z. Ge, “Relational subsets knowledge distillation for long-tailed retinal diseases recognition,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 3–12.

- [13] X. Wang, L. Ju, X. Zhao, and Z. Ge, “Retinal abnormalities recognition using regional multitask learning,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2019, pp. 30–38.

- [14] G. Quellec, M. Lamard, P.-H. Conze, P. Massin, and B. Cochener, “Automatic detection of rare pathologies in fundus photographs using few-shot learning,” Medical image analysis, vol. 61, p. 101660, 2020.

- [15] P. Massin, A. Chabouis, A. Erginay, C. Viens-Bitker, A. Lecleire-Collet, T. Meas, P.-J. Guillausseau, G. Choupot, B. André, and P. Denormandie, “Ophdiat©: A telemedical network screening system for diabetic retinopathy in the île-de-france,” Diabetes & metabolism, vol. 34, no. 3, pp. 227–234, 2008.

- [16] T. Wu, Q. Huang, Z. Liu, Y. Wang, and D. Lin, “Distribution-balanced loss for multi-label classification in long-tailed datasets,” in European Conference on Computer Vision. Springer, 2020, pp. 162–178.

- [17] A. Dhall, A. Makarova, O. Ganea, D. Pavllo, M. Greeff, and A. Krause, “Hierarchical image classification using entailment cone embeddings,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 836–837.

- [18] B. Zhou, Q. Cui, X.-S. Wei, and Z.-M. Chen, “Bbn: Bilateral-branch network with cumulative learning for long-tailed visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9719–9728.

- [19] B. Kang, S. Xie, M. Rohrbach, Z. Yan, A. Gordo, J. Feng, and Y. Kalantidis, “Decoupling representation and classifier for long-tailed recognition,” arXiv preprint arXiv:1910.09217, 2019.

- [20] W. Zhang, J. Zhong, S. Yang, Z. Gao, J. Hu, Y. Chen, and Z. Yi, “Automated identification and grading system of diabetic retinopathy using deep neural networks,” Knowledge-Based Systems, vol. 175, pp. 12–25, 2019.

- [21] Y. Yang, T. Li, W. Li, H. Wu, W. Fan, and W. Zhang, “Lesion detection and grading of diabetic retinopathy via two-stages deep convolutional neural networks,” in International conference on medical image computing and computer-assisted intervention. Springer, 2017, pp. 533–540.

- [22] W. M. Gondal, J. M. Köhler, R. Grzeszick, G. A. Fink, and M. Hirsch, “Weakly-supervised localization of diabetic retinopathy lesions in retinal fundus images,” in 2017 IEEE international conference on image processing (ICIP). IEEE, 2017, pp. 2069–2073.

- [23] A. Foo, W. Hsu, M. L. Lee, G. Lim, and T. Y. Wong, “Multi-task learning for diabetic retinopathy grading and lesion segmentation,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 08, 2020, pp. 13 267–13 272.

- [24] R. Zhao, X. Chen, X. Liu, Z. Chen, F. Guo, and S. Li, “Direct cup-to-disc ratio estimation for glaucoma screening via semi-supervised learning,” IEEE journal of biomedical and health informatics, vol. 24, no. 4, pp. 1104–1113, 2019.

- [25] A. Govindaiah, M. A. Hussain, R. T. Smith, and A. Bhuiyan, “Deep convolutional neural network based screening and assessment of age-related macular degeneration from fundus images,” in 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018). IEEE, 2018, pp. 1525–1528.

- [26] P. M. Burlina, N. Joshi, K. D. Pacheco, D. E. Freund, J. Kong, and N. M. Bressler, “Use of deep learning for detailed severity characterization and estimation of 5-year risk among patients with age-related macular degeneration,” JAMA ophthalmology, vol. 136, no. 12, pp. 1359–1366, 2018.

- [27] L. Ju, X. Wang, X. Zhao, H. Lu, D. Mahapatra, P. Bonnington, and Z. Ge, “Synergic adversarial label learning for grading retinal diseases via knowledge distillation and multi-task learning,” IEEE Journal of Biomedical and Health Informatics, 2021.

- [28] E. California Healthcare Foundation, “Diabetic retinopathy detection,” https://www.kaggle.com/c/diabetic-retinopathy-detection.

- [29] Y. Wang, W. Gan, J. Yang, W. Wu, and J. Yan, “Dynamic curriculum learning for imbalanced data classification,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 5017–5026.

- [30] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollár, “Focal loss for dense object detection,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2980–2988.

- [31] Y. Cui, M. Jia, T.-Y. Lin, Y. Song, and S. Belongie, “Class-balanced loss based on effective number of samples,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 9268–9277.

- [32] K. Cao, C. Wei, A. Gaidon, N. Arechiga, and T. Ma, “Learning imbalanced datasets with label-distribution-aware margin loss,” arXiv preprint arXiv:1906.07413, 2019.

- [33] Z. Liu, Z. Miao, X. Zhan, J. Wang, B. Gong, and S. X. Yu, “Large-scale long-tailed recognition in an open world,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 2537–2546.

- [34] L. Xiang, G. Ding, and J. Han, “Learning from multiple experts: Self-paced knowledge distillation for long-tailed classification,” in European Conference on Computer Vision. Springer, 2020, pp. 247–263.

- [35] Y. Li, T. Wang, B. Kang, S. Tang, C. Wang, J. Li, and J. Feng, “Overcoming classifier imbalance for long-tail object detection with balanced group softmax,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 10 991–11 000.

- [36] J. Read, B. Pfahringer, G. Holmes, and E. Frank, “Classifier chains for multi-label classification,” Machine learning, vol. 85, no. 3, pp. 333–359, 2011.

- [37] M.-L. Zhang and Z.-H. Zhou, “A review on multi-label learning algorithms,” IEEE transactions on knowledge and data engineering, vol. 26, no. 8, pp. 1819–1837, 2013.

- [38] G. Quellec, M. Lamard, P.-H. Conze, P. Massin, and B. Cochener, “Rfmid challenge,” https://riadd.grand-challenge.org/Home/.

- [39] P. University, “Odir challenge,” https://odir2019.grand-challenge.org/.

- [40] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in European conference on computer vision. Springer, 2014, pp. 740–755.

- [41] L.-Z. Guo, Z. Zhou, J.-J. Shao, Q. Zhang, F. Kuang, G.-L. Li, Z.-X. Liu, G.-B. Wu, N. Ma, Q. Li et al., “Learning from imbalanced and incomplete supervision with its application to ride-sharing liability judgment,” in Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2021.

- [42] S. Zhang, Z. Li, S. Yan, X. He, and J. Sun, “Distribution alignment: A unified framework for long-tail visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 2361–2370.

- [43] G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” Computer Science, vol. 14, no. 7, pp. 38–39, 2015.

- [44] H. Guo and S. Wang, “Long-tailed multi-label visual recognition by collaborative training on uniform and re-balanced samplings,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 15 089–15 098.

- [45] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [46] E. Ben-Baruch, T. Ridnik, N. Zamir, A. Noy, I. Friedman, M. Protter, and L. Zelnik-Manor, “Asymmetric loss for multi-label classification,” arXiv preprint arXiv:2009.14119, 2020.

- [47] A. Iscen, A. Araujo, B. Gong, and C. Schmid, “Class-balanced distillation for long-tailed visual recognition,” arXiv preprint arXiv:2104.05279, 2021.

- [48] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-cam: Visual explanations from deep networks via gradient-based localization,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 618–626.